1. Introduction

The most successful quantum algorithms rely on the quantum computer’s ability to efficiently switch between different representations of a function. A measurement in the corresponding basis then delivers information about some structural property of the function. The most prominent example, a measurement in the Fourier basis, can extract information about the periodicity of the function and allows breaking schemes like RSA. Similarly, the algorithm proposed in [

1] uses the Fourier transform over the

to find the parity basis representation [

2]. In this paper, we follow a similar approach, but in a different setting. We begin with an easy-to-prepare yet exponentially big quantum state. Next, we perform a quantum basis change, which is costly in a classical setting, and retrieve meaningful information about the function.

The HHL algorithm, proposed in 2009 by [3], is a quantum procedure that computes the preimage of a given input under a matrix. Unlike the classical approaches, its runtime does not depend linearly on the size of the matrix. Rather, it is determined by the sparseness and condition number of the matrix, and only logarithmically by the matrix size. Since most modern ciphers can be described as some form of exponentially big equation system, the idea of using HHL to cryptanalyse ciphers came forward [4] and was quickly followed by other publications. However, the common factor was always the exploitation of the logarithmic speed-up in the runtime of the HHL algorithm.

In this paper, we will also tackle an exponentially big input

. In contrast to the standard approaches of HHL-based cryptanalysis, we will consider matrices of a special form. We prove that, if the matrix

M can be decomposed into a tensor product

, then computing the pre-image can be significantly sped up. On classical computers, pre-image computation can be done in polynomial time in regard to the vector’s size. Only in the case when the input

can likewise be decomposed into the following tensor product:

are we able to obtain a subpolynomial runtime. Here, the sizes of

’s must match the sizes of corresponding matrices

for each

. If such a decomposition of

is unknown, or even impossible, the fastest known classical approximation algorithm is the conjugate gradient method, with a runtime of

. In our approach, we do not need a vector decomposition to perform the HHL algorithm, allowing a runtime advantage over classical computers. We summarize this observation in the following Theorem:

Theorem 1. Let be a horizontal decomposition of a Hermitian matrix M. Further, for all , let be a Hermitian matrix with its condition number and its sparseness. The time complexity of the vertical HHL from Theorem 2 for the matrix M is:

Based on the above Theorem, our approach is next applied to a cryptographically significant matrix corresponding to the Boolean Möbius transform. The transform allows transition between the algebraic normal form (ANF) of a Boolean function and its truth table (TT). We decided to decompose this matrix as, in its composed form, it has high sparsity (one full column). Applying the standard HHL algorithm in such a scenario would be counterproductive, as the runtime would be comparable to the gradient approach (, where N is the number of rows in the matrix. Instead, we decompose the Möbius transform into a tensor product of n small sub-matrices ( before Booleanization) and achieve a runtime of with .

By capturing the truth table in the superposition and computing its preimage under the Boolean Möbius transform, we manage to encapsulate the algebraic normal form of a Boolean function

f under attack in the quantum register. The result is as follows:

where

is the binary coefficient of the monomial

.

can be used to both retrieve the ANF of the function, as well as to estimate its Hamming weight. We also use the technique introduced in [

5] to extract information about a Boolean function using the

algebraic transition matrices (ATMs).

A standard requirement in quantum computation is the unitarity of evolutions applied to the qubits. We employ the HHL algorithm to allow inversion of non-unitary matrices. The HHL algorithm is a well-established framework suitable for this operation. However, in the Boolean Möbius transform setting, the procedure could be adjusted. As the Möbius transform is self-inverse, we do not need to compute the preimage. Instead, computing the image under the transform delivers the same result.

We introduce the notation in Section 1.2. Section 2 focuses on the HHL algorithm. We estimate the runtime and present how to Booleanize a matrix and how the HHL algorithm is used in cryptanalysis. In Section 3, we discuss our new approach to applying HHL to a tensor product. That section concludes with the proof of Theorem 1. Section 4 shows how to apply the tensor approach to a specific function, the Boolean Möbius transform. We define the Boolean Möbius transform and its tensor decomposition. We explain why it is better to apply the algorithm to decomposed matrices and how to generate the input quantum state. Finally, we describe the result of our algorithm. In Section 5, we elaborate on the cryptographic use-cases of the Boolean Möbius transform. We show how the transform can be used to analyse the algebraic normal form of a Boolean function.

1.1. State-of-the-Art of HHL in Cryptanalysis

The cryptographic appeal of the HHL algorithm is clear. Most modern ciphers are characterized by complex equation systems needed to describe them. The first attempt was introduced in [4] and used the so-called Macaulay matrix, an exponentially big and sparse matrix which can be solved for the Boolean solution of a polynomial system. The next proposal targeted the NTRU system [6] and used the HHL algorithm to solve a polynomial system with noise [7]. Finally, in [8], the Grain-128 and Grain-128a ciphers were cryptanalysed using techniques based on [4,7]. Ref. [9] suggested improvements upon the above papers and proved a lower bound on the condition number of the Macaulay matrix approach. In [

10], two systems of equations for AES-128, one over

and another over

based on the BES construction introduced in [

11], were analysed under the HHL algorithm.

1.2. Notation

We briefly introduce the common notation used throughout this paper. A function

is called a Boolean function. It is known that each Boolean function

f has a polynomial representation

[

2]. For an index set

, we define

. We also define

as the coefficient of the term

, and will sometimes switch to the notation where the index is the decimal number defined by the binary interpretation of

I. For a Boolean function

, the Algebraic Normal Form (ANF) of

f is

. The representation of

f in the ANF is unique and defines the function

f. Moreover, we can compute the coefficients

’s as follows:

where

[

2]. In other words, we can compute the coefficient

by adding (over

) the images of all inputs dominated by

I (cf. the precursor sets defined in [

5]).
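The coefficient formula above can be illustrated with a minimal sketch, assuming inputs are represented as bit tuples and that "dominated by I" means componentwise less than or equal to I. The function name `anf_coefficients` and the example function are hypothetical and serve only as an illustration.

```python
from itertools import product

def anf_coefficients(f, n):
    """Return {I: a_I}, where a_I is the XOR of f(x) over all x dominated by I."""
    coeffs = {}
    for I in product((0, 1), repeat=n):
        a = 0
        for x in product((0, 1), repeat=n):
            # x is dominated by I if x_j <= I_j holds in every position j
            if all(xj <= Ij for xj, Ij in zip(x, I)):
                a ^= f(x)
        coeffs[I] = a
    return coeffs

# Hypothetical example function: f(x1, x2, x3) = x1*x2 XOR x3
f = lambda x: (x[0] & x[1]) ^ x[2]
coeffs = anf_coefficients(f, 3)

# Index sets of the monomials that actually occur in the ANF
active = sorted(I for I, a in coeffs.items() if a == 1)
# The degree of each active monomial is the Hamming weight of its index
degrees = [sum(I) for I in active]
```

The double loop costs on the order of 4^n evaluations, which is one way to see why the classical route becomes expensive as n grows.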

The Hamming weight of a Boolean vector

is denoted as

, and it is the number of non-zero entries. The degree of a coefficient can be computed using the Hamming weight:

The truth table of a Boolean function f is a binary vector defined as .

A vectorial Boolean function is defined as for . Further, for all , is a Boolean function and the projection of F to the ith component. A projection of a string s to its i’th component will be denoted by .

By $H$ we denote the Hadamard gate, and by $H^{\otimes n}$ its n-fold tensor product. E denotes the identity matrix. In the cases where the size of the identity matrix is not explicitly stated, the index j indicates the size of the matrix .

2. HHL Algorithm

The HHL algorithm, named after the authors Harrow, Hassidim, and Lloyd, is one of the new tools in the quantum toolbox used for cryptanalysis. It was first proposed in [3] as an efficient algorithm to solve exponentially big systems of linear equations, outperforming any classical algorithm. Its cryptographic significance was quickly discovered, and [4] proposed the first attack scenario. Since then, a series of cryptographic publications have considered the HHL setting.

We introduce two problems closely related to the HHL algorithm:

Problem 1. Given a Hermitian matrix and an input state with find the state such that:

We can also reframe this into the quantum setting as follows:

Problem 2. Given a Hermitian matrix and an input state with find the state such that: with .

The HHL algorithm solves Problem 2. To compute the pre-image of

, we need to represent the vector as a quantum register

. Then,

M is transformed into a unitary operator

, and the operator is applied to

using the Hamiltonian simulation technique [

12]. This is equivalent to decomposing

to an eigenbasis of

M and determining the corresponding eigenvalues. The register after this transformation is in the following state:

where

,

is the eigenbasis and

’s are the corresponding eigenvalues. Using an additional ancilla register, we perform a rotation conditioned on the value of

to obtain the following state:

We finish with a measurement of the ancilla register. If

is observed, the eigenvalues

of the matrix

M are inverted, and the register ends in the following state:
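The effect of these steps on the amplitudes can be emulated classically. The following is a minimal sketch, assuming NumPy and a small, well-conditioned Hermitian example matrix chosen purely for illustration; the function name `hhl_emulation` is hypothetical. It decomposes the input in the eigenbasis of M, inverts each eigenvalue, and renormalizes. It mirrors only the linear algebra and none of the quantum circuitry (no Hamiltonian simulation, ancilla rotation, or measurement).

```python
import numpy as np

def hhl_emulation(M, b):
    """Classically emulate the state transformation of HHL for a Hermitian M."""
    b = b / np.linalg.norm(b)        # the input register must hold a unit vector
    lam, U = np.linalg.eigh(M)       # eigenvalues lam[j], eigenvectors U[:, j]
    beta = U.conj().T @ b            # coefficients of b in the eigenbasis of M
    x = U @ (beta / lam)             # invert every eigenvalue
    return x / np.linalg.norm(x)     # the output register is again a unit vector

# Illustrative values only
M = np.array([[2.0, 1.0], [1.0, 3.0]])
b = np.array([1.0, 0.0])
x = hhl_emulation(M, b)

# Reference: solve the linear system directly and normalize
expected = np.linalg.solve(M, b)
expected = expected / np.linalg.norm(expected)
```

The output is only proportional to the classical solution, since a quantum register always holds a normalized vector.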

2.1. HHL Runtime

In [

3], the authors show how to run the HHL algorithm for a matrix

in time

, where

s is the sparseness of the matrix

M,

is its condition number, and

N is the size. The

notation suppresses all slowly-growing parameters (logarithmic with respect to

or

). The algorithm requires that

M is either efficiently row-computable, or that we have access to an oracle which returns the indices of non-zero matrix entries for each row. The phase estimation (PE) step is responsible for a significant portion of the runtime of the HHL. For an

s-sparse matrix, the PE takes

computations. We observe that, after the rotation conditioned on

, we have only a certain probability of observing the ancilla register in a state

. However, since the constant

C from Equation (2) is of magnitude

, with

applications of the amplitude amplification algorithm [

13] we can lower the probability of the undesired measurement to a negligible value. Ref. [

14] improved the HHL algorithm by lowering the condition number dependence from quadratic to almost linear. Ref. [

15] proposed a further improvement to achieve the runtime as follows:

This is the complexity that we use in this paper. Nevertheless, since the sparseness and condition number of the matrices we propose are constant, the choice of the implementation should not have much influence on the feasibility of the algorithm we develop.

2.2. HHL over Finite Fields

The HHL algorithm in its initial form is defined for matrices and vectors over

. Since most of the cryptographic constructions use finite field arithmetic, the question arose whether HHL can be adapted to this setting. In this part, we present a set of rules which describe how to transform an equation system

M over a finite field

to an equation system over

as required by the HHL algorithm. Most of the reductions were proposed in [

7]. The result will be an equation system over

which shares the solution with the original system. We will call the resulting system the

Booleanization of M, and the

-matrix corresponding to the system a

Booleanized matrix. The total sparseness of a matrix is the number of non-zero entries in all of its rows and columns.

Reduction from Boolean Equations to Equations over

For a given set of Boolean functions

with

n variables, we want to find a system

, such that when

is a solution to

, then

is a solution of

. To do this, we will define a new set of variables

and

new quadratic equations as follows:

The first two sets of equations guarantee that the value of . This represents the Boolean addition logic in the original system . The last equation guarantees that the only possible values of are from . An important observation here is also that .

Next, we need to present

’s in a form usable by the quantum computer. Each

will be represented in its binary form with

bits:

However, since Equation (4) holds, we know

. This means we actually only consider the following representation:

We need to incorporate this into the equation system mentioned above in the following way:

Similarly, as in (5), the equation forces the values of . The additional equations mentioned in this section increase the number of needed variables from n to and the number of equations from m to . Thus, in the notation, the runtime of the HHL remains the same for .

Finally, the HHL algorithm requires the input matrix to be Hermitian. The resulting Booleanized matrix corresponding to the system

is not. Luckily, we can use the procedure proposed by the original authors of the HHL algorithm to cover this case [

3]. The technique uses the property that for an arbitrary matrix M that is not Hermitian, we can construct a new matrix C:

$$C = \begin{pmatrix} 0 & M \\ M^{\dagger} & 0 \end{pmatrix}$$

The newly constructed matrix C is Hermitian and a valid HHL input. Here, $M^{\dagger}$ is the conjugate transpose of M. We finish this section with a Lemma characterizing the setting and the runtime of the HHL algorithm:

Lemma 1 ([3,15]). Let be a Hermitian matrix and be a quantum state. We denote the application of the HHL with the matrix M to a state as:

The result is a quantum state which solves Problem 2 and the procedure takes time.
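The Hermitian embedding used in this section can be checked on a small instance. The sketch below assumes the block form from the original HHL construction [3], with M in the upper-right block and its conjugate transpose in the lower-left block; the helper names and the example matrix are hypothetical. It verifies that the embedded matrix is Hermitian and that a solution of the original system, padded with zeros, solves the embedded system.

```python
def conj_transpose(m):
    """Conjugate transpose of a matrix given as nested lists."""
    return [[complex(m[j][i]).conjugate() for j in range(len(m))]
            for i in range(len(m[0]))]

def matvec(m, v):
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]

def hermitian_embedding(m):
    """Assumed block form [[0, M], [M^dagger, 0]] for a square matrix M."""
    n = len(m)
    md = conj_transpose(m)
    top = [[0] * n + list(row) for row in m]
    bottom = [list(row) + [0] * n for row in md]
    return top + bottom

# Illustrative non-Hermitian matrix and a chosen solution x with M x = b
M = [[1, 2j], [0, 3]]
x = [1, 1]
b = matvec(M, x)

C = hermitian_embedding(M)
is_hermitian = C == conj_transpose(C)

# The embedded system maps (0, x) to (b, 0)
embedded_image = matvec(C, [0, 0] + x)
```

Note that the lower half of C reads M column-wise, which is why the embedding needs column access to M in addition to row access.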

3. HHL for Tensors of Matrices

Usually, when considering the use-case of HHL, we want to leverage the exponential speed-up with regard to the size of the matrix. This is the natural direction, as that is the obvious runtime difference between the HHL and the classical approach. The hindrance is, however, that even though the matrix can be exponentially big, it should not have too many non-zero entries. In fact, a matrix with a single full column eliminates the potential use of the HHL (cf.

Section 2.1).

In this section, we present an approach to overcome the above-mentioned obstacles for a special set of matrices. We prove that if for a matrix M we are given a tensor decomposition , where each is again Hermitian and has a small size, applying the HHL approach to each has a low runtime and delivers the same result. In some cases, our algorithm computes the preimage of a quantum state under the matrix M exponentially faster than the standard HHL approach. A similar technique is a building block of known quantum algorithms, among others, Shor’s or Simon’s algorithm. For these two algorithms, instead of applying a matrix to an n-long register , we apply to each of n qubits of .

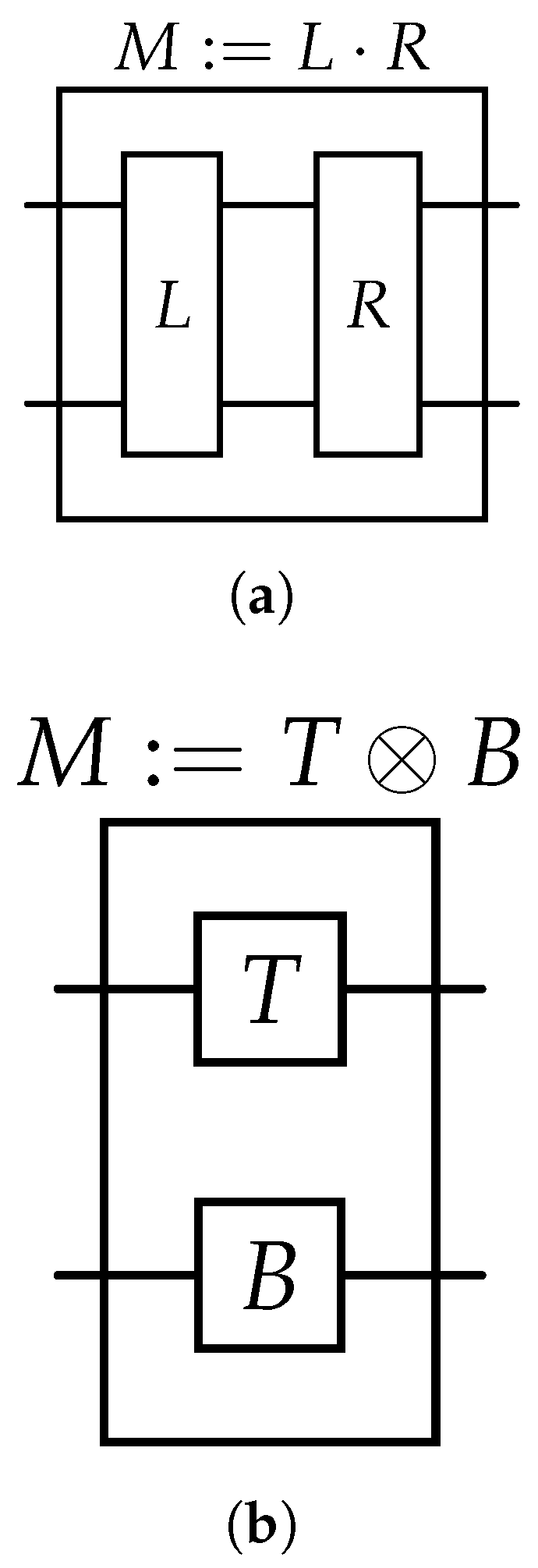

We start with a simple Lemma saying that applying a vertical/tensor (cf.

Figure 1) decomposition of a unitary matrix

to quantum sub-registers results in the same state as applying the matrix

U to the whole register:

Lemma 2. Let be unitary matrices with . Further, let be the corresponding quantum states with . Then:

This result is unsurprising and underlies the efficiency of many quantum algorithms. However, we will generalize the argument to show that this equality also holds for non-unitary matrices.

Lemma 3. Let be matrices and be identity matrices with and and . Then:

Proof. Since both the product and the tensor of two matrices are matrices again, it suffices to show this property for two matrices. The rest follows from the associativity of matrix products. Let

,

. Further, let

. Then, by standard tensor properties, we know the following:

Since is indifferent to M, it does not make a difference which one we apply to a vector . □
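The statement of Lemma 3 can be checked numerically on small instances. The following minimal sketch uses exact integer arithmetic and three arbitrary, non-unitary example matrices; all helper names and values are hypothetical. It compares the tensor product of the matrices with the ordinary product of the padded factors, in which one matrix acts on its own subregister and identities act everywhere else.

```python
from functools import reduce

def kron(a, b):
    """Kronecker (tensor) product of two matrices given as nested lists."""
    return [[x * y for x in ra for y in rb] for ra in a for rb in b]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def identity(d):
    return [[int(i == j) for j in range(d)] for i in range(d)]

def padded(mats, i):
    """Tensor product with mats[i] in slot i and identity matrices elsewhere."""
    return reduce(kron, [m if j == i else identity(len(m))
                         for j, m in enumerate(mats)])

# Arbitrary non-unitary example matrices of sizes 2, 3 and 2
mats = [
    [[1, 2], [3, 4]],
    [[0, 1, 1], [1, 0, 2], [2, 1, 0]],
    [[1, 1], [0, 1]],
]

full = reduce(kron, mats)
sequential = reduce(matmul, [padded(mats, i) for i in range(len(mats))])
same = full == sequential
```

Because the padded factors act on disjoint subregisters, they commute, so the order of the product does not matter.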

Again, this is a simple fact from linear algebra, but it will constitute a major stepping stone for our algorithm. We want to highlight that the vector from Lemma 3 does not have to be a valid quantum state even if is. Since the matrix M is not unitary, the result does not have to be normalized.

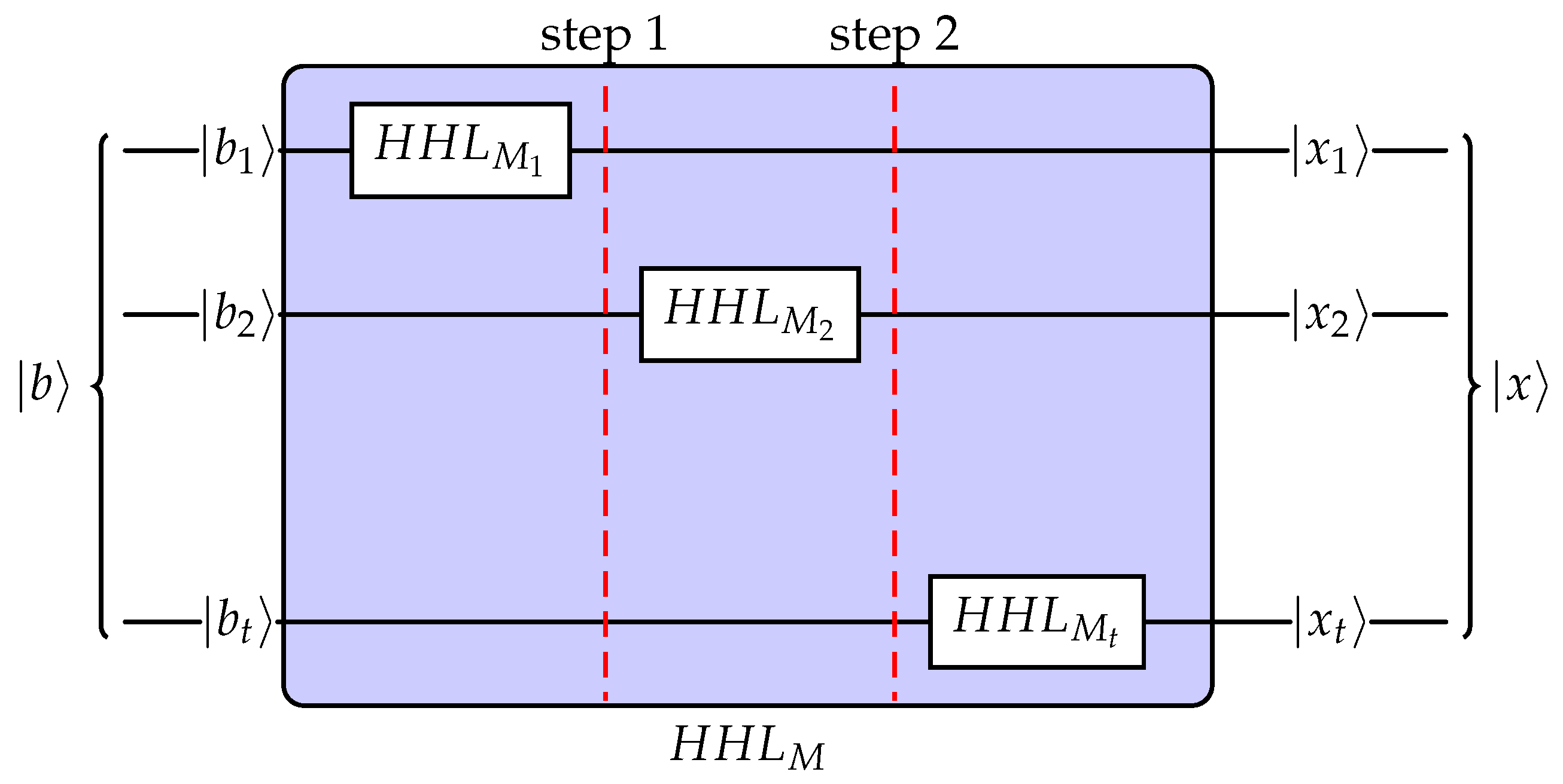

We will now use the observation from Lemma 3 to build a procedure to solve Problem 2 for a matrix

with the property

. Instead of applying HHL to the whole matrix

M, we will consider its vertical decomposition and apply HHL to the sub-matrices. We highlight that finding such a decomposition is an NP-hard problem [

16], but we assume it is given to us. The idea is depicted in

Figure 2. The resulting state will be identical to that of HHL applied to the matrix

M.

We begin with a Lemma showing that, for a simple-structured tensor product (a tensor product of mostly diagonal matrices), applying the HHL algorithm on the combined state or the horizontal decomposition delivers the same result and has almost identical runtime.

Lemma 4. Let be a Hermitian matrix and be the HHL algorithm from Lemma 1. Further, let M have the following structure:

for a Hermitian . Here, ’s are identity matrices of appropriate dimension. Then, for a given input , the following equality holds:

Proof. For a matrix

, the HHL algorithm first uses the Hamiltonian simulation technique to apply

to the register

. Observe that we can rewrite

M as follows:

for

and

. Then, the following equality holds:

As the remaining steps of the HHL algorithm do not depend on the matrix M, the claim follows. □

Because of the simple structure of M from Lemma 4, the runtime of will be . This accounts for an overhead of when compared to (under the assumption that ’s and are of relatively similar size).

Theorem 2. Let be a vertical decomposition of a Hermitian M. Given that the ’s are Hermitian matrices as well, let and be the unitary HHL algorithm circuit for a matrix M and , respectively. Then for all quantum states :

and the result is a valid quantum state from Problem 2.

Proof. Let

and

be the unitary circuit which implements the application of the HHL algorithm with input matrix

M and

, respectively. Then, by Lemmas 3 and 4, the following constructions are equal:

Further, since

is a valid quantum circuit, the output of the algorithm is a proper quantum state. Finally, as described in

Section 2, the output of the algorithm is a vector

such that the following is true:

This is exactly the solution to Problem 2. □
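The linear-algebra content of Theorem 2 can be emulated classically: the preimage under a tensor product is obtained by inverting one small factor at a time on its own subregister, without ever decomposing the input vector. The sketch below assumes 2x2 Hermitian factors with illustrative values and uses exact rational arithmetic; all names are hypothetical, and normalization of the resulting state is omitted.

```python
from fractions import Fraction
from functools import reduce

def kron(a, b):
    """Kronecker (tensor) product of two matrices given as nested lists."""
    return [[x * y for x in ra for y in rb] for ra in a for rb in b]

def matvec(m, v):
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]

def inverse_2x2(m):
    """Exact inverse of an invertible 2x2 matrix."""
    (a, b), (c, d) = m
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]

def apply_on_qubit(m, v, j, n):
    """Apply the 2x2 matrix m to qubit j (0 = most significant) of a length-2^n vector."""
    out = list(v)
    bit = 1 << (n - 1 - j)
    for x in range(len(v)):
        if not x & bit:
            v0, v1 = v[x], v[x | bit]
            out[x] = m[0][0] * v0 + m[0][1] * v1
            out[x | bit] = m[1][0] * v0 + m[1][1] * v1
    return out

# Illustrative real symmetric (hence Hermitian) factors
factors = [[[2, 1], [1, 3]], [[1, 1], [1, -2]], [[3, -1], [-1, 2]]]
n = len(factors)

# An input that is not a tensor product of single-qubit vectors
b = [Fraction(k) for k in range(1, 2 ** n + 1)]

# Invert factor by factor, one subregister at a time
x = b
for j, m in enumerate(factors):
    x = apply_on_qubit(inverse_2x2(m), x, j, n)

# Check against the full tensor product, built here only for verification
M = reduce(kron, factors)
residual_ok = matvec(M, x) == b
```

Each step touches the whole vector classically, so this emulation does not reproduce the quantum runtime; it only confirms that the factor-wise inversion yields the correct preimage.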

As the vertical construction is well-parallelizable, the runtime of the vertical HHL will be upper-bounded by the complexity of the most time-intensive inversion.

Theorem 3. Let be a horizontal decomposition of a Hermitian matrix M. Further, for all , let be a Hermitian matrix with its condition number and its sparseness. The time complexity of the vertical HHL from Theorem 2 for the matrix M is:

Proof. The time complexity of applying each

on a subregister is upper-bounded by that of applying the most cost-intensive one. As presented in

Section 2.1, this corresponds to a time complexity as follows:

Further, applying

to any subregister is equivalent to not evolving the register in any way. Therefore in each step, we apply

to one of the subregisters and leave the other registers constant. Since we need to invert

t sub-matrices, we have

t steps, each upper-bounded by Equation (6). This results in the runtime as follows:

In our analysis, we implicitly hide the fact that, as mentioned in

Section 2.1, in the HHL algorithm we need to perform a measurement of the ancilla register which, with a certain probability, might fail. However, using the amplitude amplification we can boost the probability of measuring the correct state to be almost 1 in

steps for each HHL-subroutine. In [

13], the authors describe a method to slow the rotation down when the amplitude of the correct state is big enough. In fact, for each subroutine, with

steps we can obtain a probability of measuring the incorrect state upper-bounded by

. Then, for the state

, which is the result of the vertical HHL algorithm, the probability of measuring at least a single

can be lower-bounded by the following:

Therefore, with at least probability the vertical HHL algorithm will succeed. It should be noted that the ancilla registers indicate when one of the matrix inversions has failed. □

Finally, we want to highlight that, in our considerations, the subroutines are not all executed at the same time. Instead, they are applied sequentially to each of the registers. We believe that applying all the routines simultaneously would not drastically change the result and could lead to a speed-up factor of compared to Theorem 3. We leave a formal proof of this claim for future research.

4. HHL for Möbius Transform

In this section, we introduce the Boolean Möbius transform, a building block of many cryptanalytical tools. Further, we present how the method from

Section 3 can be used for the Möbius transform.

4.1. Möbius Transform of Boolean Functions

The Boolean Möbius transform is the mapping

. It acts as the bijective transform between the ANF of a Boolean function

f, and its truth table

. It is defined in the following way:

where

is the Boolean function defined through the coefficients [

17] (cf. Equation (

1)). For a Boolean function

f, we can compute its Möbius transform in terms of matrices.

Claim 1. Let be a Boolean matrix recursively defined as:

- 1.

- 2.

where is a 0-matrix.

For a Boolean function f, can serve as the Möbius transform acting on the truth table and the corresponding ANF as follows:

.

A direct consequence is that is self-inverse.

The above claim is thoroughly discussed in [

18,

19]. As a consequence, we can describe the Möbius transform as a tensor product of polynomial terms:

Lemma 5. We can describe using the tensor product as:

Proof. Using the definition of tensor products for matrices, we have the following:

□
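Claim 1 and Lemma 5 can be checked on small instances. The sketch below assumes one common orientation of the 2x2 base block, with rows (1, 0) and (1, 1); the orientation and all helper names are assumptions made for illustration. It builds the matrix once by the block recursion and once as an n-fold tensor power, and checks that the result squares to the identity modulo 2.

```python
from functools import reduce

MU1 = [[1, 0], [1, 1]]  # assumed orientation of the 2x2 base block

def kron(a, b):
    """Kronecker (tensor) product of two matrices given as nested lists."""
    return [[x * y for x in ra for y in rb] for ra in a for rb in b]

def mobius_recursive(n):
    """Block recursion: [[prev, 0], [prev, prev]]."""
    if n == 1:
        return MU1
    prev = mobius_recursive(n - 1)
    zero = [0] * len(prev)
    top = [row + zero for row in prev]
    bottom = [row + row for row in prev]
    return top + bottom

def mobius_tensor(n):
    """n-fold tensor power of the base block."""
    return reduce(kron, [MU1] * n)

def matmul_mod2(a, b):
    size = len(b)
    return [[sum(a[i][k] & b[k][j] for k in range(size)) % 2
             for j in range(size)] for i in range(size)]

n = 4
A = mobius_recursive(n)
B = mobius_tensor(n)
identity = [[int(i == j) for j in range(2 ** n)] for i in range(2 ** n)]

same_matrix = A == B
self_inverse = matmul_mod2(A, A) == identity
```

The self-inverse property holds only over the two-element field; over the integers the square of the base block has an entry 2.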

Lemma 6. Let be the truth table of a Boolean function f. Then we can compute the algebraic normal form of f as:

Proof. By Claim 1 and Lemma 5, we know

can be described as follows:

Further, by Lemma 3, we know that the constructions are equivalent:

Finally, by using Claim 1, we know that proving the statement. □

Example 1. Let . Then, the truth table of f is . We will compute the ANF of f using the procedure mentioned in Lemma 6:

We highlight that the formula for the ANF of f from Lemma 6 resembles the formula mentioned in Theorem 2. We will next show that by using the HHL algorithm we can mimic the application of and the result will be a quantum state which represents the .
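The procedure of Lemma 6 has a direct classical counterpart: the 2x2 base block is applied to one bit position of the index at a time. The following minimal sketch assumes the index convention x = x1 + 2*x2 + 4*x3 and uses a hypothetical example function that is not taken from the text.

```python
def mobius_transform(values):
    """Möbius transform over the two-element field, one index bit at a time."""
    a = list(values)
    n = len(a).bit_length() - 1
    if len(a) != 1 << n:
        raise ValueError("length must be a power of two")
    for j in range(n):
        step = 1 << j
        for x in range(len(a)):
            # Positions with bit j set absorb their partner with bit j cleared
            if x & step:
                a[x] ^= a[x ^ step]
    return a

# Hypothetical example: f(x1, x2, x3) = x1*x2 XOR x3
tt = [((x & 1) & ((x >> 1) & 1)) ^ ((x >> 2) & 1) for x in range(8)]
anf = mobius_transform(tt)

# Applying the transform twice returns the truth table
round_trip = mobius_transform(anf)
```

The entries of `anf` equal to 1 sit at indices 3 (the monomial x1*x2) and 4 (the monomial x3). This classical version needs on the order of n * 2^n operations, since every pass touches the whole table.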

4.2. Möbius Transform Using HHL

In this section, we show how to tackle a specific instance of Problem 2. We will compute the preimage under the matrix describing the Möbius transform.

Problem 3. Given a matrix defined in Claim 1, and an input state with , find the state such that:

As we have seen in

Section 2, inverting the matrix

would solve Problem 3. However, we cannot simply apply the HHL algorithm for matrix

to an arbitrary register

. First, observe that

is defined as an

-matrix, while HHL operates over

. Instead, we need to use the reduction mentioned in

Section 2.2 to create a different matrix

, which delivers the same result as

over

. We need additional variables and equations to force the solution to be an

-vector and ensure that the addition modulo 2 is correct. It must be noted that this procedure will expand the size of the matrix only polynomially.

Second, the HHL algorithm requires the matrix to be inverted to be Hermitian. The resulting matrix

is not. We can use the procedure already mentioned in

Section 2 by [

3]. The main idea is that, for an arbitrary matrix

M that is not Hermitian, we can construct a new matrix

:

and the matrix

will be Hermitian. Since, for our target matrix

, all the entries are real, it is clear that

. No conjugation needs to be calculated to provide oracle access to

. Instead, we now also need the column-oracle of the matrix (cf.

Section 2.1). The reason for this is the observation that the bottom rows of

are in fact the rows of

, which are actually the columns of

. For matrices of exponentially big sparsity, this is an additional requirement.

Further, the input vector must be adjusted. While, for

, the input was a vector of the form

, for the new matrix

C we need the vector

. Also, the output will have a different form,

, due to the following:

There is one problem when we try to apply this approach to the matrix

. The sparsity plays a crucial role in the runtime of the HHL. As the Boolean Möbius transform always has one full column, the sparseness of

is exponential:

We will overcome the runtime implications of the high sparsity of as input to HHL using the decomposition technique.
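The growth of the sparsity can be observed on small instances. The sketch below assumes the base block with rows (1, 0) and (1, 1) and counts the non-zero entries per row and per column of the composed matrix before Booleanization; the helper names are hypothetical.

```python
from functools import reduce

MU1 = [[1, 0], [1, 1]]  # assumed orientation of the 2x2 base block

def kron(a, b):
    """Kronecker (tensor) product of two matrices given as nested lists."""
    return [[x * y for x in ra for y in rb] for ra in a for rb in b]

def mobius_matrix(n):
    return reduce(kron, [MU1] * n)

def max_row_weight(m):
    """Largest number of non-zero entries in any row."""
    return max(sum(1 for v in row if v) for row in m)

def max_col_weight(m):
    """Largest number of non-zero entries in any column."""
    return max(sum(1 for row in m if row[j]) for j in range(len(m)))

# (row sparsity, column sparsity) of the composed matrix for n = 1..6
stats = {n: (max_row_weight(mobius_matrix(n)), max_col_weight(mobius_matrix(n)))
         for n in range(1, 7)}

# The single 2x2 factor, by contrast, never has more than two entries per row
factor_sparsity = max_row_weight(MU1)
```

In this orientation the first column and the last row are full, so both values double with every additional variable, while the sparsity of a single factor stays at 2.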

4.3. Booleanized vs. Booleanized

To overcome the exponentially big runtime of , we will instead call the HHL with the Boolean Möbius transform for each single qubit. Again, we want to highlight that the HHL algorithm requires a Hermitian matrix, which neither nor are. In the construction above, we Booleanized the matrix to guarantee that the addition is performed modulo 2 and the solution is an -vector. However, it is not guaranteed that the matrix can be decomposed into a tensor of small matrices.

Instead, for the vertical HHL, we will first decompose the matrix

, and then perform the Booleanization on each sub-matrix

. Refs. [

7,

8] mentioned a set of additional or alternative equations, which when incorporated into the equation system, allow

-computation. In the example below, we present one candidate for such a matrix.

Example 2. Using the techniques mentioned in Section 2.2, we construct a matrix . For , the preimage under of the vector has the form: We define as: such that:

The construction uses a handful of extra qubits for each HHL application; however, the number of needed qubits stays polynomial in the size of the register holding the state

. The matrix must be Hermitianized before being plugged into the HHL (see Equation (7)). The result will be a matrix

. We want to highlight that the sparsity in Example 2 does not depend on the number

n:

and the condition number

is constant.

With the machinery in place, we can solve Problem 3 using the algorithm from Theorem 2. Further, we can estimate the runtime of the algorithm:

Theorem 4. Let be a horizontal decomposition of . Further, let be the Booleanized version of . Then, using the vertical HHL algorithm with input , for an arbitrary quantum state , we can solve Problem 3 in time.

Proof. As seen in Theorem 1, for a matrix decomposition

, the runtime is as follows:

In our case, all

’s correspond to the Booleanized version of the

matrix. We can use the techniques mentioned in

Section 2.2 to create a Booleanized

. All the reduction steps keep the sparsity, the condition number, and the size of the resulting matrix polynomial in terms of the size of

[

7]. Let

be the sparsity, condition number, and size of the matrix

, respectively. Plugging the values into the upper-bound, we obtain the collected runtime for the algorithm:

Keeping in mind that Problem 3 is an instance of Problem 2, and that Theorem 4 is an instance of Theorem 2, the claim follows. □

4.4. How to Generate Input

We propose two approaches to generate the input

as described in Problem 3. They consider two different settings and target different encryption scheme types. We first introduce the Q1 model, the more realistic yet less powerful one. In the Q1 model, the attacker has access to a quantum computer and some classical oracle. This is the standard model used for Shor’s or Grover’s algorithm. In the Q2 model, we assume a quantum oracle to which the attacker has access. A quantum oracle allows superposition queries. While this attack scenario is based on a very strong assumption, it might still lead to interesting insights into the structure of ciphers [

20,

21].

Our Q1 attack will target plaintext-independent constructions, like classical stream ciphers or block ciphers in counter-mode with fixed IVs. Since for a fixed cipher

f and a fixed IV value we can build a deterministic classical circuit, we are also able to build its unitary version

[

22]. Using

and an equally distributed superposition of all states, we can prepare the following state in polynomial time:

Now, when we measure a

in the second register, only values

i for which

will remain with a non-zero amplitude in the superposition:

The register

has now exactly the desired form of

from Problem 3, and each

corresponds to the bit output for a given key

i. Obviously, the

measurement only happens with a certain probability. However, since in the cryptographic setting we mostly work with functions that are almost balanced [2], we expect the probability of measuring the desired state to be fairly high. For a Boolean function

f, the said probability is the ratio of the Hamming weight of

and the size of its truth table, as it is for a balanced function

. We show the exact procedure in Algorithm 1.

| Algorithm 1: Q1 model input preparation for Problem 3 |

| Input: Quantum implementation of a keyed cipher |

| ; |

| ;

|

| ; |

| Measure register ; |

| If go to step 1; |

| return ; |

Output: , where is the value of scaled by the factor

to produce a valid quantum state.

|
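The input preparation in the Q1 model can be emulated classically on small instances. The following minimal sketch assumes a one-bit output function and models only the measurement statistics: the output qubit shows 1 with probability equal to the Hamming weight of the truth table divided by its size, and the remaining register then holds the normalized truth table. The function name `prepare_tt_state` and the example function are hypothetical.

```python
import math
import random

def prepare_tt_state(f, n, rng):
    """Emulate: uniform superposition, evaluate f, measure the output qubit, repeat until 1."""
    size = 1 << n
    tt = [f(i) for i in range(size)]
    weight = sum(tt)
    if weight == 0:
        raise ValueError("f is constant 0, so the outcome 1 is never observed")
    # After evaluating f, the outcome 1 appears with probability weight / size
    p_one = weight / size
    attempts = 0
    while True:
        attempts += 1
        if rng.random() < p_one:
            # Post-measurement state: equal amplitudes on all i with f(i) = 1
            amplitude = 1 / math.sqrt(weight)
            return [amplitude * t for t in tt], attempts

# Hypothetical balanced example on three bits: f = x1 XOR x2*x3
n = 3
f = lambda i: (i & 1) ^ (((i >> 1) & 1) & ((i >> 2) & 1))
tt = [f(i) for i in range(1 << n)]

state, attempts = prepare_tt_state(f, n, random.Random(0))
norm_squared = sum(a * a for a in state)
```

For a balanced function the loop succeeds with probability one half per attempt, so two attempts are needed on average.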

In the Q2 model, we can perform a similar trick to retrieve the superposition of all outputs of a function with a fixed key. The superposition, however, encapsulates the possible inputs to an encryption function with a secret key. Especially in the case where there are weak keys, this might allow retrieving some additional information about the key and some encrypted plaintext. The Q2 attack resembles the previous one and is presented in Algorithm 2.

| Algorithm 2: Q2 model input preparation for Problem 3 |

| Input: Quantum oracle for a cipher |

| ;

|

| ;

|

| ;

|

| Measure register ;

|

| If go to step 1;

|

| return ;

|

Output: , where is the value of scaled by the factor

to produce a valid quantum state.

|

5. Cryptographic Use-Case

In this section, we use the HHL algorithm for the Möbius transform to perform cryptanalysis of Boolean functions. We begin with an approach that allows creating the state , which might be polynomially reconstructible for sparse functions.

5.1. Retrieving the Algebraic Normal Form

In Section 4.4, we showed how to generate the input corresponding to the

for a Boolean function

f. The procedure runs in polynomial time and the result is a vector of the following form:

We also showed how to construct the matrix input for the HHL algorithm, which corresponds to the Boolean Möbius transform. The matrix is sparse and of low condition number and a constant size. The HHL algorithm would take constant time to run for a single instance of , and time to apply the Möbius transform to the whole register (cf. Theorem 4).

As seen in

Section 4.1, applying

to the vector

results in a vector which describes the algebraic normal form of the function

f. A similar result is obtained when the vertical HHL algorithm with

input is applied to the register

.

Theorem 5. Let be the vertical application of to n qubits: The ancilla register is needed to cover for the special Booleanized construction of . Then, applying the to the state results in the following register: With an additional measurement of some helper register we can obtain the pure state .

Proof. As seen in Theorem 4, applying the vertical HHL approach with input allows computing the preimage of the input vector . The result is equivalent to the preimage of under the Boolean Möbius transformation . As seen in Claim 1, the result is a vector holding the algebraic normal form of f scaled by a factor dependent on the superposition. Finally, we can discard the ancilla register (or perform some additional conditional measurement) and only consider the register . □

The resulting register corresponds to the vector description of the ANF of f, scaled by a factor. The important observation is that only the coefficients which are one have a non-zero amplitude.

5.1.1. Sparse ANF Representation

Classically, reconstruction of an unknown Boolean function is a difficult problem. One approach comes from learning theory and is based on the Fourier spectrum [2]. One can also use Formula (1) mentioned in Section 1.2 to reveal the ANF. While obtaining low-degree coefficients is not problematic, the complexity grows exponentially with the degree of the coefficient. On the other hand, if the degree of the coefficient is too high, it affects a small number of possible outputs and has a low influence on the truth table of the function.

When considering the result of the algorithm described in Theorem 5, we want to highlight that the resulting state is a uniform superposition of all basis states which contribute to the ANF. Upon measurement, we obtain each of the ANF’s active (non-zero) coefficients with the same probability. In contrast to the classical scenario, the number of steps needed for each coefficient is independent of the coefficient’s degree. Especially for Boolean functions with a sparse representation (a polynomial number of coefficients with respect to n) and many high-order coefficients, the ANF reconstruction can be significantly sped up. Simple measurements can be combined with techniques like amplitude amplification to guarantee that the whole ANF is properly recovered in polynomial time [13].

In the Q1 model, retrieving the ANF can give us information about the secret key that is used to generate some bit-stream. By combining information from multiple Boolean functions (e.g., multiple one-bit projections generated by the stream cipher), we could build a polynomial-size equation system which can be solved for the secret key. The ANF obtained in the Q2 model does not give us direct information about the key, but could be used to decrypt future ciphertexts.

5.1.2. Estimating the Number of Terms

In some cases, especially when the number of non-zero ANF coefficients is super-polynomial, we might consider other properties of f rather than its ANF. The state obtained in Theorem 5 allows us to estimate the number of non-zero coefficients in the algebraic normal form of the Boolean function. We can achieve this using a technique called Quantum Amplitude Estimation [13].

Quantum amplitude estimation deals with a problem closely related to the setting of Grover’s algorithm. However, instead of searching for an element of the marked set, we are interested in the size of said set. As in Grover’s algorithm, the algorithm divides the space into two subspaces following some indicator function f:

We call

the good components and

the bad components. We want to estimate the probability of measuring a good component. To achieve this, the algorithm combines Grover’s operator with phase estimation. After [13] was published, other candidate algorithms for the same problem were constructed [23,24,25].

We begin with the straightforward observation that the state obtained in Theorem 5 is an equal superposition of all basis states which correspond to the non-zero coefficients of the ANF of f. This means that its amplitudes are only zeros and ones scaled by a common normalization factor. If we were able to estimate the amplitude of one of the active entries, we could determine the Hamming weight of the ANF. This is exactly the sparsity of the function f. Further, since all non-zero amplitudes are equal, we just need to find the amplitude of a single element of the ANF (e.g., by measuring the state, we find one such element and subsequently estimate its amplitude). Sometimes, we might not be interested in the exact number but rather in proving that it is above a certain threshold. Since the runtime of most amplitude estimation algorithms depends on an error threshold, we could verify only whether the amplitude is smaller than some value, which guarantees the desired ANF sparseness.
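The relation between one estimated amplitude and the Hamming weight can be written out as a short worked equation. The symbols w (number of non-zero ANF coefficients), a_u (ANF coefficients), u* (one active index found by measurement) and p̃ (the value returned by amplitude estimation) are notation assumed here for illustration only.

```latex
\begin{align*}
  |\psi\rangle &= \frac{1}{\sqrt{w}} \sum_{u \,:\, a_u = 1} |u\rangle ,
  \qquad
  p = \bigl|\langle u^{*} | \psi \rangle\bigr|^{2} = \frac{1}{w} ,
  \qquad
  w = \frac{1}{p} , \\
  |\tilde{p} - p| &< \frac{1}{2}\left(\frac{1}{w} - \frac{1}{w+1}\right)
  = \frac{1}{2\,w\,(w+1)}
  \quad\Longrightarrow\quad
  \text{the hypotheses } w \text{ and } w+1 \text{ can be told apart.}
\end{align*}
```

Under these assumptions, recovering w exactly needs a precision on the order of 1/w², whereas a threshold test of the kind mentioned above can work with a coarser precision.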

We could iterate the above ideas to prove additional properties. By carefully choosing the function used for amplitude estimation, we could check how many higher-order terms are in the ANF. The good states (as defined in [13]) could be the terms with a degree greater than some threshold. This task would take exponential time in the classical scenario, but could be efficiently performed on a quantum computer.
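The quantity that amplitude estimation would approximate in this setting can be illustrated classically. The sketch below computes, for a small example, the probability of measuring a good state when the good states are the ANF terms of degree above a threshold. The example ANF and the function names are assumptions made for illustration; the brute-force computation is exactly the exponential classical route and is shown only to define the target value.

```python
def mobius_transform(table):
    """Binary Moebius transform over F_2 (truth table <-> ANF, self-inverse)."""
    coeffs = list(table)
    n = len(coeffs).bit_length() - 1
    for i in range(n):
        bit = 1 << i
        for x in range(len(coeffs)):
            if x & bit:
                coeffs[x] ^= coeffs[x ^ bit]
    return coeffs

def degree(u):
    """Degree of the monomial indexed by u (number of variables in it)."""
    return bin(u).count("1")

def good_state_probability(truth_table, threshold):
    """Fraction of active ANF terms whose degree exceeds the threshold."""
    anf = mobius_transform(truth_table)
    active = [u for u, c in enumerate(anf) if c]
    if not active:
        raise ValueError("the zero function has no ANF terms")
    good = [u for u in active if degree(u) > threshold]
    return len(good) / len(active)

# Hypothetical ANF: 1 XOR x0*x1 XOR x2 XOR x0*x1*x2 (indices 0, 3, 4, 7).
example_anf = [1, 0, 0, 1, 1, 0, 0, 1]
truth_table = mobius_transform(example_anf)
probability = good_state_probability(truth_table, threshold=1)
```

Here two of the four active terms have degree greater than one, so a measurement of the uniform superposition would hit a good state with probability one half.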

5.1.3. Comparison to Classical Approaches

Retrieving the algebraic normal form of a Boolean function is a concept closely related to computational learning theory. In the classical scenario, given a set of samples of the form

, we want to compute a function

such that the following is true:

for some non-negligible constant

c. Obviously, reconstructing the original function

f would solve this problem. However, since higher-order terms of

only influence a few outputs, ignoring terms with orders bigger than some predetermined value

t might be beneficial. How many such high-order terms might be ignored depends on the value of

c.

In [2], a learning approach is proposed which relies on determining the Fourier coefficients of f instead of its ANF. As there are 2^n such coefficients and computing each one is not a trivial task, this approach is not manageable for larger values of n. The corollary below estimates the runtime of such an approach for a specific set of functions with total influence bounded by some value t.

Corollary 1 ([2]).

Let be the total influence of a Boolean function f, defined as:where are the Fourier coefficients of f.For , let . Then is learnable from random examples with error ϵ in time .

An alternative approach would be to learn the actual ANF coefficients of the function f. As mentioned in Section 1.2, the formula for a coefficient of the term

is as follows:

So, to compute a coefficient of order d, we need to evaluate the function f at 2^d inputs and add them together. For higher-order terms, the number of computational steps is thus exponential in n.
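The growth of this cost with the order of the term can be seen in a short sketch. It assumes the usual Möbius-inversion formula, in which the coefficient of a monomial is the XOR of f over all inputs whose support lies inside the support of that monomial; the example function and the names used are hypothetical.

```python
def anf_coefficient(f, u):
    """Return (coefficient of the monomial indexed by u, number of evaluations of f).

    The coefficient is the XOR of f(x) over all x that are bitwise
    contained in u, so 2**popcount(u) evaluations are needed.
    """
    coefficient = 0
    evaluations = 0
    x = u
    while True:
        coefficient ^= f(x)
        evaluations += 1
        if x == 0:
            break
        x = (x - 1) & u  # next smaller submask of u
    return coefficient, evaluations

def f(x):
    # Hypothetical example: f(x0, x1, x2) = x0*x1 XOR x2, x0 is the lowest bit.
    return ((x & 1) & ((x >> 1) & 1)) ^ ((x >> 2) & 1)

order_one = anf_coefficient(f, 0b100)    # term x2
order_two = anf_coefficient(f, 0b011)    # term x0*x1
order_three = anf_coefficient(f, 0b111)  # term x0*x1*x2
```

Each additional variable in the monomial doubles the number of evaluations, which is the classical cost that the degree-independent quantum measurement avoids.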

In comparison, our approach depends solely on n and the Hamming weight of the algebraic normal form of f. The high-order coefficients are as easy to determine as the low-order ones. Furthermore, we are not aware of any classical algorithm, other than trivial counting, that determines the Hamming weight of the ANF of a function.

Historically, certain sparse but high-order functions were used in cryptography. A famous example, the Toyocrypt cipher, was attacked in [26]. Even though the polynomial used there was not secret, some of the currently used ciphers may use functions with sparse polynomial representation.

5.2. Algebraic Transition Matrix

Another approach, using a concept closely related to the Boolean Möbius transform, was proposed in [5]. For a vectorial Boolean function

, ref. [5] defines a linear operator mapping from

to

, where

is the Kronecker delta function. The operator is called a transition matrix:

Definition 1 (Transition matrix [5]).

Let be a vectorial Boolean function. Define as the unique linear operator that maps to . The transition matrix of F is the coordinate representation of with respect to the Kronecker delta bases of and .

The transition matrix has a set of useful properties which allow combining smaller transformations into a bigger, more complex one [5]. However, in this paper, we will not focus on those; rather, we will show that, in the Q2 model, we can obtain oracle access to the transition matrix.

Example 3 ([5]).

Consider a function defined as follows:

The truth table of such a function is as follows:

The transition matrix is then as follows:

We want to highlight that, given a column index i, the only non-zero entry appears in the F(i)-th row. If we allow superposition queries to the function F (as is assumed in the Q2 model), we have a quantum column-oracle for the transition matrix. To obtain the row-oracle which, given a row index, returns the indices of non-zero entries in that row, we additionally need an oracle for the inverse function of F. For a permutation, the oracle acts as a bijection. However, as seen in the example above, this need not be the case in general. With some assumptions about the maximal number of elements that map to the same value, we can still solve this problem with a bigger output register.

We also want to highlight that such a matrix is well-suited for the HHL algorithm: the sparseness and condition number can be upper-bounded by . Especially for F, which is a permutation, the runtime of HHL with is .
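The column and row structure described here can be sketched classically. The lookup table below is a hypothetical, non-injective function on two bits and is not the function of Example 3; the helper names are likewise assumptions. The row lookup is done by brute force, which stands in for the inverse oracle that the quantum setting would require.

```python
def transition_matrix(F, n, m):
    """Matrix with a single 1 in column x, located in row F(x)."""
    T = [[0] * (1 << n) for _ in range(1 << m)]
    for x in range(1 << n):
        T[F(x)][x] = 1
    return T

def column_oracle(F, x):
    """Row index of the only non-zero entry in column x: one query to F."""
    return F(x)

def row_oracle(F, n, y):
    """Column indices of the non-zero entries in row y: all preimages of y."""
    return [x for x in range(1 << n) if F(x) == y]

# Hypothetical example on two bits; inputs 1 and 2 collide on output 2.
TABLE = [0, 2, 2, 1]

def F(x):
    return TABLE[x]

T = transition_matrix(F, 2, 2)
collisions = row_oracle(F, 2, 2)
```

Row 2 of this matrix holds two non-zero entries and row 3 holds none, which illustrates why a non-bijective F needs a larger output register for the row-oracle.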

While the transition matrix is not necessarily useful in the HHL setting, it is a building block of a more interesting construction. We will first introduce a dual to the Boolean Möbius transform, as defined in [5], and then use it to build an algebraic transition matrix.

Definition 2 (Dual Boolean (binary) Möbius Transformation [5]).

The dual Boolean Möbius transformation of a Boolean function is the Boolean function , where is the coordinate of f corresponding to in the precursor basis. The precursor basis vectors are the indicator functions of precursor sets: The matrix representation of has the following form:

Observe that the dual Boolean Möbius transform is actually the transpose of the Boolean Möbius transform defined in Section 4.1. By techniques similar to the ones mentioned above, we can also Booleanize the dual transform. With the definitions above, we can finally introduce the algebraic transition matrices:

Definition 3 (Algebraic Transition Matrix [5]).

Let be a vectorial Boolean function. Define as the dual Boolean Möbius transformation of . That is: The algebraic transition matrix of F is the coordinate representation of with respect to the precursor bases of and .

The algebraic transition matrix can be computed as a horizontal decomposition of three matrices, two dual Möbius transforms and a transition matrix. We observe that like the Boolean Möbius transform, its dual is self-inverse. So in fact, we can compute

as follows:

The HHL algorithm for each of those matrices runs in time

. The two dual transforms can be vertically decomposed (cf. Figure 1b) using the technique from Section 4.2, while the transition matrix is well-suited for HHL, as we argued above. We can combine the three HHL routines by straight concatenation:

As a result, we can compute the preimage of a quantum state under the algebraic transition matrix in polynomial time by combining the standard HHL algorithm with the vertical HHL approach.
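The horizontal decomposition can be checked on a small instance with ordinary matrix arithmetic modulo 2. The sketch below assumes the convention that the Möbius matrix has a one in row u and column x whenever x is bitwise contained in u, takes its transpose as the dual transform, and multiplies dual transform, transition matrix and dual transform. The example function and all names are hypothetical, and the code is a classical check, not an HHL implementation.

```python
def mobius_matrix(n):
    """Entry (u, x) is 1 iff x is bitwise contained in u (assumed convention)."""
    size = 1 << n
    return [[1 if (x & u) == x else 0 for x in range(size)] for u in range(size)]

def transpose(A):
    return [list(column) for column in zip(*A)]

def matmul_mod2(A, B):
    """Matrix product over F_2."""
    return [[sum(a & b for a, b in zip(row, column)) % 2 for column in zip(*B)]
            for row in A]

def identity(size):
    return [[1 if i == j else 0 for j in range(size)] for i in range(size)]

def transition_matrix(F, n, m):
    T = [[0] * (1 << n) for _ in range(1 << m)]
    for x in range(1 << n):
        T[F(x)][x] = 1
    return T

# Hypothetical example: F(x0, x1) = (x0 XOR x1, x0*x1 XOR 1), y = y0 + 2*y1.
TABLE = [2, 3, 3, 0]

def F(x):
    return TABLE[x]

n = m = 2
dual_n = transpose(mobius_matrix(n))
dual_m = transpose(mobius_matrix(m))
# The dual transform is its own inverse; this holds modulo 2.
dual_is_self_inverse = matmul_mod2(dual_n, dual_n) == identity(1 << n)
T = transition_matrix(F, n, m)
A = matmul_mod2(matmul_mod2(dual_m, T), dual_n)
```

In this example, row v of the product lists the ANF coefficients of the product of the output bits selected by v, for instance row 2 encodes 1 XOR x0x1.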

5.2.1. Interpretation of ATM Mappings

The algebraic transition matrix gives us deep insights into the vectorial Boolean function which is being investigated. In [5], the authors show that, for

,

, the matrix

following holds:

where

is the

Jth coefficient

of the algebraic normal form of the Boolean function

f as defined in

Section 1.2.

Using the HHL algorithm does not give us direct access to the algebraic transition matrix. However, ref. [5] provides a theorem which explains the result of multiplying

by some vector

:

Theorem 6 (Property of Algebraic Transition Matrices [5]).

Let be a vectorial Boolean function and its algebraic transition matrix. Further, let be a Boolean function with m inputs. Then:

In other words, for a given Boolean function of the form , using the HHL algorithm with input matrix and a vector , we can find the state which encapsulates the searched function.
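A composition property of this kind can be checked on a toy instance. The sketch below assumes that row v of the algebraic transition matrix holds the ANF of the product of the output bits of F selected by v, and that the ANF of the composition of g with F is then obtained by multiplying the transpose of that matrix with the ANF vector of g over F_2. The functions F and g and all names are hypothetical.

```python
def mobius_transform(table):
    """Binary Moebius transform over F_2 (truth table <-> ANF, self-inverse)."""
    coeffs = list(table)
    n = len(coeffs).bit_length() - 1
    for i in range(n):
        bit = 1 << i
        for x in range(len(coeffs)):
            if x & bit:
                coeffs[x] ^= coeffs[x ^ bit]
    return coeffs

def algebraic_transition_matrix(F, n, m):
    """Row v: ANF of the product of the output bits of F selected by v."""
    rows = []
    for v in range(1 << m):
        product_table = [1 if (F(x) & v) == v else 0 for x in range(1 << n)]
        rows.append(mobius_transform(product_table))
    return rows

def compose_anf(A, anf_g):
    """Transpose of A applied to the ANF vector of g, over F_2."""
    return [sum(A[v][u] & anf_g[v] for v in range(len(A))) % 2
            for u in range(len(A[0]))]

# Hypothetical example: F(x0, x1) = (x0 XOR x1, x0*x1 XOR 1), y = y0 + 2*y1.
TABLE = [2, 3, 3, 0]

def F(x):
    return TABLE[x]

def g(y):
    # Hypothetical g(y0, y1) = y0*y1 XOR y1.
    return ((y & 1) & ((y >> 1) & 1)) ^ ((y >> 1) & 1)

A = algebraic_transition_matrix(F, 2, 2)
anf_g = mobius_transform([g(y) for y in range(4)])
via_matrix = compose_anf(A, anf_g)
direct = mobius_transform([g(F(x)) for x in range(4)])
```

Both routes give the same coefficient vector for the composed function in this example, which is the relation that the HHL-based preimage computation exploits in the opposite direction.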

Example 4 ([5]).

Consider a function defined as follows: The algebraic transition matrix is then as follows: The rows correspond to products of the projection functions ’s, while the columns indicate whether the coefficient of each is one or zero.

The algebraic transition matrix also allows quick computation of the Boolean function obtained by composition of F with any Boolean function. For example, for the Boolean function , we can compute :

A possible attack scenario includes finding a Boolean formula to compute the value of each

from the output of the function

F. For example, to find the formula for

, the input vector to the HHL algorithm would have to be as follows:

We highlight that the matrix to be inverted is not

, but

. This is not a problem as we can just switch the row- and column-oracles to obtain the transpose of the matrix

and use the following fact:

For each low-order term

, we can use the techniques used in

Section 5.1.2 to verify whether the preimage has a small Hamming weight. If such an input is found, there exists a sparse Boolean function that allows the computation of the value of

. We can recover such a function and use it to deduce the values of

for

. For example, we can use the fact that if

, then

follows

.

5.2.2. Comparison to Classical Approaches

In the classical setting, to find a formula to compute certain bits, the attacker would have to analyze the cipher’s algebraic properties. The concept is similar to algebraic cryptanalysis, where the cipher is rewritten as a set of equations for which the solution is the secret key [27].

We are, however, not interested in building a huge equation system, but rather want to find a function which, combined with the original function, leaks secret bits. The idea resembles the concept of annihilators, introduced in [26]. An annihilator of a Boolean function

is another Boolean function

such that the following is true:

The annihilators allow reducing the degree of algebraic equations and, as a consequence, finding some of the secret key bits used in encryption. In our approach, we are rather interested in analyzing a vectorial Boolean function

using a Boolean function

such that the following is true:

but the desired outcome is similar.
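For comparison, the classical notion of an annihilator can be illustrated by exhaustive search on a tiny function. The sketch assumes the standard definition, in which a non-zero function g annihilates f when the product of f and g is zero on every input; the example function and the helper names are hypothetical, and the search is exponential and only meant for very small n.

```python
from itertools import product

def mobius_transform(table):
    """Binary Moebius transform over F_2 (truth table <-> ANF, self-inverse)."""
    coeffs = list(table)
    n = len(coeffs).bit_length() - 1
    for i in range(n):
        bit = 1 << i
        for x in range(len(coeffs)):
            if x & bit:
                coeffs[x] ^= coeffs[x ^ bit]
    return coeffs

def algebraic_degree(table):
    """Largest degree of an active ANF term (-1 for the zero function)."""
    anf = mobius_transform(table)
    return max((bin(u).count("1") for u, c in enumerate(anf) if c), default=-1)

def is_annihilator(f_table, g_table):
    """g is non-zero and f(x)*g(x) = 0 for every input x."""
    return any(g_table) and all((a & b) == 0 for a, b in zip(f_table, g_table))

def lowest_degree_annihilators(f_table):
    candidates = [list(g) for g in product((0, 1), repeat=len(f_table))
                  if is_annihilator(f_table, list(g))]
    if not candidates:
        return None, []
    best = min(algebraic_degree(g) for g in candidates)
    return best, [g for g in candidates if algebraic_degree(g) == best]

# Hypothetical example: f(x0, x1) = x0*x1, given as a truth table.
f_table = [0, 0, 0, 1]
best_degree, annihilators = lowest_degree_annihilators(f_table)
```

For this example the degree-two function f has three annihilators of degree one, namely x0 XOR x1, 1 XOR x0 and 1 XOR x1, which shows the degree reduction that makes annihilators useful.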

6. Conclusions

In this paper, we have shown how tensor-decomposable matrices can be used in the HHL setting to improve the runtime of the linear system solving algorithm proposed in [3]. We have proven that a vertical decomposition has a direct influence on the runtime and, in the quantum setting, allows preimage computation even if the input to be inverted is not tensor-decomposable. This is a direct improvement over the classical computation.

Next, we have shown a polynomial-time procedure to create a quantum state which describes the algebraic normal form of a Boolean function f. The register holds the coefficient vector of f, scaled by a normalization factor. We further show how this state could be used to extract some information about the ANF. In the most straightforward scenario, a distinguisher attack could be performed to differentiate a cryptographic function from a random function. The Hamming weight of the ANF of a random function will be roughly 2^(n-1), that is, half of the 2^n possible coefficients. Meanwhile, a cryptographic function is likely to deviate from this value. The attack could also be used as a testing method for new ciphers. It would allow a quicker verification of the sparsity of ANF representations.

We show how a construct called an algebraic transition matrix can be used in the HHL setting to improve the cryptanalysis of Boolean functions. By using the polynomial-time HHL algorithm for all three parts of the horizontal decomposition of the algebraic transition matrix, with a specific input, we can find formulas for the values of the key used in the encryption process.

We finally point to the fact that the Boolean Möbius transform is self-inverse. Therefore, it is not necessary to compute the preimage under the Möbius transformation. We could compute the value and obtain the same result. We would not have to invert the eigenvalue , but use it as a factor. It remains an open question how significantly such an alteration improves the runtime. We believe that, for some use cases, it might pose an interesting question.