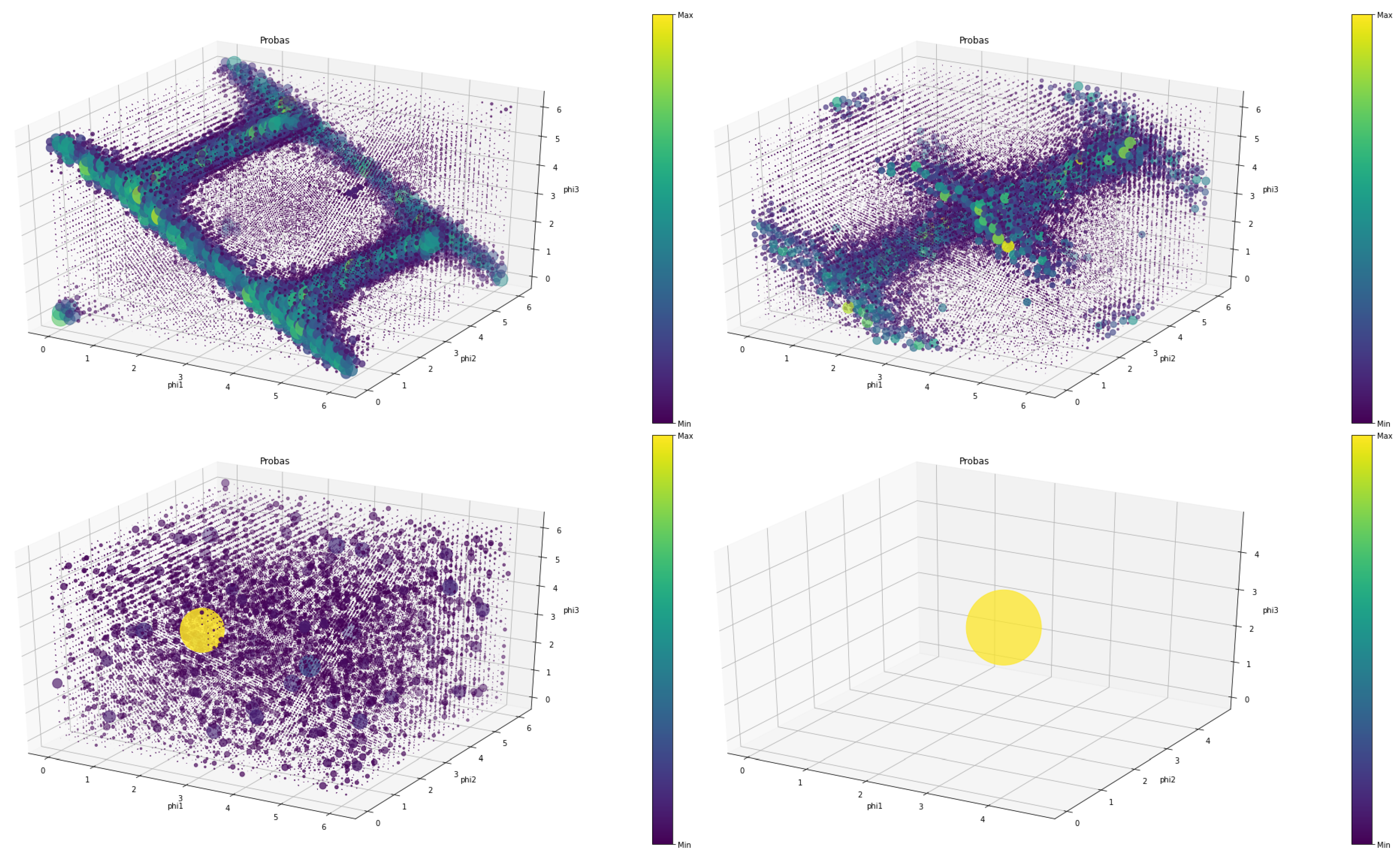

This section presents a new method for learning mixed strategies in quantum games. The results focus on two-player games, but the method extends to N players. The main difficulty in computing mixed strategies in quantum games is that the set of pure strategies is already infinite: each pure strategy is specified by three angles, and each of them can take a continuum of real values. A mixed strategy is therefore a probability density function over these three continuous variables.

3.1. Learning Algorithm

First, since the three parameters are angles, each one is restricted to a bounded interval of real values, so the final mixed strategy is a probability density function (PDF) over three bounded variables. The principal idea is to use Q-learning [29] on a discretized strategy space to learn the approximate value of every strategy and to construct a PDF from those values. At each iteration, players select their strategies by sampling from their PDF, receive a reward, update their own Q-tables and PDFs with this new information, and continue with the next iteration.
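A minimal Python sketch of the discretization step is shown below. It assumes that each of the three angles is split into the same number of evenly spaced bins over $[0, 2\pi)$; the interval, the bin count, and the names `strategy_grid` and `q_table` are illustrative assumptions, not the paper's settings. Each grid point is one discrete pure strategy with its own Q-value.

```python
import itertools

import numpy as np


def strategy_grid(bins_per_angle, low=0.0, high=2 * np.pi):
    """All discretized pure strategies as (angle1, angle2, angle3) triples.

    The interval [low, high) is an assumption; endpoint=False avoids
    duplicating low and high, which are the same angle when high - low = 2*pi.
    """
    values = np.linspace(low, high, bins_per_angle, endpoint=False)
    return list(itertools.product(values, repeat=3))


grid = strategy_grid(8)          # 8 bins per angle -> 8**3 = 512 strategies
q_table = np.zeros(len(grid))    # one Q-value per discretized strategy
print(len(grid), grid[1])
```

The grid grows as the cube of the bins per angle, so the resolution directly trades off against how many strategies each player has to evaluate.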

Three difficulties arise when agents try to learn mixed strategies in quantum games. The first is that, when learning in games, each player's reward depends not only on the strategy she chooses but also on the strategies of the other players. In other words, the feedback a player uses to update the Q-value of a strategy varies with the strategies the other players have selected. This multi-agent setting makes learning harder than the single-agent case, where the feedback depends only on the strategy selected by the single player.

The second difficulty comes from a difference in nature between classical and quantum strategies. Pure classical strategies always produce deterministic outcomes, and only mixed classical strategies lead to random outcomes, but pure quantum strategies can already produce random outcomes. For example, in Prisoner's Dilemma version 2a, when player0 and player1 select certain pure quantum strategies, the quantum state before measurement is an equal-weight superposition of the four basis states. The final actions are then (cooperate, cooperate), (cooperate, defect), (defect, cooperate), and (defect, defect), each with probability 0.25. Therefore, even if both players repeat their strategies, player0 receives the (cooperate, cooperate) payoff, 0, 10, or 3.3, respectively, each with probability 0.25, and player1's reward is random in the same way. This nondeterminism of pure quantum strategies slows down the learning of mixed quantum strategies, because the Q-value of each strategy must be estimated from noisy rewards.
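To make this effect concrete, the following standard-library sketch samples player0's reward for one fixed pair of pure quantum strategies whose four outcomes each have probability 0.25. The helper names are hypothetical, and because the (cooperate, cooperate) payoff is not given in this section, the value used below is a labeled placeholder. Individual rewards fluctuate; only their average, which is what a Q-value estimates, is stable.

```python
import random

# Joint actions in the order used in the text: (player0, player1).
OUTCOMES = [("C", "C"), ("C", "D"), ("D", "C"), ("D", "D")]


def sample_reward(outcome_probs, payoffs, rng):
    """Simulate one measurement and return player0's payoff for the observed actions."""
    outcome = rng.choices(OUTCOMES, weights=outcome_probs, k=1)[0]
    return payoffs[outcome]


def expected_reward(outcome_probs, payoffs):
    """Average payoff that a Q-value for this pure quantum strategy should approach."""
    return sum(p * payoffs[o] for p, o in zip(outcome_probs, OUTCOMES))


# Equal-weight superposition: every joint action has probability 0.25.
probs = [0.25, 0.25, 0.25, 0.25]
# Player0's payoffs; the (C, C) entry is a HYPOTHETICAL placeholder, not the paper's value.
payoffs = {("C", "C"): 6.0, ("C", "D"): 0.0, ("D", "C"): 10.0, ("D", "D"): 3.3}

rng = random.Random(0)
rewards = [sample_reward(probs, payoffs, rng) for _ in range(5)]  # noisy despite fixed strategies
print(rewards, expected_reward(probs, payoffs))
```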

The third difficulty is that, with mixed strategies, learning the Q-values is not enough. Agents must also turn that information into a probability density function that makes them neither too greedy nor too hesitant. Greedy behavior makes agents chase higher rewards using the current estimated values of the strategies without learning enough from the environment; this is called exploitation and may cause convergence to local optima. Hesitant behavior, on the other hand, makes agents focus mainly on improving their knowledge of each action instead of obtaining higher rewards; this is called exploration and may prevent convergence altogether.

With these difficulties in mind, the algorithm works as follows. In the first iteration, each player initializes her Q-table; ideally, this table converges to a quantitative representation of how good each strategy is. Each player also initializes to zero a counter that records how many times each strategy has been selected. This counter is used when updating the Q-table: the more times a strategy has been selected, the smaller the influence of a newly obtained reward on its Q-value. Finally, each player selects a strategy at random. Once all players have a strategy, they play the game and each obtains the reward given by the game's 2 × 2 payoff matrix.

In the next iteration, agents use their selected strategy $s$ and received reward $r$ to update the counter, $N(s) \leftarrow N(s) + 1$, and the Q-value, $Q(s) \leftarrow Q(s) + \frac{1}{N(s)}\left[r - Q(s)\right]$. Players then have to select a new strategy. To do so, they convert Q-values into probabilities, creating a PDF from which they sample the next strategy. The probability of each strategy is given by the softmax function $p(s) = \frac{e^{Q(s)/T}}{\sum_{s'} e^{Q(s')/T}}$, where $T$ is called the temperature parameter. For high temperatures ($T \to \infty$), all strategies have nearly the same probability, and the lower the temperature, the more the Q-values affect the probabilities. For low temperatures ($T \to 0$), the probability of the strategy with the highest Q-value tends to 1.
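The counter update, Q-update, and softmax step can be sketched as follows. This assumes that the counter enters the Q-update as a $1/N(s)$ step size, which makes $Q(s)$ the running mean of the rewards observed for $s$; `update` and `softmax` are illustrative names.

```python
import numpy as np


def update(q, count, s, reward):
    """Counter and Q-value update for the strategy s that was just played."""
    count[s] += 1
    # Step size 1/N(s): each new reward moves Q(s) less, so Q(s) is the sample mean.
    q[s] += (reward - q[s]) / count[s]


def softmax(q, temperature):
    """Boltzmann probabilities p(s) = exp(Q(s)/T) / sum_s' exp(Q(s')/T)."""
    z = np.asarray(q, dtype=float) / temperature
    p = np.exp(z - z.max())   # subtracting the max avoids overflow and leaves p unchanged
    return p / p.sum()


q, count = np.zeros(3), np.zeros(3)
for r in (3.0, 5.0, 10.0):
    update(q, count, 0, r)
print(q[0])                                # 6.0, the mean of 3, 5 and 10
print(softmax([1.0, 2.0, 0.5], 100.0))     # nearly uniform
print(softmax([1.0, 2.0, 0.5], 0.05))      # almost all mass on strategy 1
```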

Two last concepts are worth mentioning before studying the results of the algorithm. First, to avoid local optima, we add an ε-greedy factor: in every iteration, each player draws a random real number between 0 and 1, and if this number is less than ε, the player selects her strategy at random instead of sampling from the PDF. Second, the temperature $T$ of the softmax function starts from a high initial value (prioritizing exploration) and decreases to a low final value (prioritizing exploitation), at a speed set by a decay-rate factor.
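The exact decay equation for $T$ is not stated here, so the sketch below assumes an exponential relaxation $T_t = T_f + (T_0 - T_f)\,e^{-kt}$, where $k$ plays the role of the decay-rate factor; the function names are hypothetical. The ε-greedy wrapper takes an already computed PDF, such as the softmax probabilities.

```python
import numpy as np


def temperature(t, t0, tf, decay):
    """Assumed schedule: exponential decay from t0 (exploration) toward tf (exploitation)."""
    return tf + (t0 - tf) * np.exp(-decay * t)


def epsilon_greedy_pick(pdf, epsilon, rng):
    """With probability epsilon ignore the PDF and pick a strategy uniformly at random."""
    if rng.random() < epsilon:
        return int(rng.integers(len(pdf)))
    return int(rng.choice(len(pdf), p=pdf))


rng = np.random.default_rng(0)
print([round(float(temperature(t, 10.0, 0.1, 0.01)), 3) for t in (0, 100, 1000)])
print(epsilon_greedy_pick(np.array([0.0, 1.0, 0.0]), epsilon=0.1, rng=rng))
```

Because the schedule approaches $T_f$ asymptotically, choosing $T_f > 0$ keeps the softmax well defined for every iteration.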

Algorithm 1 gives a complete pseudocode description of the learning method.

Algorithm 1. Agents learning mixed strategies in quantum games

Require: number of players; initial temperature; final temperature; velocity of the temperature decay; ε-greedy factor ε.

- For each iteration:
  - Update the temperature $T$.
  - For each player n:
    - If this is the first step: initialize the strategy counter, initialize the Q-table, and return a random strategy for player n.
    - Otherwise:
      - Update the counter for the strategy s played in the previous iteration.
      - Update the Q-table entry for strategy s.
      - If the random number drawn between 0 and 1 is less than ε (non-greedy step), return a random strategy for player n.
      - Otherwise, update the probability density function over all strategies, select a strategy by sampling from the PDF, and return the selected strategy for player n.
  - Obtain the reward of every player from their strategies.
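Putting the pieces together, the following Python sketch implements the loop of Algorithm 1 for independent agents. It assumes a flat list of discretized strategies, a zero-initialized Q-table, a $1/N(s)$ Q-update, and an exponential temperature decay; the class `Agent`, the function `run`, and the toy environment are hypothetical. The environment `play` is a black box that maps all chosen strategy indices to per-player rewards, so each agent only ever sees its own strategy and reward.

```python
import numpy as np


class Agent:
    """Independent learner: observes only its own chosen strategy and reward."""

    def __init__(self, n_strategies, t0, tf, decay, epsilon, seed):
        self.q = np.zeros(n_strategies)      # Q-table (zero start is an assumption)
        self.count = np.zeros(n_strategies)  # times each strategy has been played
        self.t0, self.tf, self.decay, self.epsilon = t0, tf, decay, epsilon
        self.rng = np.random.default_rng(seed)
        self.last = None                     # strategy played in the previous iteration

    def temperature(self, t):
        # Assumed exponential decay from t0 toward tf.
        return self.tf + (self.t0 - self.tf) * np.exp(-self.decay * t)

    def step(self, t, reward):
        if self.last is None:                # first step: random strategy
            self.last = int(self.rng.integers(len(self.q)))
            return self.last
        s = self.last                        # learn from the previous round
        self.count[s] += 1
        self.q[s] += (reward - self.q[s]) / self.count[s]
        if self.rng.random() < self.epsilon:  # non-greedy step: random strategy
            self.last = int(self.rng.integers(len(self.q)))
        else:                                # sample from the softmax PDF
            z = self.q / self.temperature(t)
            p = np.exp(z - z.max())
            self.last = int(self.rng.choice(len(self.q), p=p / p.sum()))
        return self.last


def run(play, n_players, n_strategies, iterations,
        t0=10.0, tf=0.05, decay=0.01, epsilon=0.05):
    """Decentralized loop: agents never see each other's strategies or rewards."""
    agents = [Agent(n_strategies, t0, tf, decay, epsilon, seed=i)
              for i in range(n_players)]
    rewards = [None] * n_players
    for t in range(iterations):
        strategies = [a.step(t, r) for a, r in zip(agents, rewards)]
        rewards = play(strategies)           # black-box environment
    return agents


# Hypothetical toy environment: strategy 3 always pays 1, every other strategy pays 0.
agents = run(lambda s: [1.0 if x == 3 else 0.0 for x in s],
             n_players=2, n_strategies=5, iterations=2000)
print([int(np.argmax(a.q)) for a in agents])
```

In this toy environment each agent's Q-table should peak at strategy 3; replacing the lambda with an evaluation of a parameterized quantum circuit gives the setting described in the next subsection.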

3.2. Decentralized Model

The learning system is decentralized: each agent makes local, autonomous decisions toward its individual goals, which may conflict with those of other agents. Agents learn individually and independently, with no communication between them and no centralized system exercising direct control over them.

In addition, agents do not have perfect information about the environment. Specifically, they do not know the payoff matrix of the game they are playing, they do not know the strategies or rewards of the other agents at any point in time, and they do not even know how many agents they are playing against. Their only feedback is the reward they receive after selecting a strategy.

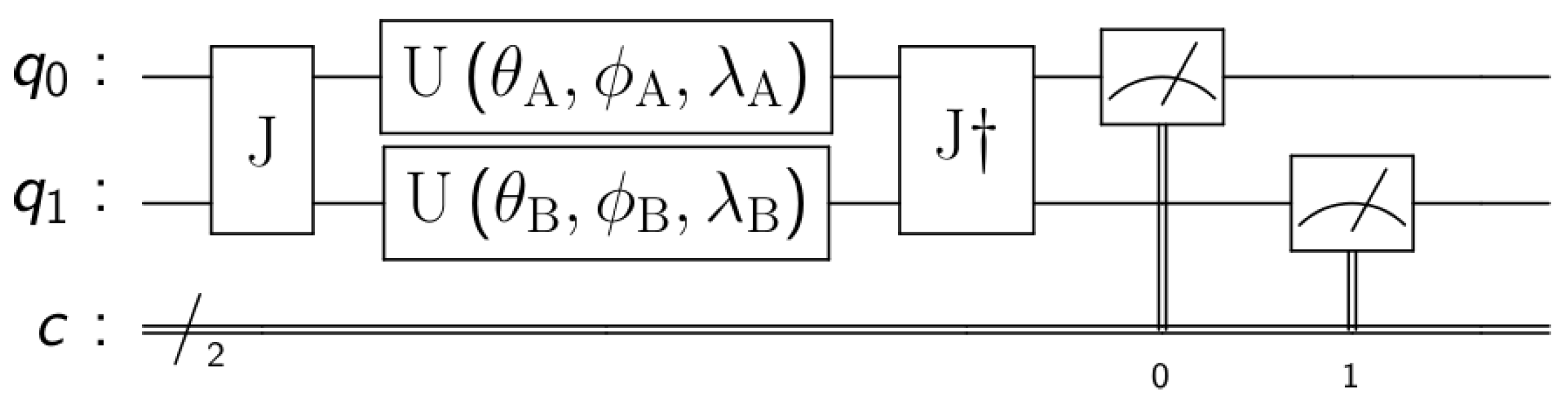

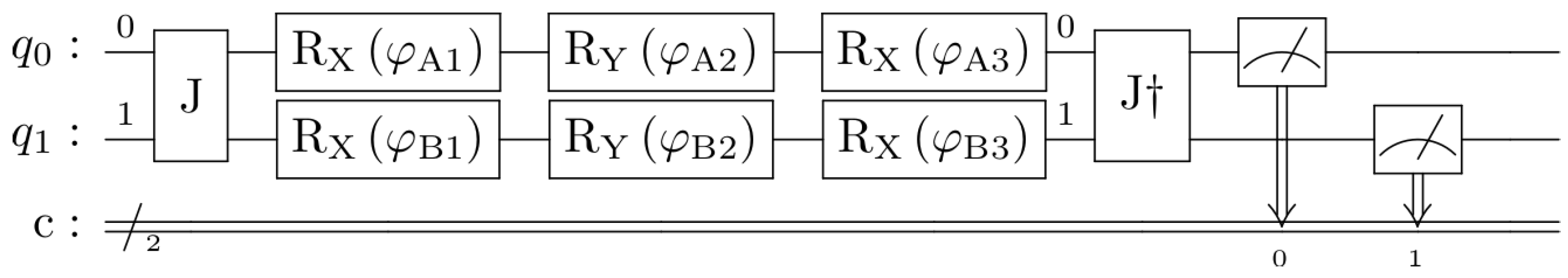

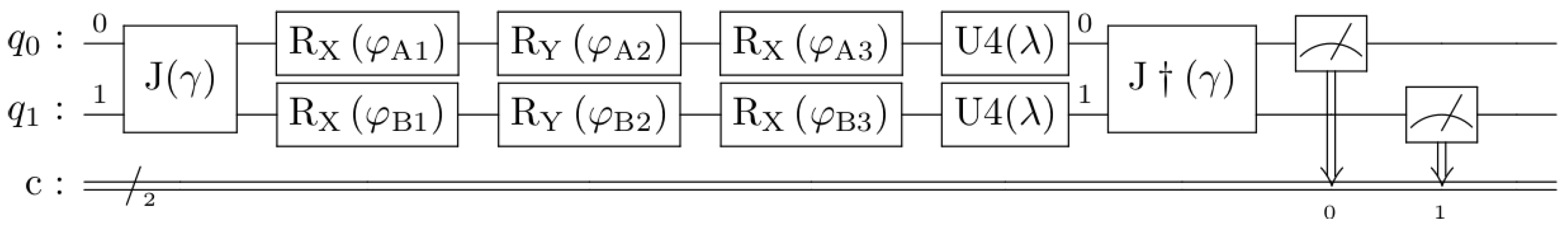

Figure 3 shows both the decentralized model and the imperfect-information model for two players. Each player selects a strategy and sends it to the quantum device. The two players' strategies act as the six parameters (three angles per player) of a parameterized quantum circuit. The circuit is executed, and the readouts are mapped to their corresponding classical actions (those of the first step of the EWL protocol). These actions are used to play the current game, and the rewards are sent to the corresponding players. Each player uses her reward to adjust her strategy (according to Algorithm 1) and selects a new strategy, and the parameterized quantum circuit is executed again.
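As an illustration of the pipeline in Figure 3, the following NumPy sketch simulates a two-qubit EWL-style game as a state-vector calculation. It assumes that each player's three angles parameterize a U3 gate (the Qiskit `U` gate convention) and that the entangling gate is $J = e^{i\frac{\pi}{4}\sigma_x\otimes\sigma_x}$; both choices, the payoff values, and the function names are assumptions, not the paper's exact circuit. Readout bit 0 maps to cooperate and bit 1 to defect.

```python
import numpy as np


def u3(theta, phi, lam):
    """U3 gate in the Qiskit 'U' convention (assumed parameterization of a strategy)."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -np.exp(1j * lam) * s],
                     [np.exp(1j * phi) * s, np.exp(1j * (phi + lam)) * c]])


SIGMA_X = np.array([[0, 1], [1, 0]], dtype=complex)
# Assumed maximally entangling gate: J = exp(i*pi/4 X(x)X) = (I + i X(x)X) / sqrt(2).
J = (np.eye(4) + 1j * np.kron(SIGMA_X, SIGMA_X)) / np.sqrt(2)


def outcome_probs(angles0, angles1):
    """Probabilities of (C,C), (C,D), (D,C), (D,D) given the six circuit parameters."""
    psi = np.zeros(4, dtype=complex)
    psi[0] = 1.0                                    # start in |00>
    u = np.kron(u3(*angles0), u3(*angles1))         # player0 acts on the first qubit
    psi = J.conj().T @ u @ J @ psi                  # entangle, apply strategies, disentangle
    return np.abs(psi) ** 2


def play(angles0, angles1, payoff0, payoff1, rng):
    """One shot: measure, map the readout to classical actions, return both rewards."""
    idx = rng.choice(4, p=outcome_probs(angles0, angles1))
    a0, a1 = divmod(int(idx), 2)                    # 0 = cooperate, 1 = defect
    return payoff0[a0][a1], payoff1[a0][a1]


# Hypothetical textbook Prisoner's Dilemma payoffs (not version 2a); rows = player0's action.
P0 = [[3, 0], [5, 1]]
P1 = [[3, 5], [0, 1]]
rng = np.random.default_rng(0)
print(outcome_probs((0.0, 0.0, 0.0), (0.0, 0.0, 0.0)).round(6))
print(play((np.pi / 2, 0.0, 0.0), (np.pi / 2, 0.0, 0.0), P0, P1, rng))
```

With both angle triples at zero the circuit reduces to the identity and always yields (cooperate, cooperate); with U3(π, 0, π), which equals the Pauli X gate, on both qubits it always yields (defect, defect), so the classical game is recovered as a special case.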