Scenario Metrics for the Safety Assurance Framework of Automated Vehicles: A Review of Its Application

Abstract

1. Introduction

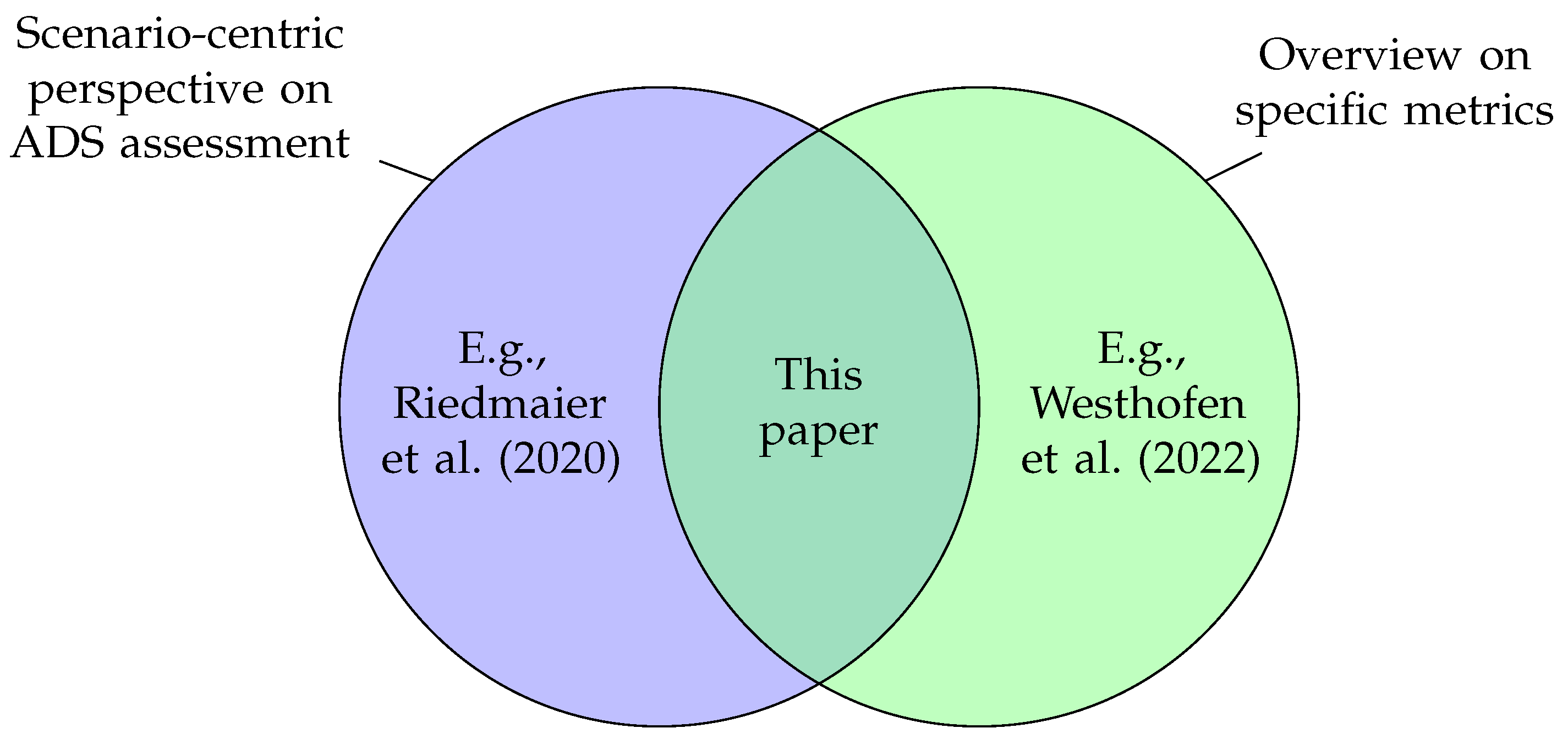

2. Related Surveys

3. Metrics

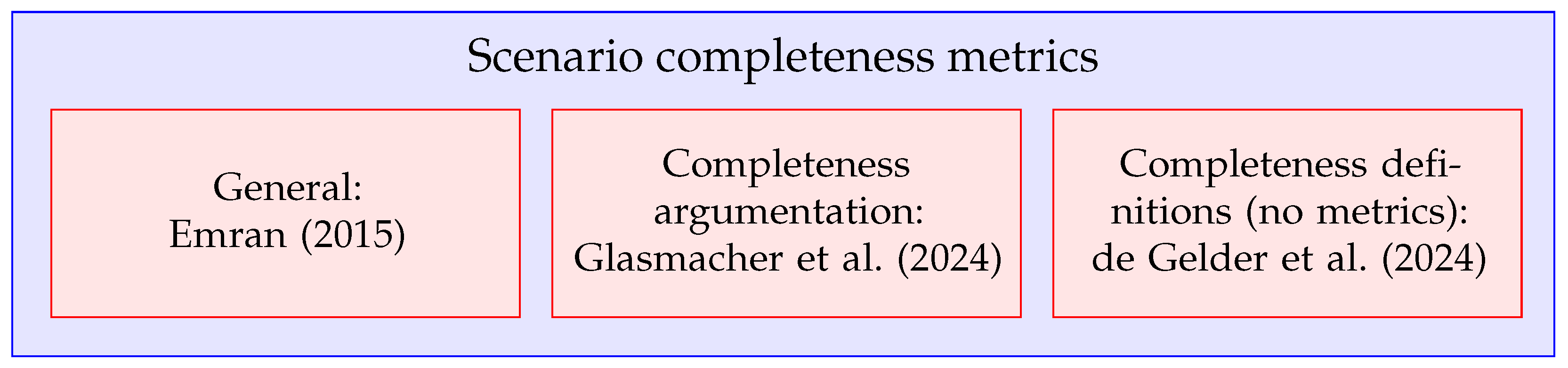

3.1. Scenario Completeness

3.2. Scenario Criticality

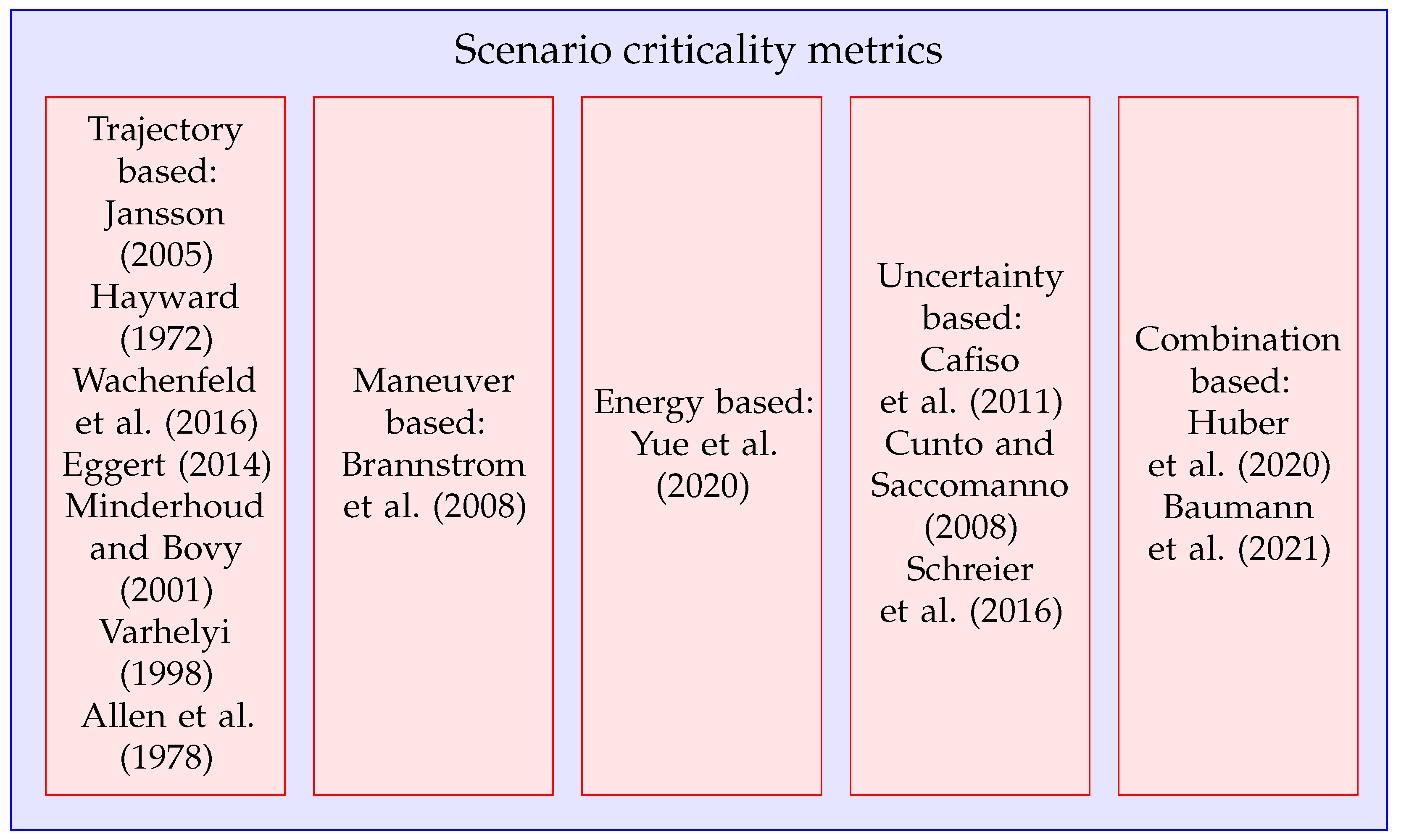

- Trajectory-based metrics: These metrics calculate the spatial or temporal gaps between traffic participants based on their trajectories or positions within a scene. Examples include Time Headway (THW) [19], gap time, distance headway, Time-to-Collision (TTC) [20], worst TTC [21], time to closest encounter [22], time exposed TTC [23], time integrated TTC [23], time to zebra [24], and post-encroachment time [25]. These metrics are crucial for scenarios where the precise movement and interaction of vehicles are central to assessing risk.

- Maneuver-based metrics: These metrics measure the difficulty of avoiding an accident through specific maneuvers such as braking and steering. For braking, key metrics include time to brake, deceleration to safety time, brake threat number [26], required longitudinal acceleration, and longitudinal jerk. For steering, important metrics include time to steer, steer threat number [26], required lateral acceleration, and lateral jerk. These metrics are essential for evaluating the immediate actions required to prevent collisions.

- Energy-based metrics: These metrics assess the severity of a crash. For example, Yue et al. [27] use the kinetic energy of the ego vehicle to compute the scenario risk index. These metrics are critical for understanding the potential impact and damage severity in crash scenarios.

- Uncertainty-based metrics: These metrics capture the uncertainties inherent in traffic scenarios. The level of uncertainty in a scenario generally correlates with the number of challenges faced by the system under test (SUT). Examples include the pedestrian risk index by Cafiso et al. [28], which quantifies the temporal variation in the estimated collision speed between a vehicle and a pedestrian, and the crash potential index [29], which estimates the average probability that the required deceleration exceeds the maximal available deceleration. Schreier et al. [30] used Monte Carlo simulation to account for the behavioral uncertainties of traffic participants when computing the time-to-critical-collision probability. These metrics are pivotal for scenarios with high variability and unpredictability.

- Combination-based metrics: These metrics integrate several criticality metrics, addressing different aspects of a scenario to provide a more comprehensive assessment. Huber et al. [31] presented a multidimensional criticality analysis combining various metrics to evaluate overall scenario criticality. Baumann et al. [32] proposed a combination-based metric that includes longitudinal acceleration, THW, and TTC. These metrics offer a holistic view but require careful consideration of the weights assigned to different components.
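
Several of the trajectory-, maneuver-, and energy-based metrics above can be illustrated for the simplest case of an ego vehicle following a lead vehicle in the same lane. The Python sketch below is not taken from the cited works. It assumes that both vehicles keep their current speeds, and all names (`FollowingState`, `time_headway`, `time_to_collision`, `required_deceleration`, `kinetic_energy`, `crash_potential_index`) are hypothetical. Under these assumptions, `required_deceleration` plays the role of a required longitudinal deceleration for braking. The crash potential index follows the description above (average probability that the required deceleration exceeds the maximal available deceleration), with the maximal available deceleration assumed to be normally distributed with user-supplied parameters.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass
class FollowingState:
    """Hypothetical car-following snapshot: ego vehicle behind a lead vehicle in one lane."""
    gap: float     # bumper-to-bumper distance to the lead vehicle [m]
    v_ego: float   # ego speed [m/s]
    v_lead: float  # lead vehicle speed [m/s]


def time_headway(s: FollowingState) -> float:
    """THW: time the ego vehicle needs to cover the current gap at its current speed."""
    return math.inf if s.v_ego <= 0 else s.gap / s.v_ego


def time_to_collision(s: FollowingState) -> float:
    """TTC assuming both vehicles keep their current speeds; infinite if the gap is not closing."""
    closing_speed = s.v_ego - s.v_lead
    return math.inf if closing_speed <= 0 else s.gap / closing_speed


def required_deceleration(s: FollowingState) -> float:
    """Constant ego deceleration needed to match the lead speed just as the gap closes
    (lead speed assumed constant); 0 if the gap is not closing."""
    closing_speed = s.v_ego - s.v_lead
    return 0.0 if closing_speed <= 0 else closing_speed ** 2 / (2.0 * s.gap)


def kinetic_energy(mass: float, speed: float) -> float:
    """Kinetic energy [J] of a vehicle with the given mass [kg] and speed [m/s]."""
    return 0.5 * mass * speed ** 2


def crash_potential_index(required_decel: Sequence[float], dt: float,
                          madr_mean: float, madr_std: float) -> float:
    """Time-averaged probability that the required deceleration exceeds the maximal
    available deceleration (MADR), with MADR ~ Normal(madr_mean, madr_std)."""
    total_time = dt * len(required_decel)
    weighted_sum = 0.0
    for d in required_decel:
        if d <= 0.0:
            continue  # no braking needed at this time step, so it does not contribute
        # P(MADR <= d) from the normal cumulative distribution function
        p_exceed = 0.5 * (1.0 + math.erf((d - madr_mean) / (madr_std * math.sqrt(2.0))))
        weighted_sum += p_exceed * dt
    return weighted_sum / total_time


state = FollowingState(gap=20.0, v_ego=25.0, v_lead=20.0)
print(time_headway(state))           # 0.8
print(time_to_collision(state))      # 4.0
print(required_deceleration(state))  # 0.625
print(kinetic_energy(1500.0, 25.0))  # 468750.0
print(crash_potential_index([0.0, 8.0, 8.0, 0.0], dt=0.1, madr_mean=8.0, madr_std=1.0))  # 0.25
```

The constant-speed assumption keeps the formulas short. The metrics cited above (e.g., worst TTC [21]) relax exactly this kind of assumption by considering other possible future trajectories.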

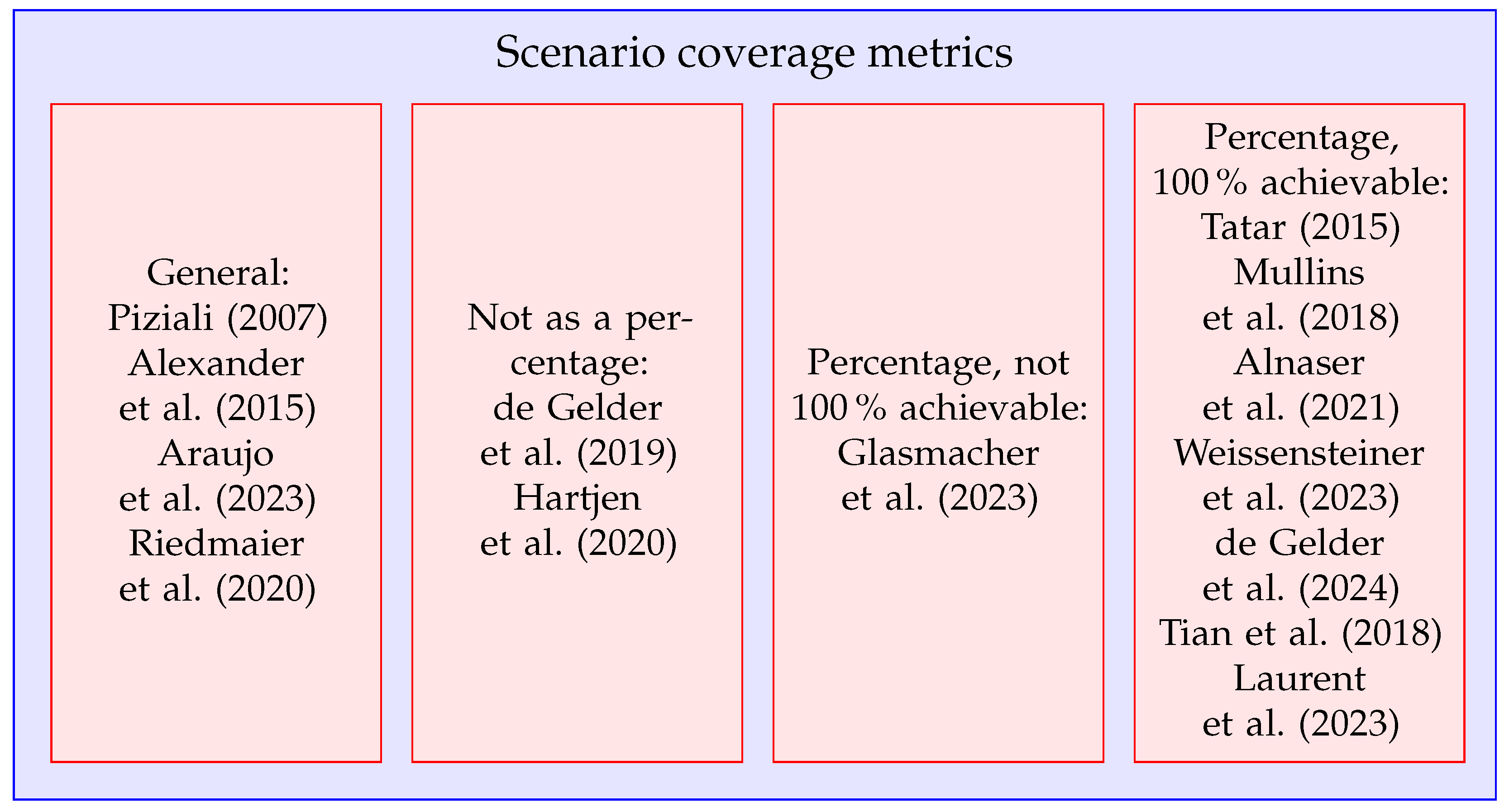

3.3. Scenario Coverage

- Calculable percentage: The metric should be expressible as a percentage. Metrics such as the number of kilometers driven or the number of (simulated) scenarios are inadequate because they have no upper bound. Similarly, the number of failures found is not useful, since the total number of possible failures is unknown.

- Coverage of 100% achievable: The metric should allow for 100% coverage to be realistically achievable under practical conditions.
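
One way to obtain a metric that meets both requirements is to discretize a bounded parameter space into a finite grid and report the percentage of grid cells that contain at least one tested scenario. Because the number of cells is finite, the result is a percentage, and 100% can be reached with as few scenarios as there are cells. The Python sketch below assumes this grid-based construction, which serves as an illustration and is not a metric prescribed by the text; the function `grid_coverage`, its parameters, and the example parameters are hypothetical.

```python
from typing import Sequence, Tuple


def grid_coverage(samples: Sequence[Sequence[float]],
                  bounds: Sequence[Tuple[float, float]],
                  bins_per_dim: int) -> float:
    """Percentage of grid cells (over the bounded parameter space) that contain
    at least one tested concrete scenario."""
    occupied = set()
    for sample in samples:
        cell = []
        for value, (low, high) in zip(sample, bounds):
            if not low <= value <= high:
                break  # sample lies outside the considered parameter ranges
            index = int((value - low) / (high - low) * bins_per_dim)
            cell.append(min(index, bins_per_dim - 1))  # upper bound falls in the last bin
        else:
            occupied.add(tuple(cell))  # only reached if no `break` occurred
    total_cells = bins_per_dim ** len(bounds)
    return 100.0 * len(occupied) / total_cells


# Hypothetical logical scenario with two parameters: initial speed [m/s] and time headway [s]
tested = [(5.0, 0.3), (15.0, 1.5), (29.0, 2.9), (30.0, 3.0)]
print(grid_coverage(tested, bounds=[(0.0, 30.0), (0.0, 3.0)], bins_per_dim=3))
# 3 of the 9 cells are occupied, i.e., about 33.3%
```

The choice of `bins_per_dim` trades resolution against test effort: finer grids distinguish more scenarios but require more tests to reach 100%, and the number of cells grows exponentially with the number of parameters.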

3.4. Scenario Diversity and Dissimilarity

- Identifying redundant scenarios such that they can be skipped to reduce test efforts.

- Clustering and categorization of concrete scenarios to obtain logical scenarios or scenario categories. Logical scenarios/scenario categories help with understanding, storage, and querying of scenarios.

- Promoting a diverse set of scenarios when using scenario generation methods such as optimization.

1. Dissimilarity based on scenario parameters: These metrics apply in particular to concrete scenarios of the same logical scenario. Because concrete scenarios are obtained by sampling values for the parameters of the logical scenario, dissimilarity is computed by comparing the parameter values of the concrete scenarios. For example, Zhu et al. [45] compute dissimilarity based on the Euclidean distance in parameter space. Alternatively, Zhong et al. [46] define the dissimilarity between two (traffic violation) scenarios as the percentage of scenario parameters that differ between them. Here, a continuous parameter is said to differ between two scenarios when the difference in its value exceeds a user-defined resolution.

2. Dissimilarity based on scenario trajectories: These metrics compute dissimilarity from the complete trajectories of all actors in each scenario. For example, Ries et al. [47] use dynamic time warping to estimate the similarity between the trajectories of actors in two scenarios. Nguyen et al. [51] use the Levenshtein distance to compute the similarity between trajectories; the Levenshtein distance counts the minimum number of "edits" (insertions, deletions, and substitutions) needed to convert one trajectory into the other. Alternatively, Lin et al. [48] create matrix profiles that contain the dissimilarity between each sub-sequence of one trajectory and its nearest-neighbor sub-sequence in the other trajectory. The dissimilarity is then based on the number of matrix-profile elements that are lower than a certain threshold.

3. Dissimilarity based on scenario features: These metrics define dissimilarity based on features derived from expert knowledge or obtained through feature extraction methods. The considered features include the behavior of scenario actors (e.g., the average occupancy around the ego vehicle) and ODD features (e.g., the orientation of the road layout). Kerber et al. [49] compute the average occupancy of an 8-cell grid around the ego vehicle over the entire scenario and use it as the basis of a dissimilarity measure to compare scenarios. Kruber et al. [50] perform unsupervised random forest clustering based on road infrastructure and trajectory features: the random forest provides a similarity measure that is subsequently used for hierarchical clustering. Alternatively, in [51,52], feature maps are computed based on similar features, including behavioral aspects such as the standard deviation of the steering angle. Some studies prioritize the critical segments of scenarios when calculating dissimilarity. Wheeler and Kochenderfer [53] determine the critical segment based on a risk threshold and then estimate dissimilarity from behavioral features such as relative speeds, acceleration changes, and attentiveness. References [54,55] use criticality metrics to determine the most critical scene, e.g., the scene in which the distance between actors is minimal. The dissimilarity score is then based on both discrete features, such as driving path IDs and actor types, and continuous features that characterize the interaction, e.g., the relative heading angle of the actors. A case study is presented on a database of one thousand simulated intersection scenarios.
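
The parameter- and trajectory-based dissimilarity measures above can be sketched in a few lines of Python. The sketch is illustrative and does not reproduce the implementations of the cited works: `euclidean_dissimilarity` follows the Euclidean-distance idea of [45] (assuming the parameters are already scaled to comparable ranges), `percent_parameters_differing` follows the resolution-based definition of [46] for continuous parameters, `dtw_distance` is textbook dynamic time warping with a Euclidean point cost, and `levenshtein` assumes that trajectories have first been discretized into symbol sequences (here a hypothetical encoding with `L`, `S`, and `R` for left turn, straight, and right turn). All function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def euclidean_dissimilarity(p: Sequence[float], q: Sequence[float]) -> float:
    """Euclidean distance between two concrete scenarios in parameter space."""
    return math.dist(p, q)


def percent_parameters_differing(p: Sequence[float], q: Sequence[float],
                                 resolution: Sequence[float]) -> float:
    """Percentage of parameters whose values differ by more than their resolution."""
    differing = sum(abs(a - b) > r for a, b, r in zip(p, q, resolution))
    return 100.0 * differing / len(p)


def dtw_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Dynamic time warping distance between two 2D trajectories."""
    n, m = len(a), len(b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = math.dist(a[i - 1], b[j - 1])
            # extend the cheapest of the three admissible warping paths
            cost[i][j] = step + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def levenshtein(s: str, t: str) -> int:
    """Minimum number of insertions, deletions, and substitutions turning s into t."""
    previous = list(range(len(t) + 1))
    for i, a in enumerate(s, start=1):
        current = [i]
        for j, b in enumerate(t, start=1):
            current.append(min(previous[j] + 1,              # delete a
                               current[j - 1] + 1,           # insert b
                               previous[j - 1] + (a != b)))  # substitute a -> b
        previous = current
    return previous[-1]


print(euclidean_dissimilarity((0.0, 0.0), (3.0, 4.0)))  # 5.0
print(dtw_distance([(0, 0), (1, 0), (2, 0)], [(0, 0), (1, 0), (1, 0), (2, 0)]))  # 0.0
print(levenshtein("SSLS", "SSRS"))  # 1
```

All four functions return distances, so larger values mean more dissimilar scenarios. The DTW example shows why time warping is useful: the same path driven at a different pace yields a distance of zero.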

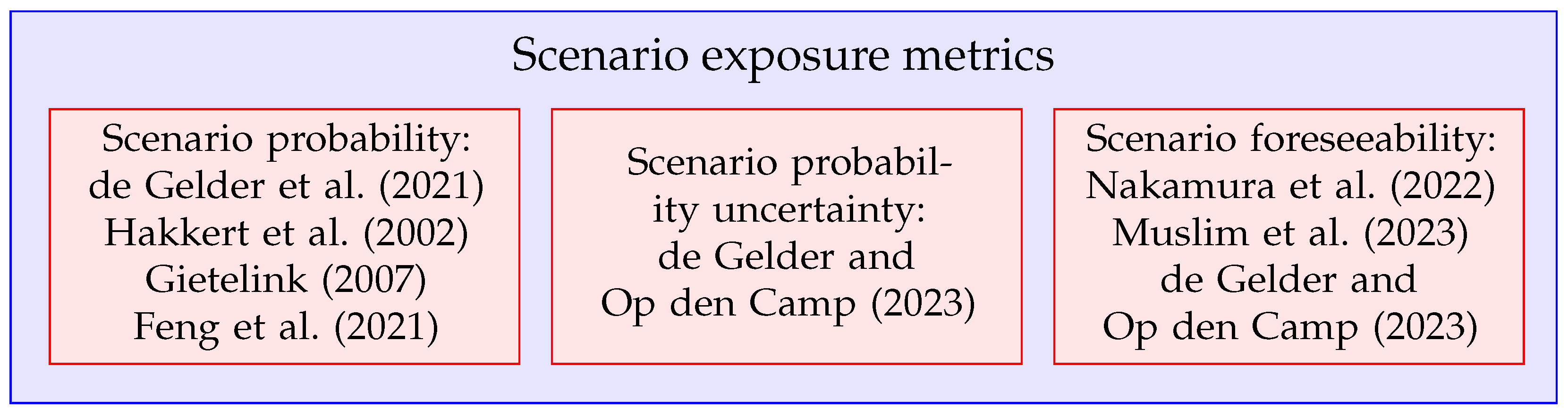

3.5. Scenario Exposure

3.5.1. Scenario Probability

3.5.2. Scenario Probability Uncertainty

- With the first approach, a parametric distribution, such as a normal (Gaussian) distribution or a gamma distribution, is used to estimate the probability density function (PDF). In that case, the distribution parameters (not to be confused with the scenario parameters for which the PDF is estimated) are typically fitted to data. When a Bayesian approach is used to fit those distribution parameters, the posterior uncertainty of the distribution parameters can be used to estimate the uncertainty of the density [62].

- With the second approach, a non-parametric method, such as Kernel Density Estimation (KDE), is used to estimate the PDF. In that case, the uncertainty is either derived from a theoretical model or estimated using bootstrapping [63]. In the domain of automated driving (AD), bootstrapping is used in [36,60] to estimate the uncertainty of the probability density of the scenario parameters.
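
The bootstrapping approach for a non-parametric estimate can be sketched as follows: the observed parameter values are resampled with replacement, the KDE is recomputed on every resample, and the spread of the resulting density values at a point of interest gives a percentile interval. This Python sketch assumes a one-dimensional scenario parameter, a Gaussian kernel with a fixed, user-chosen bandwidth, and a simple percentile interval. The names `gaussian_kde` and `bootstrap_kde_interval` are hypothetical, and the data are synthetic rather than taken from [36,60].

```python
import math
import random
from typing import Sequence, Tuple


def gaussian_kde(data: Sequence[float], x: float, bandwidth: float) -> float:
    """Kernel density estimate at x with a Gaussian kernel and a fixed bandwidth."""
    norm = 1.0 / (len(data) * bandwidth * math.sqrt(2.0 * math.pi))
    return norm * sum(math.exp(-0.5 * ((x - xi) / bandwidth) ** 2) for xi in data)


def bootstrap_kde_interval(data: Sequence[float], x: float, bandwidth: float,
                           n_boot: int = 1000, alpha: float = 0.05,
                           seed: int = 0) -> Tuple[float, float]:
    """Percentile bootstrap interval (level 1 - alpha) for the KDE value at x."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_boot):
        resample = rng.choices(data, k=len(data))  # draw with replacement
        estimates.append(gaussian_kde(resample, x, bandwidth))
    estimates.sort()
    low_idx = max(0, int(alpha / 2 * n_boot))
    high_idx = min(n_boot - 1, int((1 - alpha / 2) * n_boot))
    return estimates[low_idx], estimates[high_idx]


# Synthetic stand-in for observed values of one scenario parameter (e.g., a speed in m/s)
obs_rng = random.Random(1)
speeds = [obs_rng.gauss(25.0, 3.0) for _ in range(200)]
point = gaussian_kde(speeds, 25.0, bandwidth=1.0)
low, high = bootstrap_kde_interval(speeds, 25.0, bandwidth=1.0)
print(f"density at 25 m/s: {point:.4f} (95% bootstrap interval {low:.4f} to {high:.4f})")
```

The bandwidth strongly affects both the estimate and its uncertainty. In practice, it is usually selected from the data (e.g., by a rule of thumb or cross-validation), which this sketch leaves to the caller.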

3.5.3. Scenario Foreseeability

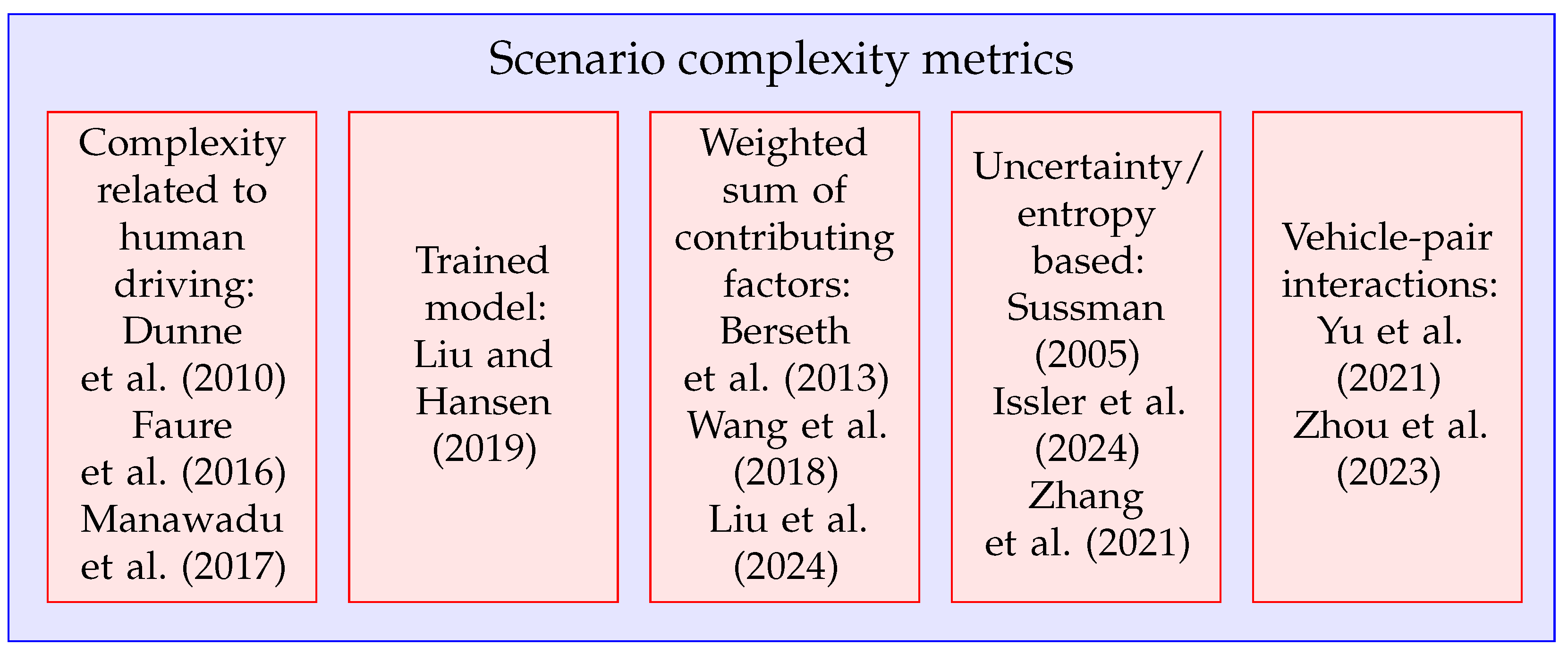

3.6. Scenario Complexity

- The complexity of the task, i.e., the number of acts that the driver needs to perform;

- The number of possible ways the task can be performed: the more ways there are to perform a task, the more decisions the driver needs to make;

- The number of external stimuli.

3.7. Other Metrics

3.7.1. Realism

3.7.2. Rarity/Novelty

3.7.3. Reproducibility

3.7.4. Outcome Severity

3.7.5. Traceability

3.7.6. Representativeness

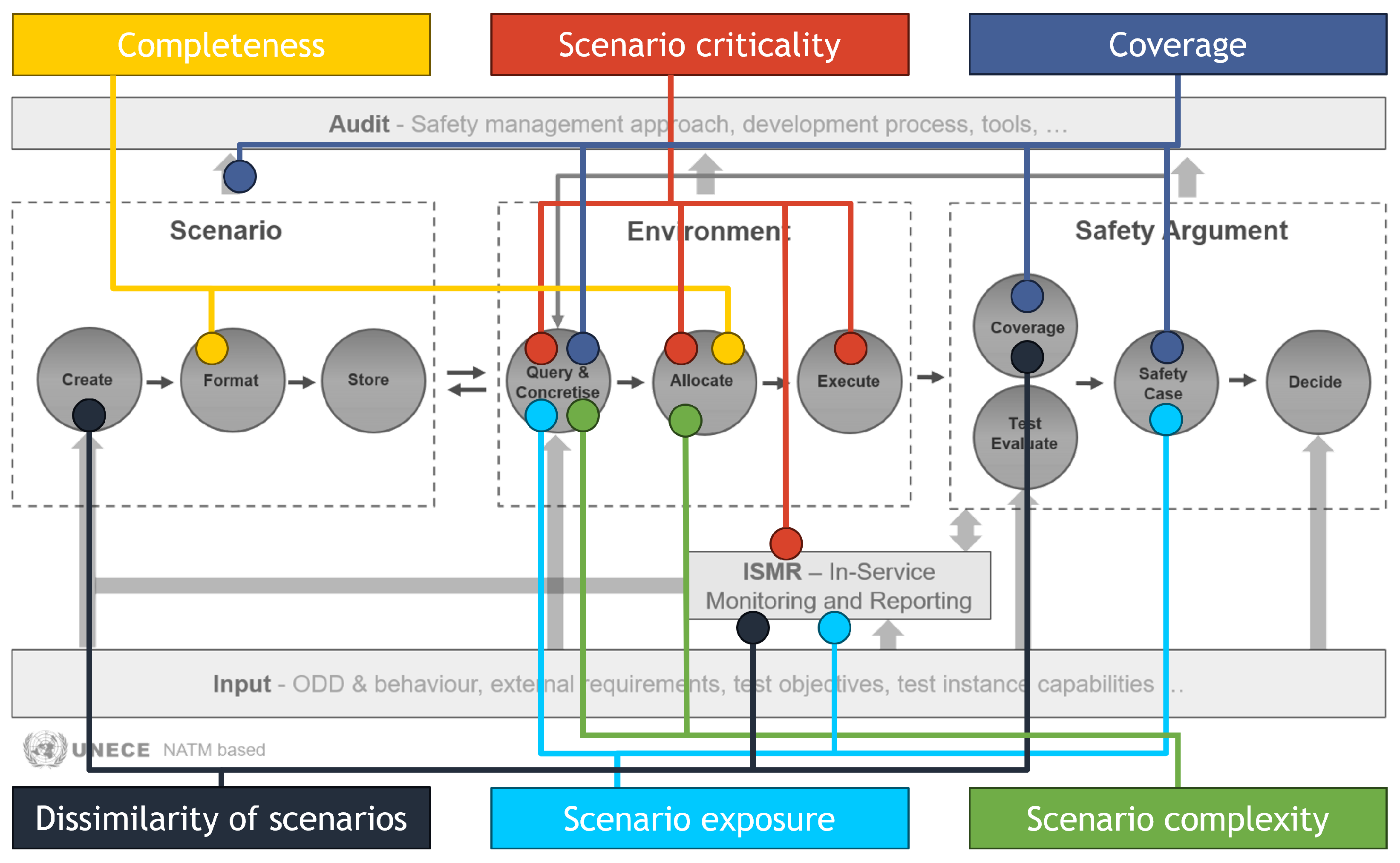

4. Metrics for the Safety Assurance Framework

1. Scenario: It creates, formats, and stores (test) scenarios in databases;

2. Environment: It converts scenarios into concrete test cases and runs them on various testing environments;

3. Safety Argument: It evaluates test results, coverage, and overall system safety, leading to a pass or fail decision for the SUT;

4. In-Service Monitoring and Reporting (ISMR): It monitors the system during deployment, ensuring continual safety and providing input for future system designs;

5. Audit: It ensures proper safety processes throughout the development lifecycle.

4.1. SAF Component—Scenario

4.2. SAF Component—Environment

4.3. SAF Component—Safety Argument

4.4. SAF Component—ISMR

4.5. SAF Component—Audit

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Kalra, N.; Paddock, S.M. Driving to Safety: How Many Miles of Driving Would It Take to Demonstrate Autonomous Vehicle Reliability? Transp. Res. Part A Policy Pract. 2016, 94, 182–193.

2. Riedmaier, S.; Ponn, T.; Ludwig, D.; Schick, B.; Diermeyer, F. Survey on Scenario-Based Safety Assessment of Automated Vehicles. IEEE Access 2020, 8, 87456–87477.

3. de Gelder, E.; Op den Camp, O.; Broos, J.; Paardekooper, J.P.; van Montfort, S.; Kalisvaart, S.; Goossens, H. Scenario-Based Safety Assessment of Automated Driving Systems; Technical Report; TNO: Helmond, The Netherlands, 2024.

4. Op den Camp, O.; de Gelder, E. Operationalization of Scenario-Based Safety Assessment of Automated Driving Systems. In Proceedings of the IEEE International Automated Vehicle Validation Conference, Baden-Baden, Germany, 30 September–2 October 2025.

5. ECE/TRANS/WP.29/2021/61; New Assessment/Test Method for Automated Driving (NATM)–Master Document. Technical Report; World Forum for Harmonization of Vehicle Regulations: Geneva, Switzerland, 2021.

6. Finkeldei, F.; Thees, C.; Weghorn, J.N.; Althoff, M. Scenario Factory 2.0: Scenario-Based Testing of Automated Vehicles with CommonRoad. Automot. Innov. 2025, 8, 207–220.

7. Althoff, M.; Koschi, M.; Manzinger, S. CommonRoad: Composable Benchmarks for Motion Planning on Roads. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; Volume 6, pp. 719–726.

8. Zhao, Y.; Zhou, J.; Bi, D.; Mihalj, T.; Hu, J.; Eichberger, A. A Survey on the Application of Large Language Models in Scenario-Based Testing of Automated Driving Systems. arXiv 2025, arXiv:2505.16587.

9. Yan, S.; Zhang, X.; Hao, K.; Xin, H.; Luo, Y.; Yang, J.; Fan, M.; Yang, C.; Sun, J.; Yang, Z. On-Demand Scenario Generation for Testing Automated Driving Systems. ACM Softw. Eng. 2025, 2, 86–105.

10. Emran, N.A. Data Completeness Measures. In Pattern Analysis, Intelligent Security and the Internet of Things; Springer: Berlin/Heidelberg, Germany, 2015; pp. 117–130.

11. Westhofen, L.; Neurohr, C.; Koopmann, T.; Butz, M.; Schütt, B.; Utesch, F.; Neurohr, B.; Gutenkunst, C.; Böde, E. Criticality Metrics for Automated Driving: A Review and Suitability Analysis of the State of the Art. Arch. Comput. Methods Eng. 2022, 30, 1–35.

12. Wang, C.; Xie, Y.; Huang, H.; Liu, P. A Review of Surrogate Safety Measures and Their Applications in Connected and Automated Vehicles Safety Modeling. Accid. Anal. Prev. 2021, 157, 106157.

13. Liu, T.; Wang, C.; Yin, Z.; Mi, Z.; Xiong, X.; Guo, B. Complexity Quantification of Driving Scenarios with Dynamic Evolution Characteristics. Entropy 2024, 26, 1033.

14. Tahir, Z.; Alexander, R. Coverage Based Testing for V&V and Safety Assurance of Self-Driving Autonomous Vehicles: A Systematic Literature Review. In Proceedings of the IEEE International Conference On Artificial Intelligence Testing (AITest), Oxford, UK, 3–6 August 2020; pp. 23–30.

15. ISO 34502; Road Vehicles–Test Scenarios for Automated Driving Systems–Engineering Framework and Process of Scenario-Based Safety Evaluation. International Organization for Standardization: Geneva, Switzerland, 2022.

16. Glasmacher, C.; Weber, H.; Eckstein, L. Towards a Completeness Argumentation for Scenario Concepts. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Jeju Island, Republic of Korea, 2–5 June 2024.

17. de Gelder, E.; Buermann, M.; Op den Camp, O. Coverage Metrics for a Scenario Database for the Scenario-Based Assessment of Automated Driving Systems. In Proceedings of the IEEE International Automated Vehicle Validation Conference, Pittsburgh, PA, USA, 21–23 October 2024.

18. Cai, J.; Deng, W.; Guang, H.; Wang, Y.; Li, J.; Ding, J. A Survey on Data-Driven Scenario Generation for Automated Vehicle Testing. Machines 2022, 10, 1101.

19. Jansson, J. Collision Avoidance Theory: With Application to Automotive Collision Mitigation. Ph.D. Thesis, Linköping University Electronic Press, Linköping, Sweden, 2005.

20. Hayward, J.C. Near Miss Determination Through Use of a Scale of Danger; Technical Report TTSC-7115; Pennsylvania State University: University Park, PA, USA, 1972.

21. Wachenfeld, W.; Junietz, P.; Wenzel, R.; Winner, H. The Worst-Time-to-Collision Metric for Situation Identification. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Gothenburg, Sweden, 19–22 June 2016; pp. 729–734.

22. Eggert, J. Predictive Risk Estimation for Intelligent ADAS Functions. In Proceedings of the 17th International IEEE Conference on Intelligent Transportation Systems (ITSC), Qingdao, China, 8–11 October 2014; pp. 711–718.

23. Minderhoud, M.M.; Bovy, P.H. Extended Time-to-Collision Measures for Road Traffic Safety Assessment. Accid. Anal. Prev. 2001, 33, 89–97.

24. Varhelyi, A. Drivers’ Speed Behaviour at a Zebra Crossing: A Case Study. Accid. Anal. Prev. 1998, 30, 731–743.

25. Allen, B.L.; Shin, B.T.; Cooper, P.J. Analysis of Traffic Conflicts and Collisions. Transp. Res. Board 1978, 667, 67–74.

26. Brannstrom, M.; Sjoberg, J.; Coelingh, E. A Situation and Threat Assessment Algorithm for a Rear-End Collision Avoidance System. In Proceedings of the IEEE Intelligent Vehicles Symposium, Eindhoven, The Netherlands, 4–6 June 2008; pp. 102–107.

27. Yue, B.; Shi, S.; Wang, S.; Lin, N. Low-Cost Urban Test Scenario Generation Using Microscopic Traffic Simulation. IEEE Access 2020, 8, 123398–123407.

28. Cafiso, S.; Garcia, A.G.; Cavarra, R.; Rojas, M.R. Crosswalk Safety Evaluation Using a Pedestrian Risk Index as Traffic Conflict Measure. In Proceedings of the 3rd International Conference on Road Safety and Simulation, Indianapolis, IN, USA, 14–16 September 2011; Volume 15.

29. Cunto, F.; Saccomanno, F.F. Calibration and Validation of Simulated Vehicle Safety Performance at Signalized Intersections. Accid. Anal. Prev. 2008, 40, 1171–1179.

30. Schreier, M.; Willert, V.; Adamy, J. An integrated approach to maneuver-based trajectory prediction and criticality assessment in arbitrary road environments. IEEE Trans. Intell. Transp. Syst. 2016, 17, 2751–2766.

31. Huber, B.; Herzog, S.; Sippl, C.; German, R.; Djanatliev, A. Evaluation of Virtual Traffic Situations for Testing Automated Driving Functions Based on Multidimensional Criticality Analysis. In Proceedings of the IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), Virtual, 20–23 September 2020; pp. 1–7.

32. Baumann, D.; Pfeffer, R.; Sax, E. Automatic Generation of Critical Test Cases for the Development of Highly Automated Driving Functions. In Proceedings of the IEEE 93rd Vehicular Technology Conference (VTC2021-Spring), Helsinki, Finland, 25 April–19 May 2021; pp. 1–5.

33. Piziali, A. Functional Verification Coverage Measurement and Analysis; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2007.

34. Alexander, R.; Hawkins, H.; Rae, D. Situation Coverage—A Coverage Criterion for Testing Autonomous Robots; Technical Report; Department of Computer Science, University of York: York, UK, 2015.

35. Araujo, H.; Mousavi, M.R.; Varshosaz, M. Testing, Validation, and Verification of Robotic and Autonomous Systems: A Systematic Review. ACM Trans. Softw. Eng. Methodol. 2023, 32, 1–61.

36. de Gelder, E.; Paardekooper, J.P.; Op den Camp, O.; De Schutter, B. Safety Assessment of Automated Vehicles: How to Determine Whether We Have Collected Enough Field Data? Traffic Inj. Prev. 2019, 20, 162–170.

37. Hartjen, L.; Philipp, R.; Schuldt, F.; Friedrich, B. Saturation effects in recorded maneuver data for the test of automated driving. In Proceedings of the 13. Uni-DAS eV Workshop Fahrerassistenz und Automatisiertes Fahren, 16–17 July 2020; pp. 74–83.

38. Glasmacher, C.; Schuldes, M.; Weber, H.; Wagener, N.; Eckstein, L. Acquire Driving Scenarios Efficiently: A Framework for Prospective Assessment of Cost-Optimal Scenario Acquisition. In Proceedings of the IEEE 26th International Conference on Intelligent Transportation Systems (ITSC), Bilbao, Spain, 24–28 September 2023; pp. 1971–1976.

39. Tatar, M. Enhancing ADAS Test and Validation with Automated Search for Critical Situations. In Proceedings of the Driving Simulation Conference (DSC), Tübingen, Germany, 16–18 September 2015; pp. 1–4.

40. Mullins, G.E.; Stankiewicz, P.G.; Hawthorne, R.C.; Gupta, S.K. Adaptive Generation of Challenging Scenarios for Testing and Evaluation of Autonomous Vehicles. J. Syst. Softw. 2018, 137, 197–215.

41. Alnaser, A.J.; Sargolzaei, A.; Akbaş, M.I. Autonomous Vehicles Scenario Testing Framework and Model of Computation: On Generation and Coverage. IEEE Access 2021, 9, 60617–60628.

42. Weissensteiner, P.; Stettinger, G.; Khastgir, S.; Watzenig, D. Operational Design Domain-Driven Coverage for the Safety Argumentation of Automated Vehicles. IEEE Access 2023, 11, 12263–12284.

43. Tian, Y.; Pei, K.; Jana, S.; Ray, B. DeepTest: Automated Testing of Deep-Neural-Network-Driven Autonomous Cars. In Proceedings of the 40th International Conference on Software Engineering, Gothenburg, Sweden, 27 May–3 June 2018; pp. 303–314.

44. Laurent, T.; Klikovits, S.; Arcaini, P.; Ishikawa, F.; Ventresque, A. Parameter Coverage for Testing of Autonomous Driving Systems under Uncertainty. ACM Trans. Softw. Eng. Methodol. 2023, 32, 1–31.

45. Zhu, B.; Zhang, P.; Zhao, J.; Deng, W. Hazardous Scenario Enhanced Generation for Automated Vehicle Testing Based on Optimization Searching Method. IEEE Trans. Intell. Transp. Syst. 2021, 23, 7321–7331.

46. Zhong, Z.; Kaiser, G.; Ray, B. Neural Network Guided Evolutionary Fuzzing for Finding Traffic Violations of Autonomous Vehicles. IEEE Trans. Softw. Eng. 2022, 49, 1860–1875.

47. Ries, L.; Rigoll, P.; Braun, T.; Schulik, T.; Daube, J.; Sax, E. Trajectory-Based Clustering of Real-World Urban Driving Sequences with Multiple Traffic Objects. In Proceedings of the IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 1251–1258.

48. Lin, Q.; Wang, W.; Zhang, Y.; Dolan, J.M. Measuring Similarity of Interactive Driving Behaviors Using Matrix Profile. In Proceedings of the American Control Conference (ACC), Denver, CO, USA, 1–3 July 2020; pp. 3965–3970.

49. Kerber, J.; Wagner, S.; Groh, K.; Notz, D.; Kühbeck, T.; Watzenig, D.; Knoll, A. Clustering of the Scenario Space for the Assessment of Automated Driving. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 578–583.

50. Kruber, F.; Wurst, J.; Botsch, M. An Unsupervised Random Forest Clustering Technique for Automatic Traffic Scenario Categorization. In Proceedings of the 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 2811–2818.

51. Nguyen, V.; Huber, S.; Gambi, A. SALVO: Automated Generation of Diversified Tests for Self-Driving Cars from Existing Maps. In Proceedings of the IEEE International Conference on Artificial Intelligence Testing (AITest), Oxford, UK, 23–26 August 2021; pp. 128–135.

52. Zohdinasab, T.; Riccio, V.; Gambi, A.; Tonella, P. DeepHyperion: Exploring the Feature Space of Deep Learning-Based Systems through Illumination Search. In Proceedings of the 30th ACM SIGSOFT International Symposium on Software Testing and Analysis, Virtual, 11–17 July 2021; pp. 79–90.

53. Wheeler, T.A.; Kochenderfer, M.J. Critical Factor Graph Situation Clusters for Accelerated Automotive Safety Validation. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 2133–2139.

54. Mahadikar, B.B.; Rajesh, N.; Kurian, K.T.; Lefeber, E.; Ploeg, J.; van de Wouw, N.; Alirezaei, M. Formulating a dissimilarity metric for comparison of driving scenarios for Automated Driving Systems. In Proceedings of the 2024 IEEE Intelligent Vehicles Symposium (IV), Jeju Island, Republic of Korea, 2–5 June 2024; pp. 1091–1098.

55. Dokania, N.; Singh, T.; Lefeber, E.; Ploeg, J.; Alirezaei, M. Implementing a dissimilarity metric for scenarios categorization and selection for automated driving systems. IFAC-PapersOnLine 2025, 59, 145–150.

56. Tian, H.; Jiang, Y.; Wu, G.; Yan, J.; Wei, J.; Chen, W.; Li, S.; Ye, D. MOSAT: Finding Safety Violations of Autonomous Driving Systems Using Multi-Objective Genetic Algorithm. In Proceedings of the 30th ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering, Singapore, 14–16 November 2022; pp. 94–106.

57. de Gelder, E.; Elrofai, H.; Khabbaz Saberi, A.; Op den Camp, O.; Paardekooper, J.P.; De Schutter, B. Risk Quantification for Automated Driving Systems in Real-World Driving Scenarios. IEEE Access 2021, 9, 168953–168970.

58. Hakkert, A.S.; Braimaister, L.; Van Schagen, I. The Uses of Exposure and Risk in Road Safety Studies; Technical Report R-2002-12; SWOV Institute for Road Safety: The Hague, The Netherlands, 2002.

59. Gietelink, O. Design and Validation of Advanced Driver Assistance Systems. Ph.D. Thesis, Delft University of Technology, Delft, The Netherlands, 2007.

60. de Gelder, E.; Op den Camp, O. How Certain Are We That Our Automated Driving System Is Safe? Traffic Inj. Prev. 2023, 24, S131–S140.

61. Feng, S.; Yan, X.; Sun, H.; Feng, Y.; Liu, H.X. Intelligent Driving Intelligence Test for Autonomous Vehicles with Naturalistic and Adversarial Environment. Nat. Commun. 2021, 12, 748.

62. Bishop, C.M. Pattern Recognition and Machine Learning; Springer: Berlin/Heidelberg, Germany, 2006.

63. Chen, Y.C. A Tutorial on Kernel Density Estimation and Recent Advances. Biostat. Epidemiol. 2017, 1, 161–187.

64. E/ECE/TRANS/505/Rev.3/Add.156; Uniform Provisions Concerning the Approval of Vehicles with Regard to Automated Lane Keeping Systems. World Forum for Harmonization of Vehicle Regulations: Geneva, Switzerland, 2021.

65. Nakamura, H.; Muslim, H.; Kato, R.; Préfontaine-Watanabe, S.; Nakamura, H.; Kaneko, H.; Imanaga, H.; Antona-Makoshi, J.; Kitajima, S.; Uchida, N.; et al. Defining Reasonably Foreseeable Parameter Ranges Using Real-World Traffic Data for Scenario-Based Safety Assessment of Automated Vehicles. IEEE Access 2022, 10, 37743–37760.

66. Muslim, H.; Endo, S.; Imanaga, H.; Kitajima, S.; Uchida, N.; Kitahara, E.; Ozawa, K.; Sato, H.; Nakamura, H. Cut-out Scenario Generation with Reasonability Foreseeable Parameter Range from Real Highway Dataset for Autonomous Vehicle Assessment. IEEE Access 2023, 11, 45349–45363.

67. de Gelder, E.; Op den Camp, O. A Quantitative Method to Determine What Collisions Are Reasonably Foreseeable and Preventable. Saf. Sci. 2023, 167, 106233.

68. Sussman, J.M. Perspectives on Intelligent Transportation Systems (ITS); Springer: Berlin/Heidelberg, Germany, 2005.

69. Issler, M.; Goss, Q.; Akbaş, M.İ. Complexity Evaluation of Test Scenarios for Autonomous Vehicle Safety Validation Using Information Theory. Information 2024, 15, 772.

70. Yu, R.; Zheng, Y.; Qu, X. Dynamic driving environment complexity quantification method and its verification. Transp. Res. Part C Emerg. Technol. 2021, 127, 103051.

71. Dunne, R.; Schatz, S.; Fiore, S.M.; Martin, G.; Nicholson, D. Scenario-Based Training: Scenario Complexity. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, San Francisco, CA, USA, 27 September–1 October 2010; pp. 2238–2242.

72. Faure, V.; Lobjois, R.; Benguigui, N. The Effects of Driving Environment Complexity and Dual Tasking on Drivers’ Mental Workload and Eye Blink Behavior. Transp. Res. Part F Traffic Psychol. Behav. 2016, 40, 78–90.

73. Manawadu, U.E.; Kawano, T.; Murata, S.; Kamezaki, M.; Sugano, S. Estimating Driver Workload with Systematically Varying Traffic Complexity Using Machine Learning: Experimental Design. In Proceedings of the International Conference on Intelligent Human Systems Integration, Dubai, United Arab Emirates, 7–9 January 2018; pp. 106–111.

74. Liu, Y.; Hansen, J.H. Towards Complexity Level Classification of Driving Scenarios Using Environmental Information. In Proceedings of the IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 810–815.

75. Berseth, G.; Kapadia, M.; Faloutsos, P. SteerPlex: Estimating Scenario Complexity for Simulated Crowds. In Proceedings of the Motion on Games, Dublin, UK, 6–8 November 2013; pp. 67–76.

76. Wang, J.; Zhang, C.; Liu, Y.; Zhang, Q. Traffic Sensory Data Classification by Quantifying Scenario Complexity. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Suzhou, China, 26–30 June 2018; pp. 1543–1548.

77. Zhang, L.; Ma, Y.; Xing, X.; Xiong, L.; Chen, J. Research on the Complexity Quantification Method of Driving Scenarios Based on Information Entropy. In Proceedings of the IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 3476–3481.

78. Zhou, J.; Wang, L.; Wang, X. Online adaptive generation of critical boundary scenarios for evaluation of autonomous vehicles. IEEE Trans. Intell. Transp. Syst. 2023, 24, 6372–6388.

79. Association for Standardization of Automation and Measuring Systems. ASAM OpenSCENARIO XML, 2024. Available online: https://www.asam.net/standards/detail/openscenario (accessed on 30 July 2025).

80. ISO 34504; Road Vehicles–Test Scenarios for Automated Driving Systems–Scenario Categorization. International Organization for Standardization: Geneva, Switzerland, 2024.

81. Neurohr, C.; Westhofen, L.; Butz, M.; Bollmann, M.; Eberle, U.; Galbas, R. Criticality Analysis for the Verification and Validation of Automated Vehicles. IEEE Access 2021, 9, 18016–18041.

82. de Gelder, E.; Paardekooper, J.P.; Khabbaz Saberi, A.; Elrofai, H.; Op den Camp, O.; Kraines, S.; Ploeg, J.; De Schutter, B. Towards an Ontology for Scenario Definition for the Assessment of Automated Vehicles: An Object-Oriented Framework. IEEE Trans. Intell. Veh. 2022, 7, 300–314.

83. ISO 26262; Road Vehicles–Functional Safety. International Organization for Standardization: Geneva, Switzerland, 2018.

84. Paardekooper, J.P.; Montfort, S.; Manders, J.; Goos, J.; de Gelder, E.; Op den Camp, O.; Bracquemond, A.; Thiolon, G. Automatic Identification of Critical Scenarios in a Public Dataset of 6000 km of Public-Road Driving. In Proceedings of the 26th International Technical Conference on the Enhanced Safety of Vehicles (ESV), Eindhoven, The Netherlands, 10–13 June 2019.

85. Sagmeister, S.; Kounatidis, P.; Goblirsch, S.; Lienkamp, M. Analyzing the Impact of Simulation Fidelity on the Evaluation of Autonomous Driving Motion Control. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Jeju Island, Republic of Korea, 2–5 June 2024; pp. 230–237.

86. Akella, P.; Ubellacker, W.; Ames, A.D. Safety-Critical Controller Verification via Sim2Real Gap Quantification. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 10539–10545.

87. Sangeerth, P.; Jagtap, P. Quantification of Sim2Real Gap via Neural Simulation Gap Function. arXiv 2025, arXiv:2506.17675.

88. Park, S.; Pahk, J.; Jahn, L.L.F.; Lim, Y.; An, J.; Choi, G. A Study on Quantifying Sim2real Image Gap in Autonomous Driving Simulations Using Lane Segmentation Attention Map Similarity. In Proceedings of the International Conference on Intelligent Autonomous Systems, Qinhuangdao, China, 22–24 September 2023; pp. 203–212.

89. Mahajan, I.; Unjhawala, H.; Zhang, H.; Zhou, Z.; Young, A.; Ruiz, A.; Caldararu, S.; Batagoda, N.; Ashokkumar, S.; Negrut, D. Quantifying the Sim2real gap for GPS and IMU sensors. arXiv 2024, arXiv:2403.11000.

90. Pahk, J.; Shim, J.; Baek, M.; Lim, Y.; Choi, G. Effects of Sim2Real Image Translation via DCLGAN on Lane Keeping Assist System in CARLA Simulator. IEEE Access 2023, 11, 33915–33927.

91. Waheed, A.; Areti, M.; Gallantree, L.; Hasnain, Z. Quantifying the Sim2Real Gap: Model-Based Verification and Validation in Autonomous Ground Systems. IEEE Robot. Autom. Lett. 2025, 10, 3819–3826.

92. Petrucelli, E.; States, J.D.; Hames, L.N. The Abbreviated Injury Scale: Evolution, Usage and Future Adaptability. Accid. Anal. Prev. 1981, 13, 29–35.

93. de Gelder, E.; Hof, J.; Cator, E.; Paardekooper, J.P.; Op den Camp, O.; Ploeg, J.; De Schutter, B. Scenario Parameter Generation Method and Scenario Representativeness Metric for Scenario-Based Assessment of Automated Vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 23, 18794–18807.

94. Zhang, J.X.; Op den Camp, O.; de Vries, S.; Bourauel, B.; Hillbrand, B.; Nieto Doncel, M.; Gronvall, J.F.; Stern, D.; Bolovinou, A.; Arrieta Fernández, A.; et al. SUNRISE D2.3: Final SUNRISE Safety Assurance Framework; Technical Report; European Union, 2025.

95. Scholtes, M.; Schuldes, M.; Weber, H.; Wagener, N.; Hoss, M.; Eckstein, L. OMEGAFormat: A comprehensive format of traffic recordings for scenario extraction. In Proceedings of the Workshop Fahrerassistenz und automatisiertes Fahren, Berkheim, Germany, 9–11 May 2022; pp. 195–205.

96. Beckmann, J.; Torres Camara, J.M.; Kaynar, E.; de Gelder, E.; Daskalaki, E.; Hillbrand, B.; Amler, T.; Thorsén, A.; Irvine, P.; Kirchengast, M.; et al. SUNRISE D3.4: Report on Subspace Creation Methodology; Technical Report; European Union, 2025; in preparation.

97. Hillbrand, B.; Kirchengast, M.; Ballis, A.; Panagiotopoulos, I.; Menzel, T.; Amler, T.; Collado, E.; Beckmann, J.; Skoglund, M.; Thorsén, A.; et al. SUNRISE D3.3: Report on the Initial Allocation of Scenarios to Test Instances; Technical Report; European Union, 2024.

| Name | Definition in the Context of ADS Safety Assessment | Relevant References |
|---|---|---|
| Completeness | The extent to which a scenario description contains all the information necessary for meaningful analysis and decision-making | [10,16,17] |
| Criticality | Quantification of the potential risks and challenges in a scenario | [11,15,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32] |
| Coverage | The adequacy of a testing effort; the extent to which a set of scenarios addresses a given ODD | [2,17,33,34,35,36,37,38,39,40,41,42,43,44] |
| Diversity or dissimilarity | Quantification of how two scenarios are different from each other; spread across a scenario set | [45,46,47,48,49,50,51,52,53,54,55,56] |
| Exposure | The likelihood of encountering a scenario | [57,58,59,60,61,62,63,64,65,66,67] |
| Complexity | The degree of challenge a scenario presents to a human driver or ADS | [13,68,69,70,71,72,73,74,75,76,77,78] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


de Gelder, E.; Singh, T.; Hadj-Selem, F.; Vidal Bazan, S.; Op den Camp, O. Scenario Metrics for the Safety Assurance Framework of Automated Vehicles: A Review of Its Application. Vehicles 2025, 7, 100. https://doi.org/10.3390/vehicles7030100
