Abstract

The emergence of automotive-grade LiDARs has opened up new ways to develop novel advanced driver assistance systems (ADAS). However, accurate and reliable parking slot detection (PSD) remains a challenge, especially in the low-light conditions typical of indoor car parks. Existing camera-based approaches struggle under these conditions and require sensor fusion to determine parking slot occupancy. This paper proposes a PSD algorithm that uses the intensity of a LiDAR point cloud to detect the markings of perpendicular parking slots. LiDAR-based approaches are robust in low-light environments and can directly determine the occupancy status using 3D information. The proposed algorithm first segments the ground plane from the LiDAR point cloud and detects the main axis along the driving direction using the random sample consensus (RANSAC) algorithm. The remaining ground point cloud is filtered with a dynamic Otsu threshold, and the parking slot markings are detected separately in multiple windows along the driving direction. Parking slot hypotheses are generated between the markings and cross-checked with the non-ground point cloud to determine their occupancy status. Test results showed that the proposed algorithm robustly detects perpendicular parking slots in well-marked car parks with high precision, low width error, and low variance. The algorithm is designed so that it can later be extended to parallel parking slots and combined with free-space-based detection approaches. This solution addresses the limitations of camera-based systems and improves PSD accuracy and reliability in challenging lighting conditions.

1. Introduction

ADAS have developed rapidly during the last decade. Among them, automated parking systems can not only assist drivers in parking safely but are also expected to take over the complete parking process in the future. The first step of this automation is the detection of parking slots. An automated parking system can detect vacant parking slots with sensors, guide the vehicle towards the parking slot chosen by the driver, and monitor the environment to avoid collisions. According to [1], parking slot detection methods can be divided into vision-based and non-vision-based methods, such as ultrasonic- or LiDAR-based systems. Among vision-based methods, parking-slot-marking-based methods typically take camera images as input and use computer vision techniques to detect parking slot markings.

Although cameras are widely used in production vehicles, their performance is strongly affected by lighting conditions and by the limitations of indirect distance estimation. Cameras, which are mainly used in around view monitoring (AVM) systems, provide only a two-dimensional representation of the environment due to perspective projection. To effectively determine the occupancy status of parking slots detected in camera images, fusion with sensors of other modalities, such as ultrasonic sensors [2] or LiDAR [3], is required. This approach requires additional calibration to determine the extrinsic transformations as well as time synchronization between the different sensors.

In comparison to cameras, LiDARs provide point cloud data that contain not only the three-dimensional coordinates of each point but also its reflection intensity. With the emergence of automotive-grade LiDARs, vehicle manufacturers and suppliers are exploring the potential of LiDARs for novel ADAS functions. LiDAR data have already been widely applied in various driving scenarios. For example, on well-marked roads, LiDAR point clouds are segmented to detect the road plane, and the intensity levels are used to detect lane markings [4]. For automated parking, however, the detection of parking slot markings via LiDAR is still not well studied, and practical approaches for real-world use are lacking.

In this paper, an algorithm that detects perpendicular parking slots and determines their occupancy status based on a LiDAR point cloud was developed and tested in a well-marked semi-indoor car park, extending the use of automotive LiDAR to a new application field. Compared to camera-based PSD systems, using LiDAR sensors increases robustness, in particular in low-light conditions such as those in semi-indoor or indoor car parks. The proposed solution relies on a single sensor type, without the multiple sensors and complex sensor fusion used in hybrid PSD systems. In addition, it detects the parking markings and the occupancy status of the parking slots simultaneously. This is a significant advantage over existing solutions, as it reduces complexity and effort, e.g., in terms of computational power or the joint calibration of several sensors. As the LiDAR PSD system builds on well-known algorithms such as RANSAC, it can easily be extended to other parking scenarios, such as parallel parking or other parking geometries.

The paper is organized as follows:

- Section 2 provides an overview of related work on PSD.

- Section 3 introduces the methodology.

- Section 4 describes the processing pipeline of the proposed algorithm.

- Section 5 presents the test object and discusses the detection results.

- Section 6 concludes this paper with a summary and an outlook into future work.

2. Related Work

There are various sensor technologies and corresponding algorithms that can be used for PSD, each with its own advantages and limitations. Most approaches use sensors that are already common in the automotive field, such as cameras, ultrasonic sensors, or LiDAR, to avoid adding hardware to the car. This section is organized by sensor technology.

2.1. Ultrasonic-Based Systems

Ultrasonic sensors are frequently used in parking assistance systems to support the driver during the parking process. Mounted on the car body, they measure the distance to objects, such as other cars. They can also be used to detect empty parking spaces in drive-by parking space sensing applications [5]. These sensors are widely used due to their low cost and weight. However, ultrasonic-based systems can only detect whether an object is present in the vicinity of the vehicle. If there is a wide free space in front of the ultrasonic sensor, individual parking slots cannot be detected, as these sensors cannot perceive parking markings.

2.2. Camera-Based Systems

Camera-based algorithms are widely used for PSD. These systems analyze camera footage to identify empty parking spaces based on visual features. Several studies have shown that camera-based systems can achieve high accuracy in detecting parking slots and their occupancy, especially when combined with machine learning techniques such as convolutional neural networks (CNNs) [6]. They detect parking lines by analyzing images of the parking lot captured by cameras mounted on the vehicle. For example, Xu and Hu developed a PSD algorithm based on deep learning and fisheye images from cameras installed around the car body [7].

In a similar approach, Hamada et al. proposed a surround view-based parking assistance system and its implementation [8]. PSD methods that combine surround view imaging with different deep learning algorithms have also been developed, such as region-based convolutional neural networks (R-CNNs) [9,10], YOLOv4 [11], or semantic segmentation models. Refs. [12,13] review camera-based deep learning PSD algorithms based on surround view images.

Camera-based systems are limited in low-light conditions and in accurately estimating depth and distance. Moreover, as these systems are typically training-based, their detection results depend on the training data, and detection performance may vary accordingly.

2.3. LiDAR-Based Systems

LiDAR-based PSD has gained significant attention in recent years. LiDAR sensors use laser light to create a 2D or 3D representation of the environment (a point cloud) and to detect and locate objects in real time. Thornton et al. proposed an algorithm to monitor parking utilization in unmarked parallel parking areas using a two-dimensional LiDAR [14]. In [15], the authors used a 2D LiDAR for an autonomous valet parking system in a public parking lot with metal canopies over the parking spaces. Parts of the canopies served as reference elements for accurate positioning within the parking lot.

Using LiDAR sensors, it is also possible to detect road markings and to identify the shape, location, and orientation of the detected lines [16].

LiDAR sensors are also less affected by difficult lighting conditions and operate effectively by day or night, which makes them a good alternative to camera-based systems.

2.4. Hybrid Systems

For PSD applications, different sensors and algorithms can be combined via sensor fusion to detect the shape, location, and orientation of parking lines. This provides a more comprehensive understanding of the parking lot environment and makes parking more efficient and convenient. Together with machine learning algorithms, these hybrid approaches can improve performance and adapt to different environmental conditions.

A fusion of camera and LiDAR can offer significant advantages for the accurate detection of parking spaces, as described in [3]. The camera provides visual information for detecting various objects, including parking spaces, parking markings, and signs. LiDAR provides precise distance measurements and enables accurate depth perception and 3D mapping of the surroundings, including the detection of obstacles and the shape of parking spaces. In [3], parking spaces are detected by fusing AVM and LiDAR data based on parking lines. The parking line features are extracted using principal component analysis (PCA) and histogram analysis after LiDAR-based filtering and are used for both localization and mapping. In addition, a parking line-based rapid loop closure method is proposed for accurate localization in the parking lot.

The advantages of a hybrid system are its higher accuracy and redundancy. Combining data from different sensors can reduce false detections.

3. Methodology

As stated in Section 2, different sensors and sensor combinations can be used for parking slot detection and/or occupancy checks. So far, LiDAR sensors have not been used for simple parking areas without additional features, but as LiDAR sensors can detect markings as well as objects, they are suitable for a simultaneous detection of parking slots and occupancy status.

3.1. Target

The primary objective of this research is to devise an algorithm capable of detecting parking slots and their occupancy status. The target is a fast and reliable algorithm that relies only on input data from one type of sensor. The algorithm should be rule-based to eliminate the possibility of false detections caused by improper training data.

3.2. Sensor Selection

The selection of the sensor plays a pivotal role in the effectiveness and reliability of the proposed algorithm. In this regard, LiDAR is chosen as the preferred sensor due to its ability to provide detailed 3D point cloud data, robust performance in various lighting conditions, and compatibility with automotive environments.

3.3. Available Data Sources for Development

The algorithm was developed using real-world LiDAR data collected in controlled test scenarios in parking facilities. The raw point cloud data from the LiDAR sensors on both sides of the vehicle were used for development.

3.4. Selection of Algorithm

The algorithmic framework for parking slot detection and occupancy determination was chosen to align with the objectives of the research and the capabilities of the LiDAR sensor. The algorithm was designed according to the principles of point cloud processing, including preprocessing, feature extraction, and occupancy determination.

3.5. Implementation and Evaluation

The implementation phase involves translating the selected algorithmic framework into executable code and making the adjustments necessary to ensure compatibility with the target hardware and software. The algorithm parameters are subsequently tuned to optimize detection accuracy and robustness, taking into account factors such as environmental conditions and sensor characteristics.

3.6. Validation

To validate the efficacy and practicality of the proposed algorithm, real-life tests were conducted. The algorithm was deployed in a test vehicle equipped with the necessary sensor hardware, and parking maneuvers were driven in real-world parking environments. The performance of the algorithm was assessed in terms of its ability to accurately detect parking slots and determine their occupancy status in various scenarios.

By describing each step of the methodology, this section provides an overview of the research approach and of the systematic process followed in developing and evaluating the proposed algorithm.

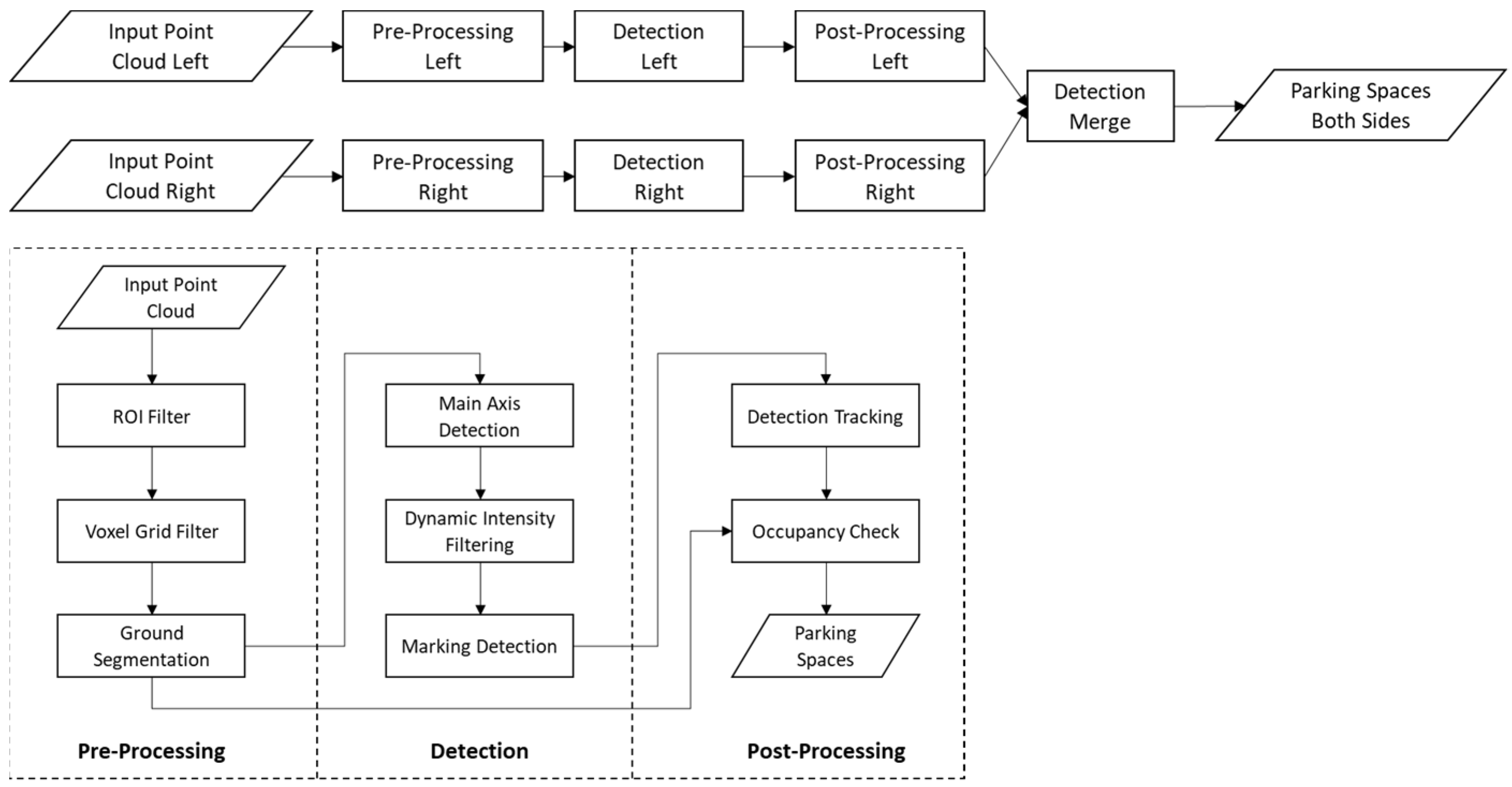

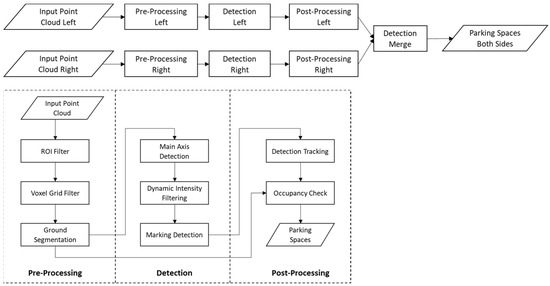

4. Proposed Algorithm

Figure 1 (top) illustrates the overall processing pipeline of the proposed algorithm. The detection takes point cloud data from both side-facing LiDARs as input, and the parking slots detected on each side are merged to generate the output. No filter-based fusion of the LiDAR data is performed, as the two LiDARs do not have overlapping fields of view. Instead, the steps shown in Figure 1 (bottom) are applied to each sensor individually. Figure 1 (bottom) depicts a schematic view of the algorithm, and the following subsections explain the detection steps for a single LiDAR in detail. The input LiDAR point cloud is first cropped and downsampled to increase computational efficiency. The ground plane is then segmented from the remaining point cloud in the region of interest (ROI). The main axis along the vehicle driving direction and the markings of perpendicular parking slots are detected on the ground plane using the RANSAC algorithm. Parking slot hypotheses are generated between adjacent markings and tracked with the help of vehicle odometry data. Finally, the tracked parking slots are cross-checked with the non-ground point cloud to determine their occupancy status.

Figure 1.

Processing pipeline of the proposed PSD algorithm. Top: high-level processing pipeline for both side-facing LiDARs. Bottom: detection pipeline for a single LiDAR.

4.1. Preprocessing

Preprocessing refers to a set of data manipulation and filtering techniques applied to the raw LiDAR point cloud. It aims to enhance the quality, accuracy, and usefulness of the point cloud data by addressing issues such as noise, outliers, and irregularities.

This module first normalizes the incoming data. It transforms the point cloud from the sensor frame into a common reference frame called “base_link”, in which all further operations are performed. By common convention in the automotive field, “base_link” is located at the middle of the rear axle at ground level, with the x-axis pointing in the driving direction and the y-axis pointing to the left of the vehicle.
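The normalization step amounts to applying each sensor's extrinsic calibration (a rotation R and a translation t) to every point. The following Python/NumPy sketch shows this transform for points stored as [x, y, z, intensity] rows; the mounting position and yaw in the example are hypothetical values chosen for illustration, not the calibration of the test vehicle.

```python
import numpy as np


def yaw_rotation(yaw_rad: float) -> np.ndarray:
    """Rotation matrix for a sensor yawed by yaw_rad about the vertical axis."""
    c, s = np.cos(yaw_rad), np.sin(yaw_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def to_base_link(points_sensor: np.ndarray, rotation: np.ndarray,
                 translation: np.ndarray) -> np.ndarray:
    """Transform (N, 4) rows of [x, y, z, intensity] from the sensor frame to base_link.

    Applies p_base = R @ p_sensor + t to every point; intensity is copied unchanged.
    """
    xyz = points_sensor[:, :3] @ rotation.T + translation
    return np.hstack([xyz, points_sensor[:, 3:4]])


# Hypothetical mounting: left LiDAR 1.5 m ahead of the rear axle, 0.9 m to the left,
# 1.0 m above ground, looking to the left (yaw = +90 degrees).
R_left = yaw_rotation(np.pi / 2)
t_left = np.array([1.5, 0.9, 1.0])
scan = np.array([[2.0, 0.0, -1.0, 37.0]])  # 2 m in front of the sensor, 1 m below it
out = to_base_link(scan, R_left, t_left)
```

In this example, a point 2 m in front of the left-facing sensor lands at about (1.5, 2.9, 0.0) in base_link, i.e., on the ground to the left of the vehicle.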

4.1.1. ROI Filtering and Downsampling

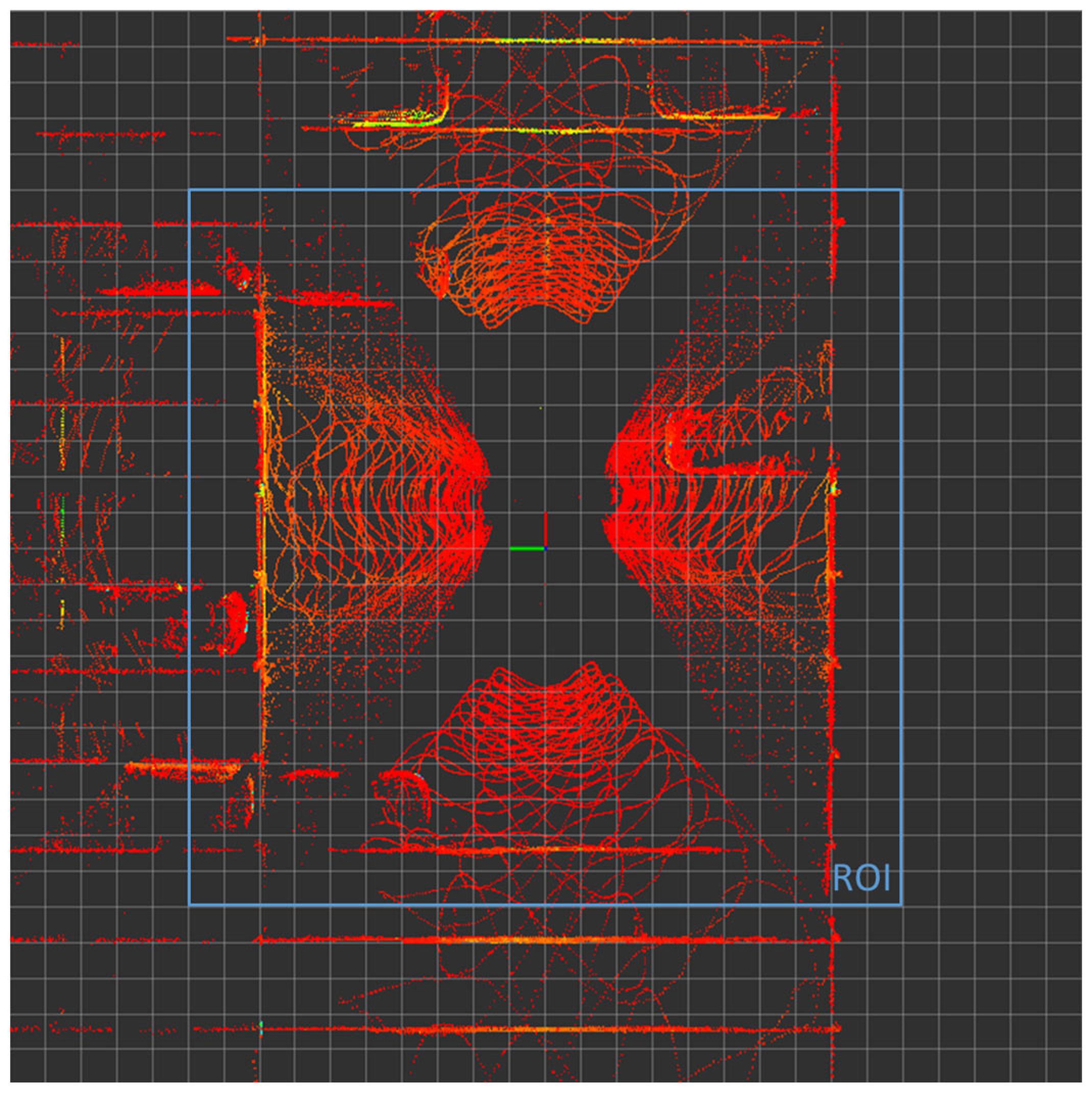

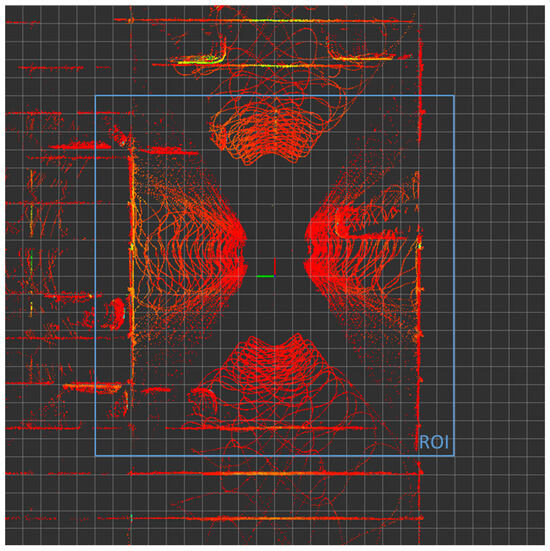

After normalization, the point cloud is filtered with an ROI, which was determined by analyzing the target use case in a well-marked car park. ISO 20900 defines the typical depth of a perpendicular parking slot as 6 m [17], so the ROI must be significantly larger for proper detection of perpendicular parking slots. Considering the field of view (FOV) of the LiDAR sensor as well as the distance between the vehicle side and the beginning of the parking slot markings, the horizontal ROI is defined as a 20 m × 20 m square. In the vertical dimension, a height of 3 m is chosen to remove LiDAR points on the ceiling of the car park while preserving enough information about potential obstacles on the ground. As a result, all points outside the ROI cuboid (20 m × 20 m × 3 m) are removed, which reduces the point cloud size and excludes possible outliers. Figure 2 shows the input point cloud (including data from four LiDARs, one mounted on each side of the vehicle) and the ROI; the grid cell size is 1 m. The selected ROI clearly includes the most significant LiDAR points around the vehicle.
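A minimal sketch of the ROI crop, assuming the 20 m × 20 m square is centred on the base_link origin and that the 3 m vertical extent starts slightly below ground level (z_min = -0.5 m, a hypothetical choice that keeps noisy ground points). Neither placement is specified above, so both are exposed as parameters.

```python
import numpy as np


def crop_roi(points: np.ndarray, half_size: float = 10.0,
             z_min: float = -0.5, height: float = 3.0) -> np.ndarray:
    """Keep the rows of an (N, 4) [x, y, z, intensity] array inside an axis-aligned cuboid.

    Horizontal extent: a (2 * half_size) x (2 * half_size) square centred on base_link
    (20 m x 20 m by default). Vertical extent: [z_min, z_min + height].
    """
    x, y, z = points[:, 0], points[:, 1], points[:, 2]
    inside = (
        (np.abs(x) <= half_size)
        & (np.abs(y) <= half_size)
        & (z >= z_min)
        & (z <= z_min + height)
    )
    return points[inside]


cloud = np.array([
    [0.0, 0.0, 0.0, 5.0],    # ground point next to the vehicle: kept
    [15.0, 0.0, 0.0, 5.0],   # outside the 20 m x 20 m square: removed
    [0.0, 0.0, 2.9, 5.0],    # ceiling point above z_min + height = 2.5 m: removed
    [1.0, -4.0, 1.0, 5.0],   # e.g. a point on a parked car: kept
])
roi = crop_roi(cloud)
```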

Figure 2.

Input point cloud and ROI.

To further reduce the point cloud size and increase the computation speed of the subsequent detection pipeline, a voxel grid filter is applied to the ROI-filtered point cloud. Leaf sizes commonly used in tutorials range from 0.01 to 0.1 m [18]. Practical experiments showed that a leaf size of 0.1 m strikes an effective balance between detection accuracy, processing speed, and overall robustness. After downsampling, the point cloud is ready for ground plane segmentation.
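A voxel grid filter replaces all points that fall into the same cubic voxel by a single representative point. The NumPy sketch below uses the voxel centroid as the representative, similar to PCL's VoxelGrid filter; whether the proposed algorithm averages the intensity channel in the same way is an assumption.

```python
import numpy as np


def voxel_downsample(points: np.ndarray, leaf_size: float = 0.1) -> np.ndarray:
    """Replace all rows of an (N, C) array that share a cubic voxel by their mean.

    Voxels are indexed from the first three columns (x, y, z); every column,
    including intensity, is averaged per voxel.
    """
    keys = np.floor(points[:, :3] / leaf_size).astype(np.int64)
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)  # the inverse's shape differs across NumPy versions
    sums = np.zeros((counts.size, points.shape[1]))
    np.add.at(sums, inverse, points)  # unbuffered per-voxel accumulation
    return sums / counts[:, None]


cloud = np.array([
    [0.01, 0.01, 0.0, 10.0],
    [0.05, 0.02, 0.0, 20.0],  # same 0.1 m voxel as the first point
    [0.50, 0.50, 0.0, 30.0],  # a different voxel
])
down = voxel_downsample(cloud)
```

The first two points collapse into one point at their centroid with the mean intensity, while the third is kept as is.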

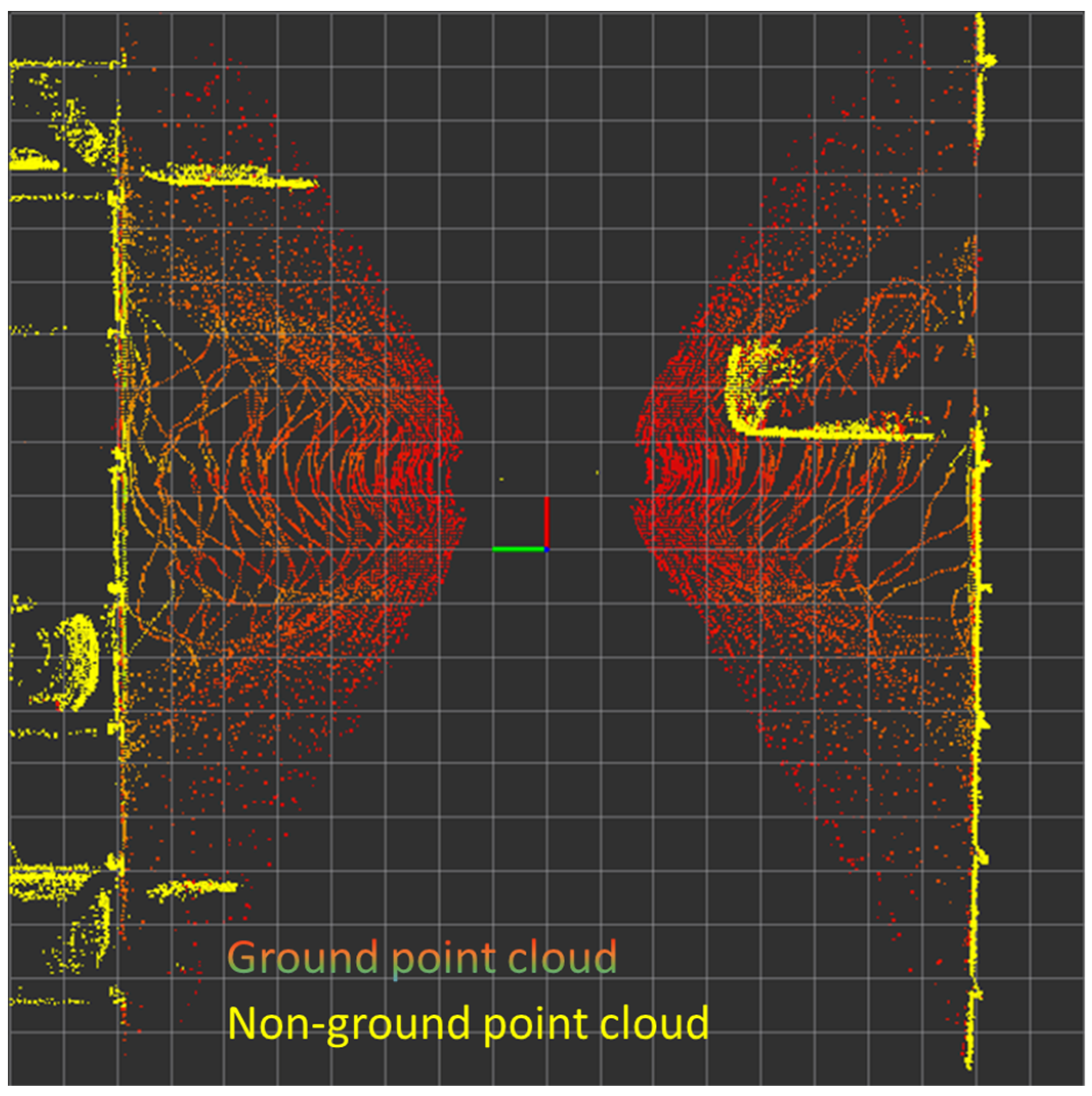

4.1.2. Ground Plane Segmentation

The proposed PSD algorithm uses a normal estimation method in combination with RANSAC [19] for ground plane segmentation. Normal estimation computes the direction of the surface normal at each point by analyzing the point's local neighborhood. The normals provide information about the orientation of the underlying surfaces and can help identify the ground plane.
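Normal estimation is commonly implemented as a local principal component analysis: the normal at a point is the eigenvector belonging to the smallest eigenvalue of the covariance matrix of its neighbours. The sketch below illustrates this with a brute-force k-nearest-neighbour search. The value k = 10 and the 10° tilt limit in the hypothetical `near_horizontal` helper are illustrative, and how exactly the proposed algorithm combines the normals with RANSAC is not specified above.

```python
import numpy as np


def estimate_normals(xyz: np.ndarray, k: int = 10) -> np.ndarray:
    """Unit normal per point: eigenvector of the smallest eigenvalue of the
    covariance matrix of its k nearest neighbours (the point itself included)."""
    # Brute-force O(N^2) neighbour search; a KD-tree would be used on real scans.
    d2 = np.sum((xyz[:, None, :] - xyz[None, :, :]) ** 2, axis=-1)
    neighbours = np.argsort(d2, axis=1)[:, :k]
    normals = np.empty_like(xyz)
    for i, idx in enumerate(neighbours):
        patch = xyz[idx] - xyz[idx].mean(axis=0)
        cov = patch.T @ patch / len(idx)
        _, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
        n = eigvecs[:, 0]
        normals[i] = n if n[2] >= 0.0 else -n  # orient all normals upwards (+z)
    return normals


def near_horizontal(normals: np.ndarray, max_tilt_deg: float = 10.0) -> np.ndarray:
    """Boolean mask of points whose normal is within max_tilt_deg of the z-axis."""
    return normals[:, 2] >= np.cos(np.radians(max_tilt_deg))


gx, gy = np.meshgrid(np.arange(5) * 0.1, np.arange(5) * 0.1)
ground_patch = np.column_stack([gx.ravel(), gy.ravel(), np.zeros(gx.size)])
wall_patch = np.column_stack([np.full(25, 2.0), gx.ravel(), gy.ravel() + 0.5])  # plane x = 2
normals = estimate_normals(np.vstack([ground_patch, wall_patch]))
mask = near_horizontal(normals)
```

Points on the flat patch get normals close to +z and pass the check, while points on the vertical wall do not.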

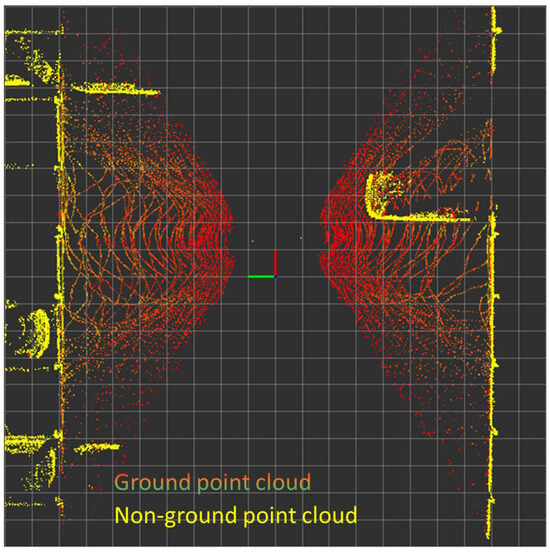

The plane segmentation starts by randomly selecting a small subset of points from the point cloud to represent a candidate ground plane model. A plane model is fitted to the selected points using a method such as least-squares fitting. RANSAC then evaluates how well the fitted plane represents the ground surface by counting the inliers, i.e., the points whose distance (e.g., Euclidean distance) to the fitted plane lies below a predefined threshold. If the number of inliers exceeds a predefined minimum, the model is considered valid. These steps are repeated for a fixed number of iterations, and RANSAC keeps track of the best model found so far. Once the specified number of iterations is completed, the model with the highest number of inliers is selected as the final ground plane model. All points within the distance threshold of this model are considered part of the ground, and the rest are considered non-ground points. Figure 3 shows the segmentation results in the ROI, where the non-ground point cloud is highlighted in yellow. After segmentation, the ground and non-ground point clouds are processed separately.
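The RANSAC loop described above can be sketched as follows. For brevity, each candidate plane is fitted to a minimal sample of three points (the classic RANSAC formulation) rather than by least squares over a larger subset, and the surface normals are not used; the distance threshold, iteration count, and minimum inlier count are hypothetical values.

```python
import numpy as np


def ransac_ground_plane(xyz, dist_thresh=0.05, iterations=200, min_inliers=100, seed=0):
    """Split an (N, 3) cloud into (ground, non_ground) with a RANSAC plane fit."""
    rng = np.random.default_rng(seed)
    best_mask, best_count = None, 0
    for _ in range(iterations):
        p0, p1, p2 = xyz[rng.choice(len(xyz), size=3, replace=False)]
        normal = np.cross(p1 - p0, p2 - p0)
        norm = np.linalg.norm(normal)
        if norm < 1e-9:  # collinear sample: no unique plane
            continue
        normal = normal / norm
        inliers = np.abs((xyz - p0) @ normal) < dist_thresh  # point-to-plane distance
        count = int(inliers.sum())
        if count >= min_inliers and count > best_count:  # valid and best so far
            best_mask, best_count = inliers, count
    if best_mask is None:
        raise ValueError("no plane with enough inliers found")
    return xyz[best_mask], xyz[~best_mask]


rng = np.random.default_rng(1)
ground_pts = np.column_stack([rng.uniform(-5, 5, 200), rng.uniform(-5, 5, 200), np.zeros(200)])
obstacle_pts = np.column_stack([rng.uniform(-5, 5, 50), rng.uniform(-5, 5, 50),
                                rng.uniform(0.5, 2.0, 50)])
ground, non_ground = ransac_ground_plane(np.vstack([ground_pts, obstacle_pts]))
```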

Figure 3.

Preprocessed point cloud.

4.2. Detection

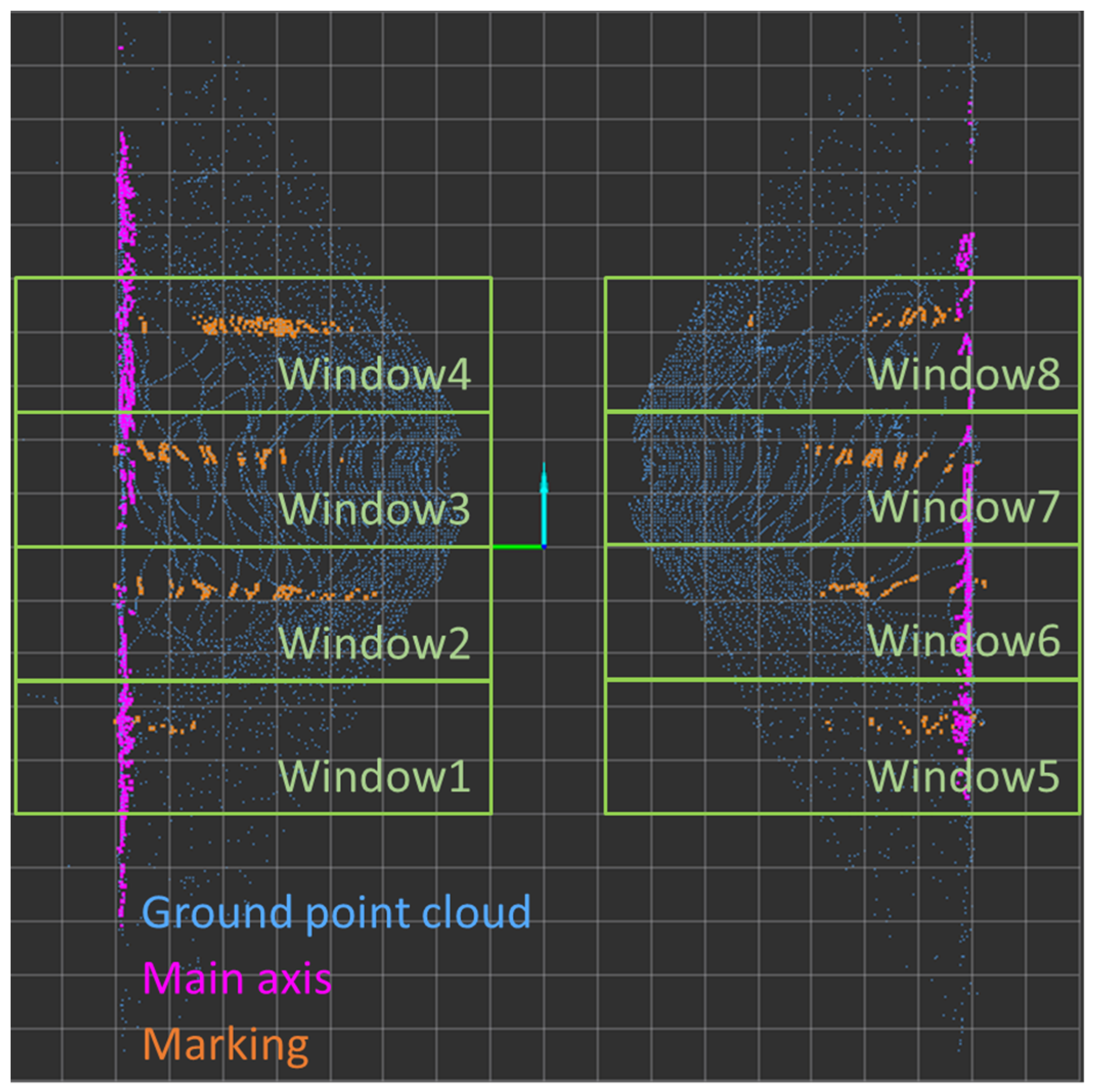

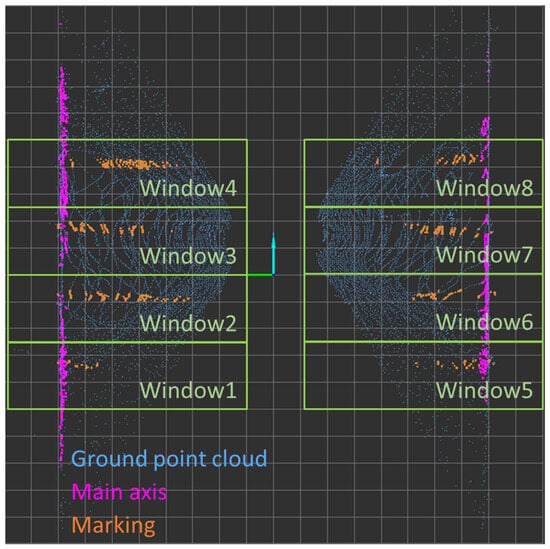

After preprocessing, the ground point cloud is evaluated to detect parking slots using the difference in LiDAR intensity between points on the markings and other points. First, high-intensity points are extracted with a dynamic threshold derived from the overall intensity distribution of the LiDAR points on the ground plane. Then, the main boundary line (main axis) along the driving direction and the markings of the perpendicular parking slots are detected. Based on the detected markings, parking slot hypotheses are generated between each pair of neighboring markings with a predefined depth of 5 m.

4.2.1. Intensity Filtering

Analysis of the LiDAR data shows that the intensity of points on markings is higher than that of points on the surrounding ground. Therefore, the points of interest can be extracted by filtering on their reflection intensity. However, the intensity of LiDAR points can be affected by environmental conditions such as temperature, humidity, or light, and the same material was found to show different reflection intensities under varying conditions. Therefore, a dynamic thresholding method is applied to increase the flexibility and robustness of the marking detection.

Otsu’s method [19], originally developed for thresholding in image processing, is adapted to the LiDAR point cloud in the proposed algorithm. The method classifies the points into two classes, high and low intensity, and determines the intensity threshold dynamically by maximizing the between-class variance of the two classes. This threshold is then used to extract the high-intensity points for marking detection. Figure 4 shows the intensity-filtered point cloud on the ground plane, with the full ground point cloud shown in dark blue for reference.
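The following sketch applies Otsu's method to a 1D array of point intensities, using a histogram as in the image-processing formulation; the number of bins is an assumption. For each candidate split it computes the between-class variance and returns the threshold with the largest value.

```python
import numpy as np


def otsu_threshold(intensity: np.ndarray, bins: int = 256) -> float:
    """Intensity threshold maximising the between-class variance (Otsu's method).

    Points with intensity >= the returned value form the high-intensity class.
    """
    hist, edges = np.histogram(intensity, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    p = hist / hist.sum()                 # probability of each histogram bin
    w0 = np.cumsum(p)                     # weight of the low class (bins 0..k)
    w1 = 1.0 - w0                         # weight of the high class (bins k+1..)
    mu_k = np.cumsum(p * centers)         # cumulative first moment up to bin k
    mu_total = mu_k[-1]
    between = np.zeros_like(w0)
    valid = (w0 > 1e-12) & (w1 > 1e-12)   # both classes must be non-empty
    # sigma_B^2(k) = (mu_T * w0(k) - mu(k))^2 / (w0(k) * w1(k))
    between[valid] = (mu_total * w0[valid] - mu_k[valid]) ** 2 / (w0[valid] * w1[valid])
    k = int(np.argmax(between))
    return float(edges[k + 1])            # upper edge of the last low-intensity bin


# Hypothetical ground intensities: 90 asphalt returns and 10 brighter marking returns
ground_intensity = np.array([8.0, 9.0, 10.0, 11.0, 12.0] * 18 + [78.0, 79.0, 80.0, 81.0, 82.0] * 2)
t = otsu_threshold(ground_intensity)
marking_intensity = ground_intensity[ground_intensity >= t]
```

Because the threshold is recomputed from each scan's own intensity distribution, a global shift in intensity (for example due to changed environmental conditions) shifts the threshold with it.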

Figure 4.

Intensity-filtered ground point cloud.

4.2.2. Main Axis Detection

RANSAC is again employed to estimate lines in the intensity-filtered point cloud. The main axis, highlighted in violet in Figure 4, corresponds to the boundary of the parking area. It is derived from the intensity-filtered point cloud and is aligned parallel to the vehicle’s driving direction. However, experiments showed that the main axis is not always detected consistently. One such situation occurs when objects obstruct the sensor’s field of view, a condition referred to below as “shadowing”. In such cases, the main axis may be detected only partially, which can lead to inaccurate detection. To mitigate this, the number of points on the main axis and its alignment with the driving direction are checked. Only a valid main axis triggers the marking detection.

In addition, the detection process stops when the input point cloud contains insufficient data. This is a useful mechanism on parking ramps and at entrances and exits, as it minimizes false positives and improves computational efficiency.
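The main-axis step, including the validity checks and the early exit for insufficient data, might look like the following 2D RANSAC line fit on the (x, y) coordinates of the intensity-filtered points. All thresholds are hypothetical; only the structure (line fit, inlier count check, and alignment check against the x-axis of base_link, i.e., the driving direction) follows the description above.

```python
import math

import numpy as np


def detect_main_axis(xy, dist_thresh=0.1, iterations=200, min_input=50,
                     min_inliers=30, max_angle_deg=15.0, seed=0):
    """RANSAC line fit on (N, 2) points; returns (point, unit_direction, inlier_mask) or None."""
    if len(xy) < min_input:
        return None  # insufficient input (e.g. ramps, entrances): skip this cycle
    rng = np.random.default_rng(seed)
    best = None
    for _ in range(iterations):
        i, j = rng.choice(len(xy), size=2, replace=False)
        d = xy[j] - xy[i]
        length = math.hypot(d[0], d[1])
        if length < 1e-6:  # duplicate sample points define no line
            continue
        d = d / length
        normal = np.array([-d[1], d[0]])
        inliers = np.abs((xy - xy[i]) @ normal) < dist_thresh  # point-to-line distance
        if best is None or inliers.sum() > best[2].sum():
            best = (xy[i], d, inliers)
    if best is None:
        return None
    point, d, inliers = best
    # Validity checks: enough points on the axis and alignment with the driving direction
    angle_deg = math.degrees(math.acos(min(1.0, abs(float(d[0])))))
    if inliers.sum() < min_inliers or angle_deg > max_angle_deg:
        return None  # e.g. an axis only partially visible due to shadowing
    return point, d, inliers


axis_pts = np.column_stack([np.linspace(0.0, 10.0, 100), np.full(100, -3.0)])
marking_pts = np.column_stack([np.full(20, 2.0), np.linspace(-3.5, -8.0, 20)])
result = detect_main_axis(np.vstack([axis_pts, marking_pts]))
```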

4.2.3. Marking Detection

After a valid main axis has been detected, the points on the axis are removed from the ground point cloud, and the remaining points serve as input for marking detection. Multiple windows (green boxes in Figure 4) are placed along the driving direction in the detection area on both sides of the vehicle. In each window, the RANSAC algorithm is applied to detect a valid marking, shown as orange points in Figure 4. Initial parking slot hypotheses are generated between each pair of neighboring marking lines with a predefined depth of 5 m. Finally, the parking slot hypotheses are postprocessed to determine their occupancy status.
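The sketch below illustrates window-based marking detection and hypothesis generation. It assumes the points have already been rotated so that the main axis lies on the x-axis (y = 0) and that the slots extend away from the vehicle. As a simplification, it takes the median x-coordinate of each window's points as the marking position instead of running RANSAC per window. The window length, minimum point count, and the width gate in `generate_hypotheses` are hypothetical; only the 5 m depth is taken from the text.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class SlotHypothesis:
    x_start: float  # marking position at the start of the slot [m]
    x_end: float    # marking position at the end of the slot [m]
    depth: float    # slot depth perpendicular to the main axis [m]
    side: int       # +1: slot extends to +y (left), -1: to -y (right)

    @property
    def width(self) -> float:
        return self.x_end - self.x_start

    def corners(self):
        d = self.side * self.depth
        return [(self.x_start, 0.0), (self.x_end, 0.0), (self.x_end, d), (self.x_start, d)]


def detect_markings(xy, window_length=1.0, x_min=-10.0, x_max=10.0, min_points=5):
    """One marking position per window: median x of its points (stand-in for per-window RANSAC)."""
    markings = []
    for start in np.arange(x_min, x_max, window_length):
        in_window = xy[(xy[:, 0] >= start) & (xy[:, 0] < start + window_length)]
        if len(in_window) >= min_points:
            markings.append(float(np.median(in_window[:, 0])))
    return markings


def generate_hypotheses(markings, side=-1, depth=5.0, min_width=2.0, max_width=3.5):
    """Slot hypotheses between neighbouring markings with a plausible (hypothetical) width."""
    xs = sorted(markings)
    return [SlotHypothesis(a, b, depth, side)
            for a, b in zip(xs, xs[1:]) if min_width <= b - a <= max_width]


marking_xy = np.vstack([np.column_stack([np.full(10, x0), np.linspace(-0.5, -5.0, 10)])
                        for x0 in (0.0, 2.5, 5.0, 7.5)])
markings = detect_markings(marking_xy)
slots = generate_hypotheses(markings, side=-1)
```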

4.3. Postprocessing

Postprocessing refines and analyzes the detected parking slots. Each detected parking slot is assigned a unique ID that remains constant over the detection cycles. The tracked parking slots are then cross-checked with the non-ground point cloud to determine their occupancy status.

4.3.1. Detection Tracking

The task of the tracking algorithm is to assign a constant ID to each detected parking slot. To achieve this, previously detected parking slots are stored in memory, and their new position and orientation are predicted from the vehicle displacement (odometry). Newly detected parking slots that match a predicted counterpart are assigned the same ID as in the previous cycle; parking slots that cannot be matched with a prediction are assigned a new ID. Predicted parking slots without a newly detected counterpart are retained only up to a maximum range, so that the predictions remain accurate. The tracking results are later used to evaluate the detection performance.
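A minimal tracking sketch under stated assumptions: slots are represented by their centre and yaw, the ego motion (dx, dy, dyaw) between cycles comes from odometry and is expressed in the previous vehicle frame, detections are associated greedily with the nearest prediction within a hypothetical 1 m gate, and the "maximum range" is interpreted as the distance driven since a slot was last matched. None of these details are specified above.

```python
import math
from dataclasses import dataclass
from itertools import count


@dataclass
class TrackedSlot:
    slot_id: int
    x: float                # slot centre in the current vehicle frame [m]
    y: float
    yaw: float              # slot orientation [rad]
    travelled: float = 0.0  # distance driven since the last matched detection [m]


def predict(slot: TrackedSlot, dx: float, dy: float, dyaw: float) -> TrackedSlot:
    """Re-express a slot in the new vehicle frame after the ego motion (dx, dy, dyaw),
    given in the previous vehicle frame: p_new = R(-dyaw) @ (p_old - t)."""
    tx, ty = slot.x - dx, slot.y - dy
    c, s = math.cos(-dyaw), math.sin(-dyaw)
    return TrackedSlot(slot.slot_id, c * tx - s * ty, s * tx + c * ty,
                       slot.yaw - dyaw, slot.travelled + math.hypot(dx, dy))


class SlotTracker:
    def __init__(self, gate: float = 1.0, max_unmatched_distance: float = 15.0):
        self.gate = gate                                      # max centre distance for a match [m]
        self.max_unmatched_distance = max_unmatched_distance  # hypothetical "maximum range" [m]
        self.slots = []                                       # list of TrackedSlot
        self._next_id = count(1)

    def update(self, detections, dx, dy, dyaw):
        """detections: (x, y, yaw) tuples in the current vehicle frame."""
        predicted = [predict(s, dx, dy, dyaw) for s in self.slots]
        unmatched = list(range(len(predicted)))
        tracked = []
        for x, y, yaw in detections:
            # Greedy nearest-neighbour association with the remaining predictions
            best = min(unmatched, default=None,
                       key=lambda i: math.hypot(predicted[i].x - x, predicted[i].y - y))
            if best is not None and math.hypot(predicted[best].x - x,
                                               predicted[best].y - y) < self.gate:
                unmatched.remove(best)
                tracked.append(TrackedSlot(predicted[best].slot_id, x, y, yaw))  # keep old ID
            else:
                tracked.append(TrackedSlot(next(self._next_id), x, y, yaw))      # new ID
        # Unmatched predictions are kept until the vehicle has driven too far
        tracked += [predicted[i] for i in unmatched
                    if predicted[i].travelled <= self.max_unmatched_distance]
        self.slots = tracked
        return tracked


tracker = SlotTracker()
first = tracker.update([(5.0, -4.0, 0.0)], 0.0, 0.0, 0.0)
second = tracker.update([(4.05, -4.0, 0.0)], 1.0, 0.0, 0.0)   # drove 1 m forward
third = tracker.update([(3.0, -4.0, 0.0), (10.0, -4.0, 0.0)], 1.0, 0.0, 0.0)
fourth = tracker.update([], 1.0, 0.0, 0.0)                     # no detections this cycle
```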

4.3.2. Occupancy Check

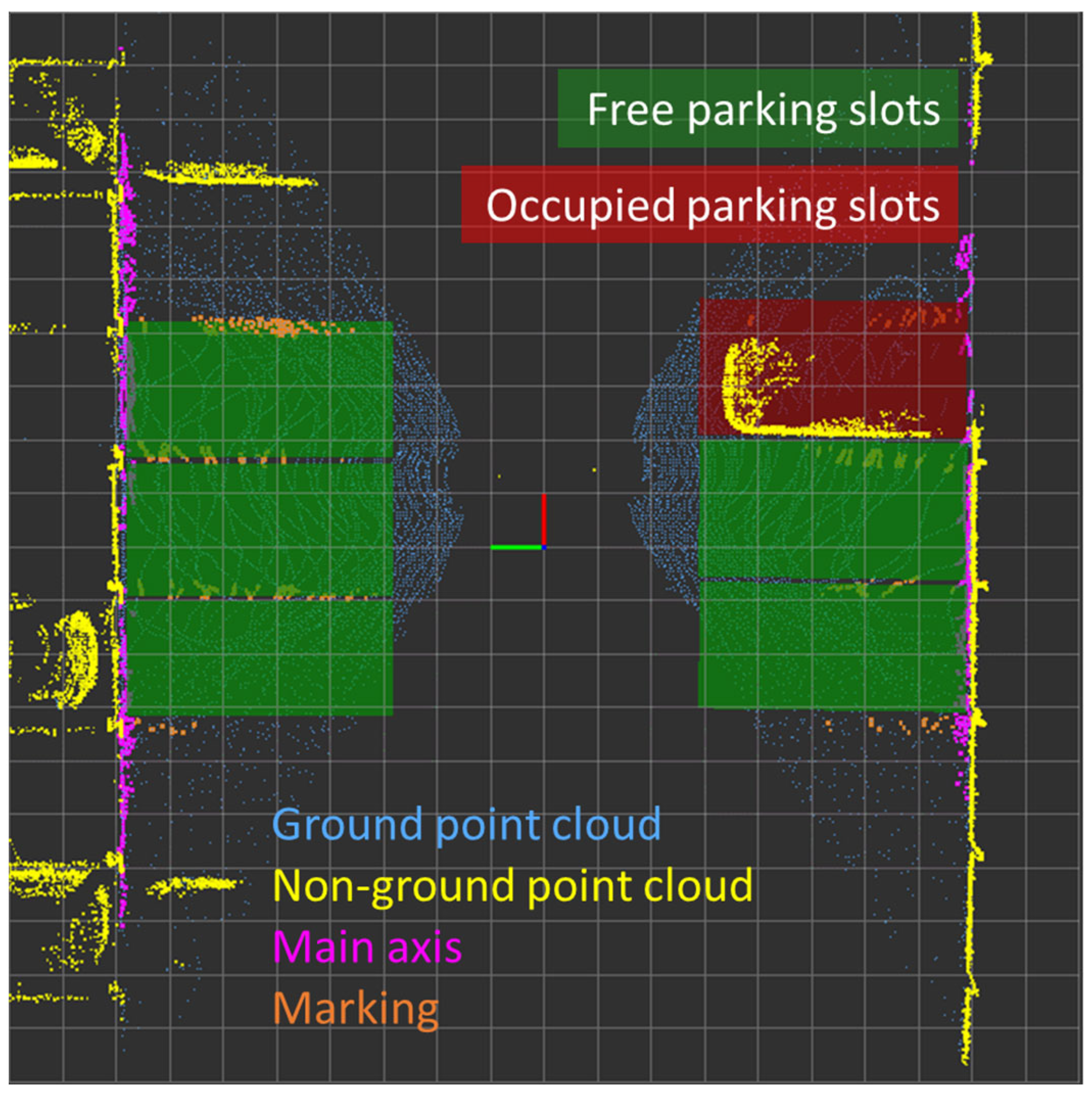

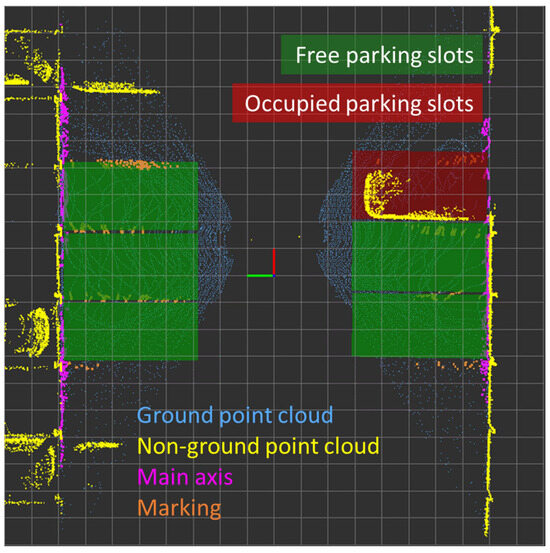

The parking slots detected and tracked in the ground point cloud are cross-checked with the non-ground point cloud to determine their occupancy status. As the ground and non-ground point clouds are segmented from the same input point cloud, neither extra calibration nor time synchronization is needed. The occupancy status of a parking slot is determined by counting the number of LiDAR points inside a cuboid above it. Based on prior experiments, a threshold of 25 points is chosen to decide whether a parking slot is vacant or occupied. The transparent green boxes in Figure 5 are detected parking slots that were identified as free.
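The occupancy check reduces to counting non-ground points in a cuboid above each slot. The sketch assumes slot-aligned axes (e.g., after alignment with the main axis), so the cuboid can be axis-aligned. The cuboid height of 2 m and counting exactly 25 points as occupied are assumptions; the 25-point threshold itself is taken from the text.

```python
import numpy as np


def points_in_box(xyz, x_range, y_range, z_range):
    """Number of (N, 3) points inside an axis-aligned box given as (min, max) ranges."""
    x, y, z = xyz[:, 0], xyz[:, 1], xyz[:, 2]
    inside = ((x >= x_range[0]) & (x <= x_range[1])
              & (y >= y_range[0]) & (y <= y_range[1])
              & (z >= z_range[0]) & (z <= z_range[1]))
    return int(inside.sum())


def is_occupied(non_ground_xyz, x_range, y_range, z_range=(0.0, 2.0), threshold=25):
    """A slot counts as occupied when at least `threshold` non-ground points lie in its cuboid."""
    return points_in_box(non_ground_xyz, x_range, y_range, z_range) >= threshold


rng = np.random.default_rng(0)
car = np.column_stack([rng.uniform(0.3, 2.2, 30), rng.uniform(-4.5, -1.0, 30),
                       rng.uniform(0.2, 1.4, 30)])
neighbour = np.column_stack([rng.uniform(3.0, 5.0, 40), rng.uniform(-4.5, -1.0, 40),
                             rng.uniform(0.2, 1.4, 40)])  # car in the next slot
slot_x, slot_y = (0.0, 2.5), (-5.0, 0.0)
```

Because the non-ground points come from the same scan as the detected markings, the cuboid can be evaluated directly in base_link without any cross-sensor transform.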

Figure 5.

Detected parking slots.

5. Experiments and Analysis

5.1. Experimental Setup

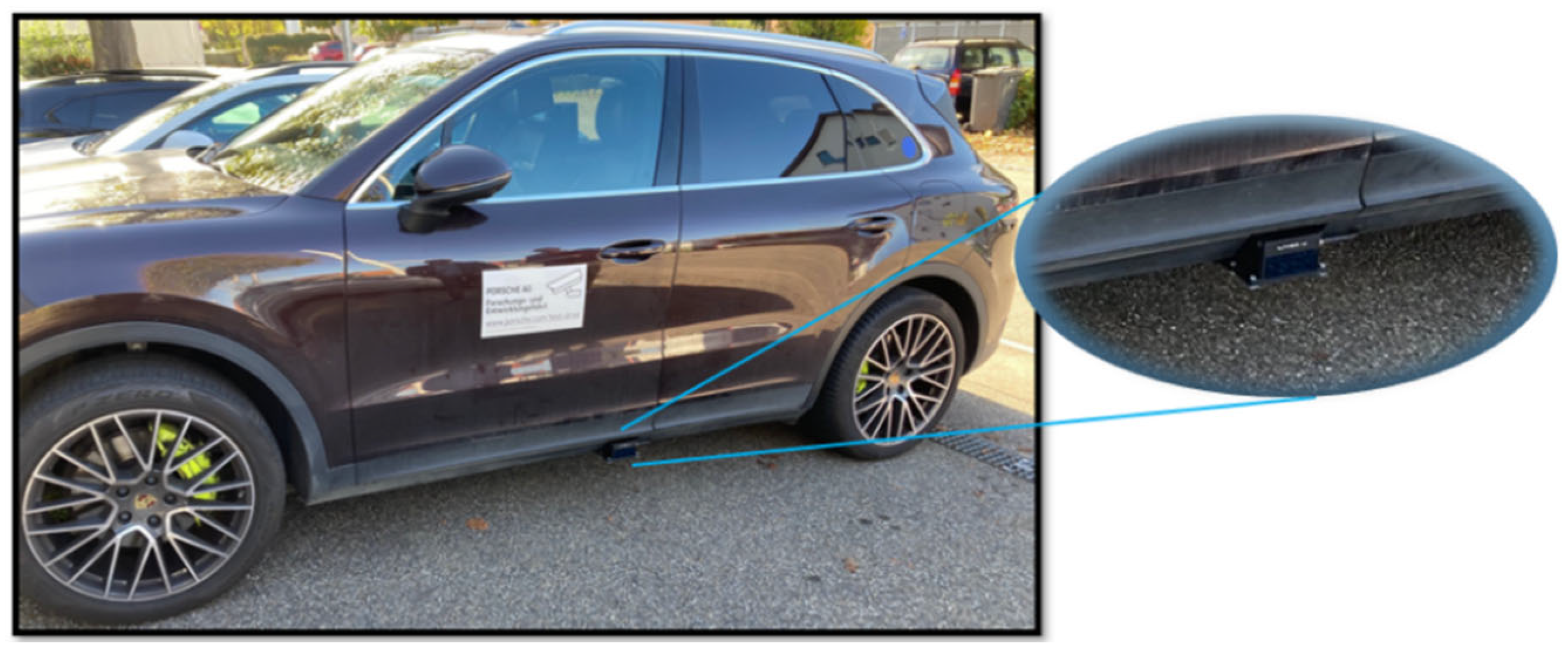

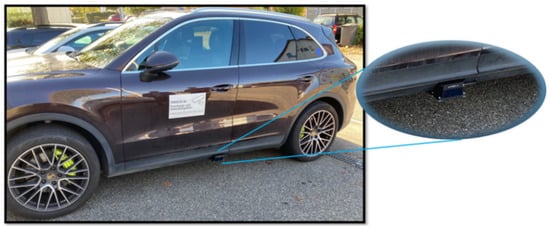

The ROS-based research platform JUPITER [20] of Porsche Engineering was used to test the proposed algorithm. Two Livox HAP LiDARs with a horizontal FOV of 120°, mounted on the left and right sides of the vehicle as shown in Figure 6, served as the input for the detection pipeline. The side LiDARs were calibrated against a 40-line Hesai Pandora 360° LiDAR sensor kit on the roof of the vehicle: the transformation between the roof LiDAR and the side LiDARs was identified by point cloud matching, and the extrinsic transformation between the side LiDARs and the vehicle coordinate system was determined.

Figure 6.

Test vehicle JUPITER and side LiDAR.

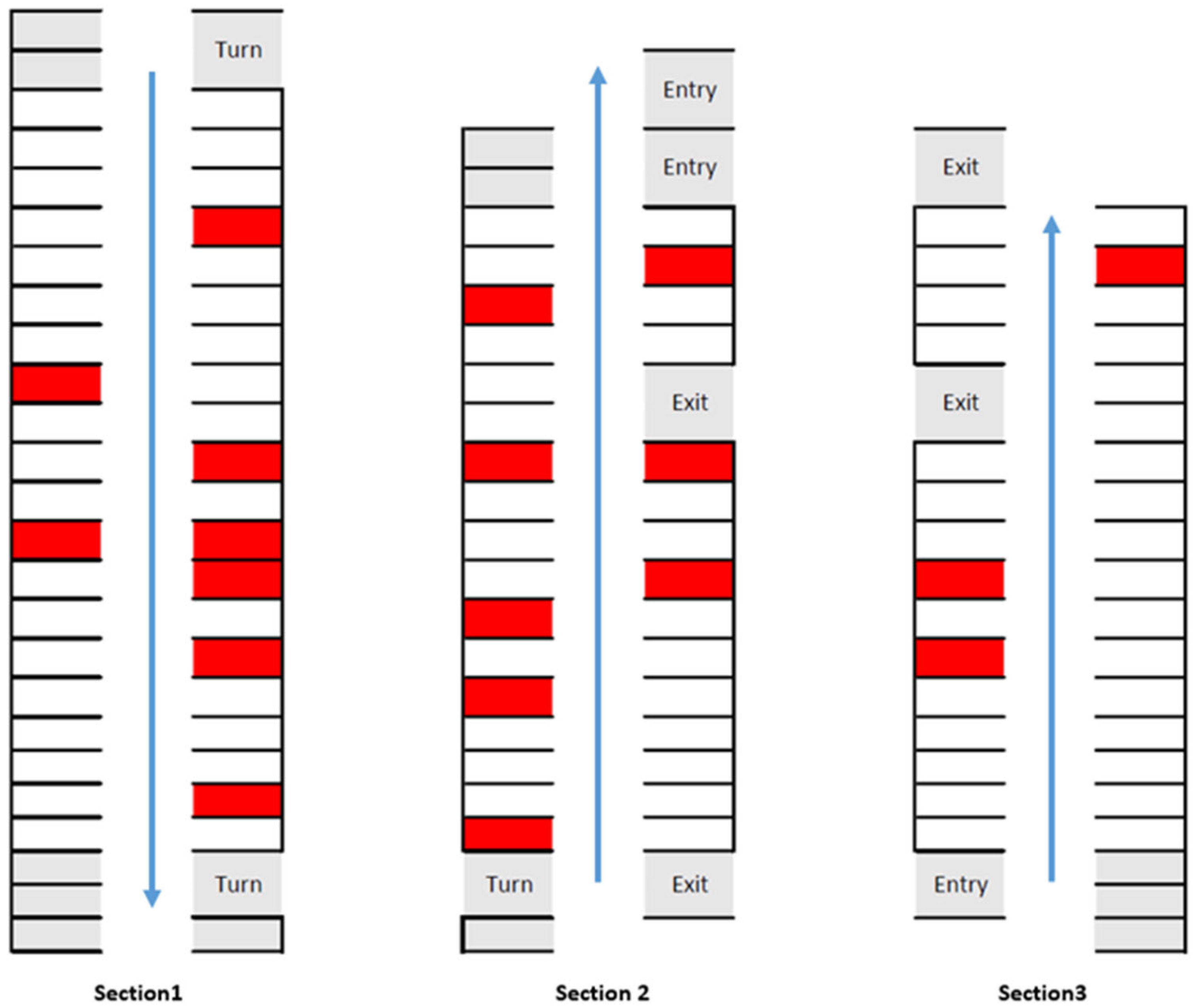

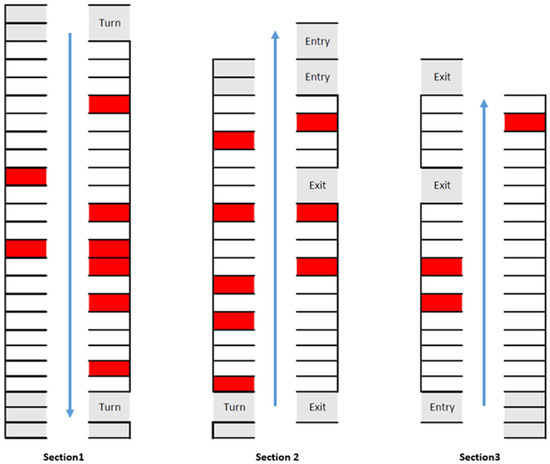

The experiments were conducted in a multistory car park near Porsche Engineering in Mönsheim, Germany, with well-marked open-end perpendicular parking slots. Figure 7 illustrates the routes of the test drives, which correspond to the standard driving routes within this car park; the test area was split into three sections. Occupied parking slots are marked red. Since the vehicle is assumed to drive along the main driving lane, the parking slots shown in gray were excluded from the evaluation. During the driving experiments, the maximum angle between the driving direction and the main driving lane was about 15°.

Figure 7.

Test section of the car park.

5.2. Evaluation Metrics

Metrics were first defined to evaluate the performance of the proposed algorithm. Object detection methods are mostly assessed in terms of recall and precision [21]. In the context of parking slot detection, the metrics are defined as follows:
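Assuming the standard definitions from object detection [21], detection recall is TP / (TP + FN) and detection precision is TP / (TP + FP). Here TP counts detected slots that match a ground-truth slot, FP counts detections without a ground-truth counterpart, and FN counts ground-truth slots that were missed. How detections are matched to the ground truth is not specified here. A small Python helper:

```python
def detection_metrics(true_positives: int, false_positives: int, false_negatives: int):
    """Return (recall, precision) for parking slot detection."""
    recall = true_positives / (true_positives + false_negatives)     # share of real slots found
    precision = true_positives / (true_positives + false_positives)  # share of detections that are real
    return recall, precision


recall, precision = detection_metrics(90, 10, 30)
```

In this example, 90 of 120 real slots are found (recall 0.75), and 90 of 100 detections are real slots (precision 0.9).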

The occupancy check was calculated for the correctly detected parking slots and has the following definition:

5.3. Results and Discussion

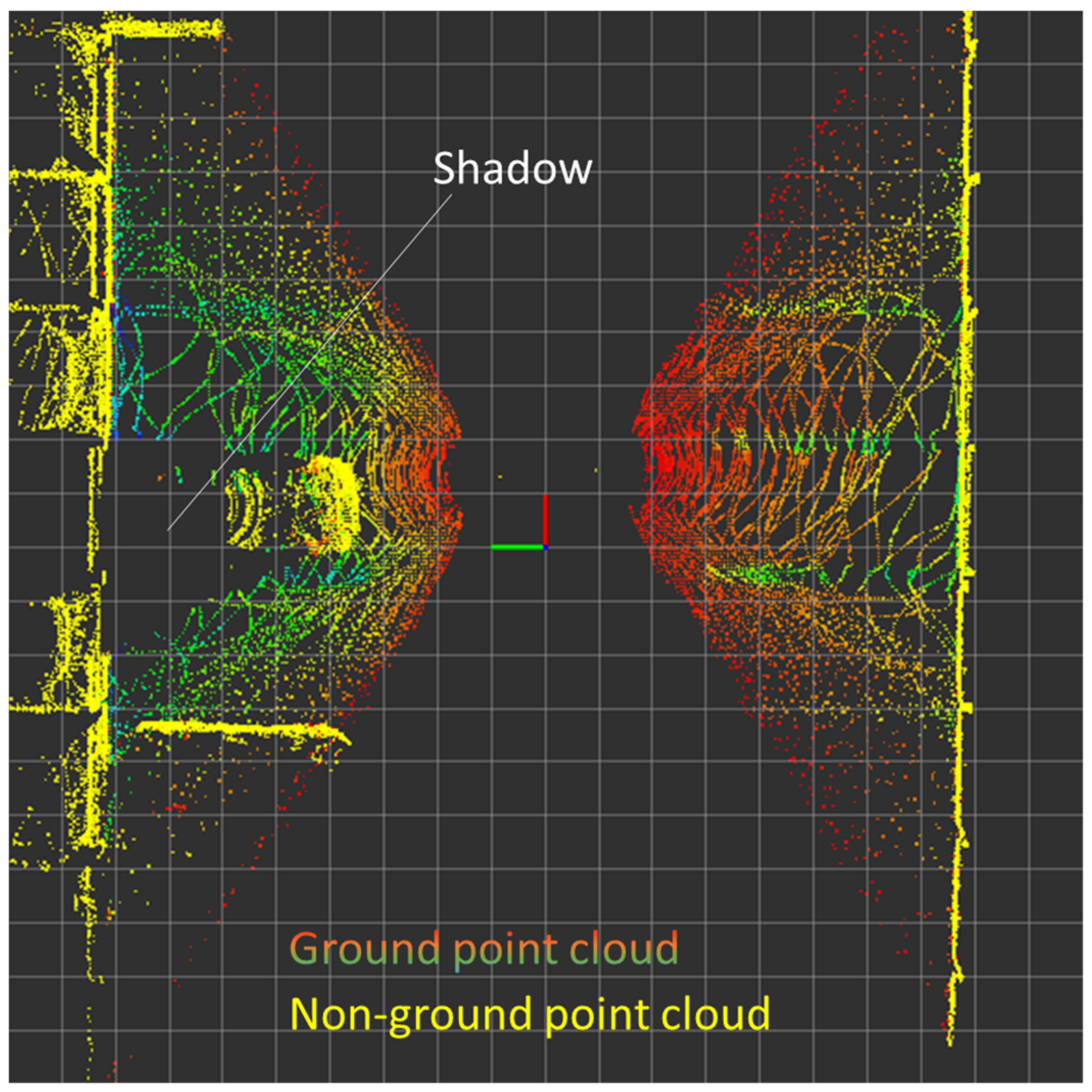

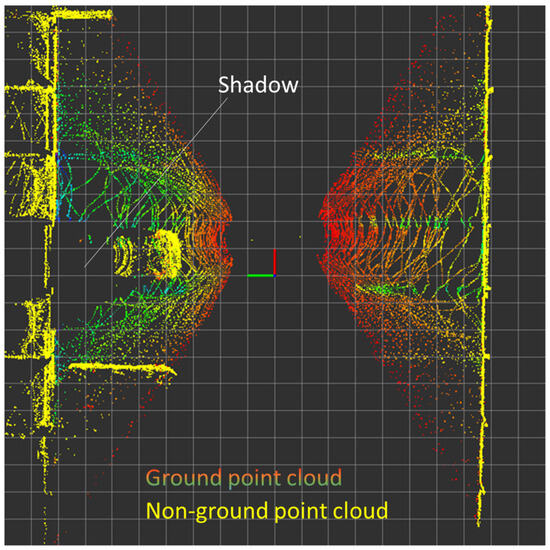

Table 1 summarizes the test results of the proposed algorithm. The algorithm can detect parking slots and check their occupancy status with high precision at different speeds typical for driving in a car park. The detection recall was affected by the number of occupied parking slots in the test environment, as all of the missed parking slots were occupied. The reduced detection recall can be explained by the shadowing effect, as illustrated in Figure 8. The parked vehicles blocked the LiDAR scans, resulting in an insufficient number of LiDAR points on the main axis and/or the markings for a successful detection.

Table 1.

Summary of test results.

Figure 8.

Shadowing effect due to the parked vehicles.

A direct comparison of these results with those of other papers, such as [2], is difficult, as there is no benchmark dataset and our input data differ from those of previous work. For perpendicular parking, both the detection recall (average of 98.12%) and the detection precision (average of 97.9%) are considerably higher than the results reported in [2] for a method using AVM images only (55.4% detection recall and 94.8% detection precision) and higher than those of the hybrid method in [2] that fuses AVM images and odometry (95.5% detection recall and 95.9% detection precision).

Furthermore, the width accuracy of the detected parking slots is evaluated by comparing the detected width with the ground truth width of 2.5 m, which was measured directly in the car park. The average error of each parking slot is calculated and then averaged over all detected parking slots. The absolute average width error lies well below 10 cm in all test runs. This is considerably better than the single-sensor solutions in [2], i.e., the odometry-based and the image-based methods for perpendicular parking slots, and slightly higher than the more complex fusion-based method in [2]. Nevertheless, a direct comparison is difficult due to the different experimental setups and environments. In our case, part of this error could be caused by the width of the markings in the car park, which was 25 cm.

As for detection stability, the variance of each parking slot is calculated and then averaged over all detected parking slots. The average variance over all detected parking slots is around 3 cm, which shows that the detection results are stable over the cycles.
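A sketch of the width statistics described above, assuming the width of each tracked slot is recorded in every cycle. It follows one reading of the text: the mean error per slot is averaged over all slots before taking the absolute value, and the variance is the population variance of each slot's width series (in squared units of the input), averaged over all slots.

```python
from statistics import mean, pvariance


def width_statistics(widths_per_slot, ground_truth=2.5):
    """widths_per_slot maps a slot ID to the widths [m] measured for it in successive cycles.

    Returns (absolute average width error, average per-slot variance).
    """
    per_slot_error = [mean(widths) - ground_truth for widths in widths_per_slot.values()]
    per_slot_variance = [pvariance(widths) for widths in widths_per_slot.values()]
    return abs(mean(per_slot_error)), mean(per_slot_variance)


abs_error, avg_variance = width_statistics({1: [2.40, 2.60], 2: [2.55, 2.65]})
```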

6. Conclusions

In this paper, a novel algorithm to detect parking markings in a semi-indoor car park using standard automotive LiDARs is proposed. It opens up a new application for LiDAR sensors and removes the need for several sensor systems for marking detection and occupancy checks. Instead, a single-sensor solution is presented that performs marking detection and occupancy checks simultaneously, reducing the number of sensors, the system complexity, and the effort compared to existing hybrid solutions, as no sensor fusion is needed, unlike in [2]. In addition, the calibration effort is reduced to one sensor type, whereas hybrid systems require the calibration of multiple sensors, which is more complex, as described in [22]. The approach also offers improved robustness to external disturbances such as lighting conditions compared to vision-based systems [23].

The main axis along the driving direction is detected in the segmented ground point cloud using RANSAC. The remaining ground point cloud is further filtered with a dynamic Otsu threshold, and parking markings are detected with RANSAC in multiple windows around the vehicle. The occupancy status of the detected parking slots is determined by cross-checking with the non-ground point cloud, avoiding the effort needed for sensor fusion and extrinsic calibration. The test results show that vacant parking slots can be detected with high recall (average of 98.12%), high precision (average of 97.9%), and high stability, which makes the proposed single-sensor algorithm a robust input for automated parking functions. Because it builds on well-known algorithms, the proposed algorithm can be extended to other parking scenarios, e.g., to detect parallel parking slots, or combined with free-space-based detection approaches to handle different parking scenarios.

Author Contributions

Conceptualization, J.G. and M.P.; methodology, J.G., M.P., F.H. and A.R.; software, A.R.; validation, A.R. and J.G.; investigation, A.R. and J.G.; data curation, J.G. and A.R.; writing—original draft preparation, A.R.; writing—review and editing, M.P., J.G. and F.H.; visualization, A.R. and J.G.; supervision, F.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

Jing Gong, Amod Raut, and Marcel Pelzer are employed by Porsche Engineering Services GmbH. The remaining author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Ma, Y.; Liu, Y.; Zhang, L.; Cao, Y.; Guo, S.; Li, H. Research Review on Parking Space Detection Method. Symmetry 2021, 13, 128.

- Suhr, J.K.; Jung, H.G. Sensor Fusion-Based Vacant Parking Slot Detection and Tracking. IEEE Trans. Intell. Transp. Syst. 2014, 15, 21–36.

- Im, G.; Kim, M.; Park, J. Parking Line Based SLAM Approach Using AVM/LiDAR Sensor Fusion for Rapid and Accurate Loop Closing and Parking Space Detection. Sensors 2019, 19, 4811.

- Ghallabi, F.; Nashashibi, F.; El-Haj-Shhade, G.; Mittet, M.-A. LIDAR-Based Lane Marking Detection for Vehicle Positioning in an HD Map. In Proceedings of the 2018 IEEE 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018.

- Park, W.-J.; Kim, B.-S.; Seo, D.-E.; Kim, D.-S.; Lee, K.-H. Parking space detection using ultrasonic sensor in parking assistance system. In Proceedings of the 2008 IEEE Intelligent Vehicles Symposium, Eindhoven, The Netherlands, 4–6 June 2008; pp. 1039–1044.

- Zhang, L.; Huang, J.; Li, X.; Xiong, L. Vision-Based Parking-Slot Detection: A DCNN-Based Approach and a Large-Scale Benchmark Dataset. IEEE Trans. Image Process. 2018, 27, 5350–5364.

- Xu, C.; Hu, X. Real time detection algorithm of parking slot based on deep learning and fisheye image. J. Phys. Conf. Ser. 2020, 1518, 012037.

- Hamada, K.; Hu, Z.; Fan, M.; Chen, H. In Proceedings of the 2015 IEEE Intelligent Vehicles Symposium, Seoul, Republic of Korea, 27 June–1 July 2015.

- Zinelli, A.; Musto, L.; Pizzati, F. A Deep-Learning Approach for Parking Slot Detection on Surround-View Images. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019.

- Jiang, S.; Jiang, H.; Ma, S.; Jiang, Z. Detection of parking slots based on mask R-CNN. Appl. Sci. 2020, 10, 4295.

- Wang, L.; Musabini, A.; Leonet, C.; Benmokhtar, R.; Breheret, A.; Yedes, C.; Bürger, F.; Boulay, T.; Perrotton, X. Holistic Parking Slot Detection with Polygon-Shaped Representations. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 5797–5803.

- Lai, C.; Yang, Q.; Guo, Y.; Bai, F.; Sun, H. Semantic Segmentation of Panoramic Images for Real-Time Parking Slot Detection. Remote Sens. 2022, 14, 3874.

- Wong, G.S.; Goh, K.O.M.; Tee, C.; Sabri, A.Q.M. Review of Vision-Based Deep Learning Parking Slot Detection on Surround View Images. Sensors 2023, 23, 6869.

- Thornton, A.A.; Redmill, K.; Coifman, B. Automated parking surveys from lidar equipped vehicle. Transp. Res. Part C Emerg. Technol. 2014, 39, 23–35.

- Jiménez, F.; Clavijo, M.; Cerrato, A. Perception, positioning and decision-making algorithms adaption for an autonomous valet parking system based on infrastructure reference points using one single lidar. Sensors 2022, 22, 979.

- Hata, A.; Wolf, D. Road marking detection using LIDAR reflective intensity data and its application to vehicle localization. In Proceedings of the 2014 17th IEEE International Conference on Intelligent Transportation Systems (ITSC), Qingdao, China, 8–11 October 2014; pp. 584–589.

- ISO 20900:2023(en); Intelligent Transport Systems—Partially-Automated Parking Systems (PAPS)—Performance Requirements and Test Procedures. International Organization for Standardization: Geneva, Switzerland, 2023.

- Downsampling a PointCloud Using a VoxelGrid Filter. Available online: https://pcl.readthedocs.io/projects/tutorials/en/latest/voxel_grid.html (accessed on 21 December 2023).

- Wang, R.; Lai, X.; Hou, W. Study on Edge Detection of LIDAR Point Cloud. In Proceedings of the 2011 International Conference on Intelligent Computation and Bio-Medical Instrumentation, Wuhan, China, 14–17 December 2011; pp. 71–73.

- Haselberger, J.; Pelzer, M.; Schick, B.; Müller, S. JUPITER—ROS based Vehicle Platform for Autonomous Driving Research. In Proceedings of the 2022 IEEE International Symposium on Robotic and Sensors Environments (ROSE), Abu Dhabi, United Arab Emirates, 14–15 November 2022; pp. 1–8.

- Padilla, R.; Netto, S.L.; da Silva, E.A.B. A Survey on Performance Metrics for Object-Detection Algorithms. In Proceedings of the 2020 International Conference on Systems, Signals and Image Processing (IWSSIP), Niteroi, Brazil, 1–3 July 2020; pp. 237–242.

- Yeong, D.J.; Velasco-Hernandez, G.; Barry, J.; Walsh, J. Sensor and Sensor Fusion Technology in Autonomous Vehicles: A Review. Sensors 2021, 21, 2140.

- Wang, P. Research on Comparison of LiDAR and Camera in Autonomous Driving. J. Phys. Conf. Ser. 2021, 2093, 012032.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).