Collision Risk in Autonomous Vehicles: Classification, Challenges, and Open Research Areas

Abstract

1. Introduction

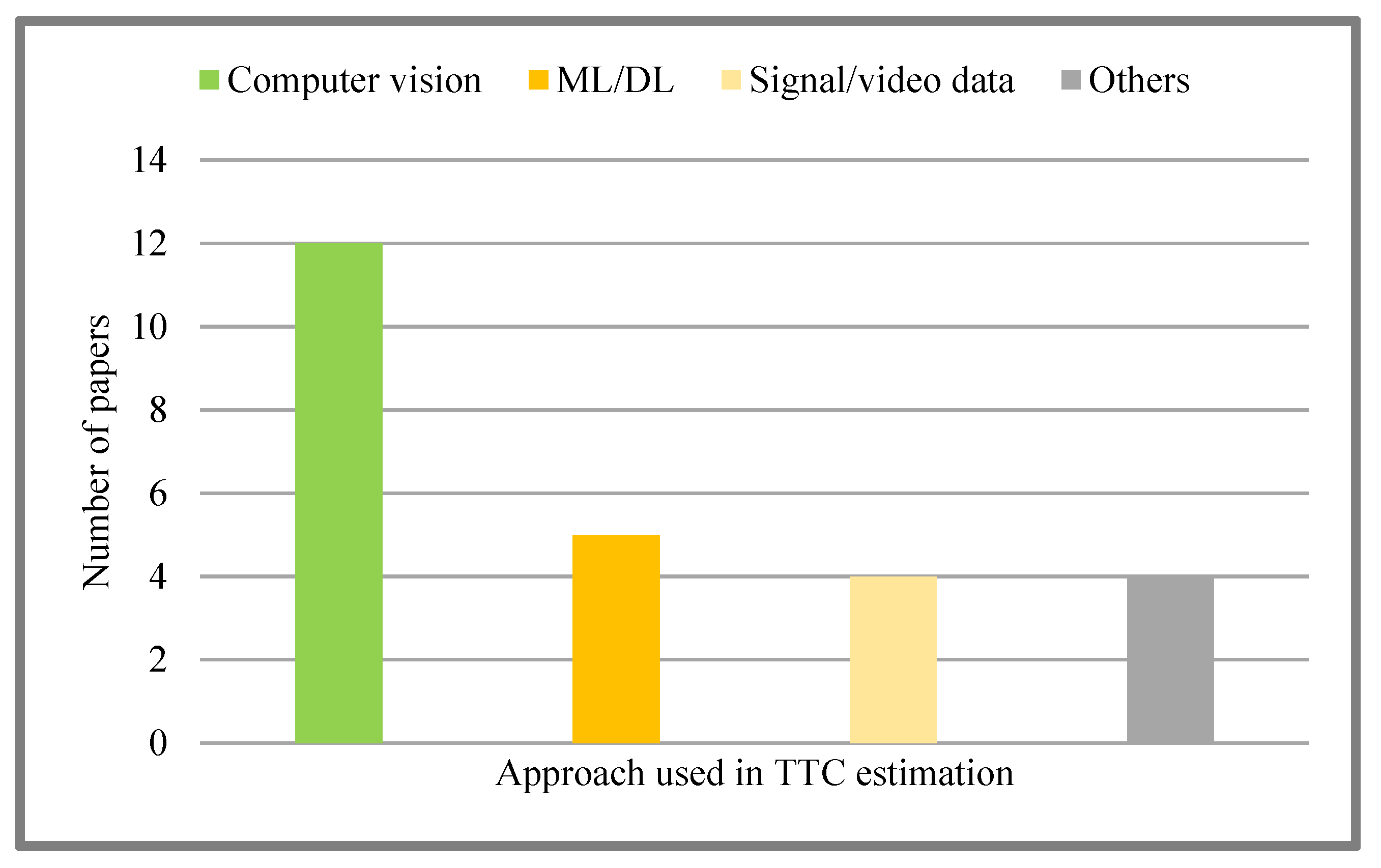

2. Time to Collision

2.1. Computer Vision-Based Techniques

2.2. AI-Based Techniques

2.3. Miscellaneous Techniques

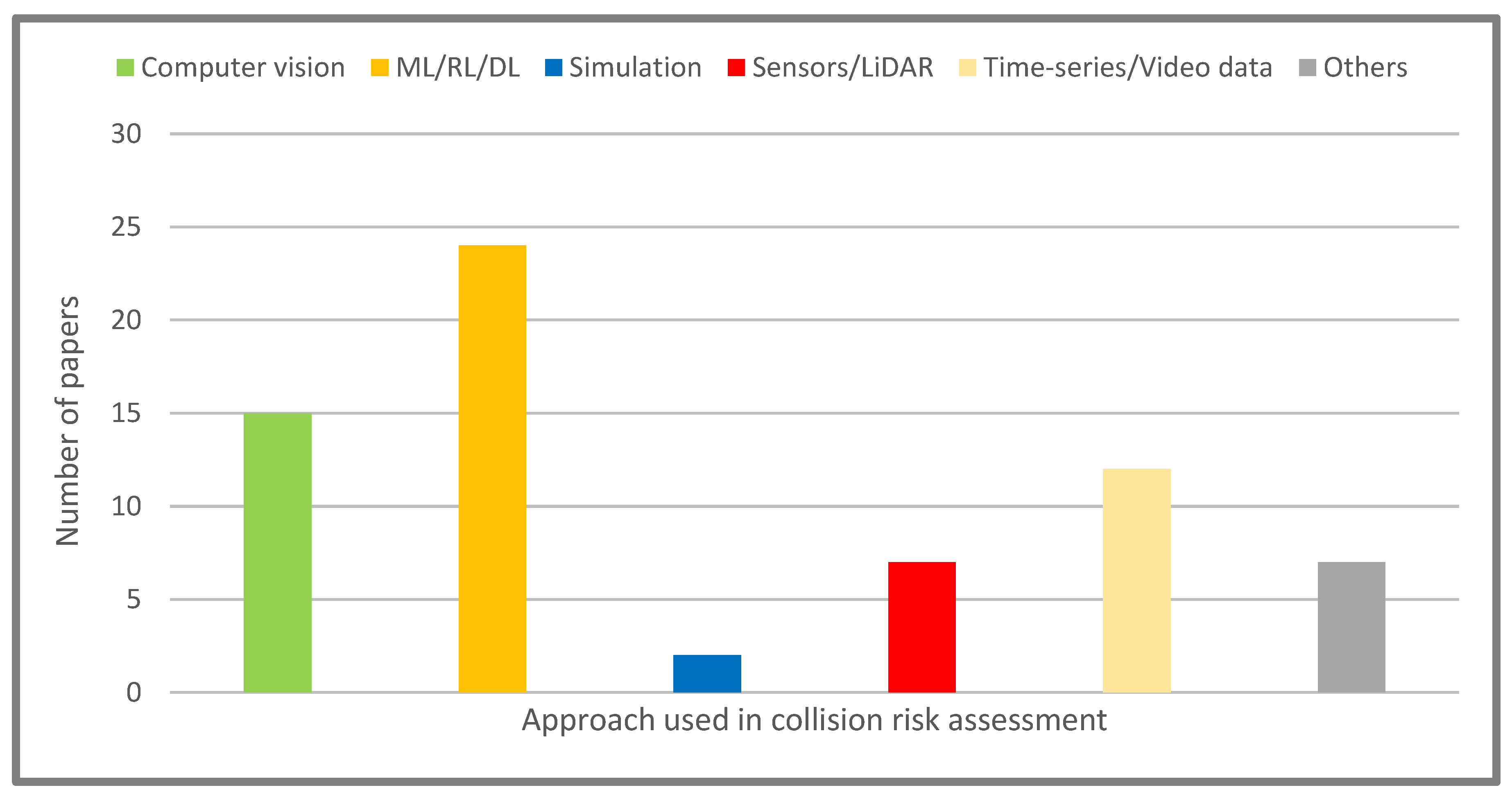

3. Collision Risk

3.1. AI-Based Techniques

3.2. Sensor-Based Techniques

3.3. Miscellaneous Techniques

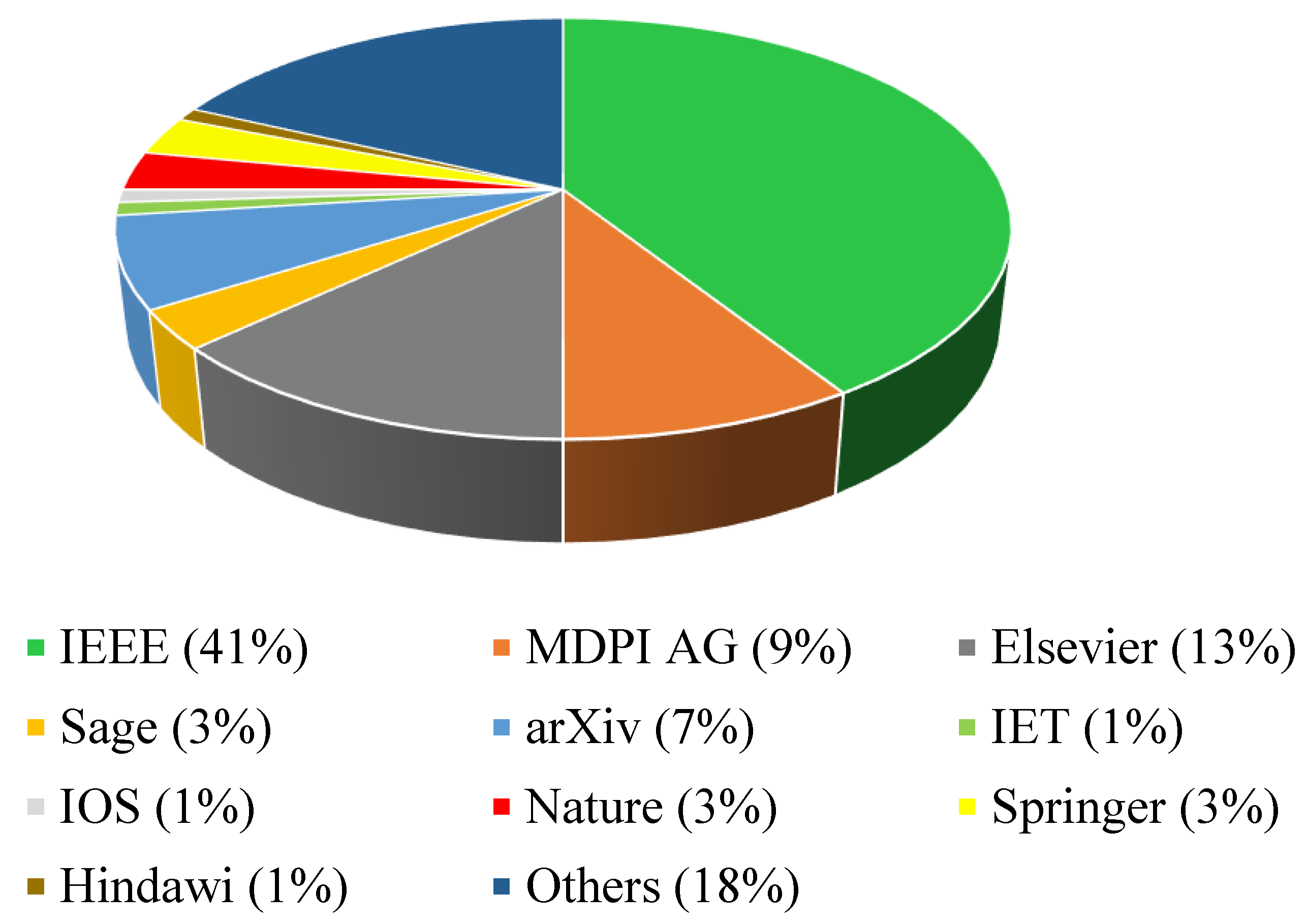

4. Data and Methods

5. Open Areas

6. Challenges

6.1. Calculations

6.2. System Design

6.3. Implementation

6.4. Generalization

6.5. Validation

6.6. Safety Considerations

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| 5G NR | Fifth-Generation New Radio |

| ACAS | Active Contour Affine Scale |

| ADAS | Advanced Driver-Assistance Systems |

| ACV | Autonomous Connected Vehicle |

| AI | Artificial Intelligence |

| AMP | Automatic Mixed Precision |

| AUC | Area Under the ROC Curve |

| AV | Autonomous Vehicle |

| BLE | Bluetooth Low Energy |

| CDR | Correct Detection Rate |

| CEP | Collision Event Probability |

| CSP | Collision State Probability |

| C-V2X | Cellular Vehicle to Everything |

| CV | Connected Vehicle |

| DBN | Deep Belief Network |

| DFR | Detection Failure Rate |

| DL | Deep Learning |

| DRAC | Deceleration Rate to Avoid Collision |

| DSRC | Dedicated Short-Range Communication |

| FL | Fuzzy Logic |

| FPS | Frames Per Second |

| GNSS | Global Navigation Satellite System |

| GPS | Global Positioning System |

| HD | High Definition |

| IBD | Image Brightness Derivative |

| IPR | Information Provision Rate |

| KNN | K-Nearest Neighbor |

| LiDAR | Light Detection And Ranging |

| LSTM | Long Short-Term Memory |

| MAPE | Mean Absolute Percentage Error |

| MEC | Multi-Access Edge Computing |

| ML | Machine Learning |

| NGSIM | Next-Generation Simulation |

| NFV | Network Function Virtualization |

| PTSM | Proactive Traffic Safety Management |

| RADAR | Radio Detection And Ranging |

| RGB | Red–Green–Blue |

| ROC | Receiver Operating Characteristic |

| RL | Reinforcement Learning |

| SDN | Software-Defined Networks |

| SIRS | Scale-Invariant Ridge Segment |

| TSP | Traveling Salesman Problems |

| TTC | Time-To-Collision |

| TTI | Time-To-Impact |

| V2V | Vehicle-to-Vehicle |

| VIO | Visual Inertial Odometry |

| VT | Virtual Traffic |

| UWB | Ultra-Wide Bandwidth |

References

- Ahangar, M.N.; Ahmed, Q.Z.; Khan, F.A.; Hafeez, M. A Survey of Autonomous Vehicles: Enabling Communication Technologies and Challenges. Sensors 2021, 21, 706. [Google Scholar] [CrossRef]

- Hakak, S.; Gadekallu, T.R.; Reddy, K.R.; Swarna, M.; Ramu, P.; Parimala, M.; De Alwis, C.; Liyanage, M. Autonomous vehicles in 5G and beyond: A survey. Veh. Commun. 2023, 39, 100551. [Google Scholar] [CrossRef]

- Chen, L.; Li, Y.; Huang, C.; Xing, Y.; Tian, D.; Li, L.; Hu, Z.; Teng, S.; Lv, C.; Wang, J.; et al. Milestones in Autonomous Driving and Intelligent Vehicles—Part I: Control, Computing System Design, Communication, HD Map, Testing, and Human Behaviors. IEEE Trans. Syst. Man Cybern. Syst. 2023, 53, 5831–5847. [Google Scholar] [CrossRef]

- Alenya, G.; Negre, A.; Crowley, J.L. A comparison of three methods for measure of time to contact. IEEE/RSJ Int. Conf. Intell. Robot. Syst. IROS 2009, 2009, 4565–4570. [Google Scholar] [CrossRef]

- Zhang, H.; Zhao, J. Bio-inspired vision based robot control using featureless estimations of time-to-contact. Bioinspir. Biomim. 2017, 12, 025001. [Google Scholar] [CrossRef]

- Burner, L.; Sanket, N.J.; Fermuller, C.; Aloimonos, Y. Fast Active Monocular Distance Estimation from Time-to-Contact. 2022. Available online: http://arxiv.org/abs/2203.07530 (accessed on 20 December 2023).

- Sagrebin, M.; Pauli, J. Improved time-to-contact estimation by using information from image sequences. Inform. Aktuell 2009, 2009, 26–32. [Google Scholar]

- Wang, L.; Horn, B.K.P. Time-To-Contact control for safety and reliability of self-driving cars. Int. Smart Cities Conf. ISC2 2017, 3, 16–19. [Google Scholar] [CrossRef]

- Watanabe, Y.; Sakaue, F.; Sato, J. Time-to-contact from image intensity. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 4176–4183. [Google Scholar] [CrossRef]

- Gormer, S.; Muller, D.; Hold, S.; Meuter, M.; Kummert, A. Vehicle recognition and TTC estimation at night based on spotlight pairing. In Proceedings of the 2009 12th International IEEE Conference on Intelligent Transportation Systems, St. Louis, MO, USA, 4–7 October 2009; pp. 196–201. [Google Scholar] [CrossRef]

- Hecht, H.; Brendel, E.; Wessels, M.; Bernhard, C. Estimating time-to-contact when vision is impaired. Sci. Rep. 2021, 11, 21213. [Google Scholar] [CrossRef]

- Shi, C.; Dong, Z.; Pundlik, S.; Luo, G. A hardware-friendly optical flow-based time-to-collision estimation algorithm. Sensors 2019, 19, 807. [Google Scholar] [CrossRef] [PubMed]

- Badki, A.; Gallo, O.; Kautz, J.; Sen, P. Binary TTC:A temporal geofence for autonomous navigation. Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 2021, 2021, 12941–12950. [Google Scholar] [CrossRef]

- Walters, C.; Hadfield, S. EVReflex: Dense Time-to-Impact Prediction for Event-based Obstacle Avoidance. IEEE Int. Conf. Intell. Robot. Syst. 2021, 2021, 1304–1309. [Google Scholar] [CrossRef]

- Kilicarslan, M.; Zheng, J.Y. Predict Vehicle Collision by TTC from Motion Using a Single Video Camera. IEEE Trans. Intell. Transp. Syst. 2019, 20, 522–533. [Google Scholar] [CrossRef]

- Sikorski, O.; Izzo, D.; Meoni, G. Event-based spacecraft landing using time-to-contact. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Nashville, TN, USA, 19–25 June 2021; pp. 1941–1950. [Google Scholar] [CrossRef]

- Altendorfer, R.; Wilkmann, C. A new approach to estimate the collision probability for automotive applications. Automatica 2021, 127, 109497. [Google Scholar] [CrossRef]

- St-Aubin, P.; Saunier, N.; Miranda-Moreno, L. Comparison of Various Time-to-Collision Prediction and Aggregation Methods for Surrogate Safety Analysis. Transp. Res. Board 94th Annu. Meet. 2015, 1, 1–21. [Google Scholar]

- Das, S.; Maurya, A.K. Defining Time-to-Collision Thresholds by the Type of Lead Vehicle in Non-Lane-Based Traffic Environments. IEEE Trans. Intell. Transp. Syst. 2020, 21, 4972–4982. [Google Scholar] [CrossRef]

- Sanchez Garcia, A.J.; Rios Figueroa, H.V.; Hernandez, A.M.; Cortes Verdin, M.K.; Vega, G.C. Estimation of time-to-contact from Tau-margin and statistical analysis of behavior. In Proceedings of the 2016 International Conference on Systems, Signals and Image Processing (IWSSIP), Bratislava, Slovakia, 23–25 May 2016; pp. 1–6. [Google Scholar] [CrossRef]

- Benamar, F.Z.; El Fkihi, S.; Demonceaux, C.; Mouad-dib, E.; Aboutajdine, D. Gradient-Based time to contact on paracatadioptric camera. In Proceedings of the IEEE International Conference on Image Processing, ICIP’2013, Melbourne, Australia, 15–18 September 2013. [Google Scholar]

- Horn, B.K.P.; Fang, Y.; Masaki, I. Time to contact relative to a planar surface. IEEE Intell. Veh. Symp. Proc. 2007, 2007, 68–74. [Google Scholar] [CrossRef]

- Tottrup, D.; Skovgaard, S.L.; Sejerson, J.F.; Figueiredo, R.P. Real-Time Method for Time-to-Collision Estimation from Aerial Images. J. Imaging 2022, 8, 62. [Google Scholar] [CrossRef]

- Ozbek, M.; Celebi, A.T. Performance Evaluation of Camera-Based Time to Collision Calculation with Different Detectors & Descriptors. Eur. J. Sci. Technol. 2021, 32, 59–67. [Google Scholar]

- Lin, P.; Javanmardi, E.; Tao, Y.; Chauhan, V.; Nakazato, J.; Tsukada, M. Time-to-Collision-Aware Lane-Change Strategy Based on Potential Field and Cubic Polynomial for Autonomous Vehicles. arXiv 2023. [Google Scholar] [CrossRef]

- Beyrle, M. Time To Collision Calculation for an Autonomous Model Vehicle with CARLA; Technical Reports in Computing Science; University of Applied Sciences: Kempten, Germany, 2020. [Google Scholar]

- Abdelhalim, A.; Abbas, M. A Real-Time Safety-Based Optimal Velocity Model. IEEE Open J. Intell. Transp. Syst. 2022, 3, 165–175. [Google Scholar] [CrossRef]

- Bugusa, Y.; Patil, S. An improved accident crash risk prediction model based on driving outcomes using ensemble of prediction algorithms. Int. J. Sci. Technol. Res. 2019, 8, 603–611. [Google Scholar]

- Jo, Y.; Jang, J.; Ko, J.; Oh, C. An In-Vehicle Warning Information Provision Strategy for V2V-Based Proactive Traffic Safety Management. IEEE Trans. Intell. Transp. Syst. 2022, 23, 19387–19398. [Google Scholar] [CrossRef]

- Staudemeyer, R.C.; Morris, E.R. A tutorial into Long Short-Term Memory Recurrent Neural Networks. Ralf C. Staudemeye 2019, 2019, 1–42. [Google Scholar]

- Nahata, R.; Omeiza, D.; Howard, R.; Kunze, L. Assessing and Explaining Collision Risk in Dynamic Environments for Autonomous Driving Safety. IEEE Conf. Intell. Transp. Syst. Proc. ITSC 2021, 2021, 223–230. [Google Scholar] [CrossRef]

- Rill, R.A.; Farago, K.B. Collision Avoidance Using Deep Learning Based Monocular Vision. SN Comput. Sci. 2021, 2, 1–10. [Google Scholar] [CrossRef]

- Jiang, Y.; Hu, J.; Liu, H. Collision Risk Prediction for Vehicles with Sensor Data Fusion through a Machine Learning Pipeline. In Proceedings of the International Conference on Transportation and Development, Seattle, WA, USA, 31 May–3 June 2022. [Google Scholar]

- Strickland, M.; Fainekos, G.; Ben-Amor, H. Deep predictive models for collision risk assessment in autonomous driving. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 4685–4692. [Google Scholar] [CrossRef]

- Lee, S.; Lee, D.; Kee, S.C. Deep-Learning-Based Parking Area and Collision Risk Area Detection Using AVM in Autonomous Parking Situation. Sensors 2022, 22, 1986. [Google Scholar] [CrossRef]

- Guo, L.; Jia, Y.; Hu, X.; Dong, F. Forwarding Collision Assessment with the Localization Information Using the Machine Learning Method. J. Adv. Transp. 2022, 2022, 9530793. [Google Scholar] [CrossRef]

- Jimenez, F.; Naranjo, J.E.; Gomez, O. Autonomous collision avoidance system based on accurate knowledge of the vehicle surroundings. IET Intell. Transp. Syst. 2015, 9, 105–117. [Google Scholar] [CrossRef]

- Kilicarslan, M.; Zheng, J.Y. Bridge motion to collision alarming using driving video. Proc. Int. Conf. Pattern Recognit. 2016, 2016, 1870–1875. [Google Scholar] [CrossRef]

- Al-Qizwini, M.; Barjasteh, I.; Al-Qassab, H.; Radha, H. Deep learning algorithm for autonomous driving using GoogLeNet. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 89–96. [Google Scholar] [CrossRef]

- Chang, B.R.; Tsai, H.F.; Young, C.P. Intelligent data fusion system for predicting vehicle collision warning using vision/GPS sensing. Expert Syst. Appl. 2010, 37, 2439–2450. [Google Scholar] [CrossRef]

- Nair, S.; Shafaei, S.; Kugele, S.; Osman, M.H.; Knoll, A. Monitoring safety of autonomous vehicles with crash prediction network. CEUR Workshop Proc. 2019, 2301. [Google Scholar]

- Annell, S.; Gratner, A.; Svensson, L. Probabilistic collision estimation system for autonomous vehicles. In Proceedings of the 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), Rio de Janeiro, Brazil, 1–4 November 2016; pp. 473–478. [Google Scholar] [CrossRef]

- Ammoun, S.; Nashashibi, F. Real time trajectory prediction for collision risk estimation between vehicles. In Proceedings of the 2009 IEEE 5th International Conference on Intelligent Computer Communication and Processing, Cluj-Napoca, Romania, 27–29 August 2009; pp. 417–422. [Google Scholar] [CrossRef]

- Phillips, D.J.; Aragon, J.C.; Roychowdhury, A.; Madigan, R.; Chintakindi, S.; Kochenderfer, M.J. Real-time Prediction of Automotive Collision Risk from Monocular Video. 2019. Available online: http://arxiv.org/abs/1902.01293 (accessed on 20 December 2023).

- Wulfe, B.; Hartong-Redden, R.; Chintakindi, S.; Kodali, A.; Choi, S.C.T.; Kochenderfer, M.J. Real-time prediction of intermediate-horizon automotive collision risk. Proc. Int. Jt. Conf. Auton. Agents Multiagent Syst. AAMAS 2018, 2, 1087–1096. [Google Scholar]

- Bhavsar, P.; Das, P.; Paugh, M.; Dey, K.; Chowdhury, M. Risk analysis of autonomous vehicles in mixed traffic streams. Transp. Res. Rec. 2017, 2625, 51–61. [Google Scholar] [CrossRef]

- Li, G. Risk assessment based collision avoidance decision-making for autonomous vehicles in multi-scenarios. Transp. Res. Part C. Emerg. Technol. 2021, 122, 102820. [Google Scholar] [CrossRef]

- Kilicarslan, M.; Zheng, J.Y. Towards collision alarming based on visual motion. In Proceedings of the 2012 15th International IEEE Conference on Intelligent Transportation Systems, Anchorage, AK, USA, 16–19 September 2012; pp. 654–659. [Google Scholar] [CrossRef]

- Fang, J.; Qiao, J.; Bai, J.; Yu, H.; Xue, J. Traffic Accident Detection via Self-Supervised Consistency Learning in Driving Scenarios. IEEE Trans. Intell. Transp. Syst. 2022, 23, 9601–9614. [Google Scholar] [CrossRef]

- Aichinger, C.; Nitsche, P.; Stutz, R.; Harnisch, M. Using Low-cost Smartphone Sensor Data for Locating Crash Risk Spots in a Road Network. Transp. Res. Procedia 2016, 14, 2015–2024. [Google Scholar] [CrossRef]

- Kilicarslan, M.; Zheng, J.Y. Direct vehicle collision detection from motion in driving video. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 1558–1564. [Google Scholar] [CrossRef]

- Meng, D.; Xiao, W.; Zhang, L.; Zhang, Z.; Liu, Z. Vehicle Trajectory Prediction based Predictive Collision Risk Assessment for Autonomous Driving in Highway Scenarios. arXiv 2023, arXiv:2304.05610. [Google Scholar]

- Zhang, E.; Zhang, R.; Masoud, N. Predictive trajectory planning for autonomous vehicles at intersections using reinforcement learning. Trans. Research Part C Emerg. Tech. 2023, 149, 104063. [Google Scholar] [CrossRef]

- Katrakazas, C.; Quddus, M.; Chen, W.-H. A new integrated collision risk assessment methodology for autonomous vehicles. Accid. Anal. Prev. 2019, 127, 61–79. [Google Scholar] [CrossRef]

- Katrakazas, C. Developing an Advanced Collision Risk Model for Autonomous Vehicles. Ph.D. Dissertation, Loughborough University Research Repository, Loughborough, UK, 2017. [Google Scholar]

- Wu, B.; Yan, Y.; Ni, D.; Li, L. A longitudinal car-following risk assessment model based on risk field theory for autonomous vehicles. Int. J. Transp. Sci. Technol. 2021, 10, 60–68. [Google Scholar] [CrossRef]

- Philipp, A.; Goehring, D. Analytic collision risk calculation for autonomous vehicle navigation. Proc. IEEE Int. Conf. Robot. Autom. 2019, 2019, 1744–1750. [Google Scholar] [CrossRef]

- Sabry, Y.; Aly, M.; Oraby, W.; El-demerdash, S. Fuzzy Control of Autonomous Intelligent Vehicles for Collision Avoidance Using Integrated Dynamics. SAE Int. J. Passeng. Cars Mech. Syst. 2018, 11, 5–21. [Google Scholar] [CrossRef]

- Hruschka, C.M.; Topfer, D.; Zug, S. Risk Assessment for Integral Safety in Automated Driving. In Proceedings of the 2019 2nd International Conference on Intelligent Autonomous Systems (ICoIAS), Singapore, 28 February–2 March 2019; pp. 102–109. [Google Scholar] [CrossRef]

- Osman, M.H.; Kugele, S.; Shafaei, S. Run-Time Safety Monitoring Framework for AI-Based Systems: Automated Driving Cases. Proc. Asia-Pac. Softw. Eng. Conf. APSEC 2019, 2019, 442–449. [Google Scholar] [CrossRef]

- Szenasi, S.; Kertesz, G.; Felde, I.; Nadai, L. Statistical accident analysis supporting the control of autonomous vehicles. J. Comput. Methods Sci. Eng. 2021, 21, 85–97. [Google Scholar] [CrossRef]

- Hortel, J.-B.; Ledent, P.; Marsso, L.; Laugier, C.; Mateescu, R.; Paigwar, A.; Renzaglia, A.; Serwe, W. Verifying Collision Risk Estimation using Autonomous Driving Scenarios Derived from a Formal Model. J. Intell. Robot. Syst. 2023, 107, 59. [Google Scholar] [CrossRef]

- Wang, D.; Fu, W.; Song, Q.; Zhou, J. Potential risk assessment for safe driving of autonomous vehicles, under occluded vision. Sci. Rep. 2022, 12, 4891. [Google Scholar] [CrossRef]

- Song, Y.; Huh, K. Driving and steering collision avoidance system of autonomous vehicle with model predictive control based on non-convex optimization. Adv. Mech. Eng. 2021, 13, 16878140211027669. [Google Scholar] [CrossRef]

- Dosovitskiy, A.; Fischer, P.; Ilg, E.; Hausser, P.; Hazirbas, C.; Golkov, V.; Van Der Smagt, P.; Cremers, D.; Brox, T. FlowNet: Learning optical flow with convolutional networks. IEEE Int. Conf. Comput. Vis. (ICCV) 2015, 2015, 2758–2766. [Google Scholar]

- Mayer, N.; Ilg, E.; Hausser, P.; Fischer, P.; Cremers, D.; Dosovitskiy, A.; Brox, T. A large dataset to train convolutional networks for disparity, optical flow, and scene flow estimation. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361. [Google Scholar]

- Persistence of Vision Pty, Ltd. Persistence of Vision Raytracer [Computer Software]. 2004. Available online: http://www.povray.org/download/ (accessed on 20 December 2023).

- Shah, S.; Dey, D.; Lovett, C.; Kapoor, A. Airsim: High-fidelity visual and physical simulation for autonomous vehicles. In Field and Service Robotics; Springer: Berlin/Heidelberg, Germany, 2018; pp. 621–635. [Google Scholar]

- Available online: https://github.com/fanweng/Udacity-Sensor-Fusion-Nanodegree (accessed on 20 December 2023).

- CARLA. CAR Learning to Act. Available online: https://carla.org/ (accessed on 20 December 2023).

- Wen, L.; Du, D.; Cai, Z.; Lei, Z.; Chang, M.-C.; Qi, H.; Lim, J.; Yang, M.-H.; Lyu, S. UA-DETRAC: A new benchmark and protocol for multi-object detection and tracking. Comput. Vis. Image Understand. 2020, 193, 102907. [Google Scholar] [CrossRef]

- Real-World Use of Automated Driving Systems and their Safety Consequences: A Naturalistic Driving Data Analysis [Supporting Datasets]. 2020. Available online: https://vtechworks.lib.vt.edu/items/22442930-c5be-40c4-af7d-4c2f1ea8d416 (accessed on 20 December 2023).

- Houston, J.; Zuidhof, G.; Bergamini, L.; Ye, Y.; Jain, A.; Omari, S.; Iglovikov, V.; Ondruska, P. One thousand and one hours: Self-driving motion prediction dataset. arXiv 2020, arXiv:2006.14480. [Google Scholar]

- Rohmer, M.F.E.; Singh, S.P.N. V-rep: A versatile and scalable robot simulation framework. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013. [Google Scholar]

- Tian, R.; Li, L.; Yang, K.; Chien, S.; Chen, Y.; Sherony, R. Estimation of the vehicle-pedestrian encounter/conflict risk on the road based on TASI 110-car naturalistic driving data collection. IEEE Intell. Veh. Symp. 2014, 2014, 623–629. [Google Scholar]

- Alexiadis, V.; Colyar, J.; Halkias, J.; Hranac, R.; McHale, G. The next generation simulation program. Institute of Transportation Engineers. ITE J. 2004, 74, 22. [Google Scholar]

- Google. Autonomous Vehicles Annual Disengagement Report. In California Department of Autonomous Vehicles; Department of Motor Vehicles (DMV): Sacramento, CA, USA, 2016. [Google Scholar]

- Delphi. Autonomous Vehicles Annual Disengagement Report. In California Department of Autonomous Vehicles; Department of Motor Vehicles (DMV): Sacramento, CA, USA, 2016. [Google Scholar]

- Nissan. Autonomous Vehicles Annual Disengagement Report. In California Department of Autonomous Vehicles; Department of Motor Vehicles (DMV): Sacramento, CA, USA, 2016. [Google Scholar]

- Mercedes-Benz. Autonomous Vehicles Annual Disengagement Report. In California Department of Autonomous Vehicles; Department of Motor Vehicles (DMV): Sacramento, CA, USA, 2016. [Google Scholar]

- Volkswagen. Autonomous Vehicles Annual Disengagement Report. In California Department of Autonomous Vehicles; Department of Motor Vehicles (DMV): Sacramento, CA, USA, 2016. [Google Scholar]

- Virginia Traffic Crash Facts 2014. Virginia Highway Safety Office, Virginia Department of Motor Vehicles; Department of Motor Vehicles (DMV): Richmond, VA, USA, 2015. [Google Scholar]

- Summary of Motor Vehicle Crashes: 2014 Statewide Statistical Summary. In New York State Department of Motor Vehicles; Department of Motor Vehicles (DMV): New York City, NY, USA, 2015.

- Dezfuli, H.; Benjamin, C.A.; Everett, G.; Maggio, M.; Stamatelatos, R. NASA Risk Management Handbook; Publication NASA/SP-2011-3422; NASA: Greenbelt, Maryland, 2011. [Google Scholar]

- DADA-2000: Can Driving Accidents be Predicted by Driver Attention? Analyzed by A Benchmark. Available online: https://arxiv.org/abs/1904.12634 (accessed on 20 December 2023).

- Krajewski, R.; Bock, J.; Kloeker, L.; Eckstein, L. The highD dataset: A drone dataset of naturalistic vehicle trajectories on German highways for validation of highly autonomous driving systems. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 2118–2125. [Google Scholar]

- Theofilatos, A. An Advanced Multi-Faceted Statistical Analysis of Accident Probability and Severity Exploiting High Resolution Traffic and Weather. Ph.D. Thesis, National Technical University of Athens, Athens, Greece, 2015. [Google Scholar]

- Fagnant, D.J.; Kockelman, K. Preparing a nation for autonomous vehicles: Opportunities, barriers and policy recommendations. Transp. Res. PartA. 2015, 77, 167–181. [Google Scholar] [CrossRef]

- Clark, F.; Zhang, M. Caltrans PEMS highway sensor average flows by occupancy [Dataset]. Dryad 2018. [Google Scholar] [CrossRef]

- BS ISO 3888-2; British Standard. Passenger Cars-Test Track for a Severe Lane Change Maneuver-Part 2: Obstacle Avoidance. ISO: Geneva, Switzerland, 2002.

- Schabenberger, R. ADTF: Framework for driver assistance and safety systems. VDI BERICHTE 2007, 2007, 701–710. [Google Scholar]

- Li, D.; Shi, X.; Long, Q.; Liu, S.; Yang, W.; Wang, F.; Wei, Q.; Qiao, F. DXSLAM: A Robust and Efficient Visual SLAM System with Deep Features; IEEE: Las Vegas, NV, USA, 2020. [Google Scholar]

- Rong, G.; Shin, B.H.; Tabatabaee, H.; Lu, Q.; Lemke, S.; Možeiko, M.; Boise, E.; Uhm, G.; Gerow, M.; Mehta, S.; et al. Lgsvl simulator: A high fidelity simulator for autonomous driving. In Proceedings of the 2020 IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), Rhodes, Greece, 20–23 September 2020; pp. 1–6. [Google Scholar]

- dSpace. Over-the-Air Simulation of Echoes for Automotive Radar Sensors. Available online: https://www.dspace.com/en/ltd/home/news/engineersinsights/over-the-air-simulation.cfm (accessed on 20 December 2023).

- Mechanical Simulation Corporation. Unreal Engine Marketplace Showcase. Available online: https://www.carsim.com/publications/newsletter/2021_03_17.php (accessed on 20 December 2023).

- TASS International. Prescan Overview. Available online: https://tass.plm.automation.siemens.com/prescan-overview (accessed on 20 December 2023).

- PTV Group. Virtual Testing of Autonomous Vehicles with PTV Vissim. Available online: https://www.ptvgroup.com/en/solutions/products/ptv-vissim/areas-of-application/autonomous-vehicles-and-newmobility/ (accessed on 20 December 2023).

- Carballo, A.; Lambert, J.; Monrroy, A.; Wong, D.; Narksri, P.; Kitsukawa, Y.; Takeuchi, E.; Kato, S.; Takeda, K. In Proceedings of the LIBRE: The Multiple 3d Lidar Dataset, Intelligent Vehicles Symposium (IV); Las Vegas, NV, USA, 19 October–13 November 2020, IEEE: Toulouse, France, 2020; pp. 1094–1101. [Google Scholar]

- Sakaridis, C.; Dai, D.; Van Gool, L. Semantic foggy scene understanding with synthetic data. Int. J. Comput. 2018, 126, 973–992. [Google Scholar] [CrossRef]

- Pitropov, M.; Garcia, D.E.; Rebello, J.; Smart, M.; Wang, C.; Czarnecki, K.; Waslander, S. Canadian adverse driving conditions dataset. Int. J. Robot. Res. 2021, 40, 681–690. [Google Scholar] [CrossRef]

- Yu, F.; Chen, H.; Wang, X.; Xian, W.; Chen, Y.; Liu, F.; Madhavan, V.; Darrell, T. Bdd100k: A diverse driving dataset for heterogeneous multitask learning. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; IEEE/CVF: Toulouse, France, 2020; pp. 2636–2645. [Google Scholar]

- Neuhold, G.; Ollmann, T.; RotaBulo, S.; Kontschieder, P. Themapillaryvistas dataset for semantic understanding of street scenes. In Proceedings of the International Conference on Computer Vision, ICCV; Venice, Italy, 22–29 October 2017, IEEE: Toulouse, France, 2017; pp. 4990–4999. [Google Scholar]

- Braun, M.; Krebs, S.; Flohr, F.; Gavrila, D.M. Eurocity persons: A novel benchmark for person detection in traffic scenes. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 1844–1861. [Google Scholar] [CrossRef] [PubMed]

- Maddern, W.; Pascoe, G.; Linegar, C.; Newman, P. 1 year, 1000 km: The Oxford robot car dataset. Int. J.Robot.Res. 2017, 36, 3–15. [Google Scholar] [CrossRef]

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. Nuscenes: Amultimodal dataset for autonomous driving. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; IEEE/CVF: Toulouse, France, 2020; pp. 11621–11631. [Google Scholar]

- Che, Z.; Li, G.; Li, T.; Jiang, B.; Shi, X.; Zhang, X.; Lu, Y.; Wu, G.; Liu, Y.; Ye, J. D2-city: A large-scale dashcam video dataset of diverse traffic scenarios. arXiv 2019, arXiv:1904.01975. [Google Scholar]

- Binas, J.; Neil, D.; Liu, S.-C.; Delbruck, T. DDD17: End-to-endDAVISdriving dataset. arXiv 2017, arXiv:1711.01458. [Google Scholar]

- Chang, M.-F.; Lambert, J.; Sangkloy, P.; Singh, J.; Bak, S.; Hartnett, A.; Wang, D.; Carr, P.; Lucey, S.; Ramanan, D.; et al. Argoverse: 3d tracking and forecasting with rich maps. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 8748–8757. [Google Scholar]

- Sun, P.; Kretzschmar, H.; Dotiwalla, X.; Chouard, A.; Patnaik, V.; Tsui, P.; Guo, J.; Zhou, Y.; Chai, Y.; Caine, B.; et al. Scalability in perception for autonomous driving: Waymo open dataset. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 2446–2454. [Google Scholar]

- Pham, Q.-H.; Sevestre, P.; Pahwa, R.S.; Zhan, H.; Pang, C.H.; Chen, Y.; Mustafa, A.; Chandrasekhar, V.; Lin, J. A*3D dataset: Towards autonomous driving in challenging environments. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 2267–2273. [Google Scholar]

- Lei, Y.; Emaru, T.; Ravankar, A.A.; Kobayashi, Y.; Wang, S. Semantic Image Segmentation Snow Driving Scenarios. In Proceedings of the 2020 IEEE International Conference on Mechatronics and Automation (ICMA), Beijing, China, 13–16 October 2020; pp. 1094–1100. [Google Scholar]

- Huang, X.; Wang, P.; Cheng, X.; Zhou, D.; Geng, Q.; Yang, R. Theapolloscape open dataset for autonomous driving and its application. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 2702–2719. [Google Scholar] [CrossRef]

- Ros, G.; Sellart, L.; Materzynska, J.; Vazquez, D.; Lopez, A.M. The synthia dataset: A large collection of synthetic images for semantic segmentation of urban scenes. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3234–3243. [Google Scholar]

- Richter, S.R.; Hayder, Z.; Koltun, V. Playing for benchmarks. In Proceedings of the International Conferenceon Computer Vision, ICCV, Venice, Italy, 22–29 October 2017; IEEE: Toulouse, France, 2017; pp. 2213–2222. [Google Scholar]

- Liu, D.; Cui, Y.; Cao, Z.; Chen, Y. A large-scale simulation dataset: Boost the detection accuracy for special weather conditions. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–8. [Google Scholar]

- Sakaridis, C.; Dai, D.; VanGool, L. ACDC: The adverse conditions dataset with correspondences for semantic driving scene understanding. arXiv 2021, arXiv:2104.13395. [Google Scholar]

- Carlevaris-Bianco, N.; Ushani, A.K.; Eustice, R.M. University of Michigan North Campus long-term vision and lidar dataset. Int. J. Robot. Res. 2016, 35, 1023–1035. [Google Scholar] [CrossRef]

- Wenzel, P.; Wang, R.; Yang, N.; Cheng, Q.; Khan, Q.; von Stumberg, L.; Zeller, N.; Cremers, D. 4Seasons: Across-season dataset for multi-weather SLAMin autonomous driving. In Proceedings of the DAGM German Conference on Pattern Recognition GPCR, Deutsche Arbeitsgemeinschaft für Mustererkennung (DAGM); Dortmund, Germany, 10–13 September 2021, DAGM: Bonn, Germany, 2021; pp. 404–417. [Google Scholar]

- Tung, F.; Chen, J.; Meng, L.; Little, J.J. The raincouver scene parsing Benchmark for self-driving adverse weather and at night. Robot. Autom. Lett. (RA-L) 2017, 2, 2188–2193. [Google Scholar] [CrossRef]

- Zendel, O.; Honauer, K.; Murschitz, M.; Steininger, D.; Dominguez, G.F. Wildash-creating hazard-aware benchmarks. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; ECCV: Prague, Czech, 2018; pp. 402–416. [Google Scholar]

- Choi, Y.; Kim, N.; Hwang, S.; Park, K.; Yoon, J.S.; An, K.; Kweon, I.S. KAIST multi-spectral day/night dataset for autonomous and assisted driving. IEEE Trans. Intell. Transp. Syst. 2018, 19, 934–948. [Google Scholar] [CrossRef]

- Bijelic, M.; Gruber, T.; Mannan, F.; Kraus, F.; Ritter, W.; Dietmayer, K.; Heide, F. Seeing through fog without seeing fog: Deep multimodal sensor fusion in unseen adverse weather. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; IEEE: Toulouse, France, 2020; pp. 11682–11692. [Google Scholar]

- Geyer, J.; Kassahun, Y.; Mahmudi, M.; Ricou, X.; Durgesh, R.; Chung, A.S.; Hauswald, L.; Pham, V.H.; Mühlegg, M.; Dorn, S.; et al. A2D2:Audi autonomous driving dataset. arXiv 2020, arXiv:2004.06320. [Google Scholar]

- Uřičář, M.; Křížek, P.; Sistu, G.; Yogamani, S. Soilingnet: Soiling Detection Automotive Surround-view cameras. In Proceedings of the Intelligent Transportation Systems Conference, ITSC, Auckland, New Zealand, 27–30 October 2019; IEEE: Toulouse, France, 2019; pp. 67–72. [Google Scholar]

- Sheeny, M.; De Pellegrin, E.; Mukherjee, S.; Ahrabian, A.; Wang, S.; Wallace, A. RADIATE: A radar dataset for automotive perception in bad weather. In Proceedings of the International Conference on Robotics and Automation, ICRA, Xi’an, China, 30 May–5 June 2021; IEEE: Toulouse, France, 2021; pp. 1–7. [Google Scholar]

- Yan, Z.; Sun, L.; Krajník, T.; Ruichek, Y. EU long-term dataset with multiple sensors for autonomous driving. In Proceedings of the International Conference on Intelligent Robots and Systems, IROS, Las Vegas, NV, USA, 24 October 2020–24 January 2021; IEEE/RSJ: Toulouse, France, 2020; pp. 10697–10704. [Google Scholar]

- Basterretxea, K.; Martínez, V.; Echanobe, J.; Gutiérrez-Zaballa, J.; DelCampo, I. HSI-drive: A dataset for the research of hyperspectral image processing applied to autonomous driving systems. In Proceedings of the 2021 IEEE Intelligent Vehicles Symposium (IV), Nagoya, Japan, 11–17 July 2021; pp. 866–873. [Google Scholar]

- Bos, J.P.; Chopp, D.; Kurup, A.; Spike, N. Autonomy At the end of the earth: An inclement weather autonomous driving dataset. Auton. Syst. Sens. Process. Secur. Veh. Infrastruct. 2020, 11415, 36–48. [Google Scholar]

- Burnett, K.; Yoon, D.J.; Wu, Y.; Li, A.Z.; Zhang, H.; Lu, S.; Qian, J.; Tseng, W.-K.; Lambert, A.; Leung, K.Y.; et al. Boreas: A multi-season autonomous driving dataset. arXiv 2020, arXiv:2203.10168. [Google Scholar] [CrossRef]

- Naseri, M.; Shahid, A.; Gordebeke, G.J.; Lemey, S.; Boes, M.; Van De Velde, S.; De Poorter, E. Machine Learning-Based Angle of Arrival Estimation for Ultra-Wide Band Radios. IEEE Commun. Lett. 2022, 26, 1273–1277. [Google Scholar] [CrossRef]

- Margiani, T.; Cortesi, S.; Keller, M.; Vogt, C.; Polonelli, T.; Magno, M. Angle of Arrival and Centimeter Distance Estimation on a Smart UWB Sensor Node. IEEE Trans. Instrum. Meas. 2023, 72, 9508110. [Google Scholar] [CrossRef]

- Yasmin, R.; Petäjäjärvi, J.; Mikhaylov, K.; Pouttu, A. On the integration of LoRaWAN with the 5G test network. In Proceedings of the 2017 IEEE 28th Annual International Symposium on Personal, Indoor, and Mobile Radio Communications (PIMRC), Montreal, QC, Canada, 8–13 October 2017; pp. 1–6. [Google Scholar] [CrossRef]

- Navarro-Ortiz, J.; Sendra, S.; Ameigeiras, P.; Lopez-Soler, J.M. Integration of LoRaWAN and 4G/5G for the Industrial Internet of Things. IEEE Commun. Mag. 2018, 56, 60–67. [Google Scholar] [CrossRef]

- Meijers, J.; Michalopoulos, P.; Motepalli, S.; Zhang, G.; Zhang, S.; Veneris, A.; Jacobsen, H.-A. Blockchain for V2X: Applications and Architectures. IEEE Open J. Veh. Technol. 2022, 3, 193–209. [Google Scholar] [CrossRef]

- Zhang, Y.; Carballo, A.; Yang, H.; Takeda, K. Perception and sensing for autonomous vehicles under adverse weather conditions: A survey. ISPRS J. Photogramm. Remote Sens. 2023, 196, 146–177. [Google Scholar] [CrossRef]

- Leitch, S.G.; Ahmed, Q.Z.; Abbas, W.B.; Hafeez, M.; Laziridis, P.I.; Sureephong, P.; Alade, T. On Indoor Localization Using WiFi, BLE, UWB, and IMU Technologies. Sensors 2023, 23, 8598. [Google Scholar] [CrossRef]

- Manzoni, P.; Calafate, C.T.; Cano, J.-C.; Hernández-Orallo, E. Indoor Vehicles Geolocalization Using LoRaWAN. Future Internet 2019, 11, 124. [Google Scholar] [CrossRef]

| References | Computer Vision | Machine Learning | Deep Learning | Signal | Video Data | Others | Advantage | Disadvantage |

|---|---|---|---|---|---|---|---|---|

| [4,5,6,7,8,9,10,11] | √ | - | - | - | - | - | Enhanced automation, improved accuracy, increased efficiency, improved accessibility | Limited context awareness, privacy/ethical concerns, bias and inaccuracy, dependence on infrastructure |

| [13,14] | √ | - | √ | - | - | - | Handling large and complex data, handling structured and unstructured data, improved performance | Increased complexity, overfitting tendencies, legal/ethical concerns |

| [15] | √ | - | √ | - | √ | - | Handling large and complex data, handling structured and unstructured data, improved performance | Increased complexity, overfitting tendencies, legal/ethical concerns, dependency on video data |

| [12] | √ | √ | - | - | - | - | Handling large and complex data, automation, improved performance | Privacy/ethical concerns, dependence on infrastructure |

| [17,21,25,26] | - | - | - | - | - | √ | Accurate mathematical modeling, enhanced prediction accuracy | Context dependent |

| [16] | - | √ | - | - | - | - | Handling large and complex data, automation, improved performance | Privacy/ethical concerns |

| [19,23,24] | - | - | - | √ | - | - | Using real-time sensory data, accuracy in prediction | Dependency on sensor/signal type |

| References | Computer Vision | ML | DL | Simulation | Sensor | Video Data | Lidar | Time-Series Data | RL | Others | Advantage | Disadvantage |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| [46,47,56,58,62,63,64] | - | - | - | - | - | - | - | - | - | √ | Accurate mathematical modeling, enhanced prediction accuracy | Context dependent |

| [27,30,31,32,34,51] | √ | - | √ | - | - | √ | - | - | - | - | Handling large and complex data, handling structured and unstructured data, improved performance | Increased complexity, overfitting tendencies, legal/ethical concerns, dependency on video data |

| [29,59] | - | - | √ | - | - | - | - | - | - | - | Handling large and complex data, handling structured and unstructured data, improved performance | Increased complexity, overfitting tendencies, legal/ethical concerns |

| [57] | √ | - | - | √ | - | - | √ | - | - | - | Enhanced automation, improved accuracy, increased efficiency, improved accessibility, higher precision, more flexibility due to simulations | Limited context awareness, privacy/ethical concerns, bias and inaccuracy, dependence on infrastructure, bias on LiDAR data |

| [28,33,49,52,53,54,55] | - | √ | - | - | - | - | - | - | - | - | Handling large and complex data, automation, improved performance | Privacy/ethical concerns |

| [37,60] | √ | - | - | - | - | - | - | - | - | - | Enhanced automation, improved accuracy, increased efficiency, improved accessibility | Limited context awareness, privacy/ethical concerns, bias and inaccuracy, dependence on infrastructure |

| [36] | √ | √ | - | - | - | - | - | √ | √ | - | Handling large and complex data, automation, improved performance, accurate mathematical modeling, model-free, online | Privacy/ethical concerns, dependence on infrastructure |

| [41] | - | √ | √ | - | √ | - | - | - | - | - | Handling large and complex data, automation, improved performance, using exact sensory data | Privacy/ethical concerns, increased complexity, overfitting tendencies, bias on sensor type |

| [44] | √ | - | √ | - | - | √ | - | √ | - | - | Handling large and complex data, handling structured and unstructured data, improved performance, accurate mathematical modeling | Increased complexity, overfitting tendencies, legal/ethical concerns, dependency on video data |

| [38] | √ | - | - | - | - | √ | - | - | - | - | Enhanced automation, improved accuracy, increased efficiency, improved accessibility | Limited context awareness, privacy/ethical concerns, bias and inaccuracy, dependence on infrastructure, bias on video data |

| [50] | - | √ | - | - | √ | - | - | - | - | - | Handling large and complex data, automation, improved performance, using exact sensory data | Privacy/ethical concerns, bias on sensor type |

| [39,40] | √ | - | √ | - | √ | - | - | - | - | - | Handling large and complex data, handling structured and unstructured data, improved performance, using exact sensory data | Increased complexity, overfitting tendencies, legal/ethical concerns, bias on sensor type |

| [35] | √ | - | √ | - | - | - | - | √ | - | - | Handling large and complex data, handling structured and unstructured data, improved performance, accurate mathematical modeling | Increased complexity, overfitting tendencies, legal/ethical concerns |

| [61] | - | - | - | - | - | - | - | √ | - | - | Accurate mathematical modeling, improved estimation accuracy | Inefficient in complex scenarios |

| [43] | - | - | - | - | √ | - | - | - | - | - | Using exact sensory data, reduced complexity | Bias on sensor type, decreased performance |

| [42] | - | - | - | - | √ | - | √ | - | - | - | Using exact sensory data, reduced complexity, higher precision | Bias on LiDAR data, decreased performance |

| [45] | - | - | √ | √ | - | - | - | - | - | - | Handling large and complex data, handling structured and unstructured data, improved performance, more flexibility due to simulations | Increased complexity, overfitting tendencies, legal/ethical concerns |

| [48] | - | √ | - | - | - | √ | - | - | - | - | Handling large and complex data, automation, improved performance | Privacy/ethical concerns, bias on video data |

| Reference | Data | Method |

|---|---|---|

| [4] | Captured sequence of frames involving displacement change. | Active Contour Affine Scale (ACAS), with image flow approximated by an affine transformation |

| [5] | Captured Sequence of images at a constant vehicle speed. | Featureless Based Control with Kalman Filtering and Gain Scheduling |

| [6] | Captured ten sets of sequence images with five scenes. | Acceleration constraint (τ-constraint) and distance constraint (Φ-constraint) methods |

| [7] | Feature position information of two consecutive sets of image sequences as well as odometry data. | Feature Based Robust TTC Calculation |

| [8] | Captured Video on an Android Smartphone | Relative Velocity Estimation via Depth and Motion Sensing |

| [9] | Captured Sequence of Images from a Light Source via a Fixed Camera | Analyzing Photometric Features via Measuring Changes in Intensity with Ambient Elimination |

| [10] | Captured radar sensor data labeled by hand | Spot detection and paring |

| [11] | Synthetic object data | Using visual stimulus degradation |

| [12] | Synthetic and Real Sequence of Images with constant velocity camera | Dense Optical Flow-Based Time-to-Collision |

| [13] | FlyingChairs2 [65] and FlyingThings3D Datasets [66] | Binary, Quantized, and Continuous Estimation |

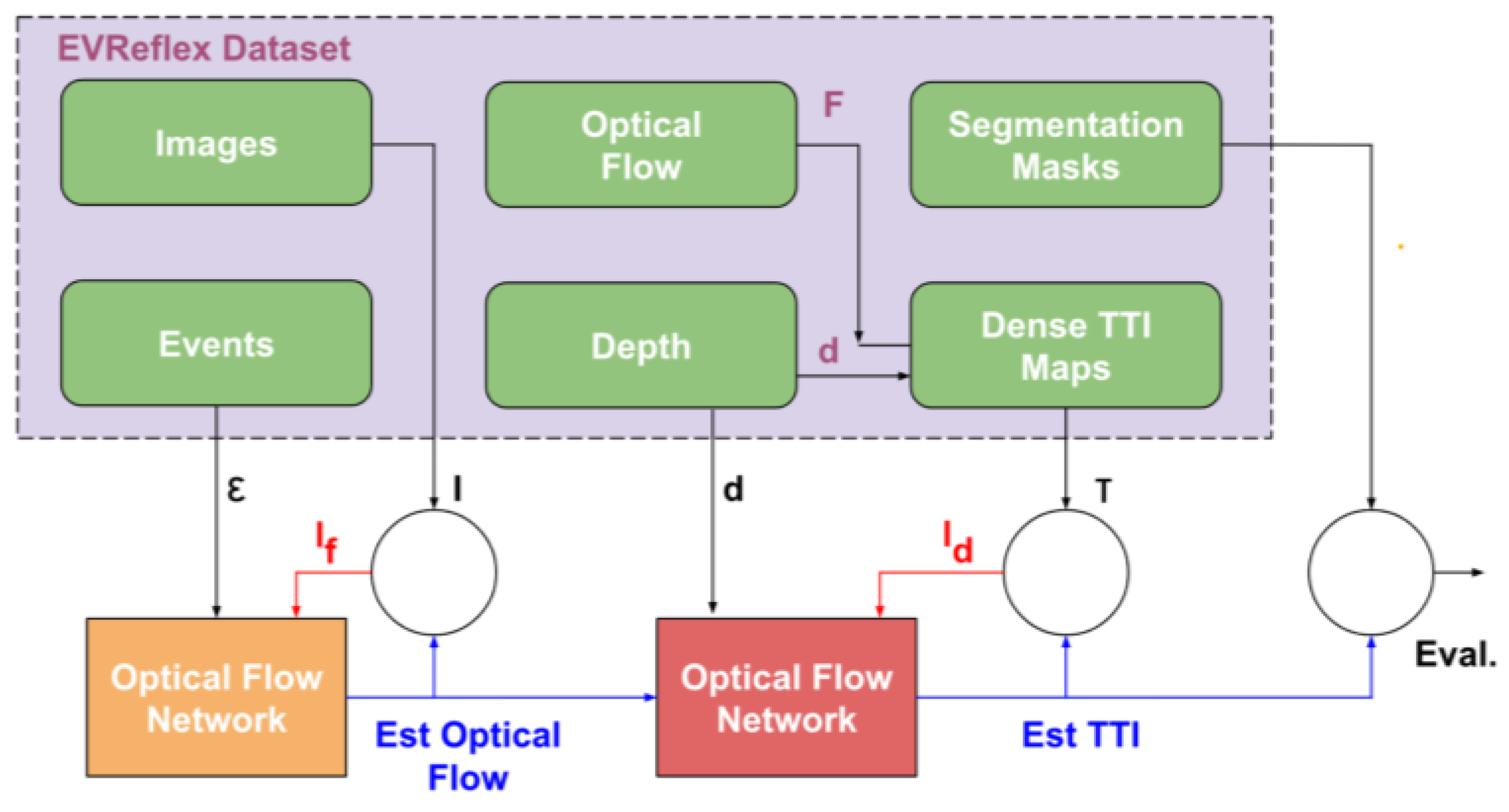

| [14] | EVReflex Dataset | Merged event camera and lidar streams without requiring prior scene geometry or obstacle knowledge. |

| [15] | KITTI Vision Benchmark [67] | Horizontal and Vertical Motion Divergence |

| [16] | Synthetic Event-Based Camera Model using DVS | Vertical Descent Control via Estimating Divergence of Optical Flow |

| [17] | Monte Carlo Simulation | Linking the collision probability rate distribution to TTC distribution and deriving the upper bound for the collision distribution. |

| [18] | Video feeds from 1 to 3 merging zones were captured across 20 roundabouts in Quebec, covering a total of 37 distinct sites. | Assessing TTC Indicators and Aggregation Methods using Constant Velocity, Normal Adaptation, and Motion Pattern Prediction Methods for Surrogate Safety Analysis. |

| [19] | video feeds from five Indian cities with varied lane setups, straight road sections away from intersections, in clear weather conditions and visibility. | Use urban road trajectory data to create temporal safety indicators in non-lane-based traffic |

| [20] | Captured video of 210 frames on a moving monocular vision robot | Estimate TTC using the so-called Tau-margin by the ratio of change of apparent size of obstacles via color segmentation |

| [21] | Real and Synthetic Images by Pov-ray Software [68] | Spatial and Temporal Gradient-based methods |

| [22] | Synthetic Video Feed and Stop-Motion Sequence | Featureless Direct Method via Brightness Derivative Based on Two Consecutive Frames as a MontiVision Filter |

| [23] | Synthetic Data Using AirSim [69] Simulator, and Aerial Drones | Multi-object Deep Feature Detection via Pixel-Level Object Segmentation |

| [24] | UDACITY Sensor Fusion Dataset [70] | Detector Descriptor TTC Detection |

| [25] | MATLAB/Simulink Simulation | Time To Collision Lane Change via Potential Field and Cubic Polynomial |

| [26] | PointCloud2 Data via CARLA Simulator [71] | Bypass Object Detection with Line Intersection via Laser-Scan |

| Reference | Data | Method |

|---|---|---|

| [27] | UA-Detrac Dataset: Traffic Video Feed Data with Over 1.2 million Bounding Boxes [72] | Data-Driven Optimal Velocity Model via Deep-SORT Tracker, Classification by VT-Lane Framework, and Kalman Filtering |

| [28] | VTTI Dataset [73] | Ensemble Model of C5.0, K-Nearest Neighbor, J48 Classification, Naive Bayes, and Gradient Boosting Machine |

| [29] | Vehicle Interaction Data | Proactive V2V-based Warning System via Long Short-Term Memory (LSTM) Risk Prediction |

| [31] | Lyft Level-5 [73], KITTI Vision [67] Benchmark, and Waymo [110] datasets | Planar 2D Collision Machine Learning Model via Decision-Trees and Random-Forests |

| [32] | Self-Collected Video Sequence Similar to KITTI Vision Benchmark [67] | Monocular Vision Deep Learning via Optical Flow Modeling, Depth Estimation, Object Detection, Ego-vehicle Speed Estimation, Lead Vehicle Identification, and Car Stop Collision Timing |

| [33] | NuScenes Dataset [106] | Multi-sensor Fusion to Extract Motion Features and Predict Trajectories, via Minimum Future Spacing (MFS) and Extended Kalman Filter (EKF) |

| [34] | Synthetic Data via Robotics Simulator CoppeliaSim (V-REP) [75] | Bayesian Convolutional Long Short-Term Memory (LSTM) |

| [35] | Around View Monitor (AVM) Images with Four 190 Wide-Angle Cameras Based on the Chungbuk National University Parking Lot, and National Institute of Intelligent Information Society (NIA) Dataset | Bird-Eye View Area Detection and Semantic Segmentation via CSPHarDNet, CSPDenseNet, and HarDNet |

| [36] | Car-Following On-board Diagnosis (OBD) Dataset Collected from Xi’an Rao Cheng Expressway for Three Days | Forward Collision Detection in Advanced Driver-Assistance Systems (ADAS) via Long Short-Term Memory (LSTM) and Deep Belief Network (DBN) |

| [37] | Single-Layer Laser-Scanner Data with 10 Hz Frequency | Automatic Collision Avoidance System via Obstacle Detection and Fuzzy-Logic Control |

| [38] | Forward Facing Camera Data Based on TASI 110-Car Naturalistic Driving Study [76], Caltech Pedestrian, ETH, TUD-Brussels, Daimler, and INRIA Datasets | Motion Divergence Detection by Analyzing Horizontal and Vertical Trace Expansion form Cluster of Line Segments |

| [39] | TORCS (The Open Racing Car Simulator) | Direct Perception Deep Learning via GoogLeNet Autonomous Driving (GLAD) and ConvNet based on AlexNet |

| [40] | Event Data Recording via GPS and Vision Sensing, and Vehicle-to-Vehicle Communication | Neural Network (QT-BPNN) and Adaptive Network-Based Fuzzy Inference System (ANFIS), with Distributed Dual-Platform DaVinci+XScale_NAV270 |

| [41] | Synthetic Data Using Automated Test Trajectory Generation (ATTG) | Bayesian Deep Learning (BDL), and Reinforcement Learning |

| [42] | LIDAR, Vehicle-to-Vehicle (V2V), Vehicle-to-Infrastructure (V2I), GPS, and IMU Readings | Robust System to Estimate the Likelihood of Collisions, Validated by Controlling the Ego Velocity of Vehicle with Velocity Planning Control (VPC) |

| [43] | Two La Route Automatise (LaRA) vehicles exchanging their positions and velocities, and GPS Data | Trajectory Prediction and Kalman Filtering via Geometric and Dynamic Approaches |

| [44] | KITTI Dataset [67] | FasterRCNN-101 for Object Detection, Particle-based Object Tracking and Distance Estimation Based on First Principles, and Risk Assessment Based on Inverse Time-to-Collision (TTC), using Monocular Vision Input Feed. |

| [45] | Next-Generation Simulation (NGSIM) Dataset [77] | Generate High-risk Scenes Using a Bayesian Network Model. |

| [46] | California Department of Motor Vehicles Autonomous Vehicle Testing Records [78,79,80,81,82], Virginia Department of Transportation (DOT) [83], the New York State DOT Traffic Crash Reports [84], NASA Risk Assessment Data [85] | Fault Tree Analysis: Bayesian Belief Network for LIDAR and Camera Failure, Chi-square distribution for Radar Failure, Extended Markov Bayesian Network for Software Failure, Kalman filter for Wheel Encoder Failure, Least Squares for GPS Failure, Generic quorum-system evaluator for Database System Failure, In IEEE 802.11b Network and CAP for Communication Failure, Markov Chain Model for Integrated Platform Failure, Human Reliability Analysis (THERP, CREAM, and NARA) for Human Command Error, and Artificial Neural Networks on Clean Speech for System Failure for Human Command Detection |

| [47] | CARLA Simulator | Risk Assessment Based Decision-Making to Avoid Collisions with Likelihood Analysis via Conditional Random Field (CRF) |

| [48] | Captured Video Feed | Bayesian Framework and Decision Trees |

| [49] | AnAn Accident Detection (A3D) [111], and DADA-2000 [86] Datasets | Appearance, Motion, and Context Consistency Learning via Self-Supervised Consistency Traffic Accident Detection (SSC-TAD) Learning |

| [50] | Collected 200 Hours of Smartphone GPS and Video Data | Quantile Regression (QR) Modeling, EuroFOT Thresholding, and Spacial Clustering |

| [51] | Collected 30 Frames-per-second (FPS) Video Data | Analyzing Horizontal and Vertical Motion Divergence without Object Detection and Depth Sensing |

| [52] | NGSIM US101 [77], and highD [87] Datasets | Long Short-term Memory (LSTM) Encoder-Decoder for Sequence Generation, Convolutional Social Pooling (CSP) for Extracting Local Spatial vehicle interactions, and Graph Attention Network (GAN) for Distant Spatial Vehicle Interactions |

| [53] | Lyft Level-5 Dataset [74] | Reinforcement Learning: Partially Observable Markov Decision Process (POMDP) for Sequential Decision-making, Bayesian Gaussian Mixture Models for Learning Patterns of Trajectory, and Gibbs Sampling for Validating Simulations |

| [54] | Athens Dataset [88] | Dynamic Bayesian Networks (DBN) |

| [55] | UK [89], and Athens [88] Datasets | Network-level Collision Prediction (NLCP), and Dynamic Bayesian Networks (DBN) |

| [56] | NGSIM [77], and California’s Caltrans PeMS System Loop Detector [90] Datasets | Collision Risk Assessment Indicator by Risk Repulsion |

| [57] | Monte Carlo Simulation | Collision state probability (CSP), and Collision event probability (CEP) |

| [58] | ISO 3888 Test Track [91] | Dynamical Autonomous Intelligent Vehicles (AIV) Modeling and Fuzzy Logic Control |

| [59] | Automotive Data and Time Triggered Framework (ADTF) [92] | Risk-based Criticality Measurement: General Integral Criticality Measurement, Integration of Severity Prediction Functions, Environmental Risk Parameters, and Multi-dimensional Risk Mdoels |

| [60] | SLAM System [93] | Run-time Safety Monitoring Framework via Lane Detection, and Object Detection |

| [61] | Hungarian Road Network from the Hungarian Central Statistics Department (HCSD) | Historical Accident Data Risk Assessment Based on Thresholding and Distribution Analysis via Sliding Window |

| [62] | CARLA Simulator [71] | Formal Conformance Test Generation, and Statistical Analysis on Traces |

| [63] | Dynamic Simulation | Occluded Vision Analysis Based on Dynamic Bayesian Network (DBN) Inference |

| [64] | Constant Turn-Rate and Acceleration (CTRA) | Micro-genetic Algorithm, and Model Predictive Control (MPC) |

| [65] | Vehicle-to-Everything (V2X) Data | Directed Acyclic Graph (DAG) Blockchains |

| [66] | LIBRE [99], Foggy Cityscape [100], CADCD [101], Berkley DeepDrive [102], Mapillary [103], EuroCity[104], Oxford RobotCar[105], nuScenes [106], D2-City [107], DDD17 [108], Argoverse [109], Waymo Open [110], A3D [111], Snowy Driving [112], ApolloScape [113], SYNTHIA [114], P.F.B [115], ALSD [116], ACDC [117], NCLT [118], 4Seasons [119], Raincouver[120], WildDash [121], KAIST multispectral [122], DENSE [123], A2D2 [124], SoilingNet [125], Radiate [126], EU [127], HSI-Drive [128], WADS [129], and Boreas [130] Datasets; as well as, CARLA [71], LG SVL [94], dSPACE [95], CarSim [96], TASS PreScan [97], AirSim [69], and PTV Vissim [98] Simulation Environments | Assessing Effects of Weather on Automated Driving Systems (ADS) Perception and Sensing |

| [67] | BLE AoA Dataset [131,132] | Analyze Indoor Localization Systems: Wireless Fidelity (Wi-Fi), Ultra-Wide Bandwidth Radio (UWB), Inertial Measurement Units(IMU), Bluetooth Low Energy (BLE), |

| [68] | Long Range Wide Area Network (LoRaWAN) [133,134] | Vehicle Geolocalization |

| Open Area Field | Related References |

|---|---|

| Real-time navigation | [4,5] |

| Using novel statistical methods | [6,7,12,27,28,50] |

| Generalization | [9,14,41,44,45,53,55] |

| Using new sensors | [18,20] |

| Enhancing robustness | [29,32,34] |

| Parameter engineering | [37,38,39,46,47] |

| Safety considerations | [51,137,138] |

| Deploying novel technology (blockchain and mmWave, etc.) | [52,135,136] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Goudarzi, P.; Hassanzadeh, B. Collision Risk in Autonomous Vehicles: Classification, Challenges, and Open Research Areas. Vehicles 2024, 6, 157-190. https://doi.org/10.3390/vehicles6010007

Goudarzi P, Hassanzadeh B. Collision Risk in Autonomous Vehicles: Classification, Challenges, and Open Research Areas. Vehicles. 2024; 6(1):157-190. https://doi.org/10.3390/vehicles6010007

Chicago/Turabian StyleGoudarzi, Pejman, and Bardia Hassanzadeh. 2024. "Collision Risk in Autonomous Vehicles: Classification, Challenges, and Open Research Areas" Vehicles 6, no. 1: 157-190. https://doi.org/10.3390/vehicles6010007

APA StyleGoudarzi, P., & Hassanzadeh, B. (2024). Collision Risk in Autonomous Vehicles: Classification, Challenges, and Open Research Areas. Vehicles, 6(1), 157-190. https://doi.org/10.3390/vehicles6010007