In this section we present the overall results of the simulation. The simulation was run on a Linux-based high-performance computing cluster for computational efficiency and required 13.4 years of total computing time.

3.1.1. Convergence

When looking at the ability of each method to reach a final solution across simulation conditions (Table 1), we found that the NUTS MCMC method converged for every data set, without any outliers. Note that the Bayesian model constrains the parameter estimates to admissible values, so it did not produce improper solutions. For WLSMV, 99.5% of the data sets converged on a solution, but several data sets presented outliers; these outliers were more common in conditions with smaller sample sizes and a larger number of dimensions. Finally, for WLSMV, 1476 data sets presented Heywood cases (improper solutions) [43, 44].
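As a rough illustration of what counts as a Heywood case, the sketch below flags an improper solution in a standardized factor model. It assumes the common definition (an item residual variance that is zero or negative, equivalently a communality of 1 or more, or an off-diagonal factor correlation of magnitude 1 or more). The function names and the list-of-lists input layout are hypothetical and are not taken from the software used in the study.

```python
from typing import Sequence

Matrix = Sequence[Sequence[float]]


def residual_variances(loadings: Matrix, factor_corr: Matrix) -> list[float]:
    """Residual variance 1 - lambda_j' Phi lambda_j of each item's latent response.

    Assumes standardized loadings (rows = items, columns = factors) and a
    factor correlation matrix Phi with unit diagonal.
    """
    out = []
    for lam in loadings:
        d = len(lam)
        communality = sum(
            lam[k] * factor_corr[k][m] * lam[m] for k in range(d) for m in range(d)
        )
        out.append(1.0 - communality)
    return out


def has_heywood_case(loadings: Matrix, factor_corr: Matrix, tol: float = 0.0) -> bool:
    """True if any residual variance is <= tol or any factor correlation has |r| >= 1."""
    if any(theta <= tol for theta in residual_variances(loadings, factor_corr)):
        return True
    d = len(factor_corr)
    return any(
        abs(factor_corr[k][m]) >= 1.0 for k in range(d) for m in range(d) if k != m
    )


# Item 2 has a standardized loading above 1, so its residual variance is negative.
phi = [[1.0, 0.4], [0.4, 1.0]]
print(has_heywood_case([[0.7, 0.0], [1.05, 0.0], [0.0, 0.6]], phi))
```

Working from the communality rather than from individual loadings keeps the check valid when items load on more than one correlated factor.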

In the case of the EM algorithm, the model converged for 57.6% of the data sets. Convergence was 0% for the models with six and eight factors and 100% for the models with one and two factors. This was expected, as the EM algorithm is more likely to fail with higher dimensionality. For the four-factor model, 87.9% of the data sets converged. Across sample sizes, the convergence rate increased from 53.8% for to 60.0% for . In terms of the number of items, convergence was 55.2% with 5 indicators per factor and 60.0% with 10 indicators per factor. Of the converged results, 13 data sets produced outliers, which were more common in data sets with smaller sample sizes. Lastly, parameters could be estimated for all data sets, but standard errors (SEs) could not be computed for three of them because the information matrix was not positive definite. This occurred in the condition with five items per factor, four factors, and .
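One way to see why fixed-quadrature EM breaks down as factors are added is to count quadrature nodes. Assuming EM integrates over a full tensor-product grid with q points per dimension (as in Bock-Aitkin style EM), the grid has q^D nodes for D factors, and each E-step evaluates every respondent's likelihood at every node. The value q = 15 below is a hypothetical choice for illustration, not the setting used in the simulation.

```python
def quadrature_nodes(points_per_dim: int, n_factors: int) -> int:
    """Nodes in a full tensor-product quadrature grid: q ** D."""
    return points_per_dim ** n_factors


def e_step_evaluations(n_persons: int, points_per_dim: int, n_factors: int) -> int:
    """Person-by-node likelihood evaluations in one E-step: N * q ** D."""
    return n_persons * quadrature_nodes(points_per_dim, n_factors)


if __name__ == "__main__":
    q = 15  # hypothetical number of quadrature points per dimension
    for d in (1, 2, 4, 6, 8):  # numbers of factors used in the simulation
        print(f"D={d}: {quadrature_nodes(q, d):,} nodes")
```

With q = 15 the grid grows from 15 nodes for one factor to 50,625 for four factors and 2,562,890,625 for eight, which is consistent with EM converging reliably for low-dimensional models and not at all for six or eight factors. Monte Carlo and stochastic approaches such as QMCEM and MHRM avoid building this full grid.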

For QMCEM, 96.7% of the data sets converged; the data sets that presented outliers more commonly had a smaller sample size and a greater number of factors. Convergence rates were 100% for the one-, two-, and four-factor models, 99.2% for the six-factor model, and 84.3% for the eight-factor model. Across sample sizes, the convergence rate was consistently between 95.8% and 97.4%. Across the number of items, convergence was 100% with 5 indicators per factor and 93.4% with 10 indicators per factor. Lastly, standard errors could not be computed for 11.0% of the converged data sets. The number of factors affected the SEs: they could not be computed for 53.2% of the eight-factor models, 7.1% of the six-factor models, 1.5% of the four-factor models, and 0% of the one- and two-factor models. Sample size was also related to the problem with computing SEs, as they could not be computed for 18.7% of data sets with . When , this was true for only 6.9% of data sets. Unsurprisingly, the cross condition in which SEs could not be computed for the highest proportion of data sets was eight factors and . In this condition, SEs could not be computed 78.4% of the time.

For the final estimation method, MHRM, models converged for 97.3% of the data sets; outliers were more common in data sets with smaller sample sizes and a greater number of factors. Convergence rates were similar across the number of items per factor (5 items: 97.8%; 10 items: 96.7%). Across sample sizes, the convergence rate was 89.1% for , 99.9% for , and 100% for . The same pattern holds across the number of factors: convergence was 89.0% with eight factors, 97.5% with six, 99.9% with four, and 100% with one and two factors. This leaves a convergence rate without outliers of 97.2%. SEs could not be computed for 13.7% of the converged data sets. Across the number of items, there were problems computing the SEs for 16.0% of the 5-item models and 11.3% of the 10-item models. Across the number of factors, this proportion increased from 4.9% for the one-factor model to 22.0% for the eight-factor model. Finally, across sample sizes, SEs could not be computed for 17.2% of models when , and for around 12.5% when .
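The convergence percentages above are proportions of replications within each condition. A minimal sketch of that tabulation follows, assuming each replication is stored as a dictionary with hypothetical keys `converged` and `outlier` plus condition labels; setting `require_no_outlier=True` yields a convergence rate without outliers.

```python
from collections import defaultdict
from typing import Callable, Hashable, Iterable, Mapping


def convergence_rates(
    results: Iterable[Mapping],
    key: Callable[[Mapping], Hashable],
    require_no_outlier: bool = False,
) -> dict:
    """Percentage of replications counted as successful, grouped by key(result)."""
    counts = defaultdict(lambda: [0, 0])  # group -> [successes, total]
    for r in results:
        ok = bool(r["converged"])
        if require_no_outlier:
            ok = ok and not r["outlier"]  # outliers count as failures here
        tally = counts[key(r)]
        tally[0] += ok
        tally[1] += 1
    return {group: 100.0 * succ / total for group, (succ, total) in counts.items()}


# Hypothetical replication records, one per fitted data set.
runs = [
    {"method": "EM", "n_factors": 2, "converged": True, "outlier": False},
    {"method": "EM", "n_factors": 6, "converged": False, "outlier": False},
    {"method": "MHRM", "n_factors": 8, "converged": True, "outlier": True},
    {"method": "MHRM", "n_factors": 8, "converged": True, "outlier": False},
]
by_cell = convergence_rates(runs, key=lambda r: (r["method"], r["n_factors"]))
print(by_cell)
```

Passing a different `key` (for example, sample size or items per factor) gives the marginal rates reported for each design factor.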

For the following results on parameter estimates, we used all converged results without outliers (column "Included" in Table 1), including the models for which SEs could not be computed. For results related to the variability and coverage of the estimates, we additionally excluded the data sets in which there were problems computing the SEs.
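The two inclusion rules can be written as successive filters. This is a sketch under a hypothetical record layout (keys `converged`, `outlier`, and `se_ok`), not the authors' analysis code.

```python
from typing import Iterable, Mapping


def analysis_sets(results: Iterable[Mapping]) -> tuple[list, list]:
    """Split replications into the point-estimate set and the SE-based set."""
    # Bias analyses: converged replications without outliers; SE failures are kept.
    point_set = [r for r in results if r["converged"] and not r["outlier"]]
    # Variability and coverage analyses: additionally drop failed SE computations.
    se_set = [r for r in point_set if r["se_ok"]]
    return point_set, se_set


runs = [
    {"converged": True, "outlier": False, "se_ok": True},
    {"converged": True, "outlier": False, "se_ok": False},  # kept for bias only
    {"converged": True, "outlier": True, "se_ok": True},    # excluded as outlier
    {"converged": False, "outlier": False, "se_ok": False}, # did not converge
]
point_set, se_set = analysis_sets(runs)
print(len(point_set), len(se_set))
```

Deriving the SE-based set from the point-estimate set guarantees that it is always a subset, so every replication used for coverage is also used for bias.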

3.1.3. Bias

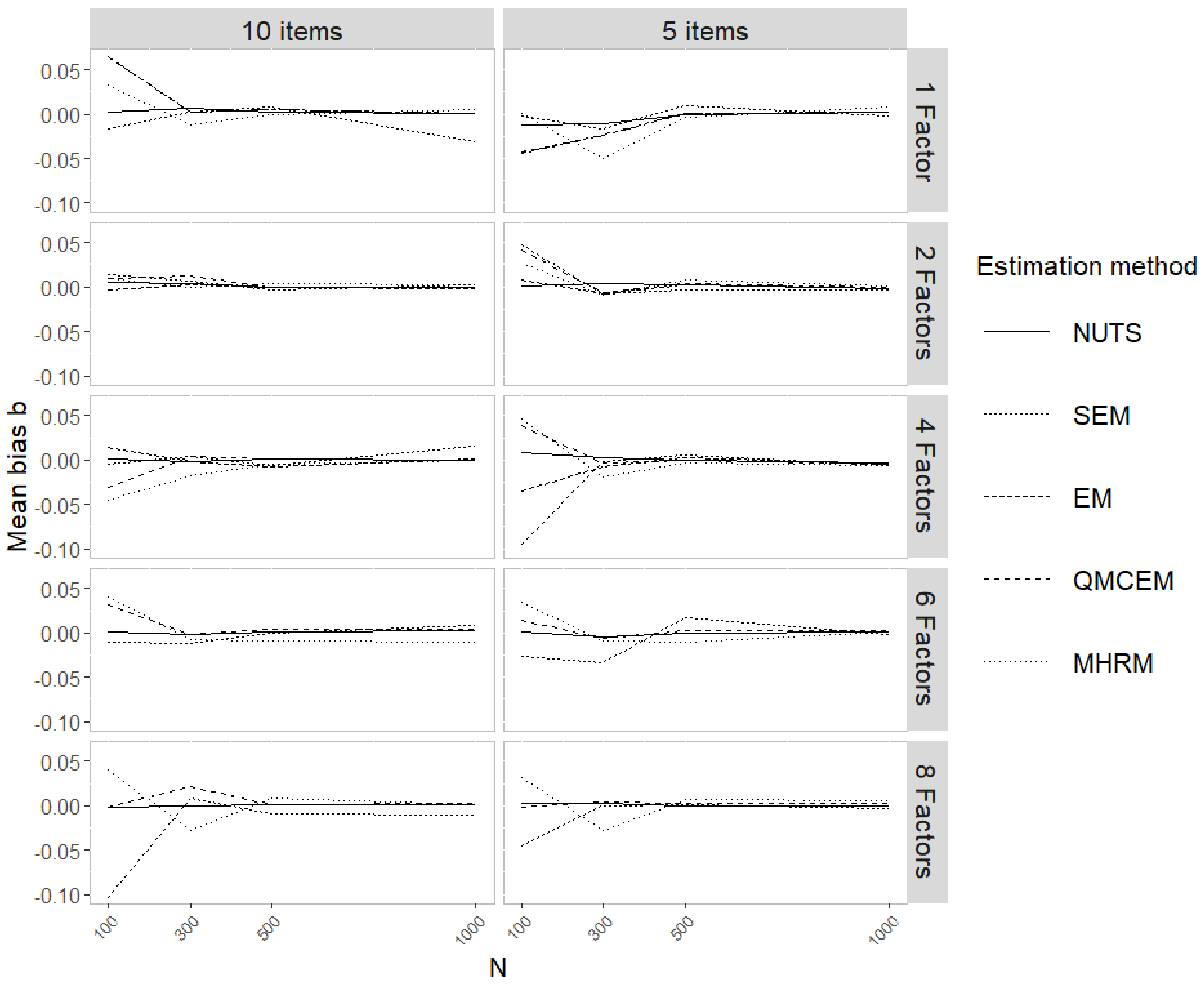

For the average bias (

Table 3), for the difficulty

b parameter, the differences in bias between estimation methods are small, as all of them achieved average bias of zero up to the first decimal (

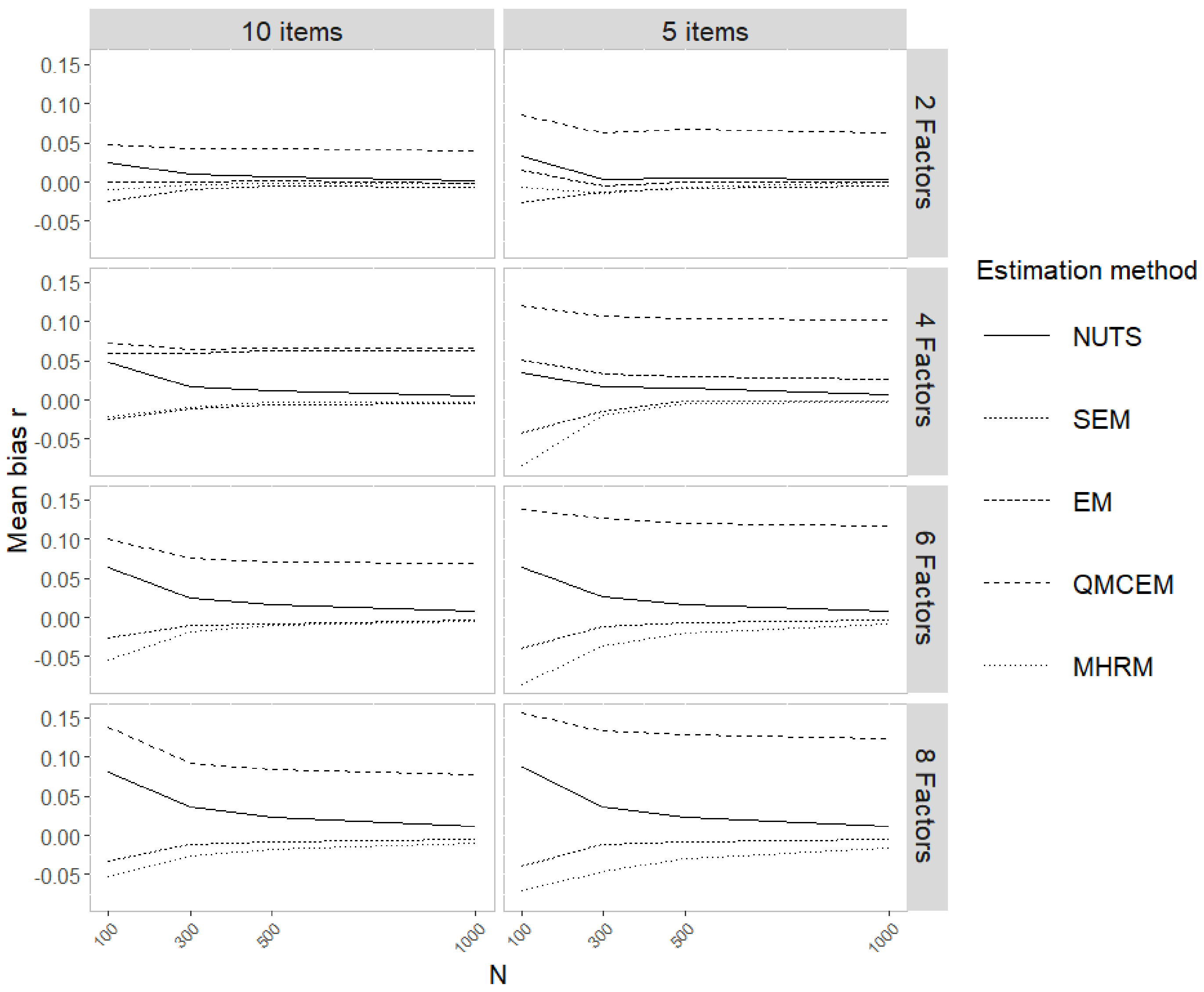

Figure 1). For the factor correlations

r, we find that WLSMV results in the lowest bias, followed by NUTS, EM, and MHRM, consistently across conditions (

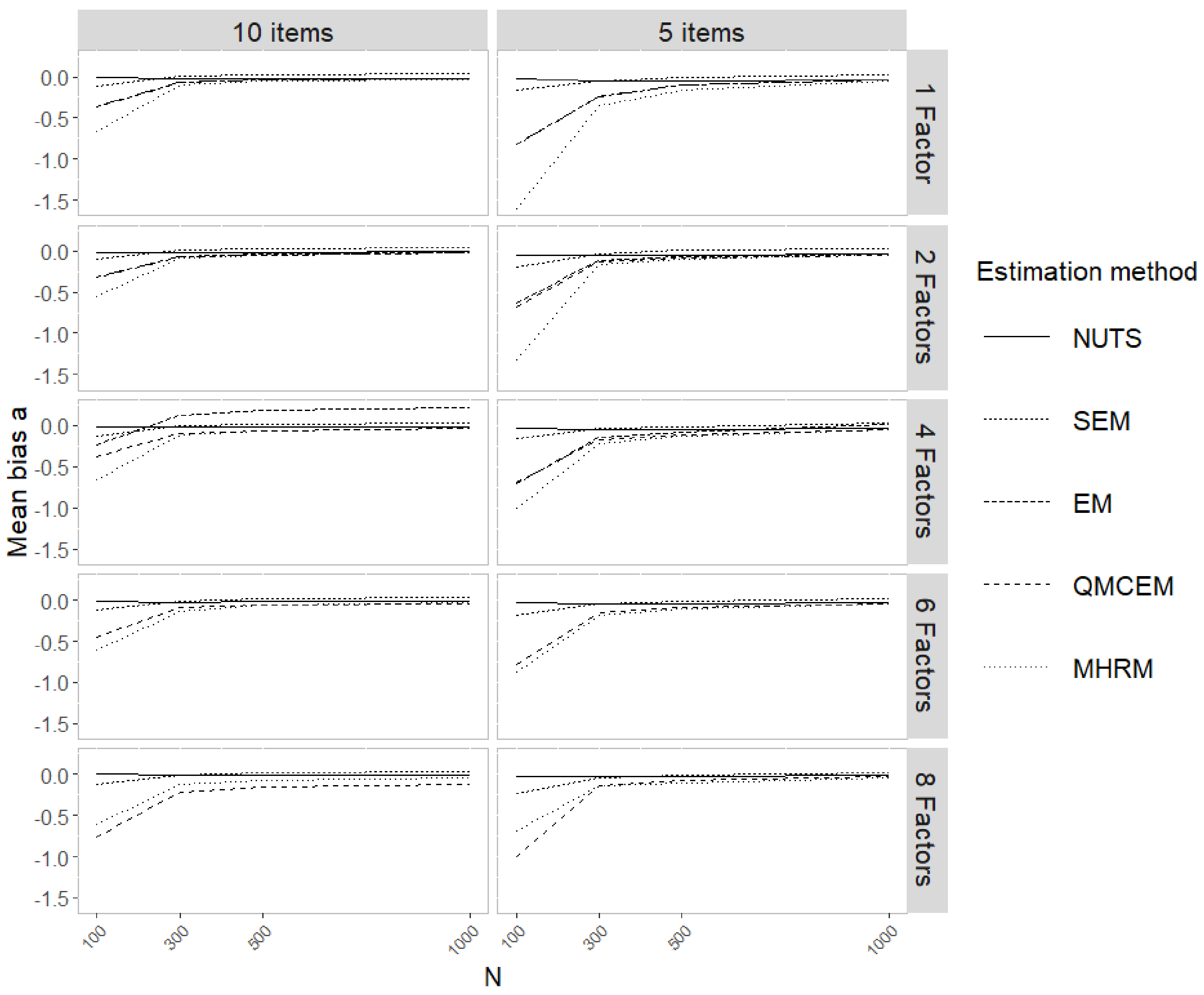

Figure 2). Finally, for the discrimination

a parameters, we see the largest bias differences between methods, where NUTS and WLSMV result in the lowest bias, followed by EM. While QMCEM and MHRM show the highest average bias (

Figure 3). Across simulation conditions, we see that, in general, as sample size increases bias decreases. MHRM presents lower bias as number of factors increase.

When looking at absolute bias (Table 4) for the factor correlation r, most estimation methods (NUTS, WLSMV, EM, and MHRM) present similar absolute bias, while QMCEM consistently presents higher absolute bias. As sample size increases, absolute bias decreases from around 0.09 to around 0.02. NUTS, WLSMV, and MHRM present consistent absolute bias as the number of factors increases, while the absolute bias of QMCEM increases with the number of factors.
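Average bias and absolute bias can rank methods differently because positive and negative errors cancel in the former but not in the latter. The sketch below assumes both are simple means over replications of the signed and unsigned differences between estimate and population value; the exact aggregation behind Tables 3 and 4 may differ.

```python
from statistics import fmean
from typing import Sequence


def _errors(estimates: Sequence[float], truths: Sequence[float]) -> list[float]:
    """Signed errors (estimate - population value), checked for equal length."""
    if len(estimates) != len(truths):
        raise ValueError("estimates and truths must have the same length")
    return [e - t for e, t in zip(estimates, truths)]


def bias(estimates: Sequence[float], truths: Sequence[float]) -> float:
    """Mean signed error: over- and underestimates cancel."""
    return fmean(_errors(estimates, truths))


def absolute_bias(estimates: Sequence[float], truths: Sequence[float]) -> float:
    """Mean absolute error: every deviation counts regardless of sign."""
    return fmean(abs(err) for err in _errors(estimates, truths))


# One overestimate and one underestimate of the same size.
est, true = [0.6, 0.4], [0.5, 0.5]
print(round(bias(est, true), 10), round(absolute_bias(est, true), 10))
```

This is why all methods can show an average bias near zero for b while still differing clearly in absolute bias.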

For the difficulty b parameters, NUTS results in the lowest absolute bias across N and D, while the other methods show similar absolute bias; the only exception is that MHRM presents absolute bias similar to NUTS when the number of factors is larger. As sample size increases, absolute bias decreases and the bias of NUTS and MHRM becomes similar, while the other three methods stay similar across conditions.

Lastly, for the discrimination a parameter, NUTS again shows the lowest absolute bias, followed by WLSMV and EM, while QMCEM and MHRM show similar absolute bias. For all methods, absolute bias decreases as sample size increases. With larger sample sizes, the absolute bias of WLSMV and MHRM becomes similar to that of NUTS, while EM and QMCEM consistently present larger bias. As the number of factors increases, MHRM presents lower bias, but NUTS still presents lower bias across D (plots with the bias and the 90% CI for each method across simulation conditions are presented on the OSF site for this project: https://osf.io/9drne/, accessed on 1 August 2021).

As we simulated population parameters from continuous distributions, we further examined bias across different ranges of the parameter values. We split the population parameters into tertiles, presenting bias across low, medium, and high ranges. As the discrimination parameter followed the distribution , the low range consisted of values lower than 1.258, the medium range of values from 1.258 to 2.116, and the high range of values greater than 2.116. As the difficulty parameter followed the distribution , the low range consisted of values lower than −0.439, the medium range of values between −0.439 and 0.412, and the high range of values greater than 0.412. As the factor correlations followed the distribution , the low range consisted of values lower than 0.332, the medium range of values between 0.332 and 0.464, and the high range of values greater than 0.464.
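The range split can be reproduced with empirical tertiles: two cut points that divide the population values into three equally sized groups, with the medium range including both cut points as described above. The sketch uses `statistics.quantiles`; because it is not stated here whether the reported cut points came from the simulated values or from the generating distributions, the classifier also accepts fixed cut points such as those reported for the a parameter.

```python
from statistics import quantiles
from typing import Sequence


def tertile_cutoffs(values: Sequence[float]) -> tuple[float, float]:
    """Empirical 1/3 and 2/3 quantiles of the population parameter values."""
    low_cut, high_cut = quantiles(values, n=3, method="inclusive")
    return low_cut, high_cut


def range_label(value: float, cutoffs: tuple[float, float]) -> str:
    """Classify a population value as low, medium, or high."""
    low_cut, high_cut = cutoffs
    if value < low_cut:
        return "low"
    if value > high_cut:
        return "high"
    return "medium"  # low_cut <= value <= high_cut


# Cut points reported in the text for the discrimination (a) parameter.
A_CUTOFFS = (1.258, 2.116)
print([range_label(v, A_CUTOFFS) for v in (0.9, 1.258, 1.7, 2.5)])
```

Bias within each range is then the bias computed over only the parameters carrying that label.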

The average bias across ranges of population values and sample sizes is presented in Table 5. Patterns similar to those above appeared: as sample size increases, bias decreases. With for the a parameter, the IRT methods present higher bias at higher ranges of the population values. QMCEM and MHRM repeat this pattern as sample size increases, while EM presents similar bias across ranges at larger sample sizes. WLSMV presents similar bias across ranges of the population values, except with , where the medium range presents higher bias. NUTS presents similar bias across the different ranges of the population values.

For the b parameters, NUTS presents similar bias across ranges, with the medium range presenting slightly smaller bias. The other methods show higher bias at the low and high ranges and smaller bias at the medium range, and these differences decrease as sample size increases. For the factor correlations, NUTS, WLSMV, EM, and MHRM present similar bias across ranges, while the bias of QMCEM increases toward the higher ranges.

3.1.4. Variability

For the variability of the parameter estimates, Table 6 presents the standard deviation of the estimates around the population values (i.e., of the bias). In all cases, variability decreases as sample size increases. Across sample sizes, NUTS presents the smallest variability. For the factor correlations, the other methods present similar variability, except that QMCEM presents higher variability at larger sample sizes. For the a parameters, WLSMV presents lower variability than the IRT methods, but this difference decreases as N increases. For the b parameters, in contrast, the IRT methods present smaller variability than WLSMV.
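Because the population values were themselves drawn from continuous distributions, variability is best summarized on the deviations (estimate minus population value) rather than on the raw estimates, which also carry the spread of the population values. A minimal sketch, assuming the sample standard deviation over replications:

```python
from statistics import stdev
from typing import Sequence


def variability(estimates: Sequence[float], truths: Sequence[float]) -> float:
    """Sample SD of the errors (estimate - population value) across replications."""
    if len(estimates) != len(truths):
        raise ValueError("estimates and truths must have the same length")
    return stdev([e - t for e, t in zip(estimates, truths)])


# Population values differ across replications, as with continuous generating distributions.
est = [1.1, 0.9, 2.1, 1.9]
true = [1.0, 1.0, 2.0, 2.0]
print(round(variability(est, true), 4), round(stdev(est), 4))
```

The SD of the raw estimates is several times larger here only because the population values differ, which is why the deviations are the relevant quantity.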

Across the number of factors, for the factor correlations, NUTS, WLSMV, and EM present smaller variability in most cases; as the number of factors increases, the variability of MHRM decreases while that of QMCEM increases. For the a parameter, NUTS presents the smallest variability, followed by WLSMV. EM and QMCEM have similar levels, while the variability of MHRM goes from the highest in the one-factor model to being close to that of WLSMV with eight factors. Lastly, for the b parameters, NUTS presents the smallest variability, which is consistent across the number of factors. The IRT methods present similar levels of variability, and WLSMV presents the largest variability (plots comparing the variability of the estimation methods across simulation conditions are presented on the OSF site for this project: https://osf.io/9drne/, accessed on 1 August 2021).

3.1.5. Coverage

We were also interested in whether each method would lead users to make the correct inference, that is, whether the parameters of the true population model fall within their respective 95% CIs.
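Coverage is the percentage of replications whose 95% interval contains the population value. The sketch below assumes a symmetric Wald interval (estimate ± 1.96 SE) for the frequentist estimators and an equal-tailed percentile interval from posterior draws for NUTS; the study may have constructed its intervals differently, and the function names are illustrative.

```python
from statistics import NormalDist, quantiles
from typing import Iterable, Sequence, Tuple

Z_975 = NormalDist().inv_cdf(0.975)  # about 1.96


def wald_interval(estimate: float, se: float) -> Tuple[float, float]:
    """Symmetric 95% Wald interval: estimate +/- z * SE."""
    return estimate - Z_975 * se, estimate + Z_975 * se


def percentile_interval(draws: Sequence[float]) -> Tuple[float, float]:
    """Equal-tailed 95% interval from posterior draws (2.5th and 97.5th percentiles)."""
    cuts = quantiles(draws, n=40, method="inclusive")  # 2.5%, 5%, ..., 97.5%
    return cuts[0], cuts[-1]


def coverage(intervals: Iterable[Tuple[float, float]], truths: Iterable[float]) -> float:
    """Percentage of intervals that contain the corresponding population value."""
    hits = [lo <= t <= hi for (lo, hi), t in zip(intervals, truths)]
    return 100.0 * sum(hits) / len(hits)


# Two replications with the same interval; only the first population value is covered.
cis = [wald_interval(0.5, 0.1), wald_interval(0.5, 0.1)]
print(coverage(cis, [0.6, 0.8]))
```

A well-calibrated method should give coverage close to 95%; values well below that, as for the IRT methods on b, indicate intervals that are too narrow, centered in the wrong place, or both.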

Table 7 shows the average percentage of times that the respective 95% CI included the population value. Overall, NUTS has the highest coverage, averaging at least 95.1% and as high as 99.1% across all sample sizes and numbers of factors. It is followed by WLSMV, with an average coverage of at least 90.9% and up to 98.5% across all sample sizes and numbers of factors. All the IRT methods presented low coverage for the b parameter: around 62% for small sample sizes, increasing to around 72% with larger samples. Across numbers of factors, their coverage for b is consistently around 70%. For the a parameter, the IRT methods show similar coverage across sample sizes, around 92%, except that with a large sample size QMCEM and EM present lower coverage, at 85% and 88%, respectively. Across numbers of factors, their coverage is high (around 93%) with one or two factors, but it decreases as the number of factors increases, down to 79.% and 86.8% for QMCEM and MHRM, respectively. For the r parameter, EM and QMCEM start with high coverage at small sample sizes and decrease as sample size increases, while MHRM starts with low coverage at small sample sizes and increases as sample size increases. Across numbers of factors, all IRT methods have high coverage with a small number of factors, and coverage decreases for all of them as the number of factors increases, from around 95% to around 78%.