1. Introduction

Ransomware (or more formally crypto-ransomware) is the branch of malware that, after infecting a computer, encrypts and deletes original data files before demanding a ransom to recover access to the files [1,2,3,4,5]. While early variants of ransomware were typically amenable to reverse engineering [6,7], we have now seen many variants that are cryptographically robust, meaning that the files cannot be recovered without the private keys held by the criminals [8]. Indeed, robust ransomware is now available as a service (RaaS) for use by malicious actors who have little technical knowledge [9]. This means that ransomware provides a relatively simple business model for criminals to earn significant illicit gains [10].

Cryptolocker was one of the first, if not the first, to implement ransomware in a technically sound way [11]. Cryptolocker was distributed using non-targeted phishing attacks and most victims were individuals. The ransom demand was typically in the region of USD 200–400. The precise number of Cryptolocker victims and proportion of victims who paid ransoms are unknown. There is, however, evidence that enough people paid the ransom to generate a large amount of money for the criminals. Conservative estimates put the amount of ransom at USD 300,000 [12] and USD 1,000,000 [13]. Cryptolocker was shut down in 2014, but this has proved to be the beginning rather than the end of the ransomware story. Other large-scale attacks have followed, and new families such as CryptoWall, TorLocker, Fusob, Cerber, TeslaCrypt and Ryuk have emerged [1]. In recent years, ransomware has become increasingly targeted at organisations and wealthy individuals [14,15,16]. Non-targeted phishing attacks that are primarily aimed at individuals nevertheless remain a very live threat.

Given the threat of ransomware, and its negative impact on society, there is a pressing policy interest in analysing the ransomware business model of criminals in order to identify its weak points and pre-empt future attacks. This entails, among other things, understanding the factors that can influence a victim’s willingness to pay the ransom. This willingness to pay is influenced not only by objective factors, such as whether the victim had backups and/or the files were important to them, but also psychological and emotional factors, such as whether the victim experiences anger and/or is willing to engage with criminals. Extensive evidence from psychology and behavioural economics shows that financial decisions can be influenced by the way choices are presented or framed [17,18]. In this paper we explore whether victims’ willingness to pay a ransom is influenced by the way the ransom demand is framed by the criminals. The first point of contact between the criminal and victim comes when the ransomware reveals itself and makes the ransom demand. Typically, this comes in the form of a pop-up window, or similar, stating that files have been encrypted and a ransom must be paid. This pop-up window may only be the start of the process. For instance, modern ransomware strains typically come with a functioning customer service to ‘help’ the victim [19,20]. However, the framing of the initial ransom demand is a critical first stage in the relationship between the criminal and victim. Comparing across ransomware strains, it is readily apparent that there is a huge variation in the look and feel of ransom demands [21].

In exploring whether victims’ willingness to pay a ransom is influenced by the framing of the ransom demand, we apply insights from behavioural economics. A key theme in behavioural economics is that gain–loss framing matters, in the sense that an individual’s choice between options may depend on whether the options are presented as gains or losses. For example, Tversky and Kahneman [17] showed that people’s judgement between health interventions was dramatically influenced by whether the choice was framed in terms of ‘people will be saved’ or ‘people will die’. Similarly, choices between gambles are influenced by whether they are framed in terms of gaining money or losing money [22]. Gain–loss framing has been shown to influence online security behaviour, with loss-framed messages leading to more secure behaviour [23]. As we explain in Section 2, a ransom demand can use a gain frame, focusing on regaining access to files, or use a loss frame, focusing on the loss of files. Moreover, it is notable that some ransom demands seen in the wild focus on regaining access to files while others focus on loss of files. Therefore, differences in gain–loss framing are observed across ransomware strains. We present a simple theoretical model with which to analyse the potential impact of ransom framing on willingness to pay and demonstrate that there are non-trivial trade-offs when designing the ransomware frame. For example, there is a trade-off between a frame that is helpful and supportive (which, ceteris paribus, increases willingness to pay) and one that emphasizes the threat of losing the files (which, ceteris paribus, also increases willingness to pay).

We subsequently report the results of an experiment in which participants ($n = 93$) were exposed to eight ransomware splash screens closely modelled on ransomware splash screens seen in the wild. Participants were asked to rate and rank each splash screen across six different measures, including willingness to pay, trust that the criminals will provide a decryption key, and how positive they would feel about paying the ransom. We find that willingness to pay systematically depends on the way ransom demands are framed. The key mediating factors are trust and positivity. Therefore, overall, we find that willingness to pay is highest for positively framed ransom demands, e.g., CryptoWall, that contain helpful information and instil trust in the victim that they will recover their files. More negative and threatening demands, e.g., CryptoLocker, can only be effective if the victim has trust in the criminals to return access to the files.

Our findings can be of interest to researchers, policymakers and other stakeholders in better understanding the victim’s response to a ransomware attack. This can have two key benefits: (i) It allows pre-emption of future developments in ransomware. In particular, ransomware criminals appear adept at developing their strategy in order to maximize their financial gain. It can therefore be expected that the framing of ransom demands will evolve towards frames that increase willingness to pay. (ii) It allows more targeted advice to victims on how to respond to a ransomware attack, particularly if the aim is to delay and ultimately stop a ransom payment. For instance, we find there can be benefits in undermining a victim’s trust in the criminals.

2. Gain–Loss Framing and Ransom Demands

In this section we introduce a simple model to capture the channels through which a ransomware demand splash screen could potentially influence a victim’s willingness to pay a ransom. Various studies have analysed game theoretic models of ransomware (e.g., [24,25,26,27,28,29,38,39,40]). The focus of the prior literature has been on strategic factors that may influence willingness to pay, such as the presence of backups or insurance and the potential bargaining power of the two parties (e.g., [10,41,42,43]). Our model takes a complementary approach in focusing on what we believe are likely to be the most important behavioural factors a criminal can use to influence the framing of the ransom demand. In doing so, we take as given the value an individual puts on their files and their relative bargaining power.

A key behavioural factor we explore is the distinction between a gain and loss frame. Abundant evidence from economics and psychology suggests that individuals are loss averse, meaning that an individual experiences a loss more severely than they would enjoy an equally sized gain [44,45,46,47,48]. This means that individuals have incentives to avoid losses and make choices that minimize the chances of loss. Crucially, losses and gains must be judged relative to a reference level and this reference level can be influenced by the framing of choice [49,50]. More specifically, a decision context can be framed in a way that a particular outcome is perceived as a loss, or reframed so that the same outcome is perceived as a gain [17]. The reframing of gains or losses has been widely shown to influence choice across a range of settings (e.g., [51,52,53]) including online security [23].

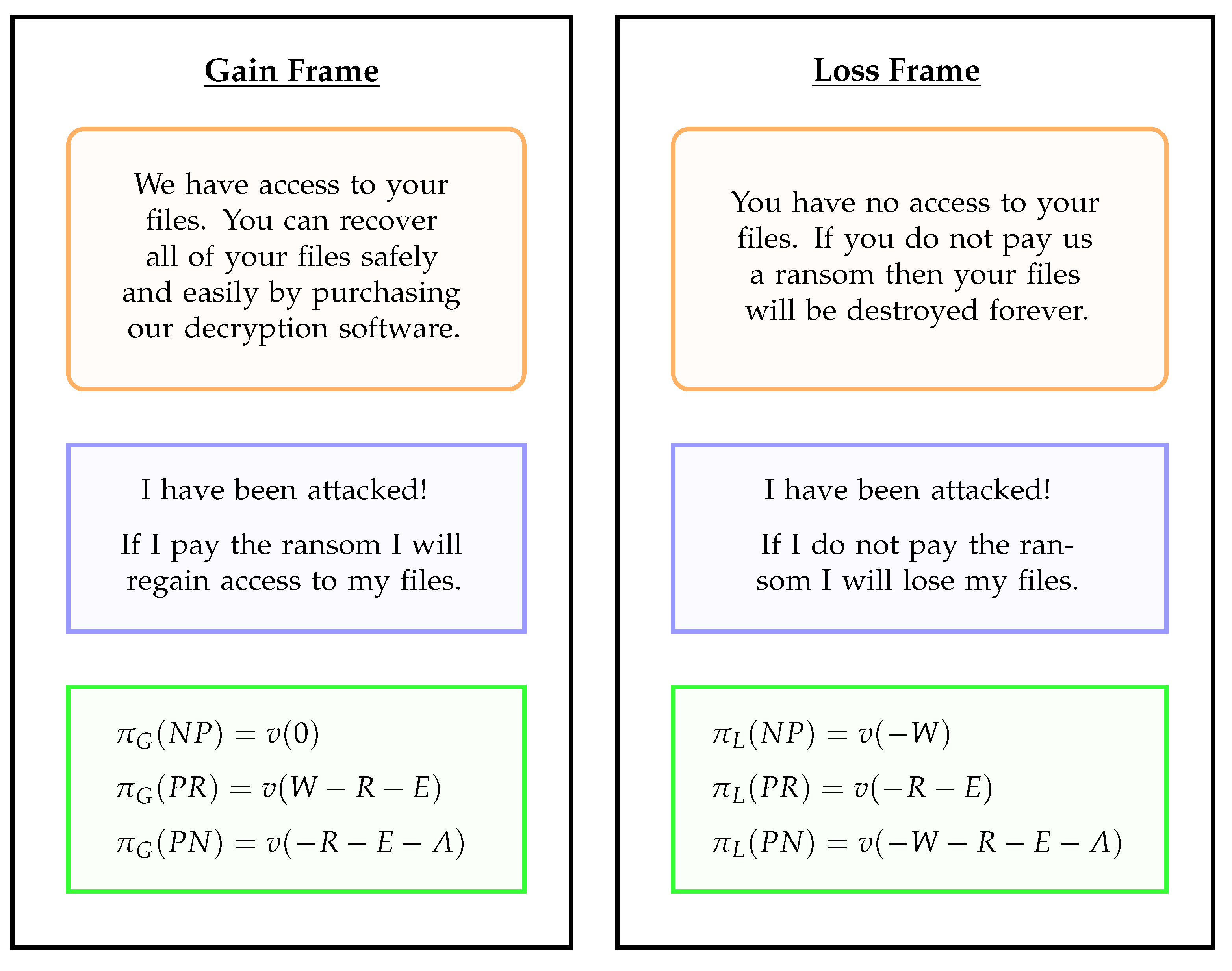

Here, we consider the implications for ransomware. In Figure 1, we illustrate how a ransomware demand could be framed to emphasize the gain or loss of files. The reference point in the gain frame is that the victim has internalized the loss of files. Thus, to recover access to the files would be perceived as a gain. The wording in Figure 1 from a splash screen highlights how the victim can recover their files by paying a ransom. By contrast, in a loss frame, we take as the reference point that the victim has not internalized the loss of access to files. In this case, to not recover access to the files would be perceived as a loss. Again, the wording in Figure 1 from a splash screen emphasizes loss. We shall discuss in Section 3 specific examples of ransom demands in the wild that have framing similar to both these gain and loss examples.

To formalise the gain–loss framing distinction, we introduce some notation. We take as given a value function $v$ that maps outcomes, relative to the reference point, into an associated payoff. It is assumed that $v(0) = 0$ and $v(x)$ is a strictly increasing function of $x$. Hence, positive outcomes are associated with positive payoff and negative outcomes with negative payoff. Consider a gain frame in which the victim has internalized the loss of files. We distinguish three possible outcomes along with the associated payoffs, summarised in Figure 1.

The victim does not pay the ransom and so stays without access to their files. Denote this NP for not pay. Without loss of generality, we set the payoff from NP at 0, denoted $v(0) = 0$.

The victim pays the ransom and recovers access to their files. Denote this PR for pay and recover. In evaluating the payoff, we need to consider the value of the files, denoted $W$, as well as the ransom paid, denoted $R$. We also take into account that the victim may experience disutility on moral and ethical grounds from having paid a ransom to criminals. Denote this cost by $E$. The net payoff is then given by $v(W - R - E)$.

The victim pays the ransom and does not recover their files. Denote this PN for pay and not recover. In this case, the victim again pays the ransom $R$ and experiences disutility on moral grounds. In addition, we also take into account additional disutility from ‘anger’ at the criminals not honouring their promise to return the files. Denote this cost by $A$. The net payoff is then given by $v(-R - E - A)$.

The expected payoff from paying the ransom depends on the perceived probability of regaining access to the files. Denote this by $p$. The expected payoff from paying the ransom is given by:

$$p\,v(W - R - E) + (1 - p)\,v(-R - E - A). \tag{1}$$

The victim will optimally pay the ransom if $p\,v(W - R - E) + (1 - p)\,v(-R - E - A) \geq 0$ or, equivalently, $p\,v(W - R - E) \geq -(1 - p)\,v(-R - E - A)$. In other words, the victim will optimally pay if the expected gain from recovering the files exceeds the expected loss from not recovering the files. The decision to pay a ransom is likely to have a large stochastic element that represents vagaries of the situation which are not modelled here. Even so, we can unambiguously say that the victim’s willingness to pay (for a fixed ransom demand $R$) will be increasing in $p$ and $W$ and decreasing in $E$ and $A$. For more on the cost–benefit trade-offs of ransom payment, see Connolly and Borrion [30].
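The gain-frame decision rule can be sketched in a few lines of Python. This is only an illustration: it reads the expected payoff from paying as $p\,v(W - R - E) + (1 - p)\,v(-R - E - A)$ and compares it with the payoff of zero from not paying. The piecewise-linear `value` function, its default loss-aversion factor, the function names and all numerical inputs are assumptions made for the example, not quantities taken from the experiment.

```python
def value(x, lam=2.0):
    """Assumed value function: v(0) = 0, strictly increasing, losses scaled by lam."""
    return x if x >= 0 else lam * x


def expected_payoff_gain(p, W, R, E, A, v=value):
    """Expected payoff from paying when the loss of files is already internalized."""
    pay_and_recover = v(W - R - E)   # outcome PR
    pay_not_recover = v(-R - E - A)  # outcome PN
    return p * pay_and_recover + (1 - p) * pay_not_recover


def pays_in_gain_frame(p, W, R, E, A, v=value):
    """Pay if the expected payoff is at least the payoff from not paying, v(0) = 0."""
    return expected_payoff_gain(p, W, R, E, A, v) >= v(0)


# Hypothetical inputs: files worth 1000, ransom 300, ethical cost 50, anger cost 100.
example = dict(W=1000.0, R=300.0, E=50.0, A=100.0)
for p in (0.3, 0.5, 0.8):
    payoff = expected_payoff_gain(p=p, **example)
    print(p, round(payoff, 2), pays_in_gain_frame(p=p, **example))
```

With these made-up numbers the victim pays only once the perceived probability of recovery is high enough, which is the comparative static in $p$ described above.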

Consider next a loss frame in which the victim has not internalized the loss of files. We can distinguish the same three outcomes as in the gain frame:

The victim does not pay and so stays without access to their files. The payoff takes into account the loss of files, valued at $W$, hence $v(-W)$.

The victim pays the ransom and recovers access to their files. In this outcome the victim has paid the ransom and incurred the ethical cost. The net payoff is thus $v(-R - E)$.

The victim pays the ransom and does not recover their files. In this outcome the victim has lost their files, paid the ransom and incurred the ethical cost and anger cost. The net payoff is thus $v(-W - R - E - A)$.

The expected payoff from paying the ransom again depends on the perceived probability of regaining access to the files, $p$. The expected payoff from paying the ransom in a loss frame is given by:

$$p\,v(-R - E) + (1 - p)\,v(-W - R - E - A). \tag{2}$$

The victim will optimally pay the ransom if the expected loss from paying the ransom is less than the loss from not paying the ransom, $p\,v(-R - E) + (1 - p)\,v(-W - R - E - A) \geq v(-W)$. Again, the victim’s willingness to pay (for a fixed ransom demand $R$) is increasing in $p$ and $W$ and decreasing in $E$ and $A$.
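The loss-frame rule can be sketched in the same way. Here the payoff from not paying is $v(-W)$ rather than zero, and the victim pays when $p\,v(-R - E) + (1 - p)\,v(-W - R - E - A)$ is at least that large. As before, the piecewise-linear `value` function, the function names and the numbers are illustrative assumptions only.

```python
def value(x, lam=2.0):
    """Assumed value function: v(0) = 0, strictly increasing, losses scaled by lam."""
    return x if x >= 0 else lam * x


def expected_payoff_loss(p, W, R, E, A, v=value):
    """Expected payoff from paying when the files are still counted as owned."""
    pay_and_recover = v(-R - E)          # files back, ransom and ethical cost incurred
    pay_not_recover = v(-W - R - E - A)  # files lost on top of ransom, ethics and anger
    return p * pay_and_recover + (1 - p) * pay_not_recover


def pays_in_loss_frame(p, W, R, E, A, v=value):
    """Pay if paying is expected to hurt no more than not paying, which yields v(-W)."""
    return expected_payoff_loss(p, W, R, E, A, v) >= v(-W)


# Hypothetical inputs: files worth 1000, ransom 300, ethical cost 50, anger cost 100.
example = dict(W=1000.0, R=300.0, E=50.0, A=100.0)
for p in (0.3, 0.5, 0.8):
    payoff = expected_payoff_loss(p=p, **example)
    print(p, round(payoff, 2), value(-example["W"]), pays_in_loss_frame(p=p, **example))
```

Every outcome is negative in this frame, so the comparison is between a certain loss from not paying and a gamble over two losses from paying.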

If we compare the gain and loss frame then it is possible to show, under reasonable assumptions on $v$ and everything else held constant, that the victim has more incentive to pay the ransom in the loss than gain frame. To illustrate, consider a linear value function of the form

$$v(x) = \begin{cases} x & \text{if } x \geq 0, \\ \lambda x & \text{if } x < 0, \end{cases} \tag{3}$$

where $\lambda \geq 1$ measures the extent of loss aversion. Then, the maximum $R$ for which it is optimal to pay the ransom can be explicitly calculated. In the gain frame, we set the expected payoff from paying equal to the payoff of zero from not paying and use Equation (1) to obtain condition $p(W - R - E) - \lambda(1 - p)(R + E + A) = 0$. Solving for $R$, and denoting the solution $R_G$, gives

$$R_G = \frac{pW - \lambda(1 - p)A}{p + \lambda(1 - p)} - E. \tag{4}$$

In the loss frame, we set $\lambda p(R + E) + \lambda(1 - p)(W + R + E + A) = \lambda W$. Solving for $R$, and denoting the solution $R_L$, gives

$$R_L = pW - (1 - p)A - E. \tag{5}$$

If $\lambda > 1$, then $R_L > R_G$. The intuition for this result is that in the gain frame, the victim does not lose anything by not paying the ransom (because the loss is already internalized) while in the loss frame, they lose by not paying the ransom. Thus, loss aversion makes the victim more willing to gamble on the return of their files in the loss frame. Estimates of the loss aversion parameter, $\lambda$, are around 2 [48]. Therefore, we obtain a prediction that victims may be more willing to pay ransom demands if the criminals use a loss frame.
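As a numerical check on the comparison between frames, the sketch below computes the largest ransom the victim would pay in each frame under a piecewise-linear value function with loss-aversion factor `lam`. The closed forms used are our own derivation from the two pay conditions, namely $[pW - \lambda(1-p)A]/[p + \lambda(1-p)] - E$ in the gain frame and $pW - (1-p)A - E$ in the loss frame. They assume $W - R - E \geq 0$ and $R + E \geq 0$ at the cut-off. Function names and parameter values are hypothetical.

```python
def value(x, lam):
    """Assumed piecewise-linear value function with loss-aversion factor lam."""
    return x if x >= 0 else lam * x


def max_ransom_gain(p, W, E, A, lam):
    """Cut-off ransom in the gain frame (assumes W - R - E >= 0 at the cut-off)."""
    return (p * W - lam * (1 - p) * A) / (p + lam * (1 - p)) - E


def max_ransom_loss(p, W, E, A):
    """Cut-off ransom in the loss frame (assumes R + E >= 0, so lam cancels out)."""
    return p * W - (1 - p) * A - E


def net_payoff_gain(p, W, R, E, A, lam):
    """Expected payoff from paying minus the zero payoff from not paying (gain frame)."""
    return p * value(W - R - E, lam) + (1 - p) * value(-R - E - A, lam)


# Hypothetical parameters; lam = 2 is used as a round loss-aversion value.
p, W, E, A, lam = 0.8, 1000.0, 50.0, 100.0, 2.0
r_gain = max_ransom_gain(p, W, E, A, lam)
r_loss = max_ransom_loss(p, W, E, A)
print(round(r_gain, 2), round(r_loss, 2), r_loss > r_gain)

# At the gain-frame cut-off the victim should be exactly indifferent.
print(abs(net_payoff_gain(p, W, r_gain, E, A, lam)) < 1e-9)
```

Under these assumptions the gap between the two cut-offs works out to $p(1-p)(\lambda - 1)(W + A)/[p + \lambda(1-p)]$, which is positive whenever $\lambda > 1$ and $0 < p < 1$, and vanishes when $\lambda = 1$.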

3. Manipulation of Ransomware Frames

Our model shows that if the criminal is motivated by financial gain, it is in their interest to increase the victim’s perception of $W$ and $p$, decrease $E$ and $A$ and put the victim in a loss frame. Clearly, there are natural limits to how much the criminal can influence the victim’s willingness to pay through variations in the splash screen. For instance, a victim who recently performed a comprehensive backup is going to put a much smaller value on losing access to their files in an attack, $W$, than if they had not performed such a backup. We argue, however, that the criminals can have an influence on $p$, $E$ and $A$ and the perception of gain or loss.

We begin with factors that may increase the victim’s perception of the probability of recovering their files, $p$. A professional-looking website, with a smooth interface, clear instructions on how to recover the files and allusion to customer service should increase the victim’s trust in recovery. By contrast, a very basic webpage with spelling and grammatical errors together with contradictory or incomplete advice may leave the victim thinking recovery is unlikely. The opportunity to recover some files for free is also something that could increase the perceived probability of recovering subsequent files. Evidence suggests that individuals often anchor perceptions on readily available information even if that information is superficial [54]. It would therefore seem vital that the criminals make the ransom splash screen look as ‘professional’ as possible [55,56,57].

Hypothesis 1. The victim’s willingness to pay the ransom increases with the perceived quality of the splash screen as well as the clarity of instructions on how to recover the files, because this increases the estimated probability of recovering the files.

We turn our attention next to the moral and ethical dimension. Ransomware splash screens used in the wild vary widely between the use of threatening or supportive language. Threatening language is perhaps most expected (given this is a criminal attack) and does appear. For instance, Cryptolocker included phrases such as ‘Any attempt to remove or damage this software will lead to the immediate destruction of the private key’. Much of the language in ransom demands is, however, relatively supportive. For instance, Cerber was similar to many in alluding to ‘a special software – Cerber Decryptor’. Petya claimed that ‘We guarantee that you can recover all your files safely and easily’ if you purchase the software. Such framing can create an illusory gap between the criminal who stole the files and the ‘service provider’ who is offering to recover those files. This converts the ransom demand into the purchase of ‘special software’. The victim may be more willing to pay a service provider for special software than they would a criminal’s ransom demand. Indeed, service friendliness can have significant influence on customer satisfaction and consequently sales [58,59,60]. A splash screen that focuses on supportive service may therefore decrease the ethical cost, $E$, of paying a ransom and the anger from not recovering files, $A$.

Hypothesis 2. The victim’s willingness to pay the ransom increases with the perceived supportiveness of the splash screen, because this decreases the ethical cost of paying a ransom and the anger from not recovering files.

The distinction between threatening and supportive language also feeds into the framing of losses versus gains. Threats to ‘destroy files’ appeal to loss aversion because they emphasize the loss. Allusions to ‘recovery of files’, by contrast, do not appeal to loss aversion because the frame is more about gaining files that seem lost. Generally, individuals are more loss averse when the loss aspect is primed [61,62,63]. Thus, frames that emphasize destruction may make it more likely the victim will perceive a loss frame. There are other factors that may also convey a loss frame. One common ploy is to include a countdown timer on the splash screen beyond which the encrypted files are destroyed or the ransom demand increases. There is little evidence that this threat is credible. Even so, it may create a sense of urgency in the victim and consequently lead to bias in perceptions of the value of files. For instance, we know that time pressure can be used to manipulate customers [64,65].

Hypothesis 3. The victim’s willingness to pay the ransom is higher with signals that the files will be destroyed, e.g., a countdown timer, because this increases the sense of loss.

Our three hypotheses suggest that there are ways of influencing a victim’s willingness to pay a ransom. Crucially, however, there are some non-trivial trade-offs. The most important trade-off we have identified is that between threatening and supportive language. We would argue that it is difficult to combine the kind of supportive language that would increase the perceived probability of returning the files (Hypothesis 1) and lower the ethical cost of paying a ransom (Hypothesis 2), with the kind of threatening language that would focus a victim’s mind on the loss of files (Hypothesis 3). Factors that increase $p$ and decrease $E$ and $A$ may therefore be inconsistent with inducing a loss frame. This means we need to reconsider the extent to which a victim would be more willing to pay in a loss than gain frame. When arguing, in Section 2, that a victim had more incentive to pay in a loss than gain frame, we assumed everything else remained the same. We now need to consider what happens if $p$, $E$ and $A$ do not remain the same in a loss frame.

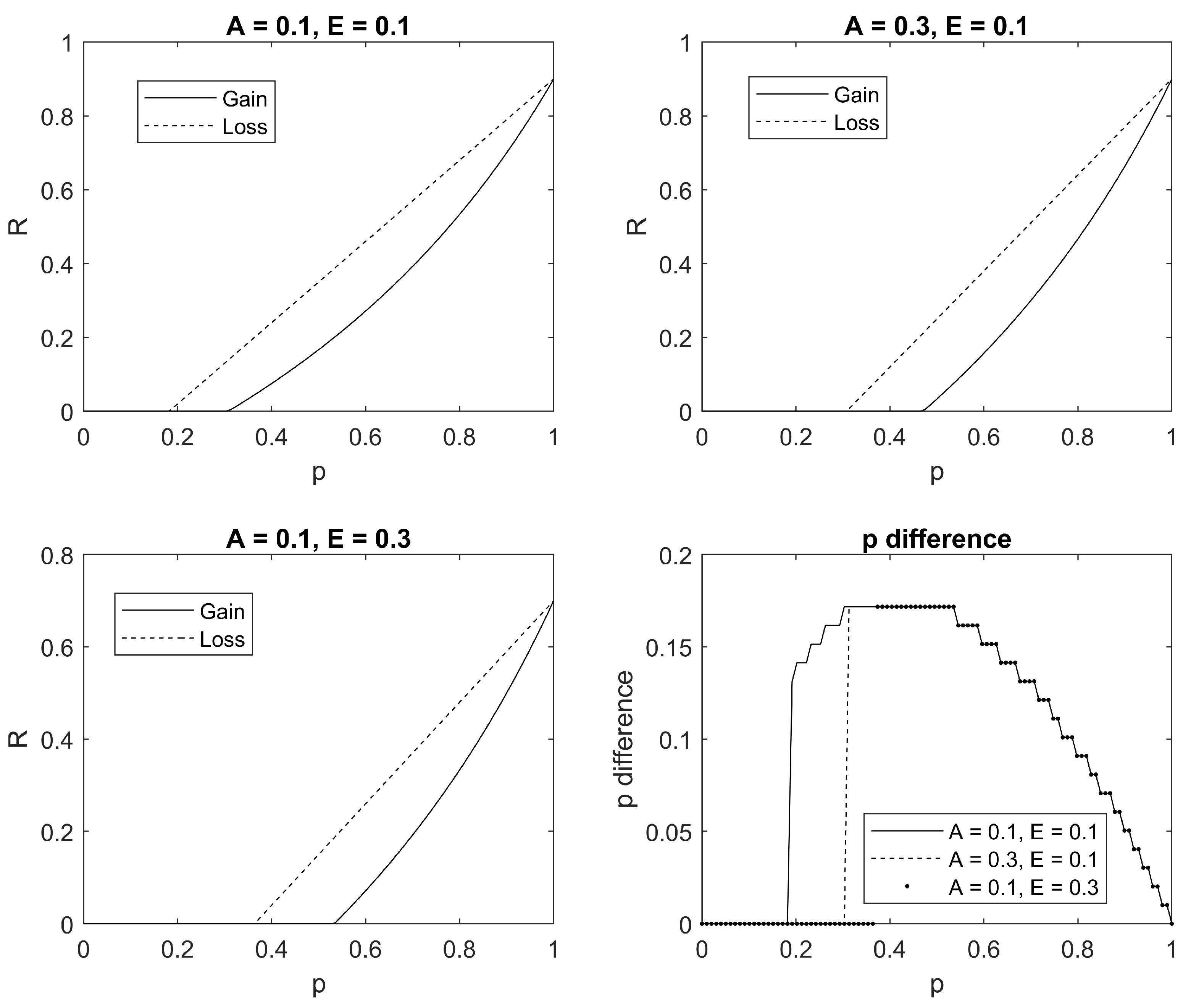

To illustrate, consider again the model from Section 2 and the cut-off values for paying the ransom as given by Equations (4) and (5). Suppose the perceived probability of regaining the files, $p$, and ethics and anger cost of paying, $E$ and $A$, depend on the frame, with subscripts $G$ and $L$ denoting the gain and loss frame. We assume the perceived probability of regaining the files is higher with a gain frame, $p_G > p_L$, because of the supportive language. Similarly, the ethics and anger cost from paying is lower with a gain frame than a loss frame, $E_G < E_L$ and $A_G < A_L$. Then, the victim is willing to pay a higher ransom in the gain frame, $R_G > R_L$, if

$$\frac{p_G W - \lambda(1 - p_G)A_G}{p_G + \lambda(1 - p_G)} - E_G > p_L W - (1 - p_L)A_L - E_L. \tag{6}$$

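If the perceived probability in the loss frame, $p_L$, is sufficiently small and that in the gain frame, $p_G$, sufficiently large, then the impact of loss aversion, represented by $\lambda$, is negated. For instance, if the threatening language of a loss frame results in $p_L$ close to zero while the supportive language of a gain frame results in $p_G$ close to one, then it is trivial that the victim is willing to pay more in the gain frame, $R_G > R_L$.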

To better understand the relative trade-offs, we calculated the value of $R_G$ and $R_L$ for a wide range of values of $E$ and $A$ with

,

,

and

. We summarise our findings in

Figure 2. In the first three subpanels, we plotted the cut-off ransom in the gain frame, $R_G$ (‘Gain’), and in the loss frame, $R_L$ (‘Loss’), for three different combinations of $A$ and $E$. Consistent with our previous analysis, you can see that for a fixed value of $p$, we have $R_L > R_G$. However, our interest is in settings where $p_G > p_L$ (because a loss frame results in less trust). For any value of $p_L$, we can calculate the value of $p_G$ such that $R_G = R_L$. In other words, we can calculate the required difference in perceived probability, $p_G - p_L$, such that the victim is willing to pay the same ransom in a loss and gain frame, $R_G = R_L$. This ‘p difference’ is plotted (as a function of $p_L$) in the bottom right panel of Figure 2 for the three combinations of $A$ and $E$. We see that this p difference is everywhere less than

. Thus, if the gain frame results in the victim putting an increased probability of

or more of regaining access to their files, then they will be willing to pay a higher ransom in the gain than loss frame. Given that a gain frame is likely to lead to a higher perceived probability of regaining files, we suggest that the gain frame will ultimately result in a higher willingness to pay the ransom.
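The ‘p difference’ calculation can be sketched as follows. Under a piecewise-linear value function with loss-aversion factor `lam`, equating the gain-frame and loss-frame cut-off ransoms gives a condition that is linear in the gain-frame probability, so it can be solved in closed form. The formula in `required_p_gain` is our own rearrangement of that condition, and every parameter value below is hypothetical. None of them are the values behind Figure 2.

```python
def max_ransom_gain(p, W, E, A, lam):
    """Cut-off ransom in the gain frame under the assumed linear value function."""
    return (p * W - lam * (1 - p) * A) / (p + lam * (1 - p)) - E


def max_ransom_loss(p, W, E, A):
    """Cut-off ransom in the loss frame under the assumed linear value function."""
    return p * W - (1 - p) * A - E


def required_p_gain(p_loss, W, E_gain, A_gain, E_loss, A_loss, lam):
    """Gain-frame probability at which both frames give the same cut-off ransom.

    The result is not clipped, so values outside [0, 1] signal that no
    admissible probability equalises the two cut-offs.
    """
    target = max_ransom_loss(p_loss, W, E_loss, A_loss)
    numerator = lam * (target + E_gain + A_gain)
    denominator = W + lam * A_gain + (lam - 1) * (target + E_gain)
    return numerator / denominator


# Hypothetical parameters, with costs held equal across frames for simplicity.
W, lam = 1000.0, 2.0
E_gain = E_loss = 50.0
A_gain = A_loss = 100.0
for p_loss in (0.2, 0.4, 0.6, 0.8):
    p_gain = required_p_gain(p_loss, W, E_gain, A_gain, E_loss, A_loss, lam)
    print(p_loss, round(p_gain, 3), round(p_gain - p_loss, 3))
```

Lowering `E_gain` or `A_gain` relative to their loss-frame counterparts shrinks the required difference further, which is the direction of the argument made in the text.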

Hypothesis 4. The victim’s willingness to pay the ransom is higher in a gain frame than loss frame because the gain frame increases the estimated probability of recovering the files enough to offset a higher willingness to pay in a loss frame, ceteris paribus.

In Hypothesis 4, we consider a potential trade-off between a ransom frame and the victim’s perceived probability of recovering the files, $p$. The value of $p$ can be influenced by the strategic interaction between the criminal and victim, and not solely by the ransom frame. For instance, the criminals can decrypt a sample file to signal the files can be recovered. Ransomware actors may also build a reputation over time for returning files to victims [66]. Our model abstracts away from such strategic considerations in focusing solely on the impressions of the victim when viewing the ransom demand. Everything else being the same, it can be expected that strategic considerations would lead to a reduction in the difference in perceived probability between the gain and loss frame, $p_G - p_L$, as initial impressions become replaced by more informed judgment. Thus, Hypothesis 4 should be seen as reflecting initial impressions, which likely influence decision making.

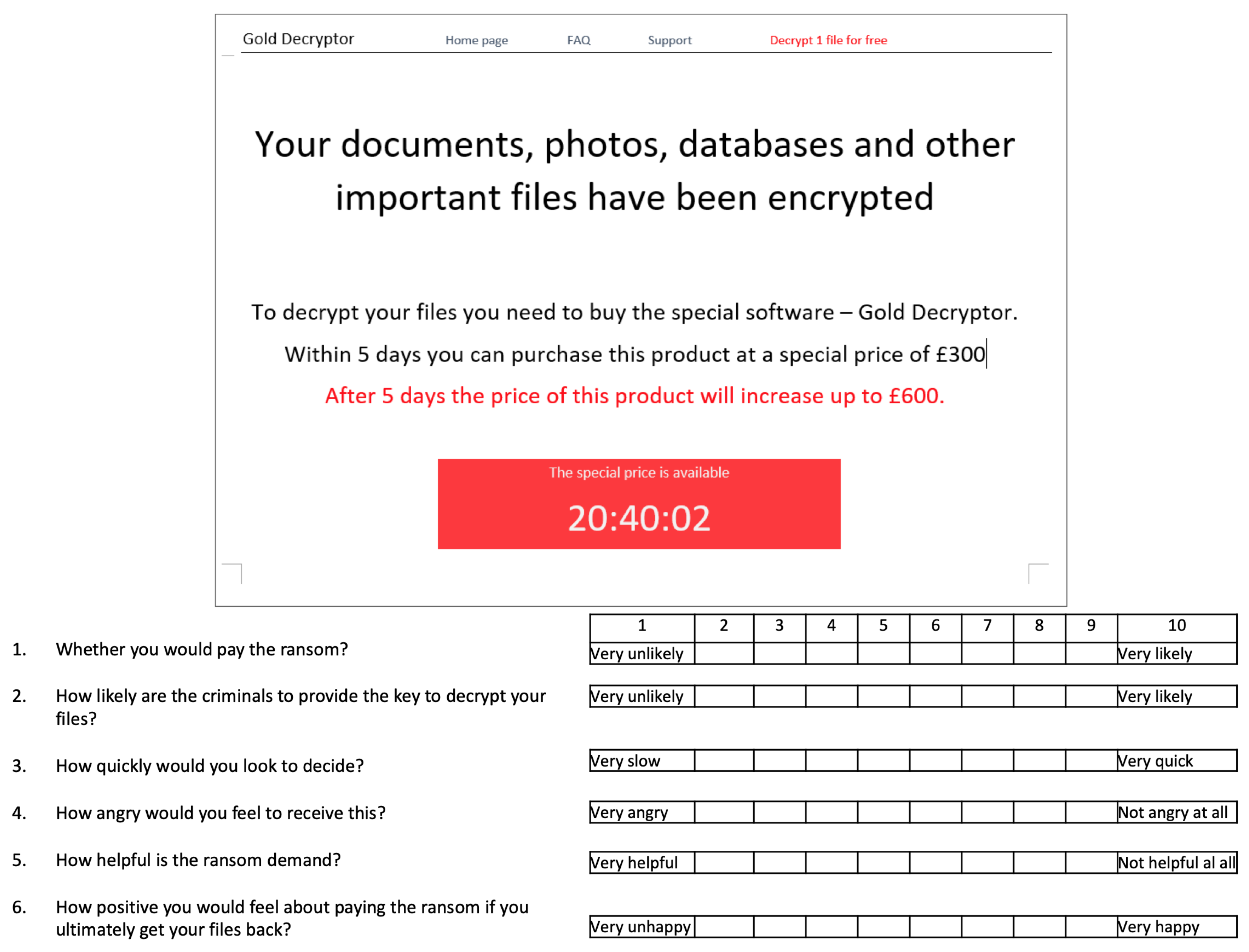

4. Experiment Design

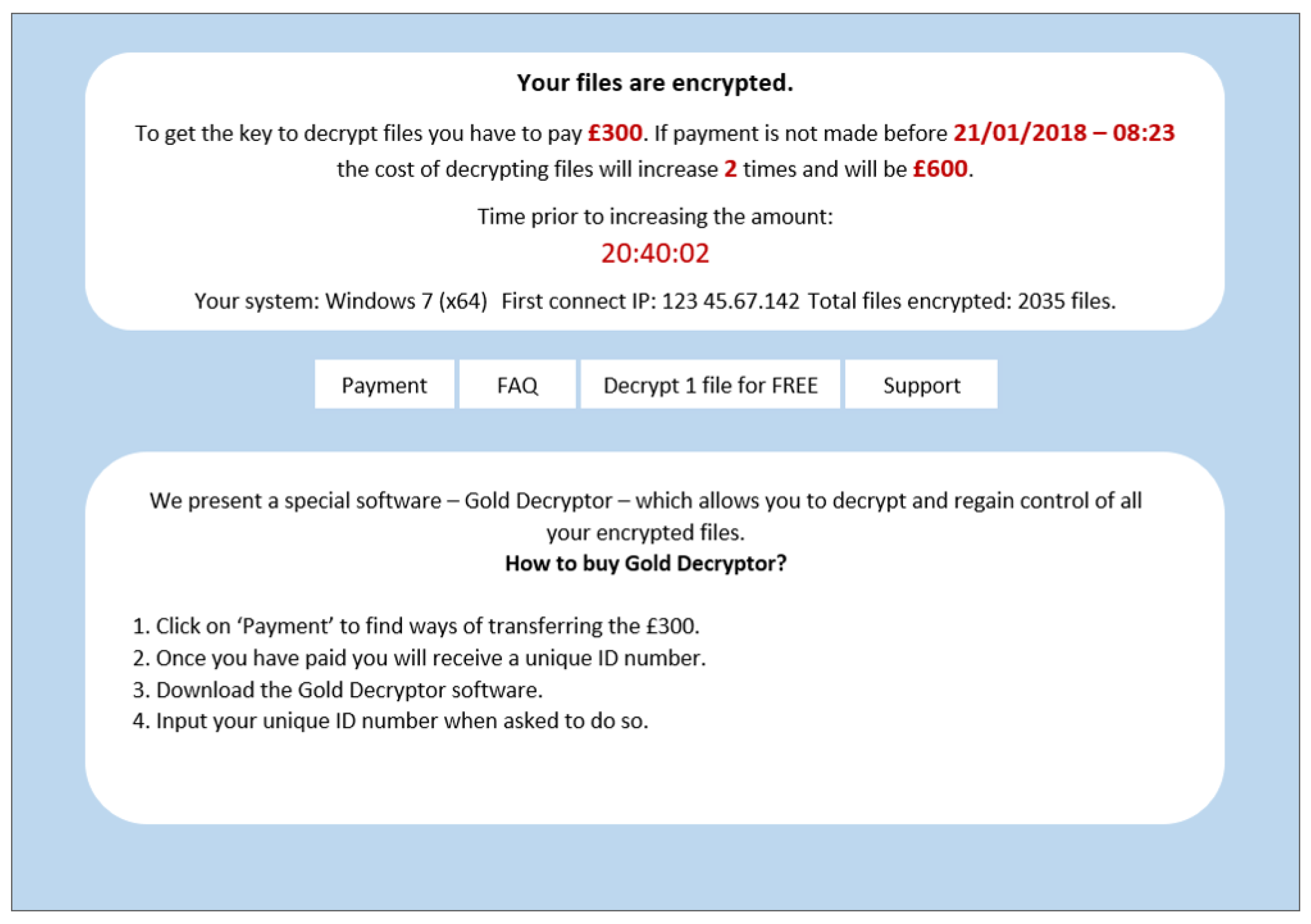

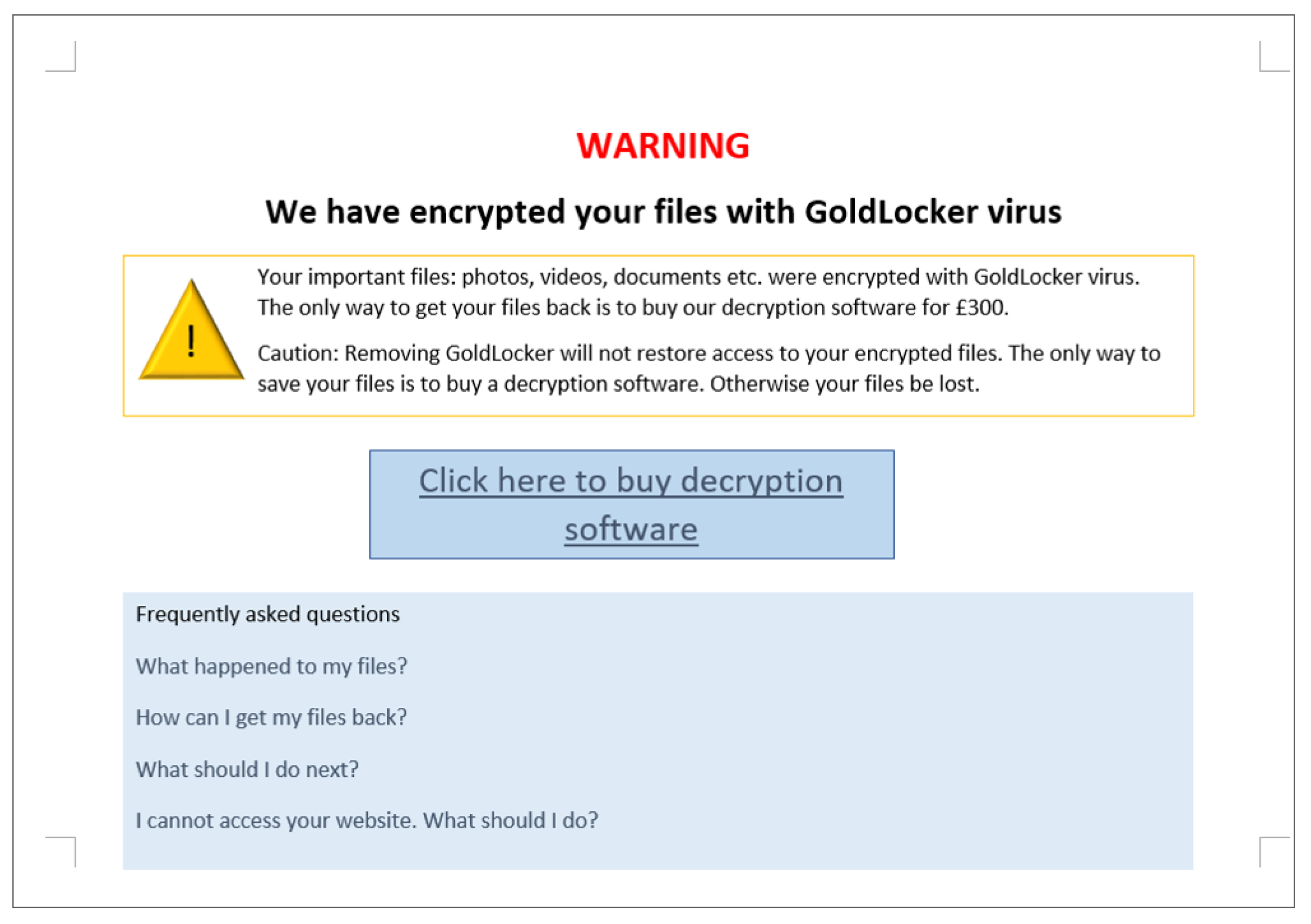

In the experiment, participants were exposed to eight different ransomware splash screens. The splash screens were based on screenshots of genuine ransomware demands, attributed to ransomware strains: Cerber, Cryptolocker, WannaCry, CTBLocker, CryptoWall, Petya, Locky and TorrentLocker. These ransomware demands were chosen to reflect some of the main ransomware strains that have impacted individuals and to reflect the wide variety of ransom splash screens existing in the wild. While the splash screens were designed to be as close as possible to those observed in the wild, we deliberately modified some information to create homogeneity across the eight splash screens, namely, the ransom amount (GBP 300 rising to GBP 600 if not paid on time), the means of paying the ransom, and the use of correct spelling and grammar. Fixing these factors across splash screens allowed us to focus on differences in how the ransom demand was framed rather than, e.g., differences in ransom amount. (The splash screens, together with all the other information on the experiment, are available in Appendix B.)

At the beginning of the experiment participants were given a basic introduction to ransomware. Participants were then told the following: Imagine that you had on your computer some files that are very valuable and for which you do not have a backup. For instance, you have important work and sentimental photos. You would, without any hesitation, be willing to pay GBP 300 to, say, a friend or computer expert if they could recover your files. But your choice is whether to pay the ransom to the criminals. For each ransomware example, we want you to rate your attitudes to six different questions:

How likely would you be to pay the ransom? [WTP]

How likely do you think it is that the criminals will provide the key to decrypt your files? [Trust]

How fast do you think it is that you would make a decision? [Fast]

To what extent would this ransom demand make you feel angry? [Angry]

How helpful is the ransom demand in informing you about what has happened and what to do about it? [Helpful]

If you ultimately get your files back, how positive you would feel about paying the ransom? [Positivity]

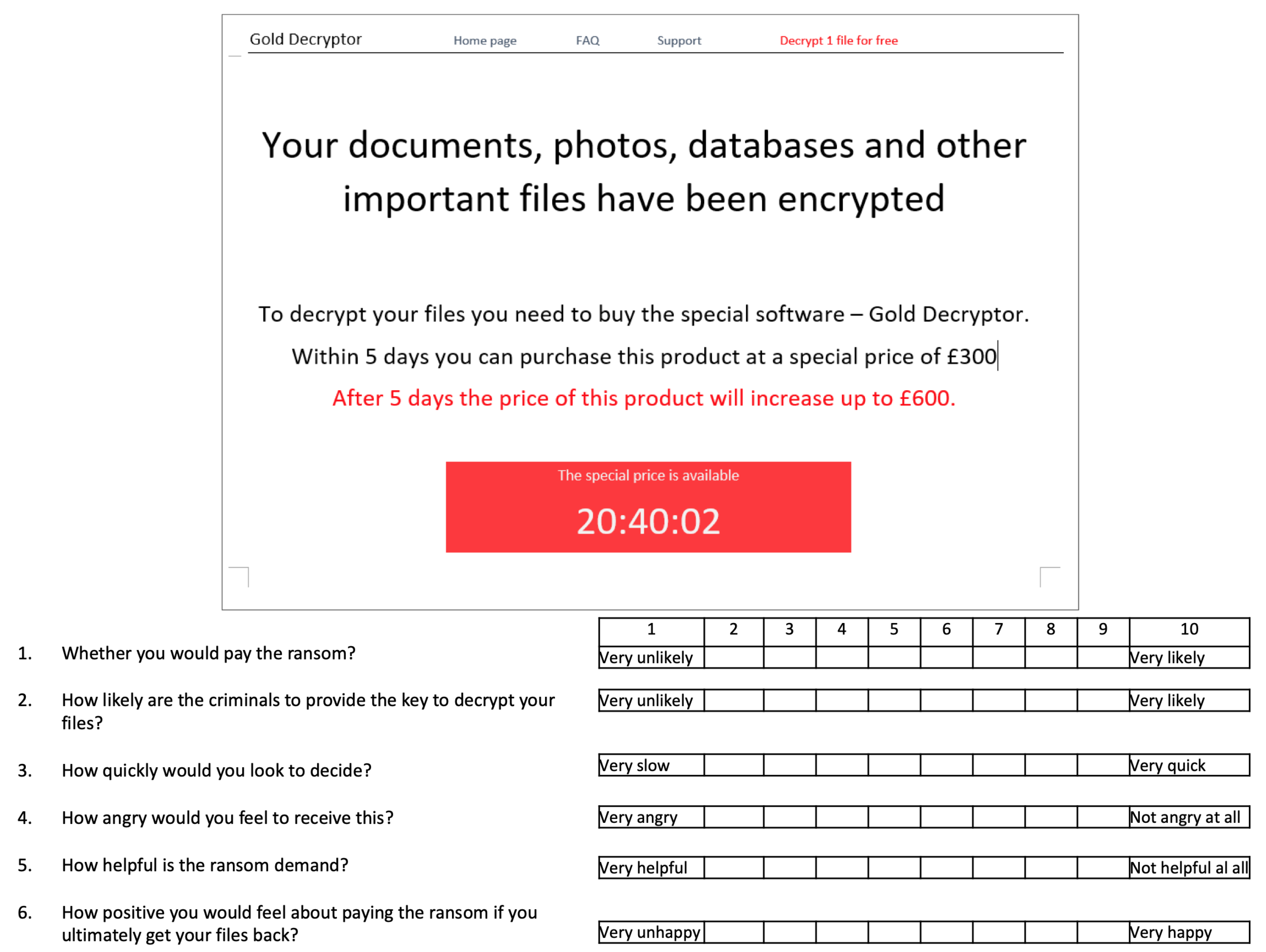

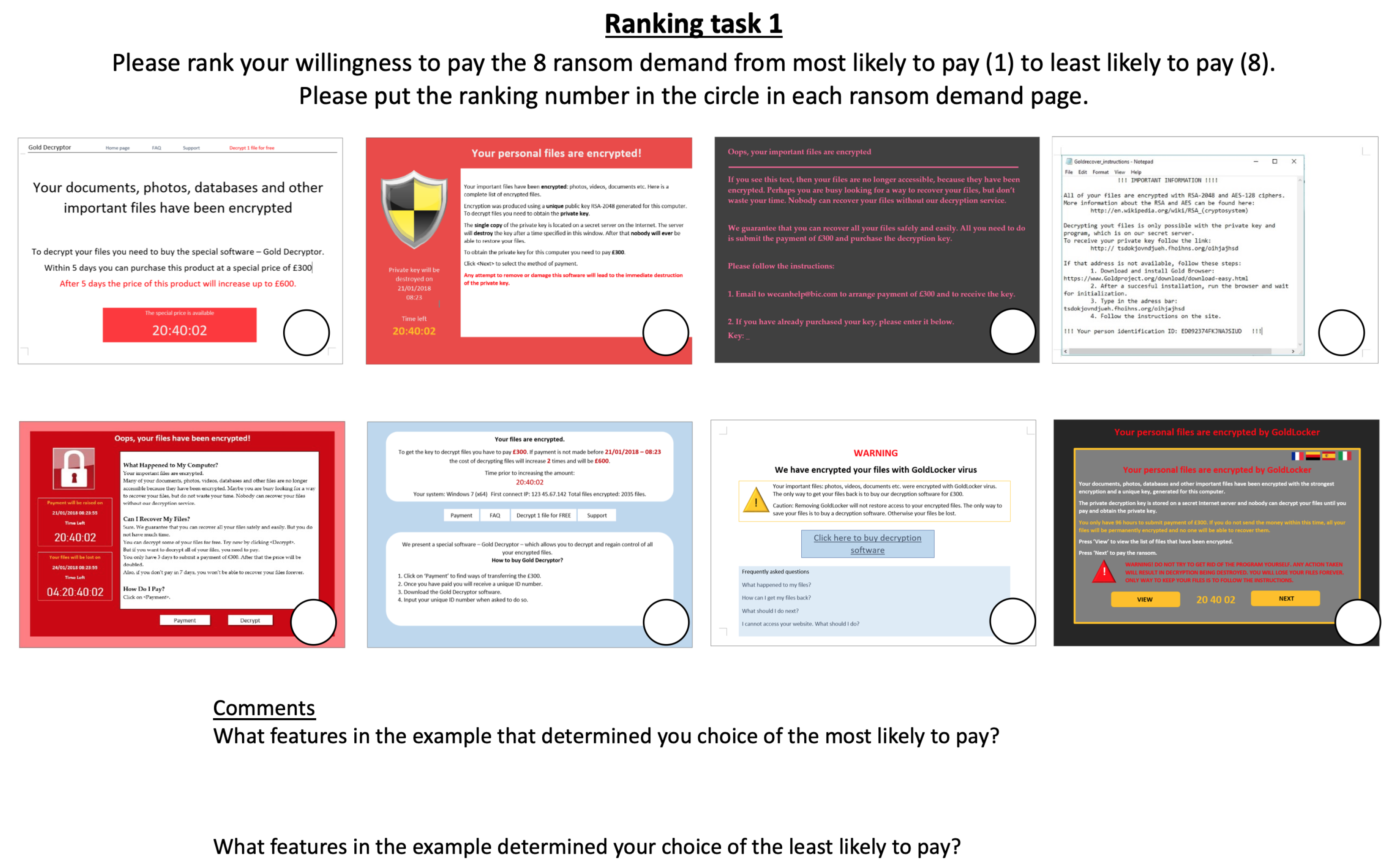

The participants were then asked to rate each ransomware splash-screen example one by one. All questions were rated on a 10-point Likert scale. An example is provided in Figure 3. We used four different orderings of the eight splash-screen examples to control for any order effect. After participants had made independent ratings for the eight ransomware examples, we asked them to rank the examples from one to eight with respect to the six questions. For example, for question 1, we asked participants to ‘Please rank your willingness to pay the 8 ransom demands from most likely to pay (1) to least likely to pay (8)’. We also asked them to briefly comment on their choices for those they ranked first and last. An example is provided in Figure 4.

Table 1 summarises the differences in frames across the eight splash screens. We first identified a range of factors that were objectively determined: (1) The number of words in the demand; (2) whether or not the demand explicitly referred to buying ‘software’; (3) whether there was a time limit; (4) whether the price rose after a set time; (5) whether or not a free sample was offered; (11) text colour; (12) background colour; (13) whether there was use of a logo. We then identified a range of factors that were more subjective: (6 and 7) we scored the level of information provided (from 0 to 3) as a signal of ‘helpfulness’, distinguishing whether information was provided on how to pay (6) and on what encryption meant (7); (8) whether information was provided about the victim’s computer and files; (9) the number of positive phrases in the demand; (10) the number of negative phrases in the demand. Examples of positive phrases included ‘guarantee you will recover’ and ‘regain control’ while negative phrases included ‘destroy’ and ‘nobody will ever’.

As can be seen from Table 1, there was a large variation in splash-screen design. This reflected differences seen in the wild [21]. For example, Cerber has all the ‘gimmicks’, like a timer and price rise, but contains very little information. Petya has none of the gimmicks but more information. To look at another dimension, CryptoWall and TorrentLocker have a positive frame while CryptoLocker and CTBLocker have a negative frame. You can see that half of the splash screens are framed as ‘software’ and all four of these use a positive frame. Five of the splash screens provide information on how to pay, four give information on encryption, and three provide information about the encrypted files. Therefore, there was considerable heterogeneity across all the characteristics we considered.

The experiment was set up in such a way as to fix intrinsic value across the eight ransomware demands. In particular, . The experiment questions were then designed to probe the influence of trust, moral and ethical views and loss aversion. We took question 1 as a measure of overall willingness to pay. Question 2 probed beliefs on the likelihood the criminals would provide the decryption key and so was a measure of p. We refer to this as trust in the sense the victim believes the criminal is (i) capable of and (ii) intends to return access to the files. Question 3, in measuring how fast a decision would be made, and question 6, in measuring positivity at getting the files back, were measures of loss aversion. Finally, question 4, in measuring anger, and question 5, in measuring helpfulness of the demand, were measures of moral and ethical costs E and A.

The experiment took place on a university campus with subjects recruited from the student population. We recognize that this is a specific participant pool of young adults who were relatively technology literate. For instance, there is evidence that young adults are more willing to pay a ransom [42]. There were a total of 93 participants (48% male). Participants were paid a flat fee of GBP 5 for completing the experiment, which took around 20–30 min. The experiment took place after subjects had participated in a separate and unrelated economic experiment. The experiment was conducted using pen and paper. A research assistant then converted the responses into a usable data file. A power analysis confirmed that $n = 93$ was sufficient to detect differences across frames. For instance, if the difference in average WTP ratings across two ransom splash screens was 1, and the standard deviation was 2, then a two-sided t-test with a significance level of

would have a power of

to correctly reject the null hypothesis that WTP is similar across splash screens.
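To give a feel for this kind of power calculation, the sketch below uses a normal approximation to a two-sided test on within-participant differences. It makes several assumptions that the text does not confirm: the comparison is treated as paired, the standard deviation of 2 is applied to the paired differences, and the significance level defaults to 0.05. The function name is hypothetical and the resulting number should not be read as the figure reported for the experiment.

```python
from math import sqrt
from statistics import NormalDist


def approx_power_paired(diff, sd, n, alpha=0.05):
    """Approximate power of a two-sided test of a mean difference of `diff`.

    Uses the normal approximation to the t-test, which is reasonable for
    samples of this size; alpha = 0.05 is an assumed default.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    shift = diff / (sd / sqrt(n))  # standardised effect under the alternative
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


# Difference of 1 rating point, standard deviation 2, 93 participants.
print(round(approx_power_paired(diff=1.0, sd=2.0, n=93), 3))

# Smaller samples give visibly lower power for the same effect.
for n in (20, 40, 93):
    print(n, round(approx_power_paired(diff=1.0, sd=2.0, n=n), 3))
```

A between-subjects reading of the same numbers would use a larger standard error and so yield lower power. The paired treatment here is a modelling choice made for this example.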

A set protocol was used to deal with missing values or incomplete rankings from participants failing to enter all information. In terms of the rating data, each participant was required to rate six items across eight splash screens. This equates to 48 data points per participant, 744 observations per aspect, and 4464 data points in total. There were a total of seven missing values: three from trust, three from helpful and one from fast. In terms of ranking data, each participant was required to rank eight splash screens across six items. Again, this equates to 48 data points per participant and 4464 data points in total. There were 40 missing values: 5 from WTP, 5 from trust, 7 from fast, 8 from angry, 4 from helpful and 11 from positivity. In our analysis, we focused on completed data and therein assumed that missing data were random. This was consistent with our low rate of missing data.
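The counts in this paragraph can be reproduced with a few lines of arithmetic. The sketch below uses only the figures stated in the text, and the variable names are our own.

```python
participants = 93
splash_screens = 8
aspects = 6  # WTP, trust, fast, angry, helpful, positivity

points_per_participant = splash_screens * aspects       # 48
observations_per_aspect = participants * splash_screens  # 744
total_points = participants * points_per_participant     # 4464

# Missing values as reported for the rating and ranking data.
missing_ratings = {"trust": 3, "helpful": 3, "fast": 1}
missing_rankings = {"WTP": 5, "trust": 5, "fast": 7,
                    "angry": 8, "helpful": 4, "positivity": 11}

n_missing_ratings = sum(missing_ratings.values())
n_missing_rankings = sum(missing_rankings.values())

print(points_per_participant, observations_per_aspect, total_points)
print(n_missing_ratings, f"{n_missing_ratings / total_points:.2%}")
print(n_missing_rankings, f"{n_missing_rankings / total_points:.2%}")
```

Both missing-data rates come out below one percent, which is the basis for describing the rate of missing data as low.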

5. Experiment Results

Table 2 reports the average rating of each ransomware splash screen across the six aspects (on a 10-point Likert scale). A higher rating represents higher willingness to pay, more trust in the criminal, faster decision, more anger towards the criminal, more helpful information, and more positive feeling after recovery. The eight ransomware splash screens are ordered based on participants being most likely to pay (CryptoWall) to least likely to pay (Locky). We saw a highly statistically significant variation in willingness to pay and trust ratings across the eight splash screens (

, Kruskal–Wallis test). This also held for how fast a decision would be made and perceived helpfulness and positivity on recovery (

, Kruskal–Wallis test). The only factor that was not influenced by the frame was anger, which was universally high (

, Kruskal–Wallis test). The individual comments of subjects confirmed the high level of anger: ‘I would be furious to receive any of these’, ‘I would be equally angry in each and every case, the design doesn’t affect my angriness’, ‘Treat them all the same, would always make me feel angry’, or ‘Anyone demanding money from me would make me angry’.

Result 1. The splash screen of the ransom demand significantly influences willingness to pay, trust that the files will be returned, how fast a decision will be made, the perceived helpfulness of the demand, and perceived positivity if files are recovered. It does not influence anger.
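For readers unfamiliar with the Kruskal–Wallis test, the following is a minimal pure-Python sketch of the tie-corrected H statistic and its chi-square approximation. The ratings are invented for illustration and are not the experimental data; the function names are hypothetical, and in practice a statistics library would normally be used instead.

```python
import math
from itertools import chain

def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def kruskal_wallis_h(groups):
    """Tie-corrected Kruskal-Wallis H statistic for a list of samples."""
    pooled = list(chain.from_iterable(groups))
    n = len(pooled)
    ranks = average_ranks(pooled)
    weighted, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        weighted += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * weighted - 3 * (n + 1)
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    correction = 1 - sum(c ** 3 - c for c in counts.values()) / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are identical")
    return h / correction

def chi2_sf(x, df):
    """Upper tail probability of a chi-square variable with integer df."""
    if x <= 0:
        return 1.0
    half = x / 2
    if df % 2 == 0:
        term = total = 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    total = math.erfc(math.sqrt(half))
    for j in range(1, (df - 1) // 2 + 1):
        total += math.exp(-half) * half ** (j - 0.5) / math.gamma(j + 0.5)
    return total

# Invented 1-10 ratings for three hypothetical splash screens.
ratings = {
    "screen_a": [8, 7, 9, 6, 8],
    "screen_b": [5, 6, 4, 5, 7],
    "screen_c": [2, 3, 4, 2, 3],
}
h = kruskal_wallis_h(list(ratings.values()))
p = chi2_sf(h, df=len(ratings) - 1)
print(f"H = {h:.3f}, p = {p:.4f}")
```

The p-value compares H against a chi-square distribution with one fewer degree of freedom than the number of groups, so a comparison across eight splash screens would use seven degrees of freedom.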

Table 3 reports participants’ average rankings across the eight ransomware splash screens (we omitted missing observations; hence, the number of observations is less than the 93 participants in the experiment). For each of the six rankings, a lower rank represents a greater likelihood of paying the ransom, more trust in the criminal, a quicker decision, more anger, more helpful information and more positive feelings after recovering the files. The eight ransomware splash screens are sorted by average ranking in WTP. The ordering of ransomware splash screens based on their WTP ranking is identical to that based on their ratings, except for Petya and Locky exchanging places. More generally, we see a high level of consistency between the ratings and rankings. Thus, in the following, we concentrate on the ratings data.

To explore the correlation of ratings across the six aspects, we used linear regression analysis, with standard errors robust for clustering at the individual level. The unit of observation was a subject’s rating of the ransomware demands across each of the six aspects.
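A regression with standard errors clustered by participant can be sketched as follows. This is a simplified illustration with a single regressor (trust) rather than a full multi-factor specification. The data are invented, the function name is hypothetical, and the finite-sample adjustment is one common convention that we assume here, not necessarily the one used in the analysis.

```python
import math

def clustered_ols(y, x, cluster):
    """OLS of y on an intercept and one regressor, cluster-robust SEs."""
    n, k = len(y), 2
    sx, sxx = sum(x), sum(v * v for v in x)
    sy, sxy = sum(y), sum(a * b for a, b in zip(x, y))
    det = n * sxx - sx * sx
    # Inverse of X'X for the design matrix [1, x].
    inv = [[sxx / det, -sx / det], [-sx / det, n / det]]
    b0 = inv[0][0] * sy + inv[0][1] * sxy
    b1 = inv[1][0] * sy + inv[1][1] * sxy

    # Sum the score vectors x_i * residual_i within each cluster.
    scores = {}
    for yi, xi, g in zip(y, x, cluster):
        resid = yi - b0 - b1 * xi
        s = scores.setdefault(g, [0.0, 0.0])
        s[0] += resid
        s[1] += resid * xi

    # "Meat" of the sandwich: sum over clusters of the outer products.
    meat = [[sum(s[i] * s[j] for s in scores.values()) for j in range(2)]
            for i in range(2)]

    def matmul(a, b):
        return [[sum(a[i][m] * b[m][j] for m in range(2)) for j in range(2)]
                for i in range(2)]

    cov = matmul(matmul(inv, meat), inv)
    groups = len(scores)
    # Assumed finite-sample adjustment: G/(G-1) * (N-1)/(N-K).
    adj = groups / (groups - 1) * (n - 1) / (n - k)
    se = [math.sqrt(max(adj * cov[i][i], 0.0)) for i in range(2)]
    return (b0, b1), se

# Invented ratings: four participants, three splash screens each.
participant = [1, 1, 1, 2, 2, 2, 3, 3, 3, 4, 4, 4]
trust = [8, 6, 3, 7, 5, 2, 9, 7, 4, 6, 4, 2]
wtp = [7, 5, 2, 6, 5, 1, 9, 6, 3, 5, 4, 2]

(intercept, slope), (se_intercept, se_slope) = clustered_ols(wtp, trust, participant)
print(f"slope on trust = {slope:.3f} (clustered SE {se_slope:.3f})")
```

Clustering by participant allows the errors in one participant's ratings to be correlated across splash screens, which matters when each participant rates all eight screens.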

Table 4 presents the results. Specification (1) was used to examine the influence of other factors on a participant’s willingness to pay and can be seen as our preferred model. In particular, it provided the most direct test of our theoretical model. Specifications (2)–(6) allowed us to explore the potential relationship between other factors. Specification (1) in Table 4 shows a strong positive relationship between a participant’s willingness to pay the ransom and both trust and anticipated positivity. We found no statistically significant influence of speed of decision, anger or helpfulness.

Result 2. Willingness to pay the ransom is strongly positively correlated with a participant’s trust the criminals will return the files and anticipated positivity if the files are returned.

Consistent with Hypothesis 1, Result 2 suggests that splash screens which increase the perceived probability of regaining access to the files and positivity trigger the highest willingness to pay.

Figure 5 is a radar plot of participants’ average ratings of WTP and trust across the eight ransomware splash screens. The figure clearly illustrates that trust has a very close correlation with WTP. In short, among all aspects, trust has the strongest explanatory power for WTP. The self-reported reasons for participants’ choice of highest- and lowest-ranked WTP are also consistent with the importance of trust. For instance, one subject wrote of CryptoWall that ‘something is free and able to see if it is a scam’ and another of WannaCry that ‘Option to decrypt some files for free, indicated they would decrypt all for payment’. On the flip side a subject wrote of Locky that it ‘Doesn’t look real’ and another of Cerber that the ‘Design shows the encrypters are amateurs, think of other solutions before paying’.

Having seen that trust is strongly related to willingness to pay, we then looked at the factors which underpinned trust. Subjects reported highest trust ratings for CryptoWall, CryptoLocker and WannaCry and lowest ratings for Cerber, Locky and Petya. If we look at Table 1, we can see that the highest-ranked ransomware splash screens have, on average, more words, more gimmicks (timer, logo, price rise, free sample) and more information. If we quantify the 10 properties in Table 1 (excluding word count and colours) and add up all the features, then the most trusted ransomware splash screens scored 11, 10 and 8, respectively, whereas the lowest scored 4, 6 and 4, respectively. This is consistent with the notion that ransomware splash screens with more information are considered more trustworthy. Further evidence for this can be seen in specification (2) of Table 4, where trust is positively influenced by helpfulness. While causality is difficult to infer with confidence, it seems reasonable to suppose that helpful information influences trust, which then influences willingness to pay. This is consistent with Hypotheses 1 and 2.

Result 3. Trust the files will be returned is positively correlated with the amount of information on the ransom demand splash screen and the helpfulness of the information.

Recall that trust in our setting captures both the capability and the intention of the criminals to return a victim’s access to their files. Participants’ comments, which are provided in full in Appendix A, appeared to capture both these elements. For instance, WannaCry was ranked highest for trust and when asked to comment on their choice, participants wrote, e.g., ‘information about file decryption’, ‘description of how to get files back’, ‘more of a solution’, and ‘informative details’. Petya was ranked lowest for trust, and participants commented, e.g., ‘unprofessional style’, ‘looks less professional’, ‘vague’, ‘doesn’t seem authentic’, and ‘looks like amateurs’. A focus on professionalism and information (or lack of it) can be seen to reflect both capability and intention in the sense that professionalism conveys an ability to decrypt the files and a reputational incentive to honour ransom payments.

Having looked at the role of trust, we then turned our attention to perceived positivity in the case of recovering files. From Table 4, we can see that positivity was not related to any of the other measured factors. This would suggest that positivity is a factor, independent of trust and helpfulness, that influences willingness to pay. From Table 2, we can see that CryptoWall, WannaCry and CTBLocker scored relatively highly on positivity while Petya scored the lowest. If we look at Table 1, however, it is difficult to discern any characteristic that explains these results. For instance, CryptoWall has positive and helpful information, while CTBLocker is a negative frame with less information. Similarly, CryptoWall and WannaCry offer a free sample, while CTBLocker does not. The comments of participants offer further perspective. For example, one comment on WannaCry was that it was ‘Professional scammer, could happen to anyone’, and one comment on CryptoWall was that it ‘looks like software’. By contrast, a comment on Petya was: ‘Seems most criminal, paying through emails feels like you’re directly paying the criminal’.

Finally, we looked at the fast aspect, that is, the speed of paying. Combining the ratings with participants’ comments on their reasons for choosing the splash screens they would pay most and least quickly, we found that the design of the timer and countdown in the ransomware demand appeared to facilitate victims’ quick response (e.g., WannaCry, CryptoWall, CryptoLocker and CTBLocker). On the other hand, a lack of information or timer (e.g., Locky) appeared to result in participants being less quick to pay. Variation in fast ratings was statistically significant across ransomware demands (Kruskal–Wallis test). However, we can see from Table 4 that the speed of the decision did not affect participants’ willingness to pay the ransom, and so we found little support for Hypothesis 3. Speed did appear to relate to anger. Specifically, ransomware splash screens that subjects more quickly reacted to were those that made participants less angry. This correlation was statistically significant (from post-estimation results of specification (4) in Table 4).

Result 4. Ransomware splash screens can have significant influence on how quickly participants decide whether or not to pay the ransom. This influences perceived anger and not willingness to pay the ransom.

6. Discussion

Our theoretical model suggested a trade-off between a positive and negative frame. We argued that a negative frame may increase willingness to pay because it makes the victim think about the loss of their files, and loss aversion would then suggest incentives to pay the ransom. On the flip side, a positive frame may increase willingness to pay because it increases trust the files will be returned and decreases ethical and anger costs from paying the ransom. In our parameterized example, we found that a small increase in trust would be enough to offset the impact of loss aversion. This led to our key Hypothesis 4, that any increases in willingness to pay from the use of a loss frame would be offset by a corresponding decrease in perceived probability of the files being returned.

Our experiment results are consistent with Hypothesis 4 and suggest that a positive frame with induced trust, helpfulness and positivity increases willingness to pay. This would imply that splash screens with a positive, gain frame may lead to higher income for the criminals than a negative, loss frame. Our version of CryptoWall is a great example of this in that it had all the gimmicks (except a logo) together with a positive frame. Thus, it was no surprise that it was top ranked and top rated amongst our participants.

While our results suggest that splash screens with a positive frame may be adopted by criminals, there are some caveats, as evidenced by CryptoLocker. CryptoLocker picked up second place in both the rankings and ratings but had fewer gimmicks and a highly negative frame. If we look at the comments of subjects, we find that the threats seemed to increase willingness to pay because they seemed credible. For instance, one subject wrote ‘Negative and serious language, company feel, so makes it seem more valid, threat at the end increases the likelihood that I pay’. Threats within a professional and credible-looking frame may therefore work. Threats within a less credible frame seem particularly likely to backfire. Either way, the contrast between CryptoLocker and CryptoWall shows that it is unlikely there is one set way of manipulating victims. Being friendly or threatening can increase a victim’s willingness to pay.

6.1. Limitations

Our theoretical model focused on a static, partial equilibrium setting in which a victim is deciding whether to pay a ransom given their preferences and beliefs. There is the potential to extend our model to include a dynamic and strategic game-theoretic element in which the victim’s beliefs about the probability of regaining access to their files are updated over time. For instance, the victim’s beliefs may be informed by signals from the criminal, such as decryption of a sample file. The criminal may also have a reputation for returning access to files. Modelling such possibilities would allow a more informed understanding of the perceived probability of regaining the files, a crucial aspect of our model.

Our experimental design had several limitations that could be addressed in future work. First, we used a student sample, so our results should be interpreted as applying to young adults who are technologically literate. There is consistent evidence that experimental results obtained with students are similar to those obtained with the general population (in settings that are relevant to students), e.g., [67, 68]. That said, a student sample can lead to bias if a characteristic that does not vary in the sample, e.g., age, influences the responses [68]. Survey evidence from a large sample of the UK general population showed that willingness to pay a ransom varied with age [42]. Of particular relevance to our study is the direction of any gain–loss framing effect. Blake et al. [69], surveying a representative sample of the UK population, found that loss aversion was highest for 18–24-year-olds and lowest for 45–54-year-olds. This would mean that students are strongly influenced by a loss frame, providing a ‘tough’ test of our crucial Hypothesis 4. Therefore, it seems reasonable to conjecture that our main findings (Results 1–4) extend to a general population. Future work, however, would be needed to test this conjecture across different demographics.

A second limitation of our experimental design is that a lab-based experiment cannot recreate the heightened emotions that would be experienced in a real ransomware attack. Our results should therefore be seen in the light of participants imagining how they would feel in the event of a ransomware attack. That said, our participants reported very high ratings of anticipated anger. They also reported relatively high ratings for willingness to pay. A priori, we would expect participants to underestimate their true willingness to pay because of the instinct to not pay a ransom to criminals. For instance, Cartwright et al. [39] give the example of a CISO discussing a ransomware exercise in which the conversation went quite quickly from a “well we definitely shouldn’t pay money to bad people”, to “we should definitely pay money”. It is unclear how a lack of emotional saliency would impact the relative ratings and rankings of splash screens. A third limitation of our experimental design is that we only considered eight splash screens, which varied across many different characteristics.

6.2. Future Research Directions

The most promising area for future research would be to extend our experimental design to systematically study specific characteristics of ransomware splash screens. We based our study on eight real splash screens, but this caused a loss of experimenter control because the splash screens varied on many different characteristics. A future study could focus on some key characteristics, such as the presence of a free sample, countdown timer and/or the distinction between positive and negative language. Hypothetical splash screens could then be designed that vary only on these key dimensions. This would allow us to directly identify the characteristics that have the most influence on willingness to pay.

It would be particularly interesting to consider a choice experiment that focuses specifically on gain versus loss framing. While our set of splash screens contained some examples with positive language (consistent with a gain frame) and some with negative language (consistent with a loss frame), the frames differed along other dimensions, such as text colour, background colour, logo, etc. A future experiment could fix all elements of the splash screen except for whether the text talks of regaining access to files or losing files. This would allow a more direct test of the influence of the gain–loss framing.

We remind readers that the objective of our work is to inform policy makers and law enforcement in identifying ransomware strains that are likely to be most socially damaging. In doing so, it is important to factor in the importance of the ransom amount, because willingness to pay is likely influenced by a combination of framing and ransom size. In our study, we fixed the ransom demand at GBP 300. A further extension of our experimental design would therefore be to systematically vary the ransom amount (e.g., GBP 300 and GBP 600) alongside the framing of the ransom demand. This would help identify ransom frames that could be particularly socially damaging because they induce victims to pay a large ransom.

It would also be of interest to more directly test the relationship between trust and willingness to pay. We found that trust was strongly correlated with willingness to pay but could not judge causality. In order to see if trust influences willingness to pay (and not the other way around), an experiment could be designed that systematically manipulates trust. For instance, participants could be primed before seeing the splash screen. One set of participants could be given information that ‘some victims recover access to their files’, and another set that ‘some victims do not recover access to their files’. This should manipulate trust and the impact on willingness to pay could then be measured.

7. Conclusions

Ransomware is a major threat to modern economies. While ransomware is currently targeted primarily at organisations, there is no reason why it could not return to being a threat widely aimed at individuals. It is important for policymakers and law enforcement to be ahead of the game and anticipate this evolution. In this paper, we used insights from behavioural economics and evidence from an experiment to analyse how the framing of a ransom demand may influence a victim’s willingness to pay the ransom. This can potentially help predict which ransomware strains are likely to be ‘most successful’ in raising revenue for criminals and therefore pose a bigger threat.

We found that the main determinant of willingness to pay was trust that access to the files would be restored. Correlation does not imply causation, but it seems reasonable to conjecture that if victims trust their files will be returned, they are more willing to pay the ransom demand. We should expect, therefore, that criminals will look to signal a reputation for being trustworthy, whether that be free samples or maintaining a reputation for honouring promises. From the point of view of law enforcement, our results suggest a need to disrupt trust in order to undermine the ransomware business model. If the criminals typically do not return access to files, then it is seemingly possible to undermine confidence through targeted public and business awareness campaigns and advice. If, however, the criminals typically do return access to files, then there are ethical questions about ‘misleading’ information. Indeed, better information may actually increase trust. Thus, this is a potentially complex issue, as illustrated by an FBI agent being quoted in 2015 as saying ‘The ransomware is that good … To be honest, we often advise people to just pay the ransom’ [70].

Alongside trust, we found that the helpfulness of the ransom splash screens also positively correlated with willingness to pay, potentially through its effect on trust. A professionally designed website, the promise of a free trial, frequently asked questions, and clear instructions can all increase the ‘helpfulness’ of the ransom demand. Interestingly, we also found that a positively framed ransom demand appeared more effective than a negatively framed one. In particular, subjects seemed to appreciate a professional-looking website and the impression they were purchasing decryption software. They were less amenable to threats and a framing that did not hide that the money was going to criminals. The ransomware business model could therefore be further disrupted by making victims more aware of criminal tactics. Social engineering tactics can be used to scare victims but also to ‘reassure’ and ‘befriend’ victims. Cybersecurity awareness campaigns should reflect both styles of tactic.