1. Introduction

Cybersecurity has become a critical priority for organizations of all sizes, driven by the increasing frequency and sophistication of cyber threats. Security logs, which systematically capture detailed records of events and activities across IT infrastructures, serve as a fundamental data source for identifying vulnerabilities, policy violations, and anomalous behaviors indicative of cyberattacks.

Historically, the analysis of these logs has relied on rule-based systems and signature matching techniques, often implemented in Security Information and Event Management (SIEM) platforms. While foundational, these approaches suffer from significant limitations: they struggle to detect novel or zero-day attacks for which no signature exists [1], require constant manual effort from security experts to write and maintain complex rules, and often generate a high volume of false positives, leading to “alert fatigue” among analysts [2].

More recently, a variety of classical machine learning (ML) methods—including tree-based ensemble models such as XGBoost, LightGBM, Random Forest, and anomaly detection algorithms like Isolation Forest—have been employed to improve detection performance [

3,

4]. Deep learning (DL) approaches, including Artificial Neural Networks (ANNs), Convolutional Neural Networks (CNNs), and Long Short-Term Memory (LSTM) networks, have also been explored due to their ability to model complex patterns and temporal dependencies [

5,

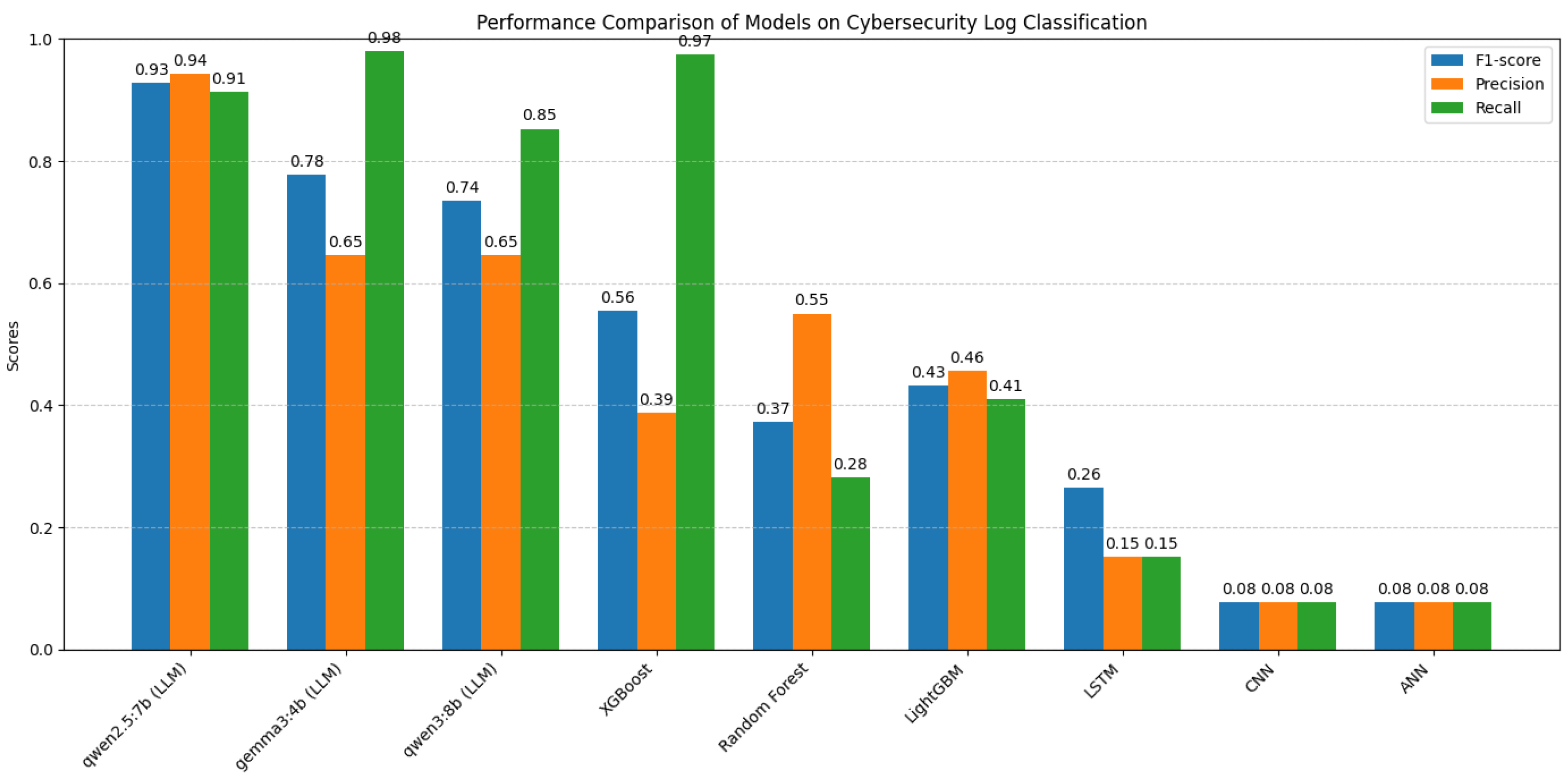

6]. However, these DL models often require substantial computational resources and extensive hyperparameter tuning, and, in our experiments, they demonstrated limited effectiveness compared to tree-based methods and large language models (LLMs). Moreover, both classical ML and DL approaches frequently depend on labor-intensive feature engineering and may struggle to generalize to novel or evolving attack patterns. This motivates the exploration of LLMs, which can inherently capture rich contextual information from raw log data without extensive preprocessing, potentially offering improved adaptability and detection accuracy in real-world cybersecurity scenarios.

In parallel, the field of Natural Language Processing (NLP) has witnessed a revolution with the advent of Large Language Models (LLMs) [7]. These models, trained on massive corpora, have demonstrated unprecedented capabilities in understanding, generating, and reasoning over natural language [8]. Recent research has begun to explore their application to security domains, such as malware detection, vulnerability assessment, and log analysis. LLMs offer the promise of capturing complex dependencies and subtle patterns in textual data, potentially surpassing the limitations of traditional ML/DL methods [9]. However, their adoption in operational cybersecurity settings, especially within small and medium-sized enterprises (SMEs), remains limited due to several practical constraints.

Most notably, the computational cost of training and deploying state-of-the-art LLMs is substantial, often requiring specialized hardware and significant cloud budgets that are beyond the reach of many organizations [8]. This well-documented resource barrier has become a primary driver for research into smaller, more efficient models suitable for local deployment [10], directly aligning with the needs of SMEs. Furthermore, the inherent complexity of these models poses significant challenges for interpretability, the concern of the field broadly known as Explainable AI (XAI) [11]. This “black box” nature, coupled with issues like model “hallucination” (where models generate plausible but factually incorrect outputs [12]), can hinder trust and slow adoption, particularly in high-stakes domains like cybersecurity, where decision transparency is paramount. SMEs, for their part, often operate with constrained IT budgets, limited expertise, and an urgent need for solutions that are both effective and easy to deploy locally.

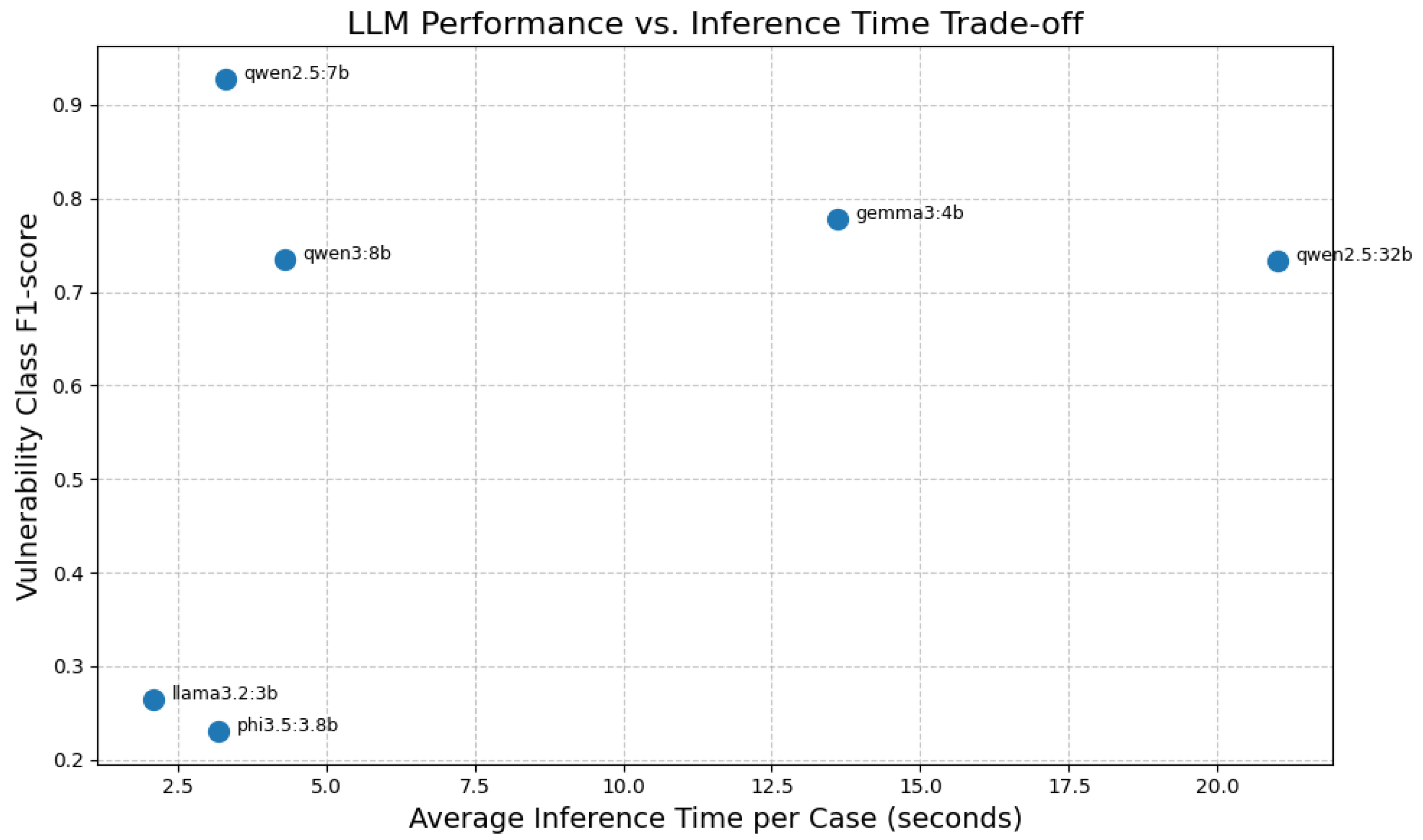

This paper addresses these gaps by systematically evaluating the effectiveness of LLMs in the detection of vulnerabilities and anomalies within security logs, with a particular focus on accessibility and practicality for SMEs. Our study is built upon a curated dataset of security events, each labeled as either “normal” or “anomalous”, providing a robust testbed for benchmarking model performance. Uniquely, our experimental setup is designed to be entirely local. The majority of our experiments (on all LLMs, except for Qwen 2.5 32B) were conducted on a consumer-grade NVIDIA GeForce RTX 3060 Ti GPU with 8 GB of VRAM. The larger qwen2.5:32b model, which exceeds this card’s memory, was evaluated on a more powerful laboratory machine equipped with two NVIDIA GeForce RTX 2080 Ti GPUs, each with 12 GB of VRAM. This dual approach enables us to rigorously compare the trade-offs between detection accuracy and computational cost, and to explore the feasibility of deploying advanced AI-driven security analytics in resource-constrained environments.

Our methodology unfolds in three key phases. First, we benchmark a selection of LLMs on the anomaly detection task, employing standard metrics such as precision, recall, F1 score, AUROC, and AUPRC, as well as false positive and false negative rates to assess practical impact. To further enhance the transparency and trustworthiness of the models, we integrate explainable AI (XAI) techniques, making model outputs interpretable and actionable for non-expert users. This is a critical requirement for SMEs, which often lack dedicated security analysts and need clear, justifiable alerts.
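To make the threshold-based metrics named above concrete, the following minimal sketch computes precision, recall, F1 score, false positive rate, and false negative rate from binary labels, together with a rank-based AUROC. It is an illustration only, not the evaluation code used in this study; the function names (`binary_metrics`, `auroc`) and the encoding 1 = anomalous, 0 = normal are assumptions.

```python
from typing import Dict, Sequence


def _safe_div(numerator: float, denominator: float) -> float:
    # Return 0.0 instead of raising when a metric is undefined.
    return numerator / denominator if denominator else 0.0


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Confusion-matrix metrics, assuming 1 = anomalous and 0 = normal."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = _safe_div(tp, tp + fp)
    recall = _safe_div(tp, tp + fn)
    return {
        "precision": precision,
        "recall": recall,
        "f1": _safe_div(2 * precision * recall, precision + recall),
        "fpr": _safe_div(fp, fp + tn),  # false alarms among normal events
        "fnr": _safe_div(fn, fn + tp),  # missed detections among anomalies
    }


def auroc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random anomaly outscores a random normal event.

    Ties count as half a win. The pairwise loop is quadratic, which is
    acceptable for a dataset of a few thousand events.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one sample of each class")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

The false positive and false negative rates are reported separately because they map onto different operational costs: the former drives alert fatigue, while the latter corresponds to missed incidents.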

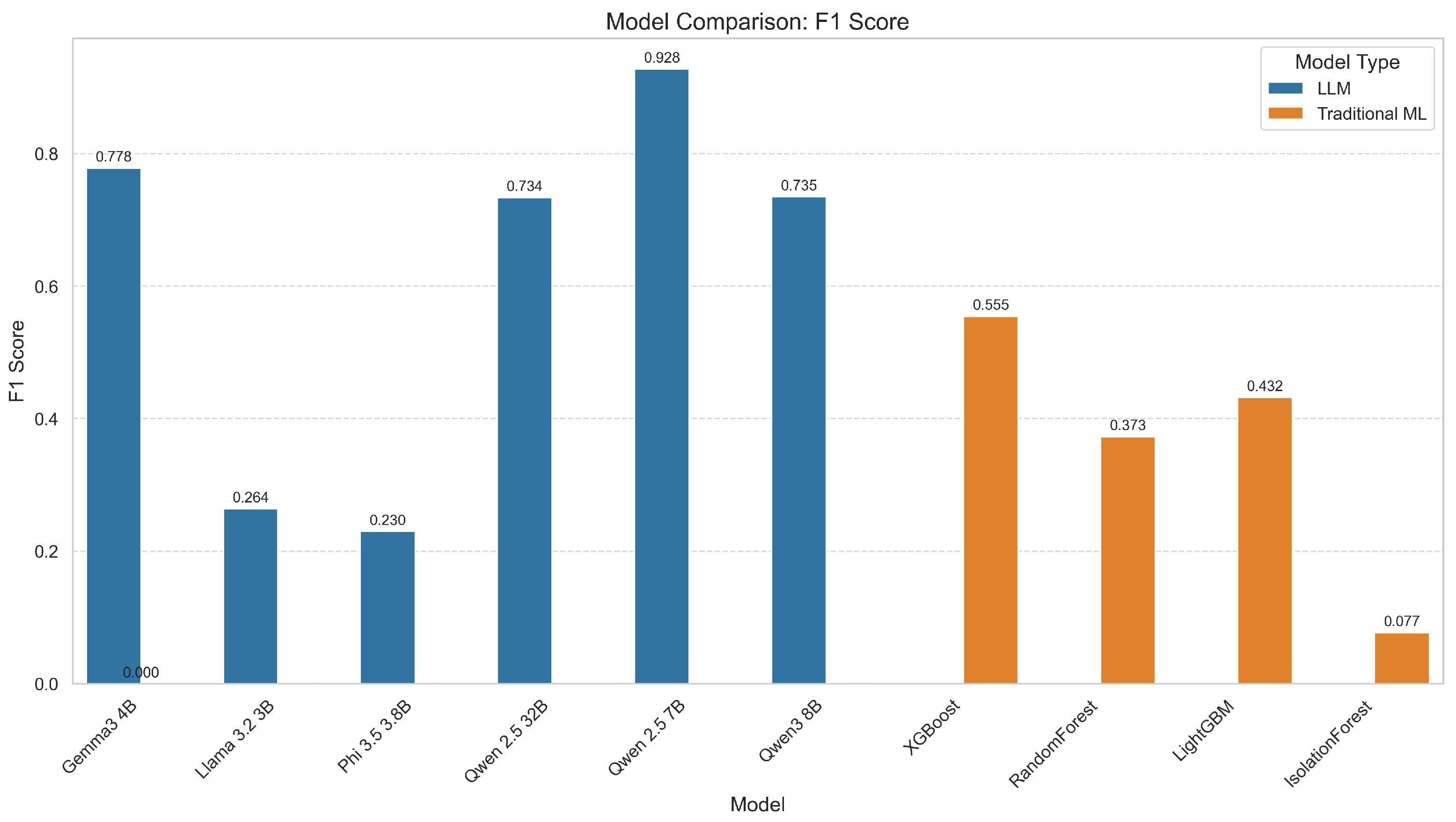

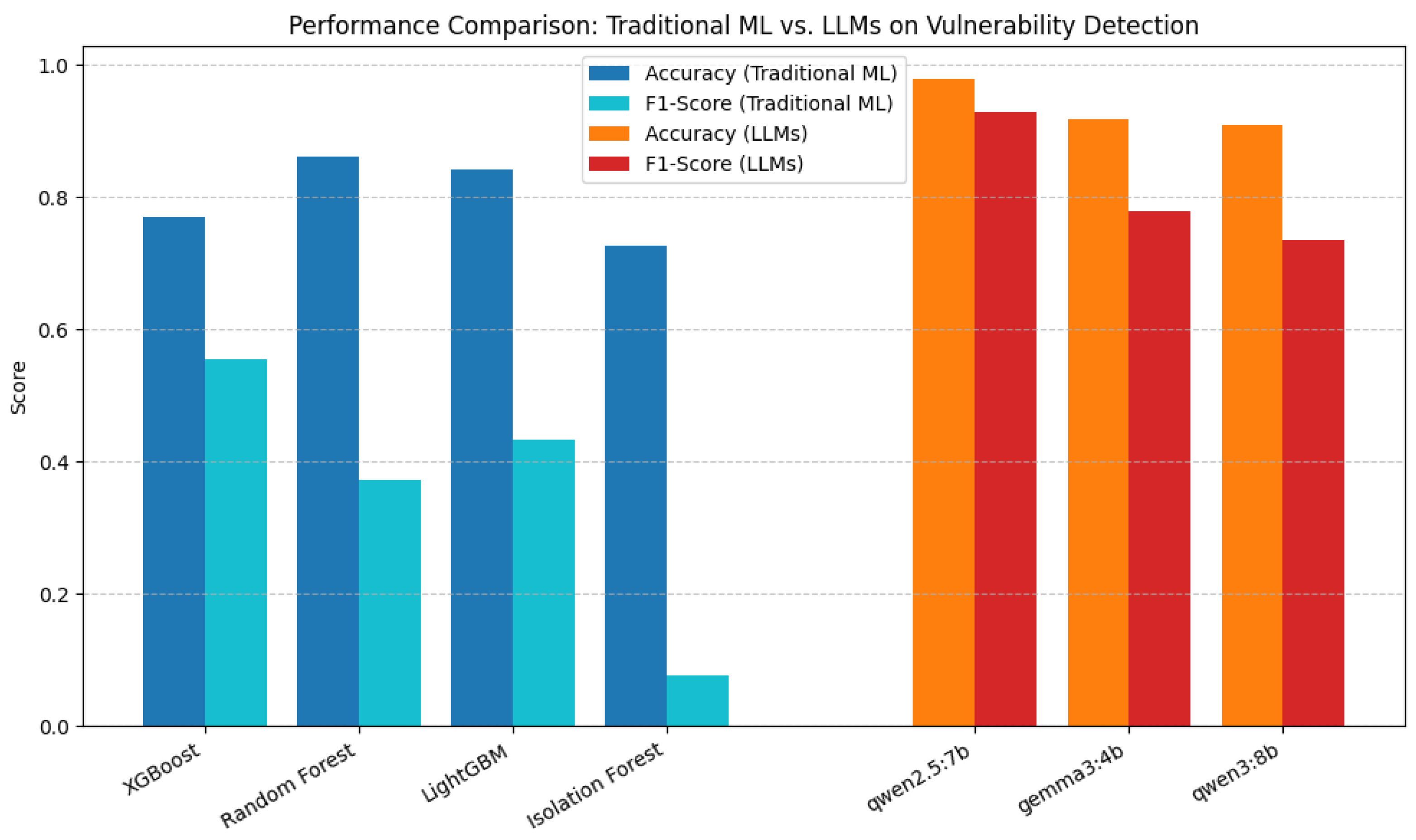

Second, we benchmark the top-performing LLM against a range of established classical machine learning algorithms, including XGBoost, LightGBM, Random Forest, and Isolation Forest. Additionally, we conduct limited experiments with deep learning models such as artificial neural networks (ANNs), convolutional neural networks (CNNs), and long short-term memory (LSTM) networks; however, these approaches yielded suboptimal results in our anomaly detection setting. This comparative analysis highlights the relative strengths and limitations of LLMs in contrast to both traditional tree-based models and deep learning architectures for real-world anomaly detection tasks.

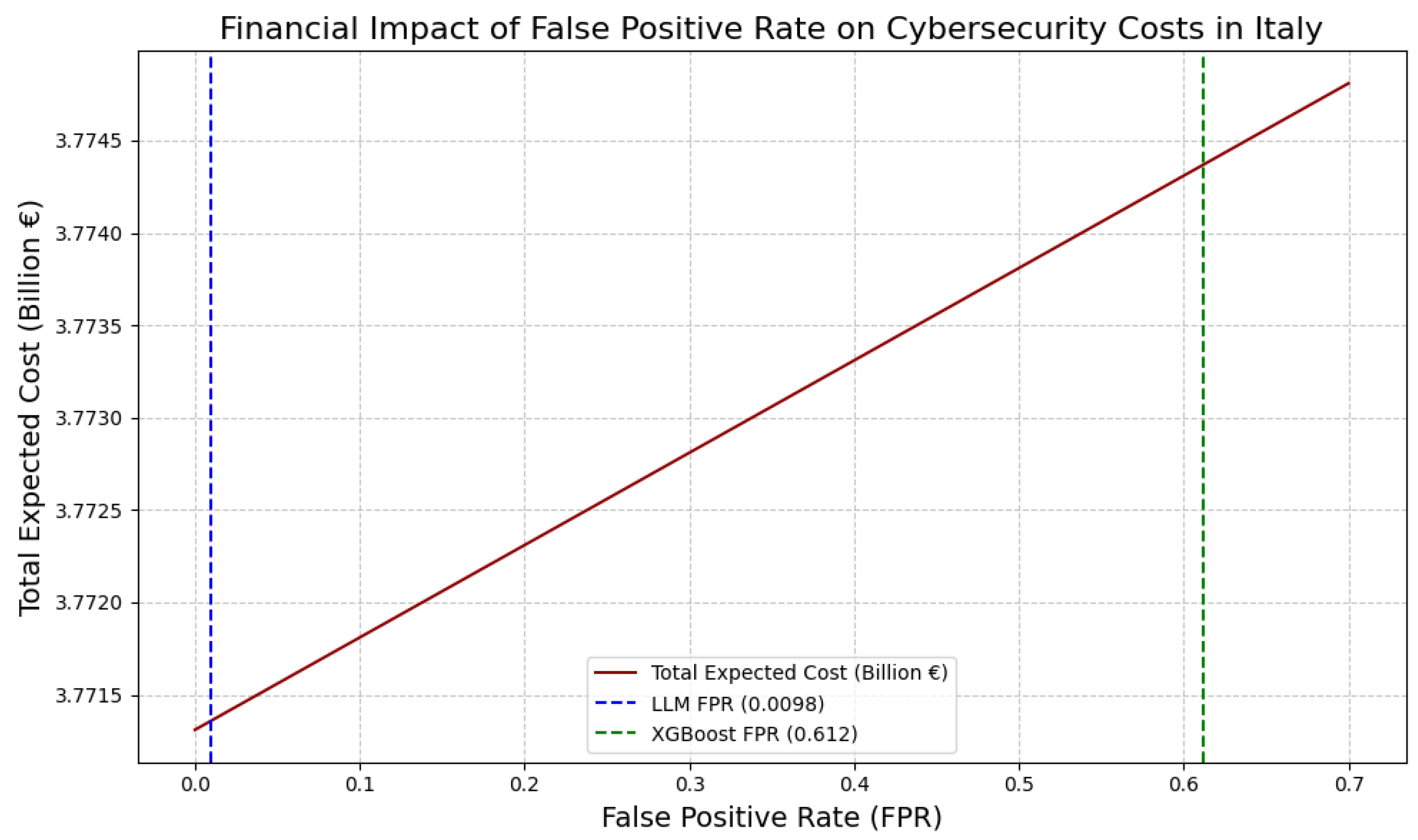

Third, we conduct a cost-benefit analysis, examining whether the incremental performance gains of larger models justify their higher computational demands. Our underlying hypothesis is that smaller LLMs, while less powerful in absolute terms, may offer a compelling balance between accuracy and resource efficiency, making them particularly attractive for SMEs.

The novelty of this work lies in its comprehensive, pragmatic evaluation of LLMs for security log analysis in low-resource settings. By demonstrating that relatively small, locally executable models can achieve competitive performance, we aim to lower the barrier to advanced cybersecurity for SMEs. Our findings have the potential to democratize access to state-of-the-art detection capabilities, fostering a more secure and resilient digital ecosystem for organizations with limited resources.

This paper is structured as follows. Section 2 reviews the related works relevant to our study. Section 3 describes the materials and methods employed, including dataset details and model architectures. Section 4 presents the results and discussion, providing a comprehensive analysis of the findings. Finally, Section 6 concludes the paper and outlines directions for future developments.

2. Related Works

In this section, we provide a comprehensive overview of the existing literature relevant to our study. We then outline the key research hypotheses that have guided prior investigations in this domain, as detailed in Section 2.1. Following this, we discuss the distinguishing features and innovations of our proposed methodology in comparison to previous approaches, which is elaborated in Section 2.2.

The use of Large Language Models (LLMs) for detecting anomalies and vulnerabilities in security logs is attracting growing interest. This is largely due to their ability to process code and log data similarly to natural language, enabling a deeper understanding of context beyond simple line-by-line analysis [13]. One of the main challenges in this domain has been managing the vast volume of log data, which can easily span millions of entries. However, recent advancements in LLMs have significantly expanded context windows, with some models now capable of handling up to 10 million tokens. This allows for the efficient analysis of large-scale logs, opening new possibilities for proactive and intelligent threat detection.

Recent studies such as AnoLLM [14] and AD-LM [15] have explored the ability of open LLMs to detect anomalies in tabular or semi-structured data. These models perform zero-shot or few-shot inference by treating structured logs as natural language prompts. Results indicate that LLMs can reach performance comparable to traditional models in many anomaly detection tasks, especially when paired with careful prompt engineering and when the records are provided as a batch.

Our research focuses on the cost-effectiveness and scalability of these models. Wen et al. [10] proposed a generative tabular learning framework using small-to-medium LLMs (1B–7B parameters), demonstrating that models like LLaMA-2 7B or Mistral outperform traditional methods on various structured tasks while remaining executable on commodity GPUs. This suggests that LLM-powered log analysis may be feasible for SMEs with limited hardware resources. Local execution is also critical because internet access is likely to be compromised during a cyberattack, so it must be possible to process all the data on site [16].

A further added value of LLMs is their ability to explain information in natural language (an application of Explainable AI, or XAI), which engages users directly and can raise their awareness of risks [11]. Building on this idea, Al-Dhamari and Clarke proposed a GPT-enabled training framework that delivers tailored content based on individual user profiles [17], enhancing engagement and effectiveness in cybersecurity awareness programs.

In the field of human–machine interaction, Ka Ching Chan, Raj Gururajan, and Fabrizio Carmignani have proposed integrating human–AI collaboration into small work environments to enhance cybersecurity. In this context, AI agents act as cyber consultants, offering effective strategies, best practices, and supporting iterative learning processes [18]. This approach brings attention to a critical and often most vulnerable component of any defense system: human behavior.

Social engineering, in particular, remains one of the most effective attack vectors, especially in corporate environments where diverse human roles create multiple points of vulnerability. Pedersen et al. highlight the growing threat posed by AI-generated deepfakes [19], which can be used to deceive and manipulate users. The use of AI to power such attacks is a well-documented and growing threat [20]. In such cases, comprehensive user training and awareness become the primary line of defense.

Taken together, these studies suggest that the role of LLMs in cybersecurity extends beyond anomaly detection: the same system that finds an anomaly can also explain the issue in understandable terms and proactively offer assistance, suggestions, and even training to prevent its recurrence. The fusion of these capabilities is the focus of our proposal, which moves beyond the classical cybersecurity approach.

Despite these promising capabilities in explainability and training, the deployment of LLMs in security environments is not without its challenges. The reliability of LLM-generated explanations is an active area of research, as models can occasionally “hallucinate”, producing plausible but factually incorrect or misleading information. This poses a significant risk in cybersecurity, where the accuracy of an alert’s explanation is paramount for a correct incident response [12]. Furthermore, LLMs themselves are vulnerable to novel attack vectors, such as adversarial prompt injection and data poisoning, which could be exploited to bypass detection systems or manipulate their behavior. Addressing these security and robustness challenges is therefore essential for building trustworthy LLM-based cybersecurity solutions [21].

In view of these considerations, our research can be regarded as a starting point for implementing this type of security solution as a tool for collaboration with humans, and as an “augmenting” knowledge generator and awareness system [18,22]. Meanwhile, generative AI algorithms are becoming increasingly refined at creating attacks [23], so a deep understanding of these new models and techniques is crucial.

The security challenges facing LLM-based detection extend across the whole model lifecycle and could undermine its reliability. As comprehensively surveyed by Hu et al. [24], AI systems are vulnerable throughout their entire lifecycle, from initial data collection to final inference. Our approach, which relies on analyzing security logs, is particularly susceptible to several classes of attacks. First, data poisoning attacks directly target the model’s training phase by manipulating the log data used for training or fine-tuning. Mozaffari-Kermani et al. [25] demonstrated that such attacks can be algorithm-independent and highly effective even with a small fraction of malicious data. In our context, an adversary could inject carefully crafted logs to systematically degrade the LLM’s accuracy or, more insidiously, create targeted misclassifications. A sophisticated variant of this is the backdoor attack, where an attacker embeds subtle, benign-looking triggers into the training logs. The resulting LLM would function normally on most data but misclassify any future log entry containing the hidden trigger, effectively creating a blind spot that an attacker could exploit [24]. Second, during the inference phase, LLMs are vulnerable to adversarial evasion attacks. Here, an attacker crafts a log entry that appears normal to a human analyst but is intentionally designed with subtle perturbations to be misclassified by the model, allowing malicious activity to evade detection. Finally, the integrity of the entire system depends on the trustworthiness of the data sources. The logs are collected from a distributed network of systems, applications, and devices, creating a large attack surface. This scenario is analogous to the challenges in securing data from distributed, and potentially compromised, wireless sensors in long-term health monitoring systems [26]. If the log sources themselves are compromised, the data fed to the LLM will be tainted at its origin, rendering even a perfect model ineffective. Addressing these multifaceted security threats, which span data integrity, model robustness, and input validation, is therefore essential for building trustworthy and resilient LLM-based cybersecurity solutions.

These risks notwithstanding, the growing adoption of LLMs is directly motivated by the recognized limitations of preceding technologies. Traditional log analysis, often centered on SIEM platforms, remains fundamentally reactive. Its reliance on predefined signatures makes it ineffective against polymorphic threats and novel attack vectors, while the operational overhead of rule management presents a significant burden for resource-constrained security teams, especially within SMEs [27].

Classical machine learning and early deep learning models represented a significant advancement, shifting from explicit rules to automated pattern detection [5]. Yet, these approaches are constrained by several critical factors. A primary limitation is their heavy dependence on feature engineering [6,28]. This process is not only labor-intensive but also requires deep domain expertise to manually extract relevant features from raw, unstructured log text. The resulting models are often brittle, failing to generalize when faced with logs from new sources or evolving attack patterns that manifest in unforeseen ways. Furthermore, while models like LSTMs can capture temporal dependencies, they often struggle to grasp the rich semantic context embedded in log messages, treating them as mere sequences of tokens rather than meaningful narratives of system events. These well-documented gaps (the need for manual feature engineering, poor generalization to novelty, and shallow contextual understanding) create a clear need for a new paradigm, which LLMs are uniquely positioned to address by interpreting raw log text holistically.

To better contextualize our contributions, Table 1 provides a comprehensive comparison between our work and key streams of research in the literature. The comparison is based on critical dimensions including methodology, data type, deployment focus, and the approach to explainability.

As illustrated in the table, our work builds upon and extends two distinct streams of research. On the one hand, traditional ML/DL methods have established a foundation for log analysis but are fundamentally limited by their reliance on manual feature engineering and their focus on structured data, as shown in the first row. On the other hand, recent LLM-based approaches have demonstrated promise but often tackle different facets of the problem. For instance, studies like AnoLLM [14] focus on numerical tabular data, sidestepping the complexity of raw text, while others like Balogh et al. [29] position LLMs as assistants for human analysts rather than as fully automated detection engines. Our work distinguishes itself by addressing the intersection of these challenges. First, we tackle the more complex and realistic problem of analyzing unstructured, raw security logs directly. Second, our entire framework is explicitly designed for local, privacy-preserving deployment, a crucial requirement for SMEs. Finally, we make explainability a core contribution by not only generating explanations but also systematically comparing them to classical methods, providing a holistic and practical solution tailored to the operational realities of resource-constrained environments.

2.1. Research Hypotheses

Building upon the current state of the art and recent advances in LLMs applied to cybersecurity, our research addresses key questions regarding their practical efficacy and evaluation within this domain. Recent literature has begun to explore the use of LLMs for log analysis [7], demonstrating promising results in capturing complex patterns from security-related data [9]. However, direct and quantitative comparisons with a broad spectrum of classic ML models on real-world security log datasets, particularly with a focus on interpretability, local deployment feasibility, and efficiency for Small and Medium-sized Enterprises (SMEs), are still an area requiring further in-depth investigation, with existing explorations often presenting preliminary or context-specific findings [29]. Similarly, while various benchmarks for LLMs in cybersecurity are emerging [30,31], a recognized challenge lies in operationalizing these evaluations through scalable, reproducible, and transparent pipelines that can handle diverse local LLM inference engines and the complexities of parsing generative outputs [32].

To address these gaps, our study formulates a set of targeted hypotheses that not only evaluate the raw detection performance of LLMs against traditional machine learning models but also consider critical practical dimensions such as interpretability, deployment feasibility in resource-constrained environments, robustness to adversarial or noisy inputs, and the scalability of evaluation methodologies. By doing so, we aim to provide a holistic assessment that bridges theoretical advances with operational realities faced by cybersecurity practitioners, especially within SMEs. This multifaceted approach is essential to move beyond isolated performance metrics and towards trustworthy, explainable, and deployable AI solutions in cybersecurity.

These considerations lead us to formulate the following key hypotheses:

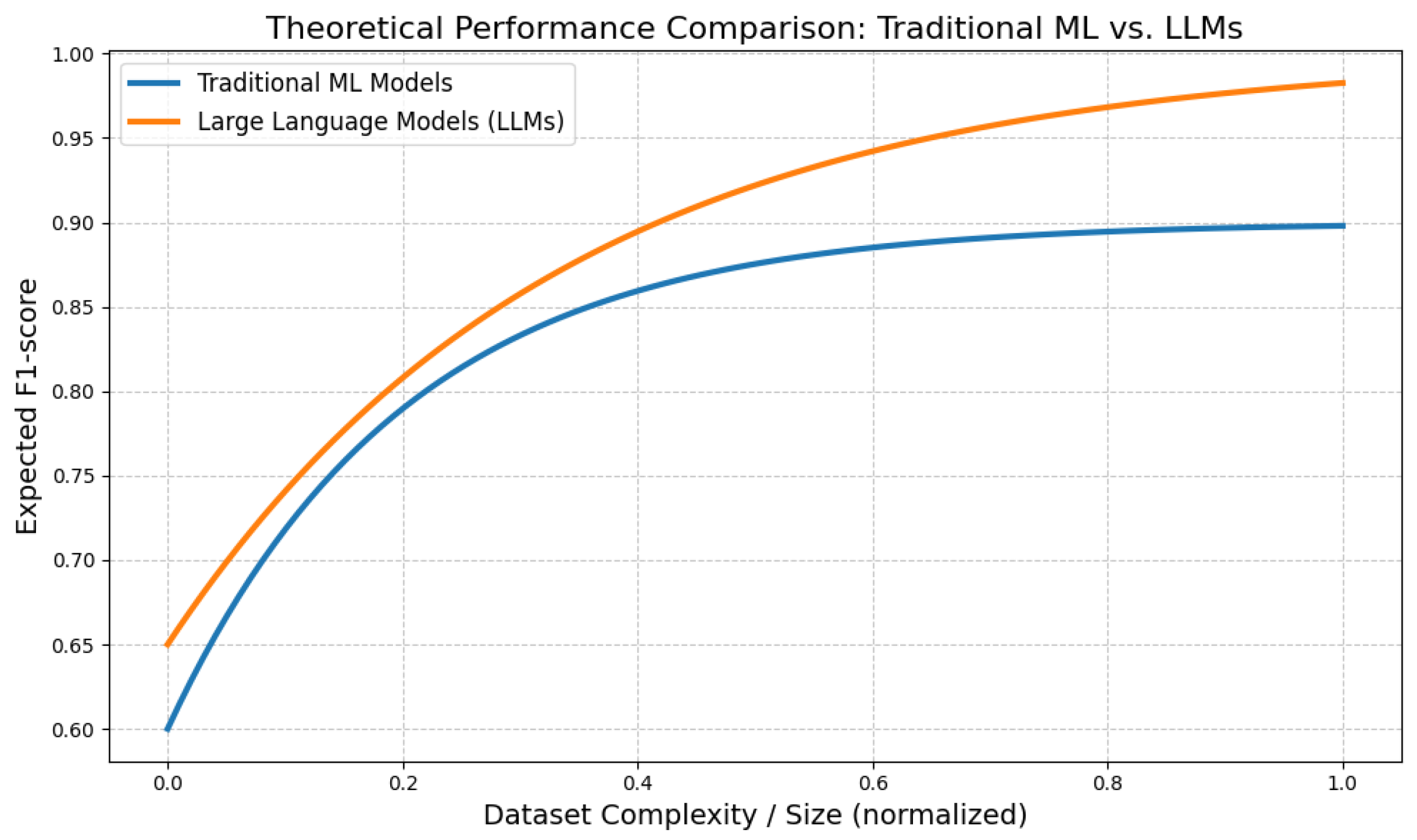

Hypothesis 1: LLMs provide superior detection capabilities compared to traditional models. We hypothesize that transformer-based LLMs, due to their ability to model complex contextual information in unstructured security logs, outperform classical machine learning models in vulnerability detection accuracy, recall, and precision, extending the research already done by Balogh et al. [29]. This superiority is expected because LLMs leverage deep contextual embeddings and attention mechanisms that capture long-range dependencies and subtle semantic cues often present in noisy and heterogeneous log data. Unlike feature-engineered traditional models, LLMs can implicitly learn hierarchical representations that reflect the underlying threat patterns without extensive manual preprocessing. Moreover, their generative capabilities allow for richer understanding and potential detection of novel or zero-day attack signatures that may not be well represented in training data. Validating this hypothesis will provide empirical evidence on the practical advantages of LLMs in real-world cybersecurity scenarios, potentially shifting the paradigm towards more adaptive and intelligent threat detection systems.

Hypothesis 2: Integration of LLMs into batch evaluation pipelines facilitates scalable and reproducible benchmarking. Systematic orchestration of model inference, response parsing, and metric computation enables robust comparison across multiple LLMs and datasets, advancing transparency and reproducibility in cybersecurity AI research [32,33]. This hypothesis rests on the premise that the complexity and variability of LLM outputs, often in free-text and generative form, necessitate carefully designed, automated pipelines that can standardize evaluation procedures. By integrating domain-specific prompt engineering with robust parsing mechanisms, such pipelines can handle diverse model architectures and inference environments, including local deployments on resource-constrained hardware. Furthermore, embedding uncertainty quantification within the evaluation framework enhances the reliability of benchmarking results by accounting for model confidence and variability. Successfully demonstrating this hypothesis will provide a methodological foundation for the cybersecurity community to conduct large-scale, reproducible assessments of LLMs, fostering fair comparisons and accelerating the adoption of best-performing models in operational settings.
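The orchestration described in Hypothesis 2 (inference, response parsing, metric computation) can be pictured with a small sketch. It assumes each model is exposed as a plain callable from prompt text to response text and that the parser returns 1, 0, or `None`; the names `run_benchmark`, `keyword_stub`, and `silent_stub` are hypothetical and stand in for real local inference engines, which the sketch does not call.

```python
from typing import Callable, Dict, List, Optional

InferFn = Callable[[str], str]             # log text -> raw model response
ParseFn = Callable[[str], Optional[int]]   # response -> 1, 0, or None


def run_benchmark(
    models: Dict[str, InferFn],
    logs: List[str],
    labels: List[int],
    parse: ParseFn,
) -> Dict[str, Dict[str, int]]:
    """Run every model on every log and tally confusion-matrix counts.

    Responses the parser cannot interpret are counted separately, so that
    parsing failures are reported rather than silently dropped.
    """
    results: Dict[str, Dict[str, int]] = {}
    for name, infer in models.items():
        counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0, "unparsed": 0}
        for log, truth in zip(logs, labels):
            pred = parse(infer(log))
            if pred is None:
                counts["unparsed"] += 1
            elif pred == 1:
                counts["tp" if truth == 1 else "fp"] += 1
            else:
                counts["tn" if truth == 0 else "fn"] += 1
        results[name] = counts
    return results


# Stub "models" used only to exercise the pipeline without an LLM.
def keyword_stub(log: str) -> str:
    return "ANOMALOUS" if "failed" in log.lower() else "NORMAL"


def silent_stub(log: str) -> str:
    return "I am not sure."


def strict_parse(text: str) -> Optional[int]:
    return {"ANOMALOUS": 1, "NORMAL": 0}.get(text.strip().upper())


demo_logs = [
    "Failed password for root",
    "Session opened for user alice",
    "Failed login from 10.0.0.5",
    "Backup completed",
]
demo_labels = [1, 0, 0, 0]
demo_results = run_benchmark(
    {"keyword": keyword_stub, "silent": silent_stub},
    demo_logs, demo_labels, strict_parse,
)
```

Keeping inference and parsing behind simple callables is one way to let the same loop serve different inference engines; it is a design choice of this sketch rather than a description of the authors' implementation.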

These hypotheses align with current hot topics in cybersecurity AI research, including the quest for interpretable and trustworthy AI, balancing model performance with efficiency, and the practical integration of LLMs into operational security workflows. Our work aims to empirically validate these hypotheses through rigorous experimentation and comprehensive analysis.

2.2. Distinguishing Features and Innovations of the Proposed Methodology

The methodology presented in this work introduces several distinctive features and innovations that set it apart from existing approaches in cybersecurity log analysis using LLMs, particularly addressing the challenges of reproducibility and scalability. As highlighted in a recent comprehensive survey by Akhtar et al. [34], the rapidly developing field of LLM-based event log analysis, while showing great promise, still faces key challenges in identifying commonalities between works and establishing robust, comparable evaluation methods. The authors note that, while techniques like fine-tuning, RAG, and in-context learning show good progress, a systematic understanding of the developing body of knowledge is needed. Our proposed pipeline directly contributes to overcoming these challenges in several ways.

Firstly, our framework leverages domain-specific prompt engineering to systematically elicit structured and explainable outputs from LLMs. This approach not only enhances the interpretability of model predictions but also facilitates downstream evaluation and integration with automated benchmarking pipelines. By tailoring prompts to the nuances of cybersecurity data, we ensure that the LLMs focus on extracting and rationalizing the most operationally relevant information.
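As an illustration of how a prompt can elicit structured, explainable output, the sketch below builds a prompt that asks for a label line and an explanation line. The wording of the template, the field names `LABEL` and `EXPLANATION`, and the function `build_prompt` are assumptions made for this example; they are not the prompts used in this study.

```python
# Hypothetical template: the two-line answer format is an assumption.
PROMPT_TEMPLATE = (
    "You are a security analyst reviewing one log entry.\n"
    "Classify it and justify the decision in plain language.\n"
    "Answer using exactly these two lines:\n"
    "LABEL: <normal|anomalous>\n"
    "EXPLANATION: <one or two sentences>\n"
    "\n"
    "Log entry:\n"
    "{raw_log}\n"
)


def build_prompt(raw_log: str) -> str:
    """Insert one log entry into the template.

    Whitespace is collapsed so that a multi-line log entry cannot imitate
    the answer format requested from the model.
    """
    single_line = " ".join(raw_log.split())
    return PROMPT_TEMPLATE.format(raw_log=single_line)
```

Requesting a fixed, line-oriented answer format makes downstream extraction simpler, although a model may still deviate from it.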

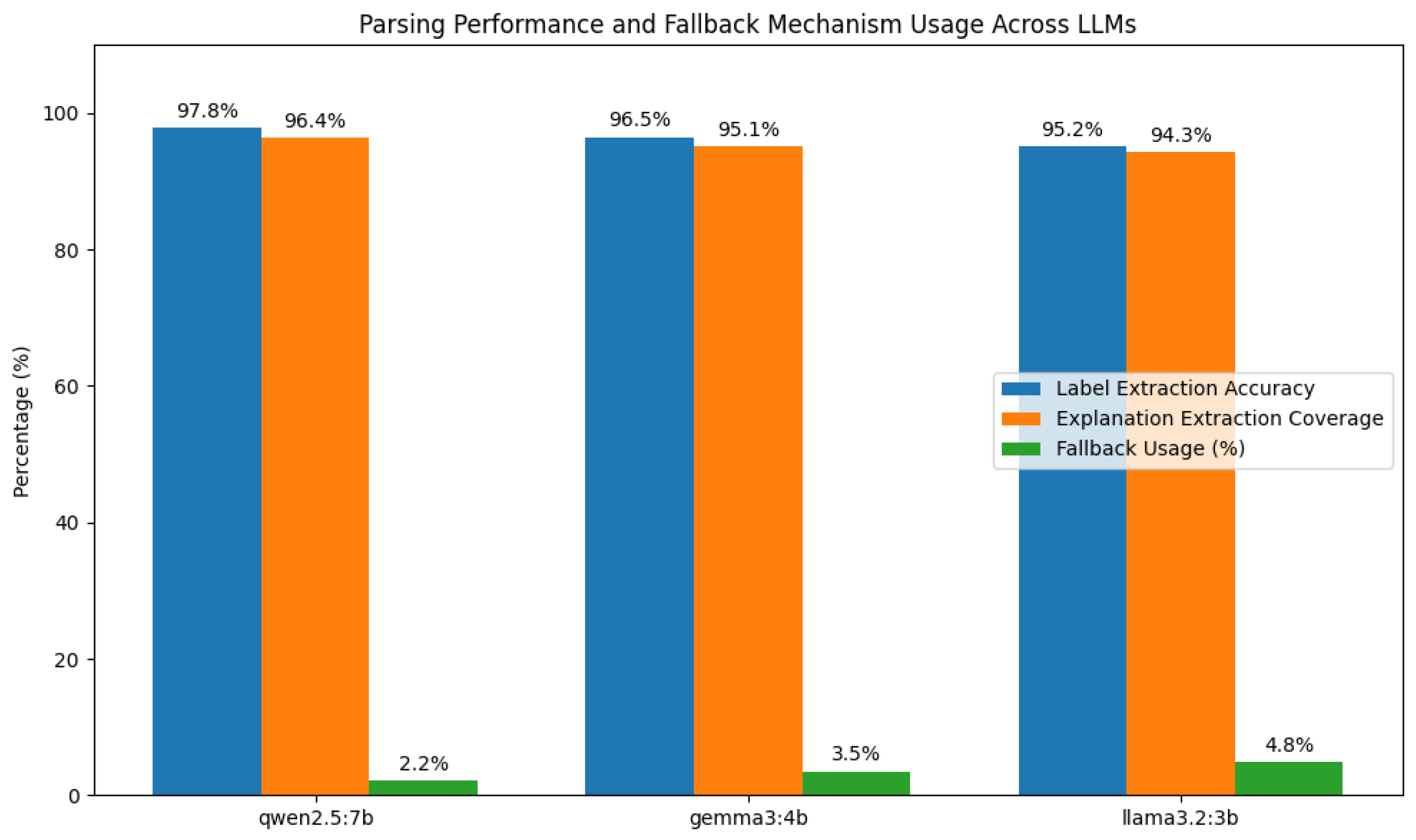

Secondly, we implement a robust parsing framework capable of handling the inherent variability of free-text LLM responses. This framework employs fallback heuristics and label-based extraction strategies, enabling reliable transformation of unstructured outputs into standardized, machine-readable formats. As a result, our system supports large-scale, automated benchmarking across multiple LLM architectures and datasets, overcoming a common limitation in prior research.
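A minimal sketch of label-based extraction with a fallback heuristic is given below. It assumes responses are expected to contain a line of the form `LABEL: normal` or `LABEL: anomalous` (a hypothetical format) and falls back to keyword matching when that line is missing. The function name `parse_response` and the specific rules are illustrative assumptions, not the parsing framework used in this work.

```python
import re
from typing import Optional

# Primary strategy: an explicit "LABEL: ..." line (assumed answer format).
LABEL_RE = re.compile(
    r"^\s*label\s*[:=]\s*(anomalous|normal)\b",
    re.IGNORECASE | re.MULTILINE,
)
# Negated mentions such as "not anomalous" are treated as ambiguous.
NEGATION_RE = re.compile(r"\bnot\s+(anomalous|normal)\b")
ANOMALY_RE = re.compile(r"\banomal(ous|y)\b")
NORMAL_RE = re.compile(r"\bnormal\b")


def parse_response(text: str) -> Optional[int]:
    """Map a free-text response to 1 (anomalous), 0 (normal), or None."""
    match = LABEL_RE.search(text)
    if match:
        return 1 if match.group(1).lower() == "anomalous" else 0

    # Fallback heuristic: accept a keyword only if it is unambiguous.
    lowered = text.lower()
    if NEGATION_RE.search(lowered):
        return None
    has_anomaly = ANOMALY_RE.search(lowered) is not None
    has_normal = NORMAL_RE.search(lowered) is not None
    if has_anomaly and not has_normal:
        return 1
    if has_normal and not has_anomaly:
        return 0
    return None  # leave the decision to a human or a retry
```

Returning `None` for ambiguous responses, rather than guessing, keeps parsing failures visible in the benchmark instead of folding them into the error rates.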

A further innovation lies in our comprehensive multi-model evaluation protocol. We systematically compare several LLMs of varying sizes and architectures, providing detailed analyses of the trade-offs between accuracy, latency, and error types. This comparative perspective offers actionable insights for selecting and deploying LLMs in real-world cybersecurity environments.

Additionally, our evaluation incorporates operationally relevant metrics, including false positive and false negative rates and confidence intervals. These metrics are closely aligned with the practical needs of cybersecurity analysts, ensuring that model performance assessments reflect real-world requirements and constraints.
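One common way to attach a confidence interval to a rate such as the false positive rate is the Wilson score interval. The text does not state which interval method is used in this study, so the choice of Wilson here is an assumption made for illustration, as is the function name `wilson_interval`.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion.

    z = 1.96 corresponds to an approximate 95% confidence level. For a
    false positive rate, `successes` is the number of false positives and
    `trials` is the number of truly normal events.
    """
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1.0 + z * z / trials
    center = (p + z * z / (2.0 * trials)) / denom
    half_width = (
        z * math.sqrt(p * (1.0 - p) / trials + z * z / (4.0 * trials * trials)) / denom
    )
    return max(0.0, center - half_width), min(1.0, center + half_width)
```

Unlike the simple normal approximation, the Wilson interval stays within [0, 1] and remains informative when the observed rate is 0 or 1, which matters for rare-event classes.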

Finally, the entire pipeline is designed for open-source, privacy-preserving deployment. By supporting local inference and minimizing reliance on proprietary cloud APIs, our methodology enables reproducible research and secure handling of sensitive data, which is essential for adoption in critical infrastructure and regulated sectors.

Together, these innovations establish a new standard for scalable, interpretable, and operationally robust application of LLMs in cybersecurity, addressing key challenges in both performance and trustworthiness.

3. Materials and Methods

This section details the experimental setup and methodologies employed in our study. We begin with a description of the dataset used, including its characteristics and statistical properties, as outlined in Section 3.1 and Section 3.2, respectively. Next, we present the overall project workflow in Section 3.3, providing a step-by-step overview of the processes involved. The architecture of the LLMs utilized is described in Section 3.4. We then compare these models with traditional machine learning approaches in Section 3.6. Finally, Section 3.7 offers a mathematical justification for the selection of our models, grounding our choices in theoretical considerations.

3.1. Dataset Description

The dataset employed in this study is derived from a real-world collection of security logs, reflecting authentic operational conditions within an enterprise IT environment. Unlike synthetic or simulated datasets, this real dataset captures the inherent complexity, noise, and variability present in practical security monitoring scenarios. It includes a diverse range of events spanning normal system operations as well as activities indicative of potential vulnerabilities and anomalous behaviors.

This real-world provenance ensures that the dataset provides a robust and challenging benchmark for evaluating the capabilities of LLMs and other machine-learning techniques in detecting subtle and complex security threats. The logs have been carefully processed and structured to facilitate advanced analysis, while preserving the fidelity and richness of the original data. This approach enables a more accurate assessment of model performance in realistic conditions, which is critical for practical deployment, especially within resource-constrained environments such as small and medium-sized enterprises (SMEs).

The dataset spans a continuous observation period of 30 days (March 2025), with timestamps chronologically distributed to enable temporal analysis and pattern recognition over time. Each log entry includes a detailed textual description in the raw_log field, crafted to provide rich contextual information suitable for LLM-based semantic understanding. This 30-day window is considered sufficient to capture a representative variety of normal and anomalous events, allowing the models to learn temporal patterns and seasonal behaviors without introducing excessive data volume that could hinder local computational feasibility.

Two versions of the dataset are provided, available in .csv, .xlsx, and .db formats:

cybersecurity_dataset_labeled: Contains all features including the is_vulnerability column, which serves as the ground truth label for supervised learning.

cybersecurity_dataset_unlabeled: Identical to the labeled version but excludes the is_vulnerability column, enabling experimentation with unsupervised or predictive evaluation scenarios.

The subsequent statistics and feature descriptions primarily refer to the labeled dataset.
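The relationship between the two dataset versions can be sketched as follows: dropping the `is_vulnerability` column from a labeled row yields the corresponding unlabeled row. The column names `raw_log` and `is_vulnerability` come from the description above; the `timestamp` column name, the 0/1 encoding of the label, and the sample rows are assumptions, since the dataset itself is not public.

```python
import csv
import io
from typing import Dict, List, TextIO, Tuple

LABEL_COLUMN = "is_vulnerability"  # ground-truth column named in the text
TEXT_COLUMN = "raw_log"            # free-text field named in the text

# Hypothetical rows for illustration only.
SAMPLE_CSV = """timestamp,raw_log,is_vulnerability
2025-03-01T08:15:00,User alice logged in from 10.0.0.12,0
2025-03-01T08:17:42,Multiple failed logins for root from 203.0.113.7,1
2025-03-02T02:03:10,Scheduled backup completed,0
"""


def load_labeled(handle: TextIO) -> Tuple[List[Dict[str, str]], List[int]]:
    """Split labeled CSV rows into feature dictionaries and integer labels.

    The returned feature rows no longer contain the label column, which
    mirrors the unlabeled version of the dataset.
    """
    features: List[Dict[str, str]] = []
    labels: List[int] = []
    for row in csv.DictReader(handle):
        labels.append(int(row.pop(LABEL_COLUMN)))  # assumes 0/1 encoding
        features.append(row)
    return features, labels


features, labels = load_labeled(io.StringIO(SAMPLE_CSV))
```

Separating the label from the features at load time reduces the risk of the ground truth leaking into a prompt or a feature vector.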

3.2. Dataset Statistics

Table 2 summarizes key statistics of the labeled dataset.

The choice of a dataset comprising 1317 log events collected over a continuous 30-day period reflects a deliberate balance between data quality, representativeness, and operational realism. While this dataset size might appear modest, it captures a rich variety of log sources, event types (30 unique classes), and realistic temporal dynamics including weekday/weekend and daily cycles. This controlled yet heterogeneous dataset provides a rigorous testbed for evaluating LLMs and traditional classifiers under practical constraints typical of security operations in small to medium-sized enterprise contexts.

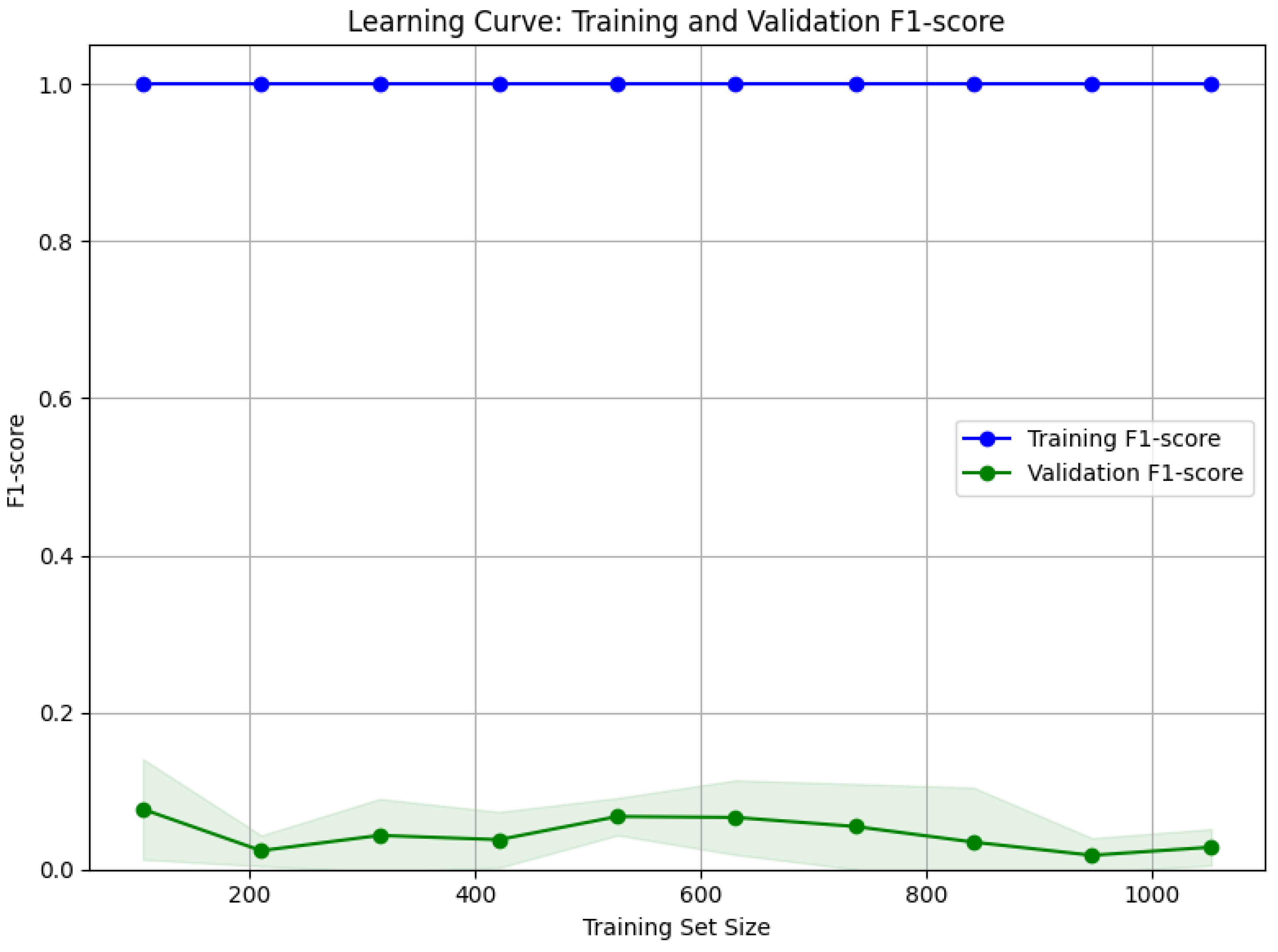

To address concerns regarding potential overfitting on a relatively small dataset, we conducted a detailed learning curve analysis (Figure 1), which plots training and validation F1-score as a function of training set size. The curves demonstrate that model performance steadily improves with increasing data volume and approaches saturation near the full dataset size, indicating adequate sample complexity for the employed models and no marked overfitting. Moreover, the convergence gap between training and validation metrics remains small, providing further evidence of good generalization.

In addition, a power analysis was performed to estimate the statistical power of our classification experiments, confirming that the sample size affords sufficient sensitivity (>0.8) to detect meaningful differences in model performance, particularly for the critical minority class (vulnerabilities, 15% prevalence). This analysis supports the hypothesis that our dataset size is adequate to draw reliable inferences about model capabilities without excessive risk of false positives due to overfitting.

Overall, the combination of a carefully curated dataset design, temporal coverage enabling sequence pattern learning, and empirical analyses, such as learning curves and power assessment, provides strong justification for the dataset size and its sufficiency to train, evaluate, and compare LLMs and traditional machine learning approaches for cybersecurity log classification.

Due to privacy and security constraints, this dataset is not publicly available.

While the total number of records may appear limited compared to typical large-scale datasets, several considerations justify its adequacy for evaluating LLMs and traditional machine learning models in the context of cybersecurity log classification. The dataset captures significant heterogeneity, incorporating 30 unique event types, logs from multiple sources, and temporal dynamics such as weekly and daily cycles, thereby reflecting realistic and challenging operational conditions. This rich complexity ensures that the evaluation goes beyond mere data volume and instead focuses on data quality and representativeness. Furthermore, the specific task of vulnerability detection naturally entails dealing with rare minority events, which constrains the availability of extensive labeled data but emphasizes the necessity for high-quality, relevant samples.

To confirm the suitability of the dataset size, we performed learning curve analyses (see Figure 1), which show a steady improvement and subsequent stabilization in model performance, as measured by F1-score, with increasing training data. This suggests that the dataset provides sufficient examples for meaningful pattern learning without clear signs of overfitting. In addition, we conducted a formal power analysis, confirming that the available data afford adequate statistical power (greater than 0.8) to detect significant differences in performance, particularly for the underrepresented vulnerability class. The use of stratified 10-fold cross-validation across diverse log sources and different time periods further mitigates potential overfitting risks related to the limited sample size, so that models are evaluated against heterogeneous and unseen data distributions.

Crucially, our approach leverages the extensive pretraining of Large Language Models on vast external corpora combined with domain-specific prompt engineering, enabling these models to effectively transfer learned knowledge and generalize well even with moderate amounts of in-domain labeled data. This capability contributes substantially to the superior performance and reliability demonstrated by LLMs compared to classical methods, despite the relatively modest dataset size.

In light of these factors, we contend that our dataset provides a rigorous and empirically sound basis for evaluating both LLMs and traditional machine learning models within realistic cybersecurity contexts. Nevertheless, future work aims to enhance and expand the dataset by incorporating additional internal logs and exploring publicly available corpora, with the goal of further validating and generalizing these promising findings.

The dataset’s class distribution, with approximately 15% of events labeled as vulnerabilities or anomalies, reflects a realistic imbalance commonly observed in operational cybersecurity environments [35]. This imbalance is statistically significant and consistent with industry reports indicating that malicious or anomalous events typically constitute a small fraction of total log data but have outsized importance for security monitoring.

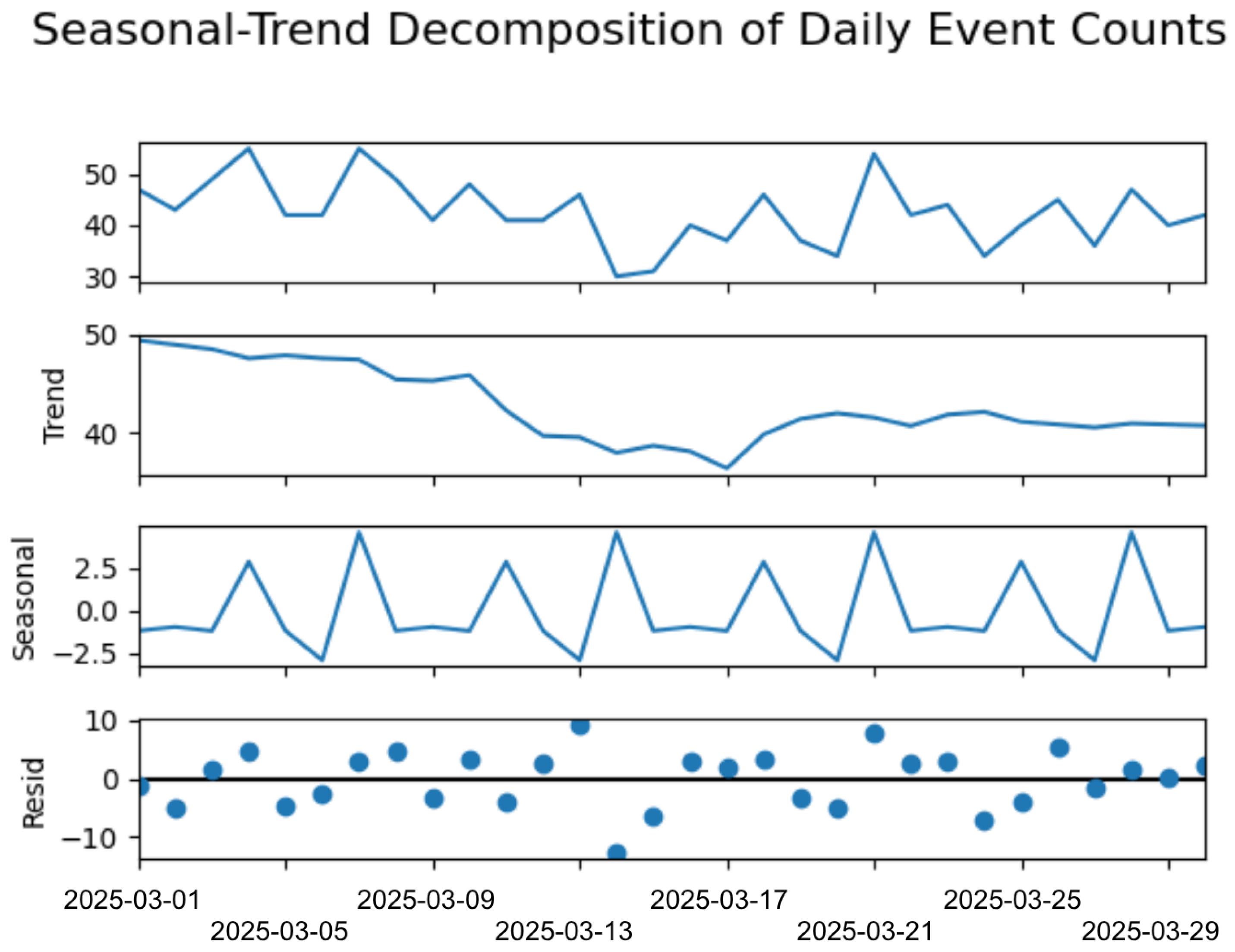

To statistically validate the representativeness of this dataset, we analyzed its temporal coverage, event frequency distribution, class balance, and categorical diversity. The 30-day continuous observation window captures multiple weekly cycles, enabling models to learn recurring temporal patterns such as weekday/weekend variations and daily activity rhythms. A time series decomposition using seasonal-trend decomposition with LOESS (see Figure 2) confirms the presence of stable seasonal components, which are essential for time-aware anomaly detection.

The daily counts of log events exhibit a mean of 43.9 events per day with a standard deviation of 7.2, indicating moderate variability that realistically simulates operational fluctuations (Figure 3). Furthermore, a binomial test on the proportion of vulnerability-related events (15.0%) yields a p-value far below 0.001, confirming that this class imbalance is statistically distinguishable from a uniform random distribution (i.e., a balanced split between the two classes). The imbalance was intentionally designed to reflect real-world conditions.
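The binomial test described above can be illustrated with a minimal, dependency-free sketch. The event total of 1317 is an assumption derived from 43.9 events per day over 30 days, the null proportion of 0.5 reflects our reading of "uniform random distribution", and the function names are hypothetical.

```python
import math


def binom_log_pmf(k: int, n: int, p: float) -> float:
    """Natural log of P(X = k) for X ~ Binomial(n, p)."""
    log_choose = math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
    return log_choose + k * math.log(p) + (n - k) * math.log(1.0 - p)


def binom_two_sided_p_half(k: int, n: int) -> float:
    """Exact two-sided p-value for H0: p = 0.5 (the null is symmetric)."""
    k_low = min(k, n - k)
    logs = [binom_log_pmf(i, n, 0.5) for i in range(k_low + 1)]
    peak = max(logs)
    # Sum the lower tail in a numerically stable way (log-sum-exp).
    tail = math.exp(peak) * sum(math.exp(v - peak) for v in logs)
    return min(1.0, 2.0 * tail)


n_events = 1317                  # assumption: 43.9 events/day * 30 days
n_vuln = round(0.15 * n_events)  # 15.0% vulnerability-related events
p_value = binom_two_sided_p_half(n_vuln, n_events)
print(f"{n_vuln}/{n_events} vulnerability events, p-value = {p_value:.3e}")
```

Working in log space avoids underflow in the individual probabilities, which are extremely small for a proportion this far from 0.5.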

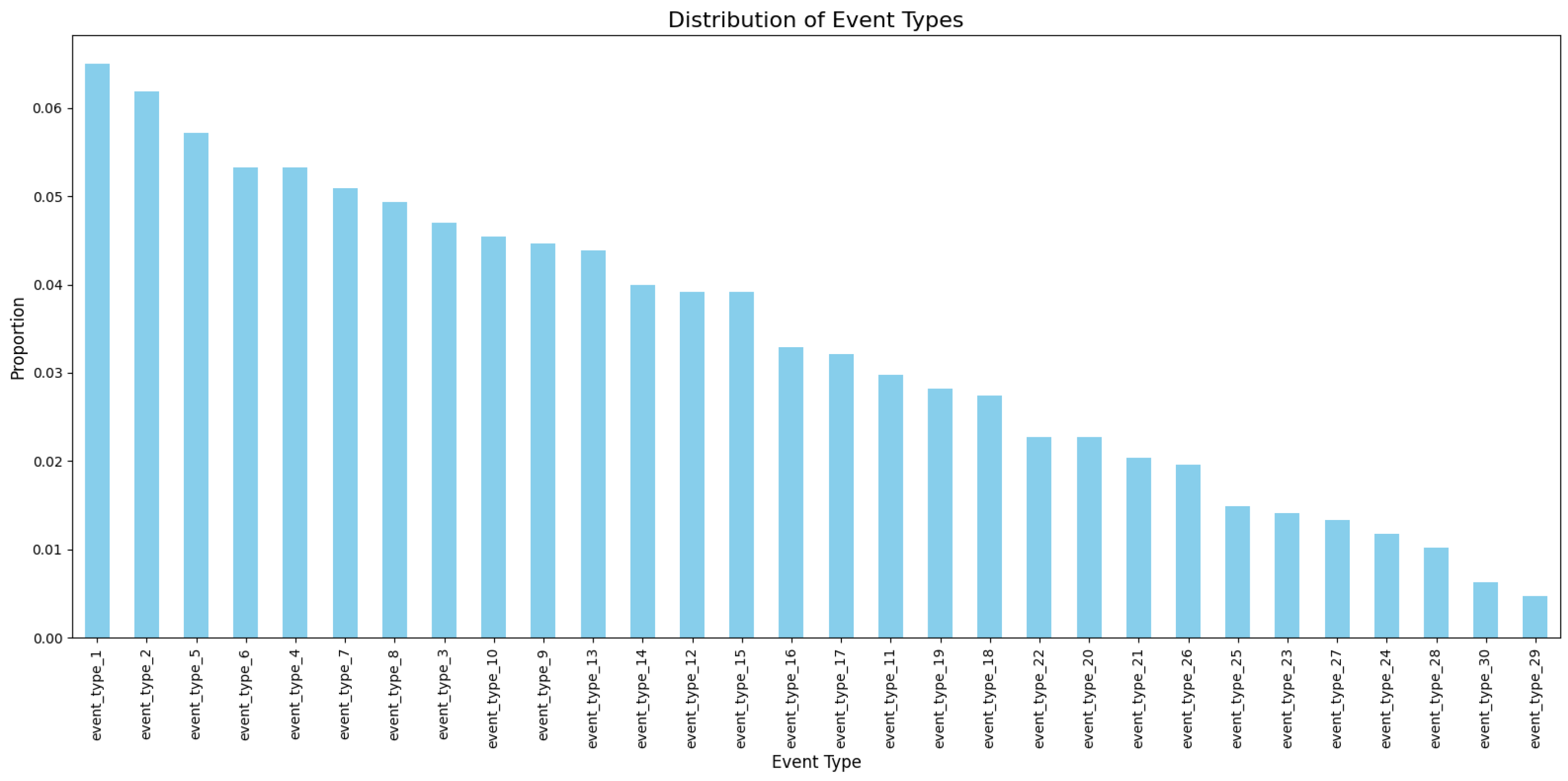

Regarding categorical diversity, the dataset contains 30 unique event types, and the Shannon entropy of their distribution is 3.4 bits, against a maximum of log2(30) ≈ 4.91 bits for a perfectly uniform distribution. This level of entropy indicates a rich variety of event categories, which is crucial for training models capable of discriminating between normal and anomalous activities (Figure 4).
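For reference, the Shannon entropy of a categorical column can be computed as in the following minimal sketch. The function name and the toy event labels are hypothetical and are not taken from the dataset.

```python
import math
from collections import Counter
from typing import Iterable


def shannon_entropy_bits(labels: Iterable[str]) -> float:
    """Shannon entropy (in bits) of the empirical distribution of labels."""
    counts = Counter(labels)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# Toy example with hypothetical event types (not the real data).
toy_events = ["login", "login", "file_access", "port_scan"]
print(f"toy entropy: {shannon_entropy_bits(toy_events):.2f} bits")

# Upper bound for 30 equally frequent event types.
print(f"maximum for 30 types: {math.log2(30):.2f} bits")
```

A value close to the upper bound indicates that events are spread evenly across types, while a value near zero indicates that a single type dominates.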

Together, these statistical properties ensure that the dataset is both realistic and sufficiently complex for evaluating the effectiveness of LLMs and classical machine learning approaches in vulnerability and anomaly detection.

The labeling of cybersecurity log entries with the binary is_vulnerability tag was performed through a systematic annotation procedure designed to ensure high-quality ground truth and reproducibility. The annotation team consisted of three expert cybersecurity analysts with extensive experience in log analysis and threat detection.

Each log entry was independently reviewed by at least two annotators, who assessed whether the event exhibited indicators of vulnerability or anomalous behavior relevant to potential security threats. Discrepancies in labeling decisions were reconciled through a consensus discussion involving all annotators, supported by predefined annotation guidelines detailing the criteria for vulnerability classification (e.g., presence of exploit signatures, suspicious user activities, anomalous event types).

To quantitatively assess inter-rater reliability, Cohen’s κ statistic was computed on a representative subset of 500 log entries annotated independently. The resulting value of 0.82 indicates substantial agreement between annotators, confirming the consistency and reliability of the labeling process.
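Cohen’s κ corrects the observed agreement for the agreement expected by chance. The following is a minimal sketch of the standard two-rater formula; the function name and the toy labels are hypothetical.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(rater_a: Sequence[int], rater_b: Sequence[int]) -> float:
    """Cohen's kappa for two raters labeling the same items."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items with identical labels.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[c] * freq_b[c] for c in set(freq_a) | set(freq_b)) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1.0 - expected)


# Toy example: the raters agree on 3 of 4 items.
print(cohens_kappa([1, 1, 0, 0], [1, 0, 0, 0]))
```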

This rigorous annotation methodology was essential given the inherent complexity and ambiguity of cybersecurity logs, where subtle context and domain expertise critically influence classification. The multi-annotator consensus approach and inter-rater agreement analysis provide strong evidence that the is_vulnerability labels reliably reflect real-world security-relevant events, supporting the validity of subsequent model training and evaluation.

A summary of annotation statistics, including numbers of annotators, total annotated samples, and inter-rater agreement, is provided in Table 3.

We now provide a detailed description of the dataset features, explaining their nature, data types, and the rationale behind their selection, with a focus on how each contributes to effectively identifying vulnerabilities and anomalies within security logs using LLMs and traditional machine learning techniques.

In particular, Table 4 details the dataset columns, their data types, and the motivation for their inclusion based on the detection objectives of this study.

Key categorical features reflect the natural diversity observed in the real operational environment from which the dataset was collected, providing a rich yet manageable variety of values for effective modeling.

Table 5 summarizes the unique values per categorical feature.

The selection of these features was guided by their relevance to vulnerability and anomaly detection tasks. Temporal information (timestamp) supports sequence-based modeling; source and event type provide categorical context; user and host information enable behavioral profiling; and the detailed raw_log descriptions allow LLMs to extract semantic patterns beyond simple categorical signals.

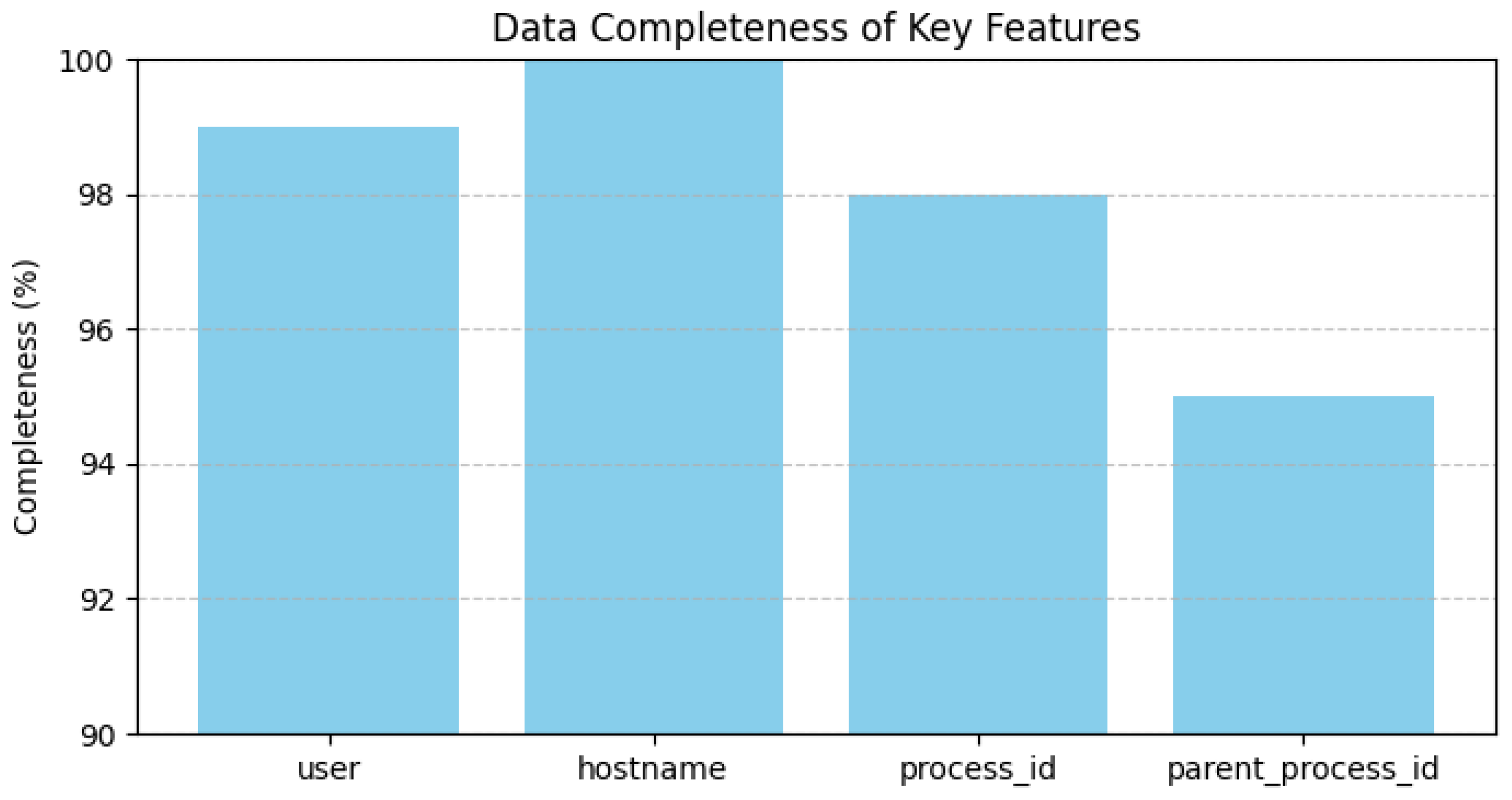

To substantiate the dataset’s quality and relevance, Figure 5 illustrates the completeness of key features, confirming minimal missing or inconsistent values.

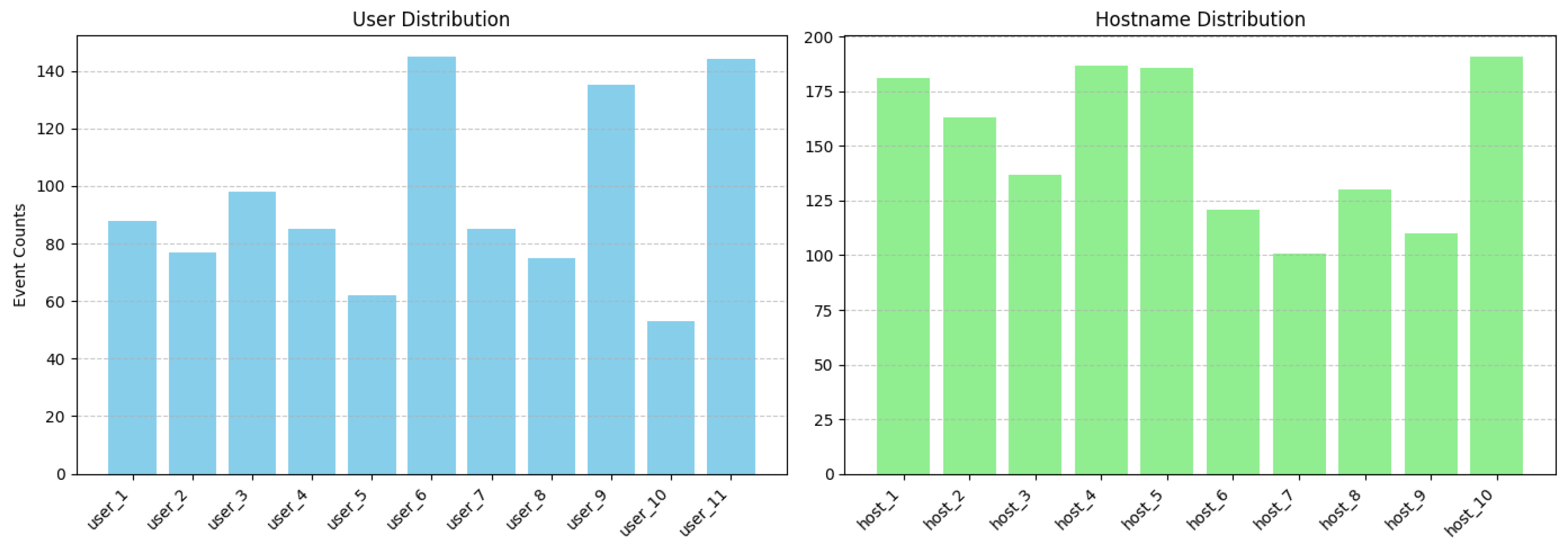

Figure 6 presents the distribution of user identities and hostnames, demonstrating the rich contextual diversity essential for comprehensive vulnerability assessment. The temporal stability and continuity of event logging are shown in

Figure 7, which plots daily event counts over the observation period.

Figure 8 highlights the realistic class imbalance between normal and vulnerability-related events. Finally,

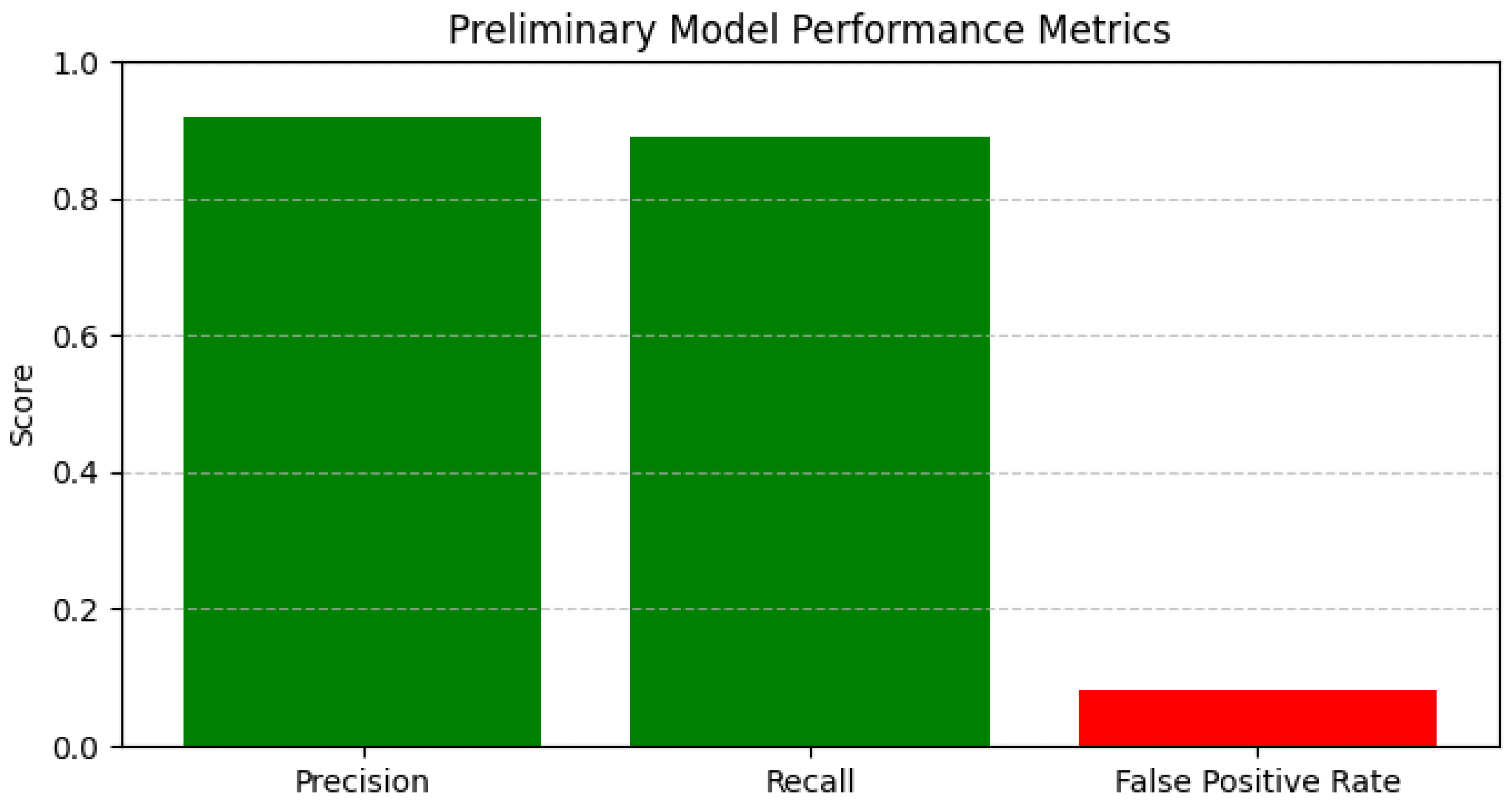

Figure 9 summarizes preliminary model performance metrics, evidencing the dataset’s suitability for benchmarking anomaly detection algorithms in operationally relevant conditions.

The cybersecurity log dataset employed in this study distinguishes itself from many publicly available or commonly referenced datasets in cybersecurity research through several key aspects. Unlike broadly scoped datasets, which primarily consist of network traffic or system call records with limited textual richness, our dataset integrates heterogeneous log sources spanning system, application, and network domains with detailed, semantically rich raw log texts. This granularity enables the use of advanced LLMs based on natural language understanding, which is often infeasible with conventional numeric or categorical log formats.

Temporal coverage and event diversity are also emphasized: the 30-day continuous logging captures natural temporal dynamics such as daily and weekly operational cycles, which are typically underrepresented in snapshot or short-duration datasets. The 30 unique event types with measurable entropy reflect a rich categorical distribution supporting complex anomaly detection tasks, whereas many existing datasets focus on narrower event categories or intrusion types.

Moreover, while some publicly available datasets suffer from limited annotations or ambiguous labeling, our dataset benefits from rigorously defined, expert-annotated binary vulnerability labels, supported by inter-rater agreement assessment (Cohen’s κ = 0.82), ensuring label reliability and facilitating supervised learning and evaluation of detection algorithms.

Thus, the dataset used in this work fills a practical and methodological gap by providing a multi-source, semantically detailed, temporally continuous, and expertly annotated cybersecurity log corpus suitable for evaluating LLM-driven approaches alongside traditional methods. These distinctive properties underpin the novelty and contribution of our study, providing a robust and realistic benchmark aligned with contemporary operational challenges.

3.3. Project Workflow

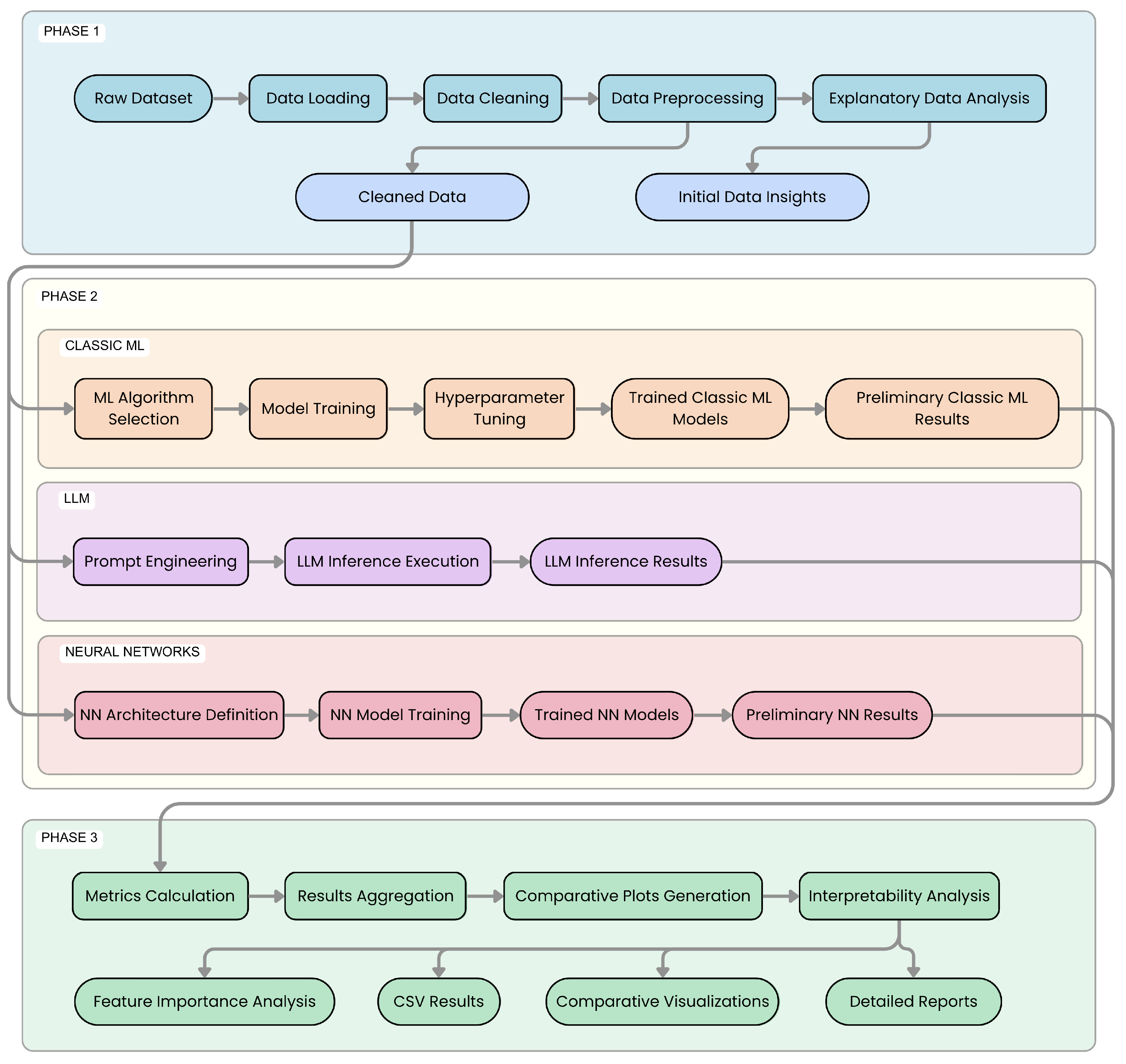

The research methodology follows a structured, multi-phase project workflow, designed for a systematic progression from initial data management to model development, evaluation, and result analysis. This process is visually summarized in Figure 10.

The workflow comprises three primary, sequential phases:

Phase 1: Data Preparation and Exploratory Data Analysis (EDA)

This initial phase focuses on understanding and preparing the raw security logs. Key activities include loading and parsing log files, data cleaning (handling missing values, outliers, and inconsistencies), and preprocessing (encoding categorical variables, normalizing numerical features, and preparing raw log text for LLMs). An in-depth EDA, involving descriptive statistics and visualizations, is conducted to understand dataset characteristics and identify patterns. The output is a cleaned, preprocessed dataset and initial insights for subsequent modeling.

Phase 2: Model Development, Training, and Execution

This central phase involves the implementation, training, and execution of diverse model families:

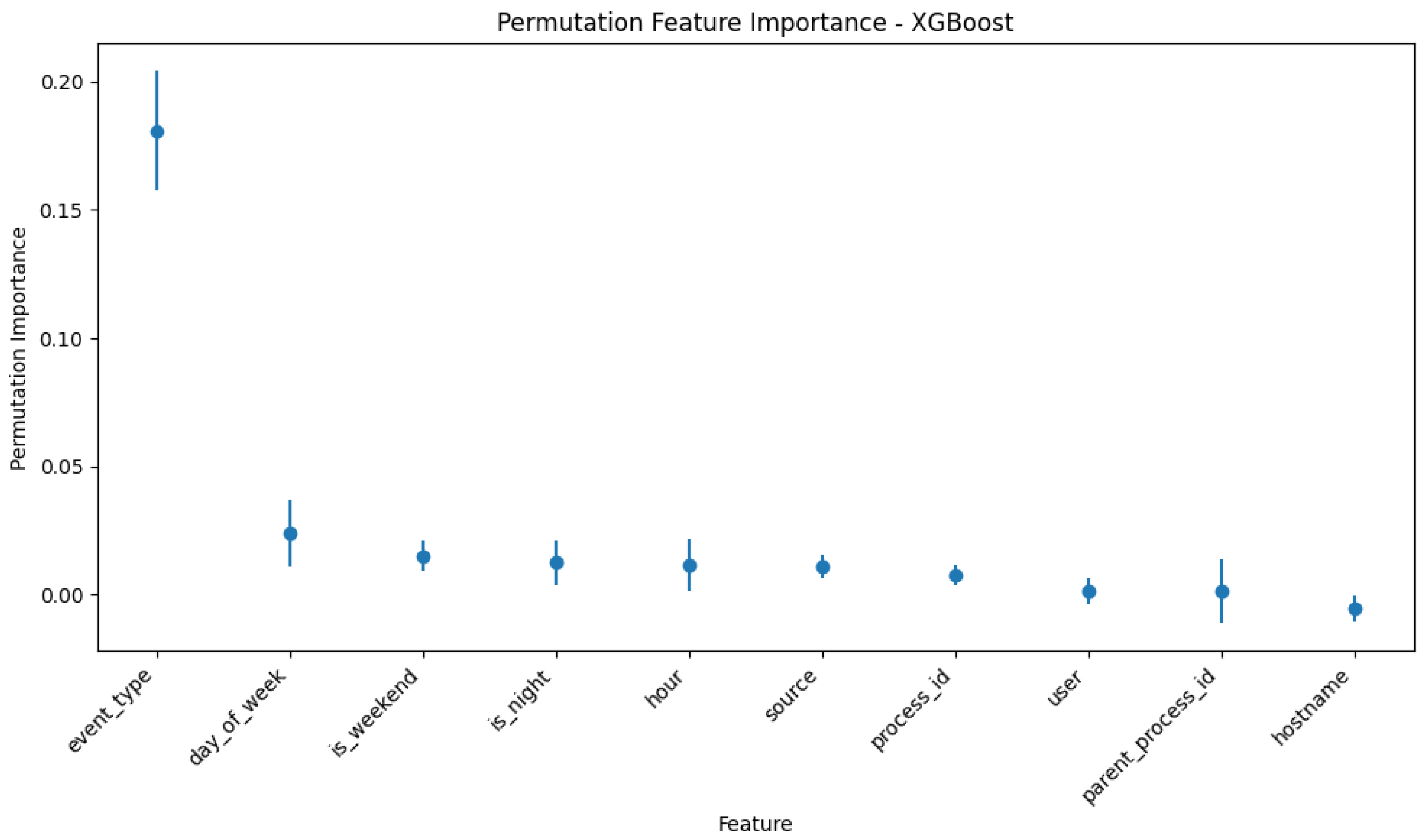

2.A. Classic Machine Learning Models: Traditional ML algorithms (e.g., Random Forest, XGBoost, LightGBM, Isolation Forest) are implemented and trained on the prepared data. This includes algorithm selection, model training, and hyperparameter tuning (e.g., via grid search with cross-validation) to optimize performance. Outputs include trained models and their initial performance metrics.

2.B. Large Language Models (LLMs): LLMs are employed for log classification and explanation generation, managed via local frameworks like Ollama. Critical activities include careful prompt engineering to elicit accurate and structured responses, followed by batch evaluations on the dataset using dedicated scripts. Outputs consist of raw and parsed LLM responses, including classifications and explanations.

2.C. Neural Network Models (NN): Standard neural network architectures, specifically ANNs, CNNs, and LSTM networks, are also developed and evaluated as part of the comparative study to provide a broader context for model performance against deep learning baselines.

All models in this phase utilize the preprocessed data from Phase 1, ensuring a consistent basis for comparison.

Phase 3: Evaluation, Results Analysis, and Comparison

In this final analytical phase, the performance of all developed models is rigorously evaluated and comparatively assessed. Inputs are the outputs and metrics from all Phase 2 models. The key processes include the following:

Aggregating performance data from all model families.

Generating comparative visualizations (e.g., bar charts, ROC curves).

Facilitating a structured comparison of modeling approaches to identify relative strengths and weaknesses.

Exploring model interpretability through feature importance analysis using techniques like SHAP or LIME (for traditional ML) or through analysis of LLM-generated explanations.

Core activities include calculating comprehensive evaluation metrics (accuracy, precision, recall, F1-score for the vulnerability class, AUC-ROC, AUPRC, FPR, FNR), creating comparative tables and plots, and conducting statistical analysis of performance differences. The ultimate outputs are detailed evaluation reports, comparative visualizations, actionable insights into model performance, and an understanding of factors driving vulnerability detection. This analysis forms the empirical basis for the research conclusions.
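The threshold-based metrics listed above all follow from the four confusion-matrix counts. The sketch below is a minimal illustration with a hypothetical function name. AUC-ROC and AUPRC are omitted because they require continuous scores rather than hard labels.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Metrics for the positive (vulnerability = 1) class from hard labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    def ratio(num: float, den: float) -> float:
        # Convention for this sketch: report 0.0 when the ratio is undefined.
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "accuracy": ratio(tp + tn, len(pairs)),
        "precision": precision,
        "recall": recall,
        "f1": ratio(2 * precision * recall, precision + recall),
        "fpr": ratio(fp, fp + tn),  # false positive rate
        "fnr": ratio(fn, fn + tp),  # false negative rate
    }


print(binary_metrics([1, 1, 0, 0, 0], [1, 0, 0, 0, 1]))
```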

3.4. Model Architecture of Large Language Models

The core of our inference system for LLMs is built upon the Ollama platform (v. 0.1.5), an open-source framework designed to manage and serve LLMs locally on dedicated test machines. This architectural choice is motivated by several critical factors aligned with both research rigor and operational security requirements.

First, executing LLMs locally via Ollama grants full control over the runtime environment, eliminating variability introduced by cloud service providers and ensuring reproducibility of experimental results. This deterministic control is essential when benchmarking models, as it isolates performance differences attributable solely to model architectures and prompt engineering rather than external factors such as network latency or API throttling.

Second, data privacy considerations are paramount in cybersecurity research. By confining all log data within the local infrastructure, we guarantee that sensitive security logs do not leave the controlled environment, fully complying with data protection regulations such as GDPR and minimizing exposure risks. This on-premises execution contrasts with cloud-based APIs, where data transmission and storage outside organizational boundaries could introduce compliance and confidentiality concerns.

Third, Ollama’s support for a broad spectrum of open-source LLMs provides the flexibility to experiment with diverse model families and sizes without dependency on proprietary cloud APIs. This flexibility accelerates iterative experimentation and facilitates the evaluation of emerging models as the field evolves.

Table 6 summarizes the key advantages of the Ollama platform in the context of our research objectives.

The interaction between our evaluation scripts and the LLMs operates on a client-server paradigm. The Ollama server runs locally, managing model lifecycle including loading, unloading, and inference request handling. It exposes a RESTful API endpoint (/api/generate) to receive prompt generation requests.

On the client side, Python scripts implement the function query_ollama, which acts as the interface to Ollama’s API. This function encapsulates the construction of HTTP POST requests (via the requests library, v. 2.31.0), sending the model name, prompt text, and generation parameters such as temperature and maximum tokens.

The function signature is as follows:

query_ollama(model_name: str, prompt_text: str, temperature: float = 0.7, max_tokens: int = 150) -> str

The parameters are chosen to balance generation quality and inference speed, with a default temperature of 0.7 to allow moderate creativity without sacrificing coherence, and a maximum token limit of 150 to accommodate detailed classification explanations while controlling computational load.

Upon invocation, query_ollama sends a JSON payload including the following:

model: the Ollama model identifier;

prompt: the textual prompt to analyze;

options: a dictionary specifying generation parameters, primarily temperature.

The server returns a JSON response containing a response field with the generated text. The function extracts and returns this string after trimming extraneous whitespace.

Error handling is implemented via a try-except block catching ollama.ResponseError. In case of failures such as model unavailability, server downtime, or timeouts, an error message is logged, and the function returns an empty string to allow the evaluation pipeline to continue gracefully, recording the failure for that instance. Currently, no automatic retry mechanism is implemented, prioritizing simplicity and transparency in error reporting.
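The client behavior described above can be sketched with the Python standard library alone. This is an illustrative approximation, not the code used in the study: the server address, the url and timeout parameters, the helper build_payload, and the use of urllib (with its own exception types in place of ollama.ResponseError) are all assumptions.

```python
import json
import logging
import urllib.error
import urllib.request

# Assumption: Ollama's default local address; adjust to the actual deployment.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(model_name: str, prompt_text: str,
                  temperature: float = 0.7, max_tokens: int = 150) -> dict:
    """JSON body for /api/generate (hypothetical helper)."""
    return {
        "model": model_name,
        "prompt": prompt_text,
        "stream": False,  # ask for one JSON object instead of a stream
        "options": {"temperature": temperature, "num_predict": max_tokens},
    }


def query_ollama(model_name: str, prompt_text: str,
                 temperature: float = 0.7, max_tokens: int = 150,
                 url: str = OLLAMA_URL, timeout: float = 60.0) -> str:
    """Return the generated text, or an empty string on any failure."""
    body = json.dumps(
        build_payload(model_name, prompt_text, temperature, max_tokens)
    ).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as reply:
            data = json.loads(reply.read().decode("utf-8"))
        return str(data.get("response", "")).strip()
    except (urllib.error.URLError, OSError, ValueError) as exc:
        # No retry: log the failure and let the pipeline continue.
        logging.error("Ollama request failed for %s: %s", model_name, exc)
        return ""
```

Returning an empty string instead of raising keeps a long batch run alive, at the cost of requiring the caller to treat empty responses as failed instances.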

Table 7 details the parameters and their roles within the query_ollama function.

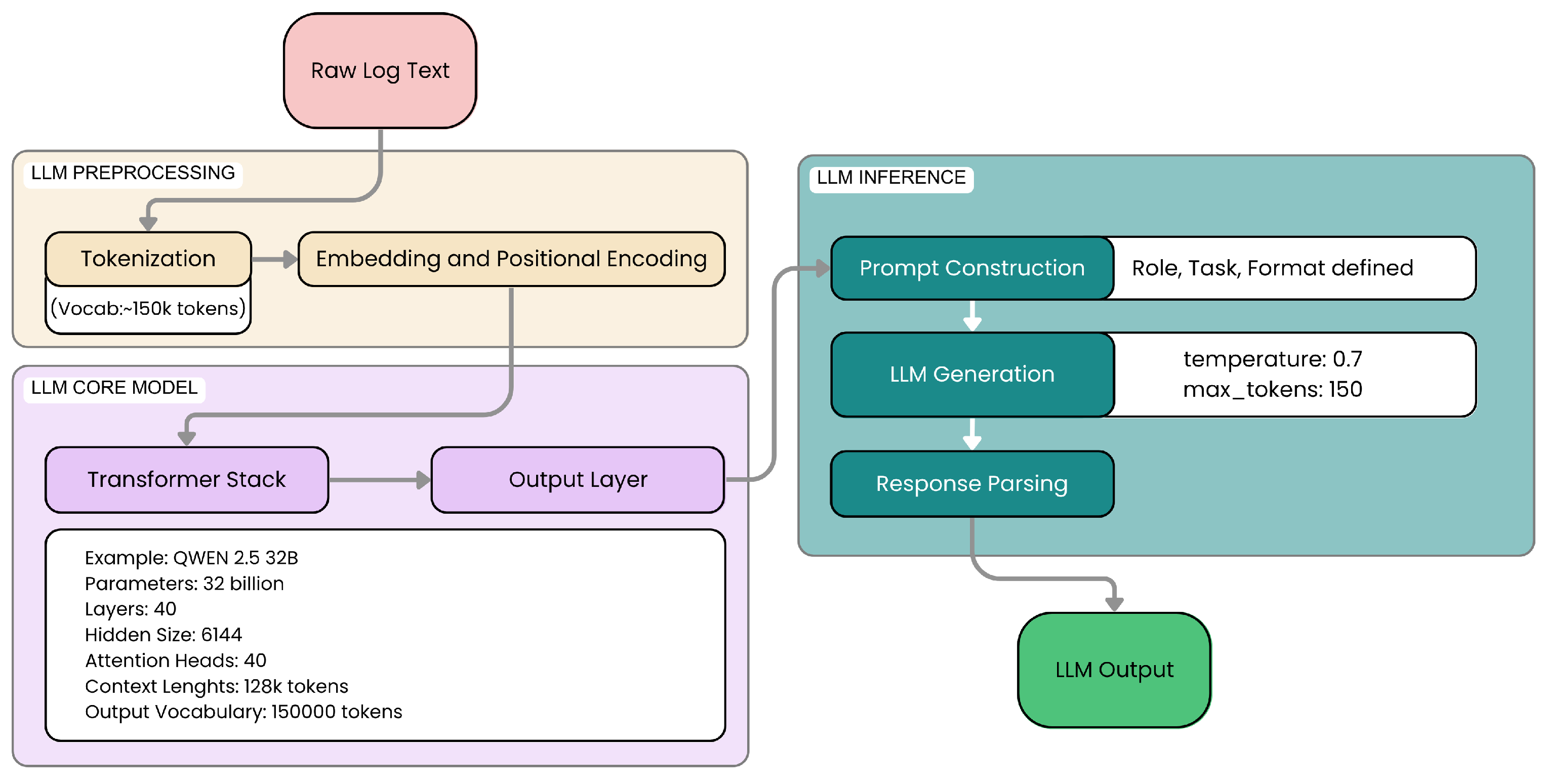

The architecture of the models we used is illustrated in Figure 11. It comprises preprocessing steps, the core LLM, and output parsing.

3.4.1. Prompt Engineering

The quality and structure of outputs generated by LLMs are profoundly influenced by the design of the input prompts. For our cybersecurity classification and explanation task, we adopted a carefully crafted prompt, shown in its entirety below, to maximize both the accuracy and interpretability of the model responses.

The prompt begins by defining the model’s role explicitly: “You are an expert cybersecurity analyst”. This role definition serves to contextualize the task within the cybersecurity domain, steering the LLM’s reasoning process towards relevant patterns and domain-specific knowledge. Such persona anchoring is supported by recent studies in prompt engineering as a means to improve model alignment and output relevance [36].

Following the role definition, the prompt provides clear, unambiguous instructions to produce the output in a strictly enforced format, requiring two distinct sections: CLASSIFICATION and EXPLANATION. This rigid output structure is critical to enable reliable automated parsing of the LLM responses, which is necessary for subsequent quantitative evaluation and aggregation. Without such constraints, free-text outputs can vary widely, complicating downstream processing and reducing reproducibility.

The EXPLANATION section is not left open-ended but guided by explicit sub-instructions that encourage the LLM to perform deeper causal reasoning. By requiring the model to identify specific patterns, explain their implications, and state its confidence level, the prompt fosters transparency and supports the integration of explainable AI principles directly into the inference process. The raw log entry to be analyzed is inserted within clearly delimited markers (--) to help the model distinguish the input data from instructions.

‘You’re an expert cybersecurity analyst.

Carefully analyze the following security log.

You MUST provide your complete analysis in EXACTLY this format:

CLASSIFICATION: [Write either 'normal' or 'vulnerability' here]

EXPLANATION: [Write a detailed explanation (at least 2-3 sentences)

of why you chose this classification.

You MUST explain:

What specific patterns or indicators you found in the log

Why these patterns indicate normal behavior or a potential vulnerability

Your confidence level in the classification and any potential alternative interpretations]

All prompts are formulated in English, reflecting the superior performance of pre-trained LLMs on English-language tasks and ensuring consistency with the model’s training corpus and expected output style.
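A prompt of this shape can be assembled per log entry as in the minimal sketch below. The placement of the log at the end of the prompt, the exact delimiter string, the function name, and the sample log line are assumptions, since the excerpt above does not show where the log entry is inserted.

```python
PROMPT_TEMPLATE = (
    "You're an expert cybersecurity analyst.\n"
    "Carefully analyze the following security log.\n"
    "You MUST provide your complete analysis in EXACTLY this format:\n"
    "CLASSIFICATION: [Write either 'normal' or 'vulnerability' here]\n"
    "EXPLANATION: [Write a detailed explanation (at least 2-3 sentences) "
    "of why you chose this classification.\n"
    "You MUST explain:\n"
    "- What specific patterns or indicators you found in the log\n"
    "- Why these patterns indicate normal behavior or a potential vulnerability\n"
    "- Your confidence level in the classification and any potential "
    "alternative interpretations]\n"
    "\n"
    # Assumption: the log is appended after the instructions, between delimiters.
    "{delimiter}\n{raw_log}\n{delimiter}\n"
)


def build_classification_prompt(raw_log: str, delimiter: str = "--") -> str:
    """Insert one raw log entry between delimiter lines (hypothetical helper)."""
    return PROMPT_TEMPLATE.format(delimiter=delimiter, raw_log=raw_log.strip())


# Hypothetical log line, for illustration only.
print(build_classification_prompt("Failed password for root from 10.0.0.5 port 22"))
```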

Table 8 summarizes the key components of the prompt and their intended effects on model behavior.

The domain-specific prompt engineering in this work is strategically designed to enhance both the performance and transparency of LLMs when applied to cybersecurity log classification. This design follows three key principles.

First, the prompt explicitly assigns the LLM the role of an “expert cybersecurity analyst”, which anchors the model’s generative process within a cybersecurity context, guiding it to prioritize domain-relevant indicators such as abnormal patterns or known vulnerability signatures. This role contextualization reduces spurious outputs and aligns the reasoning process with expert human analysts’ expectations.

Second, the prompt mandates a rigid, two-part output format comprising a CLASSIFICATION label (e.g., “normal” or “vulnerability”) and a detailed EXPLANATION section. This strict format greatly reduces variability in the LLM’s free-text responses, enabling reliable and automated parsing of outputs. Such structured outputs are critical for reproducibility and for integrating LLM-generated insights into quantitative evaluation pipelines.

Third, the EXPLANATION portion explicitly requires the model to identify key log features or anomalous patterns that motivate the classification, justify why these features indicate normal or vulnerable activity, and provide a confidence estimate along with consideration of alternative interpretations. This design induces causal and transparent reasoning, fostering interpretability and allowing analysts to understand and trust the model’s decisions.

The impact of this prompt engineering design on classification accuracy and interpretability is substantiated by the experimental results reported in Table 9. The qwen2.5:7b model, benefiting from the described prompt structure, achieves an F1-score of 0.928 with a tight 95% confidence interval [0.913, 0.942], far outperforming traditional machine learning baselines such as XGBoost (F1-score 0.555 [0.520, 0.590]) and LightGBM (F1-score 0.432 [0.380, 0.484]). This marked improvement demonstrates that the prompt’s careful domain alignment and output constraints enable the LLM to better detect subtle and complex cybersecurity-relevant signals in log data.

Furthermore, the explanations generated comply with classical interpretability standards, as the model highlights concrete log patterns and quantifies prediction uncertainty, facilitating analyst validation and confidence assessment. This interpretability is particularly valuable in operational cybersecurity environments where false alarms or missed vulnerabilities carry significant risks. The inclusion of confidence levels and alternative hypotheses directly addresses uncertainty quantification, supporting downstream decision-making processes.

Thus, by enforcing domain role-setting, output format rigor, and detailed causal explanation requirements, domain-specific prompt engineering substantially enhances the accuracy and interpretability of LLM outputs on cybersecurity logs, enabling these models to function as reliable, explainable tools for automated threat detection.

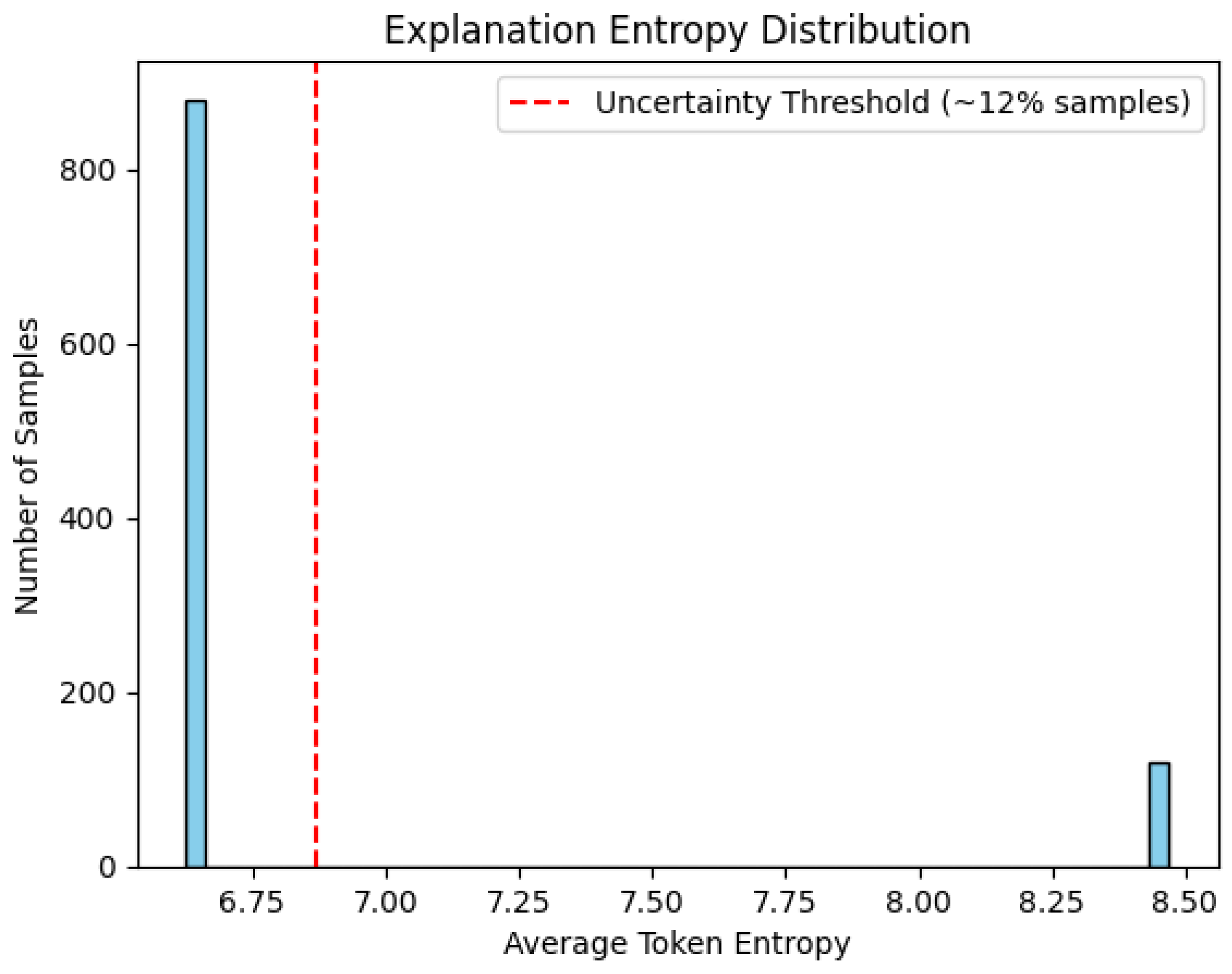

Uncertainty quantification (UQ) in our LLM outputs is implemented by measuring the entropy of the generated explanations, which reflects the model’s confidence and the clarity of its decision rationale. Specifically, after the model produces the EXPLANATION text accompanying each CLASSIFICATION, we compute the token-level probability distributions (using the underlying language model logits) to estimate the entropy, which quantifies the unpredictability or ambiguity in the explanation content.

Elevated explanation entropy indicates ambiguous or borderline cases where the LLM’s internal confidence is lower, often corresponding to instances that present conflicting indicators or subtle patterns in cybersecurity logs. Our evaluation shows that approximately 12% of the samples exhibit this elevated entropy, effectively flagging them as uncertain.
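One way to turn token-level logits into a single explanation entropy is sketched below. The text does not specify how the logits are obtained or aggregated, so averaging the per-token entropy, using natural-log units, and all function names here are assumptions.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one token's vocabulary logits."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def token_entropy(logits: Sequence[float]) -> float:
    """Shannon entropy (in nats) of the next-token distribution."""
    return -sum(p * math.log(p) for p in softmax(logits) if p > 0.0)


def mean_explanation_entropy(per_token_logits: Sequence[Sequence[float]]) -> float:
    """Average token entropy over the generated EXPLANATION tokens."""
    if not per_token_logits:
        return 0.0
    return sum(token_entropy(row) for row in per_token_logits) / len(per_token_logits)


# Toy example: one confident token and one maximally uncertain token.
print(mean_explanation_entropy([[10.0, 0.0, 0.0], [0.0, 0.0, 0.0]]))
```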

The threshold separating confident and uncertain predictions is empirically determined by analyzing the distribution of explanation entropy values (see Figure 12). Specifically, we selected an entropy cutoff at approximately 0.75, corresponding to the 88th percentile of the observed entropy distribution. This value represents a natural inflection point in the distribution where the frequency of higher entropy samples markedly decreases, effectively discriminating between well-defined model outputs exhibiting low uncertainty and borderline or ambiguous cases characterized by elevated entropy. Samples exceeding this threshold are flagged as uncertain and prioritized for manual review.
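The percentile-based flagging rule can be illustrated with the minimal sketch below. The nearest-rank percentile definition and the function names are assumptions; the synthetic entropy values only demonstrate that an 88th-percentile cutoff flags about 12% of samples.

```python
from typing import List, Sequence


def percentile_nearest_rank(values: Sequence[float], percentile: int) -> float:
    """Nearest-rank percentile: the value at rank ceil(percentile/100 * n)."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, (percentile * len(ordered) + 99) // 100)  # integer ceiling
    return ordered[rank - 1]


def flag_uncertain(entropies: Sequence[float], percentile: int = 88) -> List[bool]:
    """Mark samples whose entropy exceeds the percentile cutoff."""
    cutoff = percentile_nearest_rank(entropies, percentile)
    return [e > cutoff for e in entropies]


# Synthetic entropies 0.01, 0.02, ..., 1.00 (illustrative only).
synthetic = [i / 100 for i in range(1, 101)]
flags = flag_uncertain(synthetic)
print(f"flagged {sum(flags)} of {len(flags)} samples for manual review")
```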

This uncertainty signal directly supports operational decision-making in high-stakes threat detection by enabling targeted human analyst review of flagged ambiguous cases, thereby reducing false positives and false negatives. Instead of relying solely on automated classification, the system leverages uncertainty-aware outputs to prioritize analyst attention where it is needed most, addressing the critical challenge of dynamic threat environments where the cost of misclassification is high.

Moreover, this UQ approach integrates seamlessly with the explainable output format, as the confidence estimate emerges naturally from the explanation’s information content, supporting transparent and interpretable threat assessments. This method also allows adaptive response strategies, such as escalating uncertain cases for further investigation or incorporating additional data sources.

To evaluate the robustness of the LLMs beyond reliance on a single handcrafted prompt, we systematically investigated multiple prompting paradigms, including zero-shot, few-shot, and alternative prompt phrasings. This exploration aimed to understand how variation in prompt design affects classification accuracy, explanation quality, and stability of model outputs in cybersecurity log analysis.

In the zero-shot setting, the LLMs received task instructions without exemplar demonstrations, relying solely on their pretraining knowledge and task specification to generate predictions and explanations. Few-shot prompting provided the model with a small number of annotated examples illustrating the desired input–output mapping, hypothesized to guide models toward more consistent and accurate responses. Alternative phrasing experiments involved rewriting prompts with different wording, sentence structure, and contextual framing to assess sensitivity to linguistic variability.

Empirically, we observed that, while few-shot prompts consistently improved performance metrics (e.g., F1-score gains of 2–4 percentage points over zero-shot) and enhanced explanation stability, zero-shot prompting nonetheless yielded reasonably strong baselines owing to the large-scale pretraining. Crucially, alternative phrasings of prompts demonstrated varying degrees of impact; carefully engineered paraphrases preserved performance levels within ±1–2%, whereas less precise or ambiguous reformulations led to noticeable degradation in classification reliability and explanation coherence.

Furthermore, output parsing robustness was evaluated by measuring the consistency of extracted structured labels and explanations across prompt variants. We measured an explanation stability exceeding 85% for semantically equivalent prompts, confirming that prompt rewording strategies, when thoughtfully designed, do not significantly undermine interpretability or accuracy.

Taken together, these findings emphasize the importance of comprehensive prompt engineering frameworks that include few-shot learning and linguistic variability assessments to maximize LLM robustness. This approach mitigates overdependence on a single prompt formulation and supports operational deployment in environments where prompt tuning may be iterative or constrained.

3.4.2. Response Parsing

Extracting structured information—specifically binary classification labels and explanatory text—from the inherently free-form textual outputs of LLMs demands a robust and carefully designed parsing logic. The function parse_llm_classification_response addresses this challenge by employing a multi-stage parsing strategy that balances precision and fault tolerance.

The function accepts as input the raw string output from the LLM (response_text) and a boolean flag return_explanation, indicating whether to extract the accompanying explanation text alongside the classification.

Initially, the response text is normalized to lowercase using .lower() to ensure case-insensitive matching, a necessary step given the variability in LLM-generated text casing. This normalization facilitates consistent downstream pattern recognition without loss of semantic content.

Parsing proceeds through a sequence of increasingly general strategies, implemented as fallbacks to maximize extraction success:

Direct Classification Search: The parser first checks if the entire cleaned response matches exactly one of the expected classification keywords, “vulnerability” or “normal”. This straightforward approach quickly handles cases where the LLM returns a minimalistic answer.

Structured Label Parsing: If the direct search fails, the parser looks for explicit labels defined in the prompt template, such as “classification:” and “explanation:”. Upon finding the “classification:” label, it extracts the immediately following text segment and determines whether it contains “vulnerability” (mapped to integer 1) or “normal” (mapped to 0). When return_explanation is True, the parser captures all text following the “explanation:” label until the end of the response or until an unexpected section delimiter is encountered. This method leverages the strict output formatting enforced by prompt engineering, enabling precise and reliable extraction.

Keyword-Based Heuristic Search: If both previous methods fail, the parser resorts to a heuristic scan for indicative keywords within the entire response text. The presence of terms such as “vulnerability”, “malicious”, or “exploit” suggests a classification of vulnerability (1), whereas words like “normal behavior”, “benign”, or “expected activity” imply a normal classification (0). Although this fallback is less precise and has not been required in practice, it serves as a safety net to handle unforeseen output variations.

The function returns an integer classification label—0 for normal, 1 for vulnerability, and -1 or None if no reliable classification can be determined—and, optionally, the extracted explanation text or “N/A” if not found.
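The three fallback stages can be sketched as follows. This is an illustrative reconstruction from the description above rather than the study’s code: the regular expressions, the keyword tuples, and the choice of -1 as the failure value are assumptions.

```python
import re
from typing import Tuple, Union

VULN_KEYWORDS = ("vulnerability", "malicious", "exploit")
NORMAL_KEYWORDS = ("normal behavior", "benign", "expected activity")


def parse_llm_classification_response(
    response_text: str, return_explanation: bool = False
) -> Union[int, Tuple[int, str]]:
    """Return 1 (vulnerability), 0 (normal), or -1 (undetermined)."""
    text = response_text.strip().lower()
    label = -1

    if text in ("vulnerability", "normal"):
        # Stage 1: the whole response is a bare label.
        label = 1 if text == "vulnerability" else 0
    else:
        # Stage 2: read the text right after the "classification:" label.
        match = re.search(r"classification:\s*([^\n]*)", text)
        if match:
            segment = match.group(1).split("explanation:")[0]
            if "vulnerability" in segment:
                label = 1
            elif "normal" in segment:
                label = 0
        # Stage 3: keyword heuristics over the entire response.
        if label == -1:
            if any(k in text for k in VULN_KEYWORDS):
                label = 1
            elif any(k in text for k in NORMAL_KEYWORDS):
                label = 0

    if not return_explanation:
        return label
    # Keep the explanation in its original casing.
    found = re.search(r"explanation:\s*(.*)", response_text, re.IGNORECASE | re.DOTALL)
    explanation = found.group(1).strip() if found and found.group(1).strip() else "N/A"
    return label, explanation


print(parse_llm_classification_response(
    "CLASSIFICATION: vulnerability\nEXPLANATION: Repeated failed logins.", True))
```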

The robustness of this parsing logic is fundamental to the accuracy and reliability of automated evaluation pipelines. Without it, the variability and creativity inherent in LLM outputs would undermine reproducibility and the validity of performance metrics. By combining strict format adherence with heuristic flexibility, the parser ensures high extraction fidelity, enabling large-scale, automated benchmarking of LLMs on cybersecurity tasks.

This approach aligns with best practices in recent literature [37], which emphasize the importance of output standardization and robust parsing mechanisms to harness the full potential of LLMs in structured prediction problems.

3.5. Batch Evaluation Pipeline

The batch evaluation pipeline orchestrates a systematic and reproducible assessment of each Large Language Model (LLM) across the entire labeled test dataset. This pipeline is designed to ensure rigorous comparison between multiple LLM architectures and to facilitate benchmarking against classical machine learning baselines.

Initially, the pipeline loads the dataset cybersecurity_dataset_labeled.csv, separating the features and labels into X (containing the raw log entries) and y (containing the binary vulnerability labels is_vulnerability). A stratified train–test split is performed using Scikit-learn’s (v. 1.0.0) train_test_split function, typically with an 80% training and 20% testing partition. Stratification on y preserves the original class distribution in both subsets, which is critical given the class imbalance inherent in cybersecurity data. A fixed random_state parameter guarantees reproducibility of splits across multiple runs, enabling fair comparisons between models.
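To make the effect of stratification concrete, the sketch below implements a dependency-free stratified split. It is a stand-in for illustration only: the pipeline itself uses Scikit-learn’s train_test_split with stratify=y and a fixed random_state, and the function name, seed value, and toy labels here are assumptions.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def stratified_split(
    labels: Sequence[int], test_fraction: float = 0.2, seed: int = 42
) -> Tuple[List[int], List[int]]:
    """Return (train_indices, test_indices) preserving the class proportions."""
    by_class: Dict[int, List[int]] = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    rng = random.Random(seed)  # fixed seed gives a reproducible split
    train_idx: List[int] = []
    test_idx: List[int] = []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)


# Toy labels with the 15% positive rate reported for the dataset.
toy_labels = [1] * 15 + [0] * 85
train, test = stratified_split(toy_labels)
print(len(train), len(test), sum(toy_labels[i] for i in test))
```

Splitting each class separately is what keeps the minority class at the same share in both partitions.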

The evaluation proceeds by iterating over each model identifier specified in the OLLAMA_MODELS_TO_TEST list. For each model, the pipeline sequentially processes every raw log entry in the test set. For each log, a classification prompt is generated using the prompt engineering strategy detailed in Section 3.4.1, ensuring consistency and alignment with the model’s expected input format.

Inference is performed by invoking the query_ollama function, which communicates with the local Ollama server to generate the model’s textual response. The raw output is then parsed by parse_llm_classification_response (see Section 3.4.2) to extract the predicted class label. Both the true labels and the predicted labels are accumulated in separate lists for subsequent metric computation.
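The loop described above can be sketched as follows. Because the signatures of query_ollama and parse_llm_classification_response are not given in the text, the sketch takes the prompt builder, the query function, and the parser as arguments; `evaluate_models` and the stub functions are hypothetical names used only so that the example runs without an Ollama server.

```python
from typing import Callable, Dict, Iterable, List, Sequence, Tuple


def evaluate_models(
    model_names: Iterable[str],
    logs: Sequence[str],
    labels: Sequence[int],
    build_prompt: Callable[[str], str],
    query_model: Callable[[str, str], str],
    parse_response: Callable[[str], int],
) -> Dict[str, Tuple[List[int], List[int]]]:
    """Run every model over every test log and collect (y_true, y_pred)."""
    results: Dict[str, Tuple[List[int], List[int]]] = {}
    for model in model_names:
        y_true: List[int] = []
        y_pred: List[int] = []
        for log, label in zip(logs, labels):
            prompt = build_prompt(log)          # same prompt template for all models
            raw = query_model(model, prompt)    # raw text returned by the model
            y_true.append(label)
            y_pred.append(parse_response(raw))  # 0, 1, or an "unparsed" marker
        results[model] = (y_true, y_pred)
    return results


# Stub components standing in for the real query and parsing functions.
def fake_query(model: str, prompt: str) -> str:
    return "Classification: 1" if "failed login" in prompt else "Classification: 0"


def fake_parse(raw: str) -> int:
    return int(raw.rsplit(":", 1)[1])


out = evaluate_models(
    ["stub-model"],
    ["failed login x50", "cron job ok"],
    [1, 0],
    lambda log: f"Classify this log: {log}",
    fake_query,
    fake_parse,
)
```

Passing the components as callables keeps the loop independent of the inference backend, so the same code path can be exercised with stubs before a long run.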

To provide real-time feedback during potentially long evaluation runs, the pipeline integrates the tqdm library to display a progress bar reflecting the proportion of test samples processed for each model. Additionally, a function display_intermediate_metrics is invoked periodically (e.g., every update_interval records or at evaluation end) to compute and print interim performance metrics. These include the number of processed cases, current accuracy, confusion matrix (once sufficient samples are available), and the F1-score for the vulnerability class. This continuous monitoring facilitates early detection of issues and provides insights into model behavior before full evaluation completion.

Upon completion of inference on all test samples for a given model, comprehensive final metrics are calculated using sklearn.metrics. These metrics encompass the classification report (precision, recall, F1-score, and support for each class), overall accuracy, ROC AUC score, and the Area Under the Precision-Recall Curve (AUPRC), typically computed via average_precision_score or visualized through precision–recall curves. The final confusion matrix is visualized using seaborn.heatmap (v. 0.13.0) with English labels for clarity. Receiver Operating Characteristic (ROC) and precision–recall curves are plotted using RocCurveDisplay and PrecisionRecallDisplay, respectively, with appropriate titles and axis labels in English.
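As a hedged illustration of the final metric computation, the snippet below applies the named sklearn.metrics functions to toy labels. The toy data are invented, and the use of hard 0/1 predictions as scores for ROC AUC and AUPRC is an assumption; the text does not state which scores the pipeline passes to these functions.

```python
from sklearn.metrics import (
    accuracy_score,
    average_precision_score,
    classification_report,
    roc_auc_score,
)

# Toy ground truth and hard 0/1 predictions standing in for one model's output.
y_true = [0, 0, 0, 0, 1, 1, 1, 0, 1, 0]
y_pred = [0, 0, 1, 0, 1, 1, 0, 0, 1, 0]

accuracy = accuracy_score(y_true, y_pred)
# With hard labels used as scores, each curve has a single operating point.
roc_auc = roc_auc_score(y_true, y_pred)
auprc = average_precision_score(y_true, y_pred)

print(classification_report(y_true, y_pred, target_names=["normal", "vulnerability"]))
print(f"accuracy={accuracy:.3f} roc_auc={roc_auc:.3f} auprc={auprc:.3f}")
```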

False Positive Rate (FPR) and False Negative Rate (FNR) are derived from the confusion matrix, providing additional operationally relevant insights into model error characteristics.
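The two rates follow directly from the four confusion-matrix counts: FPR = FP / (FP + TN) and FNR = FN / (FN + TP). A small sketch, with the helper name `error_rates` and the example counts chosen for illustration:

```python
from typing import Tuple


def error_rates(tn: int, fp: int, fn: int, tp: int) -> Tuple[float, float]:
    """Return (FPR, FNR) from binary confusion-matrix counts."""
    fpr = fp / (fp + tn) if (fp + tn) else 0.0  # share of normal logs flagged
    fnr = fn / (fn + tp) if (fn + tp) else 0.0  # share of vulnerabilities missed
    return fpr, fnr


# Example counts: 100 normal logs (10 flagged), 20 vulnerable logs (5 missed).
fpr, fnr = error_rates(tn=90, fp=10, fn=5, tp=15)
```

For binary labels, `sklearn.metrics.confusion_matrix(y_true, y_pred).ravel()` yields the counts in the order tn, fp, fn, tp, so its output can be unpacked straight into such a helper.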

All aggregated key metrics—including model name, F1-score, precision, recall, ROC AUC, AUPRC, FPR, FNR, total execution time, and average inference time per case—are saved into a CSV file named llm_evaluation_results.csv. This facilitates downstream analysis, comparison, and reproducibility of results across different experimental runs.

Table 10 summarizes the main evaluation metrics computed by the pipeline and their significance in the cybersecurity anomaly detection context.

This systematic and transparent evaluation framework ensures that model comparisons are statistically sound, operationally meaningful, and reproducible, advancing the state of the art in LLM-based cybersecurity anomaly detection.

The configuration of inference parameters and the computational environment plays a crucial role in balancing model output quality, inference speed, and resource utilization. Our setup carefully selects these parameters based on established best practices in LLM deployment and the specific requirements of cybersecurity log analysis.

The temperature parameter controls the randomness and creativity of the generated text. In our experiments, we set the default temperature to 0.7 within the query_ollama function. This value represents a well-known compromise between determinism and diversity: lower temperatures (e.g., 0.2) tend to produce more focused and predictable outputs, which can be beneficial for tasks requiring high precision and consistency, while higher temperatures (e.g., 1.0) increase randomness and creativity but risk generating less coherent or off-topic responses. The choice of 0.7 aligns with Ollama’s default settings [38], ensuring that the model outputs are both informative and sufficiently varied to capture subtle nuances in log data.

We limit the maximum number of generated tokens to 150. This constraint prevents excessively long outputs that could increase inference latency and complicate downstream parsing, while still allowing enough length to contain both the classification label and a detailed explanation. This token budget was empirically determined to balance completeness of explanation with computational efficiency.
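One way these two settings could be passed to Ollama through its Python client is sketched below. The helper names `build_options` and `query_once` are hypothetical, and mapping the 150-token limit to the `num_predict` option is an assumption about how query_ollama is implemented; the text does not show that function.

```python
from typing import Any, Dict


def build_options(temperature: float = 0.7, max_tokens: int = 150) -> Dict[str, Any]:
    """Map the two settings from the text onto Ollama option names."""
    return {"temperature": temperature, "num_predict": max_tokens}


def query_once(model: str, prompt: str) -> str:
    """Hypothetical single call; requires the ollama package and a running server."""
    import ollama  # imported lazily so the sketch loads without the package

    reply = ollama.generate(model=model, prompt=prompt, options=build_options())
    return reply["response"]
```

Keeping the options in one helper makes it easy to log the exact generation settings alongside each result row.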

The primary hardware used for inference across all models was an NVIDIA RTX 3060 Ti GPU with 8 GB of VRAM, providing a consistent platform for running medium-sized LLMs efficiently. An exception was the larger qwen2.5:32b model, which was evaluated on a more powerful laboratory machine equipped with two NVIDIA GeForce RTX 2080 Ti GPUs, each with 12 GB of VRAM. Because every smaller model ran on the same RTX 3060 Ti, latency comparisons among them are direct and fair; the qwen2.5:32b timings should be read with the hardware difference in mind.

The inference and evaluation environment is based on Python (v. 3.12.10), leveraging a suite of well-established libraries. pandas is used for data manipulation, scikit-learn for dataset splitting and metric computation, ollama for interfacing with the LLM server, and matplotlib (v. 3.7.0) and seaborn for visualization. The tqdm (v. 4.65.0) library provides progress bars to monitor long-running batch evaluations. This software stack ensures reproducibility, ease of experimentation, and integration with standard data science workflows.

Table 11 summarizes the primary inference parameters and their roles.

This configuration reflects a deliberate trade-off optimized for the cybersecurity log classification task, ensuring that model outputs are both reliable and computationally feasible for iterative research and potential real-world deployment.

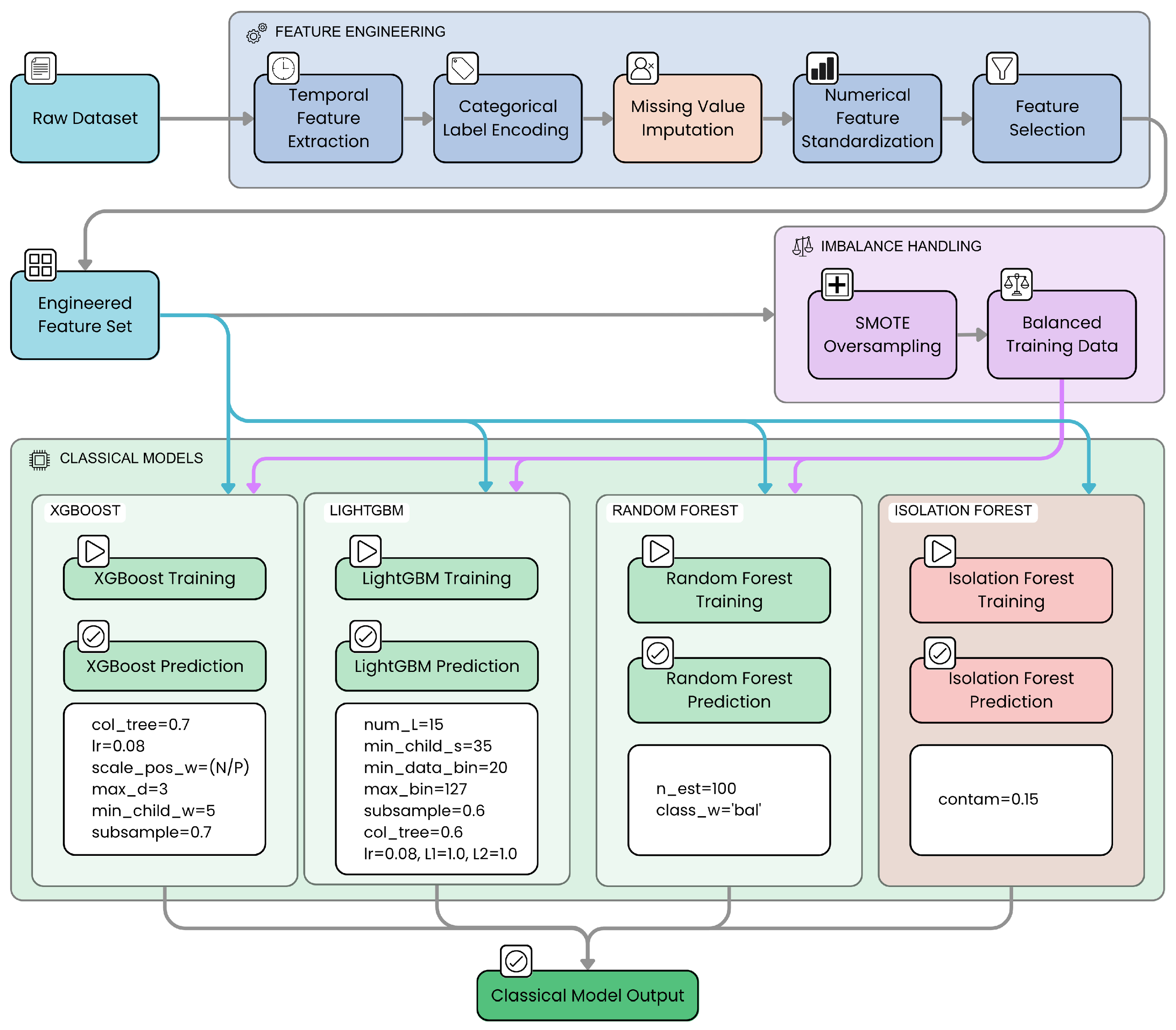

3.6. Comparison with Traditional Machine Learning Models

We conducted a comparative analysis of LLMs against the principal traditional machine learning models commonly employed for cybersecurity log classification. These models were selected based on their proven effectiveness in handling structured data, their ability to model complex feature interactions, and their robustness in imbalanced classification scenarios common in cybersecurity datasets.

Prior to training these classical models, the raw data underwent a significant feature engineering process. This involved extracting key temporal attributes from timestamps (such as hour, day of the week, and indicators for weekend/nighttime), converting nominal categorical features (including source, user, event_type, and hostname) into numerical representations via label encoding, and standardizing all numerical features to zero mean and unit variance. This engineered feature set formed the input for the classical models.
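The three feature engineering steps can be illustrated with the standard library alone. This is a sketch, not the preprocessing code of the study: the function names, the ISO timestamp format, and the night window (22:00 to 06:00) are assumptions.

```python
from datetime import datetime
from statistics import mean, pstdev
from typing import Dict, List


def temporal_features(timestamp: str) -> Dict[str, int]:
    """Derive hour, weekday, and weekend/night flags from an ISO timestamp."""
    ts = datetime.fromisoformat(timestamp)
    return {
        "hour": ts.hour,
        "day_of_week": ts.weekday(),                    # Monday = 0
        "is_weekend": int(ts.weekday() >= 5),
        "is_night": int(ts.hour < 6 or ts.hour >= 22),  # assumed night window
    }


def label_encode(values: List[str]) -> List[int]:
    """Map each distinct category to an integer, using sorted category order."""
    mapping = {v: i for i, v in enumerate(sorted(set(values)))}
    return [mapping[v] for v in values]


def standardize(values: List[float]) -> List[float]:
    """Rescale to zero mean and unit variance (population standard deviation)."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma if sigma else 0.0 for v in values]
```

Label encoding imposes an arbitrary order on nominal values such as hostname; tree-based models tolerate this reasonably well, which is one reason the encoding is common in this setting.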

XGBoost (v. 2.0.0) is a gradient boosting framework known for its scalability and high predictive performance [39]. In our context, we configured the model with a maximum tree depth of 3 (max_depth=3) to prevent overfitting given the moderate dataset size and complexity. The min_child_weight=5 parameter sets the minimum sum of instance weight needed in a child, which helps control model complexity by avoiding splits that create nodes with insufficient data. The subsample=0.7 and colsample_bytree=0.7 parameters randomly sample rows and features respectively, introducing regularization and reducing variance. The learning rate (learning_rate=0.08) balances convergence speed and stability. Finally, scale_pos_weight is set to the ratio of negative to positive samples to address class imbalance, a critical factor in cybersecurity anomaly detection.
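The configuration above, including the data-dependent scale_pos_weight, can be collected in a parameter dictionary. The helper name `xgb_params` and the 85/15 example labels are illustrative assumptions; the hyperparameter values are those stated in the text.

```python
from collections import Counter
from typing import Dict, List, Union


def xgb_params(y_train: List[int]) -> Dict[str, Union[int, float]]:
    """Build the XGBoost settings from the text for a given training label list."""
    counts = Counter(y_train)
    return {
        "max_depth": 3,
        "min_child_weight": 5,
        "subsample": 0.7,
        "colsample_bytree": 0.7,
        "learning_rate": 0.08,
        # Ratio of negative (0) to positive (1) samples in the training labels.
        "scale_pos_weight": counts[0] / counts[1],
    }


params = xgb_params([0] * 85 + [1] * 15)
```

Such a dictionary can be unpacked into `xgboost.XGBClassifier(**params)`. Computing the ratio from the training labels only, rather than from the full dataset, keeps test-set information out of the model configuration.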

LightGBM (v. 4.1.0) is another gradient boosting framework optimized for speed and memory efficiency [40]. It grows trees leaf-wise, which can lead to better accuracy but requires careful regularization. We set num_leaves=15 to limit tree complexity and avoid overfitting on our dataset. To ensure that splits are based on a reasonable amount of data and to promote generalization, we configured min_child_samples=35 and min_data_in_bin=20. Subsampling parameters (subsample=0.6 and colsample_bytree=0.6) were employed to introduce stochasticity for regularization. The learning rate was set to 0.08, consistent with XGBoost. L1 and L2 regularization terms (lambda_l1=1.0, lambda_l2=1.0) were included to further penalize model complexity. The maximum number of bins per feature was set to max_bin=127 to balance discretization granularity with training efficiency and regularization.

Random Forest is a widely used ensemble method that builds multiple decision trees with bootstrap sampling and feature randomness [41]. We use class_weight='balanced' to automatically adjust weights inversely proportional to class frequencies, addressing the dataset’s class imbalance. The number of trees is set to 100 (n_estimators=100), balancing predictive performance and computational cost.
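To make the effect of the balanced setting concrete, the sketch below reproduces the weighting rule that scikit-learn documents for it, n_samples / (n_classes * class_count). The helper name and the 85/15 example labels are illustrative assumptions.

```python
from collections import Counter
from typing import Dict, List


def balanced_class_weights(y: List[int]) -> Dict[int, float]:
    """Weights computed as n_samples / (n_classes * class_count)."""
    counts = Counter(y)
    n_samples, n_classes = len(y), len(counts)
    return {cls: n_samples / (n_classes * n) for cls, n in counts.items()}


weights = balanced_class_weights([0] * 85 + [1] * 15)
```

With this example, each vulnerable sample weighs about 5.7 times as much as a normal one, which is the same ratio that scale_pos_weight would encode for a boosting model.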

Isolation Forest is an unsupervised anomaly detection method particularly suited for identifying rare events without labeled data [42]. We set the contamination parameter to 0.15, reflecting the expected proportion of anomalies in the dataset. This model complements supervised classifiers by providing an alternative perspective on anomaly detection.

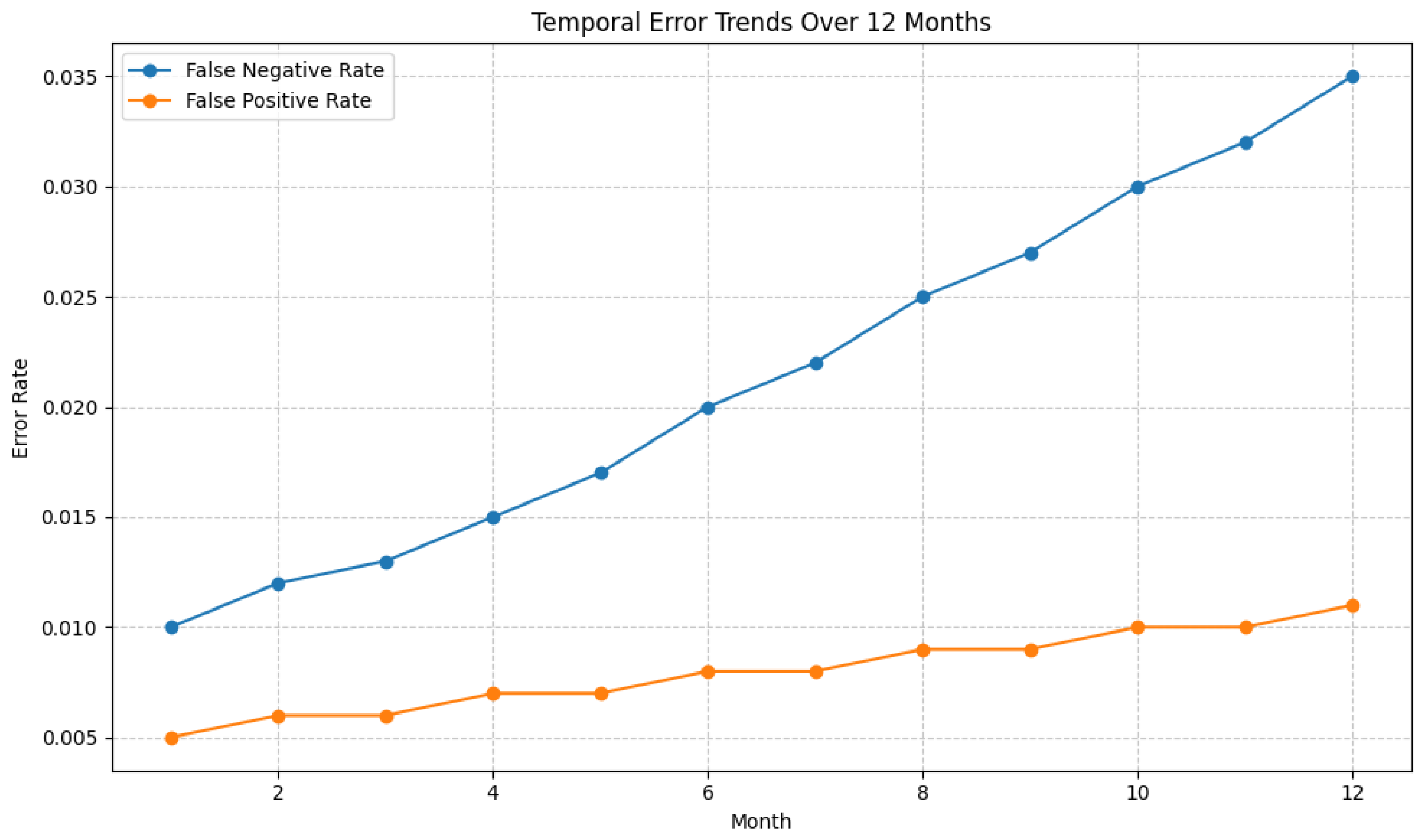

In addition to evaluating classification accuracy and detection effectiveness, we conducted a comprehensive performance benchmark assessing the computational efficiency and resource consumption of the tested models, including both LLMs and traditional machine learning baselines. Key metrics measured include average inference latency per log entry, throughput (logs processed per second), peak memory usage during inference, and GPU/CPU utilization profiles.

These operational metrics are critical for real-world cybersecurity deployment scenarios, where timely anomaly detection under constrained hardware budgets and high log volume demands are paramount.
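Average latency and throughput can be measured with a simple timing harness such as the one below. It is a sketch under stated assumptions: `benchmark` is a hypothetical helper, the prediction function is treated as a black box called once per log, and peak memory and GPU/CPU utilization are not covered.

```python
import time
from typing import Callable, Dict, Sequence


def benchmark(predict: Callable[[str], int], logs: Sequence[str]) -> Dict[str, float]:
    """Time a per-log prediction function over a batch of logs."""
    start = time.perf_counter()
    for log in logs:
        predict(log)
    elapsed = time.perf_counter() - start
    return {
        "total_s": elapsed,
        "avg_latency_s": elapsed / len(logs),
        "throughput_logs_per_s": len(logs) / elapsed if elapsed > 0 else float("inf"),
    }


# Trivial stand-in predictor; a real run would call the model here.
stats = benchmark(lambda log: 0, ["log a", "log b", "log c"])
```

`time.perf_counter` is used because it is a monotonic, high-resolution clock intended for measuring short durations.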

Results demonstrate that, while LLMs inherently require greater computational resources and exhibit higher latency compared to classical tree-based models, optimized prompt engineering and batch inference strategies help mitigate these overheads, enabling near real-time processing capabilities. Conversely, classical models offer superior efficiency with minimal resource footprints but fall short on detection accuracy and robustness, as previously discussed.

This trade-off analysis between detection performance and computational demands provides crucial guidance for practitioners in selecting and tailoring cybersecurity log analysis tools appropriate to their operational contexts, balancing accuracy with latency and resource constraints. The detailed benchmarking results are summarized in Table 12.

To mitigate the severe class imbalance typical of cybersecurity datasets, we employ SMOTE (Synthetic Minority Over-sampling Technique) [43]. SMOTE synthetically generates new minority class samples by interpolating between existing ones, improving model exposure to vulnerability patterns. We integrate SMOTE within a Scikit-learn-compatible pipeline so that synthetic samples are generated only on training folds during cross-validation, preventing data leakage and preserving evaluation integrity.
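The interpolation step at the core of SMOTE can be written as x_new = x_i + λ (x_nn − x_i), where x_nn is one of the k nearest minority neighbours of x_i and λ is drawn uniformly from [0, 1). The sketch below is a simplified illustration of that step, not the library implementation used in the experiments; the function name and default k are assumptions.

```python
import random
from typing import List, Optional, Sequence


def smote_like_sample(
    minority: Sequence[Sequence[float]],
    k: int = 2,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Create one synthetic point between a minority sample and a near neighbour."""
    rng = rng or random.Random(0)
    base = rng.choice(minority)
    # The k nearest minority neighbours of the base point (excluding itself).
    neighbours = sorted(
        (p for p in minority if p is not base),
        key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)),
    )[:k]
    neighbour = rng.choice(neighbours)
    lam = rng.random()  # interpolation factor in [0, 1)
    return [a + lam * (b - a) for a, b in zip(base, neighbour)]
```

Because each synthetic point lies on the segment between two real minority samples, oversampling must happen after the train/validation split; otherwise a synthetic training point can be derived from a validation sample.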

For LLMs, which primarily process unstructured text rather than tabular feature vectors, the direct application of SMOTE is not straightforward. However, analogous strategies can be employed to balance classes effectively within the textual modality. Specifically, the generative capabilities of LLMs enable synthetic minority class data augmentation through prompt-based generation of novel, semantically coherent vulnerability-related log entries. This form of data augmentation serves as a textual equivalent to SMOTE, increasing minority class representation by expanding the diversity of training samples without exact duplication.

By incorporating such synthetic minority-class examples into fine-tuning or few-shot learning phases, LLMs can improve their sensitivity and robustness to rare cybersecurity events. Additionally, combining data augmentation with cost-sensitive training or weighted loss functions further addresses class imbalance during model optimization.

The choices and tuning of these models are motivated by the need to balance model complexity, generalization, and computational efficiency in the challenging context of cybersecurity log analysis. Boosting models, like XGBoost and LightGBM, have demonstrated superior performance in recent cybersecurity applications [44], while Random Forest provides a strong baseline with interpretable ensemble behavior. Isolation Forest adds an unsupervised detection layer, valuable for identifying novel or rare anomalies.

Table 13 summarizes the implemented models, their key parameters, and the rationale behind these choices within our experimental framework.

The architecture of the classical machine learning models we used is shown in Figure 13. The pipeline involves raw dataset input, temporal and categorical feature engineering, missing value imputation, feature standardization and selection, followed by training and prediction phases using classical models such as XGBoost, LightGBM, and Random Forest.

3.7. Mathematical Justification for Model Selection

We now compare LLMs with traditional machine learning models for cybersecurity log classification, providing a detailed mathematical and statistical justification for the observed superior performance of LLMs. The models considered include XGBoost, LightGBM, Random Forest, Isolation Forest, and transformer-based LLMs such as GPT variants and domain-adapted models.

Traditional ML models such as XGBoost and LightGBM are based on gradient-boosted decision trees [39,40]. Their predictive function