Leveraging Transfer Learning for Determining Germination Percentages in Gray Mold Disease (Botrytis cinerea)

Abstract

1. Introduction

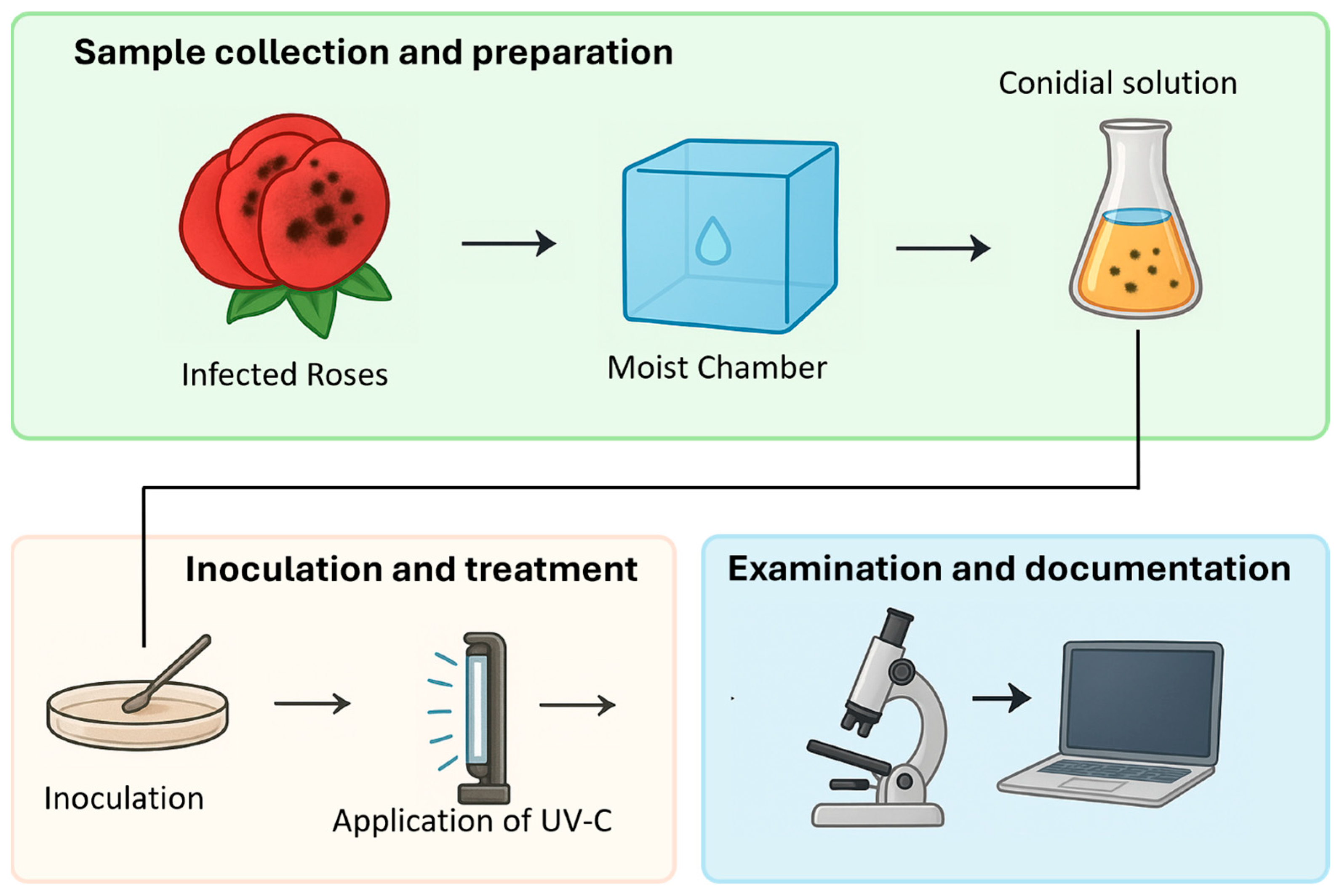

2. Materials and Methods

2.1. Extraction and Culture of Botrytis cinerea

2.2. UV-C Radiation Experiments

2.3. Dataset Generation

2.3.1. Image Acquisition

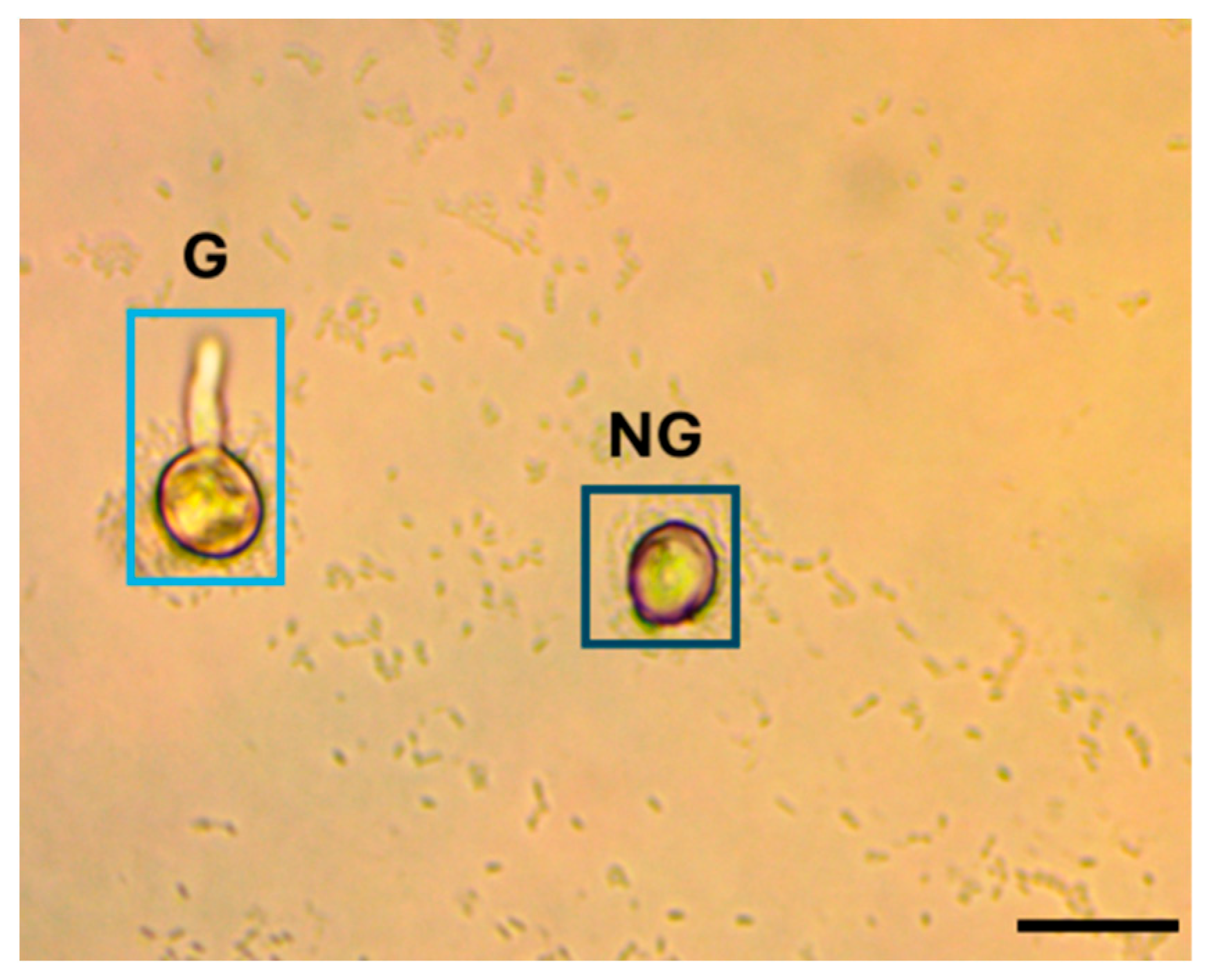

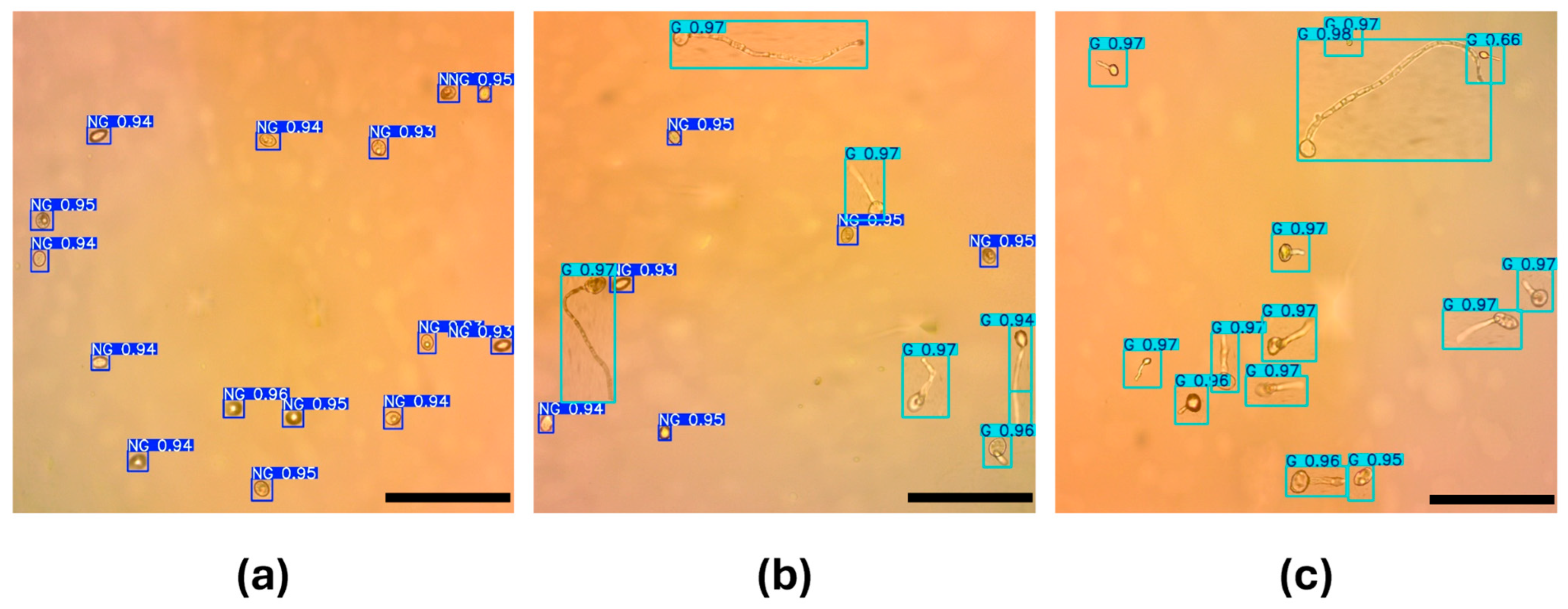

2.3.2. Image Labeling

2.3.3. Dataset Synthesis and Augmentation

- 1.

- The individual annotated conidia were randomly rotated before being inserted into the background images at 40×. To implement a data augmentation strategy without introducing bias from minor variations, each crop was rotated at discrete angles of 90°, 180°, or 270°. This operation is described by the rotation matrix in Equation (1).

- 2.

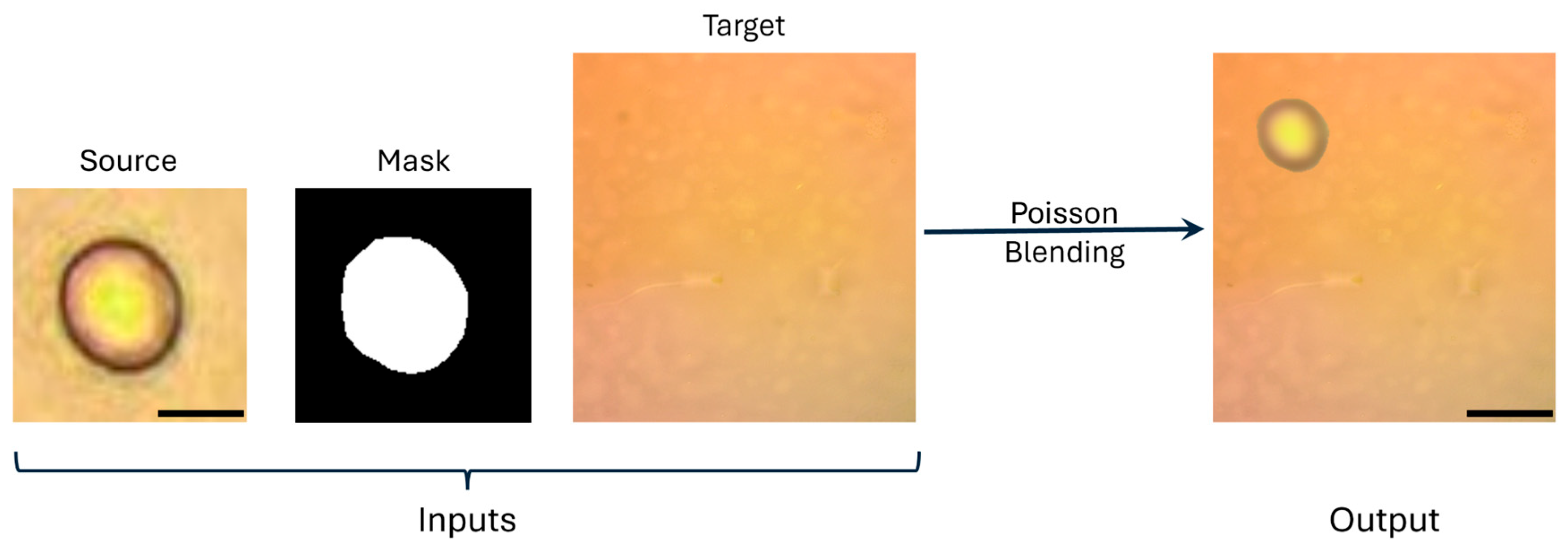

- Augmented crops were inserted into clean background fields, also captured at 40×. We applied the Poisson blending technique because it is an image composition approach that preserves the local gradient structure of the inserted object while adapting to the illumination and texture of the background, avoiding visible seams [59]. Figure 3 shows this process that begins with a source image, obtained through manual annotation, consisting of cropped conidia extracted from images acquired using a 40× microscope objective. A target image is then used, also captured with a 40× objective. A binary mask defines the exact region of the conidium to be blended. The blending was performed using OpenCV (version 4.11.0) seamlessClone in NORMAL_CLONE mode with full-resolution masks, ensuring that inserted crops conformed to local intensity and texture variations in the background. To reduce boundary carry-over, masks were morphologically eroded with a disk structuring element of 3 pixels. To objectively validate the realism of these synthetic images, we computed two no-reference image quality metrics: NIQE (Natural Image Quality Evaluator) [60] and BRISQUE (Blind/Referenceless Image Spatial Quality Evaluator) [61]. In both metrics, lower scores correspond to higher visual naturalness and fewer artifacts.

- 3.

- The centroid of each inserted conidium was randomly sampled, and proposals whose Intersection-over-Union (IoU) with previously placed instances exceeded a predefined threshold of 1% were discarded to prevent overlaps.
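The rotation and placement steps above can be illustrated with a minimal Python sketch. It is not the authors' code: the function names (`iou`, `place_crops`), the retry limit `max_tries`, and the choice to keep every box fully inside the background are assumptions made for illustration. Only the discrete angles (90°, 180°, 270°) and the 1% IoU threshold come from the text. The blending itself is omitted here.

```python
import random


def iou(a, b):
    """Intersection-over-Union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def place_crops(crop_sizes, bg_w, bg_h, iou_max=0.01, max_tries=100, rng=None):
    """Choose a rotation and a non-overlapping position for each (width, height) crop.

    Assumes every crop is smaller than the background in both dimensions.
    """
    rng = rng or random.Random()
    placed = []
    for w, h in crop_sizes:
        angle = rng.choice((90, 180, 270))
        if angle in (90, 270):
            w, h = h, w  # a quarter turn swaps width and height
        for _ in range(max_tries):  # hypothetical retry limit
            # Sample the centroid so that the box stays inside the background.
            cx = rng.uniform(w / 2, bg_w - w / 2)
            cy = rng.uniform(h / 2, bg_h - h / 2)
            box = (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)
            # Discard proposals that overlap an earlier instance by more than 1% IoU.
            if all(iou(box, p["box"]) <= iou_max for p in placed):
                placed.append({"box": box, "angle": angle})
                break
    return placed


if __name__ == "__main__":
    layout = place_crops([(40, 20)] * 10, bg_w=640, bg_h=640, rng=random.Random(0))
    print(len(layout), "crops placed")
```

A crop that cannot be placed within `max_tries` attempts is simply skipped in this sketch, so a crowded background yields fewer instances rather than overlapping ones.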

2.3.4. Dataset Preparation and Subset Allocation

2.4. Single-Stage Detection Models

2.4.1. YOLOv8

2.4.2. YOLOv11

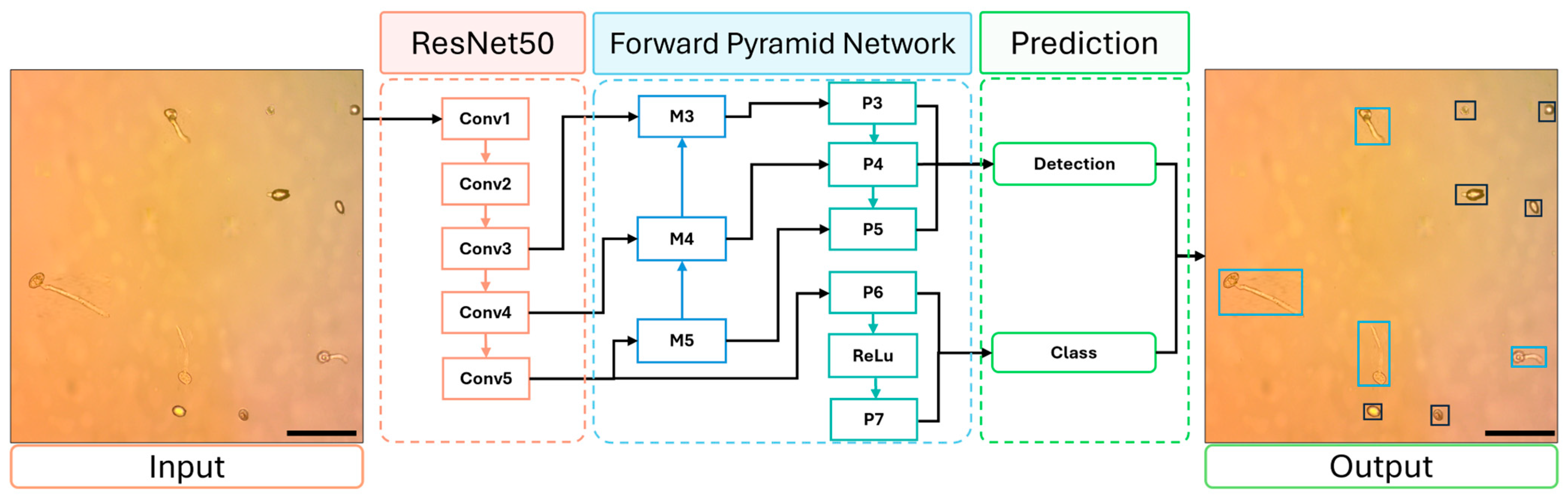

2.4.3. RetinaNet

2.5. Evaluation Metrics

2.6. Transfer Learning and Training Protocol

3. Results

3.1. Training and Validation Results

3.2. Test of the Models for Conidium Detection

3.3. Germination Percentage Estimation and Comparison with the Manual Approach

4. Discussion

Limitations and Sources of Error

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Deshpande, D.; Chhugani, K.; Ramesh, T.; Pellegrini, M.; Shiffman, S.; Abedalthagafi, M.S.; Alqahtani, S.; Ye, J.; Liu, X.S.; Leek, J.T.; et al. The Evolution of Computational Research in a Data-Centric World. Cell 2024, 187, 4449–4457.

2. Andersen, L.K.; Reading, B.J. A Supervised Machine Learning Workflow for the Reduction of Highly Dimensional Biological Data. Artif. Intell. Life Sci. 2024, 5, 100090.

3. Reddy, G.S.; Manoj, S.; Reddy, K. Leveraging Machine Learning Techniques in Agriculture: Applications, Challenges, and Opportunities. J. Exp. Agric. Int. 2025, 47, 43–57.

4. Xu, C.; Jackson, S.A. Machine Learning and Complex Biological Data The Revolution of Biological Techniques and Demands for New Data Mining Methods. Genome Biol. 2019, 20, 76.

5. Aijaz, N.; Lan, H.; Raza, T.; Yaqub, M.; Iqbal, R.; Pathan, M.S. Artificial Intelligence in Agriculture: Advancing Crop Productivity and Sustainability. J. Agric. Food Res. 2025, 20, 101762.

6. Dean, R.; Van Kan, J.A.L.; Pretorius, Z.A.; Hammond-Kosack, K.E.; Di Pietro, A.; Spanu, P.D.; Rudd, J.J.; Dickman, M.; Kahmann, R.; Ellis, J.; et al. The Top 10 Fungal Pathogens in Molecular Plant Pathology. Mol. Plant Pathol. 2012, 13, 414–430.

7. Cheung, N.; Tian, L.; Liu, X.; Li, X. The Destructive Fungal Pathogen Botrytis cinerea—Insights from Genes Studied with Mutant Analysis. Pathogens 2020, 9, 923.

8. Elad, Y.; Williamson, B.; Tudzynski, P.; Delen, N. Botrytis: Biology, Pathology and Control; SpringerNature: Berlin, Germany, 2007; pp. 1–403.

9. Orozco-Mosqueda, M.C.; Kumar, A.; Fadiji, A.E.; Babalola, O.O.; Puopolo, G.; Santoyo, G. Agroecological Management of the Grey Mould Fungus. Plants 2023, 12, 637.

10. Iwaniuk, P.; Lozowicka, B. Biochemical Compounds and Stress Markers in Lettuce upon Exposure to Pathogenic Botrytis cinerea and Fungicides Inhibiting Oxidative Phosphorylation. Planta 2022, 255, 61.

11. Darras, A.I.; Joyce, D.C.; Terry, L.A. Postharvest UV-C Irradiation on Cut Freesia Hybrida, L. Inflorescences Suppresses Petal Specking Caused by Botrytis cinerea. Postharvest Biol. Technol. 2010, 55, 186–188.

12. Marquenie, D.; Lammertyn, J.; Geeraerd, A.H.; Soontjens, C.; Van Impe, J.F.; Nicolaï, B.M.; Michiels, C.W. Inactivation of Conidia of Botrytis cinerea and Monilinia Fructigena Using UV-C and Heat Treatment. Int. J. Food Microbiol. 2002, 74, 27–35.

13. Marquenie, D.; Geeraerd, A.H.; Lammertyn, J.; Soontjens, C.; Van Impe, J.F.; Michiels, C.W.; Nicolaï, B.M. Combinations of Pulsed White Light and UV-C or Mild Heat Treatment to Inactivate Conidia of Botrytis cinerea and Monilia Fructigena. Int. J. Food Microbiol. 2003, 85, 185–196.

14. Pan, J.; Vicente, A.R.; Martínez, G.A.; Chaves, A.R.; Civello, P.M. Combined Use of UV-C Irradiation and Heat Treatment to Improve Postharvest Life of Strawberry Fruit. J. Sci. Food Agric. 2004, 84, 1831–1838.

15. Janisiewicz, W.J.; Takeda, F.; Glenn, D.M.; Camp, M.J.; Jurick, W.M. Dark Period Following UV-C Treatment Enhances Killing of Botrytis cinerea Conidia and Controls Gray Mold of Strawberries. Phytopathology 2016, 106, 386–394.

16. Jin, P.; Wang, H.; Zhang, Y.; Huang, Y.; Wang, L.; Zheng, Y. UV-C Enhances Resistance against Gray Mold Decay Caused by Botrytis cinerea in Strawberry Fruit. Sci. Hortic. 2017, 225, 106–111.

17. Mercier, J.; Roussel, D.; Charles, M.-T.; Arul, J. Systemic and Local Responses Associated with UV- and Pathogen-Induced Resistance to Botrytis cinerea in Stored Carrot. Phytopathology 2000, 90, 981–986.

18. Mercier, J.; Baka, M.; Reddy, B.; Corcuff, R.; Arul, J. Shortwave Ultraviolet Irradiation for Control of Decay Caused by Botrytis cinerea in Bell Pepper: Induced Resistance and Germicidal Effects. J. Am. Soc. Hortic. Sci. 2001, 126, 128–133.

19. Charles, M.T.; Makhlouf, J.; Arul, J. Physiological Basis of UV-C Induced Resistance to Botrytis cinerea in Tomato Fruit. II. Modification of Fruit Surface and Changes in Fungal Colonization. Postharvest Biol. Technol. 2008, 47, 21–26.

20. Charles, M.T.; Benhamou, N.; Arul, J. Physiological Basis of UV-C Induced Resistance to Botrytis cinerea in Tomato Fruit. III. Ultrastructural Modifications and Their Impact on Fungal Colonization. Postharvest Biol. Technol. 2008, 47, 27–40.

21. Charles, M.T.; Goulet, A.; Arul, J. Physiological Basis of UV-C Induced Resistance to Botrytis cinerea in Tomato Fruit. IV. Biochemical Modification of Structural Barriers. Postharvest Biol. Technol. 2008, 47, 41–53.

22. Charles, M.T.; Tano, K.; Asselin, A.; Arul, J. Physiological Basis of UV-C Induced Resistance to Botrytis cinerea in Tomato Fruit. V. Constitutive Defence Enzymes and Inducible Pathogenesis-Related Proteins. Postharvest Biol. Technol. 2009, 51, 414–424.

23. Nigro, F.; Ippolito, A.; Lima, G. Use of UV-C to Reduce Storage Rot of Table Grapes. Postharvest Biol. Technol. 1998, 13, 171–181.

24. Ouhibi, C.; Attia, H.; Nicot, P.; Lecompte, F.; Vidal, V.; Lachaâl, M.; Urban, L.; Aarrouf, J. Effects of Nitrogen Supply and of UV-C Irradiation on the Susceptibility of Lactuca Sativa L to Botrytis cinerea and Sclerotinia Minor. Plant Soil 2015, 393, 35–46.

25. Vega, K.; Ochoa, S.; Patiño, L.F.; Herrera-Ramírez, J.A.; Gómez, J.A.; Quijano, J.C. UV-C Radiation for Control of Gray Mold Disease in Postharvest Cut Roses. J. Plant Prot. Res. 2020, 60, 351–361.

26. Valencia, M.A.; Patiño, L.F.; Herrera-Ramírez, J.A.; Castañeda, D.A.; Gómez, J.A.; Quijano, J.C. Using UV-C Radiation and Image Analysis for Fungus Control in Tomato Plants. Opt. Pura Y Apl. 2017, 50, 369–378.

27. Cucu, M.A.; Choudhary, R.; Trkulja, V.; Garg, S.; Matić, S. Utilizing Environmentally Friendly Techniques for the Sustainable Control of Plant Pathogens: A Review. Agronomy 2025, 15, 1551.

28. Nassr, S. Effect of Factors on Conidium Germination of Botrytis cinerea in Vitro. Int. J. Plant Soil Sci. 2013, 2, 41–54.

29. Uddin, M.J.; Huang, X.; Lu, X.; Li, S. Increased Conidia Production and Germination In Vitro Correlate with Virulence Enhancement in Fusarium Oxysporum f. Sp. Cucumerinum. J. Fungi 2023, 9, 847.

30. Lopes, R.B.; Martins, I.; Souza, D.A.; Faria, M. Influence of Some Parameters on the Germination Assessment of Mycopesticides. J. Invertebr. Pathol. 2013, 112, 236–242.

31. Waqas, M.; Naseem, A.; Humphries, U.W.; Hlaing, P.T.; Dechpichai, P.; Wangwongchai, A. Applications of Machine Learning and Deep Learning in Agriculture: A Comprehensive Review. Green Technol. Sustain. 2025, 3, 100199.

32. Ballena-Ruiz, J.; Arcila-Diaz, J.; Tuesta-Monteza, V. Automated Detection and Counting of Gossypium Barbadense Fruits in Peruvian Crops Using Convolutional Neural Networks. AgriEngineering 2025, 7, 152.

33. Huang, T.-S.; Wang, K.; Ye, X.-Y.; Chen, C.-S.; Chang, F.-C. Attention-Guided Transfer Learning for Identification of Filamentous Fungi Encountered in the Clinical Laboratory. Microbiol. Spectr. 2023, 11, e0461122.

34. Zhou, H.; Lai, Q.; Huang, Q.; Cai, D.; Huang, D.; Wu, B. Automatic Detection of Rice Blast Fungus Spores by Deep Learning-Based Object Detection: Models, Benchmarks and Quantitative Analysis. Agriculture 2024, 14, 290.

35. Zieliński, B.; Sroka-Oleksiak, A.; Rymarczyk, D.; Piekarczyk, A.; Brzychczy-Włoch, M. Deep Learning Approach to Describe and Classify Fungi Microscopic Images. PLoS ONE 2020, 15, e0234806.

36. Attri, I.; Awasthi, L.K.; Sharma, T.P.; Rathee, P. A Review of Deep Learning Techniques Used in Agriculture. Ecol. Inform. 2023, 77, 102217.

37. Hossen, M.I.; Awrangjeb, M.; Pan, S.; Al Mamun, A. Transfer Learning in Agriculture: A Review. Artif. Intell. Rev. 2025, 58, 97.

38. Chen, J.; Chen, J.; Zhang, D.; Sun, Y.; Nanehkaran, Y.A. Using Deep Transfer Learning for Image-Based Plant Disease Identification. Comput. Electron. Agric. 2020, 173, 105393.

39. Shoaib, M.; Sadeghi-Niaraki, A.; Ali, F.; Hussain, I.; Khalid, S. Leveraging Deep Learning for Plant Disease and Pest Detection: A Comprehensive Review and Future Directions. Front. Plant Sci. 2025, 16, 1538163.

40. Pacal, I.; Kunduracioglu, I.; Alma, M.H.; Deveci, M.; Kadry, S.; Nedoma, J.; Slany, V.; Martinek, R. A Systematic Review of Deep Learning Techniques for Plant Diseases. Artif. Intell. Rev. 2024, 57, 304.

41. Bharman, P.; Ahmad Saad, S.; Khan, S.; Jahan, I.; Ray, M.; Biswas, M. Deep Learning in Agriculture: A Review. Asian J. Res. Comput. Sci. 2022, 13, 28–47.

42. Kiyuna, T.; Cosatto, E.; Hatanaka, K.C.; Yokose, T.; Tsuta, K.; Motoi, N.; Makita, K.; Shimizu, A.; Shinohara, T.; Suzuki, A.; et al. Evaluating Cellularity Estimation Methods: Comparing AI Counting with Pathologists’ Visual Estimates. Diagnostics 2024, 14, 1115.

43. Xie, W.; Noble, J.A.; Zisserman, A. Microscopy Cell Counting and Detection with Fully Convolutional Regression Networks. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2018, 6, 283–292.

44. Li, C.; Ma, X.; Deng, J.; Li, J.; Liu, Y.; Zhu, X.; Liu, J.; Zhang, P. Machine Learning-Based Automated Fungal Cell Counting under a Complicated Background with Ilastik and ImageJ. Eng. Life Sci. 2021, 21, 769–777.

45. Patakvölgyi, V.; Kovács, L.; Drexler, D.A. Artificial Neural Networks Based Cell Counting Techniques Using Microscopic Images: A Review. In Proceedings of the 2024 IEEE 18th International Symposium on Applied Computational Intelligence and Informatics (SACI), Timisoara, Romania, 23–25 May 2024; pp. 327–332.

46. Aldughayfiq, B.; Ashfaq, F.; Jhanjhi, N.Z.; Humayun, M. YOLOv5-FPN: A Robust Framework for Multi-Sized Cell Counting in Fluorescence Images. Diagnostics 2023, 13, 2280.

47. Emre Dedeagac, C.; Koyuncu, C.F.; Adams, M.M.; Edemen, C.; Ugurdag, B.C.; Ilgim Ardic-Avci, N.; Fatih Ugurdag, H. A Guided-Ensembling Approach for Cell Counting in Fluorescence Microscopy Images. IEEE Access 2024, 12, 1.

48. Berg, S.; Kutra, D.; Kroeger, T.; Straehle, C.N.; Kausler, B.X.; Haubold, C.; Schiegg, M.; Ales, J.; Beier, T.; Rudy, M.; et al. Ilastik: Interactive Machine Learning for (Bio)Image Analysis. Nat. Methods 2019, 16, 1226–1232.

49. Li, K.; Zhu, X.; Qiao, C.; Zhang, L.; Gao, W.; Wang, Y. The Gray Mold Spore Detection of Cucumber Based on Microscopic Image and Deep Learning. Plant Phenomics 2023, 5, 0011.

50. Lei, Y.; Yao, Z.; He, D. Automatic Detection and Counting of Urediniospores of Puccinia Striiformis f. Sp. Tritici Using Spore Traps and Image Processing. Sci. Rep. 2018, 8, 13647.

51. Zhao, Y.; Liu, S.; Hu, Z.; Bai, Y.; Shen, C.; Shi, X. Separate Degree Based Otsu and Signed Similarity Driven Level Set for Segmenting and Counting Anthrax Spores. Comput. Electron. Agric. 2020, 169, 105230.

52. Zhao, E.; Zhao, H.; Liu, G.; Jiang, J.; Zhang, F.; Zhang, J.; Luo, C.; Chen, B.; Yang, X. Automated Recognition of Conidia of Nematode-Trapping Fungi Based on Improved YOLOv8. IEEE Access 2024, 12, 81314–81328.

53. Zhang, M.; Zhao, J.; Hoshino, Y. Deep Learning-Based High-Throughput Detection of in Vitro Germination to Assess Pollen Viability from Microscopic Images. J. Exp. Bot. 2023, 74, 6551–6562.

54. Colmer, J.; O’Neill, C.M.; Wells, R.; Bostrom, A.; Reynolds, D.; Websdale, D.; Shiralagi, G.; Lu, W.; Lou, Q.; Le Cornu, T.; et al. SeedGerm: A Cost-Effective Phenotyping Platform for Automated Seed Imaging and Machine-Learning Based Phenotypic Analysis of Crop Seed Germination. New Phytol. 2020, 228, 778–793.

55. Zanetoni, H.H.R.; Araujo, L.G.; Almeida, R.P.; Cabral, C.E.A. Artificial Intelligence in the Identification of Germinated Soybean Seeds. AgriEngineering 2025, 7, 169.

56. Genze, N.; Bharti, R.; Grieb, M.; Schultheiss, S.J.; Grimm, D.G. Accurate Machine Learning-Based Germination Detection, Prediction and Quality Assessment of Three Grain Crops. Plant Methods 2020, 16, 157.

57. Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

58. Lin, T.-Y.; Maire, M.; Belongie, S.J.; Bourdev, L.D.; Girshick, R.B.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the 13th European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014.

59. Pérez, P.; Gangnet, M.; Blake, A. Poisson Image Editing. ACM Trans. Graph. 2003, 22, 313–318.

60. Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “Completely Blind” Image Quality Analyzer. IEEE Signal Process. Lett. 2013, 20, 209–212.

61. Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-Reference Image Quality Assessment in the Spatial Domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.

62. Jocher, G.; Qiu, J. Ultralytics YOLO11; Ultralytics: Frederick, MD, USA, 2024.

63. Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLOv8; Ultralytics: Frederick, MD, USA, 2023.

64. Hahlbohm, A.; Struck, C.; de Mol, F.; Strehlow, B. UV-C Treatment Has a Higher Efficacy on Reducing Germination of Spores Rather than Mycelium Growth. Eur. J. Plant Pathol. 2025, 172, 761–777.

65. Jiang, L.; Chen, W.; Shi, H.; Zhang, H.; Wang, L. Cotton-YOLO-Seg: An Enhanced YOLOV8 Model for Impurity Rate Detection in Machine-Picked Seed Cotton. Agriculture 2024, 14, 1499.

66. Wang, N.; Cao, H.; Huang, X.; Ding, M. Rapeseed Flower Counting Method Based on GhP2-YOLO and StrongSORT Algorithm. Plants 2024, 13, 2388.

| Subset | High-Resolution Images | Labeled Bounding Boxes | Percentage of Images |
|---|---|---|---|
| Training | 400 | 4006 | 80% |
| Validation | 50 | 484 | 10% |
| Test | 50 | 494 | 10% |
| Total | 500 | 4984 | 100% |
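An 80/10/10 allocation like the one in the table can be reproduced with a short Python sketch. This is only an illustration: the seeded random shuffle and the function name `split_dataset` are assumptions, since the allocation procedure is not described in this excerpt.

```python
import random


def split_dataset(items, fractions=(0.8, 0.1, 0.1), seed=0):
    """Shuffle items reproducibly and cut them into train/validation/test subsets."""
    items = list(items)
    random.Random(seed).shuffle(items)  # assumed: a seeded random shuffle
    n_train = round(fractions[0] * len(items))
    n_val = round(fractions[1] * len(items))
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # the remainder goes to the test subset
    return train, val, test


if __name__ == "__main__":
    train, val, test = split_dataset(range(500))
    print(len(train), len(val), len(test))
```

Splitting at the image level, as done here, keeps all bounding boxes of one image in the same subset, which is why the box counts in the table are close to but not exactly 80/10/10.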

| Model | Accuracy (bbox mAP on COCO) | Inference Speed (FPS) | Model Size |
|---|---|---|---|
| YOLOv8 | 52.9% | ~95 | ~87 MB |
| YOLOv11 | 53.4% | ~250 | ~49 MB |
| RetinaNet | 33.5% | ~18 | ~146 MB |

| Hyperparameter | YOLOv8 | YOLOv11 | RetinaNet |
|---|---|---|---|
| Batch size | 16 | 16 | 16 |
| GPU | NVIDIA T4 | NVIDIA T4 | NVIDIA T4 |
| Optimizer | AdamW | AdamW | AdamW |
| Epochs | 50 | 50 | 50 |
| Image size | 640 | 640 | 640 |
| Scheduler | Cosine + LR | Cosine with warmup | Step LR |
| Loss | BCE + CIoU loss | BCE + CIoU loss | Focal Loss |
| Data augmentation | HSV | HSV | HSV |
| Backbone | C2f-based (custom) | EfficientRep | ResNet50-FPN |
| Pretrained | COCO | COCO | COCO |
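For the two YOLO models, the shared settings in the table map onto training arguments of the Ultralytics Python package roughly as sketched below. This is an illustrative configuration, not the authors' script: the weights file `yolov8l.pt` and the dataset file `conidia.yaml` are hypothetical placeholders, and the mapping of the "Cosine" scheduler entry to `cos_lr=True` is an assumption. RetinaNet is not covered by this sketch.

```python
# Shared settings taken from the hyperparameter table.
TRAIN_KWARGS = {
    "epochs": 50,
    "batch": 16,
    "imgsz": 640,
    "optimizer": "AdamW",
    "cos_lr": True,  # assumed mapping of the "Cosine" scheduler entry
}


def fine_tune(weights="yolov8l.pt", data="conidia.yaml"):
    """Fine-tune a COCO-pretrained Ultralytics detector (transfer learning).

    Both default arguments are hypothetical placeholders: the model variant
    and the dataset description file are not given in the text.
    """
    # Imported lazily so the configuration can be inspected without the package.
    from ultralytics import YOLO

    model = YOLO(weights)  # loads the pretrained weights
    return model.train(data=data, **TRAIN_KWARGS)


if __name__ == "__main__":
    print(TRAIN_KWARGS)
```

Keeping the settings in one dictionary makes it easy to train YOLOv8 and YOLOv11 under identical conditions by changing only the `weights` argument.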

| Model | Class | Precision | Recall | F1-Score | IoU | AP50 | mAP50 | Inference Time (s) |
|---|---|---|---|---|---|---|---|---|
| YOLOv8 | G | 0.958 | 0.988 | 0.973 | 0.920 | 0.989 | 0.992 | 0.1556 |
| YOLOv8 | NG | 0.973 | 0.995 | 0.986 | 0.913 | 0.995 | 0.992 | 0.1556 |
| YOLOv11 | G | 0.977 | 0.998 | 0.988 | 0.968 | 0.998 | 0.997 | 0.1443 |
| YOLOv11 | NG | 0.995 | 0.995 | 0.995 | 0.950 | 0.995 | 0.997 | 0.1443 |
| RetinaNet | G | 0.934 | 0.990 | 0.966 | 0.913 | 0.995 | 0.997 | 3.83 |
| RetinaNet | NG | 0.922 | 0.995 | 0.957 | 0.884 | 0.999 | 0.997 | 3.83 |
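The relationship between the columns of this table can be checked with a few lines of Python. The sketch uses the standard definitions (F1 as the harmonic mean of precision and recall, mAP50 as the mean of the per-class AP50 values) and is not the authors' evaluation code. Because the table reports rounded precision and recall, recomputed F1 values may differ from the listed ones in the last digit.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def mean_ap(ap_per_class):
    """Mean average precision as the mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


if __name__ == "__main__":
    # YOLOv8, class G: precision 0.958 and recall 0.988 from the table.
    print(round(f1_score(0.958, 0.988), 3))  # 0.973
    # YOLOv8: AP50 of 0.989 (G) and 0.995 (NG) from the table.
    print(round(mean_ap([0.989, 0.995]), 3))  # 0.992
```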

| Treatment (Groups of 10 Images) | Mean Germination Percentage (Manual) | Mean Germination Percentage (YOLOv11) | RMSE (%) |
|---|---|---|---|
| 1080 J·m⁻² UV-C treatment | 2.31 | 2.25 | 0.17 |
| 810 J·m⁻² UV-C treatment | 44.80 | 44.44 | 3.07 |
| 0 J·m⁻² UV-C (Control) | 95.59 | 99.9 | 8.72 |
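The quantities in this table can be computed from per-image detection counts as in the following sketch. It rests on two assumptions that this excerpt does not state explicitly: that the germination percentage is the share of germinated (G) conidia among all detected conidia (G + NG) in an image, and that the RMSE compares manual and model percentages image by image within each group. The counts in the example are hypothetical.

```python
import math


def germination_percentage(n_germinated, n_non_germinated):
    """Share of germinated conidia among all counted conidia, in percent."""
    total = n_germinated + n_non_germinated
    if total == 0:
        raise ValueError("no conidia counted in this image")
    return 100.0 * n_germinated / total


def rmse(manual, predicted):
    """Root-mean-square error between paired manual and model percentages."""
    if len(manual) != len(predicted) or not manual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(m - p) ** 2 for m, p in zip(manual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


if __name__ == "__main__":
    # Hypothetical (G, NG) counts for three images, manual versus model.
    manual = [germination_percentage(g, ng) for g, ng in [(9, 11), (10, 10), (8, 12)]]
    model = [germination_percentage(g, ng) for g, ng in [(9, 11), (11, 9), (8, 12)]]
    print(manual, model, rmse(manual, model))
```

Note that the mean percentages of two methods can agree closely while the per-image RMSE remains larger, because errors of opposite sign cancel in the mean but not in the RMSE.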


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gómez-Meneses, L.M.; Pérez, A.; Sajona, A.; Patiño, L.F.; Herrera-Ramírez, J.; Carrasquilla, J.; Quijano, J.C. Leveraging Transfer Learning for Determining Germination Percentages in Gray Mold Disease (Botrytis cinerea). AgriEngineering 2025, 7, 303. https://doi.org/10.3390/agriengineering7090303
