Abstract

Prays oleae Bernard, 1788, or the olive moth, is a significant pest in Croatian olive groves. This study aims to develop a functional model based on an artificial neural network to detect olive moths in real time. This study was conducted in two different orchards in Zadar County, Croatia, in the periods from April to September 2022 and from May to July 2023. Moth samples were collected by placing traps with adhesive pads in these orchards. Photos of the pads were taken every week and were later annotated and used to develop the dataset for the artificial neural network. This study primarily focused on the average precision parameter to evaluate the model’s detection capabilities. The average AP value for all classes was 0.48, while the average AP value for the Olive_trap_moth class, which detected adult P. oleae, was 0.59. The model showed the best results at an IoU threshold of 50%, achieving an AP50 value of 0.75. The AP75 value was 0.56 at an IoU = 75%. The mean average precision (mAP) was 0.48. This model is a promising tool for P. oleae detection; however, further research is advised.

1. Introduction

The olive moth (Prays oleae Bernard, 1788) (Lepidoptera: Praydidae) is one of the most important pests in the Mediterranean basin [1], along with the olive fruit fly (Bactrocera oleae (Rossi, 1790)) (Diptera: Tephritidae). As a monophagous species, the olive moth feeds only on one plant species, the olive [2]. This pest poses a considerable threat to olive yields, with potential crop losses ranging from 20% to 90%, depending on climatic conditions and geographical location [3].

Three generations of P. oleae occur annually. The anthophagous generation emerges from April to May, primarily damaging olive flower buds, where females oviposit [4]. A single larva can damage up to 40 flower buds [3]. The carpophagous generation is the most economically important due to its tendency to cause major olive fruit drops [5]. It occurs mostly in phenophases 71 to 75 on the Biologische Bundesanstalt, Bundessortenamt und Chemische Industrie (BBCH) scale [6]. The emergence of adults of this generation occurs in June when olive fruits are in their swelling stage [7]. Adult females oviposit near the stem where the emerging larvae make tunnels into the fruit’s stone, causing damage [2]. High temperatures, which do not exceed 30 °C, favor the fast development of moths in this phase. However, extreme temperatures can slow down and eventually stop the development of the carpophagous generation [8]. Early detection of the carpophagous generation is crucial to a healthy and productive olive harvest [9]. Shehata et al. [10] describe how increasing temperatures decrease the days needed for eggs, larvae, pupae, and adults to fully develop. In addition, the oviposition capacities of P. oleae are positively correlated with increased temperature, meaning the number of oviposited eggs also increases with temperature and relative humidity. Oviposition occurs at night, while the first flight of adults occurs during the day [10].

The phyllophagous generation does not cause olive fruit to drop and is not economically important [9]. Adults from this generation appear in September and feed on olive leaves, which results in major leaf damage and defoliation [9]. These adults overwinter and resume their reproduction cycle in spring the following year [9].

Olive trees have been one of the most valuable plant species since ancient times in the Mediterranean basin [11]. Managing the P. oleae population is of key importance in these areas. Today, pest management consists of using integrated pest management strategies [12]. These strategies may include introducing natural enemies or targeted pesticide application [2]. However, traditional pest control and pest monitoring methods often suffer from inefficiency, high costs, and delayed response times [12]. To combat these problems, a need for more practical solutions arises.

Artificial neural networks (ANNs) are one of the technologies proposed in precision agriculture for early pest and disease detection [13]. ANNs are used for image recognition (IR) as a means of early pest detection [14]. They were designed to imitate processes in the human brain [15]. An ANN usually consists of an input layer, one or more hidden layers, and an output layer [16]. One example of their application is object detection in red, green, and blue (RGB) imagery [17]. Their value comes from reducing data processing time while keeping high detection accuracy [16]. To address the limitations of traditional farming practices, researchers have increasingly adopted ANN-based models and other machine learning techniques to detect plant pathogens and pests [18].

Čirjak et al. [19] have developed an automatic monitoring system for the early detection of Leucoptera malifoliella (O. Costa, 1836) (Lepidoptera: Lyonetiidae) in its natural environment. The authors have also created a monitoring system for detecting leaf damage, thus obtaining a complete picture of the overall situation in the orchard. Other scientists focused more on reducing the amount of data needed for developing a fully functional ANN-based model. Popescu et al. [20] used ANNs for the automatic detection of Halyomorpha halys (Stål, 1855) (Hemiptera: Pentatomidae). The authors used several different types of ANNs with various amounts of data input. Their goal was to emphasize the negative correlation between models’ detection accuracy and the amount of data needed, which means that the best models should use less data and still achieve great pest detection accuracy. Similarly, Hong et al. [21] used ANNs in their research for the simultaneous detection of several moth types: Spodoptera litura (Fabricius, 1775) (Lepidoptera: Noctuidae), Spodoptera exigua (Hübner, 1808) (Lepidoptera: Noctuidae), and Helicoverpa assulta (Guenée, 1852) (Lepidoptera: Noctuidae). The focus was on the quantity of pest samples versus pest detection time. The authors also pointed out data loss due to moth overlapping, which decreased their model’s detection accuracy. Another convenient way to reduce the amount of data required is to improve an already existing dataset. Rajput et al. [22] emphasized image manipulation in their pest detection systems, meaning that image quality and datasets can be improved without the need to collect additional field data, which significantly reduces the labor needed for pest detection.

This paper aims to present the development of an artificial neural network-based model for the detection of P. oleae, as well as to identify the key parameters required for successful model training. Given the persistent presence of olive moths in olive groves, a model was specifically designed for P. oleae. Furthermore, the collected data may serve as a foundation for developing models targeting other pests in olives or even in other fruit crops.

Section 2 describes the three phases of the research. The Data Acquisition Process subsection describes the first phase: the methods used to gather the data that served as the base dataset for training the model, together with the prototype device for image acquisition. Data Preparation and Annotation describes the second phase, in which the collected data were prepared and annotated for further analysis. Artificial Neural Network and Parameters Used for Its Training covers the third and final phase, explaining how these data were used to develop an ANN-based device for P. oleae monitoring and which parameters were measured in this research.

Section 3 presents the parameters measured in the research and their values. Additionally, it discusses the similarities and differences between this model and ANN-based detection models used by other authors.

2. Materials and Methods

This research is split into three phases. The first phase refers to the collection of field data, which will serve as the training dataset for the ANN-based pest detection model. The second phase focuses on the annotation and categorization of pests and other objects within the collected images. Finally, the third phase involves the development of automatic P. oleae detection using data from earlier collected images, including the evaluation of the model’s accuracy and effectiveness.

2.1. Data Acquisition Process

The dataset needed for this research was obtained by capturing images of adhesive pads from delta traps that contained adult P. oleae moths. The data collection process was carried out between April and September 2022 and between May and July 2023 in olive groves located in Islam Grčki (44°09′15.4″ N 15°26′29.9″ E) and Poličnik (44°10′39.7″ N 15°21′52.9″ E) in Zadar County, Croatia. Six delta traps of the RAG type (CSalomon, Plant Protection Institute, Hungarian Academy of Science, Budapest, Hungary) were set in 2022 and eight were set in 2023, to collect enough data from the anthophagous and carpophagous generations of P. oleae. The traps were set diagonally in the orchards to randomize samples and to gather as much data as possible. They were set in treetops to increase the chance of an adult P. oleae landing on the adhesive pads.

RAG delta traps consist of an adhesive pad coated with a colorless and scentless glue, a plastic container, and pheromone ampules used to attract adult male P. oleae moths. These traps were fixed to the tree branches with a wire. During the data collection phase, the traps were inspected and the adhesive pads were changed weekly. This phase lasted from 28 April to 13 September 2022 and from 3 May to 31 July 2023.

The pads were carefully stored in transparent stretch wrap and transported under controlled conditions to maintain specimen integrity before image acquisition.

Images of the adhesive pads were captured using a modified image acquisition device. Images were taken once per week.

Figure 1 shows the prototype of a modified device for image collection, equipped with a 12.5-megapixel RGB camera mounted in protective polycarbonate housing.

Figure 1.

A modified prototype device and a single-board computer used for image acquisition are shown from different angles (left—front view; middle—sideways view; right—SBC).

The housing has two entrances on opposite sides. The primary purpose of the housing is to shield the camera from external factors that could cause damage. Additionally, it ensures consistent lighting conditions during image capture, maintaining uniformity across all collected data. Oscillations in lighting could have potentially disrupted the overall model precision.

The housing is 24 cm long, 16 cm wide, and 25 cm high. The top of the housing is made of metal, which ensures camera stability. The RGB camera has a horizontal field of view of 75 degrees and a 3.9 mm lens. The camera and the single-board computer (SBC) are connected by an 80 cm long flat flexible cable (FFC). Power is supplied by an external battery connected to the SBC by a USB-C cable.

The collected adhesive pads were placed at the bottom of the housing. Images were taken after removing all the light noise around the housing.

Image capture, storage, and data transfer were handled by the SBC, a Raspberry Pi 4 running its own operating system. The SBC was 11 cm long, 4 cm wide, and 11 cm high. Its built-in software and Ethernet connection enabled data storage and transfer to a cloud server.

2.2. Data Preparation and Annotation

Images of the adhesive pads were processed using the LabelImg 1.8.1 program [23]. It enabled the annotation of each P. oleae moth, other insects, and other objects on the adhesive pads. Each of these items was inspected by an expert and manually enclosed in a bounding box whose color indicated its class.

Three classes were defined to categorize the annotated objects, which were later used for the creation of the automatic P. oleae detection model. Those classes are Olive_trap_moth, used for P. oleae male moths; Trap_insects_other, used for other insects that appeared on the adhesive pads; and Trap_other, used for other objects.

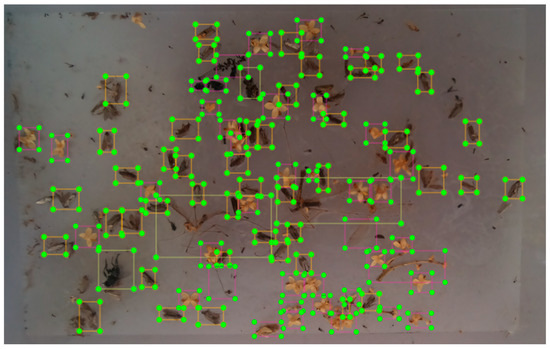

Figure 2 shows an example of the annotation process for one of the images using the LabelImg program. Orange bounding boxes were used to annotate P. oleae adults, green ones were used for other insects, and purple ones were used for other objects that appeared occasionally on the adhesive pads.

Figure 2.

Orange boxes represent the annotation of the Olive_trap_moth (adult P. oleae) class, purple boxes represent the Trap_other class, and green boxes represent the Trap_insects_other class.

Annotated images were saved in the Pascal Visual Object Classes (Pascal VOC) format. Pascal VOC annotations are stored as Extensible Markup Language (XML) files; the format is free and often used for the development of automatic models that use datasets with several different categories [24]. Blurred and incomplete objects, as well as objects found at the edges of the images, were not included in the annotation process, meaning they did not contribute to the development of this model. Additionally, the images were not resized or reshaped in any way during the preprocessing stage.
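To make the annotation format concrete, the following minimal sketch reads one Pascal VOC XML file of the kind LabelImg exports and counts the bounding boxes per class. The sample XML (file name, image size, and box coordinates) is invented for illustration and is not taken from the study's dataset; only the three class names come from the text above.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Hypothetical Pascal VOC annotation shaped like a LabelImg export.
# File name, image size, and coordinates are invented for illustration.
SAMPLE_XML = """
<annotation>
  <filename>pad_0001.jpg</filename>
  <size><width>4000</width><height>3000</height><depth>3</depth></size>
  <object>
    <name>Olive_trap_moth</name>
    <bndbox><xmin>120</xmin><ymin>340</ymin><xmax>210</xmax><ymax>400</ymax></bndbox>
  </object>
  <object>
    <name>Olive_trap_moth</name>
    <bndbox><xmin>900</xmin><ymin>1500</ymin><xmax>985</xmax><ymax>1570</ymax></bndbox>
  </object>
  <object>
    <name>Trap_other</name>
    <bndbox><xmin>2000</xmin><ymin>2100</ymin><xmax>2300</xmax><ymax>2250</ymax></bndbox>
  </object>
</annotation>
"""


def parse_voc(xml_text):
    """Return a list of (class_name, (xmin, ymin, xmax, ymax)) tuples."""
    root = ET.fromstring(xml_text.strip())
    boxes = []
    for obj in root.iter("object"):
        name = obj.findtext("name")
        bndbox = obj.find("bndbox")
        coords = tuple(
            int(bndbox.findtext(tag)) for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        boxes.append((name, coords))
    return boxes


annotations = parse_voc(SAMPLE_XML)
class_counts = Counter(name for name, _ in annotations)
print(class_counts)
```

Running the same function over every annotation file in a dataset and summing the counters would give per-class totals of the kind reported in Section 3.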

2.3. Artificial Neural Network and Parameters Used for Its Training

The visual dataset used for ANN learning consists of randomly chosen images acquired in the earlier stages of the research.

TensorFlow [25] was the platform of choice for developing this ANN-based model. In this research, it was used with the Python 3.6 programming language. Python is commonly used for machine learning and ANN-based models, supported by artificial intelligence (AI). It was developed by Guido van Rossum in 1991 as a programming language that can be used in a wide spectrum of applications [26]. Scalable and Efficient Object Detection Lite 4 (EfficientDet-Lite 4) is a base model used to develop other automated object monitoring models. EfficientDet was created in 2019 to improve AI-aided object detection and reduce its processing time [27]. The EfficientDet-Lite model uses 50 epochs by default, which means that this model went through the training set 50 times while learning to detect objects. EfficientDet uses a weighted bi-directional feature pyramid network (BiFPN), which means it assigns learnable weights to different input features while applying top-down and bottom-up multi-scale feature fusion [27]. Unlike its predecessors, the EfficientDet model uses fast normalized fusion, in which the value of each normalized weight falls between 0 and 1, which greatly increases the model’s feature extraction speed [27].
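The weighted fusion step described above (called fast normalized fusion in the EfficientDet paper [27]) can be sketched in a few lines. Each learnable weight is passed through a ReLU so it cannot be negative and is then divided by the sum of all weights plus a small constant, which keeps every normalized weight between 0 and 1 without an exponential. The feature vectors and raw weights below are toy values chosen for illustration; this is a sketch of the idea, not the training code used in this study.

```python
def fast_normalized_fusion(features, raw_weights, eps=1e-4):
    """Fuse equally sized feature vectors using non-negative, normalized weights.

    features    -- list of feature vectors (lists of floats) of equal length
    raw_weights -- one learnable scalar per feature vector
    eps         -- small constant that avoids division by zero
    """
    # ReLU keeps every weight non-negative.
    weights = [max(0.0, w) for w in raw_weights]
    total = sum(weights) + eps
    # Each normalized weight now lies in the interval [0, 1).
    normalized = [w / total for w in weights]
    fused = [
        sum(nw * feature[k] for nw, feature in zip(normalized, features))
        for k in range(len(features[0]))
    ]
    return fused, normalized


# Toy example: two input features, the second weighted three times as heavily.
toy_features = [[1.0, 2.0], [3.0, 4.0]]
toy_weights = [1.0, 3.0]
fused, normalized = fast_normalized_fusion(toy_features, toy_weights)
print(normalized)  # roughly [0.25, 0.75]
print(fused)       # roughly [2.5, 3.5]
```

Because the normalization is a plain division rather than a softmax, it is cheaper to evaluate, which is the speed advantage the paragraph above refers to.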

Loss function, average precision, and average recall are some of the terms commonly used to describe the effectiveness of an automated detection model [28].

The loss function describes the difference between the real annotated classes and the ANN-based model’s predictions of them. It can also be used as a function that measures the relevancy of the dataset used for the development of a model [29]. In other words, the loss function is the sum of all errors made by an automated detection model [29].

Average precision (AP) and average recall (AR) are statistical terms used to measure the accuracy of an ANN-based model. AP is defined as the average number of relevant data used in a dataset or as the number of relevant objects per image and is measured between 0 and 1 [30]. In this case, AR is used to compare the real and computer-detected amounts of adult P. oleae, other insects, and other objects in the dataset.

The mean average precision (mAP) is a value calculated by averaging the APs across all classes in a specific database [30]. The formula used to calculate it is as follows:

$$\mathrm{mAP} = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

where $AP_i$ is the average precision of the ith class and N is the total number of classes [30].
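As a worked check of this definition (mAP as the arithmetic mean of the per-class AP values), the short sketch below applies it to the three per-class AP values reported in Section 3. The function name is illustrative.

```python
# Per-class AP values as reported in Section 3 of this paper.
ap_per_class = {
    "Olive_trap_moth": 0.59,
    "Trap_other": 0.47,
    "Trap_insects_other": 0.38,
}


def mean_average_precision(ap_values):
    """Arithmetic mean of the per-class average precision values."""
    return sum(ap_values) / len(ap_values)


map_value = mean_average_precision(list(ap_per_class.values()))
print(round(map_value, 2))  # 0.48
```

The result, (0.59 + 0.47 + 0.38) / 3 = 0.48, agrees with the mAP stated in the abstract and in Section 3.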

This research used AP50 and AP75 values as model accuracy indicators. These values correspond to IoU thresholds of 50% and 75%, respectively. Intersection over union (IoU) is the ratio between the overlapping area of two objects and the area of their union [20]. In the case of ANN-based model development, the two objects are the real and predicted areas of the classified objects in the given dataset [20].
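The IoU between an annotated box and a predicted box can be computed directly from their corner coordinates. The sketch below assumes axis-aligned boxes given as (xmin, ymin, xmax, ymax); the two example boxes are invented and only illustrate how the 50% and 75% thresholds behave.

```python
def iou(box_a, box_b):
    """Intersection over union of two (xmin, ymin, xmax, ymax) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; zero when the boxes do not intersect.
    inter_w = max(0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - intersection
    return intersection / union if union > 0 else 0.0


# Hypothetical annotated and predicted boxes for one moth.
annotated = (0, 0, 100, 100)
predicted = (20, 0, 120, 100)

score = iou(annotated, predicted)
print(round(score, 3))   # 0.667
print(score >= 0.50)     # True: counts as a match at the AP50 threshold
print(score >= 0.75)     # False: rejected at the stricter AP75 threshold
```

This is why AP75 is never higher than AP50 for the same model: every detection accepted at the 75% threshold is also accepted at 50%, but not the other way around.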

The training images were not resized or normalized in the preprocessing phase. Blurred features and those located at the edges of the images were excluded from the training process. Data were processed using the LabelImg program (Figure 2), where only the elements necessary for the model’s detection were annotated, ultimately enhancing the model’s speed in detecting new data.

3. Results and Discussion

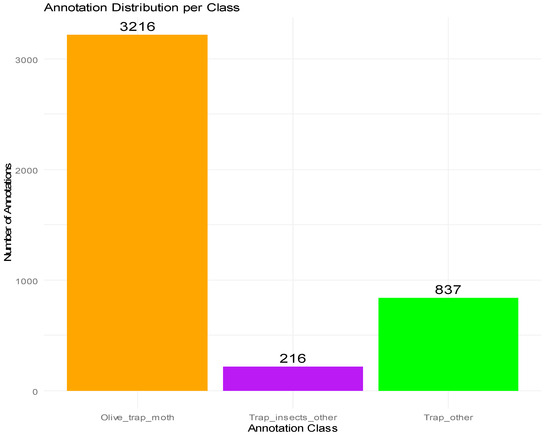

A total of 157 images were captured and used as the basis for ANN learning. In total, 4269 annotations were made on those images, with 3216 annotations belonging to the Olive_trap_moth class, 837 to the Trap_other class, and 216 to the Trap_insects_other class, as seen in Figure 3.

Figure 3.

Visualization of the number of annotations in this research. A total of 3216 annotations belong to the Olive_trap_moth class, 216 belong to the Trap_insects_other class, and 837 belong to the Trap_other class.
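The class balance behind Figure 3 can be checked with a few lines of arithmetic. The counts below are the ones reported in the text; the script only confirms that they add up to the stated total and shows each class's share of all annotations.

```python
# Annotation counts per class as reported in this section.
annotation_counts = {
    "Olive_trap_moth": 3216,
    "Trap_other": 837,
    "Trap_insects_other": 216,
}

total = sum(annotation_counts.values())
shares = {name: count / total for name, count in annotation_counts.items()}

print(total)  # 4269
for name, share in shares.items():
    print(f"{name}: {share:.1%}")
```

Roughly three quarters of all annotations belong to the Olive_trap_moth class, which is worth keeping in mind when the per-class AP values are compared.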

After running the automatic model for P. oleae detection, the result showed an AP value of 0.59 for the Olive_trap_moth class, while the AP values for Trap_other and Trap_insects_other were 0.47 and 0.38, respectively. The model performed best at an IoU threshold of 50%, achieving an AP50 value of 0.75, while at a stricter IoU threshold, the AP75 value was 0.56. Table 1 shows all the values obtained by the automatic P. oleae monitoring model.

Table 1.

Parameters and their values of the Prays oleae monitoring device.

In the dataset for the precision of the detection of this model, 59% of the images were relevant for the development of the model. Within this dataset, 38% of the images had relevant data for the Trap_insects_other class, while 47% included relevant data for the Trap_other class. The AP value of the overall model had an average of 0.48, meaning that 48% of the randomized images of the adhesive pads in the dataset were valid for the development of the ANN-based model for P. oleae detection.

The AP values of the classes Olive_trap_moth, Trap_other, and Trap_insects_other directly affect the mAP value, since it is the average of the AP values of all the classes in the dataset. In this research, the mAP is 0.48. This value would be significantly greater if the AP values for the classes Trap_other and Trap_insects_other were higher. A possible explanation for this result is the fact that the class with the highest AP value, Olive_trap_moth, consists of only one element, adult P. oleae moths. By focusing on only one element, this detection model gives better results. In order to increase the mAP value, it is important to increase the AP values of both the Trap_other and Trap_insects_other classes. To improve detection accuracy for the Trap_insects_other and Trap_other classes, it is recommended to refine the classification process by introducing additional sub-classes, such as distinct categories for flowers, leaves, and other non-target objects. This refinement could enhance the model’s ability to differentiate between various elements and improve overall detection performance, which would increase the mAP value of this model.

During the development phase of this model, the greatest importance was given to the AP value, as it served as a key indicator of the model’s accuracy. Additionally, several other factors were considered critical to optimizing performance, including the number of images used, the background consistency of the images, image manipulation techniques, and the way insects were positioned on the adhesive pads. These elements played a significant role in enhancing the model’s detection capabilities and overall reliability. Popescu et al. [20] researched the importance of image background while developing an ANN-based detection model. They used images with complex backgrounds, such as plants and natural environments, and compared the success rate of their pest detection model with such backgrounds versus images that used plain white backgrounds. Additionally, Hong et al. [21] described the influence of pest positioning on adhesive pads on detection model accuracy. Some moths were twisted beyond the point of recognition by the model, which invalidated some of the collected samples.

Popescu et al. [20] concluded that the EfficientDet ANN is not suitable for complex backgrounds, meaning they had better detection on white backgrounds. The AP value for the P. oleae model, which used exclusively white backgrounds, was greater than in their H. halys model, which used both complex and white backgrounds. These are important data for the possible further development of the P. oleae model, which indicates that the dataset used in the research was correct, but a different type of ANN should be considered for use. Similarly, the model for P. oleae detection could be tested with another type of ANN to possibly increase its accuracy while using an already established dataset.

Another important variable is the collected dataset used in establishing this model and the way the data were classified. Table 1 shows the AP value for P. oleae detection, as well as the same value for detecting other insects and other objects that occasionally appear on the adhesive pads. The AP value for the Olive_trap_moth class was higher than those for the Trap_insects_other and Trap_other classes, suggesting that the model performed best in detecting adult P. oleae individuals compared to other elements on the adhesive pads. A possible explanation is the homogeneity of the Olive_trap_moth class: it consists of a single, well-defined category, adult P. oleae, whereas the Trap_insects_other and Trap_other classes encompass a variety of different insects and objects, leading to increased variability and making detection more challenging. A similar conclusion was presented by Čirjak et al. [19] in their L. malifoliella detection research. The authors developed an ANN-based pest monitoring device, which, similar to the P. oleae detection model, had the highest detection in the MINERS class, which contained only one type of data, namely L. malifoliella adults.

Furthermore, in analyzing the AP value for the MINERS class, it is evident that it outperforms the detection of other objects, indicating that their model is most effective in identifying L. malifoliella adults [19]. A similar trend is observed in the P. oleae detection model, where the AP value for Olive_trap_moth was 0.12 higher than that for detecting other objects and 0.21 higher than the AP value for detecting other insects. This suggests that the model performs best when trained on a single, well-defined class, further reinforcing the correlation between dataset structure and detection accuracy. Čirjak et al. [19] created an automated pest detection system for L. malifoliella and its damage to apple leaves. The same object detection model, EfficientDet-Lite 4, was used. The authors used EfficientDet-Lite 4 for the development of their detection model because they believed that it is the most suitable for fast, reliable, and accurate pest detection [19]. However, they also emphasize how the model’s accuracy greatly depends on the dataset, meaning that bigger datasets equate to better accuracy, which our P. oleae detection model currently lacks. The dataset could be expanded in the future, which should increase the model’s accuracy.

Additionally, the Olive_trap_moth class had a significantly higher number of annotations, meaning a larger dataset and more inputs for the ANN, which likely contributed to its superior detection accuracy. This finding aligns with the conclusion, confirming that model accuracy is directly influenced by dataset size, meaning that a larger and more structured dataset generally leads to improved detection performance. Comparing these findings with previous research, similar ANN-based models have shown higher detection parameters when trained on datasets with more structured class distributions [19]. The introduction of preprocessing techniques such as background standardization and targeted annotation strategies may further optimize the detection of P. oleae and related pest species.

Liu et al. [31] also mentioned the importance of the proper classification of the dataset in their paper. The authors developed an automatic detection model for cotton pest detection. The ResNetV2 ANN with 56 layers was used. The model failed to achieve high accuracy when many different objects were introduced in images, meaning that the model was better suited to detecting images with fewer objects [31]. The accuracy of the model was 85.7%. The AP value was not stated, but the model’s accuracy decreased significantly, by 10.7%, when images with white backgrounds and images with complex backgrounds were mixed in their dataset. The connection between lower accuracy and a higher number of objects in images is also present in the P. oleae detection model. Table 1 shows that the highest accuracy, expressed as the AP value, was for the Olive_trap_moth class, which contained only one type of object, adult P. oleae. By looking at the results of Liu et al. [31] and Čirjak et al. [19], and the P. oleae model’s accuracy, it is fair to conclude that the accuracy of automatic monitoring models for pest detection is negatively correlated with the number of objects present in images used as a dataset. This means that the probability of an ANN-based model detecting a pest decreases with the increase in other elements in images, and more complex backgrounds interfere with its accuracy in detecting key pests.

A possible solution to increase the size of the dataset without the need to collect additional field data is to manipulate already existing data. Čirjak et al. [19] managed to increase the amount of data by manipulating their images, which greatly improved the accuracy of their pest-detecting model. Image manipulation includes image rotation of 90, 180, and 270 degrees, as well as the vertical and horizontal mirroring of those images. These procedures increased the size of their dataset and the number of annotations, which gave their model more data to learn from. The MINERS class ended up having 4700 annotations, while Olive_trap_moth had only 3216 annotations. Given the results from previous authors’ research [19], it is possible to conclude that image manipulation improves the ANN-based object detection model’s accuracy. Such image transformations might help increase the detection of the P. oleae model since the images used were in their unchanged forms. It would increase the number of annotations in such a limited number of images. During the development of the Prays oleae detection model, no image manipulation techniques were used. However, if the model is to be updated, using some of these techniques is highly recommended. Since the model was created from scratch, transfer learning was not used either. The authors believe that this model is capable of achieving greater accuracy, average precision, and average recall by increasing and manipulating the dataset.
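The rotation and mirroring described above must be applied to the bounding boxes as well as to the pixels, otherwise the annotations no longer line up with the transformed image. The dependency-free sketch below shows one 90-degree clockwise rotation and one horizontal mirror on a toy image. It assumes zero-based, half-open pixel coordinates (xmax and ymax exclusive), which differs from the one-based inclusive convention of Pascal VOC files; the image, the box, and all function names are illustrative and are not taken from the study.

```python
def rotate90_cw(image):
    """Rotate a row-major image (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def mirror_horizontal(image):
    """Flip an image left to right."""
    return [row[::-1] for row in image]


def rotate_box90_cw(box, height):
    """Box after a 90-degree clockwise rotation of an image with this height."""
    xmin, ymin, xmax, ymax = box
    return (height - ymax, xmin, height - ymin, xmax)


def mirror_box_horizontal(box, width):
    """Box after a left-to-right flip of an image with this width."""
    xmin, ymin, xmax, ymax = box
    return (width - xmax, ymin, width - xmin, ymax)


def box_pixels(image, box):
    """Pixel values inside a half-open (xmin, ymin, xmax, ymax) box."""
    xmin, ymin, xmax, ymax = box
    return [image[r][c] for r in range(ymin, ymax) for c in range(xmin, xmax)]


# Toy image, 4 rows by 6 columns, with one "moth" marked by 1s.
HEIGHT, WIDTH = 4, 6
box = (1, 0, 3, 2)  # columns 1-2, rows 0-1
image = [
    [1 if box[0] <= c < box[2] and box[1] <= r < box[3] else 0 for c in range(WIDTH)]
    for r in range(HEIGHT)
]

rotated = rotate90_cw(image)
rotated_box = rotate_box90_cw(box, HEIGHT)
mirrored = mirror_horizontal(image)
mirrored_box = mirror_box_horizontal(box, WIDTH)

print(rotated_box)   # (2, 1, 4, 3)
print(mirrored_box)  # (3, 0, 5, 2)
```

Applying `rotate90_cw` and `rotate_box90_cw` repeatedly gives the 180- and 270-degree variants, so each original image and its annotations can yield several additional training samples without new field work.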

Similarly, Rajput et al. [22] also mentioned image manipulation in their research and the positive influence of increasing image quality as one of the means of increasing a model’s accuracy with a limited dataset. The authors studied the connection between the number of images in a dataset and the accuracy of an ANN-based object detection model by using simulated data. They concluded that AP values increase with the number of dataset images, but a large dataset can also harm the object-detecting model’s accuracy by decreasing its AP value. Such procedures can lower the need for field research and decrease the amount of labor while maintaining satisfying accuracy of an ANN-based model for object detection [22]. According to the above, it can be concluded that the quality manipulation of images in a dataset with a low number of images could improve the AP value for classes used in the automated model for P. oleae detection.

Xia et al. [32] also used image rotation to increase the dataset. Combined with the ‘Salt and Pepper Noise’ filter on images, they managed to enlarge their original dataset eight times just by manipulating existing images [32]. Some of these features should also be investigated when further improving the P. oleae detection model if additional field data collection is not an option. Additionally, Sütő [33] developed an ANN-based model for Cydia pomonella (Linnaeus, 1758) (Lepidoptera: Tortricidae) monitoring. The author did not use any image manipulation during the model development process and concluded that a small dataset reduced the model’s accuracy. Similarly, the P. oleae model also has a small dataset, which could be an explanation for the lower AP values. This evaluation will be useful for further upgrades to the model.
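Salt-and-pepper noise replaces a small random fraction of pixels with pure black or pure white. The sketch below is one simple way to generate such a noisy copy of a grayscale image; the noise fraction, the 8-bit value range, and the function name are assumptions made for illustration and do not reproduce the exact filter settings of Xia et al. [32], which are not given here.

```python
import random


def add_salt_and_pepper(image, amount=0.05, seed=None):
    """Return a noisy copy of an 8-bit grayscale image (list of rows).

    amount -- expected fraction of pixels replaced, half black and half white
    seed   -- optional seed so the augmentation can be reproduced
    """
    rng = random.Random(seed)
    noisy = [row[:] for row in image]  # leave the original untouched
    for r in range(len(noisy)):
        for c in range(len(noisy[r])):
            u = rng.random()
            if u < amount / 2:
                noisy[r][c] = 0      # pepper
            elif u < amount:
                noisy[r][c] = 255    # salt
    return noisy


# Toy 20 x 20 mid-gray image standing in for a photographed adhesive pad.
clean = [[128] * 20 for _ in range(20)]
noisy = add_salt_and_pepper(clean, amount=0.1, seed=42)

changed = sum(
    1 for r in range(20) for c in range(20) if noisy[r][c] != clean[r][c]
)
print(changed)  # number of replaced pixels, about 10% of 400 on average
```

Because the noise does not move any object, the bounding-box annotations of the original image can be reused unchanged for the noisy copy.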

Increasing the size of the dataset is a possible solution to increase the AP values of the classes, as well as the mAP value of the model. Retraining the model helps maintain its performance and could increase its accuracy [34]. However, the process of retraining mixes old and new data, which causes data drift [35]. Data or concept drift is a term that refers to the changes in data distribution over time, where the distribution of the output values changes given new input, while the distribution of the input stays unchanged [35]. Before retraining the existing model, it is important to assess its costs [34]. The decision to retrain a model depends on two factors: model staleness cost and model retraining cost [34]. Model staleness cost refers to the way data drift impacts the model’s performance loss [34]. The need for model retraining rises with an increase in staleness cost. Model retraining cost refers to the resources needed to train the model on new data [34]. If the authors decide to expand the dataset of the existing detection model, it is important to calculate the costs of acquiring and implementing new data.

In addition to the parameters discussed above, the position of P. oleae adults on the adhesive pads also influenced the AP value of the established model. Shape identification problems could potentially be solved by upgrading shape feature extraction techniques in the model [36]. Kasinathan et al. [36] proposed using the Sobel edge extraction algorithm during the segmentation process and an improved shape feature extraction process featuring nine geometric shape features. The nine shape features are area, perimeter, major axis length, minor axis length, eccentricity, circularity, solidity, form factor, and compactness [36]. Hong et al. [21] mention in their paper how bent wings and legs of adult moths decreased their model’s detection accuracy because the specimens could not be classified as either pests or another object. These variations especially impact models with a low number of images in the training dataset. The model for P. oleae is also one such model, and many adult P. oleae moths had overlapping wings, legs, or other body parts, which resulted in them not being recognized as pests.

4. Conclusions

Prays oleae is one of the principal olive fruit pests in Croatia. This paper presents an ANN-assisted monitoring model to aid farmers in early pest detection, which helps in using targeted pest management methods. Given the economic and cultural importance of the olive groves, it is crucial to improve the pest monitoring system for better olive production and a reduction in pesticide levels.

Based on the results obtained, it can be concluded that this is a working ANN-based model for P. oleae detection. However, there is a lot of room for improvement, and other techniques, such as image manipulation and detailed classification, should be implemented. Other types of ANNs should also be considered and compared in relation to the detection of P. oleae.

A model like this could be modified to detect other olive pests, such as B. oleae, which could help reduce excessive pesticide use. Such models can support the transition from conventional to ecological farming while keeping fruit bearing, olive tree health, and human health as top priorities.

Author Contributions

Conceptualization, T.K., A.Z., D.Č. and I.P.Ž.; methodology, T.K., D.Č. and A.Z.; software, A.Z.; validation, T.K. and I.P.Ž.; formal analysis, A.Z.; investigation, A.Z., M.Z. and Š.K.; resources, T.K. and A.Z.; data curation, T.K. and A.Z.; writing—original draft preparation, A.Z.; writing—review and editing, T.K., A.Z., Š.K., M.Z., D.Č. and I.P.Ž.; visualization, A.Z.; supervision, T.K. and I.P.Ž.; project administration, T.K.; funding acquisition, T.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded as part of the “Smart Agriculture Network” (SAN—KK.01.2.1.01.0100) project, co-financed by the European Union (“IRI—Research, Development and Innovation”), Operational Program “Competitiveness and Cohesion” 2014–2020.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author(s).

Acknowledgments

The authors thank Alen Dabčević, who supported the study analyses by developing the ANN-based model.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. Alves, J.F.; Mendes, S.; da Silva, A.A.; Sousa, J.P.; Paredes, D. Land-Use Effect on Olive Groves Pest Prays oleae and on Its Potential Biocontrol Agent Chrysoperla carnea. Insects 2021, 12, 46.

2. Tiring, G.; Ada, M.; Ada, M.; Dona, R. The Population Fluctuation of Prays oleae Bern (Lepidoptera: Praydidae, Yponomeutidae) in Three Different Olive Orchards. Cukurova Univ. Agric. Fac. 2024, 39, 367–374.

3. Maceljski, M. Poljoprivredna Entomologija, 2nd ed.; Zrinski d.d.: Čakovec, Croatia, 2008; pp. 286–287.

4. Pappalardo, S.; Villa, M.; Santos, A.P.S.; Benhadi-Marín, J.; Pereira, J.A.; Venturino, E. A tritrophic interaction model for an olive tree pest, the olive moth—Prays oleae (Bernard). Ecol. Model. 2021, 462, 109776.

5. Pascual, S.; Ortega, M.; Villa, M. Prays oleae (Bernard), its potential predators and biocontrol depend on the structure of the surrounding landscape. Biol. Control 2022, 176.

6. Krapac, M.; Sladonja, B. Fenofaze masline. Glas. Zaštite Bilja 2010, 33, 56–66.

7. Mansour, A.; Ouanaimi, F.; Chemseddine, M.; Boumezzough, A. Study of the flight dynamics of Prays oleae (Lepidoptera: Yponomeutidae) using sexual trapping in olive orchards of Essaouira region, Morocco. J. Entomol. Zool. Stud. 2017, 5, 943–952.

8. Civantos-Ruiz, M.; Gómez-Guzmán, J.A.; Pérez, M.; González-Ruiz, R. Application of Accumulated Heat Units in The Control of The Olive Moth, Prays Oleae (BERN.) (LEP., PRAYDIDAE), in Olive Groves in Southern Spain. Rev. Bras. Frutic. 2022, 44.

9. Bjeliš, M. Zaštita Masline u Ekološkoj Proizvodnji, 2nd ed.; Own Edition: Solin, Croatia, 2009; pp. 142–147.

10. Shehata, W.A.; Abou-Elkhair, S.; Youssef, A.A.; Nasr, F. Biological studies on the olive leaf moth, Palpita unionalis Hübner (Lepid., Pyralidae), and the olive moth, Prays oleae Bernard (Lepid., Yponomeutidae). Anz. Für Schädlingskunde 2003, 76, 155–158.

11. Solomou, A.D.; Sfougaris, A. Contribution of Agro-Environmental Factors to Yield and Plant Diversity of Olive Grove Ecosystems (Olea europaea L.) in the Mediterranean Landscape. Agronomy 2021, 11, 161.

12. Čirjak, D.; Miklečić, I.; Lemić, D.; Kos, T.; Pajač Živković, I. Automatic Pest Monitoring Systems in Apple Production under Changing Climatic Conditions. Horticulturae 2022, 8, 520.

13. Sharma, A.; Georgi, M.; Tregubenko, M.; Tselykh, A.; Tselykh, A. Enabling smart agriculture by implementing artificial intelligence and embedded sensing. Comput. Ind. Eng. 2022, 165, 107936.

14. Fu, X.; Ma, Q.; Yang, F.; Zhang, C.; Zhao, X.; Chang, F.; Han, L. Crop pest image recognition based on the improved ViT method. Inf. Process. Agric. 2024, 22, 249–259.

15. Ching, T.; Himmelstein, D.; Beaulieu-Jones, B.; Kalinin, A.; Do, B.T.; Way, G.P.; Ferrero, E.; Agapow, P.M.; Zietz, M.; Hoffman, M.M.; et al. Opportunities and obstacles for deep learning in biology and medicine. J. R. Soc. Interface 2018, 15, 20170387.

16. Angermueller, C.; Pärnamaa, T.; Parts, L.; Stegle, O. Deep learning for computational biology. Mol. Syst. Biol. 2016, 12, 828.

17. Kaya, Y.; Gürsoy, E. A novel multi-head CNN design to identify plant diseases using the fusion of RGB images. Ecol. Inform. 2023, 75, 101998.

18. Yuan, Y.; Chen, L.; Wu, H.; Li, L. Advanced Agricultural Disease Image Recognition Technologies: A Review. Inf. Process. Agric. 2022, 9, 48–59.

19. Čirjak, D.; Aleksi, I.; Miklečić, I.; Antolković, A.M.; Vrtodušić, R.; Viduka, A.; Lemić, D.; Kos, T.; Pajač Živković, I. Monitoring System for Leucoptera malifoliella (O. Costa, 1836) and Its Damage Based on Artificial Neural Networks. Agriculture 2023, 13, 67.

20. Popescu, D.; Ichim, L.; Dimoiu, M.; Trufelea, R. Comparative Study of Neural Networks Used in Halyomorpha halys Detection. In Proceedings of the 30th Mediterranean Conference on Control and Automation (MED), Limassol, Cyprus, 28 June–1 July 2022; pp. 182–187.

21. Hong, S.J.; Kim, S.Y.; Kim, E.; Lee, C.H.; Lee, J.S.; Lee, D.S.; Bang, J.; Kim, G. Moth Detection from Pheromone Trap Images Using Deep Learning Object Detectors. Agriculture 2020, 10, 170.

22. Rajput, D.; Wang, W.J.; Chen, C.C. Evaluation of a Decided Sample Size in Machine Learning Applications. BMC Bioinform. 2023, 24.

23. LabelImg 1.8.1. on GitHub. Available online: https://github.com/HumanSignal/labelImg (accessed on 1 March 2025).

24. Everingham, M.; Van Gool, L.; Williams, C.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

25. Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A system for large-scale machine learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation, OSDI 2016. USENIX Association, Savannah, GA, USA, 2–4 November 2016; pp. 265–283.

26. VanRossum, G.; DeBoer, J. Interactively testing remote servers using the Python programming language. CWI Q. 1991, 4, 283–304.

27. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 10778–10787.

28. Terven, J.; Cordova-Esparza, D.M.; Ramirez-Pedraza, A.; Chavez-Urbiola, E.A.; Romero-Gonzalez, J.A. Loss functions and metrics in deep learning. arXiv 2023.

29. Wang, Q.; Ma, Y.; Zhao, K.; Tian, Y. A comprehensive survey of loss functions in machine learning. Ann. Data Sci. 2020, 9, 187–212.

30. Padilla, R.; Netto, S.; da Silva, E. A Survey on Performance Metrics for Object-Detection Algorithms. In Proceedings of the International Conference on Systems, Signals and Image Processing (IWSSIP), Niteroi, Brazil, 1–3 July 2020; pp. 237–242.

31. Liu, C.; Zhai, Z.; Zhang, R.; Bai, J.; Zhang, M. Field Pest Monitoring and Forecasting System for Pest Control. Front. Plant Sci. 2022, 13, 990965.

32. Xia, D.; Chen, P.; Wang, B.; Zhang, J.; Xie, C. Insect Detection and Classification Based on an Improved Convolutional Neural Network. Sensors 2018, 18, 4169.

33. Sütő, J. Embedded System-Based Sticky Paper Trap with Deep Learning-Based Insect-Counting Algorithm. Electronics 2021, 10, 1754.

34. Mahadevan, A.; Mathioudakis, M. Cost-aware retraining for machine learning. Knowl.-Based Syst. 2024, 293, 111610.

35. Gama, J.; Zliobaite, I.; Bifet, A.; Pechenizkiy, M.; Bouchachia, A. A survey on concept drift adaptation. ACM Comput. Surv. 2014, 46, 1–37.

36. Kasinathan, T.; Singaraju, D.; Uyyala, S.R. Insect classification and detection in field crops using modern machine learning techniques. Inf. Process. Agric. 2020, 8, 446–457.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).