Converging Extended Reality and Robotics for Innovation in the Food Industry

Abstract

1. Introduction

2. Overview of Extended Reality Technologies

2.1. Definition and Classification of XR Technologies (VR, AR, MR)

2.2. Key Devices Used in XR Applications

2.2.1. Oculus Quest by Meta

2.2.2. Vision Pro by Apple

2.2.3. HoloLens by Microsoft

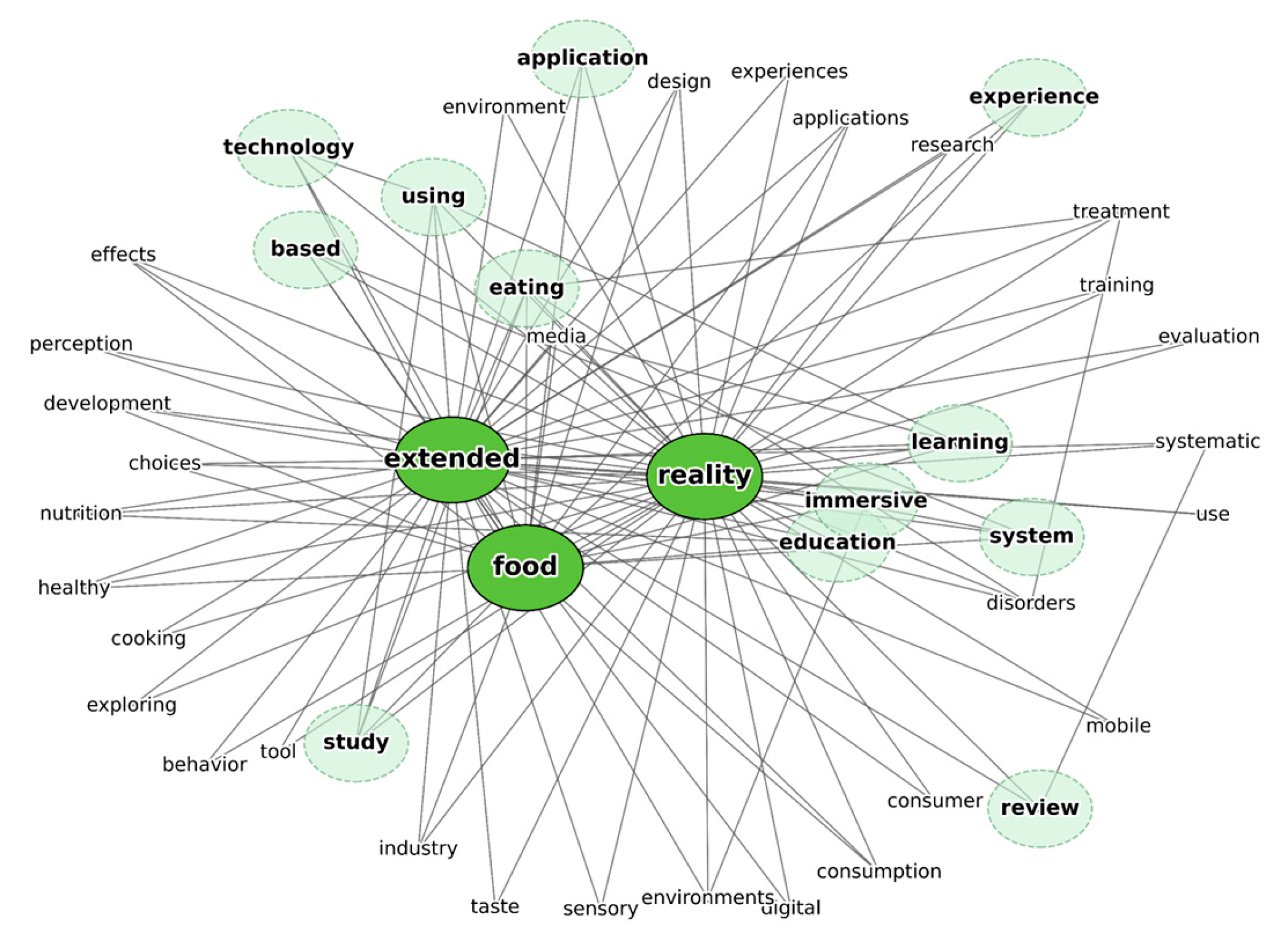

3. Keyword Association Analysis of XR in the Food Industry

3.1. Methodology for Web Crawling and Keyword Analysis

3.2. Key Research Trends and Emerging Topics

4. Application of XR in the Food Industry

4.1. Methodology of Literature Review

4.2. Application of VR in the Food Industry

4.2.1. Simulating and Validating Consumer Food Choice Behavior

4.2.2. Enhancing Food Education and Promoting Sustainable Behavior

4.2.3. Stimulating Appetite and Sensory Perception in VR

4.2.4. Measuring Disgust, Bias, and Eating-Related Psychopathology

4.3. Application of AR in the Food Industry

4.3.1. Stimulating Consumer Behavior and Sensory Engagement Through AR

4.3.2. Enhancing Nutrition and Sustainability Awareness with AR

4.3.3. Designing Intelligent and Personalized AR Food Systems

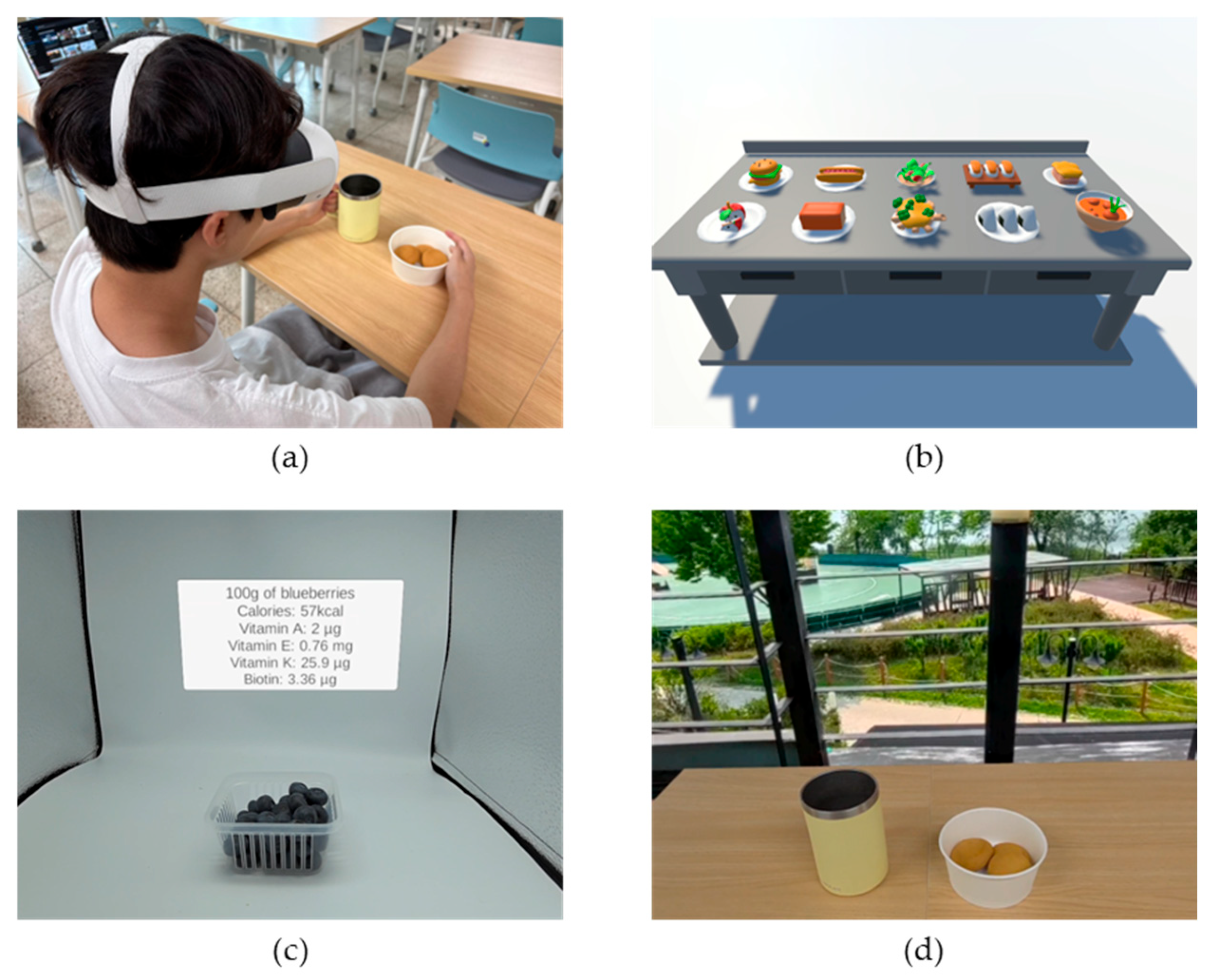

4.4. Applications of MR in the Food Industry

4.5. Synthesis and Comparative Analysis of XR Modalities

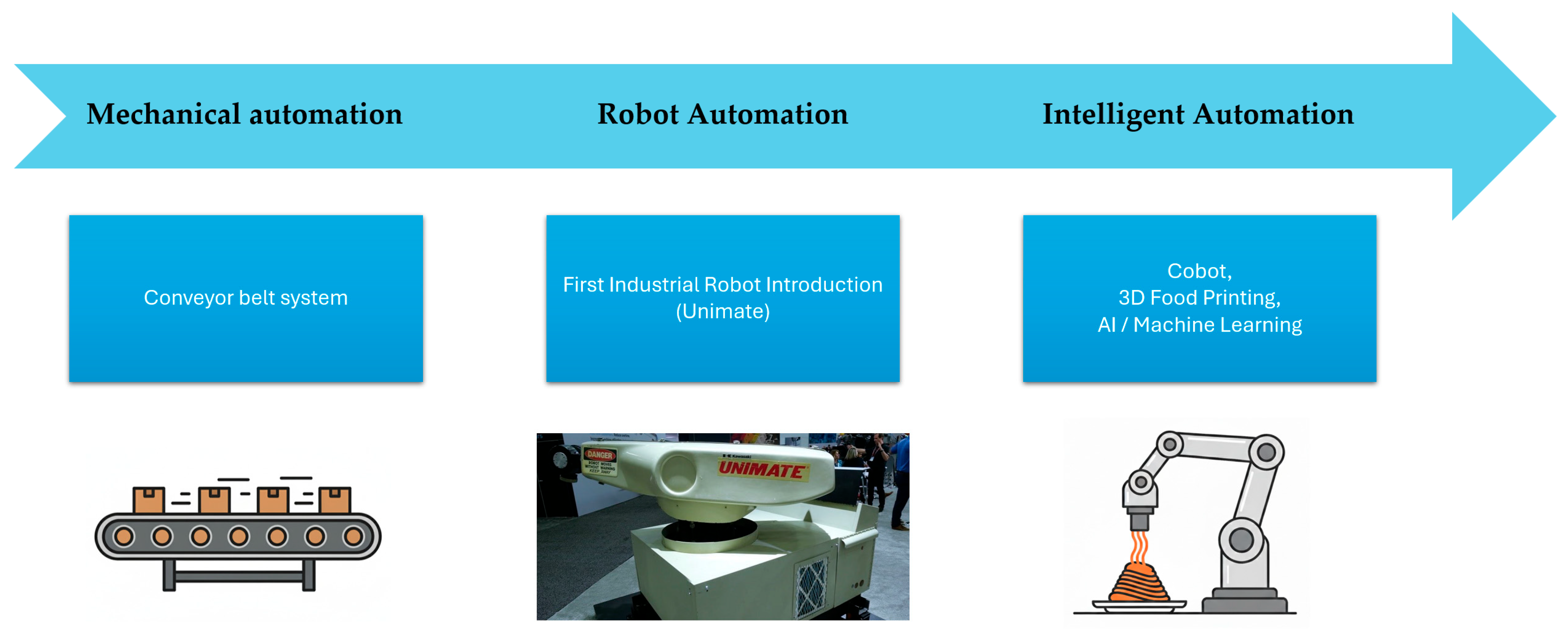

5. Overview of Robotics in Food Processing

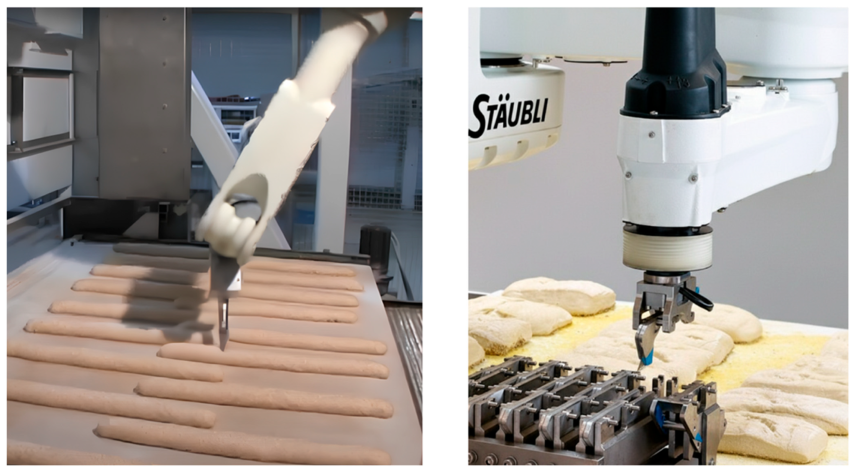

5.1. Roles and Types of Robots in Food Processing

5.1.1. The Necessity of Automation

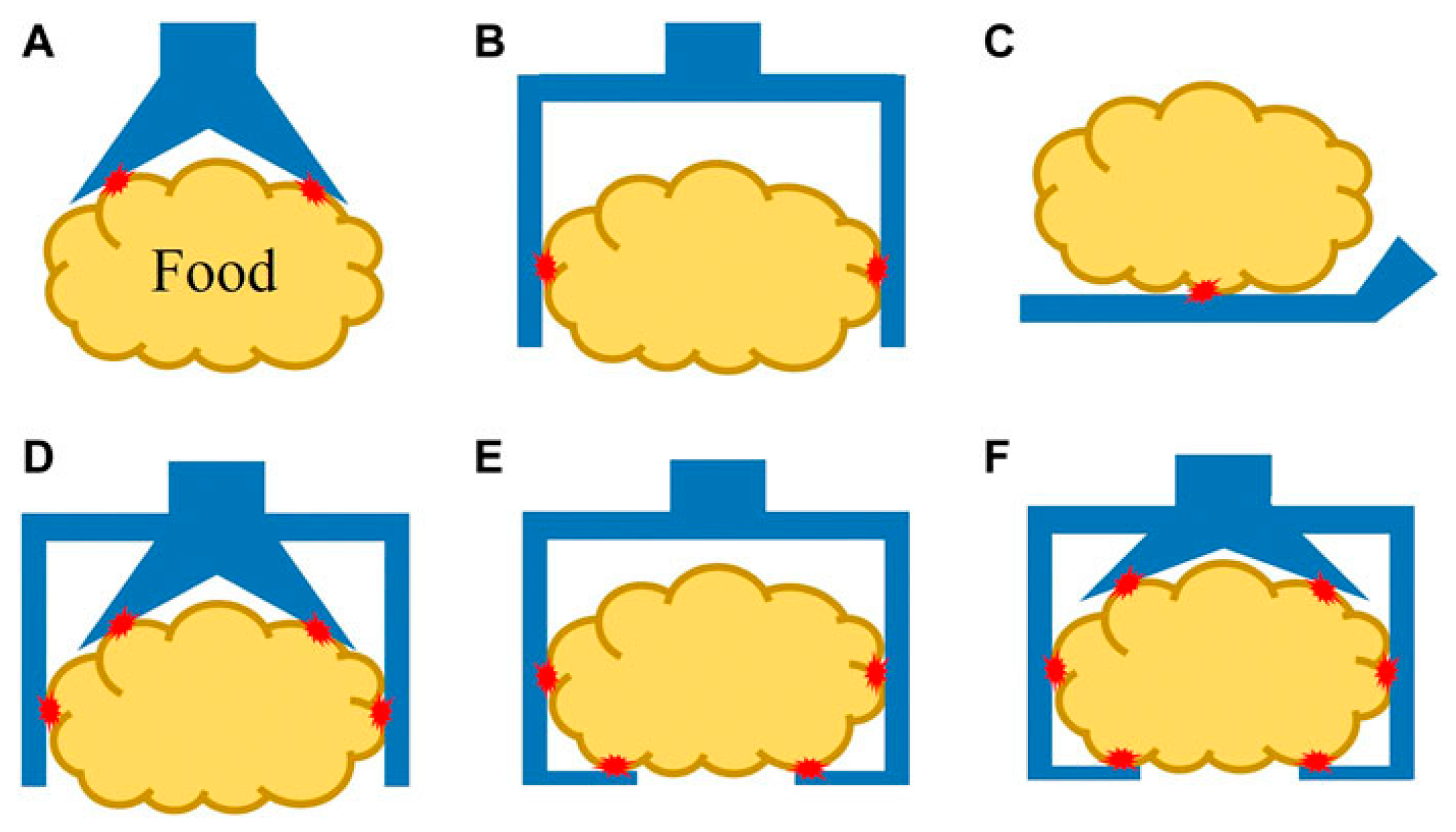

5.1.2. Components and Technological Characteristics of Food Automation Solutions

5.1.3. Key Advantages and Limitations of Robotics in Food Processing

5.2. Robotic Selection Methodology by Bader and Rahimifard

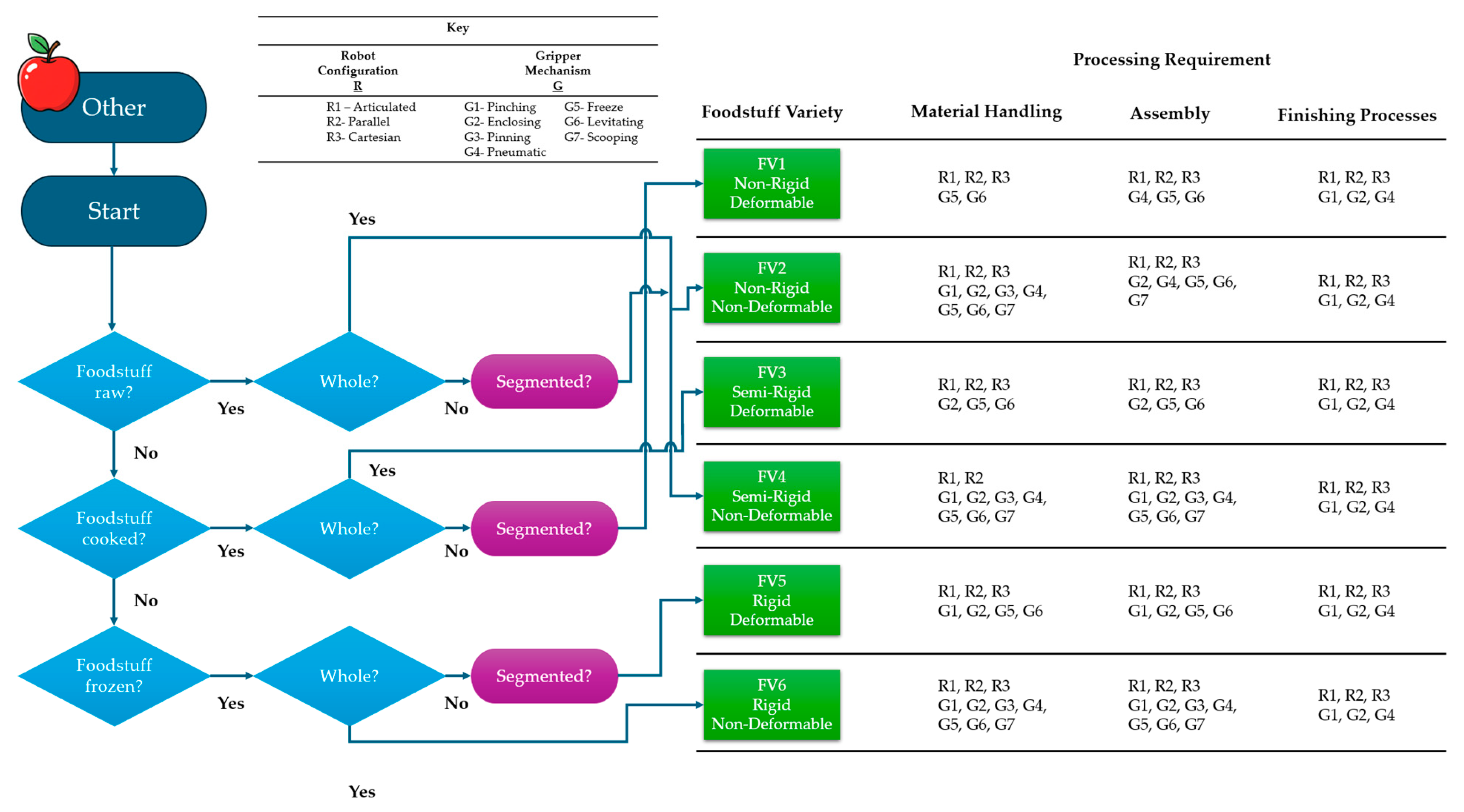

Core Structure of the FIRM Methodology

5.3. Expanding Application Domains and Emerging Challenges

5.3.1. Non-Traditional Applications and Technological Demands

5.3.2. Implications of Expanded Application Areas

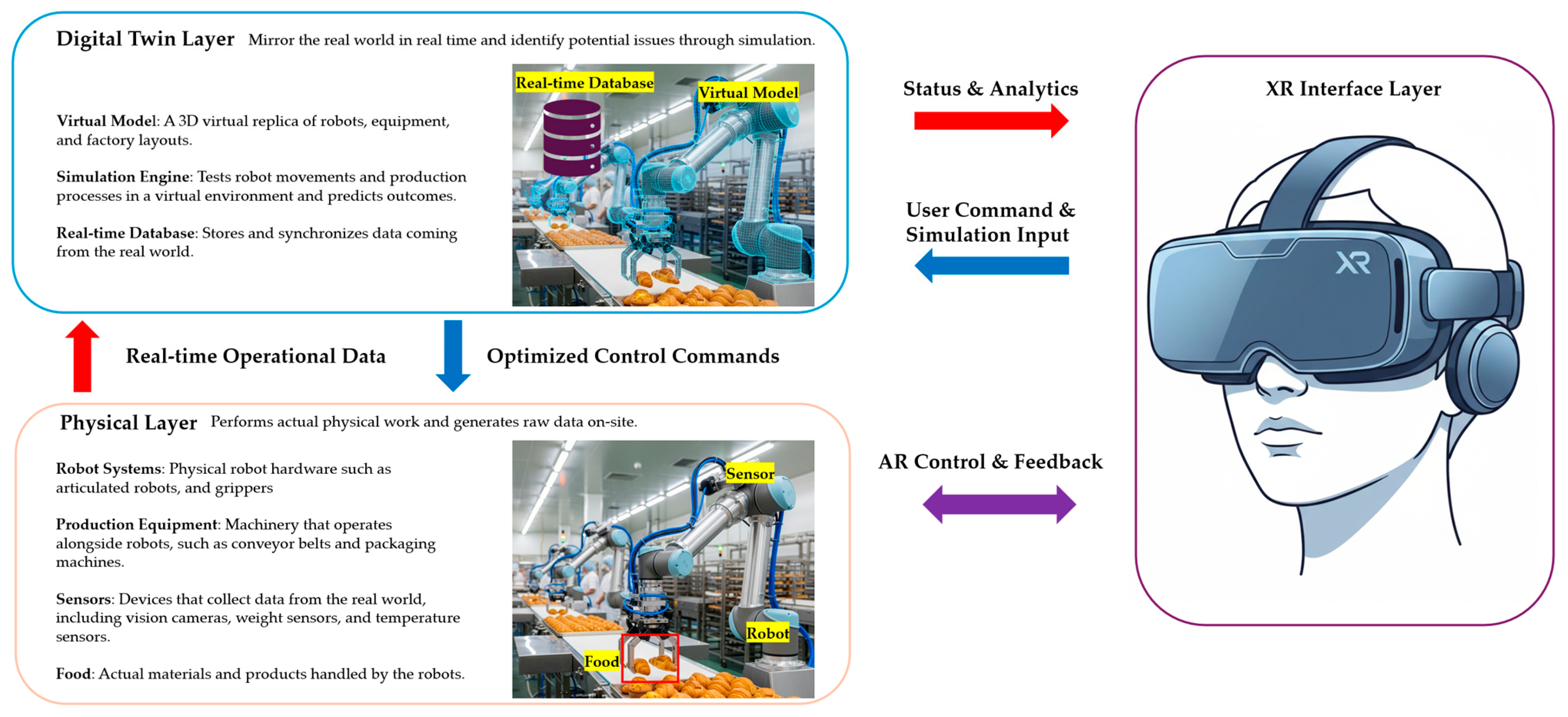

5.4. Integrating XR and Robotic Digital Twins as a New Paradigm for Food Systems

5.4.1. Enhancing Simulation, Control, and Training Through XR–Robotic Twins

5.4.2. XR and Robotic Digital Twin Integration in the Food Industry

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Chai, J.J.; O’Sullivan, C.; Gowen, A.A.; Rooney, B.; Xu, J.L. Augmented/mixed reality technologies for food: A review. Trends Food Sci. Technol. 2022, 124, 182–194.

- Shamshiri, R.R.; Weltzien, C.; Hameed, I.A.; Yule, I.J.; Grift, T.E.; Balasundram, S.K.; Chowdhary, G. Research and development in agricultural robotics: A perspective of digital farming. Int. J. Agric. Biol. Eng. 2018, 11, 1–14.

- Kim, Y.; Kim, S. Automation and optimization of food process using CNN and six-axis robotic arm. Foods 2024, 13, 3826.

- Hassoun, A.; Jagtap, S.; Trollman, H.; Garcia-Garcia, G.; Abdullah, N.A.; Goksen, G.; Lorenzo, J.M. Food processing 4.0: Current and future developments spurred by the fourth industrial revolution. Food Control 2023, 145, 109507.

- Grobbelaar, W.; Verma, A.; Shukla, V.K. Analyzing human robotic interaction in the food industry. J. Phys. Conf. Ser. 2021, 1714, 012032.

- Mason, A.; Haidegger, T.; Alvseike, O. Time for Change: The Case of Robotic Food Processing [Industry Activities]. IEEE Robot. Autom. Mag. 2023, 30, 116–122.

- Bader, F.; Rahimifard, S. A methodology for the selection of industrial robots in food handling. Innov. Food Sci. Emerg. Technol. 2020, 64, 102379.

- Protogeros, G.; Protogerou, A.; Pachni-Tsitiridou, O.; Mifsud, R.G.; Fouskas, K.; Katsaros, G.; Valdramidis, V. Conceptualizing and advancing on extended reality applications in food science and technology. J. Food Eng. 2025, 396, 112557.

- Andrews, C.; Southworth, M.K.; Silva, J.N.; Silva, J.R. Extended reality in medical practice. Curr. Treat. Options Cardiovasc. Med. 2019, 21, 18.

- Çöltekin, A.; Lochhead, I.; Madden, M.; Christophe, S.; Devaux, A.; Pettit, C.; Hedley, N. Extended reality in spatial sciences: A review of research challenges and future directions. ISPRS Int. J. Geo-Inf. 2020, 9, 439.

- Maples-Keller, J.L.; Bunnell, B.E.; Kim, S.J.; Rothbaum, B.O. The use of virtual reality technology in the treatment of anxiety and other psychiatric disorders. Harv. Rev. Psychiatry 2017, 25, 103–113.

- Carmigniani, J.; Furht, B.; Anisetti, M.; Ceravolo, P.; Damiani, E.; Ivkovic, M. Augmented reality technologies, systems and applications. Multimed. Tools Appl. 2011, 51, 341–377.

- Park, B.J.; Hunt, S.J.; Martin, C., III; Nadolski, G.J.; Wood, B.J.; Gade, T.P. Augmented and mixed reality: Technologies for enhancing the future of IR. J. Vasc. Interv. Radiol. 2020, 31, 1074–1082.

- Reiners, D.; Davahli, M.R.; Karwowski, W.; Cruz-Neira, C. The combination of artificial intelligence and extended reality: A systematic review. Front. Virtual Real. 2021, 2, 721933.

- Cárdenas-Robledo, L.A.; Hernández-Uribe, Ó.; Reta, C.; Cantoral-Ceballos, J.A. Extended reality applications in Industry 4.0—A systematic literature review. Telemat. Inform. 2022, 73, 101863.

- Herur-Raman, A.; Almeida, N.D.; Greenleaf, W.; Williams, D.; Karshenas, A.; Sherman, J.H. Next-generation simulation—Integrating extended reality technology into medical education. Front. Virtual Real. 2021, 2, 693399.

- Sharma, R. Extended reality: It’s impact on education. Int. J. Sci. Eng. Res. 2021, 12, 247–251.

- Xu, C.; Siegrist, M.; Hartmann, C. The application of virtual reality in food consumer behavior research: A systematic review. Trends Food Sci. Technol. 2021, 116, 533–544.

- Styliaras, G.D. Augmented reality in food promotion and analysis: Review and potentials. Digital 2021, 1, 216–240.

- Ahn, J.; Gaza, H.; Oh, J.; Fuchs, K.; Wu, J.; Mayer, S.; Byun, J. MR-FoodCoach: Enabling a convenience store on mixed reality space for healthier purchases. In Proceedings of the 2022 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Singapore, 17–21 October 2022; pp. 891–892.

- Angelidis, A.; Plevritakis, E.; Vosniakos, G.C.; Matsas, E. An Open Extended Reality Platform Supporting Dynamic Robot Paths for Studying Human–Robot Collaboration in Manufacturing. Int. J. Adv. Manuf. Technol. 2025, 138, 3–15.

- Su, Y.P.; Chen, X.Q.; Zhou, C.; Pearson, L.H.; Pretty, C.G.; Chase, J.G. Integrating Virtual, Mixed, and Augmented Reality into Remote Robotic Applications: A Brief Review of Extended Reality-Enhanced Robotic Systems for Intuitive Telemanipulation and Telemanufacturing Tasks in Hazardous Conditions. Appl. Sci. 2023, 13, 12129.

- Akindele, N.; Taiwo, R.; Sarvari, H.; Oluleye, B.I.; Awodele, I.A.; Olaniran, T.O. A state-of-the-art analysis of virtual reality applications in construction health and safety. Results Eng. 2024, 23, 102382.

- Oyman, M.; Bal, D.; Ozer, S. Extending the technology acceptance model to explain how perceived augmented reality affects consumers’ perceptions. Comput. Hum. Behav. 2022, 128, 107127.

- Monterubbianesi, R.; Tosco, V.; Vitiello, F.; Orilisi, G.; Fraccastoro, F.; Putignano, A.; Orsini, G. Augmented, virtual and mixed reality in dentistry: A narrative review on the existing platforms and future challenges. Appl. Sci. 2022, 12, 877.

- Arena, F.; Collotta, M.; Pau, G.; Termine, F. An overview of augmented reality. Computers 2022, 11, 28.

- Devagiri, J.S.; Paheding, S.; Niyaz, Q.; Yang, X.; Smith, S. Augmented reality and artificial intelligence in industry: Trends, tools, and future challenges. Expert Syst. Appl. 2022, 207, 118002.

- Rakkolainen, I.; Farooq, A.; Kangas, J.; Hakulinen, J.; Rantala, J.; Turunen, M.; Raisamo, R. Technologies for multimodal interaction in extended reality—A scoping review. Multimodal Technol. Interact. 2021, 5, 81.

- Bondarenko, V.; Zhang, J.; Nguyen, G.T.; Fitzek, F.H. A universal method for performance assessment of Meta Quest XR devices. In Proceedings of the 2024 IEEE Gaming, Entertainment, and Media Conference (GEM), Stuttgart, Germany, 4–6 June 2024; pp. 1–6.

- Yoon, D.M.; Han, S.H.; Park, I.; Chung, T.S. Analyzing VR game user experience by genre: A text-mining approach on Meta Quest Store reviews. Electronics 2024, 13, 3913.

- Aros, M.; Tyger, C.L.; Chaparro, B.S. Unraveling the Meta Quest 3: An out-of-box experience of the future of mixed reality headsets. In Proceedings of the International Conference on Human-Computer Interaction, Washington, DC, USA, 29 June–4 July 2024; Springer: Cham, Switzerland, 2024; pp. 3–8.

- Criollo-C, S.; Guerrero-Arias, A.; Samala, A.D.; Arif, Y.M.; Luján-Mora, S. Enhancing the educational model using mixed reality technologies with Meta Quest 3: A usability analysis using IBM-CSUQ. IEEE Access 2025, 13, 56930–56945.

- Waisberg, E.; Ong, J.; Masalkhi, M.; Zaman, N.; Sarker, P.; Lee, A.G.; Tavakkoli, A. Apple Vision Pro and the advancement of medical education with extended reality. Can. Med. Educ. J. 2024, 15, 89–90.

- Woodland, M.B.; Ong, J.; Zaman, N.; Hirzallah, M.; Waisberg, E.; Masalkhi, M.; Tavakkoli, A. Applications of extended reality in spaceflight for human health and performance. Acta Astronaut. 2024, 214, 748–756.

- Waisberg, E.; Ong, J.; Masalkhi, M.; Zaman, N.; Sarker, P.; Lee, A.G.; Tavakkoli, A. The future of ophthalmology and vision science with the Apple Vision Pro. Eye 2024, 38, 242–243.

- Park, S.; Bokijonov, S.; Choi, Y. Review of Microsoft HoloLens applications over the past five years. Appl. Sci. 2021, 11, 7259.

- Long, Z.; Dong, H.; El Saddik, A. Interacting with New York City data by HoloLens through remote rendering. IEEE Consum. Electron. Mag. 2022, 11, 64–72.

- Kumar, M.; Bhatia, R.; Rattan, D. A Survey of Web Crawlers for Information Retrieval. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2017, 7, e1218.

- Uzun, E. A Novel Web Scraping Approach Using the Additional Information Obtained from Web Pages. IEEE Access 2020, 8, 61726–61740.

- Slater, M. Place Illusion and Plausibility Can Lead to Realistic Behaviour in Immersive Virtual Environments. Philos. Trans. R. Soc. B Biol. Sci. 2009, 364, 3549–3557.

- Siegrist, M.; Ung, C.Y.; Zank, M.; Marinello, M.; Kunz, A.; Hartmann, C.; Menozzi, M. Consumers’ food selection behaviors in three-dimensional (3D) virtual reality. Food Res. Int. 2019, 117, 50–59.

- Cheah, C.S.; Barman, S.; Vu, K.T.; Jung, S.E.; Mandalapu, V.; Masterson, T.D.; Gong, J. Validation of a virtual reality buffet environment to assess food selection processes among emerging adults. Appetite 2020, 153, 104741.

- Allman-Farinelli, M.; Ijaz, K.; Tran, H.; Pallotta, H.; Ramos, S.; Liu, J.; Calvo, R.A. A virtual reality food court to study meal choices in youth: Design and assessment of usability. JMIR Form. Res. 2019, 3, e12456.

- Oliver, J.H.; Hollis, J.H. Virtual reality as a tool to study the influence of the eating environment on eating behavior: A feasibility study. Foods 2021, 10, 89.

- Gouton, M.A.; Dacremont, C.; Trystram, G.; Blumenthal, D. Validation of food visual attribute perception in virtual reality. Food Qual. Prefer. 2021, 87, 104016.

- Meijers, M.H.; Smit, E.S.; de Wildt, K.; Karvonen, S.G.; van der Plas, D.; van der Laan, L.N. Stimulating sustainable food choices using virtual reality: Taking an environmental vs health communication perspective on enhancing response efficacy beliefs. Environ. Commun. 2022, 16, 1–22.

- Wan, X.; Qiu, L.; Wang, C. A virtual reality-based study of color contrast to encourage more sustainable food choices. Appl. Psychol. Health Well-Being 2022, 14, 591–605.

- Ledoux, T.; Nguyen, A.S.; Bakos-Block, C.; Bordnick, P. Using virtual reality to study food cravings. Appetite 2013, 71, 396–402.

- Schroeder, P.A.; Collantoni, E.; Lohmann, J.; Butz, M.V.; Plewnia, C. Virtual reality assessment of a high-calorie food bias: Replication and food-specificity in healthy participants. Behav. Brain Res. 2024, 471, 115096.

- Gorman, D.; Hoermann, S.; Lindeman, R.W.; Shahri, B. Using virtual reality to enhance food technology education. Int. J. Technol. Des. Educ. 2022, 32, 1659–1677.

- Plechatá, A.; Morton, T.; Perez-Cueto, F.J.; Makransky, G. Why just experience the future when you can change it: Virtual reality can increase pro-environmental food choices through self-efficacy. Technol. Mind Behav. 2022, 3, 11.

- Harris, N.M.; Lindeman, R.W.; Bah, C.S.F.; Gerhard, D.; Hoermann, S. Eliciting real cravings with virtual food: Using immersive technologies to explore the effects of food stimuli in virtual reality. Front. Psychol. 2023, 14, 956585.

- Ramousse, F.; Raimbaud, P.; Baert, P.; Helfenstein-Didier, C.; Gay, A.; Massoubre, C.; Lavoué, G. Does this virtual food make me hungry? Effects of visual quality and food type in virtual reality. Front. Virtual Real. 2023, 4, 1221651.

- Ammann, J.; Hartmann, C.; Peterhans, V.; Ropelato, S.; Siegrist, M. The relationship between disgust sensitivity and behaviour: A virtual reality study on food disgust. Food Qual. Prefer. 2020, 80, 103833.

- Bektas, S.; Natali, L.; Rowlands, K.; Valmaggia, L.; Di Pietro, J.; Mutwalli, H.; Cardi, V. Exploring correlations of food-specific disgust with eating disorder psychopathology and food interaction: A preliminary study using virtual reality. Nutrients 2023, 15, 4443.

- Gu, C.; Huang, T.; Wei, W.; Yang, C.; Chen, J.; Miao, W.; Sun, J. The effect of using augmented reality technology in takeaway food packaging to improve young consumers’ negative evaluations. Agriculture 2023, 13, 335.

- Dong, Y.; Sharma, C.; Mehta, A.; Torrico, D.D. Application of augmented reality in the sensory evaluation of yogurts. Fermentation 2021, 7, 147.

- Fritz, W.; Hadi, R.; Stephen, A. From tablet to table: How augmented reality influences food desirability. J. Acad. Mark. Sci. 2023, 51, 503–529.

- Honee, D.; Hurst, W.; Luttikhold, A.J. Harnessing augmented reality for increasing the awareness of food waste amongst Dutch consumers. Augment. Hum. Res. 2022, 7, 2.

- Mellos, I.; Probst, Y. Evaluating augmented reality for ‘real life’ teaching of food portion concepts. J. Hum. Nutr. Diet. 2022, 35, 1245–1254.

- Sonderegger, A.; Ribes, D.; Henchoz, N.; Groves, E. Food talks: Visual and interaction principles for representing environmental and nutritional food information in augmented reality. In Proceedings of the 2019 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Beijing, China, 10–18 October 2019; pp. 98–103.

- Juan, M.C.; Charco, J.L.; García-García, I.; Mollá, R. An augmented reality app to learn to interpret the nutritional information on labels of real packaged foods. Front. Comput. Sci. 2019, 1, 1.

- Capecchi, I.; Borghini, T.; Bellotti, M.; Bernetti, I. Enhancing education outcomes integrating augmented reality and artificial intelligence for education in nutrition and food sustainability. Sustainability 2025, 17, 2113.

- Nakano, K.; Horita, D.; Sakata, N.; Kiyokawa, K.; Yanai, K.; Narumi, T. DeepTaste: Augmented reality gustatory manipulation with GAN-based real-time food-to-food translation. In Proceedings of the 2019 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Beijing, China, 14–18 October 2019; pp. 212–223.

- Han, D.I.D.; Abreu e Silva, S.G.; Schröder, K.; Melissen, F.; Haggis-Burridge, M. Designing immersive sustainable food experiences in augmented reality: A consumer participatory co-creation approach. Foods 2022, 11, 3646.

- Milgram, P.; Kishino, F. A Taxonomy of Mixed Reality Visual Displays. IEICE Trans. Inf. Syst. 1994, 77, 1321–1329.

- Low, J.Y.; Lin, V.H.; Yeon, L.J.; Hort, J. Considering the application of a mixed reality context and consumer segmentation when evaluating emotional response to tea break snacks. Food Qual. Prefer. 2021, 88, 104113.

- Fujii, A.; Kochigami, K.; Kitagawa, S.; Okada, K.; Inaba, M. Development and evaluation of mixed reality co-eating system: Sharing the behavior of eating food with a robot could improve our dining experience. In Proceedings of the 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 31 August–4 September 2020; pp. 357–362.

- Nair, M.; Fernandez, R.E. Advancing mixed reality digital twins through 3D reconstruction of fresh produce. IEEE Access 2024, 12, 4315–4327.

- Ghavamian, P.; Beyer, J.H.; Orth, S.; Zech, M.J.N.; Müller, F.; Matviienko, A. The Bitter Taste of Confidence: Exploring Audio-Visual Taste Modulation in Immersive Reality. In Proceedings of the 2025 ACM International Conference on Interactive Media Experiences, Stockholm, Sweden, 18–21 June 2025; ACM: New York, NY, USA, 2025; pp. 462–467.

- Long, J.W.; Masters, B.; Sajjadi, P.; Simons, C.; Masterson, T.D. The Development of an Immersive Mixed-Reality Application to Improve the Ecological Validity of Eating and Sensory Behavior Research. Front. Nutr. 2023, 10, 1170311.

- Waterlander, W.E.; Jiang, Y.; Steenhuis, I.H.M.; Mhurchu, C.N. Using a 3D Virtual Supermarket to Measure Food Purchase Behavior: A Validation Study. J. Med. Internet Res. 2015, 17, e3774.

- Pini, V.; Orso, V.; Pluchino, P.; Gamberini, L. Augmented Grocery Shopping: Fostering Healthier Food Purchases through AR. Virtual Real. 2023, 27, 2117–2128.

- Karkar, A.; Salahuddin, T.; Almaadeed, N.; Aljaam, J.M.; Halabi, O. A Virtual Reality Nutrition Awareness Learning System for Children. In Proceedings of the 2018 IEEE Conference on e-Learning, e-Management and e-Services (IC3e), Langkawi, Malaysia, 26–28 November 2018; pp. 97–102.

- Kalimuthu, I.; Karpudewan, M.; Baharudin, S.M. An Interdisciplinary and Immersive Real-Time Learning Experience in Adolescent Nutrition Education through Augmented Reality Integrated with Science, Technology, Engineering, and Mathematics. J. Nutr. Educ. Behav. 2023, 55, 914–923.

- Kelmenson, S. Between the farm and the fork: Job quality in sustainable food systems. Agric. Hum. Values 2023, 40, 317–358.

- Gottlieb, N.; Jungwirth, I.; Glassner, M.; de Lange, T.; Mantu, S.; Forst, L. Immigrant workers in the meat industry during COVID-19: Comparing governmental protection in Germany, the Netherlands, and the USA. Glob. Health 2025, 21, 10.

- Anderson, J.D.; Mitchell, J.L.; Maples, J.G. Invited review: Lessons from the COVID-19 pandemic for food supply chains. Appl. Anim. Sci. 2021, 37, 738–747.

- Moerman, F.; Kastelein, J.; Rugh, T. Hygienic Design of Food Processing Equipment. In Food Safety Management; Elsevier: Amsterdam, The Netherlands, 2023; pp. 623–678.

- Rosati, G.; Oscari, F.; Barbazza, L.; Faccio, M. Throughput maximization and buffer design of robotized flexible production systems with feeder renewals and priority rules. Int. J. Adv. Manuf. Technol. 2016, 85, 891–907.

- Wang, Z.; Hirai, S.; Kawamura, S. Challenges and opportunities in robotic food handling: A review. Front. Robot. AI 2022, 8, 789107.

- Talpur, M.S.H.; Shaikh, M.H. Automation of mobile pick and place robotic system for small food industry. arXiv 2012, arXiv:1203.4475.

- Lyu, Y.; Wu, F.; Wang, Q.; Liu, G.; Zhang, Y.; Jiang, H.; Zhou, M. A review of robotic and automated systems in meat processing. Front. Robot. AI 2025, 12, 1578318.

- McClintock, H.; Temel, F.Z.; Doshi, N.; Koh, J.S.; Wood, R.J. The milliDelta: A high-bandwidth, high-precision, millimeter-scale Delta robot. Sci. Robot. 2018, 3, eaar3018.

- Mehmood, Y.; Cannella, F.; Cocuzza, S. Analytical modeling, virtual prototyping, and performance optimization of Cartesian robots: A comprehensive review. Robotics 2025, 14, 62.

- Matheson, E.; Minto, R.; Zampieri, E.G.; Faccio, M.; Rosati, G. Human–robot collaboration in manufacturing applications: A review. Robotics 2019, 8, 100.

- Ngui, I.; McBeth, C.; He, G.; Santos, A.C.; Soares, L.; Morales, M.; Amato, N.M. Extended Reality System for Robotic Learning from Human Demonstration. In Proceedings of the 2025 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Orlando, FL, USA, 22–26 March 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 1304–1305.

- Zhang, Y.; Gao, P.; Wang, Z.; He, Q. Research on Status Monitoring and Positioning Compensation System for Digital Twin of Parallel Robots. Sci. Rep. 2025, 15, 7432.

- Pai, Y.S.; Yap, H.J.; Md Dawal, S.Z.; Ramesh, S.; Phoon, S.Y. Virtual Planning, Control, and Machining for a Modular-Based Automated Factory Operation in an Augmented Reality Environment. Sci. Rep. 2016, 6, 27380.

- Badia, S.B.I.; Silva, P.A.; Branco, D.; Pinto, A.; Carvalho, C.; Menezes, P.; Rodrigues, L.; Almeida, S.F.; Pilacinski, A. Virtual Reality for Safe Testing and Development in Collaborative Robotics: Challenges and Perspectives. Electronics 2022, 11, 1726.

- Caldwell, D.G. Robotics and Automation in the Food Industry: Current and Future Technologies; Elsevier: Amsterdam, The Netherlands, 2012.

- Blanes Campos, C.; Mellado Arteche, M.; Ortiz Sánchez, M.C.; Valera Fernández, Á. Technologies for Robot Grippers in Pick and Place Operations for Fresh Fruits and Vegetables. In Proceedings of the VII Congreso Ibérico de Agroingeniería, Logroño, Spain, 28–30 September 2011; pp. 1480–1487.

- Lien, T.K. Gripper Technologies for Food Industry Robots. In Robotics and Automation in the Food Industry; Caldwell, D.G., Ed.; Woodhead Publishing: Cambridge, UK, 2013; pp. 143–170.

- Salvietti, G.; Iqbal, Z.; Malvezzi, M.; Eslami, T.; Prattichizzo, D. Soft Hands with Embodied Constraints: The Soft ScoopGripper. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 2758–2764.

- Gebbers, R.; Adamchuk, V.I. Precision Agriculture and Food Security. Science 2010, 327, 828–831.

- Hillers, V.N.; Medeiros, L.; Kendall, P.; Chen, G.; Dimascola, S. Consumer Food-Handling Behaviors Associated with Prevention of Foodborne Illnesses. J. Food Prot. 2003, 66, 1893–1899.

- Curi, P.R.; Pires, E.J.; Bornia, A.C. Challenges and Opportunities in the Adoption of Industry 4.0 by the Food and Beverage Sector. Procedia CIRP 2020, 93, 268–273.

- Masey, R.J.M.; Gray, J.O.; Dodd, T.J.; Caldwell, D.G. Guidelines for the Design of Low-Cost Robots for the Food Industry. Ind. Robot 2010, 37, 509–517.

- Derossi, A.; Di Palma, E.; Moses, J.A.; Santhoshkumar, P.; Caporizzi, R.; Severini, C. Avenues for non-conventional robotics technology applications in the food industry. Food Res. Int. 2023, 173, 113265.

- Deponte, H.; Tonda, A.; Gottschalk, N.; Bouvier, L.; Delaplace, G.; Augustin, W.; Scholl, S. Two complementary methods for the computational modeling of cleaning processes in food industry. Comput. Chem. Eng. 2020, 135, 106733.

- Figgis, B.; Bermudez, V.; Garcia, J.L. PV module vibration by robotic cleaning. Sol. Energy 2023, 250, 168–172.

- Tang, X.; Qiu, F.; Li, H.; Zhang, Q.; Quan, X.; Tao, C.; Liu, Z. Investigation of the intensified chaotic mixing and flow structures evolution mechanism in stirred reactor with torsional rigid-flexible impeller. Ind. Eng. Chem. Res. 2023, 62, 1984–1996.

- Kalayci, O.; Pehlivan, I.; Akgul, A.; Coskun, S.; Kurt, E. A new chaotic mixer design based on the Delta robot and its experimental studies. Math. Probl. Eng. 2021, 2021, 6615856.

- Mizrahi, M.; Golan, A.; Mizrahi, A.B.; Gruber, R.; Lachnise, A.Z.; Zoran, A. Digital gastronomy: Methods & recipes for hybrid cooking. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology, Tokyo, Japan, 16–19 October 2016; pp. 541–552.

- Zoran, A.; Gonzalez, E.A.; Mizrahi, A.B. Cooking with computers: The vision of digital gastronomy. In Gastronomy and Food Science; Elsevier: Amsterdam, The Netherlands, 2021; pp. 35–53.

- Danno, D.; Hauser, S.; Iida, F. Robotic Cooking Through Pose Extraction from Human Natural Cooking Using OpenPose. In Intelligent Autonomous Systems 16; Ang, M.H., Jr., Asama, H., Lin, W., Foong, S., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2022; pp. 288–298.

- Liu, J.; Chen, Y.; Dong, Z.; Wang, S.; Calinon, S.; Li, M.; Chen, F. Robot cooking with stir-fry: Bimanual non-prehensile manipulation of semi-fluid objects. IEEE Robot. Autom. Lett. 2022, 7, 5159–5166.

- Sochacki, G.; Hughes, J.; Hauser, S.; Iida, F. Closed-loop robotic cooking of scrambled eggs with a salinity-based ‘taste’ sensor. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 594–600.

- Abdurrahman, E.E.M.; Ferrari, G. Digital Twin applications in the food industry: A review. Front. Sustain. Food Syst. 2025, 9, 1538375.

- Verboven, P.; Defraeye, T.; Datta, A.K.; Nicolai, B. Digital twins of food process operations: The next step for food process models? Curr. Opin. Food Sci. 2020, 35, 79–87.

- Koulouris, A.; Misailidis, N.; Petrides, D. Applications of process and digital twin models for production simulation and scheduling in the manufacturing of food ingredients and products. Food Bioprod. Process. 2021, 126, 317–333.

- Maheshwari, P.; Kamble, S.; Belhadi, A.; Mani, V.; Pundir, A. Digital twin implementation for performance improvement in process industries—A case study of food processing company. Int. J. Prod. Res. 2023, 61, 8343–8365.

- Kaarlela, T.; Padrao, P.; Pitkäaho, T.; Pieskä, S.; Bobadilla, L. Digital twins utilizing XR-technology as robotic training tools. Machines 2022, 11, 13.

- Miner, N.E.; Stansfield, S.A. An interactive virtual reality simulation system for robot control and operator training. In Proceedings of the 1994 IEEE International Conference on Robotics and Automation, San Diego, CA, USA, 8–13 May 1994; pp. 1428–1435.

- Burdea, G.C. Invited review: The synergy between virtual reality and robotics. IEEE Trans. Robot. Autom. 1999, 15, 400–410.

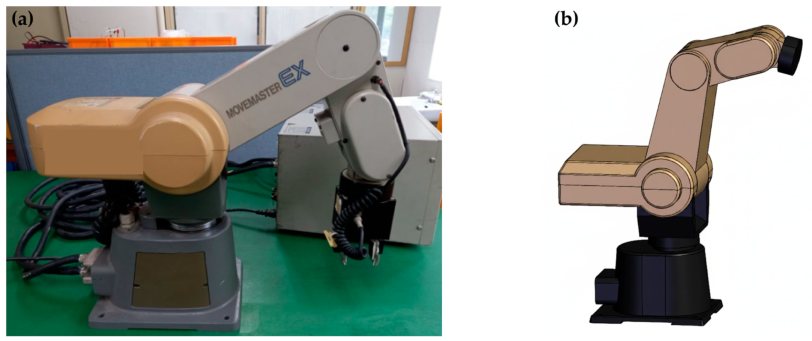

- Crespo, R.; García, R.; Quiroz, S. Virtual reality application for simulation and off-line programming of the Mitsubishi MoveMaster RV-M1 robot integrated with the Oculus Rift to improve students training. Procedia Comput. Sci. 2015, 75, 107–112.

- He, Z.; Liu, C.; Chu, X.; Negenborn, R.R.; Wu, Q. Dynamic Anti-Collision A-Star Algorithm for Multi-Ship Encounter Situations. Appl. Ocean Res. 2022, 118, 102995.

- González de Cosío Barrón, A.; Gonzalez Almaguer, C.A.; Berglund, A.; Apraiz Iriarte, A.; Saavedra Gastelum, V.; Peñalva, J. Immersive learning in agriculture: XR design of robotic milk production processes. In Proceedings of the DS 131: International Conference on Engineering and Product Design Education (E&PDE 2024), Birmingham, UK, 5–6 September 2024; pp. 575–580.

- Tian, X.; Pan, B.; Bai, L.; Wang, G.; Mo, D. Fruit picking robot arm training solution based on reinforcement learning in digital twin. J. ICT Stand. 2023, 11, 261–282.

- Singh, R.; Seneviratne, L.; Hussain, I. A Deep Learning-Based Approach to Strawberry Grasping Using a Telescopic-Link Differential Drive Mobile Robot in ROS-Gazebo for Greenhouse Digital Twin Environments. IEEE Access 2024, 13, 361–381.

- Jin, S. A Study on Innovation Resistance and Adoption Regarding Extended Reality Devices. J. Korea Contents Assoc. 2021, 21, 918–940.

| Category | Technical Specifications (H/W, S/W, Key Feature) | Key Outcome (Quantitative) | Summary of Findings | Reference |
|---|---|---|---|---|
| SVC | H/W: HTC Vive; S/W: Unity; Key Feature: Participants ate pizza rolls while heart rate, skin temperature, and mastication data were measured. | The restaurant scene significantly increased presence scores (5.0 vs. 3.9, p < 0.006) and heart rate (83 vs. 79 bpm, p = 0.02) compared with a blank room, but did not significantly affect total food intake (p = 0.98). | The virtual eating environment altered participants’ sense of presence and physiological arousal but did not significantly change their total food intake or sensory ratings. | Oliver & Hollis [44] |
| SVC | H/W: HTC Vive; S/W: Unity; Key Feature: Photogrammetry was used to create highly realistic virtual cookie models. | Perceptual differences between cookie types were greater than those between the real and virtual versions of the same cookie; 33 of 40 descriptors discriminated the products similarly. | Visual perception of virtual and real cookies was highly consistent, with only minor discrepancies in brightness and color contrast. | Gouton et al. [45] |
| EFPB | H/W: HTC Vive; S/W: Unity; Key Feature: Interactive pop-ups with impact information appeared when a product was picked up. | Impact pop-ups significantly increased pro-environmental food choices (F(4, 241) = 16.80, p < 0.001), an effect mediated by higher personal response efficacy. | VR pop-ups boosted sustainable choices by increasing personal efficacy, an effect consistent across message types (health vs. environment, text vs. visual). | Meijers et al. [46] |
| EFPB | H/W: 17-in. computer monitor (desktop VR); S/W: Vizard 4.0; Key Feature: Background color (red vs. green) was used as a behavioral nudge for food choice. | A red (vs. green) table background significantly reduced meat-heavy meal choices (61.2% vs. 66.9%; p = 0.007). | A red table background acted as a nudge, reducing the visual appeal of meat and prompting more plant-based choices. | Wan et al. [47] |
| SSV | H/W: HMD; S/W: NeuroVR; Key Feature: Compared food-craving levels induced by four cues: neutral VR, food VR, food photos, and real food. | For primed participants, VR-induced cravings were significantly higher than for neutral cues (p < 0.05), similar to food photos, but significantly lower than for real food (p < 0.05). | VR food stimuli elicited craving levels comparable to food photographs but significantly lower than real food. | Ledoux et al. [48] |
| MBE | H/W: Oculus Rift DK2; S/W: Unity; Key Feature: Hand-motion tracking measured reaction times for grasping (approach) vs. pushing (avoidance) tasks. | Motion-tracking data showed that push responses were comparable, but grasping and collecting high-calorie food was significantly faster than for low-calorie food (e.g., object contact time, p = 0.021; collection time, p = 0.018). | VR motion tracking revealed a motor-based approach bias: healthy participants grasped high-calorie foods faster than low-calorie or neutral items. | Schroeder et al. [49] |
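
Schroeder et al. [49] compared grasp (approach) and push (avoidance) times across food categories. As an illustration only, a simple per-participant approach-bias index can be computed as the difference between the median grasp time for a control category and that for a target category. The function name, the use of medians (chosen because they are robust to occasional slow trials), and the sample times are assumptions for this sketch, not the metric or data reported in [49].

```python
from statistics import median


def approach_bias(target_times, control_times):
    """Approach-bias index from grasp (approach) times, in seconds.

    Returns median(control) - median(target). A positive value means the
    target category (e.g. high-calorie food) was grasped faster than the
    control category (e.g. low-calorie food), i.e. an approach bias.
    Hypothetical helper for illustration; not the metric used in [49].
    """
    if not target_times or not control_times:
        raise ValueError("each category needs at least one trial")
    return median(control_times) - median(target_times)


# Hypothetical object-contact times (s) for one participant; not study data.
high_calorie = [0.82, 0.79, 0.88, 0.75, 0.81]
low_calorie = [0.91, 0.95, 0.87, 0.93, 0.90]

print(f"approach bias: {approach_bias(high_calorie, low_calorie):.2f} s")
```

In a real analysis, the same index would typically be computed per participant and then compared across groups or conditions.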

| Category | Technical Specifications (H/W, S/W, Key Feature) | Key Outcome (Quantitative) | Summary of Findings | Reference |
|---|---|---|---|---|
| SCSA | H/W: Mobile devices; S/W: Custom mobile AR application; Key Feature: AR was used to superimpose food items onto the real-time environment, and responses were compared with non-AR formats. | In a field experiment at a restaurant (Study 1), diners who viewed desserts in AR were significantly more likely to purchase than those using a standard digital menu (41.2% vs. 18.0%; p = 0.01). | AR-based food visualizations boosted desirability and purchase intent by enhancing personal relevance and process-oriented mental simulation, consistently across food types and devices. | Fritz et al. [58] |
| ENSA | H/W: Mobile phone, optional Aryzon headset; S/W: Aryzon AR SDK, Unity; Key Feature: AR app that visualizes catering food waste by projecting 3D models into users’ environments. | In a pilot evaluation (N = 19), 58% of participants rated the app as motivating for food waste reduction (4–5 on a 5-point scale), 60% agreed it improved their understanding of the scale of waste, and all participants reported that the waste was larger than expected. | AR visualization of food waste data increased consumer awareness and comprehension of waste quantities, showing potential to incentivize reduction behaviors, although it was tested on a small sample. | Honee et al. [59] |
| ENSA | H/W: Smartphone; S/W: JavaScript libraries, Blender; Key Feature: Quasi-experimental study comparing an AR food portion app (1:1 scale) with an online tool and an infographic control. | In a pre-test/post-test comparison of estimation accuracy, the AR tool group showed the largest improvement (+12.2%), outperforming both the online tool group (+11.6%) and the infographic control group, which showed a decrease (−1.7%). | The AR tool was the most effective method for improving the accuracy of nutrition students’ food portion size estimations, compared with an online tool and a traditional infographic. | Mellos & Probst [60] |
| DPAF | H/W: OnePlus 5T smartphone; S/W: Custom mobile AR application; Key Feature: Compared AR vs. static-page app versions for presenting environmental and nutritional food information. | Between-subjects study (N = 84): AR users learned significantly more than static-page users (F(1, 78) = 4.8, p < .05), while both versions scored highly on usability (mean SUS = 86.4). | AR enhanced user learning about food products without compromising usability or aesthetics, supporting its credibility as a medium for food information. | Sonderegger et al. [61] |
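
Sonderegger et al. [61] report usability as a mean System Usability Scale (SUS) score of 86.4. For readers unfamiliar with the instrument, the standard SUS scoring rule can be sketched as follows: ten items are rated from 1 to 5, odd-numbered (positively worded) items contribute the rating minus 1, even-numbered (negatively worded) items contribute 5 minus the rating, and the 0–40 sum is multiplied by 2.5 to give a 0–100 score. The function names and the response vectors below are hypothetical and do not come from the cited study.

```python
from statistics import mean


def sus_score(responses):
    """Score one System Usability Scale questionnaire on a 0-100 scale.

    responses: ten integer ratings (1-5) in questionnaire order.
    Odd-numbered items contribute (rating - 1); even-numbered items
    contribute (5 - rating); the 0-40 total is multiplied by 2.5.
    """
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("ratings must be integers from 1 to 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        total += (rating - 1) if item_number % 2 == 1 else (5 - rating)
    return total * 2.5


def mean_sus(all_responses):
    """Mean SUS score over participants (how a study-level mean is formed)."""
    return mean(sus_score(r) for r in all_responses)


# Hypothetical responses from two participants.
participants = [
    [5, 1, 5, 2, 4, 1, 5, 1, 4, 2],
    [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],
]
print([sus_score(p) for p in participants], mean_sus(participants))
```

Because the items alternate in polarity, the rule reverses the even items so that a higher score always means better perceived usability.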

| Application Domain | XR Technology | Technical Specifications (H/W, S/W, Method) | Key Outcome (Quantitative) | Summary of Efficacy & Limitations | Reference |
|---|---|---|---|---|---|
| Research on Contextual Effects of the Eating Experience | VR | H/W: HTC Vive; S/W: Unity; Method: Real food was consumed within a fully virtual environment. | The virtual restaurant increased presence (p < 0.006) and arousal (p = 0.02) but had no significant effect on total intake (p = 0.98) or sensory ratings. | Efficacy: Provides high experimental control for studying psychological and physiological responses. Limitation: The bulky HMD setup can disrupt natural eating behavior, and the virtual context may not affect key outcomes such as intake. | [44] |
| Research on Contextual Effects of the Eating Experience | AR | H/W: Meta Quest 3; S/W: Unity; Method: Sugar water was drunk through a straw with AR visual filters and synchronized audio cues. | The sweet-associated pink filter reduced bitterness when presented alone but paradoxically increased bitterness when combined with a sweet-associated audio cue (p = 0.044). | Efficacy: Enables natural interaction with real food and drinks while studying subtle crossmodal effects. Limitation: Restricted to simple chromatic overlays; cannot simulate richer environmental contexts. | [70] |
| Research on Contextual Effects of the Eating Experience | MR | H/W: Meta Quest Pro; S/W: Unity; Method: Real food was eaten with the hands and tabletop visible via passthrough, embedded in a virtual restaurant. | Experts rated MR as more ecologically valid than a lab booth but less so than a real restaurant (mean 72.6/100). | Efficacy: Offers a methodological “middle ground,” combining VR’s immersion with AR’s realism to balance control and ecological validity. Limitation: Depends on passthrough quality (resolution, latency) for a naturalistic experience. | [71] |
| Supermarket Food Choice Studies | VR | H/W: PC (keyboard/mouse); S/W: Unity; Method: A desktop 3D virtual supermarket was validated by comparing purchases with real grocery receipts. | The top four food groups matched real shopping; significant differences appeared in 6 of 18 categories, notably dairy (+6.5%, p < 0.001). | Efficacy: Suitable for tracking overall purchasing patterns. Limitation: Less accurate for specific categories (e.g., fresh produce); lacks HMD immersion. | [72] |
| Supermarket Food Choice Studies | AR | H/W: Microsoft HoloLens; S/W: Unity, HoloToolkit; Method: Compared an AR supermarket (3D models + nutritional overlays) with traditional packaging. | The AR group more often chose high-nutrition products (p < 0.001) and relied on nutrition information (p = 0.034), and also spent more time exploring (p = 0.02). | Efficacy: Effective at shifting attention to nutritional data and promoting healthier choices. Limitation: No real purchase context (no prices), HMD burden, limited student sample. | [73] |
| Nutrition Education | VR | H/W: Oculus DK2; S/W: Vizard; Method: Children prepared a virtual breakfast in immersive VR; compared with paper- and narrative-based learning. | VR group quiz score 87% (narrative 88%, paper 85%); task time was longer in VR (112 s vs. 38 s). | Efficacy: Highly engaging and effective for immediate knowledge transfer. Limitation: Longer completion time; requires HMD hardware; cultural generalizability not tested. | [74] |
| Nutrition Education | AR | H/W: Smartphones (Android/iOS); S/W: Vuforia Engine with Unity; Method: An 8-week AR nutrition curriculum (8 activities + 3 STEM projects) grounded in Kolb’s experiential learning theory. | The 8-week curriculum led to statistically significant improvements in adolescents’ knowledge (mean score +3.82), attitude (+1.88), and self-reported behavior (+1.04), with p < 0.001 for all changes. | Efficacy: Improved knowledge, attitudes, and behaviors; effective as a long-term, structured, and scalable curriculum within formal schools. Limitation: Effects reflect the whole curriculum rather than AR alone; tested in one school with a limited sample, limiting generalizability. | [75] |

| Robot Type | Key Characteristics | Food Industry Suitability | XR Integration Potential | Reference |
|---|---|---|---|---|
| Articulated Robot | Human arm-like structure; many degrees of freedom; wide working range | Meat processing, packaging, palletizing | Intuitive control and simulation of complex movements in virtual environments | [87] |
| Parallel Robot | Multiple arms connected to a single platform; high-speed, high-precision motion within a limited workspace | Sorting and classification, packaging | Status monitoring and position compensation | [88] |
| Cartesian Robot | Linear motion along the X-Y-Z axes; simple structure, high precision, and high load-bearing capacity | Packaging, palletizing, and other simple, repetitive downstream processes | Intuitive tuning of path and speed profiles; real-time collision verification | [89] |
| Collaborative Robot | Can work alongside workers without safety fencing; intuitive programming, high flexibility | Quality inspection, collaborative assembly | Safe simulation of hazardous scenarios | [90] |
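
The Cartesian-robot row lists tuning of speed profiles and real-time collision verification as XR integration potential. As a minimal sketch, assuming a single-axis move along X with symmetric acceleration and deceleration limits, a trapezoidal velocity profile (which falls back to a triangular profile when the move is too short to reach the speed limit) can be combined with a sampled axis-aligned bounding-box (AABB) check against a fixed obstacle. The class and function names, limits, and box dimensions are hypothetical; fixed-step sampling can miss obstacles thinner than the distance travelled per step, so a production check would use swept volumes or finer steps.

```python
import math
from dataclasses import dataclass, field


@dataclass
class TrapezoidProfile:
    """Hypothetical single-axis move with symmetric accel/decel limits."""
    distance: float  # m, move length (> 0)
    v_max: float     # m/s, velocity limit
    a_max: float     # m/s^2, acceleration and deceleration limit
    v_peak: float = field(init=False)
    t_acc: float = field(init=False)
    t_cruise: float = field(init=False)
    duration: float = field(init=False)

    def __post_init__(self):
        if min(self.distance, self.v_max, self.a_max) <= 0:
            raise ValueError("distance, v_max and a_max must be positive")
        # Too short to reach v_max: the trapezoid collapses to a triangle.
        if self.distance >= self.v_max ** 2 / self.a_max:
            self.v_peak = self.v_max
        else:
            self.v_peak = math.sqrt(self.distance * self.a_max)
        self.t_acc = self.v_peak / self.a_max
        d_acc = 0.5 * self.a_max * self.t_acc ** 2
        self.t_cruise = max(0.0, (self.distance - 2 * d_acc) / self.v_peak)
        self.duration = 2 * self.t_acc + self.t_cruise

    def position(self, t):
        """Distance travelled (m) at time t (s), clamped to the move."""
        t = min(max(t, 0.0), self.duration)
        a, ta, v = self.a_max, self.t_acc, self.v_peak
        d_acc = 0.5 * a * ta ** 2
        if t < ta:  # accelerating
            return 0.5 * a * t ** 2
        if t < ta + self.t_cruise:  # cruising at v_peak
            return d_acc + v * (t - ta)
        td = t - ta - self.t_cruise  # decelerating
        return d_acc + v * self.t_cruise + v * td - 0.5 * a * td ** 2


def boxes_overlap(lo1, hi1, lo2, hi2):
    """True if two axis-aligned boxes (min/max corners in X-Y-Z) overlap."""
    return all(lo1[i] <= hi2[i] and lo2[i] <= hi1[i] for i in range(3))


def first_collision(profile, start, half_size, obs_lo, obs_hi, dt=0.01):
    """First sampled time (s) at which the tool box touches the obstacle.

    The tool moves along +X from `start`; returns None if no sample collides.
    """
    steps = math.ceil(profile.duration / dt)
    for k in range(steps + 1):
        t = min(k * dt, profile.duration)
        centre = (start[0] + profile.position(t), start[1], start[2])
        lo = tuple(c - h for c, h in zip(centre, half_size))
        hi = tuple(c + h for c, h in zip(centre, half_size))
        if boxes_overlap(lo, hi, obs_lo, obs_hi):
            return t
    return None


# Hypothetical move: 0.5 m along X, 1.0 m/s and 4.0 m/s^2 limits.
move = TrapezoidProfile(distance=0.5, v_max=1.0, a_max=4.0)
hit = first_collision(move, start=(0.0, 0.2, 0.1), half_size=(0.05, 0.05, 0.05),
                      obs_lo=(0.3, 0.1, 0.0), obs_hi=(0.4, 0.3, 0.2))
print(f"duration: {move.duration:.3f} s")
print("no collision" if hit is None else f"first contact near t = {hit:.2f} s")
```

In an XR front end, an operator could adjust `v_max` and `a_max` interactively and immediately see both the new cycle time and whether the tool envelope intersects a fixture.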

| Gripper Type | Description | Food Texture Compatibility | Hygiene Compliance | XR Integration Potential |
|---|---|---|---|---|
| Pinching | Mechanical gripping between two or more fingers. Grips via friction between the fingers and the part, and releases by opening the fingers. | Rigid, semi-rigid non-deformable, deformable, non-sticky, slippery. Used for pick-and-place operations in baked goods production. | Food residue may become trapped in mechanical joints, making cleaning difficult. | Simulating grip-force adjustment in a virtual environment to prevent product damage |
| Enclosing | Claw/jaw-like attachments enclose the part to achieve a partial or full grip; releases by opening the apparatus. | Rigid, semi-rigid non-deformable, deformable, non-sticky, slippery. Used for sorting, packaging, and palletizing fruits and vegetables. | Food residue may become trapped in mechanical joints, making cleaning difficult. | Simulating grip strategies and forces for complex food shapes |
| Pinning | Inserts one or more pins into the part, forming a surface or deep grip through penetration; releases by withdrawing the pins. | Rigid, semi-rigid non-deformable, slippery. Used for pick-and-place operations for meat and poultry, fish, and seafood. | May create microbial contamination pathways. | Simulation for optimizing penetration depth; a virtual training system for penetration points on specific foods |
| Pneumatic | Grasps by vacuum suction generated with air or pressurized gas; releases by removing the vacuum. | Rigid, semi-rigid non-deformable, deformable; smooth-surfaced, non-sticky, slippery. Used for egg pick-and-place, packaging, and palletizing. | Food residue may accumulate. | Real-time AR overlays for monitoring vacuum pressure and seal integrity |
| Freezing | Rapidly freezes a thin layer of ice at the contact point between the gripper and the food; releases by quickly melting the ice. | Rigid, non-rigid, semi-rigid non-deformable, deformable; smooth surface. Used for pick-and-place of meat, poultry, fish, seafood, or frozen fruits and vegetables. | Risk of microbial growth during freezing and thawing. | Visualization of temperature gradients in virtual environments and optimization of freeze–thaw cycles |
| Levitating | Based on Bernoulli’s principle: the gripper lifts parts using an air-velocity differential and releases them by blocking the airflow. | Rigid, non-rigid, semi-rigid non-deformable, deformable; smooth surface. Used for pick-and-place of soft, light foods such as baked goods. | Non-contact in principle and therefore hygienically advantageous in theory, but further research is needed. | Simulating airflow levitation for the delicate handling of light foods |
| Scooping | Flat or parabolic gripper that picks up food with a “sweeping” motion and releases it by tilting. | Rigid, non-rigid, semi-rigid, non-deformable, slippery, non-sticky. Used for sauces, powders, etc. | Food residue may accumulate. | Predicting material skew and spillage during scooping |
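
Several rows above mention simulating grip force, or monitoring vacuum, in a virtual environment. A minimal sketch, using common textbook approximations rather than any method from the cited sources: for a vertical lift, a friction (pinching) grip needs a normal force per finger of at least m(g + a)S/(μn), where m is the mass, a the lifting acceleration, S a safety factor, μ the friction coefficient, and n the number of contact surfaces. The usable holding force of a suction cup is roughly ΔP·A/S, where ΔP is the vacuum level and A the cup area. The function names, the safety factor of 2, and all masses, friction coefficients, vacuum levels, and damage thresholds below are hypothetical.

```python
import math

G = 9.81  # m/s^2, standard gravity


def pinch_force_per_finger(mass_kg, mu, n_contacts=2, accel=0.0, safety=2.0):
    """Minimum normal force (N) per finger for a friction pinch grip.

    Vertical lift: friction at n_contacts contacts (coefficient mu) must
    carry weight plus inertial load, m * (g + a), times a safety factor.
    Moments, deformation, and surface moisture are ignored.
    """
    return mass_kg * (G + accel) * safety / (mu * n_contacts)


def vacuum_holding_force(delta_p_pa, cup_diameter_m, n_cups=1, safety=2.0):
    """Usable vertical holding force (N) of circular suction cups.

    Theoretical force = pressure difference x cup area; the safety factor
    allows for leakage on porous or uneven food surfaces.
    """
    area = math.pi * (cup_diameter_m / 2) ** 2
    return delta_p_pa * area * n_cups / safety


def grip_force_window(min_force, damage_force):
    """(low, high) usable grip-force range, or None if the product would be
    damaged before it can be held securely."""
    return (min_force, damage_force) if min_force < damage_force else None


# Hypothetical bread roll: 60 g, mu = 0.5, lifted at 5 m/s^2,
# with an assumed damage threshold of 3.0 N per finger.
f_min = pinch_force_per_finger(0.06, mu=0.5, accel=5.0)
print(f"pinch: {f_min:.2f} N per finger, window:", grip_force_window(f_min, 3.0))

# Hypothetical egg: 60 g, one 20 mm cup at 60 kPa vacuum, lifted at 5 m/s^2.
need = 0.06 * (G + 5.0)
have = vacuum_holding_force(60_000, 0.020)
print(f"vacuum: need {need:.2f} N, available {have:.2f} N")
```

A virtual commissioning tool could sweep these inputs across a product range and flag cases where `grip_force_window` returns `None`, that is, where no pinch force both holds the item and avoids damaging it.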

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Woo, S.; Kim, Y.; Kim, S. Converging Extended Reality and Robotics for Innovation in the Food Industry. AgriEngineering 2025, 7, 322. https://doi.org/10.3390/agriengineering7100322
