YOLO-FDLU: A Lightweight Improved YOLO11s-Based Algorithm for Accurate Maize Pest and Disease Detection

Abstract

1. Introduction

- A modified detection framework based on YOLO11s is proposed for accurate identification of typical maize pests and diseases;

- Multiple lightweight and feature-enhancement modules are constructed and evaluated for their performance improvements;

- A comprehensive evaluation of model precision and robustness is conducted using standard performance metrics and confusion matrices.

2. Materials and Methods

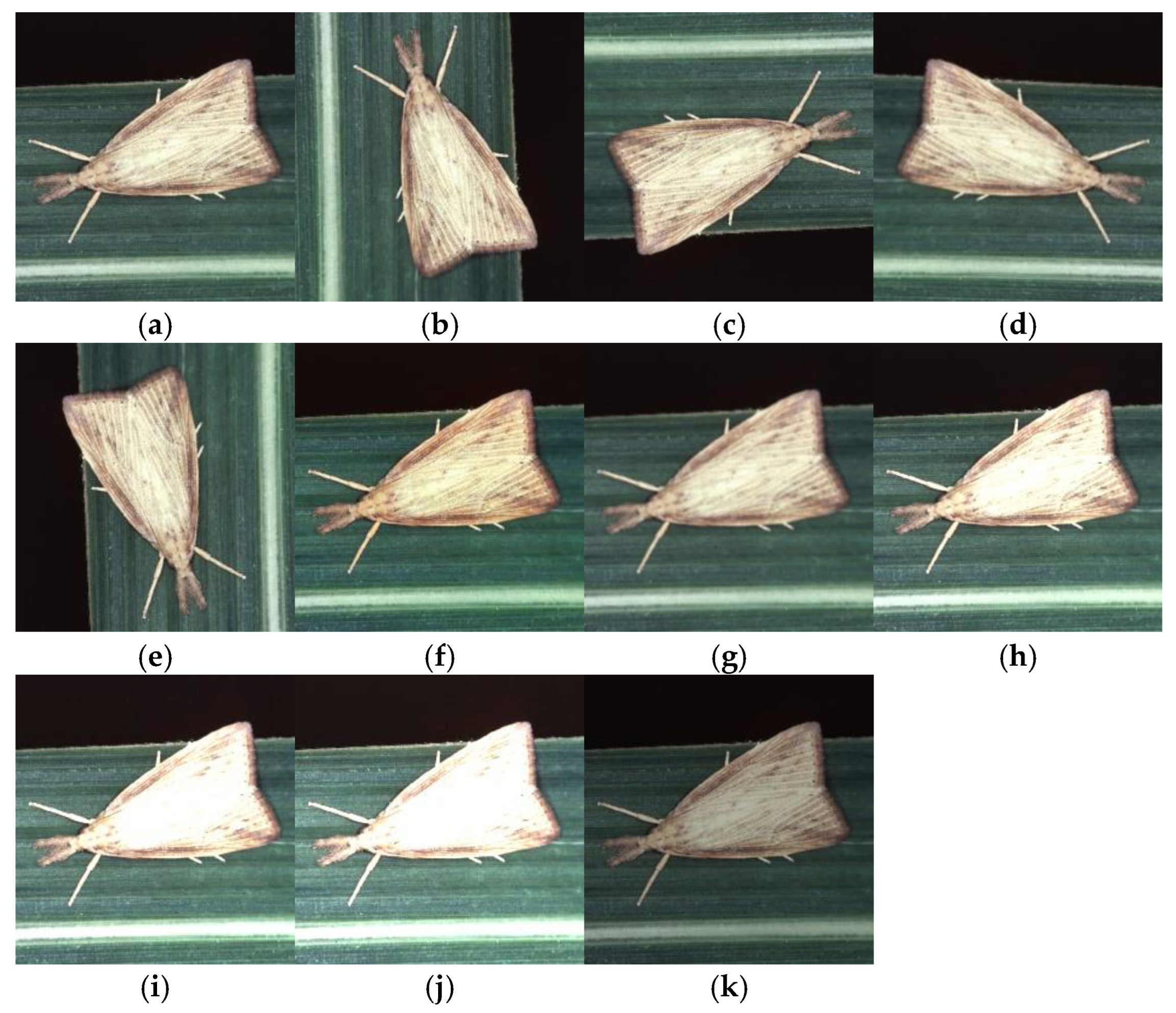

2.1. Datasets

2.1.1. Dataset Description

2.1.2. Image Data Augmentation

2.1.3. Dataset Size Evaluation

2.2. YOLO-FDLU Model Design

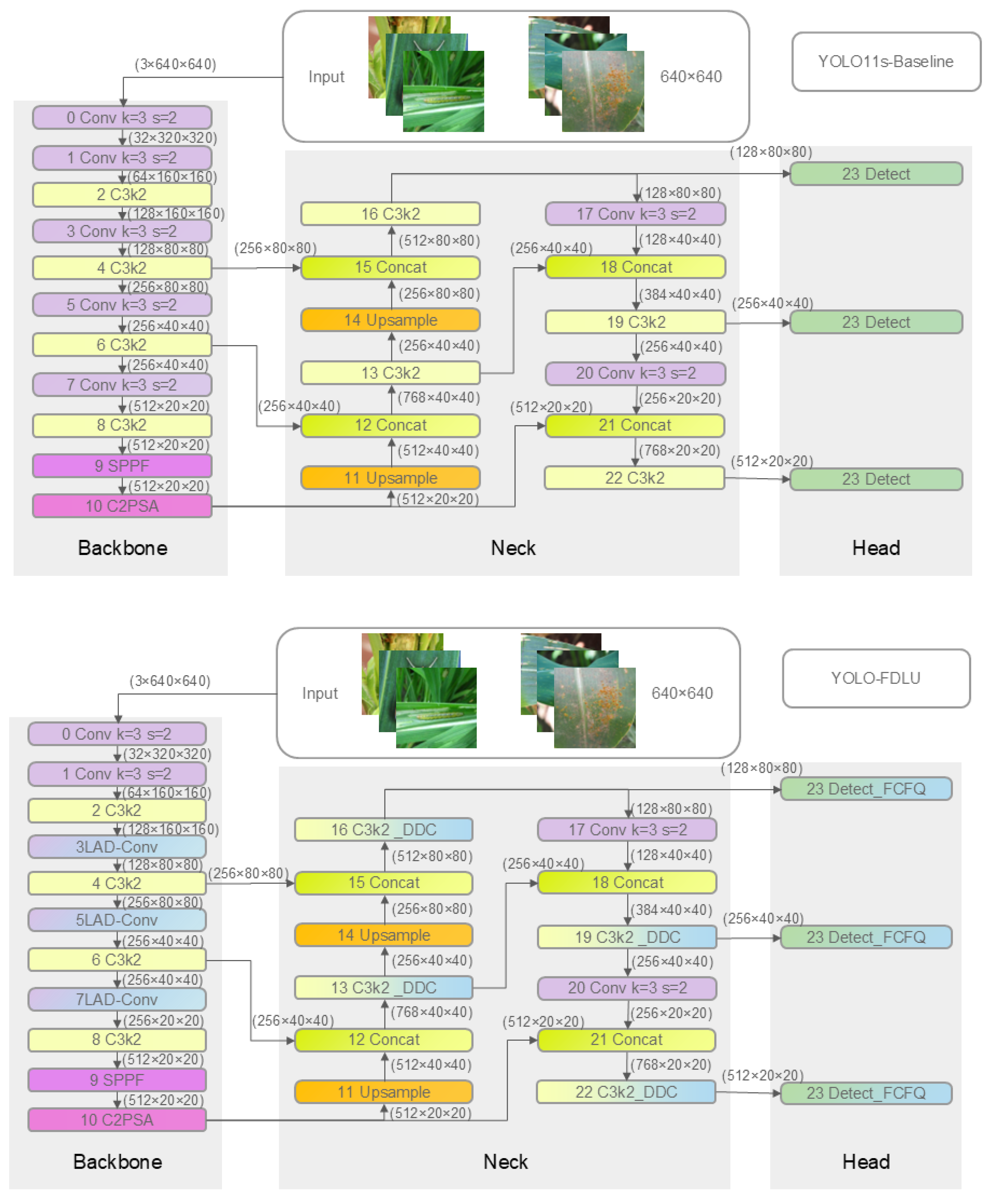

2.2.1. YOLO-FDLU Architecture

- Backbone optimization: Replacing the standard 3 × 3 stride-2 convolutional downsampling layers at the P3, P4, and P5 stages with the proposed Light Attention-Downsampling Convolution (LAD-Conv) module. This modification addresses the loss of fine-grained features (e.g., early-stage CRL spots, small CAWL) during downsampling, enhancing the model’s ability to capture small-target details;

- Neck optimization: Replacing all original C3k2 modules in the neck (feature fusion stage) with the improved C3k2_DDC (DilatedReparam–DilatedReparam–Conv) module. This strengthens cross-scale feature connectivity (e.g., fusing 2 mm CGLSs and 10 mm CB targets), improving multi-scale detection adaptability;

- Detection head optimization: Replacing the standard Detect module with the custom Detect_FCFQ (Detection Head with Feature-Corner Fusion and Quality Estimation) module. This integrates corner feature fusion and bounding box quality estimation, reducing false positives caused by background clutter (e.g., dry leaves mistaken for CLB);

- Loss function optimization: Integrating the Unified-IoU (UIoU) loss proposed by Luo et al. (2024) [21] to replace the original CIoU loss, enhancing the consistency and accuracy of bounding box regression for irregular targets (e.g., clustered CBL).
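
For orientation, the baseline CIoU loss that UIoU replaces can be sketched in plain Python. This is a minimal illustration of the standard CIoU definition (IoU minus a normalized centre-distance term minus an aspect-ratio term), not the authors' training code and not the UIoU loss itself; the function names `ciou` and `ciou_loss` and the `(x1, y1, x2, y2)` box format are assumptions made for this example.

```python
import math


def ciou(box_a, box_b, eps=1e-9):
    """Complete-IoU between two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    aw, ah = ax2 - ax1, ay2 - ay1
    bw, bh = bx2 - bx1, by2 - by1

    # Plain IoU: overlap area divided by union area.
    iw = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    ih = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = iw * ih
    union = aw * ah + bw * bh - inter + eps
    iou = inter / union

    # Squared centre distance, normalized by the squared diagonal
    # of the smallest box enclosing both inputs.
    cw = max(ax2, bx2) - min(ax1, bx1)
    ch = max(ay2, by2) - min(ay1, by1)
    c2 = cw ** 2 + ch ** 2 + eps
    rho2 = ((ax1 + ax2 - bx1 - bx2) ** 2 + (ay1 + ay2 - by1 - by2) ** 2) / 4.0

    # Aspect-ratio consistency term and its trade-off weight.
    v = (4.0 / math.pi ** 2) * (
        math.atan(bw / (bh + eps)) - math.atan(aw / (ah + eps))
    ) ** 2
    alpha = v / (1.0 - iou + v + eps)
    return iou - rho2 / c2 - alpha * v


def ciou_loss(pred, target):
    """Regression loss: 0 for a perfect match, above 1 for distant boxes."""
    return 1.0 - ciou(pred, target)


print(ciou_loss((0, 0, 10, 10), (5, 0, 15, 10)))
```

The centre-distance term keeps the gradient informative even when the boxes do not overlap, which is why the loss can exceed 1 for disjoint boxes.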

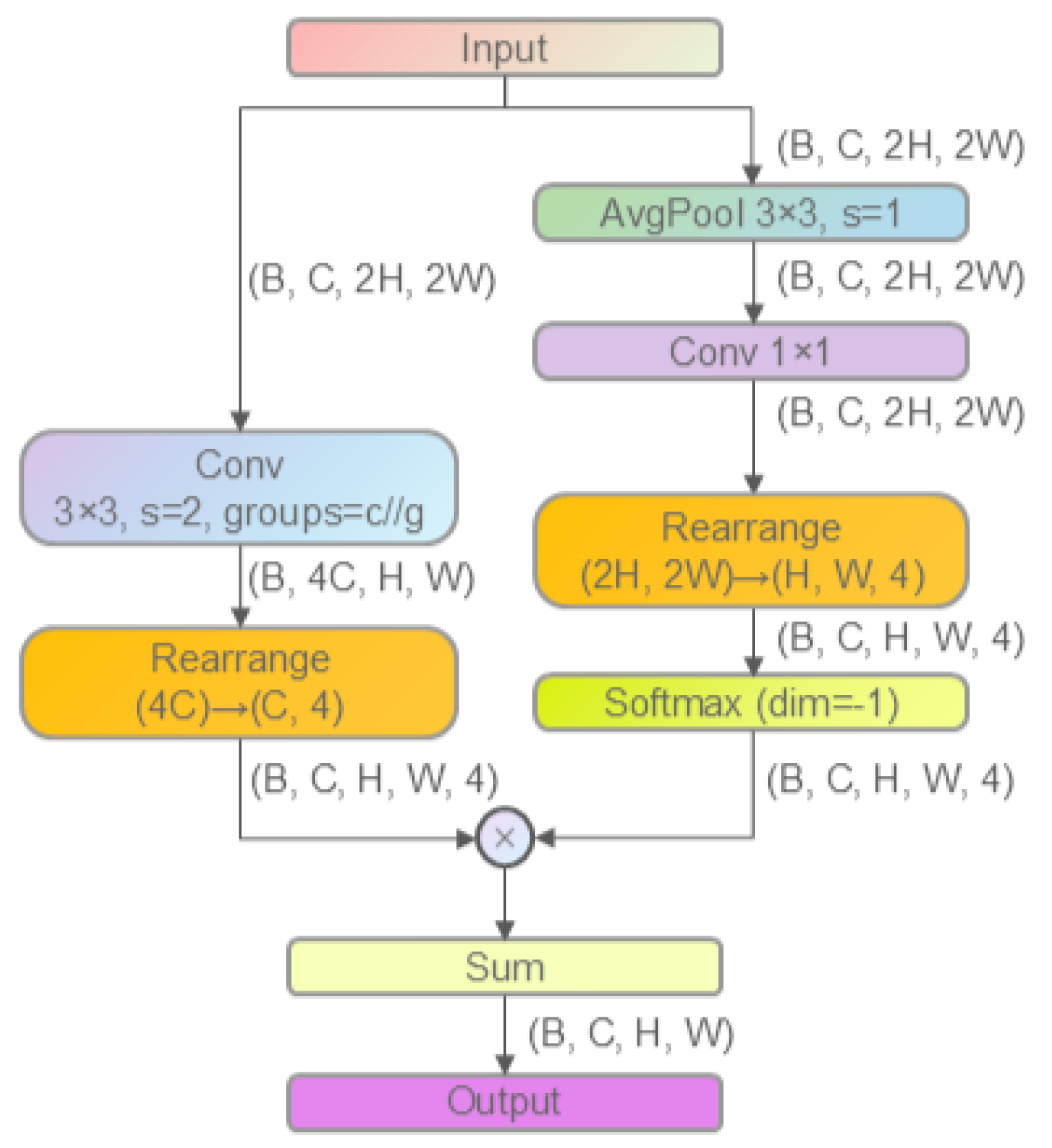

2.2.2. Improved Downsampling Module: LAD-Conv

- Module Application Scope

- Module Structure and Working Principle
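
To make the downsampling context concrete, the sketch below computes the feature-map sizes produced by a chain of standard 3 × 3 stride-2 convolutions, the layers that LAD-Conv replaces at P3–P5. It does not model LAD-Conv itself. The padding of 1 is an assumption (the text gives only kernel size and stride), the 640-pixel input comes from the training configuration, and the helper names are hypothetical.

```python
def conv_out_size(size, kernel=3, stride=2, padding=1, dilation=1):
    """Spatial output size of a convolution (standard output-shape formula)."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def pyramid_sizes(input_size=640, levels=5):
    """Feature-map side length after each successive stride-2 stage P1..P5."""
    sizes = {}
    size = input_size
    for level in range(1, levels + 1):
        size = conv_out_size(size)
        sizes[f"P{level}"] = size
    return sizes


print(pyramid_sizes())
# {'P1': 320, 'P2': 160, 'P3': 80, 'P4': 40, 'P5': 20}
```

Under these assumptions a 3 mm lesion that spans only a few pixels at the input occupies less than one cell at P5, which is the detail loss the module is designed to limit.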

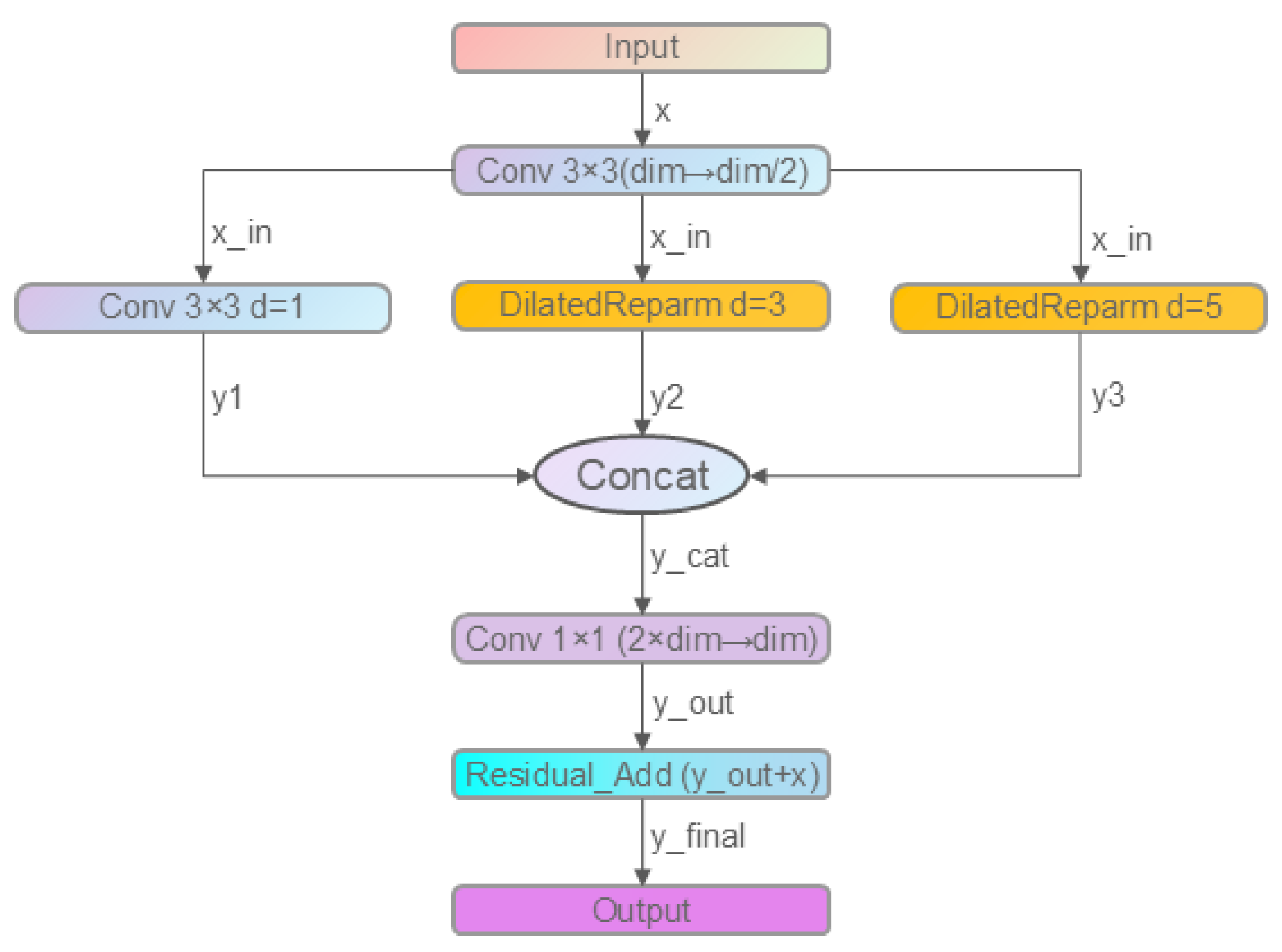

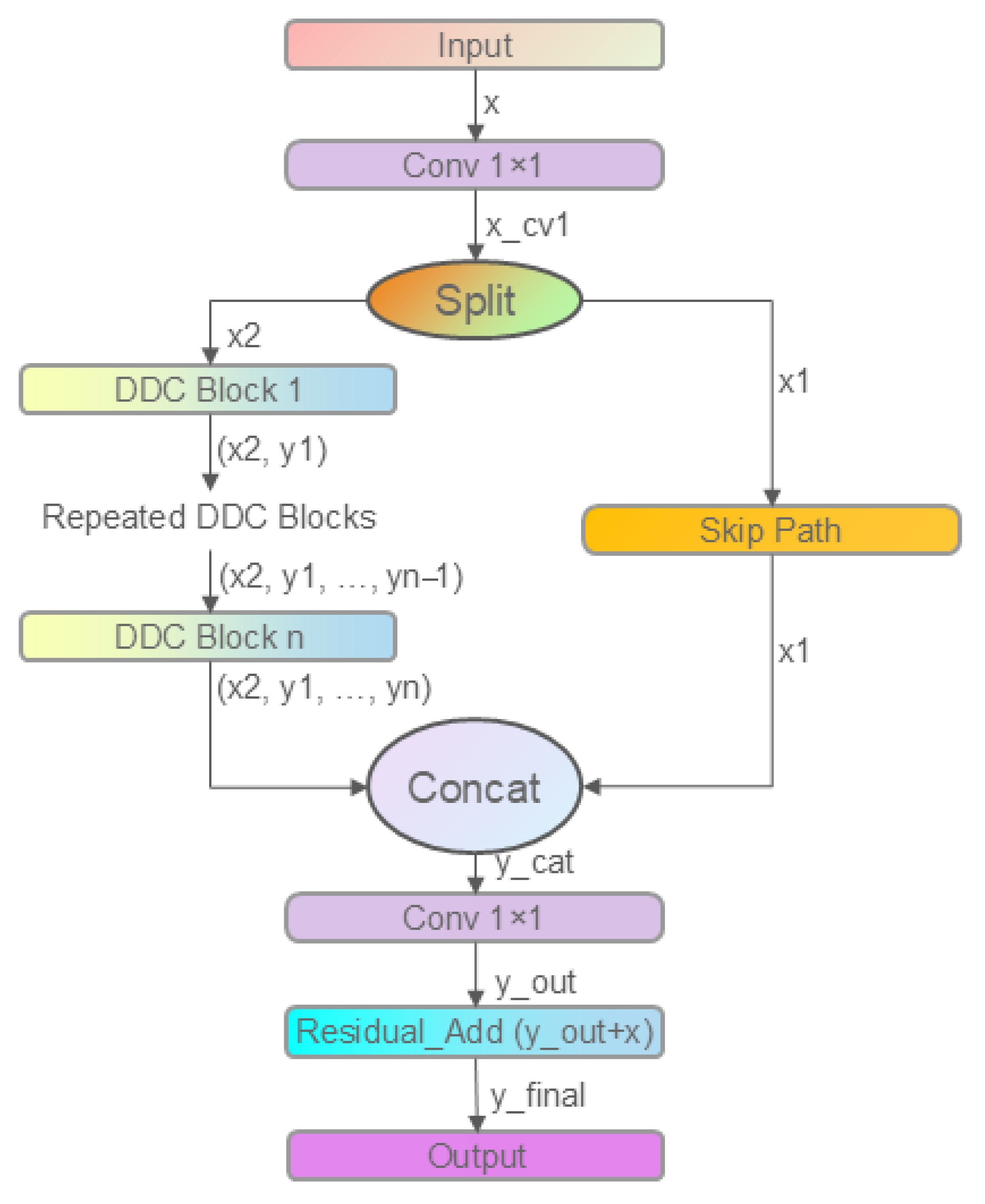

2.2.3. Improved C3k2 Module: C3k2_DDC

- Branch 1: Standard 3 × 3 convolution (dilation = 1)

- Branches 2 and 3: DilatedReparamBlocks with dilation rates of 3 and 5
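
The effect of the three dilation rates on spatial coverage can be checked with a few lines of arithmetic. The sketch assumes a 3 × 3 kernel in every branch (the text states this only for Branch 1) and stride 1; the helper names are hypothetical.

```python
def effective_kernel(kernel, dilation):
    """Side length of the area covered by a dilated kernel."""
    return dilation * (kernel - 1) + 1


def same_padding(kernel, dilation):
    """Padding that keeps the spatial size unchanged at stride 1 (odd kernels)."""
    return dilation * (kernel - 1) // 2


for d in (1, 3, 5):
    print(f"dilation={d}: covers {effective_kernel(3, d)} x {effective_kernel(3, d)}, "
          f"padding={same_padding(3, d)}")
# dilation=1: covers 3 x 3, padding=1
# dilation=3: covers 7 x 7, padding=3
# dilation=5: covers 11 x 11, padding=5
```

Under this assumption the three branches sample 3 × 3, 7 × 7, and 11 × 11 neighbourhoods with the same number of weights per kernel, which is how a dilated design widens context without a proportional increase in parameters.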

2.2.4. Improved Detection Head: Detect_FCFQ

- Feature Processing: Input multi-scale features are processed via cv2 and cv3 branches to generate high-resolution feature maps, which are then concatenated to preserve both spatial details and contextual information;

- Corner Regression Branch: Predicts the coordinates of the target’s four corners (top-left, top-right, bottom-left, bottom-right) to improve localization accuracy for irregular targets;

- Classification Branch: Predicts the category probability of the target (e.g., CAWL vs. CGLS) using a 1 × 1 convolution and softmax activation;

- FCFQ Sub-module: As the core component (structure shown in Figure 8), it receives the predicted corner distributions (from the corner regression branch) and high-level contextual features (from the concatenated cv2/cv3 features). These inputs are fused via two lightweight 3 × 3 convolutions (with batch normalization and SiLU activation), followed by a 1 × 1 convolution to output a single bounding box quality score (range: 0–1). This score quantifies the reliability of the predicted bounding box (e.g., distinguishing a true CGLS lesion from a false positive caused by a leaf spot).

2.2.5. UIoU Loss Function

2.3. Experimental Setup

2.3.1. Hardware and Software Environment

2.3.2. Evaluation Metrics

- Core Accuracy Metrics

- Efficiency Metrics

2.3.3. Training Configuration

3. Results

3.1. Overview

3.2. Ablation Experiments

3.2.1. Ablation on LAD-Conv Placement

3.2.2. Ablation on C3k2_DDC Placement

3.2.3. Full Ablation of All Enhancement Modules

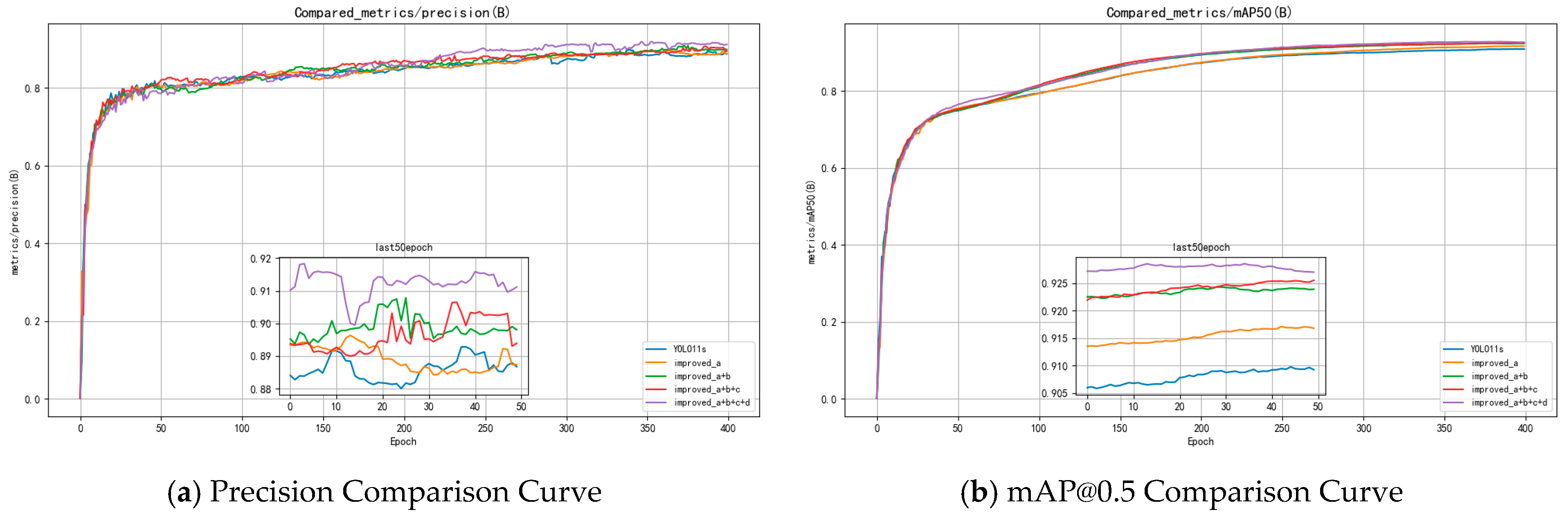

- The baseline model (Baseline-YOLO11s) achieved 88.67% Precision and 90.92% mAP@0.5, with 21.3 GFLOPs and a model size of 18.3 MB.

- Adding LAD-Conv alone (Improved: a) raised mAP@0.5 to 91.68% while reducing the computational cost to 20.0 GFLOPs and the model size to 15.4 MB.

- Adding C3k2_DDC (Improved: a + b) further increased mAP@0.5 to 92.39%.

- Incorporating Detect_FCFQ (Improved: a + b + c) pushed mAP@0.5 to 92.55%, albeit with a slight drop in Precision (from 89.81% to 89.39%).

- Finally, with all four improvements (Improved: a + b + c + d), the model reached 91.12% Precision and 92.70% mAP@0.5 at only 20.2 GFLOPs and a compact size of 15.3 MB.
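
The step-by-step gains summarized above can be recomputed directly from the full ablation table. The values below are copied from that table; the list layout, the column constants, and the `step_gains` helper are hypothetical names chosen for this sketch.

```python
# (configuration, Precision %, mAP@0.5 %, mAP@0.5-0.95 %, GFLOPs, size MB)
ablation = [
    ("Baseline-YOLO11s", 88.67, 90.92, 77.39, 21.3, 18.3),
    ("Improved: a", 88.74, 91.68, 78.44, 20.0, 15.4),
    ("Improved: a + b", 89.81, 92.39, 80.37, 20.1, 15.3),
    ("Improved: a + b + c", 89.39, 92.55, 80.74, 20.2, 15.3),
    ("Improved: a + b + c + d", 91.12, 92.70, 76.28, 20.2, 15.3),
]
PRECISION, MAP50, MAP50_95 = 1, 2, 3


def step_gains(rows, column):
    """Change in one metric between each configuration and the previous one."""
    return [round(cur[column] - prev[column], 2) for prev, cur in zip(rows, rows[1:])]


print("mAP@0.5 gains:     ", step_gains(ablation, MAP50))
print("mAP@0.5-0.95 gains:", step_gains(ablation, MAP50_95))
# mAP@0.5 gains:      [0.76, 0.71, 0.16, 0.15]
# mAP@0.5-0.95 gains: [1.05, 1.93, 0.37, -4.46]
```

Printing both columns side by side shows that the mAP@0.5 gains are monotonic, whereas the table's mAP@0.5–0.95 value decreases in the final step, so the two metrics are best read together.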

3.3. Comparison with State-of-the-Art Models

3.4. Per-Class Detection Performance

3.5. Detection Visualization and Confusion Matrix

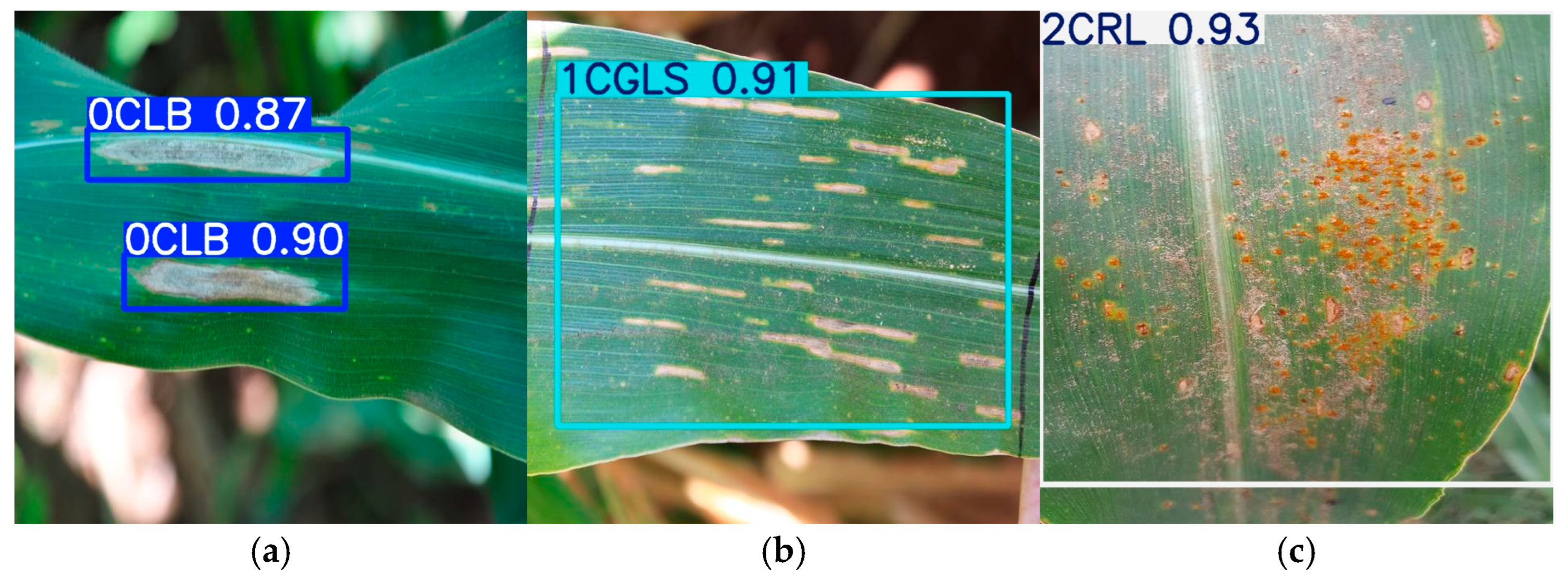

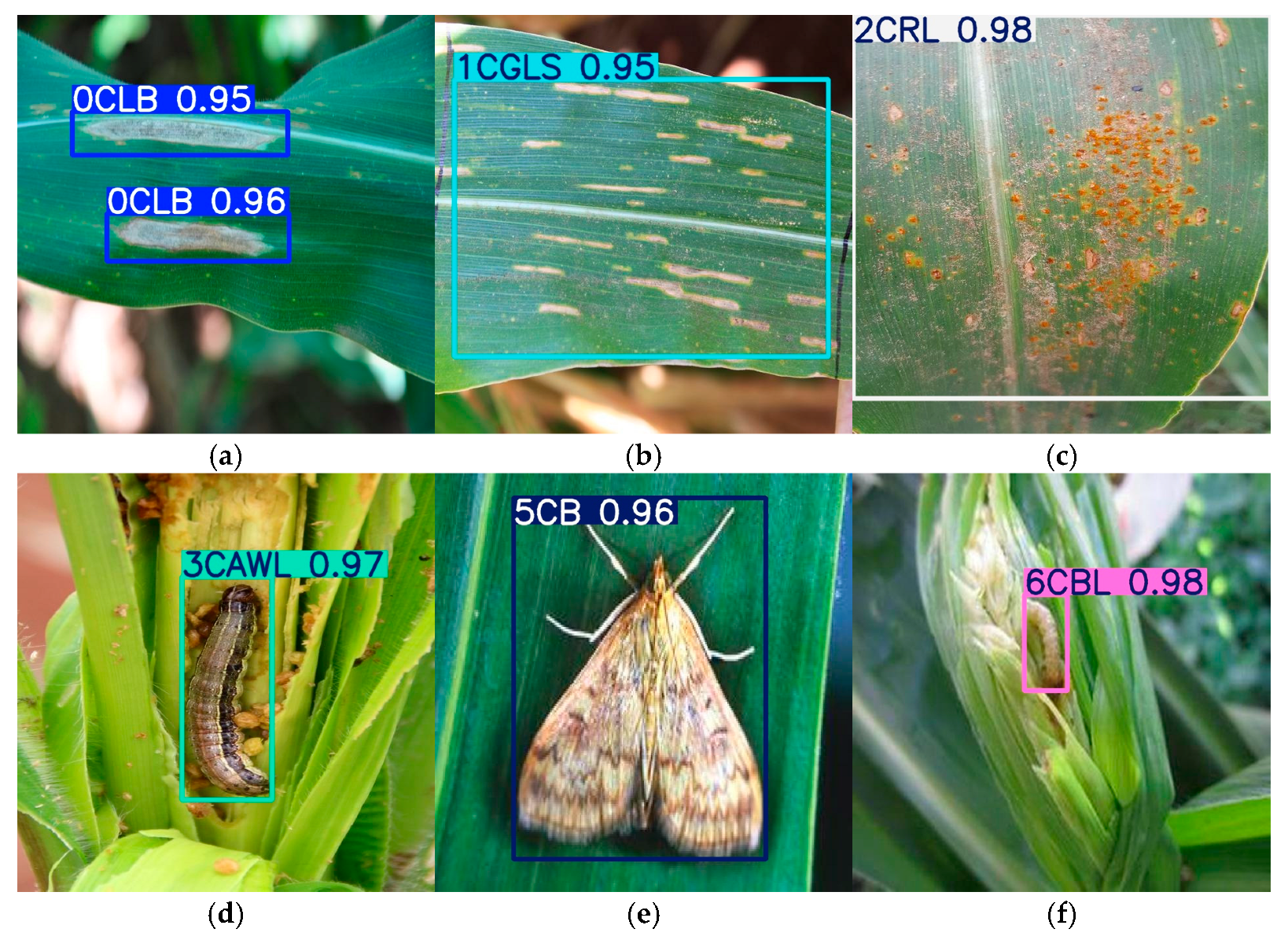

3.5.1. Detection Result Visualization

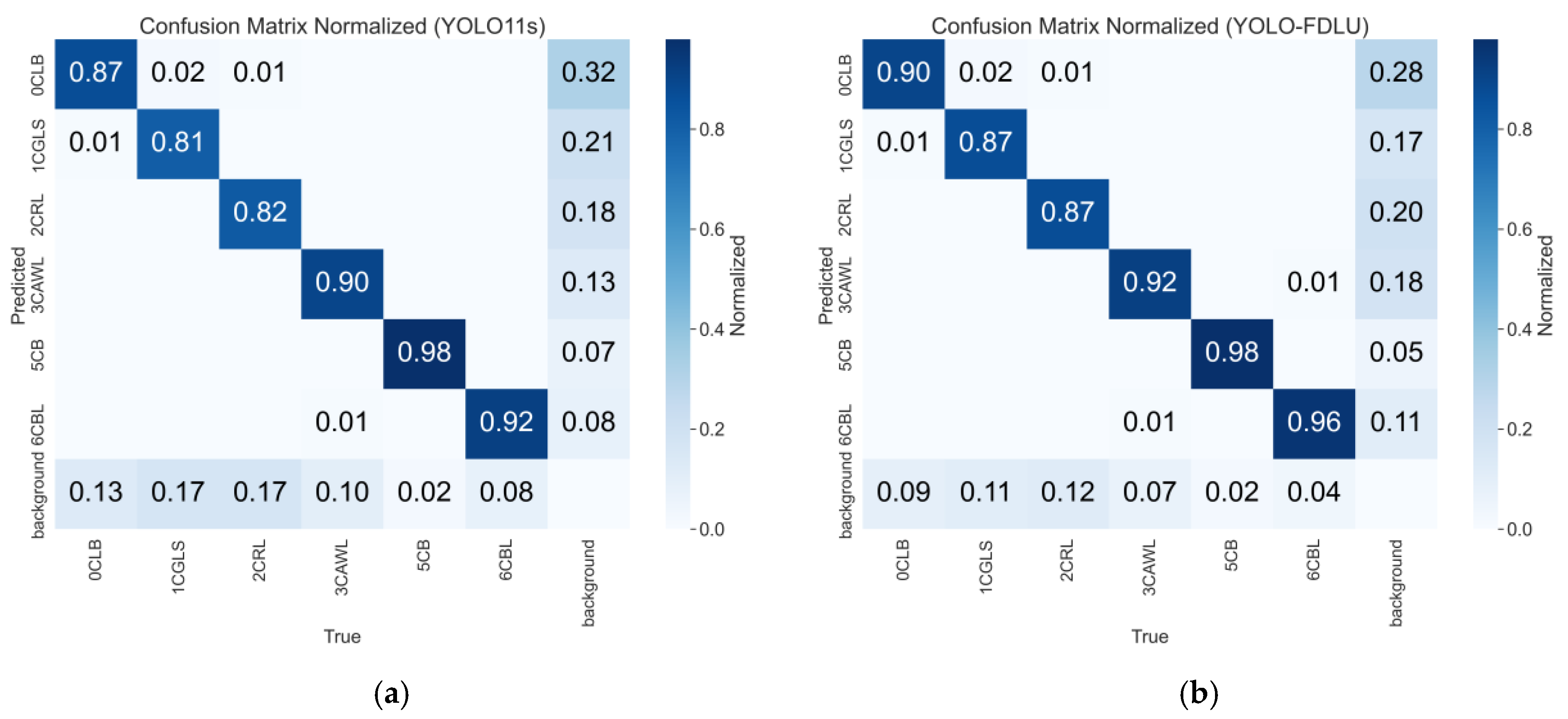

3.5.2. Confusion Matrix Analysis

4. Discussion

4.1. Core Contributions and Mechanistic Analysis

4.1.1. Complementary Roles of Enhancement Modules

- LAD-Conv (Light Attention-Downsampling Convolution): Unlike standard stride-2 convolutions, which discard fine-grained features, LAD-Conv’s local attention mechanism preserves the edge textures of small lesions (e.g., 3 mm CLB spots) and small pests at the P3–P5 stages. This explains the 0.76-percentage-point mAP@0.5 improvement for CAWL (Table 8) and the 2.7-percentage-point gain for CLB, as the module retains critical features for distinguishing small targets from background noise.

- C3k2_DDC (Multi-Scale Fusion Block): By incorporating multi-dilation convolutions (rates = 3, 5), C3k2_DDC bridges feature gaps between large pests (e.g., 10 mm CB adults) and small lesions, addressing the multi-scale mismatch in baseline necks. This is evidenced by the 0.71-percentage-point mAP@0.5 gain for CGLS (Table 8) and the roughly 5-percentage-point reduction in inter-class confusion between CGLS and CRL (from 8% to 3%, Figure 12), as the module enhances discriminative features for visually similar categories.

- Detect_FCFQ (Quality-Aware Detection Head): Through joint corner fusion and quality estimation, this module reduces false positives from background clutter, a common issue in agricultural scenes. Experimental results show a 6-percentage-point drop in CGLS background misclassification (from 12% to 6%, Figure 12) and a 0.16-percentage-point mAP@0.5 gain for CBL, confirming its ability to improve localization reliability for low-contrast targets (e.g., CBL on leaf veins).

- UIoU Loss (Unified IoU Regression Loss): Compared to the baseline CIoU loss, UIoU’s progressive scaling factor stabilizes training and reduces boundary localization errors. This contributes to the 0.15-percentage-point mAP@0.5 improvement when integrating the loss (Table 5) and the 0.07 increase in CGLS AUC (from 0.82 to 0.89, Figure 12), as the loss optimizes box alignment for irregular lesions.
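
Because the confusion-matrix figures quoted above are themselves percentages, it helps to separate absolute change (percentage points) from relative change. The sketch below applies both to the two pairs of values given in the text; the helper names are hypothetical.

```python
def point_change(before, after):
    """Absolute change between two percentages, in percentage points."""
    return after - before


def relative_change(before, after):
    """Change relative to the starting value, in percent."""
    return (after - before) / before * 100.0


# CGLS misclassified as background: 12% -> 6%
print(point_change(12, 6), relative_change(12, 6))   # -6 -50.0
# CGLS confused with CRL: 8% -> 3%
print(point_change(8, 3), relative_change(8, 3))     # -5 -62.5
```

Read this way, the background error for CGLS falls by 6 percentage points, which is a halving in relative terms.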

4.1.2. Comparison with Generic Lightweight YOLO Models

- Accuracy Leadership: It achieves the highest Precision (91.12%) and mAP@0.5 (92.70%), outperforming the closest competitor (YOLO11-s) by 2.45 and 1.78 percentage points, respectively. This confirms that task-specific module design (rather than scaling network depth/width) is more effective for agricultural detection, where target characteristics (small size, irregular shape) differ from generic object detection.

- Efficiency Balance: At 20.2 GFLOPs and a 15.3 MB model size, YOLO-FDLU requires 8.5 fewer GFLOPs than YOLOv8-s and is 3.0 MB smaller than YOLO11-s.

- Robustness to Complex Scenes: It reduces multi-IoU performance variance (mAP@0.5–0.95 = 78.5%) by 5.1 percentage points compared to YOLOv10-s, and suppresses background false positives by 3–6% for leaf disease categories (Figure 12). This robustness is attributed to the integrated modules’ ability to handle field-specific challenges (e.g., light variation, occlusion).
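
The margins cited in this comparison can be reproduced from the model-comparison table. The numbers below are copied from that table; the dictionary layout and the `margins` helper are hypothetical names for this sketch.

```python
# (Precision %, mAP@0.5 %, mAP@0.5-0.95 %, GFLOPs, size MB)
models = {
    "YOLOv5master-s": (85.50, 88.07, 67.31, 16.0, 14.5),
    "YOLOv8-s": (88.29, 89.48, 74.50, 28.7, 22.6),
    "YOLOv10-s": (87.88, 90.56, 78.01, 24.8, 16.6),
    "YOLO11-s": (88.67, 90.92, 77.39, 21.3, 18.3),
    "YOLO12-s": (87.64, 88.06, 73.95, 21.5, 19.8),
}
ours = (91.12, 92.70, 76.28, 20.2, 15.3)  # YOLO-FDLU


def margins(reference, candidate=ours):
    """Candidate minus reference for every column (positive = candidate is larger)."""
    return tuple(round(c - r, 2) for c, r in zip(candidate, reference))


for name, row in models.items():
    print(f"{name:15s}", margins(row))
```

For accuracy columns a positive margin favours YOLO-FDLU, while for GFLOPs and model size a negative margin does; printing every column makes it easy to see which comparisons each claim rests on.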

4.2. Limitations and Future Directions

- Dataset Scalability: The current dataset (6 categories, 26,376 samples) covers only common maize pests/diseases, limiting generalization to regional variants (e.g., maize dwarf mosaic virus) or cross-crop scenarios. Future efforts will expand the dataset using semi-supervised learning (SSL) with unlabeled field images (≥50,000 samples) to improve category coverage without excessive manual annotation.

- Occlusion and Extreme Lighting: The model still misses 7% of occluded CAWL (leaf overlap >40%) and exhibits a 2.3-percentage-point mAP@0.5 drop under strong light (>8000 lux). A dynamic attention fusion module will be integrated to reconstruct occluded features, and a lightweight adaptive lighting augmentation (ALA) module will be added to enhance light robustness, both with minimal computational overhead (<0.5 GFLOPs).

- Cross-Source Generalization: Preliminary tests show a 4.2-percentage-point mAP@0.5 drop when testing on the IP102 dataset (unseen in training). Future work will adopt domain adaptation (DA) techniques to align feature distributions between datasets, improving real-world applicability.

5. Conclusions

- Dataset Construction: A high-quality maize pest and disease dataset was built, containing 26,376 images across 6 categories (CLB, CGLS, CRL, CAWL, CB, CBL) with diverse scenarios (different growth stages, lighting conditions). This dataset fills the gap of limited task-specific data for maize pest detection.

- Model Enhancements: Four task-specific modules were integrated into the YOLO11s baseline to form YOLO-FDLU:

- (1) LAD-Conv (P3–P5 deployment) preserves small-target features while reducing computational cost;

- (2) C3k2_DDC (neck deployment) enhances multi-scale feature fusion for irregular lesions;

- (3) Detect_FCFQ improves localization reliability and reduces background false positives;

- (4) UIoU loss optimizes boundary regression and training stability.

- Performance Superiority: Compared to mainstream YOLO variants, YOLO-FDLU achieves the highest Precision (91.12%) and mAP@0.5 (92.70%) while maintaining low complexity (20.2 GFLOPs, 15.3 MB). It balances accuracy and efficiency, making it suitable for edge deployment in field agricultural monitoring.

- Practical Applicability: The model reduces inter-class confusion (CGLS-CRL: 8%→3%) and background misclassification (CGLS: 12%→6%), demonstrating strong robustness to complex field environments. This validates its potential for real-world maize pest and disease management, supporting precision agriculture practices.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Yang, S.; Xing, Z.; Wang, H.; Dong, X.; Gao, X.; Liu, Z.; Zhao, Y. Maize-YOLO: A new high-precision and real-time method for maize pest detection. Insects 2023, 14, 278.

- Zhang, W.; Huang, H.; Sun, Y.; Wu, X. AgriPest-YOLO: A rapid light-trap agricultural pest detection method based on deep learning. Front. Plant Sci. 2022, 13, 1079384.

- Li, R.; Li, Y.; Qin, W.; Abbas, A.; Li, S.; Ji, R.; Yang, J. Lightweight network for corn leaf disease identification based on improved YOLO v8s. Agriculture 2024, 14, 220.

- de Almeida, G.P.S.; dos Santos, L.N.S.; da Silva Souza, L.R.; da Costa Gontijo, P.; de Oliveira, R.; Teixeira, M.C.; do Carmo França, H.F. Performance analysis of YOLO and Detectron2 models for detecting corn and soybean pests employing customized dataset. Agronomy 2024, 14, 2194.

- Lu, Y.; Liu, P.; Tan, C. MA-YOLO: A pest target detection algorithm with multi-scale fusion and attention mechanism. Agronomy 2025, 15, 1549.

- Mohanty, S.P.; Hughes, D.P.; Salathé, M. Using deep learning for image-based plant disease detection. Front. Plant Sci. 2016, 7, 1419.

- Liu, J.; Wang, X. Plant diseases and pests detection based on deep learning: A review. Plant Methods 2021, 17, 22.

- Craze, H.; Berger, D. Maize_in_Field_Dataset [Data Set]. Kaggle. 2022. Available online: https://www.kaggle.com/datasets/hamishcrazeai/maize-in-field-dataset (accessed on 14 August 2024).

- Wu, X.; Zhan, C.; Lai, Y.K.; Cheng, M.M.; Yang, J. IP102: A large-scale benchmark dataset for insect pest recognition. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 8787–8796.

- Wu, X.; Zhan, C.; Lai, Y.; Cheng, M.; Yang, J. IP102: A Large-Scale Benchmark Dataset for Insect Pest Recognition [Data Set]. GitHub. 2022. Available online: https://github.com/xpwu95/IP102 (accessed on 12 July 2024).

- Ghose, S. Corn or Maize Leaf Disease Dataset. Kaggle. 2020. Available online: https://www.kaggle.com/datasets/smaranjitghose/corn-or-maize-leaf-disease-dataset/data (accessed on 21 July 2024).

- Ferentinos, K.P. Deep learning models for plant disease detection and diagnosis. Comput. Electron. Agric. 2018, 145, 311–318.

- Too, E.C.; Yujian, L.; Njuki, S.; Yingchun, L. A comparative study of fine-tuning deep learning models for plant disease identification. Comput. Electron. Agric. 2019, 161, 272–279.

- Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 60.

- Min, B.; Kim, T.; Shin, D.; Shin, D. Data augmentation method for plant leaf disease recognition. Appl. Sci. 2023, 13, 1465.

- Wang, K.; Chen, K.; Du, H.; Liu, S.; Xu, J.; Zhao, J.; Liu, Y. New image dataset and new negative sample judgment method for crop pest recognition based on deep learning models. Ecol. Inform. 2022, 69, 101620.

- Fuentes, A.; Yoon, S.; Kim, S.C.; Park, D.S. A robust deep-learning-based detector for real-time tomato plant diseases and pests recognition. Sensors 2017, 17, 2022.

- Ghosal, S.; Zheng, B.; Chapman, S.C.; Potgieter, A.B.; Jordan, D.R.; Wang, X.; Guo, W. A weakly supervised deep learning framework for sorghum head detection and counting. Plant Phenomics 2019, 2019, 1525874.

- Milioto, A.; Lottes, P.; Stachniss, C. Real-time semantic segmentation of crop and weed for precision agriculture robots leveraging background knowledge in CNNs. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 2229–2235.

- Sun, C.; Shrivastava, A.; Singh, S.; Gupta, A. Revisiting unreasonable effectiveness of data in deep learning era. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 843–852.

- Luo, X.; Cai, Z.; Shao, B.; Wang, Y. Unified-IoU: For High-Quality Object Detection. Available online: https://github.com/lxj-drifter/UIOU_files (accessed on 20 January 2025).

- Li, P.; Zhou, J.; Sun, H.; Zeng, J. RDRM-YOLO: A high-accuracy and lightweight rice disease detection model for complex field environments based on improved YOLOv5. Agriculture 2025, 15, 479.

- Yue, J.; Tian, J.; Philpot, W.; Tian, Q.; Feng, H.; Fu, Y. VNAI-NDVI-space and polar coordinate method for assessing crop leaf chlorophyll content and fractional cover. Comput. Electron. Agric. 2023, 207, 107758.

- Yu, Y.; Zhou, Q.; Wang, H.; Lv, K.; Zhang, L.; Li, J.; Li, D. LP-YOLO: A lightweight object detection network regarding insect pests for mobile terminal devices based on improved YOLOv8. Agriculture 2024, 14, 1420.

- Kim, D.S.; Kim, Y.H.; Park, K.R. Semantic segmentation by multi-scale feature extraction based on grouped dilated convolution module. Mathematics 2021, 9, 947.

- Chen, C.; Wei, J.; Peng, C.; Qin, H. Depth-quality-aware salient object detection. IEEE Trans. Image Process. 2021, 30, 2350–2363.

- Xiao, L.; Pan, Z.; Du, X.; Chen, W.; Qu, W.; Bai, Y.; Xu, T. Weighted skip-connection feature fusion: A method for augmenting UAV oriented rice panicle image segmentation. Comput. Electron. Agric. 2023, 207, 107754.

- Zheng, Z.; Ye, R.; Wang, P.; Ren, D.; Zuo, W.; Hou, Q.; Cheng, M.M. Localization distillation for dense object detection. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–25 June 2022; pp. 9407–9416.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 April 2020; Volume 34, pp. 12993–13000.

- Su, K.; Cao, L.; Zhao, B.; Li, N.; Wu, D.; Han, X. N-IoU: Better IoU-based bounding box regression loss for object detection. Neural Comput. Appl. 2024, 36, 3049–3063.

- Tsai, Y.S.; Tsai, C.T.; Huang, J.H. Multi-scale detection of underwater objects using attention mechanisms and normalized Wasserstein distance loss. J. Supercomput. 2025, 81, 5372–5403.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Fei-Fei, L. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Upadhyay, A.; Sunil, G.C.; Zhang, Y.; Koparan, C.; Sun, X. Development and evaluation of a machine vision and deep learning-based smart sprayer system for site-specific weed management in row crops: An edge computing approach. J. Agric. Food Res. 2024, 18, 101331.

| Number of Images | Precision (%) | mAP@0.5 (%) | Training Time (h) |
|---|---|---|---|
| 8793 | 76.8 | 69.5 | 14.49 |
| 17,598 | 85.8 | 85.7 | 28.88 |
| 26,376 | 88.7 | 90.9 | 42.34 |

| Component | Version/Parameter |
|---|---|
| Operating System | Linux Ubuntu 22.04 LTS |
| Memory | 45 GB DDR4 (3200 MHz) |
| GPU | NVIDIA GeForce RTX 3090 (24 GB) |
| CUDA Toolkit | 12.1 |
| cuDNN | 8.9.2 |
| PyTorch Version | 2.3.0 |
| Python Version | 3.10.12 |
| MMDetection Version | 3.3.0 |
| MMCV Version | 2.1.0 |
| Ultralytics Version | 8.10.12 |

| Hyperparameter | Setting |
|---|---|
| Batch Size | 64 |
| Number of Epochs | 400 |
| Optimization Algorithm | SGD (momentum = 0.9, dampening = 0) |
| Learning Rate Schedule | Cosine annealing: initial learning rate = 0.01, final learning rate = 0.0001; warm-up for the first 5 epochs (linear increase to 0.01) |
| Weight Decay | 0.0005 |
| Batch Normalization Momentum | 0.937 |
| Random Seed | 42 |
| Model Scale | s |
| Input Image Size | 640 × 640 pixels |
| Loss Function | CIoU for baseline models; UIoU loss for YOLO-FDLU |
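
The learning-rate schedule in the table above (linear warm-up over the first 5 epochs to 0.01, then cosine annealing to 0.0001 over 400 epochs) can be sketched as a per-epoch function. This is an illustration under stated assumptions, not the training framework's scheduler: the warm-up is assumed to ramp in equal per-epoch steps starting at 0.002, the rate is assumed to update once per epoch, and the function name `learning_rate` is hypothetical.

```python
import math


def learning_rate(epoch, total_epochs=400, warmup_epochs=5, lr0=0.01, lrf=0.0001):
    """Learning rate for a zero-based epoch index."""
    if epoch < warmup_epochs:
        # Assumed linear ramp: lr0/5, 2*lr0/5, ..., lr0 over the warm-up epochs.
        return lr0 * (epoch + 1) / warmup_epochs
    # Cosine annealing from lr0 (first post-warm-up epoch) to lrf (last epoch).
    progress = (epoch - warmup_epochs) / (total_epochs - warmup_epochs - 1)
    return lrf + 0.5 * (lr0 - lrf) * (1.0 + math.cos(math.pi * progress))


for epoch in (0, 4, 5, 200, 399):
    print(epoch, round(learning_rate(epoch), 6))
```

With these assumptions the rate peaks at 0.01 at the end of warm-up and reaches 0.0001 at the final epoch.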

| Model | P1 + P2 | P3 + P4 + P5 | Precision (%) | mAP@0.5 (%) | mAP@0.5–0.95 (%) | GFLOPs | Model Size (MB) |
|---|---|---|---|---|---|---|---|
| Baseline: YOLO11s | - | - | 88.67 | 90.92 | 77.39 | 21.3 | 18.3 |
| Baseline + LAD-Conv | √ | √ | 89.51 | 91.60 | 78.64 | 19.8 | 14.6 |
| Baseline + LAD-Conv | - | √ | 88.74 | 91.68 | 78.44 | 20.0 | 15.4 |

| Improved a | C3k2_DDC Location: Backbone | C3k2_DDC Location: Neck | Precision (%) | mAP@0.5 (%) | mAP@0.5–0.95 (%) | GFLOPs | Model Size (MB) |
|---|---|---|---|---|---|---|---|
| √ | - | - | 88.74 | 91.68 | 78.44 | 20.0 | 15.4 |
| √ | √ | √ | 90.72 | 91.87 | 79.97 | 20.1 | 15.0 |
| √ | √ | - | 91.51 | 91.66 | 78.68 | 19.9 | 15.1 |
| √ | - | √ | 89.81 | 92.39 | 80.37 | 20.1 | 15.3 |

| Model | LAD-Conv | C3k2_DDC | Detect_FCFQ | UIoU | Precision (%) | mAP@0.5 (%) | mAP@0.5–0.95 (%) | GFLOPs | Model Size (MB) |
|---|---|---|---|---|---|---|---|---|---|
| Baseline-YOLO11s | - | - | - | - | 88.67 | 90.92 | 77.39 | 21.3 | 18.3 |
| Improved: a | √ | - | - | - | 88.74 | 91.68 | 78.44 | 20.0 | 15.4 |
| Improved: a + b | √ | √ | - | - | 89.81 | 92.39 | 80.37 | 20.1 | 15.3 |
| Improved: a + b + c | √ | √ | √ | - | 89.39 | 92.55 | 80.74 | 20.2 | 15.3 |
| Improved: a + b + c + d | √ | √ | √ | √ | 91.12 | 92.70 | 76.28 | 20.2 | 15.3 |

| Model | Precision (%) | mAP@0.5 (%) | mAP@0.5–0.95 (%) | GFLOPs | Model Size (MB) |
|---|---|---|---|---|---|
| YOLOv5master-s | 85.50 | 88.07 | 67.31 | 16.0 | 14.5 |
| YOLOv8-s | 88.29 | 89.48 | 74.50 | 28.7 | 22.6 |
| YOLOv10-s | 87.88 | 90.56 | 78.01 | 24.8 | 16.6 |
| YOLO11-s | 88.67 | 90.92 | 77.39 | 21.3 | 18.3 |
| YOLO12-s | 87.64 | 88.06 | 73.95 | 21.5 | 19.8 |
| YOLO-FDLU (Ours) | 91.12 | 92.70 | 76.28 | 20.2 | 15.3 |

| Class | YOLO11s Precision (%) | YOLO11s mAP@0.5 (%) | YOLO11s mAP@0.5–0.95 (%) | YOLO-FDLU Precision (%) | YOLO-FDLU mAP@0.5 (%) | YOLO-FDLU mAP@0.5–0.95 (%) |
|---|---|---|---|---|---|---|
| All | 88.7 | 90.9 | 77.4 | 91.6 | 92.8 | 76.4 |
| CLB | 83.6 | 88.6 | 71.8 | 88.8 | 91.3 | 71.2 |
| CGLS | 84.7 | 84.8 | 68.4 | 89.6 | 88.2 | 68.7 |
| CRL | 85.5 | 85.8 | 75.2 | 89.9 | 88.8 | 75.9 |
| CAWL | 92.5 | 92.5 | 78.6 | 92.3 | 93.6 | 75.5 |
| CB | 92.8 | 98.1 | 89.5 | 94.6 | 97.6 | 88.5 |
| CBL | 92.9 | 95.9 | 80.9 | 94.1 | 97.2 | 78.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, B.; Yu, L.; Zhu, H.; Tan, Z. YOLO-FDLU: A Lightweight Improved YOLO11s-Based Algorithm for Accurate Maize Pest and Disease Detection. AgriEngineering 2025, 7, 323. https://doi.org/10.3390/agriengineering7100323
