Detection and Early Warning of Duponchelia fovealis Zeller (Lepidoptera: Crambidae) Using an Automatic Monitoring System

Abstract

1. Introduction

2. Materials and Methods

2.1. Biological Material

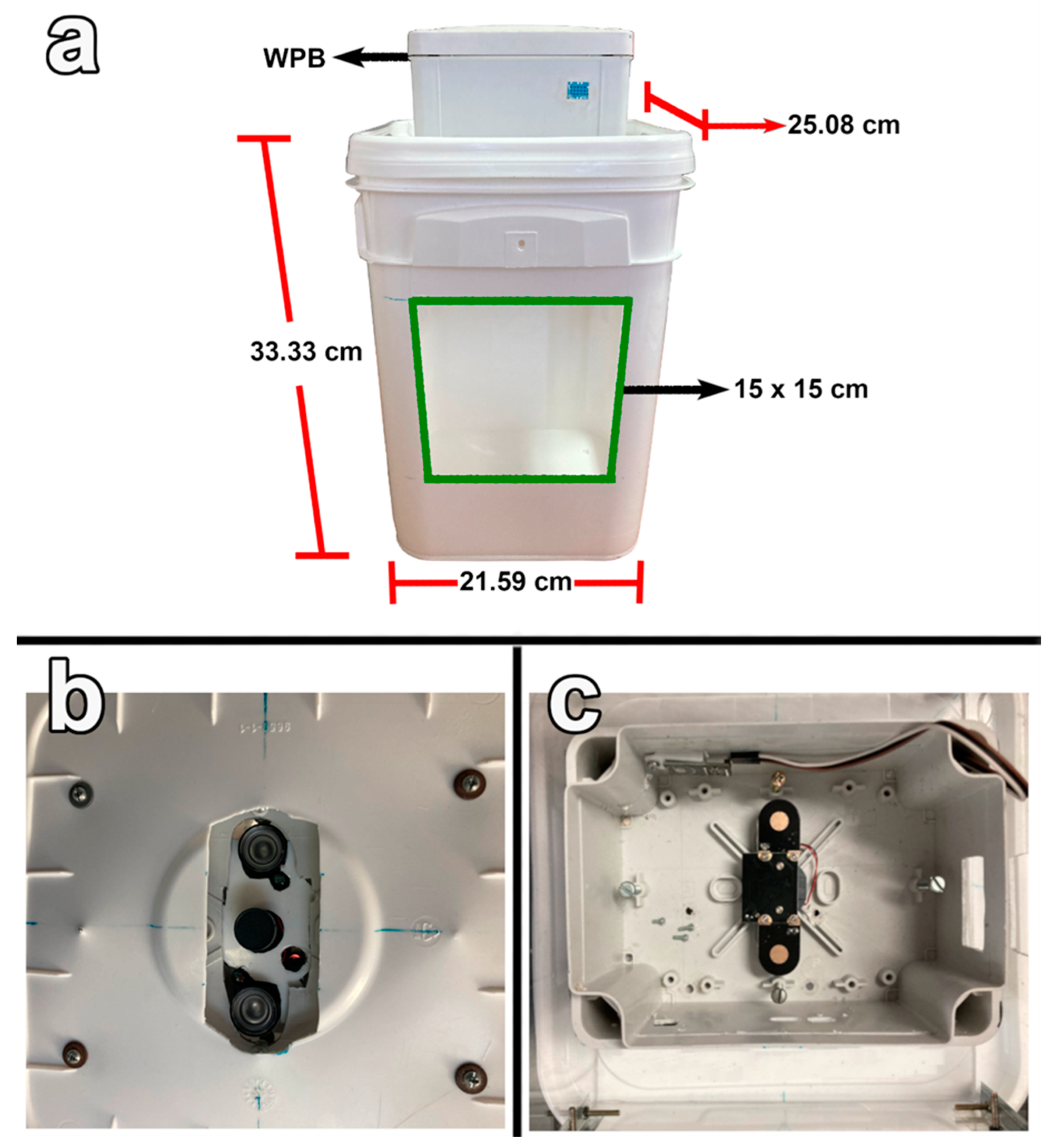

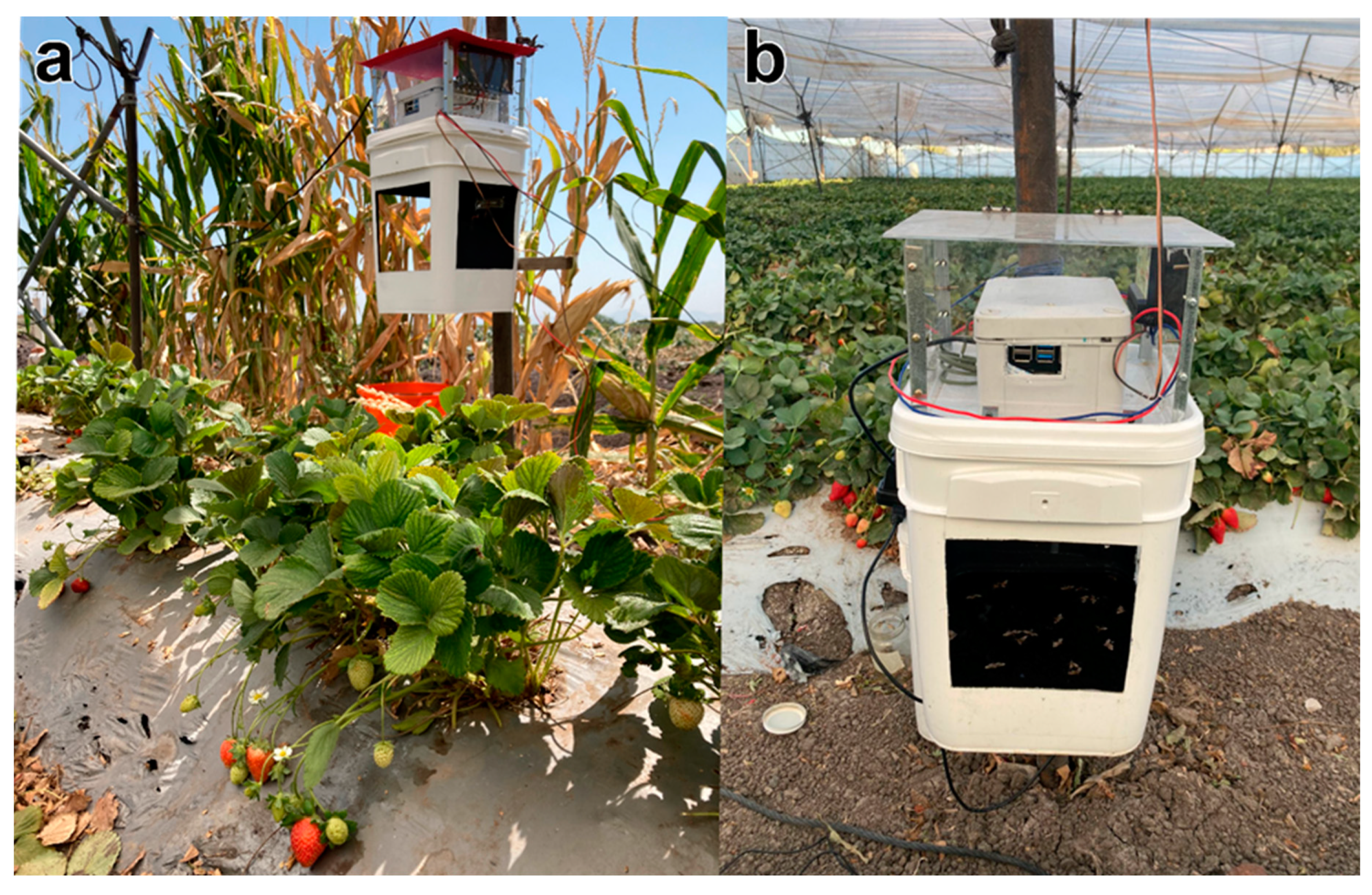

2.2. Trap Design

2.3. Laboratory Image Acquisition

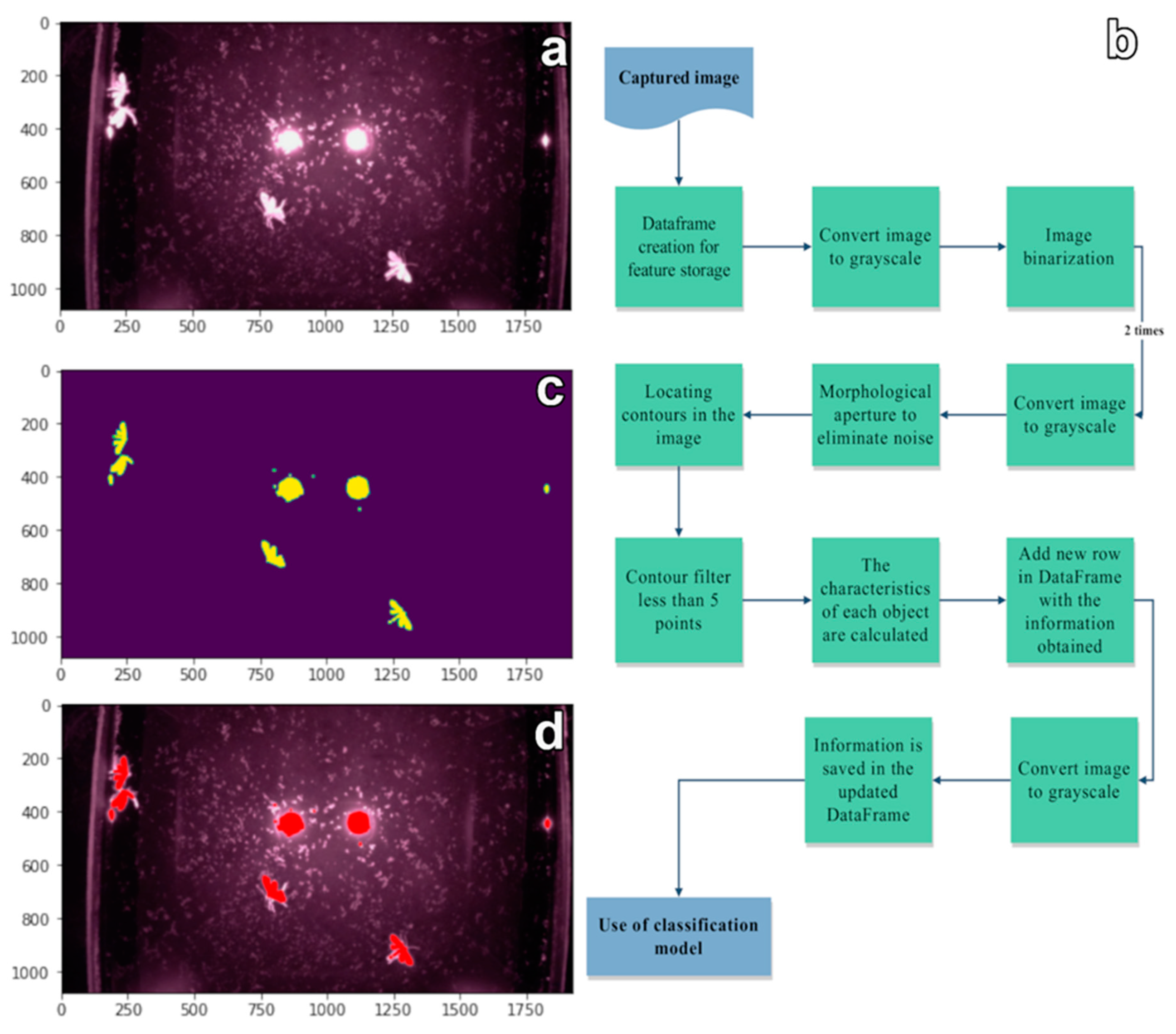

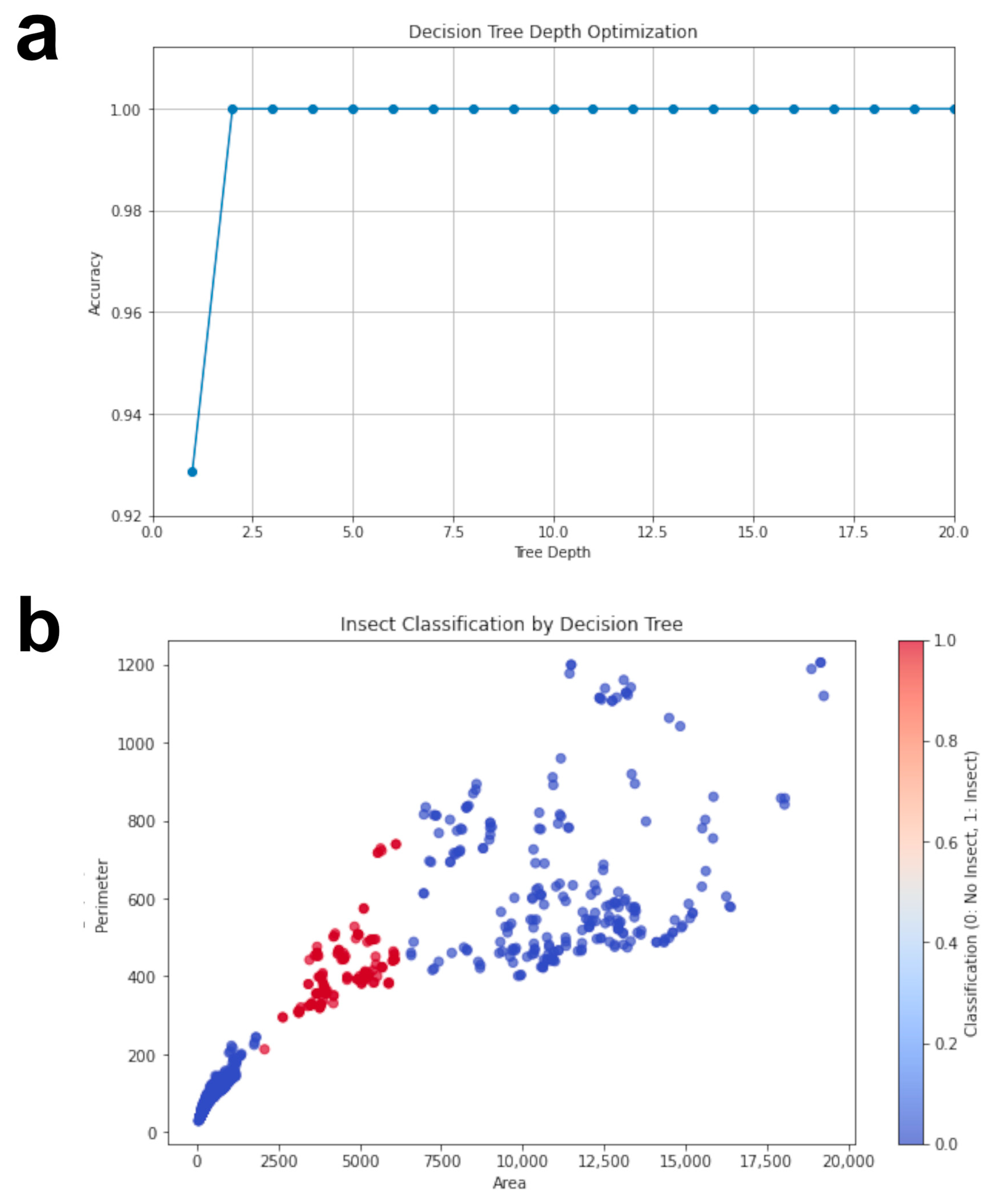

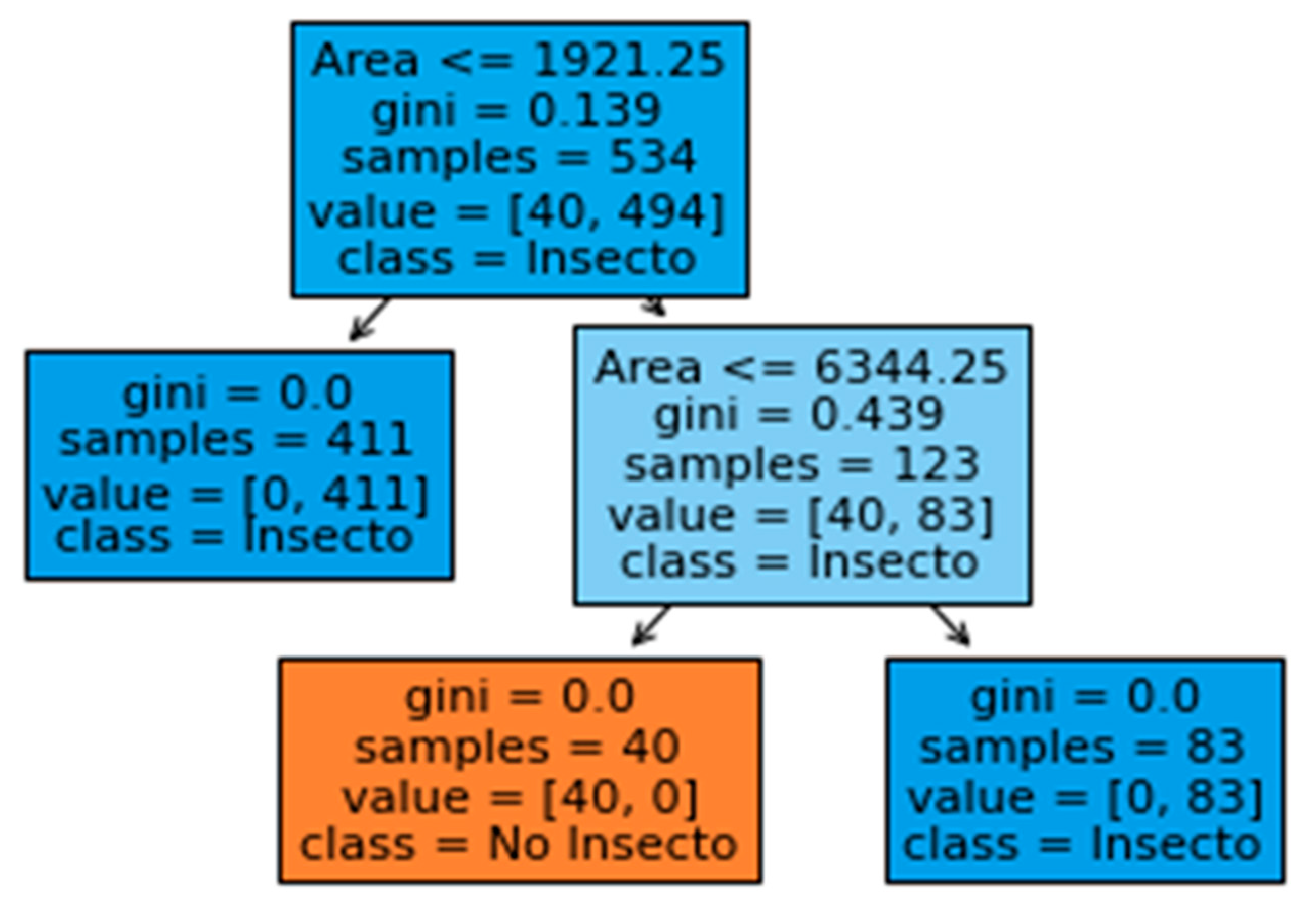

2.4. Image Processing and Training of the Machine Learning Model

2.5. Training and Validation of the D. fovealis Detection Model

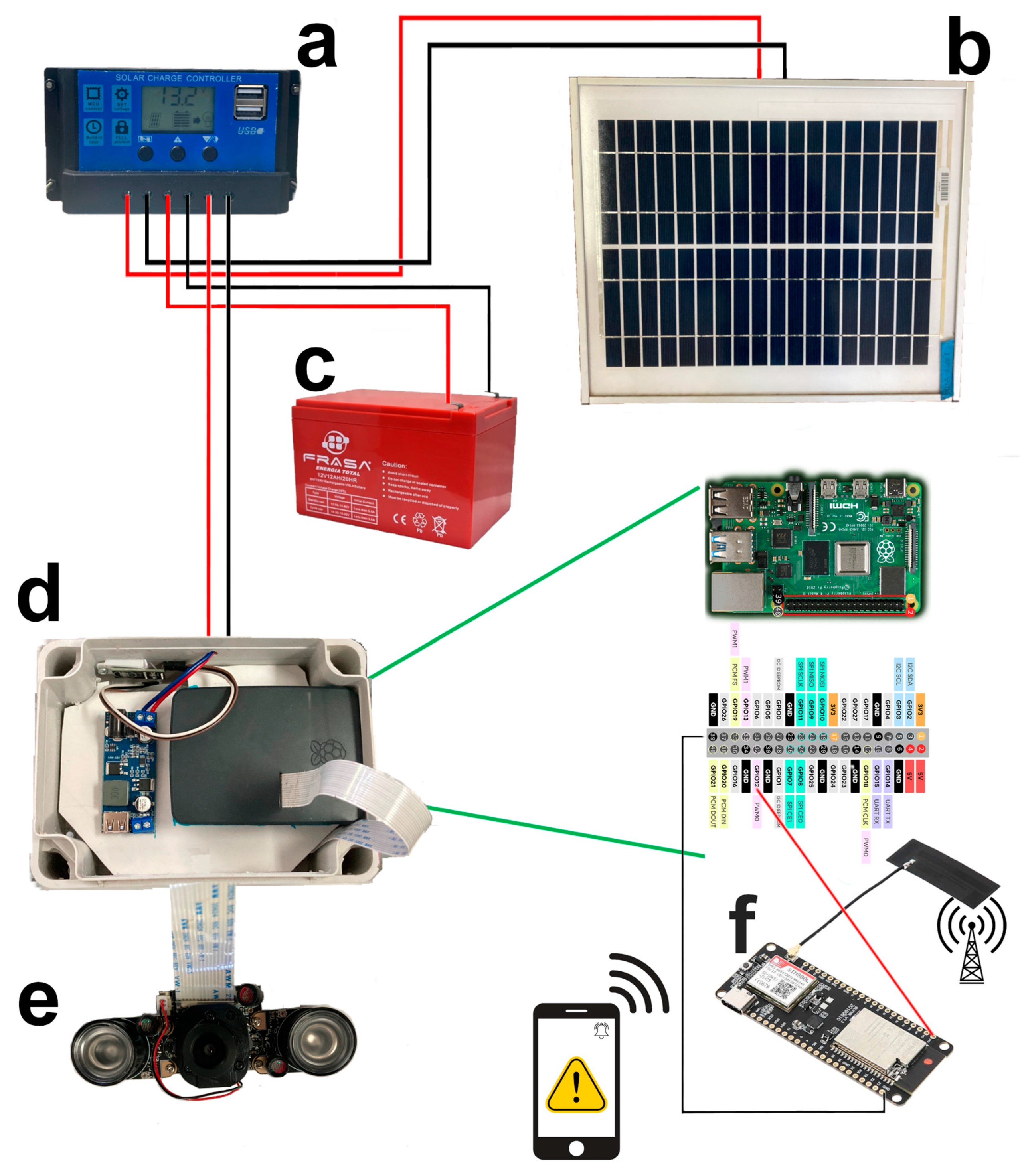

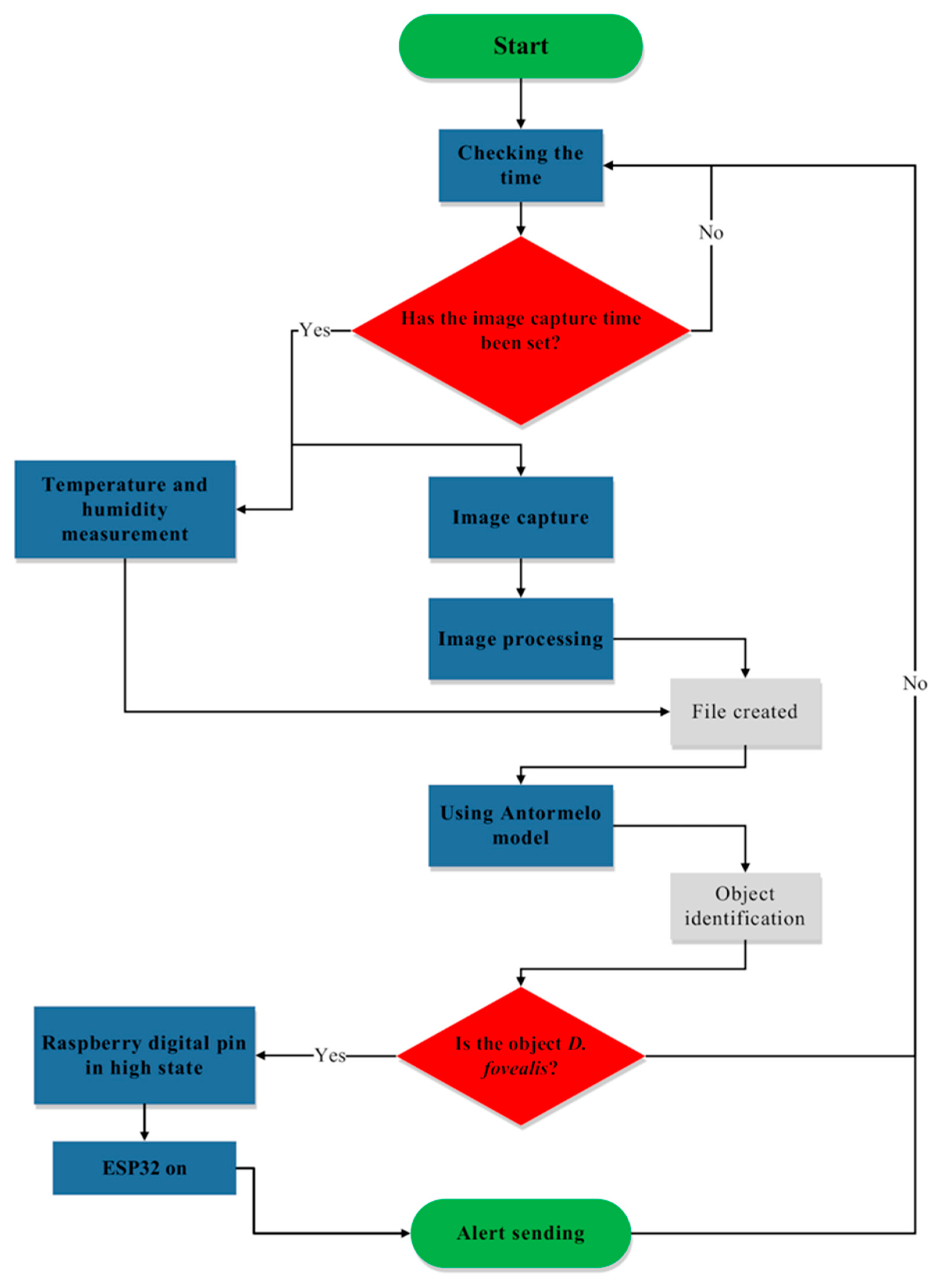

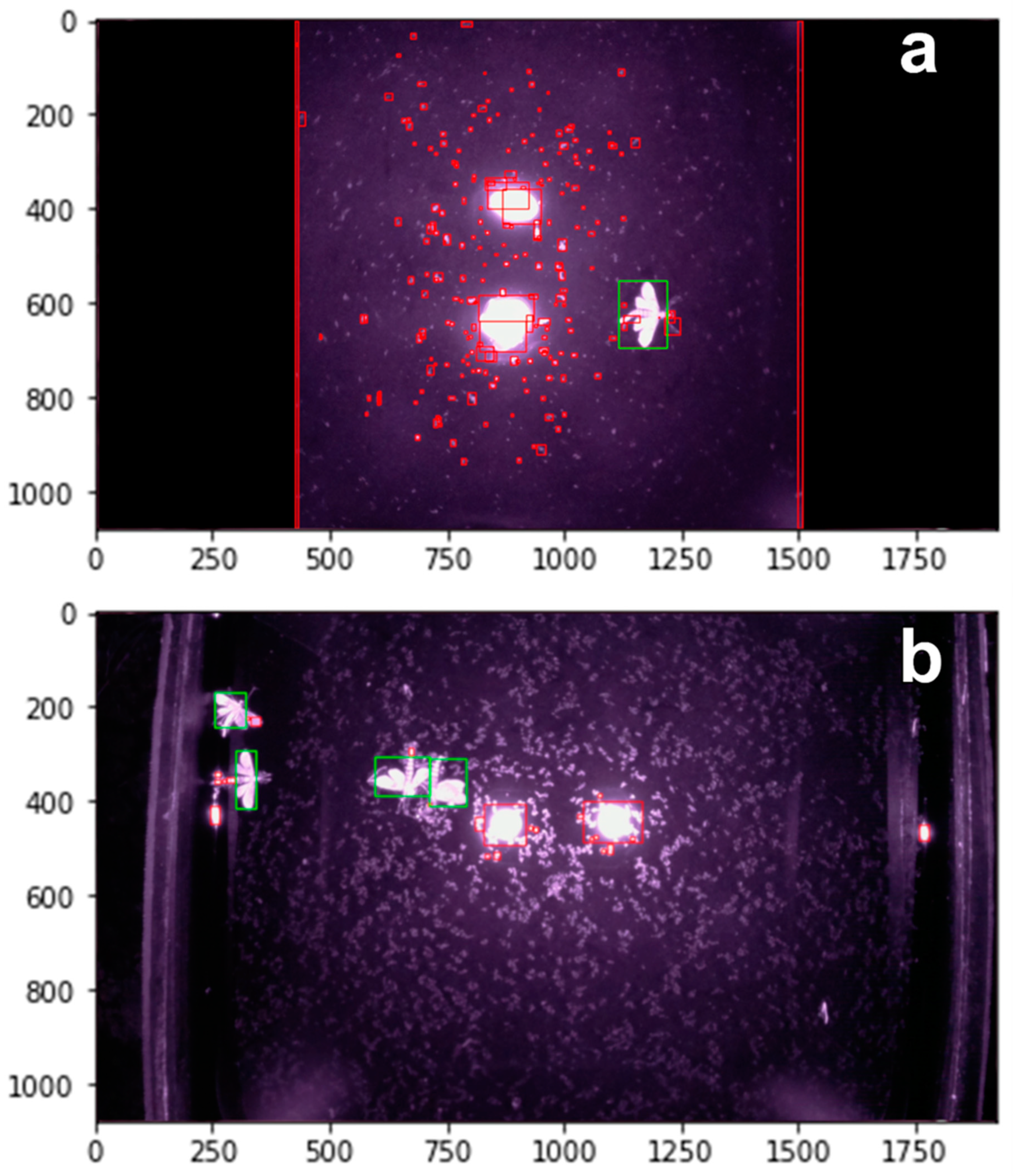

2.6. Design of the Trap Operation Control Algorithm and Field Tests

3. Results and Discussion

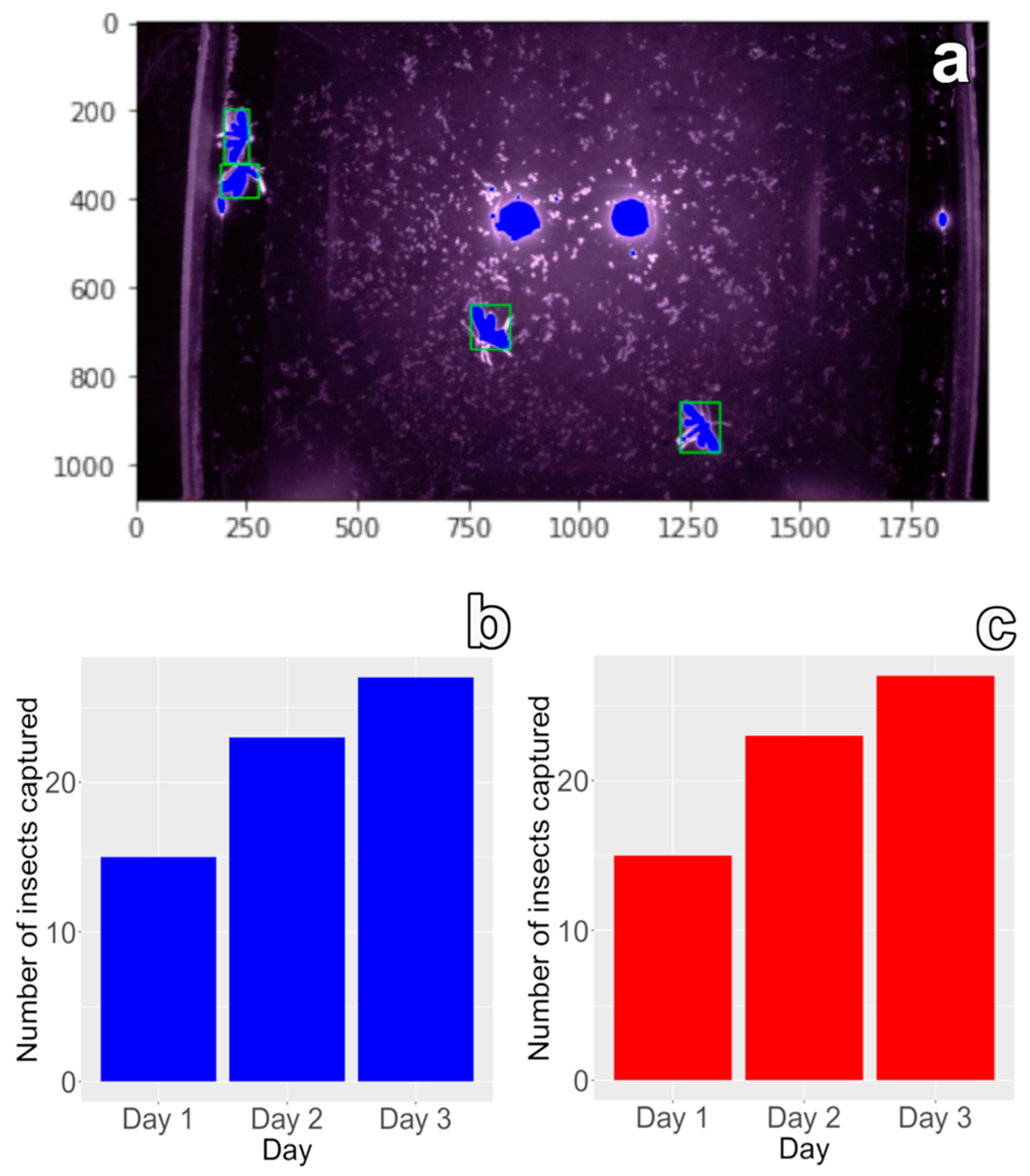

3.1. Insect Detection Model

3.2. Field Operation and Testing

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rodríguez-Vázquez, E.; Hernández-Juárez, A.; Reyes-Rosas, A.; Illescas-Riquelme, C.P.; Lara-Viveros, F.M. Detection and Early Warning of Duponchelia fovealis Zeller (Lepidoptera: Crambidae) Using an Automatic Monitoring System. AgriEngineering 2024, 6, 3785–3798. https://doi.org/10.3390/agriengineering6040216
