Driveway Detection for Weed Management in Cassava Plantation Fields in Thailand Using Ground Imagery Datasets and Deep Learning Models

Abstract

1. Introduction

1.1. Related Works

1.2. Objectives

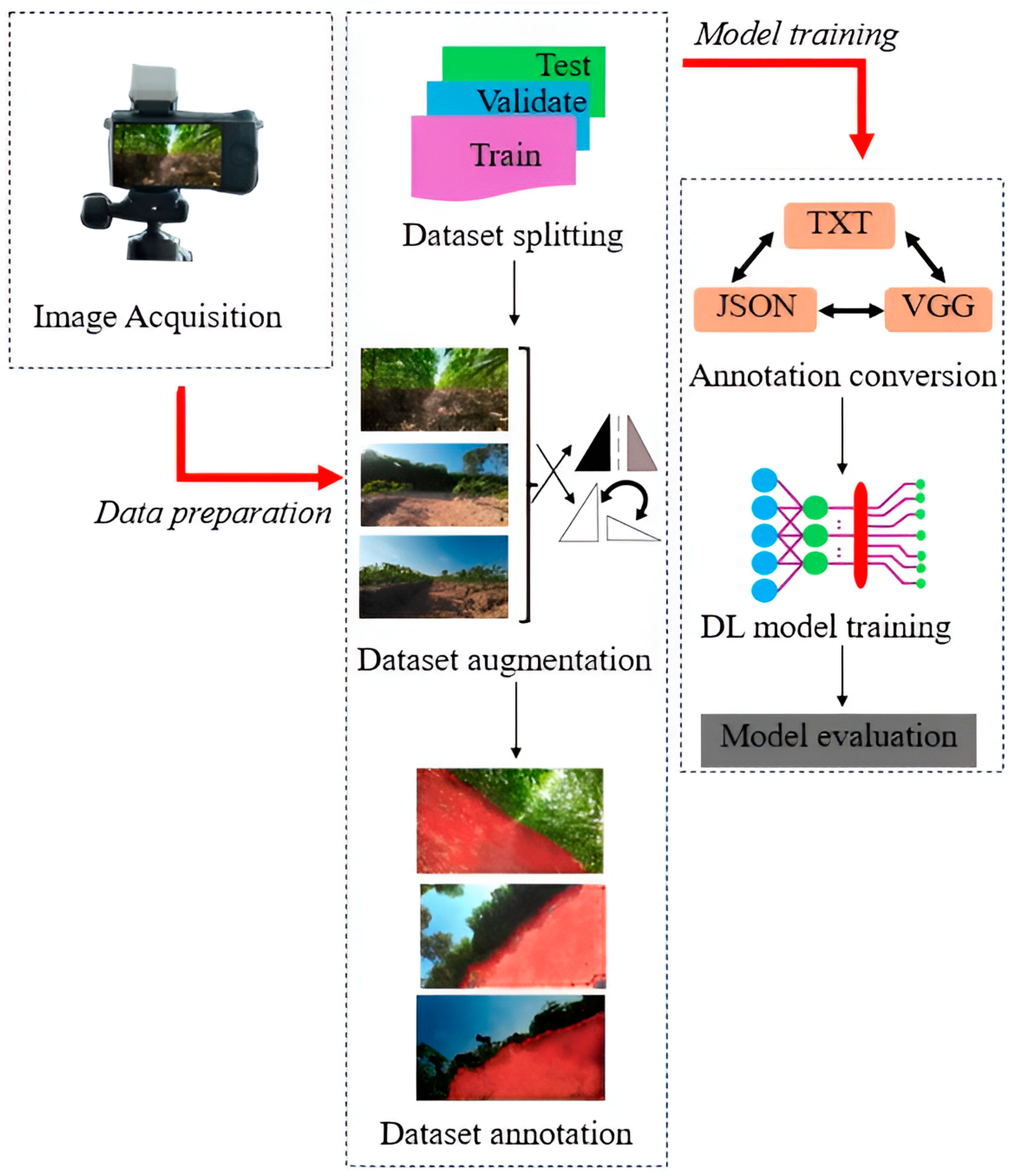

2. Materials and Methods

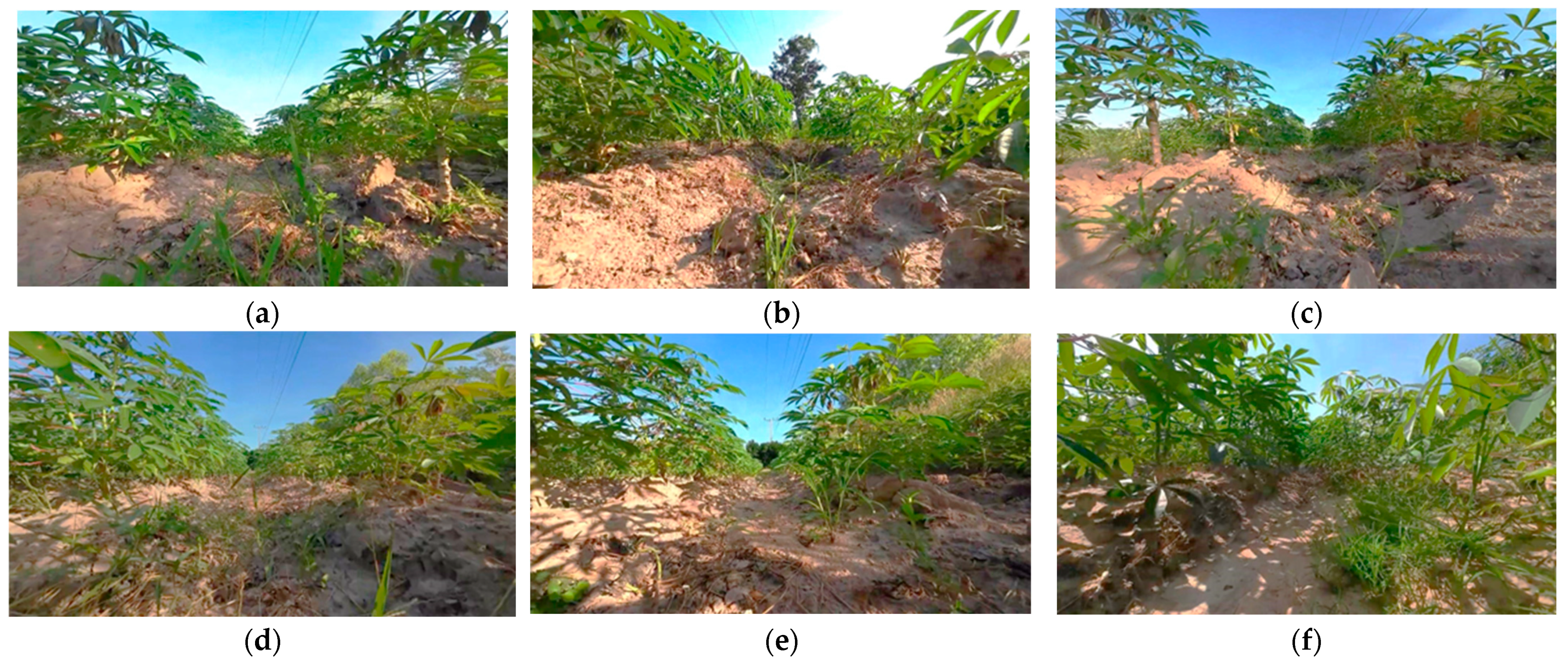

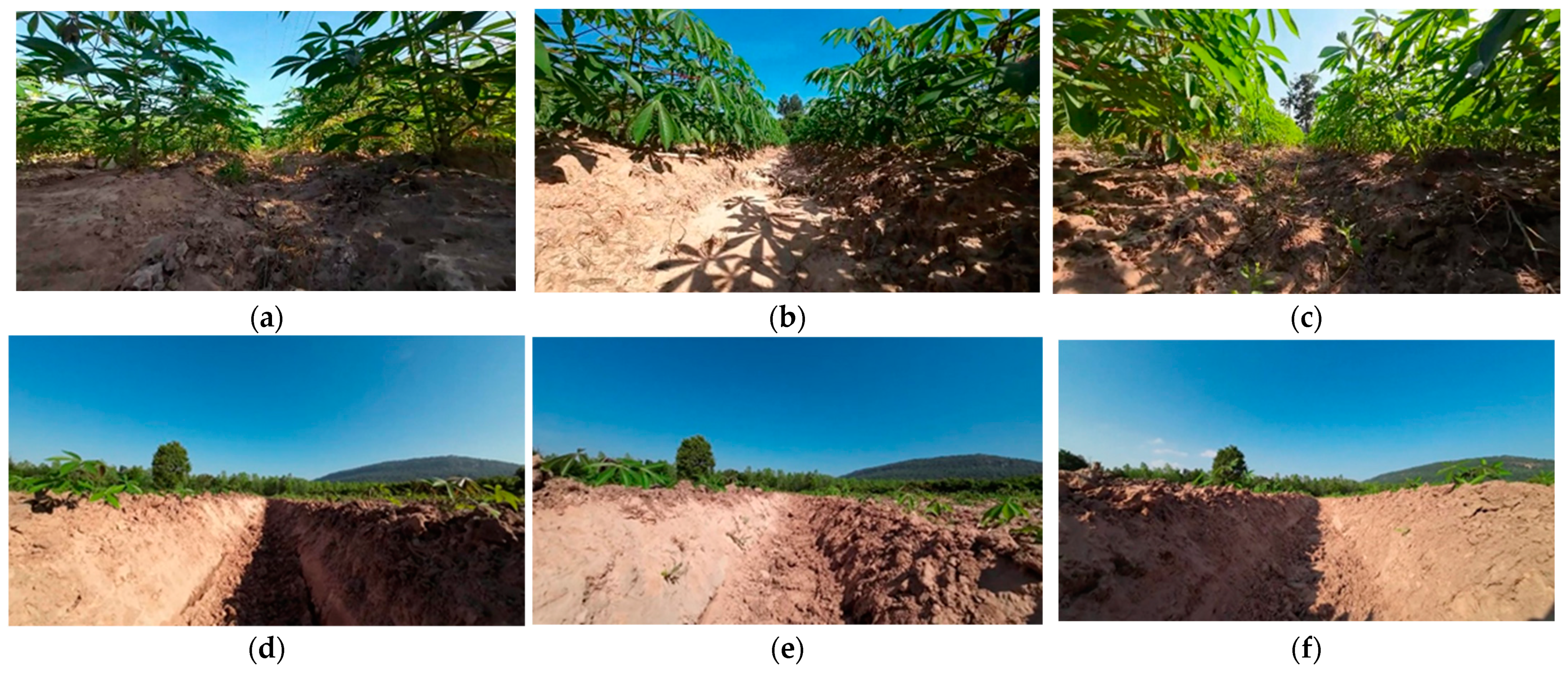

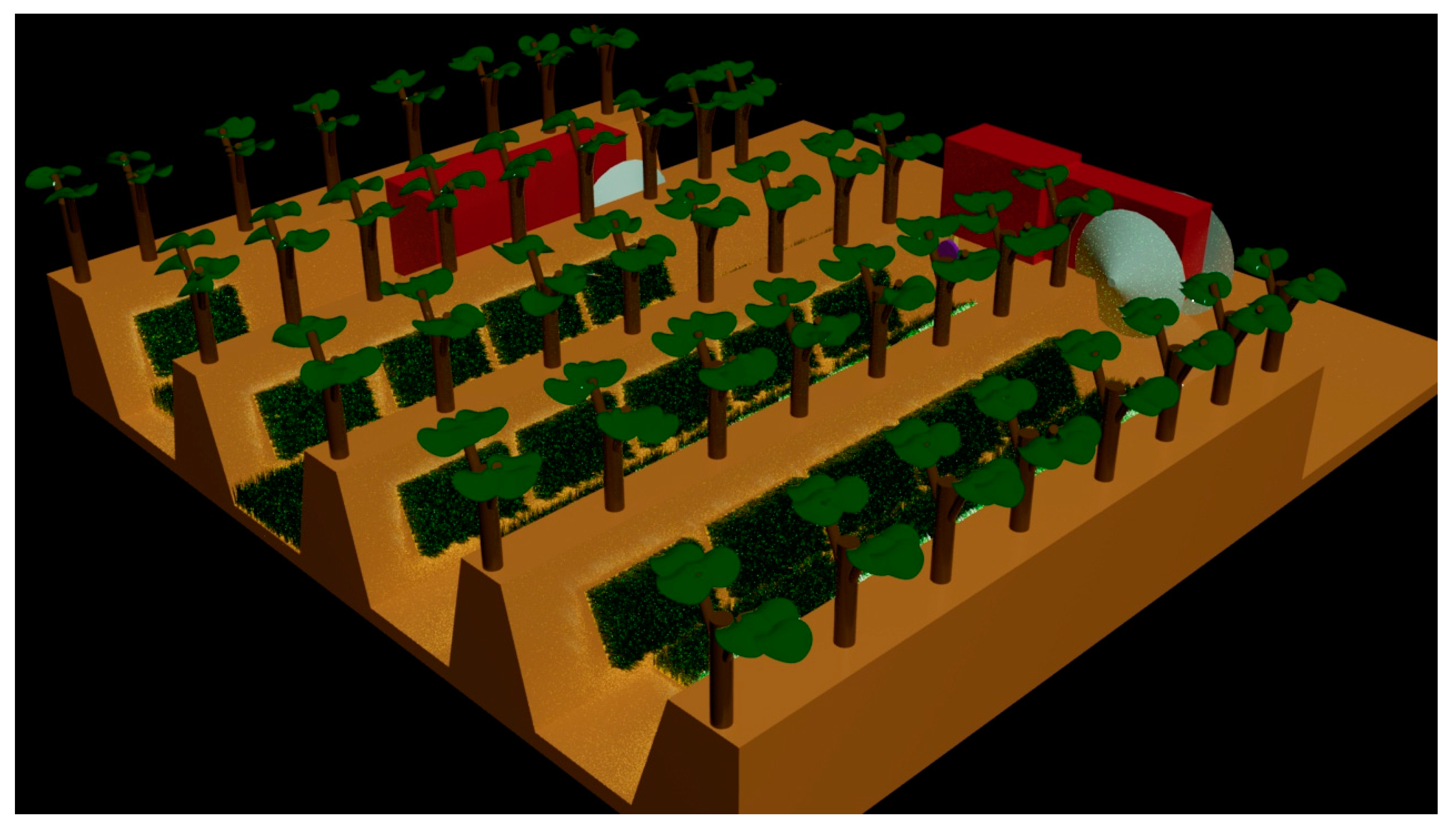

2.1. Remote Sensing-Based Ground Image Acquisition

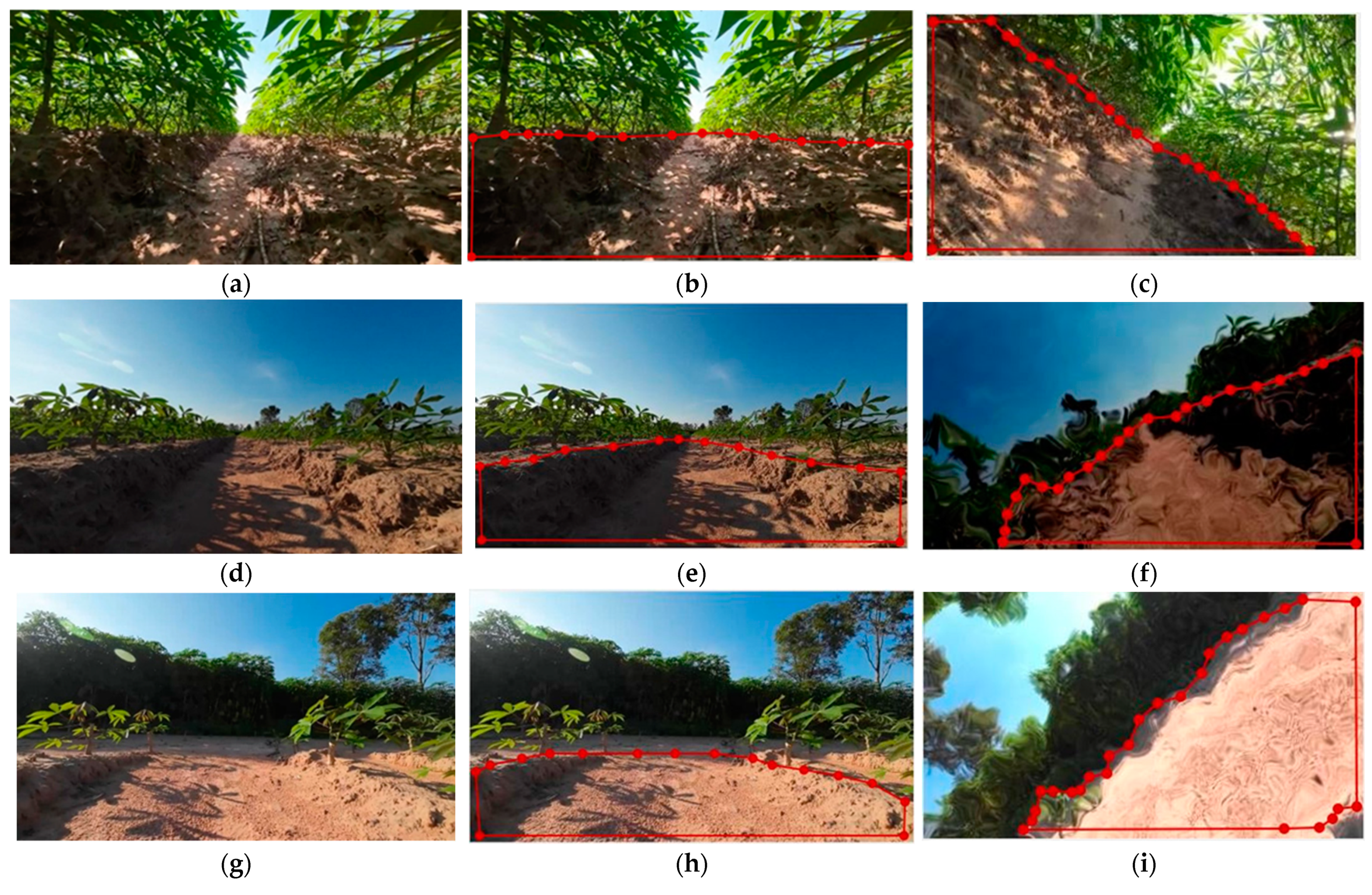

2.2. Image Dataset Development

2.3. Network Architecture

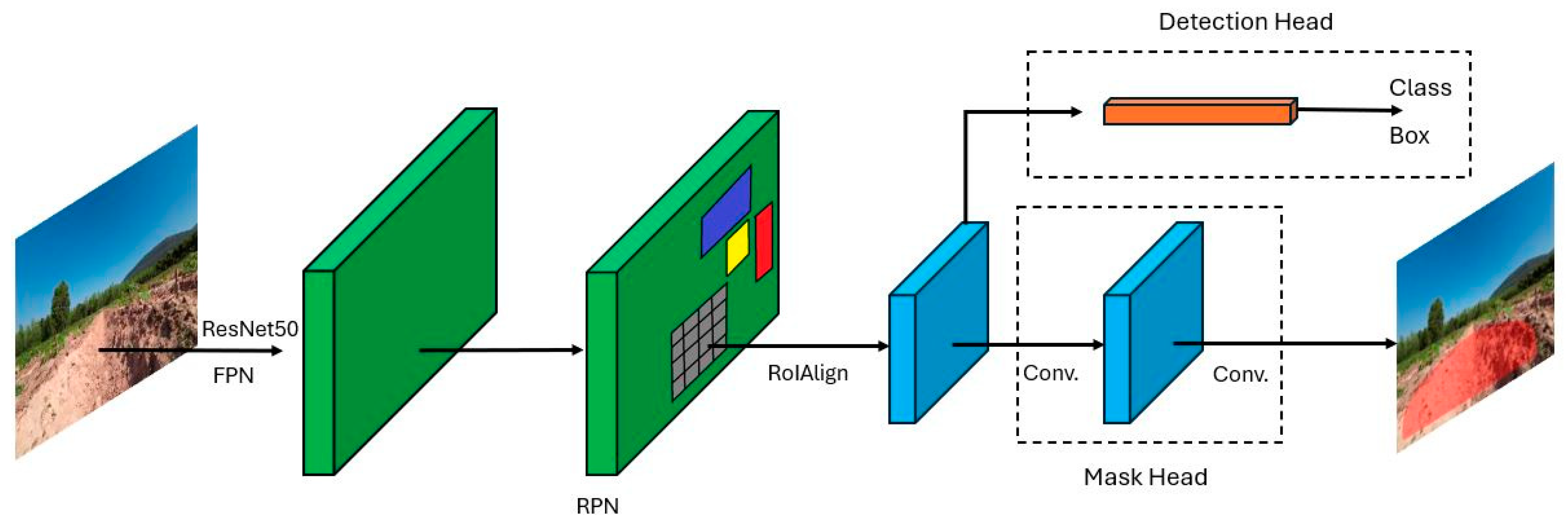

2.3.1. Mask R-CNN

- The input images of dimensions 800 × 800 are processed and input into ResNet50-FPN to extract the features. ResNet50-FPN, a combination of a ResNet50 backbone and a feature pyramid network (FPN), enhances the feature extraction across multiple scales. In this process, the images are converted into a feature map of 32 × 32 × 2048 pixels;

- The region proposal network (RPN) uses these feature maps to generate proposals or candidate bounding boxes indicating objects that may be contained in the images. The RPN then proposes potential regions of interest [21]. The ROI pooling layers extract the feature maps from the corresponding region to ensure that the feature representations for all the proposed regions have identical spatial dimensions regardless of the original sizes of the regions [22];

- The feature map and regions of interest are sent to the ROIAlign layers to ensure that all the proposed regions have identical sizes;

- Finally, the fixed-size feature maps are fed to two parallel branches. The first branch enters the object classification branch for class probability prediction for each proposed region. The second branch is a bounding box regression branch that refines the coordinates of the proposed bounding boxes to better fit the objects [23]. The algorithm outputs the 1152 × 1152-pixel output picture, which includes segmentation masks and bounding boxes.

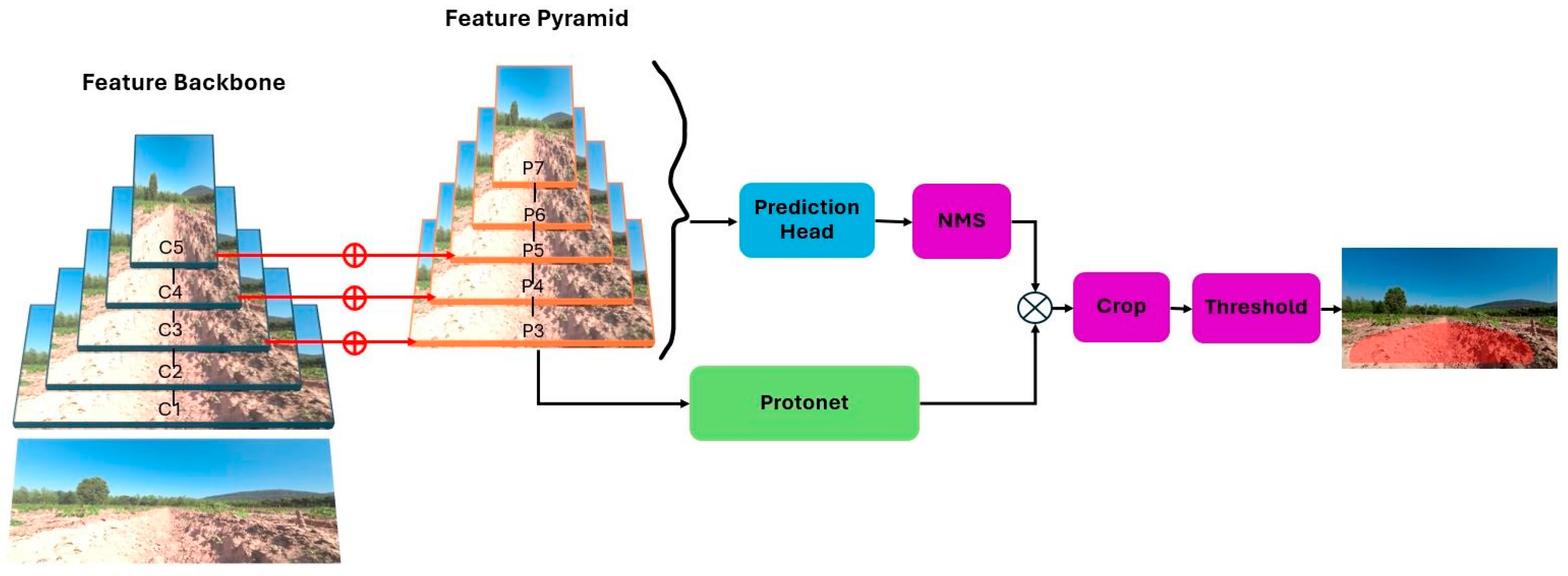

2.3.2. YOLACT

- Images with a size of 550 × 550 pixels are input into the ResNet50-FPN backbone to extract features from the images. The YOLACT model size is 97.7 megabytes;

- A set of prototype masks is generated by applying Protonet over the entire image;

- A set of coefficients is predicted for each instance in the images, and the bounding boxes are predicted for object instances. Non-maximum suppression (NMS) is applied to remove duplicate or overlapping bounding boxes;

- The instance masks are assembled by combining the corresponding coefficients and prototype masks. Then, the results are summed by cropping the images on the basis of the predicted bounding boxes, and an image containing the segmentation masks and bounding boxes with a size of 550 × 309 pixels is output [25].

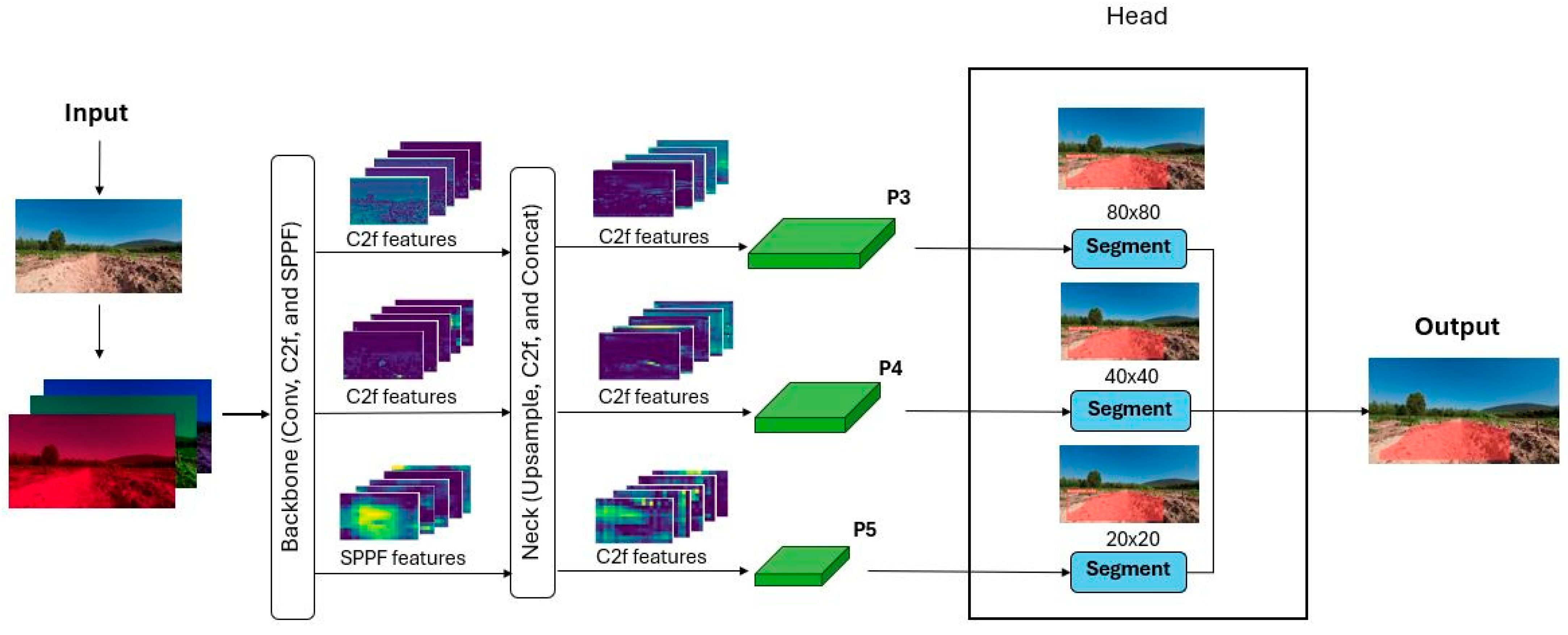

2.3.3. YOLOv8 Instance Segmentation

- Input: Images with a size of 640 × 640 pixels are input into the CSPDarknet53 backbone to extract features from the images. The model size is 6.5 megabytes (YOLOv8n-seg) [28];

- Spine: The spine of YOLOv8-seg extracts features via a cross-stage partial (CSP) layer called the C2f module. This cross-stage bottleneck is faster to implement than convolutional modules and combines high-level features to improve the detection accuracy. Additionally, the spatial pyramid pooling-fast (SPPF) layer and feature convolution layer, which extract features at different levels, significantly improve the model’s generalization ability;

- Neck: the feature pyramid network (FPN) in YOLOv8-seg allows the model to leverage multiscale features to detect objects of varying sizes accurately;

- Head: The head consists of a detection head and a segmentation head. The detection head outputs the bounding box and class label. The segmentation head consists of two parts for detection and segmentation and outputs a set of k masks and k detected objects. This head simultaneously produces different segmentation output levels inherited from the neck [29]. The segmentation results are subsequently combined to produce a single output of the segmentation process (Figure 9). The output image is 640 × 360 pixels in size.

2.4. Systems of Network Training and Testing

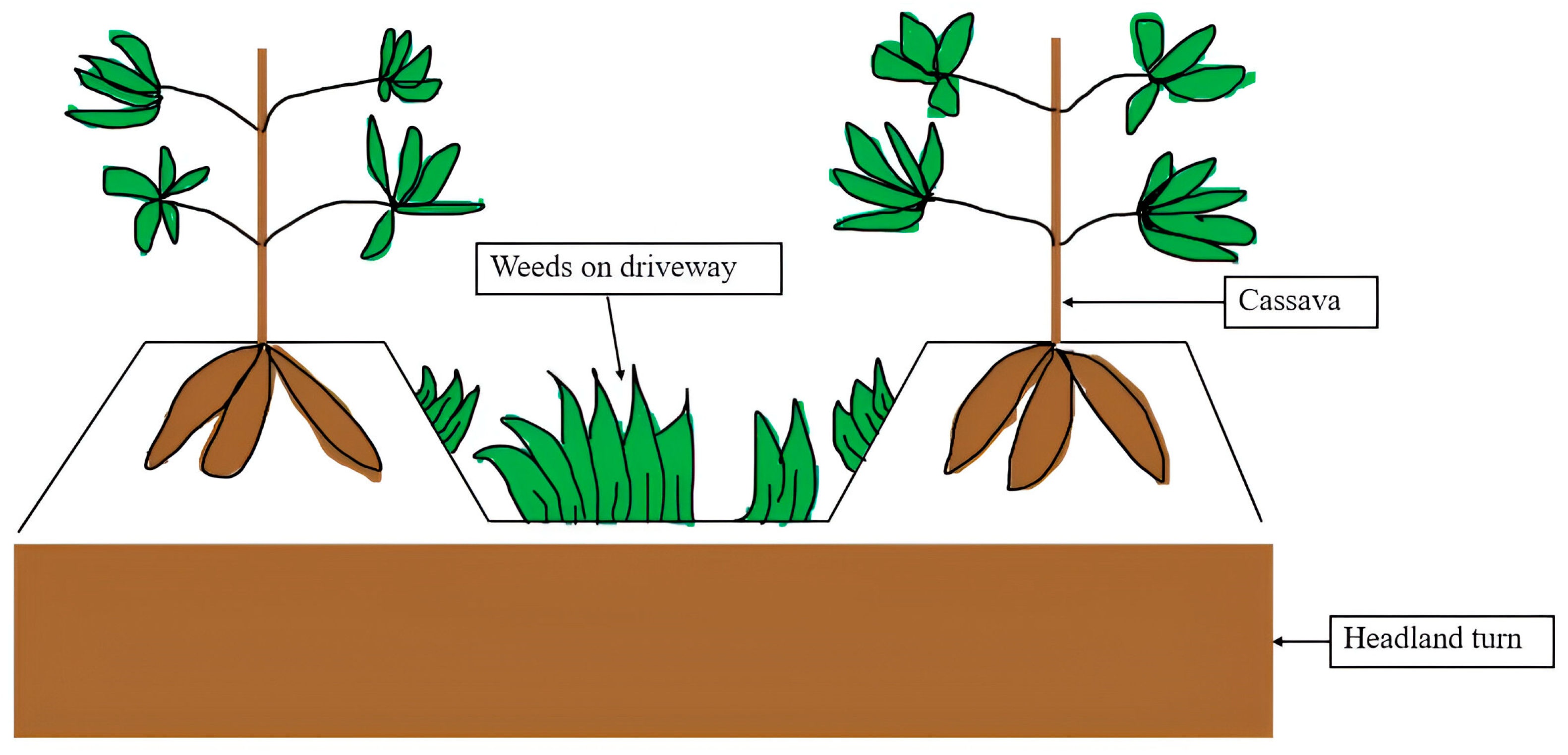

2.5. Building the Cassava Field Driveway Detection Model

2.6. Evaluation Metrics

3. Results and Discussion

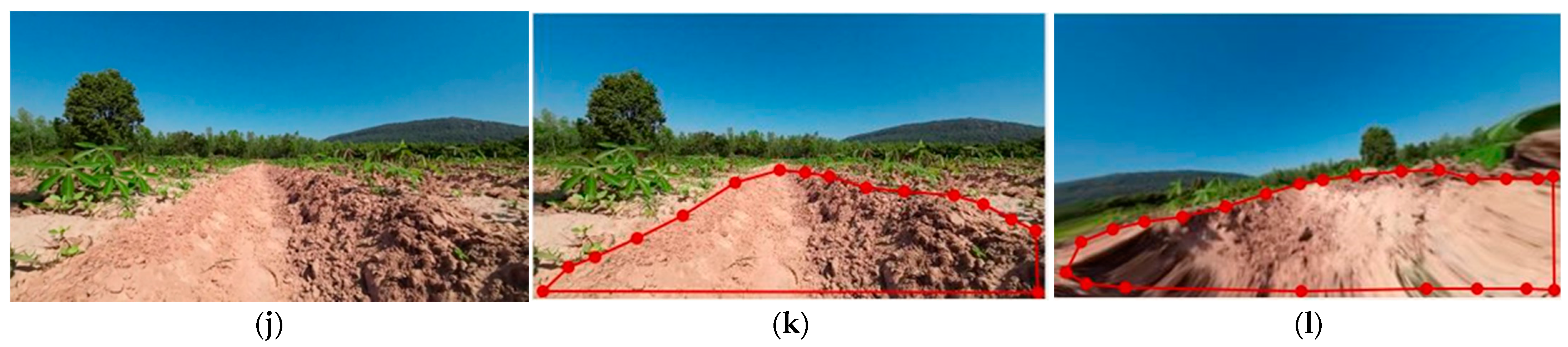

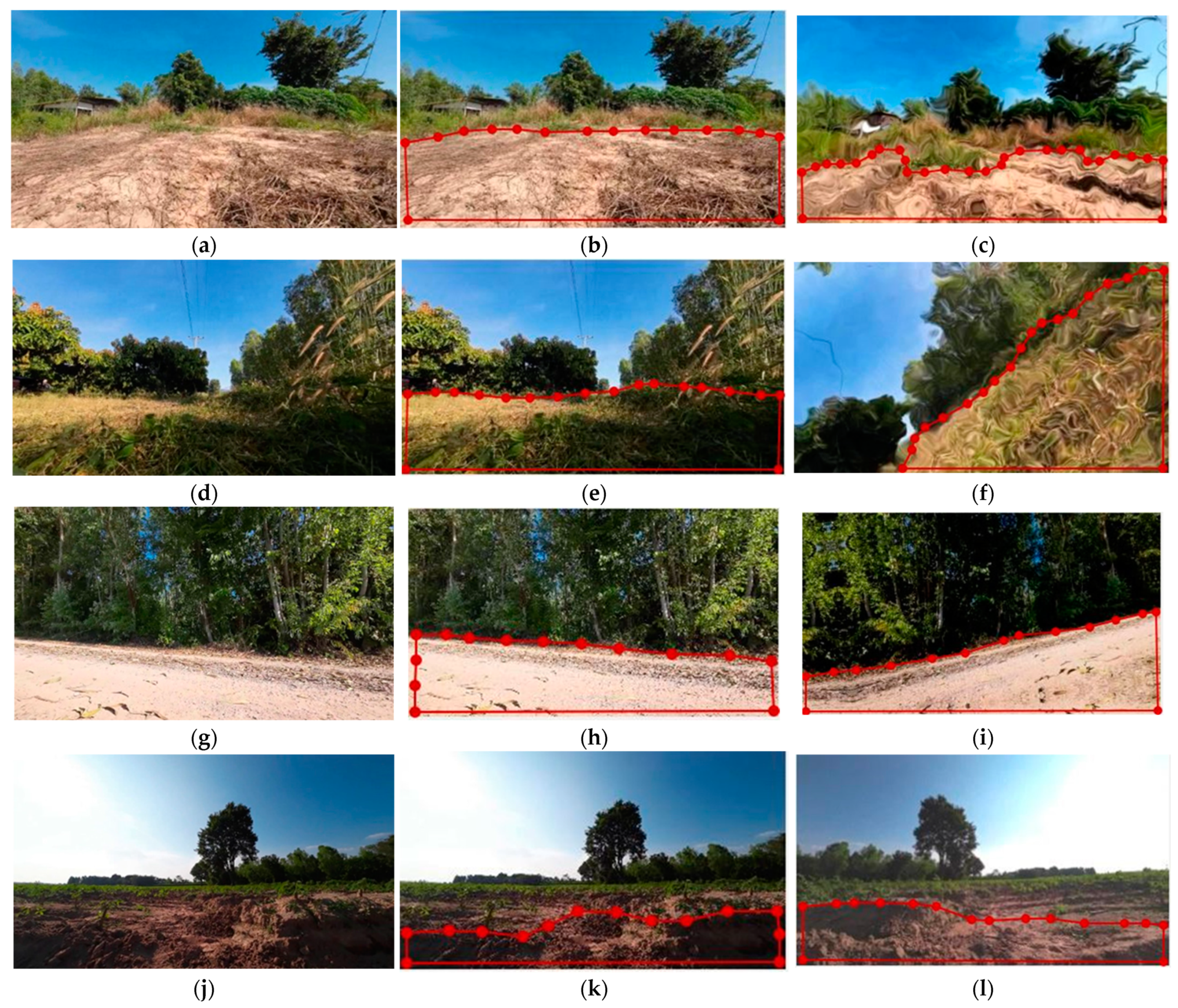

3.1. Image Dataset Evaluation

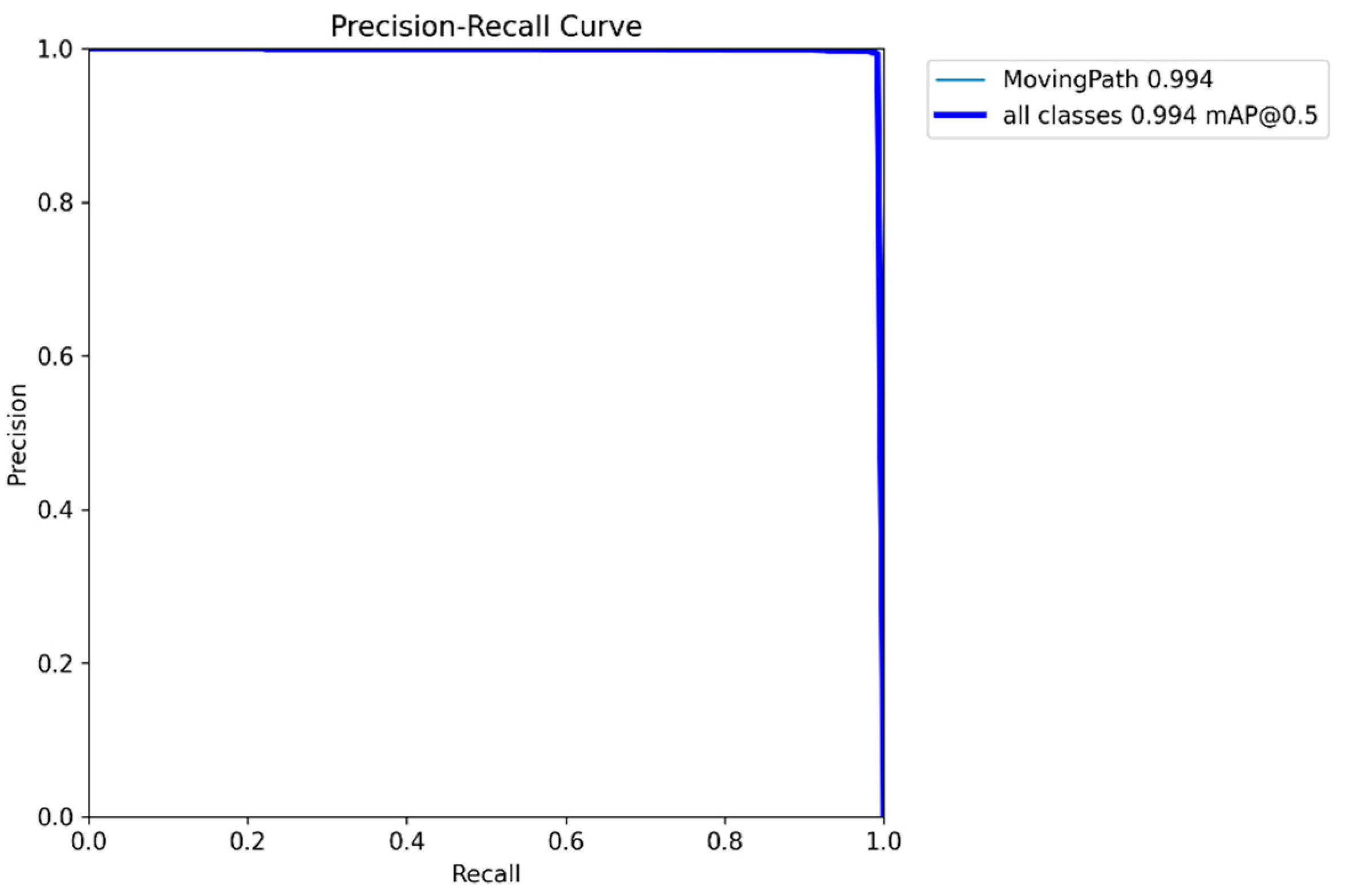

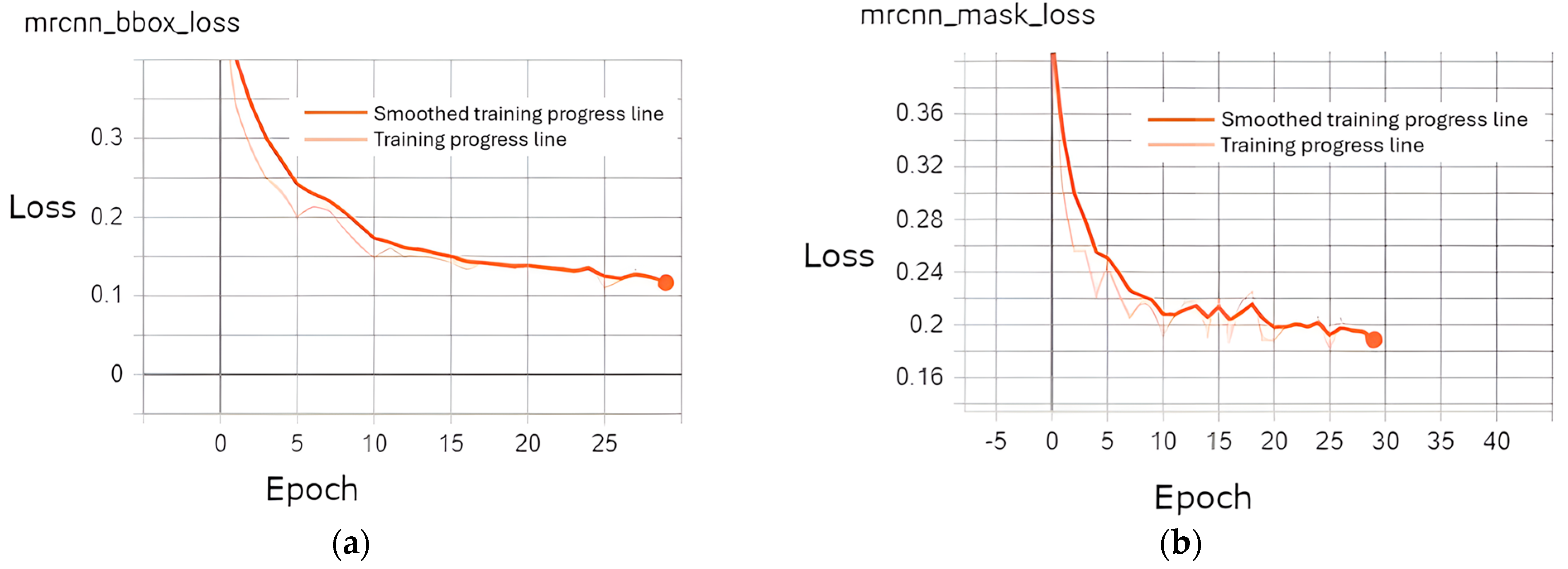

3.2. Training Performance

3.3. Testing Performance

3.3.1. Model Speed Performance

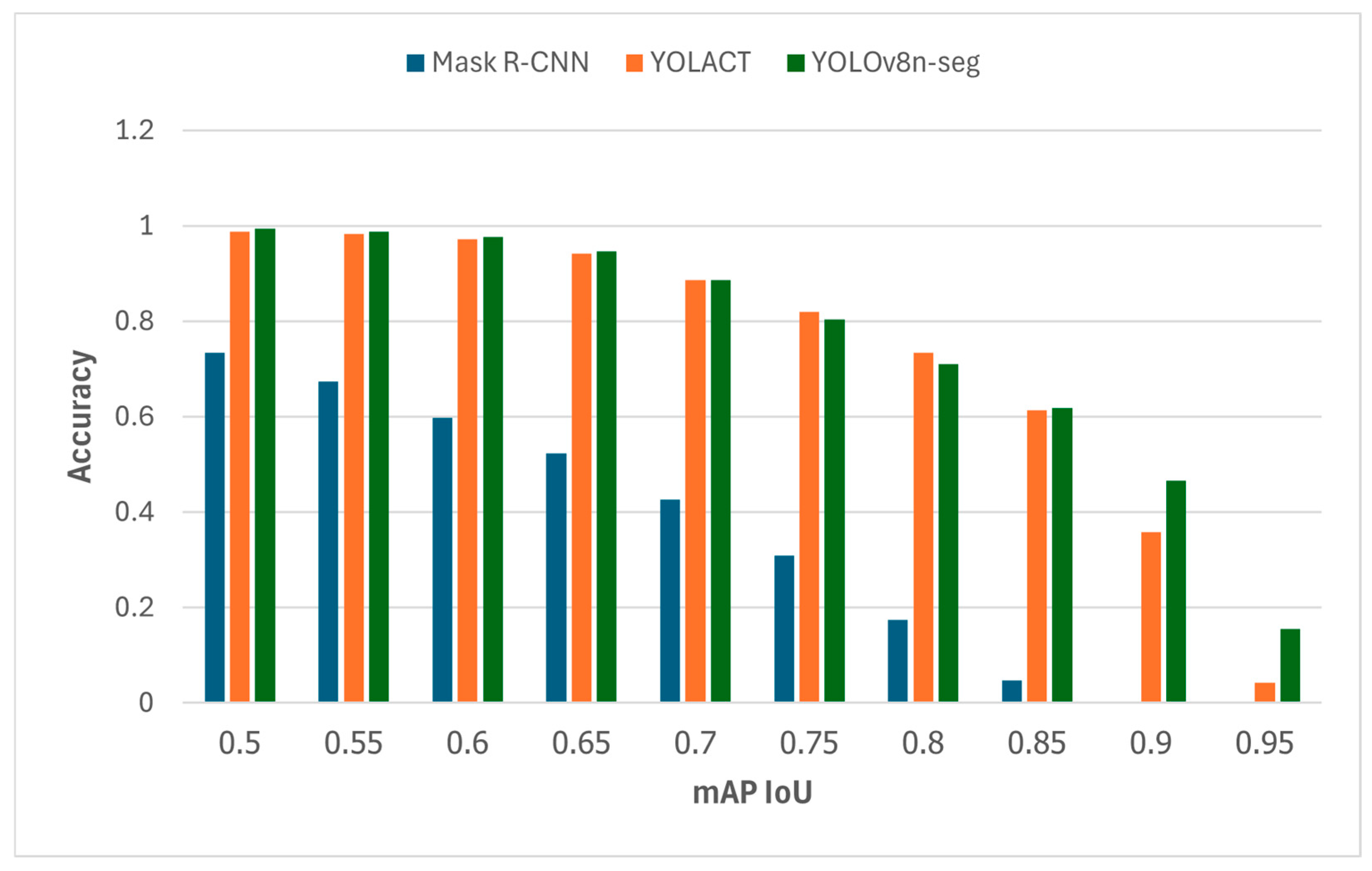

3.3.2. Model Segmentation Performance

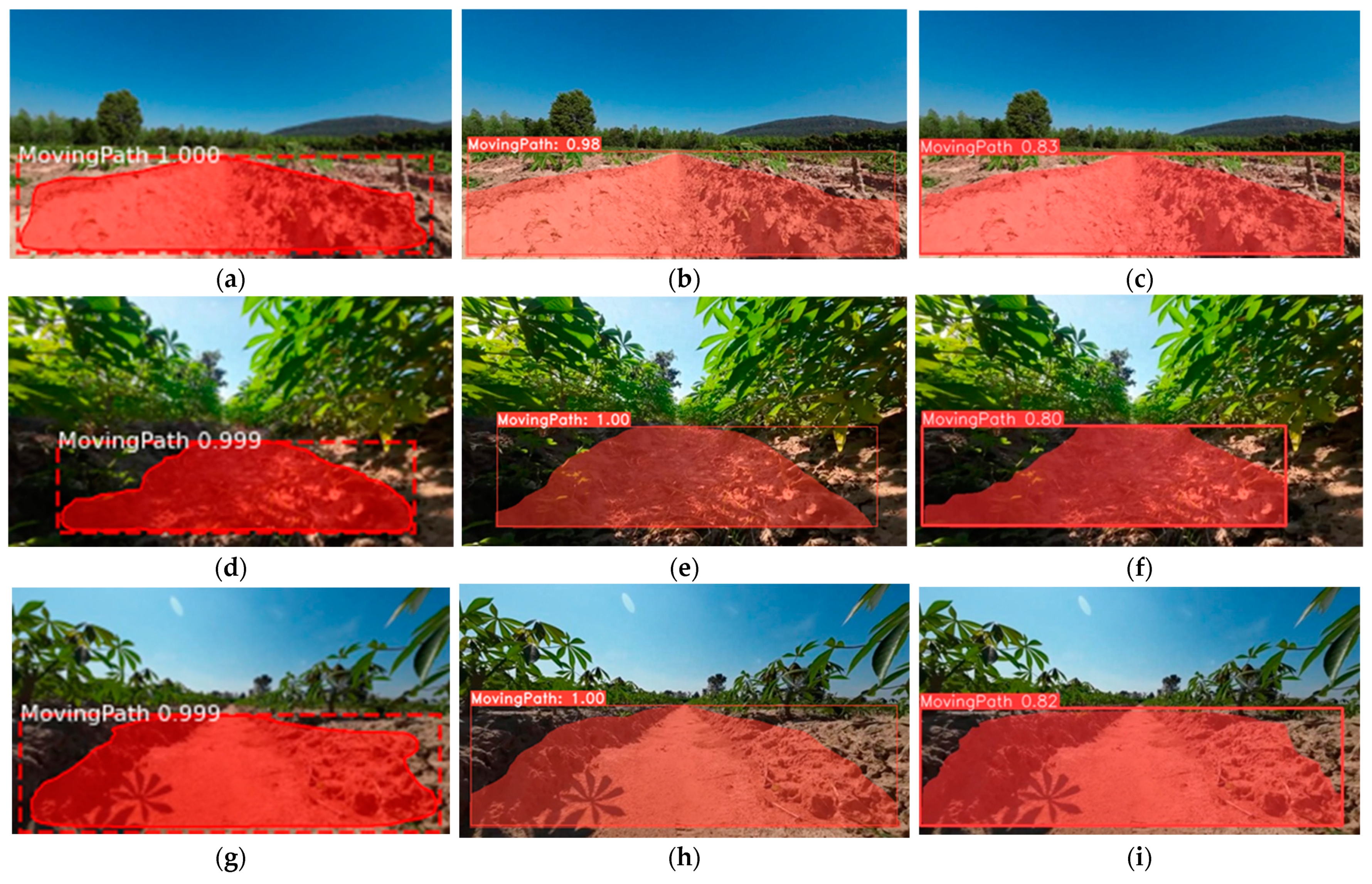

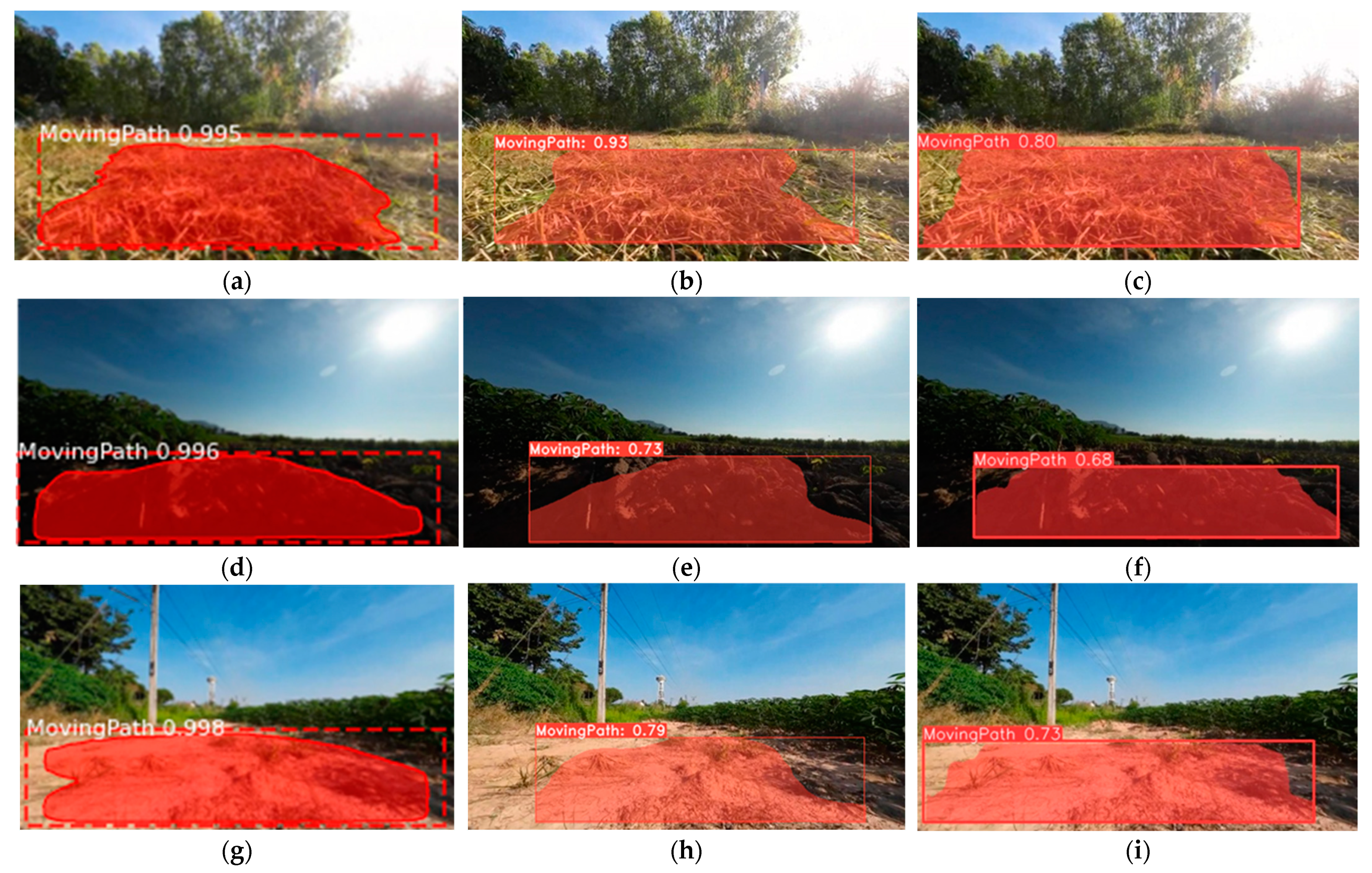

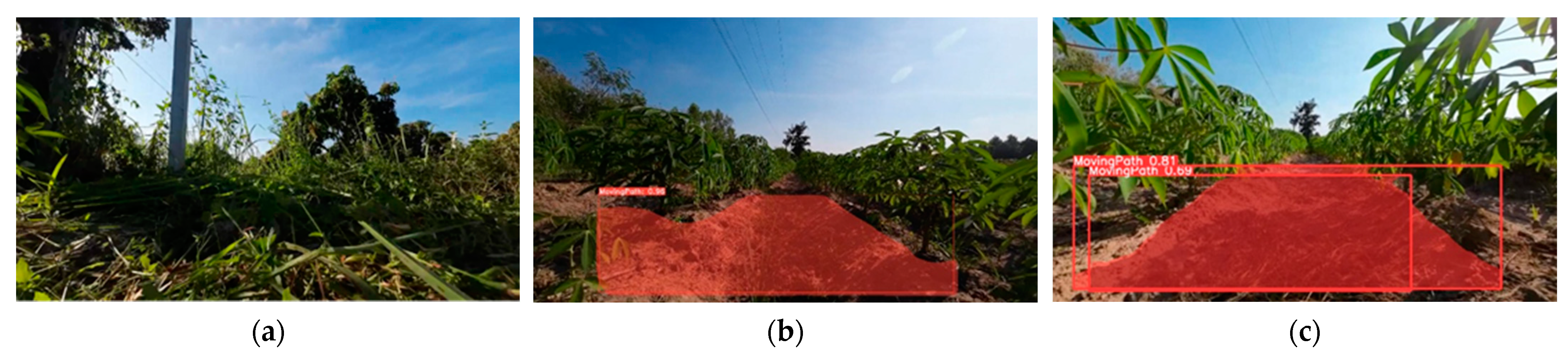

3.3.3. Implementation of the Detection Results

4. Conclusions

- Dataset Construction: Images of cassava plantations were collected at various times and under various conditions. In total, 3000 images were selected from the cassava datasets, which were augmented to 10,000. The datasets were separated into training and validation sets. Afterward, the data were input into the different image segmentation algorithms for training. A separate set of 300 original images was used for evaluating the test performances of the models;

- Driveway Detection: Drivable areas were successfully detected via the Mask R-CNN, YOLACT, and YOLOv8n-seg image segmentation algorithms. The training and test performances were assessed. YOLOv8n-seg achieved the best training performance in terms of both mAP@0.5 and mAP@0.5:0.95, which were 0.994 and 0.793, respectively. YOLOv8n-seg achieved the best FPS results on computers with and without discrete GPUs: 114.94 FPS and 12.16 FPS, respectively;

- Segmentation Results: New results were obtained via Mask R-CNN, which had the lowest accuracy at mAP@0.5 and resulted in inappropriate detections during testing on the video. The FPS results of all three image segmentation networks were obtained. YOLOv8n-seg obtained the best results, followed by YOLACT and Mask R-CNN. However, during testing on the video, YOLOv8n-seg yielded double detection on a single frame because NMS did not eliminate overlapping detections. Therefore, YOLACT can be implemented in driveway detection systems because this issue does not occur with it;

- Proposed Implementation: Further research will be conducted on a ground-based image dataset with a computer vision system that uses deep learning algorithms to automatically navigate a mechanical weeder in the cassava plantation driveway of a planting groove along a headland turn for unmanned weed management.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Clifton, P.; Keogh, J. Starch. In Encyclopedia of Food and Health; Elsevier: Amsterdam, The Netherlands, 2016; pp. 146–151. [Google Scholar]

- Khanthavong, P. Effect of Weed Biomass on Cassava Yield Related to Weeding Times. Adv. Plants Agric. Res. 2016, 5, 630–632. [Google Scholar] [CrossRef]

- Poramacom, N.; Ungsuratana, A.; Ungsuratana, P.; Supavititpattana, P. Cassava Production, Prices and Related Policy in Thailand. SSRN Electron. J. 2012, 3. [Google Scholar] [CrossRef]

- Polthanee, A.; Janthajam, C.; Promkhambu, A. Growth, Yield and Starch Content of Cassava Following Rainfed Lowland Rice in Northeast Thailand. Int. J. Agric. Res. 2014, 9, 319–324. [Google Scholar] [CrossRef]

- Chalachai, S.; Soni, P.; Chamsing, A.; Salokhe, V.M. A Critical Review of Mechanization in Cassava Harvesting in Thailand. Int. Agric. Eng. J. 2013, 22, 81–93. [Google Scholar]

- Siebers, T.; Catarino, B.; Agusti, J. Identification and Expression Analyses of New Potential Regulators of Xylem Development and Cambium Activity in Cassava (Manihot Esculenta). Planta 2016, 245, 539–548. [Google Scholar] [CrossRef] [PubMed]

- Ennin, S.; Otoo, E.; Tetteh, F. Ridging, a Mechanized Alternative to Mounding for Yam and Cassava Production. West Afr. J. Appl. Ecol. 2009, 15. [Google Scholar] [CrossRef][Green Version]

- Jiamjunnunja, J.; Sarobol, E.; Vicchukit, V.; Rojanaritpichet, C.; Poolsajuan, P.; Lertmongkol, V.; Duangpatra, P. Weed Management in Cassava Plantation; Department of Agronomy, Faculty of Agriculture, Kasetsart University: Bangkok, Thailand, 1999. [Google Scholar]

- Wang, X.; Zeng, H.; Lin, L.; Huang, Y.; Lin, H.; Que, Y. Deep Learning-Empowered Crop Breeding: Intelligent, Efficient and Promising. Front. Plant Sci. 2023, 14, 1260089. [Google Scholar] [CrossRef]

- Panda, S.K.; Lee, Y.; Jawed, M.K. Agronav: Autonomous Navigation Framework for Agricultural Robots and Vehicles Using Semantic Segmentation and Semantic Line Detection. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Vancouver, BC, Canada, 18–22 June 2023. [Google Scholar] [CrossRef]

- Li, Y.; Guo, Z.; Shuang, F.; Zhang, M.; Li, X. Key Technologies of Machine Vision for Weeding Robots: A Review and Bench-mark. Comput. Electron. Agric. 2022, 196, 106880. [Google Scholar] [CrossRef]

- Ahmadi, A.; Halstead, M.; McCool, C. Towards Autonomous Visual Navigation in Arable Fields. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022. [Google Scholar] [CrossRef]

- Bakken, M.; Ponnambalam, V.R.; Moore, R.J.D.; Gjevestad, J.G.O.; From, P.J. Robot-Supervised Learning of Crop Row Seg-mentation. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022. [Google Scholar] [CrossRef]

- Casagli, N.; Morelli, S.; Frodella, W.; Intrieri, E.; Tofani, V. TXT-Tool 2.039-3.2 Ground-Based Remote Sensing Techniques for Landslides Mapping, Monitoring and Early Warning. In Landslide Dynamics: ISDR-ICL Landslide Interactive Teaching Tools; Springer: Cham, Switzerland, 2018; pp. 255–274. [Google Scholar]

- Martí-Juan, G.; Sanroma-Guell, G.; Piella, G. A Survey on Machine and Statistical Learning for Longitudinal Analysis of Neuroimaging Data in Alzheimer’s Disease. Comput. Methods Programs Biomed. 2020, 189, 105348. [Google Scholar] [CrossRef]

- Piccialli, F.; Di Somma, V.; Giampaolo, F.; Cuomo, S.; Fortino, G. A Survey on Deep Learning in Medicine: Why, How and When? Inf. Fusion 2021, 66, 111–137. [Google Scholar] [CrossRef]

- Sapkota, R.; Ahmed, D.; Karkee, M. Comparing YOLOv8 and Mask R-CNN for Instance Segmentation in Complex Orchard Environments. Artif. Intell. Agric. 2024, 13, 84–99. [Google Scholar] [CrossRef]

- Sharma, J.; Kumar, D.; Chattopadhay, S.; Kukreja, V.; Verma, A. Automated Detection of Wheat Powdery Mildew Using YOLACT Instance Segmentation. In Proceedings of the 2024 11th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions) (ICRITO), Noida, India, 14–15 March 2024; pp. 1–4. [Google Scholar] [CrossRef]

- Wada, K. Image Polygonal Annotation with Python [Computer Software]. Available online: https://zenodo.org/records/5711226 (accessed on 14 June 2024).

- He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017. [Google Scholar] [CrossRef]

- Deng, H.; Ergu, D.; Liu, F.; Ma, B.; Cai, Y. An Embeddable Algorithm for Automatic Garbage Detection Based on Complex Marine Environment. Sensors 2021, 21, 6391. [Google Scholar] [CrossRef] [PubMed]

- Ahmed, B.; Gulliver, T.A.; alZahir, S. Image Splicing Detection Using Mask-RCNN. Signal Image Video Process. 2020, 14, 1035–1042. [Google Scholar] [CrossRef]

- Wang, S.; Sun, G.; Zheng, B.; Du, Y. A Crop Image Segmentation and Extraction Algorithm Based on Mask RCNN. Entropy 2021, 23, 1160. [Google Scholar] [CrossRef]

- Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. YOLACT: Real-Time Instance Segmentation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019. [Google Scholar] [CrossRef]

- Zheng, J.; Zheng, J.; Zhang, S.; Yu, H.; Kong, L.; Zhigang, D. Segmentation Method for Whole Vehicle Wood Detection Based on Improved YOLACT Instance Segmentation Model. IEEE Access 2023, 11, 81434–81448. [Google Scholar] [CrossRef]

- Zhao, X.; Ding, W.; An, Y.; Du, Y.; Yu, T.; Li, M.; Tang, M.; Wang, J. Fast Segment Anything. arXiv 2023. [Google Scholar] [CrossRef]

- Lyu, Z.; Lu, A.; Ma, Y. Improved YOLOV8-SEG Based on Multiscale Feature Fusion and Deformable Convolution for Weed Precision Segmentation. Appl. Sci. 2024, 14, 5002. [Google Scholar] [CrossRef]

- Yue, X.; Qi, K.; Na, X.; Zhang, Y.; Liu, Y.; Liu, C. Improved YOLOV8-SEG Network for Instance Segmentation of Healthy and Diseased Tomato Plants in the Growth Stage. Agriculture 2023, 13, 1643. [Google Scholar] [CrossRef]

- Sampurno, R.M.; Liu, Z.; Abeyrathna, R.M.R.D.; Ahamed, T. Intrarow Uncut Weed Detection Using You-Only-Look-Once Instance Segmentation for Orchard Plantations. Sensors 2024, 24, 893. [Google Scholar] [CrossRef]

- Corrigan, B.C.; Tay, Z.Y.; Konovessis, D. Real-Time Instance Segmentation for Detection of Underwater Litter as a Plastic Source. J. Mar. Sci. Eng. 2023, 11, 1532. [Google Scholar] [CrossRef]

- Solawetz, J. An Introduction to the COCO Dataset. Roboflow, 18 October 2020. [Google Scholar]

- Fabian, P.; Gaël, V.; Alexandre, G.; Vincent, M.; Bertrand, T.; Olivier, G.; Mathieu, B.; Peter, P.; Ron, W.; Vincent, D.; et al. Scikit-Learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830. [Google Scholar]

- Ulutas, E.G.; Altin, C. Kiwi Fruit Detection with Deep Learning Methods. Int. J. Adv. Nat. Sci. Eng. Res. 2023, 7, 39–45. [Google Scholar] [CrossRef]

- Wang, G.; Zhang, B.; Wang, H.; Xu, L.; Li, Y.; Liu, Z. Detection of the Drivable Area on High-Speed Road via YOLACT. Signal Image Video Process. 2022, 16, 1623–1630. [Google Scholar] [CrossRef]

| Model | Input Size | Batch Size | Epoch | Decay | Class | Learning | Momentum |

|---|---|---|---|---|---|---|---|

| Mask R-CNN | 800 × 800 | 2 | 30 | 0.0001 | 1 | 0.001 | 0.9 |

| YOLACT | 550 × 550 | 8 | 11 | 0.0005 | 1 | 0.001 | 0.9 |

| YOLOv8n-seg | 640 × 640 | 2 | 109 | 0.0005 | 1 | 0.001 | 0.937 |

| No. | Model | Backbone | Original mAP of Weights Pretrained on COCO 2017 | mAP@0.5 | mAP@0.5:0.95 |

|---|---|---|---|---|---|

| 1 | Mask R-CNN | ResNet50 | 0.361 | 0.735 | 0.349 |

| 2 | YOLACT | ResNet50 | 0.298 | 0.988 | 0.733 |

| 3 | YOLOv8n-seg | CSPDarknet53 | 0.367 | 0.994 | 0.793 |

| Devices | Mask R-CNN | YOLACT | YOLOv8n-seg |

|---|---|---|---|

| GPUs | 1.92 FPS | 23.45 FPS | 114.94 FPS |

| CPUs | 0.38 FPS | 1.49 FPS | 12.16 FPS |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Opasatian, I.; Ahamed, T. Driveway Detection for Weed Management in Cassava Plantation Fields in Thailand Using Ground Imagery Datasets and Deep Learning Models. AgriEngineering 2024, 6, 3408-3426. https://doi.org/10.3390/agriengineering6030194

Opasatian I, Ahamed T. Driveway Detection for Weed Management in Cassava Plantation Fields in Thailand Using Ground Imagery Datasets and Deep Learning Models. AgriEngineering. 2024; 6(3):3408-3426. https://doi.org/10.3390/agriengineering6030194

Chicago/Turabian StyleOpasatian, Ithiphat, and Tofael Ahamed. 2024. "Driveway Detection for Weed Management in Cassava Plantation Fields in Thailand Using Ground Imagery Datasets and Deep Learning Models" AgriEngineering 6, no. 3: 3408-3426. https://doi.org/10.3390/agriengineering6030194

APA StyleOpasatian, I., & Ahamed, T. (2024). Driveway Detection for Weed Management in Cassava Plantation Fields in Thailand Using Ground Imagery Datasets and Deep Learning Models. AgriEngineering, 6(3), 3408-3426. https://doi.org/10.3390/agriengineering6030194