Abstract

Having an additional tool for swiftly determining the extent of flood damage to crops with confidence is beneficial. This study focuses on estimating rice crop damage caused by flooding in Candaba, Pampanga, using open-source satellite data. By analyzing the correlation between Normalized Difference Vegetation Index (NDVI) measurements from unmanned aerial vehicles (UAVs) and Sentinel-2 (S2) satellite data, a cost-effective and time-efficient alternative for agricultural monitoring is explored. This study comprises two stages: establishing a correlation between clear sky observations and NDVI measurements, and employing a combination of S2 NDVI and Synthetic Aperture Radar (SAR) NDVI to estimate crop damage. The integration of SAR and optical satellite data overcomes cloud cover challenges during typhoon events. The accuracy of standing crop estimation reached up to 99.2%, while crop damage estimation reached up to 99.7%. UAVs equipped with multispectral cameras prove effective for small-scale monitoring, while satellite imagery offers a valuable alternative for larger areas. The strong correlation between UAV and satellite-derived NDVI measurements highlights the significance of open-source satellite data in accurately estimating rice crop damage, providing a swift and reliable tool for assessing flood damage in agricultural monitoring.

1. Introduction

The Philippines is predominantly an agrarian nation, where a significant proportion of the population resides in rural regions and relies on agriculture as their primary means of sustenance. According to the Statista Research Department, the agricultural industry contributed approximately 1.76 trillion Philippine pesos to the gross value added (GVA) in 2021, accounting for approximately 9.6 percent of the nation’s gross domestic product (GDP) [1]. However, being an archipelago and a tropical country situated within a typhoon belt, the Philippines experiences a high frequency of tropical cyclones, with about 20 typhoons and eight to nine landfall events annually [2]. The provinces in Cagayan Valley and Central Luzon in the northern Philippines, as well as the Bicol Region in the east, are significantly vulnerable to rice damage caused by typhoons [3]. Agricultural areas are typically located at lower elevations than urban settlements, which makes them susceptible to the devastating impact of typhoons and prolonged heavy rainfall, leading to destructive flooding that significantly affects agricultural crops and the livelihoods of farmers.

Among the recurring causes of loss for local farmers, flooding-induced damage to rice crops poses a substantial challenge. To mitigate these losses, government officials have the authority to declare a state of calamity in affected municipalities. The Philippine Disaster Risk Reduction and Management Act of 2010, also known as Republic Act 10121, defines a state of calamity as a condition characterized by mass casualties, significant property damage, and the disruption of livelihoods, roads, and the normal way of life resulting from natural or human-induced hazards. The declaration of a state of calamity enables the reallocation of funds for infrastructure repair and improvement, the implementation of price controls on essential goods, the provision of interest-free loans to severely affected segments of the population through cooperatives or organizations, and the monitoring and prevention of price gouging, profiteering, and the hoarding of essential goods, medicines, and petroleum products [4,5]. The challenge lies in how quickly and reliably damages are reported, as the declaration of a state of calamity depends on such reports.

Currently, the estimation of agricultural damage caused by flooding in most parts of the country relies on manual approaches. Rotairo et al. [6] stated that many developing Asian and Pacific economies rely on administrative reporting systems to gather data on agricultural production and land use, primarily due to limited financial resources for conducting comprehensive agricultural surveys or censuses. However, a crucial need exists to bolster statistical capacity and enhance skills in these regions before large-scale censuses can be implemented. The main challenge lies in a shortage of technical personnel with the requisite skills to generate precise agricultural statistics. In the administrative reporting system, data collection starts at the grassroots level, such as villages and municipalities, with local agriculture personnel observing harvests and interviewing key individuals. This approach is cost-effective and offers more timely estimates than censuses, but it can result in measurement errors, subjectivity, and data inconsistencies. Reports from local administrative offices sometimes contain planned or adjusted figures, leading to inaccuracies and flawed decision-making by local officials [6]. The national disaster database in the Philippines, containing information on agricultural losses, is not always available. Out of the 526 typhoon events that made landfall or passed within 500 km of the Philippines from 1970 to 2018, only 60 have accessible reports on rice damage [3].

With the advancement of technology and increased accessibility to satellite information, the estimation of crop damage can be expedited and enhanced. Numerous studies have already utilized satellite imagery, UAVs, and computing technology for agricultural monitoring. These encompass both UAV-borne and space-borne optical and SAR remote sensing products, in conjunction with GIS. Examples include the application of remote sensing and Geographic Information Systems (GIS) [7], the utilization of UAVs [8,9], the use of Synthetic Aperture Radar (SAR) [10,11,12,13,14,15], and optical satellite data [16]. Additionally, satellites have been employed to assess the impact of floods on crops [15,17,18,19]. Several studies have established a robust correlation between UAV and satellite imagery. For instance, Li et al. [20] compared S2 imagery with imagery from a UAV equipped with a multispectral camera for crop monitoring. Furthermore, other studies, such as those by Bollas et al. and Mangewa et al. [21,22], compared NDVI values measured by S2 with those obtained by UAVs equipped with a multispectral camera. These studies align with the first objective of this study and underscore the importance of ascertaining this correlation within the context of the Philippines. Moreover, remote sensing, as emphasized by Hendrawan et al. [23], facilitates the identification of spatiotemporal characteristics related to vegetation conditions, using vegetation indices for agricultural monitoring. It is commonly employed to assess vegetation growth and health. Thus, in this study, the NDVI was used as the index to monitor crop growth, as the reported damages were specific to the growth stages of the crops at the time they were damaged. Moreover, the S2 NDVI is the most accurate vegetation index for estimating rice growth stages [24].

While previous studies have explored various approaches to utilizing technology for agricultural monitoring, this study aims to introduce a different approach that emphasizes the fast and reliable estimation of crop damages caused by floods. This is achieved through the utilization of open-source satellite data acquired via scripts customized by a government-funded project (2015 to 2022) known as the Community Level—SARAI-Enhanced Agricultural Monitoring System (CL-SEAMS) of Project SARAI (Smarter Approaches to Reinvigorate Agriculture as an Industry in the Philippines). The satellite data acquisition is conducted via Google Earth Engine (GEE) and processed through open-source GIS [25,26,27,28]. This novel approach, presented in the study framework, expedites crop damage estimation by eliminating the need for users to download and manage large datasets locally, a task that traditionally consumes significant time and effort. The use of cloud-based computing enables users to perform complex analyses without the requirement for powerful local hardware. Additionally, the reliability of the estimation is enhanced, as cloud cover can be easily double-checked, mitigating a common source of inaccurate estimation when not properly monitored. By capitalizing on the availability and accessibility of such data, this research seeks to contribute to the advancement of agricultural monitoring practices by minimizing, if not eliminating, the manual assessment of crop damages that is still prevalent in most parts of the Philippines as well as in other developing countries. This may aid in achieving the so-called Agriculture 4.0, which consists of upgrading conventional farming techniques to create a more sustainable and efficient agricultural system [29].

Given the recurring issue of floods and the local community’s dependence on rice farming, this study aims to develop a method that can enhance and expedite the estimation of rice crop damage. To achieve this purpose, the following objectives have been established: (1) to ascertain the correlation between Normalized Difference Vegetation Index (NDVI) measurements obtained from UAVs and those derived from the Sentinel-2 satellite (S2). If a strong correlation is found between satellite-derived NDVI and UAV NDVI measurements using a multispectral camera, the subsequent objectives are as follows: (2) to estimate rice crop damage using satellite-derived information, and (3) to evaluate the accuracy of the estimated damage value by comparing it to the manually recorded damage data obtained by the local officials.

This paper explores the correlation between NDVI measurements obtained from UAVs equipped with a multispectral camera and those derived from the S2 satellite. While UAVs mounted with multispectral cameras offer detailed results for small areas, their high cost and time-consuming nature pose limitations when dealing with large areas. Therefore, the development of a more cost-effective and time-efficient alternative for agricultural monitoring is investigated.

The Philippines, a predominantly agricultural country, faces significant challenges due to its susceptibility to frequent tropical cyclones, particularly in regions crucial for agriculture. These natural disasters often result in devastating flooding, causing substantial damage to crops and disrupting the livelihoods of farmers. Advancements in technology, particularly satellite imagery, UAVs, and GIS platforms, offer promising ways to mitigate such losses by expediting and enhancing crop damage estimation for faster and more efficient assessments. Leveraging these tools aims to revolutionize agricultural monitoring in the Philippines, contributing to more sustainable and efficient farming practices, and ultimately advancing towards Agriculture 4.0.

2. Materials and Methods

2.1. Study Sites

Candaba, a municipality in the province of Pampanga within the Central Luzon region, is predominantly an agricultural area, with 31 out of 33 barangays engaged in rice cultivation [30]. The region is susceptible to recurrent flooding, particularly during the rainy seasons, owing to its classification as an alluvial plain, with a portion of it transformed into a swamp. These floods primarily result from the overflow of the Pampanga River, causing water to accumulate and impede efficient drainage, leading to severe floods [31]. In an interview, the Candaba Municipal Agricultural Officer stated that agricultural damage reaching 20% and above should first be reported to the local officials of the Disaster Risk Reduction and Management Office (DRRMO). This report then undergoes further protocols with higher officials before a state of calamity can be declared. The agricultural area of Candaba was reported to be approximately 17,000 hectares in 2015 and increased to about 19,500 hectares in 2021 [30,32]. Unfortunately, the current practice in Candaba, Pampanga, relies on manual damage assessments, which often take a significant amount of time to complete and report due to the risks associated with accessing flooded areas. This issue was also emphasized in a study by Rahman and Di (2020), which highlighted that past crop loss assessments were generalized and time-intensive due to their reliance on survey-based data collection. According to them, the availability of remote sensing data plays a key role in enabling rapid flood loss assessment [6,17]. Remote sensing, as defined by the US Geological Survey (USGS), is the process of detecting and monitoring the physical characteristics of an area by measuring its reflected and emitted radiation from a distance [33]. The utilization of Unmanned Aerial Vehicles (UAVs) and satellites serves as an excellent example of remote sensing, enabling the acquisition of information without direct physical contact with the object.

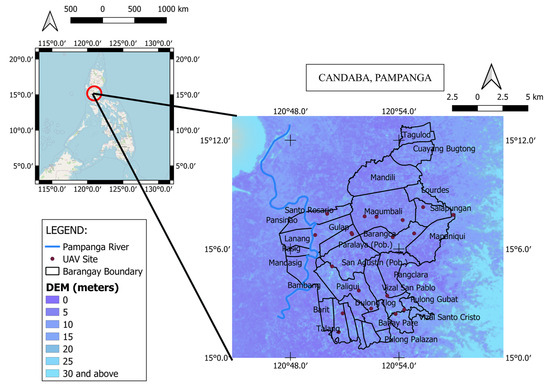

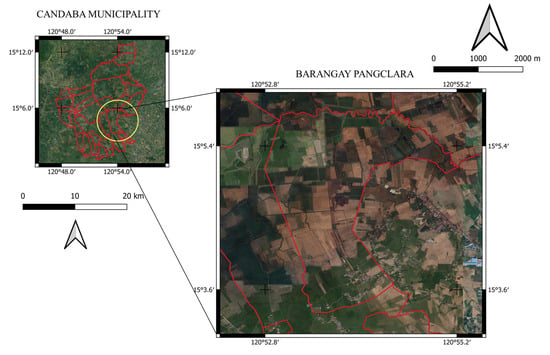

Table 1 provides an overview of the flooding events and their respective dates in Pampanga, which are the focus of analysis in this study. To achieve the first objective, NDVI measurements were conducted using multispectral camera-equipped UAVs in both the Philippines and Japan. In the Philippines, 16 sites within Candaba, Pampanga (as shown in Figure 1), were selected. These 16 sites represent agricultural barangays that are (1) occasionally inundated by serious flood events, such as barangays Vizal Sto. Niño, Magumbali, Barangca, Salapungan, and Mapaniqui; (2) sometimes inundated by flood events, such as barangays Vizal San Pablo, Talang, and Pangclara; and (3) usually inundated barangays, such as Santo Rosario, Gulap, Paralaya, San Agustin, and Paligui.

Table 1.

Six flood events and their date of occurrence in Candaba, Pampanga.

Figure 1.

Location of the 16 sites for UAV experiment in the Philippines.

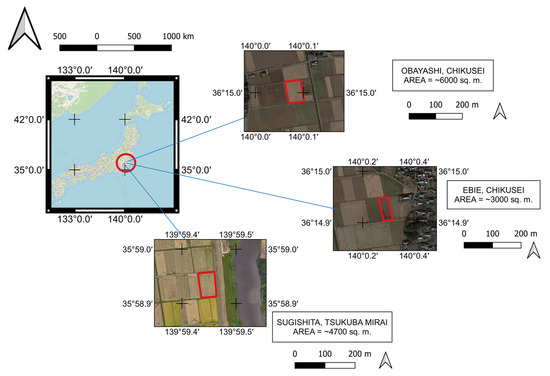

Due to initial constraints posed by COVID-19, UAV experiments were carried out in Ibaraki, Japan, where three specific study sites were chosen: Obayashi and Ebie in the city of Chikusei, and Sugishita in the town of Tsukuba Mirai (refer to Figure 2). These sites were selected due to their proximity to Tsukuba in Ibaraki prefecture, where the International Centre for Water Hazard and Risk Management (ICHARM) research laboratory is located. The UAV experiment in Ibaraki aimed to observe NDVI values throughout the entire cropping season, from planting to harvesting, as it aligns with the typical rice planting cycle in Japan. The second and third objectives focused on rice crop damage estimation, with specific attention to Candaba, Pampanga.

Figure 2.

Location of the three farm lots used as study sites in Japan.

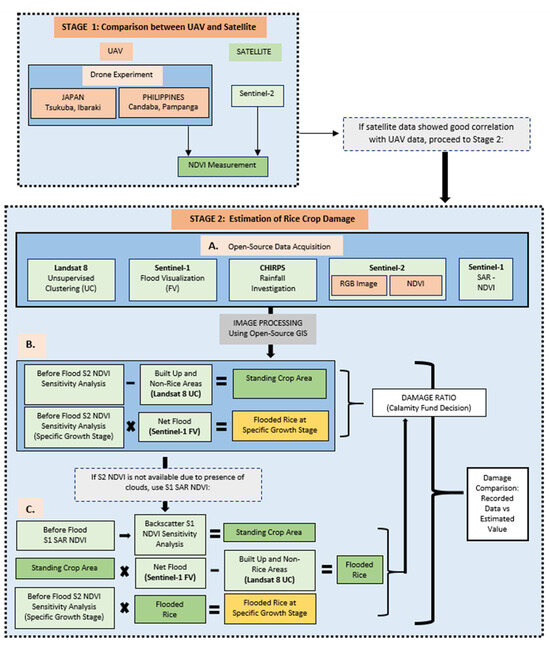

2.2. Study Framework

This study comprises two stages, as illustrated in Figure 3: (1) a comparison between UAV and satellite data, and (2) the estimation of rice crop damage. The reliability of the NDVI readings from the drone with a multispectral camera and the S2 satellite is assessed by examining their correlation. The drone captures the NDVI measurements, while the satellite-derived NDVI is used for comparison. If a strong correlation is observed between the satellite and UAV data, the study progresses to stage 2, wherein satellite data are utilized to cover a larger area and obtain information about past flooding events. In the second stage, various open-source satellite data (refer to Table 2) are acquired and processed using QGIS software 3.16.9 to estimate the damage inflicted on the rice crop by flooding. The damage data obtained from the Municipal Agriculture Office of Candaba, Pampanga, are then compared to the estimated damages derived from this study. Additionally, the area of the standing crop, which is the total above-ground plant biomass before the flooding event, is estimated and compared to the recorded data. The ratio between the damaged rice and the standing crop determines the damage ratio that will be used for the calamity fund decision. Further details on the aspects and framework of this study are explained in Section 2.4.
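The damage ratio described above is a simple quotient, and a minimal sketch may help make the decision step concrete. The function names and the use of the 20% reporting figure from Section 2.1 as a threshold constant are illustrative assumptions, not part of the study's scripts.

```python
def damage_ratio(damaged_ha: float, standing_ha: float) -> float:
    """Fraction of the standing rice crop area that was damaged."""
    if standing_ha <= 0:
        raise ValueError("standing crop area must be positive")
    return damaged_ha / standing_ha


# Assumed threshold: the 20% reporting figure quoted in Section 2.1.
REPORT_THRESHOLD = 0.20


def should_report(damaged_ha: float, standing_ha: float) -> bool:
    """True when the damage ratio reaches the assumed reporting threshold."""
    return damage_ratio(damaged_ha, standing_ha) >= REPORT_THRESHOLD
```

For example, 50 ha damaged out of 200 ha of standing crop gives a ratio of 0.25, which would meet the assumed threshold.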

Figure 3.

Study framework showing two stages, 1 and 2, with stage 2 comprising three sub-stages: (A)—open-source data acquisition; (B)—estimation using S2 data; (C)—estimation using fusion of S2 and S1 data.

Table 2.

Satellite data acquired for image processing.

2.3. Materials

A UAV equipped with a multispectral camera, the DJI P4 Multispectral, as depicted in Figure 4, was utilized for this study. It is a high-precision UAV designed for environmental monitoring, agricultural missions, and precise plant-level data collection [34]. In Japan, the UAV’s flight altitude was set to 40 m, resulting in a resolution of 2 cm. In the Philippines, the flight altitude was raised to 120 m, giving a resolution of 6 cm, because the average observation area was larger: about 45 ha, compared to only 0.5 ha in Japan. The camera carries six sensors: one RGB sensor and five monochrome sensors for multispectral imaging.

Figure 4.

P4 Multispectral camera-mounted UAV used in the study.

For the satellite-derived NDVI, S2 was employed. S2 is a wide-swath, high-resolution, multispectral imaging mission that supports Copernicus Land Monitoring studies. It enables the monitoring of vegetation, soil, and water cover, as well as the observation of inland waterways and coastal areas. The S2 data consist of 13 UINT16 spectral bands, which represent TOA reflectance scaled by 10,000. In addition, three QA bands are included, one of which (QA60) is a bitmask band containing cloud mask information [35,36,37].
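As an illustration of how a bitmask band such as QA60 can be read, the sketch below decodes cloud flags from an array of QA60 values. It assumes the commonly documented layout in which bit 10 flags opaque clouds and bit 11 flags cirrus; the function names are hypothetical, and this study checked cloud cover using RGB composites rather than this procedure.

```python
import numpy as np

CLOUD_BIT = 10   # assumed: opaque clouds
CIRRUS_BIT = 11  # assumed: cirrus clouds


def qa60_clear_mask(qa60):
    """Return a boolean array that is True where neither cloud flag is set."""
    qa60 = np.asarray(qa60, dtype=np.uint16)
    cloudy = (qa60 & (1 << CLOUD_BIT)) != 0
    cirrus = (qa60 & (1 << CIRRUS_BIT)) != 0
    return ~(cloudy | cirrus)


def cloud_fraction(qa60):
    """Share of pixels flagged as opaque cloud or cirrus."""
    return float(1.0 - qa60_clear_mask(qa60).mean())
```

A scene whose cloud fraction is high could then be set aside in favor of an earlier or later acquisition, or of SAR data.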

The radiation spectrum employed for NDVI determination included the Near Infrared (NIR) and Red bands. For the DJI P4 Multispectral, the NIR wavelength is 840 nm ± 26 nm, and the Red wavelength is 650 nm ± 16 nm [34], while for S2, the NIR wavelength is 842 nm, and the Red wavelength is 665 nm [35]. Therefore, NDVI measurements derived from the UAV and the satellite used closely matching wavelengths, supporting their comparability. See Table 3 for the comparison between the UAV and the S2 satellite during the course of the research.

Table 3.

Comparison between multispectral camera-equipped UAV and Sentinel-2 Satellite during the conduct of the experiment.

The data on rice crop damage were obtained from the Municipal Agriculture Office of Candaba, Pampanga, which contained records of the six most recent flooding events due to typhoon since 2020. These records were the only available data currently preserved by the office. The data include information such as the affected barangays, the number of farmers impacted, the area of standing crops prior to the flood event, the specific stage of crop development when the damage occurred, the extent of damage (total and partial), and the associated cost of the damage. Additionally, data on the size of farm areas (in hectares) planted on specific dates were also obtained.

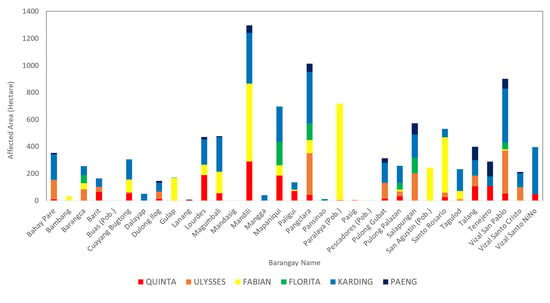

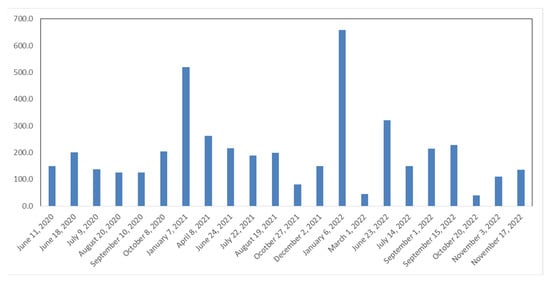

The damage data obtained from the Municipal Agriculture Office were summarized by creating graphs that show the extent of the rice crop damages in terms of affected area (hectares) per barangay (refer to Figure 5). Barangay is the smallest political unit in the Philippines. With this, Barangay Pangclara was selected for the investigation of rice crop damage estimation since it was one of only two barangays affected by all six flooding events, with the other barangay being Vizal San Pablo. In terms of the total affected area, Barangay Pangclara ranks higher than Barangay Vizal San Pablo. A map of Barangay Pangclara is provided in Figure 6. Additionally, the size of farm areas planted with rice (hectares) on specific dates ranging from June 2020 to November 2022, with a particular focus on Barangay Pangclara, was summarized (as shown in Figure 7).

Figure 5.

Rice crop damages in terms of affected area (in hectares) in barangays of Candaba, Pampanga.

Figure 6.

Map of Barangay Pangclara.

Figure 7.

Size of farm areas (in hectares) planted with rice on specific dates in Brgy. Pangclara.

Table 2 presents a summary of the satellite data utilized in this study. We employed unsupervised clustering (UC) of the Landsat-8 dataset to remove non-rice crops and built-up areas [38]. Flood visualization (FV) was conducted using Sentinel-1 (S1) to illustrate the before and after effects of the typhoon. S1 provides data from a dual-polarization C-band SAR instrument at 5.405 GHz [19,39,40]. To investigate rainfall, we utilized Climate Hazards Group InfraRed Precipitation with Station data (CHIRPS). CHIRPS is a quasi-global rainfall dataset that spans over 30 years and incorporates 0.05° resolution satellite imagery with in situ station data to create gridded rainfall time series for trend analysis and seasonal drought monitoring [41,42]. The details regarding S2, which was used for the satellite-derived NDVI, are given above. The S2 RGB image was utilized to create a true-color composite image to identify the presence of clouds [35,36]. If the S2 NDVI proved to be unreliable due to cloud cover, we resorted to using the S1 NDVI instead [39].

2.4. Methods

In this study, we first verify the correlation of NDVI readings between UAV and S2 by conducting UAV experiments in Japan and the Philippines. After establishing that UAV and S2 have a good correlation, it becomes justifiable to use satellite data to estimate rice crop damage caused by flooding in Pampanga, Philippines, in order to cover a larger area. This is necessary as UAVs have limitations in their ability to cover large areas in a short time. Additionally, satellite data can verify past flooding events, which further assists in assessing the effectiveness of this study.

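The S2 NDVI is determined using Equation (1) [43]:

$$\mathrm{NDVI} = \frac{\mathrm{NIR} - \mathrm{Red}}{\mathrm{NIR} + \mathrm{Red}} \tag{1}$$

where NIR and Red are the reflectances in the near-infrared and red bands, respectively.

The S1 SAR NDVI is determined using Equation (2) [28]:

$$\mathrm{NDVI} = \frac{\mathrm{VH}/\mathrm{VV} + 1}{\mathrm{VH}/\mathrm{VV} - 1} \tag{2}$$

where:

VH is the backscatter coefficient of the SAR image for vertical transmit, horizontal receive polarization.

VV is the backscatter coefficient of the SAR image for vertical transmit, vertical receive polarization.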
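The optical NDVI, the normalized difference of NIR and Red reflectance, can be sketched on arrays as follows. This is a minimal illustration rather than the GEE script used in the study; the function name is hypothetical, and pixels whose NIR and Red values sum to zero are returned as NaN by assumption.

```python
import numpy as np


def ndvi(nir, red):
    """Normalized difference of NIR and Red: (NIR - Red) / (NIR + Red)."""
    nir = np.asarray(nir, dtype=np.float64)
    red = np.asarray(red, dtype=np.float64)
    denom = nir + red
    out = np.full(denom.shape, np.nan)
    # Divide only where the denominator is non-zero; other pixels stay NaN.
    np.divide(nir - red, denom, out=out, where=denom != 0)
    return out
```

Because the index is a ratio, the S2 scale factor of 10,000 cancels out, so scaled integer values and reflectances yield the same NDVI.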

2.4.1. STAGE 1: Comparison between UAV and Satellite

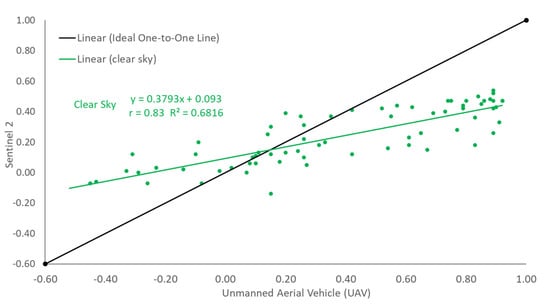

In the Philippines, UAV observations were conducted once within Candaba, Pampanga, covering 16 sites from 16 to 20 January 2023, during our field visit. S2 NDVI values were obtained for comparison corresponding to the dates or near the dates of observation. RGB images of S2 were used to verify cloud cover. Random point selections were used for data extraction for both UAV and S2 NDVI values. Point data analysis was employed to address the issue of resolution consistency in examining the correlation between UAV and satellite. Only observations with clear skies were considered, and a graph of UAV vs. S2 was created. A linear trend line was generated from the scatterplot to determine the correlation between the NDVI from the UAV and the satellite-derived NDVI.

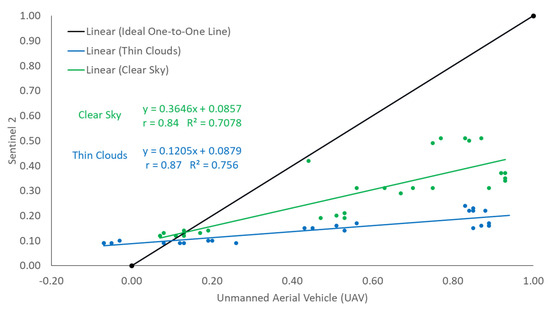

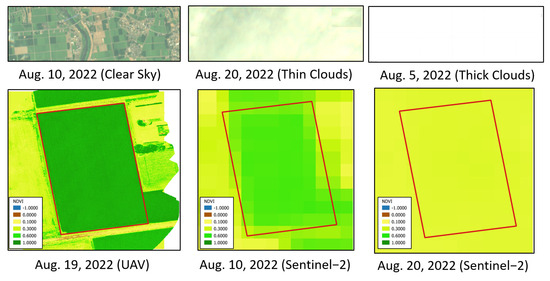

In Japan, drone observations were conducted to measure the NDVI for one rice cropping season (June to November 2022) across three farm lots. This served as the preliminary study before the experiment in the Philippines. The dates of observations were recorded and the corresponding S2 NDVI values were obtained for comparison. Additionally, RGB images of S2 were used to verify cloud cover. Data extraction for both UAV and S2 NDVI values was based on five random points, located near the center and around the four corners. Point data analysis was employed to address the issue of resolution consistency in examining the correlation between UAV and satellite. Observations with total cloud cover were excluded while those with a thin layer of clouds were separated from clear sky observations. A graph of UAV vs. S2 was created for the three sites, with clear sky observations plotted separately from those with thin clouds. A linear trend line was generated from the scatterplot to determine the correlation between the NDVI from the UAV and the satellite-derived NDVI.
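The point-based comparison described in this stage amounts to fitting a linear trend line and computing a correlation coefficient from paired NDVI samples. The sketch below assumes the UAV NDVI is placed on the horizontal axis and the S2 NDVI on the vertical axis, and that the correlation coefficient is Pearson's r; the function name and the sample values are hypothetical.

```python
import numpy as np


def compare_ndvi(uav, s2):
    """Fit s2 = slope * uav + intercept and return (slope, intercept, r)."""
    uav = np.asarray(uav, dtype=np.float64)
    s2 = np.asarray(s2, dtype=np.float64)
    slope, intercept = np.polyfit(uav, s2, 1)   # least-squares line
    r = np.corrcoef(uav, s2)[0, 1]              # Pearson correlation
    return float(slope), float(intercept), float(r)


# Hypothetical paired samples extracted at the same random points.
uav_points = [0.1, 0.3, 0.5, 0.7]
s2_points = [0.24, 0.32, 0.40, 0.48]
slope, intercept, r = compare_ndvi(uav_points, s2_points)
```

A slope below 1.0 combined with a positive intercept would be consistent with satellite readings exceeding UAV readings at low NDVI values.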

2.4.2. STAGE 2A: Open-Source Satellite Data Acquisition for the Estimation of Rice Crop Damage

The unsupervised clustering of Landsat-8, flood visualization using S1, rainfall investigation using CHIRPS, RGB images and NDVI measurements of S2, and SAR NDVI of S1 were all performed in Google Earth Engine (GEE), a planetary-scale platform for Earth science data and analysis that combines a multi-petabyte catalog of satellite imagery and geospatial datasets [28]. Most of the scripts used in GEE were obtained from CL-SEAMS under the Project SARAI [25,26,27], while some were modified and developed by the authors.

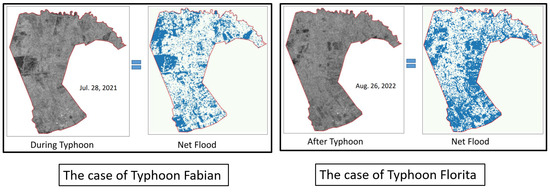

The main focus of the study was to estimate the damages to rice crop areas caused by flooding events brought about by typhoons. To identify non-rice and built-up areas, unsupervised clustering of Landsat 8 time-series data was performed, and these areas were removed during image processing. Flood visualization was conducted using S1 to compare the flood scenario images before and after the flooding event. The difference in the presence of inundated water before and after the flooding event scenarios represented the net flood, which was used in image processing to determine the flooded rice. In some cases where the net flood was immediately equated with the after-typhoon flood scenario due to the lack of significant differences between the scenarios before and after the flood event, CHIRPS rainfall data were utilized to investigate the amount of rainfall received in the area prior to the flood event.
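The net flood step can be sketched with two binary water masks. This illustration assumes that the net flood consists of pixels that are water after the event but were not water before it; the function name is hypothetical, and the masks would in practice come from thresholded S1 imagery.

```python
import numpy as np


def net_flood(water_before, water_after):
    """Pixels inundated after the event that were not water beforehand."""
    before = np.asarray(water_before, dtype=bool)
    after = np.asarray(water_after, dtype=bool)
    return after & ~before
```

When the two scenarios barely differ, this mask is nearly empty, which is the situation in which the text above falls back on the after-typhoon scenario and checks rainfall with CHIRPS.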

Although the S2 NDVI is the most accurate vegetation index for estimating rice growth stages [24], it relies on optic sensors, making it highly susceptible to measurement bias and errors caused by cloud cover [36]. As such, RGB images from S2 were checked for all six flooding events, including dates before and after each event, to verify the presence of clouds. Only images with little to no cloud cover were selected, and corresponding S2 NDVI images were obtained. In cases where a reliable S2 NDVI could not be acquired due to thick clouds, NDVIs from the S1 SAR were obtained instead, following a similar approach to that used by Dobrinic et al. in their study [44]. Furthermore, a study conducted by Jiao et al. [45] demonstrated a high correlation coefficient of 0.94 between the S2 NDVI and the S1 NDVI, thereby justifying this course of action.

2.4.3. STAGE 2B-2C: Image Processing for the Estimation of Rice Crop Damage

For the estimation of standing rice crop, a sensitivity analysis of the NDVI was conducted on the S2 NDVI image obtained for the period preceding the typhoon event. For simplicity, the NDVI value was varied in increments of 0.1 until the resulting area was close to the recorded value. From this identified standing rice crop, built-up and non-rice areas were deducted to obtain the estimated area of standing rice crop. In cases where reliable S2 NDVI images could not be obtained due to thick clouds in the period shortly before the typhoon event, S1 SAR NDVIs were obtained as an alternative. A sensitivity analysis of the backscatter value, which represents the strength of the reflected electromagnetic energy in a radar system, was performed with an increment of 1.0, using the nearest available S2 NDVI and RGB images as the basis for the density of vegetation. The result then served as the estimated area of standing crop.
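One way to picture the sensitivity analysis is as a search over NDVI thresholds in steps of 0.1, keeping the threshold whose resulting area is closest to the recorded standing crop area. The sketch below is an interpretation under stated assumptions: a pixel counts as standing crop when its NDVI is at or above the threshold, each pixel covers 0.01 ha (a 10 m S2 pixel), and the function and parameter names are hypothetical.

```python
import numpy as np


def best_ndvi_threshold(ndvi, non_rice, recorded_ha, pixel_ha=0.01, step=0.1):
    """Scan thresholds 0.0, 0.1, ..., 1.0 and return (threshold, area_ha)
    for the threshold whose rice area is closest to recorded_ha."""
    ndvi = np.asarray(ndvi, dtype=np.float64)
    rice = ~np.asarray(non_rice, dtype=bool)   # drop built-up and non-rice pixels
    best = None
    for k in range(int(round(1.0 / step)) + 1):
        t = round(k * step, 10)
        area = np.count_nonzero((ndvi >= t) & rice) * pixel_ha
        err = abs(area - recorded_ha)
        if best is None or err < best[2]:
            best = (t, area, err)
    return best[0], best[1]
```

The same loop could be applied to SAR backscatter values with a step of 1.0 when cloud-free S2 imagery is unavailable.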

To estimate crop damage at a specific growth stage, we conducted an NDVI sensitivity analysis on the S2 NDVI image obtained prior to the typhoon event. The analysis involved incrementing the NDVI values by 0.1 and comparing the results to the recorded values provided in the damage report, following the guidance of previous studies by Gonzalez-Betancourt et al. and Hasniati et al. [24,46], which emphasized the relation of the NDVI to rice growth stages. We multiplied the resulting image by the net flood, as determined in Section 2.4.2, to obtain an image showing the estimated flooded rice damaged during that specific growth stage. In cases where reliable S2 NDVI images were unavailable due to thick clouds before the typhoon event, we used the S1 SAR NDVI as an alternative. A sensitivity analysis of the backscatter value was performed with an increment of 1.0, using the nearest available S2 NDVI and RGB images to determine vegetation density. This estimation represented the area of standing crop, which was then multiplied by the net flood to obtain the flooded rice less the non-rice and built-up areas. This flooded rice was then multiplied by the available S2 NDVI that had undergone sensitivity analysis for a particular growth stage, resulting in an image of the estimated flooded rice that was damaged at that stage.
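The multiplication of images described above is equivalent to intersecting binary masks. A minimal sketch, assuming a growth stage is represented by an NDVI range (lower bound inclusive, upper bound exclusive) and that each pixel covers 0.01 ha; all names are hypothetical:

```python
import numpy as np


def damaged_area_ha(ndvi, ndvi_min, ndvi_max, net_flood, non_rice, pixel_ha=0.01):
    """Area of flooded rice whose pre-event NDVI falls in [ndvi_min, ndvi_max)."""
    ndvi = np.asarray(ndvi, dtype=np.float64)
    stage = (ndvi >= ndvi_min) & (ndvi < ndvi_max)   # growth-stage mask
    flooded = np.asarray(net_flood, dtype=bool)
    rice = ~np.asarray(non_rice, dtype=bool)
    damaged = stage & flooded & rice                 # product of the binary images
    return float(np.count_nonzero(damaged) * pixel_ha)
```

The NDVI range for a given growth stage would come from the sensitivity analysis, not from fixed constants.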

The accuracy of the estimation method was assessed using the relative percent difference, which was calculated using Equations (3) and (4):

$$\text{Accuracy} = (1 - \text{Relative Error}) \times 100\% \tag{4}$$
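The accuracy measure can be sketched as follows. The relative error is assumed here to be the absolute difference between the estimated and recorded values divided by the recorded value; this definition is an assumption for illustration, and the function names are hypothetical.

```python
def relative_error(estimated: float, recorded: float) -> float:
    """Assumed definition: |estimated - recorded| / |recorded|."""
    if recorded == 0:
        raise ValueError("recorded value must be non-zero")
    return abs(estimated - recorded) / abs(recorded)


def accuracy_percent(estimated: float, recorded: float) -> float:
    """Accuracy = (1 - relative error) x 100%."""
    return (1.0 - relative_error(estimated, recorded)) * 100.0
```

Under this assumed definition, an estimate of 99 ha against a recorded 100 ha gives an accuracy of about 99%.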

At this stage of the study’s framework, machine learning methods could in principle be leveraged to develop algorithms and statistical models that enable computer systems to learn from data, recognize patterns, and make predictions. Such an approach would align with previous works, such as the studies by Vamsi et al. [47], who focused on estimating crop damage through leaf disease detection, and Filho et al. [48], who utilized machine learning models for rice crop detection from S1 data. However, the use of machine learning methods was hindered by the limited amount of available data, which was insufficient for training a model capable of minimizing errors and optimizing its predictive accuracy. Consequently, sensitivity analysis was employed at this stage. Furthermore, it is worth noting that the recorded damage data may contain uncertainties stemming from the inaccessibility of the flooded areas.

3. Results

3.1. STAGE 1: Comparison between UAV and Satellite

Figure 8 illustrates the comparison between UAV and S2 for Obayashi, Ibaraki, Japan. The graphs for Ebie and Sugishita are not presented as their results were nearly identical to those for Obayashi. As depicted in the figure, the NDVI measurements obtained under a thin layer of clouds deviated more from the ideal slope of 1.0, exhibiting a slope of 0.12 for Obayashi. For Ebie, the slope was 0.13, while Sugishita was not considered due to the limited number of data points. Conversely, NDVI measurements captured under clear skies were closer to the ideal slope, with slopes of 0.36, 0.35, and 0.48 for Obayashi, Ebie, and Sugishita, respectively. These findings suggest that measurements taken under clear skies yield more accurate results than those acquired under cloud cover. Across both conditions (clear skies and thin clouds), a strong correlation was observed between UAV and S2 NDVI readings in Obayashi and Ebie, with correlation coefficients of 0.87 and 0.80, respectively. NDVI measurements obtained under clear skies exhibited a strong correlation across all sites, with correlation coefficients of 0.84, 0.74, and 0.84 for Obayashi, Ebie, and Sugishita, respectively. It is worth noting that in nearly all cases, the S2 readings were higher than the UAV readings for NDVI values below 0.1.

Figure 8.

Graph of UAV versus Sentinel−2 NDVI measurements for Obayashi, Ibaraki, Japan.

Figure 9 displays the comparison of UAV versus S2 for the 16 sites within Candaba, Pampanga, under clear-sky conditions. The slope of the graph was 0.38, indicating a deviation from the ideal slope of 1.0. S2 NDVI readings exhibited a high correlation with UAV measurements, with a correlation coefficient of 0.83. Notably, S2 readings were consistently higher than UAV measurements for NDVI values below 0.1.

Figure 9.

Graph of UAV versus Sentinel-2 NDVI measurements for Candaba, Pampanga.
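The slope and correlation coefficient reported in this section can be obtained from paired NDVI readings with an ordinary least-squares fit. The following is a minimal sketch, assuming the UAV NDVI is the independent variable; the function name `fit_ndvi_relation` and the sample readings are hypothetical and are not data from this study.

```python
import numpy as np

def fit_ndvi_relation(uav_ndvi, s2_ndvi):
    """Fit s2 = slope * uav + intercept and return (slope, intercept, r)."""
    uav = np.asarray(uav_ndvi, dtype=float)
    s2 = np.asarray(s2_ndvi, dtype=float)
    slope, intercept = np.polyfit(uav, s2, 1)  # least-squares straight line
    r = np.corrcoef(uav, s2)[0, 1]             # Pearson correlation coefficient
    return slope, intercept, r

# Hypothetical paired readings for illustration only (not data from the study).
uav = [0.05, 0.20, 0.40, 0.60, 0.80]
s2 = [0.22, 0.28, 0.36, 0.44, 0.52]
slope, intercept, r = fit_ndvi_relation(uav, s2)
print(f"slope={slope:.2f}, intercept={intercept:.2f}, r={r:.2f}")
```

A fitted line with a slope below 1.0 and a positive intercept lies above the one-to-one line at low NDVI and below it at high NDVI, which is one way a dataset can show S2 readings above the UAV readings only at the low end of the range.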

3.2. STAGE 2: Estimation of Rice Crop Damage

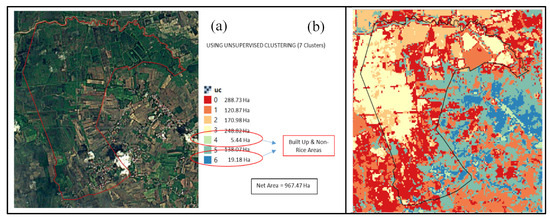

Figure 10b displays the unsupervised clusters for Barangay Pangclara from the Landsat-8 dataset (2013–2017), with seven clusters as the default script setting in the GEE. This is a machine learning method that involves averaging the time-series NDVI values to group them into seven clusters to facilitate the identification of possible non-rice and built-up areas. With the help of the actual RGB image as a reference (see Figure 10a) and the data on standing rice crop area in Table 2, clusters 4 and 6 were identified as built-up and non-rice areas.

Figure 10.

Unsupervised clustering method in Barangay Pangclara, Candaba, Pampanga, showing (a) actual RGB image; and (b) unsupervised clusters.
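The clustering step can be pictured as grouping per-pixel mean NDVI values into seven classes. The sketch below is an assumption-laden illustration: the text does not name the clustering algorithm used by the GEE script, so a plain one-dimensional k-means is used here, and the NDVI stack is randomly generated rather than taken from Landsat-8.

```python
import numpy as np

def nearest_center(x, centers):
    """Index of the closest center for every value in x."""
    return np.argmin(np.abs(x[:, None] - centers[None, :]), axis=1)

def kmeans_1d(values, k=7, n_iter=100):
    """Cluster 1-D values (e.g. per-pixel mean NDVI) into k groups."""
    x = np.asarray(values, dtype=float)
    # Deterministic start: spread the initial centers over the value quantiles.
    centers = np.quantile(x, np.linspace(0.0, 1.0, k))
    for _ in range(n_iter):
        labels = nearest_center(x, centers)
        updated = centers.copy()
        for j in range(k):
            members = x[labels == j]
            if members.size:  # keep the old center if a cluster is empty
                updated[j] = members.mean()
        if np.allclose(updated, centers):
            break
        centers = updated
    return nearest_center(x, centers), centers

# Hypothetical stack: 5 acquisition dates x 200 pixels of NDVI.
rng = np.random.default_rng(0)
ndvi_stack = rng.uniform(-0.1, 0.9, size=(5, 200))
mean_ndvi = ndvi_stack.mean(axis=0)  # average the time series per pixel
labels, centers = kmeans_1d(mean_ndvi, k=7)
```

Each cluster label would then be inspected against a reference RGB image to decide which clusters correspond to built-up or non-rice land.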

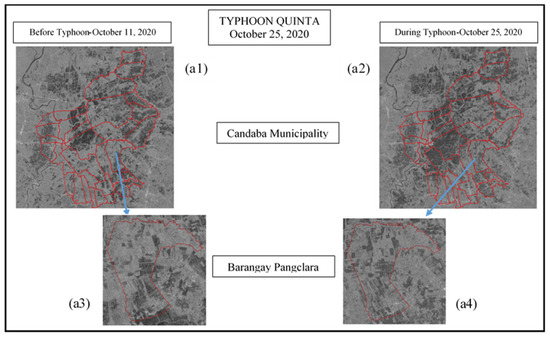

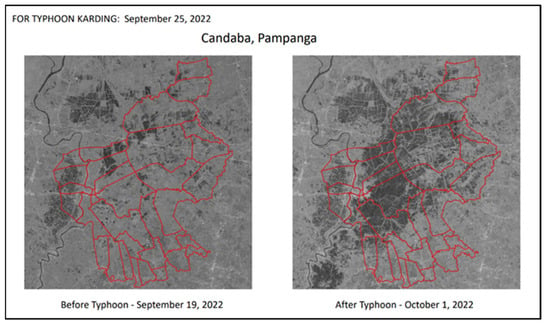

Figure 11(a1,a2) show flood visualizations of the entire Candaba municipality before and during Typhoon Quinta, respectively. Figure 11(a3,a4) show zoomed-in views of Barangay Pangclara for the same flooding event. Similar flood visualizations were produced for the remaining flooding events.

Figure 11.

Flood visualizations of Candaba Municipality and Barangay Pangclara for the Typhoon Quinta flood event, showing (a1) before the typhoon at Candaba; (a2) during the typhoon at Candaba; (a3) before the typhoon at Brgy. Pangclara; and (a4) during the typhoon at Brgy. Pangclara.

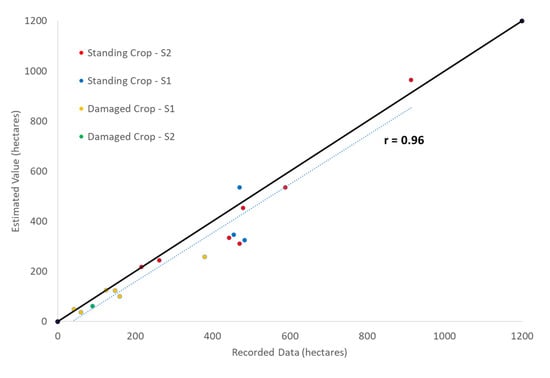

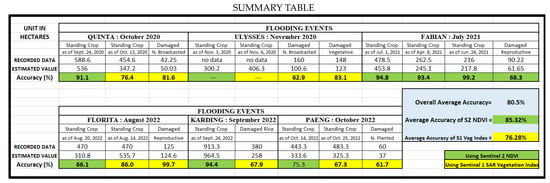

Image processing was conducted using the open-source Quantum GIS (QGIS) software, version 3.16.9, to estimate the standing crop area and the rice crop area damaged by floods. The recorded damage data and estimated damage values for the six flood events were compared, as depicted in Figure 12 and summarized in the table shown in Figure 13. The table also compares the S2 NDVI and S1 SAR NDVI results and reports the accuracy of the crop damage estimation.

Figure 12.

Comparison between the recorded damage data against estimated damage values.

Figure 13.

Summary table of comparison between recorded damage data and estimated damage value for the six flood events.

4. Discussion

4.1. STAGE 1: Comparison between UAV and Satellite

This study provides evidence of a strong linear correlation between UAV and S2 NDVI measurements, as demonstrated in Figure 8 and Figure 9, regardless of location (Japan or the Philippines). Similar findings have been reported in studies conducted in other countries, such as Germany, Greece, and Tanzania [20,21,22]. In the Japan UAV experiment, observations were collected for both clear sky and a thin layer of clouds, as the study period spanned one cropping season (June to November 2022). Conversely, due to the limited period of study (only five days, 16 to 20 January 2023) and the presence of thick clouds that can cause erroneous readings in S2 data [49,50], only clear sky observations were considered in the Philippines.

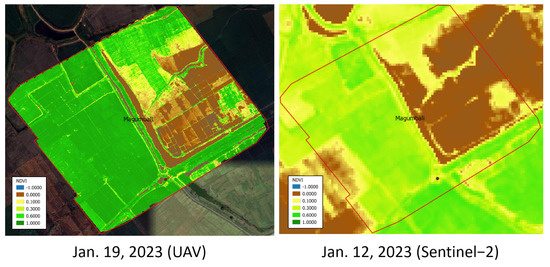

Figure 14 displays S2 RGB images of clear sky, thin cloud, and thick cloud observations at Obayashi on 10 August 2022, 20 August 2022, and 5 August 2022, respectively. Below them are three NDVI images: the NDVI from the UAV dated 19 August 2022, the NDVI from S2 dated 10 August 2022 (clear sky), and the NDVI from S2 dated 20 August 2022 (thin clouds). The NDVI image produced by the UAV is more detailed, with a 2 cm resolution, compared to S2's 10 m resolution. Hence, it was used as the independent variable in the linear regression. However, there were several instances where the date of the available S2 images did not coincide with the actual date of UAV observation. This is mainly due to the limited availability of S2 images, as their revisit time is fixed at 5 days. As seen in the figure, the clear sky observation closely resembled the UAV observation despite the two being nine days apart. In contrast, the thin cloud observation was only one day apart from the UAV observation date but did not closely resemble it. This implies that clear sky observations are more reliable than observations made in the presence of clouds, as also shown in Figure 8, where the clear sky trend line slope is closer to the one-to-one line. In both the Japan and Philippines NDVI readings, the majority of the S2 NDVI measurements were lower than the UAV NDVI measurements. However, for NDVI values below 0.1, the S2 NDVI measurements were higher than those of the UAV, as seen in Figure 8 and Figure 9. This finding requires further investigation and additional experiments to determine whether the behavior is consistent, as it may be related to the photosynthetic activities of the crop. It is worth noting that an NDVI value of 0.1 is typically the threshold between the presence of water and crops.

Meanwhile, Figure 15 illustrates a sample image from Barangay Magumbali, which shows the detailed NDVI image captured by the UAV in the Philippines, in comparison to the derived S2 NDVI data.

Figure 14.

S2 RGB images (top) to determine cloud presence and NDVI measurements from UAV and S2 (bottom).

Figure 15.

Drone-captured vs. satellite-derived NDVI at Barangay Magumbali, Candaba, Pampanga.

The use of multispectral cameras mounted on UAVs has been proven to be effective in providing highly detailed results for precision agriculture applications, particularly in monitoring rice paddies, as demonstrated in the studies of Dimyati et al. and Radoglou-Grammatikis et al. [8,9]. However, this technology is limited by its high cost and time-consuming nature, especially when large areas are involved. Therefore, the development of a more cost-effective and time-efficient alternative for agricultural monitoring would be highly beneficial. In the first stage of this study, we achieved a high linear correlation for clear sky observations (Japan and the Philippines), with correlation coefficients ranging from 0.74 to 0.84 and averaging 0.81. According to Calkins (2005), correlation coefficients between 0.7 and 0.9 indicate variables that can be considered highly correlated [51]. The corresponding regression slopes ranged from 0.35 to 0.48, with an average of 0.39, and thus deviated from the one-to-one slope of 1.0. Past studies have also shown good results in terms of the correlation of NDVIs derived from UAV and S2, such as correlation coefficients between 0.84 and 0.98 reported by Bollas and Kokinou [21] and a correlation coefficient of 0.97 reported by Mangewa et al. [22]. With such good results, the use of satellite data in stage 2 of this study is highly justified.
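The averages quoted above can be checked against the clear sky values reported in Section 3.1. The short sketch below assumes the averages pool the three Japanese sites with the Candaba result, which the text implies but does not state explicitly.

```python
from statistics import mean

# Clear-sky values reported in Section 3.1, in the order
# Obayashi, Ebie, Sugishita (Japan) and Candaba (Philippines).
correlations = [0.84, 0.74, 0.84, 0.83]
slopes = [0.36, 0.35, 0.48, 0.38]

mean_r = mean(correlations)
mean_slope = mean(slopes)
print(f"mean r = {mean_r:.4f}, mean slope = {mean_slope:.4f}")
```

Under that assumption the means work out to 0.8125 and 0.3925, consistent with the reported 0.81 and 0.39.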

4.2. STAGE 2: Estimation of Rice Crop Damage

The reported maximum area of rice cultivation in Barangay Pangclara from mid-2020 to the end of 2022 was 953.3 ha. This information served as the basis for identifying potential rice-growing areas within the barangay. To distinguish non-rice areas, a clear S2 RGB image was utilized, enabling the visual identification of built-up areas, as shown in Figure 10a. Using the unsupervised clustering method on the Landsat-8 dataset, seven clusters were obtained, as illustrated in Figure 10b. Among these, clusters 4 and 6 were found to represent the built-up and possible non-rice areas, with a combined total area of 24.62 ha. This left a net area of 967.47 ha for the remaining five clusters. The difference of only about 14 ha between this net area and the maximum recorded standing rice crop area is small, and thus the default setting of seven clusters was retained without adjustment.

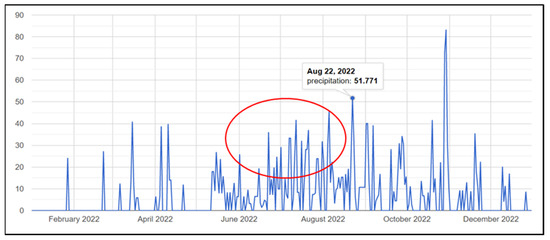

The ‘before’ and ‘after’ flood visualizations (Figure 16) were created using S1 C-band SAR Ground Range collection with VV co-polarization. The collection was filtered by date for both ‘before’ and ‘after’ images, and a mosaic of filtered images was created. The resolution was 10 m with a revisit time of 6 days [19,39,40]. The net flood, which represents the difference in the presence of inundated water before and after the flooding event, was used to determine the flooded rice areas. The usual method of determining the net flood was applied for four of the six flooding events. For the remaining two flooding events (Fabian and Florita), the net flood was immediately equated with the ‘after’ typhoon flood scenario due to the absence of significant differences between the ‘before’ and ‘after’ flood scenarios, as illustrated in Figure 17. These cases were investigated by analyzing the rainfall data within the barangay using CHIRPS rainfall data. The rationale behind such cases was continuous rainy days prior to the typhoon event, as depicted in Figure 18 for Typhoon Florita, where the encircled portion indicates continuous rainfall before the typhoon event.

Figure 16.

Flood visualization from S1 SAR under Typhoon Karding.

Figure 17.

Cases where the ‘after’ flood scenario from S1 SAR is equated with the net flood, where blue color shows inundated areas.

Figure 18.

CHIRPS 2022 rainfall data in Barangay Pangclara under Typhoon Florita showing continuous rainfall (in red circle) prior to typhoon event.
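The net flood described above can be expressed as a per-pixel comparison of 'before' and 'after' water masks. The sketch below is illustrative only: it assumes water is detected by thresholding VV backscatter in decibels, and the threshold value, function names, and sample arrays are hypothetical rather than taken from this study.

```python
import numpy as np

# Assumed backscatter threshold (dB) below which a pixel is treated as open water.
# Illustrative only; this section of the study does not report a threshold.
WATER_THRESHOLD_DB = -16.0

def water_mask(vv_db, threshold_db=WATER_THRESHOLD_DB):
    """Boolean mask of pixels whose VV backscatter falls below the threshold."""
    return np.asarray(vv_db, dtype=float) < threshold_db

def net_flood(before_vv_db, after_vv_db, threshold_db=WATER_THRESHOLD_DB):
    """Pixels that are water after the event but were not water before it."""
    before = water_mask(before_vv_db, threshold_db)
    after = water_mask(after_vv_db, threshold_db)
    return after & ~before

# Hypothetical 2 x 2 backscatter tiles in dB.
before = np.array([[-8.0, -20.0], [-9.0, -10.0]])
after = np.array([[-18.0, -21.0], [-9.5, -19.0]])
flooded = net_flood(before, after)
after_only = water_mask(after)
```

For events such as Fabian and Florita, where the 'before' and 'after' scenes show no significant difference, the `after_only` mask corresponds to equating the net flood with the 'after' scenario.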

The S2 NDVI image before the typhoon event was utilized to estimate the standing rice crop for all six flooding events. However, due to the nature of S2's optical sensors and the 5-day revisit time limitation, the number of days between the typhoon event and the S2 NDVI image used varied for each event. This variation resulted from selecting images with minimal or no cloud cover. Based on the sensitivity analysis, the range of NDVI values used to determine the standing crop differed with weather conditions. For clear skies (Quinta, Ulysses, and Fabian), the NDVI range was 0.4 to 1.0. For events with thin cloud cover (Florita, Paeng, and Karding), the NDVI ranges were 0.3 to 1.0, 0.2 to 1.0, and 0.1 to 1.0, respectively. These findings align with the results obtained in Stage 1 of this study, which demonstrated that the presence of clouds can influence NDVI values by affecting the absorption and reflection of the Near Infrared (NIR) and Red wavelengths of the radiation spectrum.
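The event-specific NDVI ranges translate directly into a pixel mask and an area estimate. The following is a minimal sketch, assuming inclusive bounds and 10 m S2 pixels (0.01 ha each); the function name and the sample array are hypothetical.

```python
import numpy as np

# Lower NDVI bounds per event, as reported in the text (upper bound is 1.0).
NDVI_LOWER_BOUND = {
    "Quinta": 0.4, "Ulysses": 0.4, "Fabian": 0.4,  # clear skies
    "Florita": 0.3, "Paeng": 0.2, "Karding": 0.1,  # thin cloud cover
}

def standing_crop_area_ha(ndvi, event, pixel_size_m=10.0):
    """Area in hectares of pixels whose NDVI falls in the event's range."""
    ndvi = np.asarray(ndvi, dtype=float)
    lower = NDVI_LOWER_BOUND[event]
    mask = (ndvi >= lower) & (ndvi <= 1.0)  # inclusive bounds are an assumption
    pixel_area_ha = (pixel_size_m ** 2) / 10_000.0  # a 10 m pixel covers 0.01 ha
    return float(mask.sum()) * pixel_area_ha

# Hypothetical 2 x 2 NDVI tile.
tile = np.array([[0.05, 0.25], [0.45, 0.90]])
area_quinta = standing_crop_area_ha(tile, "Quinta")
area_karding = standing_crop_area_ha(tile, "Karding")
```

The same tile yields a larger standing crop area under the Karding range than under the Quinta range, which shows how the lower bound chosen for cloudy scenes affects the estimate.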

The results of the NDVI sensitivity analysis for different growth stages are as follows:

- Newly broadcasted: ≤0.2 (clear skies), ≤0.1 (thin clouds);

- Newly planted: ≤0.2 (thin clouds);

- Vegetative: 0.2 to 0.4 (thin clouds);

- Reproductive: ≤0.3 (thin clouds), 0.4 to 0.5 (thin clouds), 0.4 to 1.0 (thin clouds).

In general, there were no fixed NDVI values or ranges of NDVI values for a particular growth stage. The NDVI is influenced by multiple factors, and the condition of the crop and farm lots varied across the six flooding events. However, the findings of this study could be further strengthened by continuously monitoring actual field data, such as geotagged farm lots with recorded and monitored planting activities.

In estimating the damage to rice crops at specific growth stages, we employed a combination of the S2 NDVI for growth stage identification and the S1 SAR NDVI for rice area detection. This approach was chosen due to the difficulty in obtaining S2 NDVI data during or near typhoon events, primarily due to persistent cloud cover. This cloud cover issue was consistently encountered across all six flood events. Previous studies have also supported the fusion of S1 and S2 data as an effective approach to enhance data acquisition and improve the overall quality of data, as demonstrated by Li et al. [52].

Referring to the summary table in Figure 13, the estimation of standing crop demonstrated an accuracy ranging between 66.1% and 99.2%. In terms of crop damage estimation, the lowest accuracy of 61.7% was observed during Typhoon Paeng, while the highest accuracy of 99.7% was achieved for Typhoon Florita. The accuracy of the estimation using Sentinel-2 ranged from 66.1% to 99.2% with an average accuracy of 85.3%. On the other hand, the accuracy of the estimation using Sentinel-1 ranged from 61.7% to 99.7% with an average accuracy of 76.3%. The overall average accuracy of this study, in terms of estimating standing crop and rice crop damage, is 80.5%. Comparing the recorded damage data against the estimated damage values, as shown in Figure 12, resulted in a remarkably high correlation coefficient of 0.96, with the trend line closely aligned to the ideal one-to-one slope, indicating a strong relationship between the two.
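As a reading aid, the sketch below shows one common way such an accuracy figure can be computed from a recorded and an estimated value. The formula is an assumption, since this section does not state how accuracy was defined, and the sample areas are hypothetical rather than values from Figure 13.

```python
def estimation_accuracy(recorded, estimated):
    """Percent accuracy as 100 * (1 - |estimated - recorded| / recorded).

    Assumed definition for illustration; not confirmed by the study.
    """
    if recorded <= 0:
        raise ValueError("recorded value must be positive")
    return 100.0 * (1.0 - abs(estimated - recorded) / recorded)

# Hypothetical areas in hectares (not values from Figure 13).
accuracy = estimation_accuracy(recorded=200.0, estimated=190.0)
print(f"accuracy = {accuracy:.1f}%")
```

Under this definition, overestimates and underestimates of the same size give the same accuracy, so it should be read alongside the correlation with the recorded data.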

The potential errors that can be attributed to the results of this study include the following:

- Variations in the analysis dates due to the specific revisit time of S1 and S2 data, which may not always align with the exact dates of the flooding events.

- The accuracy of the recorded damage data may be questioned due to the generalized cropping calendar used and the lack of specification regarding rice varieties, as harvest dates can vary.

- Due to the 6-day revisit time of SAR data, this study lacks the capability to precisely determine the duration of inundation days. Consequently, it becomes challenging to differentiate between total damage, defined by the MAO as rice crops submerged for two days or longer, and partial damage, which refers to rice submerged for less than two days.
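The last limitation can be made concrete with a small sketch. It encodes the MAO rule quoted above and, as an added assumption, bounds the inundation duration from the number of consecutive SAR passes on which water was observed; the function names are hypothetical.

```python
def classify_damage(days_submerged):
    """Return 'total' for two or more days of submergence, else 'partial'."""
    if days_submerged < 0:
        raise ValueError("days_submerged must be non-negative")
    return "total" if days_submerged >= 2 else "partial"

def inundation_bounds(wet_passes, revisit_days=6):
    """Bounds (in days) on flood duration given consecutive passes showing water.

    Assumes dry passes immediately before and after the wet ones, so the
    flood lasted at least (n - 1) and less than (n + 1) revisit intervals.
    """
    if wet_passes < 1:
        raise ValueError("wet_passes must be at least 1")
    return (wet_passes - 1) * revisit_days, (wet_passes + 1) * revisit_days

lower, upper = inundation_bounds(wet_passes=1)
# The class is ambiguous when the bounds straddle the two-day rule.
ambiguous = classify_damage(lower) != classify_damage(upper)
```

With water seen on a single pass, the duration could be anywhere between 0 and 12 days, so the two-day rule cannot be applied from SAR imagery alone.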

A more advanced study such as one using open data and machine learning can also estimate crop damage brought about by typhoons. However, the utilization of machine learning methods in this study may be impeded by the limited availability of datasets, which is insufficient for training a model capable of minimizing errors and optimizing its predictive accuracy. This limitation mirrors the challenge faced by Boeke et al. (2019) in their study, where they combined open data with regression and classification machine learning algorithms to predict rice loss due to typhoons in a case study in the Philippines. The support vector classifier performed the best, achieving a recall score as high as 88% but with a precision score as low as 10.84%. According to them, the data from 11 typhoons used to train both regression and binary classification models were inadequate, resulting in imprecise predictions [53].

In summary, open-source data and UAV imagery offer numerous advantages for crop damage assessment, including high spatial and temporal resolution, accessibility, cost-effectiveness, scalability, and remote sensing capabilities. However, they also present challenges related to weather dependency, technical expertise, equipment cost, regulatory compliance, and data processing. Despite these limitations, the potential benefits of using these advanced technologies outweigh the challenges, especially in terms of their ability to provide timely and comprehensive information for effective disaster response and agricultural management. With continued advancements in technology and increased capacity building efforts, open-source satellite data and UAV imagery hold great promise for revolutionizing crop damage assessment practices worldwide.

5. Conclusions

This study was able to ascertain and establish the correlation between NDVI measurements obtained from UAVs and those derived from the S2 satellite, subsequently achieving a fast and reliable estimation of rice crop damage using satellite-derived information. The use of multispectral cameras mounted on UAVs has proven highly effective in precision agriculture applications, particularly for monitoring rice paddies, providing detailed results with higher spatial resolutions. However, when assessing larger areas and conducting damage assessments, satellite imagery emerges as a valuable alternative. This study demonstrated a strong correlation of up to 0.84 between UAV- and satellite-derived NDVI measurements. Having an additional tool for swiftly determining the extent of flood damage to crops with confidence is crucial, where satellite-derived information, such as S1 and S2 data, presents a favorable approach in agricultural monitoring. This study showcased remarkable accuracy in crop damage estimation, reaching up to 99.7% with an average accuracy of 76.3% for S1, and up to 99.2% with an average accuracy of 85.3% for S2, with an overall average accuracy of 80.5% in estimating standing crop and rice crop damage. The correlation between the recorded damage data and estimated damage values yielded a very high coefficient of 0.96. Overall, integrating open-source satellite data and UAV imagery into crop damage assessment workflows can enhance the efficiency, accuracy, and timeliness of agricultural decision-making processes, benefiting farmers, researchers, and policymakers alike.

However, this method is not intended to completely replace the conventional approach to estimating crop damages, as it also has its limitations, which have been discussed in this study. Instead, it is intended to serve as an additional tool that can accelerate and improve the estimation process. Further studies should be conducted to explore the correlation between the NDVI derived from UAVs and S2-derived NDVIs. This study has demonstrated promising results, indicating the potential for employing linear regression and bias correction techniques to align the satellite-derived NDVI readings with UAV-derived NDVI readings. Additionally, the proposed methodology can also be evaluated in other areas with reliable recorded damage data to further test its accuracy and reliability. Furthermore, as this study is in its initial stage, machine learning methods can be employed in the future if sufficient and reliable damage data can be obtained.

Author Contributions

Conceptualization, methodology, formal analysis, and data curation, V.B.J.; validation, V.B.J., M.O., K.H. (Koki Homma), K.A. and K.H. (Kohei Hosonuma); investigation, V.B.J., M.O., M.R., K.H. (Koki Homma) and K.A.; resources, M.O. and K.H. (Koki Homma); writing—original draft preparation, V.B.J.; writing—review and editing, M.O., M.R., K.H. (Koki Homma) and K.A.; visualization, V.B.J., M.O. and M.R.; supervision, M.O. and M.R.; project administration, and funding acquisition, M.O. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Science and Technology Research Partnership for Sustainable Development (SATREPS) in collaboration between the Japan Science and Technology Agency (JST, JPMJSA1909) and the Japan International Cooperation Agency (JICA).

Data Availability Statement

Publicly available data sets were analyzed in this study. The data can be found here: https://earthengine.google.com/ (accessed on 11 May 2023). Other data are available from their sources, which are listed in Section 2.

Acknowledgments

Sincere gratitude and profound appreciation are extended to the Community Level—SARAI-Enhanced Agricultural Monitoring System (CL-SEAMS) of Project SARAI (Smarter Approaches to Reinvigorate Agriculture as an Industry in the Philippines) for some of their outputs used in this study. We would also like to thank the Provincial Agriculture Office of Pampanga and the Municipal Agriculture Office of Candaba, Pampanga, for sharing their data and for their support and assistance, especially during the field visits.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Statista. Agriculture in the Philippines—Statistics and Facts. 2022. Available online: https://www.statista.com/topics/5744/agriculture-industry-in-the-philippines/#topicOverview (accessed on 31 May 2023).

- PAGASA. Available online: https://www.pagasa.dost.gov.ph/climate/tropical-cyclone-information (accessed on 23 May 2023).

- Yuen, K.W.; Switzer, A.D.; Teng, P.P.S.; Lee, J.S.H. Assessing the impacts of tropical cyclones on rice production in Bangladesh, Myanmar, Philippines, and Vietnam. Nat. Hazards Earth Syst. Sci. 2022, 1–28.

- Republic Act No. 10121. 2010. Available online: https://www.officialgazette.gov.ph/2010/05/27/republic-act-no-10121/ (accessed on 31 May 2023).

- Unite, B. When and why a state of calamity is declared. Manila Bulletin, 18 November 2020.

- Rotairo, L.; Durante, A.C.; Lapitan, P.; Rao, L.N. Use of Remote Sensing to Estimate Paddy Area and Production; Asian Development Bank: Manila, Philippines, 2019.

- Silleos, N.; Dalezios, N.; Trikatsoula, A.; Petsanis, G. Assessment Crop Damages Using Space Remote Sensing and Geographic Information System (GIS). IFAC Control Appl. Ergon. Agric. 1998, 31, 75–79.

- Dimyati, M.; Supriatna, S.; Nagasawa, R.; Pamungkas, F.D.; Pramayuda, R. A Comparison of Several UAV-Based Multispectral Imageries in Monitoring Rice Paddy (A Case Study in Paddy Fields in Tottori Prefecture, Japan). ISPRS Int. J. Geo-Inf. 2023, 12, 36.

- Radoglou-Grammatikis, P.; Sarigiannidis, P.; Lagkas, T.; Moscholios, I. A compilation of UAV applications for precision agriculture. Comput. Networks 2020, 172, 107148.

- Letsoin, S.M.A.; Purwestri, R.C.; Perdana, M.C.; Hnizdil, P.; Herak, D. Monitoring of Paddy and Maize Fields Using Sentinel-1 SAR Data and NGB Images: A Case Study in Papua, Indonesia. Processes 2023, 11, 647.

- Hoang-Phi, P.; Lam-Dao, N.; Nguyen-Van, V.; Nguyen-Kim, T.; Le Toan, T.; Pham-Duy, T. Rice Growth Stage Monitoring and Yield Estimation in the Vietnamese Mekong Delta Using Multi-Temporal Sentinel-1 Data; Springer: Cham, Switzerland, 2022.

- Hirooka, Y.; Homma, K.; Maki, M.; Sekiguchi, K. Applicability of synthetic aperture radar (SAR) to evaluate leaf area index (LAI) and its growth rate of rice in farmers’ fields in LAO PDR. Field Crop. Res. 2015, 176, 119–122.

- Nhangumbe, M.; Nascetti, A.; Ban, Y. Multi-Temporal Sentinel-1 SAR and Sentinel-2 MSI Data for Flood Mapping and Damage Assessment in Mozambique. ISPRS Int. J. Geo-Inf. 2023, 12, 53.

- Huang, M.; Jin, S. Rapid flood mapping and evaluation with a supervised classifier and change detection in Shouguang using Sentinel-1 SAR and Sentinel-2 optical data. Remote Sens. 2020, 12, 2073.

- Tavus, B.; Kocaman, S.; Gokceoglu, C. Flood damage assessment with Sentinel-1 and Sentinel-2 data after Sardoba dam break with GLCM features and Random Forest method. Sci. Total Environ. 2022, 816, 151585.

- Brinkhoff, J. Early-Season Industry-Wide Rice Maps Using Sentinel-2 Time Series. In International Geoscience and Remote Sensing Symposium (IGARSS); Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2022; pp. 5854–5857.

- Rahman, M.S.; Di, L. A Systematic Review on Case Studies of Remote-Sensing-Based Flood Crop Loss Assessment. Agriculture 2020, 10, 13.

- Miao, S.; Zhao, Y.; Huang, J.; Li, X.; Wu, R.; Su, W.; Zeng, Y.; Guan, H.; Abd Elbasit, M.A.M.; Zhang, J. A Comprehensive Evaluation of Flooding’s Effect on Crops Using Satellite Time Series Data. Remote Sens. 2023, 15, 1305.

- Brusola, K.S.G.; Castro, M.L.Y.; Suarez, J.M.; David, D.R.N.; Ramacula, C.J.E.; Capdos, M.A.; Viado, L.N.T.; Dorado, M.A.; Ballaran, V.G., Jr. Flood Mapping and Assessment During Typhoon Ulysses (Vamco) in Cagayan, Philippines Using Synthetic Aperture Radar Images. In Water Resources Management and Sustainability. Solutions for Arid Regions; Springer: Cham, Switzerland, 2023; pp. 69–82.

- Li, M.; Shamshiri, R.R.; Weltzien, C.; Schirrmann, M. Crop Monitoring Using Sentinel-2 and UAV Multispectral Imagery: A Comparison Case Study in Northeastern Germany. Remote Sens. 2022, 14, 442.

- Bollas, N.; Kokinou, E.; Polychronos, V. Comparison of Sentinel-2 and UAV Multispectral Data for Use in Precision Agriculture: An Application from Northern Greece. Drones 2021, 5, 35.

- Mangewa, L.J.; Ndakidemi, P.A.; Alward, R.D.; Kija, H.K.; Bukombe, J.K.; Nasolwa, E.R.; Munishi, L.K. Comparative Assessment of UAV and Sentinel-2 NDVI and GNDVI for Preliminary Diagnosis of Habitat Conditions in Burunge Wildlife Management Area, Tanzania. Earth 2022, 3, 769–787.

- Hendrawan, V.S.A.; Komori, D. Developing flood vulnerability curve for rice crop using remote sensing and hydrodynamic modeling. Int. J. Disaster Risk Reduct. 2021, 54, 102058.

- Hisham, N.H.B.; Hashim, N.; Saraf, N.M.; Talib, N. Monitoring of Rice Growth Phases Using Multi-Temporal Sentinel-2 Satellite Image. IOP Conf. Ser. Earth Environ. Sci. 2022, 1051, 012021.

- CL-SEAMS. Available online: https://www.facebook.com/sarai.seams (accessed on 17 May 2023).

- Project SARAI. Available online: https://sarai.ph/ (accessed on 11 May 2023).

- Espaldon, M.V.; Dorado, M.; Salazar, A.; Eslava, D.; Lansigan, F.; Luyun, R., Jr.; Khan, C.; Aguilar, E.; Altoveros, N.; Bato, V.; et al. Project Sarai Technologies for Climate-Smart Agriculture in the Philippines. FFTC e-J. 2019. Available online: https://ap.fftc.org.tw/article/1639 (accessed on 20 October 2023).

- Google Earth Engine. Available online: https://earthengine.google.com/ (accessed on 11 May 2023).

- Bilotta, G.; Genovese, E.; Citroni, R.; Cotroneo, F.; Meduri, G.M.; Barrile, V. Integration of an Innovative Atmospheric Forecasting Simulator and Remote Sensing Data into a Geographical Information System in the Frame of Agriculture 4.0 Concept. Agriengineering 2023, 5, 1280–1301.

- PAO. A public document acquired from the Provincial Agriculture Office during data gathering. In Pampanga Provincial Profile; Provincial Agriculture Office: Malolos, Philippines, 2015.

- Nagumo, N.; Sawano, H. Land Classification and Flood Characteristics of the Pampanga River Basin, Central Luzon, Philippines. J. Geogr. 2016, 125, 699–716.

- Municipal Profile of Candaba Pampanga. Available online: https://candabapampanga.gov.ph/municipal-profile/ (accessed on 21 February 2024).

- USGS. What Is Remote Sensing and What Is It Used for? Available online: https://www.usgs.gov/faqs/what-remote-sensing-and-what-it-used#:~:text=Remotesensingistheprocess,sense%22thingsabouttheEarth. (accessed on 5 May 2023).

- DJI P4 Multispectral. Available online: https://www.dji.com/jp/p4-multispectral (accessed on 6 May 2023).

- ESA. SENTINEL-2 User Handbook; 2015. European Space Agency Document. Available online: https://sentinels.copernicus.eu/web/sentinel/user-guides/document-library/-/asset_publisher/xlslt4309D5h/content/sentinel-2-user-handbook (accessed on 8 May 2023).

- ESA. Sentinel-2 Overview. Available online: https://www.esa.int/Applications/Observing_the_Earth/Copernicus/Sentinel-2_overview (accessed on 8 May 2023).

- ESA (2020b) Sentinel-2—Missions—Sentinel Online. 2020. Available online: https://sentinel.esa.int/web/sentinel/missions/sentinel-2 (accessed on 11 May 2023).

- USGS. Landsat Collection. Available online: https://www.usgs.gov/landsat-missions/landsat-collection-1?qt-science_support_page_related_con=1#qt-science_support_page_related_con (accessed on 7 May 2023).

- GEE. Sentinel-1 Algorithms. Available online: https://developers.google.com/earth-engine/guides/sentinel1 (accessed on 7 May 2023).

- ESA (2020a) Sentinel-1—Missions—Sentinel Online. 2020. Available online: https://sentinel.esa.int/web/sentinel/missions/sentinel-1 (accessed on 11 May 2023).

- UCSB/CHG. Climate Hazards Group InfraRed Precipitation with Station Data (CHIRPS). Available online: https://chc.ucsb.edu/data/chirps (accessed on 7 May 2023).

- Rivera, J.A.; Hinrichs, S.; Marianetti, G. Using CHIRPS Dataset to Assess Wet and Dry Conditions along the Semiarid Central-Western Argentina. Adv. Meteorol. 2019, 2019, 8413964.

- GISGeography. What Is NDVI (Normalized Difference Vegetation Index)? Available online: https://gisgeography.com/ndvi-normalized-difference-vegetation-index/ (accessed on 19 May 2023).

- Dobrinić, D.; Gašparović, M.; Medak, D. Sentinel-1 and 2 Time-Series for Vegetation Mapping Using Random Forest Classification: A Case Study of Northern Croatia. Remote Sens. 2021, 13, 2321.

- Jiao, X.; McNairn, H.; Yekkehkhany, B.; Robertson, L.D.; Ihuoma, S. Integrating Sentinel-1 SAR and Sentinel-2 optical imagery with a crop structure dynamics model to track crop condition. Int. J. Remote Sens. 2022, 43, 6509–6537.

- Gonzalez-Betancourt, M.; Mayorga-Ruiz, L. Normalized difference vegetation index for rice management in El Espinal, Colombia. DYNA 2018, 85, 47–56. Available online: https://www.redalyc.org/journal/496/49657889005/html/ (accessed on 10 May 2023).

- Vamsi, T.; Jarjana, P.R.; Mogalapalli, S.; Golagani, C.Y. A Machine Learning Approach for Estimating Crop Damage based on Leaf Disease Detection. Int. J. Res. Advent Technol. 2019, 7, 20–24.

- Crisóstomo de Castro Filho, H.; Abílio de Carvalho Júnior, O.; Ferreira de Carvalho, O.L.; Pozzobon de Bem, P.; dos Santos de Moura, R.; Olino de Albuquerque, A.; Rosa Silva, C.; Guimarães Ferreira, P.H.; Fontes Guimarães, R.; Trancoso Gomes, R.A. Rice Crop Detection Using LSTM, Bi-LSTM, and Machine Learning Models from Sentinel-1 Time Series. Remote Sens. 2020, 12, 2655.

- Tiede, D.; Sudmanns, M.; Augustin, H.; Baraldi, A. Investigating ESA Sentinel-2 products’ systematic cloud cover overestimation in very high altitude areas. Remote Sens. Environ. 2020, 252, 112163.

- Sales, V.G.; Strobl, E.; Elliott, R.J. Cloud cover and its impact on Brazil’s deforestation satellite monitoring program: Evidence from the cerrado biome of the Brazilian Legal Amazon. Appl. Geogr. 2022, 140, 102651.

- Calkins, K.G. An Introduction to Statistics. 2005. Available online: http://www.andrews.edu/~calkins/math/edrm611/edrmtoc.htm (accessed on 5 December 2023).

- Li, J.; Li, C.; Xu, W.; Feng, H.; Zhao, F.; Long, H.; Meng, Y.; Chen, W.; Yang, H.; Yang, G. Fusion of optical and SAR images based on deep learning to reconstruct vegetation NDVI time series in cloud-prone regions. Int. J. Appl. Earth Obs. 2022, 112, 102818.

- Boeke, S.; van den Homberg, M.J.C.; Teklesadik, A.; Fabila, J.L.D.; Riquet, D.; Alimardani, M. Towards predicting rice loss due to typhoons in the Philippines. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 63–70.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).