IOT-Enabled Model for Weed Seedling Classification: An Application for Smart Agriculture

Abstract

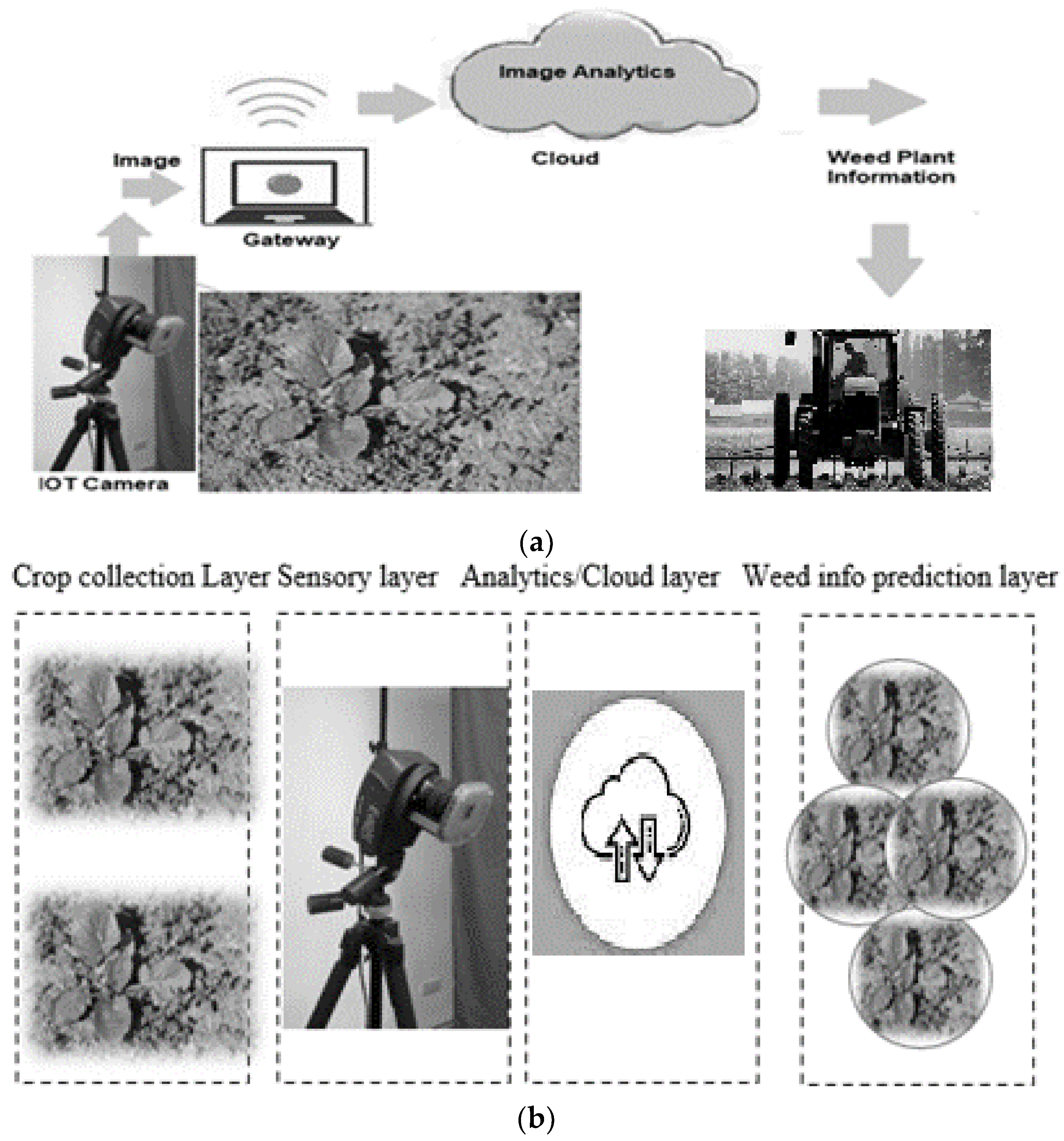

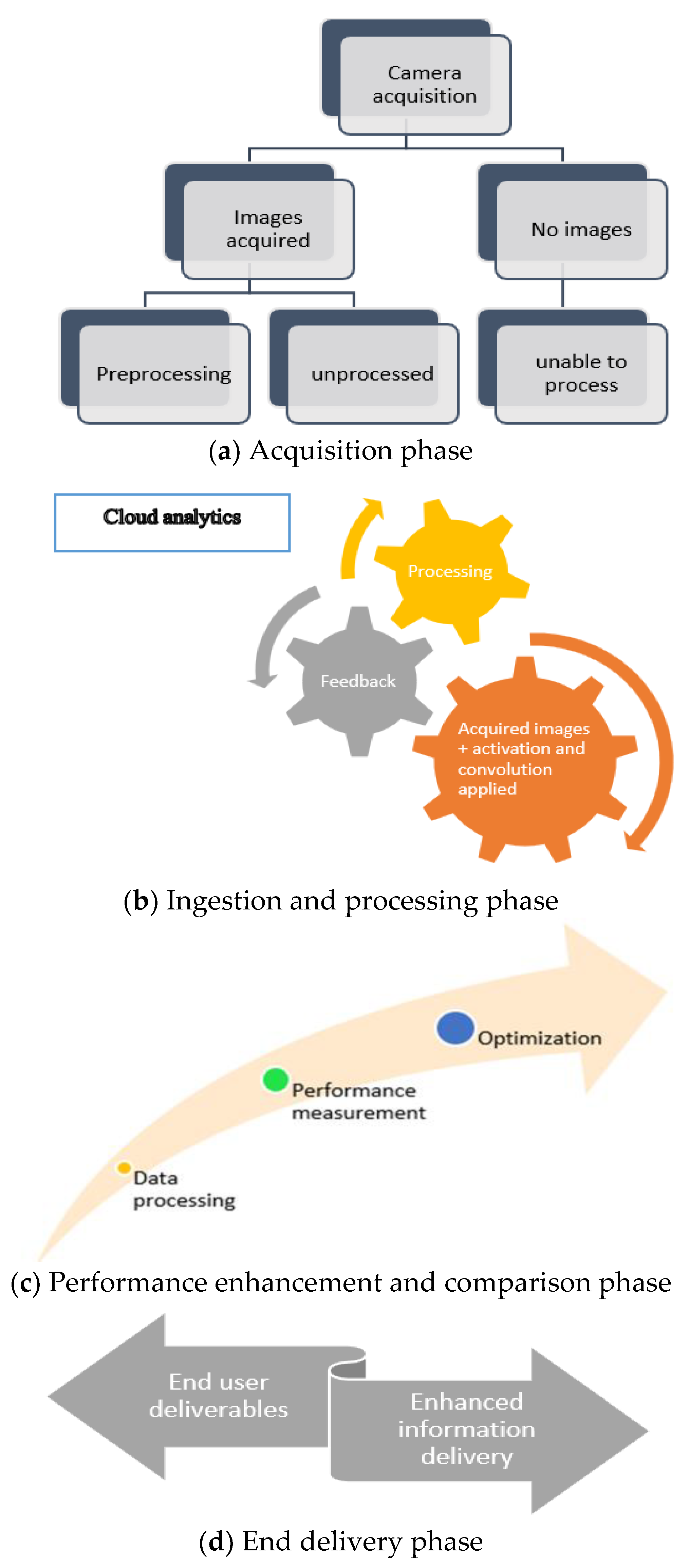

1. Introduction

- An IOT-enabled framework for smart agriculture;

- Design of a well-regularized CNN framework for weed seedling classification;

- Analysis of the performance of weed seedling classification based on grayscale and color channel information.

2. Methods

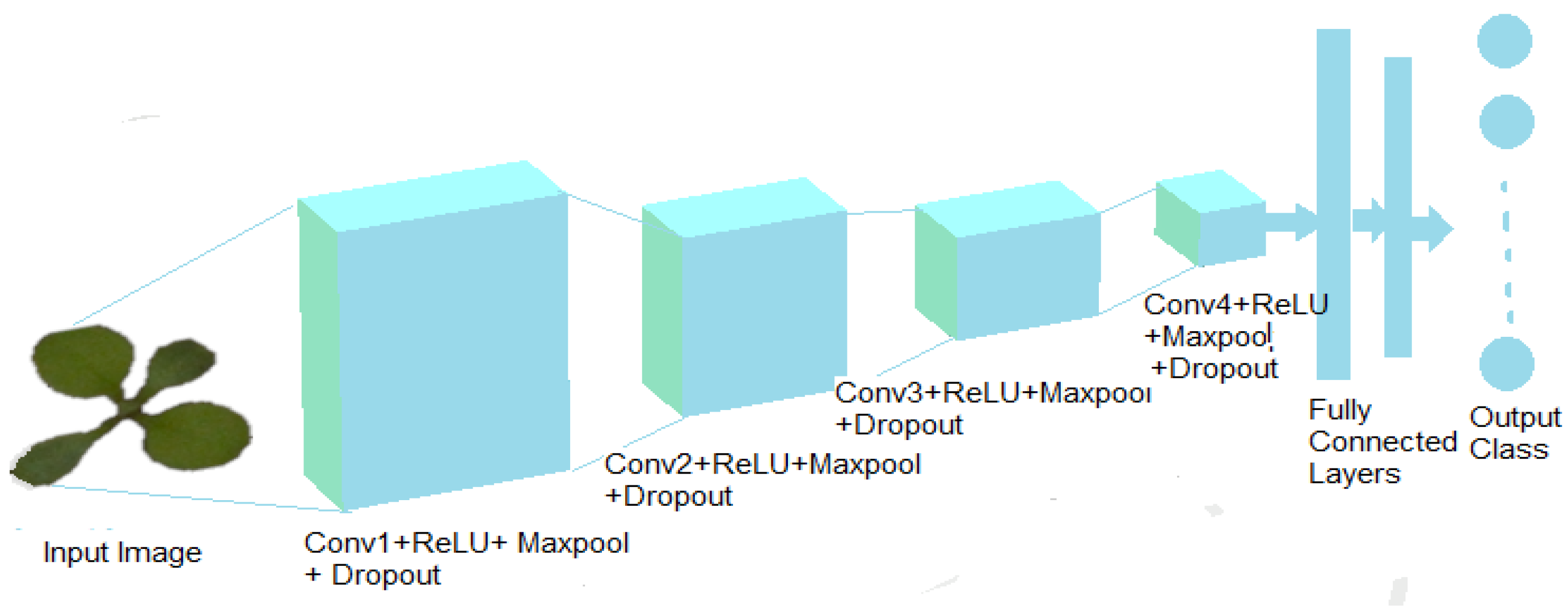

2.1. Regularized Convolution Neural Network

| Algorithm 1. Regularized Deep Weed-ConvNet Model. |

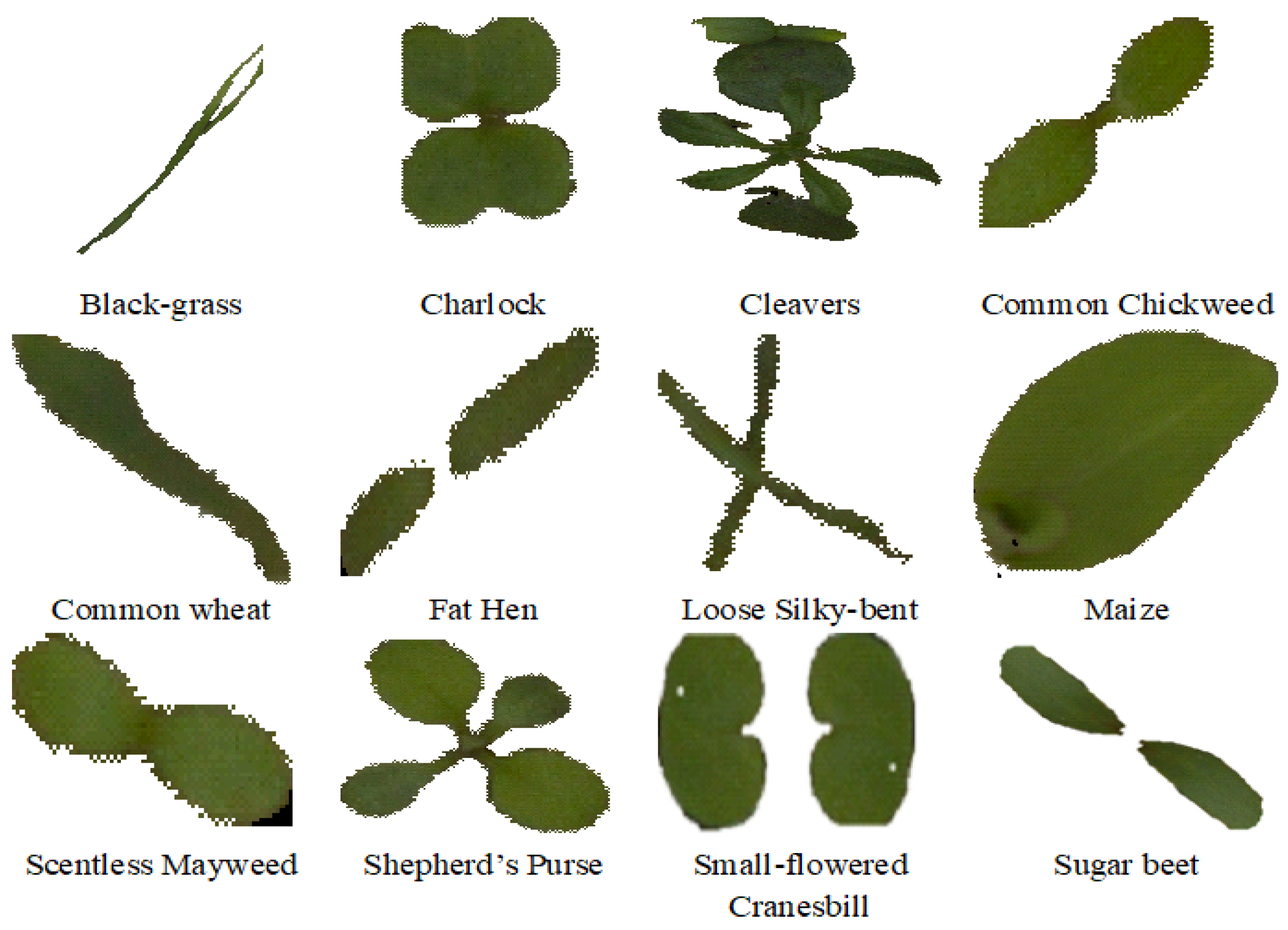

| Input: An image dataset of distinct plants representing a total of 12 weed plant species. |

| Output: The predicted plant species class, one of the 12 possible categories, for each input weed image. |

| 1. Transform the image to the HSV (hue, saturation, and value) color space. |

| 2. Resize the images for dimension reduction. |

| 3. Perform data augmentation: rotation, scaling, and flipping. |

| 4. Learning-Forward Pass: |

| For each convolution filter apply convolution on the image matrix. |

| Generate feature map: |

| feature_map = |

| Univariate vector = max (feature_map(0,y)) |

| end for |

| 5. To build a single feature vector, combine all univariate features. |

| 6. Apply the SoftMax operation to the feature vector attained in step 5 as follows: |

| 7. Argmax (softmax_outputs) |

| Learning-Back Propagation: |

| 8. Loss function (categorical cross-entropy): |

| 9. Weight update: |

| where α is the learning rate, β is the momentum, and γ is the weight decay. |
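Steps 1 to 3 of Algorithm 1 can be illustrated with a minimal, dependency-free sketch. It is not the authors' pipeline: the helper names (`rgb_image_to_hsv`, `resize_nearest`, `flip_horizontal`, `rotate_90`), the nested-list image representation, and the nearest-neighbour resize are assumptions made for illustration, and the scaling augmentation is omitted for brevity.

```python
import colorsys

def rgb_image_to_hsv(image):
    """Convert rows of (r, g, b) tuples in 0..255 to (h, s, v) tuples in 0..1."""
    return [[colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
             for (r, g, b) in row] for row in image]

def resize_nearest(image, new_h, new_w):
    """Nearest-neighbour resize (an assumed method; the source does not name one)."""
    old_h, old_w = len(image), len(image[0])
    return [[image[y * old_h // new_h][x * old_w // new_w]
             for x in range(new_w)] for y in range(new_h)]

def flip_horizontal(image):
    """Mirror every row, a simple flipping augmentation."""
    return [row[::-1] for row in image]

def rotate_90(image):
    """Rotate the image clockwise by 90 degrees, a simple rotation augmentation."""
    return [list(col) for col in zip(*image[::-1])]

# Hypothetical 2x2 RGB image: red, green / blue, white.
tiny = [[(255, 0, 0), (0, 255, 0)],
        [(0, 0, 255), (255, 255, 255)]]

hsv = rgb_image_to_hsv(tiny)             # step 1: HSV conversion
small = resize_nearest(hsv, 1, 1)        # step 2: resize for dimension reduction
augmented = [flip_horizontal(tiny), rotate_90(tiny)]  # step 3: augmentation
```

In practice an image library would do this work on array data; the pure-Python form is used here only so the sketch runs without extra packages.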

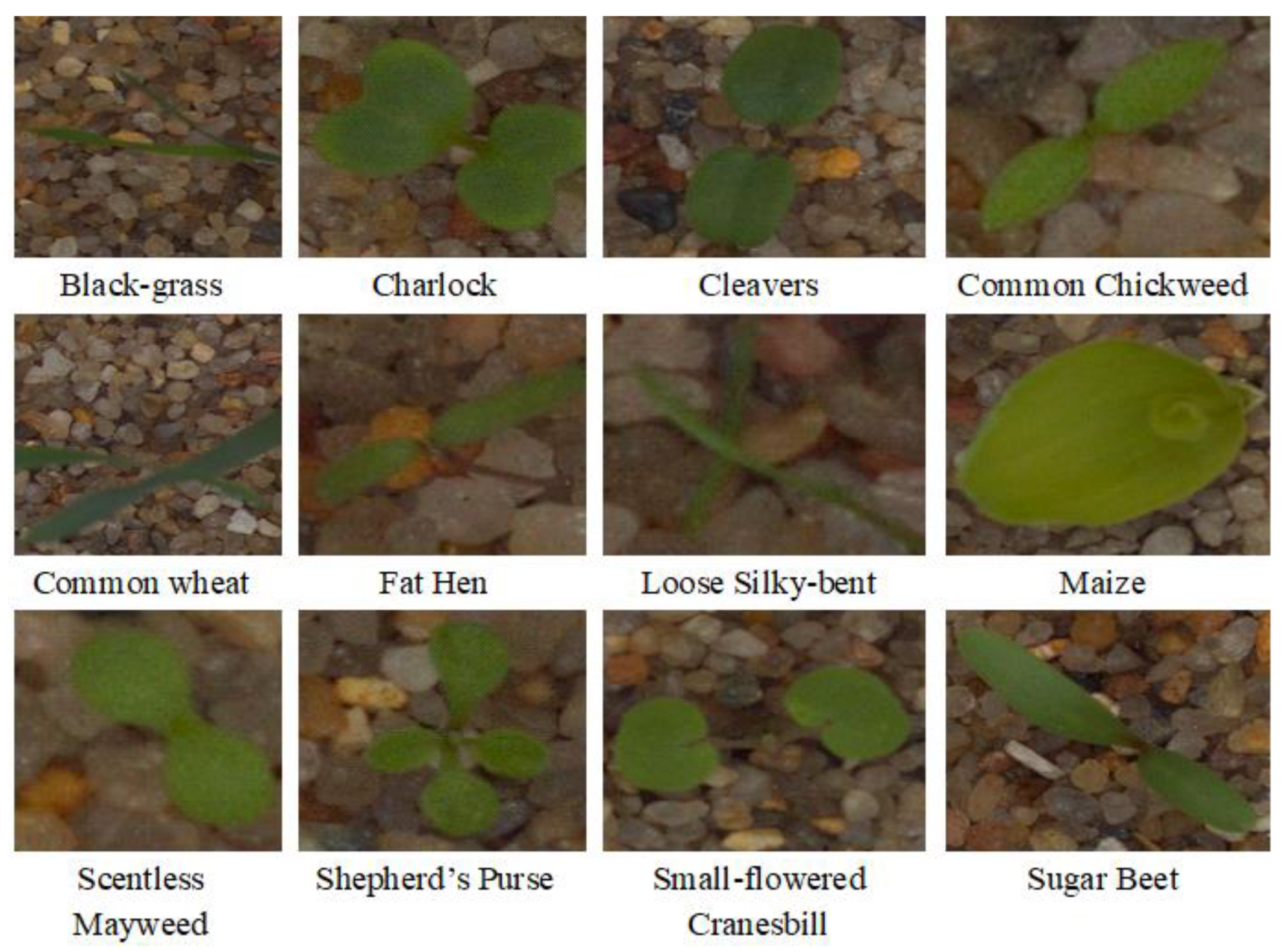
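The equations for steps 6 to 9 of Algorithm 1 did not survive in this copy, so the sketch below uses the standard textbook forms of softmax, categorical cross-entropy, and a momentum update with weight decay. The function names and the particular update rule are assumptions; the authors' exact formulation may differ.

```python
import math

def softmax(logits):
    """Numerically stable softmax: exp(z_i - max) / sum_j exp(z_j - max)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def argmax(values):
    """Index of the largest value, i.e. the predicted class (step 7)."""
    return max(range(len(values)), key=values.__getitem__)

def categorical_cross_entropy(probs, true_class, eps=1e-12):
    """Loss for a one-hot target: -log(p_true) (step 8)."""
    return -math.log(max(probs[true_class], eps))

def sgd_momentum_step(weights, grads, velocity, lr, momentum, weight_decay):
    """One common form of step 9 (assumed, not taken from the source):
    v <- beta * v - alpha * (grad + gamma * w);  w <- w + v."""
    new_v = [momentum * v - lr * (g + weight_decay * w)
             for w, g, v in zip(weights, grads, velocity)]
    new_w = [w + v for w, v in zip(weights, new_v)]
    return new_w, new_v

# Hypothetical scores for three classes; class 0 is the true label.
logits = [2.0, 0.5, -1.0]
probs = softmax(logits)
predicted = argmax(probs)
loss = categorical_cross_entropy(probs, true_class=0)

# One update of a single weight with alpha=0.1, beta=0.9, gamma=0.
w, v = sgd_momentum_step([1.0], [0.5], [0.0],
                         lr=0.1, momentum=0.9, weight_decay=0.0)
```

Subtracting the maximum logit before exponentiating leaves the softmax output unchanged while avoiding overflow for large scores.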

2.2. Image Data Set

3. Experiments and Results Discussion

- TP and TN are the correctly labelled positive and negative weed samples, respectively.

- FP represents the number of negative weed samples incorrectly labelled as positive.

- FN denotes the number of positive weed samples incorrectly labelled as negative.
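The metric equations themselves are missing from this copy. For reference, the standard definitions of precision, recall, and F1 score in terms of true positives (TP), false positives (FP), and false negatives (FN) are given below; they are assumed, not confirmed, to match the forms used by the authors.

$$
\text{Precision} = \frac{TP}{TP + FP}, \qquad
\text{Recall} = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
$$

For a 12-class problem these are computed one class at a time, treating that class as positive and the remaining eleven as negative.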

4. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Layer (Type) | Output Shape | Parameters |
|---|---|---|

| Learning Parameter | Metric |
|---|---|
| Metric | |
| Loss function | |
| Optimizer | |

| Training Hyperparameter | Search Space | Selected Value |
|---|---|---|
| Learning rate | [0.1, 0.01, 0.001, 0.0001, 0.00001] | |
| Epochs | [10, 20, 30, 40, 50, 60, 80, 100] | |
| Batch size | [8, 16, 32, 64] | |
| Early stopping | | |
| Conv2D layer 1 channels | [8, 16, 32, 48] | [16] |
| Conv2D layer 2 channels | [8, 16, 32, 48] | [16] |
| Conv2D layer 3 channels | [16, 32, 48, 64, 128] | [32] |
| Conv2D layer 4 channels | [16, 32, 48, 64, 128] | [64] |
| Conv2D layer 5 channels | [16, 32, 48, 64, 128, 256] | [128] |
| Kernel size for layers | [1, 2, 3, 4, 5, 6, 7, 8] | [8] |
| Padding | [0, 1, 2, 3, 4] | [0] |
| Conv2D stride | [1, 2, 3, 4] | [2] |
| Dropout rate layer 1,2 | [0.1, 0.2, 0.3, 0.4] | [0.2] |
| Dropout rate layer 1,2 | [0.1, 0.2, 0.3, 0.4] | [0.4] |
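To show how the selected kernel size, stride, and padding interact, the sketch below applies the standard convolution output-size formula, floor((n + 2p - k) / s) + 1, across five layers with the selected channel counts. The 256-pixel input size is hypothetical, pooling layers are ignored, and applying the same kernel, stride, and padding to every layer is an assumption, because the layer table is empty in this copy.

```python
def conv_output_size(size, kernel, stride, padding):
    """Spatial output size of a convolution: floor((n + 2p - k) / s) + 1."""
    if size + 2 * padding < kernel:
        raise ValueError("kernel does not fit the padded input")
    return (size + 2 * padding - kernel) // stride + 1

# Selected channel counts for Conv2D layers 1 to 5 (from the table above).
channels = [16, 16, 32, 64, 128]

size = 256  # hypothetical square input; the source does not state the input size
shapes = []
for c in channels:
    size = conv_output_size(size, kernel=8, stride=2, padding=0)
    shapes.append((size, size, c))

for i, shape in enumerate(shapes, start=1):
    print(f"Conv2D layer {i}: output shape {shape}")
```

With a large kernel, a stride of 2, and no padding, the feature maps shrink quickly, so the input must be large enough for the kernel to still fit in the deepest layer.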

| Class | Black-grass | Charlock | Cleavers | Common chickweed | Common wheat | Fat hen | Loose silky-bent | Maize | Scentless mayweed | Shepherd’s purse | Small-flowered cranesbill | Sugar beet |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Black-grass | 14 | 0 | 1 | 0 | 40 | 0 | 42 | 1 | 0 | 0 | 0 | 7 |
| Charlock | 0 | 123 | 4 | 0 | 3 | 2 | 0 | 0 | 0 | 0 | 0 | 6 |
| Cleavers | 0 | 7 | 74 | 0 | 12 | 5 | 0 | 0 | 0 | 8 | 4 | 4 |
| Common chickweed | 0 | 20 | 10 | 102 | 1 | 5 | 0 | 13 | 3 | 5 | 1 | 60 |
| Common wheat | 0 | 0 | 2 | 0 | 62 | 1 | 2 | 1 | 1 | 0 | 0 | 4 |
| Fat hen | 0 | 2 | 2 | 0 | 6 | 134 | 1 | 2 | 0 | 0 | 2 | 11 |
| Loose silky-bent | 20 | 0 | 2 | 0 | 61 | 1 | 150 | 6 | 0 | 0 | 0 | 5 |
| Maize | 0 | 1 | 5 | 2 | 2 | 1 | 0 | 65 | 0 | 0 | 0 | 3 |
| Scentless mayweed | 8 | 12 | 6 | 30 | 4 | 3 | 0 | 15 | 84 | 1 | 0 | 15 |
| Shepherd’s purse | 0 | 19 | 2 | 4 | 3 | 1 | 0 | 0 | 4 | 25 | 0 | 3 |
| Small-flowered cranesbill | 0 | 9 | 13 | 0 | 3 | 2 | 2 | 3 | 0 | 0 | 134 | 20 |
| Sugar beet | 0 | 3 | 0 | 0 | 6 | 1 | 0 | 10 | 0 | 0 | 1 | 118 |

| Class | Black-grass | Charlock | Cleavers | Common chickweed | Common wheat | Fat hen | Loose silky-bent | Maize | Scentless mayweed | Shepherd’s purse | Small-flowered cranesbill | Sugar beet |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Black-grass | 46 | 0 | 0 | 0 | 4 | 4 | 44 | 0 | 0 | 0 | 2 | 5 |
| Charlock | 1 | 130 | 2 | 0 | 1 | 2 | 0 | 0 | 0 | 0 | 0 | 2 |
| Cleavers | 0 | 6 | 99 | 0 | 1 | 3 | 0 | 0 | 0 | 0 | 0 | 5 |
| Common chickweed | 0 | 1 | 0 | 210 | 2 | 1 | 0 | 3 | 0 | 0 | 1 | 2 |
| Common wheat | 2 | 0 | 1 | 1 | 53 | 5 | 1 | 0 | 2 | 0 | 0 | 8 |
| Fat hen | 0 | 0 | 2 | 1 | 0 | 154 | 0 | 0 | 0 | 0 | 2 | 1 |
| Loose silky-bent | 35 | 1 | 1 | 0 | 5 | 9 | 185 | 1 | 0 | 0 | 3 | 5 |
| Maize | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 77 | 0 | 0 | 0 | 1 |
| Scentless mayweed | 3 | 3 | 1 | 7 | 0 | 1 | 0 | 2 | 155 | 1 | 0 | 5 |
| Shepherd’s purse | 0 | 3 | 0 | 2 | 0 | 1 | 0 | 0 | 1 | 52 | 0 | 2 |
| Small-flowered cranesbill | 0 | 2 | 2 | 0 | 1 | 1 | 0 | 0 | 2 | 0 | 176 | 2 |
| Sugar beet | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 2 | 0 | 0 | 3 | 132 |
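Per-class precision, recall, and F1 score can be derived directly from a confusion matrix. The sketch below uses the second matrix above and assumes that rows are actual classes and columns are predicted classes, in the class order of the performance table. That orientation is inferred, not stated in the source; it is the reading under which the results match the reported color-image values. The names `CLASSES`, `CONFUSION`, and `per_class_metrics` are illustrative.

```python
CLASSES = [
    "Black-grass", "Charlock", "Cleavers", "Common chickweed",
    "Common wheat", "Fat hen", "Loose silky-bent", "Maize",
    "Scentless mayweed", "Shepherd's purse",
    "Small-flowered cranesbill", "Sugar beet",
]

# Second confusion matrix above; rows assumed actual, columns assumed predicted.
CONFUSION = [
    [46, 0, 0, 0, 4, 4, 44, 0, 0, 0, 2, 5],
    [1, 130, 2, 0, 1, 2, 0, 0, 0, 0, 0, 2],
    [0, 6, 99, 0, 1, 3, 0, 0, 0, 0, 0, 5],
    [0, 1, 0, 210, 2, 1, 0, 3, 0, 0, 1, 2],
    [2, 0, 1, 1, 53, 5, 1, 0, 2, 0, 0, 8],
    [0, 0, 2, 1, 0, 154, 0, 0, 0, 0, 2, 1],
    [35, 1, 1, 0, 5, 9, 185, 1, 0, 0, 3, 5],
    [0, 0, 0, 0, 1, 0, 0, 77, 0, 0, 0, 1],
    [3, 3, 1, 7, 0, 1, 0, 2, 155, 1, 0, 5],
    [0, 3, 0, 2, 0, 1, 0, 0, 1, 52, 0, 2],
    [0, 2, 2, 0, 1, 1, 0, 0, 2, 0, 176, 2],
    [0, 1, 0, 0, 0, 1, 0, 2, 0, 0, 3, 132],
]

def per_class_metrics(matrix):
    """Return a (precision, recall, f1) tuple for each class of a square matrix."""
    n = len(matrix)
    results = []
    for k in range(n):
        tp = matrix[k][k]
        fn = sum(matrix[k]) - tp                       # rest of the actual-class row
        fp = sum(matrix[r][k] for r in range(n)) - tp  # rest of the predicted column
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        results.append((precision, recall, f1))
    return results

metrics = per_class_metrics(CONFUSION)
for name, (p, r, f) in zip(CLASSES, metrics):
    print(f"{name:26s} precision={p:.2f} recall={r:.2f} f1={f:.2f}")
```

Most of the Black-grass errors fall in the Loose silky-bent column and vice versa, which is why these two classes have the lowest scores.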

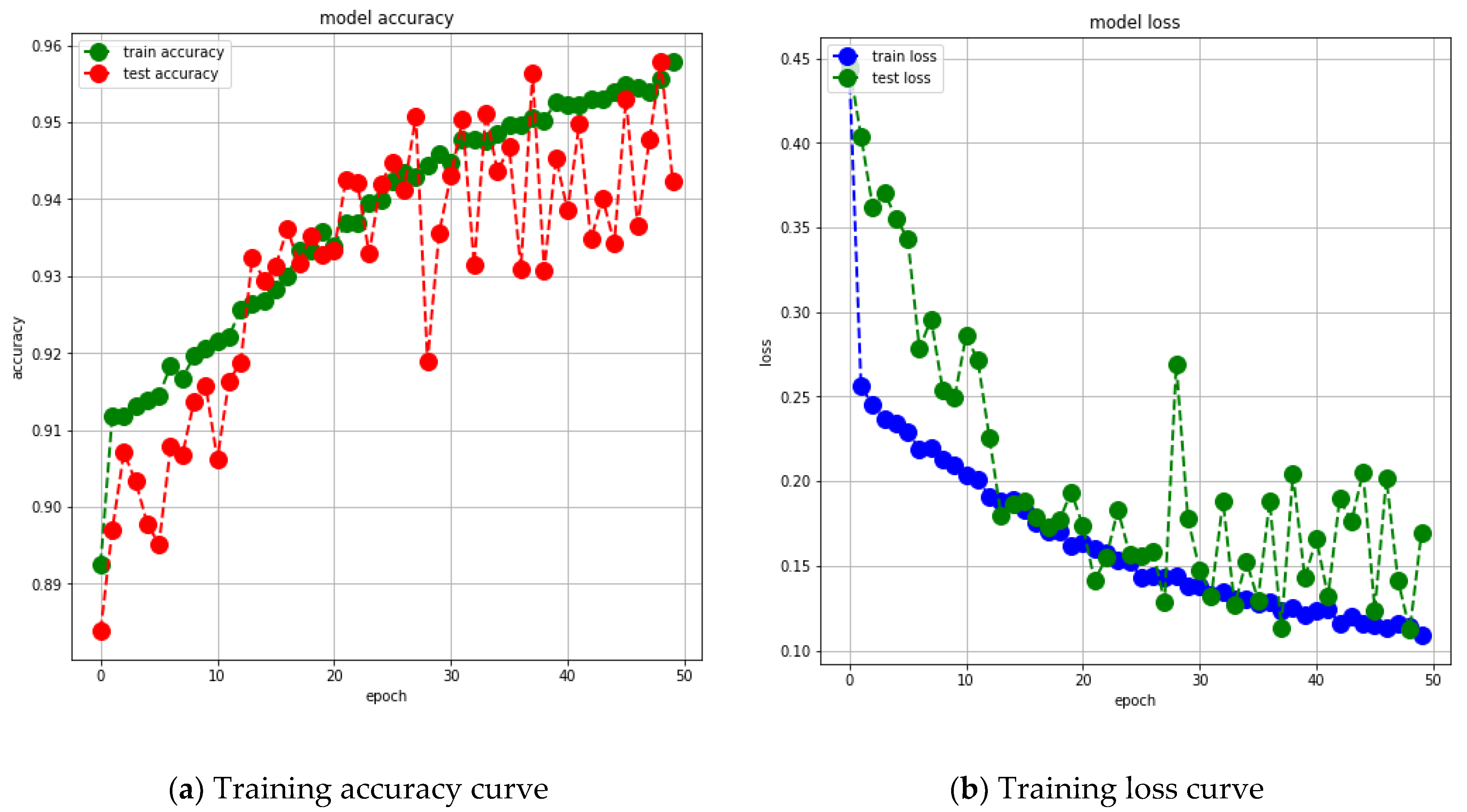

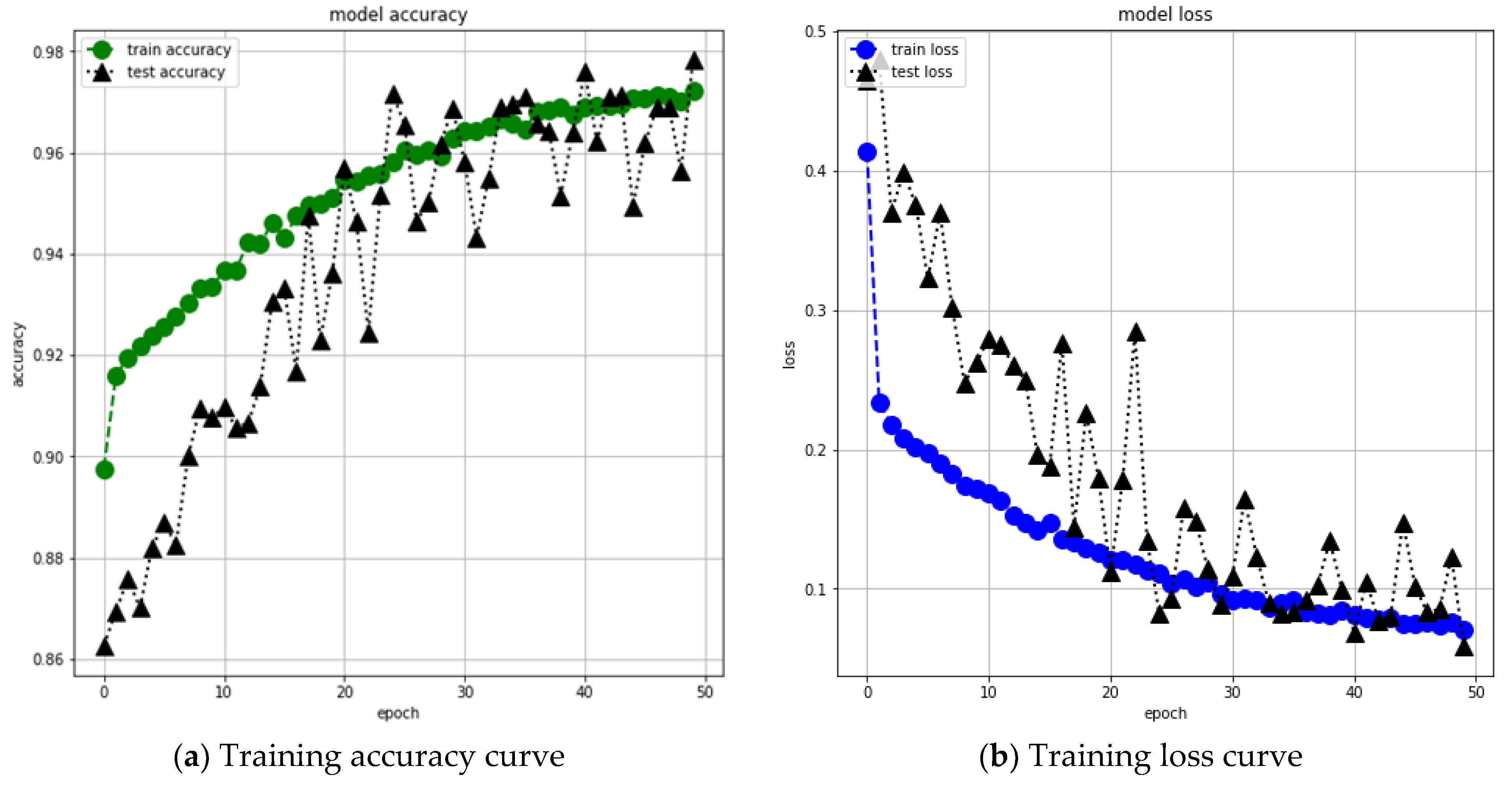

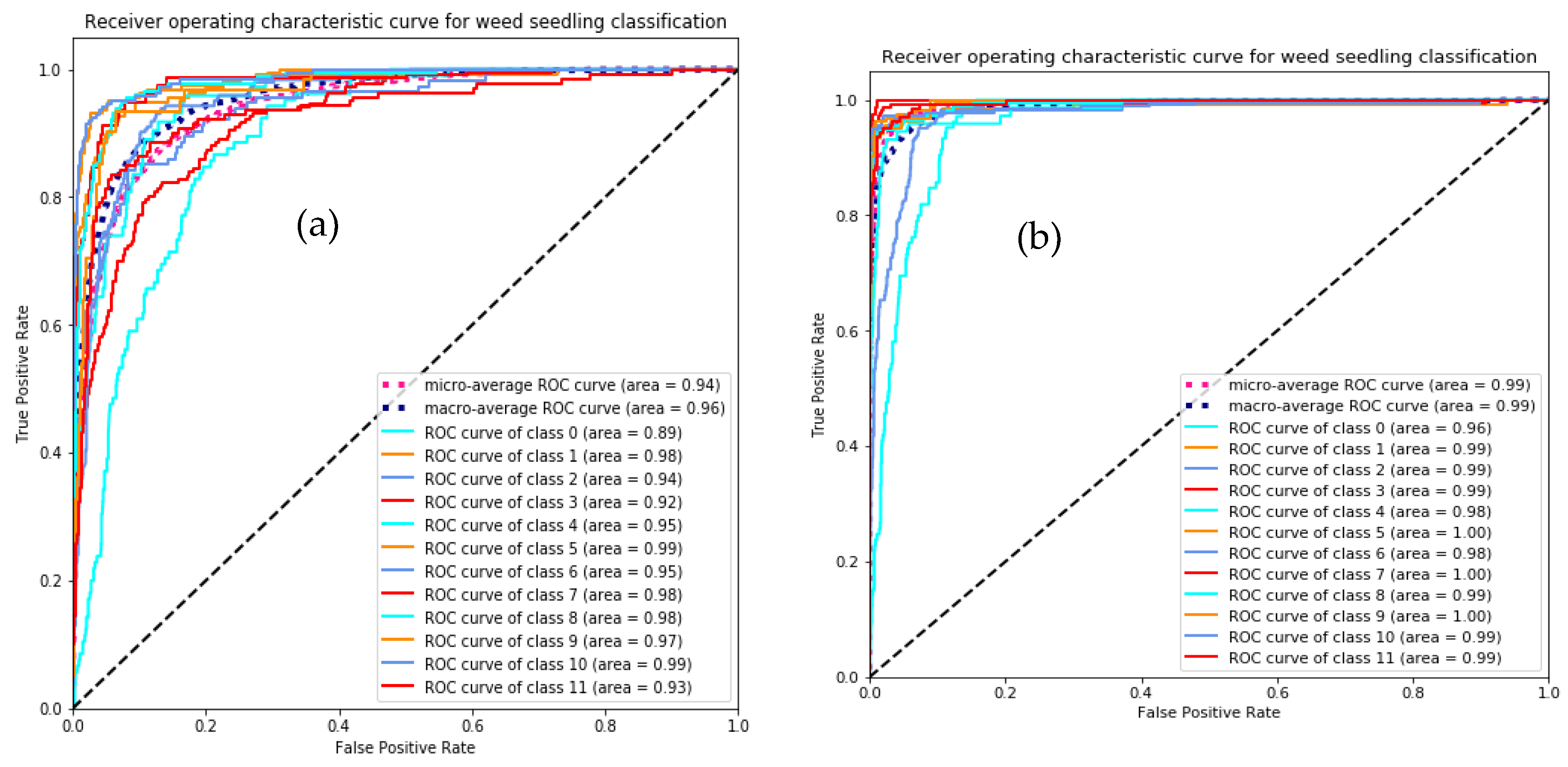

| Class | Grayscale Segmented Weed Images: Precision | Grayscale: Recall | Grayscale: F1 Score | Color Segmented Weed Images: Precision | Color: Recall | Color: F1 Score |
|---|---|---|---|---|---|---|
| Black-grass | 0.33 | 0.13 | 0.19 | 0.53 | 0.44 | 0.48 |
| Charlock | 0.63 | 0.89 | 0.74 | 0.88 | 0.94 | 0.91 |
| Cleavers | 0.61 | 0.65 | 0.63 | 0.92 | 0.87 | 0.89 |
| Common chickweed | 0.74 | 0.46 | 0.57 | 0.95 | 0.95 | 0.95 |
| Common wheat | 0.31 | 0.85 | 0.45 | 0.78 | 0.73 | 0.75 |
| Fat hen | 0.86 | 0.84 | 0.85 | 0.85 | 0.96 | 0.9 |
| Loose silky-bent | 0.76 | 0.61 | 0.68 | 0.8 | 0.76 | 0.78 |
| Maize | 0.56 | 0.82 | 0.67 | 0.91 | 0.97 | 0.94 |
| Scentless mayweed | 0.91 | 0.47 | 0.62 | 0.97 | 0.87 | 0.92 |
| Shepherd’s purse | 0.64 | 0.41 | 0.5 | 0.98 | 0.85 | 0.91 |
| Small-flowered cranesbill | 0.94 | 0.72 | 0.82 | 0.94 | 0.95 | 0.94 |
| Sugar beet | 0.46 | 0.85 | 0.6 | 0.78 | 0.95 | 0.85 |
| Average | 0.7 | 0.64 | 0.64 | 0.86 | 0.87 | 0.86 |
| Test Loss | 0.169 | | | 0.059 | | |
| Test Accuracy | 0.942 | | | 0.978 | | |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tiwari, S.; Sharma, A.K.; Jain, A.; Gupta, D.; Gono, M.; Gono, R.; Leonowicz, Z.; Jasiński, M. IOT-Enabled Model for Weed Seedling Classification: An Application for Smart Agriculture. AgriEngineering 2023, 5, 257-272. https://doi.org/10.3390/agriengineering5010017
