Autonomous Vineyard Tracking Using a Four-Wheel-Steering Mobile Robot and a 2D LiDAR

Abstract

1. Introduction

2. Materials and Methods

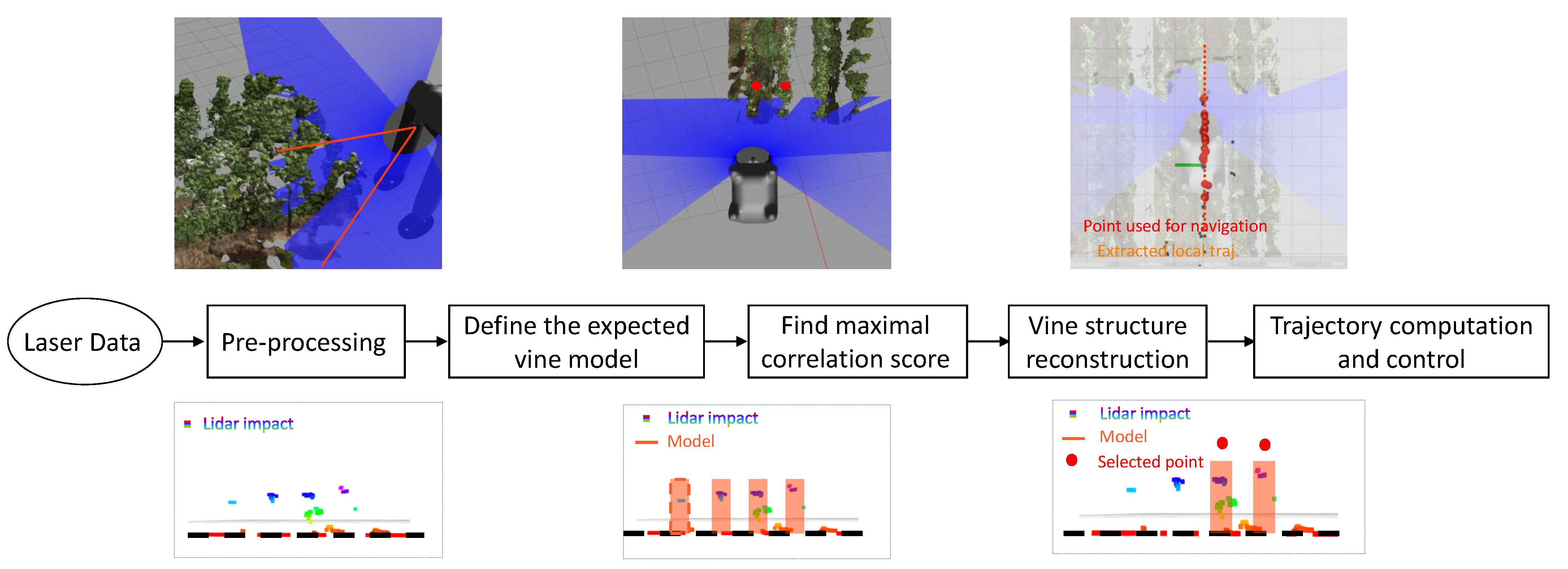

2.1. Row Detection Strategy

2.1.1. Pre-Processing

2.1.2. Detection Model Definition

2.1.3. Correlation Score Definition

- the correlation score for the offset k between the LiDAR data and the template;

- z represents the LiDAR measurements on the Z-axis;

- m represents the values of the model data;

- the mean values of the LiDAR Z-axis data and of the model data, respectively.
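The quantities listed above are the ingredients of a zero-mean normalized cross-correlation. The following minimal sketch assumes that standard form, since the original equation is not reproduced in this text; it may differ from the authors' exact expression. The function names `correlation_score` and `best_offset`, the rectangular template, and the sample scan are hypothetical and serve only as illustration.

```python
import math


def correlation_score(z, m, k):
    """Zero-mean normalized cross-correlation of template m with scan z at offset k.

    Assumed standard form (not taken from the paper):
    sum((z - z_mean) * (m - m_mean)) / sqrt(sum((z - z_mean)^2) * sum((m - m_mean)^2))
    evaluated over the window of z that the template covers at offset k.
    """
    window = z[k:k + len(m)]
    if k < 0 or len(window) != len(m):
        raise ValueError("template does not fit inside the scan at this offset")
    z_mean = sum(window) / len(window)
    m_mean = sum(m) / len(m)
    num = sum((zi - z_mean) * (mi - m_mean) for zi, mi in zip(window, m))
    den = math.sqrt(sum((zi - z_mean) ** 2 for zi in window)
                    * sum((mi - m_mean) ** 2 for mi in m))
    # A flat window or flat template carries no shape information: score 0.
    return num / den if den > 0.0 else 0.0


def best_offset(z, m, threshold):
    """Return (offset, score) of the best match, or None if it is below threshold."""
    scores = [correlation_score(z, m, k) for k in range(len(z) - len(m) + 1)]
    if not scores:
        return None
    k_best = max(range(len(scores)), key=lambda k: scores[k])
    return (k_best, scores[k_best]) if scores[k_best] >= threshold else None


# Hypothetical Z-axis scan containing one raised segment, and a pulse-shaped template.
scan = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0]
template = [0.0, 1.0, 1.0, 1.0, 0.0]
print(best_offset(scan, template, 0.25))
```

In this example the template matches the scan exactly at offset 2, so that offset is selected. The threshold of 0.25 mirrors the correlation threshold listed in the settings table; a scan with no structure (all values equal) yields no detection.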

2.1.4. Vine Structure Reconstruction

- the lateral deviation of the retrieved point from the newest set-point, expressed in the robot frame;

- the yaw of the robot computed from the odometry;

- the X and Y coordinates of the retrieved point;

- the X and Y coordinates of the previous aggregated set-point.
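A lateral deviation expressed in the robot frame can be obtained by rotating the world-frame offset between the two points by the robot yaw and keeping its Y component. The sketch below assumes this standard planar frame change, with X pointing forward and Y to the left of the robot; the original equation is not reproduced in this text, so the sign convention and the function name `lateral_deviation` are assumptions.

```python
import math


def lateral_deviation(x_p, y_p, x_s, y_s, yaw):
    """Lateral (robot-frame Y) offset of point (x_p, y_p) from set-point (x_s, y_s).

    Assumed convention: yaw is measured counter-clockwise from the world X-axis,
    and positive deviations lie to the left of the robot heading.
    """
    dx = x_p - x_s
    dy = y_p - y_s
    # Second row of the world-to-robot rotation applied to (dx, dy).
    return -dx * math.sin(yaw) + dy * math.cos(yaw)


# Robot aligned with the world X-axis: the deviation is the plain Y offset.
print(lateral_deviation(1.0, 0.3, 0.0, 0.0, 0.0))
```

A retrieved point whose deviation from the previous aggregated set-point is large could then be treated as an outlier; the acceptance criterion itself is not given in this text.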

2.1.5. Trajectory Computation and Input Control

- y: the lateral deviation with respect to the reference trajectory;

- a and b: the slope and intercept, respectively, computed by the least-squares method;

- the coordinates of the robot computed through the system odometry;

- the angular deviation;

- the yaw of the robot computed through the system odometry.
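The definitions above suggest a reference line fitted to the aggregated set-points, from which the two tracking errors follow. The sketch below is an illustration under stated assumptions, not the authors' formulation: it uses an ordinary least-squares fit of y = a·x + b, a signed point-to-line distance for the lateral deviation, and the difference between the robot yaw and the line heading for the angular deviation. The sign conventions and the names `fit_line` and `tracking_errors` are hypothetical.

```python
import math


def fit_line(points):
    """Ordinary least-squares fit of y = a * x + b to a list of (x, y) points."""
    n = len(points)
    sx = sum(x for x, _ in points)
    sy = sum(y for _, y in points)
    sxx = sum(x * x for x, _ in points)
    sxy = sum(x * y for x, y in points)
    denom = n * sxx - sx * sx
    if denom == 0:
        raise ValueError("points are vertically aligned or too few to fit a slope")
    a = (n * sxy - sx * sy) / denom
    b = (sy - a * sx) / n
    return a, b


def tracking_errors(a, b, x_r, y_r, yaw):
    """Lateral and angular deviation of the robot pose from the line y = a * x + b.

    Assumed conventions: the lateral deviation is positive when the robot is on
    the +Y side of the line, and the line is travelled toward increasing X.
    """
    lateral = (y_r - (a * x_r + b)) / math.sqrt(1.0 + a * a)
    angular = yaw - math.atan(a)
    # Wrap the angular deviation to [-pi, pi).
    angular = (angular + math.pi) % (2.0 * math.pi) - math.pi
    return lateral, angular


# Hypothetical set-points lying on the line y = 1.
a, b = fit_line([(0.0, 1.0), (1.0, 1.0), (2.0, 1.0)])
print(tracking_errors(a, b, 0.5, 1.5, 0.1))
```

Here the robot sits 0.5 m to the side of the fitted line and is rotated 0.1 rad away from it. A slope-intercept fit degenerates for rows parallel to the Y-axis, which is why `fit_line` rejects that case.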

2.2. Motion Control

2.2.1. Mobile Robot Modeling

2.2.2. Control Strategy 1: Backstepping Position/Orientation Control

- Step 1: Computation of the target angular deviation

- Step 2: Control law for the front steering angle

- Step 3: Control law for the rear steering angle

2.2.3. Control Strategy 2: Lateral Errors Regulation

- Step 1: Modeling of the tracking errors

- Step 2: Control law for the rear steering angle

- Step 3: Control law for the front steering angle

3. Results

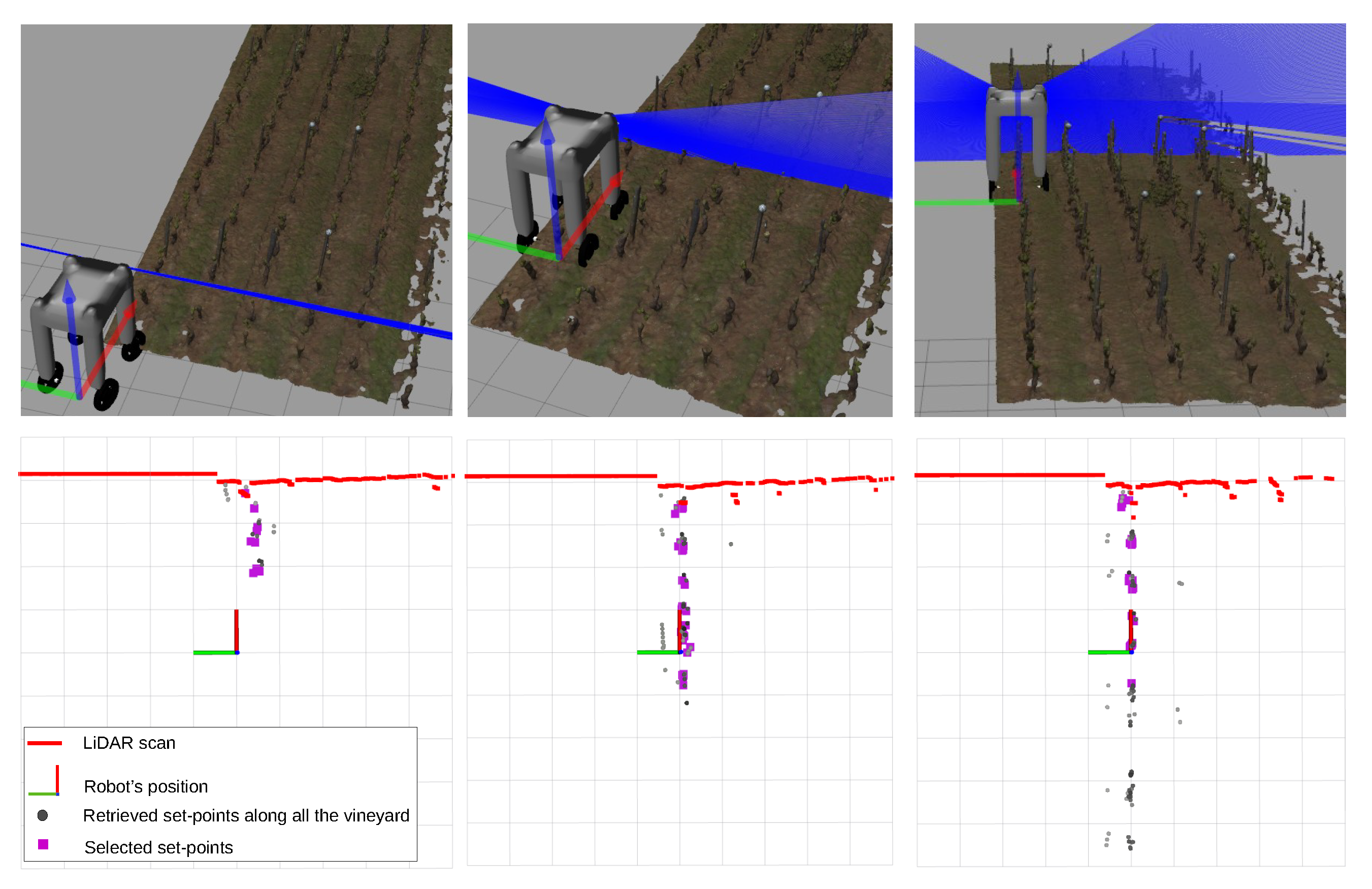

3.1. Simulation Testbed—Digitized Experimental Vineyard with Terrain Variability

3.2. Validation on Summer Vineyard

3.3. Validation on Winter Vineyard

3.4. Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Settings/Scenario | Vine model: width (index) | Vine model: height (m) | Vine model: corr. threshold | LiDAR position: x (m) | LiDAR position: y (m) | LiDAR position: z (m) | | Control parameters: Strategy 1 | Control parameters: Strategy 2 |
|---|---|---|---|---|---|---|---|---|---|
| Summer Vineyard | 10.0 | 1.0 | 0.25 | 1.0 | 0 | 2.0 | 45 | = −0.9 = 1.0 = 1.0 | = 0.35 = 0.35 |
| Winter Vineyard | 5.0 | 1.0 | 0.25 | 1.0 | 0 | 2.0 | 45 | = −0.9 = 1.0 = 1.0 | = 0.35 = 0.35 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Iberraken, D.; Gaurier, F.; Roux, J.-C.; Chaballier, C.; Lenain, R. Autonomous Vineyard Tracking Using a Four-Wheel-Steering Mobile Robot and a 2D LiDAR. AgriEngineering 2022, 4, 826-846. https://doi.org/10.3390/agriengineering4040053
