1. Introduction

Climate change has become an urgent issue that must be addressed without delay in all human activities, as numerous studies [1] show the necessity of reducing environmental impacts. While all sectors of activity must make considerable efforts, agriculture is particularly important in the fight against global warming [2]. Current agricultural practices indeed use a considerable amount of pesticides and chemicals that have a non-negligible impact on the environment. Even though such practices have made it possible to increase yields and production levels to feed a growing worldwide population [3], it has become crucial to develop alternative practices that avoid the use of chemicals as well as soil degradation. To move toward environment-friendly production, several new agricultural practices have been proposed, such as precision agriculture (PA) or organic farming [4]. Such new routes can be gathered under the concept of agroecology [5], which can be viewed as the study of ecological processes applied to agricultural production systems. It consists of mixing different species and crops in the same area in order to preserve the soil and protect vegetation without using chemicals. These new ways of farming nevertheless require frequent treatments and regular monitoring of crops. Agricultural operations are moreover more complex, as several kinds of crops, with different seasonalities, have to be managed separately in the same area. As a result, the rise of agroecology requires increased manpower, even though the work to be done is harsh and painful. Farming jobs therefore appear less and less attractive, leading to a lack of manpower [6]. The (r)evolution of agricultural practices thus requires the development of new tools that work with a high level of precision without requiring hard human labor.

As has been observed in industry, robotics appears to be a promising solution for performing these accurate, difficult and repetitive operations in the field [7]. Several autonomous platforms have thus been marketed in agriculture to address both the arduousness of the work and the changing practices [8]. Transferring robots from an industrial background to such natural environments is nevertheless not straightforward, as the environment is weakly structured, with changing contexts and tasks, which deeply affects the perception and control of mobile robots operating off-road [9]. For autonomous navigation, considerable efforts have been made to preserve the accuracy of robots in accordance with agricultural requirements (a few centimeters) in different contexts (absolute path tracking, row following, etc.). Robust approaches are often viewed as a solution, treating variations in grip conditions as a bounded perturbation to be rejected [10]. Such approaches have been successfully applied to off-road path tracking [11] but are hardly usable in agriculture due to the chattering effect, which may degrade the quality of the work performed by an implement. The complexity of modeling mobile robot dynamics and of dealing with changing perception conditions leads us to consider learning-based approaches, such as in [12] for control or in [13] for row detection. Nevertheless, such approaches require, on the one hand, collecting a large amount of data or having a realistic simulation framework. On the other hand, they lose the deterministic properties that make it possible to guarantee the stability of the robot's behavior.

Adaptive approaches account for variations in robot dynamics, especially in the framework of path tracking. Ref. [14] proposes an adaptive approach based on optimal control, while, in our previous work [15], a dynamic observer is designed to achieve accurate trajectory following on different kinds of soil and at different speeds. Such an adaptation, coupled with predictive layers [16], makes it possible to preserve an accuracy of a few centimeters despite harsh dynamics and terrain variations. Nevertheless, these approaches are often limited to one application, such as path following using an absolute and accurate localization. A key challenge in agricultural robotics is not limited to autonomous navigation, as robots need to interact with vegetation and soil. As a result, a robot also has to reference its position with respect to the crops when it is in the field, while navigating without visible landmarks when performing a half-turn or traveling between the farm and the field. Performing a complete agricultural task with a robot therefore requires several types of perception and algorithms, depending on the context, the task to be performed and the actual situation.

As a consequence, the adaptation of the robot must be extended to switching between several behaviors (such as trajectory following, target tracking, structure following, etc.) in order to address the complexity of agricultural operations [17]. A natural way to manage successive tasks lies in off-line planning, which defines the overall robotic mission [18]. While this initial planning is an important element for achieving complex scenarios, it first requires a semantic representation of the environment [19], which is not always easy to obtain. Second, the uncertainty and variability of crop growth, or the possibility of encountering unexpected situations, require the robot to adapt itself to the situation. As a result, on-line switching between several control modes must be developed. Behavior-based control approaches, such as those proposed in [20] for off-road navigation, also constitute a possible way. Nevertheless, the coexistence of several control actions, potentially contradictory, may lead to inaccuracies or oscillations. In an agricultural task, this may quickly lead to crop destruction, depending on the situation and the on-board implement.

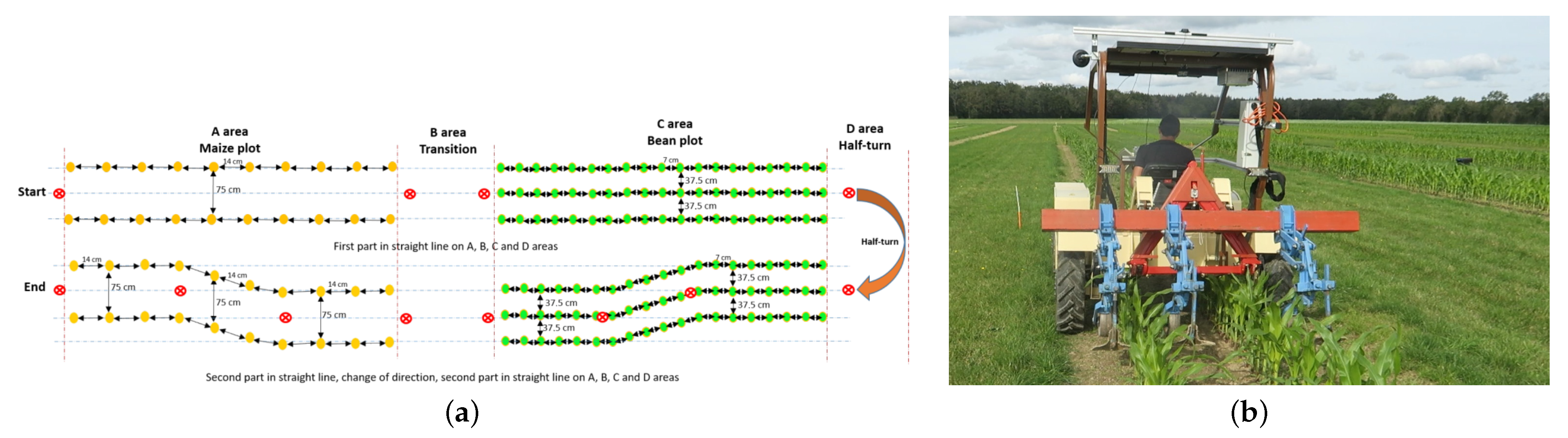

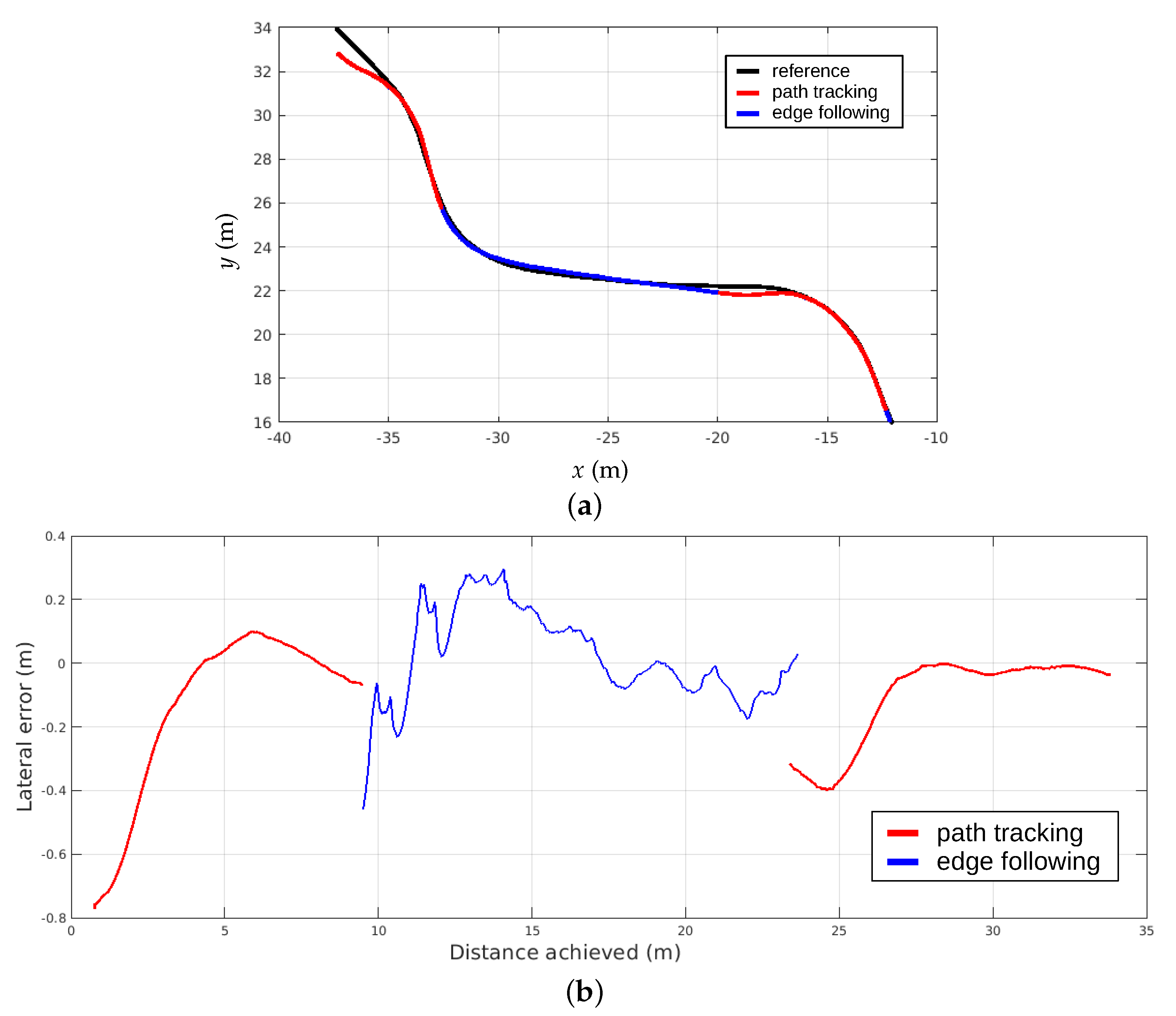

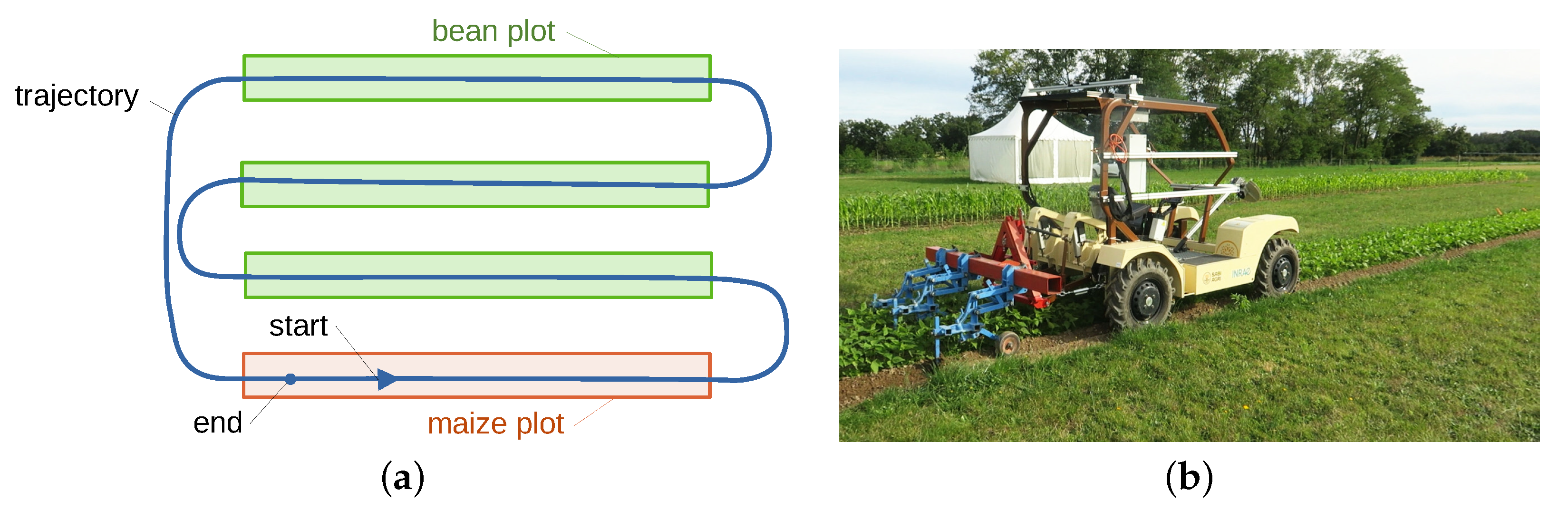

In this paper, we propose a global strategy for switching between different perception and control approaches on an electric tractor designed to be automated, depicted in Figure 1. Three main behaviors are described in this paper: path tracking, edge following and furrow pursuing. The proposed approach consists of automatically switching between these behaviors depending on the situation, i.e., on the result of Lidar detection with respect to an expected crop shape. The algorithm relies on a predefined planned trajectory, which is followed when no crops are detected. Three criteria are proposed to perform the selection depending on the relevance of the detection. Such a strategy makes it possible to select the best behavior without requiring a semantic map or a huge robotic mission planning description. The effectiveness of the proposed approach is evaluated in full-scale experiments on a testbed with three environments. The first simulates autonomous navigation in a vineyard, switching between path tracking and edge following. The second and the third involve the autonomous weeding of a field with different kinds of crops (maize and beans) that have not been sown in straight lines. This testbed was set up in the framework of the "Acre" challenge of the METRICS project [21]. Results show the ability to select the expected behavior satisfactorily, keeping an accuracy within a few centimeters despite changing vegetation. This validates the ability to autonomously perform a highly accurate and complete field operation, with the robot positioned with respect to the crops when needed.

The paper is organized as follows. First, the global approach is described and the different behaviors are briefly presented, which yields three possible approaches to select from depending on the situation. In the second part, the switching strategy is detailed, using the criteria proposed in the paper; it makes it possible to decide on the appropriate behavior depending on the robot state and the perception of the environment. The experimental results are then discussed in the third section. The experiments are based on two use cases: orchard navigation and open-field weeding. The results allow us to conclude on the effectiveness of the proposed algorithm for performing complex agricultural tasks with a fully autonomous robot.

2. Definition of Elementary Robotics Behaviors

In this paper, a global adaptation strategy to select the behavior of an agricultural robot is proposed in order to perform complete operations depending on the encountered situation. As mentioned previously, we consider that the diversity of robotic tasks involved in a field treatment cannot be handled by a single perception-control approach, as the robot must move within the farm, travel to the field, track and interact with rows of plants, perform maneuvers, etc. To give farming robots a high level of versatility, an algorithm dedicated to switching between different elementary behaviors is proposed. Before detailing this architecture, let us first describe the three elementary approaches considered in this paper for performing farming operations:

Path tracking. This first approach consists of following a known trajectory (planned or previously learned) in an absolute frame. This provides absolute autonomous navigation without relying on any visible reference. This behavior can be used to perform maneuvers, travel between fields or move through the farm. It requires access to GNSS information or to an absolute localization system.

Edge following. This second behavior is dedicated to moving relative to an existing structure using a horizontal 2D Lidar. It selects impact points to derive a relative trajectory to be followed with an offset. It is used to follow structures in indoor environments (such as walls or fences in farm buildings) or in fields (such as tree rows in orchards or vineyards).

Furrow pursuing. This third approach consists of finding an expected shape on the ground using an inclined 2D Lidar. The objective is to recognize a ground shape (such as footprints or crops) in the Lidar frame. Accumulating the detected points as the robot moves makes it possible to define a local trajectory to be followed.

In the following, the path tracking behavior is considered the default behavior and is used when no specialized behavior is available. The other two are considered specialized behaviors and are used when the elements to be observed are detected. They are selected in priority when plants are detected, as the objective of agricultural work is to move with respect to the plants. The aim of the algorithm is to favor the specialized behaviors while maintaining consistent control. Before describing the selection algorithm in Section 3, let us detail the three elementary behaviors mentioned above.

2.1. Path Tracking

The first elementary behavior investigated in this paper relies on following an absolute trajectory. To this end, let us consider the robot moving on a plane, with a longitudinal axis of symmetry with respect to the middle of the rear axle. Under these assumptions, the robot can be viewed as a bicycle model, such as the one depicted in Figure 2. The notation used in the following is reported in this figure. The point to be controlled is the middle of the rear axle, O. The objective is to make it converge to the reference trajectory. While the velocity is assumed to be controlled independently, the speed vector v of point O is considered a known variable. The position and velocity of O in the world frame are computed using an extended Kalman filter that merges the odometry of the robot, an inertial measurement unit (IMU) and a real-time kinematic (RTK) global navigation satellite system (GNSS). The robot is modeled in a Frénet frame located at the point of the reference trajectory closest to O. At this point, one can define:

- s, the curvilinear abscissa;
- y, the tracking error;
- the angular deviation, defined as the difference between the robot heading and the tangent to the trajectory at point s.

Figure 2.

Extended kinematic model of the robot for path following purposes.


In order to account for the poor grip conditions encountered in the off-road context, we also define two variables representing the fact that the rolling-without-sliding condition is not satisfied. Two sideslip angles are therefore introduced, for the front and the rear wheels, respectively. They represent the difference between the orientation of each wheel and the orientation of its actual speed vector. These two sideslip angles cannot be measured directly. As a result, an observer is used, as detailed in [22], to estimate them satisfactorily. Using these notations, one can derive the extended kinematic model (1), which gives the evolution of the state variables (the tracking error and the angular deviation) with respect to the curvilinear abscissa s. This model involves the yaw rate of the robot, the curvature of the reference trajectory at s, and the robot wheelbase L. Derivatives are taken with respect to the curvilinear abscissa rather than time, which yields a convergence in distance instead of time, so that the robot control is independent of the speed.
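As a reference point, a form commonly used for this kind of extended kinematic bicycle model is sketched below. The notation is our own assumption: θ̃ the angular deviation, β_F and β_R the front and rear sideslip angles, δ_F the front steering angle, c(s) the curvature of the reference trajectory, ω the yaw rate and the prime the derivative with respect to s. It follows from ṡ = v cos(θ̃ + β_R) / (1 − c(s) y), ẏ = v sin(θ̃ + β_R), and the rigid-body relation between the rear and front wheel velocities.

```latex
\begin{aligned}
y' &= \bigl(1 - c(s)\,y\bigr)\tan\bigl(\tilde{\theta} + \beta_R\bigr),\\
\tilde{\theta}' &= \frac{\bigl(1 - c(s)\,y\bigr)\,\omega}{v\cos\bigl(\tilde{\theta} + \beta_R\bigr)} - c(s),\\
\omega &= \frac{v}{L}\Bigl[\cos\beta_R\,\tan\bigl(\delta_F + \beta_F\bigr) - \sin\beta_R\Bigr].
\end{aligned}
```

Once ω is substituted, v cancels in the second row as well, which is why the convergence can be expressed in distance rather than time.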

Once the state vector is known, the objective of path tracking is to compute the steering angle. This is achieved with a backstepping approach composed of two steps.

2.1.1. Step 1: Optimal Orientation Computation

As the objective is to ensure the convergence of the tracking error y to zero, one can define a differential equation imposing this behavior: an exponential decay of y with respect to the curvilinear abscissa, whose gain defines the exponential convergence distance. By injecting the expression in (1) into this condition, one can obtain the target orientation. If the relative orientation of the robot is equal to this target, then the differential equation is satisfied and the lateral error converges to zero. The second step then consists of finding the steering angle that imposes the convergence of the angular deviation to this target orientation.
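Step 1 can be worked out explicitly under an assumed notation: θ̃ the angular deviation, θ̃_d its target, β_R the rear sideslip angle, c(s) the curvature, and k_y > 0 a hypothetical name for the lateral gain. We also assume the first row of the kinematic model has the usual form y' = (1 − c(s) y) tan(θ̃ + β_R).

```latex
y' = -k_y\, y
\quad\text{and}\quad
y' = \bigl(1 - c(s)\,y\bigr)\tan\bigl(\tilde{\theta}_d + \beta_R\bigr)
\;\;\Longrightarrow\;\;
\tilde{\theta}_d = -\arctan\!\left(\frac{k_y\, y}{1 - c(s)\,y}\right) - \beta_R
```

The solution y(s) = y(0) exp(−k_y s) makes 1/k_y the characteristic convergence distance, and the inversion requires 1 − c(s) y > 0, i.e., the robot must not be at the center of curvature of the trajectory.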

2.1.2. Step 2: Steering Angle Control

As mentioned, the second step consists of ensuring the convergence of the angular deviation to its target. Let us define the angular error as the difference between the angular deviation and its target value. A condition to ensure this convergence is to impose an exponential decay of this error with respect to the curvilinear abscissa, which constitutes condition (4). Let us substitute the second row of the kinematic model in (1) into this condition and consider that the variations of the target orientation can be neglected with respect to the angular deviation control. This yields the yaw rate to be imposed on the robot to ensure the condition in (4). By considering the expression of the yaw rate as a function of the steering angle, one can directly derive the control law for the steering angle.

This control expression gives the steering angle to be sent to the robot so that (4) holds and, finally, the tracking error y converges to zero. A condition to be met is that the convergence distance for the angular deviation is shorter than the one imposed for the lateral error. This can be ensured by choosing the angular gain larger than the lateral gain. In addition to this control expression, it has been proposed in [22] to apply a predictive layer on the curvature servoing, in order to compensate for low-level delay and settling time. In practice, an anticipation effect can also be obtained by feeding the control law forward with the future curvature of the trajectory, evaluated at a prediction distance ahead of the robot. As the robot moves at low speed, this second solution is applied in this paper, considering that the settling time of the actuator is equal to T. The control expression can then finally be rewritten as (7).

The same control law is applied in the forthcoming strategy. Nevertheless, the lack of accuracy and the noise of the other sensors do not allow the sideslip angles to be observed satisfactorily. As a result, in the other behaviors, both sideslip angles are neglected and set to zero in the control expression in (7).
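The two backstepping steps and the curvature feed-forward can be gathered in a short function. This is a minimal sketch under assumed forms of the model and control law (those commonly used for extended kinematic bicycle models, not necessarily identical term by term to Equations (1) to (7)): Step 2 imposes an exponential decay of the angular error with a gain `k_theta`, the variation of the target orientation is neglected, and the feed-forward curvature is evaluated a distance v·T ahead. All names (`steering_angle`, `k_y`, `k_theta`, `curvature`) are hypothetical; the sideslip angles default to zero, as in the specialized behaviors.

```python
import math
from typing import Callable


def steering_angle(y: float, theta_tilde: float, s: float, v: float,
                   curvature: Callable[[float], float], wheelbase: float,
                   k_y: float, k_theta: float, settling_time: float = 0.0,
                   beta_f: float = 0.0, beta_r: float = 0.0) -> float:
    """Hypothetical backstepping steering law (radians). Choose k_theta > k_y."""
    c = curvature(s)                              # curvature at the Frenet projection of O
    c_ahead = curvature(s + v * settling_time)    # feed-forward curvature, v*T ahead
    alpha = 1.0 - c * y                           # must stay > 0
    # Step 1: target angular deviation imposing y' = -k_y * y
    theta_target = -math.atan(k_y * y / alpha) - beta_r
    # Step 2: yaw rate (divided by v) imposing e' = -k_theta * e, e = theta_tilde - theta_target
    error = theta_tilde - theta_target
    omega_over_v = math.cos(theta_tilde + beta_r) / alpha * (c_ahead - k_theta * error)
    # Invert omega = v * (cos(beta_r) * tan(delta + beta_f) - sin(beta_r)) / L
    return math.atan(wheelbase * omega_over_v / math.cos(beta_r) + math.tan(beta_r)) - beta_f
```

With these sign conventions (y positive to the left), a robot 0.5 m left of a straight reference gets a negative steering angle, and a robot exactly on a circle of curvature c gets arctan(L c), the steady-state steering of a bicycle model. Note that v only enters through the prediction distance, consistent with the distance-based formulation.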

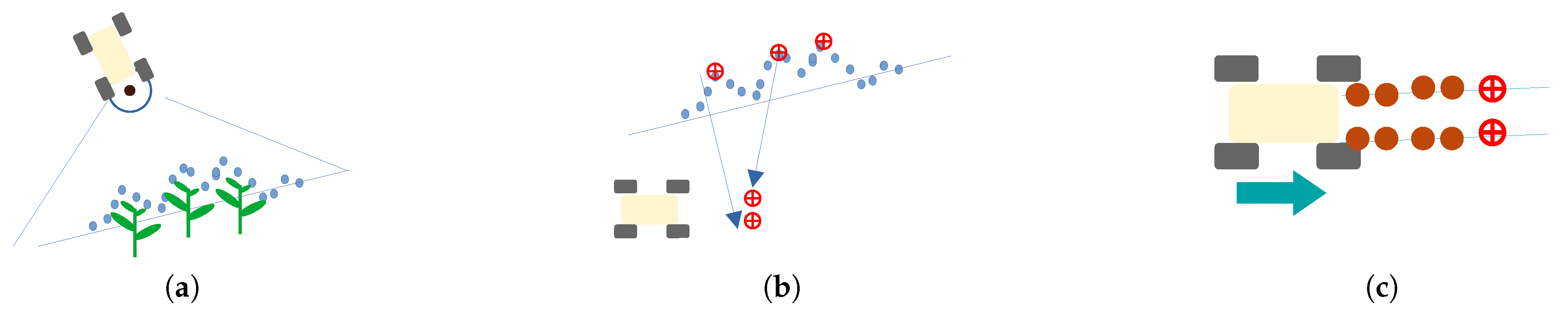

2.2. Edge Following

The aim of edge following is to follow a linear object such as a wall, a row of posts or a row of plants. The shape of the object to follow is detected using a horizontal 2D Lidar (Figure 3a). The Lidar perceives a set of range measurements for different angles at the front of the robot. These measurements are converted into 2D points on a plane parallel to the ground (Figure 3b). When the robot is close to the objects to follow, the Lidar points form one or several clusters on the left, on the right or on both sides of the robot. It is then possible to build a trajectory from the shape of the edge of these clusters.

The algorithm used to detect the shape is based on a circle of radius r that rolls along the cluster, as presented in Algorithm 1. If the cluster is on the right of the robot, the circle rolls on the left side of the cluster, and if the cluster is on the left of the robot, the circle rolls on the right side of the cluster. This makes it possible to build a chain of selected Lidar points such that the robot can traverse the observed area while following the object. The first point of the chain is selected by initializing the circle at the rear of the robot and translating it along an axis perpendicular to the robot's forward axis. The translation is performed progressively until a Lidar point touches the circle (Figure 4a). This point becomes the first point of the chain. To avoid initializing the chain from a point on the wrong side of the robot, only the points that touch a semicircle oriented in the direction of the translation are considered. The next point of the chain is found by progressively rotating the circle around the previous point of the chain until a new Lidar point touches the circle (Figure 4b). This new point is then added to the chain. The rotation of the circle is repeated until a rotation-angle limit or a chain-length limit is reached (Figure 4c).

Algorithm 1: Building the edge chain.
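The rolling-circle procedure described above can be sketched in Python as follows. This is a hypothetical rendering, not the authors' Algorithm 1: points are assumed to be given in the robot frame (x forward, y left) with the origin at the rear of the robot, the progressive translation and rotation are replaced by their closed-form contact conditions, the rotation limit is applied per pivot, and the chain length is measured as arc length. The function and parameter names are our own.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x forward, y left) in the robot frame, meters


def build_edge_chain(points: List[Point], r: float, cluster_on_right: bool,
                     max_rotation: float = math.pi / 2,
                     max_length: float = 10.0) -> List[Point]:
    side = -1.0 if cluster_on_right else 1.0  # lateral direction toward the cluster
    # 1) Translate the circle (center at the origin) toward the cluster. Point p is
    #    first touched after a shift t = side*p_y - sqrt(r^2 - p_x^2), i.e. on the
    #    leading semicircle; points already inside at t = 0 are ignored.
    best_t, first = math.inf, None
    for px, py in points:
        if abs(px) <= r:
            t = side * py - math.sqrt(r * r - px * px)
            if 0.0 <= t < best_t:
                best_t, first = t, (px, py)
    if first is None:
        return []
    chain, center, length = [first], (0.0, side * best_t), 0.0
    rot = side  # right cluster: clockwise (-1); left cluster: counterclockwise (+1)
    # 2) Rotate the circle around the last chain point until another point touches it.
    while True:
        pivot = chain[-1]
        a0 = math.atan2(center[1] - pivot[1], center[0] - pivot[0])
        best = (math.inf, None, None)  # (rotation angle, touched point, new center)
        for q in points:
            if q in chain:
                continue
            d = math.dist(pivot, q)
            if d == 0.0 or d > 2.0 * r or math.dist(center, q) < r - 1e-9:
                continue  # out of reach or already inside the circle
            # Centers at distance r from both the pivot and q.
            mx, my = (pivot[0] + q[0]) / 2.0, (pivot[1] + q[1]) / 2.0
            h = math.sqrt(max(r * r - (d / 2.0) ** 2, 0.0))
            nx, ny = -(q[1] - pivot[1]) / d, (q[0] - pivot[0]) / d
            for c in ((mx + h * nx, my + h * ny), (mx - h * nx, my - h * ny)):
                a = math.atan2(c[1] - pivot[1], c[0] - pivot[0])
                delta = (rot * (a - a0)) % (2.0 * math.pi)
                if 1e-9 < delta < best[0]:
                    best = (delta, q, c)
        delta, q, c = best
        if q is None or delta > max_rotation:
            break  # rotation-angle limit reached
        length += math.dist(pivot, q)
        if length > max_length:
            break  # chain-length limit reached
        chain.append(q)
        center = c
    return chain
```

Solving the contact analytically avoids choosing an angular step; a version closer to "progressively rotating" would instead test small angle increments and stop at the first point entering the circle.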

The generated chain of Lidar points is not directly used as a trajectory to follow. It is first approximated by a parabola, computed as a linear regression of a polynomial of degree two on the chain (Figure 4). The objective is to find the coefficients of the parabola (Equation (8)) that satisfy its equation for every point of the chain; the result is given by the least-squares solution of this regression. It is then possible to compute the lateral error y and the angular error of the robot position with respect to the parabola.

These errors are then used as inputs to the backstepping control approach presented in Section 2.1. However, this version of the control law does not include an estimation of sideslip angles.
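The parabola fit and the resulting errors can be illustrated with a short NumPy sketch. We assume the parabola is written y = a x² + b x + c in the robot frame (x forward, y left), that the robot reference point is the frame origin, and that the followed trajectory is the parabola shifted laterally by a signed `offset` (a simplification of a true offset curve). The lateral error is then minus the trajectory ordinate at x = 0, and the angular error minus its tangent angle there; these sign conventions and the function names are our assumptions.

```python
import math

import numpy as np


def fit_parabola(chain):
    """Least-squares fit of y = a*x**2 + b*x + c to the chain points (x forward, y left)."""
    pts = np.asarray(chain, dtype=float)
    x, y = pts[:, 0], pts[:, 1]
    design = np.column_stack((x ** 2, x, np.ones_like(x)))  # one row [x_i^2, x_i, 1] per point
    coeffs, *_ = np.linalg.lstsq(design, y, rcond=None)     # minimizes ||design @ coeffs - y||
    return coeffs  # array([a, b, c])


def parabola_errors(coeffs, offset=0.0):
    """Lateral and angular errors of a robot at the origin, heading along +x."""
    _, b, c = coeffs
    lateral_error = -(c + offset)   # robot ordinate (0) minus trajectory ordinate at x = 0
    angular_error = -math.atan(b)   # robot heading (0) minus tangent angle at x = 0
    return lateral_error, angular_error
```

For example, a chain lying exactly on y = 0.1 x² − 0.2 x − 1 recovers these coefficients, and with an offset of 1.0 the lateral error is zero while the angular error equals arctan(0.2).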

2.3. Furrow Pursuing

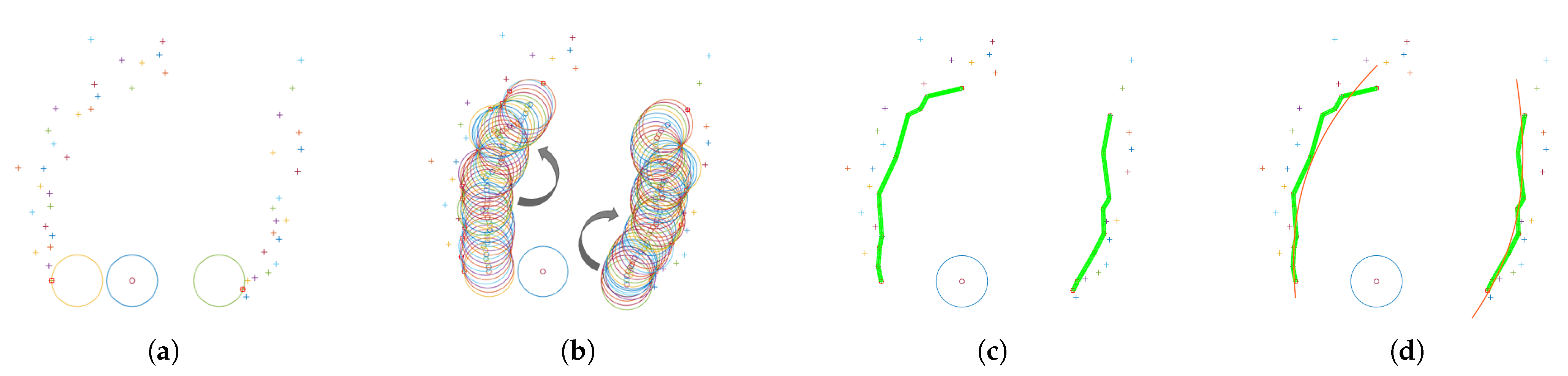

The aim of furrow pursuing is to follow a linear object such as wheelprints, one or two rows of small plants or a vine trellis. This approach also uses a 2D Lidar to perceive the elements to follow but, contrary to edge following, the Lidar is inclined to look at the ground. In this configuration, the sensor cannot fully observe the trajectory to follow, because it only perceives a slice of the environment at a given time. The trajectory is therefore deduced by accumulating data from several Lidar observations, which are geometrically repositioned using the odometry of the robot.

The first step of the detection algorithm is to convert the measurements of the 2D Lidar into 3D points in the robot frame (Figure 5a). The x, y and z axes of the robot frame correspond to the forward, left and up axes, respectively. It is not necessary to consider the full angular range of the Lidar, since only the ground is of interest. Therefore, the detection algorithm is limited to a small angular range around the x axis of the robot frame. The next step is to find one or more occurrences of the shape of the feature to follow in the Lidar scan. To do this, we compute a correlation function between the z coordinates of the Lidar points and a reference model chosen specifically for the shape to be tracked. Let us consider two discrete functions: the first contains the z coordinate of point k of the restricted Lidar scan, and the second the z coordinate of point k of the reference model. The model is a rectangular function whose height and width (in number of Lidar rays) are configured to match the dimensions of the element to follow. The correlation between the Lidar scan and the reference model is then computed. In order to obtain the set of matches between the reference model and the Lidar measurements, the algorithm extracts the local maxima of the correlation curve that are greater than a correlation threshold (Figure 5b). The rest of the algorithm depends on the number of rows that the robot has to follow.
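The correlation and peak extraction can be sketched with NumPy. The exact model and normalization are not given here, so this sketch makes its own choices, which are assumptions: the rectangular model of height `height` and width `width` (in rays) is flanked by `width` flat rays on each side and made zero-mean, so that flat ground yields zero correlation, and a local maximum is kept when it exceeds `threshold`. The returned indices refer to the ray at the center of the model.

```python
import numpy as np


def rectangular_model(height, width):
    """Zero-mean template: `width` rays at `height`, flanked by `width` flat rays per side."""
    m = np.zeros(3 * width)
    m[width:2 * width] = height
    return m - m.mean()  # flat ground then correlates to zero


def find_matches(z, height, width, threshold):
    """Scan indices where the z profile matches the rectangular model (local maxima)."""
    z = np.asarray(z, dtype=float)
    m = rectangular_model(height, width)
    if len(z) < len(m):
        return []
    corr = np.correlate(z, m, mode="valid")  # corr[j] = sum_k z[j + k] * m[k]
    matches = []
    for j in range(1, len(corr) - 1):
        if corr[j] > threshold and corr[j] > corr[j - 1] and corr[j] >= corr[j + 1]:
            matches.append(j + len(m) // 2)  # index of the ray at the model center
    return matches
```

A 3-ray bump of 0.1 m at rays 12 to 14 of an otherwise flat scan is detected at ray 13 with `find_matches(z, 0.1, 3, 0.01)`.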

2.3.1. The Robot Is Configured to Track One Row

The maximum selected at a given time t is the one closest to the point selected at the previous measurement, or the one closest to the robot position along the y axis.

The previously selected points are grouped in a set that is updated at each instant t in order to remain consistent with the movement of the robot. This movement is computed using the robot odometry measurements and the evolution model of the robot. Points that are far from the robot are removed to keep the trajectory estimation local. The trajectory is obtained by applying a linear regression to these points, the regression line being given by Equation (15), whose coefficients are to be solved. These coefficients allow us to compute the lateral error y and the angular error of the robot position with respect to the trajectory. Because the points are expressed in the robot frame, these errors can be derived directly from the line coefficients (Equation (16)).

These errors are then used as inputs to the backstepping control approach presented in Section 2.1. However, this version of the control law includes neither an estimation of sideslip angles nor the predictive layer.
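A minimal pure-Python sketch of the one-row tracking loop is given below. The odometry increment format (dx, dy, dtheta of the robot motion, expressed in the previous robot frame), the pruning distance, the rule "closest to the previous selection if there is one, otherwise closest to the robot", and the error sign conventions are our assumptions; all function names are hypothetical.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # (x forward, y left) in the current robot frame


def to_new_frame(points: List[Point], dx: float, dy: float, dtheta: float) -> List[Point]:
    """Re-express stored points after the robot moved by (dx, dy, dtheta) in its previous frame."""
    c, s = math.cos(dtheta), math.sin(dtheta)
    return [(c * (x - dx) + s * (y - dy), -s * (x - dx) + c * (y - dy)) for x, y in points]


def line_errors(points: List[Point]) -> Optional[Tuple[float, float]]:
    """Least-squares line y = a*x + b, then lateral and angular errors of the robot at the origin."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points)
    if n < 2 or sxx < 1e-12:
        return None  # not enough spread along x to define a line
    a = sum((x - mx) * (y - my) for x, y in points) / sxx
    b = my - a * mx
    return -b, -math.atan(a)


def track_one_row(stored: List[Point], maxima: List[Point],
                  odom: Tuple[float, float, float], max_range: float = 3.0):
    """One update: move stored points, select a maximum, prune far points, fit the row line."""
    stored = to_new_frame(stored, *odom)
    ref_y = stored[-1][1] if stored else 0.0  # previous selection, else the robot itself
    if maxima:
        stored.append(min(maxima, key=lambda p: abs(p[1] - ref_y)))
    stored = [p for p in stored if math.hypot(p[0], p[1]) <= max_range]  # keep it local
    return stored, (line_errors(stored) if stored else None)
```

Driving straight along a row located 0.2 m to the right, while a distractor appears 0.5 m to the left, the loop keeps selecting the row and reports a lateral error of 0.2 m with zero angular error.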

2.3.2. The Robot Is Configured to Track Two Rows

If the algorithm tracks two rows, the maxima are grouped into a set of pairs of Lidar points, keeping only the pairs whose distance along the robot's y axis lies within a chosen interval.

To select the best pair at measurement t, a function giving the central point of a pair is defined. The selected pair is then the one whose central point is the closest to the robot on the y axis or the closest to the previous selection. The points of the n last selected pairs are separated into two groups, corresponding to the left points and the right points. A linear regression similar to the one presented in Equation (15) is applied to each group in order to obtain the coefficients describing a line for each row of plants. From these lines, it is then possible to compute the lateral and angular errors of the robot with respect to each line by using Equation (16). The error of the estimated trajectory is obtained by combining the errors of both lines.

These errors are then used as inputs to the backstepping control approach presented in Section 2.1. However, this version of the control law includes neither an estimation of sideslip angles nor the predictive layer.
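The pair selection and error combination can be sketched as follows. We assume the maxima are given as (x, y) points in the robot frame, that the y-distance interval is [`min_gap`, `max_gap`], that the central point is the midpoint of the pair, and that the two per-line errors are combined by averaging, which amounts to tracking the line midway between the rows. These choices and all names are hypothetical.

```python
import itertools
import math
from typing import List, Optional, Sequence, Tuple

import numpy as np

Point = Tuple[float, float]  # (x forward, y left) in the robot frame
Pair = Tuple[Point, Point]   # (left point, right point)


def candidate_pairs(maxima: Sequence[Point], min_gap: float, max_gap: float) -> List[Pair]:
    """All pairs of maxima whose lateral spacing lies in [min_gap, max_gap]."""
    pairs = []
    for p, q in itertools.combinations(maxima, 2):
        left, right = (p, q) if p[1] >= q[1] else (q, p)
        if min_gap <= left[1] - right[1] <= max_gap:
            pairs.append((left, right))
    return pairs


def pair_center(pair: Pair) -> Point:
    (xl, yl), (xr, yr) = pair
    return (0.5 * (xl + xr), 0.5 * (yl + yr))


def select_pair(pairs: List[Pair], previous: Optional[Pair]) -> Optional[Pair]:
    """Pair whose center is closest in y to the previous selection, else to the robot."""
    if not pairs:
        return None
    ref_y = pair_center(previous)[1] if previous is not None else 0.0
    return min(pairs, key=lambda pr: abs(pair_center(pr)[1] - ref_y))


def two_row_errors(history: List[Pair]) -> Tuple[float, float]:
    """Fit one line per row on the n last pairs and average the two errors."""
    errors = []
    for side in (0, 1):  # 0: left row, 1: right row
        xs = [pair[side][0] for pair in history]
        ys = [pair[side][1] for pair in history]
        a, b = np.polyfit(xs, ys, 1)  # y = a*x + b
        errors.append((-b, -math.atan(a)))
    (y_left, th_left), (y_right, th_right) = errors
    return 0.5 * (y_left + y_right), 0.5 * (th_left + th_right)
```

With rows at y = 0.45 and y = −0.35, the midline is at y = 0.05 and the combined lateral error is −0.05, i.e., the robot sits 5 cm to the right of the midline under these conventions.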

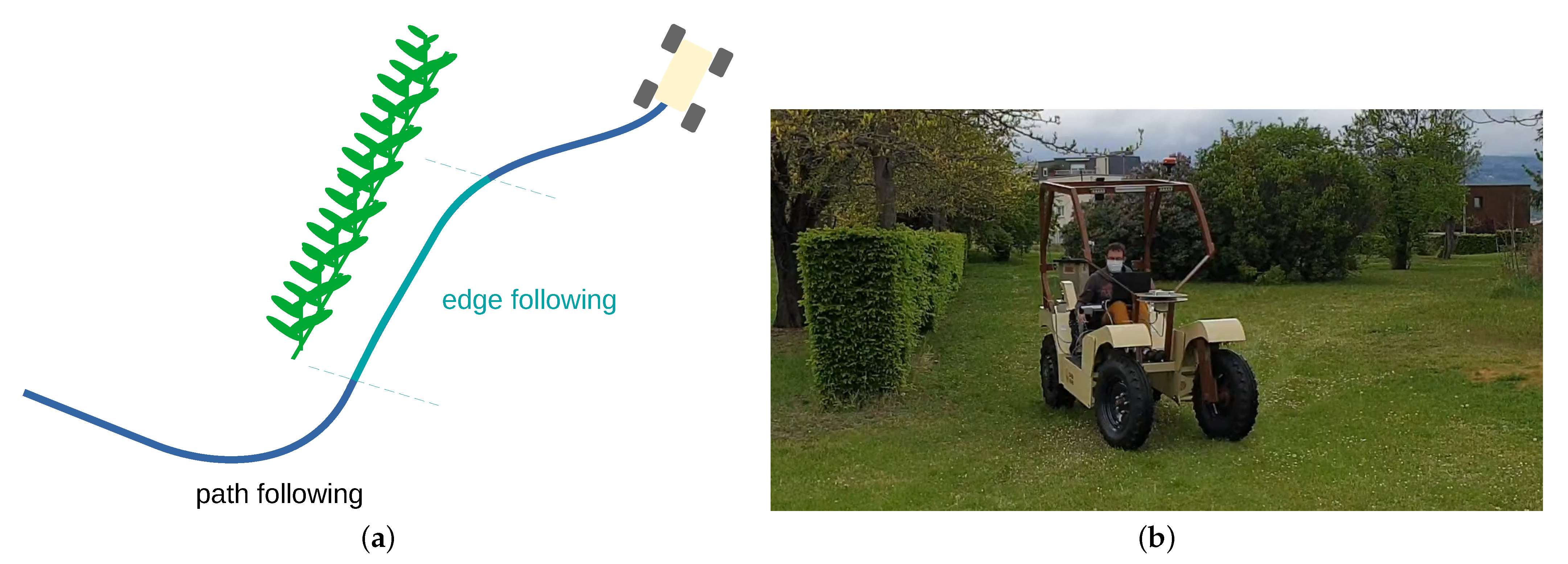

3. Switching to the Appropriate Behavior

The path tracking behavior has the advantage of being fully defined for the realization of the agricultural task. It requires, however, GNSS to be available. For the specialized behaviors, edge following and furrow pursuing, the trajectory to follow is generated locally in real time and is not available everywhere, because there are situations in which there is nothing to track. This is the case, for example, in passages where the robot has to make a U-turn to reach the next row of vegetation. The selection algorithm thus acts as a state machine that chooses between two states for robot control: path tracking or one of the specialized behaviors.

Since there are several types of specialized behavior, there are several versions of this state machine. Figure 6 shows the components involved in the developed algorithm. Depending on the application, the specialized behavior can be edge following or furrow pursuing. Each behavior provides a linear velocity and a steering angle to control the robot. The selection algorithm then only has to select which command to apply to the robot at a given time. The difficulty of this approach lies in the choice of the switching criterion between the different behaviors.

The criterion is based on the quality of the detection of the specialized behavior. This quality corresponds to the ability of the detection algorithm to determine whether the observed element corresponds to an element to follow. For edge following, the criterion is based on the following conditions:

The length of the detected chain is greater than a threshold. If the chain is too short, it is highly probable that it is not an object to follow.

The width of the chain along the y axis (in the robot frame) is lower than a threshold. This avoids following an object that is not in the current direction of the robot.

The standard deviation of the y distance between the points of the chain and the parabola defined in Equation (8) is lower than a threshold. This quantifies how complex the object to follow is, making it possible to avoid following objects whose shapes do not correspond to the expected level of complexity.

These three conditions are combined into a single criterion, which holds when all of them are satisfied.
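The three edge following conditions can be checked with a few NumPy operations. This is a sketch under assumptions: the chain length is taken as its arc length, the width as the spread of the chain's y coordinates, and the parabola is refitted with `np.polyfit`; the function and threshold names are hypothetical.

```python
import numpy as np


def edge_detection_ok(chain, min_length, max_width, max_std):
    """True when the chain is long, narrow in y and close to its fitted parabola."""
    pts = np.asarray(chain, dtype=float)
    if len(pts) < 3:
        return False  # too few points to fit a parabola
    length = float(np.sum(np.linalg.norm(np.diff(pts, axis=0), axis=1)))  # arc length
    width = float(pts[:, 1].max() - pts[:, 1].min())                        # spread along y
    coeffs = np.polyfit(pts[:, 0], pts[:, 1], 2)                            # y = a x^2 + b x + c
    residual_std = float(np.std(pts[:, 1] - np.polyval(coeffs, pts[:, 0])))
    return length > min_length and width < max_width and residual_std < max_std
```

A straight 5 m row passes with thresholds (2.0, 0.5, 0.05), whereas a 1 m chain fails the length test and a zigzagging chain fails the standard-deviation test.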

For furrow pursuing, the criterion is based on the following conditions:

The detection algorithm finds a pair of maxima in the set of candidate pairs.

The correlation level of each maximum of the selected pair is greater than a threshold. This avoids false detections.

These two conditions are combined into the furrow pursuing criterion.

These criteria are used both for the transition from path tracking to a specialized behavior and for the reverse transition. The difference lies in the threshold values used in the conditions: using different values for the two directions avoids quick, repetitive transitions between the two behaviors. We also apply delays to the transitions so that a behavior does not remain selected for too short a time.
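The combination of different entry and exit thresholds with transition delays can be illustrated by a small two-state selector. As a simplification, this sketch collapses the detection quality into one scalar score (for instance a correlation level) instead of the full Boolean criteria; the class name, both thresholds and the minimum dwell time are hypothetical parameters.

```python
from dataclasses import dataclass


@dataclass
class BehaviorSelector:
    """Hysteresis selector between path tracking (default) and a specialized behavior."""
    enter_threshold: float          # score needed to switch to the specialized behavior
    exit_threshold: float           # score below which we fall back (< enter_threshold)
    min_dwell: float                # minimum time (s) a behavior stays selected
    state: str = "path_tracking"
    since: float = float("-inf")    # time at which the current state was entered

    def update(self, score: float, now: float) -> str:
        if now - self.since >= self.min_dwell:
            if self.state == "path_tracking" and score >= self.enter_threshold:
                self.state, self.since = "specialized", now
            elif self.state == "specialized" and score < self.exit_threshold:
                self.state, self.since = "path_tracking", now
        return self.state
```

Because the exit threshold is lower than the entry threshold, a score hovering around a single value cannot make the selector oscillate, and the dwell time bounds the switching frequency.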

5. Conclusions

In this paper, a global strategy to adapt the behavior of an agricultural robot has been proposed, which selects the best approach depending on the context. Three basic behaviors have been proposed: path tracking, edge following and furrow pursuing. Depending on the task to be performed and the result of perception, a criterion has been proposed to select in real time the most suitable control approach while avoiding the chattering effect and the need for a semantic map or preliminary mission planning, which are potentially complex to obtain or define. The effectiveness of the proposed approach has been tested through full-scale experiments on an automated electric tractor in different field typologies. The first series of tests was carried out in an orchard environment, switching between structure following and path tracking. The second series of tests was performed in an open field in order to actually remove weeds from maize and beans, using a dedicated implement. The results of these experiments show the ability to handle the complex robotic mission required to perform a complete agricultural operation. The obtained accuracy, within a few centimeters with respect to the actual crop rows, matches farmers' expectations for agroecological tasks.

The proposed algorithm is compatible with the addition of new robotic behaviors, with no known limitation. Nevertheless, the extension to new behaviors (such as target tracking for cooperation) could require extending the criteria. For the moment, these criteria only include the quality of crop or structure detection, without checking that the detected plant structures match the planned trajectory. The use of such information is to be developed, so that the robot can switch to trajectory tracking if a false detection occurs or if the field limit is reached before the row actually ends.

In order to increase the robustness of the proposed algorithm, it would also be interesting to add plant recognition capabilities, either within the Lidar data or by adding an exteroceptive sensor such as a camera. Although a strength of the switching approach is that it avoids the use of a semantic map, navigation based on a structure may lead the robot to follow any shape resembling the expected one. As a result, for safety reasons or enhanced robustness, recognizing the nature of the observed geometry would be an important element. This could be performed using reinforcement learning algorithms exploiting the database generated by the current experiments. Additional information related to plant and soil health could also be extracted, strengthening the agroecological nature of the proposed developments.