1. Introduction

Present-day commercial automatic feeding systems (AFS) offer only partial automation of the feeding process (“semi-automatic feeding”, stage 2) [1]. At stage 2, filling, mixing, and distribution of the feed ration are performed automatically by the system, whereas feed removal from the silos and transport from the silos to the stable are performed manually by the farmer. These systems can only remove feed from interim storage and convey it into mixers; the interim storage, in turn, has to be filled manually. The feed mixer either acts as the distribution unit itself or conveys the mixed ration to a separate feed distributor, which then dispenses the feed along the feeding fence. Furthermore, some of these systems push the feed regularly at programmable intervals. Silage can be kept in interim storage for only a few hours or a few days, as the air supply after removal from the silo leads to rapid fodder spoilage [2,3]. The removal of roughage from the silos and its transport to the mixer or interim storage are steps in feeding that no commercial automatic feeding system can yet manage. The most significant challenges here include the safe removal of silage with cutting or rotating tools and the safe movement of vehicles weighing several tons over non-restricted traffic areas.

In contrast to the harsh and complex environmental conditions in agriculture, standardized industrial working environments laid the foundation for the automation of transport vehicles [4]. The success story of driverless transport systems, or automated guided vehicles (AGVs), in intralogistics began more than six decades ago [5]. Industrial environments offer optimal conditions, e.g., constant illuminance, paved floors, no changing or demanding weather conditions, and demarcated areas with trained staff. In the early days of driverless transport systems, in the 1950s, navigation was based on current-carrying conductors laid in the ground, known as inductive lane guidance [5]. These could easily be installed in the already paved floors of industrial halls and warehouses. LiDAR technology is now used in most AGVs. By measuring the travel time and the angle of the emitted laser beams, LiDAR scanners enable self-driving vehicles to navigate freely in indoor applications. Additionally, they are often part of the perception systems of automated machinery, as they support personal protection and collision avoidance [6,7,8,9]. Because LiDAR is an optical measuring principle, it is sensitive to external light and optical impairments and is therefore only suitable to a limited extent for outdoor use. Dirt, fog, rain, soil conditions, and light-shadow transitions can substantially affect LiDAR measurements [10].

Automatic feeding systems move on a range of floor coverings and operate both outdoors and indoors. This makes the use of ground control points difficult or rules it out entirely. Likewise, the global navigation satellite system (GNSS) alone is inadequate for two reasons: first, navigation in buildings is not possible because the building envelope shades the signal, and second, additional technologies are required for direct monitoring of the vehicle's surroundings [8]. LiDAR is an advanced technology that is highly promising for agricultural machinery as well. Its potential has so far been realized predominantly in two sectors, the automotive industry and intralogistics, where LiDAR is an important technological pillar for automation [11,12]. LiDAR also plays an important role in land preparation, although GNSS and red-green-blue (RGB) cameras are more commonly used in automated agricultural machinery [13]. This is because open fields offer ideal conditions for GNSS reception, and the main tasks there, identifying crops, rows, or weeds, are well suited to RGB vision. LiDAR can create a precise image of both the near and the far range, which makes it an excellent fit for both navigation [14,15,16] and safety devices [11,12,14,17]. In an experimental setting, a robot vehicle was equipped with a LiDAR scanner and tested in practical agricultural driving tests. Vehicle navigation with LiDAR was analyzed using standardized criteria, and its robustness against challenging environmental conditions was documented. The aim of this article is to analyze the navigation of a driverless feed mixer with LiDAR under practical conditions and to provide guidance on how to evaluate such navigation technologies.

2. State of Knowledge

Laser navigation is the most prominent representative of free navigation [11]. It exploits the physical characteristics of a laser (light amplification by stimulated emission of radiation) in the LiDAR method [18]. LiDAR is a method for optical distance and speed measurement [19]. It is based on measuring the travel time of emitted laser pulses and their backscatter, known as the time-of-flight (TOF) principle [11,12]. The laser beams of a LiDAR are electromagnetic waves and can be distinguished by their frequencies. Because LiDAR is an optical measurement method, the emitted pulses can be absorbed, deflected, or scattered by particles, bodies, and surfaces.
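The time-of-flight principle reduces to a simple relation: the pulse travels to the target and back, so the distance is the speed of light multiplied by half the round-trip time, d = c·t/2. The sketch below (function names hypothetical) assumes propagation at the vacuum speed of light and ignores sensor-internal delays and the refractive index of air:

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s; propagation in air is approximated by vacuum here


def tof_distance(round_trip_time_s: float) -> float:
    """Distance to a target from the round-trip time of a laser pulse: d = c * t / 2."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


def round_trip_time(distance_m: float) -> float:
    """Inverse relation: round-trip time for a target at the given distance."""
    return 2.0 * distance_m / SPEED_OF_LIGHT


# A target at 20 m returns the pulse after roughly 133 ns
print(f"{round_trip_time(20.0) * 1e9:.1f} ns")
```

At these time scales, a timing error of 1 ns corresponds to roughly 15 cm of range, which illustrates the timing resolution the method requires.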

For the purposes of this study, LiDAR sensors can be divided into two categories. The first design principle, referred to here simply as a LiDAR sensor, contains no moving parts and relies only on diode arrays. These sensors work according to the multi-beam principle: they are provided with several permanently mounted transmitter and receiver units arranged horizontally next to each other. The angular resolution depends on the beam width, and the lateral opening angle depends on the number of beams. Ranges of up to 150 m are possible in this configuration. The main advantage of this design is the absence of moving parts. In the automotive industry (e.g., Volkswagen Up, Ford Focus), 2D LiDAR systems based on the multi-beam principle are used for active emergency braking. Because of the limited horizontal detection area, their use is restricted to longitudinal guidance. They are not yet used in the AGV industry [20].

The so-called laser scanners are the second type of LiDAR sensor. They are equipped with one or more mechanically rotating mirrors that redirect the emitted light pulses, and the angle to the object is recorded from the rotation of the mirror. The exact position of an object can thus be determined from the distance to the object and the angular position of the mirror [20].
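Combining the measured distance with the mirror angle amounts to a polar-to-Cartesian conversion. A minimal sketch, assuming the ranges of one scan are ordered by mirror angle at a fixed angular increment; the parameter names follow the fields of the ROS `sensor_msgs/LaserScan` message (`angle_min`, `angle_increment`, `range_min`, `range_max`), but the helper itself is hypothetical:

```python
import math


def scan_to_points(ranges, angle_min_rad, angle_increment_rad,
                   range_min=0.0, range_max=float("inf")):
    """Convert one laser scan into (x, y) points in the sensor frame.

    Beam i is assumed to point at angle_min_rad + i * angle_increment_rad.
    Returns outside [range_min, range_max] (including NaN) are dropped.
    """
    points = []
    for i, r in enumerate(ranges):
        if not (range_min <= r <= range_max):
            continue  # invalid or out-of-range return
        theta = angle_min_rad + i * angle_increment_rad  # mirror angle of this beam
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points
```

For example, `scan_to_points([1.0, 2.0, 0.1], -math.pi / 2, math.pi / 2, range_min=0.5)` yields a point 1 m to the right of the sensor, a point 2 m straight ahead, and drops the 0.1 m return as below the minimum range.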

The multi-target capability of the sensors is a critical foundation for navigation, as several objects can be located in the sensor area. To differentiate between the targets, a separation ability is required, which, among other things, depends on the resolution of the angle measurement. LiDAR laser beams leave the sensor tightly bundled (collimated); therefore, they can generate high-resolution measurement data [16].

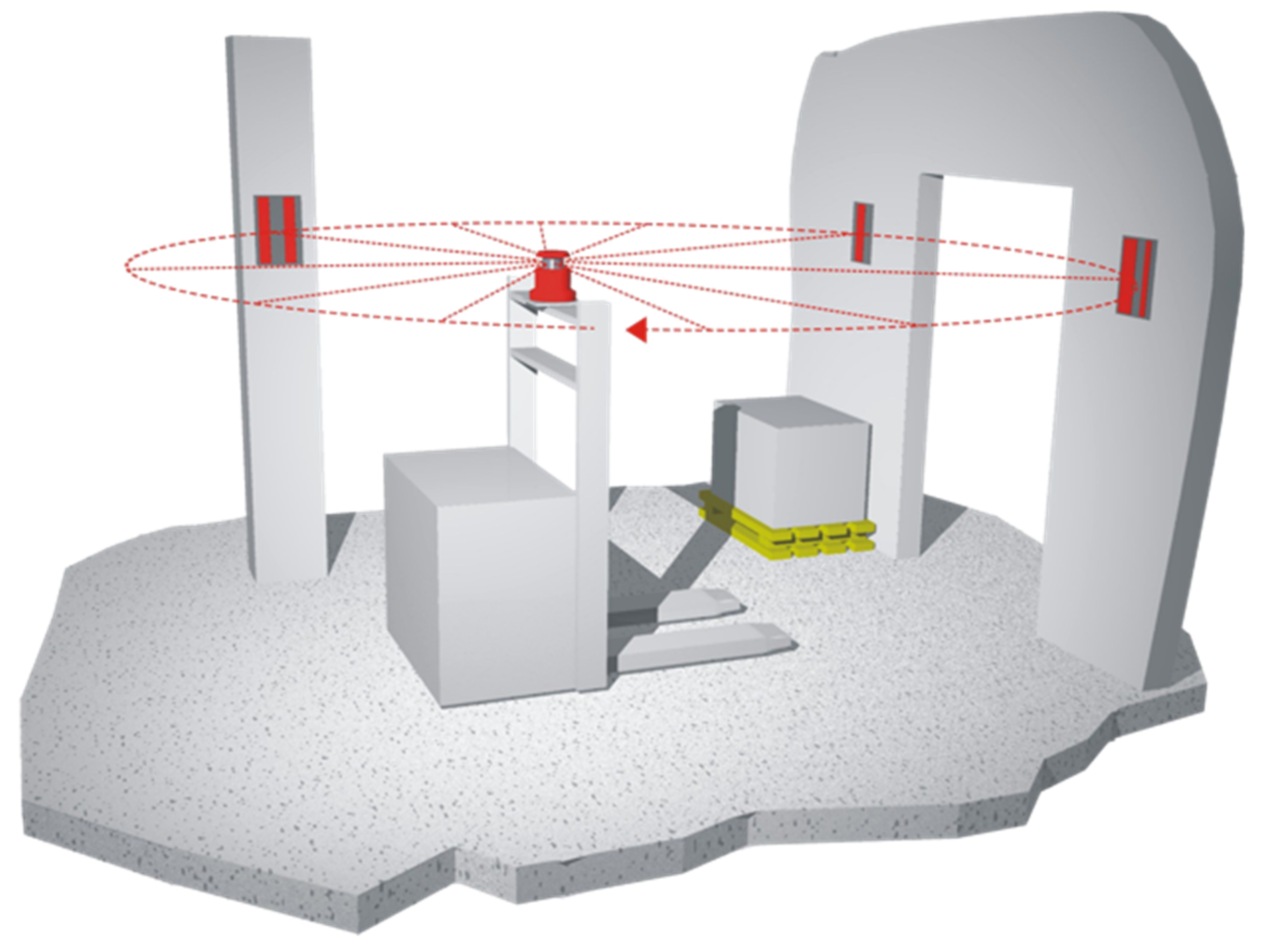

Classic laser navigation is based on the use of artificial landmarks. These are attached to walls and pillars at a specific height to avoid shadowing by people or other objects. A rotating laser scanner can measure these reference marks precisely, even over long distances (Figure 1). Depending on the procedure, at least two or three artificial marks must be visible to determine the position. New driving courses can be created directly via programming or via a learning run (teach-in), which provides a high degree of flexibility with regard to the layout. To avoid ambiguity between marks, they may need to be coded [5].

The coordinates of the reference marks are saved when the vehicle is commissioned and configured. While driving, the laser scanner continuously detects the positions of these stationary marks, so the current position and orientation of the vehicle can be determined by comparing the position data. This type of position estimation is known as a feature-based method, which seeks to identify features or landmarks [9]. It can be performed in two ways: by means of relative positions or by means of absolute positions (Figure 2). While covering the course, the vehicle computer continuously corrects course deviations, which can arise from various factors [5].
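Determining position and orientation from the comparison of stored and measured mark positions can be illustrated as a least-squares alignment of two point sets. The sketch below is one possible approach, not the procedure of any particular AGV vendor: it assumes the marks are already uniquely matched (e.g., coded) and uses the closed-form 2D special case of the Kabsch/Umeyama rigid alignment; the function name is hypothetical.

```python
import numpy as np


def estimate_pose(map_xy, observed_xy):
    """Estimate the vehicle pose (x, y, heading) from matched landmarks.

    map_xy:      (N, 2) stored landmark coordinates in the map frame
    observed_xy: (N, 2) the same landmarks measured in the vehicle frame, same order
    Requires at least two non-coincident landmarks.
    """
    m = np.asarray(map_xy, dtype=float)
    o = np.asarray(observed_xy, dtype=float)
    if m.shape != o.shape or m.shape[0] < 2:
        raise ValueError("need at least two matched landmarks")
    m_c, o_c = m.mean(axis=0), o.mean(axis=0)
    a, b = o - o_c, m - m_c
    # Closed-form rotation angle maximizing sum(b_i . R a_i) (2D least squares)
    heading = np.arctan2(np.sum(a[:, 0] * b[:, 1] - a[:, 1] * b[:, 0]),
                         np.sum(a[:, 0] * b[:, 0] + a[:, 1] * b[:, 1]))
    c, s = np.cos(heading), np.sin(heading)
    rot = np.array([[c, -s], [s, c]])
    # map = rot @ observed + t, so t is the vehicle origin expressed in the map frame
    t = m_c - rot @ o_c
    return float(t[0]), float(t[1]), float(heading)
```

With more than the minimum number of marks, the least-squares formulation averages out measurement noise in the individual mark positions.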

To reduce, replace, or support artificial landmarks, information about the contours of the environment is necessary. The laser scanners can output the measured contours so that natural landmarks can be recognized on an external computer using suitable algorithms. These landmarks must be clearly recognizable, and their position must not change. A distance-measuring laser scanner can be used, for example, to drive along a wall. For complete position determination and navigation, additional procedures such as edge detection or artificial bearing marks are added. This method of environmental navigation was also applied in the test series of this study [15].
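Driving along a wall requires the lateral distance to the wall and the vehicle's angle relative to it. A minimal sketch, assuming the scan points belonging to the wall have already been segmented and are given in the sensor frame (x forward); it fits a line by total least squares via NumPy's SVD. The helper name is hypothetical:

```python
import numpy as np


def fit_wall(points):
    """Fit a straight wall to 2D scan points by total least squares.

    Returns (distance, angle): the perpendicular distance from the sensor
    origin to the fitted line and the line direction relative to the sensor
    x-axis, folded into (-pi/2, pi/2] because a line has no preferred sense.
    """
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    # First right-singular vector of the centred points = direction of largest spread
    _, _, vt = np.linalg.svd(pts - centroid)
    direction = vt[0]
    normal = np.array([-direction[1], direction[0]])
    distance = abs(float(normal @ centroid))
    angle = float(np.arctan2(direction[1], direction[0]))
    if angle > np.pi / 2:
        angle -= np.pi
    elif angle <= -np.pi / 2:
        angle += np.pi
    return distance, angle
```

A wall-following controller could then steer to keep `distance` at a set value and `angle` near zero. As noted above, this alone does not fix the position along the wall, hence the additional procedures.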

If the laser scanner is additionally swiveled around another axis, a 3D image of the surroundings can be created. This method is used by Strautmann und Söhne GmbH in the “Verti-Q” concept presented in 2017 (Figure 3). It enables reliable ceiling navigation, as the view of the ceiling is usually free of obstacles. The disadvantage, however, is the greater computing effort and time required for this multi-dimensional recording. At present, only slowly moving vehicles in the single-digit kilometers-per-hour range can be implemented practically with this technology. Additionally, owing to its principle, the method is suitable only for indoor applications [15].

3. Materials and Methods

The automatic lane guidance by LiDAR scanner was evaluated on the basis of three characteristics: Accuracy, Precision, and Consistency [22]. For this purpose, a dynamic driving test was carried out with a robot vehicle in an agricultural environment (Figure 4). At the test farm “Veitshof” of the Technical University of Munich, the vehicle drove along a route inside and outside a stable building, navigating with a LiDAR scanner. A “run” was defined as a series of 10 “test drives”, each covering the total route shown in Figure 5. Lighting and weather conditions were consistent throughout test runs 2, 3, and 4: a cloudy December day between 10 a.m. and 4 p.m. at about 5 to 7 °C.

The LMS 100 laser scanner was mounted on the front of the vehicle, centered above the steering axle. The scanner operated at a wavelength of 905 nm with a scanning frequency of 50 Hz. Its measuring range was 0.5 to 20 m, with an opening angle of 270° and an angular resolution of 0.50°. The scanner was insensitive to extraneous light up to 40,000 lx, and its systematic error was 30 mm. The LMS 100 offers fog correction and multiple echo evaluation. The onboard PC ran an Ubuntu Linux operating system with the Robot Operating System (ROS) installed. The “Cartographer” program was used to graphically display the LiDAR data. An inertial measurement unit of type MTi-30-2A5G4 additionally supported the determination of spatial movement and geographical positioning.
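The scanner specifications translate directly into the data rate and the spatial sampling density that determine the separation ability discussed in Section 2. The following back-of-the-envelope sketch uses only the values stated above; counting both ends of the 270° field of view as beams is an assumption, and the spacing is the chord between the hit points of two adjacent beams at equal range:

```python
import math

# LMS 100 settings as stated in the text
OPENING_ANGLE_DEG = 270.0
ANGULAR_RESOLUTION_DEG = 0.5
SCAN_FREQUENCY_HZ = 50.0
MAX_RANGE_M = 20.0

# Assumption: both ends of the field of view are sampled (hence the +1)
beams_per_scan = int(round(OPENING_ANGLE_DEG / ANGULAR_RESOLUTION_DEG)) + 1
points_per_second = beams_per_scan * SCAN_FREQUENCY_HZ


def beam_spacing(range_m: float, resolution_deg: float = ANGULAR_RESOLUTION_DEG) -> float:
    """Chord between the hit points of two adjacent beams at the same range."""
    return 2.0 * range_m * math.sin(math.radians(resolution_deg) / 2.0)


print(beams_per_scan, points_per_second, f"{beam_spacing(MAX_RANGE_M):.3f} m")
```

At the maximum range of 20 m, adjacent beams are about 0.17 m apart, so narrower objects at that distance may be hit by at most one beam.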

The route (target trajectory) was programmed using waypoints on a digitally created map. The digital map was generated during a teach-in drive from the measurement data of the LMS 100 laser scanner from Sick AG using the “Cartographer” software [23]. The target trajectory led over a drive-on feeding alley in the dairy stable (marks 2–3), around the stable building (marks 3–4–1), and back to the starting point at the feeding alley (mark 2), a route of about 110.5 m in length (Figure 5). The feeding alley has a metal catching/feeding fence on both sides and is divided lengthwise by a metal drawbridge. The outside walls are wooden with a concrete base. Calf hutches are set up in the eastern area of the barn. The environment offers highly variable conditions: floor coverings of gravel (79 m) and concrete (31.5 m); indoor (31.5 m) and outdoor (79 m) lighting conditions; changing distances to reflective objects; changing materials and surface properties of the reflective objects; both static structural objects and dynamic living objects, such as cattle; and dry or rainy conditions.

The aim was to verify to what extent the test drives (drive trajectories) deviate from the planned route (target trajectory), i.e., their accuracy, and to what extent the test drives deviate from one another, i.e., their precision. The criterion consistency is derived from accuracy and precision. The accuracy of a measurement system is the degree of deviation of the measurement data from a true value. It was calculated by determining the nearest neighbors of the drive trajectories to the target trajectory using the 1-nearest neighbor method (1NN method) and Euclidean distance calculation [24]. The reproducibility of a measuring system (precision) describes the extent to which random samples taken under the same conditions scatter over several repetitions. It was calculated by determining the nearest neighbors of the drive trajectories to each other using the 1NN method and Euclidean distance calculation. If the measurement results of an evaluated test are both accurate and precise, they are consistent (consistency) [22,25].
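The accuracy and precision calculations described above can be sketched as follows. This is a brute-force 1NN search in NumPy, assuming each trajectory is an (N, 2) array of x/y points; the function names are hypothetical, and for tens of thousands of points a k-d tree (e.g., `scipy.spatial.cKDTree(reference).query(query, k=1)`) would be the usual choice.

```python
import numpy as np


def nearest_neighbor_distances(query, reference):
    """For each query point, the Euclidean distance to its nearest reference point (1NN)."""
    q = np.asarray(query, dtype=float)
    r = np.asarray(reference, dtype=float)
    # (Nq, Nr) matrix of pairwise distances; adequate for a few thousand points
    d = np.linalg.norm(q[:, None, :] - r[None, :, :], axis=2)
    return d.min(axis=1)


def accuracy_distances(target, drives):
    """Accuracy: 1NN distances from each target-trajectory point to each drive."""
    return np.concatenate([nearest_neighbor_distances(target, drive) for drive in drives])


def precision_distances(drives):
    """Precision: 1NN distances from each point of one drive to every other drive."""
    parts = [nearest_neighbor_distances(a, b)
             for i, a in enumerate(drives)
             for j, b in enumerate(drives) if i != j]
    return np.concatenate(parts)


# Toy example: a straight target trajectory and two slightly offset drives
target = [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0]]
drives = [[[0.0, 0.02], [1.0, 0.03], [2.0, -0.01]],
          [[0.0, -0.04], [1.0, 0.0], [2.0, 0.05]]]
acc = accuracy_distances(target, drives)
prec = precision_distances(drives)
print(acc.mean(), prec.mean())
```

The mean, standard deviation, minimum, and maximum of these distance arrays correspond to the summary statistics reported in the Results section.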

In this work, a tolerance limit of 0.05 m is specified for precision and accuracy. This tolerance limit is set by the user and may take a different value in other scenarios. It was oriented on real-time kinematic (RTK) GNSS, which is used, e.g., for guiding farm machinery in fields and enables precision steering with deviations of only ±3 cm. The limit thus takes into account other high-precision navigation technologies as well as the restricted conditions inside buildings. The deviations of the individual trajectories were evaluated statistically through multiple comparisons using the Welch test while controlling the false discovery rate.
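The text names the Welch test and false discovery rate control but not the exact grouping of comparisons. The following sketch is an assumption about how such an evaluation could be set up, not the authors' procedure: it computes Welch's t statistic with the Welch–Satterthwaite degrees of freedom for two samples of deviations and applies the Benjamini–Hochberg procedure (assumed here as the FDR method) to a list of p-values. The p-values themselves would come from the t distribution, e.g., via `scipy.stats.ttest_ind(a, b, equal_var=False)`; they are not computed here to keep the example dependency-free.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom (unequal variances)."""
    va = variance(a) / len(a)  # squared standard error of the mean of a
    vb = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


def benjamini_hochberg(p_values, q=0.05):
    """Return True for each hypothesis rejected at false discovery rate q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Largest rank k with p_(k) <= (k / m) * q; all hypotheses up to rank k are rejected
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            k_max = rank
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        rejected[idx] = rank <= k_max
    return rejected
```

Compared with a Bonferroni correction, controlling the false discovery rate is less conservative when many pairwise trajectory comparisons are made.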

During the experiment, the raw laser data were processed in real time with a Monte Carlo filter, a method widely used for laser positioning [26], to remove measurement noise and thus increase the accuracy of the data. After the test, the data were further processed using a Kalman filter and the associated high-resolution physical measuring system. The aim of using a Kalman filter is to eliminate systematic errors [27]. The systematic error is one of three types of error; it is the only type considered avoidable and can be eliminated a priori. Random errors are system errors whose cause is largely unknown [25,27]. Chaotic errors are mathematically inexplicable system errors whose cause is unknown [25].
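A Monte Carlo filter represents the position estimate by a cloud of weighted samples (particles). The sketch below is a deliberately simplified bootstrap particle filter and not the laser-positioning filter used in the experiment: it assumes a hypothetical random-walk motion model and treats each measurement as a noisy (x, y) position instead of matching a raw laser scan against the map. The noise parameters are illustrative.

```python
import numpy as np


def particle_filter_2d(measurements, n_particles=2000, motion_std=0.02,
                       meas_std=0.03, seed=0):
    """Bootstrap particle filter for a 2D position observed with Gaussian noise.

    Hypothetical simplification: random-walk motion with standard deviation
    motion_std per step; measurements are noisy (x, y) positions.
    """
    rng = np.random.default_rng(seed)
    meas = np.asarray(measurements, dtype=float)
    # Initialise the particle cloud around the first measurement
    particles = meas[0] + rng.normal(0.0, meas_std, size=(n_particles, 2))
    estimates = np.empty_like(meas)
    for k, z in enumerate(meas):
        # Predict: propagate each particle through the random-walk model
        particles = particles + rng.normal(0.0, motion_std, size=particles.shape)
        # Weight: Gaussian likelihood of the measurement for each particle;
        # subtracting the minimum squared distance keeps exp() from underflowing
        d2 = np.sum((particles - z) ** 2, axis=1)
        weights = np.exp(-0.5 * (d2 - d2.min()) / meas_std ** 2)
        weights /= weights.sum()
        # Estimate: weighted mean of the particle cloud
        estimates[k] = weights @ particles
        # Resample (multinomial) to counter weight degeneracy
        idx = rng.choice(n_particles, size=n_particles, p=weights)
        particles = particles[idx]
    return estimates
```

Because the filter combines the motion prediction with each new measurement, its estimates scatter less than the raw measurements when the vehicle moves smoothly relative to the measurement noise.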

The high-resolution physical measuring system was developed to ensure optimal use of the Kalman filter in the evaluation. It can be described as follows.

Equation (1) describes the mathematical relationship between the position vector at time $t$ and the position vector at time $t + \Delta t$ (the current time plus a time increment $\Delta t$), together with its order of approximation.

Equation (2) represents the x-component of Equation (1).

Equation (3) represents the y-component of Equation (1).

Equation (4) describes the mathematical relationship between the heading angle at time $t$ and the heading angle at time $t + \Delta t$, likewise with a stated order of approximation. The auxiliary quantity appearing in Equation (4) and the quantities required in Equation (8) are given by corresponding approximations.

Equation (5) describes the mathematical relationship between the absolute velocity at time $t$ and the absolute velocity at time $t + \Delta t$. By decomposing the acceleration vector into its tangential and normal components, one first arrives at Equation (6) and finally, by means of trigonometric simplifications, at Equation (7). The radius of curvature at time $t$ is included in Equation (8). The quantities given by Equations (9) and (10) are required for calculating the radius of curvature and describe the x and y components of the acceleration vector at time $t$.

Equation (11) describes the relationship between the measurement data $\mathbf{z}_k$, the measurement matrix $H_k$, and the actual noise-free position data $\mathbf{x}_k$. The noise of the measurement data is modeled as a normally distributed error $\mathbf{v}_k \sim \mathcal{N}(0, R_k)$, where $R_k$ represents the covariance matrix:

$$\mathbf{z}_k = H_k \mathbf{x}_k + \mathbf{v}_k, \qquad \mathbf{v}_k \sim \mathcal{N}(0, R_k) \tag{11}$$

Together, the physical system (Equations (1)–(10)) and the measurement system (Equation (11)) form the extended Kalman filter. The results of the Kalman filter are optimal estimates of the system state variable $\mathbf{x}_k$.

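Predicted state estimate:

$$\hat{\mathbf{x}}_{k|k-1} = f\left(\hat{\mathbf{x}}_{k-1|k-1}\right) \tag{12}$$

Predicted covariance estimate:

$$P_{k|k-1} = F_k P_{k-1|k-1} F_k^{\mathsf{T}} + Q_k \tag{13}$$

Innovation (measurement residual):

$$\tilde{\mathbf{y}}_k = \mathbf{z}_k - H_k \hat{\mathbf{x}}_{k|k-1} \tag{14}$$

Innovation covariance:

$$S_k = H_k P_{k|k-1} H_k^{\mathsf{T}} + R_k \tag{15}$$

Near-optimal Kalman gain:

$$K_k = P_{k|k-1} H_k^{\mathsf{T}} S_k^{-1} \tag{16}$$

Updated state estimate:

$$\hat{\mathbf{x}}_{k|k} = \hat{\mathbf{x}}_{k|k-1} + K_k \tilde{\mathbf{y}}_k \tag{17}$$

Updated covariance estimate:

$$P_{k|k} = \left(I - K_k H_k\right) P_{k|k-1} \tag{18}$$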

The prediction Equation (12), in which $f$ denotes the physical system model of Equations (1)–(10) and which in practice is subject to normally distributed noise with covariance matrix $Q_k$, is used to predict the true trajectory; this prediction is then refined by the Kalman gain update step in Equation (17). To predict the covariance matrix of the denoised measurement data in Equation (13), the Jacobian matrix $F_k$ of the physical system, the current covariance matrix $P_{k-1|k-1}$ of the denoised measurement data, and the covariance matrix $Q_k$ of the physical system are required. The Jacobian matrix $F_k$ is approximated with sufficient accuracy by central finite differences, which are second-order accurate in the step size. In Equation (14), $H_k$ is the Jacobian observation matrix of the measurement system; the equation relates the measurement data $\mathbf{z}_k$ to the product $H_k \hat{\mathbf{x}}_{k|k-1}$ of the observation matrix and the predicted position vector. The so-called innovation covariance matrix $S_k$ in Equation (15) is required for Equation (16). Equation (16) gives the optimal Kalman gain $K_k$, which is required for the innovation step in Equation (17) to calculate the actual position vector $\hat{\mathbf{x}}_{k|k}$. Equation (18) describes the update of the covariance matrix of the denoised measurement data.
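One predict/update cycle of the extended Kalman filter described above can be sketched as follows, with the steps labeled by equation number. The motion model is a hypothetical constant-speed, constant-heading model with state (x, y, heading, speed), not the authors' physical system from Equations (1)–(10); the Jacobian $F_k$ is approximated by central finite differences as described in the text, only the position is measured (a linear $H_k$), and the noise levels and 50 Hz step are illustrative.

```python
import numpy as np


def motion_model(state, dt):
    """Hypothetical model: state = [x, y, heading, speed], constant heading and speed."""
    x, y, heading, speed = state
    return np.array([x + speed * np.cos(heading) * dt,
                     y + speed * np.sin(heading) * dt,
                     heading,
                     speed])


def jacobian_central(func, x, h=1e-6):
    """Jacobian of func at x via central finite differences (error of order h**2)."""
    x = np.asarray(x, dtype=float)
    fx = np.asarray(func(x))
    jac = np.zeros((fx.size, x.size))
    for j in range(x.size):
        step = np.zeros_like(x)
        step[j] = h
        jac[:, j] = (func(x + step) - func(x - step)) / (2.0 * h)
    return jac


def ekf_step(x_est, P, z, dt, Q, R, H):
    """One extended Kalman filter cycle following Equations (12)-(18)."""
    x_pred = motion_model(x_est, dt)                              # (12)
    F = jacobian_central(lambda s: motion_model(s, dt), x_est)
    P_pred = F @ P @ F.T + Q                                      # (13)
    innovation = z - H @ x_pred                                   # (14)
    S = H @ P_pred @ H.T + R                                      # (15)
    K = P_pred @ H.T @ np.linalg.inv(S)                           # (16)
    x_new = x_pred + K @ innovation                               # (17)
    P_new = (np.eye(x_est.size) - K @ H) @ P_pred                 # (18)
    return x_new, P_new


# Illustrative run: 10 s at 50 Hz, straight drive at 1 m/s, position noise of 3 cm
rng = np.random.default_rng(0)
dt = 1.0 / 50.0
H = np.array([[1.0, 0.0, 0.0, 0.0],
              [0.0, 1.0, 0.0, 0.0]])
Q = np.diag([1e-4, 1e-4, 1e-4, 1e-3])
R = np.diag([0.03 ** 2, 0.03 ** 2])
truth = np.array([0.0, 0.0, 0.0, 1.0])
x_est = np.array([0.0, 0.0, 0.0, 0.5])  # deliberately wrong initial speed
P = np.eye(4)
for _ in range(500):
    truth = motion_model(truth, dt)
    z = H @ truth + rng.normal(0.0, 0.03, size=2)
    x_est, P = ekf_step(x_est, P, z, dt, Q, R, H)
pos_error = float(np.linalg.norm(x_est[:2] - truth[:2]))
```

Replacing `motion_model` with a discretized version of Equations (1)–(10) would leave the filter structure unchanged; only the state vector and the Jacobian would grow.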

In total, four runs were conducted. The first run was a test run to check that every aspect of the test setup was working. The second run revealed some issues, which were traced using observations made during the test and the notes in the test protocol: the robot displayed an erratic driving pattern on every drive of run 2. The suspected cause was the number of waypoints on the programmed route. Because an evaluation did not yield any quantifiable results, these measurement data were not considered further in the data analysis. For runs 3 and 4, the number of waypoints on the route was increased, and the erratic driving pattern was no longer observed. In addition to the x and y coordinates of each measurement point, the measurement time was also saved, which makes it easier to assign the data. For every drive, the laser measurement data were recorded with ROS as a “.bag” file and then saved as a text file, so that they could be evaluated in Microsoft Excel and MATLAB.

4. Results

The test results demonstrate the capabilities of automatic lane guidance via LiDAR scanner and are evaluated on the basis of three characteristics: Accuracy, Precision, and Consistency. For a clear and unambiguous assignment in the following paragraphs, runs and drives are given as codes, e.g., R3D6 (run 3, drive 6).

4.1. Accuracy

The determination of the accuracy refers to the distances between the target trajectory and the test drives. The waypoints used for navigation represented the target trajectory, which in turn served as the true (target) trajectory in the accuracy calculation. The accuracy was then determined for each point of the target trajectory with the help of the 1NN method: for each such point, the nearest neighbor on each of the nine considered trajectories from run 3 in the section [mark 2–3] inside the facility (referred to as “alley”) was determined by means of the Euclidean distance (Figure 5).

In Figure 6, a greater deviation of the trajectories at the beginning of the section “alley” during run 3 is visible, which resulted from the preceding cornering. Because the vehicle steers through different wheel speeds on the drive axle (rear axle), such deviations were favored during cornering. The actual driving accuracy can nevertheless be very high despite the fluctuations in the data, since even small steering commands are amplified by the lever arm at the scanner, which is mounted above the front axle. These measurement data were also corrected, on the assumption that they were influenced by the preceding cornering. Nearest neighbors that may have been incorrectly assigned were adjusted to reduce the systematic error. This two-stage preprocessing and cleaning of the measurement data provided a reliable data basis for robust evaluation results.

Figure 7 shows, as an example, the accuracy of the drives from run 3 in the route section at one reference point of the target trajectory. The target trajectory runs roughly horizontally through the point XTRUE. No regularity was discernible in the scatter of the drive-trajectory measurement points around the target trajectory. The deviation of the nearest neighbors from this point XTRUE ranged from 0.0058 m (R3D2) to 0.0572 m (R3D7). Therefore, the measured values of the individual trajectories are classified as accurate in relation to this measuring point.

In Figure 8, the 1NN method is illustrated in a 1-point scenario. As the figure shows, the calculated distance is greater than the actual deviation, so the actual deviation is smaller than the evaluation of the data indicates. An evident uncertainty behind this finding is that it is not known how the robot moved between the two measuring points. It can be assumed that it drove along the direct route; however, it may also have moved irregularly in this area. Overall, a favorable bias can be assumed, implying that the actual deviations from the target trajectory are smaller than those determined in the evaluations.
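The overestimation illustrated in Figure 8 arises whenever one of the two trajectories is available only as discrete points. A small sketch comparing the 1NN point-to-point distance with the perpendicular (point-to-segment) distance to the straight lines between waypoints; treating the path between waypoints as straight is the same assumption the text makes about the robot's motion, and the helper names are hypothetical:

```python
import math


def point_distance(p, q):
    """Euclidean distance between two 2D points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def point_to_segment_distance(p, a, b):
    """Shortest (perpendicular where possible) distance from point p to segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0.0:
        return point_distance(p, a)
    # Projection parameter of p onto the segment, clamped to the segment ends
    t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / seg_len2
    t = max(0.0, min(1.0, t))
    return point_distance(p, (a[0] + t * dx, a[1] + t * dy))


def nn_vs_perpendicular(p, waypoints):
    """Return (1NN distance to the nearest waypoint, distance to the polyline)."""
    nn = min(point_distance(p, w) for w in waypoints)
    perp = min(point_to_segment_distance(p, a, b)
               for a, b in zip(waypoints, waypoints[1:]))
    return nn, perp


# A point 2 cm beside the path but midway between two waypoints 1 m apart
nn, perp = nn_vs_perpendicular((0.5, 0.02), [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)])
print(f"{nn:.4f} m vs {perp:.4f} m")  # 0.5004 m vs 0.0200 m
```

The gap between the two values shrinks as the waypoints are placed closer together.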

The results of the accuracy assessment of all drives from run 3 in the route section are shown in Figure 9. In total, 171 nearest neighbors were evaluated for the nine trajectories of run 3. Only the adjusted results from the processes described above were taken into account.

4.2. Precision

In contrast to the accuracy calculation, the precision results from the calculation of the distances between the drives. In the precision calculation, the 1NN method was used to determine the precision in the repetition of the drives. For each point of the drive, the closest neighbor of all nine considered drives from runs 3 and 4 was determined using the Euclidean distance. The data were processed in the same way as for the determination of the accuracy.

The closest neighbors were calculated for the measurement data of the nine selected drives from run 3 in the route section “alley”. As an example, Figure 10 shows how the precision is calculated between the drives R3D6 and R3D1. A total of 514 closest neighbors between R3D6 and R3D1 were determined. The trajectories of the drives R3D6 and R3D1 are between 0.0008 and 0.0825 m apart. The average precision for this pair of trajectories is 0.0327 m; therefore, the pair of trajectories is precise.

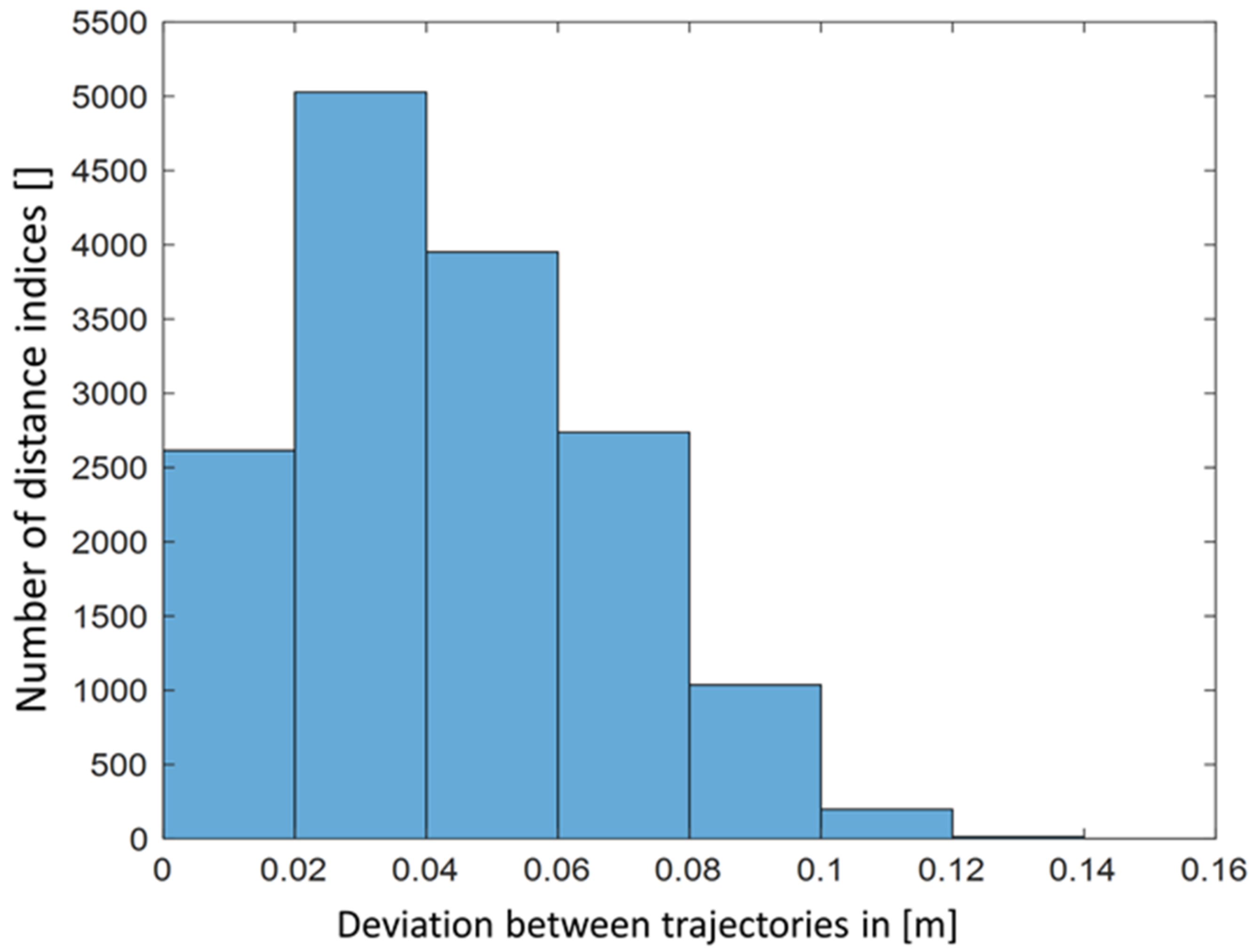

Figure 11 shows the distribution of the precision values of the nine considered trajectories from run 3 in the route section [mark 2–3]. A total of 15,584 nearest neighbors form the data basis for the histogram. Overall, 1.4% of the values were over 0.1 m.

Figure 12 shows the distribution of the precision values of the nine considered trajectories from run 3 in the route section. A total of 35,192 nearest neighbors formed the data basis for the histogram. Overall, 18.9% of the values were over 0.1 m.

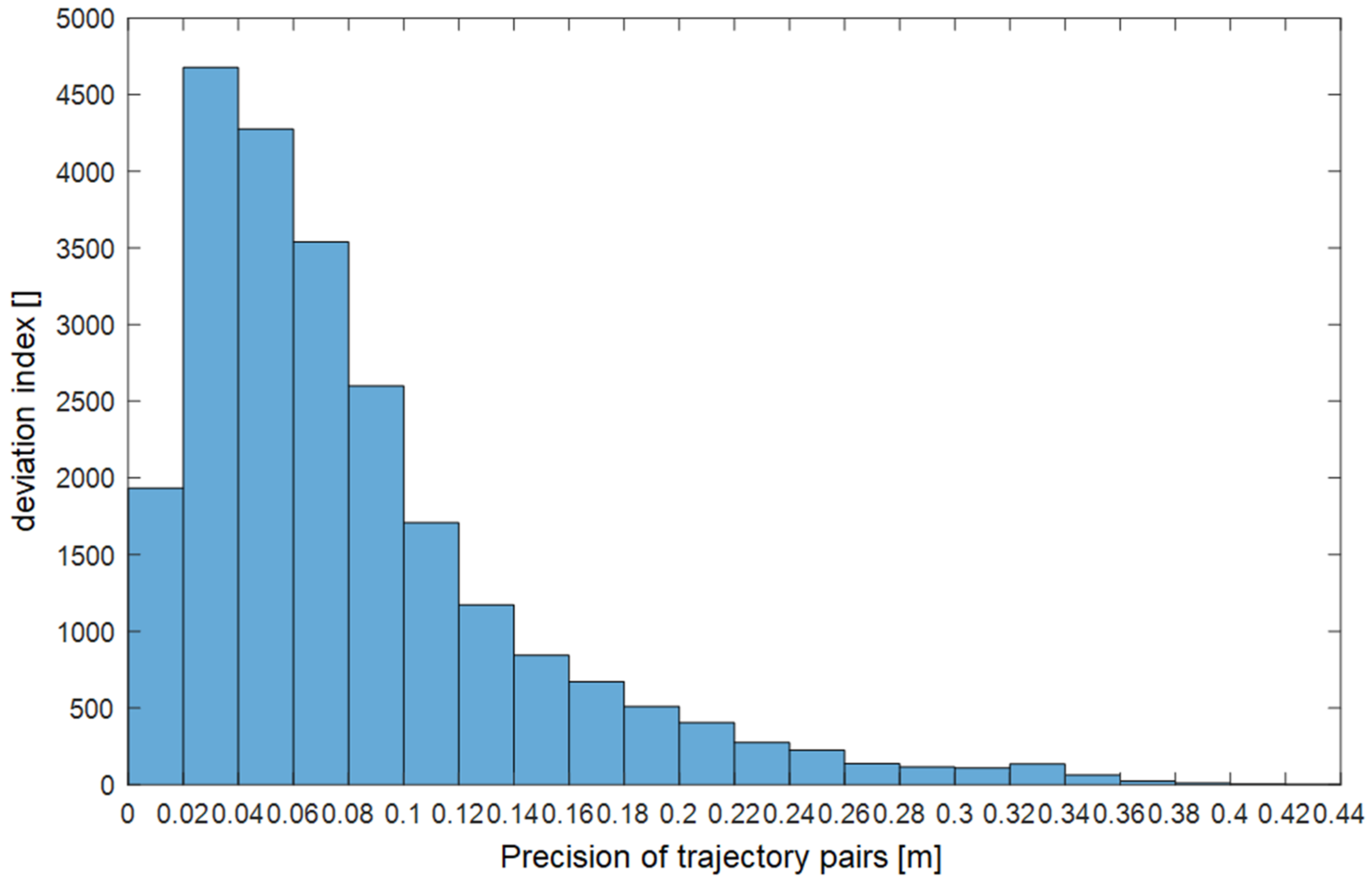

Figure 13 shows the distribution of the precision values of the nine considered trajectories from run 4 in the route section. A total of 23,437 nearest neighbors formed the data basis for the histogram. Overall, 27.3% of the values were over 0.10 m, 6.4% were over 0.20 m, and 1.5% were over 0.30 m.

4.3. Accuracy, Precision, and Consistency

In this work, a tolerance limit of 0.05 m is specified for precision and accuracy. This tolerance limit can be chosen by the user and may therefore take a different value in other scenarios. Measured against this threshold value, the navigation performance of the vehicle with a LiDAR scanner can be evaluated as follows:

In the route section “alley” of run 3, the navigation is rated as accurate and precise, and consequently as consistent. An average deviation of 0.0487 m (Accuracy) and 0.0439 m (Precision) was determined. The standard deviation was 0.0286 m (Accuracy) and 0.0238 m (Precision). The maximum value was 0.1406 m (Accuracy) and 0.1511 m (Precision); the minimum value was 0.0047 m (Accuracy) and 0.00001 m (Precision) (Figure 14).

Over the whole of run 3, the navigation is assessed as inaccurate and imprecise, and consequently as inconsistent. An average deviation of 0.0608 m (Accuracy) and 0.0707 m (Precision) was determined. The standard deviation was 0.0450 m (Accuracy) and 0.0588 m (Precision). The maximum value was 0.3337 m (Accuracy) and 0.4162 m (Precision); the minimum value was 0.0047 m (Accuracy) and 0.0001 m (Precision).

In run 4, the navigation is assessed as inaccurate and imprecise, and consequently as inconsistent. An average deviation of 0.0878 m (Accuracy) and 0.0820 m (Precision) was determined. The standard deviation was 0.0657 m (Accuracy) and 0.0645 m (Precision). The maximum value was 0.3510 m (Accuracy) and 0.4276 m (Precision); the minimum value was 0.0099 m (Accuracy) and 0.0002 m (Precision).
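The classification above can be expressed as a small rule. The sketch assumes, as an interpretation of the reported figures rather than a documented procedure, that a section counts as accurate or precise when its mean deviation is within the 0.05 m tolerance, and as consistent when it is both; the class name is hypothetical.

```python
from dataclasses import dataclass

TOLERANCE_M = 0.05  # tolerance limit used in this work; user-selectable


@dataclass
class Evaluation:
    """Mean deviations for one route section or run (values in metres)."""
    label: str
    mean_accuracy_m: float
    mean_precision_m: float

    @property
    def accurate(self) -> bool:
        return self.mean_accuracy_m <= TOLERANCE_M

    @property
    def precise(self) -> bool:
        return self.mean_precision_m <= TOLERANCE_M

    @property
    def consistent(self) -> bool:
        # Consistency requires both accuracy and precision
        return self.accurate and self.precise


# Mean deviations as reported above
evaluations = [
    Evaluation("run 3, alley", 0.0487, 0.0439),
    Evaluation("run 3", 0.0608, 0.0707),
    Evaluation("run 4", 0.0878, 0.0820),
]
for e in evaluations:
    print(f"{e.label}: accurate={e.accurate}, precise={e.precise}, consistent={e.consistent}")
```

With the reported means, this reproduces the classification above: only the section “alley” of run 3 comes out as consistent.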

The data showed a relationship between the amount of measurement data and the accuracy or precision of the navigation: drives with a more extensive data basis for the 1NN method were assessed as more accurate than drives with a smaller data basis. This was observed for all drives.

5. Discussion

The aim of this article is to analyze the navigation of a driverless feed mixer with LiDAR under practical conditions and to provide guidance on how to evaluate such navigation technologies.

A practical, realistic environment is characterized by unpredictable dynamics. Animals (cows and calves), people (employees and test staff), and machines (farm loaders and mixer wagons) were present in the immediate test environment at the TUM “Veitshof” research farm. At the same time, the route leads past a varied environment and over heterogeneous ground conditions. This environment is highly demanding on the technology. Particular challenges include navigation along the cramped feeding table in the stable building, navigation in the outside area with few natural landmarks for orientation, and the condition of the subsoil, with inclines, slopes, and changing surfaces. Against this background, the driverless navigation in and around the stable building can be considered a significant success: the implementation was simple yet functional, even though it did not meet the self-imposed deviation limits.

The selected characteristics are well suited for evaluating laser-assisted navigation. Accurate repetition of a previously programmed route by the automated vehicle is a fundamental requirement of the system. However, navigation performance cannot be assessed comprehensively through accuracy alone, since chaotic or random errors can lead to dispersion in the reproduction of the drives. Only by considering the deviation of the repeated drives from one another (precision) together with the accuracy of the lane guidance can the consistent correctness (consistency) of the navigation performance be established.

The results of the calculation of Accuracy and Precision show that, measured against the tolerance limit of 0.05 m, navigation using the LiDAR scanner is inaccurate and imprecise. This binary result needs to be considered in a more differentiated manner. First, the tolerance limit of 0.05 m for Accuracy and Precision can be freely selected and thus adapted to different requirements. This value was selected in the present experiment because the routes of an automatic feed mixer wagon can also lie inside buildings and in confined conditions. As the results show, the navigation performance is significantly better inside the stable building, on the cramped feeding table between the feed fences. The navigation performance in the section “alley” is rated as both accurate and precise, and therefore consistent. The main factors contributing to this improved navigation are the paved concrete feeding table and the numerous objects available for detection in this area. In the outside area beyond the section “alley”, by contrast, the navigation performance deteriorates but remains within an acceptable range. A lower accuracy requirement is acceptable in the outdoor area, as there are fewer obstacles or objects in the driving area. Moreover, another manufacturer of an automatic feeding system has shown that driving accuracy and repeatability are sometimes deliberately manipulated, with a random or regular offset, so that floor coverings such as tar or pavement are stressed more evenly and do not tend to form ruts. This does not change the observed relationship that navigation performance improves as the number of objects in the detection area of the LiDAR scanner increases. The least accurate and least precise sections were the curves, especially in the area of the incline at the stable entrance [mark 2] and the gradient at the stable exit [mark 3].

At these locations, navigation is impaired by the abrupt transition of the floor covering from gravel to concrete; by the rigid mounting of the laser scanner on the test vehicle, which transmits strong vibrations and inclinations to the sensor and shifts its detection area unfavorably; and by the inconvenient position of the laser scanner above the front axle, at an appreciable distance (lever arm) from the drive axle. Even the smallest steering commands are amplified at the laser scanner by this lever arm, which makes it difficult to steer the vehicle very accurately and precisely. This effect is intensified by the vehicle's steering principle, which is realized through different wheel speeds on the drive axle (rear axle). Finally, the calculated characteristics tend to understate the navigation performance. This is due to the 1NN method and the calculation of the Euclidean distance: since the target trajectory is subdivided into a limited number of waypoints, the distances are measured between discrete points rather than perpendicular to the target trajectory. It can be assumed that the vehicle traveled directly between the waypoints and that the navigation performance is therefore better than determined in the calculations. In view of the circumstances described, navigation with LiDAR scanners in an agricultural environment can be classified as sufficient and suitable. The main causes of the navigation deviations were observed in the vehicle design rather than in the capabilities of the LiDAR scanner.

The scope of the measurement data used to determine the accuracy must be considered critically. Including further measuring points could increase the informative value and reliability of this parameter. To achieve this, more waypoints or target trajectory positions would have to be stored before starting the experiment. With fewer gaps in the measurement sequence, the data-cleaning effort could be reduced. Additionally, increasing the number of XTRUE points and reducing the distance between two XTRUE points should improve the accuracy of the measurement data. However, the number of waypoints is also limited, since too many stored waypoints could cause the software to overcompensate and thereby make the data less accurate. Moreover, because of the interpolation between two waypoints, some waypoints could no longer be approached precisely. It can be assumed that the influence of discretization is generally greater on curves than on straight lines. In contrast to the determination of the Accuracy, a sufficiently large database was available to determine the Precision. Although the significance of the Precision results is rated highly, it could be further improved by increasing the number of waypoints. As with the Accuracy, the number of waypoints must be limited to avoid imprecision.
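The trade-off discussed above concerns how densely the target trajectory is sampled. One simple way to add reference points for the evaluation, without changing the waypoints given to the navigation software, is linear interpolation between the stored waypoints. The sketch below (function names hypothetical) assumes that straight segments between waypoints are a reasonable model of the intended path, which, as noted, holds less well on curves:

```python
import math


def path_length(waypoints):
    """Total length of the polyline through the waypoints."""
    return sum(math.dist(a, b) for a, b in zip(waypoints, waypoints[1:]))


def densify(waypoints, spacing):
    """Insert linearly interpolated points so that consecutive points are at most `spacing` apart."""
    if spacing <= 0:
        raise ValueError("spacing must be positive")
    dense = [tuple(waypoints[0])]
    for a, b in zip(waypoints, waypoints[1:]):
        # Number of sub-segments needed for this segment
        n = max(1, math.ceil(math.dist(a, b) / spacing))
        for k in range(1, n + 1):
            t = k / n
            dense.append((a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1])))
    return dense


# Hypothetical 10 m x 10 m loop, resampled every 2.5 m
loop = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0), (0.0, 0.0)]
dense = densify(loop, 2.5)
print(len(dense), path_length(dense))
```

The interpolated points lie exactly on the original polyline, so the total path length is unchanged; only the spacing of the reference points used by the 1NN evaluation decreases.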

6. Conclusions

The calculation of the characteristics Accuracy, Precision, and Consistency describes the LiDAR navigation as inaccurate and imprecise. However, a differentiated, analytical assessment of the test conditions shows that LiDAR scanner-based navigation is a suitable option for automating feeding systems. The potential of this highly developed technology is also relevant for use on agricultural machines. Beyond that, the use of LiDAR in other challenging environments appears promising, e.g., for exploring unknown terrain.

Additionally, navigation with a LiDAR scanner should be tested on a mixer wagon, and long-term tests should assess whether there are restrictions on the use of LiDAR technology on an agricultural vehicle. Furthermore, it would be advantageous to evaluate how navigation with LiDAR can be achieved outdoors with few detectable objects. In this scenario, the question is whether artificial landmarks that blend in homogeneously with the surroundings can be added, or whether GNSS support should be used in addition to LiDAR in the outside area.

A critical concern in automated vehicles is personal protection and collision avoidance. Due to their physical properties, laser scanners are only partially suitable as an optical measurement technology for personal protection in outdoor areas. It is therefore important to clarify whether laser scanners alone are suitable for these tasks or whether other technologies should supplement the concept of an automatic feeding system.