1. Introduction

Today’s world faces several challenges: the COVID-19 pandemic, population growth, climate change, and decreasing food production. Although these problems are independent of one another, their impacts combine. For example, during the pandemic, a number of food-producing facilities halted production, causing panic in parts of the world [1]. People worried about the reduced availability of food items and either hoarded what was available, further compounding the situation, or had to go without. This food insecurity is further compounded by population growth: it has been reported that food production will need to double by 2050 to feed a world population estimated to reach 10 billion [2].

Though daunting, these challenges, especially food insecurity, can be mitigated by advances in science and technology that provide ways to address them. Technological advances are being made in genetic engineering [3], precision agriculture [4], sensor technology, automation [5], and robotics [6]. Technologies such as precision agriculture are expected to help reduce food problems in the future. Precision agriculture can be defined as an approach to crop management that maximizes production while minimizing resource use and environmental impact through specifics-based technology [7,8]. Methods can be developed that combine data analytics from soil sensing systems, remote satellite and drone sensing systems, and yield monitoring systems to optimize crop growth and production [9]. An unexpected challenge for food production in the face of a growing population is that farmers are having difficulty securing laborers for harvesting tasks [10,11]. Current production methods depend on manual labor, so future solutions for food production must include ways to harvest efficiently and sustainably despite a shrinking labor supply. Robotics is one technology that has the potential to address this decreasing labor force.

Robotic technologies are being developed for labor-intensive areas of agriculture, and fruit harvesting has been identified as one such area. Robotic fruit harvesting has been investigated for at least three decades [12,13,14]. Early studies used black-and-white cameras to identify the fruit and a Cartesian robot to pick it [15]. Fruit detection has since progressed through the use of color cameras, stereo cameras, ultrasonic sensors, thermal cameras, and multispectral cameras. Harvesting manipulators have included Cartesian, cylindrical, spherical, and articulated robots. For fruit recognition [16], a critical task for a harvesting robot, algorithms ranging from simple thresholding, multicolor segmentation, and decision-theoretic approaches to artificial neural networks and deep learning have been used [17,18]. Researchers have also used different methods for detaching the fruit from the tree’s branches, including vacuum-based end effectors, multifingered grippers, and cutting end effectors, to name a few [19,20,21].

Although there have been a number of studies on robotic fruit harvesting, producing a commercially viable robot has remained a challenge. One of the open issues is servoing the manipulator towards the fruit [22]. A popular approach uses machine vision and is called visual servoing. In the current study, a visual servoing system for a robotic apple harvester was developed, with machine vision acting as the sensor that monitors the position of the fruit with respect to the end effector. The resulting position error was then used to guide the manipulator towards the fruit. The objectives of this study were to develop a visual servoing method for the robotic harvesting of apples and to evaluate its performance for fruit harvesting.

2. Materials and Methods

2.1. Robotic System Overview

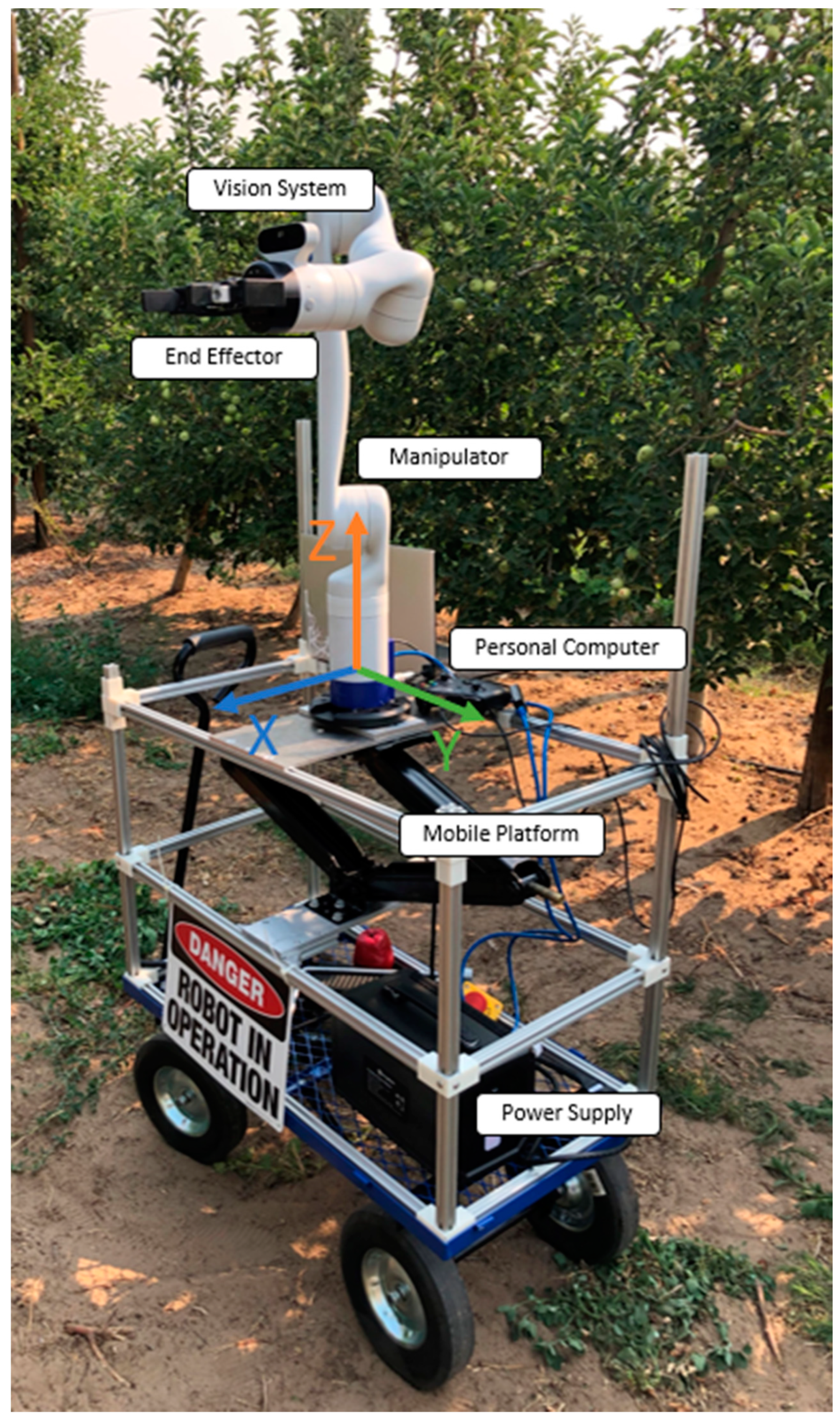

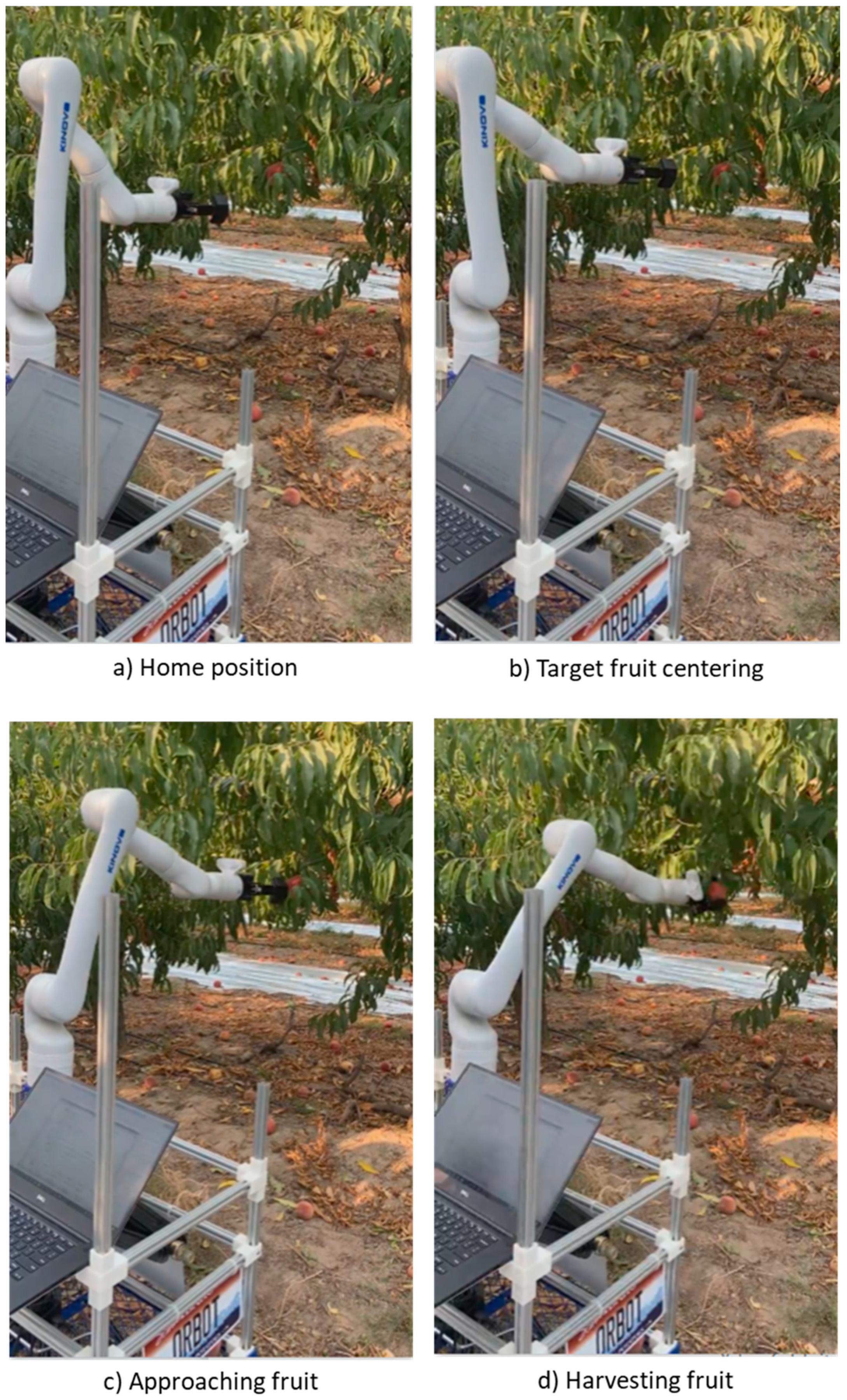

The robotic harvesting system, OrBot (ORchard roBOT), shown in Figure 1, is composed of the following components: the manipulator, the vision system, the end effector, a personal computer, a power supply, and a mobile platform.

The manipulator is a third-generation Kinova robotic arm [23]. This arm has six degrees of freedom and a reach of 902 mm. It has a full-range continuous payload of 2 kg, which easily exceeds the weight of an apple, and a maximum Cartesian translation speed of 500 mm/s. Its average power consumption is 36 W. These characteristics make this manipulator suitable for fruit harvesting.

The vision system consists of a color sensor and a depth sensor. The color sensor (Omnivision OV5640) has a resolution of 1920 × 1080 pixels and a field of view of 65 degrees. The depth sensor (Intel RealSense Depth Module D410), which combines stereo vision with active IR sensing, has a resolution of 480 × 270 pixels and a field of view of 72 degrees. The color sensor is used to detect the fruit, while the depth sensor is used to estimate the distance of the fruit from the end effector.
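To relate pixel offsets to viewing angles, and to see why the 4:1 resolution ratio between the color and depth images is not by itself a pixel-to-pixel mapping (the two sensors have different fields of view), a pinhole-camera sketch can help. It assumes that the quoted fields of view are horizontal, that each principal point lies at the image center, and that both sensors share one optical center; the source does not state any of these, and a real setup would use calibrated intrinsics and extrinsics instead. The function names are illustrative only.

```python
import math


def focal_length_px(width_px: int, hfov_deg: float) -> float:
    """Pinhole focal length in pixels, assuming hfov_deg is the HORIZONTAL field of view."""
    return (width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def pixel_to_angle_deg(u: float, width_px: int, hfov_deg: float) -> float:
    """Horizontal angle (degrees) between the optical axis and image column u.

    Assumes the principal point lies at the image centre (no calibration data).
    """
    f = focal_length_px(width_px, hfov_deg)
    return math.degrees(math.atan((u - width_px / 2.0) / f))


def color_to_depth_column(u_color: float,
                          color_w: int = 1920, color_fov: float = 65.0,
                          depth_w: int = 480, depth_fov: float = 72.0) -> float:
    """Map a colour-image column to the depth-image column looking in the same direction.

    Assumes both sensors share one optical centre and axis (baseline ignored).
    """
    x_norm = (u_color - color_w / 2.0) / focal_length_px(color_w, color_fov)  # normalised coordinate
    return depth_w / 2.0 + focal_length_px(depth_w, depth_fov) * x_norm


if __name__ == "__main__":
    print(f"colour focal length: {focal_length_px(1920, 65.0):.1f} px")
    print(f"depth focal length:  {focal_length_px(480, 72.0):.1f} px")
    # The right edge of the colour image sits at half the colour FOV (32.5 degrees) ...
    print(f"edge angle: {pixel_to_angle_deg(1920, 1920, 65.0):.1f} deg")
    # ... which lands inside, not at the edge of, the wider depth image.
    print(f"depth column for colour edge: {color_to_depth_column(1920):.1f}")
```

Because the depth sensor's field of view is wider, the whole color image maps onto a central sub-region of the depth image rather than onto all of it.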

The end effector is a standard two-finger gripper with a stroke of 85 mm and a grip force of 20 to 235 N, which is adjusted according to the target fruit. Custom 3D-printed fingers were designed and fabricated to allow a larger stroke for larger fruit and to conform to the spherical shape of the fruit.

The main control unit of the harvesting process is a Dell XPS 15 9570 laptop computer. The manipulator is controlled using Kinova Kortex, the software platform developed and supplied by the manufacturer [24]. Kortex includes Application Programming Interface (API) tools that allow customized control. The control programs for the manipulator and the vision system were developed using a combination of the Kinova Kortex API, Matlab, the Image Processing Toolbox, and the Image Acquisition Toolbox [25].

The robotic manipulator with the gripper and the control unit are mounted on a mobile platform, which allows the system to move within the orchard. The mobile platform is a hand-driven cart with a customized aluminum structure and 3D-printed parts, fabricated and assembled at the university machine shop. The robot is mounted on this structure, which allows the height of the robot above the ground to be adjusted. In addition to the robot and laptop, the mobile platform carries the system’s power supply, a portable power station with a capacity of 540 Wh.
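As a rough check on how long the 540 Wh power station can sustain the arm, the idealized runtime is capacity divided by average load. The sketch below is arithmetic only; the laptop draw (60 W) and the usable-capacity fraction (85%) in the second call are hypothetical values, not figures from this study.

```python
from typing import Dict


def runtime_hours(capacity_wh: float, loads_w: Dict[str, float],
                  usable_fraction: float = 1.0) -> float:
    """Idealised runtime of a battery feeding constant average loads."""
    total_w = sum(loads_w.values())
    if total_w <= 0:
        raise ValueError("total load must be positive")
    return capacity_wh * usable_fraction / total_w


if __name__ == "__main__":
    # Arm alone at its stated 36 W average draw: an upper bound on runtime.
    print(runtime_hours(540.0, {"arm": 36.0}))  # 15.0
    # HYPOTHETICAL: laptop drawing 60 W and only 85% of the capacity usable.
    print(round(runtime_hours(540.0, {"arm": 36.0, "laptop": 60.0}, usable_fraction=0.85), 2))
```

The arm-only figure is an upper bound; every additional load on the same station (laptop, sensors) shortens the runtime proportionally.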

2.2. Visual Servo Control

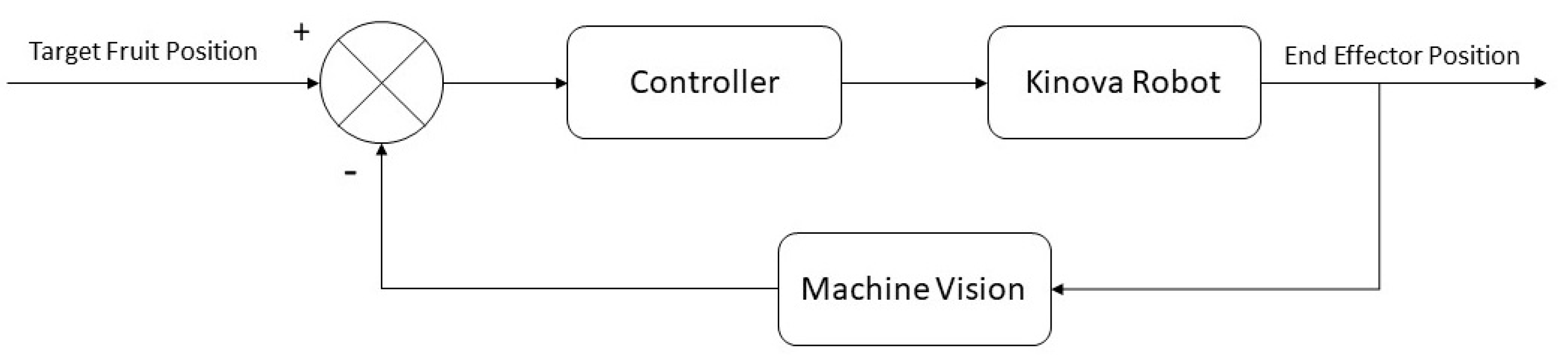

In addition to recognizing the fruit, the system must guide the end effector towards it, which requires a form of eye-hand coordination. The visual servo control (Figure 2) is based on a negative feedback system. The controlled process is the harvesting motion of the manipulator, and the output of the process is the position of the gripper. The goal of the visual servo control is for the gripper to make contact with the fruit.

The position of the gripper with respect to the origin of the manipulator is known, but the position of the fruit relative to the gripper is not. Machine vision is therefore used to estimate the position of the fruit and, from it, the position of the gripper with respect to the fruit. The error between the gripper position and the target fruit position is used to control the manipulator motion.
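The negative feedback idea can be illustrated with a minimal discrete-time proportional loop in which each step moves the gripper by a fraction of the remaining error. This is a generic sketch, not OrBot's controller: the gain, tolerance, and the idealized plant (the gripper moves exactly as commanded) are assumptions.

```python
from typing import List, Sequence, Tuple


def servo_step(gripper: Sequence[float], target: Sequence[float],
               gain: float) -> Tuple[List[float], List[float]]:
    """One negative-feedback update: error = target - gripper, move by gain * error."""
    error = [t - g for t, g in zip(target, gripper)]
    new_gripper = [g + gain * e for g, e in zip(gripper, error)]
    return new_gripper, error


def run_servo(gripper, target, gain=0.3, tol=1e-3, max_steps=200):
    """Iterate until the error norm drops below tol; returns final position and error history.

    Assumes an ideal plant: the gripper moves exactly as commanded each step.
    """
    history: List[float] = []
    for _ in range(max_steps):
        gripper, error = servo_step(gripper, target, gain)
        err_norm = sum(e * e for e in error) ** 0.5
        history.append(err_norm)  # error measured before this step's move
        if err_norm < tol:
            break
    return gripper, history


if __name__ == "__main__":
    final, hist = run_servo([0.0, 0.0], [100.0, -50.0])
    print(f"steps: {len(hist)}, final position: {final[0]:.4f}, {final[1]:.4f}")
```

With a gain between 0 and 1 the error shrinks by the same factor every step and never changes sign; a gain above 1 would overshoot the target and oscillate.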

A visual servo system for fruit harvesting can use one of two camera configurations: eye-in-hand (the camera is attached to the hand) or eye-to-hand (the camera is in a fixed position). OrBot uses the eye-in-hand configuration. In addition to the camera configuration, visual servoing can be implemented using position-based visual servoing (PBVS) or image-based visual servoing (IBVS) [26]. PBVS uses image processing to extract features from the image, derives the position of the target with respect to the camera, and from that the position of the target with respect to the robot. IBVS uses only the features extracted from the image to perform visual servoing. Because OrBot uses the eye-in-hand configuration, visual servoing was developed using IBVS, which simplifies the process because only image features are used to drive the robot motion; there is no need to calculate the geometric relationship between the target and the robot. It is important to note that IBVS is only effective if the features of the target are properly extracted. The result of this process, called fruit fixation, is that the fruit is centered in the camera image, placing it directly in line with the harvesting end effector. Because the depth sensor is also mounted on the end effector, this in turn facilitates measuring the distance to the fruit.
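For a single point feature such as the fruit centroid, the standard IBVS formulation in the visual servoing literature relates the image-point velocity to the camera velocity through an interaction matrix L, and the classic control law is v = -λ · pinv(L) · e, where e is the image error. The sketch below implements that textbook law with NumPy for centering, i.e. a desired feature of (0, 0) in normalized image coordinates. The source does not specify OrBot's control law, so treat the gain, the depth value Z, and the Euler simulation as assumptions.

```python
import numpy as np


def point_interaction_matrix(x: float, y: float, Z: float) -> np.ndarray:
    """Interaction matrix of a normalised image point (x, y) at depth Z.

    Velocity ordering: (vx, vy, vz, wx, wy, wz) in the camera frame.
    """
    return np.array([
        [-1.0 / Z, 0.0, x / Z, x * y, -(1.0 + x * x), y],
        [0.0, -1.0 / Z, y / Z, 1.0 + y * y, -x * y, -x],
    ])


def ibvs_velocity(x: float, y: float, Z: float, gain: float = 0.5,
                  x_star: float = 0.0, y_star: float = 0.0) -> np.ndarray:
    """Classic IBVS law v = -gain * pinv(L) @ e, driving the point toward (x_star, y_star)."""
    e = np.array([x - x_star, y - y_star])
    L = point_interaction_matrix(x, y, Z)
    return -gain * np.linalg.pinv(L) @ e


def simulate(P, steps: int = 50, dt: float = 0.1, gain: float = 0.5):
    """Euler-integrate a static 3-D point P = (X, Y, Z), expressed in the camera frame,
    while the camera follows the IBVS law. Returns the image-error norm per step."""
    P = np.asarray(P, dtype=float)
    errors = []
    for _ in range(steps):
        x, y, Z = P[0] / P[2], P[1] / P[2], P[2]
        errors.append(float(np.hypot(x, y)))
        twist = ibvs_velocity(x, y, Z, gain)
        v, w = twist[:3], twist[3:]
        P = P + dt * (-v - np.cross(w, P))  # apparent motion of a static point
    return errors


if __name__ == "__main__":
    errs = simulate([0.10, -0.05, 0.60])  # fruit 0.6 m ahead, off-centre (illustrative)
    print(f"initial error {errs[0]:.4f}, final error {errs[-1]:.4f}")
```

Because L has full row rank, L · pinv(L) is the identity, so the image error obeys de/dt = -λe and decays exponentially without oscillation. An arm that can only translate would use just the first three columns of L.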

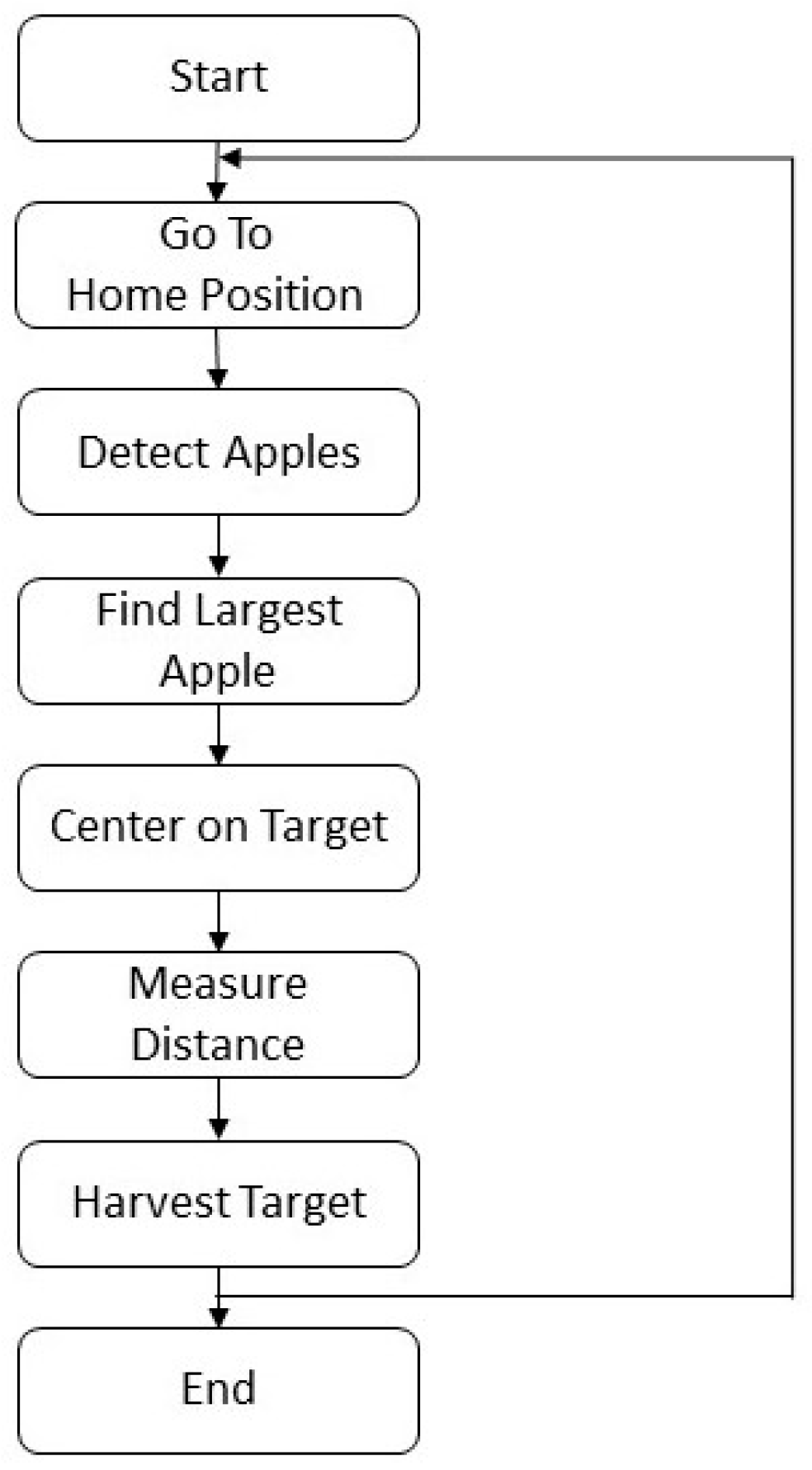

The visual servo control is integrated into the harvesting process, as defined by the following tasks:

The gripper is moved to a predefined home position and fruit search is initiated.

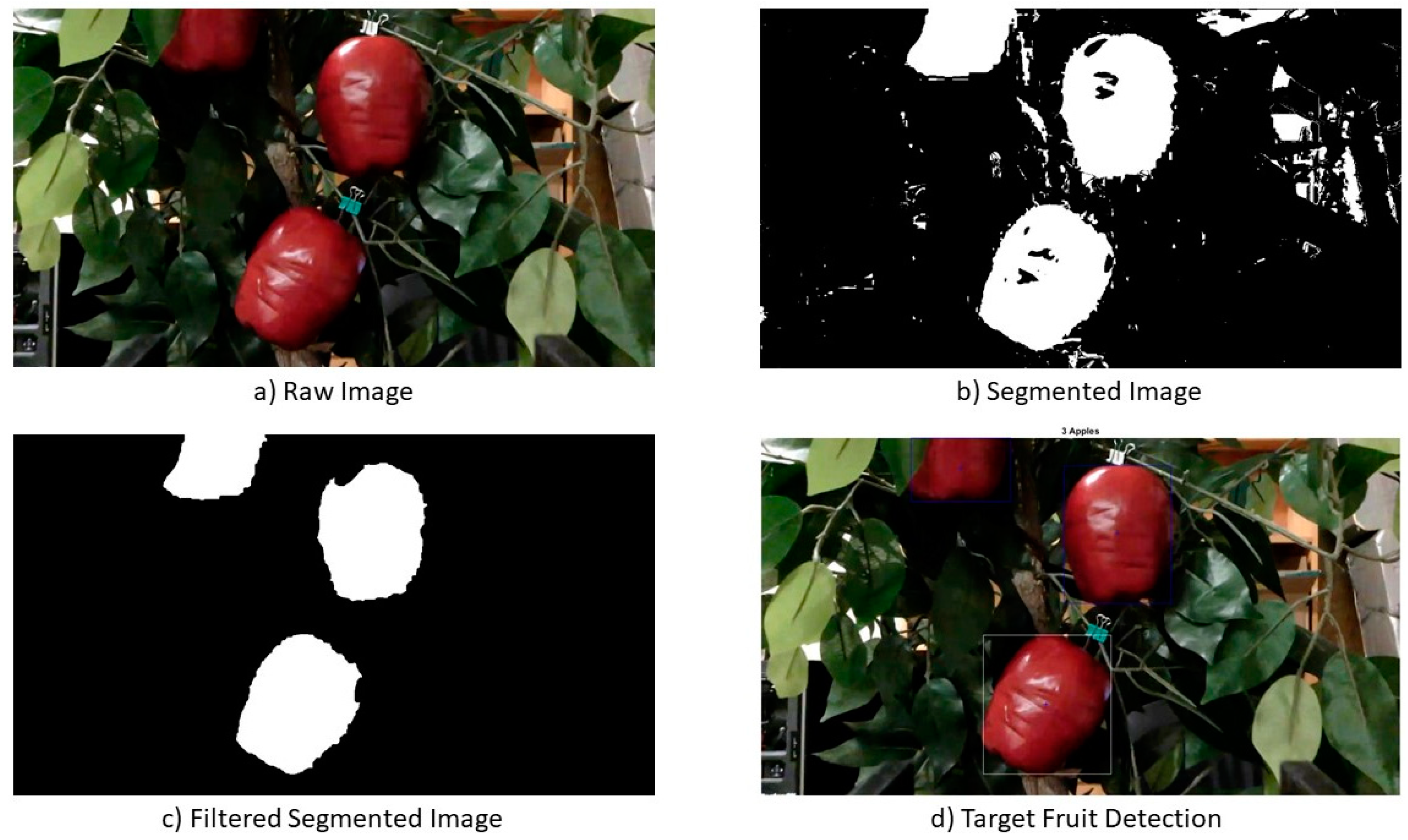

An image is collected from the color sensor.

The captured image is segmented to separate the fruit from the background. Fruit segmentation is based on an artificial neural network that takes each pixel’s chromaticity coordinates r and g as inputs and produces a binary output: fruit or non-fruit.

If fruit pixels are found, the binary segmentation image is filtered by size and position using blob analysis.

The fruit with the largest area in the image is selected as the target fruit.

This process is shown graphically in Figure 3.
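The segmentation and target-selection steps above can be sketched as follows. The chromaticity computation r = R/(R+G+B), g = G/(R+G+B) is standard, but OrBot's trained neural network is not reproduced here: `placeholder_classifier` is a hypothetical stand-in that simply flags reddish pixels, and any trained model with the same (r, g) to boolean interface could be substituted. Only the size filter of the blob analysis is shown; the position filter is omitted because its criteria are not described.

```python
from collections import deque
from typing import Callable, Optional, Tuple

import numpy as np


def chromaticity(rgb: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Per-pixel chromaticity r = R/(R+G+B) and g = G/(R+G+B) of an H x W x 3 image."""
    rgb = rgb.astype(float)
    total = rgb.sum(axis=2)
    total[total == 0] = 1.0  # black pixels: avoid 0/0, they get r = g = 0
    return rgb[..., 0] / total, rgb[..., 1] / total


def placeholder_classifier(r: np.ndarray, g: np.ndarray) -> np.ndarray:
    """HYPOTHETICAL stand-in for the trained ANN: flags strongly reddish pixels as fruit."""
    return (r > 0.5) & (g < 0.3)


def largest_blob(mask: np.ndarray, min_area: int = 50) -> Optional[Tuple[int, float, float]]:
    """4-connected blob analysis with a size filter.

    Returns (area, centroid_row, centroid_col) of the largest blob with at least
    min_area pixels, or None if no blob qualifies.
    """
    h, w = mask.shape
    seen = np.zeros((h, w), dtype=bool)
    best = None
    for r0 in range(h):
        for c0 in range(w):
            if not mask[r0, c0] or seen[r0, c0]:
                continue
            seen[r0, c0] = True
            queue, pixels = deque([(r0, c0)]), []
            while queue:  # breadth-first flood fill of one blob
                r, c = queue.popleft()
                pixels.append((r, c))
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if 0 <= nr < h and 0 <= nc < w and mask[nr, nc] and not seen[nr, nc]:
                        seen[nr, nc] = True
                        queue.append((nr, nc))
            area = len(pixels)
            if area >= min_area and (best is None or area > best[0]):
                rows, cols = zip(*pixels)
                best = (area, sum(rows) / area, sum(cols) / area)
    return best


def select_target(rgb: np.ndarray,
                  classifier: Callable[[np.ndarray, np.ndarray], np.ndarray] = placeholder_classifier,
                  min_area: int = 50):
    """Segment the image and return the largest fruit blob (the target), or None."""
    r, g = chromaticity(rgb)
    return largest_blob(classifier(r, g), min_area)


if __name__ == "__main__":
    img = np.zeros((40, 40, 3), dtype=np.uint8)
    img[..., 1] = 150                    # green background
    img[5:15, 5:15] = (200, 30, 30)      # large red "fruit"
    img[30:35, 30:35] = (200, 30, 30)    # small red "fruit"
    print(select_target(img, min_area=20))
```

The pure-Python labelling loop is for clarity only; on a full 1920 × 1080 image a library routine (for example MATLAB's `bwlabel`/`regionprops` or `scipy.ndimage.label`) would be far faster.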

The distance from the end effector to the fruit is then computed and the manipulator is actuated to move towards the fruit and pick it. The manipulator then goes back to the home position and starts the process again until no fruit are found in the image.

Figure 4 shows the flow chart that OrBot follows.
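The task sequence and the repeat-until-no-fruit loop can be expressed as a small control loop. Every hook in `HarvestHooks` is hypothetical: they stand in for hardware and vision routines and are not Kinova Kortex API calls. The `max_attempts` guard is also an added assumption, to avoid looping forever on a fruit that cannot be picked.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

Target = Tuple[float, float]  # fruit centroid in the image, pixels


@dataclass
class HarvestHooks:
    """HYPOTHETICAL hardware/vision hooks; none of these are Kinova Kortex API calls."""
    go_home: Callable[[], None]
    detect_target: Callable[[], Optional[Target]]   # capture, segment, pick largest blob
    center_on: Callable[[Target], None]             # visual servoing until fixated
    measure_distance: Callable[[], float]           # depth sensor reading, mm
    approach_and_pick: Callable[[float], bool]      # True on a successful pick


def harvest_all(hooks: HarvestHooks, max_attempts: int = 20) -> int:
    """Repeat search -> fixate -> measure -> pick until no fruit is found in the image."""
    picked = 0
    for _ in range(max_attempts):  # guard against endlessly retrying an unreachable fruit
        hooks.go_home()
        target = hooks.detect_target()
        if target is None:
            break
        hooks.center_on(target)
        distance = hooks.measure_distance()
        if hooks.approach_and_pick(distance):
            picked += 1
    hooks.go_home()
    return picked


if __name__ == "__main__":
    fruits = [(960.0, 540.0), (700.0, 400.0)]  # fake detections
    hooks = HarvestHooks(
        go_home=lambda: None,
        detect_target=lambda: fruits[0] if fruits else None,
        center_on=lambda t: None,
        measure_distance=lambda: 400.0,
        approach_and_pick=lambda d: bool(fruits.pop(0)),
    )
    print(harvest_all(hooks))  # 2
```

Passing the steps in as callables keeps the loop testable with fakes, as in the usage example, before any hardware is attached.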

2.3. Evaluation of the Visual Servo Control

The visual servo control described above uses the image-based visual servoing method. In this method, the control of the manipulator is dependent on the position of the target object in the image. The visual servo control developed in this project was evaluated using three tests: fixation test, visual servo speed test, and harvesting test.

2.3.1. Fixation Test

Fixation is the act of centering the target object in view, much as people center an object in their field of view when they track it. Because OrBot uses image-based visual servoing with an eye-in-hand camera, fixation was used to center the target fruit in the image. The test was performed in the lab with configurations of one, two, and three fruit clusters, and five different distances were attempted for each cluster configuration. The fruits were arranged randomly in front of the robot and within the field of view of the camera. The goal of the test was to evaluate whether the vision system could detect the target fruit, defined as the largest fruit in the image, and then center it in the image.

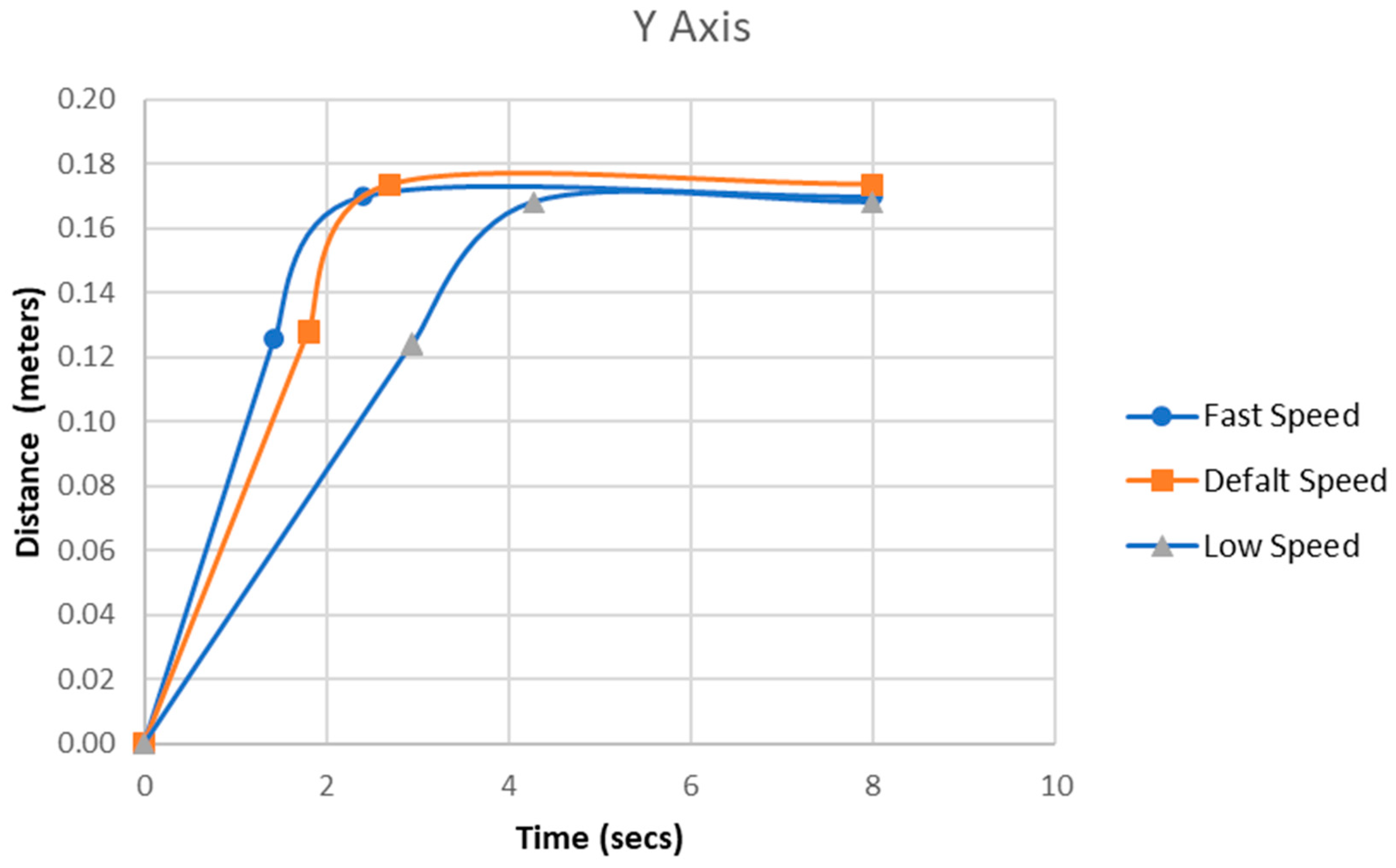

2.3.2. Visual Servo Speed Test

The visual servo speed test evaluates how quickly the harvesting robot detects the target and centers the end effector on it. The test was performed in the lab using the same fruit configurations as the fixation test. Each test was performed three times at three different speeds: slow (50% of the default value), medium (the default value), and fast (80% of the maximum value). The goal was to evaluate the visual servoing speed and determine the optimum speed for harvesting.

2.3.3. Fruit Harvesting Test

The goal of the harvesting test is to evaluate the performance of the robot as it harvests fruit. In addition to scoring a successful or failed harvest, the test also measured the time it took to complete the full sequence of tasks for different fruit arrangements. The arrangements varied from one to five apples inside the work envelope of the robot. An artificial tree holding plastic apples clipped to the branches was positioned about 400 mm away from the home position of the robot. This position ensured that the robot could reach all the fruit.

In addition to the indoor evaluation, OrBot was tested in a commercial fruit orchard. OrBot is designed to harvest apples, but apples were still out of season at the time of evaluation. However, a peach orchard in the local area had just been harvested, and the owner gave the team permission to test OrBot on the peaches that remained on the trees. Since the size and color of mature peaches closely matched OrBot’s design parameters, this alternative fruit provided an opportunity to evaluate OrBot’s performance over thirty harvesting attempts.

4. Discussion

Several problems need to be resolved to harvest fruit successfully with a robot. The fruit must be located and recognized, the end effector must be guided to the fruit, and the fruit must be detached using a technique that closely mimics the motions of human pickers so as to minimize damage to the tree.

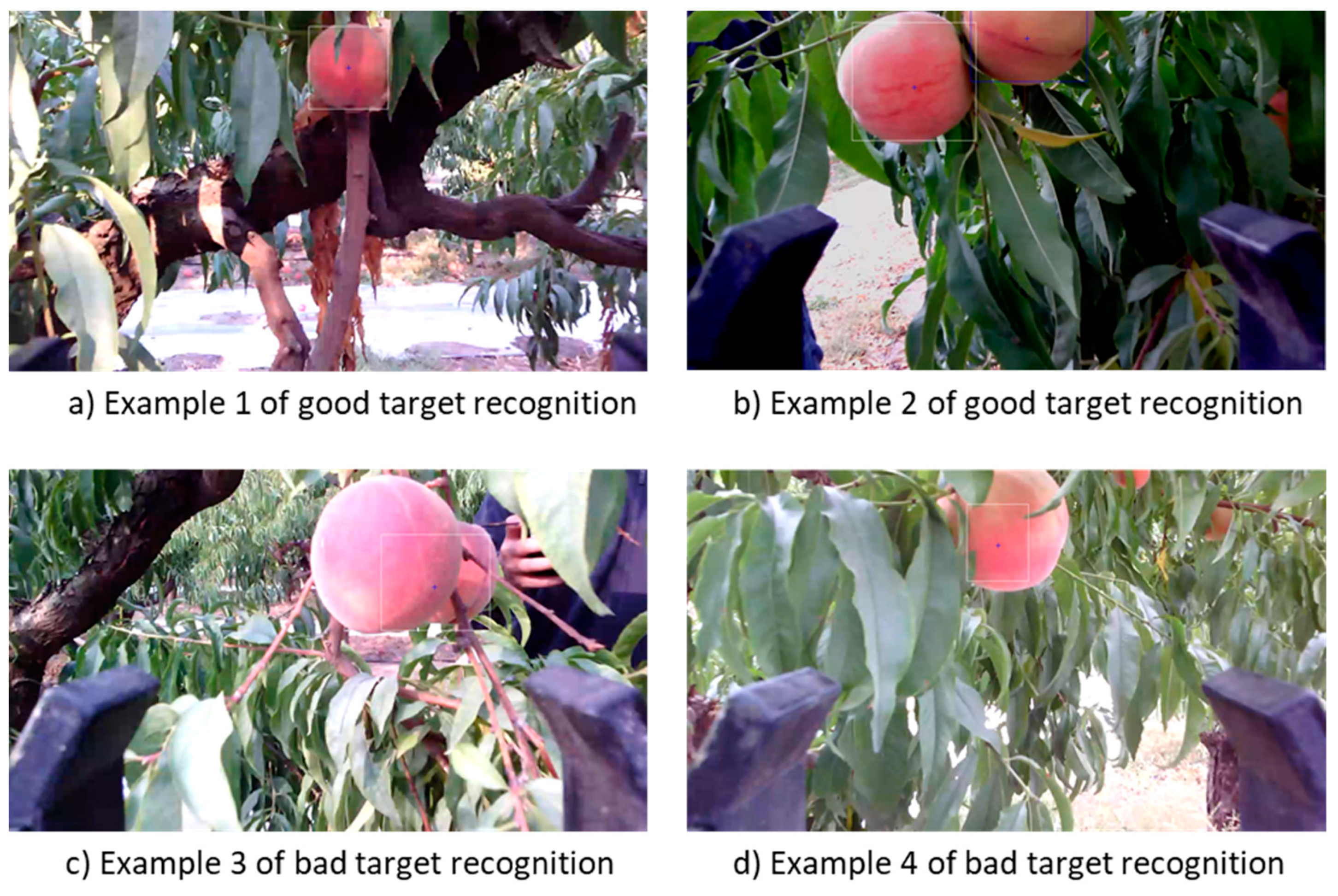

The results of the fixation test show that the developed visual servo system was able to position the target fruit in the center of the field of view. As shown in the results, the system’s response in centering the target fruit was overdamped. Compared with previous work on robotic fruit harvesting [28,29,30], this study characterizes the response of the visual servoing, which is important for second-order and higher-order systems. An overdamped response has no oscillation, so the robot moves smoothly. The successful centering of the fruit also showed that the image processing, specifically the segmentation of the fruit from the background, was effective. The artificial neural network, with chromaticity r and g as inputs, proved effective in segmenting the fruit. The initial tests were performed indoors under controlled lighting, which did not affect the segmentation of the fruit from the background. However, when OrBot was tested on peaches outdoors in an orchard, the results showed that the recognition system was affected by natural lighting conditions. As seen in the examples in Figure 9, some fruits were only partially recognized. This finding is important because a partially recognized target fruit would not be properly centered by the manipulator, and its distance would not be properly measured, leading to missed targets. Although OrBot had a relatively high harvesting success rate for peaches, it should be noted that the segmentation algorithm was trained for apples. Additionally, the segmentation algorithm should be developed to adapt to natural lighting conditions. An alternative solution would be to harvest at night using controlled artificial lighting.
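The link between an overdamped response and the absence of oscillation follows from the standard second-order model x'' + 2ζω_n x' + ω_n² x = ω_n² u. For a damping ratio ζ ≥ 1 the unit-step response has no overshoot, while for ζ < 1 the peak overshoot is 100 · exp(-ζπ / √(1 - ζ²)) percent. The sketch below checks this numerically; ω_n = 2 rad/s and the integration step are arbitrary choices, not values identified from OrBot's data.

```python
import math
from typing import Tuple


def percent_overshoot(zeta: float) -> float:
    """Peak overshoot (%) of a standard second-order unit-step response."""
    if zeta >= 1.0:
        return 0.0  # critically damped or overdamped: no overshoot
    return 100.0 * math.exp(-zeta * math.pi / math.sqrt(1.0 - zeta * zeta))


def step_response(zeta: float, wn: float = 2.0, t_end: float = 10.0,
                  dt: float = 1e-3) -> Tuple[float, float]:
    """Integrate x'' + 2*zeta*wn*x' + wn^2*x = wn^2 (unit step) from rest with
    semi-implicit Euler. Returns (final value, peak value)."""
    x, v, peak = 0.0, 0.0, 0.0
    for _ in range(int(t_end / dt)):
        a = wn * wn * (1.0 - x) - 2.0 * zeta * wn * v
        v += a * dt
        x += v * dt
        peak = max(peak, x)
    return x, peak


if __name__ == "__main__":
    for zeta in (0.5, 1.0, 1.5):
        final, peak = step_response(zeta)
        print(f"zeta={zeta}: predicted overshoot {percent_overshoot(zeta):.1f}%, "
              f"simulated peak {peak:.3f}, final {final:.3f}")
```

An overdamped loop trades speed for smoothness: it approaches the target monotonically but more slowly than a lightly underdamped one, which is relevant to the speed results discussed next.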

The speed of the response was also evaluated in both the fixation process and fruit harvesting. The fast speed configuration was shown to be suitable for fruit harvesting, as it consistently harvested a fruit in 12 s across different arbitrary positions. Although this is slower than a human picker, the harvesting speed is comparable with that of other harvesting robots developed for apples. The harvesting robot developed by Hohimer et al. [28] achieved a harvesting time of 7 s per fruit; however, that robot had a smaller work envelope than OrBot. Another apple harvesting robot [29] that used a 6-DOF manipulator reported a harvesting time of 16 s per fruit. Compared with robotic harvesters for other crops [30], OrBot is faster than the average of 18.88 s per fruit reported for currently developed harvesting robots. It should be noted that harvesting speed depends on several factors, such as the actuators’ angular speed, the image processing speed, and the manipulator’s work envelope. Currently, OrBot’s harvesting operation is implemented in Matlab, which is not optimized for real-time operation. Even at its current speed, the robot could work cooperatively with human pickers to alleviate labor shortages. One advantage of a robot, even a slow one, is its ability to work continuously without breaks.
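Converting the reported cycle times to hourly throughput makes the comparison concrete. The sketch is idealized arithmetic: it assumes back-to-back picks and ignores platform repositioning, fruit unloading, and failed attempts.

```python
def fruit_per_hour(cycle_time_s: float) -> float:
    """Idealised throughput for back-to-back picks at a fixed cycle time."""
    return 3600.0 / cycle_time_s


CYCLE_TIMES_S = {
    "OrBot (fast setting)": 12.0,
    "Hohimer et al. [28]": 7.0,
    "6-DOF apple robot [29]": 16.0,
    "Average of robots in [30]": 18.88,
}

if __name__ == "__main__":
    for name, t in CYCLE_TIMES_S.items():
        print(f"{name}: {t:.2f} s/fruit -> {fruit_per_hour(t):.0f} fruit/h")
    # An 8 h shift at 12 s per fruit, ignoring all overheads:
    print(f"OrBot, 8 h shift: {8 * fruit_per_hour(12.0):.0f} fruit")  # 2400 fruit
```

At 12 s per fruit this gives 300 fruit per hour, against roughly 191 per hour for the 18.88 s average; continuous operation, noted above as an advantage of robots, scales these figures with hours worked rather than with labor availability.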

Future tasks for the robotic harvesting project using OrBot include optimization of the visual servoing program, nighttime operation tests, the addition of an eye-to-hand configuration, and the development of a soft adaptive fruit gripper. To optimize the program, the developed visual servoing will be implemented using the Robot Operating System (ROS). OrBot will also be tested for nighttime harvesting using artificial lighting; as mentioned earlier, nighttime harvesting offers a way around the variable lighting conditions caused by natural light. Another possibility would be to add a second camera fixed in position (eye-to-hand). This camera could provide a wider view of the scene, allowing the robot to see more fruits. By detecting more fruits, the robotic system could store the fruit positions and use an optimal path planning strategy to harvest multiple fruits, which could be more efficient and reduce harvesting time. Lastly, a soft adaptive robotic fruit gripper should be developed. The soft aspect relates to the gripper material, which should not damage the fruit. The adaptive aspect refers to tactile feedback that informs the robotic system of the pressure the gripper applies to the fruit.
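As a simple baseline for ordering stored fruit positions, a greedy nearest-neighbour heuristic visits the closest unpicked fruit next. It is not an optimal planner (optimal ordering is a travelling-salesman problem), and it assumes the arm moves directly from fruit to fruit rather than returning home after each pick as OrBot currently does; the positions and units are illustrative.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # fruit position, e.g. in mm relative to the robot base


def nearest_neighbour_order(home: Point, fruits: Sequence[Point]) -> List[int]:
    """Greedy ordering: from the current position, always visit the closest unpicked fruit."""
    remaining = list(range(len(fruits)))
    order: List[int] = []
    current = home
    while remaining:
        nxt = min(remaining, key=lambda i: math.dist(current, fruits[i]))
        order.append(nxt)
        remaining.remove(nxt)
        current = fruits[nxt]
    return order


def path_length(home: Point, fruits: Sequence[Point], order: Sequence[int],
                return_home: bool = True) -> float:
    """Straight-line length of home -> fruits in the given order (-> home)."""
    stops = [home] + [fruits[i] for i in order] + ([home] if return_home else [])
    return sum(math.dist(a, b) for a, b in zip(stops, stops[1:]))


if __name__ == "__main__":
    home = (0.0, 0.0, 0.0)
    fruits = [(300.0, 0.0, 0.0), (100.0, 0.0, 0.0), (200.0, 50.0, 0.0)]
    order = nearest_neighbour_order(home, fruits)
    print(order, round(path_length(home, fruits, order), 1))
```

Comparing `path_length` for the greedy order against an exhaustive search over small fruit sets is one way to judge whether a more elaborate planner is worth the extra computation.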