1. Introduction

Urban trees are fundamental components of sustainable and livable smart cities. Beyond their ecological, social, and economic functions, they contribute to climate adaptation, environmental resilience, and data-driven green infrastructure planning. As natural environmental regulators and key indicators in digital urban monitoring systems, trees play a vital role in mitigating heat islands, filtering air pollutants, sequestering atmospheric carbon, and reducing surface runoff, thereby improving environmental quality and urban hydrology [1,2,3,4,5]. They also provide social and psychological benefits by enhancing aesthetics, reducing noise, and promoting mental well-being [6,7], while contributing economically through increased property values and reduced energy consumption in residential and commercial areas [8]. These diverse ecosystem services make accurate and timely tree crown mapping a key requirement for urban planning and smart city management [9,10].

Remote sensing provides an efficient and scalable means to monitor urban vegetation. A variety of platforms, including ground-based systems, aerial surveys, and satellites, offer structural and spectral information at different spatial and temporal resolutions. Ground-based sensors such as terrestrial LiDAR or field spectrometers provide detailed canopy information but are labor-intensive and spatially limited. Aerial platforms equipped with optical or LiDAR sensors capture fine geometric detail but are costly and logistically complex for frequent monitoring. In contrast, satellite imagery provides repeatable and cost-effective coverage at regional to global scales. High-resolution commercial satellites such as WorldView-2, WorldView-3, and GeoEye-1, with spatial resolutions as fine as 0.31–0.46 m, enable precise delineation of individual tree crowns in complex urban environments [11,12]. Their multispectral and near-infrared (NIR) capabilities also allow for vegetation health assessment, while Sentinel-2’s frequent revisit cycle supports dynamic urban forest monitoring [13,14].

Tree detection and delineation methods have evolved significantly. Early conventional techniques, including NDVI thresholding and morphological operations, were simple and computationally light but struggled to separate individual trees in dense or shadowed urban scenes [15,16,17,18,19,20,21,22]. Machine learning algorithms such as support vector machines (SVMs) and random forests (RFs) improved classification performance [23,24,25,26], yet they relied on handcrafted features and often required extensive parameter tuning. Clustering and regression-based approaches also aided vegetation mapping but lacked the spatial precision necessary for object-level crown delineation [27,28,29,30,31,32].

Recent advances in artificial intelligence, particularly deep learning, have revolutionized remote sensing-based vegetation mapping [33]. Convolutional neural networks (CNNs) such as U-Net and Mask R-CNN automate feature extraction and achieve state-of-the-art accuracy in heterogeneous urban conditions [33,34,35,36]. Hybrid frameworks integrating multispectral, hyperspectral, and LiDAR data further enhance model robustness [37]. Transformer-based models and foundation networks such as the Segment Anything Model (SAM) have also emerged, enabling generalizable segmentation with minimal supervision [38,39]. Despite these advances, most studies rely on single-sensor or high-cost datasets (e.g., UAV or LiDAR), and few assess whether deep learning models maintain consistent accuracy across sensors with heterogeneous spatial and spectral resolutions.

We conducted a comparative analysis of three prominent segmentation methods, as summarized in Table 1.

In summary, integrating high-resolution satellite imagery with deep learning offers a scalable, automated, and precise solution for urban tree crown detection—an essential component of smart city infrastructure. Addressing the limitations of prior work, this study introduces a unified deep learning framework that integrates data from three commercial high-resolution satellites—GeoEye-1, WorldView-2, and WorldView-3—within a single processing pipeline for the first time in the context of urban tree crown segmentation. This multi-sensor integration represents the core novelty of the research, demonstrating that a Residual U-Net architecture enhanced with Attention Gates (AGs) can maintain high accuracy and robustness across sensors of differing spatial and spectral characteristics.

The study introduces several methodological advancements:

A hybrid annotation approach combining NDVI-based region growing, manual labeling, and the Segment Anything Model (SAM) to efficiently generate accurate and diverse training masks.

A unified multi-sensor RGBN dataset (GeoEye-1, WorldView-2, and WorldView-3) designed to evaluate model performance across different spatial and spectral domains.

An enhanced Residual U-Net with Attention Gates, specifically optimized for high-resolution satellite imagery.

A fully automated workflow that remains scalable within the spatial resolution interval of the integrated sensors, supporting practical implementation for urban forestry monitoring and smart city applications.

Collectively, these contributions establish the first comprehensive framework for multi-sensor, satellite-based urban tree crown segmentation using deep learning. The approach advances understanding of cross-sensor transferability while providing a cost-effective, operational pathway for large-scale urban forest monitoring at city and regional levels.

2. Dataset

2.1. Dataset Preparation

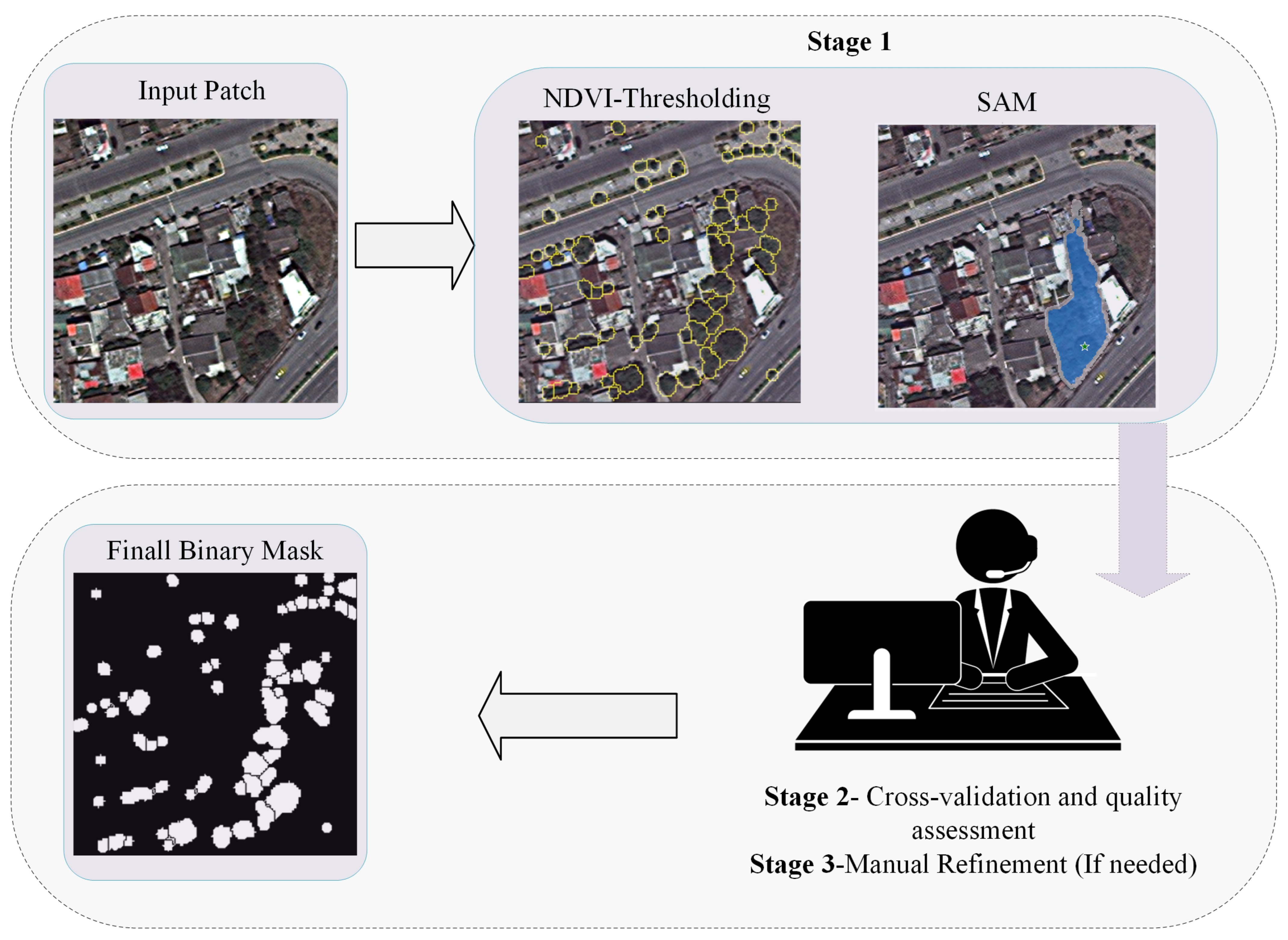

High-quality and reliable ground-truth masks are essential for accurate tree crown segmentation. To achieve this, we employed a hybrid annotation strategy that combines automated and manual methods to ensure both efficiency and precision in the labeling process. The workflow consisted of three main stages: (1) automated segmentation using NDVI–watershed and the Segment Anything Model (SAM), (2) comparative validation, and (3) manual refinement.

Stage 1—Automated segmentation with NDVI and SAM: We first generated preliminary masks of the pan-sharpened images (Figure 1) using two complementary segmentation approaches. The normalized difference vegetation index (NDVI) was computed from the red and near-infrared bands according to:

$$\mathrm{NDVI} = \frac{\mathrm{NIR} - \mathrm{Red}}{\mathrm{NIR} + \mathrm{Red}}$$

An NDVI threshold was applied to isolate vegetation, followed by a watershed region-growing algorithm to separate adjacent tree crowns. The watershed method interprets pixel intensities as a topographic surface, where flooding from local minima delineates catchment basins corresponding to individual tree crowns. Let $I(x, y)$ denote the pixel intensity function. The distance transform $D(x, y)$ was computed as:

$$D(x, y) = \min_{(x', y') \in B} \sqrt{(x - x')^2 + (y - y')^2}$$

where $B$ is the set of background (non-vegetation) pixels. Local maxima of $D(x, y)$ served as markers for the flooding process, and segmentation labels $L(x, y)$ were determined by:

$$L(x, y) = \arg\min_{m} \, \mathrm{Flow}(x, y, m)$$

where $\mathrm{Flow}(x, y, m)$ represents the flow direction toward the nearest marker $m$. The resulting NDVI–watershed masks effectively delineated individual crowns in open-canopy areas.
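The vegetation-isolation step can be illustrated with a minimal NumPy sketch. The threshold of 0.3, the small `eps` guard, and the function names are illustrative assumptions, not values reported in this study; the subsequent watershed separation of touching crowns is not shown.

```python
import numpy as np


def ndvi(red, nir, eps=1e-6):
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red); eps guards against 0/0."""
    red = red.astype(np.float64)
    nir = nir.astype(np.float64)
    return (nir - red) / (nir + red + eps)


def vegetation_mask(red, nir, threshold=0.3):
    """Boolean vegetation mask; the 0.3 threshold is an assumed example value."""
    return ndvi(red, nir) > threshold


# Toy 2 x 2 scene: left column is vegetation-like (high NIR, low red).
red = np.array([[50, 200], [40, 120]])
nir = np.array([[200, 210], [160, 100]])
mask = vegetation_mask(red, nir)
```

In practice the threshold would be tuned per scene, since soil brightness and shadowing shift the NDVI distribution.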

In parallel, we applied the Segment Anything Model (SAM) to the same image patches to generate automatic crown masks at larger scales, particularly for complex or densely vegetated regions. Both SAM-derived and NDVI–watershed masks were generated using pan-sharpened RGBN imagery to enhance spatial detail and vegetation contrast.

Stage 2—Cross-validation and quality assessment: For each patch, the two automatically generated masks (SAM and NDVI–watershed) were visually compared with the corresponding RGB imagery. The author reviewed all patches to identify misclassified areas, including unrecognized crowns, over-segmentation, and false detections. This inspection identified regions requiring manual correction and served as an internal validation step to ensure consistency between automated methods and the actual canopy structures.

Stage 3—Manual refinement using ArcGIS Pro (3.5.3): In the final step, all inaccurate or incomplete crown delineations were corrected manually using the polygon editing tools in ArcGIS Pro. Missing crowns were added, false positives were removed, and ambiguous boundaries were refined to match the true canopy extent visible in the RGB data. This manual correction ensured spatial accuracy and internal consistency across the dataset. While inter-annotator agreement was not formally quantified, all refinements were performed by the same operator to maintain labeling consistency.

To ensure a fair and consistent comparison across all networks, the same annotated dataset, comprising identical image–mask pairs, was used to train and evaluate all segmentation models, including U-Net, DeepLab v3, and the proposed Residual U-Net with Attention Gates. Each model was trained on the same supervised, digitized tree canopy layer generated through the hybrid ground-truth process, ensuring that performance differences arise solely from the model architecture rather than variations in input data.

The overall workflow of the hybrid ground-truth generation process, including the three main stages of automated segmentation, cross-validation, and manual refinement, is illustrated in Figure 2.

The resulting dataset comprises 256 × 256 pixel RGBN image patches paired with their corresponding binary crown masks (Figure 3). This hybrid ground-truth generation approach effectively combines the automation efficiency of SAM and NDVI–watershed segmentation with the precision of manual correction, resulting in a high-quality, reproducible dataset suitable for training deep learning models for urban tree crown detection.
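As an illustration of how a pan-sharpened scene and its crown mask could be cut into 256 × 256 patch pairs, the following sketch tiles both arrays on the same grid. The non-overlapping grid and the decision to drop incomplete border tiles are assumptions; the tiling stride and border handling are not specified here.

```python
import numpy as np


def tile_patches(image, mask, size=256):
    """Cut an (H, W, 4) RGBN image and its (H, W) mask into aligned patches.

    Tiles are non-overlapping; border strips smaller than `size` are skipped.
    """
    h, w = mask.shape
    patches = []
    for r in range(0, h - size + 1, size):
        for c in range(0, w - size + 1, size):
            patches.append((image[r:r + size, c:c + size, :],
                            mask[r:r + size, c:c + size]))
    return patches


# Hypothetical 600 x 520 scene with four bands (R, G, B, NIR).
image = np.zeros((600, 520, 4), dtype=np.float32)
mask = np.zeros((600, 520), dtype=np.uint8)
patches = tile_patches(image, mask)
```

For this toy scene only a 2 × 2 grid of full tiles fits, so four patch pairs are produced.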

2.2. Study Area

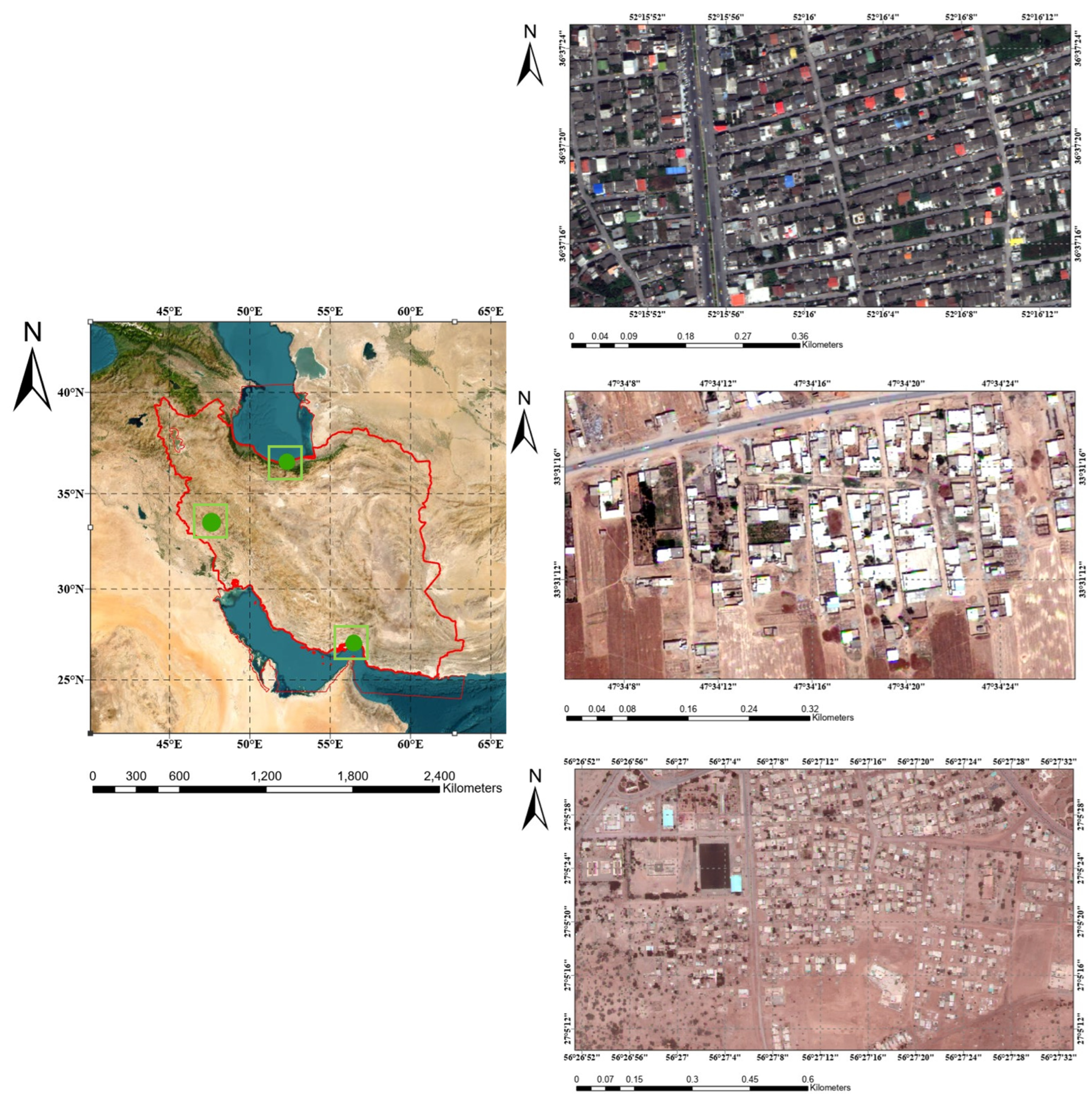

The dataset was compiled from three Iranian urban areas with distinct structural and ecological characteristics—coastal, densely built, and green suburban zones—capturing variability in canopy density, shadowing, and background reflectance. Although all three sites are located within Iran and the geographic scope may appear narrow, they represent markedly different climatic and ecological contexts. Mahmoudabad, located in northern Iran, features a moderate, humid climate adjacent to the Hyrcanian forests, with dense vegetation and a high population density. Kouhdasht, situated in a mountainous region of western Iran, has a temperate to semi-arid climate, with rugged topography and sparse vegetation. In contrast, Hormoz Island in southern Iran represents an arid coastal environment with unique soil reflectance, sparse vegetation, and distinctive spectral conditions. Together, these sites encompass a broad spectrum of climatic, vegetative, and morphological variability, making the dataset representative of heterogeneous urban contexts despite its national scale.

Our research is based on images of Hormoz Island, Kouhdasht, and Mahmoudabad acquired by the WorldView-2, WorldView-3, and GeoEye-1 satellites (Figure 4). As mentioned earlier, all images were pan-sharpened before further processing. Although the images differ in spatial resolution, they were pooled into a single dataset in this research.

3. Methodology

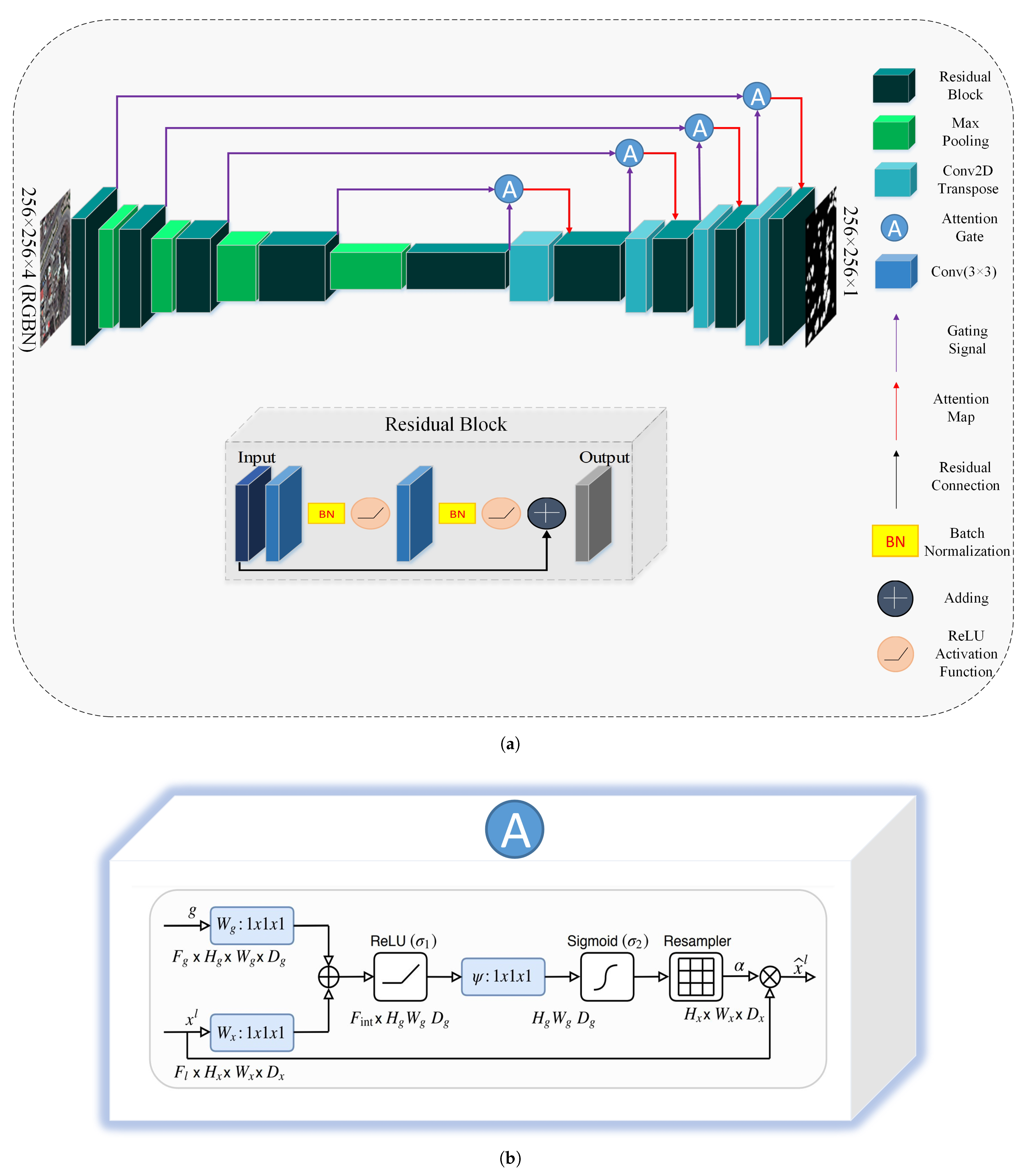

We propose a tree crown segmentation framework that integrates a Residual U-Net architecture with Attention Gates (AGs), specifically designed for high-resolution satellite imagery. This study is the first to implement a unified deep learning framework for tree crown segmentation using imagery from three distinct commercial satellite sensors—WorldView-2, WorldView-3, and GeoEye-1—each implemented independently. By training the model across these datasets, the framework demonstrates strong robustness and adaptability to varying spatial resolutions and spectral characteristics without requiring sensor-specific tuning or image fusion. The architecture addresses key challenges in urban remote sensing, including overlapping tree canopies, class imbalance, and spectral noise from complex built environments. It builds upon the foundational U-Net encoder–decoder structure, enhanced with residual connections for improved gradient propagation and Attention Gates for dynamic spatial feature refinement. This configuration enables accurate, consistent segmentation of individual tree crowns across diverse urban environments using only satellite-based data.

3.1. Advantages of the Proposed Method

Urban tree detection has evolved from manual, labor-intensive methods to advanced, automated approaches enabled by deep learning. Traditional image processing and early machine learning methods offered important foundations but were limited in scalability, adaptability, and robustness. Deep learning now dominates the field, providing superior accuracy and flexibility, especially when integrated with advanced data fusion techniques such as LiDAR or pan-sharpening. The combination of modern deep learning architectures with high-resolution satellite imagery has significantly advanced applications in urban forestry, urban planning, and environmental monitoring.

Despite these advancements, major challenges persist, such as class imbalance, spectral confusion in dense urban areas, and the need for diverse, high-quality training data. The proposed method addresses these issues by incorporating residual connections and Attention Gates, enabling the model to focus on relevant features while suppressing background noise. This approach effectively captures both fine-scale and global canopy patterns, improving segmentation accuracy in complex environments with overlapping crowns.

A notable strength of this framework is its ability to integrate data from multiple high-resolution satellite sensors, including WorldView-2, WorldView-3, and GeoEye-1. These sensors provide complementary spatial and spectral information, enhancing the model’s generalizability and performance across various urban landscapes. The automated feature extraction capability of the Residual U-Net with AGs further eliminates the need for manual intervention, ensuring computational efficiency and scalability for large-scale urban applications. Beyond segmentation, the method also produces actionable outputs, such as tree counts and precise crown-area measurements, enabling quantitative urban forest assessments and supporting sustainable city management.

3.2. U-Net Network Architecture

U-Net, originally proposed by Ronneberger et al. [45], is a fully convolutional network (FCN) widely adopted for image segmentation tasks, including medical imaging and vegetation analysis. The architecture consists of two main components: an encoder and a decoder.

3.2.1. Encoder Path

The encoder acts as a feature extractor, using convolutional and max-pooling layers to downsample the input image and progressively capture hierarchical feature representations:

$$x_{i+1} = f(W_i * x_i + b_i)$$

where $x_i$ is the input to the $i$-th layer, $W_i$ the convolutional weights, $b_i$ the bias term, and $f$ the activation function, typically ReLU. Downsampling is performed via max pooling:

$$x_{i+1}^{\mathrm{pool}} = \mathrm{MaxPool}(x_{i+1})$$
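The activation and downsampling steps of one encoder stage can be sketched in NumPy as follows. The 2 × 2 window with stride 2 is an assumption (the pooling size is not stated here), and the convolution itself is omitted for brevity.

```python
import numpy as np


def relu(x):
    """Element-wise ReLU activation."""
    return np.maximum(x, 0.0)


def max_pool_2x2(x):
    """2 x 2 max pooling with stride 2 on an (H, W) map; H and W must be even."""
    h, w = x.shape
    # Axes after reshape: (row block, row in block, col block, col in block).
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


x = np.array([[1., -2., 3., 0.],
              [4., 5., -6., 7.],
              [0., 1., 2., 3.],
              [-1., -2., 8., 1.]])
pooled = max_pool_2x2(relu(x))
```

Each pooling step halves the height and width, so four encoder stages reduce a 256 × 256 patch to 16 × 16 at the bottleneck under this assumption.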

3.2.2. Decoder Path

The decoder reconstructs the segmentation map by upsampling and applying convolutional layers to restore spatial resolution:

$$u_i = W_i^{T} * d_{i-1}$$

where $d_{i-1}$ is the input from the previous decoder layer and $W_i^{T}$ the transposed convolution weights. To preserve spatial details, skip connections link encoder and decoder features:

$$d_i = \mathrm{Concat}(u_i, e_i)$$

where $e_i$ represents the corresponding encoder feature map.
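A skip connection amounts to a channel-wise concatenation of the upsampled decoder features with the encoder features of the same spatial size. The sketch below substitutes nearest-neighbour upsampling for the learned transposed convolution, purely to keep the example dependency-free; channel counts are arbitrary.

```python
import numpy as np


def upsample_2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) array.

    Stand-in for a learned transposed convolution (an assumption of this sketch).
    """
    return x.repeat(2, axis=1).repeat(2, axis=2)


def skip_concat(decoder_feat, encoder_feat):
    """Upsample decoder features and stack them with encoder features on axis 0."""
    up = upsample_2x(decoder_feat)
    if up.shape[1:] != encoder_feat.shape[1:]:
        raise ValueError("spatial sizes must match after upsampling")
    return np.concatenate([up, encoder_feat], axis=0)


decoder_feat = np.ones((8, 16, 16))   # coarse decoder features
encoder_feat = np.zeros((4, 32, 32))  # finer encoder features
merged = skip_concat(decoder_feat, encoder_feat)
```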

3.2.3. Output Layer

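A $1 \times 1$ convolution generates the final segmentation map by mapping high-dimensional features to binary outputs:

$$\hat{y} = \sigma(W_{\mathrm{out}} * d + b_{\mathrm{out}})$$

where $d$ is the final decoder feature map and $\sigma$ denotes the sigmoid activation for binary segmentation tasks.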

3.2.4. Key Features of U-Net

Skip Connections: Preserve spatial information critical for precise boundary delineation.

Symmetry: The symmetric encoder–decoder structure facilitates efficient feature reconstruction.

Flexibility: U-Net can be adapted for various segmentation tasks by modifying depth, input size, or loss functions.

3.3. Residual U-Net Network Architecture

While U-Net is effective, its deeper variants may suffer from vanishing gradients. Residual Networks (ResNets) mitigate this by introducing shortcut connections that enable efficient gradient flow. In this study, residual blocks are integrated into U-Net to form a Residual U-Net, enhancing feature learning and training stability. A residual unit is defined as:

$$x_{i+1} = \mathcal{F}(x_i) + h(x_i)$$

where $x_i$ and $x_{i+1}$ are the input and output of the $i$-th residual unit, $\mathcal{F}$ the residual function (convolution, normalization, activation), and $h(x_i) = x_i$ the identity mapping. Residual connections allow the model to learn corrections rather than re-learn redundant mappings, enhancing segmentation accuracy, particularly in dense urban canopies.
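The shortcut idea can be shown with a toy residual unit in NumPy. The residual function used here (a scaled ReLU) is a hypothetical placeholder for the convolution, normalization, and activation stack; only the additive identity shortcut reflects the definition above.

```python
import numpy as np


def residual_unit(x, residual_fn):
    """Return residual_fn(x) + x, i.e. a learned correction added to the input."""
    return residual_fn(x) + x


def toy_residual_fn(x, weight=0.1):
    """Placeholder for conv -> norm -> activation (illustrative only)."""
    return np.maximum(weight * x, 0.0)


x = np.array([1.0, -2.0, 3.0])
out = residual_unit(x, toy_residual_fn)

# If the residual branch outputs zeros, the unit reduces to the identity.
identity_out = residual_unit(x, lambda v: np.zeros_like(v))
```

Because the input passes through unchanged when the residual branch contributes nothing, gradients always have a direct path back through the shortcut.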

3.4. Attention Mechanism

The attention mechanism, popularized by Vaswani et al. [46], enables networks to focus dynamically on the most informative regions. In image segmentation, attention improves feature discrimination by emphasizing target regions while suppressing irrelevant background details. This study incorporates Attention Gates (AGs) to enhance the model’s sensitivity to tree crown features and reduce interference from urban structures.

To evaluate the impact of AGs, an ablation study compared two versions of the model: one with and one without AGs. The inclusion of AGs improved the F1-score from 0.8772 to 0.9121 and IoU from 0.8017 to 0.8384, confirming their contribution to enhanced segmentation performance, particularly in complex and overlapping canopies.

Types of Attention

Soft Attention: Assigns proportional importance to all input regions.

Hard Attention: Focuses on specific regions but is computationally expensive.

Self-Attention: Models relationships between all input elements, capturing global context effectively.

For image data, spatial attention highlights key areas within an image, whereas channel attention prioritizes important feature maps across channels.

3.5. Integration of Attention Mechanism into Residual U-Net

Attention Gates were integrated into the skip connections between encoder and decoder layers to refine feature propagation. This mechanism suppresses non-relevant background information and highlights key canopy regions, improving segmentation precision in heterogeneous urban imagery.

How Attention Gates Work

Given encoder feature maps ($X$) and decoder features ($G$), the attention coefficients are computed as follows:

$$\alpha = \sigma(W_x X + W_g G + b), \qquad \hat{X} = \alpha \odot X$$

where $W_x$ and $W_g$ are learnable weights, $b$ is a bias term, and $\sigma$ is the sigmoid activation function. The refined feature map ($\hat{X}$) selectively retains relevant information to accurately reconstruct tree crown boundaries.
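A simplified attention gate can be sketched in NumPy under the following assumptions: the decoder features have already been resampled to the spatial size of the encoder features, the learnable weights act as 1 × 1 convolutions projecting each feature stack to a single channel, and the intermediate non-linearity used in some attention-gate formulations is omitted. All shapes and random weights are illustrative.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def attention_gate(x, g, w_x, w_g, b=0.0):
    """Gate encoder features x (Cx, H, W) with decoder features g (Cg, H, W).

    w_x (Cx,) and w_g (Cg,) play the role of 1x1 convolutions that project
    each feature stack to one attention channel.
    """
    score = np.tensordot(w_x, x, axes=1) + np.tensordot(w_g, g, axes=1) + b
    alpha = sigmoid(score)        # (H, W) attention coefficients in (0, 1)
    return alpha * x, alpha       # alpha broadcasts over the channel axis


rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8, 8))    # encoder skip features
g = rng.normal(size=(6, 8, 8))    # decoder gating features
refined, alpha = attention_gate(x, g, rng.normal(size=4), rng.normal(size=6))
```

Pixels with coefficients near zero are suppressed before the skip features reach the decoder, which is how background structures are attenuated.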

3.6. Final Network Architecture

The proposed Residual U-Net with Attention Gates integrates the strengths of U-Net, residual learning, and attention mechanisms. The architecture includes:

Encoder: Four residual blocks, each followed by max-pooling for downsampling.

Bottleneck: A central residual layer with attention to bridge encoder and decoder.

Decoder: Four upsampling layers with attention-refined skip connections.

Output Layer: A convolution with sigmoid activation for binary segmentation.

To further enhance performance:

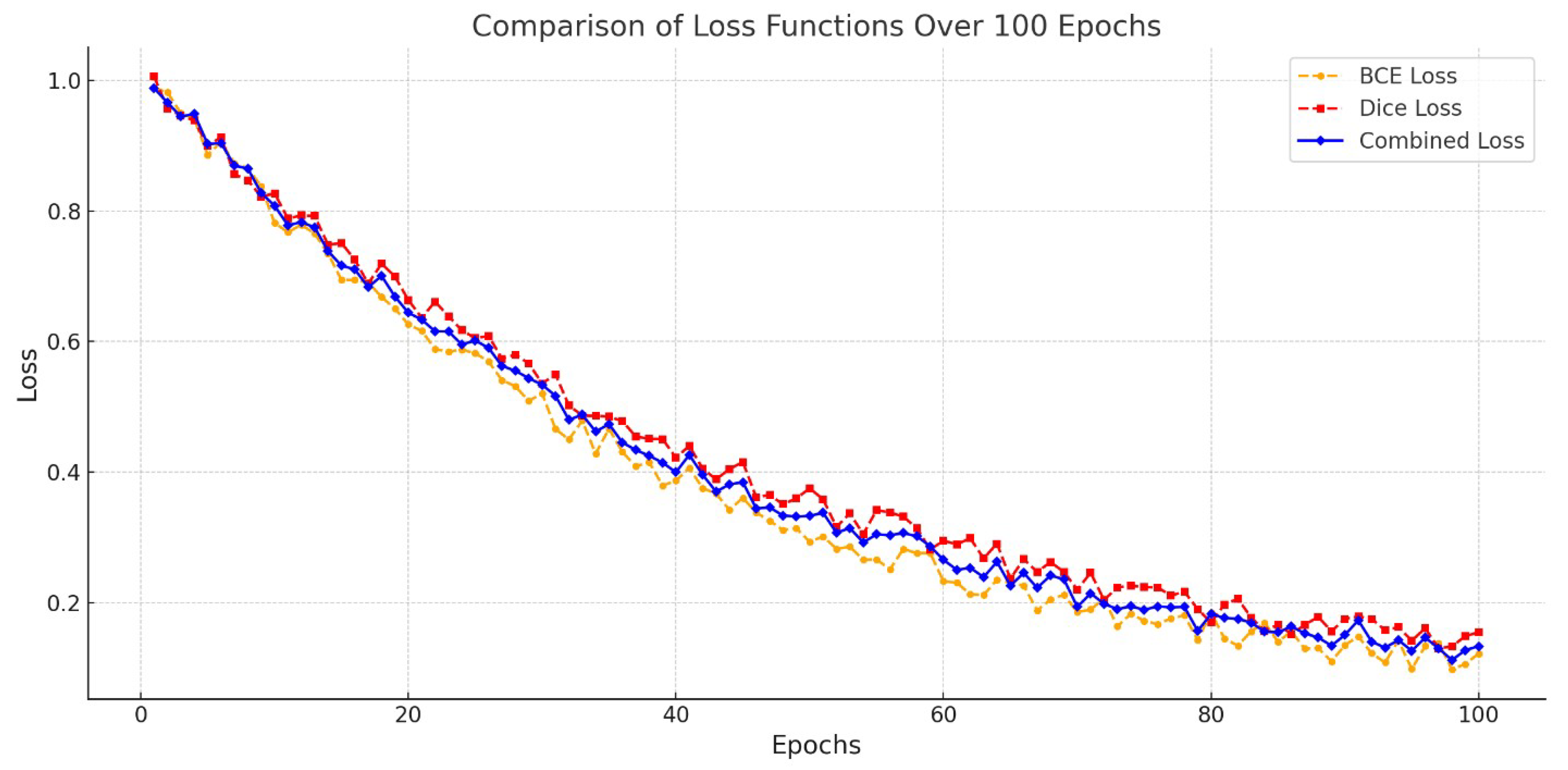

A combined loss function (Binary Cross-Entropy + Dice Loss) was used to address class imbalance.

Dropout layers were incorporated to reduce overfitting.

Data augmentation (flipping, rotation, scaling) was applied to increase dataset diversity.
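The geometric augmentations must be applied identically to each image and its mask so that labels stay aligned. The sketch below covers random flips and 90-degree rotations only; the restriction to multiples of 90 degrees, the 0.5 probabilities, and the omission of scaling are assumptions of this example.

```python
import numpy as np


def augment(image, mask, rng):
    """Apply the same random flips and 90-degree rotation to image and mask.

    image: (H, W, C) array; mask: (H, W) array.
    """
    if rng.random() < 0.5:                      # horizontal flip
        image, mask = image[:, ::-1], mask[:, ::-1]
    if rng.random() < 0.5:                      # vertical flip
        image, mask = image[::-1], mask[::-1]
    k = int(rng.integers(0, 4))                 # number of 90-degree turns
    image = np.rot90(image, k, axes=(0, 1))
    mask = np.rot90(mask, k, axes=(0, 1))
    return np.ascontiguousarray(image), np.ascontiguousarray(mask)


rng = np.random.default_rng(42)
mask = (np.arange(64).reshape(8, 8) % 3 == 0).astype(np.uint8)
image = np.stack([mask] * 4, axis=-1).astype(np.float32)  # toy 4-band patch
aug_image, aug_mask = augment(image, mask, rng)
```

In the toy example every band equals the mask, so alignment after augmentation can be checked by comparing any band with the transformed mask.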

3.7. Loss Functions

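Two loss functions were implemented to optimize performance: Binary Cross-Entropy (BCE) and Dice Loss. BCE measures pixel-level classification accuracy:

$$\mathcal{L}_{\mathrm{BCE}} = -\frac{1}{N}\sum_{i=1}^{N}\left[y_i \log \hat{y}_i + (1 - y_i)\log(1 - \hat{y}_i)\right]$$

where $y_i$ is the ground truth (1 for tree, 0 for background) and $\hat{y}_i$ the predicted probability for pixel $i$ of $N$. Dice Loss focuses on region overlap:

$$\mathcal{L}_{\mathrm{Dice}} = 1 - \frac{2\sum_{i} y_i \hat{y}_i + \epsilon}{\sum_{i} y_i + \sum_{i} \hat{y}_i + \epsilon}$$

where $\epsilon$ prevents division by zero. The combined loss balances pixel-wise and region-level accuracy:

$$\mathcal{L} = \mathcal{L}_{\mathrm{BCE}} + \mathcal{L}_{\mathrm{Dice}}$$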
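A NumPy sketch of the two losses and their sum is given below. The equal weighting of the two terms, the clipping constant, and the smoothing value are assumptions made for illustration; the actual training code may weight or smooth the terms differently.

```python
import numpy as np


def bce_loss(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy; predictions are clipped to avoid log(0)."""
    p = np.clip(y_pred, eps, 1.0 - eps)
    return float(-np.mean(y_true * np.log(p) + (1 - y_true) * np.log(1 - p)))


def dice_loss(y_true, y_pred, eps=1e-6):
    """1 - soft Dice coefficient; eps keeps the ratio defined for empty masks."""
    inter = np.sum(y_true * y_pred)
    denom = np.sum(y_true) + np.sum(y_pred)
    return float(1.0 - (2.0 * inter + eps) / (denom + eps))


def combined_loss(y_true, y_pred):
    """Unweighted sum of BCE and Dice (the weighting is an assumption)."""
    return bce_loss(y_true, y_pred) + dice_loss(y_true, y_pred)


y_true = np.array([1.0, 0.0, 1.0, 0.0])
perfect = combined_loss(y_true, y_true)
uncertain = combined_loss(y_true, np.full(4, 0.5))
```

A perfect prediction drives both terms to nearly zero, whereas a uniform prediction of 0.5 is penalized by both the pixel-wise and the overlap term.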

3.8. Evaluation Metrics

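Model performance was evaluated using Precision, Recall, F1-Score, and Intersection over Union (IoU):

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}$$

$$\mathrm{F1} = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}, \qquad \mathrm{IoU} = \frac{TP}{TP + FP + FN}$$

where $TP$, $FP$, and $FN$ denote the numbers of true-positive, false-positive, and false-negative pixels, respectively.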

These metrics provide a comprehensive assessment of detection accuracy, segmentation quality, and boundary precision, ensuring reliable evaluation across sensors.
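For binary masks, all four metrics follow from pixel-wise counts of true positives, false positives, and false negatives. The sketch below assumes the counts are pooled over the pixels passed in; the function name and the zero-division fallbacks are illustrative choices.

```python
import numpy as np


def segmentation_metrics(y_true, y_pred):
    """Precision, recall, F1, and IoU for binary masks of equal shape."""
    t = y_true.astype(bool)
    p = y_pred.astype(bool)
    tp = int(np.sum(t & p))
    fp = int(np.sum(~t & p))
    fn = int(np.sum(t & ~p))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}


y_true = np.array([[1, 1, 0, 0], [1, 0, 0, 0]])
y_pred = np.array([[1, 0, 1, 0], [1, 0, 0, 0]])
metrics = segmentation_metrics(y_true, y_pred)
```

In this toy case there are two true positives, one false positive, and one false negative, giving precision, recall, and F1 of 2/3 and an IoU of 0.5.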

4. Training

The training phase of the proposed Residual U-Net with Attention Gates architecture (Figure 5) involved a systematic approach to optimize model performance for tree crown segmentation in high-resolution RGBN satellite imagery. The dataset consisted of 4-band RGBN satellite images. The Near-Infrared (NIR) channel was specifically utilized to enhance vegetation detection. Binary segmentation masks were generated through automated segmentation (NDVI–watershed and SAM) followed by manual refinement. Data augmentation techniques such as random flipping, rotation, and scaling were employed to increase diversity and prevent overfitting.

4.1. Model Setup

The model setup involved a modified ResNet-50 backbone that accepts a 4-channel input to accommodate the additional spectral band. Attention Gates were incorporated into the architecture to enhance the focus on tree crown regions, while skip connections effectively combined hierarchical features. For optimization, the Adam optimizer was employed with an initial learning rate of 0.001, and a step decay scheduler reduced the learning rate by a factor of 0.1 every 10 epochs. The model was trained with a batch size of 16 over 100 epochs.
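The step decay schedule can be written as a one-line function. Zero-based epoch indexing and the function name are assumptions of this sketch; deep learning frameworks provide equivalent built-in schedulers.

```python
def step_decay_lr(epoch, initial_lr=1e-3, factor=0.1, step=10):
    """Learning rate at a zero-based epoch: multiplied by `factor` every `step` epochs."""
    return initial_lr * factor ** (epoch // step)


# Learning rate at the start of each 10-epoch block over 100 epochs.
schedule = [step_decay_lr(e) for e in range(0, 100, 10)]
```

Taken literally, these settings reduce the rate from 1e-3 in epochs 0 to 9 to 1e-4 in epochs 10 to 19, and to roughly 1e-12 in the final ten epochs, so most of the effective weight updates occur in the early blocks.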

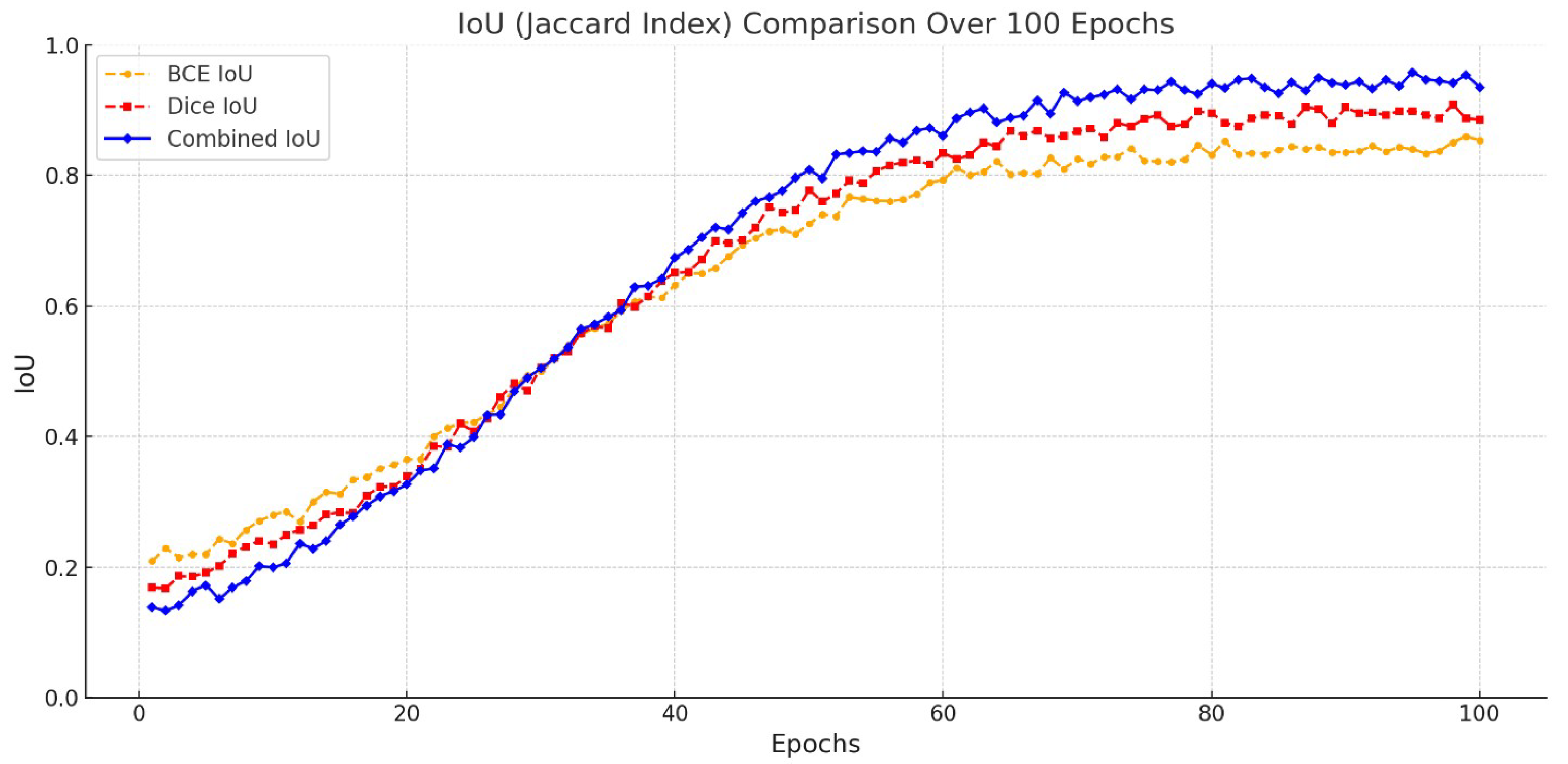

Figure 6 and Figure 7 illustrate the advantages of using the Dice and BCE loss functions.

To provide a comprehensive understanding of the network configuration, Table 2 presents the detailed layer-wise architecture and corresponding parameter counts of the proposed Residual U-Net model.

4.2. Validation and Evaluation

A validation set was utilized to monitor model performance and prevent overfitting. Key metrics, including Jaccard Index (IoU) and F1-Score, were tracked to assess the segmentation accuracy. The training loss steadily decreased, while the validation loss remained slightly higher, indicating appropriate generalization without overfitting (Figure 8). This systematic training approach ensured robust segmentation results, particularly for identifying tree crowns in urban environments.

5. Results and Discussion

The proposed framework offers significant advantages in urban tree crown detection and segmentation through its multi-sensor integration strategy, efficient network design, and computational resource optimization. The model achieves high segmentation accuracy and generalization despite being trained on a relatively small dataset, confirming both its reliability and operational applicability. Key strengths include resolution-bounded scalability, adaptability across sensors, efficient use of limited training data, and robustness in heterogeneous urban environments.

A defining feature of this study is the integration of imagery from three high-resolution commercial satellites—GeoEye-1, WorldView-2, and WorldView-3—within a unified deep learning pipeline. This constitutes the first demonstration of multi-sensor integration for urban tree crown segmentation using a Residual U-Net architecture enhanced with Attention Gates. Each satellite contributes distinct spatial and spectral characteristics, and their joint use enables consistent segmentation performance across the 0.31–0.46 m resolution interval. Rather than claiming general scalability, the framework exhibits resolution-bounded scalability: it maintains accuracy and stability across datasets with similar high-spatial-resolution properties. This adaptability ensures robust results in regions with varying canopy densities, vegetation heterogeneity, and urban complexity, thereby confirming the method’s applicability across comparable high-resolution urban environments. Although the dataset includes only three Iranian cities, these sites represent contrasting urban morphologies, allowing the model’s cross-sensor consistency to be meaningfully evaluated.

Although trained on a dataset of only 1200 image patches, the model achieves strong predictive performance by combining data augmentation, dropout regularization, and patch-wise optimization. The use of patches balances computational efficiency with preservation of crown-scale spatial details. This approach mitigates overfitting while improving generalization across multiple sensors, confirming the model’s effectiveness even under limited labeled data conditions—a critical advantage for operational workflows where annotation is costly or time-intensive.

Another key contribution of the proposed framework is its ability to deliver state-of-the-art results using modest computational resources. The Residual U-Net with Attention Gates optimizes feature learning without relying on large-scale GPU clusters or extensive training time. All experiments were conducted on a workstation equipped with an NVIDIA GeForce RTX 3070 GPU (Table 3), demonstrating that high segmentation accuracy can be achieved on widely available hardware for both model training and patch-based inference.

This computational efficiency specifically refers to model training and inference performed on image patches. City-scale deployment would require tiling of large satellite mosaics and batch processing on higher-throughput systems; however, the lightweight architecture ensures that such scaling remains computationally feasible. The average training time per epoch was approximately 120 s, and the average inference time per patch was approximately 0.15 s.
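The reported timings allow a rough back-of-the-envelope estimate of training and inference cost. The sketch below assumes 100 epochs at about 120 s each, non-overlapping 256 × 256 tiles, and 0.15 s per patch; it ignores tile overlap, data loading, and mosaic edge effects, so the figures are indicative only.

```python
import math


def patches_for_area(area_km2, gsd_m, patch_px=256):
    """Number of non-overlapping patches needed to cover an area at a given pixel size."""
    side_m = patch_px * gsd_m                 # ground footprint of one patch side
    return math.ceil(area_km2 * 1e6 / side_m ** 2)


def inference_seconds(area_km2, gsd_m, sec_per_patch=0.15):
    """Estimated sequential inference time for the area."""
    return patches_for_area(area_km2, gsd_m) * sec_per_patch


training_hours = 100 * 120 / 3600             # about 3.3 hours in total
fine = patches_for_area(1.0, 0.31)            # patches per km^2 at 0.31 m
coarse = patches_for_area(1.0, 0.46)          # patches per km^2 at 0.46 m
```

Under these assumptions, one square kilometre requires on the order of 70 to 160 patches, i.e. well under a minute of sequential inference on the stated hardware.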

This efficiency expands the framework’s accessibility to researchers, municipalities, and environmental organizations with limited computational infrastructure. Furthermore, the combination of Binary Cross-Entropy and Dice Loss effectively addresses class imbalance while maintaining high precision and recall, contributing to overall segmentation robustness.

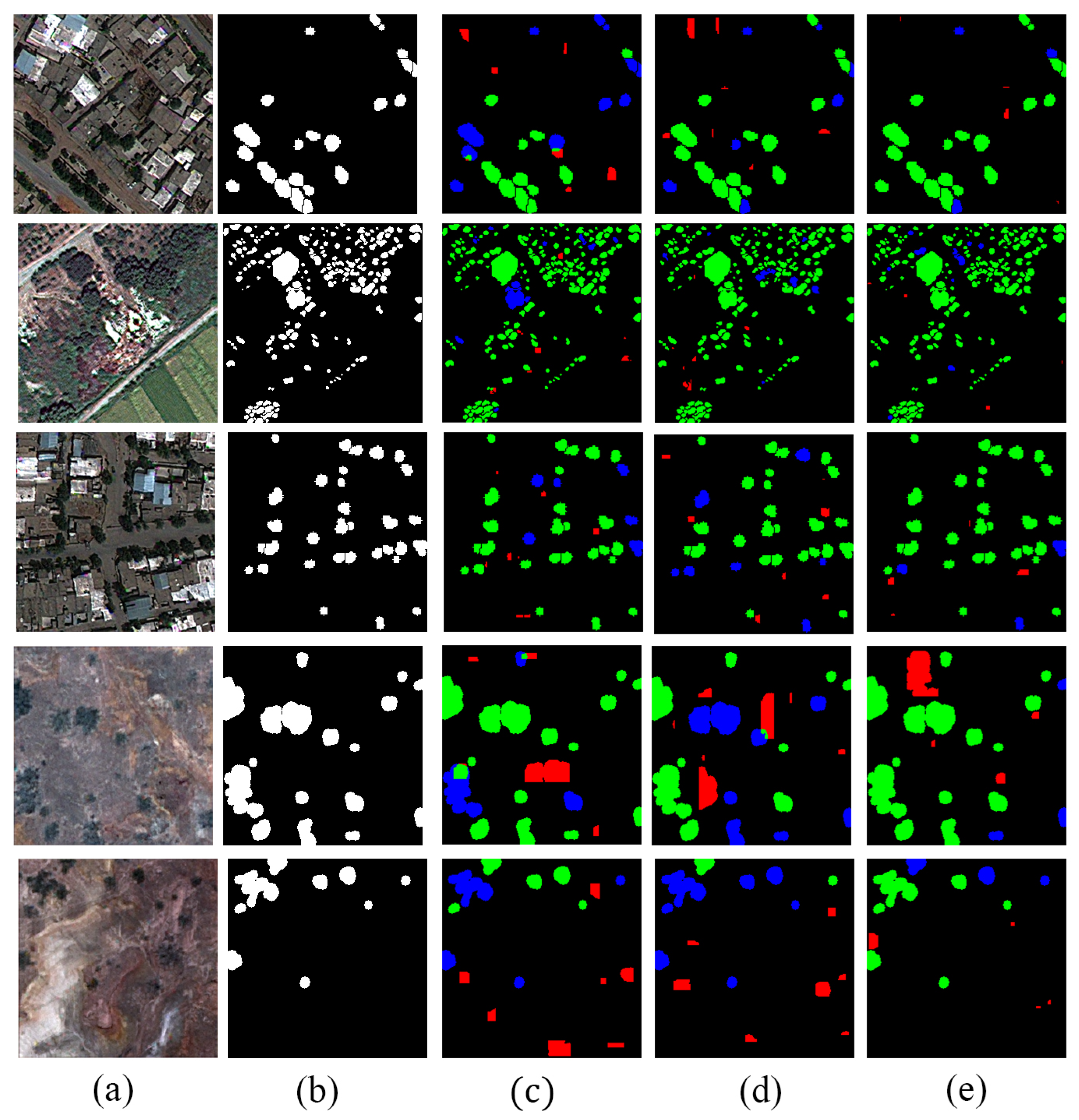

Quantitative results confirm the superior performance of the proposed model compared with widely used segmentation networks such as DeepLab v3 and U-Net (Figure 9). The proposed Residual U-Net with AGs achieves an F1-score of 0.9121 and an IoU of 0.8384, outperforming DeepLab v3 (F1 = 0.8712, IoU = 0.7612) and U-Net with VGG19 (F1 = 0.8568, IoU = 0.7495). Precision and recall values of 0.9321 and 0.8930, respectively, indicate balanced detection capability, minimizing both omission and commission errors. These metrics highlight the model’s strength in delineating overlapping crowns and distinguishing trees from spectrally similar urban structures.
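The reported values for the proposed model can be cross-checked with two standard identities: F1 is the harmonic mean of precision and recall, and, when all metrics come from a single pooled confusion matrix, IoU = F1 / (2 − F1). The sketch below applies both to the figures above; metrics averaged per patch or per scene need not satisfy the second identity exactly.

```python
def f1_from_pr(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def iou_from_f1(f1):
    """IoU implied by an F1 (Dice) score computed from the same pixel counts."""
    return f1 / (2 - f1)


f1_check = f1_from_pr(0.9321, 0.8930)   # compare with the reported 0.9121
iou_check = iou_from_f1(0.9121)         # compare with the reported 0.8384
```

Both checks agree with the reported F1-score and IoU of the proposed model to within rounding.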

Although transformer-based architectures such as SegFormer demonstrate strong performance on large-scale semantic segmentation benchmarks, their results in this study were lower than those of the convolution-based models (Table 4). SegFormer achieved an F1 score of 0.82 with the MiT-B2 backbone, which is lower than those of DeepLab v3 (0.87) and U-Net (0.85). This discrepancy can be attributed to the relatively small dataset and the high spatial resolution of the input imagery, which may make it difficult for transformer models to generalize fine-grained canopy boundaries without extensive pretraining or large-scale data. In contrast, convolutional networks such as U-Net and DeepLab v3, with their strong local feature aggregation and inductive bias toward spatial continuity, were more effective at capturing the compact and heterogeneous patterns of urban tree crowns. Consequently, SegFormer’s lower performance in this context underscores the importance of model–data suitability over architectural sophistication alone.

In line with recent advances in tree crown segmentation, our findings are consistent with studies that emphasize the strength of deep learning models for fine-scale canopy delineation. Erdem et al. (2023) demonstrated the effectiveness of Mask R-CNN and U-Net in detecting apricot trees from UAV imagery, achieving high precision under structured orchard conditions but with limited adaptability to heterogeneous urban landscapes [47]. Similarly, Ma et al. (2025) proposed a hybrid framework combining deep learning and a climbing algorithm for precise single-tree segmentation in dense forest environments, achieving strong results in complex canopies [48]. Compared to these UAV and forest-oriented approaches, the proposed Residual U-Net with Attention Gates extends such capabilities to multi-sensor satellite imagery, maintaining high segmentation accuracy across varying spatial resolutions (0.31–0.46 m) without the need for LiDAR or UAV data. This demonstrates that high-resolution satellite imagery, when processed through a well-optimized convolutional architecture, can achieve performance comparable to advanced terrestrial or aerial methods.

The superior cross-sensor consistency and computational efficiency of the proposed method underscore its practical potential for operational use. By eliminating reliance on LiDAR or UAV inputs, the framework provides a cost-effective, repeatable solution for large-scale urban forestry monitoring. These results validate that multi-sensor satellite data, when processed through a well-optimized Residual Attention U-Net, can achieve performance comparable to or exceeding that of multi-source fusion approaches. The demonstrated accuracy across different sensors and urban morphologies establishes a foundation for transferring the model to other regions or time periods with minimal re-training effort.

In general, the results demonstrate that integrating data from multiple high-resolution satellite sensors within a unified deep learning framework can deliver reliable, transferable performance for urban tree crown segmentation. The model’s ability to maintain accuracy within the 0.31 to 0.46 m resolution interval highlights its robustness within the spatial limits of the training sensors and confirms its suitability for practical monitoring of urban forests using only satellite imagery. These findings collectively establish a foundation for the concluding discussions on the broader implications, operational potential, and future research directions of the framework.

6. Conclusions and Future Work

This study presented an efficient deep learning framework for detecting and segmenting urban tree crowns from high-resolution satellite imagery. By integrating multisensor data from GeoEye-1, WorldView-2, and WorldView-3 within a single processing pipeline, the proposed method leveraged the complementary spatial and spectral characteristics of these sensors to achieve high segmentation accuracy in heterogeneous, densely built urban environments. The Residual U-Net architecture enhanced with Attention Gates (AGs) demonstrated strong robustness, attaining state-of-the-art performance with an F1-score of 0.9121 and an Intersection over Union (IoU) of 0.8384. Despite being trained on a relatively small dataset of 1200 image patches, the model achieved high accuracy, reflecting the effectiveness of the hybrid ground-truth generation strategy, composite loss design, and data augmentation techniques. The workflow remains scalable within the spatial resolution interval of the integrated sensors, confirming its adaptability for similar high-resolution satellite platforms.

The principal novelty of this research lies in applying, for the first time in the context of urban tree crown segmentation, a unified deep learning framework that integrates data from multiple high-resolution satellite sensors. This multi-sensor approach demonstrates that a Residual U-Net with Attention Gates can maintain stable and accurate performance across sensors with heterogeneous spatial and spectral resolutions. Importantly, the framework relies solely on satellite imagery, eliminating the need for LiDAR or aerial data, which are often costly, logistically demanding, and geographically constrained. As a result, the proposed method offers a practical and cost-effective solution for operational urban tree monitoring using readily available commercial satellite imagery.

Although the dataset used in this study was limited to three urban areas within Iran, these cities represent distinctly different climatic and ecological conditions. Mahmoudabad, in the humid, densely vegetated northern region adjacent to the Hyrcanian forests, contrasts sharply with Kouhdasht, a mountainous city in western Iran characterized by a temperate-to-semiarid climate and sparse vegetation. Hormoz Island, located in the arid coastal south, exhibits unique soil reflectance and minimal vegetation cover. This diversity in geography, vegetation type, and urban morphology provided meaningful variability for evaluating cross-sensor consistency and model robustness under heterogeneous conditions. Nevertheless, the current results should be interpreted as evidence of cross-sensor reliability and intra-regional adaptability rather than full global generalization. Expanding the dataset to include cities in other climatic zones and with other architectural typologies worldwide will further strengthen the model’s generalization and operational potential.

From an operational standpoint, the framework enables the precise delineation of individual tree crowns and the reliable estimation of crown area, outputs that directly support green infrastructure mapping, ecological monitoring, and climate-adaptive urban planning. The method’s ability to operate efficiently on modest computational resources enhances its applicability for large-area implementation in data-driven smart city platforms.
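The crown-area output described here follows directly from a segmentation mask, since each crown pixel covers the square of the ground sample distance (GSD). The sketch below is a minimal illustration that assumes an orthorectified mask with square pixels; the function name and the toy mask are hypothetical, while 0.31 m and 0.46 m are the resolution bounds reported in this study.

```python
def crown_area_m2(mask, gsd_m):
    """Return the total crown area in square metres for a binary mask.

    mask  : nested lists (rows of 0/1 values), 1 marking a crown pixel
    gsd_m : ground sample distance in metres (pixel edge length)
    """
    if gsd_m <= 0:
        raise ValueError("gsd_m must be positive")
    crown_pixels = sum(1 for row in mask for value in row if value)
    return crown_pixels * gsd_m ** 2


# Toy mask with five crown pixels (hypothetical data).
mask = [[0, 1, 0],
        [1, 1, 1],
        [0, 1, 0]]

for gsd in (0.31, 0.46):
    print(f"GSD {gsd} m -> {crown_area_m2(mask, gsd):.4f} m^2")
```

The same pixel count represents roughly 2.2 times more ground area at 0.46 m than at 0.31 m, so a one-pixel boundary error weighs more heavily on the area estimate at the coarser resolution. Per-crown areas would additionally require separating the mask into individual crown instances before counting pixels.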

Comparative analysis with prior studies reinforces these advantages. UAV- or LiDAR-based methods such as those of Yao et al. (2024) [43] and Gan et al. (2023) [44] achieved fine structural delineation but required complex preprocessing and expensive, non-scalable datasets. Likewise, the TreeSeg toolbox introduced by Speckenwirth et al. (2024) [49] depended on aerial multispectral surveys, limiting repeatability over large regions. In contrast, our satellite-only approach achieves comparable or superior accuracy while enabling affordable, repeatable monitoring across extensive urban areas, thereby addressing one of the major limitations of previous studies.

In summary, this research advances urban forestry analysis by demonstrating the feasibility and reliability of multi-sensor, satellite-based deep learning for tree crown segmentation. The proposed framework’s resolution-bounded scalability, accuracy, and computational efficiency establish it as a practical foundation for operational urban forestry assessment within smart city ecosystems.

Future Work: Future research will focus on extending the framework beyond the current spatial resolution range by incorporating additional sensors and temporal observations, facilitating temporal change detection and long-term canopy dynamics analysis. The inclusion of complementary data sources such as LiDAR or hyperspectral imagery may further improve species classification and canopy health estimation. Moreover, integrating semi-automatic annotation tools and transformer-based hybrid architectures could enhance model generalization under occlusion and shadow conditions. Finally, expanding the framework toward biomass estimation and urban ecosystem service modeling would contribute to broader goals of environmental resilience and sustainable smart city development.

Author Contributions

Conceptualization, A.S., R.S.-H., D.S. and S.H.; Methodology, A.S., R.S.-H., D.S. and S.H.; Project administration, A.S., R.S.-H., D.S. and S.H.; Resources, A.S., R.S.-H., D.S. and S.H.; Validation, A.S., R.S.-H., D.S. and S.H.; Supervision, R.S.-H. and S.H.; Writing—original draft, A.S., R.S.-H., D.S. and S.H.; Writing—review and editing, A.S., R.S.-H., D.S. and S.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

In preparing this article, the authors used OpenAI’s GPT-5 (model version gpt-4) to help refine the text. The tool was used to improve grammar, clarity, and readability, while the authors’ original ideas, analysis, and conclusions were fully preserved. The authors retain full responsibility for all substantive elements of the work, including research design, methodology, data analysis, and intellectual contributions.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Shishegar, N. The impacts of green areas on mitigating urban heat island effect: A review. Int. J. Environ. Sustain. 2014, 9, 119.

2. Nowak, D.J. The effects of urban trees on air quality. USDA For. Serv. 2002, 96, 130413867.

3. Rowntree, R.A.; Nowak, D.J. Quantifying the role of urban forests in removing atmospheric carbon dioxide. J. Arboric. 1991, 17, 269–275.

4. Carrick, J.; Abdul Rahim, M.S.A.B.; Adjei, C.; Ashraa Kalee, H.H.H.; Banks, S.J.; Bolam, F.C.; Campos Luna, I.M.; Clark, B.; Cowton, J.; Domingos, I.F.N.; et al. Is planting trees the solution to reducing flood risks? J. Flood Risk Manag. 2019, 12, e12484.

5. Zheng, H.; Wang, Z.; Hu, S.; Malano, H. Seasonal water allocation: Dealing with hydrologic variability in the context of a water rights system. J. Water Resour. Plan. Manag. 2013, 139, 76–85.

6. Locosselli, G.M.; Buckeridge, M.S. The science of urban trees to promote well-being. Trees 2023, 37, 1–7.

7. Wood, L.; Hooper, P.; Foster, S.; Bull, F. Public green spaces and positive mental health–investigating the relationship between access, quantity and types of parks and mental wellbeing. Health Place 2017, 48, 63–71.

8. Rouhollahi, M.; Whaley, D.; Byrne, J.; Boland, J. Potential residential tree arrangement to optimise dwelling energy efficiency. Energy Build. 2022, 261, 111962.

9. Brindal, M.; Stringer, R. The value of urban trees: Environmental factors and economic efficiency. In Proceedings of the 10th National Street Tree Symposium 2009, Adelaide, Australia, 3 September 2009; TreeNet. 2009. Available online: https://treenet.org/wp-content/uploads/2017/08/2009_SymposiumProceedings_FINAL.pdf (accessed on 4 May 2025).

10. Agarwal, S.; Bhalla, P.; Kaur, S.; Babbar, R. Effect of body mass index on physical self concept, cognition & academic performance of first year medical students. Indian J. Med Res. 2013, 138, 515–522.

11. Lelong, C.C.; Tshingomba, U.K.; Soti, V. Assessing Worldview-3 multispectral imaging abilities to map the tree diversity in semi-arid parklands. Int. J. Appl. Earth Obs. Geoinf. 2020, 93, 102211.

12. Jombo, S.; Adam, E.; Byrne, M.J.; Newete, S.W. Evaluating the capability of Worldview-2 imagery for mapping alien tree species in a heterogeneous urban environment. Cogent Soc. Sci. 2020, 6, 1754146.

13. European Space Agency. Sentinel-2 User Handbook. 2015. Available online: https://sentinels.copernicus.eu/documents/247904/685211/Sentinel-2_User_Handbook (accessed on 4 May 2025).

14. U.S. Geological Survey. Landsat 8 Overview. 2024. Available online: https://www.usgs.gov/landsat-missions/landsat-8 (accessed on 4 May 2025).

15. Yan, K.; Gao, S.; Yan, G.; Ma, X.; Chen, X.; Zhu, P.; Li, J.; Gao, S.; Gastellu-Etchegorry, J.-P.; Myneni, R.B.; et al. A global systematic review of the remote sensing vegetation indices. Int. J. Appl. Earth Obs. Geoinf. 2025, 139, 104560.

16. Gao, S.; Zhong, R.; Yan, K.; Ma, X.; Chen, X.; Pu, J.; Gao, S.; Qi, J.; Yin, G.; Myneni, R.B. Evaluating the saturation effect of vegetation indices in forests using a 3D canopy structure dataset. Remote Sens. Environ. 2023, 293, 113612.

17. Morales-Gallegos, L.M.; Martínez-Trinidad, T.; Hernández-de la Rosa, P.; Gómez-Guerrero, A.; Alvarado-Rosales, D.; Saavedra-Romero, L.d.L. Tree Health Condition in Urban Green Areas Assessed through Vegetation Indices and Chlorophyll Fluorescence. Forests 2023, 14, 1673.

18. Ravi, S.; Khan, A.M. Morphological Operations for Image Processing: Understanding and its Applications. In Proceedings of the NCVSComs-13 Conference Proceedings, Vadlamudi, India, 11–12 December 2013.

19. Xiao, C.; Qin, R.; Xie, X.; Huang, X. Individual Tree Detection and Crown Delineation with 3D Information from Multi-View Satellite Imagery. Photogramm. Eng. Remote Sens. 2019, 85, 873–882.

20. Mao, T.; Fan, Y.; Zhi, S.; Tang, J. A Morphological Feature-Oriented Algorithm for Extracting Impervious Surface Areas Obscured by Vegetation in Collaboration with OSM Road Networks in Urban Areas. Remote Sens. 2022, 14, 2493.

21. Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

22. Moskal, L.M.; Styers, D.M.; Halabisky, M. Monitoring Urban Tree Cover Using Object-Based Image Analysis and Public Domain Remotely Sensed Data. Remote Sens. 2011, 3, 2243–2262.

23. Cetin, Z.; Yastikli, N. The Use of Machine Learning Algorithms in Urban Tree Species Classification. ISPRS Int. J. Geo-Inf. 2022, 11, 226.

24. Mountrakis, G.; Im, J.; Ogole, C. Support vector machines in remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2011, 66, 247–259.

25. Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review. Int. J. Remote Sens. 2016, 37, 78–88.

26. Su, Y.; Schwartz, M.; Fayad, I.; García, M.; Zavala, M.A.; Tijerín-Triviño, J.; Astigarraga, J.; Cruz-Alonso, V.; Liu, S.; Zhang, X.; et al. Canopy height and biomass distribution across the forests of Iberian Peninsula. Sci. Data 2025, 12, 678.

27. Aggarwal, C.C.; Reddy, C.K. Data Clustering: Algorithms and Applications; CRC Press: Boca Raton, FL, USA, 2013.

28. Hosingholizade, A.; Erfanifard, Y.; Alavipanah, S.K.; Millan, V.E.G.; Mielcarek, M.; Pirasteh, S.; Stereńczak, K. Assessment of Pine Tree Crown Delineation Algorithms on UAV Data. Forests 2023, 16, 228.

29. Alasali, T.; Ortakci, Y. Clustering Techniques in Data Mining: A Survey of Methods, Challenges, and Applications. J. Comput. Sci. 2024, 9, 32–50.

30. Wang, H.; Song, C.; Wang, J.; Gao, P. A raster-based spatial clustering method with robustness to spatial heterogeneity. Sci. Rep. 2024, 14, 53066.

31. Wang, A.; Shi, S.; Yang, J.; Luo, Y.; Tang, X.; Du, J.; Bi, S.; Qu, F.; Gong, C.; Gong, W. Integration of LiDAR and Hyperspectral Imagery for Tree Species Classification in Urban Forests. Photogramm. Rec. 2025, 40, 123–135.

32. Lin, J.; Sun, Q.; Liu, Y.; Ye, H.; Tang, D.; Zhang, X.; Gao, Y. Forest Aboveground Biomass Estimation Based on Unmanned Aerial Vehicle LiDAR and Machine Learning Algorithms. Remote Sens. 2024, 16, 123.

33. Ventura, J.; Pawlak, C.; Honsberger, M.; Gonsalves, C.; Rice, J.; Love, N.L.R.; Han, S.; Nguyen, V.; Sugano, K.; Doremus, J.; et al. Individual Tree Detection in Large-Scale Urban Environments using High-Resolution Multispectral Imagery. arXiv 2022, arXiv:2208.10607.

34. Gashti, E.H.; Bahiraei, H.; Zoej, M.J.V.; Ghaderpour, E. Fusion of Aerial and Satellite Images for Automatic Extraction of Building Footprint Information Using Deep Neural Networks. Information 2025, 16, 380.

35. Morgan, G.R.; Zlotnick, D.; North, L.; Smith, C.; Stevenson, L. Deep Learning for Urban Tree Canopy Coverage Analysis: A Comparison and Case Study. Geomatics 2024, 4, 412–432.

36. Martins, J.A.C.; Nogueira, K.; Osco, L.P.; Gomes, F.D.G.; Furuya, D.E.G.; Gonçalves, W.N.; Sant’Ana, D.A.; Ramos, A.P.M.; Liesenberg, V.; dos Santos, J.A.; et al. Semantic Segmentation of Tree-Canopy in Urban Environment with Pixel-Wise Deep Learning. Remote Sens. 2021, 13, 3054.

37. Ferreira, M.P.; Santos, D.R.; Ferrari, F.; Coelho Filho, L.C.T.; Martins, G.B.; Feitosa, R.Q. Improving urban tree species classification by deep-learning based fusion of digital aerial images and LiDAR. ISPRS J. Photogramm. Remote Sens. 2024, 198, 128–139.

38. Zhang, J.; Li, Y.; Yang, X.; Jiang, R.; Zhang, L. RSAM-Seg: A SAM-Based Model with Prior Knowledge Integration for Remote Sensing Image Semantic Segmentation. Remote Sens. 2025, 17, 590.

39. Deng, S.; Jing, S.; Zhao, H. A Hybrid Method for Individual Tree Detection in Broadleaf Forests Based on UAV-LiDAR Data and Multistage 3D Structure Analysis. Forests 2024, 15, 1043.

40. Kaartinen, H.; Hyyppä, J. Tree Extraction—Report of the EuroSDR/ISPRS Project, Commission II “Tree Extraction”; EuroSDR Official Publication No. 53; EuroSDR: Paris, France, 2008.

41. Weinstein, B.G.; Marconi, S.; Bohlman, S.A.; Zare, A.; White, E.P. Cross-site learning in deep learning RGB tree crown detection. Ecol. Inform. 2020, 56, 101061.

42. Guo, J.; Xu, Q.; Zeng, Y.; Liu, Z.; Zhu, X.X. Nationwide urban tree canopy mapping and coverage assessment in Brazil from high-resolution remote sensing images using deep learning. ISPRS J. Photogramm. Remote Sens. 2023, 198, 1–15.

43. Yao, S.; Hao, Z.; Post, C.J.; Mikhailova, E.A.; Lin, L. Individual Tree Crown Detection and Classification of Live and Dead Trees Using a Mask Region-Based Convolutional Neural Network (Mask R-CNN). Forests 2024, 15, 1900.

44. Gan, Y.; Wang, Q.; Iio, A. Tree Crown Detection and Delineation in a Temperate Deciduous Forest from UAV RGB Imagery Using Deep Learning Approaches: Effects of Spatial Resolution and Species Characteristics. Remote Sens. 2023, 15, 778.

45. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2015; Volume 9351, pp. 234–241.

46. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

47. Erdem, F.; Ocer, N.E.; Kucuk Matci, D.; Kaplan, G.; Avdan, U. Apricot Tree Detection from UAV-Images Using Mask R-CNN and U-Net. Photogramm. Eng. Remote Sens. 2023, 89, 89–96.

48. Ma, H.; Zhang, F.; Chen, S.; Yu, J. Individual Tree Segmentation Using Deep Learning and Climbing Algorithm: A Method for Achieving High-Precision Single-Tree Segmentation in High-Density Forests under Complex Environments. Photogramm. Eng. Remote Sens. 2025, 91, 101–110.

49. Speckenwirth, S.; Brandmeier, M.; Paczkowski, S. TreeSeg—A Toolbox for Fully Automated Tree Crown Segmentation Based on High-Resolution Multispectral UAV Data. Remote Sens. 2024, 16, 3660.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).