Highlights

What are the main findings?

- A smart transport management system based on UAV data integrating advanced machine learning and deep learning techniques is proposed to enhance road anomaly detection and severity classification.

- The system employs a comprehensive multi-stage framework, integrating a high-precision obstacle detection model, six specialized severity classification models, and an aggregation model to deliver accurate anomaly assessment, enabling strategic, data-driven road maintenance and enhanced transportation safety.

What are the implications of the main findings?

- A scalable and efficient solution is proposed to enhance road safety and optimize transportation management through intelligent anomaly detection and severity assessment.

- This framework sets a benchmark for future smart city initiatives by leveraging advanced machine learning techniques for proactive infrastructure maintenance and decision-making.

Abstract

Efficient transportation management is essential for the sustainability and safety of modern urban infrastructure. Traditional road inspection and transport management methods are often labor-intensive, time-consuming, and prone to inaccuracies, which limits their effectiveness. This study presents a UAV-based transport management system that leverages machine learning techniques to enhance road anomaly detection and severity assessment. The proposed approach employs a structured three-tier model architecture. First, a unified obstacle detection model identifies six critical road hazards: road cracks, potholes, animals, illegal dumping, construction sites, and accidents. In the second stage, six dedicated severity classification models assess the impact of each detected hazard by categorizing its severity as low, medium, or high. Finally, an aggregation model integrates the results to provide comprehensive insights for transportation authorities. The system feeds these results into an interactive real-time dashboard, facilitating data-driven decision-making for proactive maintenance, improved road safety, and optimized resource allocation. By combining accuracy, scalability, and computational efficiency, this approach offers a robust solution for smart city infrastructure management and transportation planning.

1. Introduction

Efficient road infrastructure is essential for urban mobility, economic growth, and public safety. However, poor maintenance, increasing traffic congestion, and environmental factors contribute to deteriorating road conditions, leading to significant safety and operational challenges. Studies indicate that road defects such as potholes and cracks account for approximately 50% of vehicle damage-related expenses and contribute to nearly 15% of annual traffic accidents globally. Additionally, urban roadblocks caused by animal intrusions, construction zones, and garbage accumulation disrupt transportation networks, impacting millions of commuters and freight operations daily. The global smart transportation market, valued at USD 110 billion in 2023, is projected to grow at a CAGR of 12.6%, reflecting an urgent demand for automated, data-driven transport management solutions that enhance efficiency, safety, and resource allocation.

Traditional road monitoring methods such as manual surveys and fixed-sensor-based inspections are labor-intensive and time-consuming, resulting in high operational costs and delayed response times. These limitations reduce the efficiency of road maintenance, transport management, and hazard mitigation. Previous works have primarily focused on camera-based imagery, satellite-based remote sensing, and vehicle-mounted sensors; however, they lack the high-resolution adaptability crucial for real-time monitoring across dynamic urban road networks. Furthermore, most prior research has addressed only single obstacle categories, such as pothole detection and severity classification, illegal dumping detection, or animal intrusion detection, without integrating them into a unified system. In contrast, our study introduces a comprehensive UAV-based framework capable of detecting six high-priority road obstacles (potholes, cracks, garbage accumulation, construction zones, animal intrusions, and vehicle accidents) within a single system. Unlike existing methods that classify a single obstacle type, our approach incorporates multi-class obstacle detection and severity classification, enabling a holistic, AI-driven transport management system. This research fills a critical knowledge gap by demonstrating the superiority of UAV-based monitoring over traditional and existing camera-based and sensor-based approaches, offering higher adaptability, scalability, and accuracy for real-time urban road management.

This paper introduces a UAV-based intelligent transport management system for smart cities that uses artificial intelligence and machine learning models for real-time obstacle detection and severity classification. Unlike existing research, which primarily focuses on binary obstacle detection, our multi-stage framework incorporates the following:

- A high-precision obstacle detection model capable of identifying multiple road obstacle categories. This model leverages advanced deep learning techniques and computer vision to ensure accurate detection.

- Six category-specific severity classification models, designed using a hybrid approach that combines the feature extraction capabilities of convolutional networks with the contextual understanding of Transformer-based architectures.

- A novel preprocessing technique that dynamically adjusts images based on altitude variations, improving detection accuracy in aerial transport management.

- A real-time interactive dashboard that visualizes the detected obstacles, facilitating automated risk assessment and data-driven intervention planning.

Through the integration of AI-driven analytics, UAVs, and predictive maintenance strategies, our proposed system narrows the gap between obstacle detection and response, enabling timely resolution of critical road hazards. Beyond reducing response time, it optimizes resource allocation by identifying high-risk obstacles and prioritizing their mitigation. Addressing these challenges requires an automated, high-precision approach that enables rapid and scalable transport management.

The rest of this paper is organized as follows: Section 2 presents related work and positions our contributions within the existing literature. Section 3 describes the data acquisition, preprocessing, and management pipeline. Section 4 details the modeling approaches used for obstacle detection and severity classification, along with evaluation metrics. Section 5 introduces the integrated AI cloud platform that operationalizes the system in real time. Finally, Section 6 concludes the paper and outlines future directions for research and development.

2. Related Work

2.1. Literature Survey

A comprehensive literature review was performed to analyze the current detection models employed in UAV-based transport systems to address urban challenges, including obstacle detection, waste management, and road safety. Table 1 shows a survey of different research papers.

2.1.1. Vehicle and Animal Detection

One study employed CNN-based models such as SSD-500 and YOLOv3 to detect cattle in streets and deserts, providing solutions tailored to specific regions [1]. More advanced frameworks such as Faster R-CNN, SSD, YOLOv5, and YOLOv7 have been used for animal detection and counting across diverse terrains, including highways, forests, and deserts, demonstrating their robustness [2]. Other studies employ YOLO models for real-time detection and tracking of vehicles on roads and highways, supporting traffic management and safety [3,4]. Further research has applied Faster R-CNN and U-Net to identify livestock and wild animals [5,6] and to monitor obstacles in urban scenarios. In this paper, we build on these established models to detect vehicles, vehicle crashes, animals, and dead animal debris on roads [7,8], all of which can pose significant threats to transport management systems.

2.1.2. Road Damage and Debris Detection

Researchers have demonstrated the use of VGG-19 and U-Net deep convolutional neural networks [9] for road crack detection, particularly on the CRACK500 dataset. More advanced frameworks such as Faster R-CNN and YOLOv8 have been employed to assess and categorize road damage, underscoring their effectiveness in infrastructure monitoring [10]. Techniques including support vector machine classification and simple linear iterative clustering (SLIC) have also been applied to identify cracks and debris, specifically on smaller streets and highways, contributing to environmental cleanliness [11,12]. Together, these models emphasize the importance of surface analysis in maintaining the integrity and safety of urban infrastructure. This paper builds on these established models to detect road damage and debris, which can present significant challenges for transport management systems.

2.1.3. Garbage and Illegal Dumping Detection

Object detection models such as SSD, YOLOv3 [10,11], and YOLOv4 have been widely used to identify waste on roads, sidewalks, and parks from UAV-based datasets, demonstrating their efficiency in large-scale garbage detection [13,14]. Other work has implemented CNN-based architectures, such as CNN1 and CNN2 [15,16], to detect garbage in parks and on beaches, addressing urban waste management challenges. Furthermore, integrating ResNet50 with Feature Pyramid Networks has proven effective in identifying illegal dumping and landfill sites, contributing to environmental sustainability efforts [17,18,19]. This paper explores applying these traditional and advanced object detection models to detect graffiti on walls and traffic signs [20,21] and to mitigate environmental hazards.

2.1.4. Accident and Construction Detection

Researchers have demonstrated that models such as YOLOv5 and YOLOv8 are effective in identifying potholes and cracks on highways, enabling targeted maintenance strategies [22]. Tiny YOLO combined with convolutional neural networks has proven capable of detecting construction at various altitudes, making it suitable for UAV-based applications [23]. Convolutional LSTM models have also been employed to detect debris from vehicle accidents and to classify crashed vehicles, showing potential for real-time accident monitoring [24]. This paper builds on these established models to enhance road safety in UAV-based transport management systems.

2.2. Technology Survey

The integration of Unmanned Aerial Vehicles (UAVs) with artificial intelligence and machine learning has revolutionized urban infrastructure monitoring. UAVs equipped with object detection models like YOLO, Faster R-CNN, and SSD have improved the accuracy and efficiency of detecting road damage, debris, and safety hazards, particularly in construction sites where hardhat detection has reached over 90% precision.

Furthermore, UAVs combined with deep learning techniques and transformers [25,26,27,28,29] have shown great promise in traffic and transport management and in identifying and classifying urban solid waste. These technologies play a crucial role in enhancing the safety, sustainability, and overall management of urban infrastructure, offering timely and accurate solutions to a range of urban challenges. Table 1 gives a comprehensive overview of these research papers, including their objectives, the models developed, the measurements taken, and the evaluation metrics used.

Unlike prior studies that typically address a single road anomaly type or rely on monolithic detection frameworks, our proposed research introduces a comprehensive hybrid UAV-based Transport Management System that integrates anomaly detection, severity classification, and decision support into a unified workflow. Stage 1 employs an enhanced YOLOv8 model for robust multi-class obstacle detection across six diverse categories (cracks, potholes, animal debris, garbage dumping, construction activities, and accidents). Stage 2 advances beyond existing works by deploying category-specific severity models, enabling fine-grained classification (low, medium, high) tailored to each anomaly type. This two-stage pipeline ensures modularity and adaptability, allowing independent model optimization for evolving conditions. Further, by combining the EfficientRep backbone of MobileNetV1-SSD with transformer-based encoders for severity analysis, the system achieves both computational efficiency and high accuracy. Crucially, unlike most existing UAV-road inspection approaches, our framework extends beyond detection to deliver a cloud-integrated, interactive dashboard, enabling real-time visualization, role-based access, and actionable decision-making for smart transport authorities. This holistic integration of detection, severity analysis, and operational intelligence distinguishes our approach from existing incremental improvements in the literature.

Table 1.

Comprehensive overview of existing research papers.

| Ref. | Objective | Models Used | Data Source | Area | Measurements | Evaluation Metrics |
|---|---|---|---|---|---|---|
| [1] | Cattle detection in desert farms | CNN (SSD-500, YOLOv3) | Custom | Desert areas | Group-of-animals density distribution | F-score: 0.93, Accuracy: 0.89, mAP: 84.7 |
| [2] | Deer detection and counting | YOLOv3, YOLOv4, YOLOv4-Tiny, SSD | Custom images, manually annotated | Dense forests, agricultural areas | Number of deer detected | mAP: 70.45%, Precision: 86%, Recall: 75% |
| [3] | Real-time UAV tracking for multi-target detection and tracking | YOLOv4, DeepSORT | Custom dataset of 3200 images | Various environments with UAVs | Tracking speed (69 FPS) | Tracking accuracy: 94.35% |
| [4] | Small-scale moving target detection in aerial imagery | CNNs | Custom dataset of 10 × 10 pixel targets | Aerial environments | 10 × 10 pixel target size in all images | Accuracy |
| [5] | Real-time object detection | YOLO, Fast YOLO | Custom datasets across various domains | Natural images, artwork | Detection speed: 45 FPS for YOLO, 155 FPS for Fast YOLO | mAP, localization error rate, false positive rate |
| [6] | Moving object detection through UAV | YOLO, DCNN, Micro NN | KITTI dataset | Traffic environments | Object speed and motion; 3 FPS for Micro NN | Error-rate reduction in simulations |
| [7] | Livestock detection | YOLOv3, Faster R-CNN, U-Net | Custom dataset (89 images) | Aerial imagery captured by a quadcopter | Livestock of varying shapes, sizes, scales, and orientations | mAP |
| [8] | Wild animal detection | DCNN, SVM, k-NN, Ensemble Tree | Camera dataset | Forests | Animal detection in cluttered environments, multilevel graph cut proposals | Accuracy: 91.4% |
| [9] | Pavement crack detection | Faster R-CNN, preprocessing algorithms | Crack Forest Dataset | Forests | Crack detection on forest roads | mAP |
| [10] | Pavement crack detection | VGG-19, U-Net DCNN (residual blocks) | CRACK500 | Roads | Crack classification, crack segmentation | Classification accuracy: 100%; segmentation accuracy improved by 10% |
| [11] | Pavement distress detection using computer vision and deep learning | YOLO | UAV-PDD2023 | Highways, provincial roads, county roads | Longitudinal, transverse, oblique, and alligator cracks; patching; potholes | N/A |
| [12] | UAV-based crack detection framework | Faster R-CNN, YOLOv5s, YOLOv7-tiny, YOLOv8s | DJI Mini 2 UAV imagery | Urban roads | Crack detection, road damage assessment | Faster R-CNN: highest accuracy; YOLO models: fastest |
| [13] | Domestic garbage detection using deep learning in complex multi-scenes | Skip-YOLO, YOLOv3 | Custom | Streets | Feature mapping, convolution kernels, dense convolutional blocks | Precision: 22.5%, Recall: 18.6% |
| [14] | Litter detection | CNN, YOLOv8 | Camera imagery | City streets | Tree branches, leaves, plastic | F-score: 0.94, Accuracy: 0.90, mAP: 86.5 |
| [15] | Waste dump detection | CNN, SSD | UAV imagery | Saint-Louis, Senegal | Waste dumps of varying shapes and sizes | F-score: 0.88, Accuracy: 0.85, mAP: 81.3 |
| [16] | Illegal dumping detection | Adversarial Autoencoder, Vanilla GAN, WGAN, WGAN-GP | Satellite imagery | Various locations | Dumping site size, location | F-score: 0.90, Accuracy: 0.87, mAP: 82.4 |
| [17] | Intelligent garbage detection using UAV and deep learning | CNN1, CNN2 | UAV images | Remote locations | Symmetry and homogeneity for image resizing | Accuracy: 94% |
| [18] | Solid waste detection using machine learning and GIS data | K-means segmentation, Random Forest, EfficientNet | VHR images, GIS data | Streets | Classification of waste into 5 categories, image segmentation, rooftop removal for accuracy enhancement | Accuracy: 73.95–95.76% for class "Sure"; overall accuracy: 80.18% |
| [19] | Traffic and illegal dumping | YOLOv5, DeepSORT | UAV video, COCO dataset | San Jose | Detection of illegal dumping, vehicles, and license plates | Accuracy: 97%, F-score: 0.95, Precision: 0.92, Recall: 0.91 |
| [20] | Multi-target detection | YOLOv4, CNN | COCO2017 | Objects | Detection of multiple targets: humans, cats, stationery | mAP |
| [21] | Graffiti detection | SSD MobileNet V2 | UAV imagery | Urban areas | Detection of graffiti on walls and traffic signs | RPN loss |
| [22] | Detection of debris objects caused by vehicle accidents | Pretrained SSD, Faster R-CNN | UAV imagery | Traffic environments | Number of debris objects caused by vehicle accidents | Accuracy graphs, evaluation matrix, detection box score |
| [23] | Improving construction safety using UAS and deep learning | Faster R-CNN, YOLOv3 | UAV imagery | Construction sites | Safety activity metrics, hardhat detection | Precision: 93.1% for Faster R-CNN, 89.8% for YOLOv3 |
| [24] | Public parking space monitoring | Object detection models | CCTV imagery | Parking areas | Number of vehicles | Accuracy, scalability |
| [25] | Object detection in low-light conditions | Multi-enhancement networks | Camera imagery | Urban and rural environments | Detection performance, response time | Accuracy |
| [26] | Image classification using Transformers | Vision Transformers | CIFAR-10, CIFAR-100, Pets, Flowers, Cars | Natural images | Comparison of ViT performance with typical CNNs | Accuracy |
| [27] | Multiple image classification | CNNs, Vision Transformers | Custom datasets | Noisy images | Comparison of CNNs and ViT on a large-scale dataset | Accuracy, mAP |
| [28] | Attention mechanisms in ViT | Vision Transformers | CIFAR-10/100, MNIST, MNIST-F | Natural and artificial images | Graph structures applied to the Transformer attention head | Accuracy |
| [29] | AI cloud platform for road inspection and analysis | YOLO, ViT, MB1-SSD | Custom UAV imagery dataset | Urban and rural environments | Density analysis, size-based severity classification, obstacle categorization | Precision, accuracy, mAP, ROC, confusion matrix |
| Proposed work | UAV-based transport management for animals, construction, potholes, cracks, illegal dumping, and accidents | Two-stage hybrid models combining traditional CNNs with Transformer architectures | Custom UAV imagery dataset | Roads, streets, and highways | Density analysis, relative sizing, severity classification, obstacle categorization | High precision, severity levels (low/medium/high), scalable efficiency |

3. Data Engineering

The foundation of any machine learning-based system lies in data collection, preparation, cleaning, and structuring for model training. This section outlines the data acquisition process for our research.

3.1. Data Collection

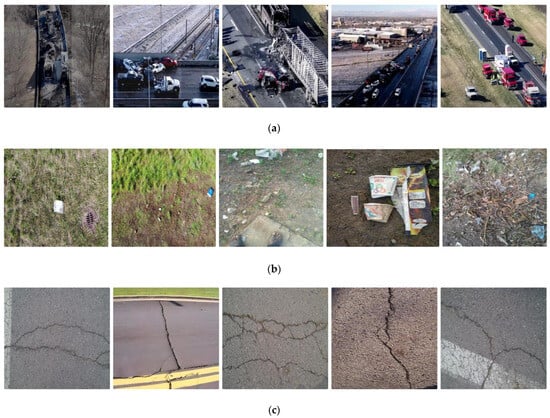

The dataset used in this study was compiled from public image repositories, drone videos, and custom-collected images. The UAV-PDD2023 and UAV pavement crack datasets contributed over 3800 annotated images for road distress detection, including cracks and potholes. For pothole detection, we also incorporated the PDS-UAV and B21-CAP0388 datasets, totaling over 5800 images. Illegal dumping was covered by a mixed dataset of publicly available UAV images and web-scraped data, contributing more than 3000 annotated samples. Custom datasets covering animals, animal debris, and construction activities comprise over 4500 images. For vehicle accidents, we extracted frames from 9 drone videos and saved them as images, consistent with the other datasets. Together, these sources support the development of accurate detection and classification models for the six road hazard categories; Figure 1a–f shows samples from each category: accidents, illegal dumping, cracks, construction, potholes, and animals on roads. Although most of the dataset depicts urban roads and highways, it also includes samples from dense forests and agricultural areas. The current study emphasizes urban environments because traffic congestion and transport management are more complex in these regions.

Figure 1.

(a) Sample images from accident dataset. (b) Sample images from illegal dumping dataset. (c) Sample images from road crack dataset. (d) Sample images from road construction dataset. (e) Sample images from road pothole dataset. (f) Sample images from animal dataset.

Table 2 presents a comprehensive overview of the datasets employed in our study, categorizing them based on the type of road hazard they encompass. It details the total number of images collected for each subcategory, including cracks, potholes, illegal dumping, road animals, construction activities, and vehicle accidents.

Table 2.

Comprehensive overview of datasets.

3.2. Data Preprocessing

During preprocessing, we retained only high-quality color images with minimal noise, excluding low-resolution and grayscale images. Because image sizes varied across sources, we resized all images to 512 × 512 pixels for consistency and converted them to tensors for compatibility with PyTorch 2.6. To address varying altitudes in drone imagery, we standardized the images to a constant reference altitude, ensuring consistent relative sizing of objects. For video data, rather than converting entire videos and producing an excessive number of frames, we manually clipped the relevant segments, converted them into frames using OpenCV 4.10.0, and stored them for further analysis and modeling.
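The altitude standardization step is not specified in detail. The following is a minimal sketch, assuming a nadir-pointing camera whose ground sampling distance grows linearly with altitude, so that rescaling a frame by h / h_ref makes objects appear at the size they would have at a reference altitude h_ref. The 30 m reference altitude, the function names, and the nearest-neighbour resampling are illustrative assumptions; a production pipeline would more likely call OpenCV's `cv2.resize` with area or bilinear interpolation.

```python
import numpy as np


def nearest_resize(img: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Dependency-free nearest-neighbour resize of an H x W (x C) array."""
    in_h, in_w = img.shape[:2]
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    return img[rows[:, None], cols[None, :]]


def normalize_altitude(img: np.ndarray, altitude_m: float,
                       ref_altitude_m: float = 30.0, out_size: int = 512) -> np.ndarray:
    """Rescale an H x W x 3 nadir UAV frame to its apparent size at ref_altitude_m
    (hypothetical default), restore the original frame size, resize to
    out_size x out_size, and return a C x H x W float32 array in [0, 1]."""
    h, w = img.shape[:2]
    # Ground sampling distance scales with altitude: an object seen from twice the
    # reference altitude covers half as many pixels, so it must be enlarged 2x.
    scale = altitude_m / ref_altitude_m
    new_h, new_w = max(1, round(h * scale)), max(1, round(w * scale))
    scaled = nearest_resize(img, new_h, new_w)
    if scale >= 1.0:
        # Enlarged: keep the central h x w window (zoom in).
        top, left = (new_h - h) // 2, (new_w - w) // 2
        frame = scaled[top:top + h, left:left + w]
    else:
        # Shrunk: centre it on a zero canvas of the original size (zoom out).
        frame = np.zeros_like(img)
        top, left = (h - new_h) // 2, (w - new_w) // 2
        frame[top:top + new_h, left:left + new_w] = scaled
    out = nearest_resize(frame, out_size, out_size)
    # HWC uint8 -> CHW float32, the layout torch.from_numpy() hands to a PyTorch model
    return out.astype(np.float32).transpose(2, 0, 1) / 255.0
```

For example, a 20 × 20 pixel object in a frame taken at 60 m becomes a 40 × 40 pixel object after normalization to 30 m, and the same object at 15 m shrinks to 10 × 10 pixels.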

3.3. Data Transformation

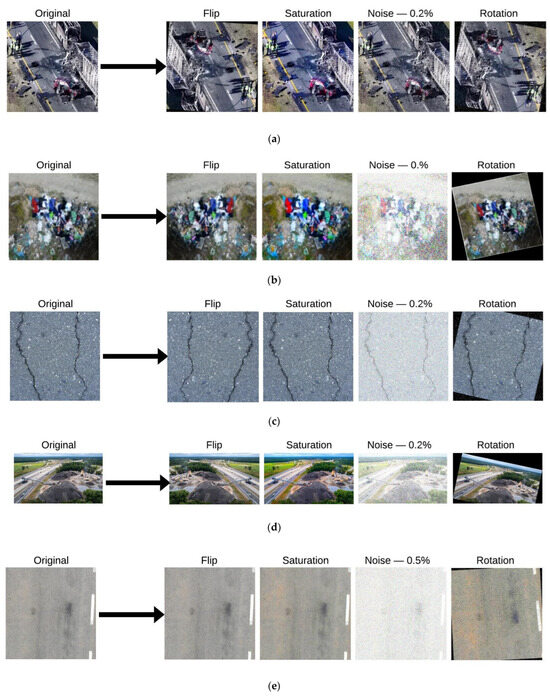

We applied image data augmentation using Keras’s ImageDataGenerator to enhance dataset diversity:

- Rotation: This simulates different orientations of the data.

- Saturation: This accounts for variations in lighting conditions.

- Flipping: This mirrors images horizontally and vertically to mimic perspective changes.

- Noise Injection: This adds random noise to help the model handle real-world noise.

These transformations improved the dataset’s diversity and model robustness. Table 2 shows the count of images after augmentation. Also, Figure 2a–f illustrates augmentation samples, showing the original image, an arrow indicating transformation, and augmented versions of all the categories, including accidents, illegal dumping, road cracks, road construction, road potholes, and animal datasets.
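The paper applies these transformations with Keras's `ImageDataGenerator`. For readers without TensorFlow, the four operations can be sketched in NumPy as below. This is an illustrative equivalent, not the authors' configuration: rotation is restricted to multiples of 90° (exact for the square 512 × 512 inputs), saturation is adjusted by blending with a luma image, and the parameter ranges (saturation factor 0.6 to 1.4, noise σ = 8) are hypothetical.

```python
import numpy as np

LUMA = np.array([0.299, 0.587, 0.114], dtype=np.float32)  # ITU-R BT.601 weights


def adjust_saturation(img: np.ndarray, factor: float) -> np.ndarray:
    """Blend with the luma image: factor 0 gives grayscale, 1 leaves the image
    unchanged, and values above 1 boost colour."""
    gray = (img @ LUMA)[..., None]                    # H x W x 1 luma channel
    return np.clip(gray + factor * (img - gray), 0.0, 255.0)


def augment(img: np.ndarray, rng: np.random.Generator,
            sat_range=(0.6, 1.4), noise_sigma=8.0) -> np.ndarray:
    """Return one randomly augmented copy of an H x W x 3 uint8 image."""
    out = img.astype(np.float32)
    out = np.rot90(out, k=int(rng.integers(0, 4)), axes=(0, 1))   # rotation
    out = adjust_saturation(out, rng.uniform(*sat_range))          # saturation
    if rng.random() < 0.5:
        out = out[:, ::-1]                                         # horizontal flip
    if rng.random() < 0.5:
        out = out[::-1, :]                                         # vertical flip
    out = out + rng.normal(0.0, noise_sigma, size=out.shape)       # noise injection
    return np.clip(out, 0, 255).astype(np.uint8)
```

Keeping each original and generating eight augmented copies per image would yield a ninefold expansion, which matches the reported growth from about 17,000 to 153,000 images.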

Figure 2.

(a) Augmented data sample from accident dataset. (b) Augmented data sample from illegal dumping dataset. (c) Augmented data sample from road crack dataset. (d) Augmented data sample from road pothole dataset. (e) Augmented data sample from road construction site dataset. (f) Augmented data sample from animals on road dataset.

To improve model robustness across varying conditions, we applied data augmentation techniques such as noise addition and color saturation adjustments, which simulate environmental variations including bright sunlight, rain, fog, and smoke. Future work will expand the dataset to rural roads, tunnels, and railway tracks to further evaluate model generalization across diverse road types and climates.

3.4. Data Preparation

Data was collected from multiple sources, including images and videos; video clips were converted into image frames using the OpenCV library. The images were labeled and partitioned into three distinct sets: training, validation, and test. The training set was used to train the models, the validation set to estimate prediction error for model selection, and the test set to evaluate the models' generalization performance. The ImageDataGenerator package was used to randomly shuffle the data and split it into 70% training, 20% validation, and 10% testing. Any images that lacked prior annotations were annotated before training.
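A reproducible 70/20/10 shuffle-and-split can be sketched with the standard library alone. One safeguard is added beyond the text: samples are grouped by their source image (here, the file-name prefix before `_aug`, a hypothetical naming scheme), so augmented copies of one original never land in different splits, which would otherwise inflate test scores. The seed and function name are arbitrary.

```python
import random
from collections import defaultdict


def split_by_group(samples, group_of, train_frac=0.7, val_frac=0.2, seed=42):
    """Shuffle groups of samples with a fixed seed and split them train/val/test.

    group_of maps a sample to the id of its original image, so every augmented
    copy of that image ends up in the same split. The test split receives the
    remaining groups (10% with the defaults)."""
    groups = defaultdict(list)
    for s in samples:
        groups[group_of(s)].append(s)
    keys = sorted(groups)                 # deterministic order before shuffling
    random.Random(seed).shuffle(keys)
    n_train = round(len(keys) * train_frac)
    n_val = round(len(keys) * val_frac)
    parts = (keys[:n_train], keys[n_train:n_train + n_val], keys[n_train + n_val:])
    return tuple([s for k in part for s in groups[k]] for part in parts)
```

With 100 originals and nine images each, this yields 630, 180, and 90 images with no original shared between splits.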

3.5. Data Statistics

Our raw dataset, gathered from open-source datasets and custom collections, totals around 17,000 images. To improve quality and diversity, we applied data augmentation, expanding the dataset to 153,000 images across 6 classes. The distribution of image counts for each class is provided in Table 3. The dataset was then split into 70% for training, 20% for validation, and 10% for testing.

Table 3.

Dataset class distribution.

To support reproducibility, the publicly available datasets employed in this study (e.g., UAV-PDD2023, CRACK500, PDS-UAV, and other referenced repositories) can be directly accessed from their respective sources. The custom-collected datasets like animal obstructions, construction activities, and accident frames, which comprise a substantial portion of this research, are securely stored on the San José State University institutional drive. Due to storage and institutional policy constraints, these datasets are not openly downloadable; however, researchers may request access by contacting the corresponding author. Access will be granted for academic and non-commercial research purposes upon verification. While this request-based mechanism ensures controlled sharing, it also presents a limitation in terms of full replicability compared to completely open datasets. Nevertheless, we believe that this hybrid approach balances data security with reproducibility, allowing the community to validate and extend our work.

4. Model Development

We developed a two-stage modeling architecture to enhance obstacle identification and classification on roadways. In the first stage, the obstacle detection model accurately identifies the type of obstacle present, such as potholes, cracks, debris, illegal dumping, road construction activities, vehicle accidents, or animals. Based on the detected obstacle, the system dynamically triggers one of the six category-specific models in the second stage. Each model in the second stage is specifically trained to assess the severity of the corresponding obstacle category identified in the first stage, enabling precise and efficient road condition analysis. Figure 3 shows our 2-stage model pipeline.

Figure 3.

Hybrid transport management machine learning model based on UAV data.

4.1. Stage 1—Obstacle Detection

In this research project, we optimized the YOLOv8 model to enable precise detection of obstacles by modifying its architecture to accommodate UAV-captured roadway imagery. The pretrained YOLOv8 model, originally trained on the COCO dataset with 80 classes, was fine-tuned by narrowing the detection classes to six specific categories relevant to our application domain. We adjusted the head architecture to concentrate on these targeted obstacles while retaining the robust backbone for efficient feature extraction. To enhance the detection accuracy, particularly for smaller obstacles like cracks and debris, we integrated a PANet layer, improving the multi-scale feature representation. These architectural modifications significantly enhanced the model’s performance, ensuring accurate localization and detection of obstacles under varying environmental conditions.
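A six-class fine-tuning run of this kind could be set up with the Ultralytics package, assuming its standard dataset-YAML format (`path`, `train`, `val`, `test`, `names`). The sketch below is hypothetical in its directory layout, class order, checkpoint size (`yolov8s.pt`), and epoch count, and it does not reproduce the PANet and head modifications described above. The training call is wrapped in a function so the config helper runs without Ultralytics installed.

```python
from pathlib import Path

# Hypothetical class order; the Stage 2 router would key off these names.
CLASSES = ["crack", "pothole", "animal", "illegal_dumping", "construction", "accident"]


def data_yaml_text(root: str) -> str:
    """Ultralytics-style dataset description for the six obstacle classes."""
    lines = [f"path: {root}", "train: images/train", "val: images/val",
             "test: images/test", "names:"]
    lines += [f"  {i}: {name}" for i, name in enumerate(CLASSES)]
    return "\n".join(lines) + "\n"


def fine_tune(root: str, epochs: int = 100, yaml_file: str = "road_obstacles.yaml"):
    """Fine-tune COCO-pretrained YOLOv8 on the six classes (requires `pip install ultralytics`)."""
    from ultralytics import YOLO          # imported lazily so the helper above runs without it
    Path(yaml_file).write_text(data_yaml_text(root))
    model = YOLO("yolov8s.pt")            # COCO-pretrained weights with an 80-class head
    # Training against a 6-class dataset file makes Ultralytics rebuild the head for 6 classes
    return model.train(data=yaml_file, epochs=epochs, imgsz=512)
```

Setting `imgsz=512` keeps the training resolution aligned with the 512 × 512 preprocessing described in Section 3.2.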

4.2. Stage 2—Category-Specific Severity Classification

In Stage 2 of our hybrid model architecture, category-specific models are employed for precise severity classification of the detected obstacles. After YOLOv8 identifies obstacles in Stage 1, the system selects the most significant category based on the largest bounding box and routes it to a corresponding Stage 2 model. Each of these models is meticulously customized and trained on data specific to its obstacle type—such as cracks, potholes, vehicle crashes, garbage, animals, or construction—enabling accurate severity assessment as low, medium, or high. This tailored approach ensures specialized analysis for each obstacle, optimizing severity evaluation and enhancing overall roadway management.
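The routing rule above, where the largest bounding box decides which Stage 2 model runs, can be sketched as follows. `Detection`, `route`, and the stub severity models are hypothetical names. In the real system each callable would receive the image crop inside the selected box rather than the detection record.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Detection:
    label: str                                 # one of the six Stage 1 classes
    box: Tuple[float, float, float, float]     # (x1, y1, x2, y2) in pixels
    score: float


def box_area(box) -> float:
    x1, y1, x2, y2 = box
    return max(0.0, x2 - x1) * max(0.0, y2 - y1)


def route(detections: List[Detection],
          severity_models: Dict[str, Callable[[Detection], str]]) -> Optional[Tuple[str, str]]:
    """Pick the detection with the largest bounding box and pass it to the Stage 2
    model registered for its category; returns (label, severity), or None if empty."""
    if not detections:
        return None
    main = max(detections, key=lambda d: box_area(d.box))
    return main.label, severity_models[main.label](main)
```

Selecting by area rather than confidence means a large, moderately confident pothole outranks a small, highly confident crack, which matches the "most significant category" rule stated above.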

4.2.1. Accident Classification Model

The Accident Classification Model employs a two-stage hybrid architecture optimized for identifying and assessing vehicle-related road hazards. In the first stage, if the detected category is a vehicle crash, this model is invoked. The second stage enhances a pretrained Vision Transformer model for precise severity classification. Key enhancements include incorporating vehicle count as a critical feature, resizing images to 224 × 224 with random horizontal flips during preprocessing, adding a dropout layer to mitigate overfitting, and using the Gaussian Error Linear Unit (GELU) activation for improved non-linearity and pattern recognition. The model classifies severity as low, medium, or high based on factors such as the number of vehicles involved, the extent of damage, and road blockage.
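The text does not specify how the vehicle count enters the ViT. One plausible arrangement is sketched here as a NumPy forward pass. It concatenates the 768-dimensional [CLS] embedding of a ViT-Base encoder with the log-scaled vehicle count, then feeds the result through a dropout, linear, GELU, linear head that produces low/medium/high probabilities. The hidden width, log scaling, dropout rate and placement, and random weights are all hypothetical. GELU uses the common tanh approximation.

```python
import numpy as np

SEVERITY = ("low", "medium", "high")


def gelu(x):
    """Tanh approximation of the Gaussian Error Linear Unit."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))


class AccidentHead:
    """Fuses a ViT [CLS] embedding with the vehicle count (hypothetical design)."""

    def __init__(self, embed_dim=768, hidden=256, p_drop=0.3, seed=0):
        rng = np.random.default_rng(seed)
        in_dim = embed_dim + 1                        # +1 for the vehicle-count feature
        self.w1 = rng.normal(0, in_dim ** -0.5, (in_dim, hidden))
        self.b1 = np.zeros(hidden)
        self.w2 = rng.normal(0, hidden ** -0.5, (hidden, len(SEVERITY)))
        self.b2 = np.zeros(len(SEVERITY))
        self.p_drop, self.rng = p_drop, rng

    def __call__(self, cls_embedding, vehicle_count, train=False):
        """cls_embedding: (N, embed_dim); vehicle_count: N counts. Returns (N, 3) probabilities."""
        count = np.log1p(np.asarray(vehicle_count, dtype=float))[:, None]
        x = np.concatenate([cls_embedding, count], axis=1)
        if train:                                     # inverted dropout; identity at inference
            mask = self.rng.random(x.shape) >= self.p_drop
            x = x * mask / (1.0 - self.p_drop)
        h = gelu(x @ self.w1 + self.b1)
        logits = h @ self.w2 + self.b2
        logits -= logits.max(axis=1, keepdims=True)   # numerically stable softmax
        probs = np.exp(logits)
        return probs / probs.sum(axis=1, keepdims=True)
```

Log-scaling the count keeps a pile-up of many vehicles from dominating the 768 image features. Appending it after the encoder leaves the pretrained ViT weights untouched.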

4.2.2. Illegal Dumping Classification Model

The Illegal Dumping Classification Model detects illegal waste disposal in urban areas, enhancing environmental safety. Built on the SSD architecture, it integrates a Feature Pyramid Network (FPN) to improve detection across varying waste sizes. The FPN combines high-level abstract features with finer details from lower levels, improving feature extraction and detection accuracy. This modification enables the model to more effectively identify and locate different types of waste, supporting cleaner neighborhoods and better waste management.
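The FPN fusion step described above can be illustrated with its top-down pathway. The coarse, semantically strong map is upsampled 2× and added to a 1 × 1-projected (lateral) version of the next finer map, repeated from coarsest to finest level. The NumPy sketch below uses random weights as stand-ins for learned ones. Standard FPNs (for example torchvision's `ops.FeaturePyramidNetwork`) also apply a 3 × 3 smoothing convolution after each addition, which is omitted here. The channel counts are hypothetical.

```python
import numpy as np


def conv1x1(x, w):
    """1 x 1 convolution on a C_in x H x W map: a per-pixel linear map to C_out channels."""
    return np.einsum("oc,chw->ohw", w, x)


def upsample2x(x):
    """Nearest-neighbour 2x upsampling of a C x H x W map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def fpn_top_down(features, out_channels=256, seed=0):
    """features: list of C_i x H_i x W_i maps ordered fine -> coarse, each half the
    spatial size of the previous one. Returns merged maps with out_channels each."""
    rng = np.random.default_rng(seed)
    laterals = [conv1x1(f, rng.normal(0, f.shape[0] ** -0.5, (out_channels, f.shape[0])))
                for f in features]
    merged = [laterals[-1]]                    # start from the coarsest level
    for lat in reversed(laterals[:-1]):
        merged.append(lat + upsample2x(merged[-1]))   # add upsampled semantics to fine detail
    return merged[::-1]                        # back to fine -> coarse order
```

Every output level ends up with the same channel width, so an SSD-style detection head can be shared across scales. That is what lets small and large waste piles be handled by one predictor.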

4.2.3. Road Crack Classification Model

The Road Crack Detection Classification Model was designed using a modified MB1-SSD architecture, selected for its balance of speed and detection accuracy, particularly suited for real-time UAV applications. The base MB1-SSD model was adapted to improve crack detection by incorporating additional 1 × 1 and 3 × 3 convolutional layers (with stride 2 and padding 1) to enhance feature extraction and better capture fine-grained details of road surface cracks. To improve detection accuracy, especially in cases where cracks are closely situated, we implemented Soft Non-Maximum Suppression (Soft-NMS), which allows the model to retain multiple valid detections rather than suppressing them. Severity classification is based on the depth and size of the detected cracks, categorizing them into low, medium, and high severity levels. This targeted approach ensures precise assessment of road damage, facilitating timely maintenance interventions.
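Soft-NMS can be sketched in NumPy in its Gaussian form (Bodla et al.). Instead of deleting every box whose IoU with the current best box exceeds a threshold, the method decays that box's score by exp(−IoU²/σ). Adjacent cracks therefore survive with reduced confidence rather than vanishing. The σ = 0.5 and the final score threshold are commonly used defaults, assumed here rather than taken from the paper.

```python
import numpy as np


def iou(box, boxes):
    """IoU between one box and an array of boxes, all as (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = lambda b: (b[..., 2] - b[..., 0]) * (b[..., 3] - b[..., 1])
    return inter / (area(box) + area(boxes) - inter)


def soft_nms(boxes, scores, sigma=0.5, score_thresh=0.001):
    """Gaussian Soft-NMS: repeatedly keep the highest-scoring box, then decay the
    scores of the remaining boxes by exp(-IoU^2 / sigma). Returns kept indices
    and their (possibly decayed) scores, in selection order."""
    boxes = np.asarray(boxes, dtype=float)
    scores = np.asarray(scores, dtype=float).copy()
    idx = np.arange(len(scores))
    keep, kept_scores = [], []
    while idx.size:
        best = idx[np.argmax(scores[idx])]
        keep.append(int(best))
        kept_scores.append(float(scores[best]))
        idx = idx[idx != best]
        if idx.size:
            scores[idx] *= np.exp(-iou(boxes[best], boxes[idx]) ** 2 / sigma)
            idx = idx[scores[idx] > score_thresh]      # drop boxes decayed to near zero
    return keep, kept_scores
```

For two cracks overlapping at IoU ≈ 0.82 with scores 0.9 and 0.8, hard NMS at a 0.5 threshold would discard the second. Soft-NMS keeps it with a score of about 0.21.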

4.2.4. Road Construction Site Classification Model

The Road Construction Site Classification Model enhances roadway safety by detecting and classifying construction zone severity using a Vision Transformer (ViT). The model replaces the patch embedding layer with a Conv2D layer for detailed pixel-level feature extraction, capturing structural elements like machinery and barricades. The MLP head, updated with a linear layer, processes 768 features for accurate classification. Triggered by Stage 1 obstacle detections, the model evaluates severity based on factors like road blockage and proximity to traffic, categorizing it into low, medium, and high risk for informed decision-making.
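For reference, the standard ViT-Base/16 patch embedding is itself a Conv2D whose kernel size equals its stride (16). It turns a 3 × 224 × 224 image into 14 × 14 = 196 tokens of 768 features, the same width consumed by the MLP head described above. The NumPy sketch below computes the embedding both as an explicit strided convolution and as "cut into patches, then one matrix multiply", showing the two are identical. The weights are random placeholders, and the authors' modified layer may use a different kernel or stride.

```python
import numpy as np


def patch_embed_matmul(img, weight, patch=16):
    """img: C x H x W, weight: D x C x patch x patch. Returns (num_patches, D) tokens."""
    c, h, w = img.shape
    gh, gw = h // patch, w // patch
    patches = (img[:, :gh * patch, :gw * patch]
               .reshape(c, gh, patch, gw, patch)
               .transpose(1, 3, 0, 2, 4)             # -> gh, gw, C, patch, patch
               .reshape(gh * gw, c * patch * patch))
    return patches @ weight.reshape(weight.shape[0], -1).T


def patch_embed_conv(img, weight, patch=16):
    """Same result via an explicit Conv2D loop with kernel = stride = patch."""
    d = weight.shape[0]
    gh, gw = img.shape[1] // patch, img.shape[2] // patch
    out = np.empty((d, gh, gw))
    for i in range(gh):
        for j in range(gw):
            window = img[:, i * patch:(i + 1) * patch, j * patch:(j + 1) * patch]
            out[:, i, j] = np.tensordot(weight, window, axes=([1, 2, 3], [0, 1, 2]))
    return out.reshape(d, -1).T                      # row-major flatten -> (tokens, D)
```

Because the two forms are equivalent, changing the Conv2D kernel or stride (for example, overlapping windows) is the natural way to obtain finer pixel-level features without altering the rest of the encoder.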

4.2.5. Road Pothole Classification Model

The Road Pothole Classification Model is designed to identify and assess potholes on roadways to improve safety for vehicles and pedestrians. It is built on the architecture of the Road Crack Classification Model and adds a sequential block of convolution layers with 5 × 5 kernels, instead of the standard 3 × 3. These larger kernels capture larger pothole-related features while preserving spatial information. This modification strengthens feature extraction, improving the accuracy of pothole detection and severity classification and thus contributing to safer driving conditions.
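The trade-off between 5 × 5 and 3 × 3 kernels can be made concrete with standard convolution arithmetic. The 19 × 19 feature map and the 64 input/output channels below are hypothetical values, not figures from the paper. "Same" padding is assumed, as one plausible way to read "preserving spatial information".

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output length of a convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def same_padding(kernel: int) -> int:
    """Padding that keeps the spatial size unchanged for an odd kernel at stride 1."""
    return (kernel - 1) // 2


def conv_params(kernel: int, in_ch: int, out_ch: int) -> int:
    """Weights plus biases of a standard (non-depthwise) k x k convolution."""
    return kernel * kernel * in_ch * out_ch + out_ch


def receptive_field(kernels: list) -> int:
    """Receptive field of stacked stride-1 convolutions: 1 + sum(k - 1)."""
    return 1 + sum(k - 1 for k in kernels)


feature_map = 19  # hypothetical feature-map size
for k in (3, 5):
    print(k, conv_out(feature_map, k, padding=same_padding(k)),
          conv_params(k, 64, 64), receptive_field([k, k]))
```

With padding 2, a 5 × 5 layer keeps the 19 × 19 map intact. Two stacked 5 × 5 layers see a 9 × 9 window instead of 5 × 5, at roughly 2.8 times (25/9) the weights per layer. For comparison, a 3 × 3 layer with stride 2 and padding 1, like those added to the crack model, halves the map: `conv_out(19, 3, stride=2, padding=1)` is 10.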

4.2.6. Animal Obstruction Classification Model

Our Animal Obstruction and Severity Classification Model uses a two-stage architecture optimized for identifying and evaluating animal-related road hazards. In Stage 1, the model detects the presence of animals in UAV-based video of roads, highways, and streets. In Stage 2, a pretrained Vision Transformer is fine-tuned for severity classification. Key modifications include replacing the patch embedding layer with a Conv2D layer for finer pixel-level feature extraction and adding a dropout layer (p = 0.5) to reduce overfitting. The model is also tailored to classify severity into three categories: low, medium, and high. The severity assessment is based on the animal's size, proximity to the road, and movement, ensuring precise risk evaluation.
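The dropout layer with p = 0.5 can be sketched in its common "inverted" form. During training, each unit is zeroed with probability p and survivors are scaled by 1/(1 − p), so that no rescaling is needed at inference time. This is a plain-Python illustration of the technique, not the framework layer the authors used.

```python
import random
from typing import List, Optional


def dropout(x: List[float], p: float = 0.5, training: bool = True,
            rng: Optional[random.Random] = None) -> List[float]:
    """Inverted dropout: zero each unit with probability p, rescale survivors by 1/(1-p)."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(x)  # identity at inference time
    rng = rng or random.Random()
    scale = 1.0 / (1.0 - p)
    return [0.0 if rng.random() < p else v * scale for v in x]


features = [1.0] * 10
print(dropout(features, p=0.5, rng=random.Random(0)))  # each entry is 0.0 or 2.0
print(dropout(features, p=0.5, training=False))         # unchanged
```

Because the surviving activations are doubled when p = 0.5, the expected value of each feature is unchanged. This is why the same weights can be used unmodified at inference time.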

4.3. Aggregation Module

The UAV-based aggregation module consolidates the outputs of the six category-specific models and groups detected objects by severity level (low, medium, or high) for effective prioritization. It organizes the data to highlight critical areas needing immediate attention and visualizes this information on a map. The map uses color-coded severity, allowing quick identification of high-risk regions and thereby improving decision-making and response efficiency.
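One possible form of this aggregation is sketched below. Detections are binned into geographic grid cells, and each cell takes its worst severity as its map color, using the red/orange/green scheme of the dashboard. The paper does not specify its aggregation rule. The grid binning, the "worst severity wins" rule, the availability of geotags, and the `Detection` record are all hypothetical.

```python
import math
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple

SEVERITY_RANK = {"low": 0, "medium": 1, "high": 2}
ZONE_COLOR = {"low": "green", "medium": "orange", "high": "red"}


@dataclass
class Detection:
    category: str   # hazard class from Stage 1, e.g. "pothole"
    severity: str   # Stage 2 output: "low", "medium" or "high"
    lat: float      # geotag of the UAV frame (assumed available)
    lon: float


def grid_cell(lat: float, lon: float, cell_deg: float) -> Tuple[int, int]:
    """Bin a coordinate into a square grid cell of cell_deg degrees."""
    return math.floor(lat / cell_deg), math.floor(lon / cell_deg)


def aggregate(detections: List[Detection], cell_deg: float = 0.001) -> List[Dict]:
    """Group detections per cell; the worst severity in a cell sets its map colour."""
    cells: Dict[Tuple[int, int], List[Detection]] = defaultdict(list)
    for d in detections:
        cells[grid_cell(d.lat, d.lon, cell_deg)].append(d)
    summary = []
    for cell, items in cells.items():
        worst = max(items, key=lambda d: SEVERITY_RANK[d.severity]).severity
        summary.append({
            "cell": cell,
            "severity": worst,
            "color": ZONE_COLOR[worst],
            "counts": {lvl: sum(d.severity == lvl for d in items) for lvl in SEVERITY_RANK},
            "categories": sorted({d.category for d in items}),
        })
    # Highest-severity cells first, then cells with more high-severity hits.
    summary.sort(key=lambda s: (-SEVERITY_RANK[s["severity"]], -s["counts"]["high"]))
    return summary


report = aggregate([
    Detection("pothole", "high", 37.33550, -121.88150),
    Detection("crack", "low", 37.33552, -121.88151),
    Detection("animal", "medium", 37.34050, -121.89050),
])
for row in report:
    print(row["color"], row["categories"], row["counts"])
```

In this toy example, the pothole and the crack fall in the same cell, which is therefore shown in red. The isolated animal sighting gets its own orange cell.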

The hybrid transport management model, which processes UAV-captured roadway data in two stages, is showcased in Figure 4. Stage 1 employs improved YOLOv8 for obstacle detection, classifying road hazards into predefined categories. Stage 2 assigns the detected obstacles to specialized category-specific models for severity assessment using tailored architectures like Vision Transformers and SSD variants. A mathematical aggregation module merges severity levels of different categories, generating a transport management analysis report for informed decision-making.
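The hand-off between the two stages can be pictured as a dispatch table keyed by the Stage 1 category. The sketch below is hypothetical: the `Stage1Detection` record, the `crop_id` handle, and the 0.25 confidence cut-off are assumptions. The stub lambdas stand in for the trained ViT and SSD severity models.

```python
from typing import Callable, Dict, List, NamedTuple


class Stage1Detection(NamedTuple):
    category: str      # one of the six hazard classes predicted by the detector
    confidence: float  # detector confidence in [0, 1]
    crop_id: str       # hypothetical handle to the cropped image region


SeverityModel = Callable[[str], str]  # crop_id -> "low" | "medium" | "high"


def route_to_severity_models(detections: List[Stage1Detection],
                             models: Dict[str, SeverityModel],
                             min_confidence: float = 0.25) -> List[Dict[str, str]]:
    """Send each confident Stage 1 detection to the Stage 2 model for its category."""
    results = []
    for det in detections:
        if det.confidence < min_confidence:
            continue  # too uncertain to forward to Stage 2
        model = models.get(det.category)
        if model is None:
            raise KeyError(f"no Stage 2 model registered for {det.category!r}")
        results.append({"category": det.category, "crop_id": det.crop_id,
                        "severity": model(det.crop_id)})
    return results


# Stub severity models stand in for the trained ViT and SSD variants.
models = {"pothole": lambda crop: "high", "accident": lambda crop: "medium"}
detections = [
    Stage1Detection("pothole", 0.82, "uav_0001_a"),
    Stage1Detection("accident", 0.10, "uav_0001_b"),
    Stage1Detection("accident", 0.91, "uav_0002_a"),
]
for row in route_to_severity_models(detections, models):
    print(row)
```

Keeping the models in a dictionary means a new hazard category only requires registering one more severity model, without changing the routing code.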

Figure 4.

Block diagram of the hybrid transport management model.

5. Model Evaluation Methods and Results

5.1. Model-Oriented Evaluation Metrics

To assess the robustness, precision, and practical utility of the proposed UAV-based transport management system, we employ both model-oriented and problem-oriented evaluation metrics. Together, these metrics provide a comprehensive assessment of the performance, reliability, and applicability of the object detection and severity classification models.

5.1.1. Confusion Matrix

The confusion matrix shown in Figure 5 is a fundamental tool used to measure classification performance across multiple classes. It presents the number of true positives (TPs), true negatives (TNs), false positives (FPs), and false negatives (FNs) in a tabular format, allowing us to understand where the model is performing well and where misclassifications occur. The classifications are as follows:

- True positives (TPs): positive instances correctly predicted as positive.

- True negatives (TNs): negative instances correctly predicted as negative.

- False positives (FPs): negative instances incorrectly predicted as positive.

- False negatives (FNs): positive instances incorrectly predicted as negative.

Figure 5.

Confusion matrix.

In multi-class classification, confusion matrices reveal per-class prediction errors and enable fine-grained performance analysis. They are particularly useful for identifying confusions between visually similar road anomalies. For CNN- and Transformer-based models, confusion matrices are crucial for visualizing how well the model generalizes across categories such as potholes, illegal dumping, or animals.
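A multi-class confusion matrix can be built, and per-class TP, FP, FN, and TN counts read from it, as in the sketch below. Each class is treated in turn as the positive class. The severity labels and predictions are toy data for illustration only, not results from the paper.

```python
from typing import Dict, List, Sequence


def confusion_matrix(y_true: Sequence[str], y_pred: Sequence[str],
                     labels: List[str]) -> List[List[int]]:
    """Rows are true classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def one_vs_rest_counts(matrix: List[List[int]], labels: List[str]) -> Dict[str, Dict[str, int]]:
    """Derive TP, FP, FN and TN for each class by treating it as the positive class."""
    total = sum(sum(row) for row in matrix)
    counts = {}
    for i, label in enumerate(labels):
        tp = matrix[i][i]
        fn = sum(matrix[i]) - tp                 # true class i, predicted otherwise
        fp = sum(row[i] for row in matrix) - tp  # predicted i, true class otherwise
        counts[label] = {"TP": tp, "FP": fp, "FN": fn, "TN": total - tp - fp - fn}
    return counts


labels = ["low", "medium", "high"]
y_true = ["low", "low", "medium", "high", "high", "medium"]
y_pred = ["low", "medium", "medium", "high", "medium", "medium"]
cm = confusion_matrix(y_true, y_pred, labels)
print(cm)                                        # [[1, 1, 0], [0, 2, 0], [0, 1, 1]]
print(one_vs_rest_counts(cm, labels)["medium"])  # {'TP': 2, 'FP': 2, 'FN': 0, 'TN': 2}
```

The off-diagonal entries in the "medium" column show where the errors occur: both mistakes pull low and high cases towards medium. This is exactly the kind of pattern a confusion matrix makes visible.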

5.1.2. ROC Curve

The Receiver Operating Characteristic (ROC) curve plots the true positive rate (TPR) against the false positive rate (FPR) at various decision-threshold settings. It is especially useful when evaluating severity classification models built on Transformer and CNN architectures. The Area Under the Curve (AUC) quantifies the classifier's ability to distinguish between classes:

$$\mathrm{TPR} = \frac{TP}{TP + FN}, \qquad \mathrm{FPR} = \frac{FP}{FP + TN}, \qquad \mathrm{AUC} = \int_{0}^{1} \mathrm{TPR} \; d(\mathrm{FPR})$$

A higher AUC indicates better separation between classes, which is crucial for avoiding false alarms and missed hazards in transport applications.
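The sketch below shows how an ROC curve and its AUC can be computed from classifier scores. It sweeps the threshold from high to low and integrates with the trapezoidal rule. For three severity levels, it assumes a one-vs-rest scheme, with one curve per level. The paper does not say how its multi-class ROC curves were built, so this scheme is an assumption.

```python
from typing import Dict, List, Sequence, Tuple


def roc_points(y_true: Sequence[int], scores: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Sweep the decision threshold from high to low; y_true holds 1 (positive) or 0."""
    pos = sum(y_true)
    neg = len(y_true) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need at least one positive and one negative sample")
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])
    fpr, tpr = [0.0], [0.0]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Samples with tied scores cross the threshold together.
        while i < len(ranked) and ranked[i][0] == threshold:
            tp += ranked[i][1]
            fp += 1 - ranked[i][1]
            i += 1
        fpr.append(fp / neg)
        tpr.append(tp / pos)
    return fpr, tpr


def auc(fpr: List[float], tpr: List[float]) -> float:
    """Trapezoidal approximation of the integral of TPR over FPR."""
    return sum((fpr[k] - fpr[k - 1]) * (tpr[k] + tpr[k - 1]) / 2 for k in range(1, len(fpr)))


def one_vs_rest_auc(y_true: Sequence[str], probs: Sequence[Sequence[float]],
                    labels: List[str]) -> Dict[str, float]:
    """One ROC/AUC per severity level, treating that level as positive."""
    result = {}
    for j, label in enumerate(labels):
        binary = [1 if y == label else 0 for y in y_true]
        result[label] = auc(*roc_points(binary, [row[j] for row in probs]))
    return result


print(auc(*roc_points([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])))  # 0.75
```

An AUC of 0.5 corresponds to random ranking, and 1.0 to perfect separation of the positive severity level from the others.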

5.1.3. Accuracy

Accuracy measures the proportion of correct predictions among all cases:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

In our system, it provides a quick snapshot of how well the models detect road anomalies across diverse categories.

5.1.4. Precision

Precision evaluates the proportion of positive predictions that are actually correct:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

This metric is important for minimizing false positives, especially in high-risk road anomaly detection, where over-prediction may lead to unnecessary interventions.

5.1.5. Recall

Recall (or sensitivity) measures the proportion of actual positives that are correctly identified:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

High recall ensures that the model detects nearly all relevant road hazards; in transport systems, this helps prevent under-reporting of critical issues.

5.1.6. F1 Score

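The F1 Score is the harmonic mean of precision and recall and is especially informative when classes are imbalanced:

$$F1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

It offers a single performance score that reflects both model robustness and reliability, which is critical for real-world deployments.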
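The following sketch computes accuracy, precision, recall, and F1 from a class's one-vs-rest TP, FP, FN, and TN counts. It also shows a macro average, which weights every severity level equally regardless of how many samples it has. The counts are toy values. Returning 0.0 on an empty denominator is a convention chosen here; libraries differ on how they handle that case.

```python
from typing import Dict


def safe_div(num: float, den: float) -> float:
    """Return 0.0 instead of raising when a class has no predictions or no instances."""
    return num / den if den else 0.0


def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    return {
        "accuracy": safe_div(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "f1": safe_div(2 * precision * recall, precision + recall),  # harmonic mean
    }


def macro_average(per_class: Dict[str, Dict[str, float]], metric: str) -> float:
    """Unweighted mean over classes, so rare severity levels count as much as common ones."""
    return sum(m[metric] for m in per_class.values()) / len(per_class)


m = classification_metrics(tp=8, fp=2, fn=4, tn=86)
print(m)
```

For these counts, accuracy is 0.94, but recall is only about 0.67 and F1 about 0.73. This illustrates why accuracy alone can look optimistic when negatives dominate.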

5.1.7. Mean Average Precision (mAP)

In object detection tasks such as road obstacle detection, the mean Average Precision (mAP) is a key metric. The Average Precision (AP) of a class summarizes its precision–recall curve across recall levels, and mAP averages AP over all N classes:

$$\mathrm{mAP} = \frac{1}{N} \sum_{i=1}^{N} AP_i$$

where $AP_i$ is the Average Precision for class $i$. A high mAP score indicates that the model performs consistently across all obstacle categories.
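The sketch below computes AP with all-point interpolation (the area under the precision envelope) and averages it into mAP. It assumes that each detection has already been matched to ground truth, for example at IoU ≥ 0.5. The paper does not state whether it reports mAP at a single IoU threshold or over a range. Library evaluators use slightly different interpolation schemes, so their values may differ marginally from this sketch. The detections are toy data.

```python
from typing import Dict, List, Tuple

# Each detection: (confidence, is_true_positive). Matching to ground truth
# (e.g. at IoU >= 0.5) is assumed to have been done beforehand.
Detections = List[Tuple[float, bool]]


def average_precision(detections: Detections, num_ground_truth: int) -> float:
    """All-point interpolated AP: area under the precision envelope over recall."""
    if num_ground_truth == 0:
        return 0.0
    ranked = sorted(detections, key=lambda d: -d[0])
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in ranked:
        tp += is_tp
        fp += not is_tp
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Envelope: precision at recall r becomes the best precision at any recall >= r.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, previous_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - previous_recall) * p
        previous_recall = r
    return ap


def mean_average_precision(per_class: Dict[str, Tuple[Detections, int]]) -> float:
    """mAP = (1/N) * sum of AP_i over the N classes."""
    aps = [average_precision(dets, n_gt) for dets, n_gt in per_class.values()]
    return sum(aps) / len(aps)


per_class = {
    "pothole": ([(0.9, True), (0.8, False), (0.7, True)], 2),
    "accident": ([(0.95, True)], 1),
}
print(mean_average_precision(per_class))
```

Here, AP is 5/6 for the toy pothole class and 1.0 for the accident class, so mAP = 11/12 ≈ 0.917. A single weak class pulls the mean down, in the same way that the lower illegal dumping score affects the overall Stage 1 figure.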

5.1.8. Loss

The loss function quantifies prediction error during model optimization. A decreasing training loss indicates that optimization is converging, while the validation loss tracks generalization; a widening gap between the two signals overfitting. Monitoring both curves helps tune model parameters and decide when to stop training.
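The paper does not name its loss functions; YOLOv8 detection training, for instance, combines box-regression, classification, and distribution focal loss terms. For the three-class severity classifiers, the sketch assumes a softmax cross-entropy loss. It pairs this with a simple early-stopping rule on validation loss. The patience value is hypothetical.

```python
import math
from typing import List, Sequence


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Softmax cross-entropy for one sample, computed stably via log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def early_stopping_epoch(val_losses: List[float], patience: int = 3) -> int:
    """Return the epoch with the best validation loss, stopping after `patience`
    consecutive epochs without improvement."""
    best, best_epoch, waited = math.inf, -1, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch


# Uniform logits over three severity classes give a loss of ln(3).
print(cross_entropy([0.0, 0.0, 0.0], target=1))
print(early_stopping_epoch([1.0, 0.8, 0.7, 0.72, 0.75, 0.9, 0.6]))  # 2
```

In the example, validation loss stops improving after epoch 2, so training halts three epochs later. The late dip at epoch 6 is never reached. This shows that early stopping trades a possible later gain for protection against overfitting.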

5.2. Stage 1–Obstacle Detection

In Stage 1, our improved YOLOv8 model demonstrated strong performance in detecting six road hazard categories: animal obstructions, accidents, cracks, construction sites, illegal dumping, and potholes. It achieved an overall mAP of 0.70. Accident detection had the highest mAP (0.992), followed by animal obstructions (0.901), with the other categories ranging between 0.362 and 0.780 (Table 4). In terms of runtime, preliminary experiments on consumer-grade hardware showed inference times between 0.1 s and 0.4 s per image (roughly 2.5 to 10 images per second). This suggests that near-real-time UAV deployment is feasible. The Stage 1 model was evaluated using F1–confidence analysis, a confusion matrix, and loss trends (Figure 6, Figure 7 and Figure 8, respectively), together with the per-category mAP scores in Table 4. As the first stage of our two-stage architecture, this detection model provides a reliable foundation for severity classification in Stage 2.
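An F1–confidence analysis sweeps the detector's confidence threshold and records F1 at each value. The threshold with the highest F1 is a natural operating point. The sketch uses toy detections, not the paper's data, and assumes that each detection has already been matched to ground truth.

```python
from typing import List, Sequence, Tuple


def f1_confidence_curve(detections: Sequence[Tuple[float, bool]], num_ground_truth: int,
                        thresholds: Sequence[float]) -> List[Tuple[float, float]]:
    """F1 at each confidence threshold; detections are (confidence, is_true_positive)."""
    curve = []
    for t in thresholds:
        kept = [is_tp for conf, is_tp in detections if conf >= t]
        tp = sum(kept)
        fp = len(kept) - tp
        fn = num_ground_truth - tp        # ground-truth objects left undetected
        denom = 2 * tp + fp + fn          # F1 = 2TP / (2TP + FP + FN)
        curve.append((t, 2 * tp / denom if denom else 0.0))
    return curve


detections = [(0.9, True), (0.8, True), (0.6, False), (0.4, True), (0.3, False)]
curve = f1_confidence_curve(detections, num_ground_truth=4, thresholds=[0.2, 0.5, 0.85])
best_threshold, best_f1 = max(curve, key=lambda point: point[1])
print(best_threshold, round(best_f1, 3))  # 0.2 0.667
```

Raising the threshold removes false positives but also discards true detections. For this toy data, the loss of recall outweighs the gain in precision, so the lowest threshold gives the best F1.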

Figure 6.

F1–confidence analysis of Stage 1 object detection model.

Figure 7.

Confusion matrix of Stage 1 object detection model.

Figure 8.

Loss trends of Stage 1 object detection model.

Table 4.

mAP scores of Stage 1 obstacle detection model.

Notably, illegal dumping detection was weaker due to dataset limitations such as class imbalance, inconsistent image perspectives, and visual ambiguity from cluttered objects. Future work will focus on improving dataset quality through stricter curation, scale-aware augmentation, and synthetic UAV imagery. Active learning strategies will also be explored to refine the model by targeting ambiguous or misclassified cases, with preliminary results showing promising improvements.
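One common way to target "ambiguous" cases in active learning is margin-based uncertainty sampling. Images are ranked by the gap between the two most probable classes, and the smallest gaps are sent for labeling first. The paper does not specify which active learning strategy will be used. This technique, the file names, and the probabilities below are illustrative assumptions.

```python
from typing import Dict, List, Sequence


def margin(probs: Sequence[float]) -> float:
    """Gap between the two most probable classes; small gaps mean ambiguous images."""
    top_two = sorted(probs, reverse=True)[:2]
    return top_two[0] - top_two[1]


def select_for_labeling(pool: Dict[str, Sequence[float]], budget: int) -> List[str]:
    """Margin-based uncertainty sampling: pick the `budget` least certain images."""
    ranked = sorted(pool, key=lambda image_id: (margin(pool[image_id]), image_id))
    return ranked[:budget]


pool = {
    "frame_001.jpg": [0.90, 0.05, 0.05],
    "frame_002.jpg": [0.45, 0.44, 0.11],
    "frame_003.jpg": [0.60, 0.30, 0.10],
}
print(select_for_labeling(pool, budget=2))  # ['frame_002.jpg', 'frame_003.jpg']
```

With this approach, labeling effort is concentrated on images where the model is nearly split between two classes. Cluttered illegal-dumping scenes are the kind of images likely to fall into this group.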

5.3. Stage 2—Category-Specific Severity Classification

In Stage 2, category-specific severity classification models were developed, each trained exclusively on its respective dataset to improve classification accuracy. These models utilized enhanced versions of MB1-SSD and Vision Transformer, evaluated using accuracy, mAP scores, and ROC curves. Each model classified severity into three levels, low, medium, and high, based on factors such as size, depth, quantity, and impact.

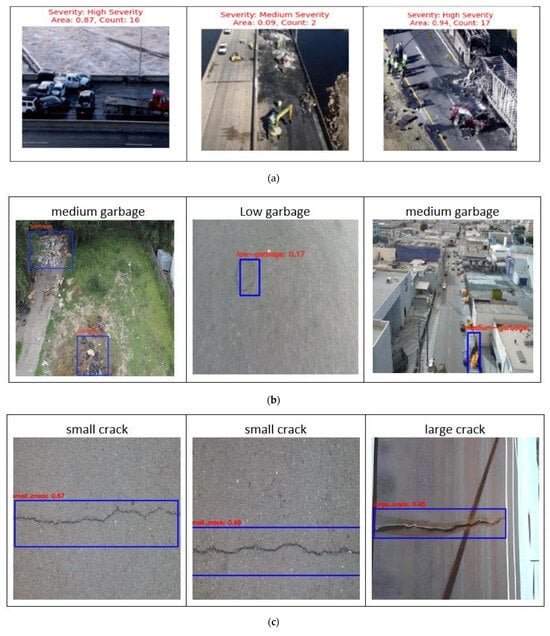

5.3.1. Accident Classification Model

For accident severity classification, the model performed with high reliability, achieving 90% accuracy and 95% precision. It effectively classified accident severity into low, medium, and high categories based on impact area and damage extent. Table 5 provides the evaluation metrics, while Figure 9a displays the output results of the Accident Classification Model.

Table 5.

Accuracy and precision of each model in Stage 2.

Figure 9.

(a) Accident Classification Model results. (b) Illegal Dumping Classification Model results. (c) Road Crack Classification Model results. (d) Road Construction Site Classification Model results. (e) Road Pothole Classification Model results. (f) Animal Obstruction Classification Model results.

5.3.2. Illegal Dumping Classification Model

For illegal dumping severity classification, the model achieved 85% accuracy and 81% precision. It effectively categorized garbage severity based on quantity and distribution, distinguishing between low, medium, and high levels. Figure 9b showcases the output results of the Illegal Dumping Classification Model.

5.3.3. Road Crack Classification Model

For road crack severity classification, the model demonstrated an accuracy of 86% and a precision of 88%. It effectively categorized cracks as small, medium, or large, considering both size and depth. Figure 9c illustrates the output results of the Road Crack Classification Model.

5.3.4. Road Construction Site Classification Model

For road construction site severity classification, the model achieved 81% accuracy and 80% precision, accurately identifying severity levels based on the scale of construction. Figure 9d presents the output results of the Road Construction Site Classification Model.

5.3.5. Road Pothole Classification Model

For road pothole severity classification, the model performed well with an accuracy of 87% and a precision of 85%. It effectively identified small, medium, and large potholes, considering both size and depth. Figure 9e highlights the output results of the Road Pothole Classification Model.

5.3.6. Animal Obstruction Classification Model

For animal obstruction severity classification, the model achieved 77% accuracy and 78% precision, effectively distinguishing between different levels of severity based on the number of animals present in an image. Figure 9f showcases the output results of the Animal Obstruction Classification Model.

Across all categories, the classification models achieved accuracies from 77% to 90% and precisions from 78% to 95%, as shown in Table 5. The Accident Classification Model achieved the highest accuracy (90%) and precision (95%), indicating robust severity identification. The Road Crack and Pothole Classification Models also performed well, with accuracies of 86% and 87%, respectively, distinguishing severity levels based on size and depth. The Animal Obstruction (77%) and Road Construction Site (81%) Classification Models had the lowest accuracies, although they still provided usable severity assessments. Overall, the models categorized severity levels with sufficient accuracy to support transport management applications.
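The summary figures can be checked directly against the per-model results reported in Sections 5.3.1 to 5.3.6. The means computed below are unweighted, because per-model test-set sizes are not given here. They are a consistency check rather than a new result.

```python
# Accuracy and precision (%) per Stage 2 model, as reported in Sections 5.3.1-5.3.6.
STAGE2_RESULTS = {
    "Accident": (90, 95),
    "Illegal Dumping": (85, 81),
    "Road Crack": (86, 88),
    "Road Construction Site": (81, 80),
    "Road Pothole": (87, 85),
    "Animal Obstruction": (77, 78),
}


def summarize(results: dict) -> dict:
    """Ranges, unweighted means, and the two lowest-accuracy models."""
    accs = [acc for acc, _ in results.values()]
    precs = [prec for _, prec in results.values()]
    by_accuracy = sorted(results, key=lambda name: results[name][0])
    return {
        "accuracy_range": (min(accs), max(accs)),
        "precision_range": (min(precs), max(precs)),
        "mean_accuracy": sum(accs) / len(accs),
        "mean_precision": sum(precs) / len(precs),
        "lowest_accuracy": by_accuracy[:2],
    }


print(summarize(STAGE2_RESULTS))
```

The unweighted mean accuracy is about 84.3% and the mean precision 84.5%. The two lowest-accuracy models are Animal Obstruction and Road Construction Site.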

For Stage 2, ROC curves were plotted to evaluate each category-specific model across the three severity levels: low, medium, and high. These curves show how well the models distinguish between severity classes, with higher AUC values indicating better classification performance. Figure 10 presents the ROC curves for each category.

Figure 10.

ROC curves of Stage 2 models: (a) ROC curve of Accident Classification Model; (b) ROC curve of Illegal Dumping Classification Model; (c) ROC curve of Road Crack Classification Model; (d) ROC curve of Road Pothole Classification Model; (e) ROC curve of Road Construction Site Classification Model; and (f) ROC curve of Animal Obstruction Classification Model.

5.4. City Inspection Analysis

We developed an advanced system where the outcomes from machine learning models are systematically aggregated and presented on category-specific dashboards. These dashboards are designed to offer intuitive insights, enabling efficient analysis and decision-making for city inspection processes.

5.4.1. Overview Dashboard

The overview dashboard provides a detailed, real-time analysis of various detected obstacles. As illustrated in Figure 11, this comprehensive dashboard displays essential information, including the type of obstacle, its severity, and the recommended course of action. An interactive map is integrated for enhanced data visualization, where obstacles are classified into three distinct zones:

- Red Zone: This indicates high severity.

- Orange Zone: This indicates medium severity.

- Green Zone: This indicates low severity.

Figure 11.

Overview dashboard of our system.

These zones are clearly marked on the map, allowing users to easily identify and assess obstacle details within specific geographic areas. This visual approach not only enhances situational awareness but also supports strategic planning by enabling a swift response to high-severity issues.

5.4.2. Category-Specific Dashboards

The category-specific dashboards showcased in Figure 12 are tailored to provide detailed information for each obstacle category. Each dashboard is dedicated to a particular type of obstacle and includes a dynamic map to facilitate easy identification across various regions. The visualization helps in pinpointing the exact location and severity level of obstacles, thus allowing the disaster management team to prioritize actions effectively.

Figure 12.

Category-specific (pothole) dashboard of our system.

These dashboards also provide granular details about the obstacles, aiding in efficient resource allocation and strategic planning. For instance, by analyzing severity levels at the street level, authorities can prioritize interventions and allocate resources more accurately. The interactive nature of these dashboards ensures that users can drill down into specific areas for an in-depth understanding, enhancing overall operational efficiency.

This structured approach significantly contributes to proactive disaster management and effective city maintenance strategies.

6. Conclusions

In this research, we developed a UAV-based transport management system for road anomaly detection and severity classification. Its two-stage framework performs obstacle detection followed by category-specific severity classification. The framework achieved an overall Stage 1 mAP of 0.70 and Stage 2 severity classification accuracies of 77% to 90%, with per-image inference times of 0.1 s to 0.4 s in preliminary runtime tests. These results make it a valuable tool for smart city infrastructure, road maintenance planning, and traffic management. Despite challenges such as limited data diversity and computational demands, our findings highlight the system's potential to enable proactive decision-making, real-time monitoring, and improved urban mobility.

Future developments will focus on integrating real-time data streams from UAVs and cameras, allowing immediate processing and automated report generation for faster, data-driven decisions. Enhancing the model to detect multiple issues within a single scene, such as identifying cracks alongside construction debris or illegal dumping, will improve its versatility for complex real-world scenarios. Further, optimizing the system for scalability across diverse environments and geographical regions will ensure consistent performance. Additionally, incorporating predictive analytics will allow early identification of potential problem zones, improving maintenance planning and resource allocation. Alongside these technical priorities, future research will address policy and ethical considerations related to UAV surveillance in public spaces, ensuring that intelligent transport solutions are both effective and socially responsible.

Author Contributions

Conceptualization, J.G.; methodology, J.G., S.B. and Y.S.; data collection, S.B., Y.S., M.L. and D.V.; processing and model training, S.B. and Y.S.; system design and dashboard development, S.B.; writing and original draft preparation, S.B. and J.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

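CAGR: Compound Annual Growth Rate; UAV: Unmanned Aerial Vehicle; CNN: Convolutional Neural Network; R-CNN: Region-Based Convolutional Neural Network; SSD: Single Shot MultiBox Detector; MB1-SSD: MobileNetV1-based SSD; FPN: Feature Pyramid Network; NMS: Non-Maximum Suppression; ViT: Vision Transformer; MLP: Multilayer Perceptron; YOLO: You Only Look Once; AI: Artificial Intelligence; ResNet: Residual Network; LSTM: Long Short-Term Memory; VGG: Visual Geometry Group; ROC: Receiver Operating Characteristic; AUC: Area Under the Curve; TPR: True Positive Rate; FPR: False Positive Rate; mAP: Mean Average Precision.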

References

- Aburasain, R.Y.; Edirisinghe, E.A.; Albatay, A. Drone-Based Cattle Detection Using Deep Neural Networks. In Intelligent Systems and Applications; Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2021; Volume 1250, pp. 598–611.

- Rančić, K.; Blagojević, B.; Bezdan, A. Animal Detection and Counting from UAV Images Using Convolutional Neural Networks. Drones 2023, 7, 179.

- Hong, T.; Liang, H.; Yang, Q.; Fang, L.; Kadoch, M.; Cheriet, M. A Real-Time Tracking Algorithm for Multi-Target UAV Based on Deep Learning. Remote Sens. 2023, 15, 2.

- Sun, W.; Yan, D.; Huang, J.; Sun, C. Small-scale moving target detection in aerial image by deep inverse reinforcement learning. Soft Comput. 2020, 24, 5897–5908.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- He, Y.; Li, X.; Nie, H. A Moving Object Detection and Predictive Control Algorithm Based on Deep Learning. J. Phys. Conf. Ser. 2021, 2002, 012070.

- Han, L.; Tao, P.; Martin, R.R. Livestock detection in aerial images using a fully convolutional network. Comput. Vis. Media 2019, 5, 123–134.

- Verma, G.K.; Gupta, P. Wild Animal Detection Using Deep Convolutional Neural Network. In Proceedings of the 2nd International Conference on Computer Vision & Image Processing, Roorkee, India, 9–12 September 2017.

- Zhao, M.; Shi, P.; Xu, X.; Xu, X.; Liu, W.; Yang, H. Improving the Accuracy of an R-CNN-Based Crack Identification System Using Different Preprocessing Algorithms. Sensors 2022, 22, 7089.

- Elamin, A.; El-Rabbany, A. UAV-Based Pavement Crack Detection Using Deep Convolutional Neural Networks. In Proceedings of the FIG Congress 2022: Volunteering for the Future—Geospatial Excellence for a Better Living, Warsaw, Poland, 11–15 September 2022.

- Yan, H.; Zhang, J. UAV-PDD2023: A Benchmark Dataset for Pavement Distress Detection Based on UAV Images. Data Brief 2023, 235, 109692.

- Chen, X.; Liu, C. A Pavement Crack Detection and Evaluation Framework for a UAV Inspection System Based on Deep Learning. Appl. Sci. 2023, 14, 1157.

- Lun, Z.; Pan, Y.; Jiang, H. Skip-YOLO: Domestic Garbage Detection Using Deep Learning Method in Complex Multi-scenes. Int. J. Comput. Intell. Syst. 2023, 16, 139.

- Ping, P.; Xu, G.; Kumala, E.; Gao, J. Smart Street Litter Detection and Classification Based on Faster R-CNN and Edge Computing. Int. J. Softw. Eng. Knowl. Eng. 2020, 30, 537–553.

- Youme, O.; Bayet, T.; Dembele, J.M.; Cambier, C. Deep Learning and Remote Sensing: Detection of Dumping Waste Using UAV. Procedia Comput. Sci. 2021, 185, 361–369.

- DumpingMapper: Illegal Dumping Detection from High Spatial Resolution Satellite Imagery. Available online: https://www.researchgate.net/publication/360435700_Accurate_Detection_of_Illegal_Dumping_Sites_Using_High_Resolution_Aerial_Photography_and_Deep_Learning (accessed on 5 August 2025).

- Verma, V.; Gupta, D.; Gupta, S. A Deep Learning-Based Intelligent Garbage Detection System Using an Unmanned Aerial Vehicle. Symmetry 2023, 14, 960.

- Ulloa-Torrealba, Y.Z.; Schmitt, A.; Wurm, M.; Taubenböck, H. Litter on the Streets—Solid Waste Detection Using VHR Images. Eur. J. Remote Sens. 2023, 56, 2176006.

- Pathak, N.; Biswal, G.; Goushal, M.; Mistry, V.; Shah, P.; Li, F.; Gao, J. Smart City Community Watch Camera-Based Community Watch for Traffic and Illegal Dumping. Smart Cities 2024, 7, 2232–2257.

- Liu, J. Multi-target detection method based on YOLOv4 convolutional neural network. J. Phys. Conf. Ser. 2021, 1883, 012075.

- Wang, S.; Gao, J.; Li, W.; Lu, S. Building Smart City Drone for Graffiti Detection and Clean-up. In Proceedings of the IEEE International Conference on Smart City Innovation, Leicester, UK, 19–23 August 2019.

- Alam, H.; Valles, D.; Rabbany, A. Debris Object Detection Caused by Vehicle Accidents Using UAV and Deep Learning Techniques. In Proceedings of the IEEE Conference on Intelligent Systems, Vancouver, BC, Canada, 27–30 October 2020; pp. 3110–3115.

- Akinsemoyin, A.; Awolusi, I.; Chakraborty, D.; Al-Bayati, A.J.; Akanmu, A. Unmanned Aerial Systems and Deep Learning for Safety and Health Activity Monitoring on Construction Sites. Sensors 2023, 23, 6690.

- Moroni, D.; Pieri, G.; Leone, G.R.; Tampucci, M. Smart cities monitoring through wireless smart cameras. In Proceedings of the 2nd International Conference, New York, NY, USA, 7 January 2019.

- Awwad, Y.A.; Rana, O.; Perera, C. Anomaly Detection on the Edge Using Smart Cameras under Low-Light Conditions. Sensors 2024, 24, 772.

- Wang, Y.; Deng, Y.; Zheng, Y.; Chattopadhyay, P.; Wang, L. Vision Transformers for Image Classification: A Comparative Survey. Technologies 2025, 13, 32.

- Maurício, J.; Domingues, I.; Bernardino, J. Comparing Vision Transformers and Convolutional Neural Networks for Image Classification: A Literature Review. Appl. Sci. 2023, 13, 5521.

- Kim, H.; Ko, B.C. Rethinking Attention Mechanisms in Vision Transformers with Graph Structures. Sensors 2024, 24, 1111.

- Balivada, S.; Sha, Y.; Gao, J. Smart City AI Cloud Platform for Road Inspection and Analysis. In Proceedings of the 11th IEEE International Conference on Big Data Computing Service and Machine Learning Applications (BigDataService), Tucson, AZ, USA, 21–24 July 2025; Available online: https://www.researchgate.net/publication/392312110_SMART_CITY_AI_CLOUD_PLATFORM_for_ROAD_INSPECTION_AND_ANALYSIS (accessed on 1 June 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).