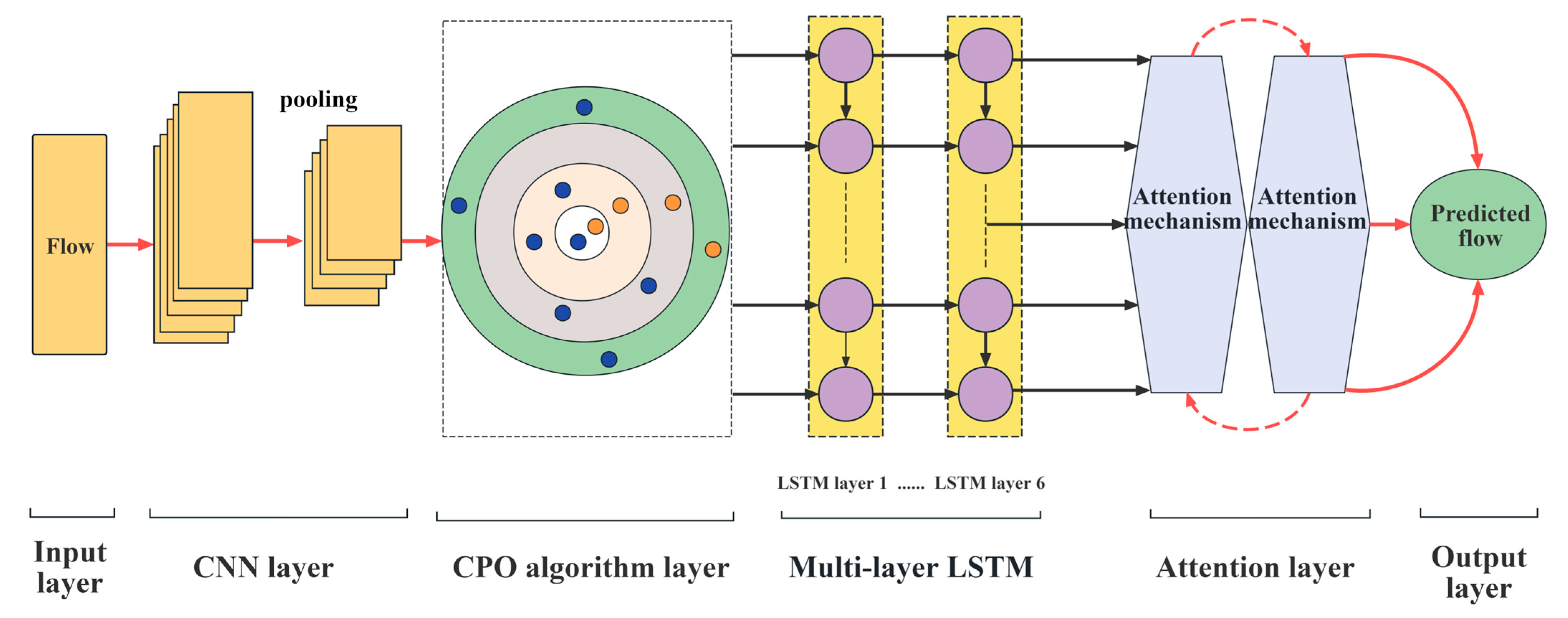

Traffic Flow Prediction via a Hybrid CPO-CNN-LSTM-Attention Architecture

Highlights

- This paper designs a traffic flow prediction model that integrates machine learning and deep learning to improve the efficiency of traffic flow prediction, help alleviate road congestion, and support the development of smart cities.

- The combined CPO-CNN-LSTM-Attention model achieved the best prediction performance among the models tested. Over the March, April, and May data, it reached an RMSE of 17.35–19.83 and an MAE of 13.98–14.04.

- The study provides an effective traffic flow prediction method for intelligent transportation systems that can improve road services and management.

- Combining state-of-the-art machine learning and deep learning frameworks provides a basis for future smart city programs.

Abstract

1. Introduction

2. Proposed Method and Modeling

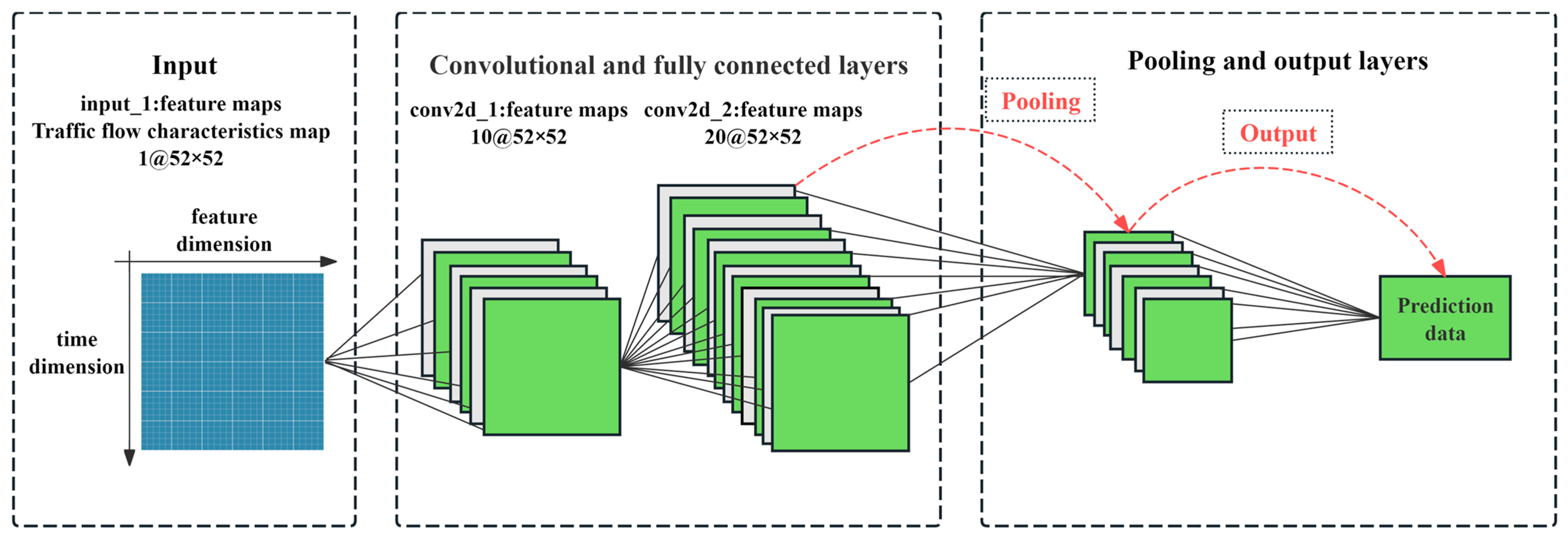

2.1. CNN Model Construction

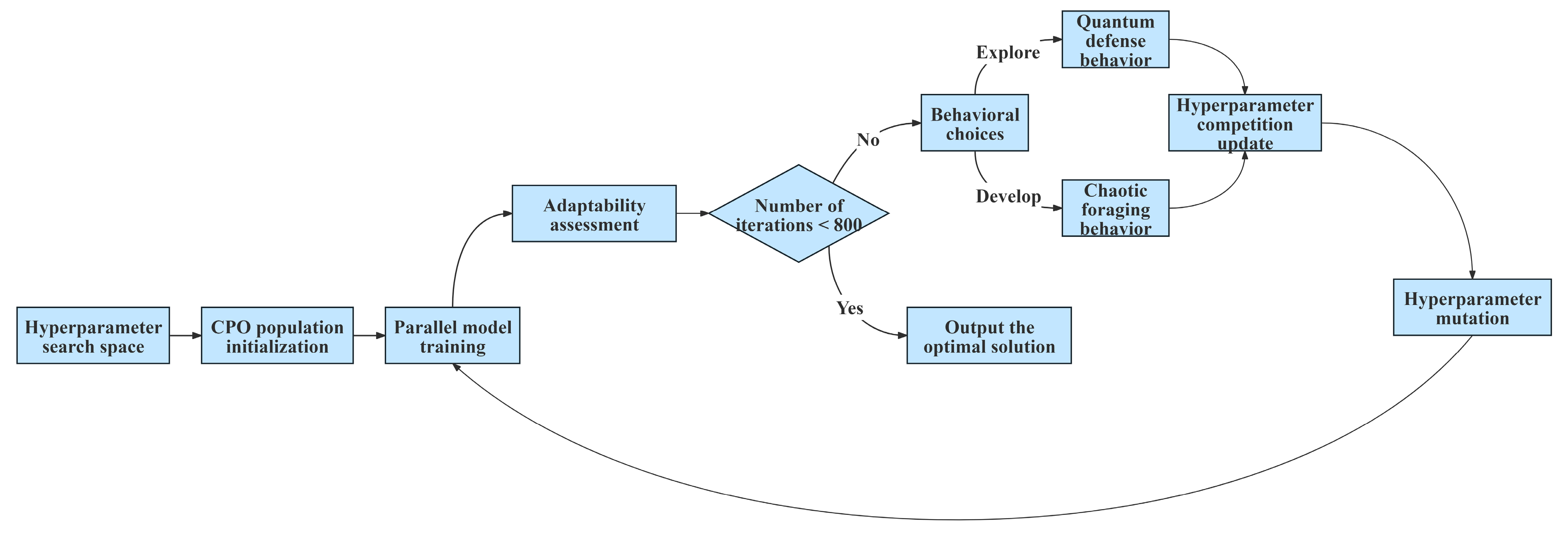

2.2. CPO Algorithm Modeling

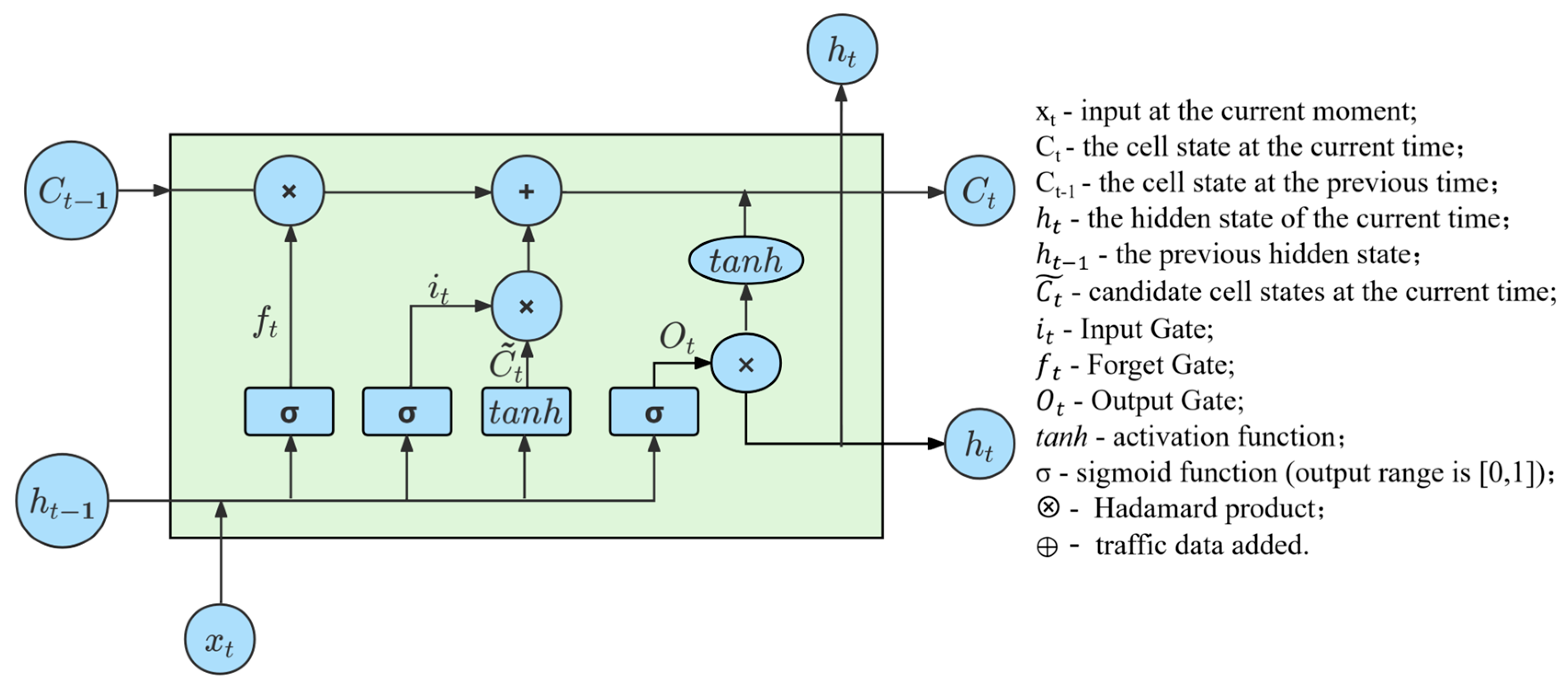

2.3. LSTM-Attention Model Construction

3. Results and Discussion

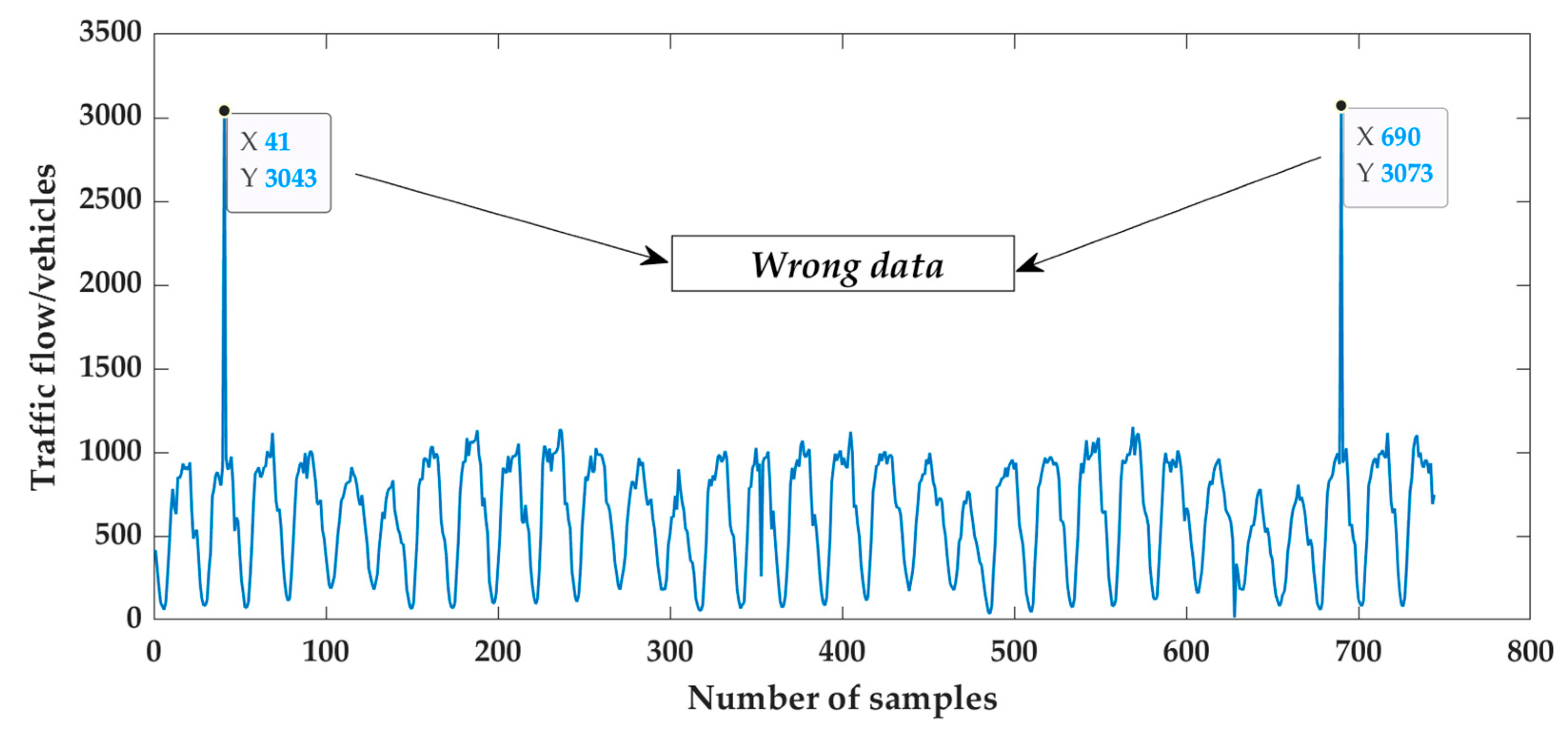

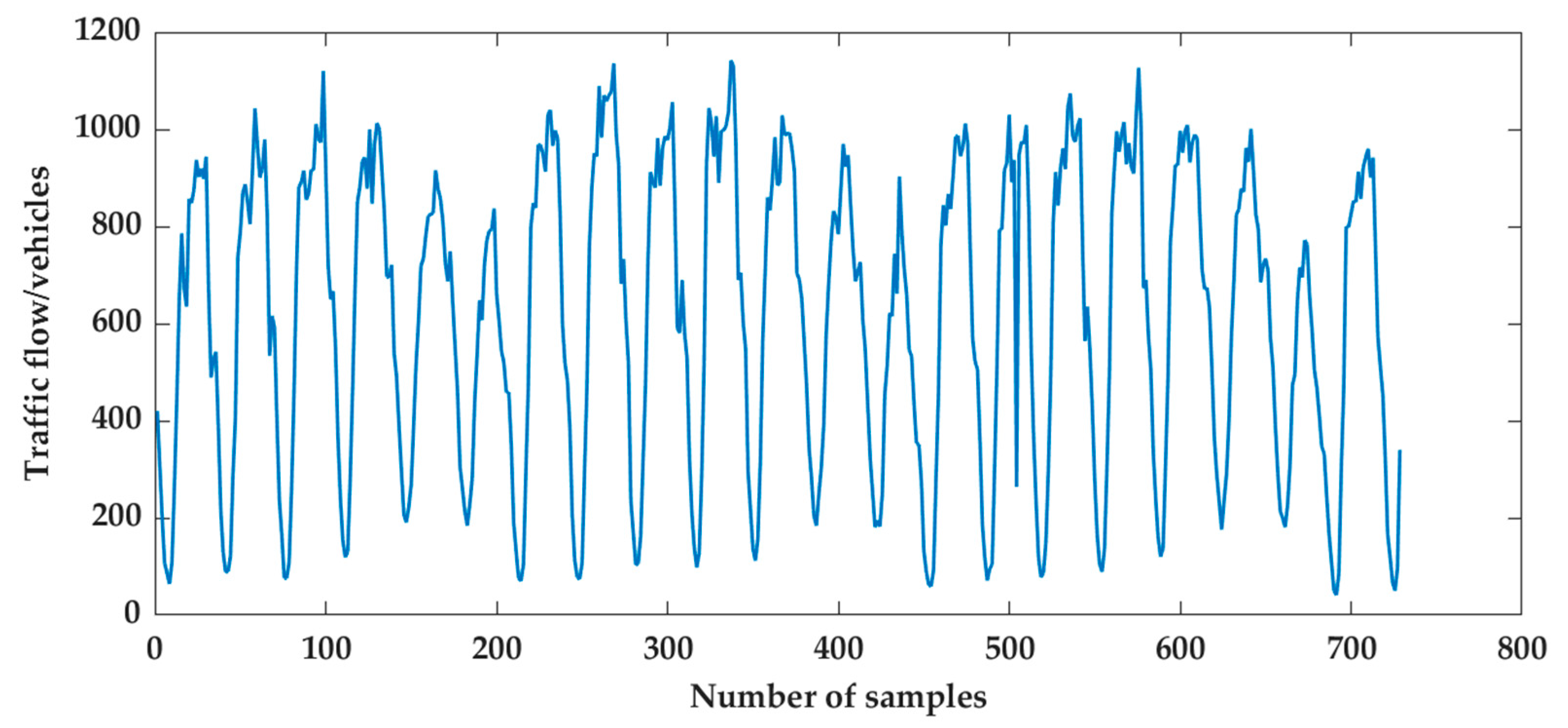

3.1. Data Processing

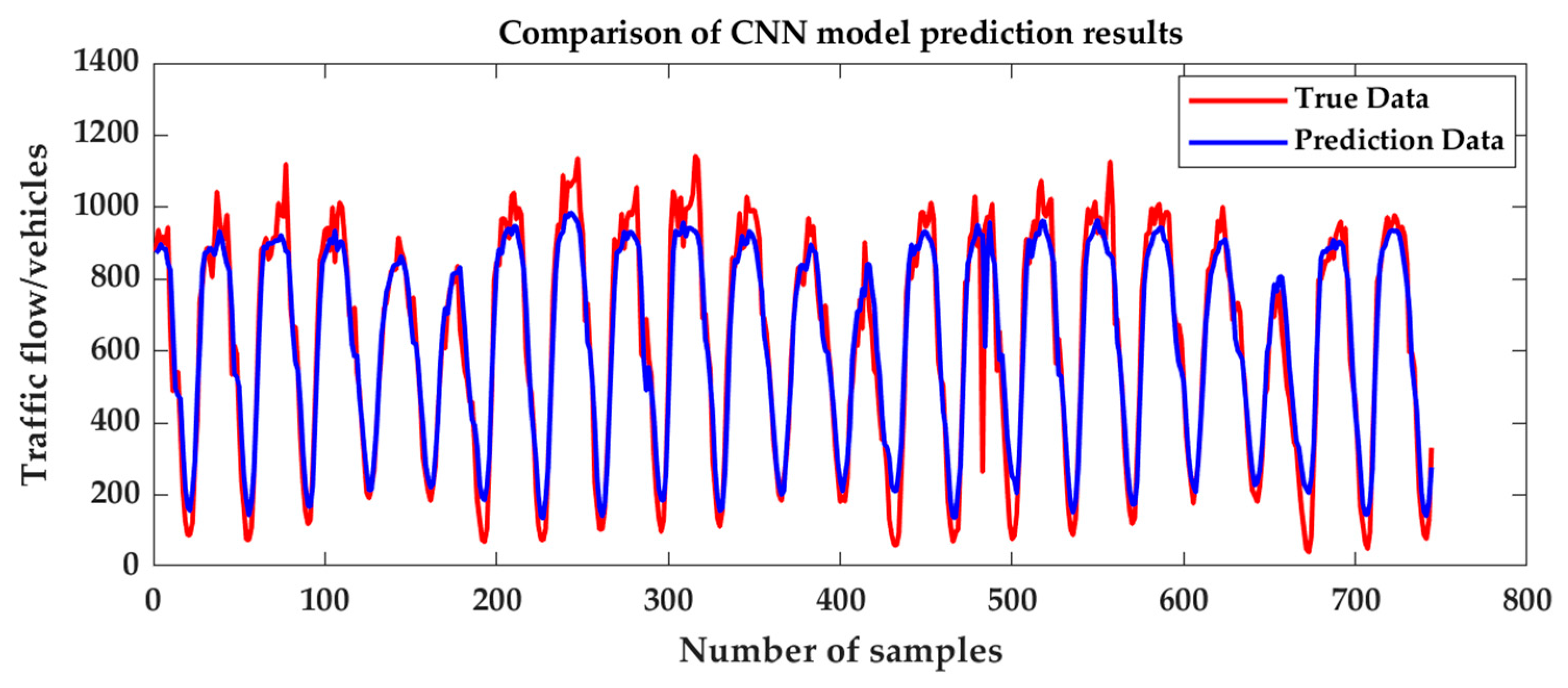

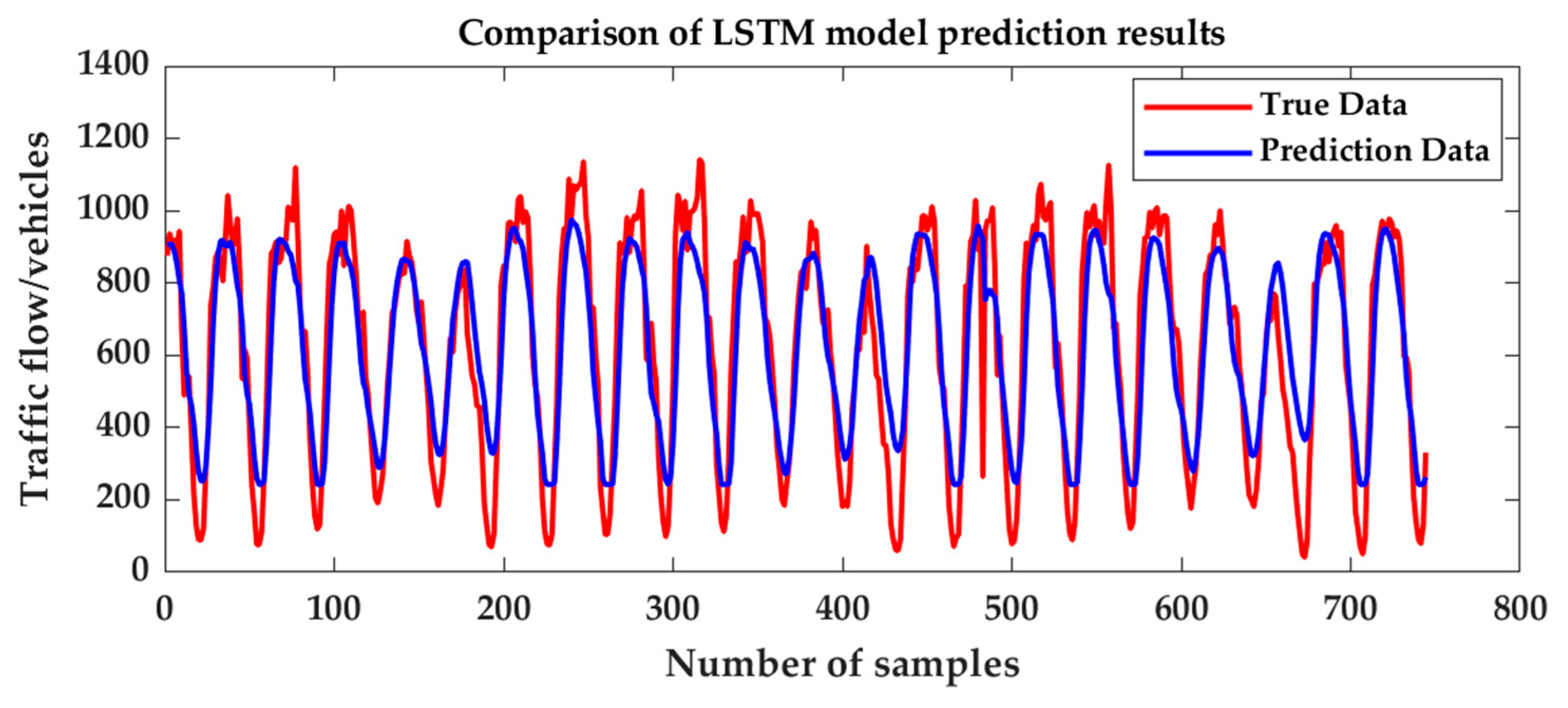

3.2. Single Model Prediction

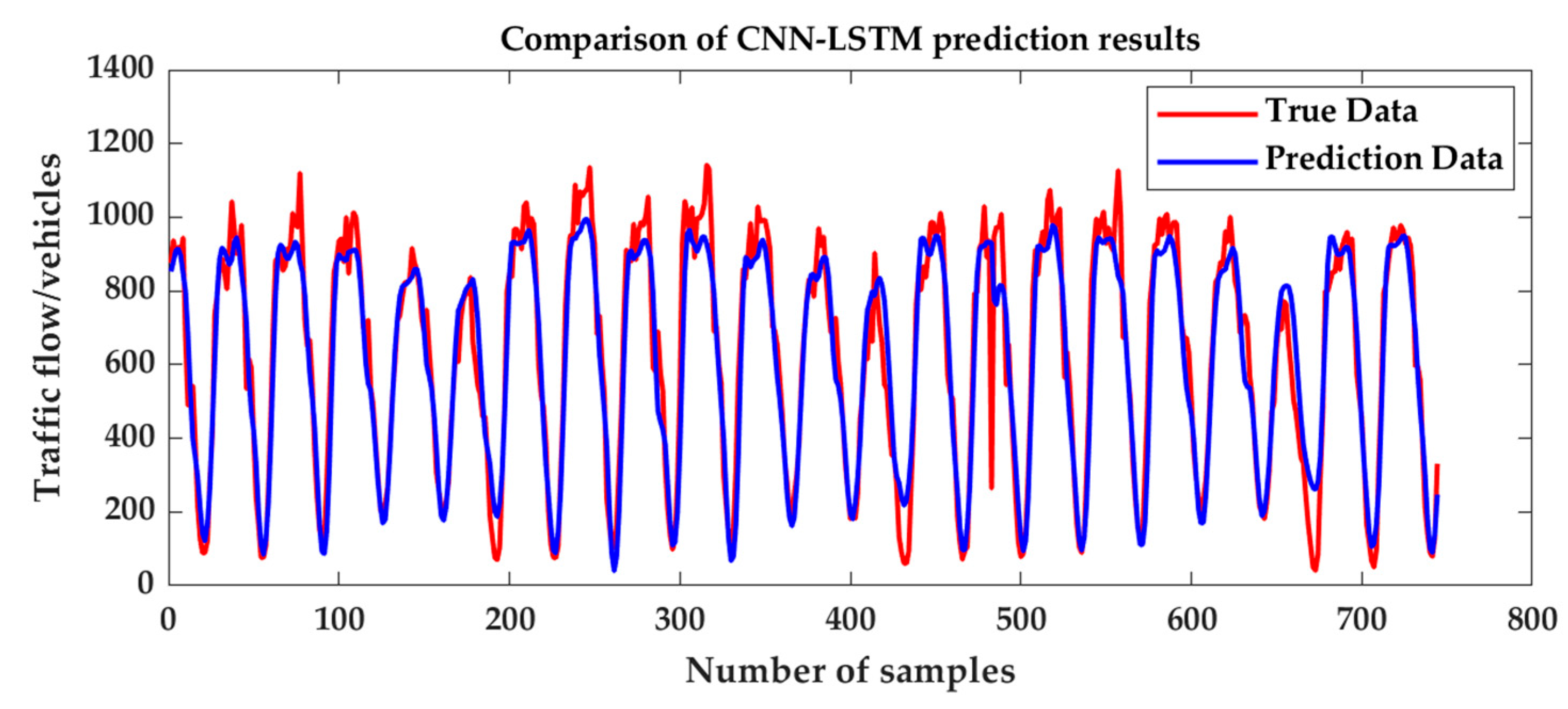

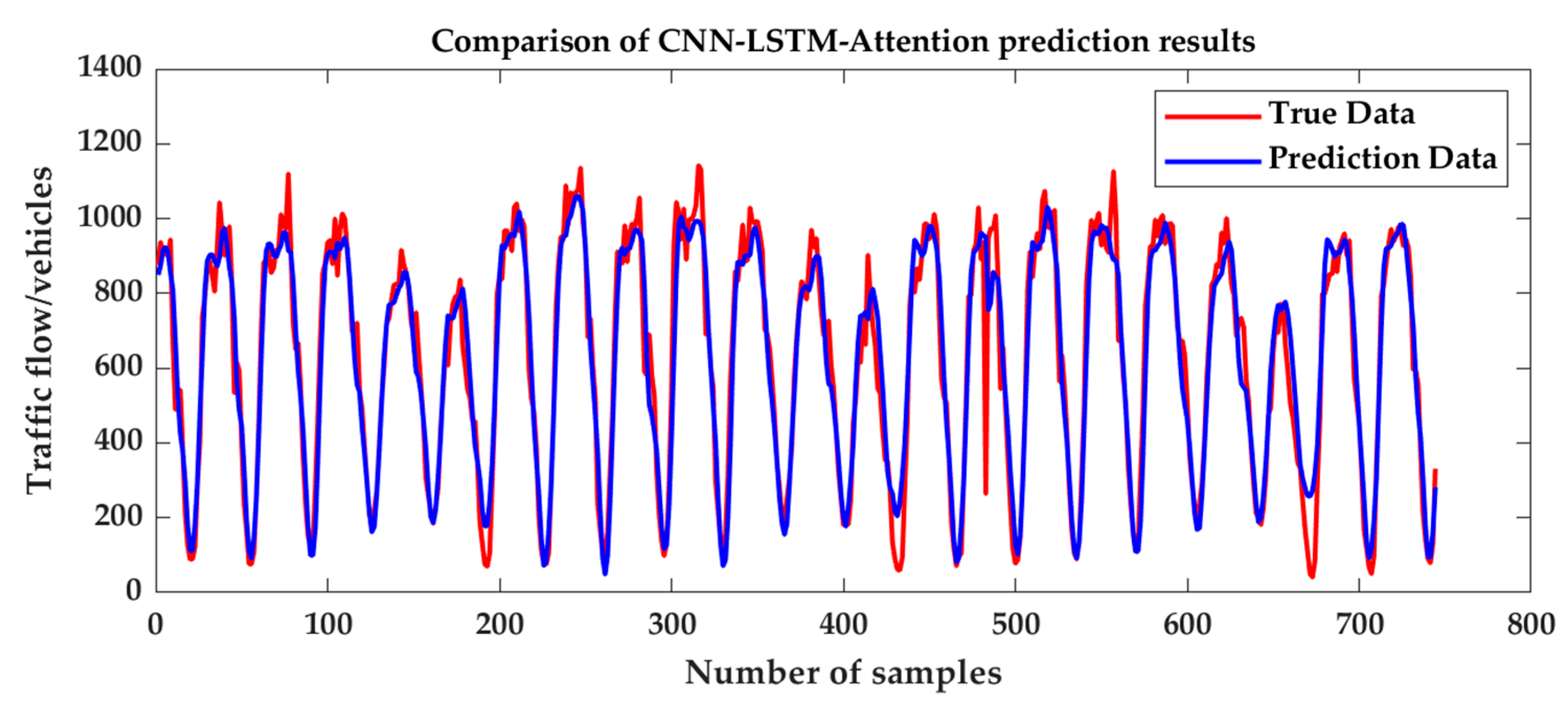

3.3. Hybrid Model Prediction

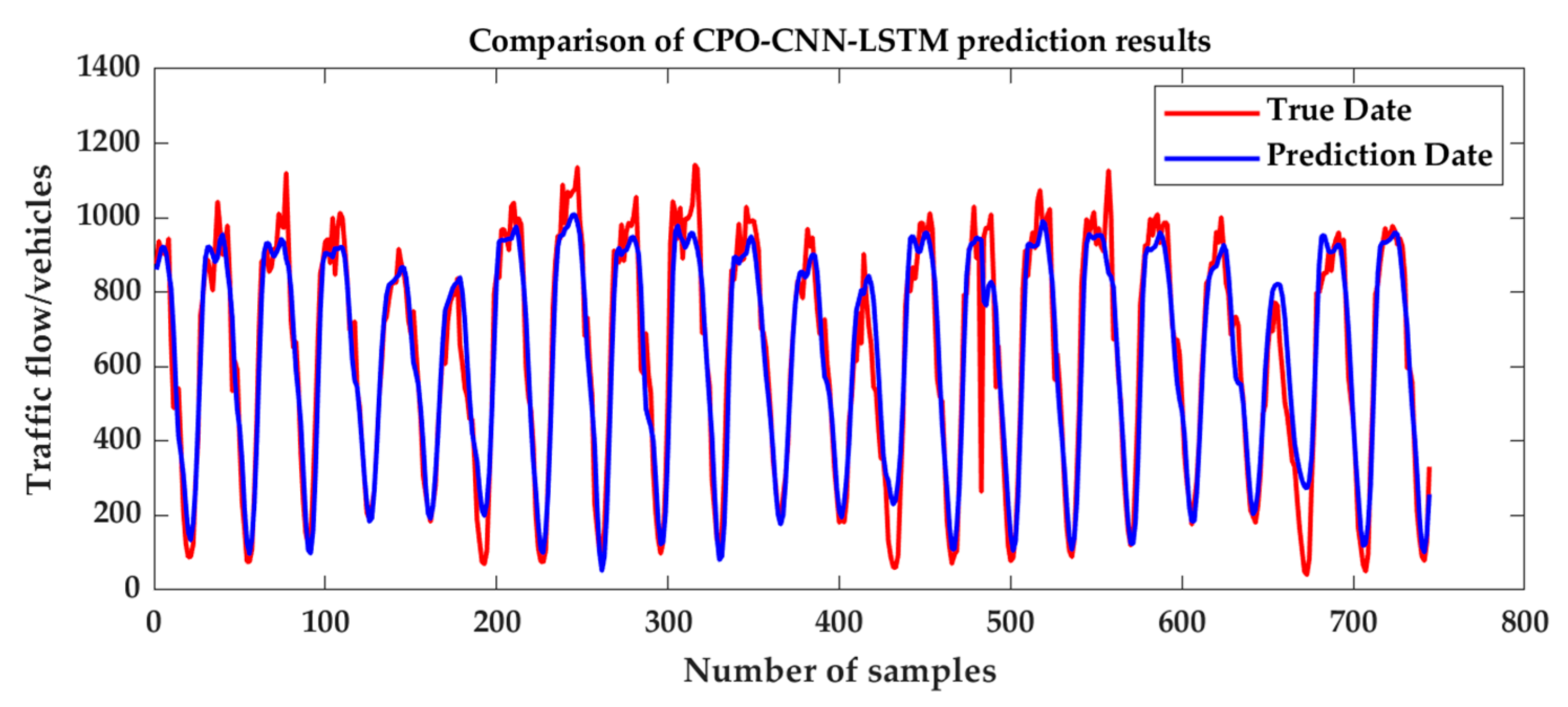

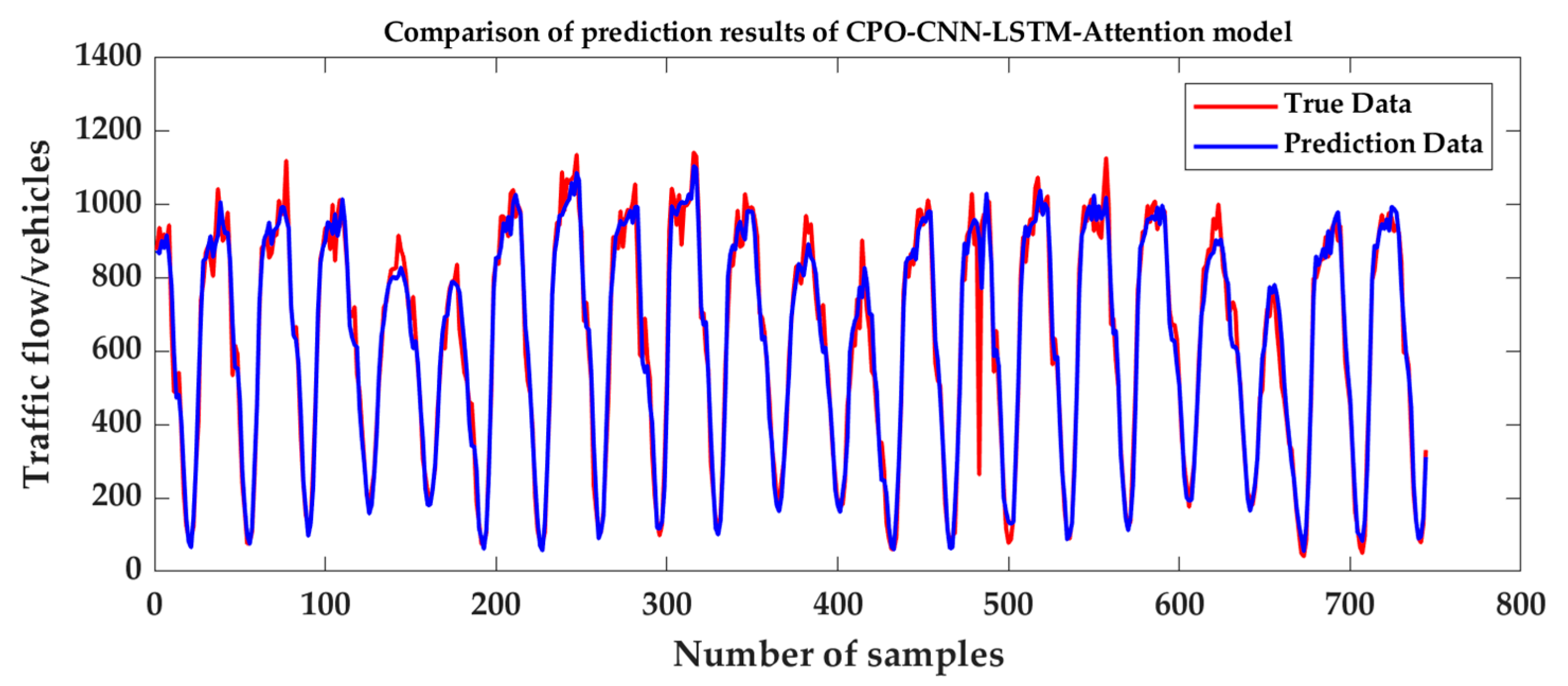

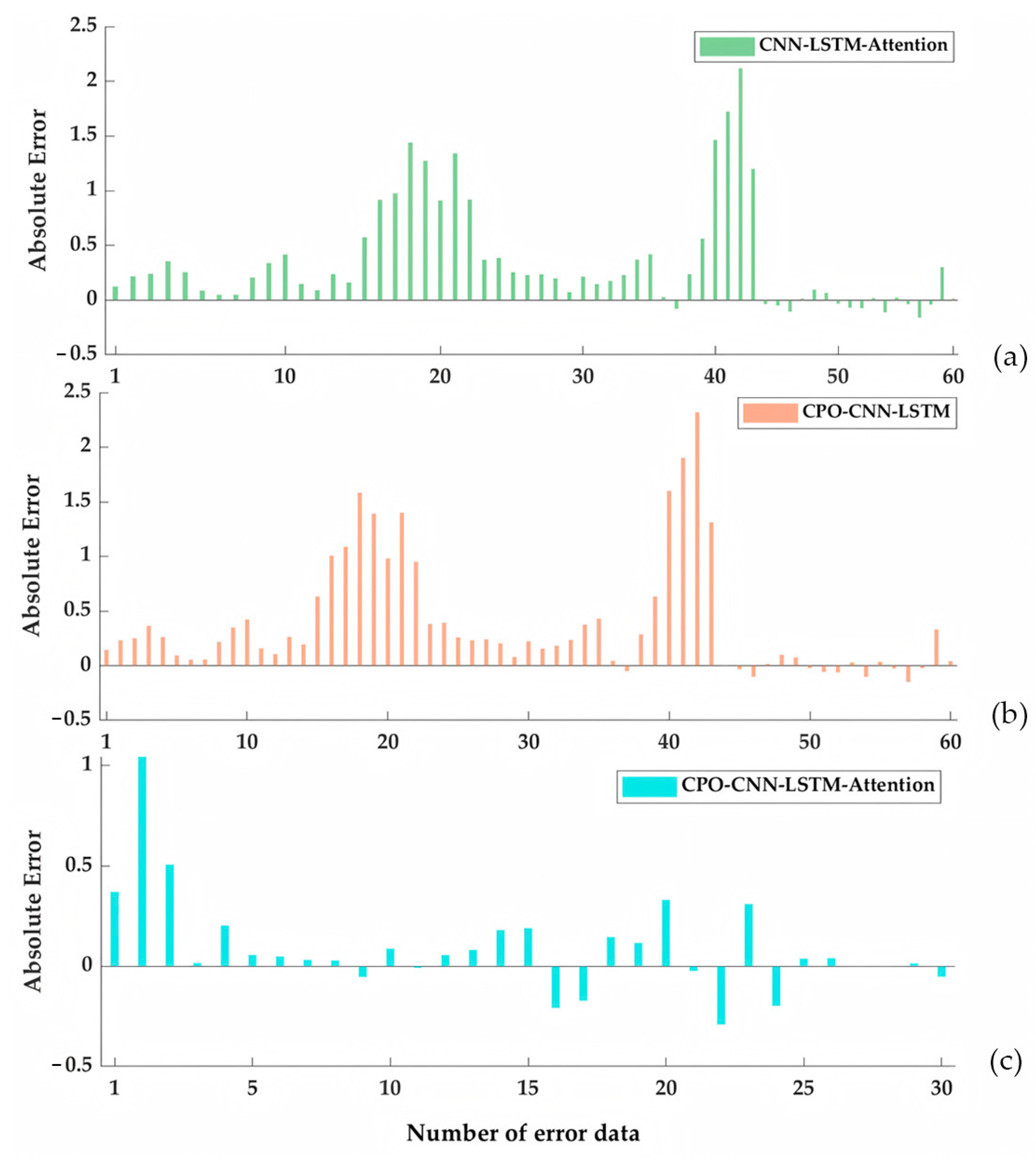

3.4. CPO-CNN-LSTM-Attention Model Prediction

3.5. Simulation Result Analysis

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ITS | Intelligent Transportation System |
| CPO | Crested Porcupine Optimizer |
| CNN | Convolutional Neural Network |
| LSTM | Long Short-Term Memory |
| RMSE | Root Mean Squared Error |
| MAE | Mean Absolute Error |
| SVM | Support Vector Machine |
| RF | Random Forest |
| GBDT | Gradient Boosting Decision Tree |
| RNN | Recurrent Neural Network |
| R² | Coefficient of Determination |
| MSE | Mean Squared Error |
| STGCN | Spatio-Temporal Graph Convolutional Networks |
| DCRNN | Diffusion Convolutional Recurrent Neural Network |


| Model | Prediction Time (s) |
|---|---|
| CNN | 5.42 |
| LSTM | 5.87 |
| CNN-LSTM | 6.43 |
| CNN-LSTM-Attention | 12.15 |
| CPO-CNN-LSTM | 15.33 |
| CPO-CNN-LSTM-Attention | 19.75 |

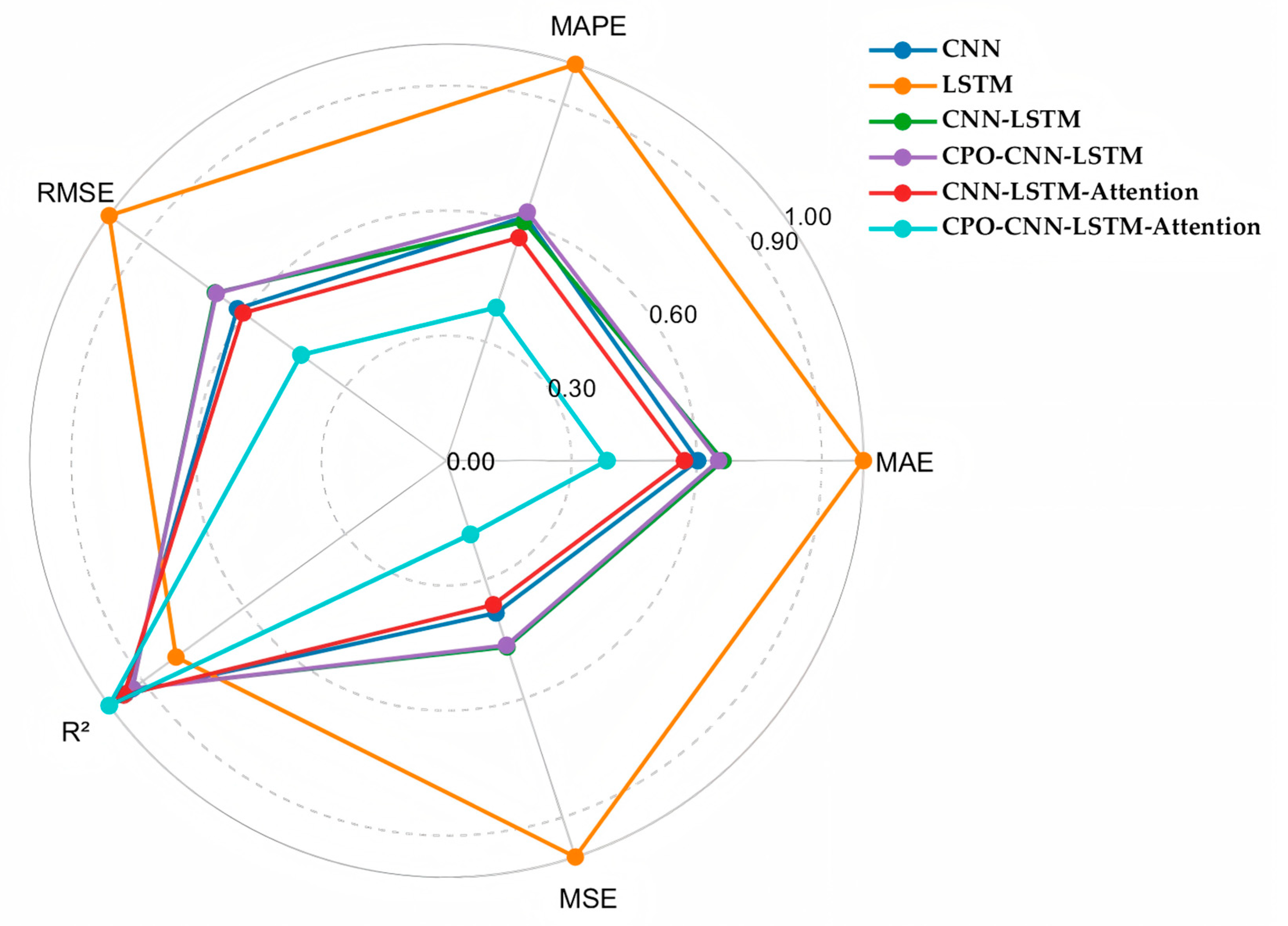

| Performance Indicator | Model | March | April | May |
|---|---|---|---|---|
| R² | CNN | 0.8635 | 0.8701 | 0.8744 |
|  | LSTM | 0.8415 | 0.8580 | 0.8500 |
|  | CNN-LSTM | 0.8682 | 0.8607 | 0.8741 |
|  | CNN-LSTM-Attention | 0.8951 | 0.8873 | 0.8972 |
|  | CPO-CNN-LSTM | 0.9011 | 0.8901 | 0.9109 |
|  | CPO-CNN-LSTM-Attention | 0.9207 | 0.9133 | 0.9290 |
| RMSE | CNN | 36.91 | 36.15 | 39.91 |
|  | LSTM | 47.39 | 42.60 | 45.16 |
|  | CNN-LSTM | 33.76 | 30.01 | 31.88 |
|  | CNN-LSTM-Attention | 27.50 | 27.90 | 27.33 |
|  | CPO-CNN-LSTM | 23.99 | 23.15 | 23.84 |
|  | CPO-CNN-LSTM-Attention | 19.83 | 17.35 | 19.81 |
| MAE | CNN | 26.98 | 25.33 | 29.14 |
|  | LSTM | 30.15 | 29.80 | 32.30 |
|  | CNN-LSTM | 23.22 | 23.10 | 24.27 |
|  | CNN-LSTM-Attention | 21.04 | 21.91 | 21.17 |
|  | CPO-CNN-LSTM | 19.52 | 18.27 | 18.87 |
|  | CPO-CNN-LSTM-Attention | 14.04 | 13.98 | 14.00 |
| MAPE | CNN | 26.33% | 25.18% | 26.00% |
|  | LSTM | 27.85% | 25.57% | 27.01% |
|  | CNN-LSTM | 18.09% | 17.94% | 18.11% |
|  | CNN-LSTM-Attention | 12.15% | 11.27% | 12.43% |
|  | CPO-CNN-LSTM | 10.99% | 9.07% | 9.94% |
|  | CPO-CNN-LSTM-Attention | 6.62% | 5.97% | 6.53% |
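The table above reports R², RMSE, MAE, and MAPE, but this version of the article does not reproduce the formulas behind them. The sketch below assumes the standard definitions: R² = 1 − SS_res/SS_tot, RMSE as the square root of MSE, and MAPE expressed in percent. It also assumes that points with a zero observed count are skipped when computing MAPE. The helper name `regression_metrics` is hypothetical, and this is not the authors' evaluation code.

```python
import math
from typing import Dict, Sequence


def regression_metrics(y_true: Sequence[float], y_pred: Sequence[float]) -> Dict[str, float]:
    """Standard regression metrics (assumed definitions, not the paper's code)."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]

    mse = sum(e * e for e in errors) / n          # mean squared error
    rmse = math.sqrt(mse)                         # root mean squared error
    mae = sum(abs(e) for e in errors) / n         # mean absolute error

    # MAPE is undefined when an observed value is zero; such points are skipped (assumption)
    pct = [abs(e / t) for e, t in zip(errors, y_true) if t != 0]
    mape = 100.0 * sum(pct) / len(pct) if pct else float("nan")

    # R² = 1 - SS_res / SS_tot
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    ss_res = sum(e * e for e in errors)
    r2 = 1.0 - ss_res / ss_tot if ss_tot > 0 else float("nan")

    return {"R2": r2, "MSE": mse, "RMSE": rmse, "MAE": mae, "MAPE": mape}


if __name__ == "__main__":
    # Toy traffic counts, not data from the paper
    print(regression_metrics([100, 120, 80, 60], [110, 115, 70, 60]))
```

Take the toy inputs in the `__main__` block as an example. The errors are −10, 5, 10, and 0, which give MSE = 56.25, RMSE = 7.5, MAE = 6.25, MAPE ≈ 6.67%, and R² = 0.8875. RMSE squares each error before averaging, so it penalizes large deviations more heavily than MAE does.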

| Model | R² | RMSE | MAE | MAPE |
|---|---|---|---|---|
| STGCN | 0.8704 | 24.92 | 17.90 | 12.74% |
| DCRNN | 0.8620 | 26.79 | 19.22 | 13.55% |
| CPO-CNN-LSTM-Attention | 0.8815 | 21.07 | 16.13 | 10.18% |
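As a worked check on the comparison with STGCN and DCRNN, the relative error reduction of the proposed model against each baseline can be computed as 100 × (baseline − proposed) / baseline. The sketch below copies only the error metrics from the table; R² is left out because higher is better for it. The function names `relative_reduction` and `reductions` are hypothetical.

```python
from typing import Dict

# Error metrics copied from the comparison table (lower is better; R² omitted)
TABLE: Dict[str, Dict[str, float]] = {
    "STGCN": {"RMSE": 24.92, "MAE": 17.90, "MAPE": 12.74},
    "DCRNN": {"RMSE": 26.79, "MAE": 19.22, "MAPE": 13.55},
    "CPO-CNN-LSTM-Attention": {"RMSE": 21.07, "MAE": 16.13, "MAPE": 10.18},
}


def relative_reduction(baseline: float, proposed: float) -> float:
    """Percent by which `proposed` lowers an error metric relative to `baseline`."""
    return 100.0 * (baseline - proposed) / baseline


def reductions(table: Dict[str, Dict[str, float]],
               proposed: str = "CPO-CNN-LSTM-Attention") -> Dict[str, Dict[str, float]]:
    """Relative reduction (in %, rounded to 2 decimals) of each metric for every baseline."""
    target = table[proposed]
    return {
        name: {metric: round(relative_reduction(value, target[metric]), 2)
               for metric, value in row.items()}
        for name, row in table.items()
        if name != proposed
    }


if __name__ == "__main__":
    for baseline, row in reductions(TABLE).items():
        print(baseline, row)
```

By this arithmetic, the proposed model's RMSE is about 15.45% lower than STGCN's and about 21.35% lower than DCRNN's. Its MAE is about 9.89% and 16.08% lower, respectively.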

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Topilin, I.; Jiang, J.; Feofilova, A.; Beskopylny, N. Traffic Flow Prediction via a Hybrid CPO-CNN-LSTM-Attention Architecture. Smart Cities 2025, 8, 148. https://doi.org/10.3390/smartcities8050148
