1. Introduction

Advancement of computer vision technology is rapidly empowering traffic agencies to streamline the collection of roadway geometry data, providing savings in both time and resources. Traditionally, image processing has been viewed as a time-consuming and error-prone method for capturing roadway data. However, with the evolution of computational capabilities and image recognition techniques, new possibilities have emerged for accurately detecting and mapping various roadway attributes from diverse imagery sources. A study conducted in 2015 [1] demonstrated that utilizing aerial and satellite imagery for gathering geometry data offered significant advantages over traditional field observations in terms of equipment costs, data accuracy, crew safety, data collection expenses, and time required for collection.

Since the introduction of the Highway Safety Manual (HSM) in 2010, many state departments of transportation (DOTs) have placed a strong emphasis on enhancing safety by aligning with the manual’s requirements for geometric data [2]. However, the manual collection of data across extensive roadway networks has presented a considerable challenge for numerous state and local transportation agencies. These agencies have a critical role in maintaining up-to-date roadway data, which is essential for effective planning, maintenance, design, and rehabilitation efforts [3]. Roadway characteristics index (RCI) data encompasses a comprehensive inventory of all elements that constitute a roadway, including highway performance monitoring system (HPMS) data, roadway geometry, traffic signals, lane counts, traffic monitoring locations, turning restrictions, intersections, interchanges, rest areas with or without amenities, high occupancy vehicle (HOV) lanes, pavement markings, signage, pavement condition, driveways, and bridges. DOTs utilize various methodologies for RCI data collection, ranging from field inventory and satellite imagery to mobile and airborne light detection and ranging (LiDAR), integrated geographic information system (GIS)/global positioning system (GPS) mapping systems, static terrestrial laser scanning, and photo/video logging [2].

These methods fall into two main categories: ground observation/field surveying and aerial imagery/photogrammetry. The process of gathering RCI data involves considerable expenses, with each approach requiring specific equipment and time allocation for data collection, thereby impacting overall costs. Ground surveying entails conducting measurements along the roadside using total stations, while field inventory involves traversing the roadway to document current conditions and inputting this information into the inventory database. On the other hand, aerial imagery or photogrammetry entails extracting RCI data from georeferenced images captured by airborne systems such as satellites, aircraft, or drones. Each approach has its own advantages and disadvantages in terms of data acquisition costs, accuracy, quality, constraints on collection, storage requirements, labor intensity, acquisition and processing time, and crew safety. Additionally, pavement markings typically have a lifespan of 0.5 to 3 years [4]. Because of their short lifespan, frequent inspection and maintenance are necessary. However, road inspectors generally adopt a periodic manual inspection approach to assess pavement marking conditions, which is both time-consuming and risky.

Despite the prevalent use of direct field observations by highway agencies and DOTs for gathering roadway data [3], these methods pose numerous challenges, including difficulty, time consumption, risk, and limitations, especially in adverse weather conditions. Therefore, there is a critical need to explore alternative and more efficient approaches for collecting roadway inventory data. Researchers have explored novel and emerging technologies, such as image processing and computer vision methods [2], for this purpose. Over the past decade, satellite and aerial imagery have been extensively utilized for acquiring earth-related information [2]. High-resolution images obtained from aircraft or satellites can quickly provide RCI data upon processing [5, 6, 7]. In a previous study, artificial intelligence (AI) was employed to extract school zones from high-resolution aerial images [7]. The increasing availability of such images, coupled with advancements in data extraction techniques, highlights the importance of optimizing their use for efficiently extracting roadway inventory data. While there may be challenges in extracting obscure or small objects, recent advancements in machine learning research have lessened this limitation. Well-trained models can significantly enhance output accuracy. Furthermore, this method entails minimal to no field costs, making it economically advantageous.

RCI data encompass critical features like center, left, or right turning lanes, which both state DOTs and local transportation agencies need to know for traffic operations and safety. These pavement markings, primarily situated near intersections, indicate permissible turns from each lane, effectively guiding and managing traffic flow. They offer continuous assistance in vehicle positioning, lane navigation, and maintaining roadway alignment, as highlighted in a USDOT study [8]. Many DOTs have expressed keen interest in automated methods for detecting and evaluating these markings [9]. Although turning lane information plays a pivotal role in optimizing roadway efficiency and minimizing accidents, particularly at intersections, a comprehensive geospatial inventory of turning lanes covering both local- and state-operated roadways in Florida is currently lacking. Therefore, it is crucial to develop innovative, efficient, and rapid methods for gathering such data. This is of paramount importance for the Florida DOT, serving various purposes such as identifying outdated or obscured markings, comparing turning lane positions with other geometric features like crosswalks and school zones, and analyzing accidents occurring in these zones and at intersections.

To the best of the authors’ knowledge, there has been no prior research exploring the utilization of computer vision techniques to extract and compile an inventory of turning lane markings using high-resolution aerial images. As such, this study seeks to address this gap by developing automated tools for detecting these crucial roadway features through deep learning-based object detection models. Specifically, the focus will be on creating a You Only Look Once (YOLO)-based artificial intelligence model and framework to identify and extract turning lane markings, including left, right, and center lanes, from high-resolution aerial images. The primary objective is to devise an image processing and object detection methodology tailored to identify and extract these lane features across the State of Florida using high-resolution aerial imagery. The goals are to:

- (a) develop a multiclass object detection model,

- (b) evaluate the performance of the model with ground truth data, and

- (c) apply the model to aerial images to detect the left, right, and center lane features on Florida’s public roadways.

This initiative holds significant importance for the Florida Department of Transportation (FDOT) and other transportation agencies for various reasons, including:

- i. infrastructure management, such as identifying aging or obscured markings;

- ii. comparing turning lane locations with other geometric features like crosswalks and school zones and analyzing accidents occurring within these zones and at intersections;

- iii. automatically extracting road geometry data that can be seamlessly integrated with crash and traffic data, offering valuable insights to policymakers and roadway users.

2. Literature Review

Research on using AI techniques to extract RCI data—particularly those pertaining to pavement markings—has significantly increased in the last few years. By using detection models, AI approaches are essential in obtaining information about roadways from such data. For example, a study [

7] used high-resolution aerial images and YOLO to locate school zones. Although a lot of research has focused on employing LiDAR technology to capture RCI data, this method has drawbacks, including expensive equipment, complicated data processing, and long processing periods. For instance, a study [3] combined LiDAR with precision navigation and powerful computers to compile inventories of various roadway elements. In other studies, mobile terrestrial laser scanning (MTLS) has been utilized to gather highway inventory data [5]. Furthermore, computer vision, sensor technology, and AI techniques have been combined in recent autonomous driving research to recognize pavement markings for vehicle navigation [10, 11, 12, 13, 14].

The majority of previous research has focused on conventional approaches, which are linked to longer data gathering times, traffic flow disruptions, and possible dangers to crew members. Conversely, there has not been much focus on emerging technologies like computer vision, object detection, and deep learning in the domain of roadway geometry feature extraction using high-resolution aerial images. With the help of these technologies, roadway features may be extracted from high-resolution aerial images, doing away with the necessity for labor-intensive fieldwork and drastically cutting down on data gathering time. In addition, the data collected using these techniques are readily available, protect crew safety by averting traffic-related risks, and quickly capture roadway characteristics over wide areas. Standardized image collection is not the only criterion to consider when choosing the best object detection model [15].

2.1. Utilizing Computer Vision and Deep Learning for Roadway Geometry Feature Extraction

Experts in transportation are beginning to recognize convolutional neural networks (CNNs), a new instance of computer vision and deep learning methods [16]. Notable progress has been made in the extraction of roadway geometry data by researchers using CNN and region-based convolutional neural networks (R-CNN). These methods have proven to be effective in quickly identifying, detecting, and mapping roadway geometry features over large regions with no need for human interaction. For example, in ref. [4], photogrammetric data from Google Maps was utilized to automatically identify pavement marking issues on roadways using R-CNN to evaluate pavement conditions. The model developed in that study was trained to detect flaws in pavement markings, such as incorrect alignment, ghost marking, missing or fading edges, corners, segments, and cracks, and achieved an average accuracy of 30%.

In one study, Google Street View (GSV) images taken along interstate segments were used to recognize and categorize traffic signs using computer vision algorithms [17]. To classify road signs according to their pattern and color information, the researchers used a machine learning technique that paired histogram of oriented gradients (HOG) features with a linear support vector machine (SVM) classifier, attaining a classification accuracy of 94.63%. However, the methodology was not tested on local or non-interstate roads. The research by [18] used ground-level images and a technique known as the ROI-wise reverse reweighting network to recognize road markings. Using ROI-wise reverse reweighting and a multi-layer pooling operation based on faster R-CNN, the study achieved a 69.95% detection accuracy for road markings in images by highlighting multi-layer features.

In ref. [12], inverse perspective mapped images were used to estimate roadway geometry and identify lane markings on roadways using CNN. Researchers used CNN in [19] to detect, measure, and extract the geometric properties of hidden cracks in roads using ground-penetrating radar images. CNN was used by the authors in [20] to extract roadway features from remotely sensed images. These methods have been applied to image-based detection for a variety of purposes. For example, driverless cars use them to identify objects and road infrastructure in real time. Using the Caltech-101 and Caltech pedestrian datasets, a novel hybrid local multiple system (LM-CNN-SVM) incorporating CNN and SVMs was tested for object and pedestrian detection [21]. Several CNNs were tasked with learning the properties of local areas and objects after the full image was divided into local regions. Significant features were chosen using principal component analysis, and in order to improve the classifier system’s ability to generalize, these features were then fed into several SVMs using empirical and structural risk minimization strategies rather than using CNN alone. Moreover, an original CNN architecture and a pre-trained AlexNet were both used, and the SVM outputs were fused. The approach achieved an accuracy between 89.80% and 92.80%.

CNNs based on deep learning have demonstrated impressive capabilities in object detection recently [22, 23, 24, 25]. These algorithms have been greatly enhanced by their progression from region-based CNN (R-CNN) to fast R-CNN [26] and ultimately faster R-CNN [27]. The R-CNN method basically involves computing CNN features after extracting region proposals as possible object locations. By adding a region proposal network (RPN) to the object detection network, faster R-CNN improves performance even further. In a study [28], the vehicle detection and tracking capabilities of machine learning algorithms and deep learning techniques (faster R-CNN and ACF) were thoroughly compared. The results show that faster R-CNN performs better in this area than ACF. Therefore, choosing the algorithm to perform the analysis requires significant thought. The output of vehicle identification and tracking algorithms may include parameters like speed, volume, or vehicle trajectories, depending on the goals of the analysis. Vehicle detection algorithms differ from point tracking techniques in that they can also classify vehicles.

2.2. Obtaining Roadway Geometry Data through LiDAR and Aerial Imagery Techniques

Road markings can be extracted from photos by taking advantage of their high reflectivity, which is a characteristic that they usually display. In ref. [

29], researchers developed an algorithm that uses pixel extraction to recognize and locate road markers in pictures taken by cameras installed on moving cars. Initially, a median local threshold (MLT) image filtering technique was used by the system to extract marking pixels. After that, a recognition algorithm was used to identify markings according to their dimensions and forms. For the identified markers, the average true positive rate was 84%. Researchers in [

30] extracted highway markers from aerial photographs using a CNN-based semantic segmentation technique. The paper presented a novel method for processing high-resolution images for lane marking extraction that makes use of a discrete wavelet transform (DWT) and a fully convolutional neural network (FCNN). In contrast to earlier techniques, this strategy—which used a symmetric FCNN—focused on small-sized lane markers and investigated the best places for DWT insertion sites inside the architecture. The accuracy of lane detection could be enhanced by the model’s ability to collect high-frequency information with the integration of DWT into the FCNN architecture. Aerial LaneNet architecture consisted of a sequence of pooling, wavelet transform, and convolutional layers. The model’s effectiveness was assessed using an aerial lane detection dataset that was made available to a general audience. The study found that washed-out lane markers and shadowed areas presented constraints.

Researchers used the scan line method in [

31], which allowed them to recover road markings from LiDAR point clouds. The team efficiently structured the data by timestamp-sequentially ordering the LiDAR point clouds and grouping them into scan lines based on the scanner angle. They then isolated seed roadway points, which formed the foundation for determining complete roadway points, by taking advantage of the height difference between trajectory data and the road surface. Using these points, a line was drawn that encircled the scan line’s seed points as well as every other point. After that, points that fell within a given range of this line were kept and classified as asphalt points or road markings according to how intense they were. In order to smooth out intensity values and improve data quality by lowering noise, a dynamic window median filter was used. Finally, the research team identified and extracted roadway markers using edge detection algorithms and limitations. Roadway data were gathered by researchers in [

3] using two-meter resolution aerial images and six-inch accuracy LiDAR data saved in ASCII comma-delimited text files. Using ArcView, they added height information to LiDAR point shapefiles and then converted the data points into Triangular Irregular Networks (TIN). The analytical process was started by combining the aerial photos and LiDAR boundaries. To find obstructions in the observer’s line of sight, ArcView’s object obstruction tool was utilized. Tracing the line of sight—visible terrain represented by green line segments, obscured terrain by red line segments—was used to calculate the stopping sight distance. With ArcView’s identification and contour capabilities, side slope and contours were determined. The grade was also determined by the researchers by calculating the elevation difference between a segment’s two ends. Conversely, ref. [

32] built a deep learning model for the automated recognition and extraction of road markings using a 3D profile of pavement data obtained by laser scanning, achieving a detection accuracy of 90.8%. As part of the project, a step-shaped operator was designed to identify possible locations for pavement marking edges. The road markings were subsequently extracted using CNN by combining the geometric properties and convolution information of these locations.

In ref. [33], pavement markings were extracted from camera images and pavement conditions were assessed using computer vision techniques. Preprocessing was applied to videos recorded from the front view of vehicles. The preprocessing involved the extraction of image sequences, the Gaussian blur median filter for filtering and smoothing the images, and the inverse perspective transform for correcting the images. A hybrid detector that relied on color and gradient features was used to find pavement markings. After that, regions with markings were identified using image segmentation and then categorized into edge, dividing, barrier, and continuity lines according to their characteristics. Building on a prior study [34] that used YOLOv3 to extract turning lane features from aerial images, this research will use additional image processing techniques along with a more advanced version of YOLO to extract turning lane features, including left, right, and center, from extremely high-resolution aerial images in Leon County, Florida.

To the authors’ knowledge, no research has been carried out on creating such a large inventory of geocoded left, right, and center turning lane markings on a state- or countywide scale using integrated image processing and machine learning techniques on very high-resolution aerial images. Thus, by creating automated methods for identifying these turning lane markings (left, right, and center) from very high-resolution images on a large scale, our research attempts to close this gap. To achieve this, the study will specifically concentrate on developing an artificial intelligence model based on the You Only Look Once (YOLO) methodology.

4. Materials and Methodology

The selection of the methodology for gathering roadway inventory data is contingent upon various factors, including data collection time (e.g., data collection, reduction, and processing) and cost (e.g., data collection and reduction), as well as accuracy, safety, and data storage demands. In this study, our objective was to develop a deep learning object detection model tailored to identify turning lane markings from high-resolution aerial images within Leon County, Florida.

4.1. Data Description

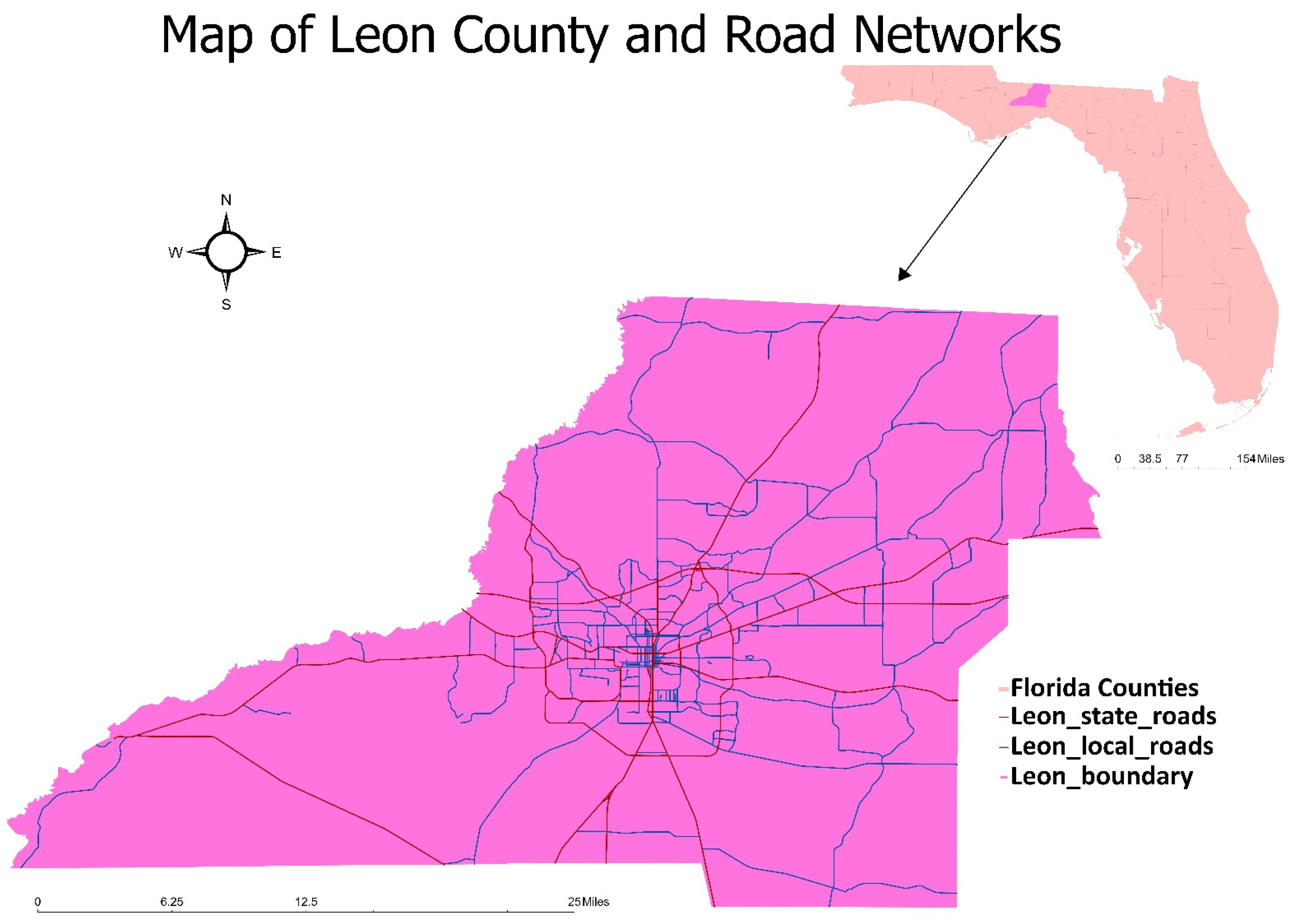

The proposed algorithm for detecting turning lanes relies on high-resolution aerial images and recent advancements in computer vision and object detection techniques. The goal is to automate the process of identifying pavement markings for turning lanes, offering a novel solution for transportation agencies. The aerial images used in this study were sourced from the Florida Aerial Photo Look-Up System (APLUS), an aerial imagery database managed by the Surveying and Mapping Office of the Florida Department of Transportation (FDOT). Specifically, images from Leon (2018) and Miami-Dade (2017) Counties, Florida, were utilized. These images have a resolution ranging from 1.5 ft down to 0.25 ft, ensuring sufficient detail for accurate detection of turning lanes. The model’s resolution requirement allows for the utilization of any imagery falling within or exceeding this resolution threshold. The majority of the images were in 0.5 ft/pixel resolution, with a size of 5000 × 5000 and a 3-band (RGB) image format, although precise resolutions varied by county. The images are provided in MrSID format, which enables GIS projection onto maps, enhancing spatial analysis capabilities. Additionally, state and local roadway shapefiles were obtained from the FDOT’s GIS database, complementing the image data and enriching the dataset for further analysis.

This study focuses on identifying turning lanes present on roadways managed by counties or cities, as well as those situated on state highway system routes, excluding interstate highways. State highway system routes are termed ON system roadways or state roadways, while county or city-managed roads are referred to as OFF system roadways or local roadways, according to their FDOT classification. Our proposed approach involves merging all centerlines from the shapefile of county and city-managed roadways. While the FDOT’s GIS data can provide various geometric data points crucial for mobility and safety assessments, they lack information on the locations of turning configurations on the local roadways. Therefore, the main objective of this project is to utilize an advanced object identification model to compile an inventory of turning lane markers, specifically left only, right only, and center lanes, on both state and local roadways in Leon County, Florida.

4.2. Pre-Processing

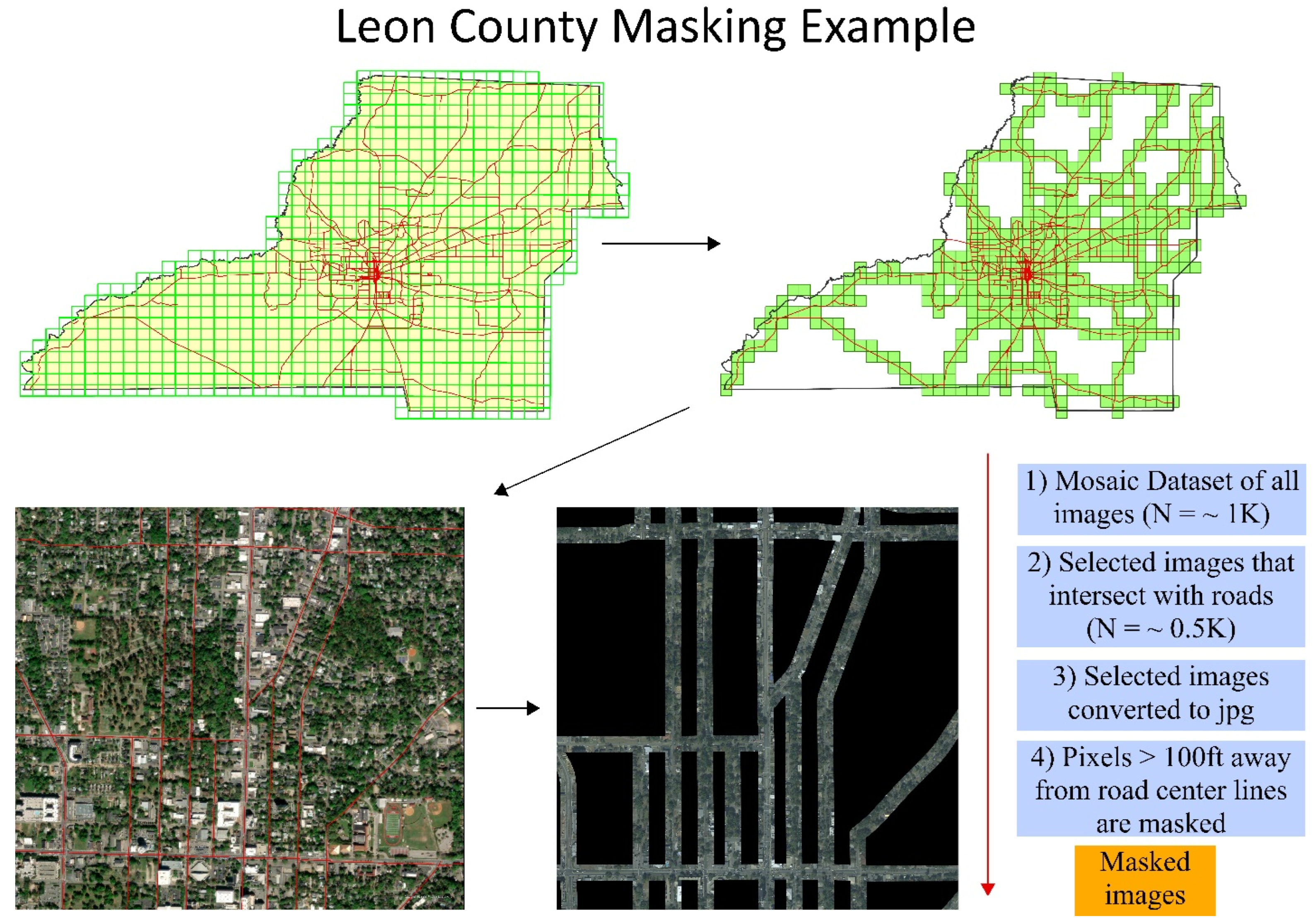

Due to the volume of data and the complexity of object recognition, preprocessing is essential. This step involves discarding images that do not intersect a roadway centerline and masking out pixels outside a buffer zone. In this way, the number of images was reduced, and the image masking model excluded objects that were more than 100 feet away from state and local roadways (Figure 2). Prior to masking the images, the roadway shapefile is buffered to create overlapping polygons, serving as a reference for cropping intersecting regions of aerial images. During masking, pixels outside the reference layer’s boundary are removed. The resulting cropped images, with fewer pixels, are then mosaicked into a single raster file, facilitating easier handling and analysis.
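The masking step can be illustrated with a minimal pure-Python sketch. It is not the ArcGIS Pro workflow used in the study; it only shows the underlying idea of keeping pixels whose centers fall within the buffer distance of a centerline. The function names, the coordinate convention (upper-left origin, y decreasing with row), and the toy grid are assumptions made for illustration.

```python
import math


def point_segment_distance(px, py, ax, ay, bx, by):
    """Shortest distance from point P to segment AB (same units as the inputs)."""
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        # Degenerate segment: A and B coincide.
        return math.hypot(px - ax, py - ay)
    # Project P onto the line through A and B, then clamp to the segment.
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def buffer_mask(n_rows, n_cols, origin_x, origin_y, pixel_size_ft,
                centerline, buffer_ft=100.0):
    """Return a row-major grid of booleans.

    True marks pixels whose center lies within buffer_ft of the centerline.
    (origin_x, origin_y) is the map coordinate of the upper-left corner
    (hypothetical convention); centerline is a list of (x, y) vertices.
    """
    segments = list(zip(centerline, centerline[1:]))
    mask = []
    for r in range(n_rows):
        y = origin_y - (r + 0.5) * pixel_size_ft
        row = []
        for c in range(n_cols):
            x = origin_x + (c + 0.5) * pixel_size_ft
            d = min(point_segment_distance(x, y, *a, *b) for a, b in segments)
            row.append(d <= buffer_ft)
        mask.append(row)
    return mask
```

Pixels flagged False would be blanked before export. A production workflow would rely on a GIS raster tool rather than a per-pixel Python loop, which is far too slow for full-size tiles.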

First, all aerial images from the selected counties are imported into a mosaic dataset using ArcGIS Pro. Mosaic datasets are used to manage and visualize the geocoded images, allowing them to be intersected with additional vector data to select specific image tiles based on location. Following this, a subset of images is extracted, comprising those that intersect with roadway centerlines. An automated image masking tool is subsequently developed using ArcGIS Pro’s ModelBuilder interface. This tool iterates over the image folder, applies a mask based on a 100-foot buffer around roadway centerlines, and saves the resulting masked images as JPG files for the object detection process. Exported images are further processed for model training and detection.
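The tile selection step can be sketched in a few lines of plain Python. This is an illustrative approximation, not the ModelBuilder tool described above: each tile's bounding box is enlarged by the buffer distance and tested against every centerline segment with a Liang-Barsky clipping test. Enlarging a box produces square rather than rounded corners, so the test is slightly over-inclusive. The tile names and coordinates in the usage note are hypothetical.

```python
def segment_intersects_box(a, b, box):
    """Liang-Barsky test: does segment a-b touch the box (xmin, ymin, xmax, ymax)?"""
    (x0, y0), (x1, y1) = a, b
    xmin, ymin, xmax, ymax = box
    dx, dy = x1 - x0, y1 - y0
    t_enter, t_exit = 0.0, 1.0
    for p, q in ((-dx, x0 - xmin), (dx, xmax - x0),
                 (-dy, y0 - ymin), (dy, ymax - y0)):
        if p == 0:
            if q < 0:
                return False  # parallel to this boundary and outside it
            continue
        t = q / p
        if p < 0:
            t_enter = max(t_enter, t)  # segment enters across this boundary
        else:
            t_exit = min(t_exit, t)    # segment leaves across this boundary
        if t_enter > t_exit:
            return False
    return True


def select_tiles(tiles, centerline, buffer_ft=100.0):
    """Return the names of tiles lying within buffer_ft of the centerline.

    tiles maps a tile name to its (xmin, ymin, xmax, ymax) extent in feet;
    centerline is a list of (x, y) vertices.
    """
    segments = list(zip(centerline, centerline[1:]))
    selected = []
    for name, (xmin, ymin, xmax, ymax) in tiles.items():
        grown = (xmin - buffer_ft, ymin - buffer_ft,
                 xmax + buffer_ft, ymax + buffer_ft)
        if any(segment_intersects_box(a, b, grown) for a, b in segments):
            selected.append(name)
    return selected
```

For example, with three hypothetical 2500 ft tiles `{"A": (0, 0, 2500, 2500), "B": (5000, 0, 7500, 2500), "C": (0, -2500, 2500, 0)}` and a centerline running from (-100, 50) to (3000, 50), tile A is crossed directly, tile C is picked up only because of the 100 ft buffer, and tile B is discarded.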

4.3. Data Preparation for Model Training and Evaluation

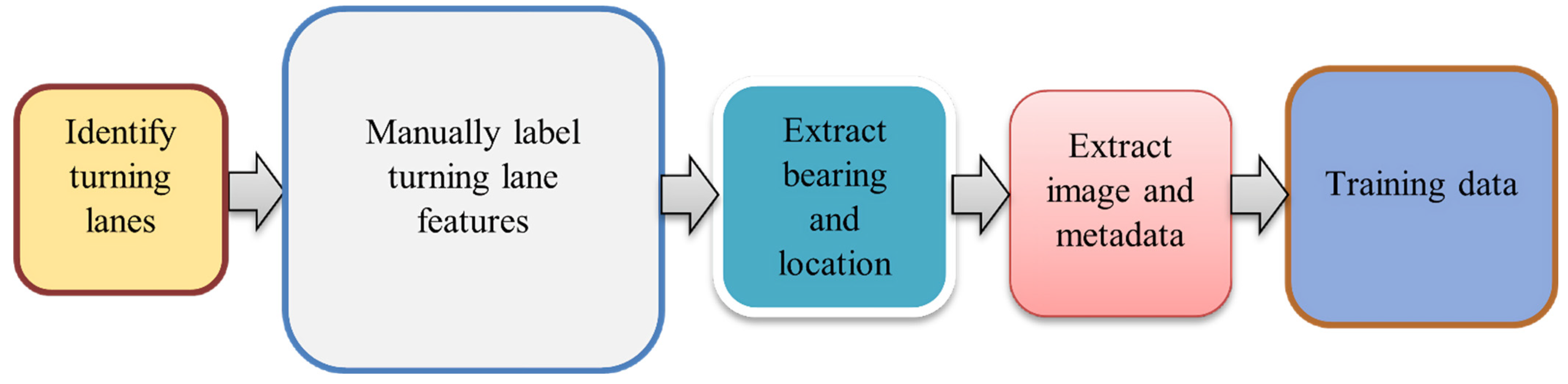

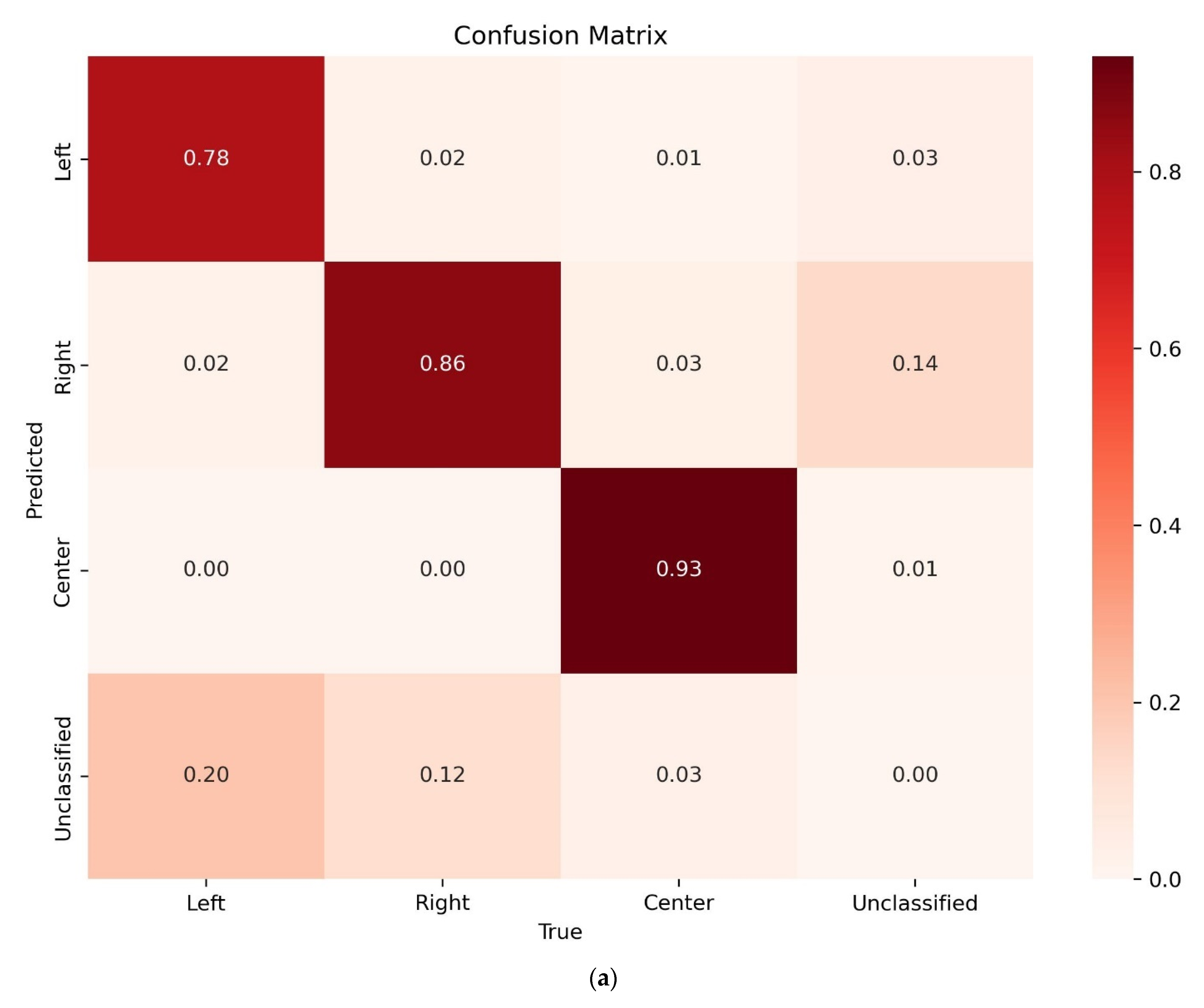

High-quality image data are crucial for training deep learning models to identify various turning lane features. The process of image preparation, depicted in Figure 3, outlines the steps from turning lane identification to the extraction of training data or images. Significant time and effort were dedicated to generating training data for model creation, as a substantial portion of the model’s performance hinges on the quantity, quality, and diversity of the training data used. Similarly, additional time and effort were invested in the model training process, involving testing different parameters and selecting the optimal ones to train the model. This is depicted by the larger box sizes for “Manually label turning lane features” and “training data” in Figure 3.

For the initial investigation, a 4-class object detection model was developed to identify the turning configurations. The training data prepared for the turning lane model comprised 4 classes: “left_only”, “right_only”, “center”, and “none”, denoted as classes 1, 2, 3, and 0, respectively. These labels were represented by rectangular bounding boxes encompassing the turning lane markings (Table 1). The initial training data consisted of 4687 features manually labelled on the aerial images of Miami–Dade County using the Deep-Learning Toolbox in ArcGIS Pro. The labels comprised 1418 features (30.3%) for the “left_only” class, 1328 features (28.3%) for the “right_only” class, 662 features (14.1%) for the “center” class, and 1279 features (27.3%) for the “none” class. The “none” class labels were made up of all other visible markings or features except “left_only”, “right_only”, and “center”. Very common features, such as left only and right only markings, were more prevalent in the training data, while less common features like “center” had lower representation. To address this imbalance and enhance dataset diversity, some of these less common features were duplicated. However, to prevent potential issues like model overfitting and bias resulting from duplication, data augmentation techniques such as rotation were employed. Rotation involved rotating the training data features to generate additional training data, thereby increasing dataset diversity. This approach enabled the model to recognize objects in different orientations and positions, which was particularly advantageous in this study, given that features like left only and right only lanes can appear in multiple travel directions at intersections. A 90-degree rotation was applied to the training data. Finally, 11,036 exported image chips containing 16,564 features were used to train the model. Note that a single image chip may contain multiple features.
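When an image chip is rotated for augmentation, its bounding box labels have to be rotated with it. The sketch below shows one way to do this for a single 90-degree clockwise rotation in pixel coordinates (origin at the upper-left corner, y increasing downward). The rotation direction, the function name, and the box layout are assumptions for illustration; the source does not state how the rotation was implemented in ArcGIS Pro.

```python
def rotate_box_90_cw(box, chip_width, chip_height):
    """Rotate an axis-aligned box 90 degrees clockwise together with its chip.

    box is (xmin, ymin, xmax, ymax) in pixels with the origin at the
    upper-left corner. A point (x, y) maps to (chip_height - y, x), so the
    rotated chip has width chip_height and height chip_width.
    Returns (new_box, (new_width, new_height)).
    """
    xmin, ymin, xmax, ymax = box
    new_box = (chip_height - ymax, xmin, chip_height - ymin, xmax)
    return new_box, (chip_height, chip_width)


def rotations(box, chip_width, chip_height, times):
    """Apply the 90-degree clockwise rotation `times` times in sequence."""
    size = (chip_width, chip_height)
    for _ in range(times):
        box, size = rotate_box_90_cw(box, size[0], size[1])
    return box, size
```

A quick sanity check on any such helper is that four successive rotations return the original box and chip size, and that the box area is unchanged after each step.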

Each class within the training data was distinctively labeled to ensure clarity. These classes distinctly categorized the turning lane features and non-features identified in the input image by the detector, thereby enhancing the model’s detection accuracy. The output data were then sorted into categories such as left only, right only, center, and none, utilizing a class value field. The metadata generated for these labels, such as the image chip size, object class, and bounding box dimensions, were stored in an XML file. A function was then created to convert the metadata into YOLOv5 format to obtain the transformed bounding box center coordinates, the box width, and the box height, using the following equations:

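$$x_c = \frac{x_{\max} + x_{\min}}{2W}, \qquad y_c = \frac{y_{\max} + y_{\min}}{2H},$$

$$w = \frac{x_{\max} - x_{\min}}{W}, \qquad h = \frac{y_{\max} - y_{\min}}{H},$$

where $x_c$ is the x coordinate of the bounding box center, $y_c$ is the y coordinate of the bounding box center, $w$ is the width of the bounding box, $h$ is the height of the bounding box, $W$ is the width of the image chip, $H$ is the height of the image chip, $x_{\max}$ is the maximum x value of the bounding box, $x_{\min}$ is the minimum x value of the bounding box, $y_{\max}$ is the maximum y value of the bounding box, and $y_{\min}$ is the minimum y value of the bounding box. The normalization of coordinates was performed using the image dimensions to maintain the bounding box positions relative to the input image.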
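The conversion described here, from corner coordinates to a normalized center, width, and height, can be sketched as a short Python function. The function and argument names are hypothetical, and the six-decimal text layout of the label line is an assumption; only the arithmetic follows from the definitions in the text.

```python
def to_yolo(xmin, ymin, xmax, ymax, chip_w, chip_h):
    """Convert pixel corner coordinates to normalized (x_c, y_c, w, h)."""
    x_c = (xmin + xmax) / 2.0 / chip_w   # box center x, as a fraction of chip width
    y_c = (ymin + ymax) / 2.0 / chip_h   # box center y, as a fraction of chip height
    w = (xmax - xmin) / chip_w           # box width, as a fraction of chip width
    h = (ymax - ymin) / chip_h           # box height, as a fraction of chip height
    return x_c, y_c, w, h


def yolo_label_line(class_id, box, chip_w, chip_h):
    """Format one label row: class id followed by the four normalized values."""
    x_c, y_c, w, h = to_yolo(*box, chip_w, chip_h)
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"
```

For a hypothetical 256 × 256 chip with a “left_only” box (class 1) spanning x from 64 to 192 and y from 96 to 160, the center lies at the middle of the chip, the normalized width is 0.5, and the normalized height is 0.25.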

It is worth noting that object detection models perform optimally when trained on clear and distinctive features. Given that left only and right only turning markings are often mirror images of one another, rendering them less distinguishable, the model’s performance in detecting them is expected to be lower. To ensure uniqueness, the labels for training the center lane class featured both left arrows facing each other from different travel directions. This approach was similarly applied to label all other features to maintain uniqueness in the training features. The input mosaic data comprised high-resolution aerial images covering Miami–Dade County, Florida, with a tile size of 5000 × 5000 square feet.

4.4. YOLOv5—Turning Lane Detection Model

You Only Look Once (YOLO) is predominantly utilized for real-time object detection. Compared to other models such as R-CNN or faster R-CNN, YOLO’s standout advantage lies in its speed. Specifically, YOLO surpasses R-CNN and faster R-CNN architectures by a factor of 1000 and 100, respectively [

36]. This notable speed advantage stems from the fact that while other models first classify potential regions and then identify items based on the classification probability of those regions, YOLO can predict based on the entire image context and perform the entire image analysis with just one network evaluation. Initially introduced in 2016 [

37], YOLO has seen subsequent versions, including YOLOv2 [

38] and YOLOv3, which introduced improvements in multi-scale predictions. YOLOv4 [

39] and YOLOv5 [

40] followed; both were first released in 2020.

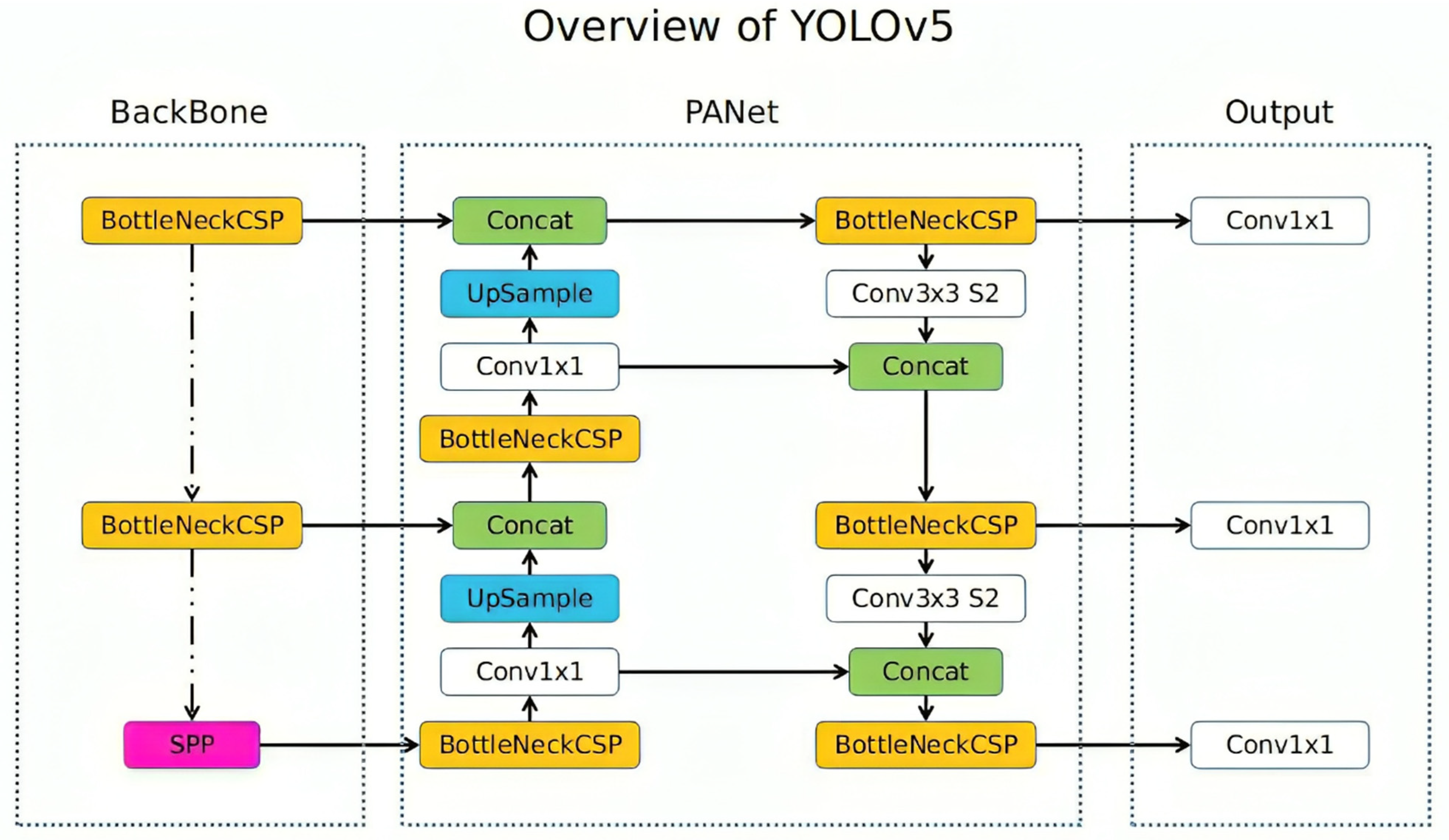

The YOLOv5 architecture is made up of the backbone, the neck (PANet), and the head (output). Unlike other detection models, YOLOv5 uses cross-stage partial network (CSP)-Darknet-53 as its backbone, PANet as its neck, and a YOLO layer as its head. CSP-Darknet is an improved version of Darknet-53, the backbone of YOLOv3, with higher accuracy and processing speed [40]. The architecture of the backbone and the 6 × 6 2D convolution structure improve the model’s efficiency [41], making the feature extractor perform better and faster than other object detection models, including the previous versions [42]. Compared to the previous versions, it has a higher speed, higher precision, and a smaller volume. YOLOv5’s backbone enhances both the accuracy and speed of the model, performing twice as fast as ResNet152 [36]. Utilizing multiple pooling and convolution operations, YOLOv5 forms image features at different levels using CSP and spatial pyramid pooling (SPP) to extract different-sized features from input images [43]. The architecture has a significant advantage in computational complexity: on the one hand, the BottleneckCSP architecture reduces complexity and improves inference speed; on the other hand, SPP allows features to be extracted from different parts of the input image, creating three-scale feature maps that improve detection quality. Moreover, the neck is a series of layers between the backbone and the head, made up of feature pyramid network (FPN) and path aggregation network (PAN) structures, whose task is to integrate and synthesize image features at various scales before passing them to the prediction stage. FPN passes high-level semantic features down to the lower feature maps, and PAN passes accurate localization features up to the higher feature maps. The result then moves into the detection phase, where targets of different sizes in the feature maps are generated in the output head [43].

Figure 4 shows the network architecture of YOLOv5, illustrating the three-scale detection process. This process involves applying a 1 × 1 detection kernel on feature maps situated at three distinct areas and sizes within the network.
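As a rough illustration of the three-scale detection, the sketch below computes the grid size of each detection feature map for a 512 × 512 input. It assumes the downsampling strides of 8, 16, and 32 commonly used by the YOLOv5 head; the strides and the function name `detection_grid_sizes` are illustrative assumptions and are not stated in this paper.

```python
def detection_grid_sizes(image_size=512, strides=(8, 16, 32)):
    """Return the side length of each detection feature map.

    The strides are an assumption (typical YOLOv5 values), not taken from the paper.
    """
    if any(image_size % s for s in strides):
        raise ValueError("image_size must be divisible by every stride")
    return [image_size // s for s in strides]


grid_sizes = detection_grid_sizes()
print(grid_sizes)  # [64, 32, 16]
```

Under these assumptions, the finest grid (64 × 64) is the one best suited to small targets such as individual pavement arrows, while the coarser grids cover larger objects.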

4.5. Turning Lane Detector

The object detection model’s adjustable parameters and hyperparameters include the learning rate, input image dimensions, number of epochs, batch size, anchor box dimensions and ratios, and the percentages of training and testing data. The model’s evaluation metrics were visualized through graphs of the precision–recall curve, validation and training loss, F1 confidence curve, precision confidence curve, and confusion matrix. Validation loss and mean average precision were computed on the validation dataset, which constituted 10% of the input dataset. Key parameters influencing object detection performance include the batch size, learning rate, and number of training epochs. The learning rate dictates how quickly the model updates from the training data, striking a balance between precision and convergence speed. A learning rate of 3.0 × 10⁻⁴ was used to train the model. Batch size refers to the number of training samples processed per iteration; larger sizes facilitate parallel processing for faster training but require more memory, whereas smaller batch sizes can improve performance on new data by increasing randomness during training. Given the multi-class nature and high data complexity of the developed model, a batch size of 64 was adopted. The anchor boxes represent the size, shape, and location of the objects being detected; four sizes of initial detection anchor boxes were used to train the model. The number of epochs is the number of times the training dataset is passed through the neural network during training. The model was trained for 100 epochs. In this study, 80% of the input data were used for model training, selected randomly to ensure representativeness. 
The remaining 20% of the input dataset (10% validation and 10% test) was used for validation during training and for testing after training to measure the model’s performance. The test set was used to compute evaluation metrics such as accuracy, precision, recall, and F1 score after model training. In other words, part of the 2207 image chips was used to tune the model’s hyperparameters during the training process, and part was used to assess the model’s accuracy after the training process.
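The 80/10/10 split and the training settings described above can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the chip file names, the random seed, the `TRAINING_CONFIG` dictionary, and the `split_dataset` helper are hypothetical, while the learning rate, batch size, epoch count, and chip count are the values reported in the text.

```python
import random

# Values reported in the text; the dictionary itself is a hypothetical container.
TRAINING_CONFIG = {"learning_rate": 3.0e-4, "batch_size": 64, "epochs": 100}


def split_dataset(items, train_frac=0.8, val_frac=0.1, seed=0):
    """Randomly split items into train/validation/test subsets.

    The test subset receives whatever remains after the train and
    validation subsets are taken, so no item is dropped.
    """
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = int(train_frac * len(items))
    n_val = int(val_frac * len(items))
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]
    return train, val, test


# Hypothetical file names standing in for the 2207 image chips.
chips = [f"chip_{i:04d}.png" for i in range(2207)]
train, val, test = split_dataset(chips)
print(len(train), len(val), len(test))
```

Fixing the seed makes the random split reproducible, which helps when comparing runs with different hyperparameters.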

The determination of the training and test data split primarily hinged on the size of the training dataset. For datasets exceeding 10,000 samples, a validation size of 20–30% was deemed sufficient to provide randomly sampled data for evaluating the model’s performance. A default 50% overlap between the label and detection bounding boxes was considered a valid prediction criterion. Subsequently, recall and precision were computed to assess the true prediction rate among the original labels and all other predictions, respectively. During the model training, a binary cross-entropy loss was used for each label to calculate the classification loss, rather than using mean square error. Therefore, logistic regression was used to predict both object confidence and class predictions. This approach reduces the complexity of computations involved and improves the model’s performance [

36]. The loss function for YOLOv5 is the weighted sum of the classification loss, object confidence loss, and location (box) loss, which are formulated as follows [

40]:

$$Loss = \lambda_1 L_{cls} + \lambda_2 L_{obj} + \lambda_3 L_{loc}$$

$$L_{loc} = 1 - IoU + \frac{\rho^2(b, b^{gt})}{c^2} + \alpha v, \qquad v = \frac{4}{\pi^2}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^2$$

where $Loss$ is the overall loss, $L_{cls}$ is the classification loss, $L_{obj}$ is the object confidence loss, $L_{loc}$ is the location or box loss, and $\lambda_1$, $\lambda_2$, $\lambda_3$ are the weights for the classification loss, object confidence loss, and location or box loss, respectively. $S$ is the size of the grid (if the input image is divided into $S \times S$ grid cells), $B$ is the number of bounding boxes predicted per grid cell, $p$ is the probability predictor for the object category, and $C$ is the confidence score. The loss terms also involve the intersection of the predicted bounding box with the ground truth, the weight for the classification error, and the probability predictor of the classification error. $IoU$ is the intersection over union, $\rho$ is the Euclidean distance between the centroids of the prediction box and the real box, $b$ and $b^{gt}$ are the center points of the predicted box and the real box, $c$ is the diagonal distance of the smallest enclosing area that can contain both the predicted box and the real box, $\alpha$ is the positive trade-off parameter, $v$ measures the consistency of aspect ratio, and $w^{gt}/h^{gt}$ and $w/h$ are the aspect ratios of the target and the prediction.

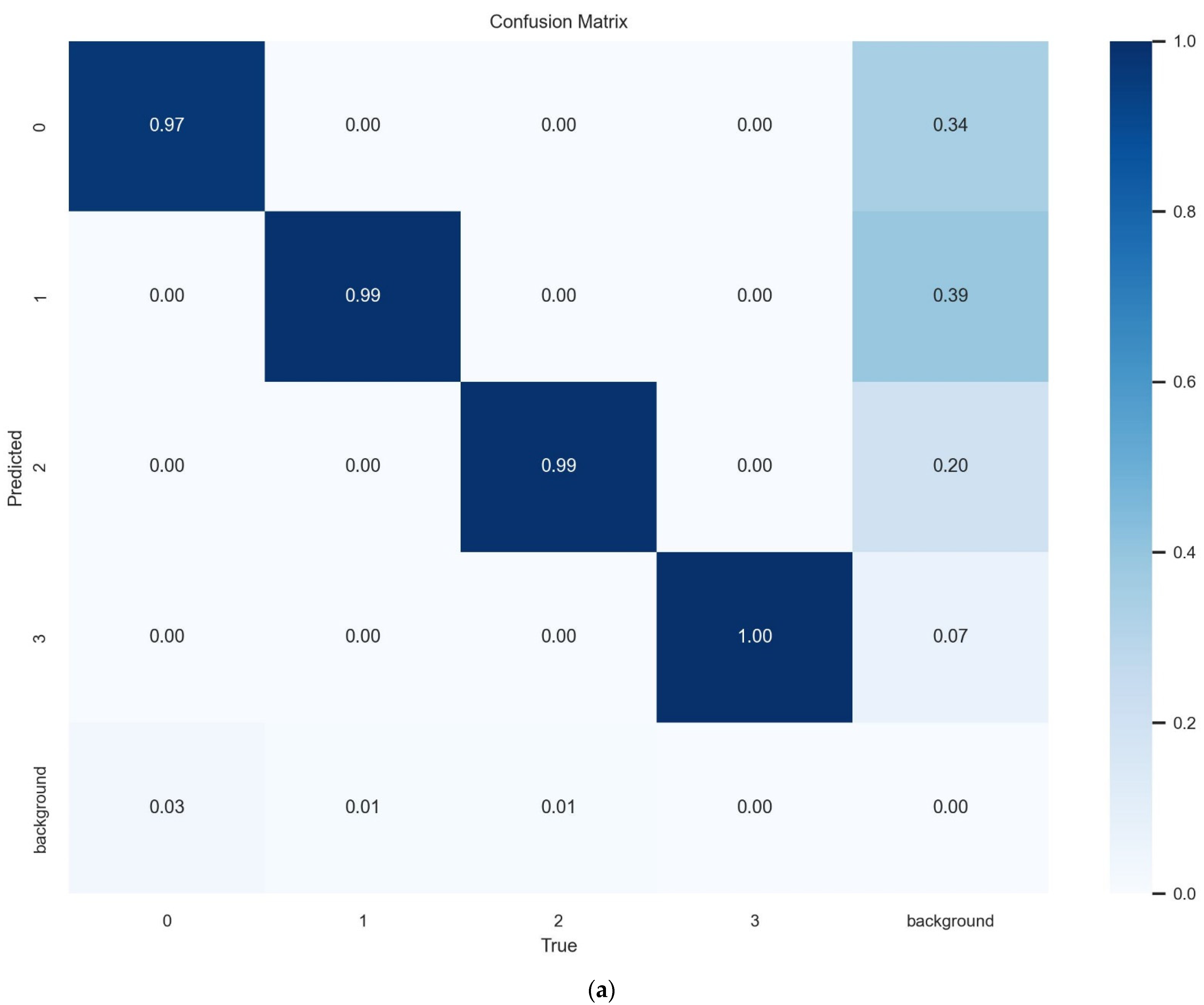

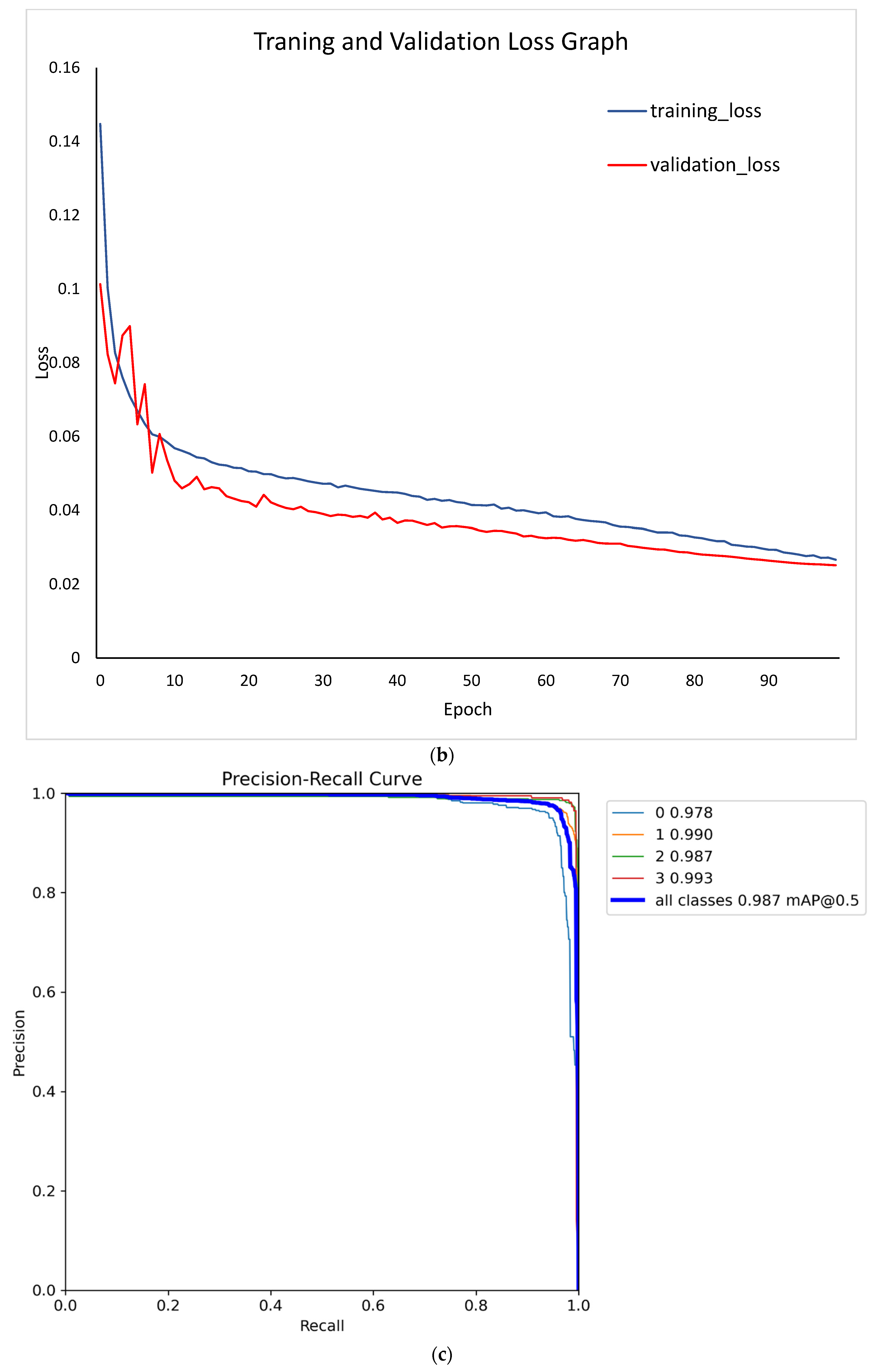

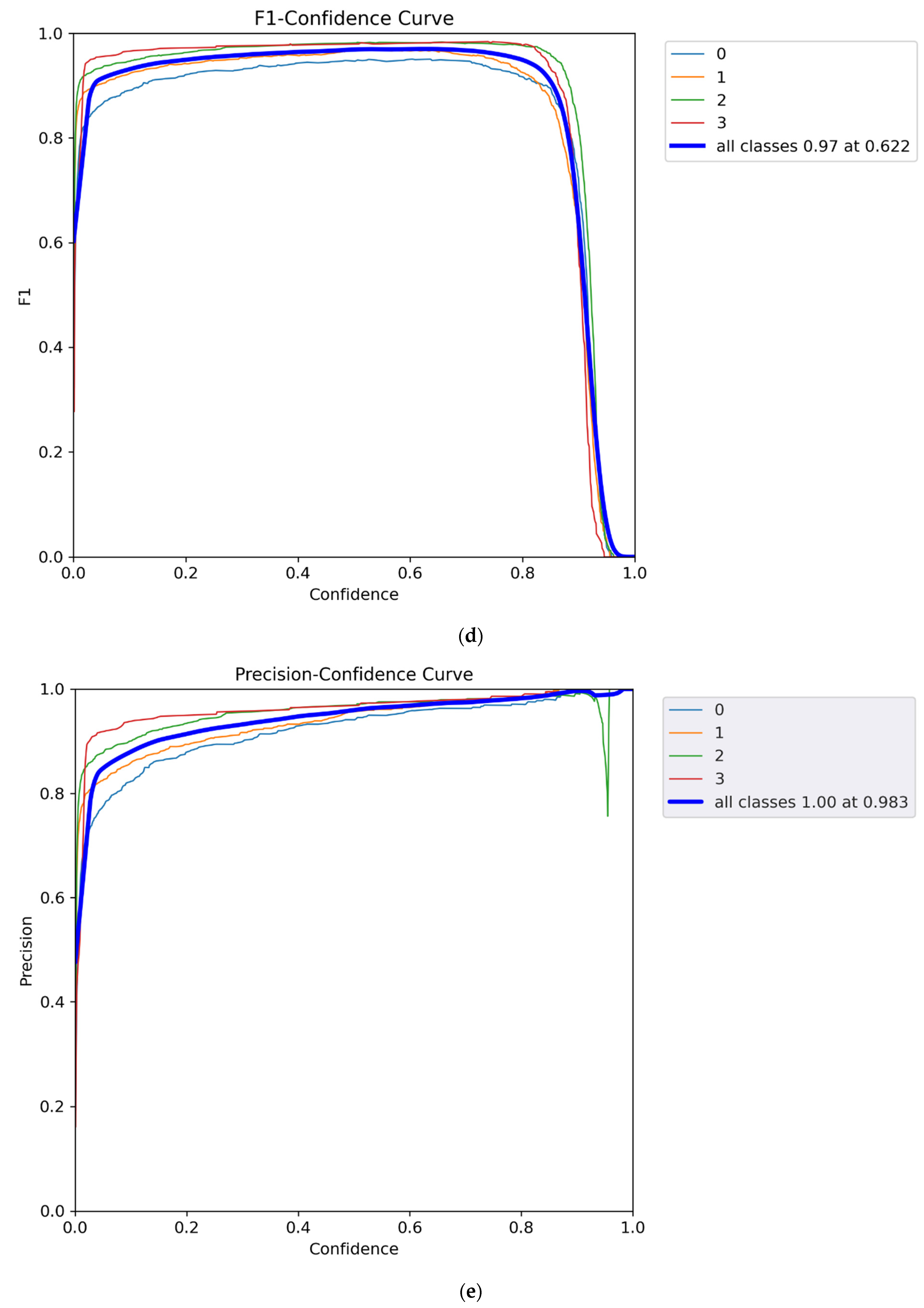

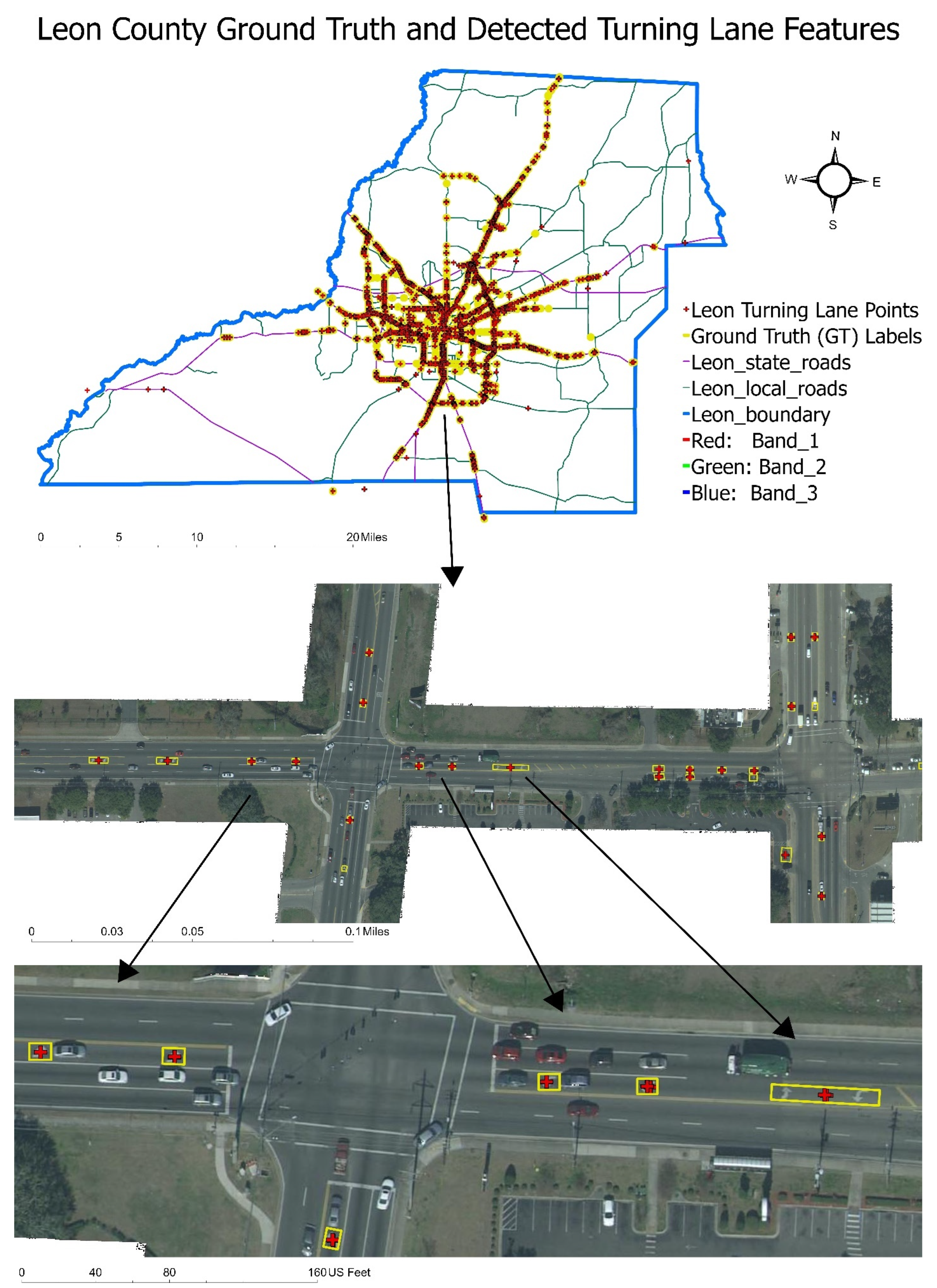
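The location (box) loss described above combines box overlap, the distance between box centers, and aspect-ratio consistency. The sketch below computes it in the complete-IoU form for a single pair of boxes. The function name `ciou_loss`, the `(x1, y1, x2, y2)` box format, and the expression used for the trade-off parameter, α = v / ((1 − IoU) + v), are assumptions made for illustration and are not taken from this paper.

```python
import math


def ciou_loss(pred, target):
    """Box loss 1 - IoU + rho^2 / c^2 + alpha * v for two (x1, y1, x2, y2) boxes.

    Both boxes are assumed to have positive width and height.
    """
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target

    # Intersection over union.
    inter_w = max(0.0, min(px2, tx2) - max(px1, tx1))
    inter_h = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = inter_w * inter_h
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1
    iou = inter / (pw * ph + tw * th - inter)

    # Squared Euclidean distance between the two box centers.
    rho2 = ((px1 + px2) / 2 - (tx1 + tx2) / 2) ** 2 \
         + ((py1 + py2) / 2 - (ty1 + ty2) / 2) ** 2

    # Squared diagonal of the smallest box enclosing both boxes.
    c2 = (max(px2, tx2) - min(px1, tx1)) ** 2 \
       + (max(py2, ty2) - min(py1, ty1)) ** 2

    # Aspect-ratio consistency and its trade-off weight (assumed form).
    v = (4 / math.pi ** 2) * (math.atan(tw / th) - math.atan(pw / ph)) ** 2
    alpha = v / ((1 - iou) + v) if v > 0 else 0.0

    return 1 - iou + rho2 / c2 + alpha * v


print(ciou_loss((0, 0, 10, 10), (0, 0, 10, 10)))   # identical boxes
print(ciou_loss((0, 0, 10, 10), (20, 0, 30, 10)))  # disjoint boxes
```

Unlike a plain IoU loss, this form still changes with the center distance when the boxes do not overlap at all, which is why it is useful for box regression.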

After model training, the performance was evaluated. The confusion matrix describes the true positive rate as well as the misclassified rate. The diagonal values show very high true positive predictions for each class in the confusion matrix (

Figure 5a). A background class can also be observed in the confusion matrix; it accounts for regions where no object is detected. It can also be observed that the model has extremely low misclassification rates, with center features showing 100% correct classification and no misclassification. The graph of the train-validation loss for the model is shown in

Figure 5b. The validation loss measures how well the model fits the validation set derived from the input data, whereas the training loss measures how well the model fits the training data. A high loss indicates that the model’s predictions contain errors, while a low loss indicates fewer errors. From the graph, it can be noted that the difference between the training and validation losses is very small, with the training loss slightly higher than the validation loss (

Figure 5b). This shows that the model is very accurate and performs well on both seen and unseen data, with slightly better performance on the validation data. The model has a very good precision–recall curve as shown in

Figure 5c. This describes how sensitive the model is in detecting true positives and how well it predicts the positive values. A good classifier has both high precision and high recall across the graph. The developed model has a precision of 0.969 and a recall of 0.970. The F1 value is the harmonic mean of the precision and recall values, and higher F1 values indicate better performance. When the F1 value is plotted against the confidence threshold, the optimum threshold can be identified. From the F1 confidence curve (

Figure 5d), the model performs very well, with an F1 score between 0.90 and 0.98 when the confidence threshold is set between 0.05 and 0.8. The optimum threshold of 0.622 returns an F1 value of 0.97 for all classes. On the precision–confidence curve, the precision increases gently with higher confidence thresholds. The model is therefore very precise even at a low confidence of 0.2, reaching an ideal precision at 0.98 (

Figure 5e). The average accuracy of the developed model was 0.987. Therefore, we can conclude that the developed detector performs quite well.
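As a quick consistency check on the reported training metrics, the F1 score can be recomputed as the harmonic mean of the reported precision (P = 0.969) and recall (R = 0.970):

```latex
F_1 = \frac{2PR}{P + R}
    = \frac{2 \times 0.969 \times 0.970}{0.969 + 0.970}
    = \frac{1.87986}{1.939}
    \approx 0.9695
```

This rounds to 0.97, in line with the F1 value read from the F1 confidence curve.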

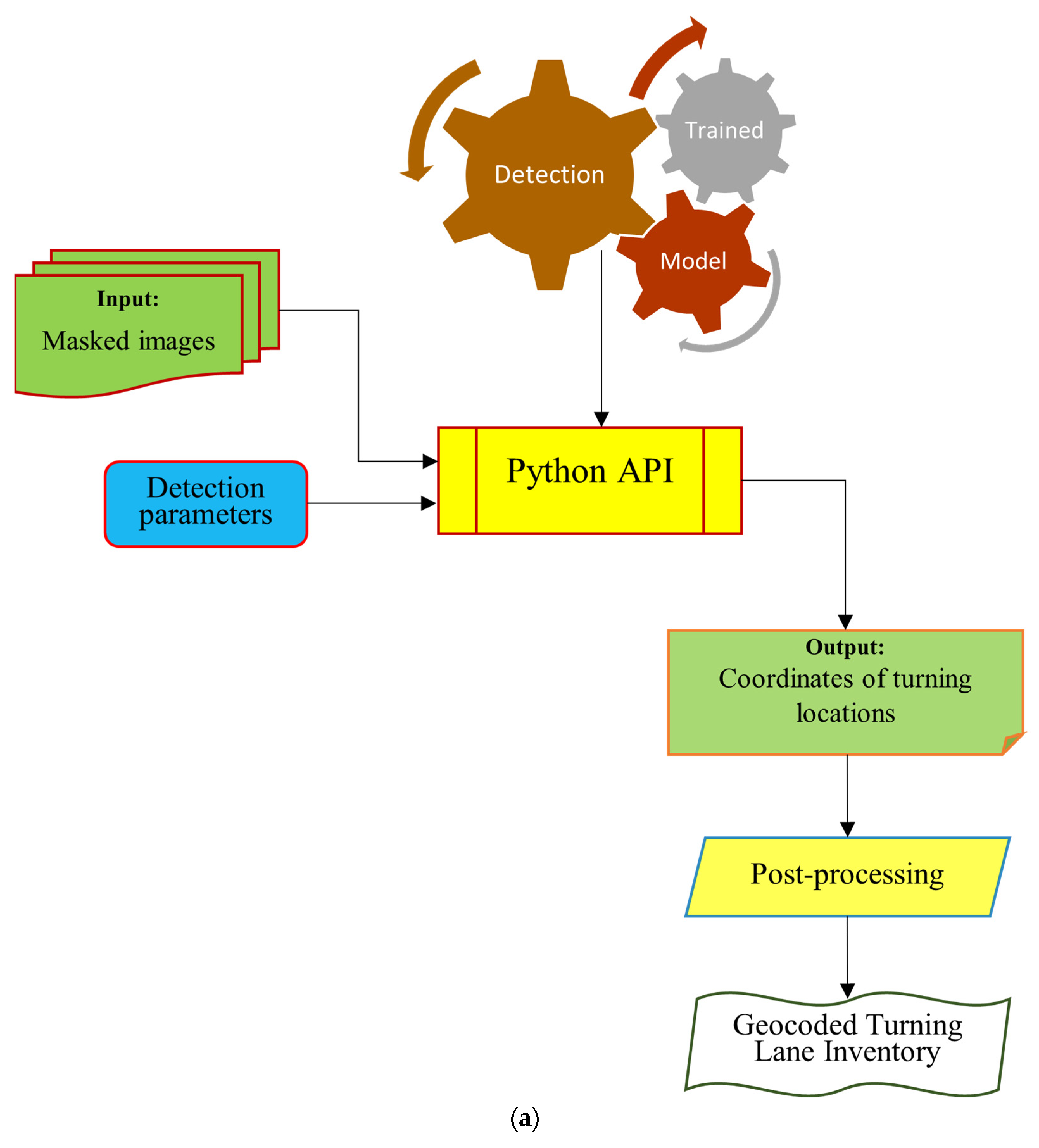

4.6. Mapping Turning Lanes

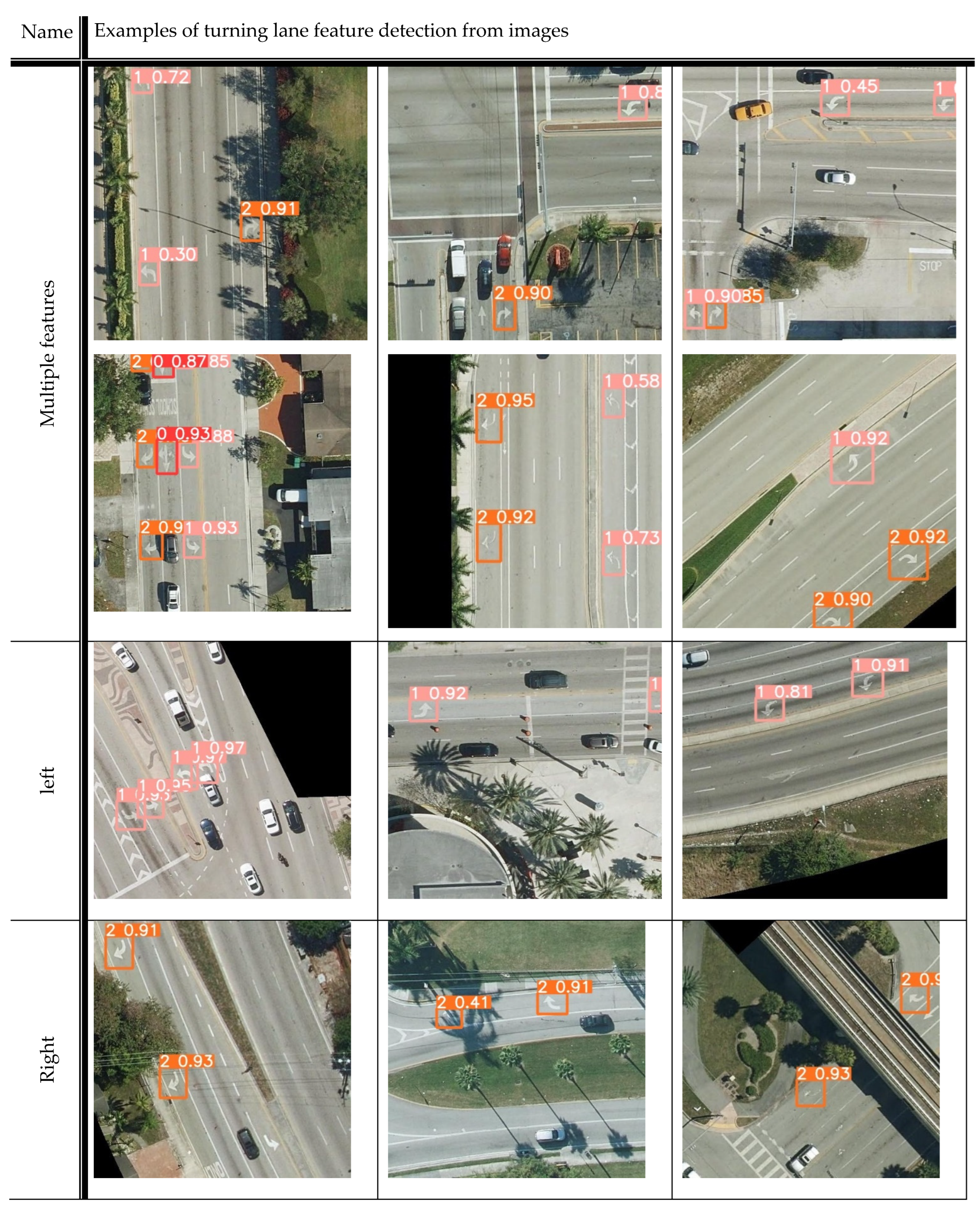

The turning lane detector was initially tested on individual photos.

Figure 6b demonstrates how the detector correctly outlines turning lanes with bounding boxes and reports each detection’s confidence score. A threshold of 0.1 was employed so that features detected with very low confidence were also captured. It is important to note that lower detection thresholds generally increase false positives, which lowers precision, adds irrelevant features or noise, and increases the computational workload and detection time. On the other hand, lower detection thresholds increase the model’s sensitivity to faint or partially visible features and therefore increase recall. With higher recall, the model is more likely to detect all instances of the target object class and less likely to miss objects. To minimize duplicate detections, bounding boxes were not allowed to overlap by more than 10%. The detector was trained on 512 × 512 sub-images at a resolution of 0.5 feet per pixel. Applying object detection directly to very large images is impractical, since the computational cost grows rapidly with image size. Therefore, an additional image processing step split the input aerial images into tiles of 512 × 512 pixels or less, and detection was carried out tile by tile. The output detection labels with coordinates were converted into shapefiles and visualized in ArcGIS for further processing and analysis.
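The tiling step can be sketched as follows. This is a minimal illustration under stated assumptions: the tiles are taken as non-overlapping, edge tiles are allowed to be smaller than 512 × 512, and the helper names `tile_windows` and `to_image_coords` are hypothetical rather than part of the authors' toolchain.

```python
def tile_windows(width, height, tile=512):
    """Return (x0, y0, x1, y1) pixel windows that cover the whole image.

    Tiles do not overlap (an assumption); tiles on the right and bottom
    edges may be smaller than tile x tile.
    """
    windows = []
    for y0 in range(0, height, tile):
        for x0 in range(0, width, tile):
            windows.append((x0, y0, min(x0 + tile, width), min(y0 + tile, height)))
    return windows


def to_image_coords(box, window):
    """Shift a box detected inside a tile back to full-image pixel coordinates."""
    x0, y0 = window[0], window[1]
    bx1, by1, bx2, by2 = box
    return (bx1 + x0, by1 + y0, bx2 + x0, by2 + y0)


windows = tile_windows(1200, 600)
print(len(windows), windows[-1])
print(to_image_coords((10, 20, 30, 40), (512, 512, 1024, 1024)))
```

Detections shifted back to full-image pixel coordinates can then be georeferenced using the image's ground resolution before export to a shapefile.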

The detection and mapping procedure was carried out at the county level, since the detector performed very well on single photos.

Figure 6a provides a summary of this procedure. The images in the folder labeled “masked images” were first selected and passed through the detector one by one. Once all images had been processed, an output file of all the identified turning lanes in that county, including confidence scores, was created. This file was used to map turning lanes. Note that the model can detect turning lane markings from images with a resolution ranging from 1.5 ft down to 0.25 ft or higher. However, the model has not been tried on any images with resolution lower than the ones provided by the Florida APLUS system. From the observations, the model made some false detections in a few instances. These are outlined and discussed in the results.

Figure 6b shows some examples of the detected features.

4.7. Post-Processing

Finally, applying the model to the aerial images of Leon County yielded a total of 4795 left, right, and center detections. Redundant detections caused by overlapping image areas were removed at the post-processing stage. The turning configurations for state and local roadways can also be classified into groups depending on analytical objectives. Non-maximum suppression was applied as the filter: among overlapping detections, only the detection with the highest confidence level was kept. Therefore, all detected turning lane markings that overlapped a higher-confidence detection by more than 10% were removed. The detected features were converted from polygon shapefiles to point shapefiles for analysis.
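The non-maximum suppression step described above can be sketched as a greedy filter. This is an illustration only: measuring the 10% overlap as intersection over union is an assumption (the paper does not say how overlap is computed), and the helper names `iou` and `non_max_suppression` are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(detections, overlap_threshold=0.10):
    """Keep the highest-confidence detection among overlapping ones.

    detections: list of (box, confidence) pairs. A detection is dropped when
    it overlaps an already kept, higher-confidence detection by more than
    overlap_threshold.
    """
    kept = []
    for box, conf in sorted(detections, key=lambda d: d[1], reverse=True):
        if all(iou(box, kept_box) <= overlap_threshold for kept_box, _ in kept):
            kept.append((box, conf))
    return kept


detections = [
    ((0, 0, 10, 10), 0.90),
    ((1, 1, 11, 11), 0.60),    # overlaps the first box heavily, so it is removed
    ((50, 50, 60, 60), 0.30),  # isolated, so it is kept
]
print(non_max_suppression(detections))
```

A low threshold such as 10% is strict, which suits features like pavement arrows that should not physically overlap one another.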

5. Results and Discussion

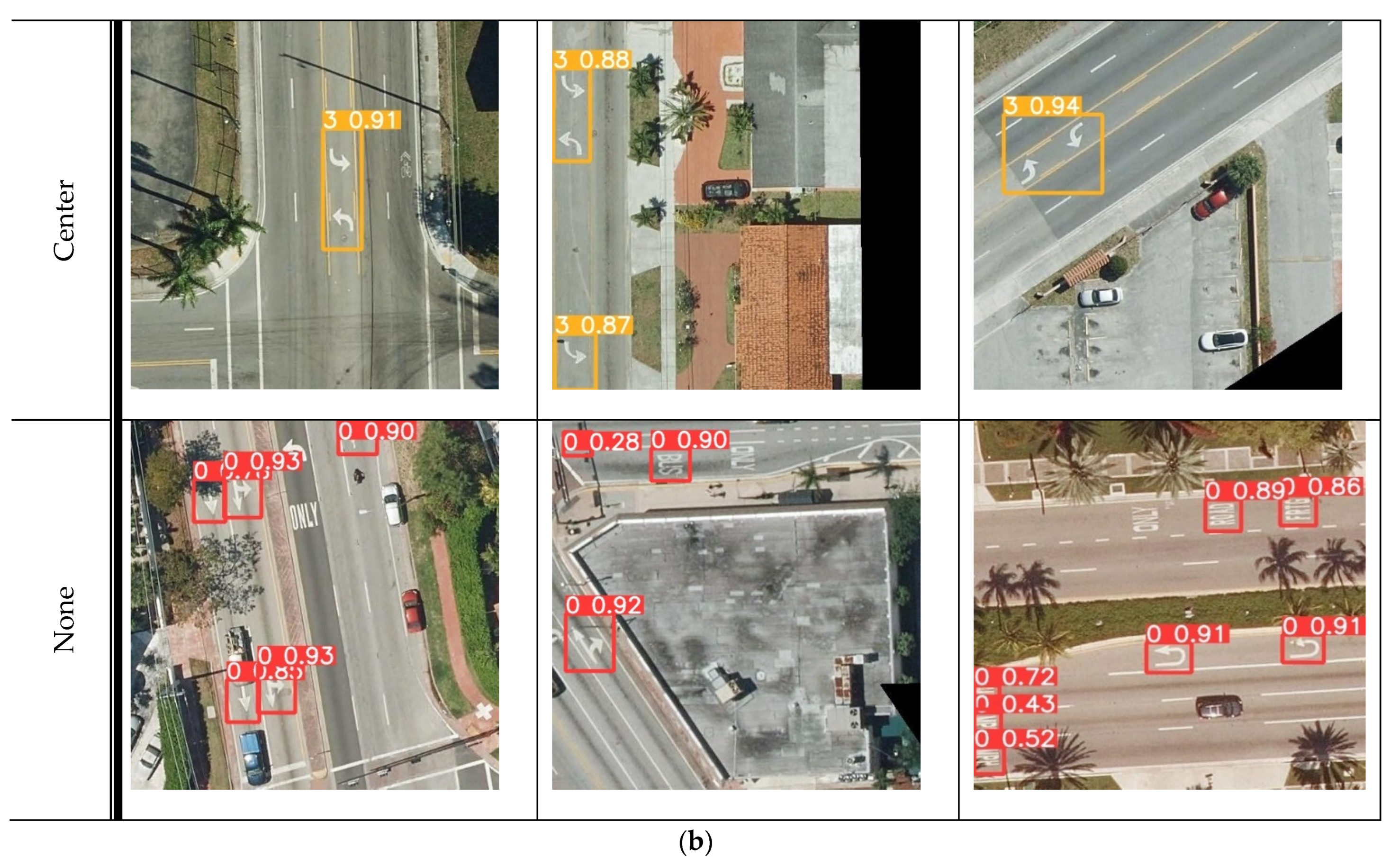

The model’s performance was assessed during model training using precision, recall, and F1 scores. Afterwards, Leon County was used as the county where ground truth (GT) data were collected, and the developed model’s performance was assessed with respect to completeness, correctness, quality, and F1 score. The GT dataset of turning lane features on Leon County roadways was used as a proof of concept. After detection of left, right, and center features, a total of 4795 detections were observed, of which 3101 were classified as left, 1478 as right, and 216 as center lane features. After visual inspection, a total of 5515 visible turning lane markings for left, right, and center lane features were collected as a GT dataset using the masked photographs as the background. Note that each turning lane may exhibit a range of 1–6 features, such as left only or right only markings. Consequently, a single lane may contain multiple consecutive turning features aligned in a straight line that collectively define that lane. However, to perform a robust analysis, the assessment was carried out by directly comparing individual turning features on the aerial images.

Figure 7 shows the GT dataset and detected turning lane markings in Leon County. Although some of the turning lane markings, especially the left only markings, were missed by the model, the overall performance of the model was reliable. The missed detections are mainly due to occlusions, faded markings, shadows, poor image resolution, and variation in pavement marking design.

As noted, the proposed model identified turning lane markers with a minimum confidence score of 10%. For the purposes of this case study, the model-identified turning lane markers (M) on the local roads in Leon County were retrieved. On the GT, a similar location-based selection methodology was used. The proposed model’s performance was assessed by examining the points that fell within the polygons, and vice versa, at confidence levels of 90%, 75%, 50%, 25%, and 10% (

Table 2). A confusion matrix was used to visualize the model’s performance against the GT dataset of Leon County (

Figure 8a).

This study’s major goal was to assess the accuracy and performance of the proposed model’s predictions and contrast them with a ground truth dataset. A separate evaluation was performed for each of the “left_only”, “right_only”, and “center” detections of the developed model. The accuracy and performance of the turning lane model were assessed by testing the model’s correctness (precision) and completeness (recall) against a complete ground truth dataset and measuring the F1 score. The F1 score is the harmonic mean of the precision and recall values. It is an appropriate evaluation metric when dealing with imbalanced datasets, and it is crucial in object detection tasks where missing actual objects is more detrimental than incorrectly classifying background regions as objects.

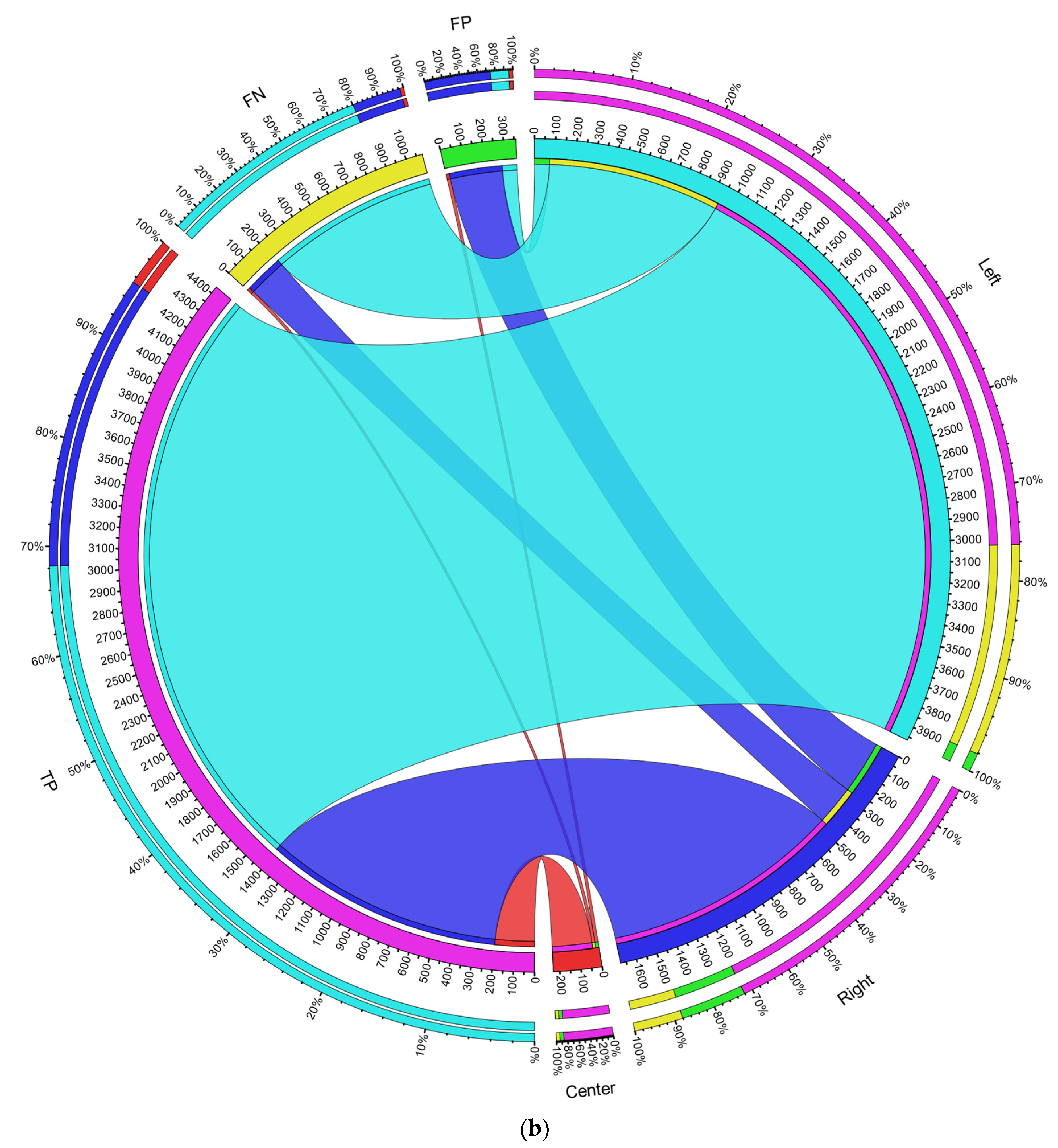

Finally, the suggested model’s performance was assessed using the criteria of completeness (recall), correctness (precision), quality (intersection over union), and F1. The model’s performance was also visualized using a circus plot [

44] in

Figure 8b. These criteria were initially utilized in [

45,

46] for the goal of highway extraction, and they are now often used for performance evaluation of the related models [

47,

48]. The following selection criteria are necessary to determine the performance evaluation metrics:

- i. GT: Number of turning lane polygons (ground truth)
- ii. M: Number of model-detected turning lane points
- iii. False Negative (FN): Number of GT turning lane polygons without an M turning lane point
- iv. False Positive (FP): Number of M turning lane points not found within a GT turning lane polygon
- v. True Positive (TP): Number of M turning lane points within a GT turning lane polygon

Performance evaluation metrics:

Completeness = $\frac{TP}{TP + FN}$, true detection rate among GT turning lanes (recall)

Correctness = $\frac{TP}{TP + FP}$, true detection rate among M turning lanes (precision)

Quality = $\frac{TP}{TP + FP + FN}$, true detection rate among M turning lanes plus the undetected GT turning lanes (Intersection over Union: IoU)
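The selection criteria and metrics above can be sketched in a few lines. This is an illustration under simplifying assumptions: GT polygons are represented as axis-aligned rectangles rather than arbitrary polygons, the coordinates are made up, and the helper names `point_in_box`, `count_matches`, and `evaluation_metrics` are hypothetical. The F1 score is computed as the harmonic mean of completeness and correctness.

```python
def point_in_box(point, box):
    """True if the (x, y) point lies inside the (x1, y1, x2, y2) rectangle."""
    x, y = point
    x1, y1, x2, y2 = box
    return x1 <= x <= x2 and y1 <= y <= y2


def count_matches(gt_boxes, m_points):
    """Count TP, FP, and FN following criteria iii to v above."""
    tp = sum(any(point_in_box(p, g) for g in gt_boxes) for p in m_points)
    fp = len(m_points) - tp
    fn = sum(not any(point_in_box(p, g) for p in m_points) for g in gt_boxes)
    return tp, fp, fn


def evaluation_metrics(tp, fp, fn):
    """Completeness (recall), correctness (precision), quality (IoU), and F1.

    Assumes at least one true positive so that no denominator is zero.
    """
    completeness = tp / (tp + fn)
    correctness = tp / (tp + fp)
    quality = tp / (tp + fp + fn)
    f1 = 2 * completeness * correctness / (completeness + correctness)
    return {"completeness": completeness, "correctness": correctness,
            "quality": quality, "f1": f1}


# Made-up example: three GT rectangles and three detected points.
gt_boxes = [(0, 0, 10, 10), (20, 0, 30, 10), (40, 0, 50, 10)]
m_points = [(5, 5), (25, 5), (70, 70)]
tp, fp, fn = count_matches(gt_boxes, m_points)
metrics = evaluation_metrics(tp, fp, fn)
print(tp, fp, fn, metrics)
```

Running the same computation once per confidence level, after dropping detections below that level, gives one row of metrics per threshold.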

Based on the findings, this automated turning lane detection and mapping model can, on average, detect and map 57% of the turning lanes with 99% precision and an F1 score of 71% at a 75% confidence level. At a lower confidence level of 25%, it can detect ~80% of the turning lanes with 96% precision and an F1 score of 87%. The average quality of the model’s detections is 58% at the 75% confidence level and 77% at the 25% confidence level.

Higher accuracy was achieved at low confidence levels, since recall is higher and more detections are admitted. From the observations, detected turning lane markings affected by occlusions from vehicles or trees, shadows, or faded paint generally had lower confidence levels. Therefore, reducing the confidence threshold adds these detected features to the total number of detections. The detections newly admitted to the evaluation pool include relatively more true positives, few or no false positives, and few or no false negatives. With the increase in the number of true positives as confidence decreases, the accuracy of the model increases, since accuracy is defined by the relationship between the number of true positives and the total number of detections. It can also be observed that the poor distinctiveness of the detection features affected detection performance. As stated earlier, the left only and right only turning markings differ only by a lateral inversion: when the left turn marking is flipped horizontally, it becomes a right turn marking and vice versa. This makes each marking less distinctive and resulted in lower detection confidence levels. On the other hand, the center lane, which was trained using a relatively distinct shape, recorded better detection results than the left only and right only lanes.

The detected turning lanes were classified under different confidence levels. The final list is shown in

Table 2. The extracted roadway geometry data can be integrated with crash and traffic data, especially at intersections, to advise policy makers and roadway users. That is, they can be used for a variety of purposes, such as identifying markings that are old or no longer visible, comparing turning lane locations with other geometric features like crosswalks and school zones, and analyzing crashes occurring around the turning lanes at intersections.

6. Conclusions and Future Work

This study investigates the use of computer vision tools for roadway geometry extraction, focusing on Florida turning lanes as a proof of concept. This is a creative approach that uses computer vision technology to potentially replace the labor-intensive and error-prone traditional manual inventory. The developed system can extract recognizable turning lane markings from high-quality images. By removing the need for a manual inventory procedure and improving roadway geometry data quality by eliminating manual data entry mistakes, the findings will help stakeholders save money. The benefits of such roadway data extraction from imagery for transportation agencies are numerous and include identifying markings that are outdated or no longer visible, comparing the locations of turning lanes with other geometric features like crosswalks and school zones, and analyzing crashes that take place close to these locations.

However, the study also identifies notable limitations and offers recommendations for future research. Challenges arise from aerial images of roadways obstructed by tree canopies, limiting the identification of turning lane markings. Moving forward, the developed model can be integrated into roadway geometry inventory datasets, such as those used in the HSM and the Model Inventory of Roadway Elements (MIRE), to identify and rectify outdated or missing lane markings. Future research endeavors will also focus on refining and expanding the capabilities of the model to detect and extract additional roadway geometric features. Additionally, there are plans to integrate the extracted left only, right only, and center lanes with crash data, traffic data, and demographic information for a more comprehensive analysis.