The Making of Responsible Innovation and Technology: An Overview and Framework

Abstract

1. Introduction

2. Literature Background

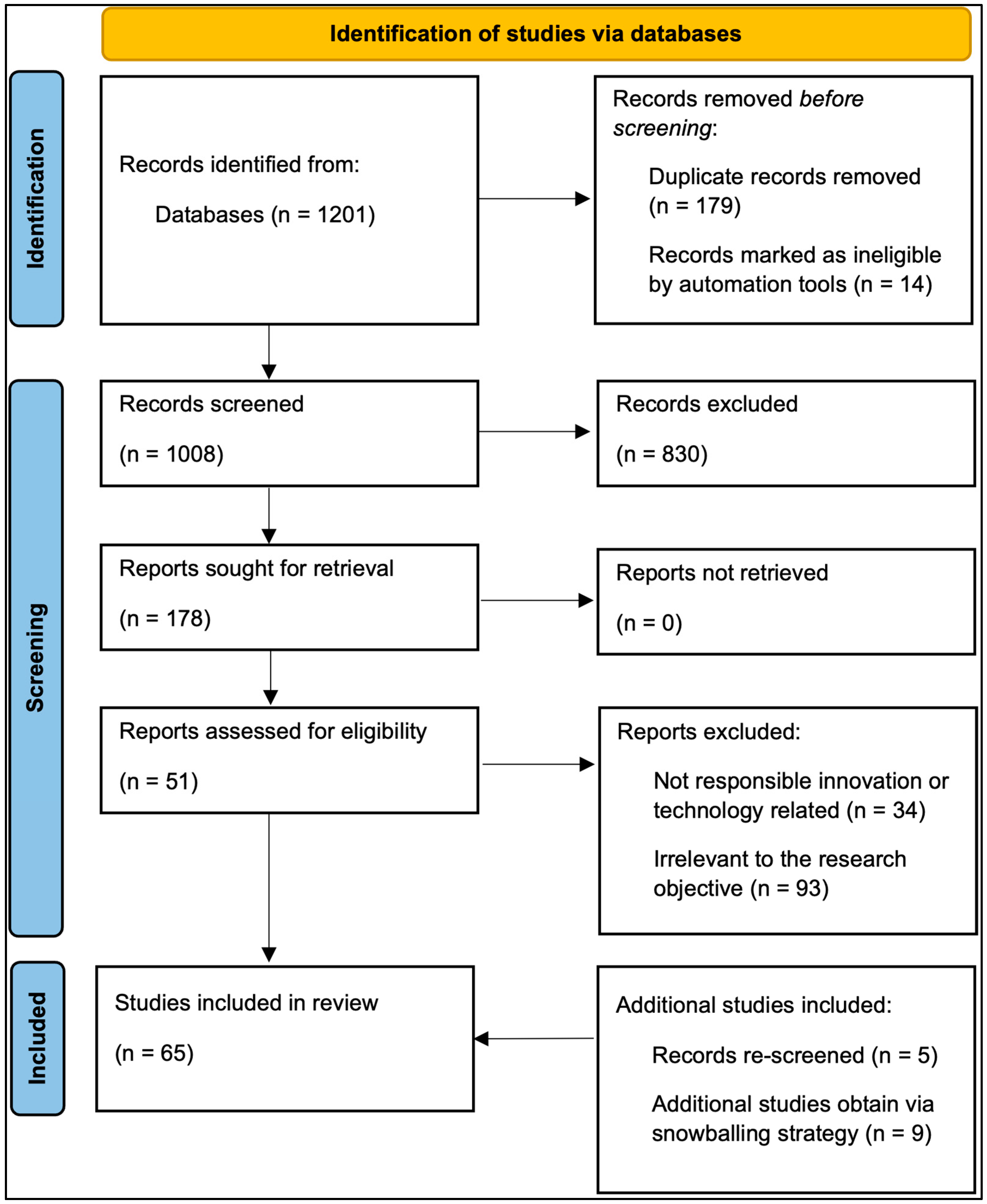

3. Methodology

4. Results

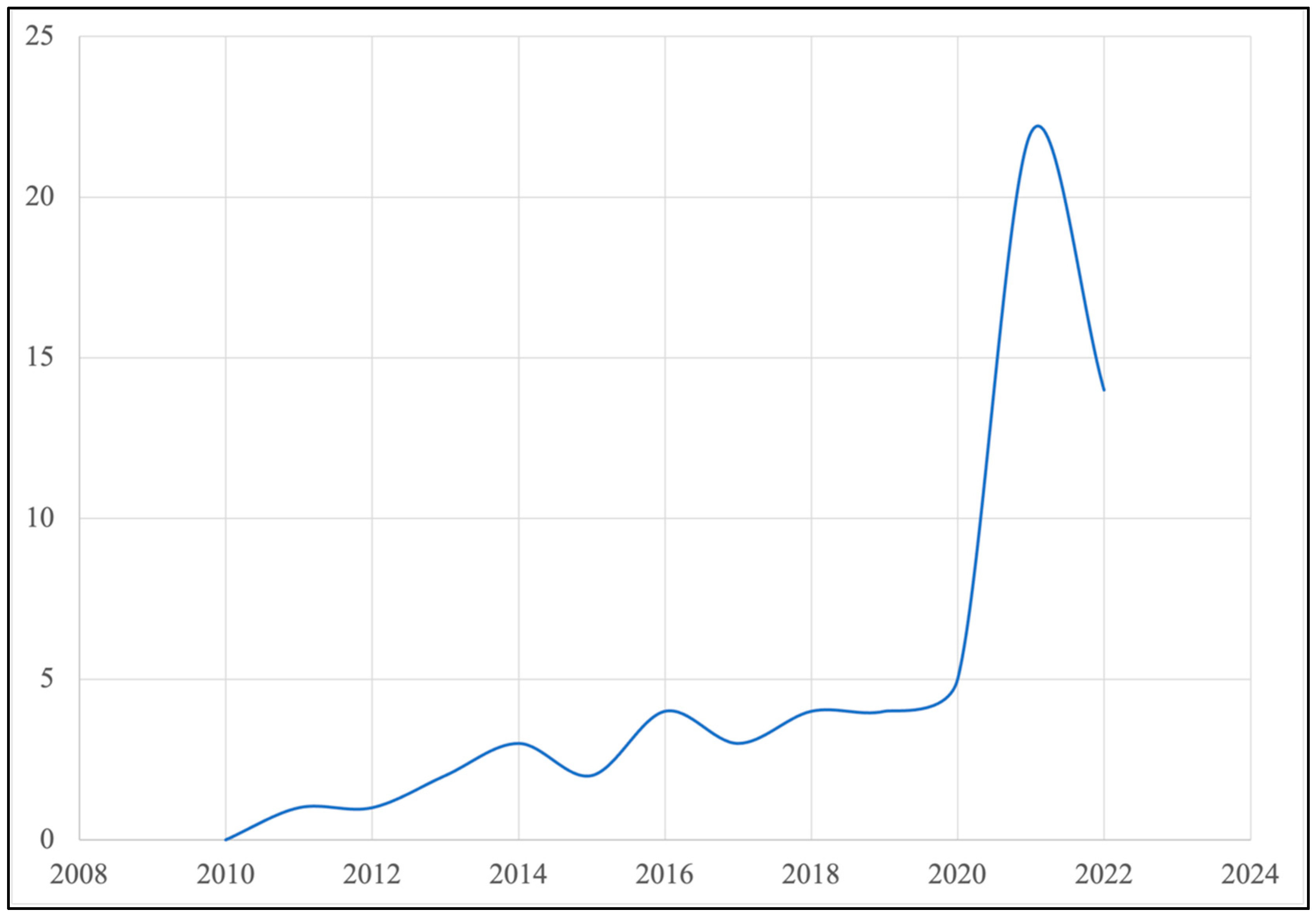

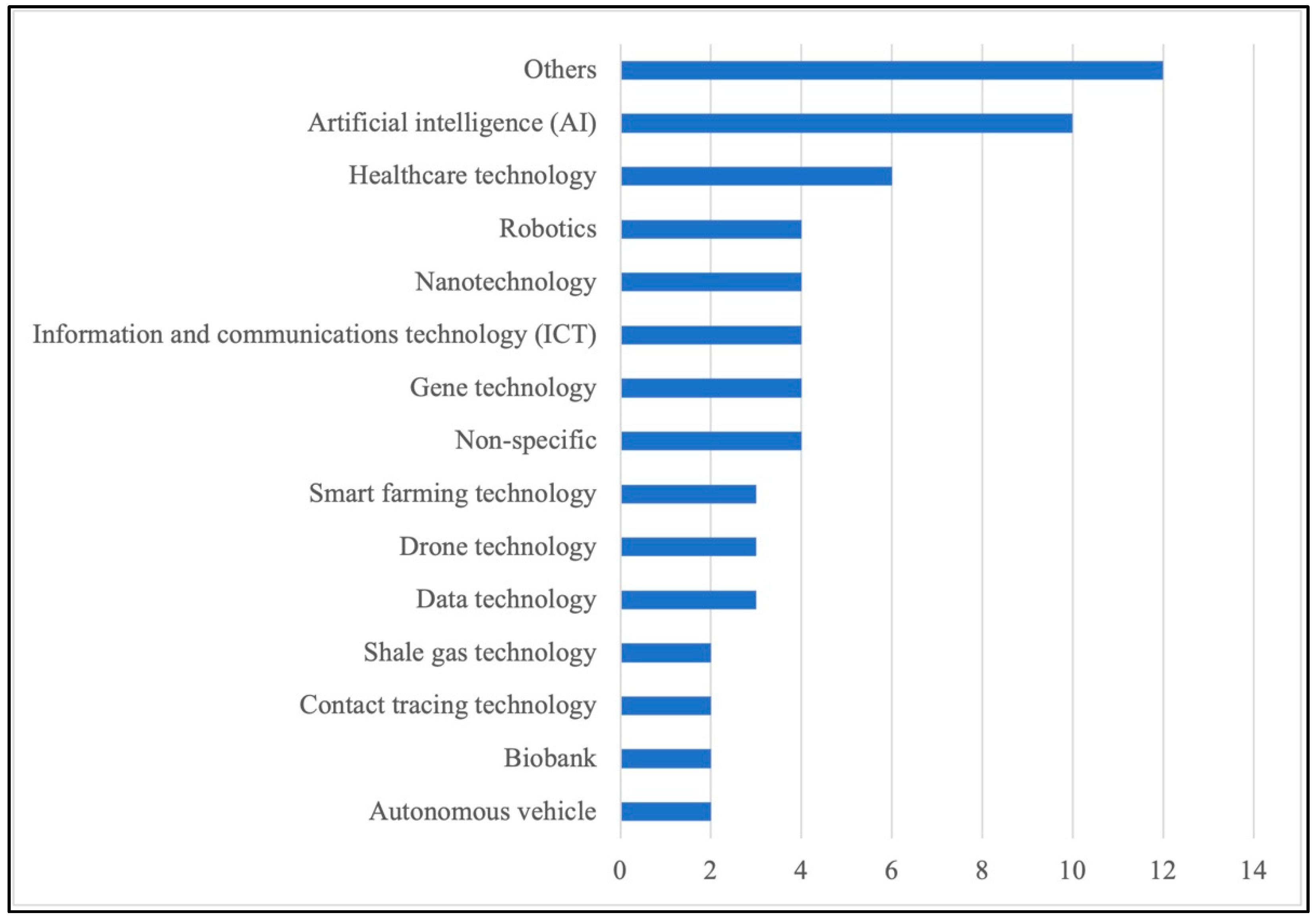

4.1. General Observations

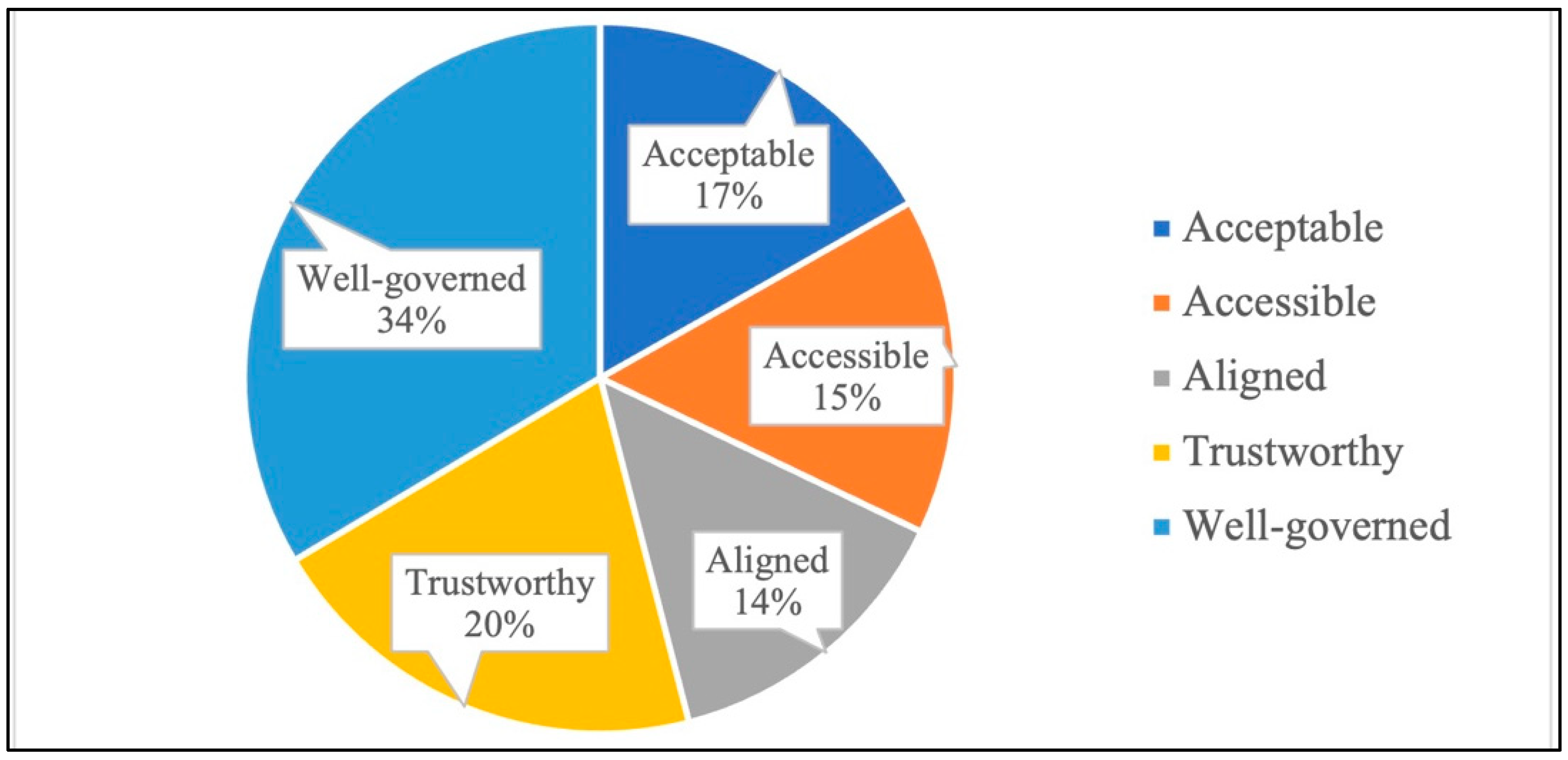

4.2. Acceptable Innovation and Technology

4.3. Accessible Innovation and Technology

4.4. Aligned Innovation and Technology

4.5. Trustworthy Innovation and Technology

4.6. Well Governed Innovation and Technology

5. Findings and Discussion

5.1. Key Findings

“Responsible innovation and technology is an approach to deliver acceptable, accessible, trustworthy, and well governed technological outcomes, while ensuring these outcomes are aligned with societal desirability and human values and can be responsibly integrated into our cities and societies.”

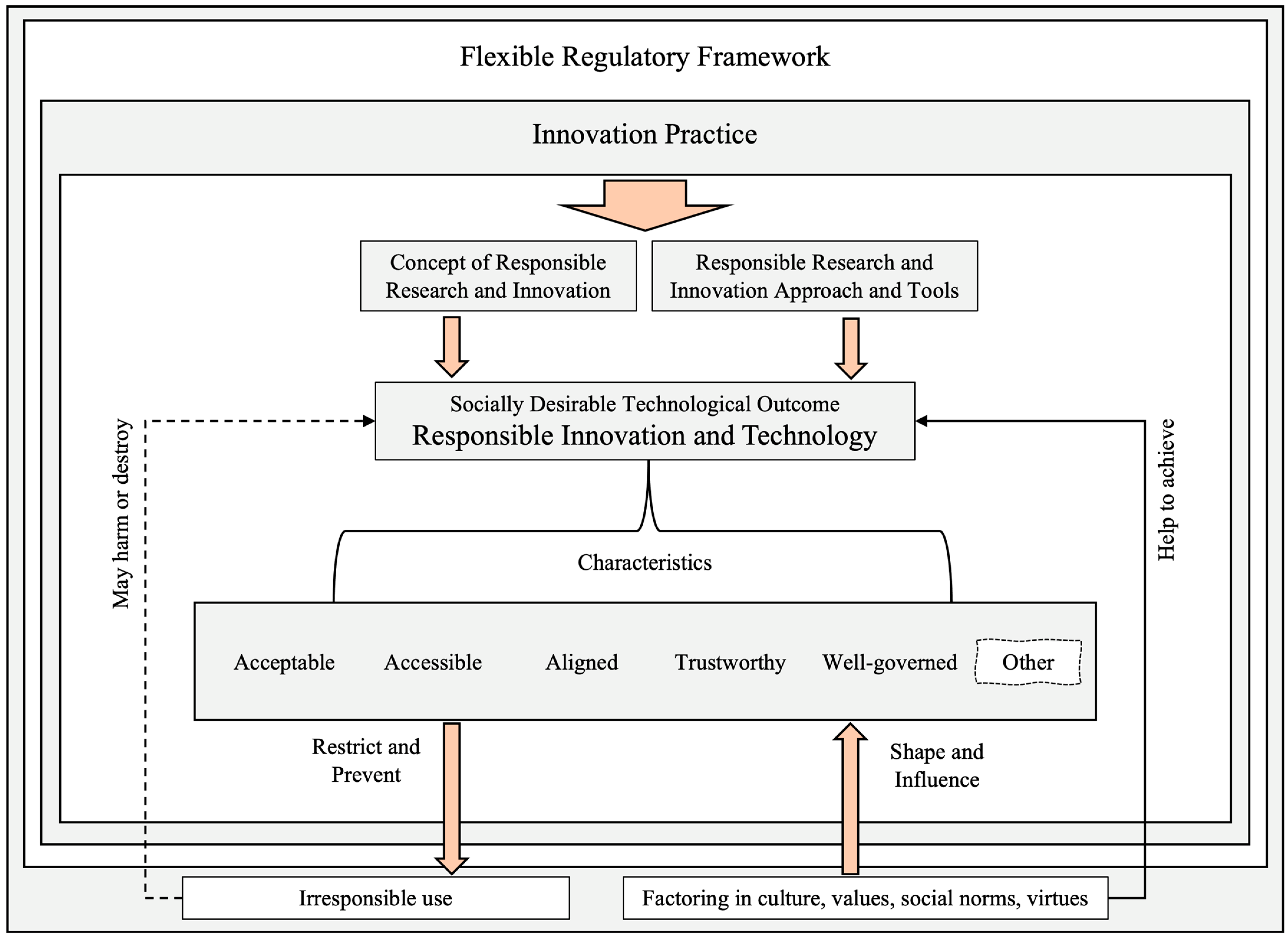

5.2. Conceptual Framework

6. Conclusions

- How can we decide if innovation and technology contain the responsible characteristics, and what are the specific evaluation criteria?

- What are the specific scales in defining responsible characteristics in innovation and technology? For example, when a technological innovation contains many characteristics of RIT but is missing some, should it still be considered ‘responsible’?

- How should RIT be compatible with specific values in complex cultural contexts, and how should innovation and technology sectors balance the possible conflict of values from different levels of cultural contexts, such as individual, collective, community, and national levels?

- How can we define the social desirability of innovation and technology that may vary with different values or specific innovation purposes, and how can we make choices when different social expectations or needs conflict?

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| No | Author | Journal | Title | Year | Aspect | Innovation | Characteristic | Keyword | Finding |

|---|---|---|---|---|---|---|---|---|---|

| 1 | Sujan et al. | Safety Science | Stakeholder perceptions of the safety and assurance of artificial intelligence in healthcare. | 2022 | Healthcare | Artificial intelligence | Acceptable | Harmless | 1. The use of innovations should not disrupt the relationship between patients and clinicians. |

| Trustworthy | Secure | 1. Innovation should provide greater efficiency and accuracy to reduce error and to make care safer. 2. Need for rigorous approaches, sound safety evidence, and independent oversight. | |||||||

| Well governed | Participatory | 1. Diversity of views can support responsible innovation. | |||||||

| Accountable | 1. Provide auditable and traceable history of every action that the AI did to facilitate the incident investigation process. | ||||||||

| 2 | Li et al. | Computers in Human Behavior | What drives the ethical acceptance of deep synthesis applications? A fuzzy set qualitative comparative analysis. | 2022 | Digital media | Artificial intelligence | Aligned | Meaningful | 1. Should promote the progress of society and human civilization, create a more intelligent way of work and life, improve people’s well-being, and benefit all humanity, including future generations. |

| Acceptable | Ethical | 1. Should follow the principle of people-centered development and use, based on the principle of respecting human autonomy. | |||||||

| Harmless | 1. Well-being and the common good, justice, and a lack of harm are the core conditions of high ethical acceptance. | ||||||||

| Equitable | 1. Should ensure fairness and justice, avoid prejudice and discrimination against specific groups or individuals, and avoid disadvantaging vulnerable groups. | ||||||||

| Trustworthy | Transparent | 1. Accountability and transparency are the most important guiding principles in AI development. | |||||||

| Well governed | Accountable | 1. AI should be auditable and accountable. The people responsible for different stages of the life cycle of an AI system should be identifiable and responsible for the results of the AI system. | |||||||

| 3 | Eastwood et al. | Frontiers in Robotics and AI | Responsible robotics design–A systems approach to developing design guides for robotics in pasture-grazed dairy farming. | 2022 | Agriculture | Robotics | Aligned | Meaningful | 1. Robotics should provide positive impacts on social well-being. |

| Sustainable | 1. To ensure broad sustainability outcomes with milking robotics. A key consideration is the implications for animal well-being using robots. | ||||||||

| Accessible | Adaptable | 1. Should be able to integrate with existing technologies and leverage new opportunities for productivity gains. 2. Must be robust to deal with complex operating environments. 3. Must be easy to train, use, and maintain to increase job flexibility and ability for a wider range of people. | |||||||

| Affordable | 1. High-throughput robotics may be using high-cost technology to perform low-cost jobs. | ||||||||

| Acceptable | Harmless | 1. Robotics should reduce injuries and physical demands on people and avoid negative psychological impacts, such as changes to the self-identity of staff if robotics replaces their roles. | |||||||

| Well governed | Regulated | 1. Potential regulatory barriers also need to be assessed in robotics development. | |||||||

| 4 | Ienca et al. | Neuroethics | Towards a Governance Framework for Brain Data. | 2022 | Medical science | Data technology | Trustworthy | Secure | 1. Brain data should be considered a special category of personal data that warrants heightened protection during collection and processing. 2. Usage of brain data should consider and prevent inherent risks of algorithmic processing including bias, privacy violation, and cybersecurity vulnerabilities. |

| Transparent | 1. The process of collecting, managing, and/or processing identifiable brain data should be transparently disclosed. | ||||||||

| Well governed | Accountable | 1. Legal entities responsible for data breaches and other regulatory failures should be identifiable and held accountable. | |||||||

| 5 | Bao et al. | Computers in Human Behavior | Whose AI? How different publics think about AI and its social impacts. | 2022 | Technology | Artificial intelligence | Well governed | Participatory | 1. AI development and regulation must include efforts to engage with the public in order to account for the varied perspectives that different social groups hold concerning the risks and benefits of AI. |

| 6 | Townsend and Noble | Sociologia Ruralis | Variable rate precision farming and advisory services in Scotland: Supporting responsible digital innovation? | 2022 | Agriculture | Smart farming technology | Well governed | Participatory | 1. Design and implementation of innovation should allow for a more bottom-up approach that considers the voices of a broader stakeholder group. |

| 7 | Stahl | International Journal of Information Management | Responsible innovation ecosystems: Ethical implications of the application of the ecosystem concept to artificial intelligence. | 2022 | Technology | Artificial intelligence | Aligned | Meaningful | 1. Innovations should actively seek to align their processes and expected outcomes with societal needs and/or preferences. |

| 8 | Middelveld et al. | Public Understanding of Science | Imagined futures for livestock gene editing: Public engagement in the Netherlands. | 2022 | Agriculture | Gene technology | Aligned | Deliberate | 1. We need to exercise care (and moderation) in decision-making practices of innovation to avoid large and irreversible consequences for life on Earth. |

| Sustainable | |||||||||

| 9 | Merck et al. | Bulletin of Science, Technology & Society | What Role Does Regulation Play in Responsible Innovation of Nanotechnology in Food and Agriculture? Insights and Framings from US Stakeholders. | 2022 | Agriculture | Nanotechnology | Trustworthy | Transparent | 1. Should provide a range of processes to ensure transparency (via processes, safety studies, and assessments), requiring disclosure, and changing the use of confidential business information. |

| Secure | 1. Should conduct more safety studies to strengthen safety and ensure independent third-party testing. | ||||||||

| Well governed | Regulated | 1. Require basic and appropriate regulations to ensure safety and efficacy but ensure that they do not appear as a barrier to innovation or a guardrail against the risks of novel products. | |||||||

| Participatory | 1. Should interact with stakeholders early in innovation processes, involving the public and engaging stakeholders, to strengthen community and stakeholder engagement. | ||||||||

| 10 | Russell et al. | Journal of Responsible Innovation | Opening up, closing down, or leaving ajar? How applications are used in engaging with publics about gene drive. | 2022 | Biology | Gene technology | Well governed | Participatory | 1. Should devote more attention to finding creative ways for public engagement, which can create fresh perspectives, engagements, and collective actions. |

| 11 | Foley et al. | Journal of Responsible Innovation | Innovation and equality: an approach to constructing a community governed network commons. | 2022 | Technology | Information and communication technologies | Well governed | Participatory | 1. Apply more interdisciplinary approaches to bring the public’s values into innovation, aiming to align technological and societal research for equitable outcomes. |

| 12 | Donnelly et al. | AI & Society | Born digital or fossilized digitally? How born digital data systems continue the legacy of social violence towards LGBTQI+ communities: a case study of experiences in the Republic of Ireland. | 2022 | Technology | Data technology | Acceptable | Equitable | 1. Developers should eliminate systematic stereotyping during the innovation process and take a more inclusive approach within the original software design to reduce marginalization towards minority groups in society. |

| 13 | Samuel et al. | Critical Public Health | COVID-19 contact tracing apps: UK public perceptions. | 2022 | Healthcare | Contact tracing technology | Acceptable | Ethical | 1. New health innovation practices should be compatible with respect to personal privacy and autonomy. |

| Trustworthy | Explainable | 1. Innovation practices should be within contexts where public trust in government and institutions is established and robust. | |||||||

| Well governed | Participatory | 1. Effective communication and engagement are helpful in maintaining public support and trust. | |||||||

| 14 | MacDonald et al. | Journal of the Royal Society of New Zealand | Conservation pest control with new technologies: public perceptions. | 2022 | Biology | Gene technology | Trustworthy | Transparent | 1. Innovation design and processes need to involve and communicate/be transparent with the public. |

| Well governed | Participatory | ||||||||

| 15 | Bunnik and Bolt | Epigenetics Insights | Exploring the Ethics of Implementation of Epigenomics Technologies in Cancer Screening: A Focus Group Study. | 2021 | Medical science | Epigenomics technology | Acceptable | Ethical | 1. From an ethical point of view, innovation and its implementation should be respected for autonomy and ethically acceptable. |

| Equitable | 1. Should grant all categories of road users the same level of protection, which aims to redress inequalities in vulnerability among road users. 2. Should adopt non-discriminatory and more inclusive designs to reduce the risks of perpetuated and increased inequalities between individuals and groups in society. 3. Should avoid blaming the victim, stigmatization, and discrimination. | ||||||||

| Trustworthy | Transparent | 1. Informed consent was deemed important because people need to understand ‘the whole chain’ of events or decisions they may be confronted with based on the possible outcomes of screening. | |||||||

| Explainable | 1. Professionals should be able to explain what epigenomic screening entails and what the results might mean. | ||||||||

| Secure | 1. The security of data and samples and the protection of the privacy of screening participants were crucial conditions for the responsible implementation of epigenomics technologies in public health settings. | ||||||||

| 16 | Santoni de Sio | Ethics and Information Technology | The European Commission report on ethics of connected and automated vehicles and the future of ethics of transportation. | 2021 | Urbanology | Autonomous vehicle | Acceptable | Harmless | 1. CAVs should prevent unsafe use by inherently safe design and meaningful human control approaches. 2. Products do not harm human health and/or the environment. |

| Trustworthy | Secure | 1. The first and most obvious is the safeguard of informational privacy, in line with some basic principles of GDPR such as data minimization, storage limitation, and strict necessity requirements, as well as the promotion of informed consent practices and user control over data. | |||||||

| Explainable | 1. Create adequate social and legal spaces where questions about the design and use choices of CAVs can be posed and answered, making the relevant people aware, willing, and able to provide the required explanations to the relevant audience and the relevant audiences able and willing to require and understand the explanations. | ||||||||

| Well governed | Participatory | 1. Create the institutional, social, and educational environment to ensure that all key stakeholders can discuss, identify, decide, and accept their respective obligations. | |||||||

| Accountable | 1. Should address the following often-posed question: who is to blame (and held legally culpable) for accidents involving CAVs? | ||||||||

| 17 | Kokotovich et al. | NanoEthics | Responsible innovation definitions, practices, and motivations from nanotechnology researchers in food and agriculture. | 2021 | Agriculture | Nanotechnology | Aligned | Meaningful | 1. Create effective and efficient products to improve human well-being and solve societal problems. |

| Deliberate | 1. Should carefully and comprehensively consider the potential social, health, and environmental impacts associated with the innovation and ensure that actual and potential near-term and longer-term negative impacts are mitigated to the extent feasible. | ||||||||

| Acceptable | Harmless | 1. Develop products that are publicly acceptable because of their potential to impact the uptake of technology. | |||||||

| Trustworthy | Secure | 1. Use nanotechnologies and/or engineered nanomaterials to create agrifood products that were more safe than conventional counterparts. | |||||||

| Well governed | Regulated | 1. Adhere to regulations to ensure agrifood products align with the mission of academic discipline and research integrity and ethics. | |||||||

| Participatory | 1. Engage stakeholders and collaborate interdisciplinarily to determine what specific products to pursue. | ||||||||

| 18 | Grieger et al. | NanoImpact | Responsible innovation of nano-agrifoods: Insights and views from US stakeholders. | 2021 | Agriculture | Nanotechnology | Aligned | Meaningful | 1. Create effective and efficient products to address a significant problem or societal need and improve the world. |

| Sustainable | 1. Should treat resources with respect and in the most responsible way and use the resource in a non-wasteful manner. | ||||||||

| Acceptable | Harmless | 1. Do nothing that could cause irreversible harm to public health or the environment. | |||||||

| Trustworthy | Transparent | 1. Improve transparency and communication. | |||||||

| Well governed | Participatory | 1. Consider the impacts of an innovative development on the different stakeholders and engage them in the early stage of innovation process. | |||||||

| Regulated | 1. Adhere to regulations. | ||||||||

| 19 | Laursen and Meijboom | Journal of Agricultural and Environmental Ethics | Between Food and Respect for Nature: On the Moral Ambiguity of Norwegian Stakeholder Opinions on Fish and Their Welfare in Technological Innovations in Fisheries. | 2021 | Agriculture | Fishery technology | Well governed | Regulated | 1. Governance regulation becomes a key outcome of the innovation process to make innovation acceptable in India. |

| 20 | Singh et al. | Technological Forecasting and Social Change | Analysing acceptability of E-rickshaw as a public transport innovation in Delhi: A responsible innovation perspective. | 2021 | Urbanology | E-rickshaw | Aligned | Sustainable | 1. Universal and culture-specific values should be embedded in innovation, which can make the product socially, economically, and environmentally sustainable. |

| Accessible | Inclusive | ||||||||

| Well governed | Participatory | 1. Engage stakeholders via a representative and inclusive process to establish common ground or consensus, which can, along with other positives, make innovation acceptable and workable. 2. Some implications of technological innovation may be controversial, and thus, public research and development must reconcile the possible repercussions of participating in their development. | |||||||

| 21 | Stitzlein et al. | Sustainability | Reputational risk associated with big data research and development: an interdisciplinary perspective. | 2021 | Agriculture | Data technology | Acceptable | Equitable | 1. Encourage fairer and more equitable technology use. |

| Ethical | 1. Incorporate moral and societal values into the design processes for making emerging technologies more ethical and more democratic. | ||||||||

| Trustworthy | Transparent | 1. Handle greater transparency about data collection, reuse, consent, and custodianship. | |||||||

| 22 | Christodoulou and Iordanou | Frontiers in Political Science | Democracy under attack: challenges of addressing ethical issues of AI and big data for more democratic digital media and societies. | 2021 | Digital media | Artificial intelligence | Well governed | Participatory | 1. Should involve even more stakeholders (public) to identify common ground across countries and regions, as well as cultural-specific challenges that need to be addressed. |

| Data technology | Regulated | 1. Legislation should help to address the challenge of defining ethics and reaching a consensus that improves the wider implications to society. | |||||||

| 23 | Middelveld and Macnaghten | Elem Sci Anth | Gene editing of livestock: Sociotechnical imaginaries of scientists and breeding companies in the Netherlands. | 2021 | Agriculture | Gene technology | Aligned | Deliberate | 1. Require stakeholders—including agricultural scientists—to exercise humility, avoid easy judgment, and learn to hesitate. |

| Meaningful | 1. The purpose of livestock gene editing applications is represented as that of producing “better” animals, with “improved” animal welfare and “increased” disease resistance, that contribute a vital role to play in “solving” the global food challenge. | ||||||||

| Acceptable | Ethical | 1. Highly valued animal health and welfare and the long historical arc of concerns about animal food safety in unison create a high ethical sensitivity to animals in Europe. Innovation should be particularly careful to avoid or fuel future controversy. | |||||||

| 24 | Mladenović and Haavisto | Case Studies on Transport Policy | Interpretative flexibility and conflicts in the emergence of Mobility as a Service: Finnish public sector actor perspectives. | 2021 | Urbanology | Mobility-as-a-Service | Aligned | Meaningful | 1. Mobility as a Service (MaaS) should be designed to truly meet people’s needs and to put the user at the center of transport service provision, with all the associated benefits. |

| Sustainable | 1. MaaS would reduce the percentage of car use, by freeing people from car dependency to increase the share of sustainable modes. | ||||||||

| Accessible | Inclusive | 1. Embrace the inherent conflict in the value-laden mobility domain, which paves the way for a culture of technological innovation. | |||||||

| Well governed | Participatory | 1. The public and private sectors need to find a way to cooperate and begin dialogue, and information sharing are important in a fast-moving field. | |||||||

| 25 | Rochel and Evéquoz | AI & Society | Getting into the engine room: a blueprint to investigate the shadowy steps of AI ethics. | 2021 | Technology | Artificial intelligence | Trustworthy | Explainable | 1.Innovator should be able to make explicit their reasons or standard for choosing option A over other existing options and be able to justify these choices. |

| 26 | Pickering | Future Internet | Trust, but Verify: Informed Consent, AI Technologies, and Public Health Emergencies. | 2021 | Healthcare | Artificial intelligence | Acceptable | Harmless | 1. Reduce or eliminate the harmful effects of technology use and achieve an overall state of well-being. |

| Trustworthy | Explainable | 1. Should explain the rationale to users, characterize the strengths and weaknesses, and convey an understanding of how they will behave in the future. | |||||||

| Well governed | Accountable | 1. Understand the actors who will often be regulated with specific obligations. These actors would all influence the trust context. | |||||||

| 27 | Stankov and Gretzel | Information Technology & Tourism | Digital well-being in the tourism domain: mapping new roles and responsibilities. | 2021 | Tourism | Information and communication technologies | Trustworthy | Explainable | 1. Generating high-quality interpretable, intuitive, human-understandable explanations of AI decisions is essential for operators and users to understand, trust, and effectively manage local government AI systems. |

| 28 | Yigitcanlar et al. | Journal of Open Innovation: Technology, Market, and Complexity | Responsible urban innovation with local government artificial intelligence (AI): A conceptual framework and research agenda. | 2021 | Urbanology | Artificial intelligence | Acceptable | Ethical | 1. The ethical considerations made by the designers and adopters of AI systems are critical when it comes to avoiding the unethical consequences of AI systems. |

| Accessible | Affordable | 1. AI systems should be accessible and affordable. Alternatively, the resources can be leveraged in new ways, or other solutions can be found that do not jeopardize the delivery of high-value outputs. | |||||||

| Trustworthy | Transparent | 1. Reduce the created risks or adverse consequences as much as possible and build users’ trust and confidence via increasing transparency and security of the system. | |||||||

| Secure | |||||||||

| 29 | Iakovleva et al. | Sustainability | Changing Role of Users—Innovating Responsibly in Digital Health. | 2021 | Healthcare | Healthcare technology | Well governed | Participatory | 1. Should carefully consider the ability and willingness of users to get involved and contribute their insights and absorb this type of feedback to shape and modify innovation in response to their insights. |

| 30 | Buhmann and Fieseler | Technology in Society | Towards a deliberative framework for responsible innovation in artificial intelligence. | 2021 | Technology | Artificial intelligence | Accessible | Inclusive | 1. The inclusion of all arguments constitutes the main precondition of the rationality of the process of deliberation. |

| Trustworthy | Transparent | 1. Stakeholders need to have as much information as possible about the issues at stake, the various suggestions for their solution, and the ramifications of these proposed solutions. | |||||||

| Explainable | 1. Needs to be clearly responsive to stakeholders’ suggestions and concerns. | ||||||||

| Well governed | Participatory | 1. Stakeholders need institutionalized access to deliberative settings to ensure they have a chance to voice their concerns, opinions, and arguments. | |||||||

| 31 | Lehoux et al. | Health Services Management Research | Responsible innovation in health and health system sustainability: Insights from health innovators’ views and practices. | 2021 | Healthcare | Healthcare technology | Accessible | Affordable | 1. Address specific system-level benefits but often struggle with the positioning of their solution within the health system. |

| Acceptable | Ethical | 1. Increase general practitioners’ capacity or patients and informal caregivers’ autonomy. | |||||||

| Well governed | Participatory | 1. Engage stakeholders at an early ideation stage using context-specific methods combining both formal and informal strategies. | |||||||

| 32 | Akintoye et al. | Journal of Information, Communication and Ethics in Society | Understanding the perceptions of UK COVID-19 contact tracing app in the BAME community in Leicester. | 2021 | Healthcare | Contact tracing technology | Acceptable | Ethical | 1. Reassure users that this technology will not target them and will not be misused in any way. The collected data will securely be processed and will not be used in any way to discriminate against them or unjustifiably target them. |

| Trustworthy | Secure | ||||||||

| Transparent | 1. Commit to full transparency in the implementation of the technology to provide clear information on how and what the data will be used for in the future. | ||||||||

| Well governed | Regulated | 1. Clear regulation or policy to prevent misuse or dual use of concern. | |||||||

| 33 | Ten Holter et al. | Technology Analysis & Strategic Management | Reading the road: challenges and opportunities on the path to responsible innovation in quantum computing. | 2021 | Technology | Quantum computing | Accessible | Inclusive | 1. Support interdisciplinary dialogue between fields to empower researchers, which is essential to add richness of understanding to possible impacts. |

| Acceptable | Equitable | 1. Ensure wide, democratic access to technologies. | |||||||

| Well governed | Participatory | 1. Generate more frequent, more detailed conversations with society to increase public understanding of quantum technologies. 2. Widen the pool of stakeholders consulted to incorporate the views of wider groups of stakeholders. | |||||||

| 34 | Macdonald et al. | Journal of Responsible Innovation | Indigenous-led responsible innovation: lessons from co-developed protocols to guide the use of drones to monitor a biocultural landscape in Kakadu National Park, Australia. | 2021 | Urbanology | Drone technology | Accessible | Inclusive | 1. Incorporate diversified ethical considerations and social impacts into the technological design, especially in some cross-cultural settings, to ensure products match local users’ needs and preferences and recognize local knowledge and governance. |

| Well governed | Regulated | 1. Establish guidelines for technological systems to remain human-centric, serving humanity’s values and ethical principles, including ensuring humans remain in the loop about drone development and use. | |||||||

| 35 | Chamuah and Singh | Aircraft Engineering and Aerospace Technology | Responsibly regulating the civilian unmanned aerial vehicle deployment in India and Japan. | 2021 | Urbanology | Drone technology | Well governed | Participatory | 1. Participation of both internal and external stakeholders in regulations would make it more inclusive, participatory, reflexive, and responsive, heralding responsible governance that is suitable for robust policymaking. |

| Regulated | |||||||||

| Accountable | 1. Have to keep a strategy and plan and identify essential values to ensure the accountability of new and emerging technology. | ||||||||

| 36 | Rose et al. | Land use policy | Agriculture 4.0: Making it work for people, production, and the planet. | 2021 | Agriculture | Smart farming technology | Accessible | Inclusive | 1. Having open conversations about the future of agriculture should include the crucial views of marginalized individuals who might possess differing opinions. |

| Well governed | Participatory | ||||||||

| Regulated | 1. Require updates to legislation, guidelines, and possible support for various technologies in the form of skills training, improved infrastructure, or perhaps funding. | ||||||||

| 37 | Hussain et al. | IEEE Transactions on Software Engineering | Human values in software engineering: Contrasting case studies of practice. | 2020 | Technology | Software engineering | Aligned | Meaningful | 1. Technology should be socially desirable and aligned with human values of freedom, justice, privacy, and so on. |

| 38 | Lockwood | IET Smart Cities | Bristol’s smart city agenda: vision, strategy, challenges and implementation. | 2020 | Urbanology | Smart city technology | Accessible | Inclusive | 1. Reduce the impacts of the digital divide on the most deprived areas or more vulnerable groups to spread the benefits of digitization spreading across the city. |

| Trustworthy | Secure | 1. It is vital that smart technologies are developed with sufficient safeguards to minimize the risk of harm these technologies may cause, be that data protection and privacy breaches or biased, discriminatory outcomes. | |||||||

| Well governed | Regulated | 1. Regulators in developing relevant policy frameworks, regulations, and standards should appropriately balance what can be done (what is technologically viable), what may be done (from a legal perspective), and what should be done (what is ethical and acceptable). | |||||||

| 39 | Mecacci and Santoni de Sio | Ethics and Information Technology | Meaningful human control as reason-responsiveness: the case of dual-mode vehicles. | 2020 | Urbanology | Autonomous vehicle | Trustworthy | Secure | 1. Promote a strong and clear connection between human agents and intelligent systems, thereby resulting in better safety and clearer accountability. |

| Well governed | Accountable | ||||||||

| 40 | Brandao et al. | Artificial Intelligence | Fair navigation planning: A resource for characterizing and designing fairness in mobile robots. | 2020 | Urbanology | Robotics | Acceptable | Equitable | 1. Include realistic fairness models within the planning objectives of innovation. |

| Trustworthy | Transparent | 1. Provide transparency to let users understand and control the impact of the technology in terms of the values of interest. | |||||||

| Secure | 1. Require data collection to go together with privacy-assuring methods. | ||||||||

| 41 | Chamuah and Singh | SN Applied Sciences | Securing sustainability in Indian agriculture through civilian UAV: a responsible innovation perspective. | 2020 | Agriculture | Drone technology | Aligned | Meaningful | 1. Have the ability or power to address existing problems or societal needs. |

| Accessible | Affordable | 1. The economic viability is one of the essential aspects of sustainability for civilian UAVs in India. | |||||||

| Trustworthy | Secure | 1. Should maintain the safety, security and privacy rights of the people while deploying the drone. | |||||||

| Transparent | 1. The transparency and traceability of data provided by civil UAVs further make them accountable and entwine the values of responsibility in the overall civilian UAV innovations. | ||||||||

| Well governed | Accountable | ||||||||

| 42 | Rivard et al. | BMJ Innovations | Double burden or single duty to care? Health innovators’ perspectives on environmental considerations in health innovation design. | 2019 | Healthcare | Healthcare technology | Aligned | Sustainable | 1. Taking the environment into consideration is part of responsible practice in health innovation to foster environmentally friendly health innovations, which realize supporting patient care while reducing environmental impacts. |

| 43 | Hemphill | Journal of Responsible Innovation | ‘Techlash’, responsible innovation, and the self-regulatory organization. | 2019 | Digital media | Information and communication technologies | Well governed | Regulated | 1. Implement a regulatory regime to address policy concerns of privacy, public safety, and national security. 2. Except for the traditional approach (government regulation), self-regulation and public regulation can be well-reasoned alternatives. |

| 44 | Stemerding et al. | Futures | Future making and responsible governance of innovation in synthetic biology. | 2019 | Biology | Synthetic technology | Well governed | Regulated | 1. Need to foster and facilitate innovation via more generic institutional, regulatory, and pricing measures. |

| | | | | | | | | Participatory | 1. Stakeholder engagement enhanced reflexivity about the different needs and interests that should be considered in shaping the innovation agenda. |

| 45 | Samuel and Prainsack | New Genetics and Society | Forensic DNA phenotyping in Europe: Views “on the ground” from those who have a professional stake in the technology. | 2019 | Medical science | DNA phenotyping | Accessible | Adaptable | 1. Had to meet two criteria: be valid and reliable; be ethically unproblematic. 2. It is important to use only tests that are deemed ethically “safe”. |

| | | | | | | | Acceptable | Ethical | |

| | | | | | | | Well governed | Participatory | 1. Must engage both professional and public stakeholder views regarding future policy decisions. |

| 46 | Rose and Chilvers | Frontiers in Sustainable Food Systems | Agriculture 4.0: Broadening responsible innovation in an era of smart farming. | 2018 | Agriculture | Smart farming technology | Accessible | Inclusive | 1. Broadening notions of ‘inclusion’ that open up to wider “ecologies of participation”, which change public opinion to accept technologies rather than making technological trajectories more responsive to the needs of society. |

| 47 | Koirala et al. | Applied Energy | Community energy storage: A responsible innovation towards a sustainable energy system? | 2018 | Energy | Community energy storage | Aligned | Meaningful | 1. Provide higher flexibility as well as accommodate the needs and expectations of citizens and local communities. |

| | | | | | | | | Sustainable | 1. Guarantee socially and technologically acceptable transformation towards an inclusive and sustainable energy system. |

| | | | | | | | Accessible | Inclusive | 1. Allow stakeholders to express their values and design operational criteria to respect and include them. |

| | | | | | | | | Affordable | 1. Decentralized markets for flexibility, ease of market participation, and community empowerment are expected to create better conditions for its implementation. |

| | | | | | | | Well governed | Participatory | 1. Enhance participation level to allow local communities to provide important feedback to the technology providers, which leads to higher acceptance and further technological innovation. |

| | | | | | | | | Regulated | 1. Flexible legislation program for the experimentation and development of socio-technical models specific to the local, social, and physical conditions. |

| 48 | Winfield and Jirotka | Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences | Ethical governance is essential to building trust in robotics and artificial intelligence systems. | 2018 | Technology | Artificial intelligence | Acceptable | Harmless | 1. Do no harm, including being free of bias and deception. |

| | | | | | | | | Ethical | 1. Respect human rights and freedoms, including dignity and privacy, while promoting well-being. |

| | | | | | | | Trustworthy | Explainable | 1. Should be explainable or even capable of explaining their own actions (to non-experts) and being transparent (to experts). |

| | | | | | | | | Transparent | 1. Be transparent and dependable while ensuring that the locus of responsibility and accountability remains with their human designers or operators. |

| | | | | | | | Well governed | Accountable | |

| 49 | Pacifico Silva et al. | Health Research Policy and Systems | Introducing responsible innovation in health: a policy-oriented framework. | 2018 | Healthcare | Healthcare technology | Aligned | Sustainable | 1. Need to reduce, as much as possible, the negative environmental impacts of health innovations throughout their entire lifecycle. |

| | | | | | | | Accessible | Affordable | 1. Deliver both high-performing products as well as affordable ones to support equity and sustainability. |

| | | | | | | | Acceptable | Equitable | 1. Increase our ability to attend to collective needs whilst tackling health inequalities. |

| 50 | Sonck et al. | Life Sciences, Society and Policy | Creative tensions: mutual responsiveness adapted to private sector research and development. | 2017 | Business | Non-specific | Well governed | Participatory | 1. Support mutually responsive relations in the innovation process, which help innovators and stakeholders reach some form of joint understanding about how the innovation is shaped and eventually applied. |

| 51 | Boden et al. | Connection Science | Principles of robotics: regulating robots in the real world. | 2017 | Technology | Robotics | Acceptable | Harmless | 1. Robots should not be designed solely or primarily to kill or harm humans, except for national security interests. |

| | | | | | | | Trustworthy | Secure | 1. Robots should be designed using processes that assure their safety and security and make sure that the safety and security of robots in society are assured so that people can trust and have confidence in them. |

| | | | | | | | | Transparent | 1. Robots should not be designed in a deceptive way to exploit vulnerable users; instead, their machine nature should be transparent. |

| | | | | | | | Well governed | Regulated | 1. Robots should be designed and operated as far as is practicable to comply with existing laws, fundamental rights, and freedoms, including privacy. |

| | | | | | | | | Accountable | 1. The person with legal responsibility for a robot should be attributed. |

| 52 | Leenes et al. | Law, Innovation and Technology | Regulatory challenges of robotics: some guidelines for addressing legal and ethical issues. | 2017 | Technology | Robotics | Well governed | Regulated | 1. Develop a method, a framework, or guidelines that can be used to make innovation in a certain context more responsible. 2. Develop self-learning procedures that can be used to make innovation in a certain context more responsible. |

| 53 | Demers-Payette et al. | Journal of Responsible Innovation | Responsible research and innovation: a productive model for the future of medical innovation. | 2016 | Medical science | Healthcare technology | Aligned | Deliberate | 1. Carefully anticipate the consequences and opportunities associated with medical innovations to generate a clear understanding of the uses of medical innovation and of its context. |

| | | | | | | | | Meaningful | 1. Ensure potential innovations align with clinical and healthcare system challenges and needs to achieve a better alignment between health and innovation value systems and social practices. |

| | | | | | | | Well governed | Participatory | 1. Use formal deliberative mechanisms to give stakeholders a sustained way to engage in the innovation process. |

| | | | | | | | | Regulated | 1. Flexible steering of innovation trajectories within a highly regulated environment without compromising the safety of new products. |

| 54 | Foley et al. | Journal of Responsible Innovation | Towards an alignment of activities, aspirations and stakeholders for responsible innovation. | 2016 | Technology | Non-specific | Aligned | Meaningful | 1. Support the creation of technologies that contribute to the stewardship of planetary systems identified. |

| | | | | | | | | Sustainable | 1. Does not interfere with access to basic resources critical to a healthy human life. |

| | | | | | | | Acceptable | Ethical | 1. Affords people freedom of expression and freedom from oppression and does not reinforce social orders that subjugate human beings. |

| | | | | | | | | Equitable | 1. Ensure that select groups of people are not inequitably burdened by negative impacts. |

| 55 | Dignum et al. | Science and Engineering Ethics | Contested technologies and design for values: The case of shale gas. | 2016 | Energy | Shale gas technology | Well governed | Participatory | 1. To create and implement a technological design, we must look beyond technology itself and iteratively include institutions and stakeholder interactions to acknowledge the complex and dynamic embedding of a (new) technology in a societal context. |

| 56 | Arentshorst et al. | Technology in Society | Exploring responsible innovation: Dutch public perceptions of the future of medical neuroimaging technology. | 2016 | Medical science | Neuroimaging technology | Accessible | Affordable | 1. Should never result in negative social or economic implications for individuals/patients. |

| | | | | | | | Trustworthy | Transparent | 1. Freedom of choice, guaranteed privacy, the right to know or to be kept in ignorance, and informed consent should be self-evident prerequisites. 2. The acts, competencies, and knowledge of experts developing and working with neuroimaging can be trusted in terms of doing good and determining the correct treatment plan. |

| | | | | | | | Well governed | Participatory | 1. Relevant actors need to become mutually responsive, and participants’ concerns should be taken seriously in order to promote responsible embedding of neuroimaging. |

| 57 | Fisher et al. | Journal of Responsible Innovation | Mapping the integrative field: Taking stock of socio-technical collaborations. | 2015 | Technology | Non-specific | Accessible | Inclusive | 1. Adopt more inclusive strategies to integrate wider stakeholders to align science, technology, and innovation more responsibly with their broader societal contexts. |

| 58 | Samanta and Samanta | Journal of Medical Ethics | Quackery or quality: the ethicolegal basis for a legislative framework for medical innovation. | 2015 | Healthcare | Healthcare technology | Trustworthy | Transparent | 1. At the heart of the regulation of medical innovation is care delivered by a process that is accountable and transparent and that allows full consideration of all relevant matters. |

| | | | | | | | Well governed | Accountable | |

| | | | | | | | | Regulated | 1. A combination of ethicolegal principles and statutory regulations would permit responsible medical innovation and maximize benefits in terms of therapy and patient-centered care. |

| 59 | Toft et al. | Applied Energy | Responsible technology acceptance: Model development and application to consumer acceptance of Smart Grid technology. | 2014 | Energy | Smart grid technology | Accessible | Adaptable | 1. Acceptance of a new technology depends on believing that the technology is easy to use and useful for achieving a personal goal. |

| 60 | Wickson and Carew | Journal of Responsible Innovation | Quality criteria and indicators for responsible research and innovation: Learning from transdisciplinarity. | 2014 | Environment conservation | Nanotechnology | Aligned | Meaningful | 1. Addressing a grand social challenge. |

| | | | | | | | | Deliberate | 1. Generating a range of positive and negative future scenarios and identifying and assessing associated risks and benefits of these for social, environmental, and economic sustainability. |

| | | | | | | | | Sustainable | |

| | | | | | | | Accessible | Inclusive | 1. Openly and actively seeking ongoing critical input, feedback, and feed-forward from a range of stakeholders. |

| | | | | | | | | Adaptable | 1. Outcomes work reliably under real-world conditions. 2. Resources are carefully considered and allocated to efficiently achieve maximum utility and impact. |

| | | | | | | | Trustworthy | Transparent | 1. Transparent identification of a range of uncertainties and limitations that may be relevant for various stakeholders. |

| | | | | | | | | Explainable | 1. Openly communicated lines of delegation and ownership able to respond to process dynamics and contextual change. |

| | | | | | | | Well governed | Regulated | 1. Documented compliance with highest-level governance requirements, research ethics, and voluntary codes of conduct, which are all actively monitored throughout. |

| | | | | | | | | Accountable | 1. Preparedness to accept accountability for both potentially positive and negative impacts. |

| 61 | Taebi et al. | Journal of Responsible Innovation | Responsible innovation as an endorsement of public values: The need for interdisciplinary research. | 2014 | Energy | Shale gas technology | Accessible | Inclusive | 1. Responsible innovation as an accommodation of public values, which requires undertaking interdisciplinary research and interaction between innovators and other stakeholders in conjunction with the early assessment of ethical and societal desirability. |

| 62 | Lauss et al. | Biopreservation and Biobanking | Towards biobank privacy regimes in responsible innovation societies: ESBB conference in Granada 2012. | 2013 | Biology | Biobank | Trustworthy | Secure | 1. Biobank privacy regimes presuppose knowledge of and compliance with legal rules, professional standards of the biomedical community, and state-of-the-art data safety and security measures. |

| | | | | | | | Well governed | Regulated | |

| 63 | Gaskell et al. | European Journal of Human Genetics | Publics and biobanks: Pan-European diversity and the challenge of responsible innovation. | 2013 | Biology | Biobank | Aligned | Deliberate | 1. Lying behind European diversity are a number of common problems, issues, and concerns, many of which are not set in stone and can be addressed by informed and prudent actions on the part of biobank developers and researchers. |

| | | | | | | | Trustworthy | Secure | 1. Assiduous mechanisms for the protection of privacy and personal data should be given careful consideration. |

| | | | | | | | | Explainable | 1. Need to consider how to explain to the public the rationale for cooperation with other actors that can help to increase people’s trust. |

| | | | | | | | Well governed | Participatory | 1. Stakeholder engagement relates to readiness to participate in biobank research and to agree to broad consent. |

| 64 | Van den Hove et al. | Environmental Science & Policy | The Innovation Union: a perfect means to confused ends? | 2012 | Technology | Non-specific | Aligned | Meaningful | 1. Innovation should be re-targeted to deliver better health and well-being, improved quality of life, and sustainability. |

| | | | | | | | Accessible | Inclusive | 1. A broader concept of innovation must be deployed, aiming to overcome technological and ideological lock-ins. |

| 65 | Stahl | Journal of Information, Communication and Ethics in Society | IT for a better future: how to integrate ethics, politics and innovation. | 2011 | Technology | Information and communication technologies | Acceptable | Ethical | 1. Incorporate ethics into ICT research and development to engage in discussion of what constitutes ethical issues and be open to incorporation of gender, environmental, and other issues. |

| | | | | | | | Well governed | Regulated | 1. Provide a regulatory framework that will support ethical impact assessment for ICTs to proactively consider solutions to foreseeable problems that will likely arise from the application of future and emerging technologies. |

| | | | | | | | | Participatory | 1. To allow and encourage stakeholders to exchange ideas, to express their views, and to reach a consensus concerning good practices in the area of ethics and ICT. |

| | | | | | | | | Accountable | 1. Ensure that specific responsibility ascriptions are realized within technical work and further sensitize possible subjects of responsibility to some of the difficulties of discharging their responsibilities. |

References

- Yigitcanlar, T.; Guaralda, M.; Taboada, M.; Pancholi, S. Place making for knowledge generation and innovation: Planning and branding Brisbane’s knowledge community precincts. J. Urban Technol. 2016, 23, 115–146.

- Yigitcanlar, T.; Sabatini-Marques, J.; da-Costa, E.; Kamruzzaman, M.; Ioppolo, G. Stimulating technological innovation through incentives: Perceptions of Australian and Brazilian firms. Technol. Forecast. Soc. Change 2019, 146, 403–412.

- Santoni de Sio, F. The European Commission report on ethics of connected and automated vehicles and the future of ethics of transportation. Ethics Inf. Technol. 2021, 23, 713–726.

- Eastwood, C.; Rue, B.; Edwards, J.; Jago, J. Responsible robotics design–A systems approach to developing design guides for robotics in pasture-grazed dairy farming. Front. Robot. AI 2022, 9, 914850.

- Russell, A.; Stelmach, A.; Hartley, S.; Carter, L.; Raman, S. Opening up, closing down, or leaving ajar? How applications are used in engaging with publics about gene drive. J. Responsible Innov. 2022, 9, 151–172.

- Sujan, M.; White, S.; Habli, I.; Reynolds, N. Stakeholder perceptions of the safety and assurance of artificial intelligence in healthcare. Saf. Sci. 2022, 155, 105870.

- David, A.; Yigitcanlar, T.; Li, R.; Corchado, J.; Cheong, P.; Mossberger, K.; Mehmood, R. Understanding Local Government Digital Technology Adoption Strategies: A PRISMA Review. Sustainability 2023, 15, 9645.

- Ocone, R. Ethics in engineering and the role of responsible technology. Energy AI 2020, 2, 100019.

- Yigitcanlar, T.; Cugurullo, F. The sustainability of artificial intelligence: An urbanistic viewpoint from the lens of smart and sustainable cities. Sustainability 2020, 12, 8548.

- Regona, M.; Yigitcanlar, T.; Xia, B.; Li, R. Opportunities and adoption challenges of AI in the construction industry: A PRISMA review. J. Open Innov. Technol. Mark. Complex. 2022, 8, 45.

- Ribeiro, B.; Smith, R.; Millar, K. A mobilising concept? Unpacking academic representations of responsible research and innovation. Sci. Eng. Ethics 2017, 23, 81–103.

- Bashynska, I.; Dyskina, A. The overview-analytical document of the international experience of building smart city. Bus. Theory Pract. 2018, 19, 228–241.

- Thapa, R.; Iakovleva, T.; Foss, L. Responsible research and innovation: A systematic review of the literature and its applications to regional studies. Eur. Plan. Stud. 2019, 27, 2470–2490.

- Stilgoe, J.; Owen, R.; Macnaghten, P. Developing a framework for responsible innovation. Res. Policy 2013, 42, 1568–1580.

- Von Schomberg, R. A vision of responsible research and innovation. In Responsible Innovation: Managing the Responsible Emergence of Science and Innovation in Society; Owen, R., Bessant, J., Heintz, M., Eds.; Wiley: London, UK, 2013; pp. 51–74.

- Koops, B. The concepts, approaches, and applications of responsible innovation. In Responsible Innovation 2; Springer: Cham, Switzerland, 2015; pp. 1–15.

- Genus, A.; Iskandarova, M. Responsible innovation: Its institutionalisation and a critique. Technol. Forecast. Soc. Change 2018, 128, 1–9.

- Dudek, M.; Bashynska, I.; Filyppova, S.; Yermak, S.; Cichoń, D. Methodology for assessment of inclusive social responsibility of the energy industry enterprises. J. Clean. Prod. 2023, 394, 136317.

- Resnik, D. The Ethics of Science: An Introduction; Routledge: London, UK, 2005.

- Owen, R.; Macnaghten, P.; Stilgoe, J. Responsible research and innovation: From science in society to science for society, with society. Sci. Public Policy 2012, 39, 751–760.

- Glerup, C.; Horst, M. Mapping ‘social responsibility’ in science. J. Responsible Innov. 2014, 1, 31–50.

- Burget, M.; Bardone, E.; Pedaste, M. Definitions and conceptual dimensions of responsible research and innovation: A literature review. Sci. Eng. Ethics 2017, 23, 1–19.

- Jakobsen, S.; Fløysand, A.; Overton, J. Expanding the field of Responsible Research and Innovation (RRI)–from responsible research to responsible innovation. Eur. Plan. Stud. 2019, 27, 2329–2343.

- Wiarda, M.; van de Kaa, G.; Yaghmaei, E.; Doorn, N. A comprehensive appraisal of responsible research and innovation: From roots to leaves. Technol. Forecast. Soc. Change 2021, 172, 121053.

- Liu, J.; Zhang, G.; Lv, X.; Li, J. Discovering the landscape and evolution of responsible research and innovation (RRI). Sustainability 2022, 14, 8944.

- Von Schomberg, R. Research and Innovation in the Information and Communication Technologies and Security Technologies Fields: A Report from the European Commission Services; Publications Office of the European Union: Luxembourg, 2011.

- De Saille, S. Innovating innovation policy: The emergence of ‘Responsible Research and Innovation’. J. Responsible Innov. 2015, 2, 152–168.

- Voegtlin, C.; Scherer, A. Responsible innovation and the innovation of responsibility: Governing sustainable development in a globalised world. J. Bus. Ethics 2017, 143, 227–243.

- Loureiro, P.; Conceição, C.P. Emerging patterns in the academic literature on responsible research and innovation. Technol. Soc. 2019, 58, 101148.

- Li, M.; Wan, Y.; Gao, J. What drives the ethical acceptance of deep synthesis applications? A fuzzy set qualitative comparative analysis. Comput. Hum. Behav. 2022, 133, 107286.

- Singh, R.; Mishra, S.; Tripathi, K. Analysing acceptability of E-rickshaw as a public transport innovation in Delhi: A responsible innovation perspective. Technol. Forecast. Soc. Change 2021, 170, 120908.

- Hussain, W.; Perera, H.; Whittle, J.; Nurwidyantoro, A.; Hoda, R.; Shams, R.; Oliver, G. Human values in software engineering: Contrasting case studies of practice. IEEE Trans. Softw. Eng. 2020, 48, 1818–1833.

- Merck, A.; Grieger, K.; Cuchiara, M.; Kuzma, J. What Role Does Regulation Play in Responsible Innovation of Nanotechnology in Food and Agriculture? Insights and Framings from U.S. Stakeholders. Bull. Sci. Technol. Soc. 2022, 42, 85–103.

- Stankov, U.; Gretzel, U. Digital well-being in the tourism domain: Mapping new roles and responsibilities. Inf. Technol. Tour. 2021, 23, 5–17.

- Koirala, B.; van Oost, E.; van der Windt, H. Community energy storage: A responsible innovation towards a sustainable energy system? Appl. Energy 2018, 231, 570–585.

- Li, W.; Yigitcanlar, T.; Erol, I.; Liu, A. Motivations, barriers and risks of smart home adoption: From systematic literature review to conceptual framework. Energy Res. Soc. Sci. 2021, 80, 102211.

- Li, F.; Yigitcanlar, T.; Nepal, M.; Thanh, K.; Dur, F. Understanding urban heat vulnerability assessment methods: A PRISMA review. Energies 2022, 15, 6998.

- Pacifico Silva, H.; Lehoux, P.; Miller, F.; Denis, J. Introducing responsible innovation in health: A policy-oriented framework. Health Res. Policy Syst. 2018, 16, 90.

- Ulnicane, I.; Eke, D.; Knight, W.; Ogoh, G.; Stahl, B. Good governance as a response to discontents? Déjà vu, or lessons for AI from other emerging technologies. Interdiscip. Sci. Rev. 2021, 46, 71–93.

- Sayers, A. Tips and tricks in performing a systematic review. Br. J. Gen. Pract. 2008, 58, 136.

- Wohlin, C.; Kalinowski, M.; Felizardo, K.; Mendes, E. Successful combination of database search and snowballing for identification of primary studies in systematic literature studies. Inf. Softw. Technol. 2022, 147, 106908.

- Yigitcanlar, T.; Desouza, K.; Butler, L.; Roozkhosh, F. Contributions and risks of artificial intelligence (AI) in building smarter cities: Insights from a systematic review of the literature. Energies 2020, 13, 1473.

- Buhmann, A.; Fieseler, C. Towards a deliberative framework for responsible innovation in artificial intelligence. Technol. Soc. 2021, 64, 101475.

- Kokotovich, A.; Kuzma, J.; Cummings, C.; Grieger, K. Responsible innovation definitions, practices, and motivations from nanotechnology researchers in food and agriculture. NanoEthics 2021, 15, 229–243.

- Owen, R.; von Schomberg, R.; Macnaghten, P. An unfinished journey? Reflections on a decade of responsible research and innovation. J. Responsible Innov. 2021, 8, 217–233.

- Yigitcanlar, T.; Agdas, D.; Degirmenci, K. Artificial intelligence in local governments: Perceptions of city managers on prospects, constraints and choices. AI Soc. 2023, 38, 1135–1150.

- Owen, R.; Stilgoe, J.; Macnaghten, P.; Gorman, M.; Fisher, E.; Guston, D. A framework for responsible innovation. In Responsible Innovation: Managing the Responsible Emergence of Science and Innovation in Society; John Wiley & Sons: Hoboken, NJ, USA, 2013; Volume 31, pp. 27–50.

- Stilgoe, J.; Guston, D. Responsible Research and Innovation; MIT Press: Cambridge, MA, USA, 2016.

- Bacq, S.; Aguilera, R. Stakeholder governance for responsible innovation: A theory of value creation, appropriation, and distribution. J. Manag. Stud. 2022, 59, 29–60.

- Winfield, A.; Jirotka, M. Ethical governance is essential to building trust in robotics and artificial intelligence systems. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2018, 376, 20180085.

- Bunnik, E.; Bolt, I. Exploring the Ethics of Implementation of Epigenomics Technologies in Cancer Screening: A Focus Group Study. Epigenetics Insights 2021, 14, 25168657211063618.

- Foley, R.; Bernstein, M.; Wiek, A. Towards an alignment of activities, aspirations and stakeholders for responsible innovation. J. Responsible Innov. 2016, 3, 209–232.

- Stitzlein, C.; Fielke, S.; Waldner, F.; Sanderson, T. Reputational risk associated with big data research and development: An interdisciplinary perspective. Sustainability 2021, 13, 9280.

- Ten Holter, C.; Inglesant, P.; Jirotka, M. Reading the road: Challenges and opportunities on the path to responsible innovation in quantum computing. Technol. Anal. Strateg. Manag. 2021, 35, 844–856.

- Pickering, B. Trust, but Verify: Informed Consent, AI Technologies, and Public Health Emergencies. Future Internet 2021, 13, 132.

- Samuel, G.; Roberts, S.L.; Fiske, A.; Lucivero, F.; McLennan, S.; Phillips, A.; Hayes, S.; Johnson, S.B. COVID-19 contact tracing apps: UK public perceptions. Crit. Public Health 2022, 32, 31–43.

- Stahl, B. IT for a better future: How to integrate ethics, politics and innovation. J. Inf. Commun. Ethics Soc. 2011, 9, 140–156.

- Middelveld, S.; Macnaghten, P. Gene editing of livestock: Sociotechnical imaginaries of scientists and breeding companies in the Netherlands. Elem. Sci. Anthr. 2021, 9, 00073.

- Brandao, M.; Jirotka, M.; Webb, H.; Luff, P. Fair navigation planning: A resource for characterizing and designing fairness in mobile robots. Artif. Intell. 2020, 282, 103259.

- Grieger, K.; Merck, A.; Cuchiara, M.; Binder, A.; Kokotovich, A.; Cummings, C.; Kuzma, J. Responsible innovation of nano-agrifoods: Insights and views from US stakeholders. NanoImpact 2021, 24, 100365.

- Boden, M.; Bryson, J.; Caldwell, D.; Dautenhahn, K.; Edwards, L.; Kember, S.; Newman, P.; Parry, V.; Pegman, G.; Rodden, T.; et al. Principles of robotics: Regulating robots in the real world. Connect. Sci. 2017, 29, 124–129.

- Arbolino, R.; Carlucci, F.; De Simone, L.; Ioppolo, G.; Yigitcanlar, T. The policy diffusion of environmental performance in the European countries. Ecol. Indic. 2018, 89, 130–138.

- Wickson, F.; Carew, A. Quality criteria and indicators for responsible research and innovation: Learning from transdisciplinarity. J. Responsible Innov. 2014, 1, 254–273.

- Samuel, G.; Prainsack, B. Forensic DNA phenotyping in Europe: Views “on the ground” from those who have a professional stake in the technology. New Genet. Soc. 2019, 38, 119–141.

- Arentshorst, M.; de Cock Buning, T.; Broerse, J. Exploring responsible innovation: Dutch public perceptions of the future of medical neuroimaging technology. Technol. Soc. 2016, 45, 8–18.

- Yigitcanlar, T.; Corchado, J.; Mehmood, R.; Li, R.; Mossberger, K.; Desouza, K. Responsible urban innovation with local government artificial intelligence (AI): A conceptual framework and research agenda. J. Open Innov. Technol. Mark. Complex. 2021, 7, 71.

- Van den Hove, S.; McGlade, J.; Mottet, P.; Depledge, M. The Innovation Union: A perfect means to confused ends? Environ. Sci. Policy 2012, 16, 73–80.

- Fisher, E.; O’Rourke, M.; Evans, R.; Kennedy, E.; Gorman, M.; Seager, T. Mapping the integrative field: Taking stock of socio-technical collaborations. J. Responsible Innov. 2015, 2, 39–61.

- Macdonald, J.M.; Robinson, C.J.; Perry, J.; Lee, M.; Barrowei, R.; Coleman, B.; Markham, J.; Barrowei, A.; Markham, B.; Ford, H.; et al. Indigenous-led responsible innovation: Lessons from co-developed protocols to guide the use of drones to monitor a biocultural landscape in Kakadu National Park, Australia. J. Responsible Innov. 2021, 8, 300–319.

- Chamuah, A.; Singh, R. Securing sustainability in Indian agriculture through civilian UAV: A responsible innovation perspective. SN Appl. Sci. 2020, 2, 106.

- Taebi, B.; Correlje, A.; Cuppen, E.; Dignum, M.; Pesch, U. Responsible innovation as an endorsement of public values: The need for interdisciplinary research. J. Responsible Innov. 2014, 1, 118–124.

- Geoghegan-Quinn, M. Commissioner Geoghegan-Quinn Keynote Speech at the “Science in Dialogue” Conference. In Proceedings of the Science in Dialogue Conference, Odense, Denmark, 23–25 April 2012; Springer: Berlin/Heidelberg, Germany, 2012.

- Middelveld, S.; Macnaghten, P.; Meijboom, F. Imagined futures for livestock gene editing: Public engagement in the Netherlands. Public Underst. Sci. 2022, 32, 143–158.

- Demers-Payette, O.; Lehoux, P.; Daudelin, G. Responsible research and innovation: A productive model for the future of medical innovation. J. Responsible Innov. 2016, 3, 188–208.

- Stahl, B. Responsible innovation ecosystems: Ethical implications of the application of the ecosystem concept to artificial intelligence. Int. J. Inf. Manag. 2022, 62, 102441.

- Rivard, L.; Lehoux, P.; Miller, F. Double burden or single duty to care? Health innovators’ perspectives on environmental considerations in health innovation design. BMJ Innov. 2019, 6, 4–9.

- Gaskell, G.; Gottweis, H.; Starkbaum, J.; Gerber, M.M.; Broerse, J.; Gottweis, U.; Hobbs, A.; Helén, I.; Paschou, M.; Snell, K.; et al. Publics and biobanks: Pan-European diversity and the challenge of responsible innovation. Eur. J. Hum. Genet. 2013, 21, 14–20.

- Mladenović, M.N.; Haavisto, N. Interpretative flexibility and conflicts in the emergence of Mobility as a Service: Finnish public sector actor perspectives. Case Stud. Transp. Policy 2021, 9, 851–859.

- Asveld, L.; Ganzevles, J.; Osseweijer, P. Trustworthiness and responsible research and innovation: The case of the bio-economy. J. Agric. Environ. Ethics 2015, 28, 571–588.

- Papenbrock, J. Explainable, Trustworthy, and Responsible AI for the Financial Service Industry. Front. Artif. Intell. 2022, 5, 902519.

- Lockwood, F. Bristol’s smart city agenda: Vision, strategy, challenges and implementation. IET Smart Cities 2020, 2, 208–214. [Google Scholar] [CrossRef]

- Ienca, M.; Fins, J.J.; Jox, R.J.; Jotterand, F.; Voeneky, S.; Andorno, R.; Ball, T.; Castelluccia, C.; Chavarriaga, R.; Chneiweiss, H.; et al. Towards a Governance Framework for Brain Data. Neuroethics 2022, 15, 20. [Google Scholar] [CrossRef]

- Rochel, J.; Evéquoz, F. Getting into the engine room: A blueprint to investigate the shadowy steps of AI ethics. AI Soc. 2021, 36, 609–622. [Google Scholar] [CrossRef]

- Akintoye, S.; Ogoh, G.; Krokida, Z.; Nnadi, J.; Eke, D. Understanding the perceptions of UK COVID-19 contact tracing app in the BAME community in Leicester. J. Inf. Commun. Ethics Soc. 2021, 19, 521–536. [Google Scholar] [CrossRef]

- Yigitcanlar, T.; Mehmood, R.; Corchado, J. Green artificial intelligence: Towards an efficient, sustainable and equitable technology for smart cities and futures. Sustainability 2021, 13, 8952. [Google Scholar] [CrossRef]

- Oldeweme, A.; Märtins, J.; Westmattelmann, D.; Schewe, G. The role of transparency, trust, and social influence on uncertainty reduction in times of pandemics: Empirical study on the adoption of COVID-19 tracing apps. J. Med. Internet Res. 2021, 23, e25893.

- MacDonald, E.; Neff, M.; Edwards, E.; Medvecky, F.; Balanovic, J. Conservation pest control with new technologies: Public perceptions. J. R. Soc. N. Z. 2022, 52, 95–107.

- Samanta, J.; Samanta, A. Quackery or quality: The ethicolegal basis for a legislative framework for medical innovation. J. Med. Ethics 2015, 41, 474–477.

- Chamuah, A.; Singh, R. Responsibly regulating the civilian unmanned aerial vehicle deployment in India and Japan. Aircr. Eng. Aerosp. Technol. 2021, 93, 629–641.

- Bao, L.; Krause, N.M.; Calice, M.N.; Scheufele, D.A.; Wirz, C.D.; Brossard, D.; Newman, T.P.; Xenos, M.A. Whose AI? How different publics think about AI and its social impacts. Comput. Hum. Behav. 2022, 130, 107182.

- Christodoulou, E.; Iordanou, K. Democracy under attack: Challenges of addressing ethical issues of AI and big data for more democratic digital media and societies. Front. Political Sci. 2021, 3, 682945.

- Stemerding, D.; Betten, W.; Rerimassie, V.; Robaey, Z.; Kupper, F. Future making and responsible governance of innovation in synthetic biology. Futures 2019, 109, 213–226.

- Hemphill, T. ‘Techlash’, responsible innovation, and the self-regulatory organization. J. Responsible Innov. 2019, 6, 240–247.

- Mecacci, G.; Santoni de Sio, F. Meaningful human control as reason-responsiveness: The case of dual-mode vehicles. Ethics Inf. Technol. 2020, 22, 103–115.

- Rose, D.; Wheeler, R.; Winter, M.; Lobley, M.; Chivers, C. Agriculture 4.0: Making it work for people, production, and the planet. Land Use Policy 2021, 100, 104933.

- Townsend, L.; Noble, C. Variable rate precision farming and advisory services in Scotland: Supporting responsible digital innovation? Sociol. Rural. 2022, 62, 212–230.

- Lehoux, P.; Silva, H.; Rocha de Oliveira, R.; Sabio, R.; Malas, K. Responsible innovation in health and health system sustainability: Insights from health innovators’ views and practices. Health Serv. Manag. Res. 2022, 35, 196–205.

- Foley, R.; Sylvain, O.; Foster, S. Innovation and equality: An approach to constructing a community governed network commons. J. Responsible Innov. 2022, 9, 49–73.

- Iakovleva, T.; Oftedal, E.; Bessant, J. Changing Role of Users—Innovating Responsibly in Digital Health. Sustainability 2021, 13, 1616.

- Dignum, M.; Correljé, A.; Cuppen, E.; Pesch, U.; Taebi, B. Contested technologies and design for values: The case of shale gas. Sci. Eng. Ethics 2016, 22, 1171–1191.

- Sonck, M.; Asveld, L.; Landeweerd, L.; Osseweijer, P. Creative tensions: Mutual responsiveness adapted to private sector research and development. Life Sci. Soc. Policy 2017, 13, 14.

- Lauss, G.; Schröder, C.; Dabrock, P.; Eder, J.; Hamacher, K.; Kuhn, K.; Gottweis, H. Towards biobank privacy regimes in responsible innovation societies: ESBB conference in Granada 2012. Biopreservat. Biobank. 2013, 11, 319–323.

- Leenes, R.; Palmerini, E.; Koops, B.; Bertolini, A.; Salvini, P.; Lucivero, F. Regulatory challenges of robotics: Some guidelines for addressing legal and ethical issues. Law Innov. Technol. 2017, 9, 1–44.

- Westwood, R.; Low, D. The multicultural muse: Culture, creativity and innovation. Int. J. Cross Cult. Manag. 2003, 3, 235–259.

- Zhu, B.; Habisch, A.; Thøgersen, J. The importance of cultural values and trust for innovation: A European study. Int. J. Innov. Manag. 2018, 22, 1850017.

- Kim, S.; Parboteeah, K.; Cullen, J.; Liu, W. Disruptive innovation and national cultures: Enhancing effects of regulations in emerging markets. J. Eng. Technol. Manag. 2020, 57, 101586.

- Setiawan, A. The influence of national culture on responsible innovation: A case of CO2 utilisation in Indonesia. Technol. Soc. 2020, 62, 101306.

| Characteristic | Description | Exemplar Reference |
|---|---|---|
| Acceptable | Publicly acceptable, ethically unproblematic, and harmless, including being free of bias and deception. Devoted to delivering equitable products and encouraging fair technology use to achieve an overall state of well-being and the common good. | [31] |
| Accessible | Broadens the notion of accessibility to deliver culturally inclusive, technically adaptable, and financially affordable products. Devoted to spreading the benefits of digitization across societies and cities without barriers. | [4] |
| Aligned | Deliberate in decision-making practices and aligned with societal desirability and human values. Devoted to achieving meaningful, positive, and sustainable outcomes that solve the accompanying challenges and improve the well-being of life on Earth. | [32] |
| Trustworthy | Provides greater informational transparency and technical security throughout the design, production, implementation, and operating processes. Devoted to delivering human-understandable explanations of decisions to robustly increase public understanding, trust, and confidence. | [6] |
| Well governed | Adheres to statutory regulations and governance requirements and can be well governed by broader stakeholder groups. Devoted to ensuring dependability and accountability to maintain public support and trust, which leads to higher acceptance and further implementation. | [33] |

| Primary Criteria: Inclusionary | Primary Criteria: Exclusionary | Secondary Criteria: Inclusionary | Secondary Criteria: Exclusionary |
|---|---|---|---|
| Academic journal articles | Duplicate records | Responsible innovation and technology-related | Not responsible innovation or technology-related |
| Peer-reviewed | Books and chapters | | |
| Full-text available online | Industry reports | Relevance to the research objective | Irrelevant to the research objective |
| Published in English | Government reports | | |
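
The two-stage screening implied by the criteria above can be sketched in code. This is only an illustrative sketch, not the authors' actual procedure: the `Record` fields, the `doc_type` labels, the title-based duplicate check, and the example titles are all assumptions introduced here, and the secondary criteria are modeled as reviewer judgements stored as booleans.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One search hit. All field names are hypothetical, for illustration only."""
    title: str
    doc_type: str                # assumed labels, e.g. "journal_article", "book"
    peer_reviewed: bool
    full_text_online: bool
    language: str
    rit_related: bool            # reviewer judgement: RIT-related or not
    relevant_to_objective: bool  # reviewer judgement: relevant to the objective


def passes_primary(record: Record, seen_titles: set) -> bool:
    """Primary criteria: peer-reviewed English journal article, full text online."""
    key = record.title.strip().lower()
    if key in seen_titles:       # exclusionary: duplicate records (assumed title match)
        return False
    seen_titles.add(key)
    return (
        record.doc_type == "journal_article"  # drops books, chapters, and reports
        and record.peer_reviewed
        and record.full_text_online
        and record.language == "English"
    )


def passes_secondary(record: Record) -> bool:
    """Secondary criteria: RIT-related and relevant to the research objective."""
    return record.rit_related and record.relevant_to_objective


def screen(records):
    """Apply primary, then secondary criteria; return the records that survive."""
    seen_titles = set()
    selected = []
    for record in records:
        if passes_primary(record, seen_titles) and passes_secondary(record):
            selected.append(record)
    return selected


# Made-up candidate records, purely to exercise the filter.
candidates = [
    Record("Responsible AI in cities", "journal_article", True, True, "English", True, True),
    Record("Responsible AI in cities", "journal_article", True, True, "English", True, True),
    Record("A handbook of innovation", "book", False, True, "English", True, True),
    Record("Battery chemistry advances", "journal_article", True, True, "English", False, False),
]
print([r.title for r in screen(candidates)])  # ['Responsible AI in cities']
```

Keeping the primary and secondary checks in separate functions mirrors the table's split between formal eligibility and topical relevance, so either stage can be adjusted without touching the other.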


| Keyword | Description | Exemplar Reference |
|---|---|---|
| Ethical | Afford and respect human rights and freedoms, including dignity and privacy, while ensuring innovations and technologies do not reinforce social orders that subjugate human beings, promoting autonomy and ethical acceptability to avoid unethical consequences. | [50,51,52] |
| Equitable | Eliminate systematic stereotyping to reduce the potential risks and impacts of perpetuated and/or increased inequalities between individuals and groups in society while encouraging broader, more democratic, and more equitable innovation implementation. | [52,53,54] |
| Harmless | Ensure products do not harm human health (both physical and psychological) and/or the environment while reducing or eliminating the harmful effects of technology use through appropriate safeguards, e.g., inherently safe design and a meaningful human control approach. | [3,4,55] |

| Keyword | Description | Exemplar Reference |
|---|---|---|
| Adaptable | Produce valid and reliable products adaptable to existing technologies and complex operating environments, ensuring they are easy to train, use, and maintain to increase flexibility across application scenarios and usability for a broader range of people. | [4,63,64] |
| Affordable | Ensure the delivery of high-value outputs while maintaining economic viability; alternatively, leverage resources in economic ways to avoid any negative financial implications for users, which creates better conditions for wider implementation scenarios. | [38,65,66] |
| Inclusive | Incorporate diversified cultures, knowledge, and values to align innovation more responsibly with practical societal contexts, aiming to overcome technological and ideological lock-ins and make technological trajectories more responsive to the needs of society. | [67,68,69] |

| Keyword | Description | Exemplar Reference |
|---|---|---|
| Deliberate | Carefully anticipate and assess the associated consequences and opportunities and exercise deliberation in decision-making practices to mitigate actual and potential negative impacts for life on Earth to the extent feasible. | [44,63,73] |
| Meaningful | Achieve a better alignment between people’s needs and/or preferences and innovative technologies and social practices to create expected and meaningful outcomes, e.g., address significant problems or societal needs and improve human well-being. | [60,74,75] |
| Sustainable | Take the environment into consideration as part of innovation, treating resources with respect and in the most responsible way throughout the entire innovation lifecycle, which ensures broad sustainability outcomes while avoiding large and irreversible consequences for the Earth. | [52,60,76] |

| Keyword | Description | Exemplar Reference |
|---|---|---|
| Explainable | Make explicit the reason or standard for any decisions or acts made and be able to justify these choices so that not just experts but also the public gain adequate understanding and trust, which is essential for effective implementation and management. | [3,50,78] |
| Secure | Assure the safety and security of innovation in society, both physically and digitally, to minimize, as far as possible, the risks of harm or the adverse consequences these technologies may cause, aiming to build the public’s trust and confidence in them. | [61,66,81] |
| Transparent | Transparently disclose information and data regarding the design, production, implementation, operating processes, and future planning of innovation to increase public understanding of innovation, including its opportunities, benefits, risks, and consequences. | [33,59,82] |

| Keyword | Description | Exemplar Reference |
|---|---|---|
| Accountable | Ensure the entities with legal responsibility for any decisions and acts made, or for other failures, are identifiable, traceable, and held accountable, aiming to embed the values of responsibility in the overall innovation processes. | [50,57,82] |
| Participatory | Widen stakeholder groups, enhance participation levels, and support mutually responsive relations to bring diversified public views and values into innovation, which maintains public support and trust while embedding innovation successfully in the complex and dynamic societal context via participatory and responsive governance. | [65,89,90] |
| Regulated | Operate, as far as is practicable, in compliance with existing regulations, fundamental rights, and freedoms, which helps address the complex challenges and reach a consensus that fosters and facilitates innovation and improves the wider implications for society. | [61,91,92] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, W.; Yigitcanlar, T.; Browne, W.; Nili, A. The Making of Responsible Innovation and Technology: An Overview and Framework. Smart Cities 2023, 6, 1996–2034. https://doi.org/10.3390/smartcities6040093