Object Part-Aware Attention-Based Matching for Robust Visual Tracking

Abstract

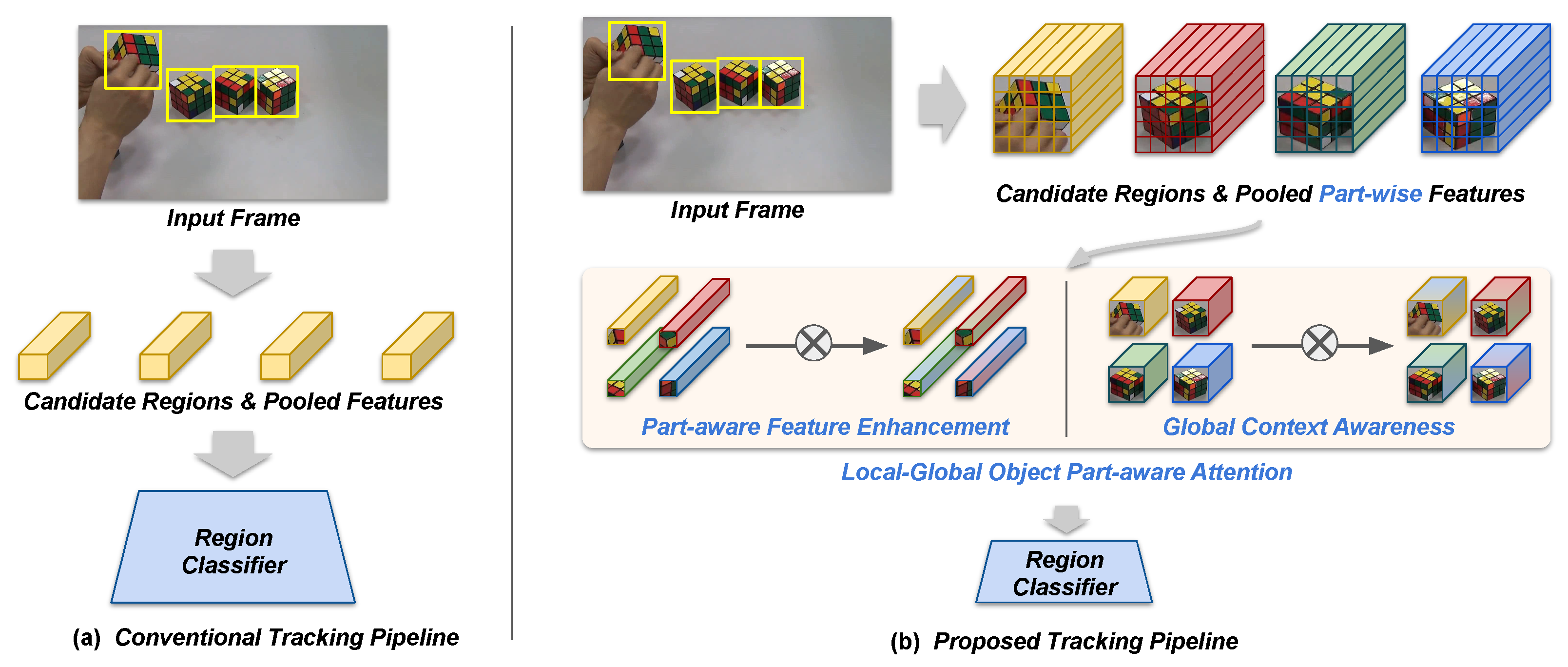

1. Introduction

2. Related Work

2.1. Attention Mechanisms in Visual Tracking

2.2. Object Part-Based Approaches

2.3. Local–Global Context Integration for Visual Tracking

3. Proposed Method

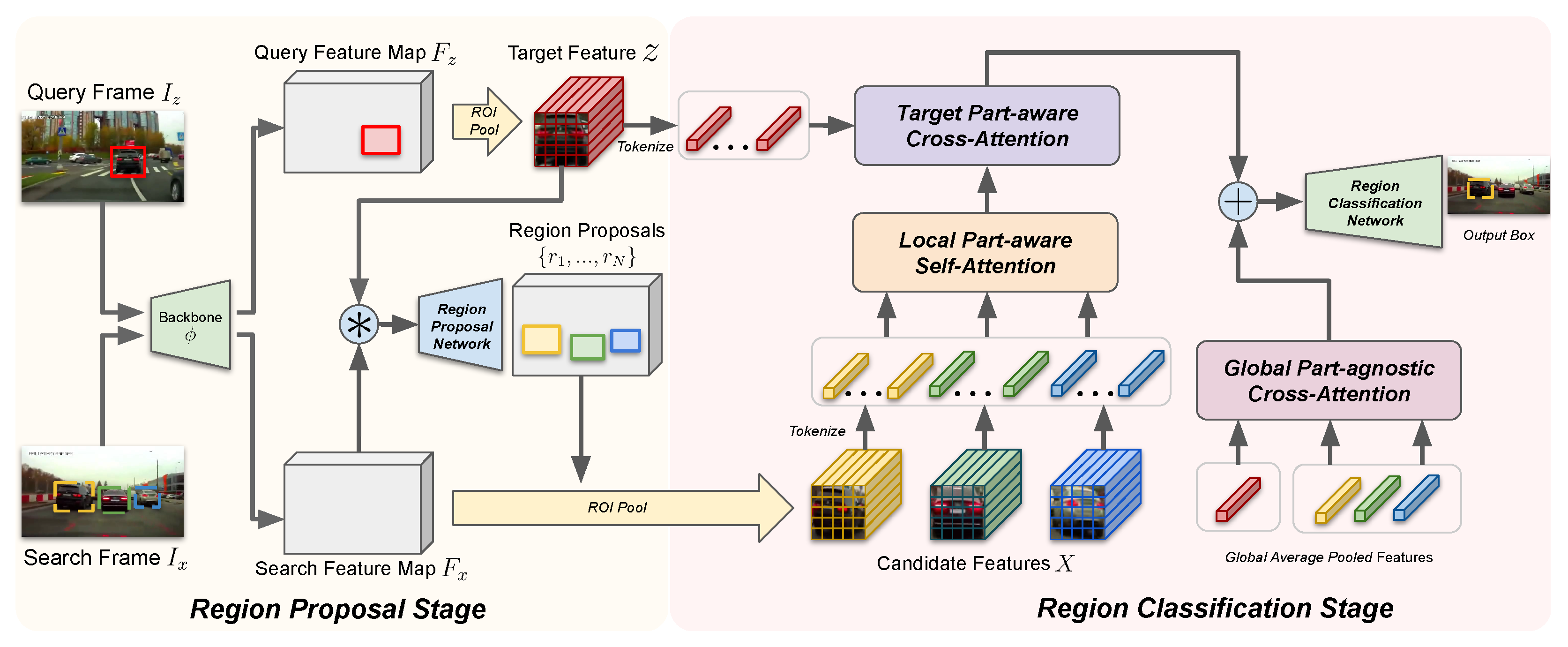

3.1. Overview of the Tracking Framework

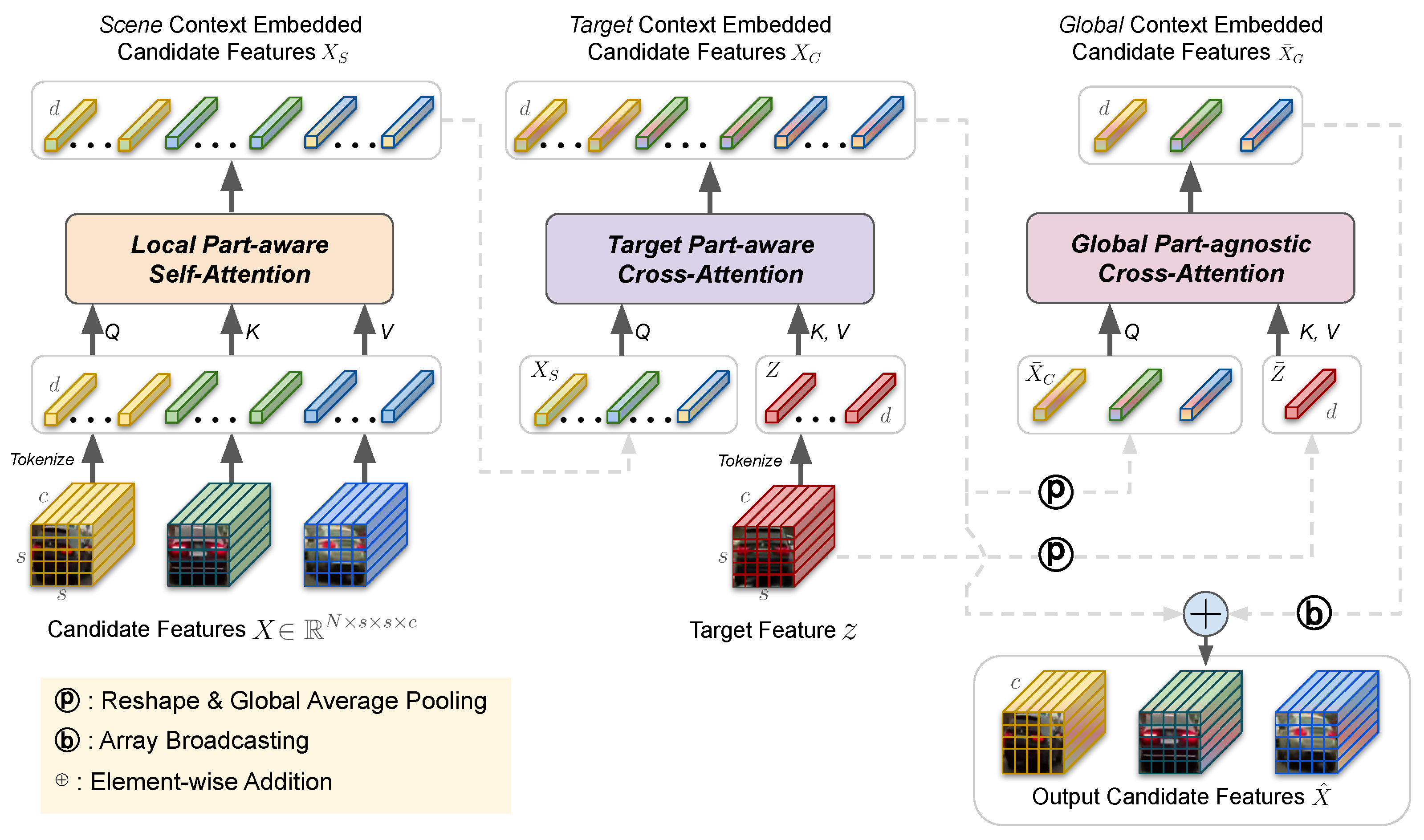

3.2. Incorporating the Object Part-Aware Attention Module

| Algorithm 1: Visual tracking with OPAM |
|---|
| Input: video sequence of length L with its frame images; initial target bounding box coordinates |
| Output: target bounding box coordinates for all frames in the video |
| # Tracker initialization |
| Compute the feature map of the first frame as in Equation (1) |
| Obtain the target feature z from this feature map using the initial box via ROIAlign [24] |
| # Tracking for frames t > 1 |
| for t = 2 to L do |
| # Region proposal stage |
| Compute the feature map of frame t as in Equation (1) |
| Perform depth-wise cross-correlation between this feature map and the target feature as in Equation (2) |
| Compute the output maps of the RPN branches as in Equation (3) |
| Obtain the top-N candidate boxes |
| # OPAM modulation as in Equation (4) |
| Extract the candidate features X using ROIAlign |
| Apply MSA to X as in Equation (7) |
| Apply MCA between the MSA output and Z as in Equation (8) |
| Compute the spatially pooled features |
| Apply MCA between the pooled features as in Equation (9) |
| Modulate the candidate features using the output of the previous step as in Equation (10) |
| # Region classification stage |
| Compute the output values as in Equation (5) |
| Refine the box coordinates as in Equation (6) |
| Choose the k-th region, which has the highest score |
| Return: the refined box of the k-th region as the output of the current frame |
| end for |
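The sketch below makes the data flow of the first OPAM steps concrete. It implements the two attention operations applied to a candidate's part features. The first is self-attention among the candidate parts, in the role of Equation (7). The second is cross-attention from those parts to the target part features Z, in the role of Equation (8).

This is a minimal illustration under stated assumptions, not the paper's module:

- MSA and MCA are read here as (multi-head) self- and cross-attention, reduced to a single head.
- There are no learned query/key/value projections, residual connections, or normalization.
- The pooling and modulation steps of Equations (9) and (10) are omitted because their exact form is not given in this section.
- The helper names `attention` and `part_attention` are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: List[Vector], keys: List[Vector], values: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product attention with no learned projections.

    Each query is replaced by a softmax-weighted average of the value vectors,
    weighted by its scaled dot product with every key.
    """
    scale = 1.0 / math.sqrt(len(keys[0]))
    dim = len(values[0])
    outputs = []
    for q in queries:
        weights = softmax([scale * sum(a * b for a, b in zip(q, k)) for k in keys])
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)])
    return outputs


def part_attention(candidate_parts: List[Vector], target_parts: List[Vector]) -> List[Vector]:
    """Hypothetical stand-in for the Equation (7) and (8) steps of Algorithm 1."""
    # Self-attention among one candidate's part tokens (cf. Equation (7)).
    refined = attention(candidate_parts, candidate_parts, candidate_parts)
    # Refined candidate parts query the target part tokens Z (cf. Equation (8)).
    return attention(refined, target_parts, target_parts)


if __name__ == "__main__":
    candidate = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # toy part tokens of one candidate box
    target = [[1.0, 0.0], [0.0, 1.0]]                 # toy part tokens of the target
    for token in part_attention(candidate, target):
        print([round(x, 3) for x in token])
```

Each output token is a convex combination of the target part tokens, weighted by how well the refined candidate part matches each of them. In a trained tracker, learned projections would let queries, keys, and values live in different subspaces. Leaving them out keeps the sketch dependency-free while preserving the weighting logic.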

3.3. Training the Proposed Tracking Framework

3.4. Implementation Details

4. Experiments

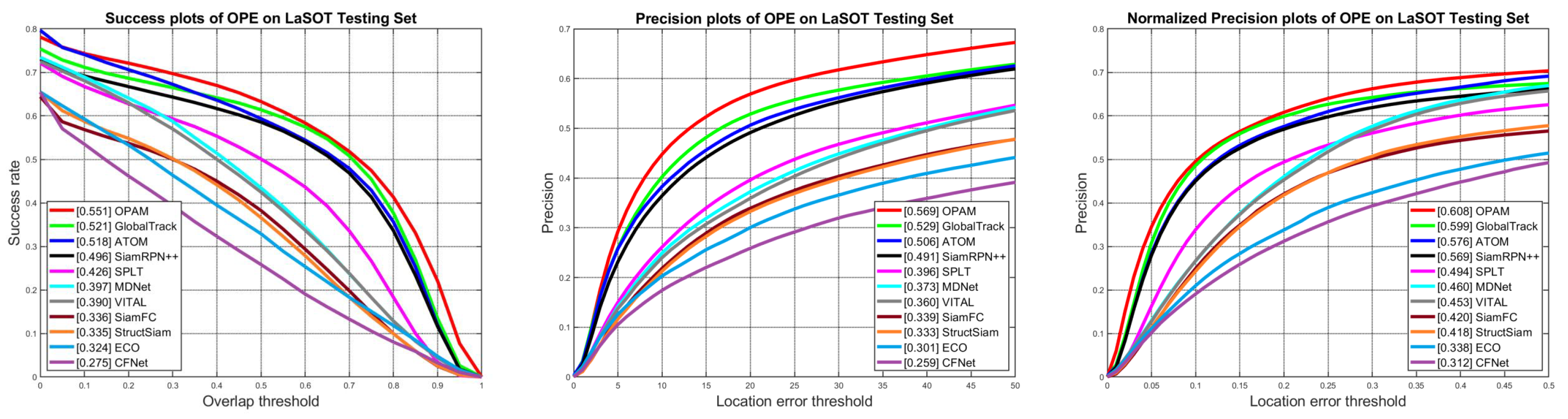

4.1. Quantitative Evaluation

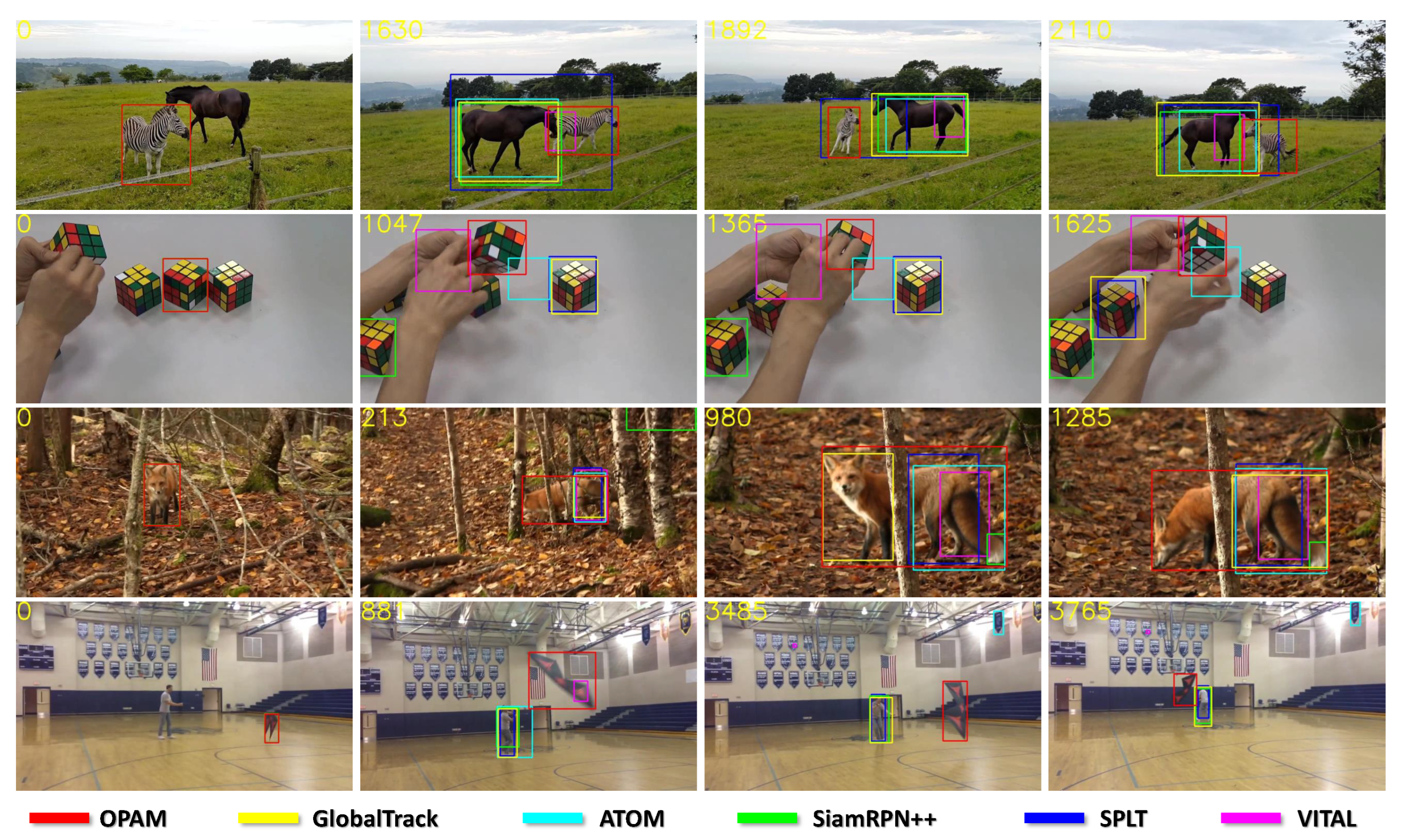

4.2. Qualitative Evaluation

5. Conclusions

Funding

Data Availability Statement

Conflicts of Interest

References

[1] Fan, H.; Lin, L.; Yang, F.; Chu, P.; Deng, G.; Yu, S.; Bai, H.; Xu, Y.; Liao, C.; Ling, H. LaSOT: A high-quality benchmark for large-scale single object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

[2] Huang, L.; Zhao, X.; Huang, K. GOT-10k: A Large High-Diversity Benchmark for Generic Object Tracking in the Wild. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 1562–1577.

[3] Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H. Fully-Convolutional Siamese Networks for Object Tracking. arXiv 2016, arXiv:1606.09549.

[4] Li, B.; Yan, J.; Wu, W.; Zhu, Z.; Hu, X. High Performance Visual Tracking with Siamese Region Proposal Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

[5] Li, B.; Wu, W.; Wang, Q.; Zhang, F.; Xing, J.; Yan, J. SiamRPN++: Evolution of Siamese Visual Tracking with Very Deep Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

[6] Chen, Z.; Zhong, B.; Li, G.; Zhang, S.; Ji, R. Siamese Box Adaptive Network for Visual Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

[7] Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. ATOM: Accurate tracking by overlap maximization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

[8] Bhat, G.; Danelljan, M.; Van Gool, L.; Timofte, R. Learning discriminative model prediction for tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

[9] Chen, X.; Yan, B.; Zhu, J.; Wang, D.; Yang, X.; Lu, H. Transformer Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 8126–8135.

[10] Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 2017 Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

[11] Yan, B.; Peng, H.; Fu, J.; Wang, D.; Lu, H. Learning Spatio-Temporal Transformer for Visual Tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 10448–10457.

[12] Chen, X.; Peng, H.; Wang, D.; Lu, H.; Hu, H. SeqTrack: Sequence to Sequence Learning for Visual Object Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 14572–14581.

[13] Bai, Y.; Zhao, Z.; Gong, Y.; Wei, X. ARTrackV2: Prompting Autoregressive Tracker Where to Look and How to Describe. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 19048–19057.

[14] Liang, S.; Bai, Y.; Gong, Y.; Wei, X. Autoregressive Sequential Pretraining for Visual Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 11–15 June 2025; pp. 7254–7264.

[15] Yan, B.; Zhang, X.; Wang, D.; Lu, H.; Yang, X. Alpha-Refine: Boosting Tracking Performance by Precise Bounding Box Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 5289–5298.

[16] Wang, N.; Zhou, W.; Wang, J.; Li, H. Transformer Meets Tracker: Exploiting Temporal Context for Robust Visual Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 1571–1580.

[17] Wang, G.; Luo, C.; Xiong, Z.; Zeng, W. SPM-Tracker: Series-Parallel Matching for Real-Time Visual Object Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

[18] Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 213–229.

[19] Huang, L.; Zhao, X.; Huang, K. GlobalTrack: A Simple and Strong Baseline for Long-term Tracking. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020.

[20] Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. In Proceedings of the 9th International Conference on Learning Representations (ICLR), Virtual Event, Austria, 3–7 May 2021.

[21] Yu, Y.; Xiong, Y.; Huang, W.; Scott, M.R. Deformable Siamese Attention Networks for Visual Object Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

[22] Zhang, B.; Liang, Z.; Dong, W. Siamese Attention Networks with Adaptive Templates for Visual Tracking. Mob. Inf. Syst. 2022, 1, 7056149.

[23] He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

[24] He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

[25] Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully convolutional one-stage object detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

[26] Wu, Y.; He, K. Group normalization. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

[27] Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. In Proceedings of the 7th International Conference on Learning Representations (ICLR), New Orleans, LA, USA, 6–9 May 2019.

[28] Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

[29] Zhu, Z.; Wang, Q.; Li, B.; Wu, W.; Yan, J.; Hu, W. Distractor-Aware Siamese Networks for Visual Object Tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

[30] Zhang, Z.; Peng, H.; Fu, J.; Li, B.; Hu, W. Ocean: Object-aware Anchor-free Tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020.

[31] Yan, B.; Zhao, H.; Wang, D.; Lu, H.; Yang, X. ‘Skimming-Perusal’ Tracking: A Framework for Real-Time and Robust Long-Term Tracking. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

[32] Nam, H.; Han, B. Learning Multi-Domain Convolutional Neural Networks for Visual Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

[33] Valmadre, J.; Bertinetto, L.; Henriques, J.; Vedaldi, A.; Torr, P.H.S. End-To-End Representation Learning for Correlation Filter Based Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

[34] Wang, Q.; Zhang, L.; Bertinetto, L.; Hu, W.; Torr, P.H. Fast online object tracking and segmentation: A unifying approach. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

[35] Held, D.; Thrun, S.; Savarese, S. Learning to track at 100 fps with deep regression networks. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016.

[36] Danelljan, M.; Robinson, A.; Khan, F.S.; Felsberg, M. Beyond correlation filters: Learning continuous convolution operators for visual tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016.

[37] Danelljan, M.; Bhat, G.; Khan, F.; Felsberg, M. ECO: Efficient Convolution Operators for Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

[38] Ma, C.; Huang, J.B.; Yang, X.; Yang, M.H. Hierarchical convolutional features for visual tracking. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

| | OPAM | GlobalTrack [19] | ATOM [7] | DiMP-50 [8] | SiamRPN++ [5] | DASiam [29] | SPLT [31] | MDNet [32] | Ocean [30] | SiamFC [3] | CFNet [33] |
|---|---|---|---|---|---|---|---|---|---|---|---|
| AUC | 0.551 | 0.521 | 0.518 | 0.569 | 0.496 | 0.448 | 0.426 | 0.397 | 0.560 | 0.336 | 0.275 |
| Precision | 0.569 | 0.529 | 0.506 | - | 0.491 | 0.427 | 0.396 | 0.373 | 0.566 | 0.339 | 0.259 |
| Normalized Precision | 0.608 | 0.599 | 0.576 | 0.650 | 0.569 | - | 0.494 | 0.460 | - | 0.420 | 0.312 |
| FPS | 65 | 6 | 30 | 43 | 35 | 110 | 25.7 | 0.9 | 25 | 58 | 43 |
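The AUC row summarizes a success plot. For a range of IoU thresholds, the success rate is the fraction of frames whose predicted box overlaps the ground truth by more than the threshold; the AUC averages these rates over the thresholds.

Below is a minimal sketch of this computation for boxes given as (x, y, width, height). It assumes 21 evenly spaced thresholds from 0 to 1, as in common OTB-style toolkits. Official benchmark toolkits may differ in details such as:

- the threshold grid,
- strict versus non-strict comparison,
- the handling of frames without a visible target.

The function names are illustrative.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes in (x, y, w, h) form."""
    inter_w = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def success_auc(pred: Sequence[Box], gt: Sequence[Box], steps: int = 21) -> float:
    """Area under the success plot: mean success rate over evenly spaced IoU thresholds."""
    overlaps = [iou(p, g) for p, g in zip(pred, gt)]
    thresholds = [i / (steps - 1) for i in range(steps)]
    # Success rate at threshold t: fraction of frames with IoU strictly above t.
    rates = [sum(o > t for o in overlaps) / len(overlaps) for t in thresholds]
    return sum(rates) / len(rates)


if __name__ == "__main__":
    gt = [(10.0, 10.0, 20.0, 20.0), (12.0, 10.0, 20.0, 20.0)]
    pred = [(10.0, 10.0, 20.0, 20.0), (22.0, 10.0, 20.0, 20.0)]
    print(f"AUC = {success_auc(pred, gt):.3f}")
```

In the toy example, the first frame is tracked perfectly (IoU 1) and the second only partially (IoU 1/3). The AUC therefore falls between 0.5 and 1, rewarding trackers whose overlaps stay high across all thresholds.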

| (%) | OPAM | ATOM [7] | DiMP-50 [8] | SiamMask [34] | Ocean [30] | CFNet [33] | SiamFC [3] | GOTURN [35] | CCOT [36] | ECO [37] | CF2 [38] | MDNet [32] |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SR0.5 | 64.1 | 63.4 | 71.7 | 58.7 | 72.1 | 40.4 | 35.3 | 37.5 | 32.8 | 30.9 | 29.7 | 30.3 |
| SR0.75 | 48.9 | 40.2 | 49.2 | 36.6 | - | 14.4 | 9.8 | 12.4 | 10.7 | 11.1 | 8.8 | 9.9 |
| AO | 56.9 | 55.6 | 61.1 | 51.4 | 61.1 | 37.4 | 34.8 | 34.7 | 32.5 | 31.6 | 31.5 | 29.9 |
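The percentages in the table above are the metrics used by GOT-10k [2]:

- Average overlap (AO): the mean IoU between predicted and ground-truth boxes.
- Success rate SR at a fixed IoU threshold (0.5 or 0.75): the fraction of frames whose IoU exceeds that threshold.

Below is a minimal sketch of both definitions, assuming per-frame IoU values have already been computed. The official GOT-10k toolkit may aggregate differently (for example, per sequence before averaging) and remains the reference for reported numbers. The toy IoU values are made up for illustration.

```python
from typing import Sequence


def average_overlap(overlaps: Sequence[float]) -> float:
    """AO: mean IoU over all evaluated frames."""
    return sum(overlaps) / len(overlaps)


def success_rate(overlaps: Sequence[float], threshold: float) -> float:
    """SR at `threshold`: fraction of frames whose IoU exceeds the threshold."""
    return sum(o > threshold for o in overlaps) / len(overlaps)


if __name__ == "__main__":
    ious = [0.9, 0.8, 0.6, 0.4, 0.0]  # made-up per-frame IoUs
    print(f"AO = {100 * average_overlap(ious):.1f}%")
    print(f"SR0.5 = {100 * success_rate(ious, 0.5):.1f}%")
    print(f"SR0.75 = {100 * success_rate(ious, 0.75):.1f}%")
```

For the toy values, this prints AO = 54.0%, SR0.5 = 60.0%, and SR0.75 = 40.0%. SR0.75 is the stricter measure and rewards precise box estimation. AO, in contrast, also reflects partial overlaps that fall below either threshold.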

| | Aspect Ratio | Background Clutter | Camera Motion | Deformation | Fast Motion | Full Occlusion | Illumination Variation | Low Resolution | Motion Blur | Out-of-View | Partial Occlusion | Rotation | Scale Variation | Viewpoint Change |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AUC | 0.549 | 0.462 | 0.564 | 0.588 | 0.412 | 0.453 | 0.570 | 0.457 | 0.539 | 0.530 | 0.524 | 0.557 | 0.551 | 0.532 |

| | Baseline | +LPSA | +TPCA | +GCA |
|---|---|---|---|---|
| AUC | 0.525 | 0.533 | 0.542 | 0.551 |
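The ablation row can be read cumulatively: each column adds one component on top of the column to its left. The "+" labels suggest this reading, and the final column's AUC of 0.551 matches OPAM's overall AUC. Under this assumption, each component's contribution is the AUC difference from the previous configuration. The short computation below only restates the reported numbers; `incremental_gains` is an illustrative helper.

```python
from typing import List, Tuple


def incremental_gains(names: List[str], auc: List[float]) -> List[Tuple[str, float]]:
    """Return (component, AUC gain over the previous configuration) pairs."""
    return [(name, cur - prev) for name, prev, cur in zip(names[1:], auc, auc[1:])]


if __name__ == "__main__":
    names = ["Baseline", "+LPSA", "+TPCA", "+GCA"]
    auc = [0.525, 0.533, 0.542, 0.551]  # values from the ablation table
    for name, gain in incremental_gains(names, auc):
        print(f"{name}: {gain:+.3f}")
    print(f"Total over baseline: {auc[-1] - auc[0]:+.3f}")
```

This prints gains of +0.008, +0.009, and +0.009, for a total of +0.026 AUC over the baseline. Under the cumulative reading, each of the three components contributes a comparable share of the improvement.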

| Module | Backbone | Region Proposal | Region Classification | OPAM | Total |
|---|---|---|---|---|---|
| Number of parameters | 11.18 M | 5.11 M | 4.96 M | 0.96 M | 22.21 M |
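The module counts add up to the stated total (11.18 + 5.11 + 4.96 + 0.96 = 22.21 M). Expressing each module as a percentage of that total shows how much of the network OPAM accounts for. The helper below is illustrative and only restates the table.

```python
from typing import Dict, Tuple


def parameter_shares(counts: Dict[str, float]) -> Tuple[float, Dict[str, float]]:
    """Return the total and each module's percentage share of it."""
    total = sum(counts.values())
    return total, {name: 100.0 * n / total for name, n in counts.items()}


if __name__ == "__main__":
    params_m = {  # parameter counts in millions, from the table above
        "Backbone": 11.18,
        "Region Proposal": 5.11,
        "Region Classification": 4.96,
        "OPAM": 0.96,
    }
    total, shares = parameter_shares(params_m)
    print(f"Total: {total:.2f} M")
    for name, pct in shares.items():
        print(f"{name}: {pct:.1f}%")
```

This reports a total of 22.21 M. The shares are about 50.3% for the backbone, 23.0% for region proposal, 22.3% for region classification, and 4.3% for OPAM.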

| Forward scenario | Total (end-to-end) | Backbone | Continuous Tracking |
|---|---|---|---|
| GFLOPs | 57.64 | 42.04 | 36.62 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Choi, J. Object Part-Aware Attention-Based Matching for Robust Visual Tracking. Signals 2025, 6, 47. https://doi.org/10.3390/signals6030047
