Rocket Launch Detection with Smartphone Audio and Transfer Learning

Abstract

1. Introduction

2. Data and Methods

2.1. Data Preparation

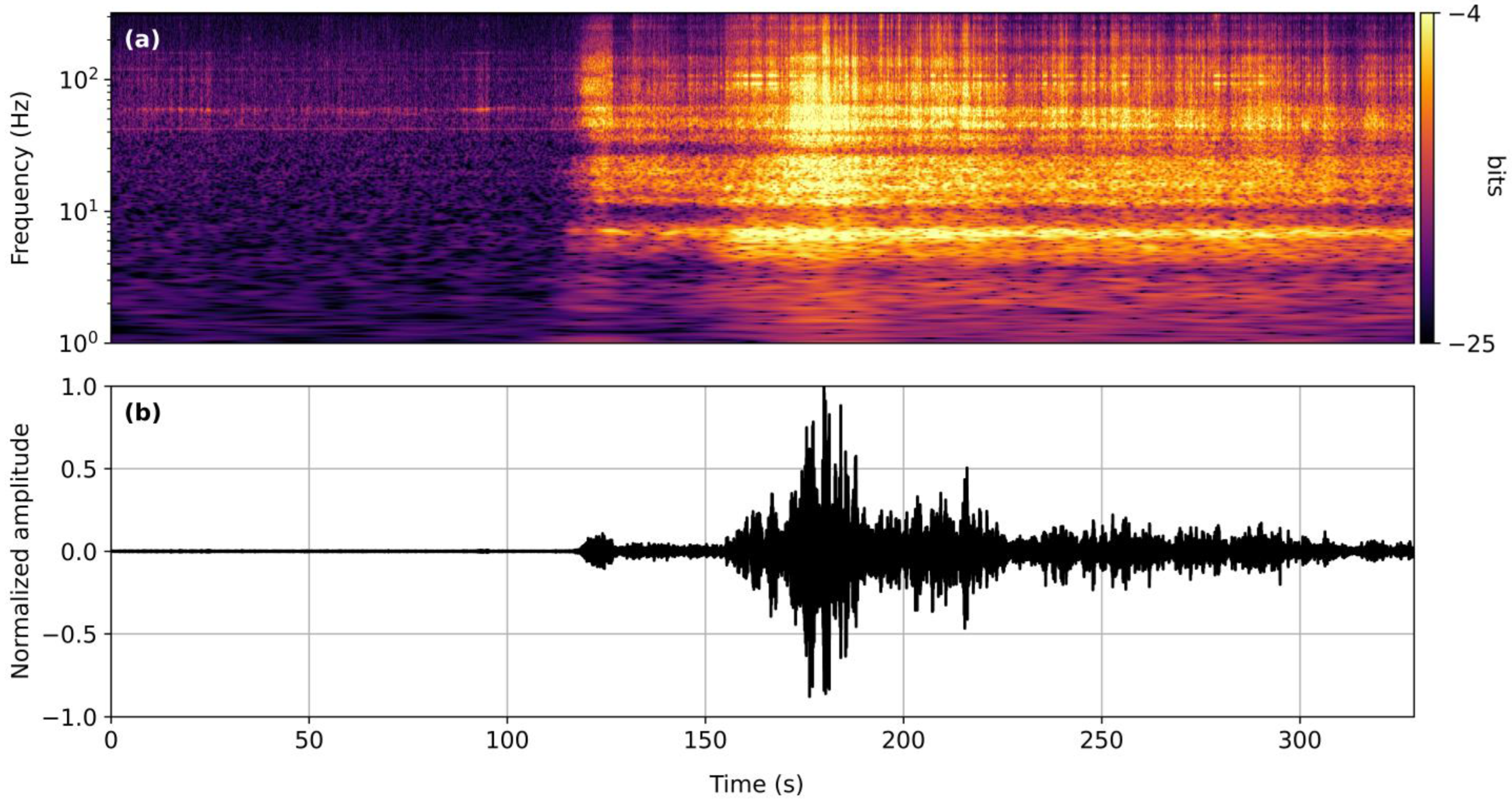

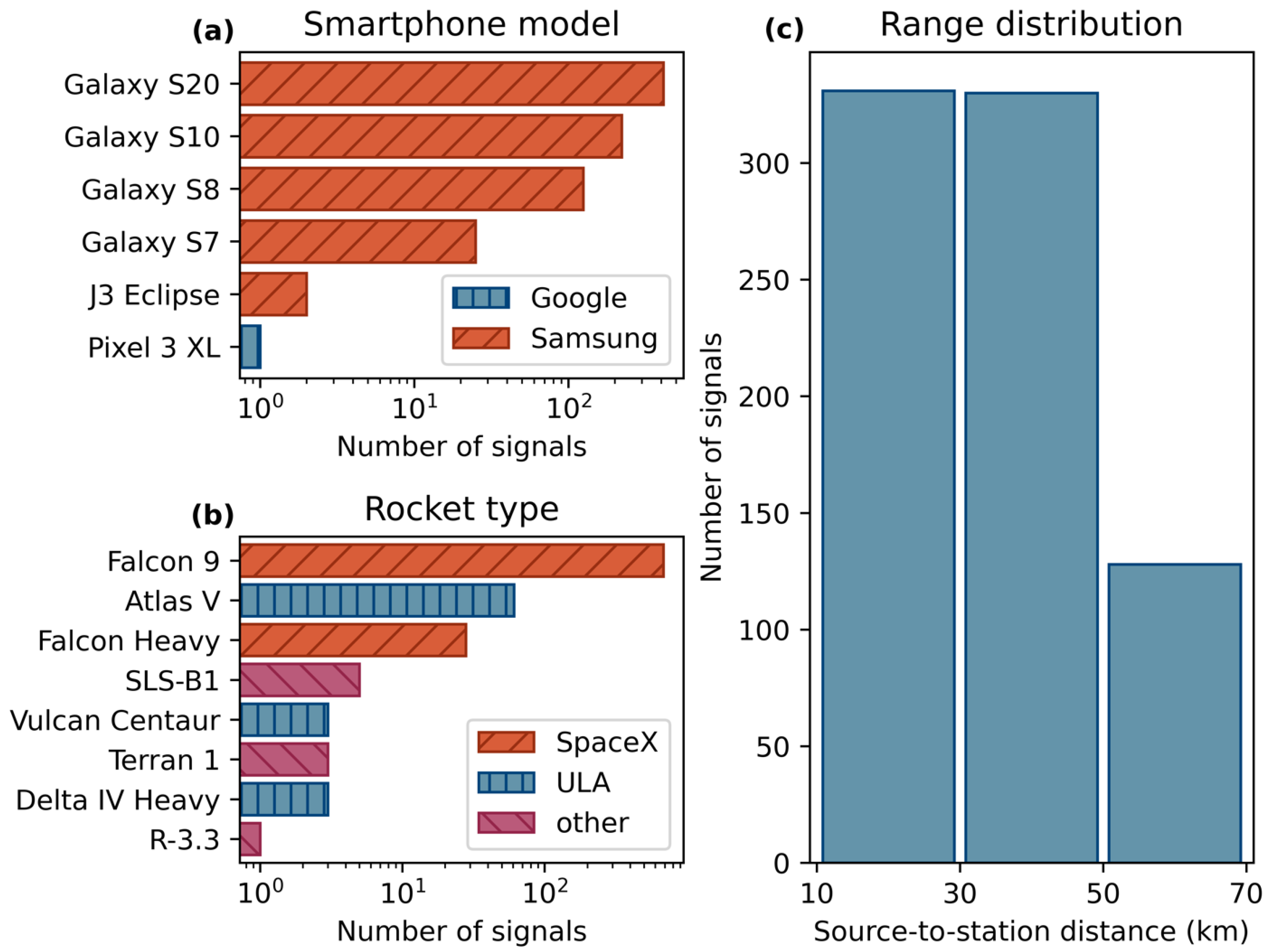

2.1.1. Aggregated Smartphone Timeseries of Rocket-Generated Acoustics (ASTRA)

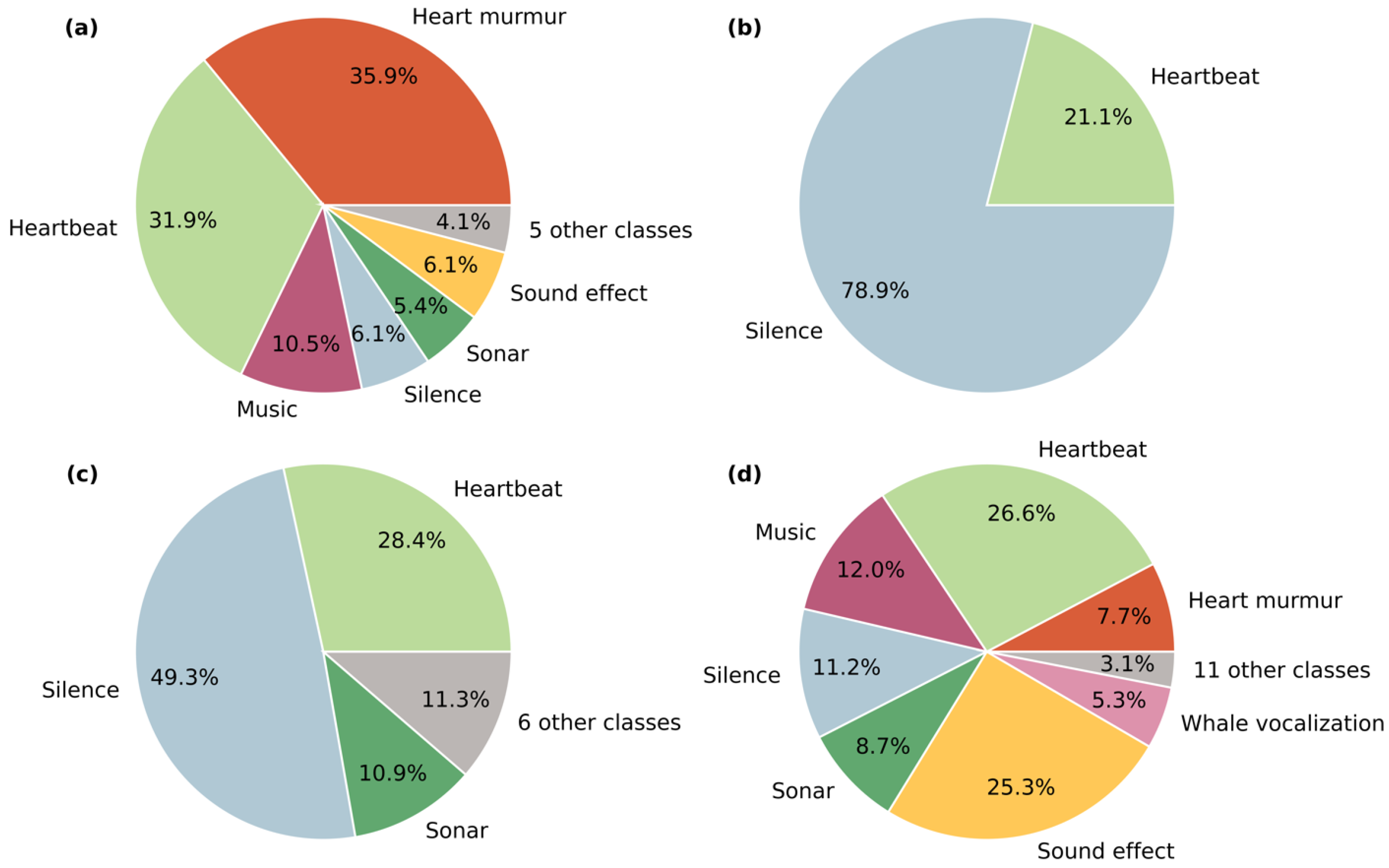

2.1.2. Smartphone High-Explosive Audio Recordings Dataset (SHAReD)

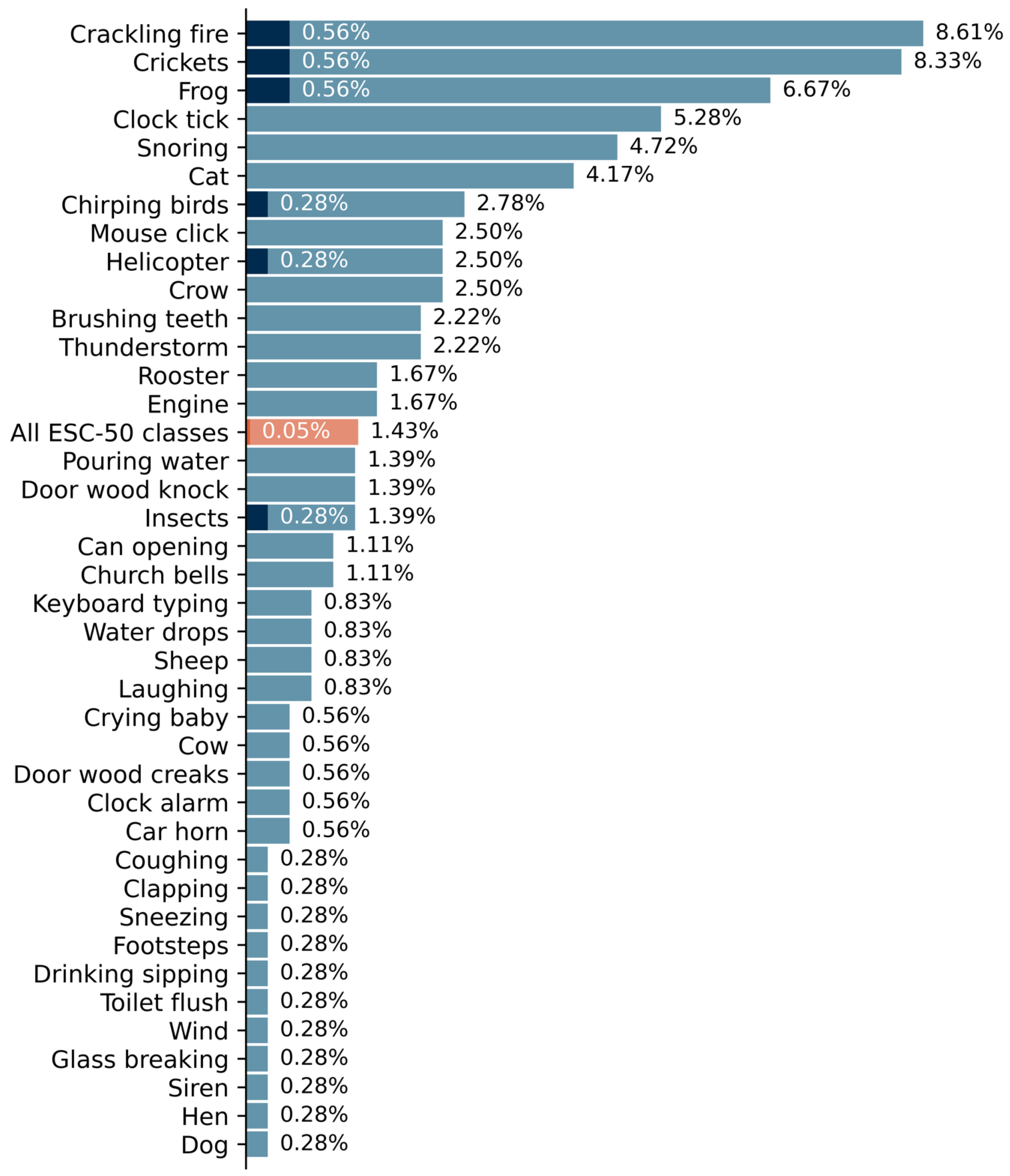

2.1.3. Environmental Sound Data from ESC-50

2.1.4. Data Fusion, Resampling, and Splitting

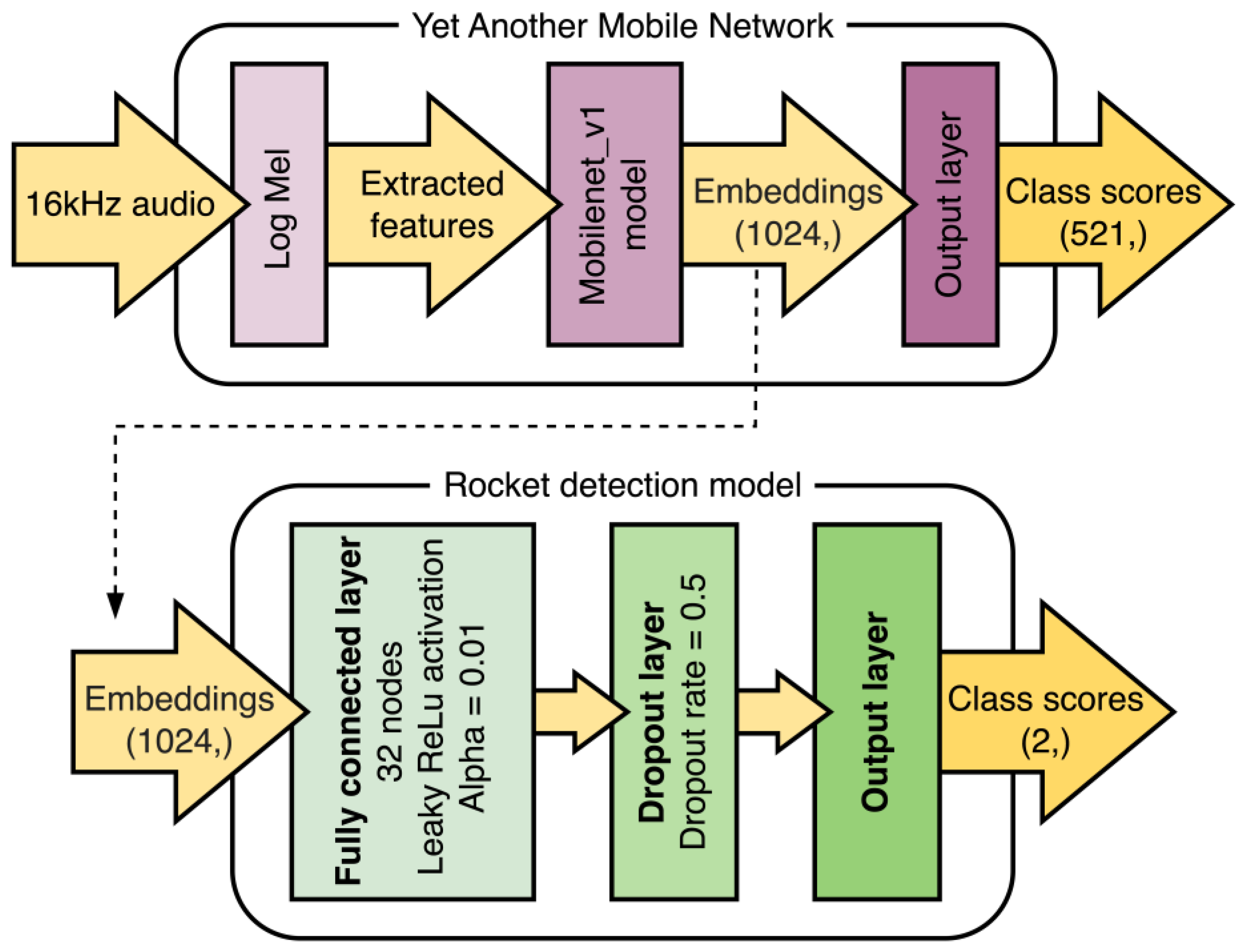

2.2. Yet Another Mobile Network (YAMNet)

2.3. Transfer Learning Model Design

3. Results

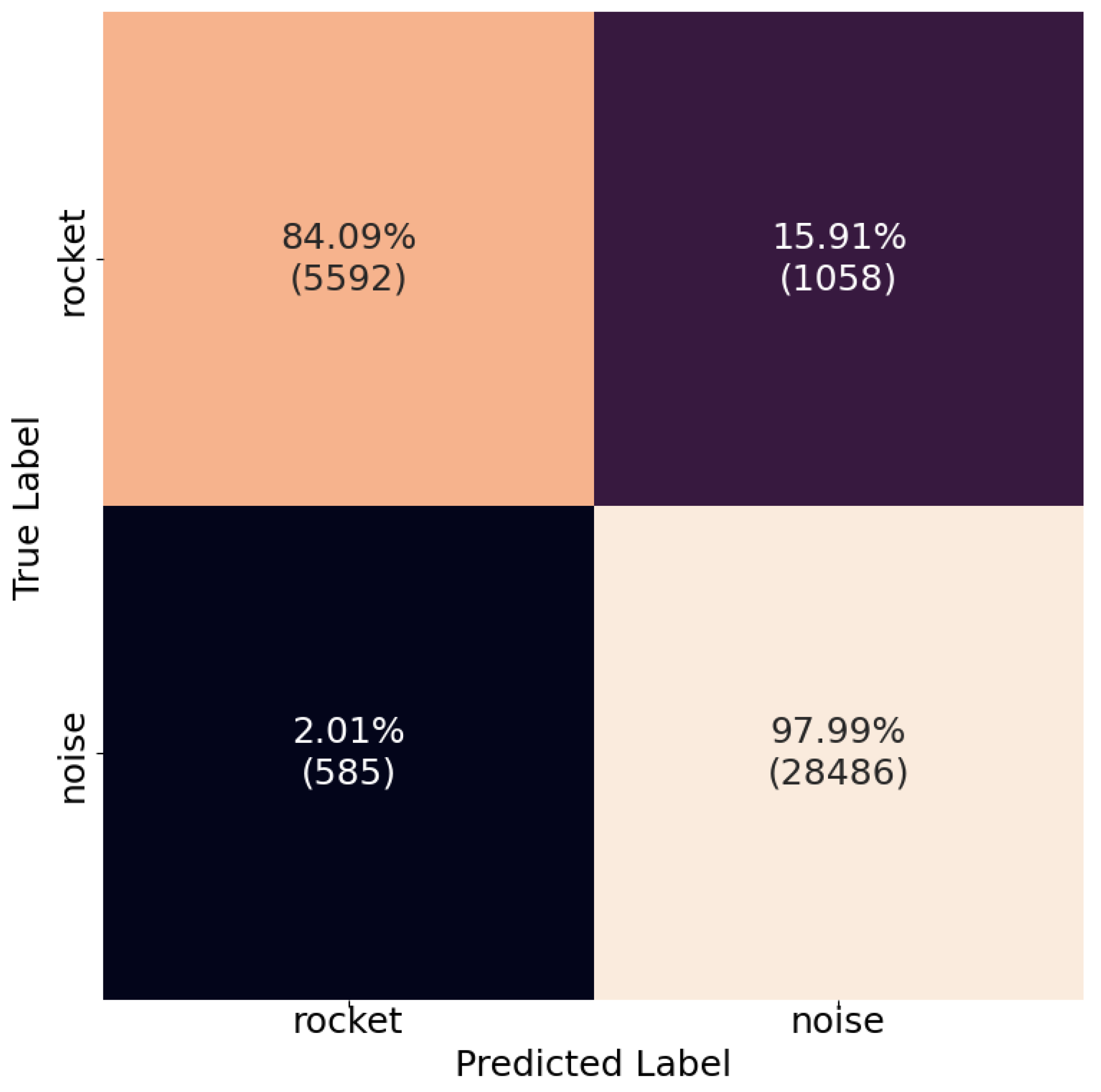

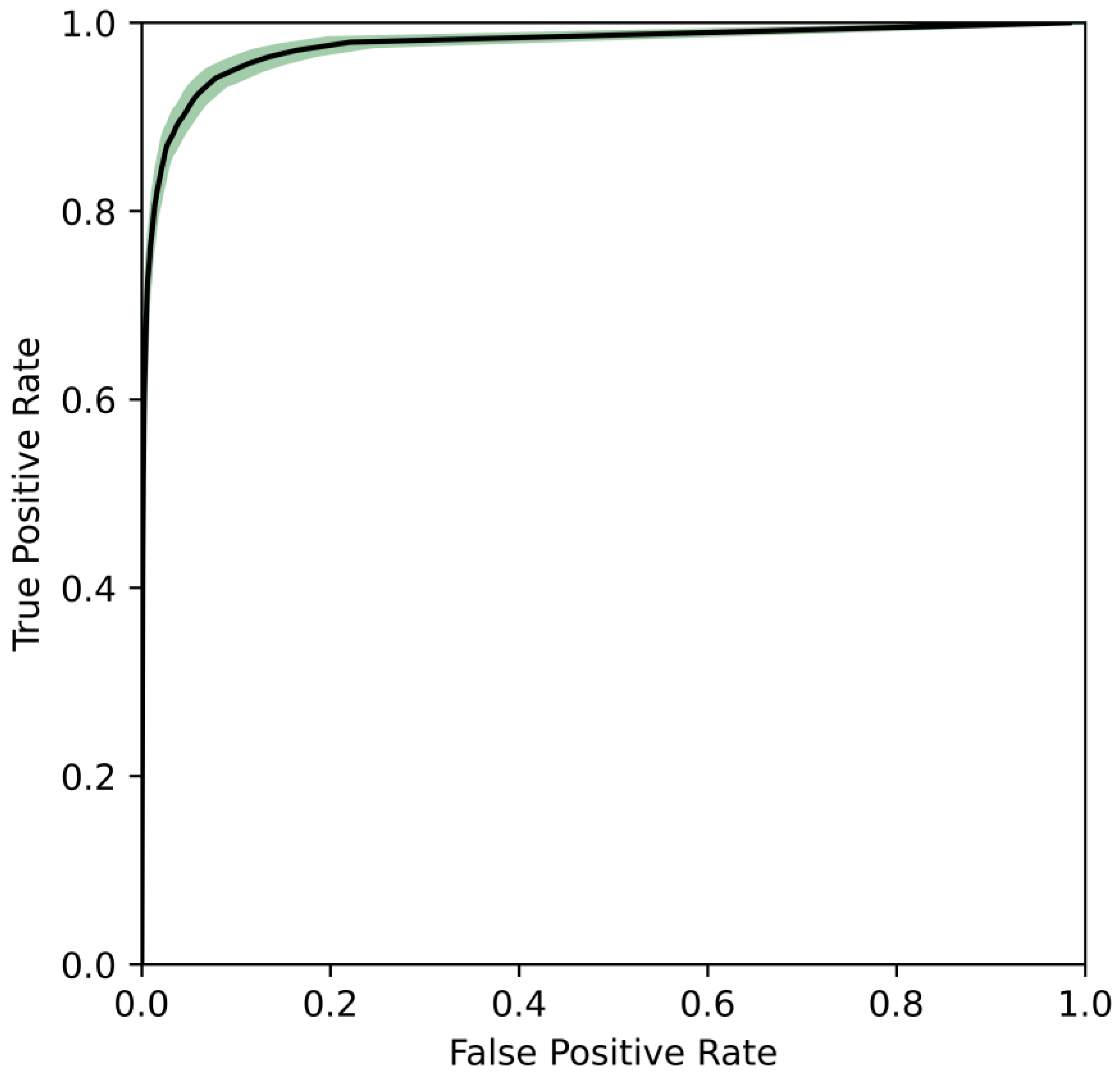

3.1. Overall Performance

3.2. Misclassification Analysis

4. Discussion

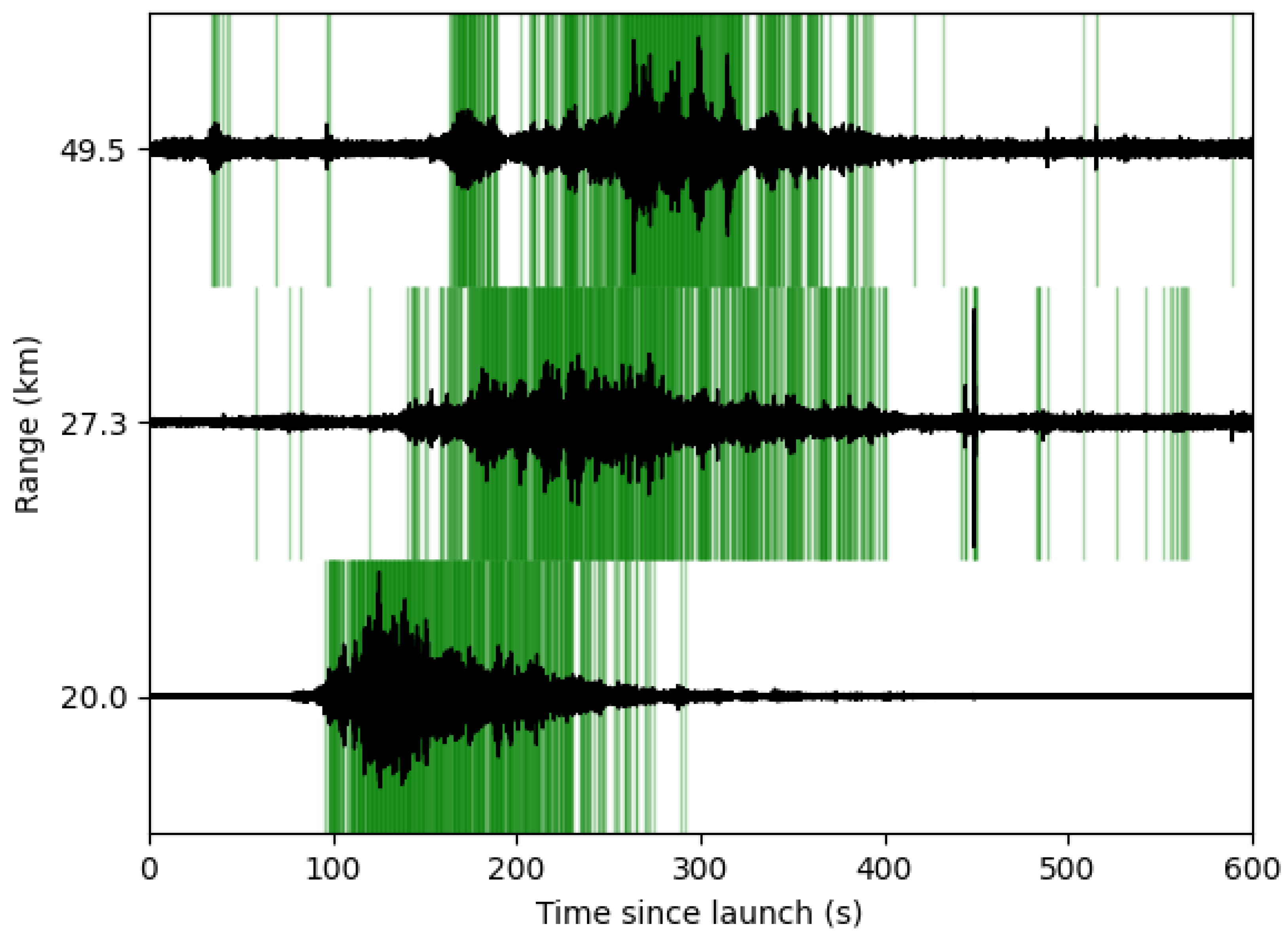

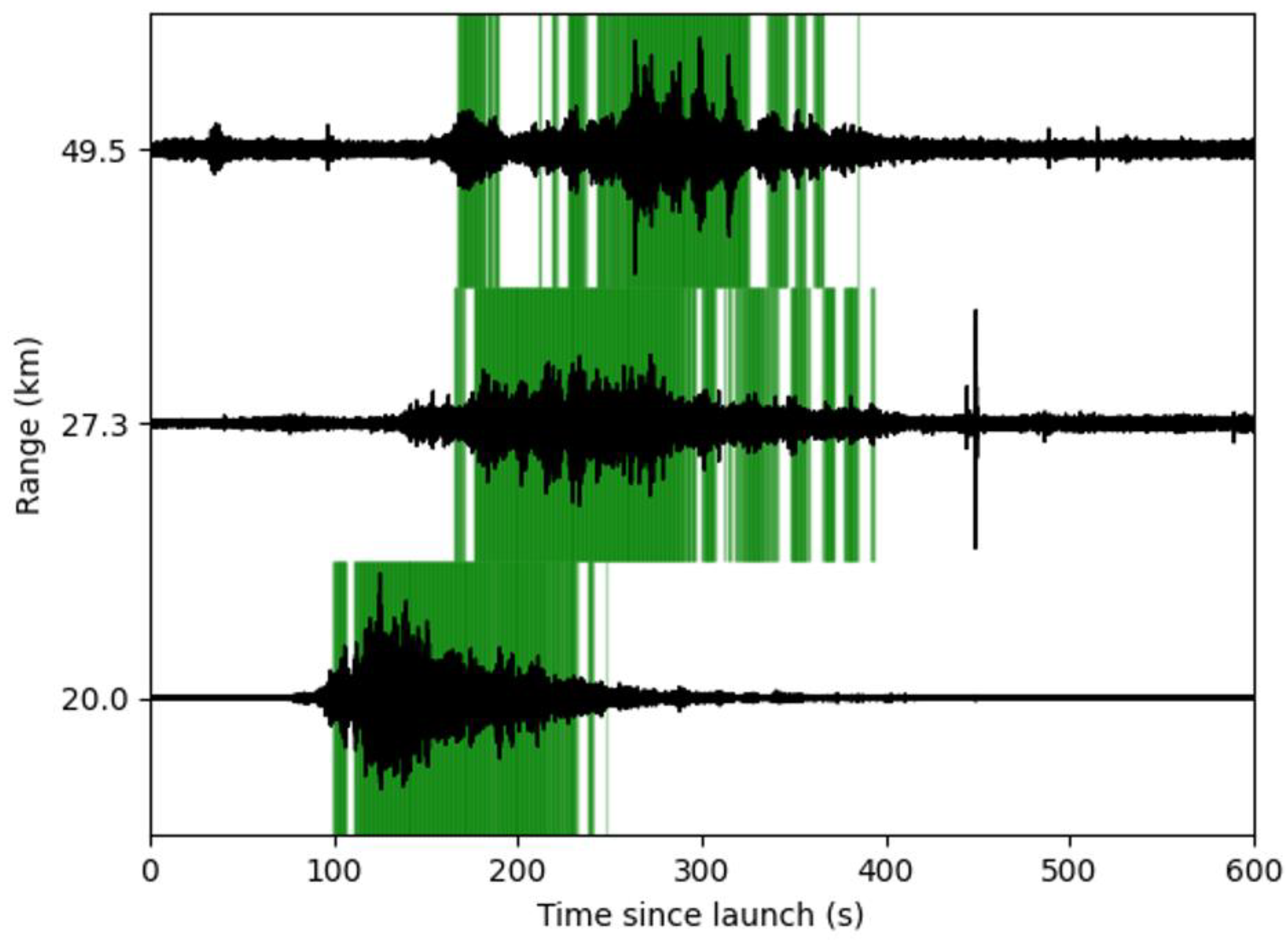

4.1. Mitigation of False Positives

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IMS | International Monitoring System |
| ASTRA | Aggregated Smartphone Timeseries of Rocket-generated Acoustics |
| SHAReD | Smartphone High-explosive Audio Recordings Dataset |
| YAMNet | Yet Another Mobile Network |
| ReLU | Rectified Linear Unit |
| MTV | Consortium for Monitoring, Technology, and Verification |
| ETI | Consortium for Enabling Technologies and Innovation |

References

- Schwardt, M.; Pilger, C.; Gaebler, P.; Hupe, P.; Ceranna, L. Natural and Anthropogenic Sources of Seismic, Hydroacoustic, and Infrasonic Waves: Waveforms and Spectral Characteristics (and Their Applicability for Sensor Calibration). Surv. Geophys. 2022, 43, 1265–1361.

- Hupe, P.; Ceranna, L.; Le Pichon, A.; Matoza, R.S.; Mialle, P. International Monitoring System Infrasound Data Products for Atmospheric Studies and Civilian Applications. Earth Syst. Sci. Data 2022, 14, 4201–4230.

- Pilger, C.; Hupe, P.; Gaebler, P.; Ceranna, L. 1001 Rocket Launches for Space Missions and Their Infrasonic Signature. Geophys. Res. Lett. 2021, 48, e2020GL092262.

- Takazawa, S.K.; Popenhagen, S.K.; Ocampo Giraldo, L.A.; Cárdenas, E.S.; Hix, J.D.; Thompson, S.J.; Chichester, D.L.; Garcés, M.A. A Comparison of Smartphone and Infrasound Microphone Data from a Fuel Air Explosive and a High Explosive. J. Acoust. Soc. Am. 2024, 156, 1509–1523.

- Thandu, S.C.; Chellappan, S.; Yin, Z. Ranging Explosion Events Using Smartphones. In Proceedings of the 2015 IEEE 11th International Conference on Wireless and Mobile Computing, Networking and Communications (WiMob), Abu Dhabi, United Arab Emirates, 19–21 October 2015; IEEE: New York, NY, USA, 2015; pp. 492–499.

- Thandu, S.C.; Bharti, P.; Chellappan, S.; Yin, Z. Leveraging Multi-Modal Smartphone Sensors for Ranging and Estimating the Intensity of Explosion Events. Pervasive Mob. Comput. 2017, 40, 185–204.

- Popenhagen, S.K. Aggregated Smartphone Timeseries of Rocket-Generated Acoustics (ASTRA). Available online: https://doi.org/10.7910/DVN/ZKIS2K (accessed on 21 November 2024).

- Popenhagen, S.K.; Garcés, M.A. Acoustic Rocket Signatures Collected by Smartphones. Signals 2025, 6, 5.

- Takazawa, S.K. Smartphone High-Explosive Audio Recordings Dataset (SHAReD). Hosted on Harvard Dataverse. Available online: https://doi.org/10.7910/DVN/ROWODP (accessed on 28 January 2025).

- Piczak, K.J. ESC: Dataset for Environmental Sound Classification. In Proceedings of the 23rd ACM International Conference on Multimedia; Association for Computing Machinery, Brisbane, Australia, 26–30 October 2015; pp. 1015–1018.

- Plakal, M.; Ellis, D. YAMNet 2019. Available online: https://github.com/tensorflow/models/tree/master/research/audioset/yamnet (accessed on 13 October 2023).

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Gemmeke, J.F.; Ellis, D.P.W.; Freedman, D.; Jansen, A.; Lawrence, W.; Moore, R.C.; Plakal, M.; Ritter, M. Audio Set: An Ontology and Human-Labeled Dataset for Audio Events. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; IEEE: New York, NY, USA, 2017; pp. 776–780.

- Bozinovski, S. Reminder of the First Paper on Transfer Learning in Neural Networks, 1976. Informatica 2020, 44, 291–302.

- Bozinovski, S.; Fulgosi, A. The influence of pattern similarity and transfer of learning upon training of a base perceptron B2. In Proceedings of Symposium Informatica 3-121-5, Bled, Slovenia, 7–10 June 1976.

- Brusa, E.; Delprete, C.; Di Maggio, L.G. Deep Transfer Learning for Machine Diagnosis: From Sound and Music Recognition to Bearing Fault Detection. Appl. Sci. 2021, 11, 11663.

- Tsalera, E.; Papadakis, A.; Samarakou, M. Comparison of Pre-Trained CNNs for Audio Classification Using Transfer Learning. J. Sens. Actuator Netw. 2021, 10, 72.

- Ashurov, A.; Zhou, Y.; Shi, L.; Zhao, Y.; Liu, H. Environmental Sound Classification Based on Transfer-Learning Techniques with Multiple Optimizers. Electronics 2022, 11, 2279.

- Hyun, S.H. Sound-Event Detection of Water-Usage Activities Using Transfer Learning. Sensors 2023, 24, 22.

- Pan, S.J.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

- The Pandas Development Team Pandas-Dev/Pandas: Pandas. Available online: https://doi.org/10.5281/zenodo.3509134 (accessed on 21 November 2024).

- Hersbach, H.; Bell, B.; Berrisford, P.; Biavati, G.; Horányi, A.; Muñoz Sabater, J.; Nicolas, J.; Peubey, C.; Radu, R.; Rozum, I.; et al. ERA5 Hourly Data on Single Levels from 1940 to Present. Copernic. Clim. Change Serv. (C3S) Clim. Data Store (CDS) 2023, 10.

- Copernicus Climate Change Service (2023): ERA5 Hourly Data on Single Levels from 1940 to Present. Available online: https://cds.climate.copernicus.eu/datasets/reanalysis-era5-single-levels (accessed on 26 November 2024).

| Event | No. False Negatives | No. Positive Samples | No. True Positives |
|---|---|---|---|
| ASTRA_218 | 7 | 30 | 21 |
| ASTRA_219 | 3 | 15 | 12 |
| ASTRA_220 | 1 | 25 | 24 |
| ASTRA_221 | 1 | 10 | 9 |
| ASTRA_222 | 10 | 25 | 15 |
| ASTRA_224 | 5 | 20 | 15 |
| ASTRA_225 | 4 | 25 | 21 |
| ASTRA_226 | 2 | 20 | 18 |
| ASTRA_227 | 3 | 10 | 7 |
| ASTRA_229 | 5 | 15 | 10 |
| ASTRA_230 | 1 | 20 | 19 |
| ASTRA_232 | 4 | 20 | 16 |
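
The per-event counts in the table above can be turned into a true positive rate (recall) for each event. The following is a minimal sketch, assuming recall is defined as true positives divided by positive samples; the function name `true_positive_rate` and the choice of three example rows are illustrative and not taken from the paper.

```python
# Counts copied from three rows of the table above:
# event -> (false negatives, positive samples, true positives)
rows = {
    "ASTRA_220": (1, 25, 24),
    "ASTRA_222": (10, 25, 15),
    "ASTRA_227": (3, 10, 7),
}


def true_positive_rate(false_neg: int, positives: int, true_pos: int) -> float:
    """Return true positives / positive samples for one event.

    Hypothetical helper: it also checks that the false negatives and
    true positives add up to the number of positive samples.
    """
    if false_neg + true_pos != positives:
        raise ValueError("false negatives + true positives != positive samples")
    return true_pos / positives


# Per-event recall for the selected rows
rates = {event: true_positive_rate(*counts) for event, counts in rows.items()}

for event, rate in rates.items():
    print(f"{event}: {rate:.2f}")
```

Under this definition, events such as ASTRA_222 stand out because a large share of their positive samples were missed.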

| Event | No. False Positives | No. Negative Samples | No. True Negatives |
|---|---|---|---|
| ASTRA_225 | 5 | 50 | 45 |
| ESC50_1660 | 3 | 5 | 2 |
| ESC50_1667 | 1 | 5 | 4 |
| ESC50_1675 | 1 | 5 | 4 |
| ESC50_1683 | 1 | 5 | 4 |
| ESC50_1731 | 2 | 5 | 3 |
| ESC50_1761 | 1 | 5 | 4 |
| ESC50_1779 | 2 | 5 | 3 |
| ESC50_1808 | 2 | 5 | 3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Popenhagen, S.K.; Takazawa, S.K.; Garcés, M.A. Rocket Launch Detection with Smartphone Audio and Transfer Learning. Signals 2025, 6, 41. https://doi.org/10.3390/signals6030041
