Multi-Instance Multi-Scale Graph Attention Neural Net with Label Semantic Embeddings for Instrument Recognition

Abstract

1. Introduction

- (1) The proposal of an instance correlation graph structure for instrument representation: This graph transforms the sequential, linear structure of music audio into a two-dimensional graph structure that captures the similarity between instances containing similar instruments as well as between unrelated instances.

- (2) The proposal of a multi-instance multi-scale graph attention neural network (MMGAT) with label semantic embeddings based on the instance correlation graphs and label correlations: MMGAT combines a graph attention network operating at different scales on the instance correlation graphs with a masked label semantic autoencoder for instrument recognition.
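
As a rough illustration of the first contribution, the sketch below turns a sequence of per-instance feature vectors into a graph by linking each instance to its most similar peers. The cosine similarity, the neighbour count `k`, and the function name `build_instance_graph` are assumptions made for illustration only; they are not taken from the paper, which may construct its graphs differently.

```python
import numpy as np

def build_instance_graph(features: np.ndarray, k: int = 2) -> np.ndarray:
    """Return a symmetric 0/1 adjacency matrix linking each instance to its k most similar ones.

    features: (n_instances, dim) array, one row per audio segment (hypothetical input).
    """
    # Cosine similarity between all pairs of instances (an assumed similarity measure).
    norms = np.linalg.norm(features, axis=1, keepdims=True)
    unit = features / np.clip(norms, 1e-12, None)
    sim = unit @ unit.T
    np.fill_diagonal(sim, -np.inf)  # exclude self-loops from neighbour selection

    n = features.shape[0]
    adj = np.zeros((n, n), dtype=np.int8)
    neighbours = np.argsort(-sim, axis=1)[:, :k]  # indices of the k most similar instances
    rows = np.repeat(np.arange(n), k)
    adj[rows, neighbours.ravel()] = 1
    return np.maximum(adj, adj.T)  # symmetrise: keep an edge if either endpoint chose it

# Toy input: two pairs of near-identical segments.
toy = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
graph = build_instance_graph(toy, k=1)
```

With `k=1`, segments 0 and 1 end up connected to each other, as do segments 2 and 3, so instances that are adjacent in feature space become neighbours in the graph regardless of their position in the audio sequence.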

2. Related Works

3. The Proposed MMGAT Methods

3.1. Building Instance Correlation Graph for MMGAT

3.2. The Structure of the Proposed MMGAT

3.2.1. The Designed Label Correlation Embeddings

3.2.2. The Introduction of the Auxiliary Classifier

3.3. MMGAT Loss Function

4. Experiments

4.1. Dataset

- (1) Class-imbalance-resistant micro-averaging for instrument-level fidelity;

- (2) Macro-averaging sensitive to rare classes for taxonomy-level fairness.
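
The two averaging schemes listed above can be made concrete with a small sketch. It computes both scores from binary multi-label matrices using the standard definitions; the function name `f1_micro_macro` and the toy labels are illustrative assumptions, not the evaluation code used in the paper.

```python
import numpy as np

def f1_micro_macro(y_true: np.ndarray, y_pred: np.ndarray) -> tuple[float, float]:
    """Micro- and macro-averaged F1 for 0/1 label matrices of shape (n_samples, n_classes)."""
    tp = np.sum((y_true == 1) & (y_pred == 1), axis=0).astype(float)
    fp = np.sum((y_true == 0) & (y_pred == 1), axis=0).astype(float)
    fn = np.sum((y_true == 1) & (y_pred == 0), axis=0).astype(float)

    # Micro: pool the counts over all classes, then compute a single F1.
    denom = 2 * tp.sum() + fp.sum() + fn.sum()
    micro = 2 * tp.sum() / denom if denom > 0 else 0.0

    # Macro: compute F1 per class, then take the unweighted mean.
    per_class_denom = 2 * tp + fp + fn
    per_class = np.divide(2 * tp, per_class_denom,
                          out=np.zeros_like(tp), where=per_class_denom > 0)
    return float(micro), float(per_class.mean())

# Toy data: class 0 is frequent and always detected, class 1 is rare and missed.
y_true = np.array([[1, 0], [1, 0], [1, 0], [0, 1]])
y_pred = np.array([[1, 0], [1, 0], [1, 0], [0, 0]])
micro, macro = f1_micro_macro(y_true, y_pred)
```

In this toy case the micro score is 6/7 (about 0.86) while the macro score is 0.5, which shows how a single missed rare class pulls the macro average down far more than the micro average.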

4.2. Experiment Analysis

4.3. Ablation Experiment

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Barbedo, J.G.A.; Tzanetakis, G. Musical instrument classification using individual partials. IEEE Trans. Audio Speech Lang. Process. 2010, 19, 111–122. [Google Scholar] [CrossRef]

- Wang, D.L.; Chen, J. Supervised speech separation based on deep learning: An overview. IEEE/ACM Trans. Audio Speech Lang. Process. 2018, 26, 1702–1726. [Google Scholar] [CrossRef] [PubMed]

- Kratimenos, A.; Avramidis, K.; Garoufis, C.; Zlatintsi, A.; Maragos, P. Augmentation methods on monophonic audio for instrument classification in polyphonic music. In Proceedings of the 28th European Signal Processing Conference (EUSIPCO), Amsterdam, The Netherlands, 18–21 January 2021; pp. 156–160. [Google Scholar]

- Tiemeijer, P.; Shahsavari, M.; Fazlali, M. Towards Music Instrument Classification using Convolutional Neural Networks. In Proceedings of the IEEE International Conference on Omni-layer Intelligent Systems (COINS), London, UK, 29–31 July 2024; pp. 1–6. [Google Scholar]

- Szeliga, D.; Tarasiuk, P.; Stasiak, B.; Szczepaniak, P.S. Musical Instrument Recognition with a Convolutional Neural Network and Staged Training. Procedia Comput. Sci. 2022, 207, 2493–2502. [Google Scholar] [CrossRef]

- Zhang, J.; Wei, T.; Zhang, M.-L. Label-specific time-frequency energy-based neural network for instrument recognition. IEEE Trans. Cybern. 2024, 54, 7080–7093. [Google Scholar] [CrossRef] [PubMed]

- Banerjee, S.; Strand, R. Lifelong learning with dynamic convolutions for glioma segmentation from multi-modal MRI. In Medical Imaging 2023: Image Processing; SPIE: Bellingham, WA, USA, 2023; Volume 12464, pp. 821–824. [Google Scholar]

- Reghunath, L.C.; Rajan, R. Transformer-based ensemble method for multiple predominant instruments recognition in polyphonic music. EURASIP J. Audio Speech Music Process. 2022, 1, 11. [Google Scholar] [CrossRef]

- Lekshmi, C.R.; Rajeev, R. Multiple Predominant Instruments Recognition in Polyphonic Music Using Spectro/Modgd-gram Fusion. Circuits Syst. Signal Process. 2023, 42, 3464–3484. [Google Scholar] [CrossRef]

- Yu, D.; Duan, H.; Fang, J.; Zeng, B. Predominant Instrument Recognition Based on Deep Neural Network With Auxiliary Classification. IEEE/ACM Trans. Audio Speech Lang. Process. 2020, 28, 852–861. [Google Scholar] [CrossRef]

- Joder, C.; Essid, S.; Richard, G. Temporal Integration for Audio Classification With Application to Musical Instrument Classification. IEEE Trans. Audio Speech Lang. Process. 2009, 17, 174–186. [Google Scholar] [CrossRef]

- Duan, Z.; Pardo, B.; Daudet, L. A novel cepstral representation for timbre modeling of sound sources in polyphonic mixtures. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing, Florence, Italy, 4–9 May 2014; pp. 7495–7499. [Google Scholar]

- Eggink, J.; Brown, G. Using instrument recognition for melody extraction from polyphonic audio. J. Acoust. Soc. Amer. 2005, 118, 2032. [Google Scholar] [CrossRef]

- Gururani, S.; Summers, C.; Lerch, A. Instrument activity detection in polyphonic music using deep neural networks. In Proceedings of the International Society for Music Information Retrieval Conference, Paris, France, 23–27 September 2018; pp. 577–584. [Google Scholar]

- Han, Y.; Kim, J.; Lee, K. Deep convolutional neural networks for predominant instrument recognition in polyphonic music. IEEE/ACM Trans. Audio Speech Lang. Process. 2016, 25, 208–221. [Google Scholar] [CrossRef]

- Hung, Y.N.; Chen, Y.A.; Yang, Y.H. Multitask learning for frame-level instrument recognition. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 381–385. [Google Scholar]

- Mesaros, A.; Heittola, T.; Virtanen, T.; Plumbley, M.D. Sound event detection: A tutorial. IEEE Signal Process. Mag. 2021, 38, 67–83. [Google Scholar] [CrossRef]

- Pavl, S.; Craus, M. Reaction-diffusion model applied to enhancing U-Net accuracy for semantic image segmentation. Discret. Contin. Dyn. Syst.-S 2023, 16, 54–74. [Google Scholar] [CrossRef]

- Yang, J.; Zhou, K.; Li, Y.; Liu, Z. Generalized out-of-distribution detection: A survey. Int. J. Comput. Vis. 2024, 132, 5635–5662. [Google Scholar] [CrossRef]

- Girin, L.; Leglaive, S.; Bie, X.; Diard, J.; Hueber, T.; Alameda-Pineda, X. Dynamical variational autoencoders: A comprehensive review. arXiv 2020, arXiv:2008.12595. [Google Scholar]

- Kim, J.; Kong, J.; Son, J. Conditional variational autoencoder with adversarial learning for end-to-end text-to-speech. In Proceedings of the International Conference on Machine Learning, Vienna, Austria, 18–24 July 2021; pp. 5530–5540. [Google Scholar]

- Grosche, P.; Mu, M.; Kurth, F. Cyclic tempogram—A mid-level tempo representation for music signals. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Dallas, TX, USA, 14–19 March 2010. [Google Scholar]

- Nam, J.; Herrera, J.; Slaney, M.; Smith, J.O., III. Learning sparse feature representations for music annotation and retrieval. In Proceedings of the 13th International Society for Music Information Retrieval Conference, Porto, Portugal, 8–12 October 2012; pp. 565–570. [Google Scholar]

- Humphrey, E.; Durand, S.; McFee, B. OpenMIC-2018: An Open Data-set for Multiple Instrument Recognition. In Proceedings of the ISMIR 2018, Paris, France, 23–27 September 2018; pp. 438–444. [Google Scholar]

- Essid, S.; Richard, G.; David, B. Musical instrument recognition by pairwise classification strategies. IEEE Trans. Audio Speech Lang. Process. 2006, 14, 1401–1412. [Google Scholar] [CrossRef]

- Bosch, J.J.; Janer, J.; Fuhrmann, F.; Herrera, P. A comparison of sound segregation techniques for predominant instrument recognition in musical audio signals. In Proceedings of the 13th International Society for Music Information Retrieval Conference, Porto, Portugal, 8–12 October 2012; pp. 559–564. [Google Scholar]

- Plchot, O.; Burget, L.; Aronowitz, H.; Matëjka, P. Audio enhancing with DNN autoencoder for speaker recognition. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Shanghai, China, 20–25 March 2016; pp. 5090–5094. [Google Scholar]

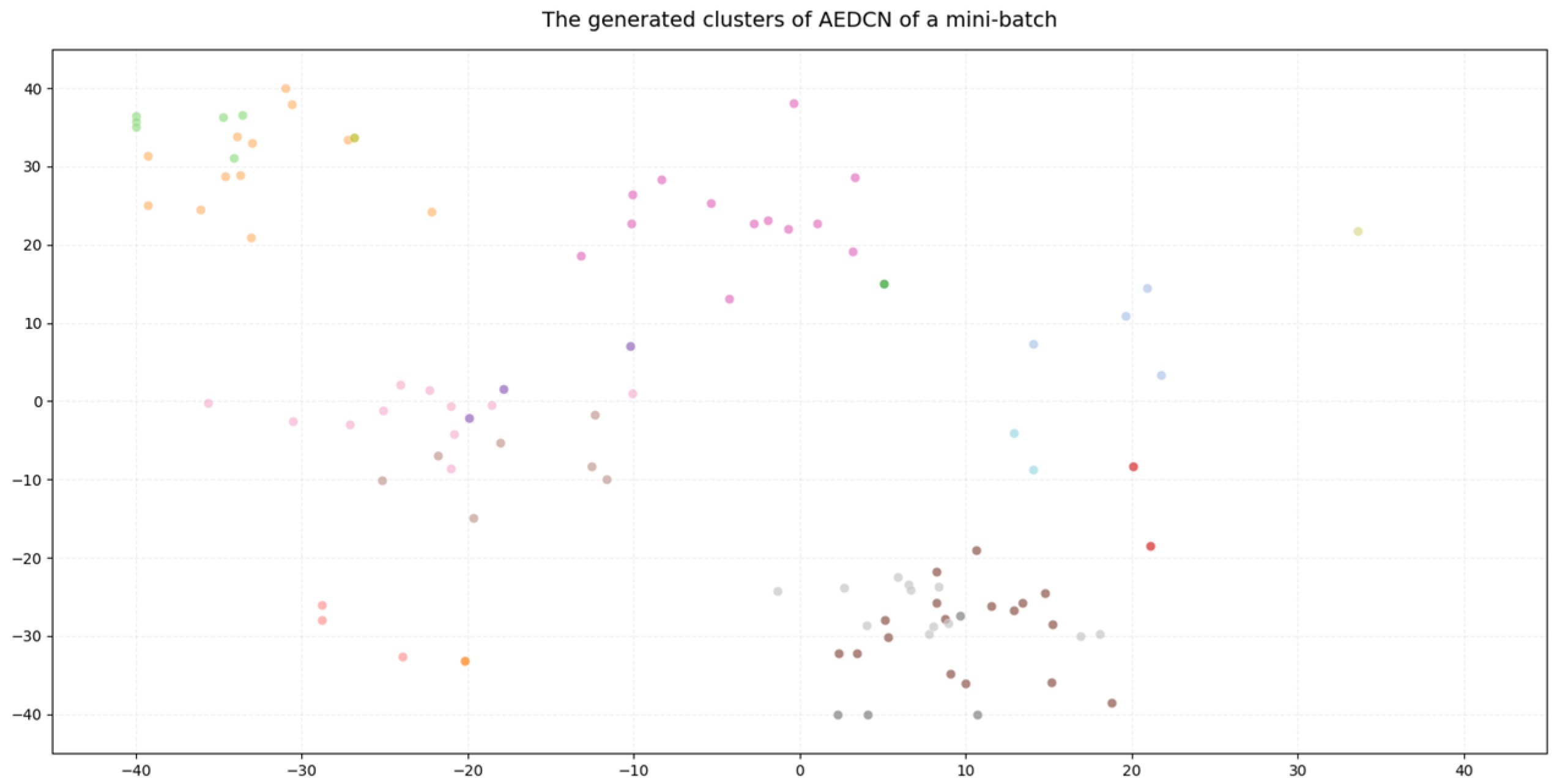

- Zhang, J.; Bai, N. Augmentation Embedded Deep Convolutional Neural Network for Predominant Instrument Recognition. Appl. Sci. 2023, 13, 10189. [Google Scholar] [CrossRef]

- Van der, L.; Hinton, G. Visualizing high-dimensional data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605. [Google Scholar]

| Instruments | Abbreviations | Training samples |
|---|---|---|
| accordion | acc | 374 |
| banjo | ban | 592 |
| bass | bas | 415 |
| cello | cel | 598 |
| clarinet | cla | 396 |
| cymbals | cym | 814 |
| drums | dru | 828 |
| flute | flu | 472 |
| guitar | gur | 1066 |
| mallet_percussion | mal | 522 |
| mandolin | mad | 652 |
| organ | org | 482 |
| piano | pia | 885 |
| saxophone | sax | 830 |
| synthesizer | syn | 823 |
| trombone | tro | 635 |
| trumpet | tru | 828 |
| ukulele | uku | 1127 |
| violin | vio | 779 |
| voice | voi | 764 |

| Instruments | Abbreviations | Auxiliary Classes |
|---|---|---|
| accordion | acc | Soft onset |
| banjo | ban | Hard onset |
| bass | bas | Soft onset |
| cello | cel | Soft onset |
| clarinet | cla | Soft onset |
| cymbals | cym | Hard onset |
| drums | dru | Hard onset |
| flute | flu | Soft onset |
| guitar | gur | Hard onset |
| mallet_percussion | mal | Hard onset |
| mandolin | mad | Hard onset |
| organ | org | Soft onset |
| piano | pia | Hard onset |
| saxophone | sax | Soft onset |
| synthesizer | syn | Hard onset |
| trombone | tro | Hard onset |
| trumpet | tru | Hard onset |
| ukulele | uku | Hard onset |
| violin | vio | Soft onset |
| voice | voi | Other |
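
One way to read the table above is as a lookup from instrument labels to targets for the auxiliary classifier. The sketch below encodes that lookup and derives a multi-hot auxiliary target for a clip. The aggregation rule (an auxiliary class is active if any instrument in the clip belongs to it), the output ordering `AUX_ORDER`, and the function name `auxiliary_target` are assumptions for illustration; the paper's exact procedure is not shown here.

```python
# Onset family of each instrument, copied from the table above.
AUX_CLASS = {
    "accordion": "Soft onset", "banjo": "Hard onset", "bass": "Soft onset",
    "cello": "Soft onset", "clarinet": "Soft onset", "cymbals": "Hard onset",
    "drums": "Hard onset", "flute": "Soft onset", "guitar": "Hard onset",
    "mallet_percussion": "Hard onset", "mandolin": "Hard onset",
    "organ": "Soft onset", "piano": "Hard onset", "saxophone": "Soft onset",
    "synthesizer": "Hard onset", "trombone": "Hard onset",
    "trumpet": "Hard onset", "ukulele": "Hard onset", "violin": "Soft onset",
    "voice": "Other",
}

# Assumed ordering of the auxiliary outputs (hypothetical).
AUX_ORDER = ("Soft onset", "Hard onset", "Other")

def auxiliary_target(instruments):
    """Multi-hot auxiliary target: a class is 1 if any listed instrument belongs to it (assumed rule)."""
    active = {AUX_CLASS[name] for name in instruments}
    return [1 if cls in active else 0 for cls in AUX_ORDER]

# A clip labelled with flute and piano activates both onset families.
target = auxiliary_target(["flute", "piano"])
```

Under these assumptions a flute-and-piano clip yields `[1, 1, 0]`, while a voice-only clip yields `[0, 0, 1]`.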

| Model | F1 Micro | F1 Macro |
|---|---|---|
| SVM [25] | 0.33 | 0.24 |
| Bosch et al. [26] | 0.46 | 0.40 |
| MTF-DNN (2018) [14] | 0.30 | 0.26 |
| Audio DNN [27] | 0.52 | 0.50 |
| ConvNet (2017) [15] | 0.59 | 0.51 |
| Multi-task ConvNet (2020) [10] | 0.61 | 0.56 |
| Kratimenos et al. (2022) [8] | 0.60 | 0.53 |
| WaveGAN ConvNet (2021) | 0.60 | 0.60 |
| Voting-Swin-T (2022) | 0.60 | 0.60 |
| Staged trained ConvNet | 0.60 | 0.60 |
| VAE augmentation ConvNet | 0.61 | 0.60 |
| AEDCN (2023) [28] | 0.61 | 0.61 |
| MMGAT | 0.63 | 0.62 |

| Model | acc | ban | bas | cel | cla | cym | dru | flu | gur | mal | mad | org | pia | sax | syn | tru | tro | uku | vio | voi | F1 Macro |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SVM | 0.19 | 0.21 | 0.13 | 0.17 | 0.27 | 0.46 | 0.41 | 0.33 | 0.31 | 0.25 | 0.29 | 0.16 | 0.32 | 0.14 | 0.17 | 0.18 | 0.21 | 0.14 | 0.21 | 0.29 | 0.24 |
| MTF-DNN | 0.17 | 0.23 | 0.22 | 0.19 | 0.29 | 0.43 | 0.45 | 0.36 | 0.28 | 0.32 | 0.21 | 0.26 | 0.33 | 0.18 | 0.19 | 0.21 | 0.20 | 0.17 | 0.23 | 0.32 | 0.26 |
| ConvNet | 0.37 | 0.43 | 0.33 | 0.51 | 0.41 | 0.64 | 0.61 | 0.45 | 0.43 | 0.50 | 0.62 | 0.53 | 0.66 | 0.49 | 0.55 | 0.55 | 0.56 | 0.42 | 0.54 | 0.62 | 0.51 |
| Multi-task ConvNet | 0.41 | 0.44 | 0.41 | 0.54 | 0.51 | 0.69 | 0.65 | 0.57 | 0.47 | 0.55 | 0.64 | 0.52 | 0.72 | 0.56 | 0.66 | 0.54 | 0.51 | 0.40 | 0.66 | 0.68 | 0.56 |
| WaveGAN ConvNet | 0.57 | 0.52 | 0.51 | 0.55 | 0.52 | 0.71 | 0.73 | 0.61 | 0.47 | 0.59 | 0.67 | 0.53 | 0.67 | 0.62 | 0.69 | 0.54 | 0.55 | 0.43 | 0.71 | 0.73 | 0.60 |
| Voting-Swin-T | 0.55 | 0.51 | 0.45 | 0.62 | 0.49 | 0.75 | 0.75 | 0.63 | 0.49 | 0.60 | 0.62 | 0.46 | 0.78 | 0.61 | 0.71 | 0.54 | 0.57 | 0.47 | 0.74 | 0.73 | 0.60 |
| AEDCN | 0.57 | 0.53 | 0.51 | 0.57 | 0.54 | 0.72 | 0.74 | 0.66 | 0.50 | 0.61 | 0.66 | 0.56 | 0.71 | 0.64 | 0.70 | 0.57 | 0.56 | 0.43 | 0.71 | 0.71 | 0.61 |
| MMGAT | 0.56 | 0.54 | 0.49 | 0.64 | 0.52 | 0.76 | 0.76 | 0.64 | 0.55 | 0.64 | 0.64 | 0.43 | 0.79 | 0.68 | 0.72 | 0.55 | 0.57 | 0.52 | 0.73 | 0.74 | 0.62 |
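
The final column of the table above appears to be the macro-averaged F1, that is, the unweighted mean of the 20 per-class scores in the same row. A quick arithmetic check on the MMGAT row supports this reading; the variable names are illustrative only.

```python
# Per-class F1 scores for the MMGAT row, copied from the table above (acc ... voi).
mmgat_per_class = [
    0.56, 0.54, 0.49, 0.64, 0.52, 0.76, 0.76, 0.64, 0.55, 0.64,
    0.64, 0.43, 0.79, 0.68, 0.72, 0.55, 0.57, 0.52, 0.73, 0.74,
]

# Macro F1 is the plain average over classes, with no weighting by class frequency.
macro_f1 = sum(mmgat_per_class) / len(mmgat_per_class)
print(round(macro_f1, 2))  # prints 0.62
```

The scores sum to 12.47, giving a mean of 0.6235, which rounds to the 0.62 reported in the last column.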

| Model | F1 Micro | F1 Macro |
|---|---|---|
| MMGAT-mel-spectrogram | 0.60 | 0.60 |
| MMGAT-single | 0.62 | 0.60 |
| MMGAT-principal | 0.62 | 0.60 |
| MMGAT_without_center | 0.62 | 0.61 |
| MMGAT_graph | 0.62 | 0.62 |
| MMGAT | 0.63 | 0.62 |

| Model | F1 Micro | F1 Macro |
|---|---|---|
| MMGAT-Euc | 0.60 | 0.60 |
| MMGAT-L1 | 0.61 | 0.59 |
| MMGAT-EMD | 0.62 | 0.61 |
| MMGAT | 0.63 | 0.62 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bai, N.; Wu, Z.; Zhang, J. Multi-Instance Multi-Scale Graph Attention Neural Net with Label Semantic Embeddings for Instrument Recognition. Signals 2025, 6, 30. https://doi.org/10.3390/signals6030030
