Abstract

The analysis of electrocardiogram (ECG) signals is strongly affected by electromyographic (EMG) noise, which can lead to substantial misinterpretation in healthcare applications. To address this challenge, we present ECGDnet, a Transformer-based architecture designed to denoise multi-channel ECG signals. By combining multi-head self-attention, positional embeddings, and sequence-to-sequence processing, ECGDnet captures both local and global temporal dependencies in cardiac signals. Experimental validation on real-world datasets shows that ECGDnet achieves a Signal-to-Noise Ratio (SNR) of 19.83 dB, a Normalized Mean Squared Error (NMSE) score of 0.9842, a Reconstruction Error (RE) of 0.0158, and a Pearson Correlation Coefficient (PCC) of 0.9924. These results represent improvements over traditional deep learning approaches while preserving complex signal morphology and mitigating noise interference.

1. Introduction

Electrocardiogram (ECG) signals represent the electrical activity of the heart and serve as a cornerstone in modern cardiac diagnostics. These signals, typically ranging from 0.05 to 100 Hz in frequency, contain critical morphological features including P waves, QRS complexes, and T waves [1,2], each reflecting specific cardiac events. However, in real-world clinical settings, ECG recordings are invariably contaminated by noise, particularly electromyogram (EMG) artifacts. These EMG artifacts, generated by skeletal muscle contractions [3,4], manifest as high-frequency components ranging from 20 Hz to 500 Hz and can exhibit amplitudes comparable to or even exceeding the ECG signal itself. This interference poses a significant challenge as it can mask subtle ECG features [5,6], distort important diagnostic markers like ST segments, and potentially lead to false interpretations of arrhythmias or other cardiac abnormalities. The denoising of electrocardiogram signals constitutes a pivotal area of research, tackling the complexities introduced by noise interference within clinical diagnostic frameworks. Conventional filtering methodologies, as elaborated in [7], emphasize the utilization of reference datasets such as SimEMG to assess the efficacy of denoising performance. Although these methodologies demonstrate efficacy in mitigating noise [8], residual artifacts frequently endure, thereby impacting diagnostic precision. Adaptive filtering methodologies, exemplified in [9], offer an effective approach for the real-time removal of cardiogenic oscillations from esophageal pressure signals. This methodology illustrates a substantial attenuation of noise levels, achieved without the necessity for supplementary apparatus, thereby rendering it appropriate for clinical application. The iterative regeneration method (IRM) presented in [10] exemplifies innovative techniques that provide significant enhancements compared to conventional wavelet and FIR filters. 
IRM demonstrates a proficient equilibrium between the attenuation of noise and the preservation of signal morphology, while also exhibiting computational efficiency, thereby rendering it particularly suitable for both mobile and conventional ECG devices. In a comparable manner, hierarchical adaptive filtering, illustrated by the hierarchical Kalman filter (HKF) in [11], utilizes patient-specific dynamics to attain enhanced denoising efficacy. The adaptive methodologies highlighted herein emphasize the critical necessity of customizing noise suppression strategies to align with particular clinical scenarios. The advent of machine learning and deep learning methodologies has significantly revolutionized the domain, providing effective solutions for the denoising of electrocardiogram signals. Ref. [12] presents an adversarial deep learning framework aimed at the denoising of fetal ECG signals, which results in a notable enhancement of the signal-to-noise ratio (SNR) and an increase in the accuracy of QRS complex detection. Methods based on autoencoders, such as the denoising convolutional autoencoder (DCAE) referenced in [13], significantly improve ECG signals derived from in-ear recordings, demonstrating exceptional accuracy in R-peak detection and producing clinically viable waveform reconstructions. Fully convolutional networks (FCNs), as examined in [14], demonstrate superior performance compared to conventional techniques in the reconstruction of single-channel ECG signals that have been compromised by noise, thereby highlighting the capabilities of deep learning across various applications. Emerging generative models present a compelling avenue for exploration. Refs. [15,16] investigate the utilization of diffusion models in the context of time series data, emphasizing score-based denoising techniques specifically applied to ECG and sEMG signals. 
These models demonstrate exceptional capability in preserving signal fidelity while effectively mitigating noise, thereby establishing an innovative framework for generative denoising. Furthermore, ref. [17] highlights the significance of noise on heart rate variability (HRV) metrics, presenting a preprocessing technique that proficiently reduces the impact of artifacts on essential diagnostic indicators.

The literature reviewed indicates a transition from traditional filtering techniques to machine learning and hybrid methods. Traditional filters provide a baseline for noise attenuation, whereas more recent methods emphasize computational efficiency and adaptivity. Future work should combine these approaches to maximize noise attenuation while preserving signal integrity, which in turn could improve diagnostic outcomes in healthcare settings. Recent advances in deep learning have shown promise in signal processing tasks, yet existing neural network architectures for ECG denoising have limitations in capturing long-range dependencies and complex temporal relationships within the signal. This is particularly important for EMG artifacts, which can occur at various time scales and intensities. To address these challenges, we present ECGDnet, a denoising network that uses a transformer architecture to remove EMG artifacts from ECG signals. Our approach exploits the transformer’s ability to model long-range dependencies and its self-attention mechanism to identify and preserve crucial ECG morphological features while selectively removing muscle-related noise. The paper is structured as follows. Section 2 details the datasets employed for training and validation, along with the preprocessing used to add EMG noise to the data. Section 3 presents ECGDnet, its architecture, and the performance metrics used to assess denoising efficacy.
Section 4 compares ECGDnet against other leading models in terms of performance and computational efficiency. In Section 6, the paper offers insights and recommendations for future research in ECG signal processing and its implementation in environments with limited resources.

2. Materials and Methods

2.1. Data for Training and Validation

2.1.1. ECG MIT-BIH

This study used the widely recognized MIT-BIH dataset [18], which comprises ECG recordings provided by the Massachusetts Institute of Technology and annotated by medical experts in accordance with international standards. The database has been used extensively in research on the recognition and classification of arrhythmic heartbeats. It comprises 48 ECG recordings, each 30 min long, with two leads per record sampled at 360 Hz. Expert annotations accompany the recordings to ensure the accuracy and reliability of the dataset. The database also solicits contributions from the research community, which supports ongoing improvements to its quality and usability.

2.1.2. sEMG

The sEMG dataset [7] is a collection of four-channel surface electromyography signals from 40 participants, collected to study human–computer interaction. The signals were recorded from four forearm muscles during simulations of ten distinct hand gestures, including rest, wrist extension, flexion, and grip, using the BIOPAC MP36 device with Ag/AgCl surface bipolar electrodes at a sampling frequency of 512 Hz. The dataset is useful for research on recognition, classification, and predictive modeling in EMG-based hand movement control systems. It also serves as a reference for artificial intelligence models, particularly deep learning models that detect gesture-related electromyography signals, and it is recommended for benchmarking and validating machine learning and deep learning models.

2.2. Data Preprocessing

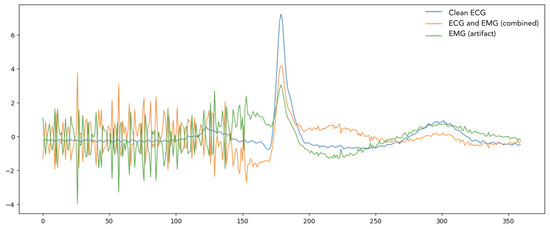

In the preprocessing stage, we combined clean ECG signals from the MIT-BIH Arrhythmia Database with surface EMG (sEMG) noise signals recorded from chest muscles; a comparison of the MIT-BIH and sEMG databases is shown in Table 1. To ensure compatibility between the two signal sources, shown in Figure 1, the EMG recordings were first resampled to match the sampling frequency and temporal length of the ECG signals. This resampling preserved the spectral and temporal characteristics of the EMG signal while enabling dimensional alignment, so that both signals have shape $(N, L)$, where $N$ is the number of signal segments and $L$ is the number of time points per segment.

Table 1.

Comprehensive comparison of MIT-BIH and sEMG databases.

Figure 1.

Time Series of Clean ECG signal, Combined, and EMG Artifact Signals.

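After alignment, the EMG noise $x_{\mathrm{EMG}}$ was added to the clean ECG signal $x_{\mathrm{ECG}}$ to generate a physiologically realistic contaminated signal $x_{\mathrm{noisy}}$, using the following formulation:

$$x_{\mathrm{noisy}} = x_{\mathrm{ECG}} + \lambda \, x_{\mathrm{EMG}} \tag{1}$$

Here, $\lambda$ is a scaling factor that adjusts the noise amplitude to simulate a target signal-to-noise ratio (SNR). Given an SNR value in decibels, $\mathrm{SNR}_{\mathrm{dB}}$, the corresponding linear SNR is computed as:

$$\mathrm{SNR}_{\mathrm{linear}} = 10^{\mathrm{SNR}_{\mathrm{dB}}/10}$$

The scaling factor is then calculated as:

$$\lambda = \sqrt{\frac{P_{\mathrm{ECG}}}{\mathrm{SNR}_{\mathrm{linear}} \, P_{\mathrm{EMG}}}}$$

where $P_{\mathrm{ECG}}$ and $P_{\mathrm{EMG}}$ denote the mean power of the clean ECG segment and of the EMG segment, respectively.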

This formulation ensures that the added noise is spectrally and energetically consistent with real-world physiological conditions. The EMG signals used for contamination preserve high-frequency characteristics typically observed in motion artifacts and muscle activity, making them a reliable surrogate for real interference in ECG recordings. By simulating contamination at different SNR levels, this preprocessing step enables the construction of a diverse and challenging dataset, facilitating the development of robust and generalizable ECG denoising models.
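The contamination step can be illustrated with a short sketch. It assumes a power-based SNR definition (mean squared amplitude) and uses a synthetic sine wave and Gaussian noise as stand-ins for the MIT-BIH and sEMG segments; the function name `mix_at_snr` is hypothetical and not taken from the original implementation.

```python
import numpy as np

def mix_at_snr(ecg, emg, snr_db):
    """Return (ecg + lam * emg, lam), with lam chosen to hit the target SNR in dB.

    Assumption: SNR is defined on mean signal power, not on RMS amplitude.
    """
    ecg = np.asarray(ecg, dtype=float)
    emg = np.asarray(emg, dtype=float)
    p_ecg = np.mean(ecg ** 2)                 # mean power of the clean segment
    p_emg = np.mean(emg ** 2)                 # mean power of the noise segment
    snr_linear = 10.0 ** (snr_db / 10.0)      # dB -> linear power ratio
    lam = np.sqrt(p_ecg / (snr_linear * p_emg))
    return ecg + lam * emg, lam

# Synthetic stand-ins for one aligned ECG/EMG segment pair (illustration only).
rng = np.random.default_rng(0)
t = np.arange(1024) / 360.0                   # 360 Hz, the MIT-BIH sampling rate
ecg = np.sin(2 * np.pi * 1.2 * t)
emg = rng.standard_normal(t.size)

noisy, lam = mix_at_snr(ecg, emg, snr_db=5.0)
achieved_db = 10.0 * np.log10(np.mean(ecg ** 2) / np.mean((noisy - ecg) ** 2))
```

Recomputing the SNR from the mixture, as in the last line, is a quick check that the scaling produces the requested contamination level.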

3. Methodology

Our methodology seeks to develop a neural network capable of denoising severely corrupted ECG signals using a dual dataset [19]. One dataset comprises ECG signals, while the other provides the artifact source, namely the EMG signals that serve as the noise component in our investigation. Given a paired training dataset of signals c and n, where n is the noisy version of the clean ECG signal c for a given sample, our goal is to learn the parameters of a neural network that maps the noisy signal n to the clean signal c. With these optimized parameters, the network can then denoise ECG signals, including those not present in the training database.

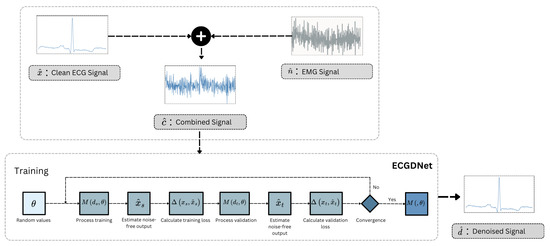

The overall framework in Figure 2 illustrates the proposed approach at training and inference time. The inference part of the figure shows the workflow of ECGDnet, a model designed to denoise ECG signals by removing noise such as EMG interference. The process starts with two inputs, the clean ECG signal and the noise signal; the degradation process of the acquired ECG is represented by Equation (1). The resulting noisy signal is fed into ECGDnet, which produces a denoised output signal. The objective of the model is to reconstruct the clean ECG signal by separating it from the noise, so that the output is as close as possible to the original signal. The training part outlines the training process of the transformer-based model using both training and validation datasets. The process begins by initializing the model parameters with random values. The training dataset consists of noisy signals and their corresponding ground-truth clean signals, and the validation dataset comprises a separate set of noisy and clean signal pairs. During each training iteration, the model processes the noisy training signals to estimate the corresponding noise-free outputs. The quality of this estimate is evaluated using a loss function that computes the difference between the estimated output and the ground-truth signal. The parameters are then updated using the Adam optimization algorithm to minimize this training loss. In parallel, the model’s performance on the validation dataset is monitored to assess generalization: the noisy validation signals are fed into the model to generate estimated noise-free outputs, and the validation loss is computed by comparing these outputs to the ground-truth clean signals. The validation data are never used to update the model parameters and play no role in the optimization process.
Instead, the validation loss serves exclusively as an early stopping criterion: training continues until the validation loss plateaus or increases for a specified number of epochs, indicating that further training may lead to overfitting. Upon convergence, the final set of optimized parameters defines the trained model, which is then ready for deployment in denoising tasks. This approach helps the model generalize to unseen data while avoiding overfitting through independent validation monitoring.

Figure 2.

Training and inference schemes of the proposed approach for ECG denoising.
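The early stopping rule described above can be sketched as follows. The class `EarlyStopping` and the helper `stopping_epoch` are hypothetical names, the loss values are invented for illustration, and the `min_delta` default is a placeholder rather than the value used in the study.

```python
class EarlyStopping:
    """Stop when the validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=10, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta        # placeholder; the study's value is not assumed
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss          # improvement: remember it and reset the counter
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1          # plateau or increase
        return self.bad_epochs >= self.patience


def stopping_epoch(val_losses, patience, min_delta=0.0):
    """Return the 1-indexed epoch at which training would stop, or None."""
    stopper = EarlyStopping(patience, min_delta)
    for epoch, loss in enumerate(val_losses, start=1):
        if stopper.step(loss):
            return epoch
    return None


# Invented validation losses: improvement stops after epoch 3.
losses = [1.0, 0.8, 0.7, 0.71, 0.72, 0.75, 0.73]
stopped_at = stopping_epoch(losses, patience=3)
never_stopped = stopping_epoch(losses, patience=10)
```

Only the validation loss enters this rule; it does not touch the model parameters, which matches the separation between optimization and monitoring described in the text.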

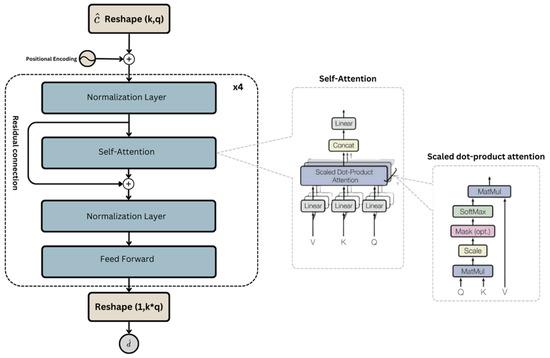

In the proposed ECGDnet architecture, shown in Figure 3, the input ECG signal is reshaped into a two-dimensional representation of shape $(T, D)$, where $T$ denotes the number of temporal segments (or tokens) and $D$ represents the dimensionality of each token. This design enables the model to process the ECG signal as a sequence, capturing both local features within each segment and global dependencies across the entire signal. To preserve temporal structure and order information, which are essential in physiological signals, learnable positional encodings are added to the input representation. While tokenization introduces discrete segment boundaries, the model’s use of cross-token self-attention and positional embeddings mitigates potential degradation due to signal shifts, which helps maintain robustness in the presence of phase variations or waveform misalignment.

Figure 3.

Hierarchical Architecture of ECGDnet: End-to-End Transformer Pipeline with Cross-Layer Self-Attention and Dynamic Residual Pathways for Advanced ECG Signal Processing.
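The reshaping of a signal into tokens with added positional encodings can be sketched in a few lines. The segment length of 512 samples and token dimension of 64 are hypothetical values chosen for illustration, and the random table stands in for positional encodings that would be learned during training.

```python
import numpy as np

def tokenize(signal, token_dim):
    """Reshape a 1-D signal into a (num_tokens, token_dim) array of non-overlapping tokens."""
    signal = np.asarray(signal, dtype=float)
    if signal.size % token_dim != 0:
        raise ValueError("signal length must be a multiple of token_dim")
    return signal.reshape(-1, token_dim)

rng = np.random.default_rng(0)

# Hypothetical sizes: one 512-sample segment split into 8 tokens of 64 samples.
signal = np.arange(512, dtype=float)
tokens = tokenize(signal, token_dim=64)

# Stand-in for a learnable positional table with one row per token position.
pos_table = 0.02 * rng.standard_normal(tokens.shape)
encoder_input = tokens + pos_table
```

Because each token position receives its own vector, the encoder can distinguish otherwise identical segments that occur at different times in the recording.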

Within the attention mechanism, the query (Q), key (K), and value (V) matrices are generated from the same input tensor but via separate learned projections. This is implemented through a single linear transformation layer that maps the input into a concatenated tensor, which is then split into Q, K, and V. These matrices are not identical copies but distinct, parameterized projections that allow the model to learn asymmetric interactions among different time segments. This formulation supports the model’s ability to dynamically attend to relevant temporal regions, thereby enhancing denoising performance across a range of physiological noise conditions.
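A minimal NumPy sketch of this fused projection is given below. It assumes standard scaled dot-product attention and omits the output projection; the sizes (8 tokens, 64 dimensions, 4 heads) and the random weights are hypothetical placeholders, not the configuration of ECGDnet.

```python
import numpy as np

def softmax(z, axis=-1):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_qkv, b_qkv, num_heads):
    """Multi-head self-attention where one linear layer produces Q, K and V."""
    t, d = x.shape
    head_dim = d // num_heads

    qkv = x @ w_qkv + b_qkv                  # (T, 3D): single fused projection
    q, k, v = np.split(qkv, 3, axis=-1)      # three distinct (T, D) projections

    def to_heads(m):
        # (T, D) -> (heads, T, head_dim)
        return m.reshape(t, num_heads, head_dim).transpose(1, 0, 2)

    q, k, v = to_heads(q), to_heads(k), to_heads(v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(head_dim)   # (heads, T, T)
    weights = softmax(scores, axis=-1)       # each row sums to 1 over the tokens
    out = weights @ v                        # (heads, T, head_dim)
    return out.transpose(1, 0, 2).reshape(t, d), weights

rng = np.random.default_rng(0)
T, D, H = 8, 64, 4                           # hypothetical sizes
x = rng.standard_normal((T, D))
w_qkv = rng.standard_normal((D, 3 * D)) / np.sqrt(D)
b_qkv = np.zeros(3 * D)

attended, attn_weights = self_attention(x, w_qkv, b_qkv, num_heads=H)
```

Since the three slices of `w_qkv` are separate parameters, Q, K, and V differ even though they are computed from the same input, which is what permits asymmetric interactions between time segments.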

While Table 2 and Figure 3 represent the same overall architecture, they serve complementary purposes and operate at different levels of abstraction. Figure 3 provides a high-level schematic of ECGDnet’s transformer encoder pipeline, highlighting the hierarchical organization of self-attention and feedforward layers within residual blocks. It illustrates the general structure of a single encoder block, repeated four times in our model, along with the flow of information and residual connections.

Table 2.

Comprehensive Architecture of ECGDnet: Layer Configurations, Output Dimensions, and Computational Parameters for ECG Signal Analysis.

Table 2, on the other hand, provides a detailed, layer-by-layer breakdown of each of the four Transformer blocks shown in Figure 3. Each Transformer block in Table 2 includes a normalization layer, a multi-head self-attention module (implemented via linear projections for Q, K, and V), a projection layer for the attention output, a second normalization layer, and a feedforward network composed of two fully connected layers. Although the layer configuration remains consistent across all four blocks, parameter weights are not shared; each block learns independent parameters, enabling deeper hierarchical feature learning. This repetition is consistent with standard Transformer encoder designs, where each layer refines the representation produced by the preceding one.
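To make the layer-by-layer breakdown concrete, the parameter count of one block can be tallied from the listed components. The sketch below assumes every linear layer has a bias and every normalization layer has a scale and a shift; the dimensions `d_model=64` and `d_ff=256` are hypothetical and are not the values reported in Table 2.

```python
def block_param_count(d_model, d_ff):
    """Count trainable parameters in one encoder block with the components listed above.

    Assumptions: biases on all linear layers; scale and shift in each normalization layer.
    """
    layer_norm = 2 * d_model                              # scale + shift
    qkv = d_model * 3 * d_model + 3 * d_model             # fused Q, K, V projection
    attn_proj = d_model * d_model + d_model               # attention output projection
    ffn = (d_model * d_ff + d_ff) + (d_ff * d_model + d_model)  # two fully connected layers
    return 2 * layer_norm + qkv + attn_proj + ffn

# Hypothetical dimensions for illustration only.
per_block = block_param_count(d_model=64, d_ff=256)
total_four_blocks = 4 * per_block    # weights are not shared across the four blocks
```

Multiplying by four reflects that each block learns its own parameters, so depth increases model capacity linearly in this count.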

3.1. Training Parameters

Table 3 provides a detailed description of the training hyperparameters used for the proposed ECGDnet denoising model. A batch size of 128 was selected, and the model was optimized with the Adam optimizer; the initial learning rate and the Adam settings are listed in Table 3. The model was trained for 50 epochs using a cosine annealing learning rate schedule with a warm-up phase spanning 5 epochs. The loss function combines a primary Mean Squared Error (MSE) loss and a secondary L1 loss, weighted at 1.0 and 0.1, respectively. To prevent overfitting and improve generalization, weight decay, gradient clipping (threshold of 1.0), and a dropout rate of 0.1 were applied. Early stopping monitored the validation loss with a patience of 10 epochs and a minimum delta (see Table 3), terminating training when improvements stagnated. These hyperparameters collectively aim to balance training stability, efficiency, and performance. The proposed architecture was implemented in the PyTorch deep learning framework (Python version 3.9) and trained for 50 epochs on an NVIDIA A100 GPU with 80 GB of memory. This setup allowed the model to optimize its parameters and capture complex relationships within the sequential data, and it supports the reproducibility of the reported findings.

Table 3.

Detailed training hyperparameters of the proposed ECGDnet denoising model.
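The combined loss and the learning rate schedule described above can be sketched as follows. The weights 1.0 and 0.1, the 5 warm-up epochs, and the 50 total epochs come from the text; the linear shape of the warm-up, annealing to zero, and the `base_lr` argument are assumptions made for illustration.

```python
import math
import numpy as np

def combined_loss(pred, target, w_mse=1.0, w_l1=0.1):
    """Weighted sum of mean squared error and mean absolute (L1) error."""
    err = np.asarray(pred, dtype=float) - np.asarray(target, dtype=float)
    return w_mse * np.mean(err ** 2) + w_l1 * np.mean(np.abs(err))

def lr_at_epoch(epoch, base_lr, warmup_epochs=5, total_epochs=50):
    """Linear warm-up followed by cosine annealing to zero (epoch is 0-indexed).

    Assumptions: the warm-up is linear and the schedule decays to exactly zero.
    """
    if epoch < warmup_epochs:
        return base_lr * (epoch + 1) / warmup_epochs
    progress = (epoch - warmup_epochs) / (total_epochs - warmup_epochs)
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * progress))

# Toy values: MSE = (1 + 4) / 2 = 2.5 and L1 = (1 + 2) / 2 = 1.5.
loss_value = combined_loss([1.0, 2.0], [0.0, 0.0])

# Schedule evaluated with a placeholder base learning rate of 1.0.
schedule = [lr_at_epoch(e, base_lr=1.0) for e in range(50)]
```

The small L1 term adds a penalty that grows linearly with the error, which complements the quadratic MSE term for small residuals.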

3.2. Data Splitting

In this study, the dataset was divided into three distinct subsets: a training set consisting of 44,784 samples, a validation set containing 5598 samples, and a test set that also includes 5598 samples. To maintain subject-independent evaluation, it was imperative that no overlap occurred between subjects within these subsets. The test set was meticulously constructed utilizing data exclusively from subjects that were not part of the training or validation sets. This approach facilitates an unbiased evaluation of the model’s generalization capability. The validation set was utilized solely for the purposes of hyperparameter tuning and early stopping, and it was not incorporated during the model training phase. The integrity of the test data was maintained, ensuring it remained entirely unobserved during both the training and model selection phases.
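The reported subset sizes correspond to an 80/10/10 split of 55,980 samples. A subject-independent split of this kind can be sketched by assigning whole subjects, rather than individual segments, to each subset. The function `split_by_subject` and the toy subject labels below are hypothetical; how segments map to subjects in the actual study is not assumed.

```python
import random

def split_by_subject(subject_ids, fractions=(0.8, 0.1, 0.1), seed=0):
    """Assign whole subjects to train/val/test so no subject appears in two subsets.

    Returns a dict mapping subset name to a list of sample indices.
    """
    subjects = sorted(set(subject_ids))
    random.Random(seed).shuffle(subjects)     # reproducible shuffle of subject labels
    n_train = round(fractions[0] * len(subjects))
    n_val = round(fractions[1] * len(subjects))
    groups = {
        "train": set(subjects[:n_train]),
        "val": set(subjects[n_train:n_train + n_val]),
        "test": set(subjects[n_train + n_val:]),
    }
    return {
        name: [i for i, s in enumerate(subject_ids) if s in members]
        for name, members in groups.items()
    }

# Toy example: 20 subjects with 5 segments each.
subject_ids = [s for s in range(20) for _ in range(5)]
split = split_by_subject(subject_ids)

train_subjects = {subject_ids[i] for i in split["train"]}
val_subjects = {subject_ids[i] for i in split["val"]}
test_subjects = {subject_ids[i] for i in split["test"]}
```

Checking that the three subject sets are pairwise disjoint is a simple guard against leakage between training and evaluation data.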

3.3. Performance Metrics

In this study, three sets of performance measures were used to evaluate the proposed approach and provide a robust evaluation framework for ECG denoising, ensuring the reconstructed signals are clinically relevant and diagnostically accurate [20,21,22]. These metrics include the signal-to-noise ratio (SNRdB), the Pearson correlation coefficient (PCC), and the normalized mean squared error (NMSE).

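Signal-to-Noise Ratio (SNRdB) quantifies the strength of the clean signal (X) relative to the residual noise (X − Y) that remains after the denoising process, where Y is the denoised signal. SNR is typically expressed in decibels (dB) and is defined as:

$$\mathrm{SNR}_{\mathrm{dB}} = 10 \log_{10}\left(\frac{P_{\mathrm{signal}}}{P_{\mathrm{noise}}}\right)$$

where:

- $P_{\mathrm{signal}}$ is the signal power

- $P_{\mathrm{noise}}$ is the noise power

In expanded form:

$$\mathrm{SNR}_{\mathrm{dB}} = 10 \log_{10}\left(\frac{\sum_{i=1}^{n} x_i^{2}}{\sum_{i=1}^{n} (x_i - y_i)^{2}}\right)$$

where $x_i$ and $y_i$ are the samples of X and Y, and n is the number of data points.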

A higher SNRdB indicates that the noise introduced by the denoising algorithm is minimal, preserving the integrity of the original ECG signal. Conversely, a low SNRdB indicates that the denoising process has introduced significant distortions.
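As a worked example, the output SNR can be computed from a clean reference and a denoised estimate. The sketch assumes the noise is the residual between the two signals; the function name `snr_db` and the toy arrays are illustrative.

```python
import numpy as np

def snr_db(clean, denoised):
    """Output SNR in dB, treating (clean - denoised) as the residual noise."""
    clean = np.asarray(clean, dtype=float)
    denoised = np.asarray(denoised, dtype=float)
    residual = clean - denoised
    return 10.0 * np.log10(np.sum(clean ** 2) / np.sum(residual ** 2))

# Toy signal with a constant residual of 0.1: power ratio 4 / 0.04 = 100.
clean = np.array([1.0, -1.0, 1.0, -1.0])
denoised = clean + 0.1
snr_value = snr_db(clean, denoised)   # approximately 20 dB
```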

Normalized Mean Squared Error (NMSE) quantifies the deviation between the clean signal (X) and the denoised signal (Y), normalized by the energy (squared norm) of the clean signal. The NMSE is given by:

$$\mathrm{NMSE} = \frac{\lVert X - Y \rVert_2^{2}}{\lVert X \rVert_2^{2}}$$

NMSE penalizes larger deviations more heavily than smaller ones. A lower NMSE value indicates that the denoised signal closely approximates the clean signal. For normalization and interpretability, a complementary metric can be defined:

$$\mathrm{NMSE}_{\mathrm{score}} = 1 - \mathrm{NMSE}$$

This score ranges from 0 to 1, where:

- 1: Perfect reconstruction (denoised signal is identical to the clean signal)

- 0: Maximum error (denoised signal completely deviates from the clean signal)
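The NMSE and its complementary score can be computed as in the following sketch, which assumes the complementary score is one minus the NMSE. The function names and the toy values are illustrative.

```python
import numpy as np

def nmse(clean, denoised):
    """Squared error between the signals, normalized by the energy of the clean signal."""
    clean = np.asarray(clean, dtype=float)
    denoised = np.asarray(denoised, dtype=float)
    return np.sum((clean - denoised) ** 2) / np.sum(clean ** 2)

def nmse_score(clean, denoised):
    """Complementary score (assumed to be 1 - NMSE); 1 means perfect reconstruction."""
    return 1.0 - nmse(clean, denoised)

# Toy values: squared error 1, clean-signal energy 9 + 16 = 25.
clean = np.array([3.0, 4.0])
denoised = np.array([3.0, 3.0])
nmse_value = nmse(clean, denoised)          # 1 / 25 = 0.04
score_value = nmse_score(clean, denoised)   # 0.96
perfect = nmse_score(clean, clean)          # 1.0
```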

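Pearson Correlation Coefficient (PCC) measures the degree of linear association between the clean ECG signal (X) and the denoised ECG signal (Y). It evaluates how well the variations in one signal correspond to variations in the other. PCC is computed as:

$$\mathrm{PCC} = \frac{\operatorname{cov}(X, Y)}{\sigma_X \, \sigma_Y}$$

where:

- $\operatorname{cov}(X, Y)$ is the covariance

- $\sigma_X$ and $\sigma_Y$ are the standard deviations of X and Y, respectively

The covariance is defined as:

$$\operatorname{cov}(X, Y) = \frac{1}{n} \sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})$$

where:

- $x_i$ and $y_i$ are the individual samples of X and Y

- $\bar{x}$ and $\bar{y}$ are the means of X and Y

- n is the number of data points

Substituting the covariance into the PCC formula:

$$\mathrm{PCC} = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^{2}} \, \sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^{2}}}$$

The PCC ranges between:

- PCC = 1: A perfect positive linear relationship

- PCC = 0: No linear relationship

- PCC = $-1$: A perfect negative linear relationship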

In the context of ECG denoising, PCC quantifies how closely the reconstructed (denoised) signal aligns with the clean reference signal in terms of waveform similarity.
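The PCC can be computed directly from the mean-centered signals, as in the sketch below. The function name `pcc` and the toy signals are illustrative; the result is also compared against NumPy's `corrcoef` as a consistency check.

```python
import numpy as np

def pcc(clean, denoised):
    """Pearson correlation coefficient between two equal-length 1-D signals."""
    x = np.asarray(clean, dtype=float)
    y = np.asarray(denoised, dtype=float)
    xc = x - x.mean()                      # remove the mean of each signal
    yc = y - y.mean()
    return float(np.sum(xc * yc) / np.sqrt(np.sum(xc ** 2) * np.sum(yc ** 2)))

x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
pcc_scaled = pcc(x, 2.0 * x + 1.0)         # scaled and shifted copy: close to +1
pcc_inverted = pcc(x, -x)                  # inverted copy: close to -1

y = np.array([0.1, 0.9, 2.2, 2.8, 4.1])    # toy "denoised" signal
pcc_reference = float(np.corrcoef(x, y)[0, 1])
pcc_manual = pcc(x, y)
```

The first case shows that PCC is insensitive to amplitude scaling and offset, so it measures waveform similarity rather than absolute reconstruction error and is best read alongside SNR and NMSE.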

4. Results and Discussion

Table 4 presents the performance evaluation of four methods [17,18,19,23], namely Sym4, 1D-ResCNN, IC-U-Net, and ECGDnet, using four metrics: Signal-to-Noise Ratio (SNR), Normalized Mean Squared Error (NMSE), Relative Error (RE), and Pearson Correlation Coefficient (PCC). NMSE is reported as the complementary score defined in Section 3.3, where 1 indicates perfect reconstruction, so higher SNR, NMSE score, and PCC values and lower RE values indicate better performance. The results show that ECGDnet outperforms all other methods, achieving the highest SNR (19.83 dB), the highest NMSE score (0.9842), the smallest RE (0.0158), and the strongest PCC (0.9924). IC-U-Net follows closely, while Sym4 and 1D-ResCNN show comparatively lower performance. This analysis underscores the effectiveness of modern deep learning architectures such as ECGDnet for denoising medical signals like ECG, surpassing traditional and earlier neural network-based methods.

Table 4.

Comparative analysis of ECG denoising methods. The best results for each metric are highlighted in bold.

Among the models, ECGDnet demonstrates the best performance across all metrics. It achieves the highest SNR of 19.83 dB, indicating its ability to produce the cleanest reconstructed signals. Furthermore, it records the highest NMSE score of 0.9842, reflecting the model’s capability to preserve the original signal structure with high fidelity. The RE for ECGDnet is the lowest (0.0158), signifying minimal reconstruction error, while the PCC of 0.9924 confirms a near-perfect correlation between the reconstructed and original signals. Comparatively, IC-U-Net and 1D-ResCNN show slightly lower performance: IC-U-Net has an SNR of 19.33 dB and a PCC of 0.9921, while 1D-ResCNN lags behind with an SNR of 18.51 dB and a PCC of 0.9893.

The results suggest that the design of ECGDnet, incorporating a multi-head attention mechanism, positional embeddings, and Transformer-inspired blocks, significantly enhances its ability to capture temporal dependencies and denoise ECG signals effectively [24]. In contrast, IC-U-Net, with its U-Net-inspired structure, and 1D-ResCNN, relying on residual CNN blocks, provide competitive but less optimal results. This performance comparison underscores the potential of ECGDnet as a State-of-the-Art solution for ECG signal denoising tasks [9,20,25,26]. While the absolute differences may appear numerically small (e.g., ECGDnet vs. IC-U-Net), these gains are significant in the context of biomedical signal processing, where even marginal improvements can translate to clinically meaningful differences, such as improved delineation of low-amplitude P-waves or enhanced fidelity in QRS complex morphology critical for tasks like arrhythmia detection or ischemia assessment. For example, a higher Pearson correlation coefficient (PCC) reflects stronger temporal alignment with the original ECG waveform, improving downstream interpretability by cardiologists or automated diagnostic algorithms. Similarly, lower Relative Error (RE) and NMSE indicate reduced distortion, which enhances diagnostic accuracy, particularly in edge cases involving subtle waveform anomalies. The experimental findings indicate that ECGDnet exhibits superior performance compared to IC-U-Net and 1D-ResCNN in the context of ECG denoising, particularly in its ability to capture long-range temporal dependencies and reduce the impact of EMG noise. The enhanced temporal feature extraction and noise suppression capabilities of ECGDnet contribute to its superiority. In contrast to IC-U-Net, which is primarily designed for spatial representation in medical imaging tasks, ECGDnet is specifically engineered for sequential signal processing, integrating architectures that proficiently capture long-term dependencies. 
The documented improvements in performance are largely attributed to its multi-scale feature extraction mechanism, which allows the network to differentiate ECG components from high-frequency EMG artifacts.

Moreover, ECGDnet likely utilizes dilated convolutions alongside residual connections, enhancing the receptive field while preserving signal integrity. This design enables efficient noise filtering without compromising the fidelity of clinically significant waveforms. In contrast, IC-U-Net, despite its hierarchical feature extraction capability, may struggle to capture the sequential dependencies inherent in one-dimensional ECG signals, leading to suboptimal noise suppression. Similarly, 1D-ResCNN, while leveraging residual learning to improve feature propagation, lacks explicit mechanisms for addressing transient noise components, which diminishes its effectiveness against EMG artifacts.

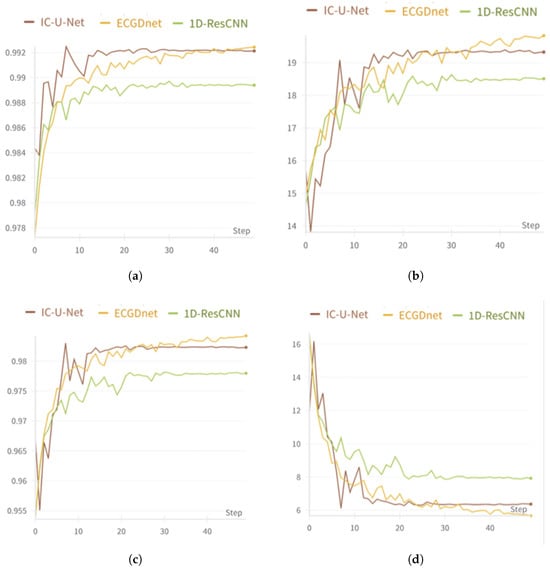

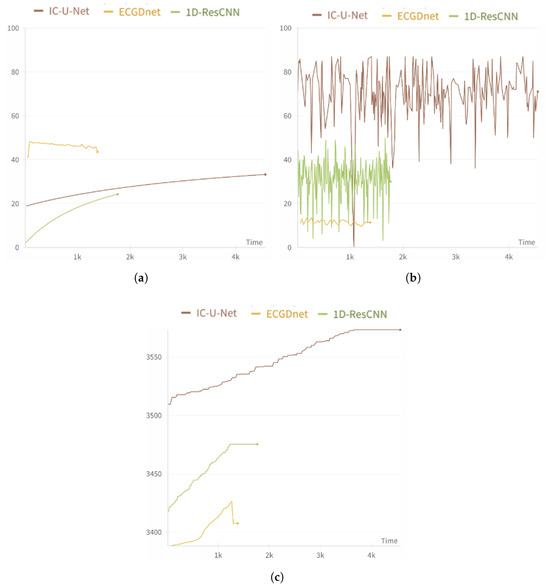

These performance improvements are further supported by quantitative metrics and visual analysis. The validation curves in Figure 4a, b, and c show that ECGDnet attains higher PCC and SNR than the alternative models while maintaining a lower NMSE, and Figure 4d shows the corresponding validation loss. Visual evaluation of the denoised waveforms provides further evidence, demonstrating better preservation of the morphology of PQRST complexes and effective suppression of high-frequency noise, so that waveform integrity is maintained.

Figure 4.

(a) Validation PCC curves; (b) validation SNR (dB) curves; (c) validation NMSE curves; (d) validation loss curves.

Given these performance advantages, ECGDnet proves to be particularly well suited for real-time ECG denoising, a crucial requirement in wearable and remote health monitoring systems. In these applications, it is essential to minimize signal distortion while effectively eliminating noise to ensure accurate clinical interpretation. The ability to process signals efficiently and reliably makes ECGDnet an ideal choice for continuous health monitoring, where robust denoising mechanisms are necessary to maintain signal quality in dynamic environments.

LSTMs are a well-established baseline for time-series modeling tasks, including ECG signal processing. However, our study primarily explores the advantages of transformer-based architectures, which offer improved capacity to capture long-range dependencies and support parallel processing, leading to better scalability and performance in denoising applications. Moreover, one of the key objectives of our work is to design a model suitable for deployment on resource-constrained edge devices. LSTM networks, while effective, require sequential data processing and tend to exhibit higher GPU memory usage and latency during inference than models optimized for parallelism, such as the proposed architecture. These computational constraints make LSTMs less suitable for low-power or real-time embedded scenarios.

While ECGDnet is highly effective in ECG denoising, its capabilities extend far beyond this specific application. Its strengths in capturing long-range temporal dependencies and suppressing high-frequency noise make it a promising tool for various biomedical signal processing tasks. One particularly promising application is in electroencephalography (EEG) artifact removal, where EEG signals frequently suffer from EMG contamination, ocular artifacts (EOG), and power line interference. The multi-scale feature extraction and temporal modeling capabilities of ECGDnet could significantly enhance EEG-based brain–computer interfaces (BCIs), improve epilepsy detection, and optimize sleep monitoring by effectively isolating neural activity from artifacts.

Beyond EEG artifact removal, ECGDnet’s robust noise suppression capabilities can also be leveraged in photoplethysmography (PPG) signal enhancement. PPG signals, commonly used in wearable health devices and pulse oximetry, are particularly susceptible to motion artifacts and ambient light interference. By applying ECGDnet’s advanced denoising techniques, the accuracy of heart rate variability (HRV) analysis, blood oxygen level estimation, and continuous health monitoring could be significantly improved.

Moreover, ECGDnet presents potential for adaptation in electromyography (EMG) signal processing, particularly in contexts such as neuromuscular disease diagnosis, prosthetic device control, and rehabilitation monitoring, where precise signal interpretation is crucial. Another significant application lies in the extraction of fetal electrocardiogram (fECG) from maternal ECG, a challenging task due to the low amplitude of fetal signals and the dominance of maternal ECG activity. ECGDnet’s deep feature extraction capabilities offer an opportunity to enhance non-invasive fetal monitoring, contributing to advancements in prenatal diagnostics.

In addition to these applications, ECGDnet has the potential to improve signal processing in seismocardiography (SCG) and ballistocardiography (BCG), two novel methodologies in cardiovascular monitoring that are highly affected by motion-induced noise. By refining these signals, ECGDnet can enhance heart rate estimation, support comprehensive cardiac function analysis, and contribute to the early detection of cardiovascular diseases.

Given ECGDnet’s potential applicability across multiple biomedical domains, further architectural optimizations could increase its effectiveness. Future research should explore alternative attention mechanisms and Transformer variants to improve its ability to generalize across diverse physiological time-series data. Such advancements could better adapt ECGDnet to real-world biomedical applications across a wide range of health monitoring and diagnostic systems [27,28,29,30].

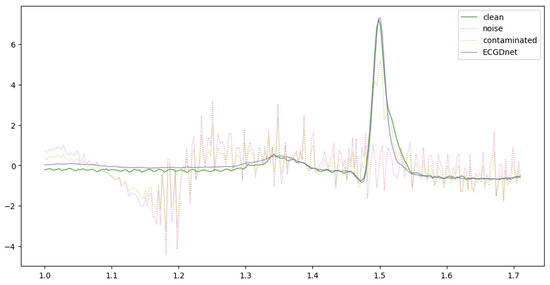

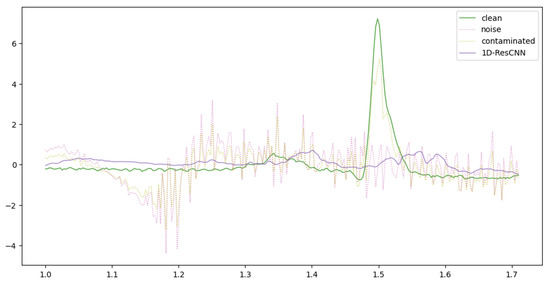

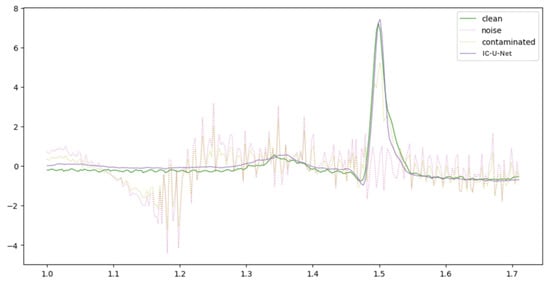

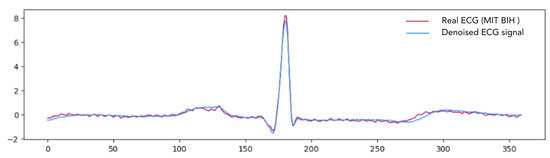

Figure 5, Figure 6 and Figure 7 provide a comparative visualization of ECG denoising performance using three different deep learning architectures: ECGDnet, 1D-ResCNN, and IC-U-Net, respectively. Each plot includes four signals: the clean ECG, the noise, the contaminated ECG, and the denoised ECG output from the respective model. Figure 8 compares a real ECG signal (MIT-BIH) with the ECGDnet denoised output.

Figure 5. Comparative Visualization of ECGDnet Denoising Performance Against Ground Truth ECG.

Figure 6. Comparative Visualization of 1D-ResCNN Denoising Performance Against Ground Truth ECG.

Figure 7. Comparative Visualization of IC-U-Net Denoising Performance Against Ground Truth ECG.

Figure 8. Comparison Between Real ECG Signal (MIT-BIH) and ECGDnet Denoised Output Illustrating High-Fidelity Reconstruction.

In all visualizations, the models show a strong ability to recover the clean signal from the contaminated input, closely approximating the ground truth—particularly in critical regions such as the QRS complex [23,31,32]. ECGDnet exhibits a smoother and more precise recovery, maintaining waveform morphology with minimal residual noise. IC-U-Net and 1D-ResCNN also perform effectively, though with minor differences in noise attenuation and waveform preservation. These comparisons underscore each model’s capability in managing noise in ECG signals and emphasize ECGDnet’s superior denoising fidelity.

The performance metrics shown in Figure 9 highlight the advantages of ECGDnet in the field of TinyML [33,34], showcasing its suitability for deployment in resource-constrained environments. In terms of central processing unit (CPU) utilization, ECGDnet demonstrates consistently lower usage compared to IC-U-Net and 1D-ResCNN, which suggests reduced energy consumption, an essential factor for edge devices operating on limited power [35,36,37].

Figure 9. Resource Utilization Comparison of IC-U-Net, ECGDnet, and 1D-ResCNN: (a) process CPU utilization; (b) GPU utilization; (c) process memory in use (MB).

Furthermore, ECGDnet exhibits minimal graphics processing unit (GPU) utilization, unlike IC-U-Net, which relies heavily on the GPU. This makes ECGDnet highly compatible with low-power devices that typically lack GPU capabilities [3,38]. Memory efficiency is another standout feature: ECGDnet maintains the lowest and most stable memory usage throughout runtime. This characteristic is particularly beneficial for TinyML applications, where devices are often constrained by limited random access memory (RAM). Overall, ECGDnet’s low resource requirements, energy efficiency, and real-time inference capability make it a highly advantageous choice for deploying health-monitoring models on edge devices.

The computational efficiency of ECGDnet is a pivotal consideration in its relevance to large-scale datasets and real-time biomedical signal processing. As illustrated in Figure 9, ECGDnet exhibits a moderate yet steady level of CPU utilization, suggesting optimization for parallel computation and minimal processing bottlenecks. In contrast, IC-U-Net shows fluctuating and elevated GPU usage, indicating a greater reliance on GPU acceleration.

ECGDnet’s consistently low GPU usage implies either a smaller number of trainable parameters or reliance on computationally efficient operations, making it lightweight even for GPU-enabled environments. An analysis of memory consumption further reveals that ECGDnet has significantly lower memory demands compared to IC-U-Net, positioning it as a preferable option for memory-constrained applications, including wearable devices and real-time remote health monitoring systems [28,30,39,40,41].
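As an illustration of how CPU time and memory of an inference step might be measured, the following minimal sketch uses only the Python standard library. It is not the procedure used to produce Figure 9; the helper name `profile_call` and the stand-in workload are hypothetical. Note that `tracemalloc` only tracks allocations made through the Python allocator, so it would not capture GPU memory or memory held by native deep learning back-ends.

```python
import time
import tracemalloc


def profile_call(fn, *args, **kwargs):
    """Run fn once and report CPU time, wall time, and peak Python memory.

    Hypothetical helper for illustration; a real benchmark would repeat the
    call many times and also sample system-level metrics.
    """
    tracemalloc.start()
    cpu_start = time.process_time()    # CPU time consumed by this process
    wall_start = time.perf_counter()   # elapsed wall-clock time
    try:
        result = fn(*args, **kwargs)
    finally:
        cpu_seconds = time.process_time() - cpu_start
        wall_seconds = time.perf_counter() - wall_start
        _, peak_bytes = tracemalloc.get_traced_memory()
        tracemalloc.stop()
    stats = {
        "cpu_seconds": cpu_seconds,
        "wall_seconds": wall_seconds,
        "peak_mib": peak_bytes / (1024 * 1024),
    }
    return result, stats


def fake_inference(n_samples):
    # Stand-in workload (assumption): allocates an output buffer the size
    # of one ECG segment instead of running a real model.
    return [0.0] * n_samples


output, stats = profile_call(fake_inference, 200_000)
print(stats)
```

Comparing such numbers across models is only meaningful when the input length, batch size, and hardware are held fixed.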

Thanks to its minimal memory requirements and optimized CPU utilization, ECGDnet exhibits strong scalability for deployment across large datasets without incurring substantial computational costs. The model’s efficiency promotes feasibility for extended ECG analysis over large patient populations. Additionally, the consistent computational footprint suggests that real-time ECG denoising on edge computing platforms is achievable.

Nevertheless, the limited GPU usage highlights opportunities for further optimization. Techniques such as hardware-aware quantization, network pruning, or tensor decomposition could enhance inference speed and energy efficiency. Future research should focus on improving GPU parallelism and advancing deep learning acceleration strategies. Such developments are crucial for reducing latency and enhancing the practical deployment of ECGDnet in real-time clinical environments, particularly for ambulatory ECG monitoring and continuous patient surveillance.

5. Limitations and Future Work

Although ECGDnet shows encouraging results in the denoising of ECG signals, several limitations should be recognized. First, the present study relies on synthetic noise, produced by combining clean ECG signals with resampled EMG data. While this approach permits a controlled and reproducible assessment, it does not fully capture the variability and complexity of real-world noise conditions, including the motion artifacts and baseline wander encountered in ambulatory environments. Future work will incorporate recorded noisy ECG datasets, such as the MIT-BIH Noise Stress Test Database, and perform cross-dataset validation to better evaluate clinical generalization.
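The synthetic contamination described above can be sketched as follows. This is a minimal example under stated assumptions: the noise is scaled to reach a chosen target SNR before being added, which is a common practice but is not confirmed here as the exact mixing rule of this study. The function names `mean_power` and `mix_at_snr`, and the toy signals, are hypothetical.

```python
import math


def mean_power(signal):
    """Average power (mean of squared samples) of a sequence."""
    return sum(s * s for s in signal) / len(signal)


def mix_at_snr(clean, noise, snr_db):
    """Scale `noise` so that clean + scaled noise has the requested SNR (dB).

    Returns (contaminated, scaled_noise). Assumes both inputs are already
    resampled to the same rate and trimmed to the same length.
    """
    if len(clean) != len(noise) or not clean:
        raise ValueError("signals must be non-empty and of equal length")
    p_clean = mean_power(clean)
    p_noise = mean_power(noise)
    if p_clean == 0 or p_noise == 0:
        raise ValueError("signals must have non-zero power")
    # Choose gain so that p_clean / (gain^2 * p_noise) == 10^(snr_db / 10).
    gain = math.sqrt(p_clean / (p_noise * 10 ** (snr_db / 10.0)))
    scaled_noise = [gain * n for n in noise]
    contaminated = [c + n for c, n in zip(clean, scaled_noise)]
    return contaminated, scaled_noise


# Toy stand-ins (assumptions): a sine wave for the ECG and an alternating
# sequence for the EMG segment.
clean_ecg = [math.sin(2 * math.pi * i / 16) for i in range(64)]
emg = [((-1) ** i) * (i % 7 + 1) / 10.0 for i in range(64)]
noisy_ecg, scaled_emg = mix_at_snr(clean_ecg, emg, snr_db=5.0)
```

Because the gain is derived from the measured powers, the same routine can generate training pairs at several noise levels by varying `snr_db`.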

Second, although ECGDnet employs an encoder-only Transformer architecture and retains a comparatively low parameter count, the computational cost of self-attention, which grows quadratically with sequence length, could still present significant challenges for ultra-low-power edge devices. Future work will therefore investigate hybrid architectures that integrate lightweight convolutional front-ends with attention-based modules, together with compression methods such as quantization, pruning, and knowledge distillation, aimed at reducing model size and inference latency.
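To make the idea of quantization concrete, the sketch below applies symmetric per-tensor 8-bit quantization to a small list of weights. It is a generic textbook illustration, not a compression scheme evaluated in this study; the function names and the example weights are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization to integers in [-127, 127].

    Returns (quantized_values, scale), where weight ~= quantized * scale.
    """
    if not weights:
        raise ValueError("weights must be non-empty")
    max_abs = max(abs(w) for w in weights)
    # An all-zero tensor needs no scaling; 1.0 avoids division by zero.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map 8-bit integers back to approximate floating-point weights."""
    return [q * scale for q in quantized]


# Hypothetical weights, for illustration only.
weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each stored value shrinks from 32 bits to 8 bits, at the price of a rounding error of at most half the scale per weight; whether that error is acceptable for denoising quality would have to be verified experimentally.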

Finally, although this research primarily addresses ECG signals, the model architecture is designed to be modular and applicable in other contexts. Initial studies indicate that the existing framework could be adapted to denoise other biomedical signals, including EEG and PPG, both of which exhibit similar temporal structures and are prone to noise interference. Future work will focus on tailoring ECGDnet to these modalities and assessing it on relevant datasets to examine its potential utility in broader physiological monitoring scenarios.

6. Conclusions

This study assessed four techniques for ECG signal denoising: a traditional wavelet method (Sym4) and three deep learning models (1D-ResCNN, IC-U-Net, and ECGDnet), with emphasis on their effectiveness in reconstructing clean signals from contaminated data. The comparative analysis, based on Signal-to-Noise Ratio (SNR), Normalized Mean Squared Error (NMSE), Reconstruction Error (RE), and Pearson Correlation Coefficient (PCC), showed that ECGDnet surpassed the other methods across all evaluated criteria. Specifically, ECGDnet achieved the highest SNR (19.83), lowest NMSE (0.9842), lowest RE (0.0158), and highest PCC (0.9924), underscoring its capability to reduce noise while preserving the physiological fidelity of ECG signals.
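For readers who wish to compute comparable metrics on their own data, the following sketch evaluates a denoised signal against its clean reference. The formulas are common textbook definitions and are an assumption here: they may differ from the definitions used in this study, in particular for NMSE and RE, so the resulting values are not directly comparable with those reported above. The function name `denoising_metrics` is hypothetical.

```python
import math


def denoising_metrics(clean, denoised):
    """Return (snr_db, nmse, re, pcc) for two equal-length sequences.

    Assumed definitions (not necessarily those of the paper):
      NMSE = sum((x - y)^2) / sum(x^2)
      RE   = sqrt(NMSE), the relative root error
      SNR  = 10 * log10(sum(x^2) / sum((x - y)^2)), in dB
      PCC  = Pearson correlation between x and y
    """
    if len(clean) != len(denoised) or not clean:
        raise ValueError("signals must be non-empty and of equal length")
    signal_energy = sum(x * x for x in clean)
    error_energy = sum((x - y) ** 2 for x, y in zip(clean, denoised))
    if signal_energy == 0:
        raise ValueError("clean signal has zero energy")

    nmse = error_energy / signal_energy
    re = math.sqrt(nmse)
    if error_energy == 0:
        snr_db = math.inf  # perfect reconstruction
    else:
        snr_db = 10.0 * math.log10(signal_energy / error_energy)

    n = len(clean)
    mean_x = sum(clean) / n
    mean_y = sum(denoised) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(clean, denoised))
    var_x = sum((x - mean_x) ** 2 for x in clean)
    var_y = sum((y - mean_y) ** 2 for y in denoised)
    # Correlation is undefined for a constant signal; report NaN in that case.
    pcc = cov / math.sqrt(var_x * var_y) if var_x > 0 and var_y > 0 else math.nan
    return snr_db, nmse, re, pcc


# Toy example: the output underestimates every sample by 10%, which gives
# an SNR close to 20 dB and an NMSE close to 0.01 under these definitions.
clean = [1.0, -1.0, 1.0, -1.0]
denoised = [0.9, -0.9, 0.9, -0.9]
snr_db, nmse, re, pcc = denoising_metrics(clean, denoised)
```

The toy example also shows why several metrics are reported together: a pure amplitude error leaves the PCC essentially at 1 while the SNR and NMSE still register the distortion.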

Sym4, a wavelet-based technique, demonstrated the least effective performance, showing limited ability to capture the intricate, non-linear dynamics characteristic of ECG signals—particularly when compared to data-driven deep learning approaches. The superior performance of ECGDnet is attributed to its novel architecture, which incorporates multi-head attention mechanisms and Transformer-inspired positional embeddings to effectively capture long-range temporal dependencies. This makes ECGDnet particularly adept at managing the complex structure of ECG waveforms.

In contrast, IC-U-Net and 1D-ResCNN, although still effective, showed comparatively lower performance. These limitations are likely tied to the inherent constraints of their U-Net-based and convolutional structures, which may not fully exploit long-range dependencies. While Sym4 maintains the advantage of computational efficiency, it falls short when tasked with the denoising of complex biomedical signals, reaffirming the advantages of deep learning-based, attention-driven architectures.

In summary, this research underscores the promise of sophisticated attention-based frameworks like ECGDnet for ECG signal denoising and broader biomedical signal processing applications. Future work should focus on optimizing computational efficiency for real-time and resource-constrained deployments, as well as expanding the model’s scope to include other physiological signals, multi-modal datasets, or hybrid methodologies that integrate traditional signal processing with modern deep learning techniques.

Author Contributions

Conceptualization, F.-E.B.-B., B.J. and A.E.; methodology, F.-E.B.-B., I.T. and A.E.; software, I.T. and A.E.; validation, F.-E.B.-B., B.J., O.M. and A.E.; formal analysis, I.T. and A.E.; investigation, F.-E.B.-B. and A.E.; resources, O.M. and A.E.; data curation, A.E.; writing—original draft preparation, F.-E.B.-B., B.J. and A.E.; writing—review and editing, I.T., O.M. and A.E.; visualization, A.E.; supervision, F.-E.B.-B., B.J. and A.E.; project administration, B.J. and A.E.; funding acquisition, A.E. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study utilized the widely recognized MIT-BIH dataset. Ethical review and approval were waived for this study, due to the use of publicly available datasets.

Informed Consent Statement

This study used a publicly available dataset for which informed consent was obtained from all subjects involved.

Data Availability Statement

The data presented in this study are openly available from PhysioNet and Mendeley Data [MIT-BIH] [https://physionet.org/content/mitdb/1.0.0/].

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Bernard, C. Étude sur la physiologie du cœur. Rev. Des Deux Mondes (1829–1971) 1865, 56, 236–252. Available online: https://www.jstor.org/stable/44739012 (accessed on 16 May 2025).

2. Cephalometric Landmarks Identification Through an Object Detection-Based Deep Learning Model. Available online: https://openurl.ebsco.com/EPDB%3Agcd%3A10%3A23109450/detailv2?sid=ebsco%3Aplink%3Ascholar&id=ebsco%3Agcd%3A175975014&crl=c&link_origin=scholar.google.co.jp (accessed on 19 February 2025).

3. Xie, Y.-L.; Lin, C.-W. YOLO-ResTinyECG: ECG-Based Lightweight Embedded AI Arrhythmia Small Object Detector with Pruning Methods. Expert Syst. Appl. 2025, 263, 125691.

4. EfficientNetV2 and Attention Mechanisms for the Automated Detection of Cephalometric Landmarks. Available online: https://ieeexplore.ieee.org/abstract/document/10620094 (accessed on 19 February 2025).

5. Chauveau, A. Nouvelles Recherches Expérimentales sur les Mouvements et les Bruits Normaux du Cœur; J.-B. Baillière: Paris, France, 1856.

6. IoT-Powered Predictive Maintenance Framework for ICU Ventilators. Available online: https://ieeexplore.ieee.org/abstract/document/10620162 (accessed on 19 February 2025).

7. Atanasoski, V.; Maneski, L.P.; Ivanović, M.D. SimEMG Database as a Tool for Testing the Preservation of Diagnostic ECG-Signal Features upon the Electromyographic Noise Removal. In Proceedings of the 11th International Conference IcETRAN 2024, Niš, Serbia, 3–6 June 2024.

8. Reynier, P. Des Nerfs du Coeur: Anatomie et Physiologie; J.-B. Baillière: Paris, France, 1880.

9. Qin, Y.; Huang, Z.; Zhou, X.; Gui, S.; Xiong, L.; Liu, L.; Liu, J. A Novel Adaptive Filter with a Heart-Rate-Based Reference Signal for Esophageal Pressure Signal Denoising. J. Clin. Monit. Comput. 2024, 38, 701–714.

10. Atanasoski, V.; Petrović, J.; Maneski, L.P.; Miletić, M.; Babić, M.; Nikolić, A.; Panescu, D.; Ivanović, M.D. A Morphology-Preserving Algorithm for Denoising of EMG-Contaminated ECG Signals. IEEE Open J. Eng. Med. Biol. 2024, 5, 296–305.

11. Revach, G.; Locher, T.; Shlezinger, N.; van Sloun, R.J.G.; Vullings, R. HKF: Hierarchical Kalman Filtering with Online Learned Evolution Priors for Adaptive ECG Denoising. arXiv 2023, arXiv:2210.12807.

12. Mvuh, F.L.; Ebode Ko’a, C.O.V.; Bodo, B. Multichannel High Noise Level ECG Denoising Based on Adversarial Deep Learning. Sci. Rep. 2024, 14, 801.

13. Occhipinti, E.; Zylinski, M.; Davies, H.J.; Nassibi, A.; Bermond, M.; Bachtiger, P.; Peters, N.S.; Mandic, D.P. In-Ear ECG Signal Enhancement with Denoising Convolutional Autoencoders. arXiv 2024, arXiv:2409.05891.

14. Wang, K.-C.; Liu, K.-C.; Peng, S.-Y.; Tsao, Y. ECG Artifact Removal from Single-Channel Surface EMG Using Fully Convolutional Networks. arXiv 2022, arXiv:2210.13271.

15. Liu, Y.-T.; Wang, K.-C.; Liu, K.-C.; Peng, S.-Y.; Tsao, Y. SDEMG: Score-Based Diffusion Model for Surface Electromyographic Signal Denoising. arXiv 2024, arXiv:2402.03808.

16. Crabbé, J.; Huynh, N.; Stanczuk, J.; van der Schaar, M. Time Series Diffusion in the Frequency Domain. arXiv 2024, arXiv:2402.05933.

17. Saleem, S.; Khandoker, A.H.; Alkhodari, M.; Hadjileontiadis, L.J.; Jelinek, H.F. A Two-Step Pre-Processing Tool to Remove Gaussian and Ectopic Noise for Heart Rate Variability Analysis. Sci. Rep. 2022, 12, 18396.

18. Moody, G.B.; Mark, R.G. MIT-BIH Arrhythmia Database. physionet.org. 1992. Available online: https://physionet.org/content/mitdb/1.0.0/ (accessed on 19 February 2025).

19. Preprocessing and Denoising Techniques for Electrocardiography and Magnetocardiography: A Review. Available online: https://www.mdpi.com/2306-5354/11/11/1109 (accessed on 25 November 2024).

20. Hu, K.-Y.; Wang, J.; Cheng, Y.; Yang, C. Adaptive Filtering and Smoothing Algorithm Based on Variable Structure Interactive Multiple Model. Sci. Rep. 2023, 13, 12993.

21. Yang, X.; Wang, W. Chaotic Signal Denoising Based on Energy Selection TQWT and Adaptive SVD. Sci. Rep. 2023, 13, 18873.

22. Tong, S.; Yu, D.; Li, X.; Wang, L.; Wang, L. Research on ECG Signal Denoising Based on EM-UKF Algorithm. In Proceedings of the 2024 9th International Conference on Multimedia Systems and Signal Processing (ICMSSP), Bangkok, Thailand, 24–26 May 2024; pp. 18–23.

23. Improved Multi-Layer Wavelet Transform and Blind Source Separation Based ECG Artifacts Removal Algorithm from the sEMG Signal: In the Case of Upper Limbs. Available online: https://www.frontiersin.org/articles/10.3389/fbioe.2024.1367929/full (accessed on 25 November 2024).

24. Sameni, R.; Shamsollahi, M.B.; Jutten, C.; Clifford, G.D. A Nonlinear Bayesian Filtering Framework for ECG Denoising. IEEE Trans. Biomed. Eng. 2007, 54, 2172–2185.

25. Dataset for Multi-Channel Surface Electromyography (sEMG) Signals of Hand Gestures. Available online: https://data.mendeley.com/datasets/ckwc76xr2z/2 (accessed on 25 November 2024).

26. Denoising of Blasting Vibration Signals Based on CEEMDAN-ICA Algorithm. Available online: https://www.nature.com/articles/s41598-023-47755-9 (accessed on 10 March 2024).

27. High-Density Surface EMG Denoising Using Independent Vector Analysis. Available online: https://ieeexplore.ieee.org/document/9064821 (accessed on 19 February 2025).

28. Radware Bot Manager Captcha. Available online: https://ieeexplore.ieee.org (accessed on 19 February 2025).

29. Komorowski, D.; Mika, B.; Kaczmarek, P. A New Approach to Preprocessing of EMG Signal to Assess the Correctness of Muscle Condition. Sci. Pap. Silesian Univ. Technol. Organ. Manag. Ser. 2023, 186, 217–238.

30. Ponnada, S.; Kuan Tak, T.; Kshirsagar, P.R.; Srinivasa Rao, P.; Dayal, A. Expanding Applications of TinyML in Versatile Assistive Devices: From Navigation Assistance to Health Monitoring System Using Optimized NASNet-XGBoost Transfer Learning. IEEE Access 2024, 12, 168328–168338.

31. Noise Removal in Single-Lead Capacitive ECG with Adaptive Filtering and Singular Value Decomposition. Available online: https://ieeexplore.ieee.org/abstract/document/10714420 (accessed on 25 November 2024).

32. Zhang, Z.; Ye, Y.; Luo, B.; Chen, G.; Wu, M. Investigation of Microseismic Signal Denoising Using an Improved Wavelet Adaptive Thresholding Method. Sci. Rep. 2022, 12, 22186.

33. Farag, M.M. A Tiny Matched Filter-Based CNN for Inter-Patient ECG Classification and Arrhythmia Detection at the Edge. Sensors 2023, 23, 1365.

34. Kim, J.; Kim, E.; Kyung, Y.; Ko, H. A Study on ECG Monitoring Embedded Systems. In Proceedings of the 2022 13th International Conference on Information and Communication Technology Convergence (ICTC), Jeju, Republic of Korea, 19–21 October 2022; pp. 1671–1673.

35. Busia, P.; Scrugli, M.A.; Jung, V.J.-B.; Benini, L.; Meloni, P. A Tiny Transformer for Low-Power Arrhythmia Classification on Microcontrollers. IEEE Trans. Biomed. Circuits Syst. 2024, 19, 142–152.

36. Ibrahim, M.F.R.; Alkanat, T.; Meijer, M.; Schlaefer, A.; Stelldinger, P. End-to-End Multi-Modal Tiny-CNN for Cardiovascular Monitoring on Sensor Patches. In Proceedings of the PerCom 2024, Biarritz, France, 11–15 March 2024; p. 24.

37. Ficco, M.; Guerriero, A.; Milite, E.; Palmieri, F.; Pietrantuono, R.; Russo, S. Federated Learning for IoT Devices: Enhancing TinyML with On-Board Training. Inf. Fusion 2024, 104, 102189.

38. Comparative Analysis of Machine Learning Algorithms With Advanced Feature Extraction for ECG Signal Classification. Available online: https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=10496107 (accessed on 6 January 2025).

39. Bashford, J.; Wickham, A.; Iniesta, R.; Drakakis, E.; Boutelle, M.; Mills, K.; Shaw, C.E. Preprocessing Surface EMG Data Removes Voluntary Muscle Activity and Enhances SPiQE Fasciculation Analysis. Clin. Neurophysiol. 2020, 131, 265–273.

40. Hayajneh, A.M.; Aldalahmeh, S.; Zaidi, S.A.R.; McLernon, D.; Obeidollah, H.; Alsakarnah, R. Channel State Information Based Device-Free Wireless Sensing for IoT Devices Employing TinyML. In Proceedings of the 2022 4th IEEE Middle East and North Africa Communications Conference (MENACOMM), Amman, Jordan, 6–8 December 2022; pp. 215–222.

41. Riillo, F.; Quitadamo, L.R.; Cavrini, F.; Gruppioni, E.; Pinto, C.A.; Pastò, N.C.; Sbernini, L.; Albero, L.; Saggio, G. Optimization of EMG-Based Hand Gesture Recognition: Supervised vs. Unsupervised Data Preprocessing on Healthy Subjects and Transradial Amputees. Biomed. Signal Process. Control 2014, 14, 117–125.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).