Signal to Noise Ratio of a Coded Slit Hyperspectral Sensor

Abstract

1. Introduction

2. Materials and Methods: The Signal to Noise (SNR) Model

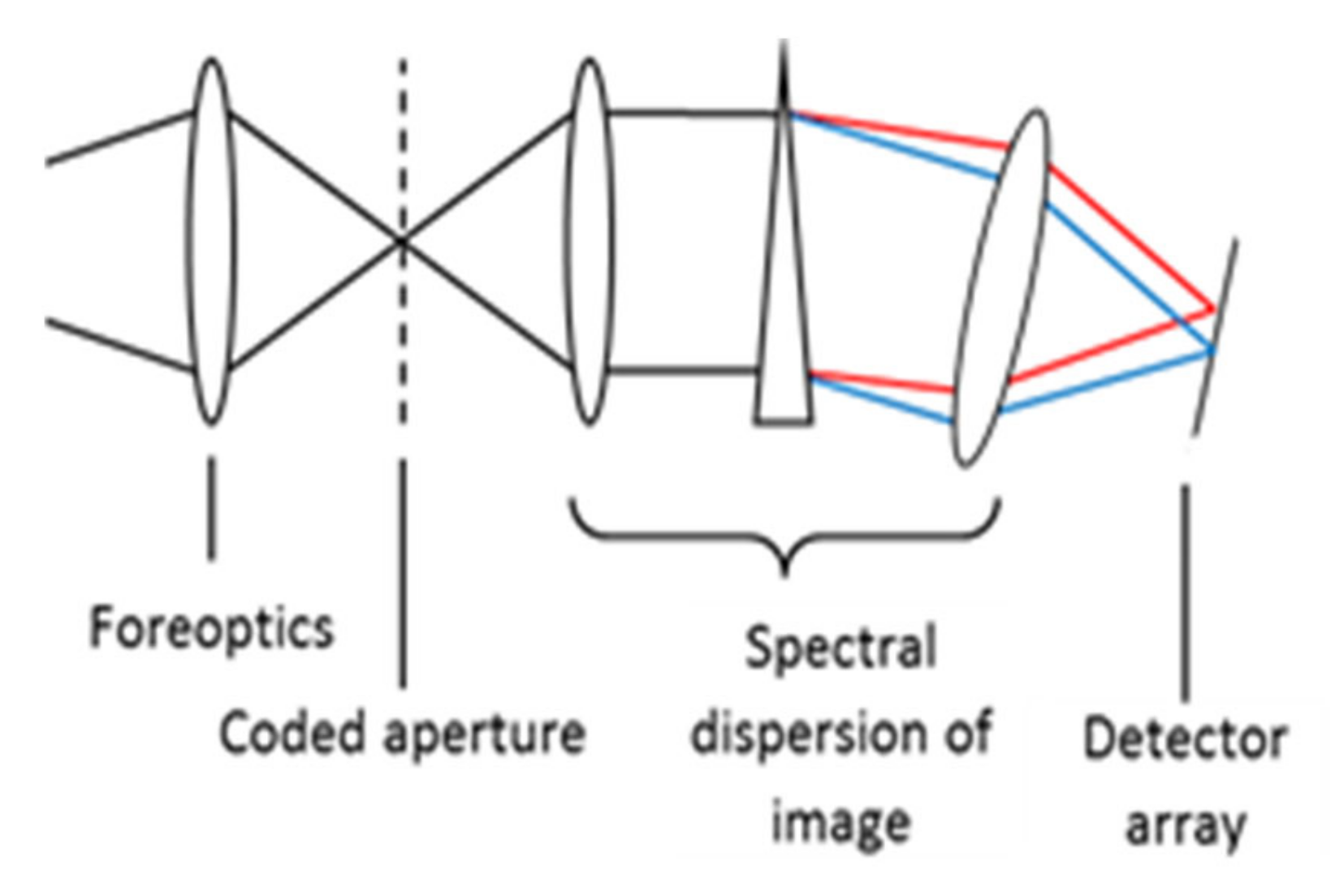

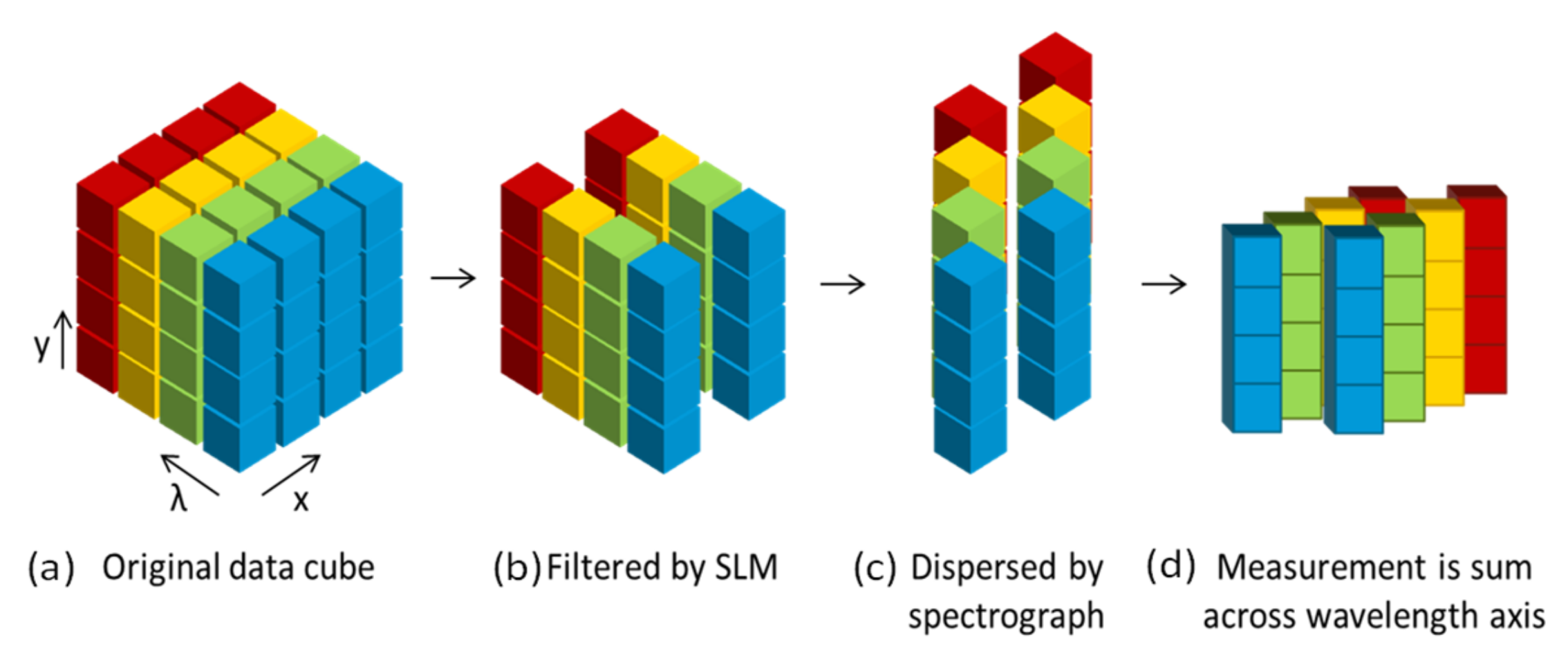

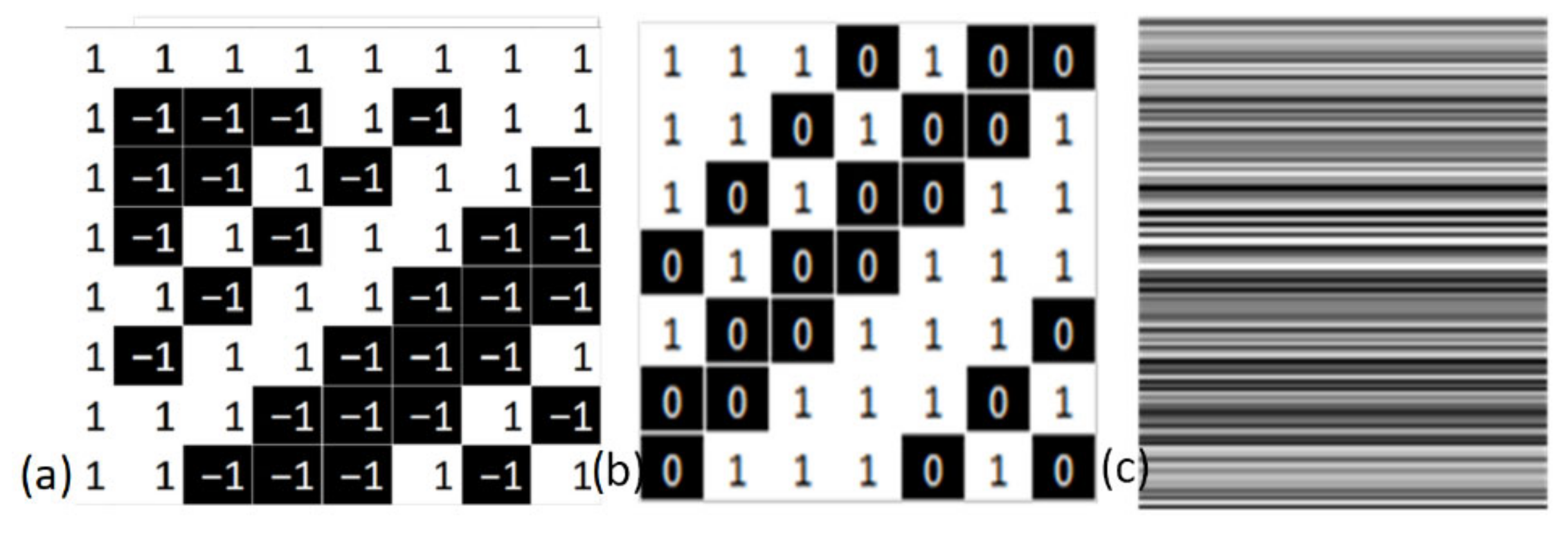

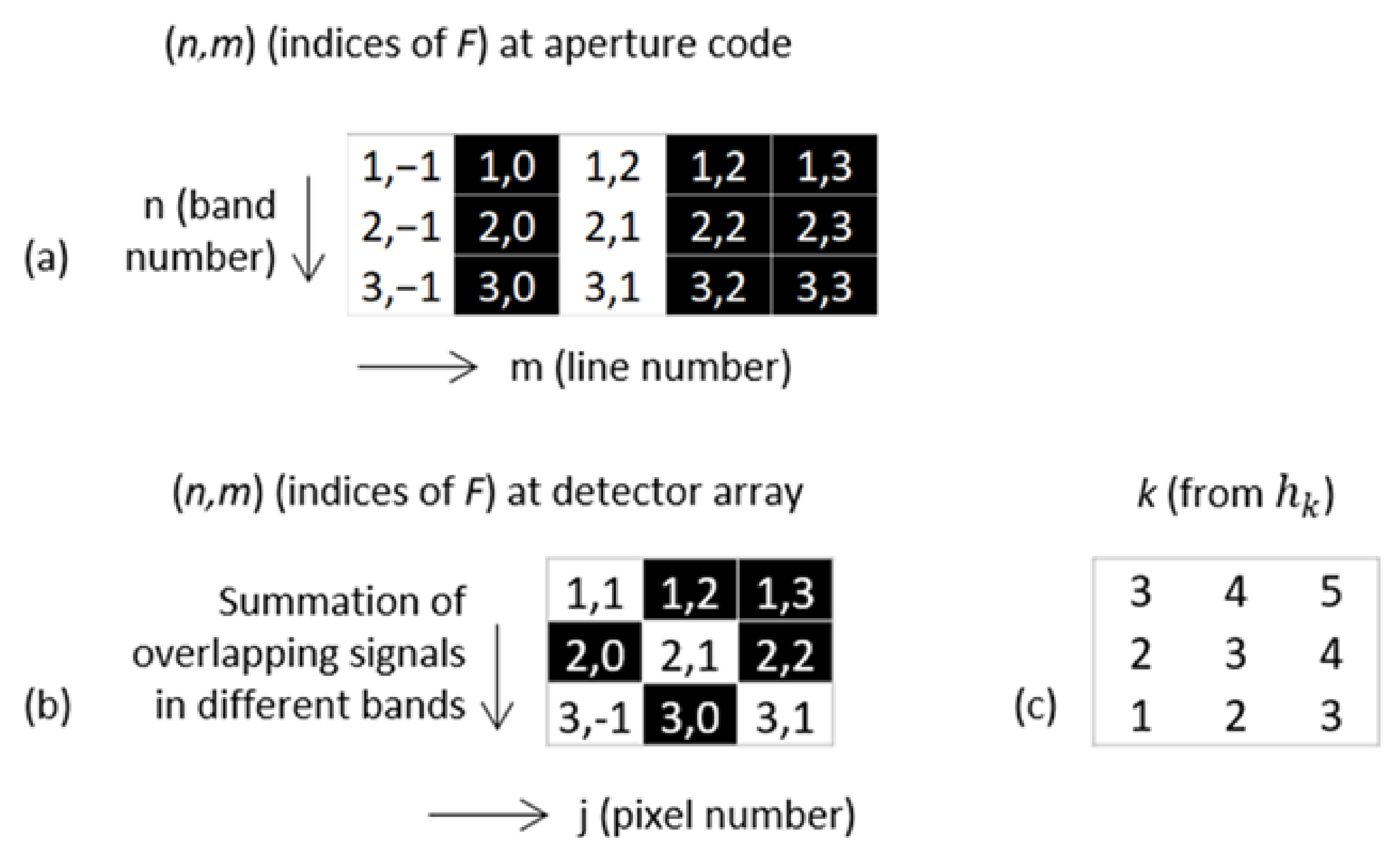

2.1. The Configuration of the Coded Aperture Sensor

2.2. The SNR Model for Assessing the Utility of CA Multiplexed Sensing

3. Results and Data Analysis

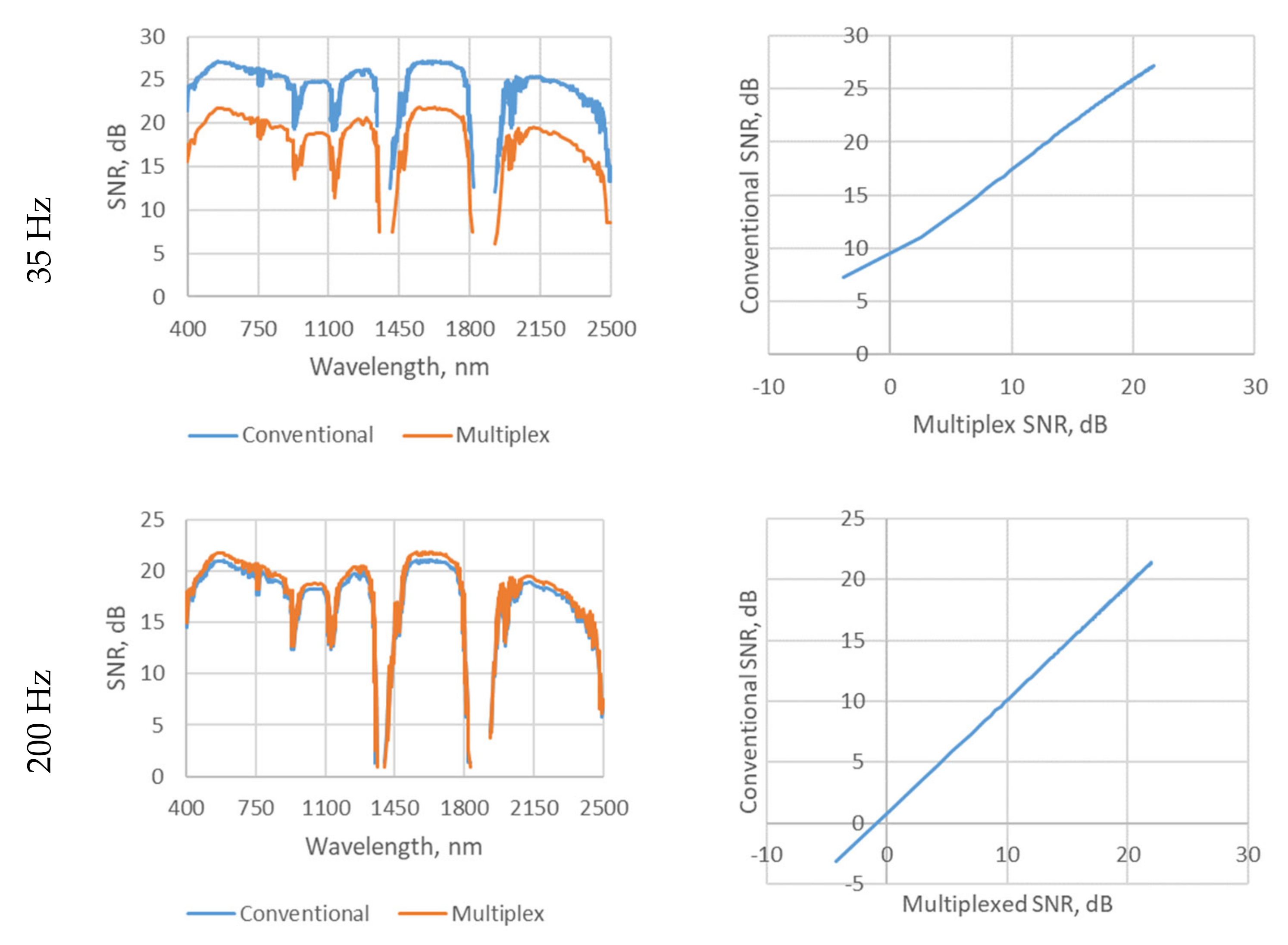

3.1. The Visualisation of SNR

3.2. The Trends of the SNR Performance

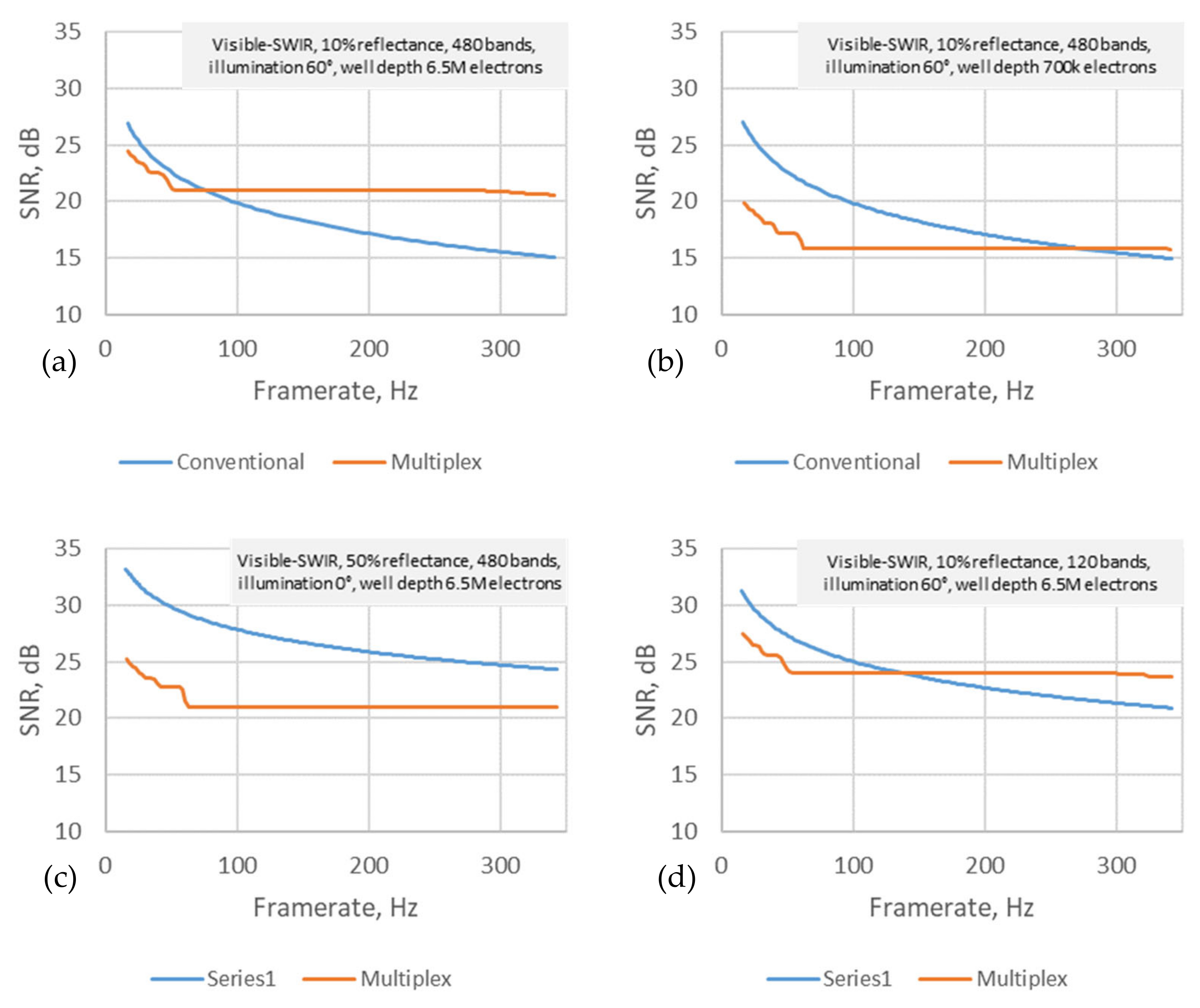

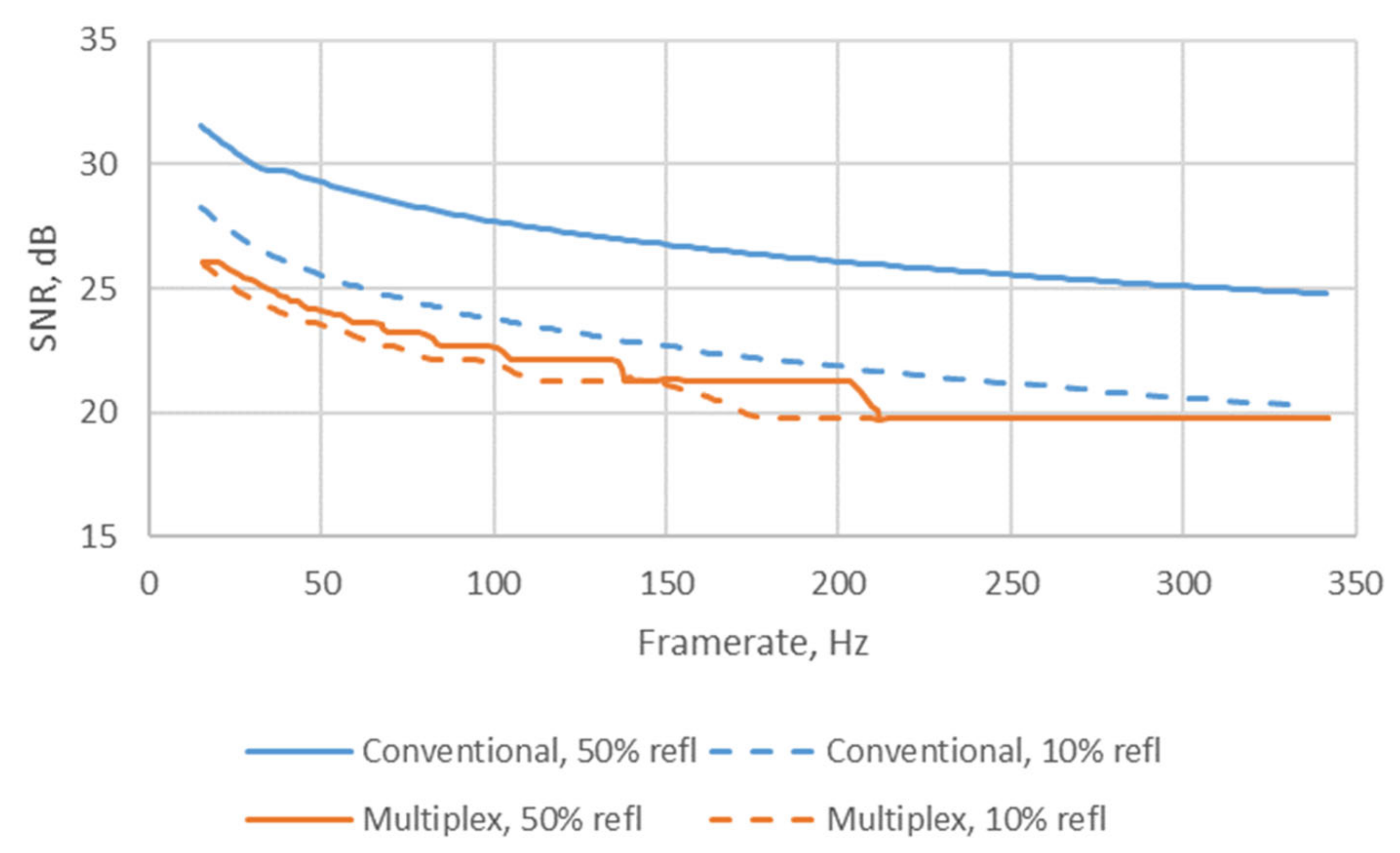

3.3. The SNR for the Integrated Visible-SWIR Sensor System

3.4. The SNR of the SWIR Imaging System

3.5. The SNR of the Visible-NIR Sensing

3.6. The Requirements of SNR for Successful Target Detections Based on Second Order Statistical Detector

3.7. Implication of the Present Results in the Context of Compressive Sensing Schemes

4. Summary and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Modelled Sensor Parameters | Visible-SWIR | SWIR Band | Visible-NIR |
|---|---|---|---|
| No. of wavebands | 480 | 288 | 768 |
| Pixel size, µm | 30 | 20 | 8 |
| Pixel well depth, e− | 5.0 × 10⁶ | 1.10 × 10⁶ | 9.0 × 10⁴ |
| Noise floor level, e− | 600 | 150 | 110 |
| Dark current level, e−/s/pixel | 207 | 100 | 4000 |
| Max. frame rate, Hz | 125 | 450 | 170 |
| Readout time, µs | 16.80 | 7.70 | -- |
| Optical throughput, f/# | 2.00 | 2.00 | 2.50 |
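As a rough illustration of how the tabulated detector parameters bound the achievable SNR, the sketch below evaluates a textbook single-pixel noise budget at full well. It is not the SNR model of this paper: it assumes the noise floor is an rms value in electrons, that signal and dark-current shot noise are Poisson, that the terms add in quadrature, and that the integration time equals one frame period at the maximum frame rate. The names `SENSORS`, `snr` and `full_well_snr` are hypothetical and used only for this example.

```python
import math

# Values transcribed from the modelled sensor parameters table.
SENSORS = {
    "Visible-SWIR": {"well_depth_e": 5.0e6, "noise_floor_e": 600.0,
                     "dark_current_e_per_s": 207.0, "max_frame_rate_hz": 125.0},
    "SWIR Band": {"well_depth_e": 1.10e6, "noise_floor_e": 150.0,
                  "dark_current_e_per_s": 100.0, "max_frame_rate_hz": 450.0},
    "Visible-NIR": {"well_depth_e": 9.0e4, "noise_floor_e": 110.0,
                    "dark_current_e_per_s": 4000.0, "max_frame_rate_hz": 170.0},
}


def snr(signal_e, noise_floor_e, dark_current_e_per_s, integration_time_s):
    """Single-pixel SNR with shot, dark-current shot and noise-floor terms.

    Assumption: all noise terms are independent and add in quadrature.
    """
    dark_e = dark_current_e_per_s * integration_time_s
    noise_e = math.sqrt(signal_e + dark_e + noise_floor_e ** 2)
    return signal_e / noise_e


def full_well_snr(params):
    """Upper-bound SNR when the pixel is filled to its well depth.

    Assumption: integration lasts one full frame period at the maximum rate.
    """
    integration_time_s = 1.0 / params["max_frame_rate_hz"]
    return snr(params["well_depth_e"], params["noise_floor_e"],
               params["dark_current_e_per_s"], integration_time_s)


if __name__ == "__main__":
    for name, params in SENSORS.items():
        dynamic_range = params["well_depth_e"] / params["noise_floor_e"]
        print(f"{name}: full-well SNR ~ {full_well_snr(params):.0f}, "
              f"dynamic range ~ {dynamic_range:.0f}:1")
```

Under these assumptions the full-well SNR always lies below the pure shot-noise limit, the square root of the well depth, and the gap widens as the noise floor grows relative to the well depth.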


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Piper, J.; Yuen, P.W.T.; James, D. Signal to Noise Ratio of a Coded Slit Hyperspectral Sensor. Signals 2022, 3, 752-764. https://doi.org/10.3390/signals3040045
