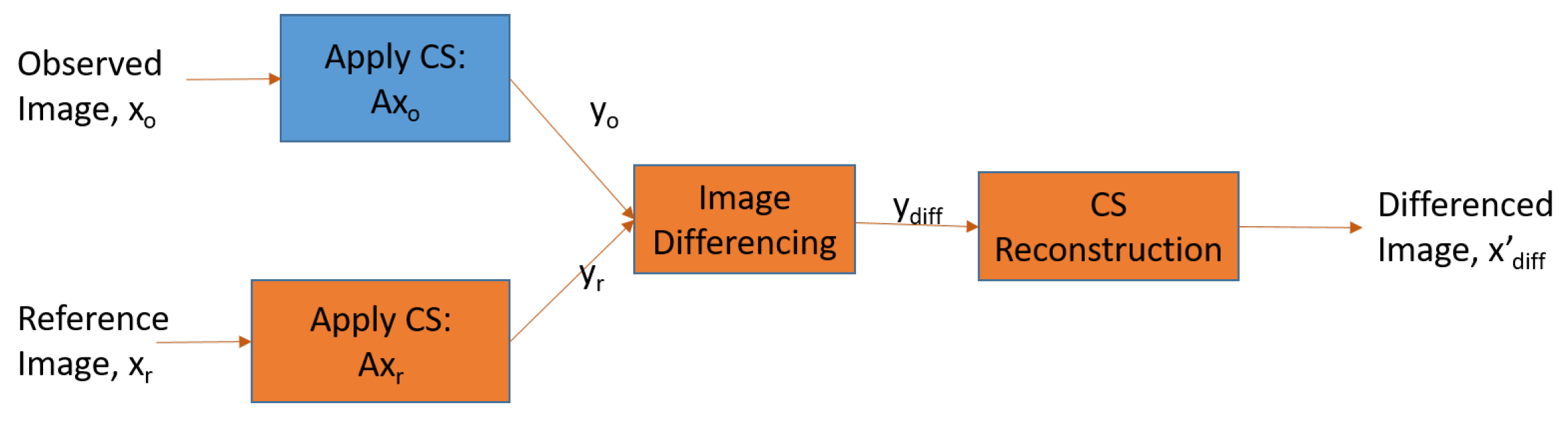

Figure 1.

CS architecture. The blue block represents CS data acquisition, which can be performed on board a spaceflight instrument, while the orange blocks represent computations that can be performed on the ground. Image differencing can also be performed on board to further reduce data volume.

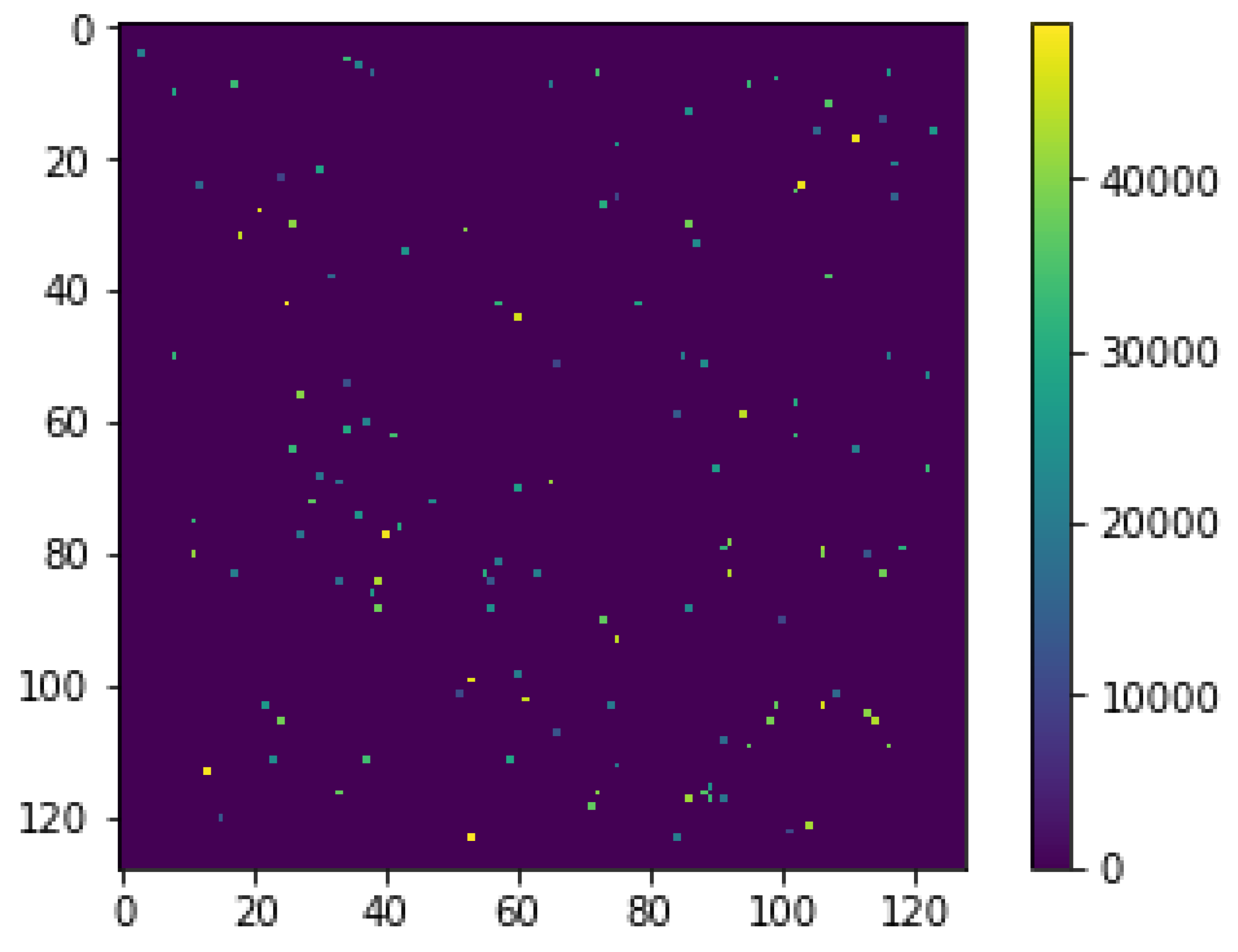

Figure 2.

Sample reference image.

Figure 3.

Sample observed image.

Figure 4.

Sample differenced image.
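
Figures 2 to 4 show a reference image, an observed image, and their difference. If the CS measurement is a linear projection, the difference can be formed directly on the measurements instead of on full images. The sketch below illustrates that linearity under assumed values: the random binary matrix `phi`, the 64-pixel image size, and the 20 measurements are hypothetical and not taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(1)
n_pixels, n_meas = 64, 20  # hypothetical sizes, for illustration only

# Assumed measurement matrix: one random binary pattern per row.
phi = rng.integers(0, 2, size=(n_meas, n_pixels)).astype(float)

# Reference field and an observed field in which a single star has brightened.
reference = rng.uniform(10.0, 20.0, size=n_pixels)
observed = reference.copy()
observed[17] += 3.0

# Differencing the measurements equals measuring the differenced image.
y_diff = phi @ observed - phi @ reference
```

The differenced image here has a single nonzero pixel, which is the kind of sparsity a CS reconstruction relies on.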

Figure 5.

A diagram of a satellite constellation observing the same spatial region in order to capture the microlensing parallax of any microlensing event occurring in the given field of view. X represents a satellite with a CS detector system.

Figure 6.

A potential CS implementation of the detector system using a telescope to acquire the light from the spatial region, a set of micro-mirror arrays to reflect light using CS projection methods, and a photodiode to capture a single measurement of the total reflected light.
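
The acquisition described in this caption can be sketched numerically: each micro-mirror pattern selects a subset of pixels, and the photodiode records the total light reflected by that pattern. The sketch below is a minimal illustration, assuming random 0/1 mirror patterns; the function name `single_pixel_measurements`, the 8 × 8 image, and the 16 measurements are hypothetical and do not come from the paper.

```python
import numpy as np

def single_pixel_measurements(image, num_measurements, seed=0):
    """Simulate photodiode readings for random binary mirror patterns."""
    rng = np.random.default_rng(seed)
    x = image.reshape(-1).astype(float)
    # Each row is one mirror pattern: 1 reflects a pixel toward the photodiode, 0 away.
    patterns = rng.integers(0, 2, size=(num_measurements, x.size)).astype(float)
    # One scalar reading per pattern: the total reflected light.
    y = patterns @ x
    return patterns, y

# Hypothetical 8 x 8 scene containing a single bright source.
image = np.zeros((8, 8))
image[3, 4] = 5.0
patterns, y = single_pixel_measurements(image, num_measurements=16)
```

With only one bright pixel, each reading is either 0 or 5 depending on whether the pattern includes that pixel.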

Figure 7.

Photometric curves generated by different parallax values, each shown with its corresponding CS-reconstructed curve, for R = 7000 km.

Figure 9.

Photometric curves generated by different parallax values, each shown with its corresponding CS-reconstructed curve, for R = 42,000 km. The original photometric curve without any microlensing effects is shown in red for comparison.

Figure 11.

Photometric curves generated by different parallax values, each shown with its corresponding CS-reconstructed curve, for R = 1 AU. The magnification is significantly lower because the differenced image is reconstructed using our CS technique, and the in both and is significantly high.

Table 1.

Microlensing parameter definitions obtained from a photometric curve.

| Parameter | Definition |
|---|---|
| $t_0$ | Time of peak magnification |
| $t_E$ | Einstein ring crossing time |
| $u_0$ | Impact parameter in units of the angular Einstein radius |
| $F_s$ | Microlensing source star flux |
| $F_b$ | Microlensing source star blended flux |
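
To show how the five quantities in Table 1 shape a photometric curve, the sketch below evaluates the standard point-source, point-lens (Paczyński) model. It is an illustration, not the paper's simulation: it omits parallax, the argument names `t0`, `tE`, `u0`, `Fs`, and `Fb` are the conventional symbols assumed for the table entries, and all numerical values are hypothetical.

```python
import numpy as np

def magnification(u):
    """Point-lens magnification for lens-source separation u (in Einstein radii)."""
    return (u**2 + 2.0) / (u * np.sqrt(u**2 + 4.0))

def photometric_curve(t, t0, tE, u0, Fs, Fb):
    """Observed flux: magnified source flux plus unmagnified blended flux."""
    u = np.sqrt(u0**2 + ((t - t0) / tE) ** 2)
    return Fs * magnification(u) + Fb

# Hypothetical values: 30 samples over one day, with the peak at mid-observation.
t = np.linspace(0.0, 1.0, 30)
flux = photometric_curve(t, t0=0.5, tE=1.0, u0=0.1, Fs=1.0, Fb=0.2)
```

Smaller `u0` gives a higher peak, `tE` sets the width of the curve, and `t0` sets where the peak falls in time.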

Table 2.

Comparison between traditional detectors and CS detectors.

| Traditional Detectors | CS Detectors |
|---|---|
| CCD detectors | Typically designed with spatial light modulators and a photodiode |
| Pixel-by-pixel readout of the image | Total power reflected from the matrix projected onto the image is measured |
| Digitization of each pixel readout | Digitization of the total power read |

Table 3.

Simulation setup parameters.

| R | | Cadence | Observation Time |
|---|---|---|---|
| 7000 km | 1 day | 48 min | 1 day |
| 42,000 km | 1 day | 48 min | 1 day |
| 1 AU | 1 day | 5.02 days | 150.5 days |

Table 4.

Percent error for CS reconstruction for each parallax value, for R = 7000 km. The second row shows the average % error over all time samples, the third row shows the average % error at peak magnification, and the last row shows the standard deviation of the % error at peak magnification.

| | 0 | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| Avg % Err | 0.175 | 0.230 | 0.088 | 0.109 | 0.163 | 0.161 | 0.240 | 0.098 |
| Avg % Err at peak | 0.075 | 0.06 | 1.07 | 0.068 | 1.09 | 0.076 | 0.081 | 0.073 |
| Std dev. % Err at peak | 0.057 | 0.064 | 9.94 | 0.056 | 9.94 | 0.086 | 0.070 | 0.068 |
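
The three rows of this table can be read as simple statistics over repeated reconstructions. The sketch below shows one way to compute them, assuming each row of `recon_curves` is one reconstruction trial of the same true curve; the function name, array layout, and sample numbers are hypothetical and are not the paper's data.

```python
import numpy as np

def reconstruction_error_stats(true_curve, recon_curves):
    """Percent-error statistics for recon_curves (n_trials, T) against true_curve (T,)."""
    pct_err = 100.0 * np.abs(recon_curves - true_curve) / np.abs(true_curve)
    peak = int(np.argmax(true_curve))  # time index of peak magnification
    return {
        "avg_pct_err": pct_err.mean(),                  # over all trials and time samples
        "avg_pct_err_at_peak": pct_err[:, peak].mean(), # over trials, at the peak only
        "std_pct_err_at_peak": pct_err[:, peak].std(),
    }

# Hypothetical toy data: two trials that each miss the peak value by 1%.
true_curve = np.array([1.0, 2.0, 4.0, 2.0])
recon_curves = np.array([[1.0, 2.0, 4.04, 2.0],
                         [1.0, 2.0, 3.96, 2.0]])
stats = reconstruction_error_stats(true_curve, recon_curves)
```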

Table 5.

Percent error at peak magnification over 100 Monte Carlo simulations, between a microlensing photometric curve with the parallax value shown in the first row and the photometric curve with the parallax value in the first column, for R = 7000 km. Values in bold underline show where % error between the two curves is less than .

| | 0 | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| 0 | - | 12.0 | 25.3 | 40.0 | 50.2 | 56.5 | 59.8 | 60.1 |
| | 13.6 | - | 15.2 | 31.8 | 43.4 | 50.6 | 54.4 | 54.7 |
| | 33.9 | 17.9 | - | 19.6 | 33.3 | 41.7 | 46.2 | 46.6 |
| | 66.7 | 46.7 | 24.5 | - | 16.9 | 27.5 | 33.1 | 33.5 |
| | 101 | 76.6 | 49.8 | 20.4 | - | 12.7 | 19.4 | 20.0 |
| | 130 | 102 | 71.6 | 37.9 | 14.5 | - | 7.72 | 8.34 |
| | 149 | 119 | 85.9 | 49.4 | 24.1 | 8.36 | - | 0.674 |
| | 151 | 121 | 87.2 | 50.4 | 24.9 | 9.10 | 0.679 | - |
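
The table is not symmetric because a percent error depends on which curve is taken as the reference. The sketch below builds such a pairwise matrix from a list of peak values, assuming the error is normalised by the curve indexed by the matrix row; that convention, the function name, and the sample peaks are assumptions for illustration, not the paper's stated procedure.

```python
import numpy as np

def pairwise_peak_percent_error(peaks):
    """Entry [i, j] is 100 * |peak_i - peak_j| / peak_i."""
    peaks = np.asarray(peaks, dtype=float)
    diff = np.abs(peaks[:, None] - peaks[None, :])
    return 100.0 * diff / peaks[:, None]

# Two hypothetical peak values in the ratio 1 : 0.88.
errors = pairwise_peak_percent_error([1.0, 0.88])
```

For these two peaks the off-diagonal entries are 12.0% and about 13.6%, the same kind of asymmetry seen between mirrored entries of the table.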

Table 6.

Time difference in hours at peak magnification between a microlensing photometric curve with the parallax value shown in the first row and the photometric curve with the parallax value in the first column, for R = 7000 km.

| | 0 | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| 0 | - | 0 | 0.828 | 0.828 | 1.66 | 1.66 | 0.828 | 0.828 |
| | 0 | - | 0.828 | 0.828 | 1.66 | 1.66 | 0.828 | 0.828 |
| | 0.828 | 0.828 | - | 0 | 0.828 | 0.828 | 0 | 0 |
| | 0.828 | 0.828 | 0 | - | 0.828 | 0.828 | 0 | 0 |
| | 1.66 | 1.66 | 0.828 | 0.828 | - | 0 | 0.828 | 0.828 |
| | 1.66 | 1.66 | 0.828 | 0.828 | 0 | - | 0.828 | 0.828 |
| | 0.828 | 0.828 | 0 | 0 | 0.828 | 0.828 | - | 0 |
| | 0.828 | 0.828 | 0 | 0 | 0.828 | 0.828 | 0 | - |
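
The entries in this table are multiples of about 0.828 h, which is the spacing of 30 evenly spaced samples over one day (24/29 h). That sample count is inferred from the cadence and observation time in Table 3, not stated in the source. Under that assumption, the sketch below computes pairwise peak-time differences on such a grid; the Gaussian test curves and the function name are hypothetical stand-ins for the photometric curves.

```python
import numpy as np

def peak_time_differences(t, curves):
    """Absolute difference between the peak times of every pair of curves."""
    t = np.asarray(t, dtype=float)
    curves = np.asarray(curves, dtype=float)
    t_peak = t[np.argmax(curves, axis=1)]  # sample time of each curve's maximum
    return np.abs(t_peak[:, None] - t_peak[None, :])

# Assumed time grid: 30 samples across a 24-hour observation.
t_hours = np.linspace(0.0, 24.0, 30)

# Hypothetical curves peaking on three consecutive grid points.
centres = [t_hours[14], t_hours[15], t_hours[16]]
curves = [np.exp(-0.5 * ((t_hours - c) / 2.0) ** 2) for c in centres]
dt = peak_time_differences(t_hours, curves)
```

Because peaks are located on the sampling grid, differences smaller than one sample interval appear as 0, and all others as whole multiples of the interval.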

Table 7.

Percent error for CS reconstruction for each parallax value, for R = 42,000 km. The second row shows the average % error over all time samples, the third row shows the average % error at peak magnification, and the last row shows the standard deviation of the % error at peak magnification.

| | 0 | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| Avg % Err | 0.108 | 0.110 | 0.182 | 0.110 | 0.191 | 0.208 | 0.173 | 0.201 |
| Avg % Err at peak | 1.07 | 0.059 | 0.091 | 1.07 | 0.086 | 0.063 | 0.062 | 0.071 |
| Std dev. % Err at peak | 9.94 | 0.041 | 0.205 | 9.94 | 0.094 | 0.049 | 0.062 | 0.057 |

Table 8.

Percent error at peak magnification between a microlensing photometric curve with the parallax value shown in the first row and the photometric curve with the parallax value in the first column, for R = 42,000 km. Values in bold underline show where % error between the two curves is less than .

| | 0 | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| 0 | - | 22.1 | 7.50 | 109 | 215 | 25.1 | 59.7 | 70.9 |
| | 28.3 | - | 18.7 | 168 | 305 | 3.85 | 48.3 | 62.6 |
| | 8.11 | 15.8 | - | 126 | 241 | 19.0 | 56.5 | 68.5 |
| | 52.1 | 62.7 | 55.7 | - | 51.0 | 64.1 | 80.7 | 86.1 |
| | 68.3 | 75.3 | 70.7 | 33.8 | - | 76.2 | 87.2 | 90.8 |
| | 33.5 | 4.00 | 23.5 | 179 | 321 | - | 46.2 | 61.1 |
| | 148 | 93.4 | 130 | 418 | 682 | 86.0 | - | 27.7 |
| | 243 | 168 | 218 | 617 | 982 | 157 | 38.3 | - |

Table 9.

Time difference in hours at peak magnification between a microlensing photometric curve with the parallax value shown in the first row and the photometric curve with the parallax value in the first column, for R = 42,000 km.

| | 0 | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| 0 | - | 1.66 | 4.14 | 6.62 | 9.10 | 10.8 | 10.8 | 10.8 |
| | 1.66 | - | 2.48 | 4.97 | 7.45 | 9.10 | 9.10 | 9.10 |
| | 4.14 | 2.48 | - | 2.48 | 4.97 | 6.62 | 6.62 | 6.62 |
| | 6.62 | 4.97 | 2.48 | - | 2.48 | 4.14 | 4.14 | 4.14 |
| | 9.10 | 7.45 | 4.97 | 2.48 | - | 1.66 | 1.66 | 1.66 |
| | 10.8 | 9.10 | 6.62 | 4.14 | 1.66 | - | 0 | 0 |
| | 10.8 | 9.10 | 6.62 | 4.14 | 1.66 | 0 | - | 0 |
| | 10.8 | 9.10 | 6.62 | 4.14 | 1.66 | 0 | 0 | - |

Table 10.

Percent error for CS reconstruction for each parallax value, for R = 1 AU. The second row shows the average % error over all time samples, the third row shows the average % error at the peak of each curve, and the last row shows the standard deviation of the % error at the peak.

| | 0 | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| Avg % Err | 0.437 | 0.441 | 0.633 | 0.743 | 0.621 | 0.348 | 0.616 | 0.582 |
| Avg % Err at peak | 0.186 | 0.192 | 0.192 | 0.194 | 0.183 | 0.119 | 0.106 | 0.117 |
| Std dev. % Err at peak | 0.146 | 0.190 | 0.178 | 0.183 | 0.137 | 0.287 | 0.092 | 0.083 |

Table 11.

Percent error at peak between a microlensing photometric curve with the parallax value shown in the first row and the photometric curve with the parallax value in the first column, for R = 1 AU. Values in bold underline show where % error between the two curves is less than .

| | 0 | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| 0 | - | 12.7 | 27.2 | 43.7 | 18.7 | 276 | 217 | 167 |
| | 11.3 | - | 12.8 | 27.5 | 5.34 | 234 | 181 | 137 |
| | 21.4 | 11.4 | - | 13.0 | 6.65 | 196 | 149 | 110 |
| | 30.4 | 21.5 | 11.5 | - | 17.4 | 162 | 121 | 85.6 |
| | 15.8 | 5.07 | 7.12 | 21.0 | - | 217 | 167 | 125 |
| | 73.4 | 70.0 | 66.2 | 61.8 | 68.4 | - | 15.7 | 29.1 |
| | 68.5 | 64.4 | 59.9 | 54.7 | 62.5 | 18.6 | - | 15.9 |
| | 62.5 | 57.7 | 52.3 | 46.1 | 55.5 | 41.0 | 18.9 | - |

Table 12.

Time difference in days at peak between a microlensing photometric curve with the parallax value shown in the first row and the photometric curve with the parallax value in the first column, for R = 1 AU. Values in bold underline show where % error between the two curves is less than .

| | 0 | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| 0 | - | 25.9 | 51.9 | 77.8 | 88.2 | 62.3 | 36.3 | 10.4 |
| | 25.9 | - | 25.9 | 51.9 | 62.3 | 88.2 | 62.3 | 36.3 |
| | 51.9 | 25.9 | - | 25.9 | 36.3 | 114 | 88.2 | 62.3 |
| | 77.8 | 51.9 | 25.9 | - | 10.4 | 140 | 114 | 88.2 |
| | 88.2 | 62.3 | 36.3 | 10.4 | - | 151 | 125 | 98.6 |
| | 62.3 | 88.2 | 114 | 140 | 151 | - | 25.9 | 51.9 |
| | 36.3 | 62.3 | 88.2 | 114 | 125 | 25.9 | - | 25.9 |
| | 10.4 | 36.3 | 62.3 | 88.2 | 98.6 | 51.9 | 25.9 | - |

Table 13.

Comparison of FPGA modules for a traditional detector and a CS-based detector.

| | Traditional Detector | CS Detector |
|---|---|---|
| 1 | Data acquisition (ADC) interface | Data acquisition (ADC) interface |
| 2 | Data storage module | Data storage module |
| 3 | Data compression | Spatial modulation implementation |
| 4 | Data packetization and transmission | Data packetization and transmission |