Abstract

Impaired mobility caused by aging makes early detection and monitoring of gait parameters important for avoiding substantial medical costs later in life. For gait training and potential telemonitoring applications outside clinical settings, low-cost yet highly reliable gait analysis systems are needed. This research proposes using a single LiDAR system to perform automatic gait analysis with polynomial fitting. The experiment involved two walking speeds, fast and normal, along a 5 m straight path. Ten test subjects (mean age 28, SD 5.2) voluntarily participated in the study. We performed polynomial fitting to estimate the step length from the laser cloud points projected onto the heel as the subject walked forward, and compared the values with those obtained by visual inspection. The results showed that the visual inspection method is accurate to within 6 cm, while the polynomial method achieves 8 cm in the worst case (fast walking). With an accuracy difference of at most 2 cm, the polynomial method estimates heel location with a reliability comparable to that of observational gait analysis. The proposed method improves accuracy by 4 percentage points over a previously proposed dual-laser range sensor method, which reported 57.87 ± 10.48 cm, an error of 10%; our method reported ±0.0633 m, a 6% error, for normal walking.

1. Introduction

The physical mobility of an individual is one of the indicators of quality of life. A decline in mobility due to aging or other physical or mental disability has been linked to, among other things, a rise in chronic illness and mood changes [1]. Most research on mobility has been directed towards older adults, since loss of mobility becomes more prevalent with age. Physical mobility problems lead to dependence and costly healthcare [2,3]. It is therefore important to identify mobility problems as early as possible, so that remedial action can be taken in time. This is usually achieved using gait analysis.

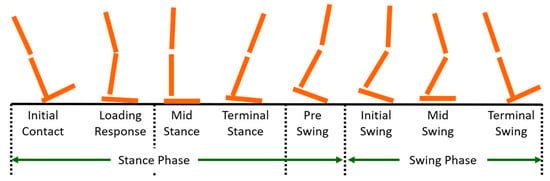

Gait describes an individual’s pattern of walking. A gait cycle can be divided into two phases, stance and swing, as shown in Figure 1. The stance phase comprises initial contact, loading response, mid stance, terminal stance, and pre-swing, while the swing phase consists of initial swing, mid swing, and terminal swing. The gait cycle starts when the heel of one foot contacts the ground and ends when the heel of the same foot contacts the ground again. By observing the gait cycle, parameters such as step time, swing time, step length and width, weight distribution, and cadence can be obtained and used in clinical analysis to identify and isolate gait disorders.

Figure 1.

Gait phases.

To identify gait deviations or to make a diagnosis for appropriate therapy, the spatiotemporal gait parameters must be acquired [4]. Stance time, swing time, and step length are among the most frequently studied parameters. Once the periods of foot contact with the ground (for example, single-leg support) are detected, the other spatiotemporal gait parameters can be readily derived.

According to the authors of [5], gait disorders and falls are largely underdiagnosed and often inadequately evaluated. The causes of gait disorders include neurological conditions, orthopedic problems, and other medical conditions. To evaluate gait disorders, gait-related neurological functions should be carefully observed and regularly analyzed from as early as possible. Axial motor symptoms are usually less well documented in medical reports than other parts of the neurological examination [5]. One of the commonly reported neurological gait disorders is freezing of gait (FoG), whose occurrence has proven challenging for clinicians to track [6,7]. If information on the development of the disorder can instead be obtained through self-report devices or clinician reports, therapy can be optimized accordingly, which has been shown to be beneficial.

There are two approaches to evaluating the presence of gait disorders: gait testing using appropriate devices, or response-based evaluation through patient questionnaires. Device-based gait analysis gives objective results that assist the clinician in making an accurate diagnosis. For example, maximal step length has been reported to predict fall risk and mobility performance in older adults, and it is easy to measure in clinical settings [8,9]. Older adults with a history of falls reportedly use stepping responses more frequently than non-fallers, and slowed voluntary stepping is observed particularly when they have to change leg or direction [10,11,12].

Given this, accurate diagnosis depends principally on the quality of gait parameter detection. Manufacturers have therefore focused on developing cost-effective gait measurement devices. In the 21st century, the democratization of healthcare and telemonitoring trends are driving the need for accessible or wearable measurement devices and approaches that exploit recent technological advances [13]. To this end, much research has focused on developing small, low-cost devices that measure gait parameters reliably and accurately [14,15,16,17].

Conventional gait analysis is performed using multi-camera systems or pressure-sensitive walkways [18,19,20,21]. These two systems are regarded as the gold standard for analyzing and validating spatiotemporal gait parameters. Although they offer the measurement accuracy that healthcare professionals need to evaluate gait quality, the high cost of the equipment is a limiting factor. To counter this problem, gait analysis systems that are versatile and usable outside clinical settings, while still measuring accurately, have been proposed in the literature.

The proposed measurement systems fall into two general categories: wearable and non-wearable. For wearable systems, the common approach is to use inertial measurement units (IMUs) equipped with gyroscopes and accelerometers that measure the accelerations and angles of the body parts to which they are attached, in this case the legs. Such systems have been used in sport science, rehabilitation, and pose tracking, among other areas [22,23]. Their biggest appeal is the ease of use that wearable units afford the user. However, as reported in various studies, IMU systems face several challenges. IMU data can be noisy and of low accuracy, and must be calibrated beforehand and pre-processed with a fusion algorithm [24]. Moreover, the relative orientation between the sensors and the subject’s body cannot be kept fixed across data acquisition sessions [25]. In a study by [26], the accuracy of IMUs in estimating 3D orientation was investigated using simple pendulum motion. The authors reported an RMS error of between 8.5° and 11.7° for the IMU raw data processed by the Kalman filter algorithm supplied by the vendor; with their own custom fusion algorithm, the RMS error was reduced to between 0.8° and 1.3°. Increasing the accuracy of wearable sensors thus calls for a fusion algorithm suited to the tracked physical activity and for multiple body-worn sensors, both of which can reduce ease of use. One solution to these limitations of body-worn sensors is a non-wearable system, which employs alternative sensing elements, such as cameras and lasers, for gait monitoring.

Among non-wearable systems, camera-based methods have been widely studied [27,28,29]. There are two camera-based approaches: marker-based and marker-less. A study by [30] indicated that marker-based systems, such as Vicon Nexus, offer more accurate results than marker-less systems. Vicon’s motion-capture systems use high-speed, high-resolution cameras positioned around the capture space to detect reflective markers attached to the body in real time. With its specialized software, Nexus can precisely determine where the cameras are positioned in space, so marker coordinates are computed quickly and with little effort. However, this system requires carefully configured cameras in a laboratory setting and sufficient markers on the subject. In addition, it requires more time, technical expertise, and equipment to assess the analysis outcomes [31]. Hence, there has been growing interest in developing marker-less technologies.

For marker-less systems, RGB-depth cameras that can extract kinematic information have been developed by Microsoft (Kinect) and Intel (RealSense), among others [32,33,34,35]. The advantages of this approach are the absence of markers, which maximizes the subject’s comfort and freedom of movement, and the reduced preparation time before the experiment. Furthermore, accuracy can be improved by extracting the kinematic information. Nevertheless, most currently available methods are still confined to laboratory settings and are limited by capture rate, capture volume, and uncontrolled lighting conditions [36,37]. In [38], the authors developed a 3D marker-less motion capture technique from camera images using OpenPose, a state-of-the-art machine-learning posture-tracking algorithm. Several other studies have adopted motion-capture technology based on deep-learning algorithms [39,40]. The current challenge for these and other strategies is to make detection systems applicable in large, unconstrained environments, such as outdoors or in welfare settings.

Besides camera systems, laser range scanners have in recent years attracted interest for gait analysis. This is because the cost of laser range scanners has decreased greatly, and real-time multi-line LiDAR (Light Detection and Ranging) sensors, such as the Velodyne HDL-64E [41], Puck, and SICK TiM7xx [42], provide three-dimensional range information to a target using multiple laser beams in real time. They also have a fairly long measurement range of over 25 m. However, this type of LiDAR is mainly used for person identification and activity recognition [43,44], and there have been few studies on detecting spatiotemporal gait parameters using Velodyne LiDAR alone.

A study on LiDAR-based analysis of spatiotemporal gait parameters was conducted by the authors of [45]. The study focused on step length measurement using dual laser range sensors. The authors reported that measurement with a laser range sensor is quick and easy to set up. Their method involved installing two laser range sensors at opposite ends of a 5 m walking path, with the objective of analyzing longer-range gait measurements from ten healthy participants. The authors performed walking tests at three speeds (slow, normal, and fast) and validated the proposed method against instrumented walkway measurements.

In this paper, we propose a measurement system that estimates the step length of the subject using a single LiDAR. The aim of the present study is to develop a single-LiDAR step length measurement system that can operate outside the laboratory and to validate its usability, building on the setup reported in [45]. Two-dimensional LiDAR data from the area below the ankle, recorded as the subjects walked forward, were used to evaluate walking experiments at two speeds, namely, normal and fast walking. Normal walking speed is defined as the participant’s preferred, comfortable walking speed. For fast walking, the participants were instructed to walk twice as fast as their preferred normal speed. We employed polynomial fitting to identify foot-off events and step lengths, used visual inspection to confirm the validity of the proposal, and compared the performance of the two approaches.

2. Materials and Methods

2.1. Experimental Setup

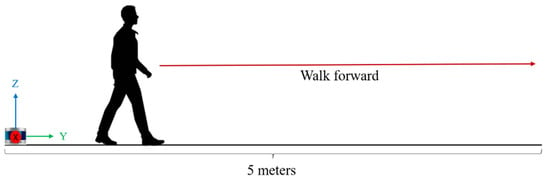

We conducted an experiment to characterize walking gait in an uncontrolled environment. The setup involved gathering 3D LiDAR data and analyzing them in MATLAB. For data acquisition, a personal computer was connected to the 3D LiDAR (Velodyne VLP-16) via an Ethernet cable. Participants were asked to walk along a straight path, as shown in Figure 2. The VLP-16 was placed on flat ground 1 m away from the subject. In total, the walking distance measured from the LiDAR was 5 m. Two walking speeds were tested: the first experiment was walking at normal speed, and the second at a faster speed. While the walking test was recorded with the 3D LiDAR, each participant was asked to stand still for 3 s before starting and after finishing the walk. The experiment was conducted in an office containing many objects, as will be discussed. We believe this is a fairly representative (cluttered) environment for data recording that simulates an outdoor environment.

Figure 2.

Walking experiment setup.

2.2. Apparatus

A Velodyne LiDAR, specifically the VLP-16, was used in this experiment to record the leg trajectories of the participants. The VLP-16 provides real-time 3D distance measurements and calibrated reflectivity measurements, and its small size makes it flexible to use in different environments. The sensor has a 360° horizontal field of view and a 30° (+15° to −15°) vertical field of view, with a rotation rate of 5–20 Hz.

The LiDAR’s application for real-time visualization and processing of sensor data is called VeloView [46]. It can play back pre-recorded data stored in pcap files as well as record live streams as pcap files. With this application, the user can display, select, and measure information about the cloud points captured by the sensor. For further analysis, MATLAB was used to calculate the step length automatically from the exported csv files, which contain the necessary parameters, such as the cloud point coordinates and distances in meters.

2.3. LiDAR Projection Analysis

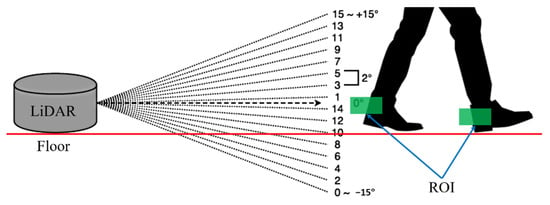

2.3.1. LiDAR Laser Channel Selection

Each laser channel of the LiDAR device consists of a 903 nm laser emitter and detector pair. There are 16 laser channels in total, as shown in Figure 3. Each channel, identified by a laser ID number, is fixed at a particular elevation angle relative to the horizontal plane of the sensor; for example, laser ID 15 has an elevation angle of 15°. To select the single channel that best fits the region of interest (ROI), namely the ankle area of the subject’s leg, laser ID 1, with a 1° elevation angle, was chosen, as shown.
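As a reference for this channel selection, the sketch below tabulates the VLP-16 elevation angles by laser ID as listed in Velodyne’s VLP-16 user manual (even IDs point downward, odd IDs upward, interleaved) and picks the channel closest to a target angle. The helper name `laser_id_for_elevation` is hypothetical and is not part of VeloView or the authors’ MATLAB code.

```python
# Elevation angle (degrees) of each VLP-16 laser, indexed by laser ID,
# as listed in the VLP-16 user manual: even IDs point down, odd IDs point up.
VLP16_ELEVATION_DEG = [-15, 1, -13, 3, -11, 5, -9, 7,
                       -7, 9, -5, 11, -3, 13, -1, 15]


def laser_id_for_elevation(target_deg: float) -> int:
    """Return the laser ID whose elevation angle is closest to target_deg."""
    return min(range(len(VLP16_ELEVATION_DEG)),
               key=lambda laser_id: abs(VLP16_ELEVATION_DEG[laser_id] - target_deg))


# Laser ID 1 is the channel closest to 1 degree.
print(laser_id_for_elevation(1.0))
```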

Figure 3.

Selected LiDAR laser ID in line with the region of interest of the subject’s ankle area.

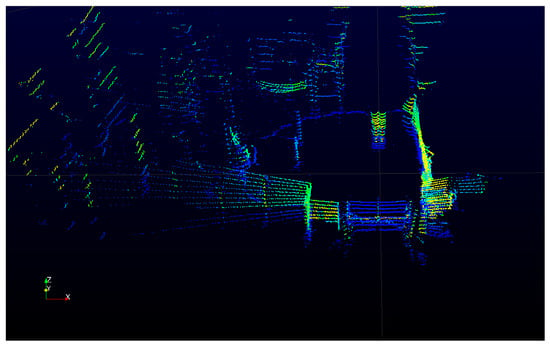

2.3.2. Cloud Point Data Extraction

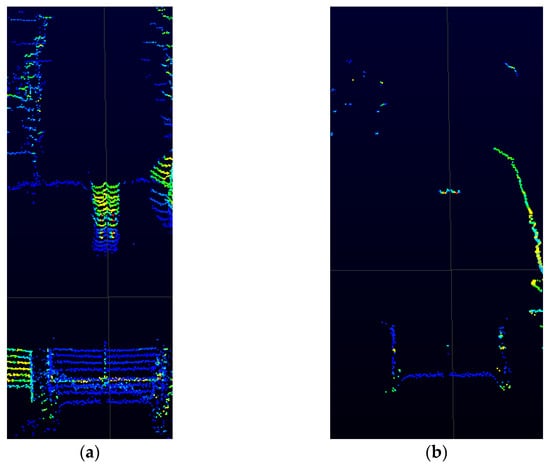

The cloud points captured by the LiDAR were viewed in the VeloView software, as shown in Figure 4. Environmental data showing the walls and other office structures were also captured. As a comparison of Figure 5a,b shows, selecting the laser ID with a 1° elevation angle gives a clearer view of the subject’s ankle, which matters because the aim is to capture only the laser returns from the ankle during walking. In VeloView, one rotation is referred to as a single “frame” of data, beginning and ending at approximately 0° azimuth. For the walking experiment, the rotation rate was set to 600 RPM for both walking speeds, which produces 10 frames per second. The recorded data for laser ID 1 only were then exported as XYZ data in csv format for further analysis.
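The channel filtering and frame timing described above can be sketched in Python, assuming the exported points have been gathered into one table with a frame index column. The column names (`frame`, `laser_id`, `x`, `y`, `z`) and the sample rows are hypothetical placeholders that would need to be matched to the actual export. The frame rate follows from the 600 RPM setting.

```python
import csv
import io
from collections import defaultdict

FRAMES_PER_SECOND = 600 / 60  # 600 RPM -> 10 rotations (frames) per second

# Hypothetical excerpt of an exported point table (illustration only).
SAMPLE_CSV = """frame,laser_id,x,y,z
0,1,0.10,1.52,-0.02
0,3,0.11,1.53,0.05
1,1,0.12,1.60,-0.02
1,1,-0.09,1.95,-0.02
"""


def load_laser_points(text, laser_id=1):
    """Group the (x, y) points of one laser channel by frame index."""
    frames = defaultdict(list)
    for row in csv.DictReader(io.StringIO(text)):
        if int(row["laser_id"]) == laser_id:
            frames[int(row["frame"])].append((float(row["x"]), float(row["y"])))
    return dict(frames)


def frame_time_s(frame_index):
    """Time of a frame relative to frame 0, in seconds."""
    return frame_index / FRAMES_PER_SECOND


points = load_laser_points(SAMPLE_CSV)
print(len(points[0]), len(points[1]))
print(frame_time_s(17))
```

At 10 frames per second, frame 17 lies 1.7 s after frame 0.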

Figure 4.

Live-captured 3D LiDAR data obtained from the VeloView software in an office setup.

Figure 5.

Zoomed view of the live-captured 3D LiDAR device: (a) walk path without prior ID selection (all firing laser channels); and (b) with only a laser ID selection of 1 (1° elevation angle).

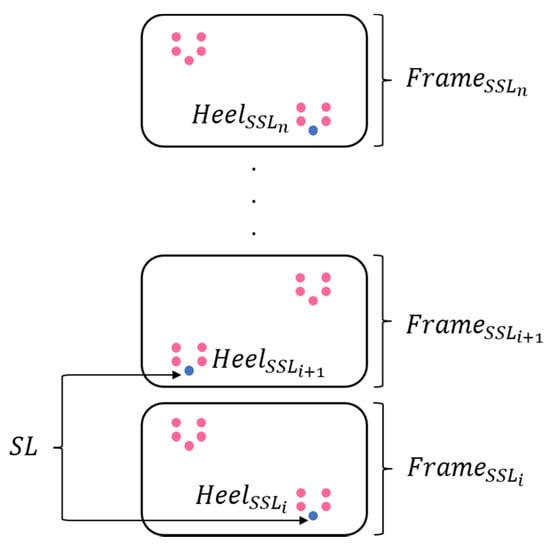

2.3.3. Single-Support Leg Identification

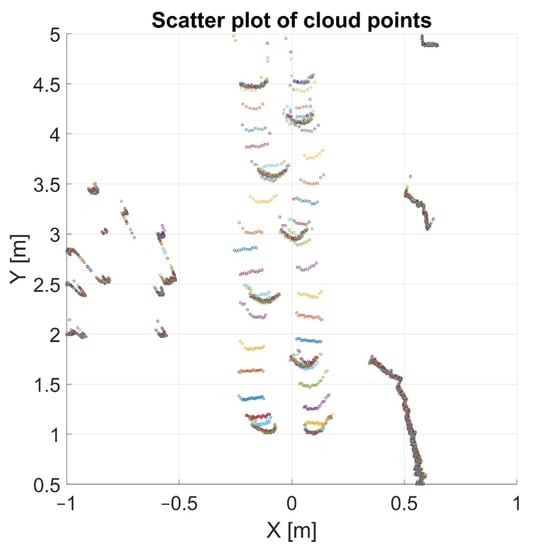

Single support is the period when only one leg is in stance while the opposite leg is swinging. In this experiment, the single-support leg is identified by a high concentration of cloud points, as shown in Figure 6. The points concentrate where the leg is in contact with the ground, while the opposite leg swings through the air.
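One way to locate these concentrations automatically is to pool the points of all frames into a 2D grid and keep the cells with many hits, since a stance foot returns points at the same place for several frames. This is a minimal sketch. The grid size and count threshold are hypothetical tuning values, not parameters from the study.

```python
import numpy as np


def dense_cells(x, y, cell_size=0.05, min_count=20):
    """Return the centers (x, y) of grid cells holding at least min_count points.

    A stance foot stays put for several frames, so its points pile up in the
    same cells, whereas a swinging foot spreads its points along the path.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # Number of bins per axis so that each cell is at most cell_size wide.
    nx = max(1, int(np.ceil((x.max() - x.min()) / cell_size)))
    ny = max(1, int(np.ceil((y.max() - y.min()) / cell_size)))
    counts, x_edges, y_edges = np.histogram2d(x, y, bins=[nx, ny])
    ix, iy = np.nonzero(counts >= min_count)
    x_centers = (x_edges[ix] + x_edges[ix + 1]) / 2
    y_centers = (y_edges[iy] + y_edges[iy + 1]) / 2
    return np.column_stack([x_centers, y_centers])
```

The returned cell centers approximate the single-support foot locations. Points from a swinging foot are spread along the path and rarely reach the threshold.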

Figure 6.

Scatter plot of the cloud points after frame extraction.

After frame extraction, unwanted cloud points remain, because the LiDAR device projects its laser over 360° of the environment. These outliers act as noise in the step length analysis. They can be removed by restricting the horizontal distance x and the vertical distance y in Figure 6 to specified ranges. For instance, in Figure 6, the outliers can be removed by excluding points outside the range .
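This range-based outlier removal amounts to a bounding-box filter. In this sketch the function name and the bounds in the usage line are placeholders. The ranges used in the study are not reproduced here.

```python
import numpy as np


def crop_to_walkway(points, x_range, y_range):
    """Keep the (x, y) points that lie inside the closed x and y ranges.

    points: array-like of shape (N, 2), coordinates in meters.
    x_range, y_range: (low, high) bounds of the region to keep.
    """
    pts = np.asarray(points, dtype=float).reshape(-1, 2)
    in_x = (pts[:, 0] >= x_range[0]) & (pts[:, 0] <= x_range[1])
    in_y = (pts[:, 1] >= y_range[0]) & (pts[:, 1] <= y_range[1])
    return pts[in_x & in_y]


# Placeholder bounds for illustration only; they are not the study's values.
kept = crop_to_walkway([[0.1, 1.5], [2.0, 1.5], [-0.2, 7.0]],
                       x_range=(-0.5, 0.5), y_range=(1.0, 6.0))
print(kept)
```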

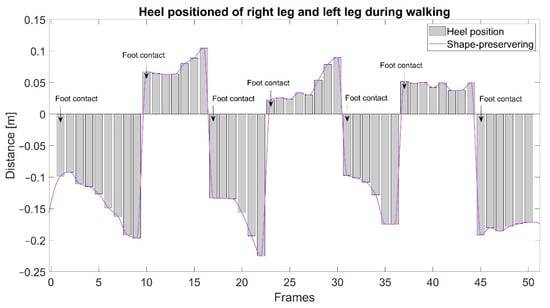

2.3.4. Heel Tracking from Laser Scans and Step Length Calculation

Figure 7 shows the recognition of the right and left heels from the raw cloud point data of the LiDAR device during walking. The frames represent the time course of the walk, and the distance (m) is the horizontal x-axis value obtained from the LiDAR device. Negative values correspond to the left leg and positive values to the right leg. Hence, when the heel values change from negative to positive, the tracked leg changes from left to right, and vice versa. The step length can then be computed as the distance between the initial-contact point of one foot and the initial-contact point of the opposite foot.
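The sign rule can be expressed as a segmentation of the per-frame heel x values into alternating left and right runs, from which the frame of each change of supporting leg can be read off. This is a sketch under the stated sign convention. Treating x = 0 as the right leg is an arbitrary assumption, and `leg_segments` is a hypothetical name.

```python
import numpy as np


def leg_segments(heel_x):
    """Split a per-frame heel x series into runs attributed to the same leg.

    Negative x is labeled 'L' and non-negative x 'R'. Returns a list of
    (leg, first_frame, last_frame) tuples in walking order.
    """
    heel_x = np.asarray(heel_x, dtype=float)
    labels = np.where(heel_x < 0, "L", "R")
    # Frames whose label differs from the previous frame start a new run.
    switches = np.flatnonzero(labels[1:] != labels[:-1]) + 1
    starts = np.concatenate(([0], switches))
    ends = np.concatenate((switches - 1, [len(heel_x) - 1]))
    return [(str(labels[s]), int(s), int(e)) for s, e in zip(starts, ends)]


print(leg_segments([-0.10, -0.12, 0.10, 0.11, 0.09, -0.10]))
```

Here the result is one left run (frames 0–1), one right run (frames 2–4), and a new left run starting at frame 5. The first frame of each run marks a change of supporting leg.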

Figure 7.

Heel estimation from both the right and left legs.

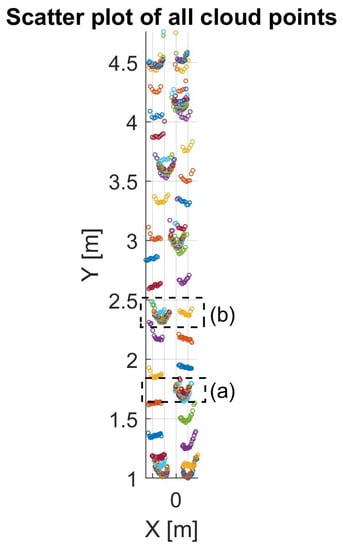

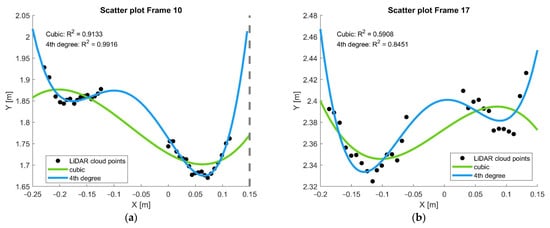

During walking, the laser projection from the LiDAR device onto the lower leg of the subject forms a rounded contour, which corresponds to the back of the subject’s heel, as shown in Figure 8. As the subject walks toward the end of the walkway, the clarity of the cloud points decreases. Therefore, to increase the accuracy of the heel estimation, we fitted a 4th-order polynomial to the cloud points of each LiDAR scanning frame, as shown in Figure 9a,b.
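A rough calculation shows why the heel contour thins out with distance. Neighboring returns from one laser are separated by a fixed azimuth step, so their spacing on the target grows linearly with range. The sketch assumes an azimuth step of about 0.2° at 600 RPM (Velodyne specifies 0.1° to 0.4° over the 5–20 Hz range) and a hypothetical 8 cm heel width.

```python
import math

# Assumption: about 0.2 degrees between successive firings at 600 RPM (10 Hz).
AZIMUTH_STEP_DEG = 0.2
HEEL_WIDTH_M = 0.08  # hypothetical heel width, for illustration only


def point_spacing_m(distance_m, step_deg=AZIMUTH_STEP_DEG):
    """Approximate gap between neighboring returns on a target at distance_m."""
    return distance_m * math.radians(step_deg)


def points_across(width_m, distance_m, step_deg=AZIMUTH_STEP_DEG):
    """Approximate number of returns falling across a target of width_m."""
    return width_m / point_spacing_m(distance_m, step_deg)


for d in (1.0, 3.0, 5.0):
    print(f"{d:.0f} m: spacing {point_spacing_m(d) * 100:.2f} cm, "
          f"about {points_across(HEEL_WIDTH_M, d):.0f} points across the heel")
```

Under these assumptions, the number of returns across the heel falls from roughly 23 at 1 m to about 5 at 5 m.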

Figure 8.

Scatter plot of all the cloud points during walking: (a) the identified single-support leg of the first step; (b) the identified single-support leg of the second step.

Figure 9.

Scatter plot of the extracted cloud points, fitted with 3rd-degree and 4th-degree polynomials, and the estimated heel position: (a) data extracted from Frame 10; (b) data extracted from Frame 17.

As shown in Figure 9, the R² values of the cubic (3rd-degree) polynomial are 0.9133 and 0.5908 for Frames 10 and 17, respectively, whereas those of the 4th-degree polynomial are 0.9916 and 0.8451. The R² values of the lower-order polynomials were unacceptable. Higher-order polynomials gave better R² values but distorted the U shape that represents the heel. Hence, the 4th-degree polynomial was selected, as it best retains the U shape. Equation (1) gives the 4th-degree polynomial:

$$ y = p_1 x^4 + p_2 x^3 + p_3 x^2 + p_4 x + p_5 \qquad (1) $$

where $p_1, \dots, p_5$ are the coefficients fitted to the $(x, y)$ cloud points of a single frame.
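The degree comparison can be reproduced with an ordinary least-squares polynomial fit and the coefficient of determination R². The authors used MATLAB. The NumPy sketch below is an equivalent formulation under that assumption, applied to a synthetic, noise-free contour rather than the study’s data.

```python
import numpy as np


def poly_r2(x, y, degree):
    """Least-squares polynomial fit of y on x; returns (coefficients, R^2)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    coeffs = np.polyfit(x, y, degree)          # highest power first
    residuals = y - np.polyval(coeffs, x)
    ss_res = np.sum(residuals ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return coeffs, 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Synthetic U-shaped contour (illustration only, not study data).
    xs = np.linspace(-0.04, 0.04, 15)
    ys = 1.5 + 40 * xs**2 + 5000 * xs**4
    for deg in (3, 4):
        _, r2 = poly_r2(xs, ys, deg)
        print(deg, round(r2, 4))
```

`np.polyfit` returns the coefficients highest power first, the same ordering as MATLAB’s `polyfit`.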

As shown in Figure 7, the frames that represent the single-support leg phase were identified. For each of these frames, we calculated the lowest local minimum of the fitted polynomial, which represents the heel position, as shown in Table 1.
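Finding the lowest local minimum of a fitted 4th-degree polynomial reduces to checking the real roots of its derivative. In this sketch, `coeffs` is assumed to be ordered highest power first (as returned by `np.polyfit`). The search is restricted to the x-range of the frame’s points, and the helper name is hypothetical.

```python
import numpy as np


def heel_from_quartic(coeffs, x_min, x_max):
    """Return (x, y) of the lowest local minimum of the polynomial in [x_min, x_max].

    Local minima are real roots of the first derivative where the second
    derivative is positive. Returns None if no such point lies in the range.
    """
    d1 = np.polyder(coeffs)
    d2 = np.polyder(coeffs, 2)
    roots = np.roots(d1)
    real_roots = roots[np.abs(roots.imag) < 1e-9].real
    candidates = [r for r in real_roots
                  if x_min <= r <= x_max and np.polyval(d2, r) > 0]
    if not candidates:
        return None
    x_heel = min(candidates, key=lambda r: np.polyval(coeffs, r))
    return float(x_heel), float(np.polyval(coeffs, x_heel))


# y = 40 (x - 0.01)^2 + 1.5, written as a quartic with zero leading terms.
print(heel_from_quartic([0.0, 0.0, 40.0, -0.8, 1.504], -0.05, 0.05))
```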

Table 1.

Tabulated two-dimensional sample array of heel positions.

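As shown in Figure 10, the average step length can be obtained by calculating the differences between adjacent rows of the heel-position array in Table 1 and averaging them. Equation (2) represents this operation:

$$ \overline{SL} = \frac{1}{N-1} \sum_{i=1}^{N-1} \left| y_{i+1} - y_i \right| \qquad (2) $$

where $y_i$ is the heel position in the $i$-th row of the array and $N$ is the number of rows.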
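Averaging the differences between adjacent rows of heel positions can be sketched as follows. The sketch assumes the heel positions are stored in walking order along the forward axis. The function name and the example values are hypothetical.

```python
import numpy as np


def mean_step_length(heel_y):
    """Mean and individual step lengths (m) from successive heel positions.

    heel_y holds the forward heel coordinate of each successive
    single-support phase (alternating legs), in walking order.
    """
    heel_y = np.asarray(heel_y, dtype=float)
    steps = np.abs(np.diff(heel_y))   # adjacent-row differences
    return float(steps.mean()), steps


mean_sl, steps = mean_step_length([1.00, 1.60, 2.25, 2.85])
print(mean_sl, steps)
```

For heel positions of 1.00, 1.60, 2.25, and 2.85 m, the individual steps are 0.60, 0.65, and 0.60 m, and the mean is about 0.617 m.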

Figure 10.

Determination of step length from the cloud points data.

2.4. Participants

Ten healthy volunteers participated in this experiment, as shown in Table 2. The participants were recruited from among the students of the university. They were free from any cardiovascular, neurological, or musculoskeletal disease and had no walking difficulties.

Table 2.

Number of participants with the corresponding standard deviation.

3. Results

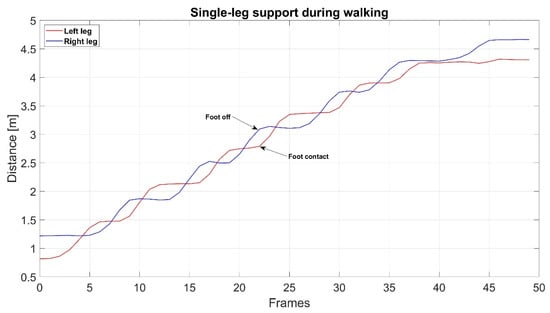

Figure 11 shows the time course of the foot-contact and foot-off positions calculated during walking. The frames on the y-axis represent time as recorded by the LiDAR sensor, and the distance on the x-axis gives the location of the single-support leg. When the right leg is in contact with the floor, the left leg must be in the air, and vice versa. Both the left-leg and right-leg data were calculated using the moving average over a sliding window of length 2, thus removing the DC offset.

Figure 11.

Foot position during walking.

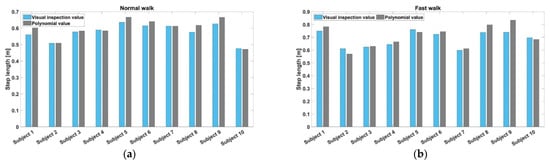

Figure 12a,b shows the average step length of each of the 10 participants in the fast walk and normal walk experiments, obtained with both the visual inspection and polynomial methods. Each participant tends to have an individual step length. This is expected, because the participants were instructed to walk at their own pace in both experiments.

Figure 12.

Experimental results obtained from both a fast and normal walking speed: (a) the differences in step length value between the visual inspection method and polynomial fitting curve method for normal walk; (b) the differences in step length value between the visual inspection method and polynomial fitting curve method for fast walk.

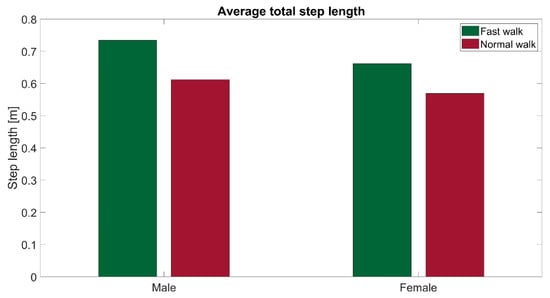

In Figure 13, the average male step length was 0.7439 m and 0.6292 m for fast and normal walking, respectively, an increase of 11.5 cm (about 18%) from normal to fast walking. The average female step length was 0.6545 m and 0.5856 m for fast and normal walking, respectively, an increase of 6.9 cm (about 12%). Other factors, such as aging or physical complications, might affect step length. Because the subjects in this study were healthy young adults, both sexes showed the pattern expected of young adults: step length increased with walking speed.
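The absolute and relative increases implied by these mean step lengths can be checked directly:

```python
def increase(normal_m, fast_m):
    """Absolute (m) and relative (%) step-length increase from normal to fast walking."""
    delta = fast_m - normal_m
    return delta, 100.0 * delta / normal_m


male = increase(0.6292, 0.7439)
female = increase(0.5856, 0.6545)
print(f"male: {male[0] * 100:.1f} cm ({male[1]:.1f} %)")
print(f"female: {female[0] * 100:.1f} cm ({female[1]:.1f} %)")
```

This gives about 11.5 cm (18.2%) for male participants and 6.9 cm (11.8%) for female participants, measured relative to the normal-walk step length.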

Figure 13.

Comparisons of step length during fast walking speed and normal walking speed between male participants and female participants.

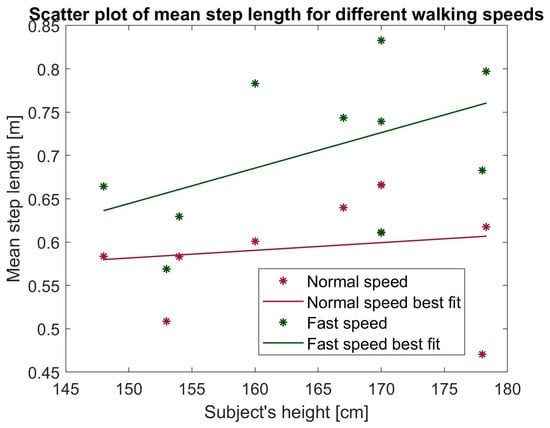

Figure 14 shows scatter plots of each participant’s height against their average step length for normal and fast walking, with a linear fit applied to the data. The correlation coefficient between mean step length and height is R = 0.1482 for normal walking and R = 0.4951 for fast walking.
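The correlation coefficient and linear fit can be computed as below. The inputs would be the participants’ heights and mean step lengths, which are not reproduced here. The function name is hypothetical.

```python
import numpy as np


def height_step_fit(height_m, step_m):
    """Pearson R between height and step length, plus the least-squares line.

    Returns (r, slope, intercept) for step = slope * height + intercept.
    """
    h = np.asarray(height_m, dtype=float)
    s = np.asarray(step_m, dtype=float)
    r = np.corrcoef(h, s)[0, 1]
    slope, intercept = np.polyfit(h, s, 1)
    return float(r), float(slope), float(intercept)
```

For a simple linear fit, R² equals r². R = 0.1482 and R = 0.4951 therefore correspond to height explaining roughly 2% and 25% of the variance in step length, respectively.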

Figure 14.

Correlation of subject’s height and step length for normal walking speed and fast walking speed.

Table 3 summarizes the step length for both normal and fast walking, obtained with visual inspection and with the 4th-degree polynomial fitting method. The step lengths from visual inspection agree with those of the proposed method. Taking the SD to represent the accuracy of the measurement, visual inspection is accurate to within 6 cm, while the polynomial method achieves 8 cm in fast walking.

Table 3.

The standard deviation (SD) of step length obtained with 4th-degree polynomial curve fitting and with visual detection.

4. Discussion

This paper proposes a step length measurement method using a single LiDAR, tested on healthy individuals. The aim is to validate the use of a single LiDAR to estimate the heel position accurately from the cloud points, using a polynomial method, during both normal and fast walking.

Figure 8 shows the cloud point data along the projected walkway. Although a clear U shape of the heel becomes harder to obtain as walking speed increases, polynomial curve fitting, as shown in Figure 9, improves the accuracy with which the heel location can be estimated from the cloud points. The performance is summarized in detail in Table 3.

Table 3 presents the step length measurements and standard deviations for normal and fast walking for all subjects, for both the visual inspection and polynomial methods. The average error in step length was ±0.0515 m and ±0.0625 m for normal and fast walking, respectively, while that of the visual inspection method was ±0.0633 m and ±0.0874 m, respectively. This translates to a 5–9 cm error in step length. The performance of the proposed method is therefore comparable to that of visual inspection, with an acceptable error.

With an accuracy difference of at most 2 cm, the polynomial method estimates heel location with a reliability comparable to that of observational gait analysis. Moreover, the polynomial method enables automated analysis of large datasets. Visual inspection by clinicians, in contrast, may suffer from inconsistencies or disagreements in descriptive terminology between clinicians [47].

There was no significant difference between the two methods (p = 0.5053). The interquartile ranges (IQRs) of the visual inspection and polynomial fitting methods were 0.0541 and 0.0564, respectively, for normal walking, and 0.1155 and 0.1533, respectively, for fast walking. Judging from these IQRs, the polynomial estimation method performs on par with expert inspection.
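The IQR is the spread between the 25th and 75th percentiles. A minimal NumPy sketch follows. NumPy’s default linear interpolation may give slightly different quartiles from MATLAB’s `iqr`, which uses a different percentile convention, so small discrepancies from the reported values could appear if the same data were reprocessed this way.

```python
import numpy as np


def iqr(values):
    """Interquartile range: 75th minus 25th percentile (NumPy linear method)."""
    q1, q3 = np.percentile(np.asarray(values, dtype=float), [25, 75])
    return float(q3 - q1)


print(iqr([1, 2, 3, 4, 5, 6, 7, 8, 9]))
```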

In comparison, the dual-laser range sensor method reported in [45] had an average error of 10%, or 10 cm. The current setup, though using a single LiDAR, achieved results comparable to those of the dual sensors. Taking [45] as the reference, the proposed method improved accuracy by 4 percentage points for normal walking. In addition, the proposed LiDAR-based approach achieved its aim of measuring step length unobtrusively.

Overall, polynomial curve fitting of the cloud points gave more promising results for individual subjects than visual inspection. Subject 9 had the highest standard deviation with visual inspection, higher than with the polynomial fitting approach. This is because the heel-shaped contour of the cloud points becomes incomplete as the subject walks faster and farther away from the device, which makes the heel position difficult to determine visually. The curve-fitting approach compensates for the incomplete heel contour by estimating the heel position from the fitted curve.

Figure 11 shows that polynomial estimation of the heel cloud points can detect foot contact and foot-off during walking for both the left and right legs. The shorter step length of females compared with males, seen in Figures 12 and 13, has also been reported in [48]. This difference may be related to differences in body proportions between men and women.

In Figure 14, the association between step length and subject height is very weak for normal walking and moderate for fast walking. Overall, there is little evidence of a significant relationship between height and step length. In adults, step length at normal walking speed has been reported to be unrelated to height but to differ significantly with lower-extremity length [49]. Assessments of step length variation could therefore include a measurement of the lower leg instead of the subject’s height.

The LiDAR device used here also has drawbacks for step length analysis. As shown in Figure 3, the selected beam is not exactly parallel to the floor, so its height changes as the distance increases. Each laser traces a hyperbola across a plane, with a slight affine distortion of each hyperbola due to the forward motion of the scanner [50]. In this paper, we used only the 1° laser for both experiments because it targets the chosen region of interest, the subject’s ankle. For this reason, the walking distance was limited to 5 m, which is sufficient to validate the use of LiDAR for step length measurement.
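The effect of the 1° elevation can be quantified. Relative to the sensor’s optical center, an upward-tilted beam rises by d·tan(1°) over a horizontal distance d. The sensor’s mounting height is not stated here, so the sketch reports only the rise.

```python
import math

ELEVATION_DEG = 1.0  # elevation angle of the selected laser (ID 1)


def beam_rise_m(horizontal_distance_m, elevation_deg=ELEVATION_DEG):
    """Height gained by the beam relative to the sensor over a horizontal distance."""
    return horizontal_distance_m * math.tan(math.radians(elevation_deg))


for d in (1.0, 3.0, 5.0):
    print(f"{d:.0f} m: beam is {beam_rise_m(d) * 100:.1f} cm above the sensor's optical center")
```

The rise is about 1.7 cm at 1 m and about 8.7 cm at 5 m. Over a longer walkway, the beam would therefore climb progressively above the ankle region.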

Limiting the gait parameters to step length reduces the otherwise large volume of cloud point data. Furthermore, the LiDAR provides not only the x, y, and z coordinates of the cloud points but also timing information, which could be used to obtain other gait parameters such as walking speed. This may be included in future work. Another limitation of this study is the small sample of test subjects, which consisted predominantly of healthy young participants. To evaluate the proposed method for detecting gait disorders in clinical gait analysis, elderly subjects will be included in future work.
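To illustrate how timing could yield further parameters, walking speed and cadence can be estimated from the frames and forward positions of successive initial contacts. This is a hypothetical sketch: the function name and inputs are assumptions. Frame-level timing at 10 Hz limits temporal resolution to 0.1 s, and per-point timestamps would allow finer estimates.

```python
import numpy as np

FRAME_RATE_HZ = 10.0  # 600 RPM, one frame per rotation


def speed_and_cadence(contact_frames, contact_y):
    """Mean walking speed (m/s) and cadence (steps/min) from successive contacts.

    contact_frames: frame index of each initial contact (hypothetical input).
    contact_y: forward heel position (m) at each of those contacts.
    """
    t = np.asarray(contact_frames, dtype=float) / FRAME_RATE_HZ
    y = np.asarray(contact_y, dtype=float)
    duration = t[-1] - t[0]
    speed = abs(y[-1] - y[0]) / duration
    cadence = 60.0 * (len(t) - 1) / duration
    return float(speed), float(cadence)


print(speed_and_cadence([0, 5, 10, 15], [1.0, 1.6, 2.2, 2.8]))
```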

5. Conclusions

In this paper, we have successfully developed a system for step length measurement using LiDAR. A Velodyne VLP-16 was used to capture walking movement, specifically the region below the subject’s ankle, and MATLAB algorithms performed the calculations that yielded acceptable step length estimates.

The experimental results indicate that step length can be calculated relatively accurately. With the aim of a convenient, low-cost measurement system, the experimental setup and results met the desired goals. Including various age groups, especially older people, in future studies would allow the comfort and usability of the system to be assessed more fully. In this study, all participants completed the walking experiment without difficulty or discomfort. Future research may include further automation of the measurement analysis and enhancement of the algorithm to increase measurement accuracy.

Author Contributions

Conceptualization, K.M., J.K.M. and N.B.H.K.; methodology, N.B.H.K. and J.K.M.; software, K.M.; validation, N.B.H.K.; formal analysis, N.B.H.K.; investigation, N.B.H.K.; resources, K.M. and N.B.H.K.; data curation, J.K.M. and N.B.H.K.; writing—original draft preparation, N.B.H.K.; writing—review and editing, J.K.M.; visualization, N.B.H.K. and J.K.M.; supervision, M.S., K.M. and S.M.N.A.S.; All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Gifu University Ethics Committee (approval number 27-226, 2021).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data sharing not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Marino, F.R.; Lessard, D.M.; Saczynski, J.S.; McManus, D.D.; Silverman-Lloyd, L.G.; Benson, C.M.; Blaha, M.J.; Waring, M.E. Gait Speed and Mood, Cognition, and Quality of Life in Older Adults with Atrial Fibrillation. J. Am. Heart Assoc. 2019, 8, e013212.

- Grady, A.; Klunk, W.J.; Jenson, J. Health Care Spending and the Aging of the Population. Available online: https://ecommons.cornell.edu/handle/1813/76246 (accessed on 5 February 2022).

- Selikson, S.; Damus, K.; Hamerman, D. Risk Factors Associated with Immobility. J. Am. Geriatr. Soc. 1988, 36, 707–712.

- Herssens, N.; Verbecque, E.; Hallemans, A.; Vereeck, L.; Van Rompaey, V.; Saeys, W. Do spatiotemporal parameters and gait variability differ across the lifespan of healthy adults? A systematic review. Gait Posture 2018, 64, 181–190.

- Rubenstein, L.Z.; Solomon, D.H.; Roth, C.P.; Young, R.T.; Shekelle, P.G.; Chang, J.T.; MacLean, C.H.; Kamberg, C.J.; Saliba, D.; Wenger, N.S. Detection and Management of Falls and Instability in Vulnerable Elders by Community Physicians. J. Am. Geriatr. Soc. 2004, 52, 1527–1531.

- Bloem, B.R.; Hausdorff, J.M.; Visser, J.E.; Giladi, N. Falls and freezing of gait in Parkinson’s disease: A review of two interconnected, episodic phenomena. Mov. Disord. 2004, 19, 871–884.

- Rodríguez-Martín, D.; Samà, A.; Pérez-López, C.; Català, A.; Arostegui, J.M.M.; Cabestany, J.; Bayés, À.; Alcaine, S.; Mestre, B.; Prats, A.; et al. Home detection of freezing of gait using support vector machines through a single waist-worn triaxial accelerometer. PLoS ONE 2017, 12, e0171764.

- The Usefulness of Maximal Step Length to Predict Annual Fall Risk. Available online: https://pesquisa.bvsalud.org/portal/resource/pt/wpr-167699 (accessed on 10 March 2022).

- Cho, B.-L.; Scarpace, D.; Alexander, N.B. Tests of Stepping as Indicators of Mobility, Balance, and Fall Risk in Balance-Impaired Older Adults. J. Am. Geriatr. Soc. 2004, 52, 1168–1173.

- Dite, W.; Temple, V.A. A Clinical Test of Stepping and Change of Direction to Identify Multiple Falling Older Adults. Available online: https://www.sciencedirect.com/science/article/pii/S0003999302002538 (accessed on 10 March 2022).

- Chandler, J.M.; Duncan, P.W.; Studenski, S.A. Balance Performance on the Postural Stress Test: Comparison of Young Adults, Healthy Elderly, and Fallers. Available online: https://academic.oup.com/ptj/article-abstract/70/7/410/2728657 (accessed on 10 March 2022).

- Lord, S.R.; Fitzpatrick, R.C. Choice Stepping Reaction Time: A Composite Measure of Falls Risk in Older People. Available online: https://academic.oup.com/biomedgerontology/article-abstract/56/10/M627/584900 (accessed on 10 March 2022).

- Christensen, J.K.B. The Emergence and Unfolding of Telemonitoring Practices in Different Healthcare Organizations. Int. J. Environ. Res. Public Health 2018, 15, 61.

- Qi, Y.; Soh, C.B.; Gunawan, E.; Low, K.-S.; Thomas, R. Estimation of Spatial-Temporal Gait Parameters Using a Low-Cost Ultrasonic Motion Analysis System. Sensors 2014, 14, 15434–15457.

- Latorre, J.; Llorens, R.; Colomer, C.; Alcañiz, M. Reliability and comparison of Kinect-based methods for estimating spatiotemporal gait parameters of healthy and post-stroke individuals. J. Biomech. 2018, 72, 268–273.

- Li, M.; Li, P.; Tian, S.; Tang, K.; Chen, X. Estimation of Temporal Gait Parameters Using a Human Body Electrostatic Sensing-Based Method. Sensors 2018, 18, 1737.

- Wang, F.; Stone, E.E.; Skubic, M.; Keller, J.M.; Abbott, C.; Rantz, M. Toward a Passive Low-Cost In-Home Gait Assessment System for Older Adults. IEEE J. Biomed. Health Inform. 2013, 17, 346–355.

- Chen, B.; Bates, B.T. Comparison of F-Scan in-sole and AMTI forceplate system in measuring vertical ground reaction force during gait. Physiother. Theory Pract. 2000, 16, 43–53.

- Patterson, M.R.; Johnston, W.; O’Mahony, N.; O’Mahony, S.; Nolan, E.; Caulfield, B. Validation of temporal gait metrics from three IMU locations to the gold standard force plate. In Proceedings of the 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 667–671.

- Kharazi, M.; Memari, A.; Shahrokhi, A.; Nabavi, H.; Khorami, S.; Rasooli, A.; Barnamei, H.; Jamshidian, A.; Mirbagheri, M. Validity of Microsoft Kinect™ for measuring gait parameters. In Proceedings of the 22nd Iranian Conference on Biomedical Engineering (ICBME), Tehran, Iran, 25–27 November 2015; pp. 375–379.

- Lamine, H.; Bennour, S.; Laribi, M.; Romdhane, L.; Zaghloul, S. Evaluation of Calibrated Kinect Gait Kinematics Using a Vicon Motion Capture System. Comput. Methods Biomech. Biomed. Eng. 2017, 20, S111–S112.

- Seel, T.; Raisch, J.; Schauer, T. IMU-Based Joint Angle Measurement for Gait Analysis. Sensors 2014, 14, 6891–6909.

- Gouwanda, D.; Senanayake, S.M.N.A. Emerging Trends of Body-Mounted Sensors in Sports and Human Gait Analysis. IFMBE Proc. 2008, 21, 715–718.

- Ikizoğlu, S.; Şahin, K.; Ataş, A.; Kara, E.; Çakar, T. IMU Acceleration Drift Compensation for Position Tracking in Ambulatory Gait Analysis. In Proceedings of the 14th International Conference on Informatics in Control, Automation and Robotics, Madrid, Spain, 26–28 July 2017; pp. 582–589.

- Sposaro, F.; Tyson, G. iFall: An Android Application for Fall Monitoring and Response. Available online: https://ieeexplore.ieee.org/abstract/document/5334912/ (accessed on 10 March 2022).

- Brodie, M.; Walmsley, A.; Page, W. Dynamic accuracy of inertial measurement units during simple pendulum motion. Comput. Methods Biomech. Biomed. Eng. 2008, 11, 235–242.

- Steinert, A.; Sattler, I.; Otte, K.; Röhling, H.; Mansow-Model, S.; Müller-Werdan, U. Using New Camera-Based Technologies for Gait Analysis in Older Adults in Comparison to the Established GAITRite System. Sensors 2019, 20, 125.

- Kluge, F.; Gaßner, H.; Hannink, J.; Pasluosta, C.; Klucken, J.; Eskofier, B.M. Towards Mobile Gait Analysis: Concurrent Validity and Test-Retest Reliability of an Inertial Measurement System for the Assessment of Spatio-Temporal Gait Parameters. Sensors 2017, 17, 1522.

- Cho, Y.-S.; Jang, S.-H.; Cho, J.-S.; Kim, M.-J.; Lee, H.D.; Lee, S.Y.; Moon, S.-B. Evaluation of Validity and Reliability of Inertial Measurement Unit-Based Gait Analysis Systems. Ann. Rehabil. Med. 2018, 42, 872–883.

- Carse, B.; Meadows, B.; Bowers, R.; Rowe, P. Affordable clinical gait analysis: An assessment of the marker tracking accuracy of a new low-cost optical 3D motion analysis system. Physiotherapy 2013, 99, 347–351.

- Holden, M.K.; Gill, K.M.; Magliozzi, M.R.; Nathan, J.; Piehl-Baker, L. Clinical Gait Assessment in the Neurologically Impaired: Reliability and Meaningfulness. Available online: https://academic.oup.com/ptj/article-abstract/64/1/35/2727634 (accessed on 8 February 2022).

- Gabel, M.; Gilad-Bachrach, R.; Renshaw, E.; Schuster, A. Full Body Gait Analysis with Kinect. In Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Diego, CA, USA, 28 August–1 September 2012.

- Springer, S.; Yogev Seligmann, G. Validity of the Kinect for Gait Assessment: A Focused Review. Sensors 2016, 16, 194.

- Mejia-Trujillo, J.D.; Castano-Pino, Y.J.; Navarro, A.; Arango-Paredes, J.D.; Rincon, D.; Valderrama, J.; Munoz, B.; Orozco, J.L. Kinect™ and Intel RealSense™ D435 comparison: A preliminary study for motion analysis. In Proceedings of the IEEE International Conference on E-health Networking, Application & Services (HealthCom), Bogotá, Colombia, 14–16 October 2019; pp. 1–4.

- Siena, F.L.; Byrom, B.; Watts, P.; Breedon, P. Utilising the Intel RealSense Camera for Measuring Health Outcomes in Clinical Research. J. Med. Syst. 2018, 42, 53.

- Clark, R.A.; Mentiplay, B.F.; Hough, E.; Pua, Y.H. Three-dimensional cameras and skeleton pose tracking for physical function assessment: A review of uses, validity, current developments and Kinect alternatives. Gait Posture 2019, 68, 193–200.

- Sarbolandi, H.; Lefloch, D.; Kolb, A. Kinect range sensing: Structured-light versus Time-of-Flight Kinect. Comput. Vis. Image Underst. 2015, 139, 1–20.

- Nakano, N.; Sakura, T.; Ueda, K.; Omura, L.; Kimura, A.; Iino, Y.; Fukashiro, S.; Yoshioka, S. Evaluation of 3D Markerless Motion Capture Accuracy Using OpenPose with Multiple Video Cameras. Front. Sports Act. Living 2020, 2, 50.

- Nath, T.; Mathis, A.; Chen, A.C.; Patel, A.; Bethge, M.; Mathis, M.W. Using DeepLabCut for 3D markerless pose estimation across species and behaviors. Nat. Protoc. 2019, 14, 2152–2176.

- Mathis, A.; Schneider, S.; Lauer, J.; Mathis, M.W. A Primer on Motion Capture with Deep Learning: Principles, Pitfalls, and Perspectives. Neuron 2020, 108, 44–65.

- Lidar: HDL-64E S3 User’s Manual and Programming Guide. Available online: https://scholar.google.com/scholar_lookup?title=HDL–64E+S3+Users’s+Manual+and+Programming+Guide&publication_year=2013& (accessed on 9 March 2022).

- 2D LiDAR Sensors|TiM7xx|SICK. Available online: https://www.sick.com/au/en/detection-and-ranging-solutions/2d-lidar-sensors/tim7xx/c/g501853 (accessed on 14 March 2022).

- Benedek, C.; Galai, B.; Nagy, B.; Janko, Z. Lidar-Based Gait Analysis and Activity Recognition in a 4D Surveillance System. IEEE Trans. Circuits Syst. Video Technol. 2016, 28, 101–113.

- Yamada, H.; Ahn, J.; Mozos, O.M.; Iwashita, Y.; Kurazume, R. Gait-based person identification using 3D LiDAR and long short-term memory deep networks. Adv. Robot. 2020, 34, 1201–1211.

- Iwai, M.; Koyama, S.; Tanabe, S.; Osawa, S.; Takeda, K.; Motoya, I.; Sakurai, H.; Kanada, Y.; Kawamura, N. The validity of spatiotemporal gait analysis using dual laser range sensors: A cross-sectional study. Arch. Physiother. 2019, 9, 3.

- VeloView|ParaView. Available online: https://www.paraview.org/veloview/ (accessed on 10 March 2022).

- Watelain, E.; Froger, J.; Rousseaux, M.; Lense, G.; Barbier, F.; Lepoutre, F.-X.; Thevenon, A. Variability of video-based clinical gait analysis in hemiplegia as performed by practitioners in diverse specialties. J. Rehabil. Med. 2005, 37, 317–324.

- Cho, S.; Park, J.; Kwon, O. Gender differences in three dimensional gait analysis data from 98 healthy Korean adults. Clin. Biomech. 2004, 19, 145–152.

- Scherer, S.A.; Bainbridge, J.; Hiatt, W.R.; Regensteiner, J.G. Gait characteristics of patients with claudication. Arch. Phys. Med. Rehabil. 1998, 79, 529–531.

- Lassiter, H.A.; Whitley, T.; Wilkinson, B.; Abd-Elrahman, A. Scan Pattern Characterization of Velodyne VLP-16 Lidar Sensor for UAS Laser Scanning. Sensors 2020, 20, 7351.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).