Language and Reasoning by Entropy Fractals

Abstract

1. Introduction

2. Background

2.1. Fractals and Language

- D stands for the fractal dimension

- N is the number of identical parts in the fractal

- L represents the total length of the fractal
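These quantities are usually combined through the similarity dimension of a strictly self-similar fractal. The sketch below uses the textbook form D = log N / log(1/r). Here r is the size of each of the N identical parts relative to the whole. It is introduced as an assumption and stands in for the length-based scale L listed above. Treat this as an illustration, not as the exact formula used in this work:

```python
import math

def similarity_dimension(n_parts: int, ratio: float) -> float:
    """Similarity dimension D = log(N) / log(1/r).

    n_parts: number of identical, self-similar parts (N).
    ratio:   hypothetical scaling ratio r of each part w.r.t. the whole (0 < r < 1).
    """
    if n_parts < 1 or not 0.0 < ratio < 1.0:
        raise ValueError("need N >= 1 and 0 < r < 1")
    return math.log(n_parts) / math.log(1.0 / ratio)

# Koch curve: 4 parts, each 1/3 the size of the whole.
print(round(similarity_dimension(4, 1 / 3), 4))  # 1.2619
# Sierpinski triangle: 3 parts, each 1/2 the size of the whole.
print(round(similarity_dimension(3, 1 / 2), 4))  # 1.585
```

A non-integer D, between the line (D = 1) and the plane (D = 2), is the property that makes the measure useful for describing irregular, self-similar structures.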

2.2. Zipf–Mandelbrot, Chance, and Prediction

- f is the frequency of the n-th word, with words ranked from the most frequent one

- a is a positive real number, usually greater than 1.1

- k is the rank of the data item

- q and s are parameters of the model
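With these symbols, the Zipf–Mandelbrot law in its usual normalized form gives the relative frequency of the item of rank k as f(k) = (k + q)^(−s) / Σ_{i=1..n} (i + q)^(−s). The classic Zipf law is the special case q = 0. The sketch below is a hedged illustration of that textbook form. The number of ranked items `n_items` and the restriction q ≥ 0 are assumptions of this sketch, not values fitted in this work:

```python
from typing import List

def zipf_mandelbrot(n_items: int, q: float, s: float) -> List[float]:
    """Normalized Zipf-Mandelbrot frequencies for ranks k = 1..n_items.

    f(k) = (k + q)^(-s) / sum_{i=1}^{n_items} (i + q)^(-s)
    q = 0 reduces to the classic Zipf law with exponent s.
    q >= 0 is required here only to keep the sketch simple.
    """
    if n_items < 1 or q < 0 or s <= 0:
        raise ValueError("need n_items >= 1, q >= 0, s > 0")
    weights = [(k + q) ** (-s) for k in range(1, n_items + 1)]
    total = sum(weights)  # generalized harmonic number used as normalizer
    return [w / total for w in weights]

# Classic Zipf (q = 0, s = 1) over two words: the top word is twice as frequent.
print(zipf_mandelbrot(2, 0.0, 1.0))
```

The shift q mainly flattens the head of the distribution (the most frequent ranks), while s controls how fast frequencies decay along the tail.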

2.3. Thermodynamics as a Law of Complex Processes

3. Materials and Methods

- The original limit of 20 questions was raised to 30.

- The first question was changed to a choice among animal, vegetable, and something else.

- When the ANN cannot guess the target word, it displays a candidate word.

- When the ANN guesses the word, it displays the answers that differ from the expected ones.

- The user can select extra answers besides yes and no, but these are not used in the texts.

- Questions generated by the AI may contain minor grammatical errors. These are ignored because the number and position of nouns and verbs are still correct.

- An Intel® Core™ i7-7600U CPU @ 2.80 GHz × 4 with 7.4 GiB RAM, a 256.1 GB hard disk, and 64-bit Linux Ubuntu 20.04.2 LTS.

- An Intel® Dual Core™ i7-7600U CPU @ 2.80 GHz × 2 with 8 GiB RAM, a 256 GB hard disk, and 64-bit Linux Ubuntu 20.04.2 LTS.

- Tag &V if there is an action (verb or verbal phrase).

- Tag &N if there is any type of nominal reference (noun or short noun phrase).

- Tag &A if there is any type of qualification, description, property, or characteristic.
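The three tagging rules can be sketched as a lookup of each word in a part-of-speech lexicon. This is a hedged illustration only. The tiny `LEXICON` dictionary and the `tag_sentence` function are hypothetical, and the tagging procedure actually applied to the game texts may differ:

```python
from typing import Dict, List

# Hypothetical toy lexicon mapping lowercase words to a word class;
# a real setup would rely on a dictionary or a part-of-speech tagger.
LEXICON: Dict[str, str] = {
    "is": "V", "does": "V", "fly": "V", "eat": "V",
    "animal": "N", "food": "N", "house": "N",
    "big": "A", "red": "A", "edible": "A",
}

TAG_FOR_CLASS = {"V": "&V", "N": "&N", "A": "&A"}

def tag_sentence(sentence: str) -> List[str]:
    """Return the &V/&N/&A tags for the words of one question, in order."""
    tags = []
    for raw in sentence.lower().split():
        word = raw.strip("?.,!")           # drop surrounding punctuation
        word_class = LEXICON.get(word)     # None for words outside the lexicon
        if word_class is not None:
            tags.append(TAG_FOR_CLASS[word_class])
    return tags

print(tag_sentence("Is it a big animal?"))  # ['&V', '&A', '&N']
```

Words that carry none of the three roles (articles, pronouns) produce no tag, so the output keeps only the order and number of actions, nominal references, and qualifiers.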

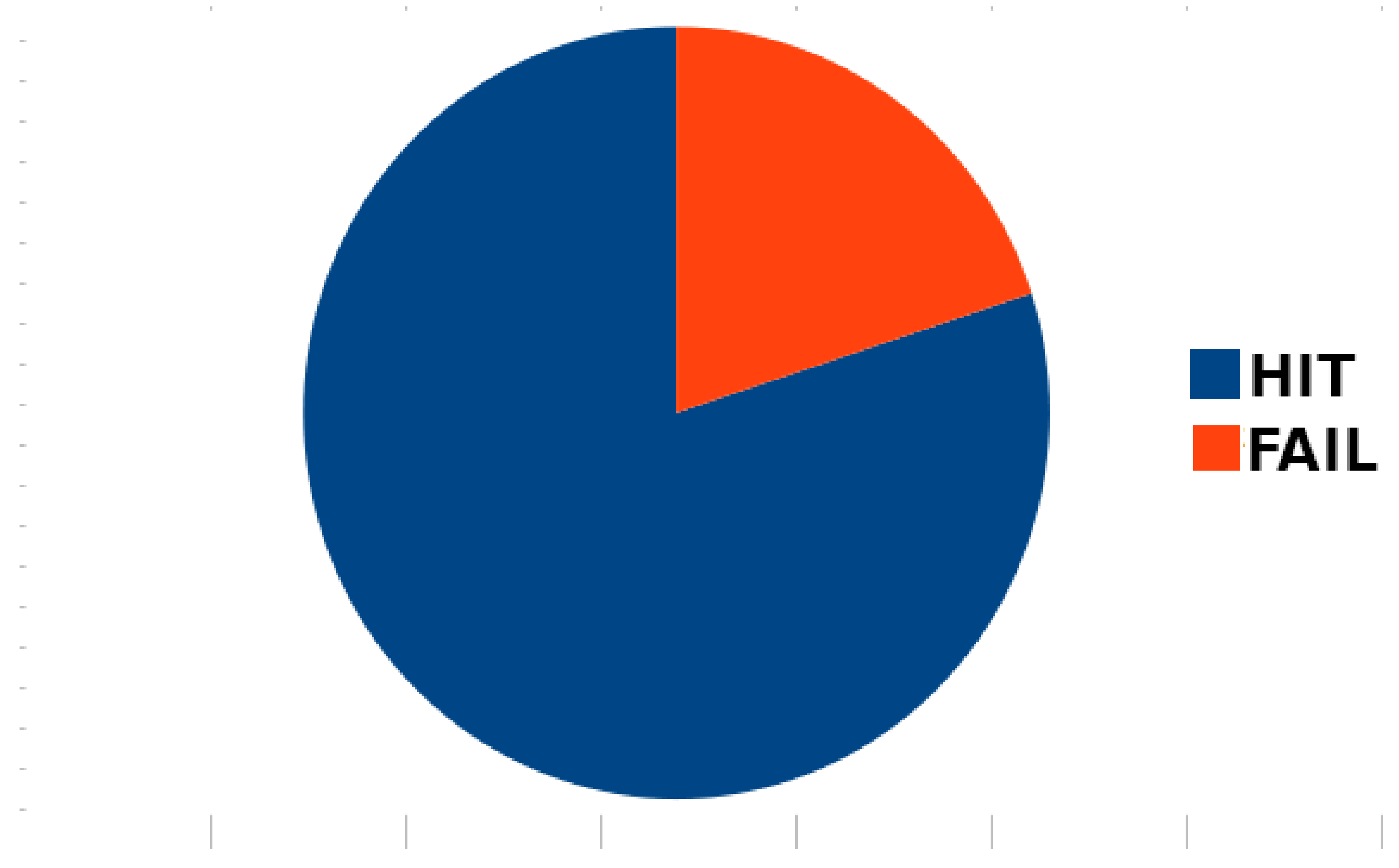

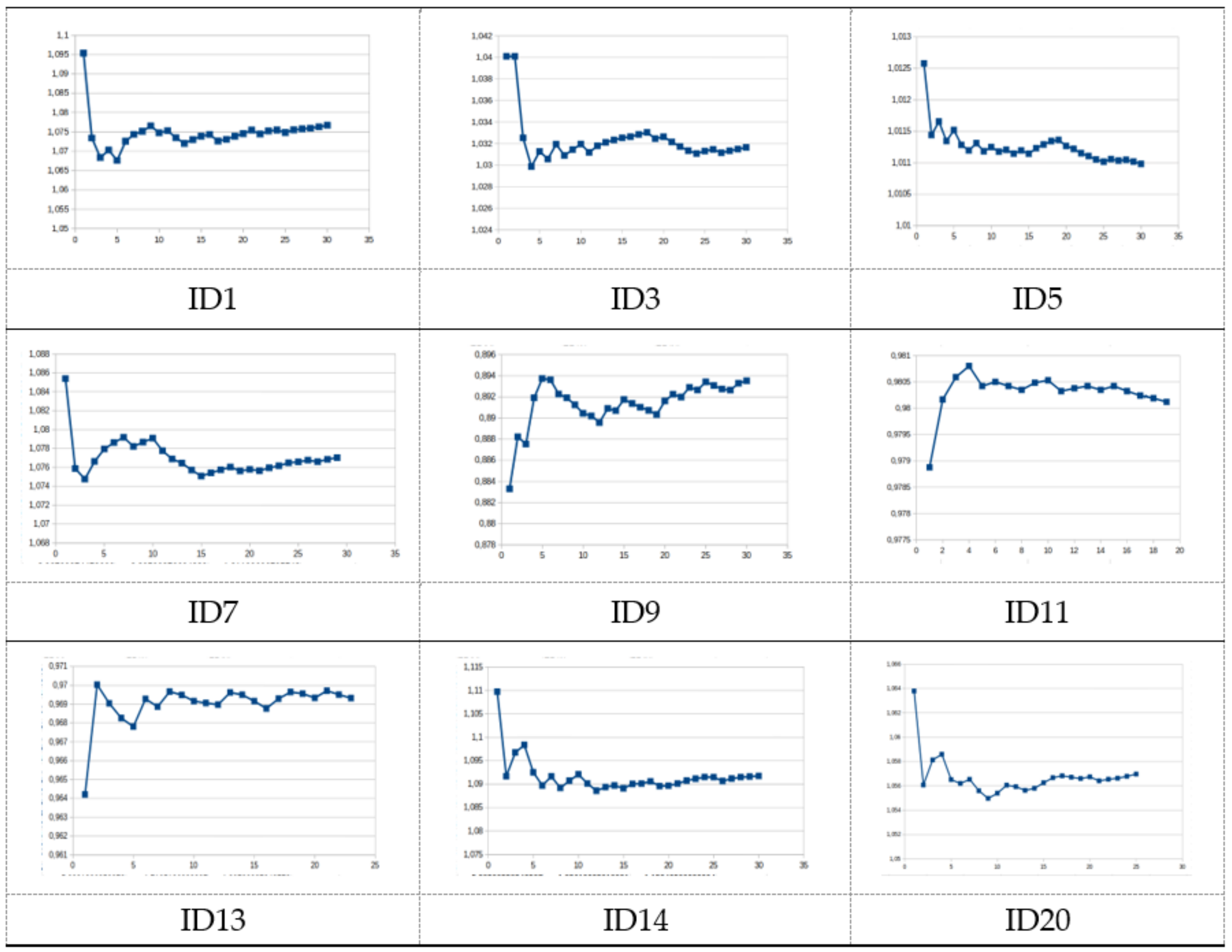

4. Tests and Results

- Player 1 thinks of a word and keeps it secret.

- Player 2 asks up to 20 yes/no questions to guess the word.

- Player 1 answers the questions.

- At any time, player 2 can try a word.

- Player 2 wins if they guess the secret word.

- Player 1 wins if player 2 has asked 20 questions without getting the correct word.
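The game rules can be simulated with a small yes/no elimination loop. This is a hedged sketch, not the 20Q neural network used in this work. The knowledge base (each word mapped to the set of attributes that get a "yes"), the question list, and `play_20q` are all hypothetical. In this sketch, player 2 tries a word only once a single candidate remains:

```python
from typing import Dict, List, Set

def play_20q(secret: str, knowledge: Dict[str, Set[str]],
             questions: List[str], limit: int = 20) -> bool:
    """Return True if player 2 identifies `secret` within `limit` questions.

    knowledge: hypothetical map from each candidate word to the set of
               attributes for which player 1 would answer "yes".
    questions: attributes asked by player 2, in order.
    """
    candidates = set(knowledge)
    for asked, question in enumerate(questions, start=1):
        if asked > limit:
            break                               # question budget exhausted
        answer = question in knowledge[secret]  # player 1 answers yes/no
        # Keep only the words consistent with the answer received.
        candidates = {w for w in candidates
                      if (question in knowledge[w]) == answer}
        if len(candidates) == 1:
            # Player 2 tries the only remaining word.
            return candidates.pop() == secret
    return False                                # player 1 wins

knowledge = {
    "cat": {"animal", "pet"},
    "dog": {"animal", "pet", "barks"},
    "oak": {"vegetable", "tall"},
}
print(play_20q("dog", knowledge, ["animal", "barks"]))  # True
```

Passing `limit=30` mirrors the extended question limit described in the Materials and Methods section.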

4.1. Rule R1

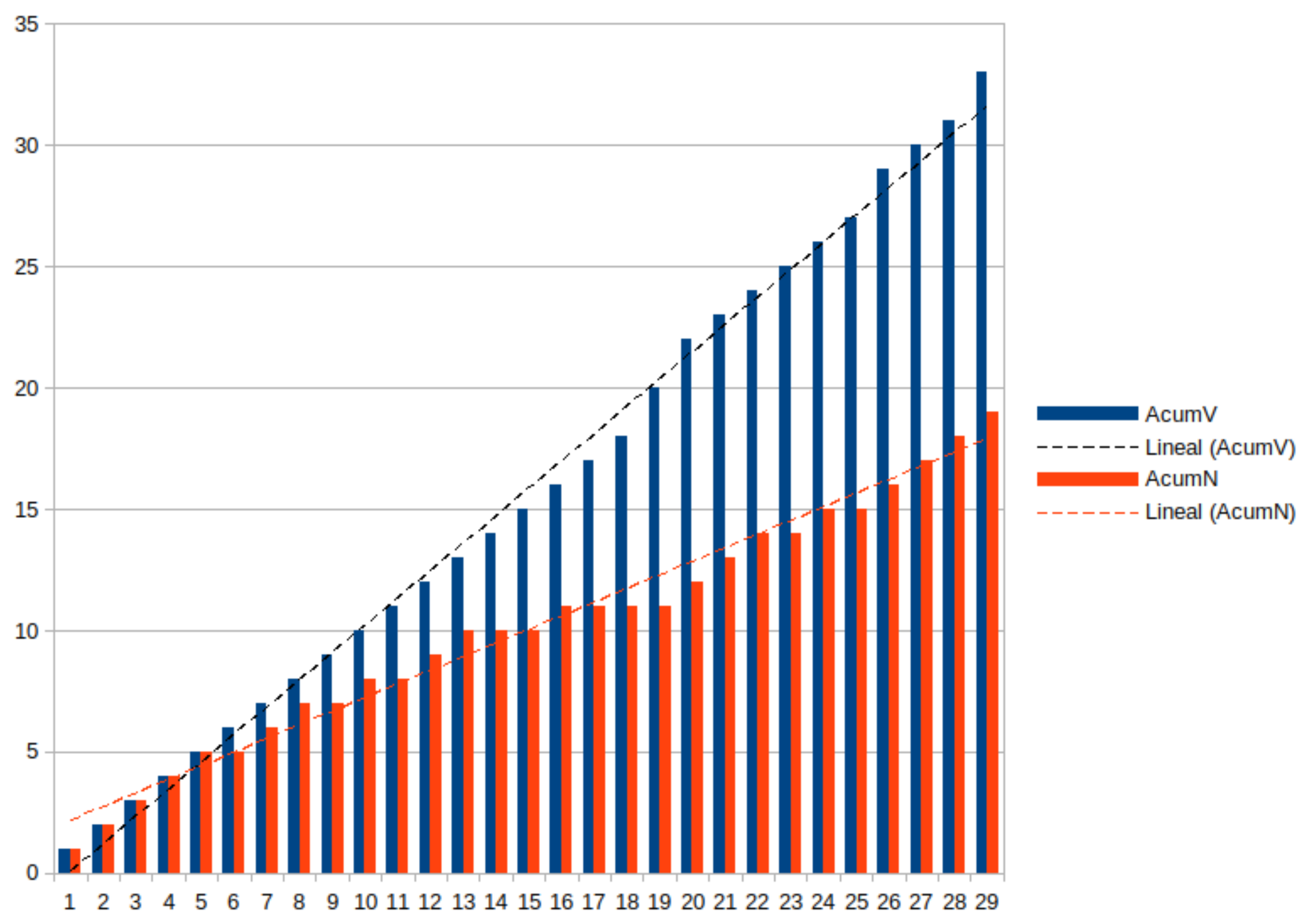

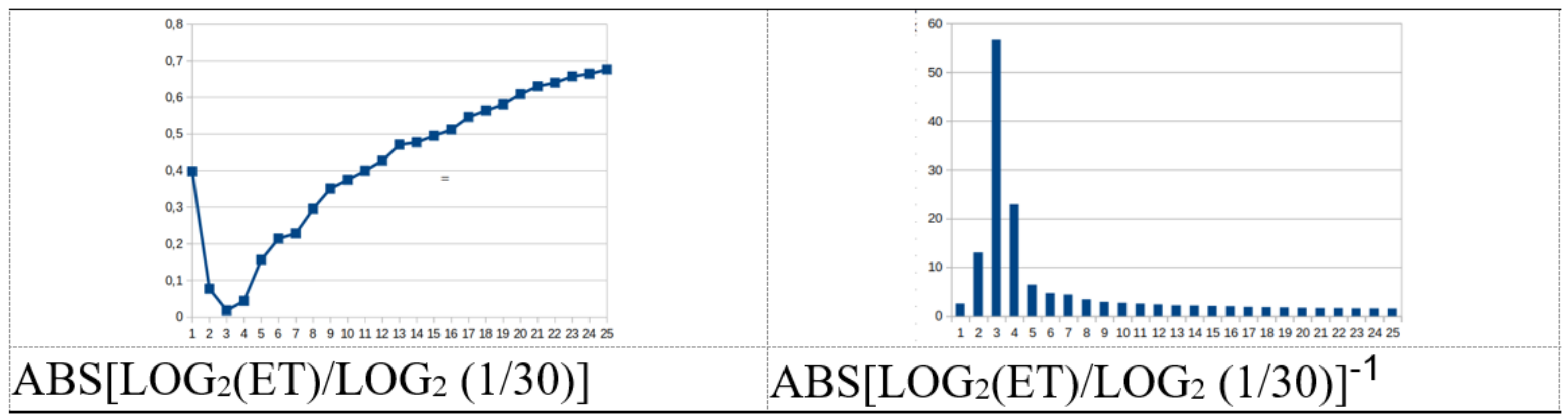

4.2. Rule R2

- s: number of verbs (V), nouns (N), or descriptive words (A).

- T: total number of words.

- N: number of partitions.

- change rate.
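One possible way to combine these quantities is sketched below. It rests on two assumptions that the text above does not state. First, the entropy H of each of the N partitions is taken to be the Shannon entropy of the proportions s/T of the &V, &N, and &A tags. Second, the change rate is taken to be the difference in H between consecutive partitions. The function names are hypothetical:

```python
import math
from collections import Counter
from typing import List, Sequence

def partition_entropies(tags: Sequence[str], n_partitions: int) -> List[float]:
    """Shannon entropy (bits) of the tag proportions s/T in each partition.

    The tag sequence is cut into consecutive chunks of ceil(len/n_partitions)
    tags, so the last chunk may be shorter (or fewer chunks may result).
    """
    if not tags or n_partitions < 1:
        return []
    size = math.ceil(len(tags) / n_partitions)
    entropies = []
    for start in range(0, len(tags), size):
        chunk = tags[start:start + size]
        total = len(chunk)                 # T: words in this partition
        counts = Counter(chunk)            # s: count per class (&V, &N, &A)
        h = -sum((s / total) * math.log2(s / total) for s in counts.values())
        entropies.append(abs(h))           # abs() turns -0.0 into 0.0
    return entropies

def change_rates(values: Sequence[float]) -> List[float]:
    """Difference between consecutive values (one possible 'change rate')."""
    return [b - a for a, b in zip(values, values[1:])]

tags = ["&V", "&N", "&V", "&V", "&N", "&A"]
h = partition_entropies(tags, 2)
print(h, change_rates(h))
```

A partition that uses a single word class has H = 0, and one with all three classes equally represented reaches the maximum log2(3) ≈ 1.585 bits.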

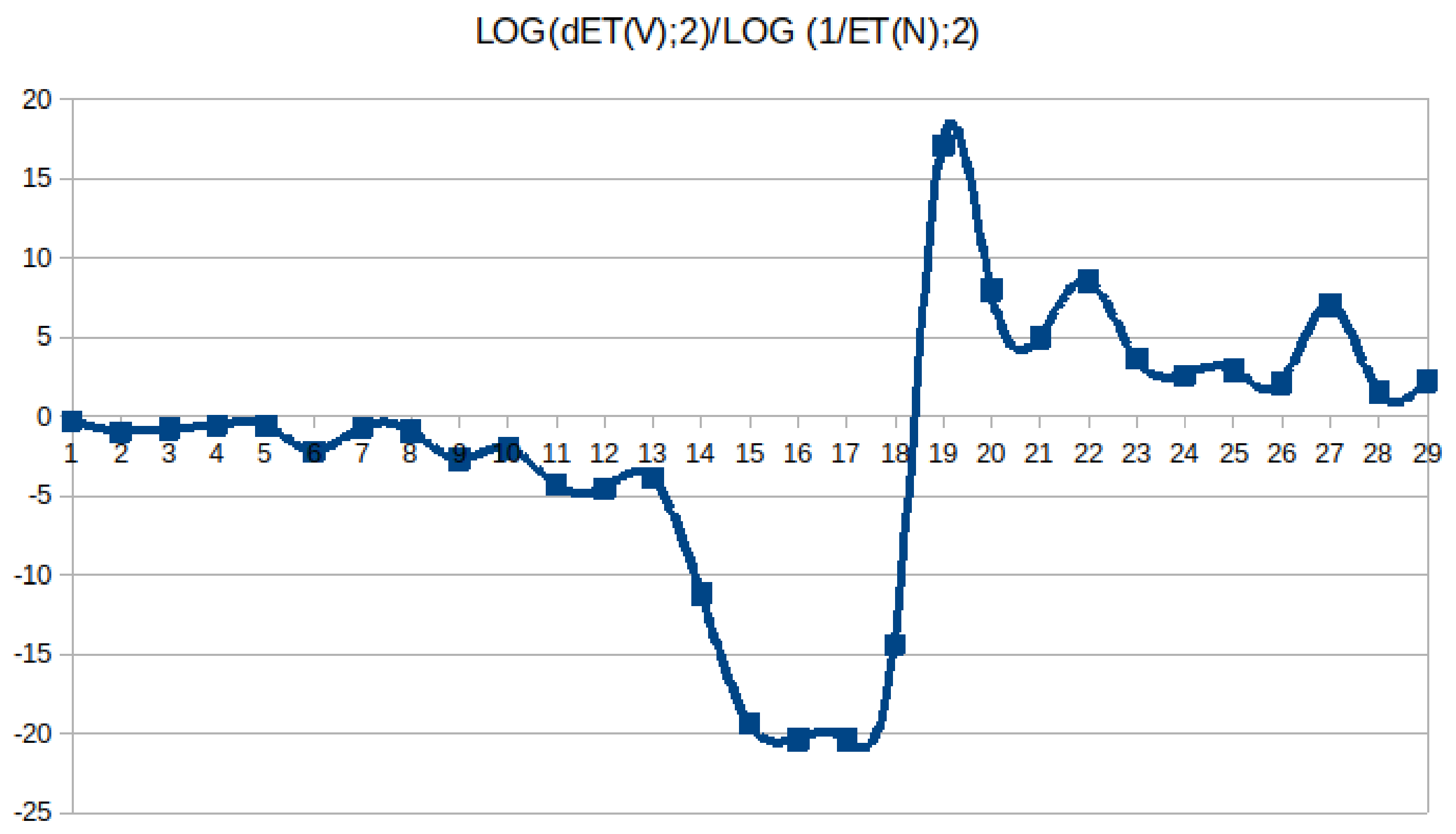

4.3. Rule R3

- Language and its communication process imply a tuple (space, t) and involve a type of movement.

- There is always a cause and an effect.

- Vq: number of verbs in sentence q.

- VNA: total number of verbs, nouns, and qualifiers in the game.

- Vq: number of verbs in sentence q.

- VN: total number of verbs and nouns in the game.
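The sketch below assumes that these quantities enter Rule R3 as simple per-sentence ratios Vq/VNA and Vq/VN. This is an assumption, because the exact expressions are not reproduced in this text. Under it, the ratios can be computed from tagged sentences as follows. The function name and the input format (one list of &V/&N/&A tags per sentence) are hypothetical:

```python
from typing import Dict, List, Sequence

def verb_ratios(sentences: Sequence[Sequence[str]]) -> Dict[str, List[float]]:
    """Per-sentence ratios Vq/VNA and Vq/VN over one whole game.

    sentences: one list of &V/&N/&A tags per sentence (hypothetical format).
    """
    all_tags = [tag for sentence in sentences for tag in sentence]
    vna = sum(tag in ("&V", "&N", "&A") for tag in all_tags)  # VNA
    vn = sum(tag in ("&V", "&N") for tag in all_tags)         # VN
    ratios: Dict[str, List[float]] = {"Vq/VNA": [], "Vq/VN": []}
    for sentence in sentences:
        vq = sum(tag == "&V" for tag in sentence)              # Vq
        ratios["Vq/VNA"].append(vq / vna if vna else 0.0)
        ratios["Vq/VN"].append(vq / vn if vn else 0.0)
    return ratios

game = [["&V", "&N"], ["&V", "&A", "&N"]]
print(verb_ratios(game))  # {'Vq/VNA': [0.2, 0.2], 'Vq/VN': [0.25, 0.25]}
```

Because both denominators are game-level totals, the two series differ only by a constant factor. Any variation along the sequence of sentences therefore comes from Vq alone.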

5. Discussion

- Global entropy does not change (equilibrium).

- All the entropy remains within the system.

- No entropy is transferred out of the system; instead, it is held as local entropy.

- Time t ∈ [−∞, +∞] holds the entire activity (sequence of sentences) within an interval [t1; t2].

- No changes occur outside [t1; t2].

- H presents breakouts.

- H is cyclical.

- Language relates to a tuple (space, t) ⇒ it involves movement.

- There is causality: cause and effect.

- D evolves with t ⇒ D(t + 1) depends on D(t).

6. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


| Statistic | #Words | #Sentences | #Nouns | #Verbs |
|---|---|---|---|---|
| AVERAGE | 178.00 | 50.00 | 15.00 | 31.00 |
| SD | 37.95 | 9.72 | 4.06 | 6.84 |
| MIN | 235.00 | 60.00 | 23.00 | 40.00 |
| MAX | 178.00 | 50.00 | 15.00 | 31.00 |

| Rule | Language Features | Name | Characteristics |
|---|---|---|---|
| R1 | A communication C succeeds if it is composed of sentences able to transfer entropy properly | Rule of the Main Behavior | 1. Global entropy does not change (equilibrium); 2. All the entropy remains within the system; 3. No entropy is transferred out of the system; instead, it is held as local entropy |
| R2 | There is a complement between V and N: although not perfectly, they balance each other, and H has a certain rhythm and cycle | Dimension and Rhythm | 1. Time t ∈ [−∞, +∞] holds the entire activity (sequence of sentences) within an interval [t1; t2]; 2. No changes occur outside [t1; t2]; 3. H presents breakouts; 4. H is cyclical |
| R3 | Any communication C is an activity with a triplet (O, E, C) composed of a cause, an effect, and an evolution in t | Triplet activity | 1. Language relates to a tuple (space, t) ⇒ it involves movement; 2. Causality: cause and effect; 3. D evolves with t ⇒ D(t + 1) depends on D(t) |

| Cluster | %q | CantV (verbs) | CantN (nouns) |
|---|---|---|---|
| 0 | 35% | 1 | 1 |
| 1 | 13% | 1 | 0.1 |
| 2 | 5% | 2 | 1.0 |
| 3 | 47% | 2 | 0.2 |
| 4 | 1% | 1 | 1.7 |

| Succeed | MIN | MAX | AVG | DEV | INTERVAL |
|---|---|---|---|---|---|
| Y | 6.06 | 10.64 | 8.24 | 1.44 | [6.79, 9.68] |
| C | 6.77 | 13.23 | 9.40 | 2.61 | [6.80, 9.40] |
| N | 9.83 | 10.77 | 10.30 | 0.40 | [9.91, 10.30] |

| Test | E[1−k] | ɸE[1−k] − 1 |
|---|---|---|
| T05 | 0.61 | 1.61 |
| T07 | 0.19 | 1.19 |
| T12 | 0.17 | 1.17 |
| T10 | 0.21 | 1.21 |
| T19 | 0.15 | 1.15 |
| T20 | 0.20 | 1.20 |

| Test | E[1−k] | ɸE[1−k] − 1 |
|---|---|---|
| T01 | 0.14 | 1.15 |
| T02 | 0.25 | 1.22 |
| T03 | 0.21 | 1.23 |
| T04 | 0.21 | 1.20 |


© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


López De Luise, D. Language and Reasoning by Entropy Fractals. Signals 2021, 2, 754-770. https://doi.org/10.3390/signals2040044
