1. Introduction

Image denoising methods that estimate a noiseless, clean natural image (the original image) from a noisy observation are being actively studied [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21]. Many previous works have assumed that the noise in such an observation is additive white Gaussian noise (AWGN). One popular approach to image denoising is to exploit nonlocal self-similarity (NLSS), which assumes that a local segment of an image (a patch) is similar to other local patches [10,11,12,13,14,15,16,17,18,19,20,21].

Weighted nuclear norm minimization for image denoising (WNNM) [16] is an optimization-based method built on an NLSS-based objective function. WNNM assumes that a matrix whose columns consist of similar patches extracted from a clean image is low rank, and it achieves state-of-the-art denoising performance among non-learning-based methods.

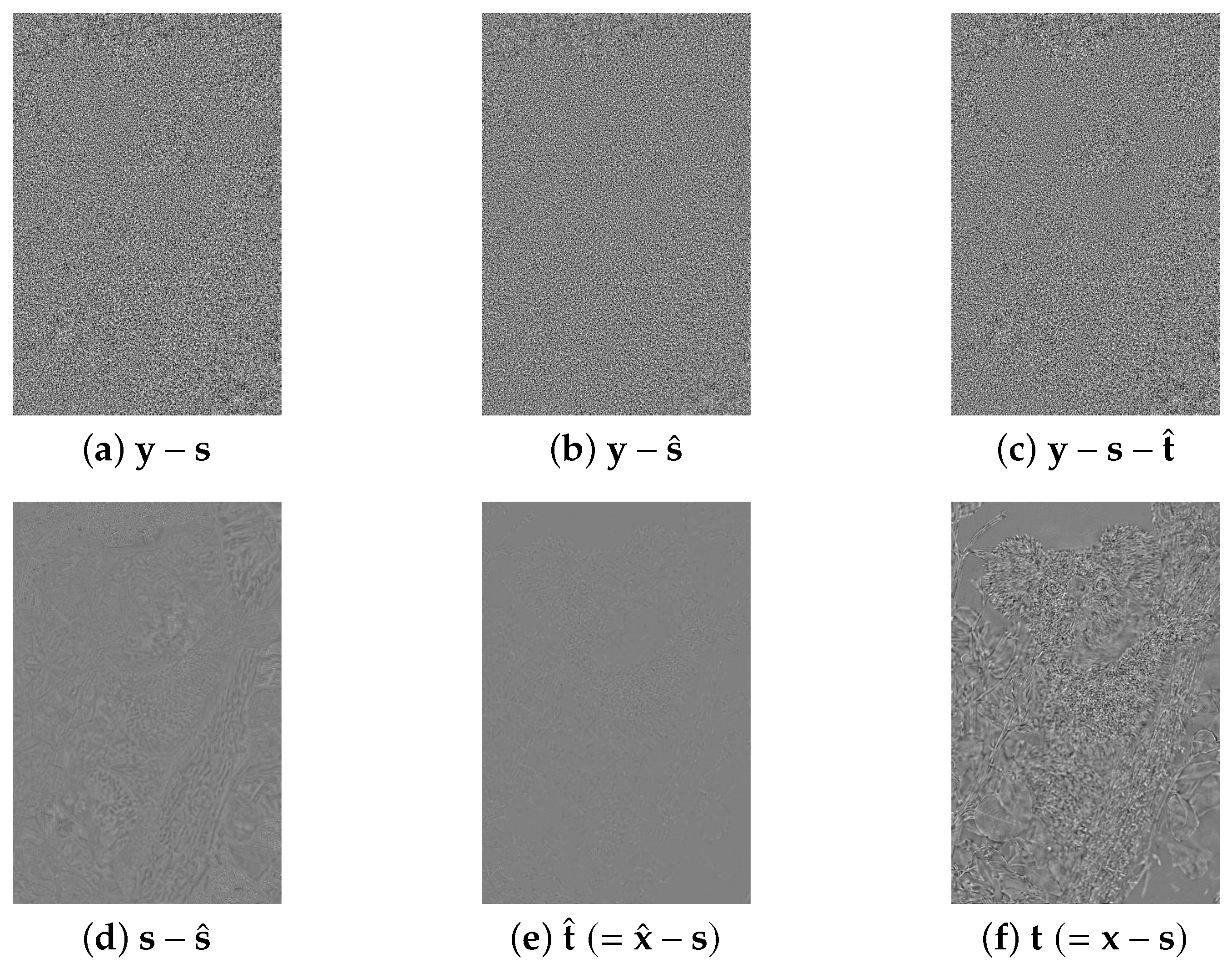

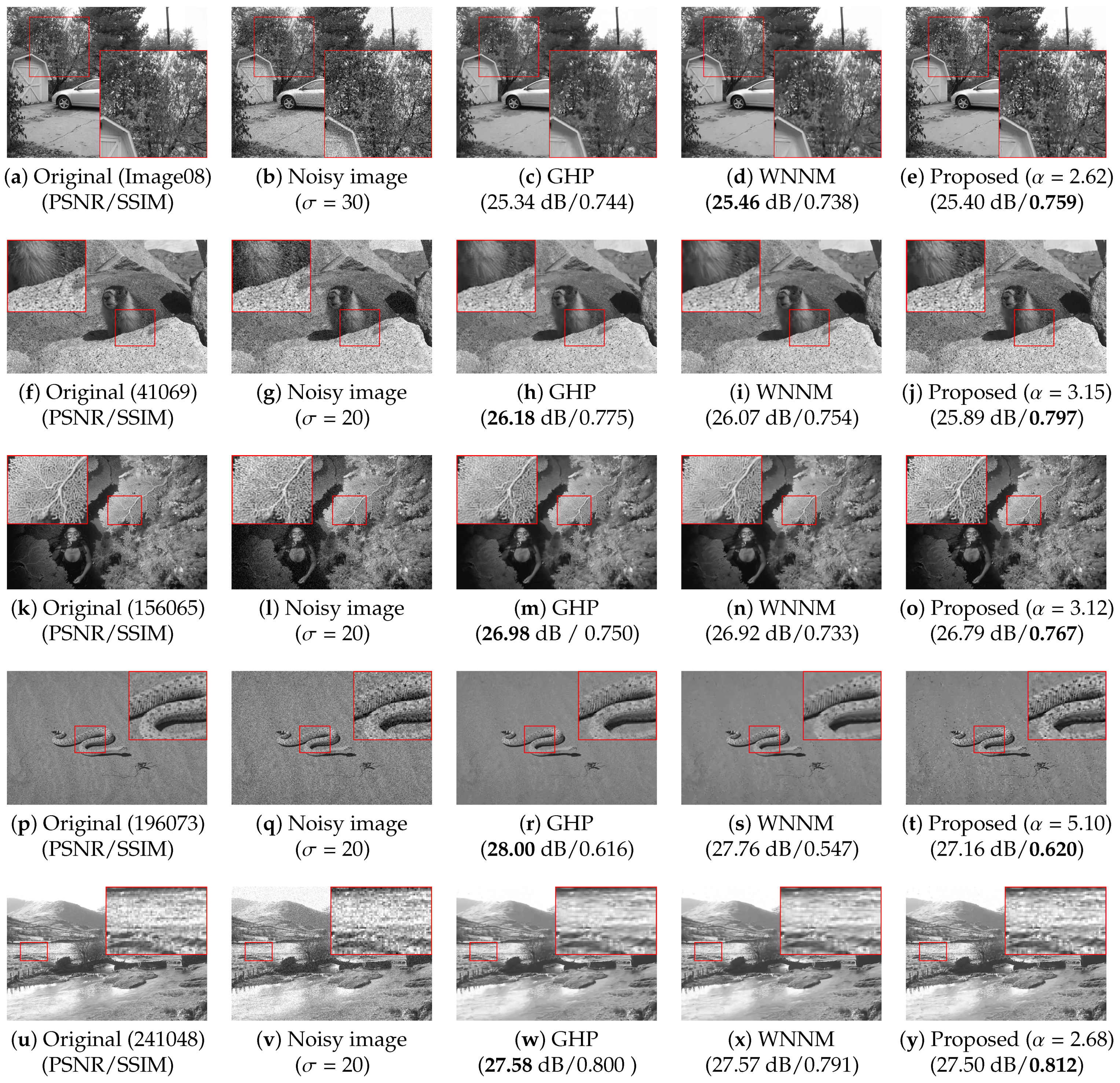

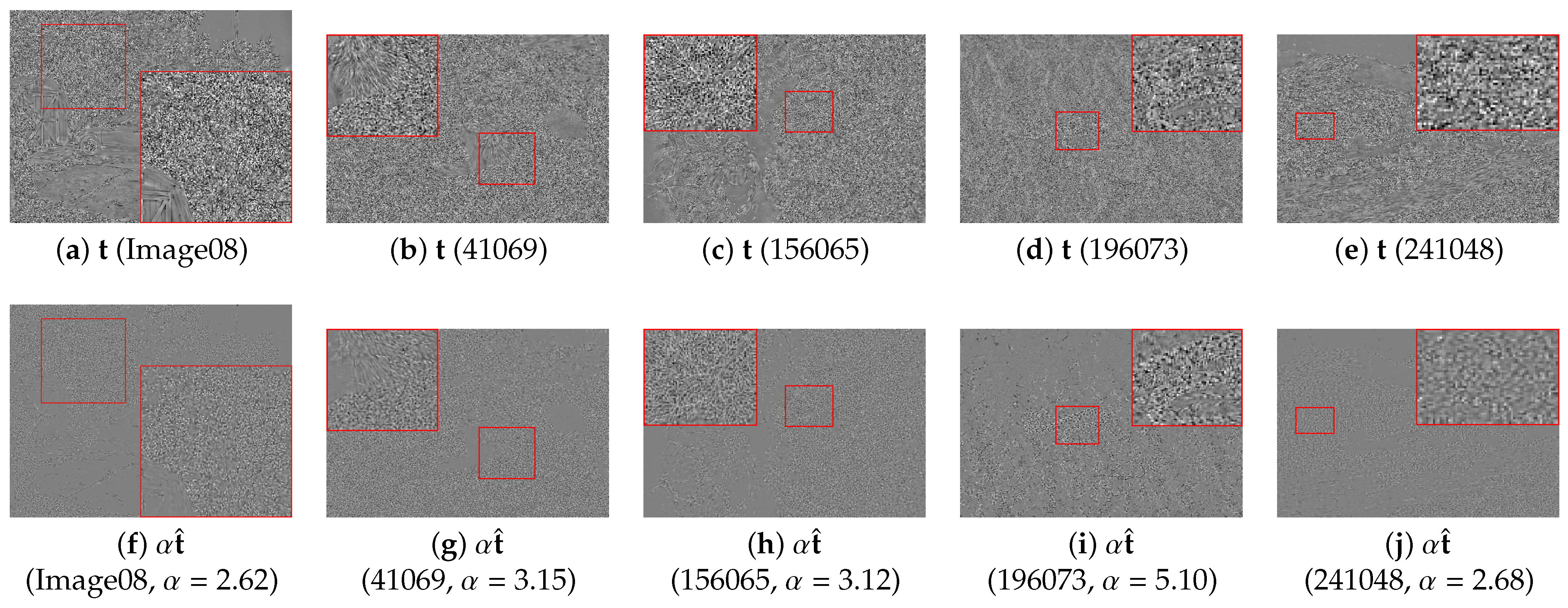

Image denoising methods such as WNNM can estimate the original image well in terms of the mean squared error (MSE) or the peak signal-to-noise ratio (PSNR). However, as shown in Figure 1, texture is often lost in the estimated image. Because texture carries important information about the aesthetic properties of the materials depicted in certain parts of an image (such as the feel of sand, fur, or tree bark), this loss of texture severely degrades the subjective image quality.

On the other hand, because of the stochastic nature of texture, it is difficult to obtain a good description of it (we refer to such a description as a texture model) and to estimate the parameters of that model. The performance of texture-aware image denoising methods [8,19,20,21] depends on these models and parameters. Thus, we can classify texture-aware image denoising methods according to the texture models they utilize.

The gradient histogram preservation (GHP) method [19] is a prominent texture-aware image denoising method. GHP describes texture features in the form of a histogram of the spatial gradients of the pixel values (a gradient histogram). GHP is based on an image denoising method called nonlocally centralized sparse representation [15], and it recovers texture by imposing the condition that the gradient histogram of the output image must be close to that of the original image. The parameters of GHP can be estimated from the observed image by solving an inverse problem.

However, GHP does not utilize the relationships between distant pixels, even though these relationships can carry important texture information because textures are often repeated over long distances.

Zhao et al. addressed this problem by proposing a texture-preserving denoising method [20] that groups similar texture patches through adaptive clustering and then applies principal component analysis (PCA) and a suboptimal Wiener filter to each group. The Wiener filter is a special case of a linear minimum mean squared error estimator (LMMSE filter), and it requires an estimation of the covariance matrices of the original signals. In this texture model, the relationships between distant pixels can be expressed by the covariance matrices. The covariance matrices that are used in the suboptimal Wiener filter are calculated from sample observation patches, which are chosen using a nonfixed search window.

As another method of managing the distant relationships characterizing texture, a denoising method using the total generalized variation (TGV) and low-rank matrix approximation via nuclear norm minimization has been proposed [21]. In this method, the TGV is used to avoid the oversmoothing that is typically caused by low-rank matrix approximation methods [10,16]. The TGV and nuclear norm minimization are applied to capture the relationships between nearby pixels and distant pixels, respectively. Liu et al. claim that the latter are related to textures with regular patterns. The parameter of their texture model is the weight parameter of the TGV term. An iterative algorithm is required to estimate this parameter.

There is a trade-off between the complexity (or capacity) of a texture model and the simplicity of parameter estimation. For example, GHP [19] is a simple texture model with parameters that are easily estimated. However, this model cannot express textures in detail. By contrast, the covariance in the PCA domain [20] and the combination of the TGV and the nuclear norm [21] are complex texture models that can represent detailed textures well; however, their parameter estimation is relatively difficult.

To resolve this problem, we employ a slightly different definition of texture in this paper. We define texture as the difference between the original image and the corresponding denoised image (as obtained from an existing denoiser). As our texture model, we use the covariances within the texture component and between the texture and noise, thereby capturing information about the relationships between distant pixels. Throughout this paper, we represent this covariance information in matrix form and therefore refer to it as covariance matrices. Estimating these matrices from a noisy observation would appear to be difficult. However, because we define the texture as the difference between the original and denoised images, we can utilize the information obtained in the denoising process to estimate the texture covariance matrices more easily.

We propose to use Stein’s lemma [22] and several empirical assumptions to estimate the covariance matrices. Then, we apply an LMMSE filter based on the covariance matrices to estimate the lost texture information. In general, the Wiener filter assumes that the target and the noise have zero covariance. However, in our case, there is nonzero covariance between the texture, which is the difference between the original and denoised images, and the noise because the denoised image depends on the noise. Thus, we propose to use the LMMSE filter for the case in which there is covariance between the signal and noise.

Moreover, our approach yields a separate texture image, which allows us to emphasize the texture with any desired magnitude. Such texture magnification can improve the subjective quality of an image.

Figure 2 presents our motivation.

Our contributions are summarized as follows:

We propose a new definition of texture for texture-aware denoising and a method of recovering texture information by applying an LMMSE filter.

Based on Stein’s lemma, we introduce several nontrivial assumptions to estimate the texture and noise covariance matrices that are used in the LMMSE filter.

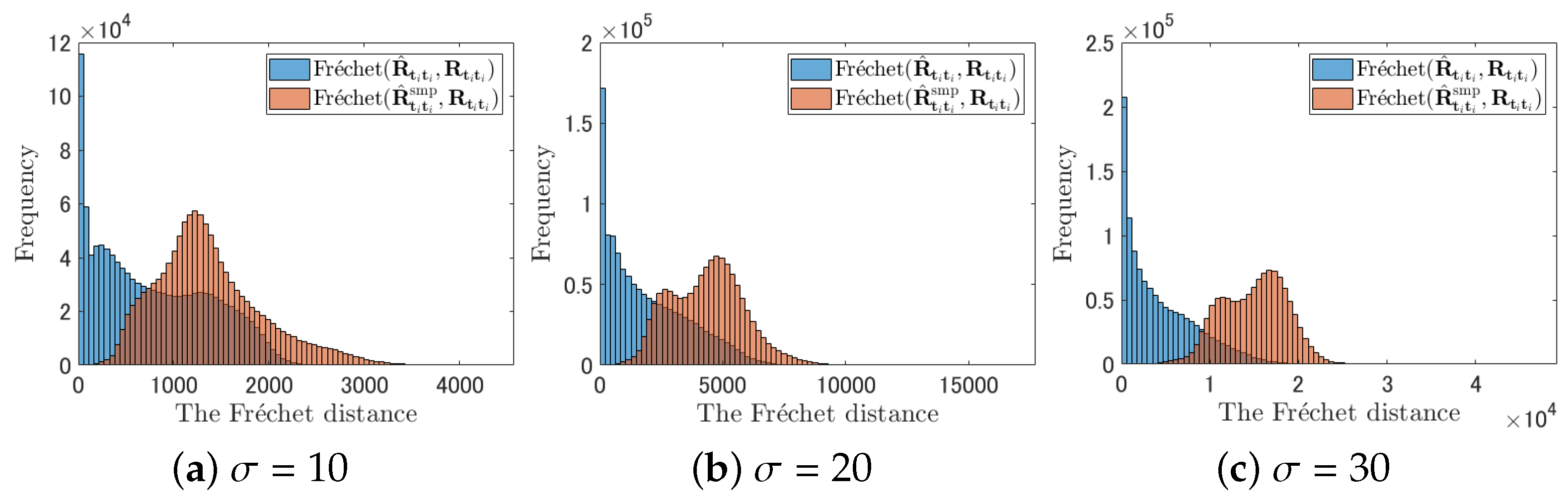

We demonstrate the effectiveness of our method in terms of the PSNR and subjective quality (with texture magnification) through experiments. We also show that our estimated covariance matrices are similar to the corresponding true covariance matrices in terms of the Fréchet distance [23].

Recently, several image denoising methods based on neural networks have been proposed [9,18]. These methods achieve excellent denoising performance. However, understanding the process underlying such black-box methods is difficult. To the best of our knowledge, WNNM is still the state of the art among denoising algorithms that are not based on machine learning approaches (i.e., white-box methods), and our proposed method represents the first successful attempt to significantly improve the performance of WNNM. Additionally, our method can explicitly extract the texture component from the noisy image, enabling us to maximize the perceptual quality of the denoised images by arbitrarily magnifying the obtained texture. In the pursuit of the inherent model of natural images, it is worthwhile to improve the denoising performance of white-box algorithms, as accomplished with the proposed method.

This paper is organized as follows. Section 2 introduces WNNM as the background to this study. Section 3 introduces our proposed method of texture recovery and analyzes the texture and noise covariance matrices using Stein’s lemma; there, we also propose a linear approximation of WNNM to enable the application of Stein’s lemma. Section 4 compares our method with other state-of-the-art denoising methods. Section 5 concludes the paper. Appendix A proves that our LMMSE filter can successfully estimate the texture component of the original image. Appendix B presents experimental results that support our assumptions.

Please note that this work on texture recovery has previously been presented in conference proceedings [24]. In this paper, we additionally provide the proof of the LMMSE filter and experimental results that support some of our assumptions. We also analyze the estimation accuracy of the texture covariance matrix used for texture recovery and statistically analyze the effectiveness of emphasizing the texture. In the conference paper, we provided only limited experimental results (24 images of Kodak Photo CD PCD 0992). In this paper, by contrast, we show new experimental results obtained on two image datasets (containing 110 images in total) to confirm the effectiveness of our method.

2. Preliminaries and Notation

In this paper, $\mathbb{R}$ denotes the set of real numbers, lowercase bold letters denote vectors, and uppercase bold letters denote matrices. We denote the estimates of $\mathbf{x}$ and $\mathbf{X}$ by $\hat{\mathbf{x}}$ and $\hat{\mathbf{X}}$, respectively.

In this section, we describe WNNM [16] (a state-of-the-art denoising method based on NLSS) because our method estimates the texture information lost via the denoising process in WNNM.

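In image denoising, a noisy observation is modeled as

$$\mathbf{y} = \mathbf{x} + \mathbf{n}, \tag{1}$$

where $\mathbf{y} \in \mathbb{R}^m$ is the noisy observation with $m$ pixels, $\mathbf{x} \in \mathbb{R}^m$ is the original image that is the target of estimation, and $\mathbf{n} \in \mathbb{R}^m$ is AWGN with standard deviation $\sigma_n$.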
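The additive observation model and the PSNR criterion mentioned in the Introduction can be illustrated with a minimal sketch. The function names `add_awgn` and `psnr`, the random stand-in image, the peak value of 255, and the noise level of 20 are assumptions made for this example only; they are not taken from the experiments in this paper.

```python
import numpy as np

def add_awgn(x, sigma_n, rng):
    """Return (y, n) with y = x + n, where n is zero-mean white Gaussian noise."""
    n = rng.normal(0.0, sigma_n, size=x.shape)
    return x + n, n

def psnr(x, x_hat, peak=255.0):
    """Peak signal-to-noise ratio in dB for images on a [0, peak] scale (assumed)."""
    mse = np.mean((np.asarray(x, float) - np.asarray(x_hat, float)) ** 2)
    return 10.0 * np.log10(peak ** 2 / mse)

rng = np.random.default_rng(0)
x = rng.uniform(0.0, 255.0, size=(64, 64))   # stand-in for a clean image
y, n = add_awgn(x, sigma_n=20.0, rng=rng)    # noisy observation
print(f"PSNR of the noisy observation: {psnr(x, y):.2f} dB")
```

The sketch does not clip the noisy values to the displayable range, so the sample MSE stays close to the squared noise standard deviation.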

In WNNM, the observed image is first divided into $I$ overlapping focused patches ( pixels). The value of $I$ depends on the image size and the noise level. Our experiments follow the default parameters of the authors’ implementation of WNNM. For example, in the case of , the patch size is pixels, and the focused patches are selected by skipping 1 pixel (stride 2). Therefore, when the image size is pixels, the total number of focused patches $I$ is 32,004 (note that similar patches are selected from pixels patches extracted from the neighborhood ( pixels) of the focused patch). The overlapping focused patches are then vectorized. We denote the $i$-th focused patch of $\mathbf{y}$ by $\mathbf{y}_i$ ($i = 1, \dots, I$). Then, a search is performed for the patches that are the most similar to each focused patch $\mathbf{y}_i$, and for each $\mathbf{y}_i$, a patch matrix $\mathbf{Y}_i$ is created that includes $\mathbf{y}_i$ as its leftmost column, while the remaining columns of $\mathbf{Y}_i$ are the patches that are similar to $\mathbf{y}_i$. For the $i$-th patches of the other components $\mathbf{x}$ and $\mathbf{n}$, we use similar notation, i.e., $\mathbf{x}_i$ and $\mathbf{n}_i$. We denote their corresponding patch matrices by $\mathbf{X}_i$ and $\mathbf{N}_i$. Note that the indices of the similar patches in $\mathbf{X}_i$ and $\mathbf{N}_i$ are the same as those in $\mathbf{Y}_i$.
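The construction of a patch matrix described above can be sketched as follows. This is a simplified block-matching routine, not the implementation of Gu et al.; the function name `build_patch_matrix` and the default values of `patch`, `window`, and `num_similar` are hypothetical and chosen only to keep the example small.

```python
import numpy as np

def build_patch_matrix(img, top, left, patch=7, window=15, num_similar=10):
    """Stack the focused patch and its most similar neighbours as columns.

    Returns the patch matrix (patch*patch rows, num_similar columns) and the
    top-left positions of the selected patches, so that the same positions can
    be reused to gather patches from other images.
    """
    h, w = img.shape
    ref = img[top:top + patch, left:left + patch].ravel()
    candidates = []
    for r in range(max(0, top - window), min(h - patch, top + window) + 1):
        for c in range(max(0, left - window), min(w - patch, left + window) + 1):
            vec = img[r:r + patch, c:c + patch].ravel()
            candidates.append((np.sum((vec - ref) ** 2), r, c))
    # Sort by squared distance; on ties, keep the focused patch itself first.
    candidates.sort(key=lambda item: (item[0], (item[1], item[2]) != (top, left)))
    chosen = candidates[:num_similar]
    cols = [img[r:r + patch, c:c + patch].ravel() for _, r, c in chosen]
    return np.stack(cols, axis=1), [(r, c) for _, r, c in chosen]

rng = np.random.default_rng(0)
img = rng.normal(size=(40, 40))                 # stand-in for a noisy image
Y_i, positions = build_patch_matrix(img, top=10, left=12)
print(Y_i.shape, positions[0])
```

Returning the positions alongside the matrix mirrors the remark that the patch matrices of the other components share the indices of the similar patches found in the observation.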

For simplicity, we subtract the columnwise average of the matrix

from each row of

and denote the result by

. The same columnwise average subtraction is used in the implementation provided by Gu et al.; however, it is not explicitly described in [16]. Because we assume that

is AWGN, each columnwise average of the noiseless matrix

is sufficiently similar to that of

. Thus, the objective of WNNM is to estimate

which is obtained by subtracting the columnwise average of

from

.

The core of the denoising process of WNNM is the following equation based on the singular value decomposition (SVD) of the observed patch matrix

:

where

is the denoised patch matrix,

is the SVD and

is a threshold function. Each component

of the diagonal matrix

is expressed as

where

C is an arbitrary parameter, and

is a small number. This process corresponds to a closed-form solution of the iterative reweighting method applied to the singular value matrix of

[16].
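To make the idea of weighted singular value thresholding concrete, the following sketch applies one weighted soft-thresholding pass to a synthetic low-rank matrix. It is a generic illustration, not the closed-form solution referred to above: the weights `C / (sv + eps)` are computed from the observed singular values as a simplifying assumption, and the function name `weighted_svt`, the matrix sizes, and the value of `C` are hypothetical.

```python
import numpy as np

def weighted_svt(Y, C, eps=1e-8):
    """One weighted soft-thresholding pass on the singular values of Y.

    Assumed weights: C / (singular value + eps), so small singular values
    (mostly noise) are penalized more strongly than large ones.
    """
    U, sv, Vt = np.linalg.svd(Y, full_matrices=False)
    weights = C / (sv + eps)
    sv_shrunk = np.maximum(sv - weights, 0.0)
    return (U * sv_shrunk) @ Vt, sv, sv_shrunk

rng = np.random.default_rng(0)
clean = rng.normal(size=(49, 3)) @ rng.normal(size=(3, 30))   # rank-3 stand-in patch matrix
noisy = clean + rng.normal(0.0, 1.0, size=clean.shape)
denoised, sv, sv_shrunk = weighted_svt(noisy, C=200.0)

print("nonzero singular values kept:", np.count_nonzero(sv_shrunk))
print("squared error before:", np.sum((noisy - clean) ** 2))
print("squared error after: ", np.sum((denoised - clean) ** 2))
```

Because the threshold grows as the singular value shrinks, the dominant components are only slightly attenuated while the noise-dominated tail is removed.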

The singular value thresholding described above is applied to each focused patch and similar patches, and estimates of the original patches are obtained by adding the columnwise average to

. Then, the estimated original patches are combined to obtain a reconstructed image (overlapping patches are subjected to pixelwise averaging). In WNNM, this process is iterated to estimate the original image. In each iteration, the denoising target

is updated by calculating a weighted sum of the most recently estimated image

and the original noisy observation

(where

k is the index of the iteration) with a weight parameter

, as follows:

. This process is called iterative regularization, and the parameter

is fixed to

in [16]. The WNNM algorithm is given in Algorithm 1. For additional details on WNNM, please consult [16].

| Algorithm 1 Image denoising via WNNM. |

| Input: Noisy observation |

| Initialize and |

| for do |

| Iterative regularization: |

| for do |

| Find the similar patch matrix |

| Estimate the corresponding original patch matrix by applying Equation (2); the result is |

| end for |

| Use the to reconstruct the estimated image |

| end for |

| Output: Obtain the denoised image as |
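The outer loop of Algorithm 1, including iterative regularization, can be sketched as below. A mean filter stands in for the patch-wise low-rank estimation step, so this is only a structural illustration; the names `box_denoise` and `iterate_with_regularization`, the number of iterations, and the weight `delta=0.1` are illustrative assumptions rather than values taken from this section.

```python
import numpy as np

def box_denoise(img, radius=1):
    """Placeholder denoiser (mean filter); stands in for the low-rank patch step."""
    padded = np.pad(img, radius, mode="edge")
    out = np.zeros_like(img, dtype=float)
    size = 2 * radius + 1
    for dr in range(size):
        for dc in range(size):
            out += padded[dr:dr + img.shape[0], dc:dc + img.shape[1]]
    return out / size ** 2

def iterate_with_regularization(y, denoise, num_iters=4, delta=0.1):
    """Repeat: blend the latest estimate with the observation, then denoise."""
    x_hat = y.astype(float)
    for _ in range(num_iters):
        target = x_hat + delta * (y - x_hat)   # weighted sum of estimate and observation
        x_hat = denoise(target)
    return x_hat

rng = np.random.default_rng(0)
clean = np.add.outer(np.arange(32.0), np.arange(32.0))   # smooth ramp image
noisy = clean + rng.normal(0.0, 5.0, size=clean.shape)
estimate = iterate_with_regularization(noisy, box_denoise, num_iters=3)
print("MSE before:", np.mean((noisy - clean) ** 2))
print("MSE after: ", np.mean((estimate - clean) ** 2))
```

Feeding a fraction of the residual back into each iteration lets later passes reconsider detail that an earlier pass removed.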

3. Recovering Texture via Statistical Analysis

In this section, we introduce our denoising method, which recovers the texture lost by denoising a noisy observation. In this paper, we define a structure (or cartoon) component $\mathbf{s} = f(\mathbf{y})$, where $f$ is a map corresponding to the denoising process of WNNM. Then, in contrast to previous work [8,19,20,21], we define a texture component $\mathbf{t}$ as the difference between the original image $\mathbf{x}$ and the structure $\mathbf{s}$ (i.e., $\mathbf{t} = \mathbf{x} - \mathbf{s}$). Thus, we obtain our extended observation model, i.e.,

$$\mathbf{y} = \mathbf{s} + \mathbf{t} + \mathbf{n}. \tag{5}$$

Please note that the above equation is equivalent to Equation (1) since $\mathbf{x} = \mathbf{s} + \mathbf{t}$. Our goal is to estimate the texture component $\mathbf{t}$ that has been lost via WNNM.
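The decomposition into structure, texture, and noise can be checked on a toy one-dimensional signal. In this sketch a moving-average filter replaces WNNM as the denoiser, and the signal, the noise level, and the name `mean_filter_1d` are assumptions made purely for illustration.

```python
import numpy as np

def mean_filter_1d(y, radius=2):
    """Stand-in denoiser (moving average); the method in the text uses WNNM."""
    kernel = np.ones(2 * radius + 1) / (2 * radius + 1)
    return np.convolve(y, kernel, mode="same")

rng = np.random.default_rng(1)
grid = np.linspace(0.0, 1.0, 200)
x = np.sin(20.0 * grid) + 0.2 * np.sin(300.0 * grid)   # smooth part plus fine detail
n = rng.normal(0.0, 0.1, size=x.shape)                 # additive Gaussian noise
y = x + n                                              # noisy observation

s = mean_filter_1d(y)      # structure: output of the denoiser
t = x - s                  # texture: what the denoised signal lacks relative to x
print("max |y - (s + t + n)|:", np.max(np.abs(y - (s + t + n))))
```

Since the structure is computed from the noisy observation, the texture defined this way inherits a dependence on the noise, which is why the cross-covariance between texture and noise cannot be ignored.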

First, we denoise the observation $\mathbf{y}$ with WNNM. Following WNNM, we estimate each texture patch matrix $\mathbf{T}_i$ from the corresponding structure patch matrix $\mathbf{S}_i$, which is obtained in the final iteration of the WNNM procedure, and the corresponding observation patch matrix $\mathbf{Y}_i$. Because we use an LMMSE filter to estimate $\mathbf{T}_i$, we need statistical information on the relationship between the texture and noise; however, this information cannot be calculated directly because the texture and the noise are not observable. Instead, we estimate this information using Stein’s lemma and several assumptions, which are described below, and then reconstruct the estimated texture patch matrices to obtain the estimated texture component $\hat{\mathbf{t}}$. Finally, we obtain an estimate of the original image, $\hat{\mathbf{x}}$, by adding the estimated texture component $\hat{\mathbf{t}}$ to the denoised image $\mathbf{s}$.

3.1. LMMSE Filter for Texture Recovery

We use an LMMSE filter to estimate each texture patch

. The objective function of the LMMSE filter,

is formulated as

where

denotes the expected value and

is the $\ell_2$ norm. Because this objective is quadratic, we can easily find the minimizer as follows:

where we adopt the following covariance matrix notation:

(where

and

are some random vectors). The proof is given in Appendix A.
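The role of the cross-covariance in an LMMSE filter can be illustrated with a generic synthetic example. The sketch below estimates a zero-mean signal `t` from `z = t + n` when `t` and `n` are correlated; it is a textbook LMMSE construction under assumed notation, not necessarily the exact filter derived in Appendix A. The names `lmmse_filter`, `cov_tt`, `cov_tn`, `cov_nn`, and the synthetic model `t = A u - B n` are all hypothetical.

```python
import numpy as np

def lmmse_filter(cov_tt, cov_tn, cov_nn):
    """LMMSE matrix W that estimates t from z = t + n (both zero-mean).

    Unlike the classical Wiener filter, the cross-covariance
    cov_tn = E[t n^T] is allowed to be nonzero.
    """
    cov_tz = cov_tt + cov_tn                         # E[t z^T]
    cov_zz = cov_tt + cov_tn + cov_tn.T + cov_nn     # E[z z^T]
    return cov_tz @ np.linalg.inv(cov_zz)

def sample_mse(W, t, z):
    """Average squared estimation error of W z over the sample columns."""
    return np.mean(np.sum((t - W @ z) ** 2, axis=0))

# Synthetic data: t = A u - B n, so t is negatively correlated with n.
rng = np.random.default_rng(2)
dim, num_samples, sigma_n = 4, 100_000, 1.0
A = rng.normal(size=(dim, dim))
B = 0.5 * np.eye(dim)
n = rng.normal(0.0, sigma_n, size=(dim, num_samples))
t = A @ rng.normal(size=(dim, num_samples)) - B @ n
z = t + n

cov_nn = sigma_n ** 2 * np.eye(dim)
cov_tn = -sigma_n ** 2 * B
cov_tt = A @ A.T + sigma_n ** 2 * B @ B.T

W = lmmse_filter(cov_tt, cov_tn, cov_nn)
W_wiener = cov_tt @ np.linalg.inv(cov_tt + cov_nn)   # ignores the cross-covariance
W_ls = (t @ z.T) @ np.linalg.inv(z @ z.T)            # empirical least-squares fit

print("MSE with cross-covariance:    ", sample_mse(W, t, z))
print("MSE ignoring cross-covariance:", sample_mse(W_wiener, t, z))
```

Comparing `W` with the empirical least-squares fit `W_ls` is a quick sanity check that the closed form matches what the data supports.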

Unfortunately, the covariance matrices of the texture and between the texture and the noise cannot be directly obtained because the texture and the noise are not observable. We solve this problem by using Stein’s lemma and introducing several assumptions.

3.2. Estimation of the Covariance Matrices

As mentioned above, we need to estimate

and

. Since we define

as

, and the value of

is changed with respect to the value of

, we can assume that

is the output of a function that takes

as an input.

also follows a normal distribution. Thus, we can estimate

using Stein’s lemma as follows:

The above equation shows that our desired covariance matrices can be obtained from the effects of the

on the

. The texture patch

is equal to

according to Equation (5); moreover, we assume that the effect of

on

is zero. Therefore, we can estimate the covariance matrix

as

We need to analyze the variations in the WNNM output as the noise varies. As mentioned above, the WNNM process is complex. Thus, we need to approximate the WNNM process with a linear filter to simplify the analysis.
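As a hedged worked form of the argument in this subsection, the chain from Stein's lemma to the covariance between texture and noise can be written out as follows. The symbols $\boldsymbol{\Sigma}_{\mathbf{t}\mathbf{n}}$ for the cross-covariance, $\sigma_n$ for the noise standard deviation, and the patch subscript $i$ are notational assumptions made for this sketch, and the clean patch is treated as fixed.

```latex
\begin{align}
% Multivariate Stein's lemma for n_i ~ N(0, sigma_n^2 I) and a weakly differentiable g
\mathbb{E}\!\left[ g(\mathbf{n}_i)\,\mathbf{n}_i^{\top} \right]
  &= \sigma_n^{2}\,
     \mathbb{E}\!\left[ \frac{\partial g(\mathbf{n}_i)}{\partial \mathbf{n}_i^{\top}} \right], \\
% Texture patch from the extended observation model, using y_i = x_i + n_i
\mathbf{t}_i
  &= \mathbf{y}_i - \mathbf{s}_i - \mathbf{n}_i
   = \mathbf{x}_i - \mathbf{s}_i, \\
% x_i does not depend on n_i, so only s_i contributes to the Jacobian
\boldsymbol{\Sigma}_{\mathbf{t}\mathbf{n}}
  = \mathbb{E}\!\left[ \mathbf{t}_i\,\mathbf{n}_i^{\top} \right]
  &= -\,\sigma_n^{2}\,
     \mathbb{E}\!\left[ \frac{\partial \mathbf{s}_i}{\partial \mathbf{n}_i^{\top}} \right].
\end{align}
```

Under these assumptions, estimating the cross-covariance reduces to describing how the denoiser output responds to perturbations of the noise, which motivates a tractable approximation of the denoiser.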

Surprisingly, the linear approximation of the WNNM process also provides us with an empirical method of estimating the covariance matrix of the texture, which is more difficult to estimate than the covariance matrix between the texture and the noise. In the next subsection, we describe how to approximate WNNM with a linear filter and how to estimate the texture covariance matrix.

3.3. Linear Approximation of the WNNM Procedure

To simplify the analysis and determine the covariance matrix

by using Stein’s lemma, we assume that we can precisely approximate the whole process of WNNM as a linear filter:

The SVDs of

and

are formulated as

If we ignore the fact that the patch matrices are updated in WNNM via a similar patch search, reconstruction and iterative regularization in each iteration, then the SVDs of

and

will have common right and left singular matrices. We assume that

and

are equal to

and

, respectively. Thus, we can obtain the approximate WNNM filter

as follows:

The partial derivative of the columnwise average of with respect to is considered to be very small because is Gaussian and because the average of is zero. Note that is obtained by subtracting the columnwise average of from .

If this approximation of WNNM is sufficiently accurate, then

. From Equation (9), we can estimate

as

Additionally, we use the approximate WNNM filter

to estimate

. We assume that

can be estimated as

This assumption is based on preliminary experiments, the details of which are presented in Appendix B.3.
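The consequence of a linear approximation for Stein's lemma can be checked numerically. The sketch below assumes that the denoiser is exactly linear, `s = W y`, with a randomly drawn matrix `W` standing in for the approximate WNNM filter; the dimensions, the noise level, and all variable names are hypothetical. Under that assumption, the covariance between the texture and the noise should equal `-sigma_n**2 * W`.

```python
import numpy as np

rng = np.random.default_rng(3)
dim, num_samples, sigma_n = 5, 200_000, 0.5

W = 0.3 * rng.normal(size=(dim, dim))            # hypothetical linear denoiser
x = rng.normal(size=(dim, 1))                    # fixed clean patch
n = rng.normal(0.0, sigma_n, size=(dim, num_samples))
y = x + n                                        # noisy observations of the same patch
s = W @ y                                        # structure: denoiser output
t = x - s                                        # texture: original minus structure

# Monte Carlo estimate of Cov(t, n); n has zero mean, so only t is centered.
t_centered = t - t.mean(axis=1, keepdims=True)
cov_tn_mc = t_centered @ n.T / num_samples

# Stein's lemma with dt/dn = -W for a linear denoiser.
cov_tn_stein = -sigma_n ** 2 * W

print("max abs deviation:", np.max(np.abs(cov_tn_mc - cov_tn_stein)))
```

For a nonlinear denoiser the Jacobian varies with the noise realization, so the agreement seen here would hold only to the extent that the linear approximation is accurate.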

3.4. Recovering the Texture of a Denoised Image

Based on the above discussion, we can calculate the LMMSE filter and estimate

. However,

is not invertible in practice because the number of patches

M is smaller than

L. Although an equivalent estimate of

appears to be

(where

is the identity matrix), this choice does not provide good recovery performance. Instead, substituting

into the sample covariance matrix

was found to yield the best results in our preliminary experiments. Thus, we calculate the LMMSE filter as

Substituting Equations (13) and (14) into Equation (15), we can estimate

as

Then, we can obtain the desired estimated texture image

that is obtained by combining each

in the same manner used to reconstruct an image from the patches obtained in WNNM. Finally, we can obtain the final estimated image

with enhanced texture as

We use the LMMSE filter to obtain

; however, minimizing the MSE often causes

to lose clarity. We can obtain a clearer texture-enhanced image as follows:

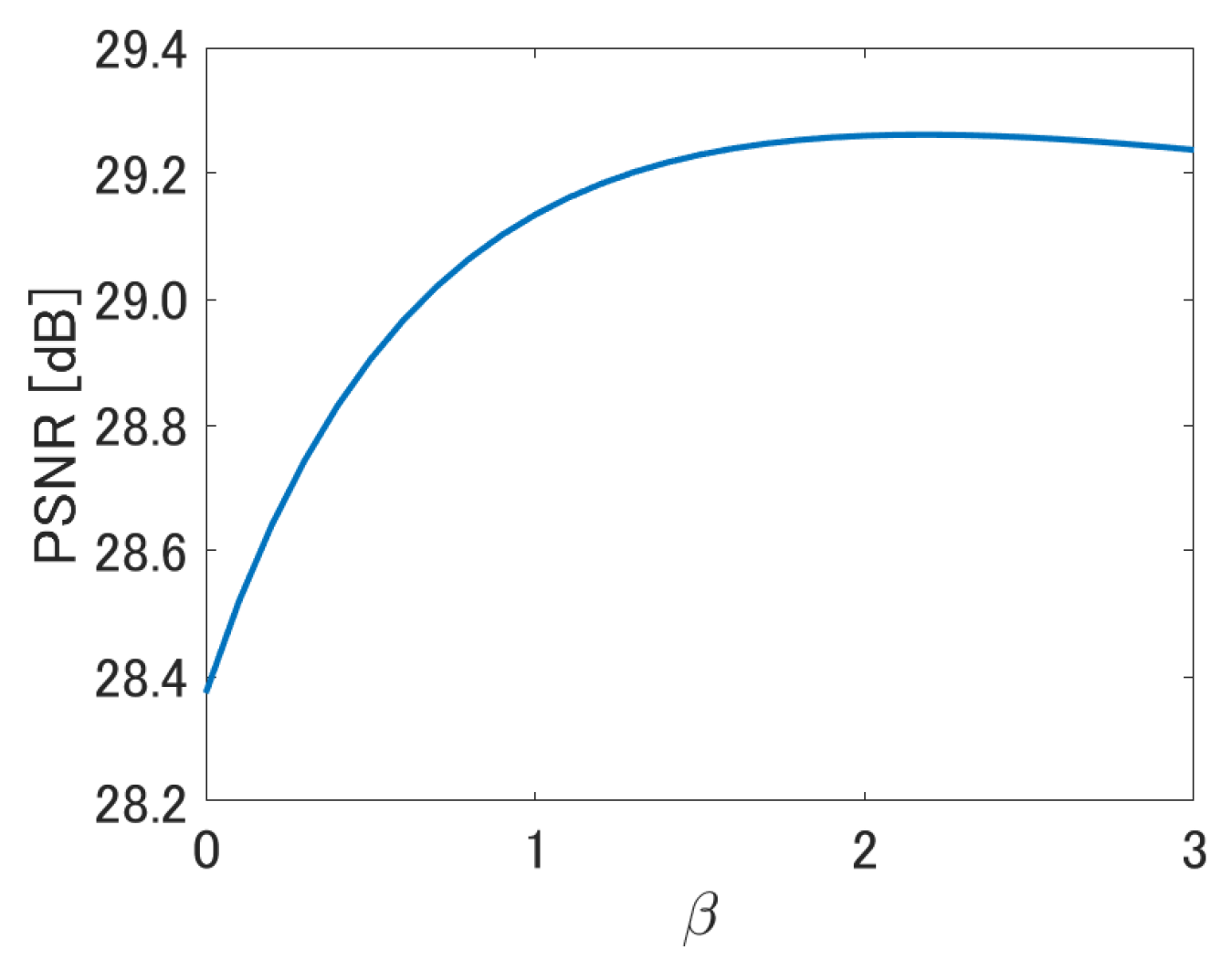

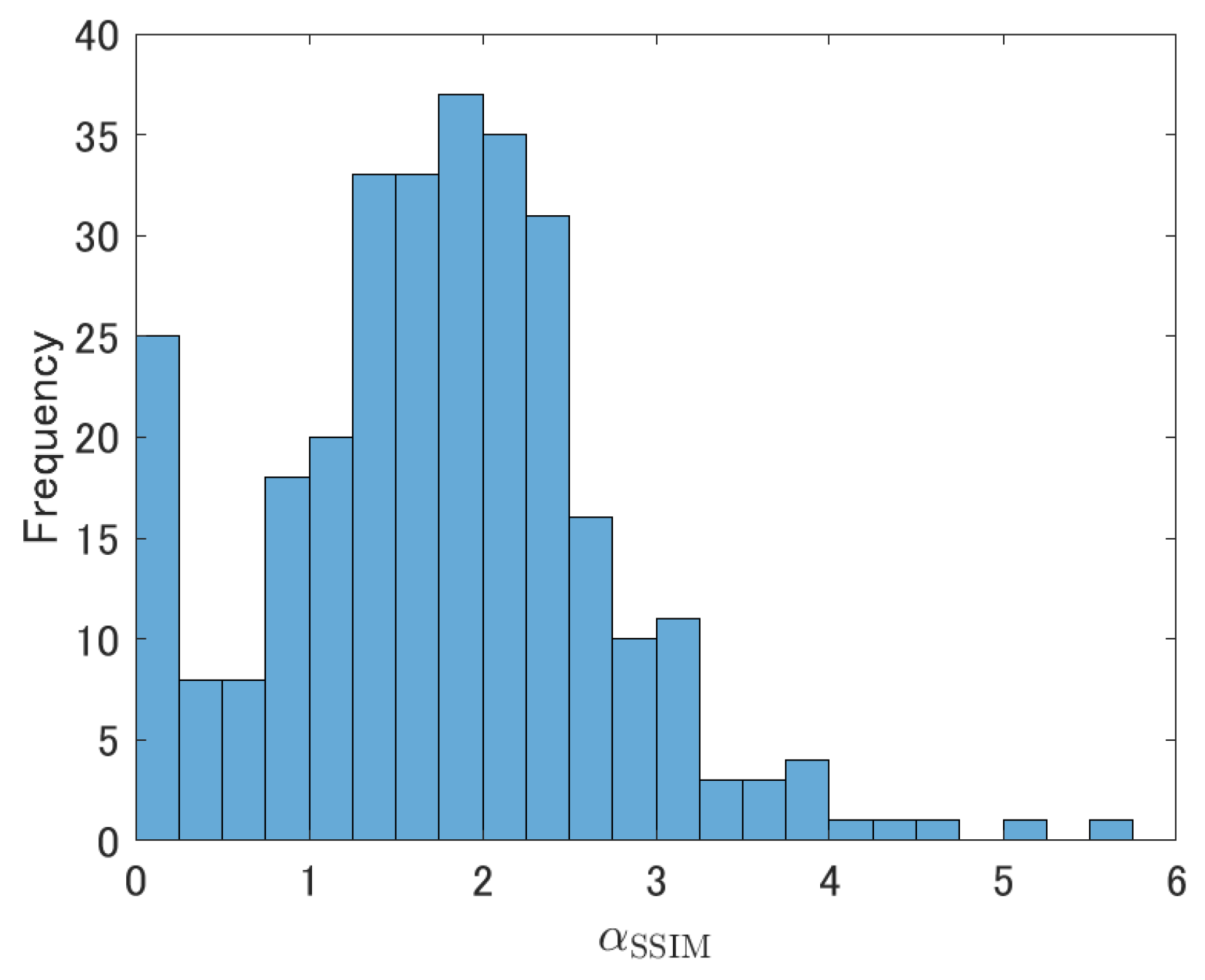

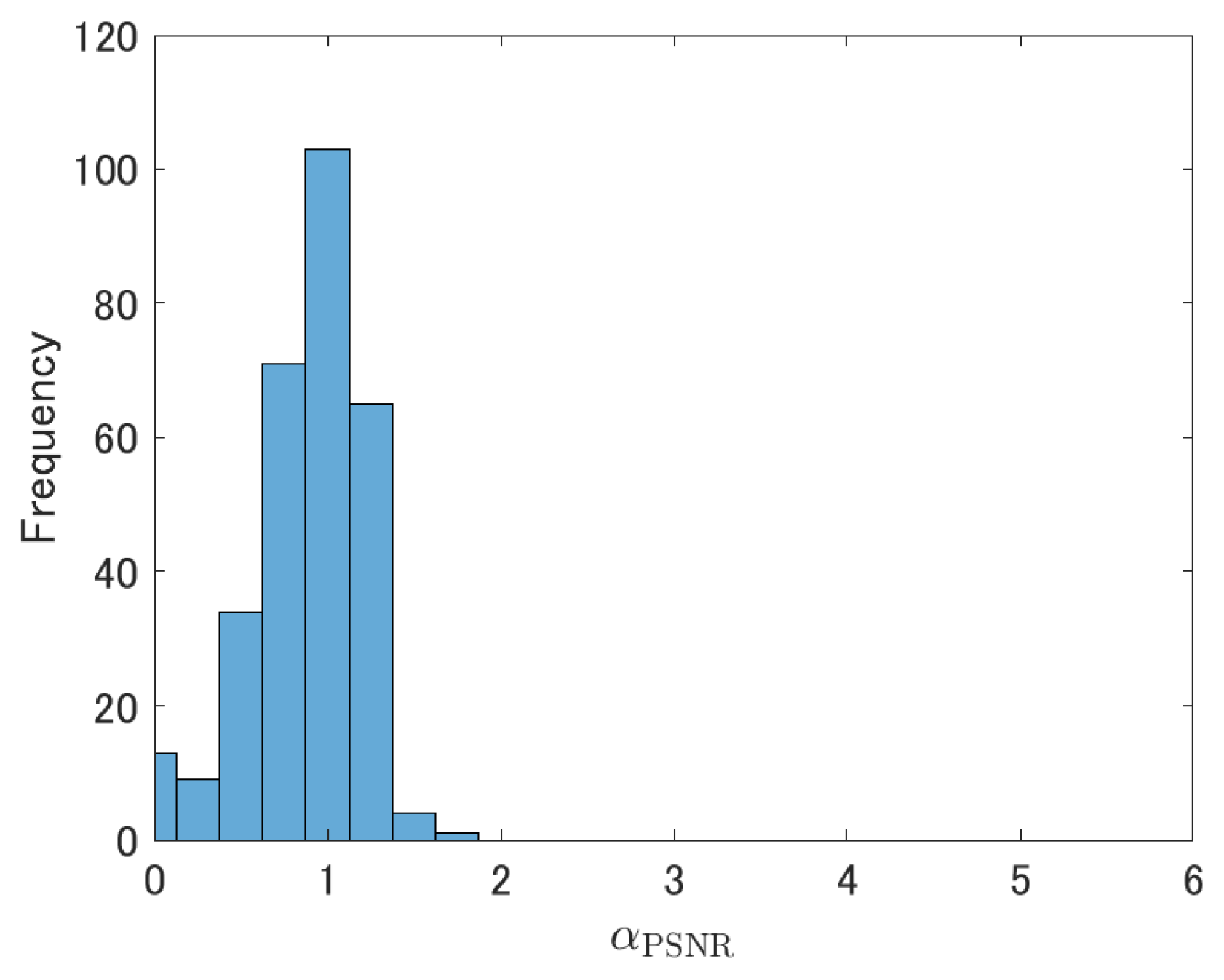

where

is an arbitrary parameter used to control the magnitude of the texture that is added. Note that

means that the proposed method applies the LMMSE filter as is, in which case we can expect to obtain the denoised image with the best PSNR if all of our assumptions hold. The entire proposed process is presented in Algorithm 2.
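The final combination step, in which the estimated texture is added back with an adjustable magnitude, can be sketched in a few lines. The function name `add_texture`, the parameter name `alpha`, and the optional clipping to a value range are assumptions introduced for this example.

```python
import numpy as np

def add_texture(s, t_hat, alpha=1.0, value_range=None):
    """Return s + alpha * t_hat, optionally clipped to value_range=(low, high)."""
    out = np.asarray(s, dtype=float) + alpha * np.asarray(t_hat, dtype=float)
    if value_range is not None:
        out = np.clip(out, value_range[0], value_range[1])
    return out

s = np.array([[100.0, 120.0], [140.0, 160.0]])   # toy denoised image
t_hat = np.array([[2.0, -3.0], [1.0, 0.0]])      # toy estimated texture
plain = add_texture(s, t_hat, alpha=1.0)         # estimated texture added as is
boosted = add_texture(s, t_hat, alpha=2.0)       # texture emphasized
print(plain)
print(boosted)
```

Keeping the texture as a separate array makes it possible to try several magnitudes without rerunning the denoiser.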

| Algorithm 2 Texture recovery using the proposed method. |

| Input: Noisy observation |

| Initialize and |

| for do |

| Iterative regularization: |

| for do |

| Find the similar patch matrix |

| Estimate the corresponding original patch matrix via Equation (2); the result is denoted by |

| if k=K then |

| Estimate and using Stein’s lemma via Equation (13) and (14) |

| Calculate via Equation (9) |

| Estimate via Equation (16) to obtain |

| end if |

| end for |

| Use the to reconstruct the estimated image |

| end for |

| Use the to reconstruct the estimated texture component |

| Output: Obtain the final estimated image as |

5. Conclusions

In this paper, we have proposed a method of recovering texture information that has been oversmoothed by the denoising process in WNNM. For texture recovery, we apply an LMMSE filter to a noisy image. Because our filter requires covariance matrices between the texture and noise, we have also proposed a method of estimating this information based on Stein’s lemma and several key assumptions.

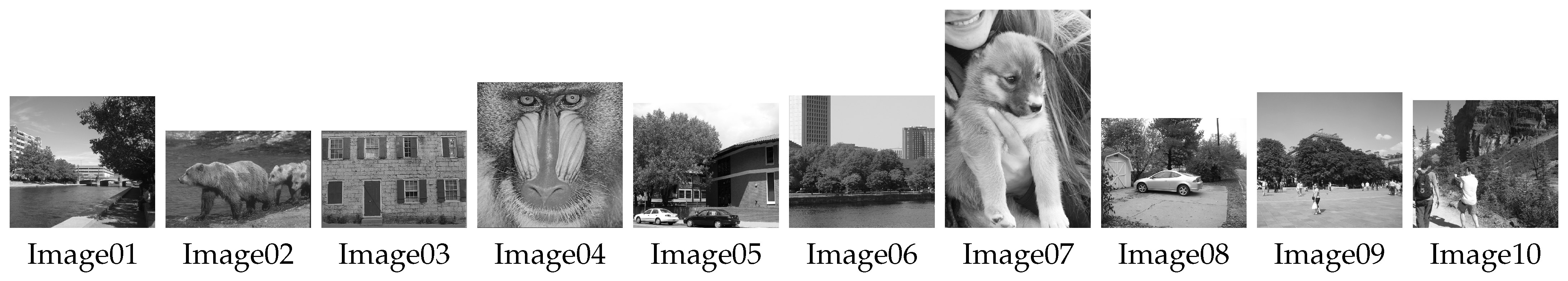

Experimental results obtained on various image datasets show that our method improves the PSNR and SSIM of WNNM and also outperforms other state-of-the-art methods with respect to both criteria. Moreover, we confirmed that the differences between our method and WNNM are statistically significant. With our method, SSIM values can be further improved by choosing a suitable value for a scaling parameter that controls the magnitude of the added texture. Moreover, blurred edges and texture can be enhanced through a proper selection of this scaling parameter. Additionally, our estimated texture covariance matrices are more similar to the corresponding oracle covariance matrices in terms of the Fréchet distance than are simply sampled estimates obtained from the observed images. Finally, the additional computational time required by our texture recovery is 7–13% of the computational time of WNNM. We consider this additional cost acceptable given the gains in PSNR and SSIM.

In the experiments, we chose almost all the parameters of the proposed method based on the default settings of WNNM. The only parameter that the user needs to choose is the scaling parameter that controls the magnitude of the added texture. When the user wants to maximize the PSNR, setting this parameter to 1 gives the best result in most cases. If the user would like to enhance the texture, the user should choose a value larger than 1. In addition, our experimental results show that almost always improves the SSIM.