1. Introduction

The Humanities and Heritage Italian Open Science Cloud (H2IOSC) [1] is a research initiative funded by the Italian Ministry of University and Research as part of the National Recovery and Resilience Plan (PNRR). The project aims to establish a federated and inclusive cluster of Research Infrastructures (RIs) within the European Strategy Forum on Research Infrastructures (ESFRI) domain of Social and Cultural Innovation. This cluster fosters collaboration among researchers from various fields, such as the Humanities, Language Technologies, and Cultural Heritage, allowing them to work on data- and compute-intensive projects.

H2IOSC brings together four key RIs. The first is DARIAH-IT (Digital Research Infrastructure for the Arts and Humanities), which supports digitally enabled research and teaching in the arts and humanities. The second is CLARIN, which provides access to language data and tools for research in the humanities and social sciences; it offers multimodal digital language data (including text, audio, and video) along with advanced tools for analyzing and integrating these datasets. The third, OPERAS, promotes open scholarly communication in the social sciences and humanities within the European Research Area, coordinating and federating resources to meet the communication needs of European researchers in these fields. Lastly, E-RIHS [2], the Italian node of the European research infrastructure for Heritage Science, offers access to cutting-edge scientific tools and organizes research, PhD, and training programmes focused on non-invasive diagnostics applied to cultural heritage.

DIGILAB is set to become the digital access platform for the Italian node of the European Research Infrastructure for Heritage Science (E-RIHS). Its main challenge is to integrate data, tools, and services into a unified digital environment, maximizing accessibility and interoperability while offering advanced services for processing, analyzing, and correlating data. The architecture of DIGILAB follows the principles set by ESFRI [3] and is aligned with the goals of cluster projects in the Cultural Heritage domain [4]. At the national level, DIGILAB’s design adheres to the guidelines of the National Plan for the Digitization of Cultural Heritage [5], developed by the Central Institute for the Digitization of Cultural Heritage – Digital Library of the Italian Ministry of Cultural Heritage. This plan outlines a strategic vision for digital innovation in the management of cultural assets. Additionally, DIGILAB must comply with EU data management principles (FAIR, Open Research Data) and with the European Open Science Cloud (EOSC) initiative [6].

In this context, Wireless Sensor Networks (WSNs) have emerged as a promising technology for real-time monitoring and surveillance of cultural heritage sites, particularly for microclimate monitoring. WSNs offer advantages such as cost-effectiveness, flexibility, and non-invasiveness. However, their implementation presents challenges, including issues related to sensor reliability, energy efficiency, data security, communication protocols, and interoperability. Addressing these challenges requires a multidisciplinary approach, involving experts from engineering, computer science, conservation, archeology, and cultural management.

Building on the related research reviewed in Section 2, this paper proposes a multi-sensor software/hardware platform designed to support the remote monitoring and protection of Cultural Heritage assets, fulfilling the requirements of the H2IOSC project. This is achieved through the implementation of a multi-technology, large-scale WSN. Following the Internet of Things (IoT) paradigm, we propose SENNSE (Spatial hEritage scieNce oNline Sensor Environment), a new platform specifically designed for real-time monitoring in heritage sites and the challenges they entail. SENNSE integrates a WSN for monitoring both indoor and outdoor scenarios, complemented by wireless data loggers and battery-powered loggers. The SENNSE hardware platform is fully open and can be customized in terms of sensor types and transmission technologies, depending on the context and on the specific object to be monitored, whether an environment or an individual artefact. Furthermore, SENNSE incorporates advanced data processing and interpretation modules that convert the collected information into valuable insights, which are communicated to the user through detailed reports and customized dashboards. The flexibility of both the hardware components and the software modules makes SENNSE well suited to different monitoring schemes in cultural heritage sites such as museums, libraries, and archeological areas. The two case studies presented here focus on the microclimate monitoring of indoor environments. Technically, SENNSE integrates a sensor network of Smart Objects communicating over Wi-Fi and Bluetooth Low Energy with an online platform for data post-processing and visualization. The Message Queuing Telemetry Transport (MQTT) protocol [7], known for its lightweight and efficient communication, was selected to connect the WSN nodes and facilitate data exchange.

The data collected by the SENNSE management middleware is sent to the DIGILAB platform, which enables the analysis, post-processing, and visualization of heterogeneous geolocated data related to cultural heritage and presents the results through interactive, customizable, multi-tenant dashboards.

By harnessing IoT technologies and the computational power of DIGILAB, large-scale WSNs enable remote monitoring and smart control of cultural heritage sites across the nation, minimizing the need for physical interventions and improving the efficiency of preservation efforts. Additionally, the platform will facilitate connections between data collected across various research contexts in the cultural heritage field, enhancing data sharing and accessibility. Two use cases were considered to validate the new SENNSE platform. In the first, the platform was tested in a controlled laboratory environment. In the second, a “standard” use case was modelled on a real cultural heritage context, the Biblioteca Bernardini in Lecce, Italy.

This document is organized as follows. The Introduction outlines the objectives of the H2IOSC project and the role of DIGILAB as a digital access platform for cultural heritage research, and explains the reasons for developing SENNSE as an IoT-based solution for real-time monitoring of cultural heritage assets.

The second section, Related Research, provides an overview of existing IoT platforms and data processing requirements, highlighting technological gaps and the need for scalable, interoperable solutions tailored to cultural heritage.

The third section, Materials and Methods, details the structured workflow adopted for the design and development of SENNSE.

The fourth section, DIGILAB Integration and Testing, focuses on integrating SENNSE into DIGILAB to ensure semantic interoperability and describes the validation activities carried out in both controlled laboratory environments and real-world scenarios, such as the Biblioteca Bernardini in Lecce.

Finally, the document concludes with the Results and Conclusions, summarizing the platform’s capabilities, its contribution to cultural heritage monitoring, and the outcomes of the testing phases. Additional sections include References and acknowledgments related to funding and author contributions.

2. Related Research

2.1. IoT Platforms and IoT Data Processing Requirements

In recent years, many platforms and devices have been developed around low-cost, open-source hardware, reflecting the need for innovative instruments that support environmental monitoring in the cultural heritage domain.

In [8], a low-cost device for the environmental monitoring of cultural buildings is presented. The device measures temperature, humidity, and dew point, and the acquired data is visualized on the ThingSpeak platform.

Another case of degradation monitoring is described in [9], featuring an autonomous and energy-efficient device whose data is visualized through Node-RED.

In [10], an alternative low-cost solution to prevent the degradation of cultural buildings is presented. This solution can acquire environmental parameters and automatically regulate them through actuators.

In [11], the authors present the findings of a monitoring campaign conducted in Colombia at both indoor and outdoor locations. Data was acquired with portable devices equipped with wireless communication systems. At the indoor sites, the monitoring revealed significant correlations between the measured data and both the presence of visitors and the methods employed for the conservation of artefacts.

In [12], an IoT monitoring system installed in Matera, Italy, demonstrates the potential of IoT technologies to facilitate the management of incidents at cultural sites.

In addition to the devices and sensor platforms proposed in the literature, a preliminary study of online IoT platforms was conducted. The platforms analyzed in detail are described below and summarized in Table 1.

AskSensors [13] is presented as an IoT platform designed to be the simplest application on the market. Sensors can be easily connected, connected devices can be managed in real time, and the collected data can be analyzed in the cloud. The platform supports interaction with any actuator, such as LEDs, relays, or motors: once the hardware is connected to the AskSensors IoT server, commands can be sent from anywhere in the world. The subscription plans include a free plan with many limitations and several paid plans that unlock additional features.

Akenza [14] is an IoT application platform for building products and services. It allows users to connect, control, and manage sensor nodes from a single platform. By providing easy and secure management of smart devices, connectivity, and data, Akenza enables the rapid introduction of innovative and smart solutions. The platform is suitable for organizations of all sizes, from startups to large enterprises, and its self-service and low-code functionalities aim to make it usable even by those without specific IT or technical knowledge.

ThingsBoard [15] is a stable and well-developed open-source IoT platform. Built on Java, it fully supports standard IoT protocols for device connectivity, including MQTT, CoAP, and HTTP, and currently supports three database options: SQL, NoSQL, and hybrid. It supports workflows across the project lifecycle, exposes REST APIs, and offers dozens of customizable dashboard widgets. ThingsBoard also offers various subscription plans, from the most economical “Maker” plan to the most complete “Enterprise” plan.

ThingSpeak [16] is an open-source IoT analytics platform that allows users to aggregate, visualize, and analyze real-time data streams in the cloud and provides instant visualizations of data published by connected devices. Because it can execute MATLAB code, the platform supports online analysis and processing of newly received data, and it is often used for prototyping and proofs of concept of IoT systems that require specific analyses. Its key features include easy device configuration using the most common IoT protocols. ThingSpeak stores all information sent to the cloud in a single central online location, and analysts can access their data both online and offline. The platform also allows users to import data from third-party websites, and companies use the collected data to develop predictive algorithms.

Thethings [17] is an enterprise IoT platform based in the Netherlands that enables the implementation of scalable and flexible IoT solutions. It offers the ability to quickly connect IoT products using multiple protocols, APIs to manage them, and dashboards to display incoming data. The platform is built around LoRaWAN technology, which allows networks of objects to be created without Wi-Fi or 3G/4G connections, that is, without requiring access codes or mobile subscription services. This ease of use lets ordinary users contribute by activating devices that extend the community network towards full city coverage. The first project started in Amsterdam and was completed in six weeks. Another key aspect of LoRaWAN devices (and of the project) is their low energy consumption. With Thethings, users can create custom IoT dashboards to monitor sensor behaviour and the status of monitored sites in real time; dashboards are highly customizable by adding and removing widgets. The platform does not offer a free subscription plan, but a demo of the service is available along with a series of paid plans to meet various needs.

While several IoT platforms are currently available, none fully meet the scalability and national/international interoperability requirements essential for safeguarding cultural heritage.

To complete the overview of IoT platforms, the requirements for processing IoT data must also be considered. These requirements are driven primarily by the massive scale, high velocity, and diversity of the data, which call for advanced processing, analysis, security, and efficiency measures. The key requirements for IoT data processing can be summarized as follows:

Managing Massive Scale and Velocity in IoT Data: IoT applications generate enormous amounts of data at very high speed, creating significant challenges for traditional data management systems. As the number of connected devices grows, infrastructures must evolve rapidly from medium-scale servers to fully equipped data centers. This scenario requires scalable solutions for storage and distributed processing to handle dynamic datasets efficiently [18,19]. Moreover, many IoT applications demand real-time responses, making low-latency analysis essential. These requirements push current infrastructures close to their limits, particularly in terms of computational capacity, energy consumption, quality assurance, and security management [20,21].

Data Filtering and Preprocessing: As IoT systems generate massive amounts of data, it becomes essential to reduce the volume before transmission and storage [22]. This is often achieved through edge filtering, where part of the processing occurs directly on the device. Dedicated mechanisms, such as System Efficiency Enhancements (SEEs) [23], are designed to preprocess and filter incoming data efficiently [24]. Additionally, data must undergo cleansing, transformation, and standardization to ensure compatibility and facilitate integration with analytical systems [25]. A common step in this process is staging and normalization, which prepares data for subsequent analysis by converting it into a consistent format.
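As a minimal illustration of edge filtering combined with normalization, the Python sketch below converts temperatures to a common unit and then forwards a reading only when it differs from the last forwarded value of the same sensor by at least a given threshold (a deadband). The `Reading` record, the Fahrenheit-to-Celsius rule, and the threshold are assumptions for illustration; this is not the SEE mechanism cited above.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Iterator, Optional, Tuple


@dataclass
class Reading:
    sensor_id: str
    timestamp: int   # Unix epoch seconds (assumed)
    quantity: str    # e.g. "temperature"
    value: float
    unit: str        # e.g. "degC" or "degF"


def normalize(r: Reading) -> Reading:
    """Standardize units so downstream systems see one format (hypothetical rule set)."""
    if r.quantity == "temperature" and r.unit == "degF":
        celsius = round((r.value - 32) * 5 / 9, 2)
        return Reading(r.sensor_id, r.timestamp, r.quantity, celsius, "degC")
    return r


def deadband_filter(readings: Iterable[Reading], threshold: float) -> Iterator[Reading]:
    """Yield a normalized reading only if it moved by >= threshold since the last one sent.

    The first reading of each (sensor, quantity) pair is always forwarded.
    """
    last_sent: Dict[Tuple[str, str], float] = {}
    for r in readings:
        r = normalize(r)
        key = (r.sensor_id, r.quantity)
        prev: Optional[float] = last_sent.get(key)
        if prev is None or abs(r.value - prev) >= threshold:
            last_sent[key] = r.value
            yield r
```

Filtering after normalization matters: comparing raw values in mixed units would make the threshold meaningless.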

Advanced Analysis and Real-Time Decision-Making: IoT applications require moving beyond traditional batch processing to enable just-in-time reactions to incoming data. Analysis must not only respond to immediate thresholds but also identify evolving patterns that could signal critical situations, supporting predictive planning and resource optimization. To achieve this, high-speed data analysis is essential, often involving numerous queries per second [26]. Furthermore, integrating Machine Learning (ML) and Deep Learning (DL) [27] models enhances the ability to detect complex patterns [28], correlations, and anomalies that conventional methods might overlook, enabling more accurate predictions and informed decision-making.
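To make the distinction between immediate thresholds and evolving patterns concrete, the hypothetical monitor below checks each new value against an absolute limit and, once a sliding window is full, estimates the trend with an ordinary least-squares slope. The limit, slope tolerance, and window length are illustrative assumptions; in practice ML/DL models would replace or complement the slope rule.

```python
from collections import deque
from typing import Deque, List


class WindowMonitor:
    """Hypothetical real-time rule: flag threshold breaches and sustained upward trends."""

    def __init__(self, limit: float, max_slope: float, window: int) -> None:
        if window < 2:
            raise ValueError("window must contain at least two samples")
        self.limit = limit          # absolute threshold, e.g. relative humidity in % (assumed)
        self.max_slope = max_slope  # tolerated change per sample (assumed)
        self.samples: Deque[float] = deque(maxlen=window)

    def _slope(self) -> float:
        """Ordinary least-squares slope of the window values against the sample index."""
        n = len(self.samples)
        mean_x = (n - 1) / 2
        mean_y = sum(self.samples) / n
        num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(self.samples))
        den = sum((x - mean_x) ** 2 for x in range(n))
        return num / den

    def update(self, value: float) -> List[str]:
        """Ingest one value and return the alerts it triggers."""
        self.samples.append(value)
        alerts: List[str] = []
        if value > self.limit:
            alerts.append("threshold")
        # Trend is only evaluated once the window is full, to avoid noisy early estimates.
        if len(self.samples) == self.samples.maxlen and self._slope() > self.max_slope:
            alerts.append("trend")
        return alerts
```

A steady rise can thus raise an alert well before the absolute limit is crossed, which is the kind of early signal the paragraph above refers to.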

Quality, Integrity, and Security Management: Ensuring the quality, integrity, and security of IoT data is fundamental for reliable decision-making and system performance [29]. Equally important is anomaly detection, which helps identify irregularities that could compromise data security or system dependability [30]. To protect data integrity and confidentiality, robust security measures must be implemented, including encryption during transmission and storage, authentication protocols, and intrusion detection systems [31].
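One common way to check message integrity end to end is a keyed hash. The sketch below assumes that each node shares a secret key with the server (key provisioning is not shown) and attaches an HMAC-SHA256 tag computed over a canonical JSON encoding of the payload. It complements, and does not replace, transport encryption such as TLS, and on its own it provides no replay protection.

```python
import hashlib
import hmac
import json
from typing import Any, Dict


def _canonical(payload: Dict[str, Any]) -> bytes:
    """Deterministic encoding so sender and receiver hash identical bytes."""
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_payload(payload: Dict[str, Any], key: bytes) -> Dict[str, Any]:
    """Wrap the payload with an HMAC-SHA256 tag computed with the node's key."""
    tag = hmac.new(key, _canonical(payload), hashlib.sha256).hexdigest()
    return {"payload": payload, "hmac": tag}


def verify_payload(message: Dict[str, Any], key: bytes) -> bool:
    """Recompute the tag and compare in constant time to avoid timing side channels."""
    expected = hmac.new(key, _canonical(message["payload"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["hmac"])
```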

Energy Efficiency in Data Transfer: Reducing energy consumption in IoT systems requires optimizing data transmission and minimizing unnecessary transfers. Techniques such as data aggregation, which consolidates multiple data points into unified packets, help decrease the frequency of transmissions and extend the operational life of energy-constrained devices [32]. By implementing efficient transfer mechanisms and data reduction strategies [33], IoT infrastructures can achieve significant energy savings while maintaining performance.
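Data aggregation can be sketched as follows: readings from one node are buffered and consolidated into a single packet, with shared fields such as the node identifier sent only once, so the radio transmits once per batch rather than once per sample. The packet layout and batch size are hypothetical and do not describe a SENNSE wire format.

```python
import json
from typing import List, Optional, Tuple


class Aggregator:
    """Consolidates one node's readings into a single packet per batch (hypothetical layout)."""

    def __init__(self, node_id: str, batch_size: int) -> None:
        if batch_size < 1:
            raise ValueError("batch_size must be >= 1")
        self.node_id = node_id
        self.batch_size = batch_size
        self.buffer: List[Tuple[int, float]] = []  # (timestamp, value) pairs
        self.packets_sent = 0

    def add(self, timestamp: int, value: float) -> Optional[bytes]:
        """Buffer a reading; return an encoded packet when the batch is full, else None."""
        self.buffer.append((timestamp, value))
        if len(self.buffer) >= self.batch_size:
            return self.flush()
        return None

    def flush(self) -> Optional[bytes]:
        """Encode whatever is buffered (e.g. before deep sleep); None if the buffer is empty."""
        if not self.buffer:
            return None
        packet = {
            "node": self.node_id,                # shared field, sent once per packet
            "ts": [t for t, _ in self.buffer],
            "v": [v for _, v in self.buffer],
        }
        self.buffer = []
        self.packets_sent += 1
        return json.dumps(packet, separators=(",", ":")).encode("utf-8")
```

With a batch size of 6, twelve samples cost two radio transmissions instead of twelve; the trade-off is added latency, so the batch size would be tuned to how quickly alerts must reach the server.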

In summary, IoT data processing requires flexible, scalable, and highly efficient architectures that can be tailored to these compute-intensive applications. Delivering high computational power relative to energy consumption is a central theme in addressing these demands.

2.2. DIGILAB Architecture, Data Model, and Hosting Datacenter

As reported before, DIGILAB is envisioned as the digital access platform for the Italian node of the European Research Infrastructure for Heritage Science (E-RIHS). Its primary objective is to integrate data, tools, and services within a unified digital ecosystem, thereby enhancing accessibility and interoperability while providing advanced capabilities for data processing, analysis, and correlation.

The DIGILAB platform focuses on managing information for the Heritage Science (HS) communities. Its architecture is designed to handle both ontologically characterized data (i.e., metadata based on an ontology) and raw data. DIGILAB has developed its own ontology, building upon the experience and conceptual foundations of CIDOC CRM [34]. This ontology introduces specific concepts tailored to the platform’s requirements, while maintaining semantic alignment through mappings to CIDOC CRM classes and properties. This approach ensures interoperability, consistency, and compliance with international standards, while extending the model to address the unique needs of cultural heritage research within DIGILAB.

The platform aims to provide advanced services to individual researchers, research groups, project teams, and the broader scientific HS community. The main DIGILAB services include:

Catalogue services: a comprehensive catalogue of datasets, services, and tools. All resources included in the catalogue must be publicly accessible, albeit with varying levels of access permissions. The platform provides a unified and coherent access point to the managed information, which may originate natively within DIGILAB (i.e., be contributed by platform users), be imported from external repositories, or be referenced through metadata harvesting techniques.

Knowledge discovery services: DIGILAB incorporates a unified, semantically referenced repository where all managed information converges. This singular repository facilitates the development of a shared knowledge graph accessible to all modules within the platform. Additionally, the platform provides web services to support data transformation, reporting, and other related functionalities. A brief overview of the primary functionalities to be supported is presented below.

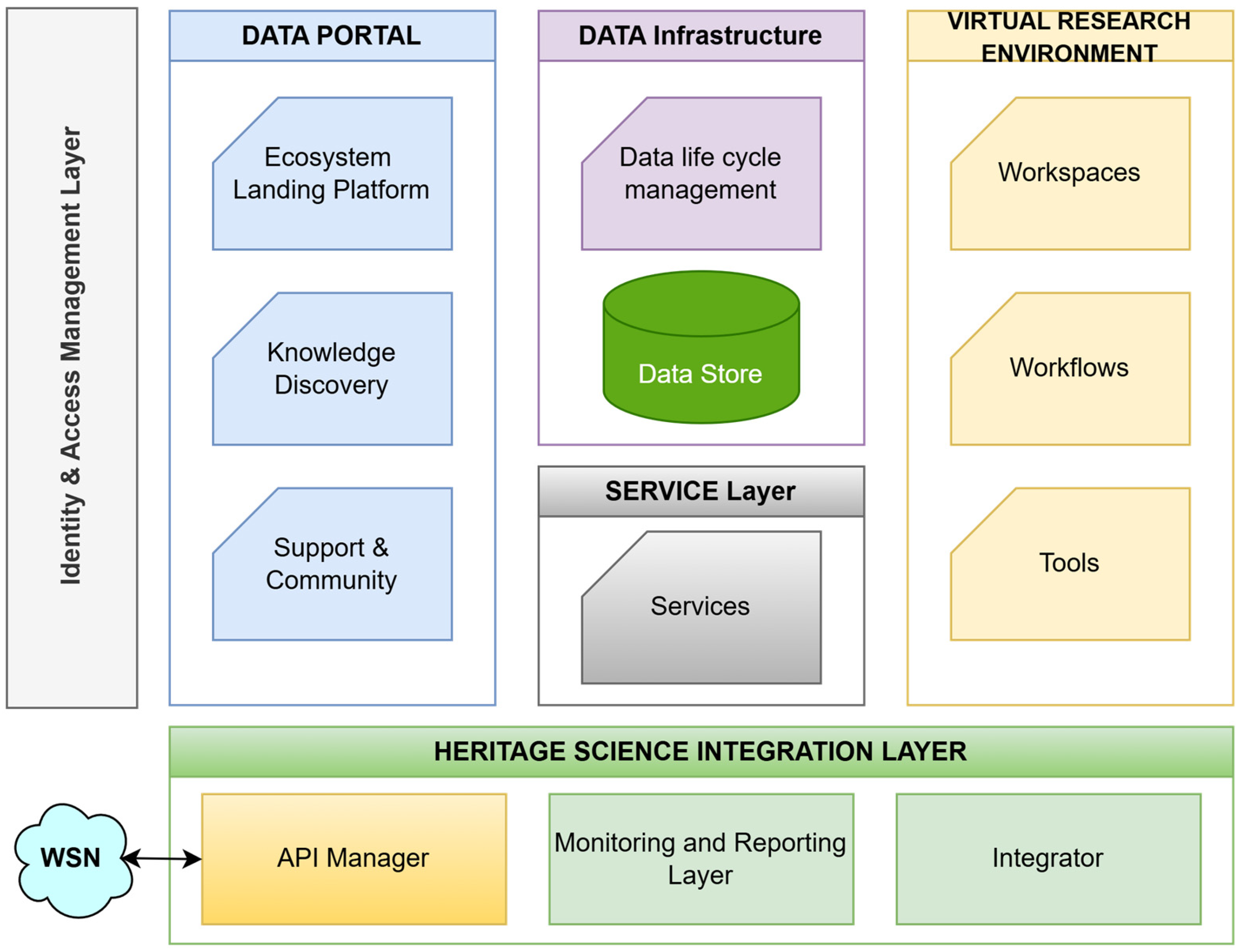

The architecture of the DIGILAB platform, illustrated in Figure 1, comprises three distinct modules: the DIGILAB Portal, the DIGILAB Data Infrastructure (DIGILAB-DI), and the DIGILAB Virtual Research Environment (VRE). DIGILAB-DI is the primary module, and its core objective is data management. Since the DIGILAB data model is structured around the DIGILAB ontology, DIGILAB-DI incorporates semantic management systems that facilitate data correlation and metadata enrichment. This module enables the creation of workflows for data manipulation and includes an ETL (Extract, Transform, Load) module for data import as well as a metadata harvesting module. Additionally, to support the Wireless Sensor Network, DIGILAB-DI integrates the DIGILAB IoT semantic framework (further detailed below), which allows for the import and metadata enrichment of raw sensor data. The DIGILAB Portal module provides the research community with access to DIGILAB’s services and includes a suite of support portals, such as training, helpdesk, and community-focused platforms. The DIGILAB VRE offers advanced data analysis services, enabling researchers to use metadata to correlate data and thereby uncover new domain-specific knowledge. The DIGILAB platform will be hosted within the E-RIHS node located in Lecce, Italy, and certain services will be managed collaboratively with other federated national nodes.
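The import path for raw sensor data can be pictured as a small ETL step. In the hypothetical sketch below, a raw JSON reading is extracted, transformed by attaching registered sensor metadata (including the URI of the monitored heritage object), and loaded into a store. The field names, the `SensorMetadata` record, and the example.org URI are illustrative assumptions, not the DIGILAB schema.

```python
import json
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass(frozen=True)
class SensorMetadata:
    """Registered description of a sensor channel (hypothetical fields)."""
    sensor_id: str
    observed_object: str  # URI of the monitored heritage object or room (assumed)
    quantity: str
    unit: str


def extract(raw: bytes) -> Dict[str, Any]:
    """Extract: decode a raw JSON message as received from the sensor layer."""
    return json.loads(raw.decode("utf-8"))


def transform(record: Dict[str, Any], registry: Dict[str, SensorMetadata]) -> Dict[str, Any]:
    """Transform: enrich the raw value with the sensor's registered metadata."""
    meta = registry[record["sensor_id"]]  # raises KeyError for unregistered sensors
    return {
        "sensor_id": meta.sensor_id,
        "observed_object": meta.observed_object,
        "quantity": meta.quantity,
        "value": float(record["value"]),
        "unit": meta.unit,
        "timestamp": int(record["timestamp"]),
    }


def load(enriched: Dict[str, Any], store: List[Dict[str, Any]]) -> None:
    """Load: append to an in-memory list standing in for the repository."""
    store.append(enriched)
```

Keeping the metadata in a registry rather than in every message keeps node payloads small, while the enriched record carries the link to the heritage object needed for semantic correlation.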

The hosting datacenter is a hyper-converged High-Performance Computing (HPC) system composed of 12 compute nodes that deliver different services based on CPU and GPU processing. It is complemented by several storage tiers for hot, warm, and cold data, which together allow the services and the entire data life cycle to be managed efficiently and flexibly.
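A simple age-based placement rule illustrates how hot, warm, and cold data might be assigned to storage tiers. The thresholds below are assumptions chosen for the example; the actual DIGILAB lifecycle policies are not described here.

```python
from datetime import datetime, timedelta

# Hypothetical thresholds, chosen only for illustration.
HOT_MAX_AGE = timedelta(days=7)     # recent, frequently accessed data
WARM_MAX_AGE = timedelta(days=365)  # occasionally accessed data


def storage_tier(last_access: datetime, now: datetime) -> str:
    """Return 'hot', 'warm', or 'cold' based on the time elapsed since last access."""
    age = now - last_access
    if age <= HOT_MAX_AGE:
        return "hot"
    if age <= WARM_MAX_AGE:
        return "warm"
    return "cold"
```

Real policies often combine age with access frequency and dataset size; a single age criterion is used here only to keep the idea visible.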

The main goal of Hyper-Converged Infrastructure (HCI) is to bring ease of deployment and management to high-performance computing environments, which traditionally require expert-level configuration and tuning. HCI is built on four main principles. First, it consolidates resources through software-defined infrastructure, integrating compute, storage, and networking into a single system managed centrally by software rather than by specialized hardware. Second, it ensures scalability through automation and orchestration, allowing organizations to expand capacity easily by adding nodes and automating tasks such as provisioning, backup, and disaster recovery. Third, it emphasizes resilience and high availability, incorporating redundancy and data replication to maintain operations even when components fail. Finally, it offers flexibility and agility, enabling organizations to adapt quickly to changing workloads, whether virtualized environments, cloud-native applications, or data-intensive analytics.

The available hardware comprises the 12 HCI nodes, each equipped with two 32-core Intel Xeon Gold processors, 1 TB of RAM, four 25 GbE optical network ports, 25 TB of Tier 1 storage on NVMe SSDs, and NVIDIA A40 GPUs. The Tier 2 storage system comprises 4 storage array nodes of 320 TB each and will be complemented by a cloud backup (Tier 3) that stores cold data in other federated data centres participating in the distributed infrastructure.
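Taking the per-node figures above at face value, the aggregate raw resources of the cluster work out as follows (before any redundancy or replication overhead; the number of GPUs per node is not stated, so GPUs are omitted):

```latex
\begin{aligned}
\text{CPU cores} &= 12 \times 2 \times 32 = 768\\
\text{RAM} &= 12 \times 1\ \text{TB} = 12\ \text{TB}\\
\text{Tier 1 (NVMe)} &= 12 \times 25\ \text{TB} = 300\ \text{TB}\\
\text{Tier 2 (storage arrays)} &= 4 \times 320\ \text{TB} = 1280\ \text{TB}\\
\text{Node network ports} &= 12 \times 4 \times 25\ \text{Gb/s} = 1200\ \text{Gb/s}
\end{aligned}
```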

The internal networking infrastructure relies on a high-speed spine-leaf architecture to optimize east–west traffic and enable fast communication between cluster nodes, ensuring scalability for Software-Defined Infrastructure (SDI). Security is a priority, supported by monitoring tools and active response systems, with additional measures for sensor connectivity and data protection. All equipment is housed in a modular, self-contained autonomous datacenter, designed for independence and resilience. This facility integrates power, cooling, networking, storage, and computing within a single unit, guaranteeing continuous operation even in isolated environments or during external failures.

2.3. DIGILAB Data Model

As previously stated, DIGILAB and, consequently, SENNSE make extensive use of ontologies to model domain knowledge. Because expert knowledge is largely implicit and difficult to articulate directly, acquiring it is a complex process of making tacit expertise explicit and structured. The modelling approach is therefore inherently iterative and approximate, requiring continuous refinement and validation to remain relevant and aligned with reality.

In the Cultural Heritage domain, CIDOC CRM [34] serves as the reference ontology, providing a conceptual framework that integrates theory and practice to describe and organize heritage information. In September 2000, CIDOC CRM was officially accepted as a work item by the ISO Technical Interoperability Committee, and it became an ISO standard (ISO 21127) in 2006. As noted above, DIGILAB has established its own ontology grounded in the principles of CIDOC CRM, incorporating platform-specific concepts while preserving semantic consistency through mappings to CIDOC CRM classes and properties.

The DIGILAB ontology was modelled using the Web Ontology Language (OWL), which provides advanced expressiveness, including property constraints and complex class definitions. OWL, grounded in computational logic, enables reasoning tasks such as consistency checking and inference, and supports publishing interoperable ontologies. OWL is part of the W3C’s Semantic Web technology stack, which includes, among others, RDF (Resource Description Framework), RDFS (Resource Description Framework Schema), and SPARQL (SPARQL Protocol and RDF Query Language).
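To illustrate the kind of inference mentioned above, the toy sketch below computes the transitive closure of `rdfs:subClassOf` (RDFS entailment rule rdfs11) and propagates `rdf:type` along it (rule rdfs9), using plain Python tuples as triples. The CIDOC CRM chain E22 Human-Made Object, E19 Physical Object, E18 Physical Thing follows the CRM class hierarchy, while `dl:Artefact` and `ex:manuscript42` are hypothetical names; a real deployment would rely on an OWL reasoner, such as those available in Protégé, rather than on this sketch.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

Triple = Tuple[str, str, str]
SUBCLASS_OF = "rdfs:subClassOf"
TYPE = "rdf:type"


def superclass_closure(triples: Iterable[Triple]) -> Dict[str, Set[str]]:
    """All direct and indirect superclasses of each class (RDFS rule rdfs11)."""
    direct: Dict[str, Set[str]] = defaultdict(set)
    for s, p, o in triples:
        if p == SUBCLASS_OF:
            direct[s].add(o)
    closure: Dict[str, Set[str]] = {}
    for cls in list(direct):
        seen: Set[str] = set()
        stack = list(direct[cls])
        while stack:  # depth-first walk up the hierarchy; `seen` also guards against cycles
            sup = stack.pop()
            if sup not in seen:
                seen.add(sup)
                stack.extend(direct.get(sup, ()))
        closure[cls] = seen
    return closure


def infer_types(triples: Iterable[Triple], closure: Dict[str, Set[str]]) -> Set[Triple]:
    """New rdf:type statements implied by the class hierarchy (RDFS rule rdfs9)."""
    inferred: Set[Triple] = set()
    for s, p, o in triples:
        if p == TYPE:
            for sup in closure.get(o, ()):
                inferred.add((s, TYPE, sup))
    return inferred
```

This is why a DIGILAB-specific class mapped onto a CRM class remains queryable with CRM terms: instances inherit the CRM types through the hierarchy.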

To formalize the DIGILAB ontology, we used DRAW.IO (v29.3.6) to model the concepts, object properties, and data properties in a graphical notation, and Protégé (v5.6.5) to refine this skeleton into the final ontology.

DRAW.IO facilitates the visual design of ontologies through an intuitive diagramming interface, and the elements modelled there are then formalized in a semantic language within Protégé. Together, these tools support collaborative development and advanced ontology modelling.

Protégé is an open-source platform widely used for ontology development, offering comprehensive features for building, editing, and refining ontologies in semantic languages such as OWL. It enables efficient management of classes, properties, and relationships, ensuring logical consistency and supporting the enhancement of ontological structures and semantics.

3. Materials and Methods

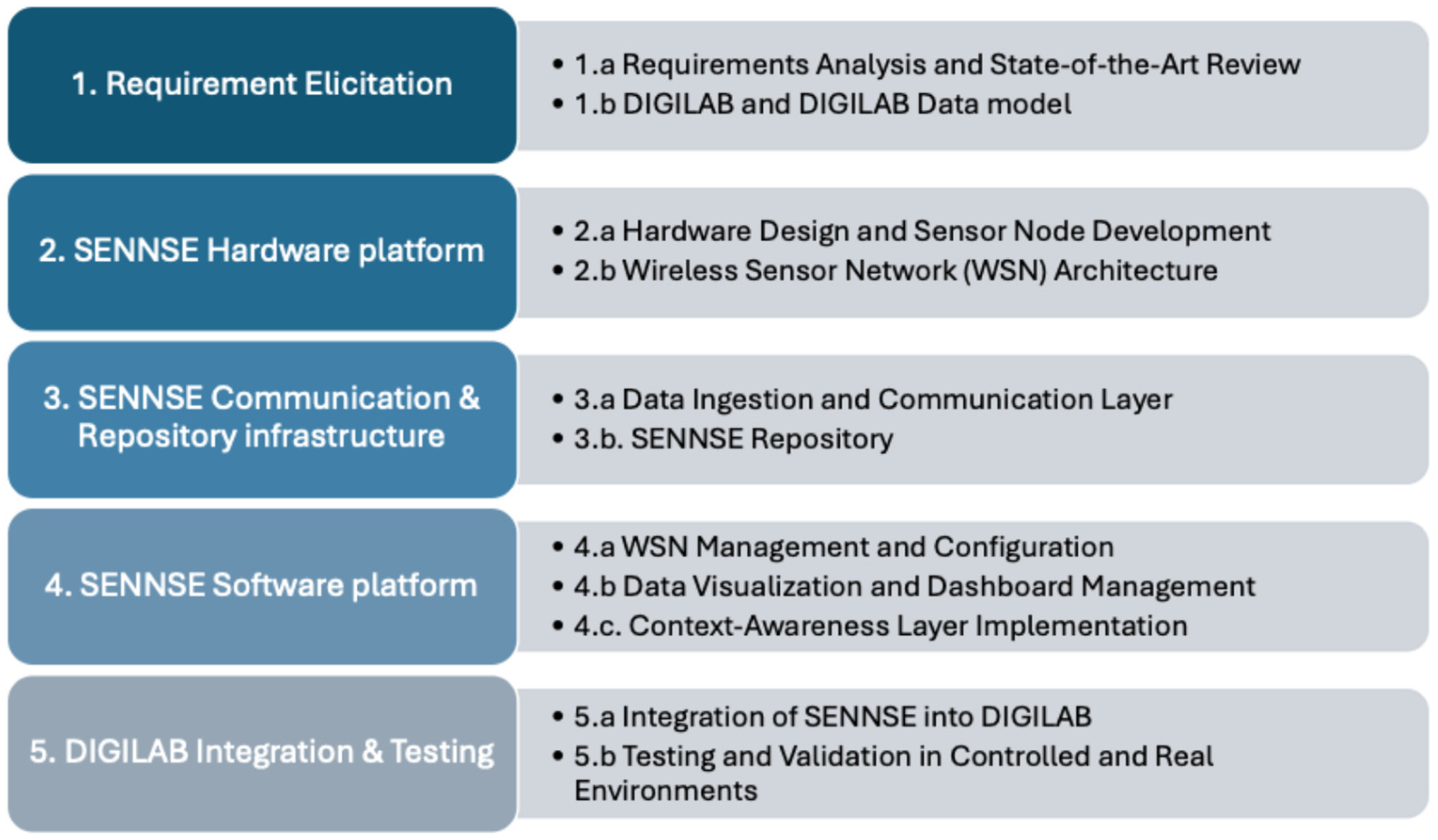

The design and development of the SENNSE platform followed a structured and iterative workflow aimed at creating a robust, scalable, and interoperable solution for cultural heritage monitoring. Each phase was conceived to address specific technical and functional requirements, ensuring compliance with FAIR principles and seamless integration with the DIGILAB ecosystem. The process began with a thorough requirements analysis and State-of-the-Art review, including an in-depth study of DIGILAB’s architecture and semantic data model to guarantee interoperability. Subsequently, the hardware layer was developed, focusing on the design of flexible sensor nodes and a generic WSN architecture capable of supporting heterogeneous technologies. Building on this foundation, the software layer was implemented to manage data ingestion and communication through an MQTT-based system, complemented by a secure repository with multi-tenancy features. Advanced modules for WSN management, dashboard customization, and a context-aware visualization layer were then introduced to enhance usability and adaptability.

Finally, SENNSE was integrated into DIGILAB to enable semantic annotation and linkage of IoT datasets to cultural heritage objects, creating a unified and semantically enriched knowledge ecosystem. The platform was subsequently validated through controlled laboratory tests and a real-world deployment at the Biblioteca Bernardini in Lecce, confirming its reliability, scalability, and user-centric design.

Figure 2 reports a schema of the tasks carried out, which are described in detail below:

1. Requirement Elicitation

Requirement Analysis and State-of-the-Art Review. The process started with an extensive review of existing IoT platforms and cultural heritage monitoring solutions to identify technological gaps and define functional requirements. This step ensured that SENNSE would address interoperability, scalability, and FAIR data principles.

DIGILAB and DIGILAB Data model. As previously discussed, the design of SENNSE required an in-depth analysis of the DIGILAB architecture and its underlying data model to ensure full compatibility and seamless integration. This preliminary study was essential to align SENNSE’s structure with DIGILAB’s semantic framework, enabling interoperability across heterogeneous data sources and services.

2. SENNSE Hardware Platform

Hardware Design and Sensor Node Development. To ensure adaptability and long-term usability, the hardware board of the sensor node, designed around the ESP32 (Espressif Systems (Shanghai) Co., Ltd., Shanghai, China), was engineered as a highly flexible component capable of integrating new sensor types and supporting emerging transmission technologies with minimal effort. This modular architecture enables researchers to expand monitoring capabilities without replacing the entire system, reducing costs and improving scalability. Furthermore, the board includes multiple connectors and configurable interfaces to accommodate diverse sensing devices and communication standards, ensuring a future-proof design. Enclosures were modelled using CAD software (FreeCAD 0.21) and produced via 3D printing, adopting a parametric approach that facilitates rapid customization and iterative upgrades.
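The plug-in idea behind the modular board can be sketched in Python for readability (the firmware language is not stated here): drivers register under a sensor-type name, and a node instantiates whichever drivers its configuration lists, so adding a new sensor type means adding a driver rather than changing the core. All type names and the fake drivers are hypothetical.

```python
from typing import Callable, Dict, List, Type

# Hypothetical registry: sensor type name -> driver class.
DRIVERS: Dict[str, Type["SensorDriver"]] = {}


def register(sensor_type: str) -> Callable[[type], type]:
    """Class decorator that adds a driver to the registry under `sensor_type`."""
    def decorator(cls: type) -> type:
        DRIVERS[sensor_type] = cls
        return cls
    return decorator


class SensorDriver:
    """Common interface every driver implements."""

    def __init__(self, config: dict) -> None:
        self.config = config  # e.g. bus, address, calibration (assumed keys)

    def read(self) -> Dict[str, float]:
        raise NotImplementedError


@register("temp_humidity")
class FakeTempHumidity(SensorDriver):
    """Stand-in returning fixed values; a real driver would query the sensor bus."""

    def read(self) -> Dict[str, float]:
        return {"temperature": 21.0, "humidity": 48.0}


@register("light")
class FakeLight(SensorDriver):
    def read(self) -> Dict[str, float]:
        return {"illuminance": 50.0}


def build_node(sensor_configs: List[dict]) -> List[SensorDriver]:
    """Instantiate the drivers declared in a node configuration."""
    drivers: List[SensorDriver] = []
    for cfg in sensor_configs:
        driver_cls = DRIVERS.get(cfg["type"])
        if driver_cls is None:
            raise ValueError(f"no driver registered for sensor type {cfg['type']!r}")
        drivers.append(driver_cls(cfg))
    return drivers
```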

Wireless Sensor Network (WSN) Architecture. For monitoring purposes, it was necessary to design a generic and highly adaptable WSN architecture capable of supporting the flexibility of sensor nodes and accommodating heterogeneous technologies. The proposed WSN integrates multi-protocol communication, including Wi-Fi and Bluetooth Low Energy, to ensure compatibility across both indoor and outdoor environments. The architecture was conceived to be modular and scalable, allowing seamless addition of new nodes and the integration of emerging transmission standards without requiring structural changes. Each node can incorporate different sensor types thanks to a configurable hardware interface, ensuring adaptability to diverse cultural heritage monitoring scenarios.

At the conclusion of these two activities, the development process produced two key outcomes: the physical hardware board for the sensor node and the abstract model of the Wireless Sensor Network (WSN).

The abstract WSN model was defined to represent the logical structure and configuration parameters of the network. This model will provide the foundation for the configuration tool “WSN Management and Configuration design”, allowing dynamic management of nodes, communication protocols, and operational settings.
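A minimal sketch of such an abstract WSN model follows, assuming that a node is described by an identifier, a transport (Wi-Fi or BLE), its sensor types, and a sampling interval. The rule that BLE nodes relay through a Wi-Fi gateway node is an illustrative assumption, as are all names.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Optional


class Transport(Enum):
    WIFI = "wifi"
    BLE = "ble"


@dataclass
class Node:
    node_id: str
    transport: Transport
    sensors: List[str]
    sampling_interval_s: int = 300
    gateway: Optional[str] = None  # BLE nodes relay through a Wi-Fi gateway (assumption)


@dataclass
class WSN:
    site: str
    nodes: Dict[str, Node] = field(default_factory=dict)

    def add(self, node: Node) -> None:
        """Register a node, rejecting duplicate identifiers."""
        if node.node_id in self.nodes:
            raise ValueError(f"duplicate node id {node.node_id!r}")
        self.nodes[node.node_id] = node

    def validate(self) -> List[str]:
        """Return human-readable configuration errors (empty list if valid)."""
        errors: List[str] = []
        for n in self.nodes.values():
            if n.sampling_interval_s <= 0:
                errors.append(f"{n.node_id}: sampling interval must be positive")
            if n.transport is Transport.BLE:
                gw = self.nodes.get(n.gateway) if n.gateway else None
                if gw is None or gw.transport is not Transport.WIFI:
                    errors.append(f"{n.node_id}: BLE node needs a Wi-Fi gateway")
        return errors
```

Validating the model before pushing settings to devices lets a configuration tool reject inconsistent networks without touching the hardware.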

3. SENNSE Communication and Repository

Data Ingestion and Communication Layer. The ingestion layer was developed as a custom solution tailored to cultural heritage needs. The MQTT protocol was chosen for its lightweight architecture and its ability to support secure, low-latency communication between sensor nodes and the SENNSE server. This guarantees efficient data transfer even in large-scale deployments with thousands of sensors. To enhance flexibility, the ingestion layer integrates modular gateways capable of managing heterogeneous devices and communication standards. These gateways include customizable connectors, allowing the incorporation of non-standard protocols and emerging technologies without redesigning the entire system. This modular approach ensures that SENNSE can adapt to evolving IoT ecosystems and maintain compatibility with diverse sensor configurations. Beyond simple data transfer, the ingestion layer also implements data validation and buffering mechanisms, ensuring resilience during connectivity interruptions and preserving data integrity. Once collected, raw sensor data is routed to the SENNSE repository for storage and subsequent processing, forming the backbone of the visualization and analytics pipeline.
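The validation step can be sketched as follows. `topic_matches` implements MQTT topic-filter semantics (`+` matches exactly one level, a trailing `#` matches the parent level and everything below it, and filters starting with a wildcard do not match `$`-prefixed topics). The `sennse/<tenant>/<site>/<node>/<quantity>` topic scheme and the plausibility ranges are hypothetical. In a deployment, an MQTT client library such as Eclipse Paho would deliver `(topic, payload)` pairs to `parse_message`.

```python
import json
from typing import Optional, Tuple


def topic_matches(topic_filter: str, topic: str) -> bool:
    """MQTT topic-filter matching: '+' matches one level, a final '#' matches the rest."""
    if topic.startswith("$") and topic_filter[:1] in ("+", "#"):
        return False
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    for i, f in enumerate(f_levels):
        if f == "#":
            return True  # matches the parent level and any number of levels below it
        if i >= len(t_levels):
            return False
        if f != "+" and f != t_levels[i]:
            return False
    return len(f_levels) == len(t_levels)


# Hypothetical topic scheme and plausibility ranges (not SENNSE settings).
SUBSCRIPTION = "sennse/+/+/+/+"
RANGES = {"temperature": (-40.0, 85.0), "humidity": (0.0, 100.0)}


def parse_message(topic: str, payload: bytes) -> Optional[Tuple[str, str, str, str, float]]:
    """Validate topic and payload; return (tenant, site, node, quantity, value) or None."""
    if not topic_matches(SUBSCRIPTION, topic):
        return None
    _, tenant, site, node, quantity = topic.split("/")
    try:
        value = float(json.loads(payload)["value"])
    except (ValueError, KeyError, TypeError):  # malformed JSON, missing field, wrong type
        return None
    low, high = RANGES.get(quantity, (float("-inf"), float("inf")))
    if not low <= value <= high:  # also rejects NaN
        return None
    return tenant, site, node, quantity, value
```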

SENNSE Repository. The SENNSE server hosts a comprehensive database that aggregates raw sensor data collected through the MQTT connector. This repository acts as the core of the data pipeline: it stores unprocessed environmental readings alongside configuration settings, user profiles, and access control parameters. Once acquired, the raw data is processed and made available to the visualization layer, enabling real-time dashboards and interactive analytics. By adopting a multi-tenancy architecture, the repository ensures secure data segregation, allowing each user or research group to access only its dedicated IoT data space while maintaining centralized management and robust security controls.

At the end of these two activities, the software layer of SENNSE was designed and developed to ensure efficient data acquisition and secure management. This layer integrates an MQTT broker that acts as the primary interface for collecting raw data streams from distributed WSN nodes, guaranteeing reliable ingestion even in large-scale deployments. To complement this, the SENNSE Repository was implemented. It employs a robust database for storing raw sensor readings along with configuration parameters, user profiles, and access control settings. To prevent data loss during peak loads or connectivity interruptions, the repository incorporates a queue-based buffering system, ensuring that incoming data is processed in a controlled manner before being routed to the visualization layer.

4. SENNSE Software platform

The SENNSE software was organized into two main modules: WSN Management and Configuration, and Data Visualization and Dashboard Management.

WSN Management and Configuration. This module is designed to handle the integration, configuration, and control of multiple Wireless Sensor Networks (WSNs) deployed across different sites. It provides advanced functionalities for device registration, protocol management and remote configuration of sensor parameters. Moreover, it supports over-the-air (OTA) firmware updates, enabling continuous maintenance without physical intervention. To safeguard sensitive cultural heritage data, security is enforced through role-based access control, encrypted communication channels, and strict authentication mechanisms, ensuring robust protection and compliance with data governance standards.

Data Visualization and Dashboard Management. This module is dedicated to transforming raw sensor data into meaningful insights through advanced visualization techniques. It enables real-time monitoring via fully customizable dashboards, allowing each user to design tailored views that reflect specific research objectives and operational needs. Users can select widgets, configure indicators, and organize panels to create personalized interfaces that simplify data interpretation. To further enhance usability, SENNSE will integrate a context-aware layer that dynamically adapts the visualization based on user roles, research context, and data relevance. This intelligent mechanism will ensure that information is presented in the most appropriate format, improving navigation and decision-making for interdisciplinary stakeholders.

Context-Awareness Layer Implementation. A dedicated layer has been designed to dynamically adapt data visualization based on user roles and research context. Similar to the other layers, this component is built upon an ontological model, carefully engineered according to best practices in ontology design. It categorizes information and maps it to the most appropriate representation, thereby enhancing usability and improving the overall user experience for interdisciplinary stakeholders.

The developed WSN Management module enables integration and remote configuration of multiple sensor networks, supporting OTA updates and secure communication. The Dashboard Management module provides a dashboard design environment and real-time monitoring across the designed dashboards. A context-aware layer was planned to adapt visualizations dynamically based on user roles and research context, improving usability and visualization. Together, these components deliver a flexible, secure, and user-centric system for cultural heritage monitoring.

5. DIGILAB Integration and Testing

a. Integration of SENNSE into DIGILAB. At the end of the development process, a key activity focused on integrating SENNSE with the DIGILAB platform to ensure semantic interoperability and advanced data management. Within SENNSE, researchers can identify subsets of IoT data that represent critical conditions for monitored cultural heritage assets. These subsets are annotated and linked to the corresponding object, creating a semantic relationship that enriches the knowledge base.

By harmonizing the SENNSE and DIGILAB data models, the two platforms form a unified, semantically enriched ecosystem that enables researchers to explore, correlate, and visualize data efficiently, fostering interoperability and knowledge sharing across the cultural heritage domain.

b. Testing and Validation in Controlled and Real Environments. The platform was validated through two use cases: a controlled laboratory setup and a real-world deployment at the Biblioteca Bernardini in Lecce. These tests assessed data accuracy, system stability, and dashboard usability, informing iterative improvements.

The integration of SENNSE into DIGILAB successfully established a unified and semantically enriched ecosystem for cultural heritage monitoring. Following integration, the platform was validated through two complementary use cases: a controlled laboratory environment and a real-world deployment at the Biblioteca Bernardini in Lecce. These tests confirmed the accuracy of data acquisition, the stability of the system, and the usability of dashboards, providing essential feedback for iterative improvements. Together, these results demonstrate SENNSE’s capability to deliver reliable, scalable, and user-centric solutions for cultural heritage preservation.

Based on the activities described above, the following section provides a detailed overview of the SENNSE modules and the testing activities carried out in real-world environments.

3.1. SENNSE: The IoT Core of DIGILAB for Cultural Heritage Sensing and Monitoring

SENNSE is implemented as an external system integrated within the DIGILAB platform, acting as the dedicated system for managing Wireless Sensor Networks (WSNs) deployed across cultural heritage sites. Designed to ensure interoperability and scalability, SENNSE provides a unified environment where heterogeneous sensor nodes can be configured, monitored, and controlled seamlessly. The system leverages adaptable and reconfigurable WSNs equipped with specialized sensors for cultural heritage applications, enabling the acquisition of critical environmental and structural data from geographically distributed sites. Through its integration with DIGILAB, SENNSE not only supports real-time data collection but also ensures semantic alignment with DIGILAB’s knowledge base, allowing IoT datasets to be annotated and linked to cultural objects. This approach creates a cohesive ecosystem that combines advanced monitoring capabilities with semantic interoperability, facilitating data analysis, visualization, and knowledge sharing across the heritage science community.

The SENNSE sensors capture key parameters such as temperature, humidity, light exposure, UV radiation, air quality, vibrations, and agents of deterioration. To facilitate seamless communication between these sensors and the DIGILAB infrastructure, a dedicated hardware-software middleware layer is required.

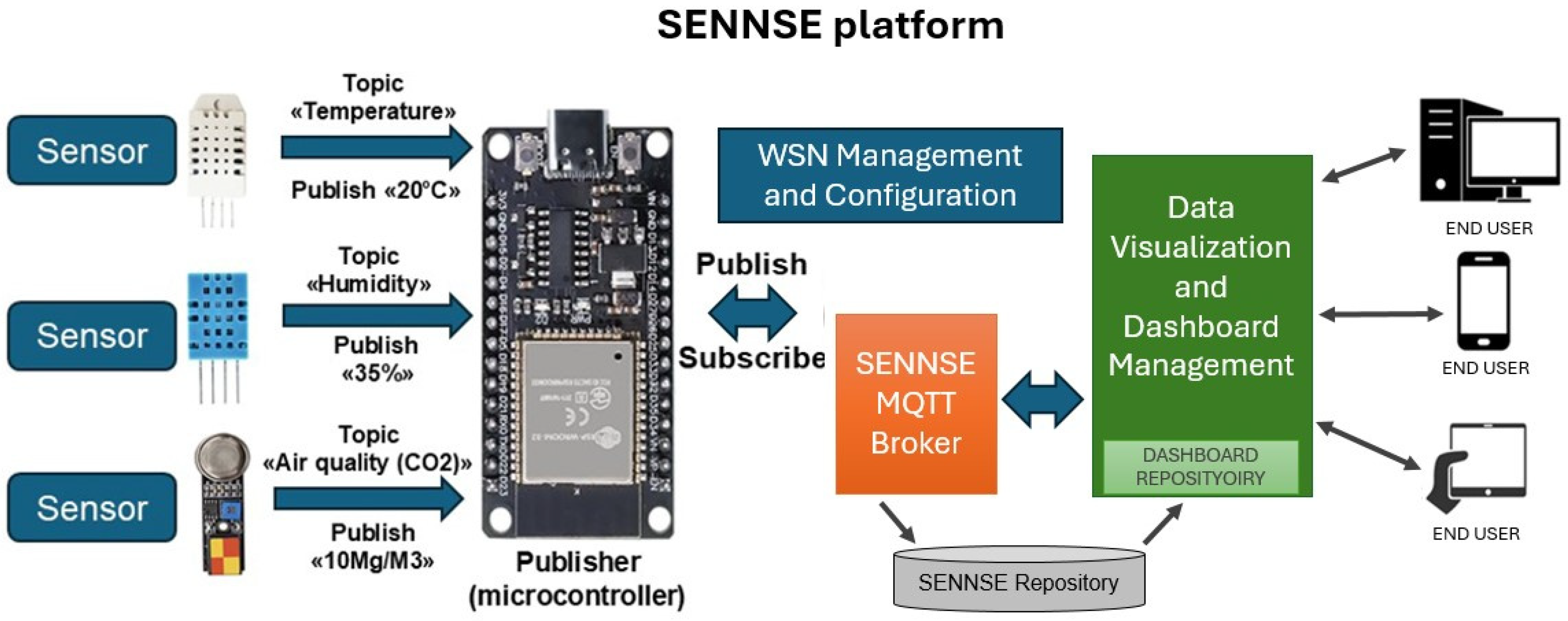

Figure 3 provides an overview of the large-scale WSN architecture, illustrating both its physical and software components. Each sensor node is responsible for collecting and preprocessing data locally before transmitting it to the MQTT broker via appropriate wireless communication protocols.

At the core of this architecture lies the MQTT broker, which serves as the bridge between the physical sensor network and the software ecosystem. The feasibility of deploying a cloud-hosted MQTT-based IoT architecture has been explored and is under evaluation. MQTT was chosen for its lightweight messaging capabilities, enabling efficient communication between sensor nodes and the application layer. The selection process considered MQTT’s ability to handle large data volumes, meet latency requirements, and ensure security. On the software side, each sensor node outputs data in JSON format, encapsulated within MQTT messages. These messages can either be stored in a database or processed directly by the software interface. The data transmission rate from sensors varies depending on the monitored parameters, ranging from real-time updates every few seconds to periodic transmissions occurring every few hours.
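As an illustration of the JSON-over-MQTT encoding described above, the sketch below builds the topic and payload that a node might publish. The topic hierarchy `sennse/<site>/<node>/data` and the payload fields are hypothetical. The resulting pair could be passed to any MQTT client library, for example as `client.publish(topic, payload, qos=1)` in paho-mqtt.

```python
import json
import time
from typing import Optional, Tuple


def make_message(site: str, node_id: str, readings: dict,
                 ts: Optional[int] = None) -> Tuple[str, bytes]:
    """Build the (topic, payload) pair a node would publish."""
    topic = f"sennse/{site}/{node_id}/data"            # hypothetical topic scheme
    payload = {
        "node_id": node_id,
        "ts": int(time.time()) if ts is None else ts,  # Unix timestamp in seconds
        "readings": readings,
    }
    # Compact separators keep the message small on constrained links.
    return topic, json.dumps(payload, separators=(",", ":")).encode("utf-8")


def parse_topic(topic: str) -> Tuple[str, str]:
    """Recover (site, node_id) from a topic on the server side."""
    prefix, site, node_id, kind = topic.split("/")
    if prefix != "sennse" or kind != "data":
        raise ValueError(f"unexpected topic: {topic}")
    return site, node_id
```

Encoding the site and node in the topic lets the broker route or filter messages with wildcard subscriptions, while the payload stays self-describing for storage.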

As depicted in Figure 3, the architecture is structured around three main components:

SENNSE MQTT Broker—This core server hosts the MQTT broker, which manages communication between sensor nodes. It connects to a main database via a dedicated “connector,” ensuring seamless data transmission from sensors while also relaying configuration updates back to the nodes. Operating bidirectionally, this connector synchronizes system variables between the MQTT broker and individual sensors. The SENNSE MQTT infrastructure is designed to support at least 100,000 sensor connections nationwide, with each sensor transmitting messages of up to 1.5 KB.

SENNSE Repository—The central server incorporates a database that aggregates all sensor data received through the MQTT connector. In addition to storing environmental readings, it maintains configuration settings, user information, and access control parameters to regulate system usage.

WSN Management and Configuration—The backend software manages system operations, including data backups, update scheduling, database synchronization, and other essential configurations. This ensures the proper functioning of server-side processes and their integration with the front-end interface.
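The sizing target stated for the SENNSE MQTT Broker (at least 100,000 sensors, with messages of up to 1.5 KB) can be turned into a rough estimate of the aggregate inbound rate at the broker. The sketch below assumes that every sensor publishes a full-size message at a fixed interval, and it ignores MQTT and TCP/IP protocol overhead. The numbers are therefore only an order-of-magnitude guide.

```python
def aggregate_rate(sensors: int, msg_bytes: int, interval_s: float) -> float:
    """Average inbound payload rate at the broker, in bytes per second."""
    return sensors * msg_bytes / interval_s


SENSORS = 100_000   # minimum number of connections the broker must support
MSG_BYTES = 1_500   # 1.5 KB per message, taken as 1,500 bytes (assumption)

# Transmission intervals spanning the range mentioned in the text (assumed values).
for label, interval_s in [("every 5 s", 5), ("every minute", 60), ("hourly", 3600)]:
    rate = aggregate_rate(SENSORS, MSG_BYTES, interval_s)
    msgs = SENSORS / interval_s
    print(f"{label:>12}: {msgs:10.1f} msg/s, {rate / 1e6:8.3f} MB/s")
```

If every sensor transmitted every 5 s, the broker would receive 20,000 messages/s, i.e. 30 MB/s of payload. With hourly transmissions, the rate drops to about 42 kB/s. Because transmission rates vary with the monitored parameters, the actual load lies between these bounds.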

The WSN system offers various user-level functionalities, allowing each sensor node to be linked to a specific cultural artefact or site. Users can analyze data, configure sensors, set monitoring intervals, define alert thresholds, and manage other parameters. The platform also incorporates robust access control mechanisms, enabling different user profiles with varying levels of permissions.

The user interface will be fully customizable, displaying sensor data in an intuitive graphical format. A map-based visualization will allow users to navigate monitored sites and access sensor-specific data through interactive icons. Additionally, APIs will be provided for integration with artificial intelligence modules to enhance data analysis.

To address connectivity limitations, a backup function will be implemented to store local sensor data when network access is restricted or unavailable. Furthermore, the system will support both automatic and manual synchronization of local sensor data with the central database when connectivity is restored.
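The local backup and synchronization behaviour described above corresponds to a store-and-forward buffer. The Python sketch below keeps readings in a bounded queue while the link is down and flushes them in order once the link is restored. The `send` callback, the capacity and the drop-oldest policy are assumptions for illustration, because the text does not specify how the local storage on the node is organized.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Buffer readings locally while offline; flush them in order when the link returns."""

    def __init__(self, send: Callable[[dict], bool], capacity: int = 1000):
        self.send = send  # returns True if the central server accepted the reading
        # Bounded buffer: when full, the oldest reading is discarded (assumed policy).
        self.backlog: Deque[dict] = deque(maxlen=capacity)

    def submit(self, reading: dict, online: bool) -> None:
        self.backlog.append(reading)
        if online:
            self.sync()

    def sync(self) -> int:
        """Send buffered readings oldest-first; usable for automatic or manual sync."""
        sent = 0
        while self.backlog:
            if not self.send(self.backlog[0]):
                break          # link still down: keep the remaining readings
            self.backlog.popleft()
            sent += 1
        return sent
```

New readings are always appended before syncing. This way, data reach the central database in acquisition order, even immediately after a reconnection.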

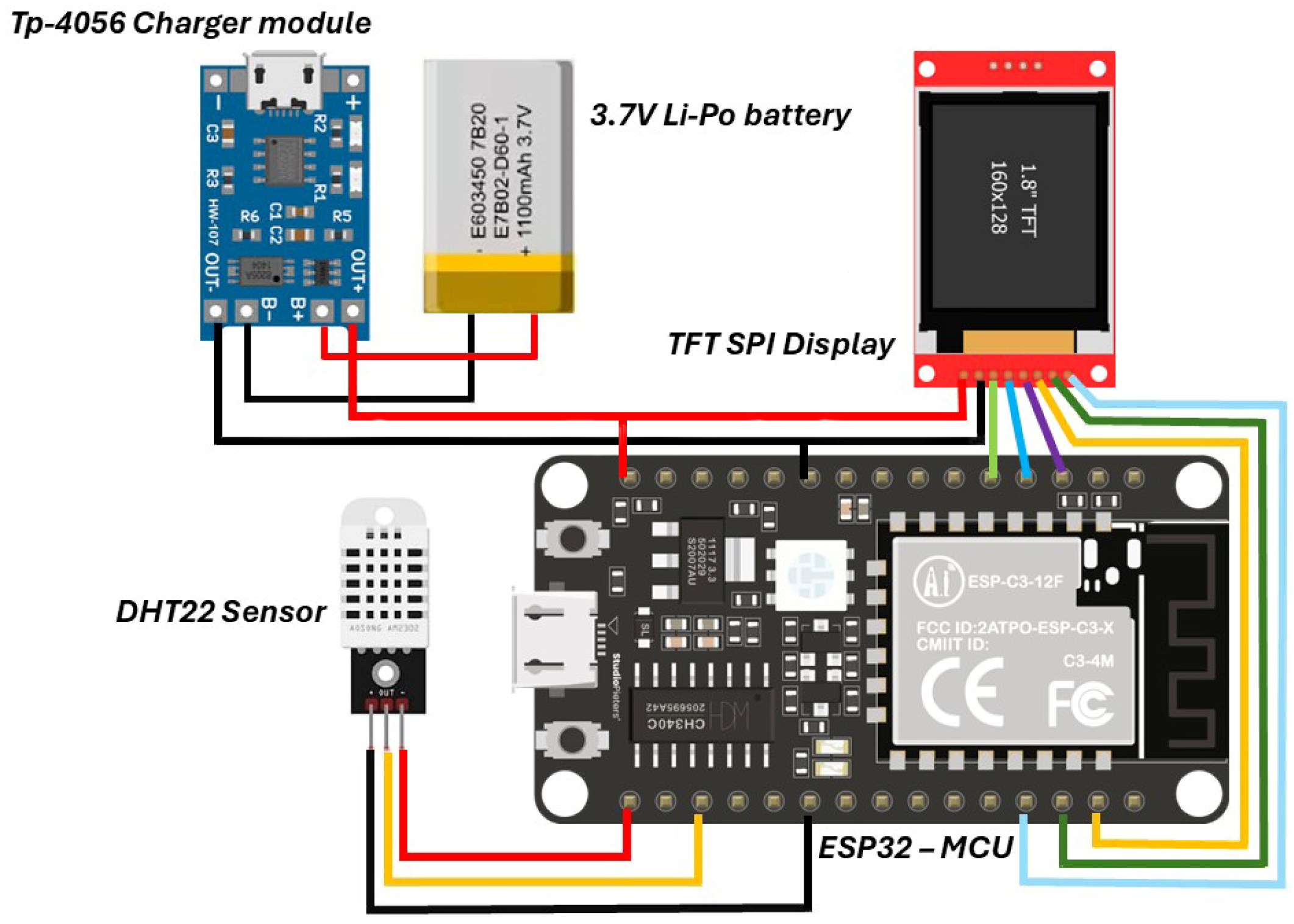

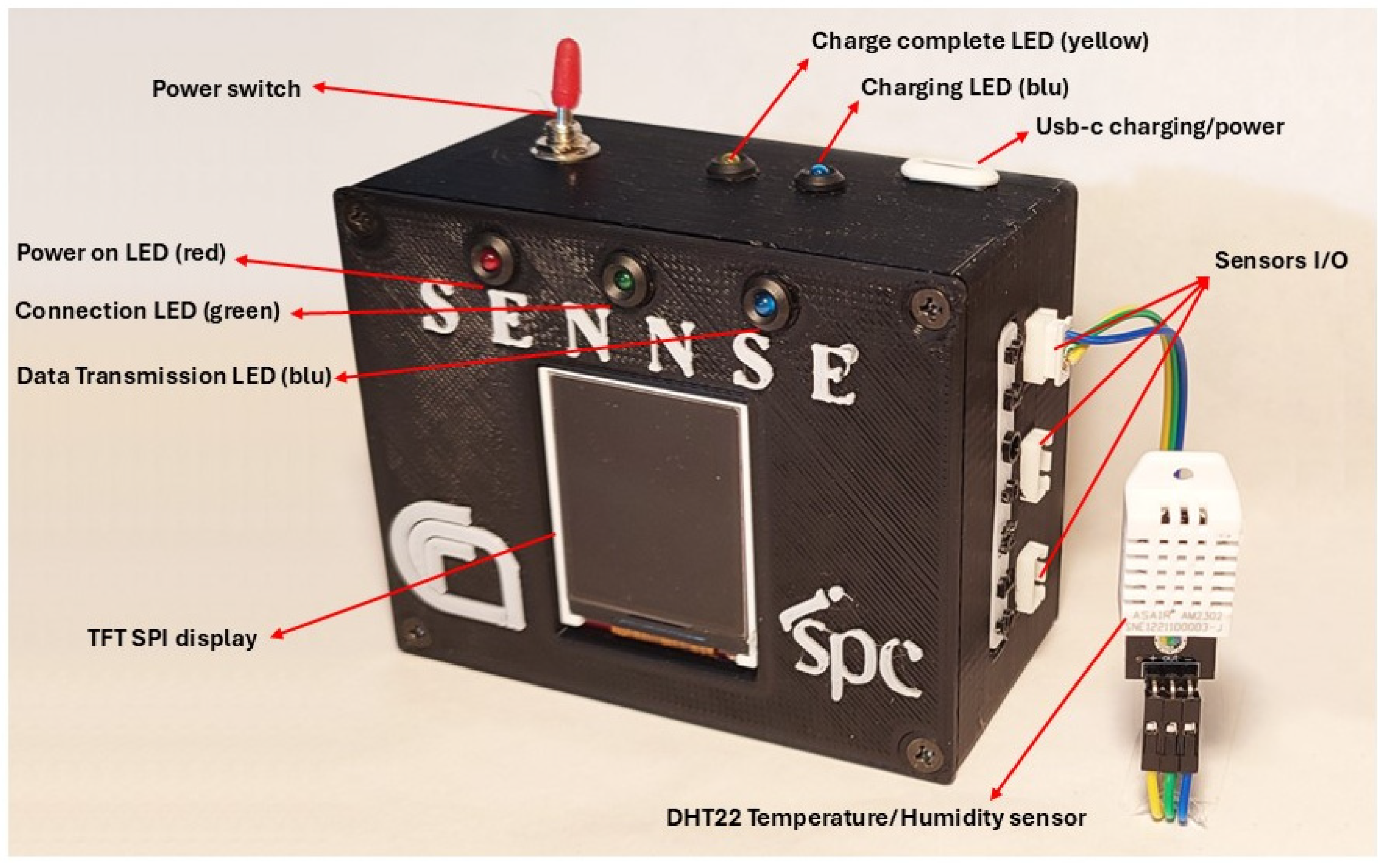

Regarding the physical implementation of the sensor nodes, it was decided, as previously mentioned, to use ESP32-type microcontroller boards soldered onto a matrix-type PCB base, following the circuit layout shown in Figure 4. The power supply is provided by a 3.7 V lithium polymer battery with a capacity of 1100 mAh, managed by a small board based on the TP-4056 chip (NanJing Top Power ASIC Corp, ShenZhen, GuangDong Province, China), which also handles charging. Two LEDs connected to this board indicate the progress and completion of charging. A 1.8-inch TFT display with a serial (SPI) connection and a resolution of 160 × 128 pixels allows real-time visualization of the data monitored by the connected sensor. Specifically, the proposed prototype includes a DHT22 digital temperature and humidity sensor (chip produced by Aosong Electronics (ASAIR), Guangzhou, China), connected to a GPIO pin of the ESP32.

The box that houses the electronics of the sensor node was designed using FreeCAD 0.21, produced by the FreeCAD Team. FreeCAD is an open-source CAD software platform for 3D design and development, combining tools for industrial and mechanical design, simulation, collaboration, and CAM machining in a single package. The software allows for quick and easy exploration of design ideas with an integrated “concept-to-production” toolset. It also includes useful functions for passing directly from 3D design to final printing. In general, a parametric approach was chosen. Parametric modelling of 3D objects involves modifying an element based on various parameters, such as dimensional data, which can be predefined and modified.

The dimensions of the designed case are 100 × 80 × 50 mm. The case houses the node circuit, which is composed of the ESP32 board, the charging module and the battery (see Figure 5).

To facilitate a comprehensive overview of the acquired data, a TFT display has been installed on the lid. In addition, three LEDs are used to indicate the operational status of the node.

A toggle-type micro-switch allows the node to be turned on and off, while a USB-C connector allows the battery to be charged. The charging status is shown by two LEDs that indicate whether the process is in progress or has been completed.

Sensors can be connected to the node via JST connectors located on the lateral side of the case. In particular, the proposed prototype has been equipped with a DHT22 sensor for temperature and humidity measurement, but the system is expandable with a large variety of environmental sensors.

The installation environment did not present particularly demanding climatic conditions, so the project did not require specific materials for manufacturing the node cases. PLA was therefore chosen as the most appropriate material for the printing process.

PLA filament is a thermoplastic material derived from renewable resources, such as corn starch, tapioca roots, or sugar cane. Owing to its renewable origin, this material has gained popularity in various industries and is now used in medical applications and food packaging.

3.2. The SENNSE Software Platform

As reported in the previous section, the WSN collects data from sensors in fixed positions or during temporary data acquisition sessions. The data is then sent to the SENNSE platform, which processes and displays it.

Over the years, methods for monitoring and safeguarding cultural heritage have changed and evolved. IoT systems make it possible to install sensor networks directly on the asset to be monitored and to monitor it in real time. Despite these advantages, systems are still needed that both manage the large amount of data produced and make it available to users.

Interdisciplinarity is a key aspect of cultural heritage, which involves very different fields of study. The collected data must therefore be shared among different types of users. As a result, the same data must be represented in different ways and correlated with other data according to each field of interest.

There are various online platforms providing systems for collecting and displaying data in the IoT context and employed for different purposes, such as environmental and energy consumption monitoring. These services allow for managing sensors and presenting the collected data by means of dashboards that can be customized using widgets.

These online platforms provide functionalities for managing sensors and for collecting and presenting data in various scenarios. However, none of them offers features specifically designed for monitoring, studying and safeguarding cultural heritage, a task that requires managing very heterogeneous data from fields as diverse as archeology, chemistry and engineering.

The SENNSE platform adopts a modular approach for data ingestion and device management, enabling interoperability with heterogeneous sensors. This design ensures flexibility and scalability while maintaining full control over customization and security features, which are essential for managing sensitive heritage-related data. In the following, the features of the SENNSE platform are reported:

MQTT compatibility: The system is compatible with all features offered by the MQTT protocol and/or with other complementary or alternative protocols for acquiring sensor data from IoT hardware platforms.

Alert generation and monitoring (email, notification): The system can alert via email or notification for any value read by the sensors that deviates from the pre-set ranges. Alerts will also be sent in case of system malfunctions.

Dashboard customization (panels, indicators, Sites, etc.): The administrator can customize each component of the graphical interface, from the basic graphics to the individual panels and the complete customization of the individual indicators for the detected values.

Open Source: The platform’s source code is publicly available, or the platform can be used publicly without limitations.

Real-time data: The platform supports the processing and visualization of real-time data (maximum 7 s from acquisition).

GIS integration and interaction with the Map: A Geographic Information System indicates the site’s location on an interactive map (click to view site details and access local site content). The system uses maps from the Google ecosystem or maps created with proprietary techniques and technologies.

2D/3D representation with sensor object interactive icon: Representation of the physical indoor/outdoor site where the various sensor nodes are located. The system allows interactive sensor icons to be placed on a 2D/3D floor plan at the physical positions of the sensors.

Presence of APIs for predictive algorithms or AI tools: The system is equipped with APIs and interfaces to access predictive platforms and models. These include artificial intelligence and machine learning tools that can be trained on datasets of specific sensor data for predictive purposes.

Cloud/on-premises access: The data produced by the installed sensor network is transmitted in real time to the cloud, which collects it and makes it available to authorized users. The platform is designed to run “on-cloud”. Where only an “on-premises” installation is possible, it runs on specific hardware.

Single object sensor data in addition to environmental sensor data: The platform displays environmental data in addition to data from the individual IoT node and has panels and interfaces dedicated to this type of data integrated into a single visualization system.

Online sensor device configuration: Thanks to the platform’s management software, each sensor can be configured by modifying its detection and operating parameters based on the identified needs.
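The alert behaviour listed above can be sketched in Python as a check applied to each incoming reading. A value outside its pre-set range triggers an alert. A reading whose display delay exceeds the 7 s real-time budget also triggers one, since such a delay is one possible symptom of a system malfunction. The humidity range used in the usage example is hypothetical, and the delivery of alerts by email or notification is omitted.

```python
from dataclasses import dataclass
from typing import Dict, List

MAX_LATENCY_S = 7.0   # real-time budget stated for the platform


@dataclass(frozen=True)
class Range:
    low: float
    high: float


def check_reading(name: str, value: float, acquired_at: float, shown_at: float,
                  ranges: Dict[str, Range]) -> List[str]:
    """Return alert messages for one reading; an empty list means no alert."""
    alerts = []
    r = ranges.get(name)
    if r is not None and not r.low <= value <= r.high:
        alerts.append(f"{name}={value} outside [{r.low}, {r.high}]")
    delay = shown_at - acquired_at           # timestamps in seconds
    if delay > MAX_LATENCY_S:
        alerts.append(f"latency {delay:.1f} s exceeds {MAX_LATENCY_S} s")
    return alerts
```

For example, with a hypothetical range `{"humidity": Range(45.0, 60.0)}`, a reading of 71.5 produces one out-of-range alert. A reading of 50.0 displayed 9.5 s after acquisition produces a latency alert instead.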

The architecture of SENNSE is structured around two main modules, each addressing a critical aspect of IoT-based cultural heritage monitoring:

WSN Management and Configuration: This module is responsible for the integration, configuration, and control of multiple Wireless Sensor Networks (WSNs) distributed across different sites. It provides functionalities for device registration, protocol management (including MQTT and alternative communication standards), and remote configuration of sensor parameters such as sampling intervals, alert thresholds, and operational modes. The module ensures interoperability with heterogeneous hardware and supports over-the-air (OTA) firmware updates (see Section 3.5 for details), enabling continuous maintenance without physical intervention. Security is enforced through role-based access control and encrypted communication channels to safeguard sensitive cultural heritage data.

Data Visualization and Dashboard Management: The second module focuses on the processing, visualization, and contextual representation of acquired data. It enables real-time monitoring through customizable dashboards, allowing users to create tailored views for specific research needs. Advanced features include GIS integration for geospatial mapping of monitored sites, 2D/3D representation of sensor layouts, and interactive icons linked to individual nodes. The module also supports multi-tenancy, ensuring that each user or research group operates within a dedicated IoT data space, with personalized dashboards and secure access. APIs are provided for predictive analytics and AI integration, facilitating anomaly detection and trend forecasting.

In detail, the WSN Management and Configuration package connects to and manages low-level nodes (such as sensors and devices). It provides the functionality and procedures needed to connect to and modify a WSN. This is achieved through a setup process based on abstract models of the WSN’s sensors and devices, which allows nodes to join the network.

The data ingestion architecture of SENNSE adopts a modular gateway approach that enables the association of sensors and devices through multiple communication protocols. Additionally, the system includes a customizable connector layer, allowing support for non-standard protocols and extending compatibility with a wide range of IoT nodes.
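A customizable connector layer of this kind is commonly realized as a registry that maps each protocol or message format to a small translation function. The Python sketch below illustrates that pattern. The protocol names, the comma-separated vendor format and the common record layout are hypothetical and are not part of the SENNSE specification.

```python
import json
from typing import Callable, Dict

Connector = Callable[[bytes], dict]        # raw message -> common record
CONNECTORS: Dict[str, Connector] = {}


def connector(protocol: str) -> Callable[[Connector], Connector]:
    """Decorator that registers a connector under a protocol name."""
    def register(fn: Connector) -> Connector:
        CONNECTORS[protocol] = fn
        return fn
    return register


@connector("mqtt-json")
def from_json(raw: bytes) -> dict:
    return json.loads(raw)


@connector("csv-line")   # a non-standard, vendor-specific format (hypothetical)
def from_csv(raw: bytes) -> dict:
    node_id, name, value = raw.decode("ascii").strip().split(",")
    return {"node_id": node_id, "readings": {name: float(value)}}


def ingest(protocol: str, raw: bytes) -> dict:
    """Dispatch a raw message to the connector registered for its protocol."""
    fn = CONNECTORS.get(protocol)
    if fn is None:
        raise ValueError(f"no connector registered for {protocol!r}")
    return fn(raw)
```

With this design, supporting a new protocol only requires registering one more function. The `ingest` entry point and the downstream pipeline remain unchanged.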

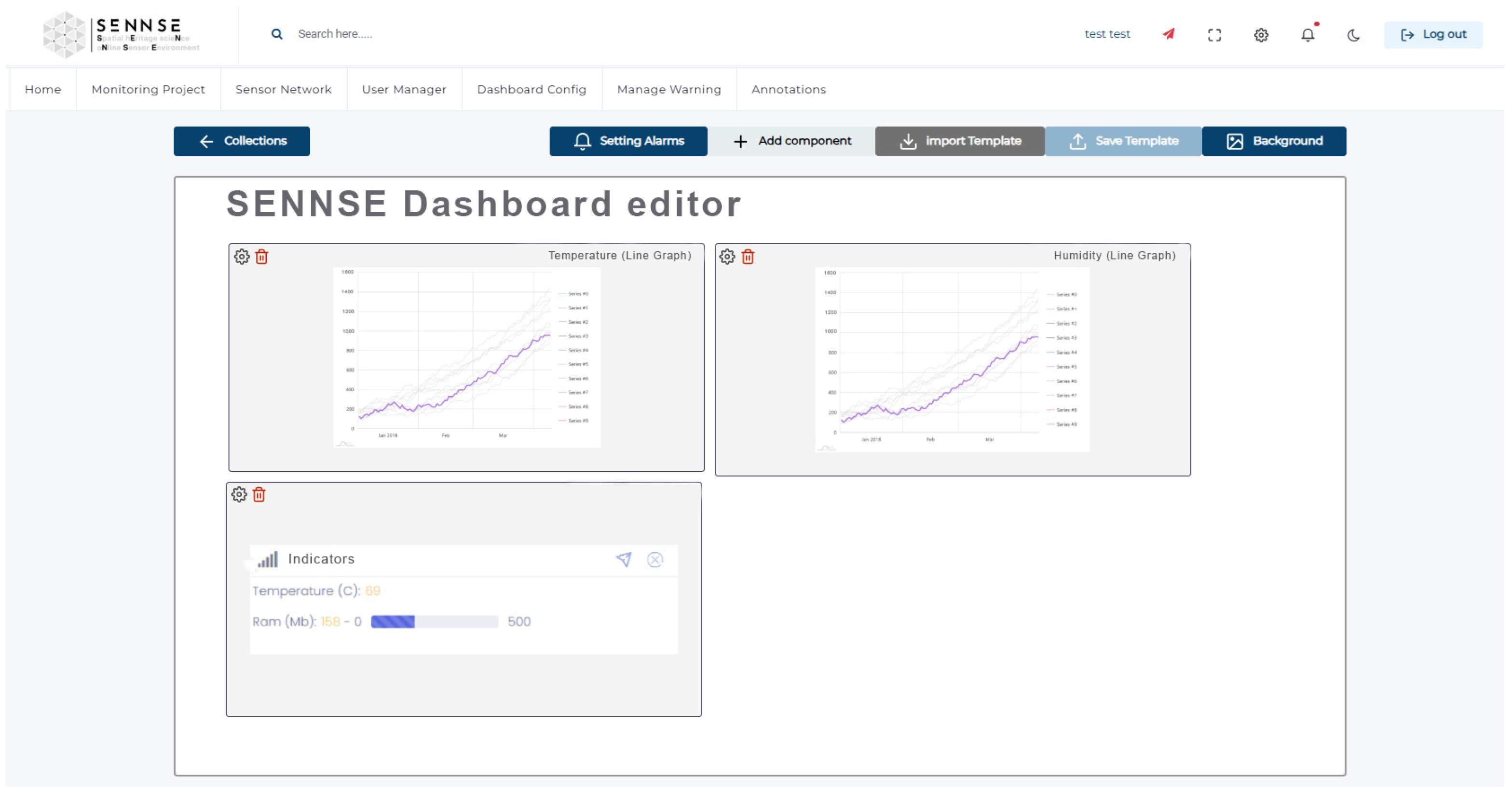

In the data visualization section of the Data Visualization and Dashboard Management package (Figure 6), a key feature is the customization of the data display based on the field of study of the user accessing it. This means that data collected by the same sensor network is displayed differently depending on the area of interest.

As already mentioned, dashboards are the fundamental aspect of the platform. Various services already allow sensor data to be examined. The component developed here, however, will not only handle data collection but will also let users view the values interactively, in a way suited to each type of user. Since the field of cultural heritage involves various professional figures, from humanists to biologists and engineers, this component must display the data according to the needs of each user. This can happen either through interface customization procedures or through automatic procedures that choose the optimal display mode based on the area of use. Moreover, SENNSE will incorporate a dedicated context-awareness layer designed to introduce an innovative approach for exploring and visualizing scientific data. This component enables the categorization of information and its mapping to the most suitable visual representation based on contextual parameters, thereby enhancing the relevance and usability of data for researchers (see Section 3.4 for details).

SENNSE is designed to manage multiple Wireless Sensor Networks (WSNs) distributed across different geographic areas within a single unified environment. This capability is achieved through the creation of separate IoT data spaces, leveraging multi-tenancy features that allow each user or group of users to operate within their own secure domain. Strong emphasis is placed on security: SENNSE, using the Authentication and Authorization Infrastructure of DIGILAB, integrates an advanced access control system to ensure data protection and confidentiality. Each user or team is assigned a dedicated data space where they can configure monitoring parameters and create customized dashboards for data visualization. This approach guarantees both flexibility and privacy, enabling tailored views and analytics while maintaining centralized management of heterogeneous sensor networks.
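The separation into IoT data spaces can be illustrated with a minimal in-memory Python sketch. Every read and write is scoped to a tenant and checked against that tenant's members. In SENNSE, authentication and authorization rely on the DIGILAB Authentication and Authorization Infrastructure. Here a simple membership table stands in for it, and all names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Set


class TenantRepository:
    """In-memory stand-in for a multi-tenant store: every access is scoped to a tenant."""

    def __init__(self) -> None:
        self._records: Dict[str, List[dict]] = defaultdict(list)
        self._members: Dict[str, Set[str]] = defaultdict(set)

    def grant(self, tenant: str, user: str) -> None:
        self._members[tenant].add(user)

    def can_access(self, tenant: str, user: str) -> bool:
        return user in self._members.get(tenant, set())

    def _require(self, tenant: str, user: str) -> None:
        if not self.can_access(tenant, user):
            raise PermissionError(f"{user!r} has no access to data space {tenant!r}")

    def insert(self, tenant: str, user: str, record: dict) -> None:
        self._require(tenant, user)
        self._records[tenant].append(dict(record))   # store a copy

    def query(self, tenant: str, user: str, node_id: Optional[str] = None) -> List[dict]:
        self._require(tenant, user)
        return [r for r in self._records.get(tenant, [])
                if node_id is None or r.get("node_id") == node_id]
```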

Within the SENNSE platform, researchers can identify specific subsets of IoT data that represent particularly significant conditions for monitored assets, for example, out-of-range humidity values in a historical building or excessive vibrations affecting a statue. These subsets can be annotated and subsequently integrated into the DIGILAB knowledge base, establishing a semantic link to the corresponding monitored object. To ensure proper integration within DIGILAB, it is essential that the datasets are annotated according to the platform’s semantic data model. Consequently, the SENNSE data model has been designed to be fully compatible with DIGILAB’s ontology, enabling seamless interoperability. This alignment not only guarantees accurate contextualization of the acquired data—capturing temporal and environmental conditions—but also supports advanced functionalities such as AI-driven predictive analytics and alert systems. By harmonizing SENNSE and DIGILAB data models, the platform facilitates the creation of a unified, semantically enriched ecosystem for cultural heritage monitoring and knowledge management.
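The identification of significant subsets, such as the out-of-range humidity values mentioned above, can be sketched as extracting consecutive out-of-range episodes from a time series. Each episode is then wrapped in an annotation record that points to the monitored object. The record fields and the object identifier are placeholders. The actual annotation must follow the DIGILAB semantic data model, which is not reproduced here.

```python
from typing import Iterable, List, Tuple

Sample = Tuple[int, float]   # (Unix timestamp, measured value)


def out_of_range_episodes(samples: Iterable[Sample], low: float,
                          high: float) -> List[Tuple[int, int]]:
    """Return (start, end) timestamps of consecutive runs of samples outside [low, high]."""
    episodes, start, last = [], None, None
    for ts, value in samples:
        if not low <= value <= high:
            if start is None:
                start = ts               # a new episode begins
            last = ts
        elif start is not None:
            episodes.append((start, last))
            start = None                 # back in range: close the episode
    if start is not None:
        episodes.append((start, last))   # series ended during an episode
    return episodes


def annotate(object_uri: str, prop: str, episode: Tuple[int, int], note: str) -> dict:
    """Annotation record linking an episode to the monitored object (hypothetical fields)."""
    return {"about": object_uri, "observedProperty": prop,
            "start": episode[0], "end": episode[1], "note": note}
```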

3.3. SENNSE Data Model

We developed the SENNSE data model as a strategic component to optimize information flow and achieve interoperability across multimodal and heterogeneous sensor sources, whose heterogeneity inherently makes interoperability difficult. The initial design drew on a comprehensive review of current literature and existing IoT data models and standards [35,36], reusing well-established concepts where appropriate while tailoring them to our specific requirements.

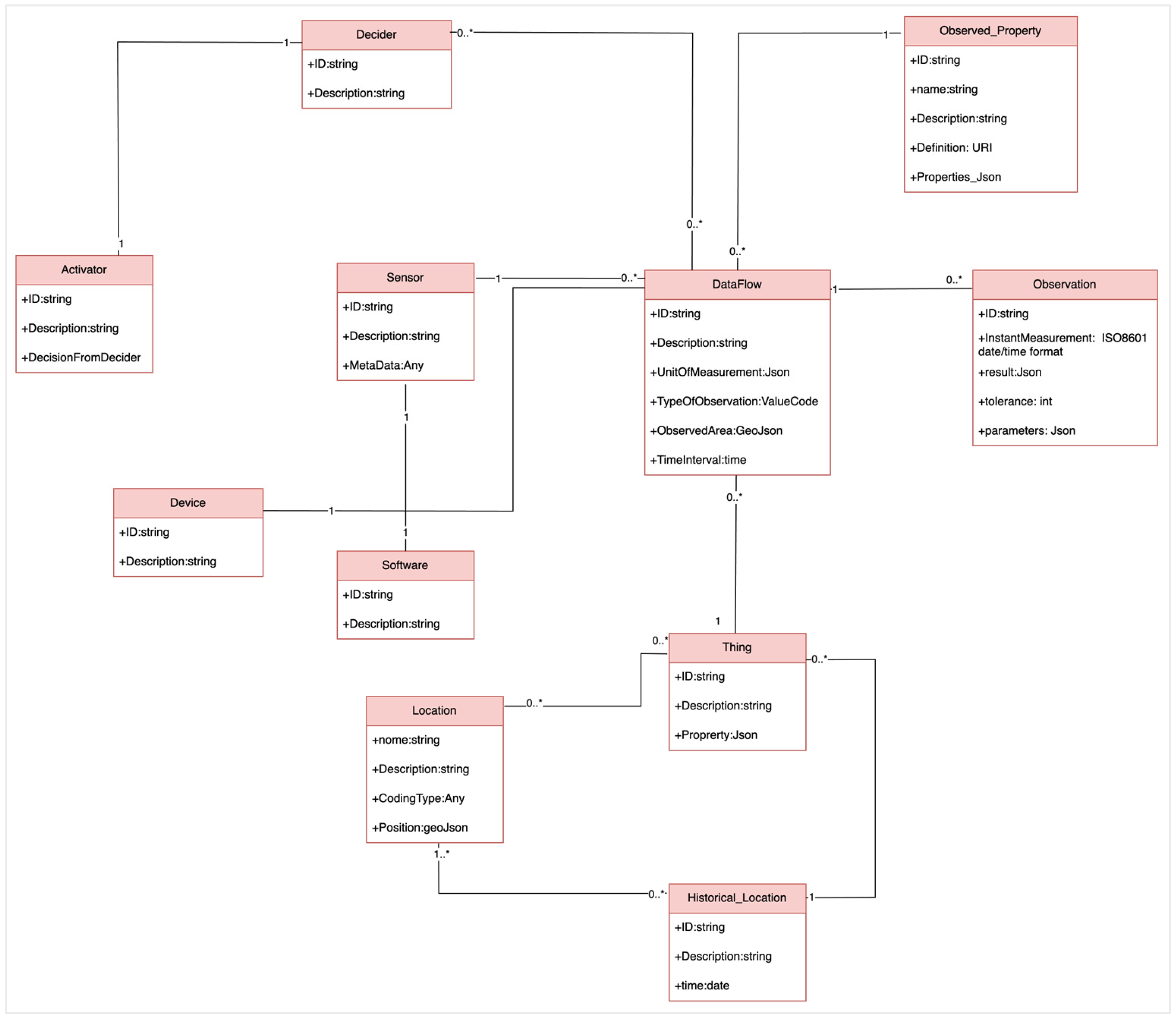

The SENNSE data model was created through an iterative process: identifying core entities (e.g., sensors, data types, observations, interactions), defining their attributes and relationships, and refining the schema across successive iterations. In the final step, the schema was formalized into logical and physical data structures. This process offers a clearer view of the system architecture and ensures that the model can be effectively applied and extended. Integrating the SENNSE data model into the platform is essential to address interoperability and data-reuse challenges. Figure 7 reports the main entities of the SENNSE data model and their relationships, together with their cardinalities. The entities are defined as follows:

Sensor: the component positioned near a cultural artefact or cultural heritage site. A sensor is responsible for the measurement of multiple physical quantities.

Decider: a component that compares sensor measurements with predefined decision rules and determines whether an action should be triggered.

Observed_Property: the observed property reports the measurement obtained by the sensor.

Observation: the act of measuring or determining the value of a property.

Activator: the component that, if authorized by the decider, proceeds to take an action.

Thing: refers to any entity, such as a device, that is part of a network and can exchange data with other devices within that network.

Historical_Location: specifies the time periods associated with the current and past positions of an entity (“thing”).

Location: the Location entity represents the place where the entity “thing” has been positioned.

Software: closely related to the data flow and sensor entities, it refers to the software responsible for acquiring the data.

Device: the device used to visualize the acquired data.
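Several of the entity names above (Thing, Location, Historical_Location, Observed_Property, Observation) resemble those of the OGC SensorThings API. Reading them that way is an assumption. Under it, the core relationships and the interplay between Decider and Activator can be sketched with Python dataclasses. The Software and Device entities are omitted, and all attribute names are illustrative.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Location:
    name: str


@dataclass
class HistoricalLocation:
    location: Location
    start: int                   # time the thing arrived at this location
    end: Optional[int] = None    # None marks the current location


@dataclass
class Thing:
    name: str
    history: List[HistoricalLocation] = field(default_factory=list)

    def move_to(self, location: Location, at: int) -> None:
        if self.history and self.history[-1].end is None:
            self.history[-1].end = at          # close the previous period
        self.history.append(HistoricalLocation(location, at))

    @property
    def location(self) -> Optional[Location]:
        return self.history[-1].location if self.history else None


@dataclass
class ObservedProperty:
    name: str                    # e.g. "humidity"
    unit: str                    # e.g. "%"


@dataclass
class Observation:
    prop: ObservedProperty
    value: float
    time: int


@dataclass
class Sensor:
    thing: Thing
    properties: List[ObservedProperty]   # one sensor may measure several quantities


@dataclass
class Decider:
    rule: Callable[[Observation], bool]  # True means an action should be triggered


@dataclass
class Activator:
    action: Callable[[Observation], str]

    def run(self, decider: Decider, obs: Observation) -> Optional[str]:
        # Act only when the decider authorizes it.
        return self.action(obs) if decider.rule(obs) else None
```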

3.4. The SENNSE Context Awareness Layer

There is a significant need in the scientific community for tools that can visualize and correlate information from different sources. In this section, we present the core innovative component of the project: a context-aware ontological engine.

This context-aware system is designed to understand and extract relevant information from the surrounding context. In a previous analysis, context was defined as “Any information that can be used to characterise the situation of entities (such as a person, place or object) that are considered relevant to the interaction between a user and an application, including the user and the application itself” [37]. This paper adopts an extended approach to context that goes beyond the classification typically found in the literature, which generally consists of user, platform and environment.

For example, a researcher using the SENNSE platform may need to assess the surveillance status of a particular location within a museum context. The information displayed for this researcher would differ from the data display intended for a sensor specialist who may be more interested in checking which sensors have detected humidity changes in a cultural object container.

When this information is translated into an ontological model, the desired model, in the words of [38], describes the domain of knowledge. It contains the general terms and relationships of the specific subject area. The semantic approach envisaged for this project will facilitate the management of knowledge about cultural heritage and about all the activities related to its conservation and protection.

The proposed model for ontology is designed with flexible logical relationships that are well-suited to describing complex processes within the computing domain. A system is considered context-aware if it uses context to provide relevant information and services to the user, where relevance is determined by the user’s specific task.

The design and development of a semantic model is not intended to replace existing data structures, but to enhance them. The aim is to provide data with a common expression, ensuring a defined semantic representation that preserves data and concept interoperability.

The context awareness layer proposes a novel way to help scientists explore and visualize scientific data. This approach aims to categorize information and map it to the most appropriate visual representation based on context awareness.

Typically, context awareness is expressed by variables such as user, platform and environment. In this case, an evolution is proposed that extends the concept of context to include several dimensions, as described below:

User: the researcher interacting with a specific context. For example, the researcher who needs to monitor a room in a museum.

Channel: the medium through which the equipment is used.

Target: the goal the user is trying to achieve.

Data type: the type of data.

Visualization: the module responsible for displaying scientific data.

To dynamically model an interface that adapts to the context of use, which may come from different domains, the context must be modelled semantically. To support multimodal interface representations, entities and functionalities are organized semantically. This allows alternative representations to be selected depending on the situational relevance of an operational characteristic and on the contextual conditions.
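A deliberately simplified, non-ontological sketch of this idea is a table of rules that maps a context (user, channel, target, data type) to a visualization. It echoes the museum example above, in which a researcher checking the status of a location and a sensor specialist see different views. The roles, targets and view names below are hypothetical. The actual layer relies on an ontological model rather than a flat rule list.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Context:
    user_role: str      # e.g. "conservator", "sensor_specialist" (hypothetical roles)
    channel: str        # e.g. "desktop", "mobile"
    target: str         # what the user is trying to achieve
    data_type: str      # e.g. "time_series", "spatial"


# Each rule: (required context values, visualization).
# More specific rules come first; the first matching rule wins.
RULES: List[Tuple[Dict[str, str], str]] = [
    ({"user_role": "sensor_specialist", "data_type": "time_series"}, "per-sensor line chart"),
    ({"target": "site_status", "channel": "mobile"}, "status tiles"),
    ({"target": "site_status"}, "floor-plan heat map"),
    ({}, "data table"),   # fallback: an empty condition set always matches
]


def choose_visualization(ctx: Context) -> str:
    for conditions, view in RULES:
        if all(getattr(ctx, key) == value for key, value in conditions.items()):
            return view
    raise LookupError("no rule matched")
```

A flat table like this quickly becomes hard to maintain as the number of dimensions grows. That difficulty is one motivation for modelling the context semantically instead.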

The novel approach of SENNSE to context-awareness has led to a new research project called Research data eXploration and discoveRy (ReXplorA). ReXplorA aims to define a methodological approach that enables the abstraction of the different informational classes into which scientific research data can be categorized, together with the profiles of stakeholders’ informational needs that drive the lifecycle of such data.

3.5. SENNSE: Over-the-Air Node Firmware Update

In a fast-evolving field such as IoT, over-the-air (OTA) updating has become a common feature of both hardware and software, keeping the user experience simple and convenient. With OTA updates, developers can fix bugs or introduce new features and other enhancements without requiring any intervention from the user or any change to the hardware. Several microcontrollers offer this capability. On the ESP32, OTA updates are commonly used together with secure boot, so that only verified firmware is run.

When it comes to large-scale embedded and distributed projects, Over-The-Air (OTA) capability can be highly valuable.

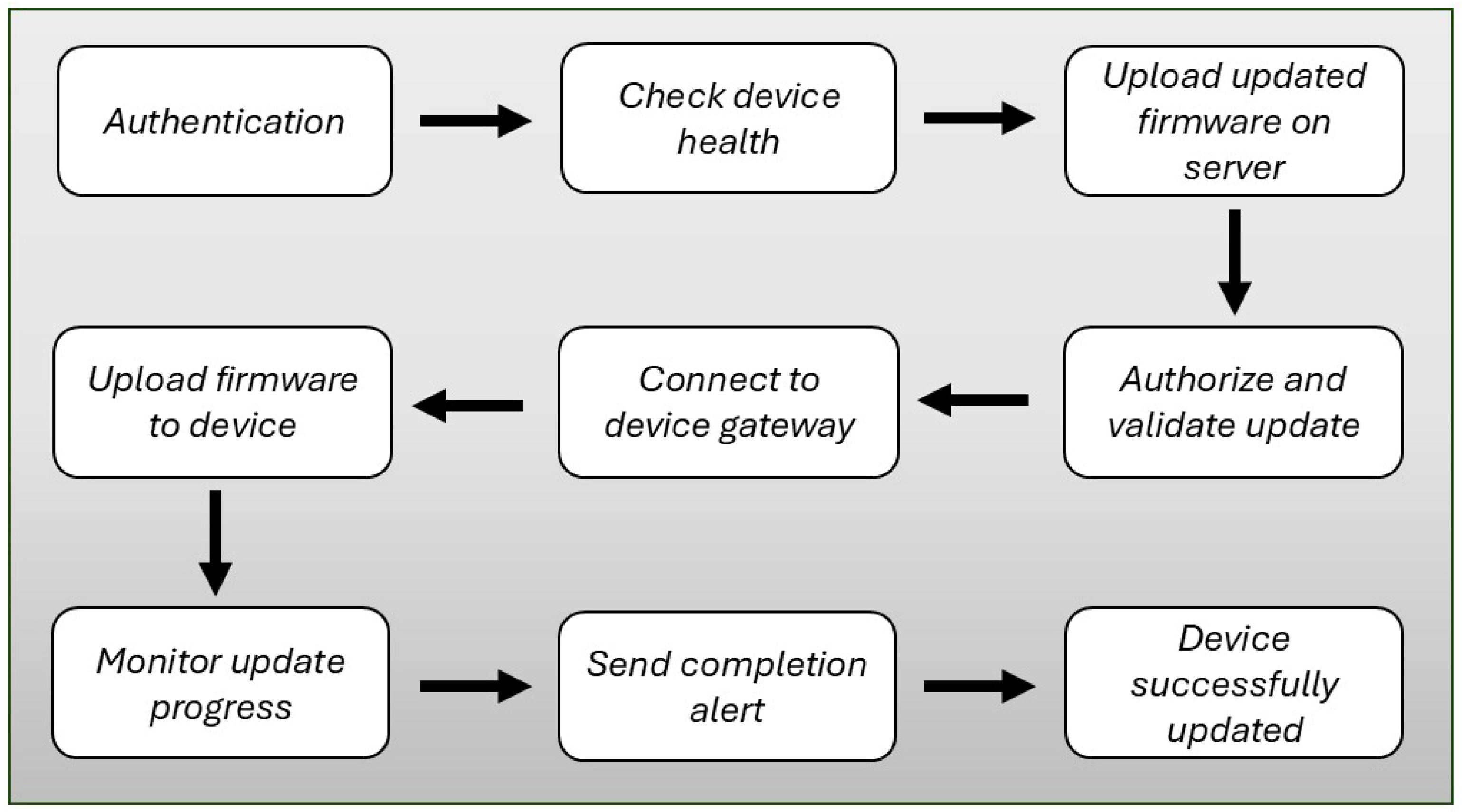

Over-the-air (OTA) firmware updates are a cornerstone of modern IoT deployments, especially for sensor nodes built around the ESP32 microcontroller. These updates allow devices to receive new firmware remotely, ensuring they remain secure, functional, and aligned with evolving system requirements without physical intervention. The process illustrated in Figure 8 outlines a robust and secure OTA workflow tailored for ESP32-based sensor nodes, integrating both software and hardware considerations.

The update cycle begins with authentication, a critical step that ensures only authorized entities can initiate the firmware update. This typically involves verifying credentials or tokens between the update server and the device gateway. Once authenticated, the system proceeds to check device health, confirming that the ESP32 node is operational, has sufficient memory, and is not currently engaged in critical tasks. This pre-check minimizes the risk of update failures or data corruption.
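The authentication and health-check steps can be sketched as follows, assuming a token computed as HMAC-SHA256 over the device identifier and a fresh server-issued nonce, and a health report with three fields. The workflow above does not specify the credential scheme; the key, the memory threshold, and all names are hypothetical.

```python
import hashlib
import hmac
from dataclasses import dataclass

# Hypothetical shared secret; a real deployment would provision a distinct key per device.
SHARED_KEY = b"example-only-secret"


def make_token(device_id: str, nonce: str, key: bytes = SHARED_KEY) -> str:
    """HMAC-SHA256 over device id and nonce; a fresh nonce makes old tokens useless."""
    message = f"{device_id}:{nonce}".encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def authenticate(device_id: str, nonce: str, token: str, key: bytes = SHARED_KEY) -> bool:
    """Compare the received token with the expected one in constant time."""
    return hmac.compare_digest(make_token(device_id, nonce, key), token)


@dataclass
class NodeHealth:
    online: bool
    free_heap_bytes: int
    busy_with_critical_task: bool  # e.g. in the middle of a measurement (hypothetical)


MIN_FREE_HEAP_BYTES = 64 * 1024  # hypothetical threshold


def ready_for_update(health: NodeHealth) -> bool:
    """The node must be reachable, have enough free memory, and be idle."""
    return (health.online
            and health.free_heap_bytes >= MIN_FREE_HEAP_BYTES
            and not health.busy_with_critical_task)
```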

Next, the updated firmware is uploaded to the server, often hosted on a cloud platform or local gateway. This firmware must be compiled specifically for the ESP32 architecture, considering its dual-core processor, memory constraints, and peripheral configurations. Once the firmware is in place, the system performs authorization and validation, ensuring the firmware is signed, versioned correctly, and compatible with the target device. This step is vital to prevent incompatible binaries from bricking the device.
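A minimal sketch of the validation step, assuming that each binary is published together with a small manifest (version, target chip, SHA-256 digest); the manifest format and all names are hypothetical. A digest only detects corruption: proving that the image comes from an authorized source requires verifying a digital signature, as ESP32 secure boot does for signed images, which is not shown here.

```python
import hashlib
from dataclasses import dataclass
from typing import Tuple

Version = Tuple[int, int, int]


@dataclass(frozen=True)
class FirmwareManifest:
    version: Version   # e.g. (1, 4, 0)
    target_chip: str   # e.g. "esp32"
    sha256_hex: str    # digest published alongside the binary


def validate_firmware(image: bytes, manifest: FirmwareManifest,
                      installed: Version, device_chip: str) -> list:
    """Return a list of problems; an empty list means the image may be deployed."""
    problems = []
    if hashlib.sha256(image).hexdigest() != manifest.sha256_hex:
        problems.append("digest mismatch: image corrupted or altered")
    if manifest.version <= installed:  # tuples compare element by element
        problems.append("version is not newer than the installed one")
    if manifest.target_chip != device_chip:
        problems.append("image built for a different chip")
    return problems
```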

Following validation, the system connects to the device gateway, which acts as a bridge between the server and the ESP32 node. This connection may use MQTT, HTTP, or HTTPS protocols, depending on the deployment. Once the link is established, the firmware is uploaded to the device, typically using the ESP32’s OTA API, which writes the new firmware to a secondary partition while the device continues running the current version. This dual-partition strategy ensures rollback capability in case of update failure.
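The dual-partition strategy can be illustrated with a small simulation. This models the behaviour only and is not ESP-IDF code: on real hardware, the corresponding steps are handled by ESP-IDF functions such as `esp_ota_get_next_update_partition()`, `esp_ota_begin()`, `esp_ota_write()`, `esp_ota_end()`, and `esp_ota_set_boot_partition()`, and, when app rollback is enabled, a new image stays in a pending-verify state until the application confirms it, for example with `esp_ota_mark_app_valid_cancel_rollback()`. The slot names and the self-test flag below are assumptions made for the example.

```python
class DualPartitionNode:
    """Simulated two-slot (A/B) firmware layout with rollback on a failed self-test."""

    def __init__(self, firmware: bytes):
        self.slots = {"ota_0": firmware, "ota_1": b""}
        self.running = "ota_0"       # slot the node is executing from
        self.boot_slot = "ota_0"     # slot selected for the next boot
        self.pending_verify = False  # True while a freshly written image is unconfirmed

    def inactive_slot(self) -> str:
        return "ota_1" if self.running == "ota_0" else "ota_0"

    def write_update(self, image: bytes) -> None:
        """Write the new image to the inactive slot; the running slot is never touched."""
        target = self.inactive_slot()
        self.slots[target] = image
        self.boot_slot = target
        self.pending_verify = True

    def reboot(self, self_test_passes: bool) -> None:
        """Boot the selected slot; fall back to the previous slot if the new image fails."""
        previous = self.running
        self.running = self.boot_slot
        if self.pending_verify:
            self.pending_verify = False
            if not self_test_passes:
                self.running = self.boot_slot = previous

    @property
    def firmware(self) -> bytes:
        return self.slots[self.running]
```

Because the running slot is never overwritten, an interrupted or faulty update leaves a bootable image in place.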

During the upload, the system monitors the update progress, tracking packet integrity, transfer speed, and device responsiveness. If any anomalies are detected, the process can be paused or aborted. Upon successful transfer and flashing, a completion alert is sent, notifying the system administrator that the update has finished. Finally, the ESP32 node reboots and validates the new firmware, transitioning into its updated operational state.
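Progress monitoring and abort-on-anomaly can be sketched as a chunked transfer in which every chunk carries a CRC-32 checksum, the receiver rejects corrupted or out-of-order chunks, and the sender retries a limited number of times before aborting. The chunk size, retry limit, and simulated receiver are hypothetical; transfer speed and device responsiveness are not modelled.

```python
import zlib
from typing import Callable, Optional


def transfer_image(image: bytes,
                   send_chunk: Callable[[int, bytes, int], bool],
                   chunk_size: int = 1024,
                   max_retries: int = 3,
                   on_progress: Optional[Callable[[int, int], None]] = None) -> bool:
    """Send image in CRC-protected chunks; return False (abort) if a chunk keeps failing."""
    sent = 0
    while sent < len(image):
        chunk = image[sent:sent + chunk_size]
        crc = zlib.crc32(chunk)
        for _ in range(max_retries):
            if send_chunk(sent, chunk, crc):
                break
        else:
            return False  # persistent anomaly: abort the update
        sent += len(chunk)
        if on_progress is not None:
            on_progress(sent, len(image))  # bytes acknowledged so far, total bytes
    return True


class SimulatedReceiver:
    """Stand-in for the node: accepts a chunk only if its CRC and offset are correct."""

    def __init__(self, corrupt_every: int = 0):
        self.buffer = bytearray()
        self.calls = 0
        self.corrupt_every = corrupt_every  # every n-th delivery is corrupted (0 = never)

    def receive(self, offset: int, data: bytes, crc: int) -> bool:
        self.calls += 1
        if self.corrupt_every and self.calls % self.corrupt_every == 0:
            data = data[:-1] + bytes([data[-1] ^ 0xFF])  # flip the bits of the last byte
        if offset != len(self.buffer) or zlib.crc32(data) != crc:
            return False  # reject: the sender will retry
        self.buffer.extend(data)
        return True
```

In a real deployment, `send_chunk` would wrap the MQTT or HTTP(S) link to the device gateway, and `on_progress` would feed the monitoring view.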

This well-defined OTA update workflow significantly strengthens system reliability and reflects the principles behind scalable sensor network design. Through the integration of secure authentication, rigorous validation steps, and continuous monitoring, ESP32-based devices maintain a high level of resilience and adaptability even in rapidly changing conditions. Such an approach ensures consistent performance across a wide range of real-world scenarios, from environmental sensing to the protection of cultural heritage sites.

Implementing OTA functionality on the ESP32 can offer significant advantages, especially in modern IoT deployments. Although it raises concerns related to security and update reliability, there are strong reasons to consider OTA updates as part of a long-term device management strategy. Ultimately, the decision to adopt OTA should follow a careful evaluation of both the benefits and the challenges in the context of the specific application.

One of the strongest motivations for OTA support is the physical placement of the device. When an ESP32 node is installed in a remote or difficult-to-reach location, performing manual updates becomes impractical. Any need for frequent firmware revisions would otherwise require repeated on-site visits, increasing operational costs and slowing down the deployment cycle.

Another key factor is scale. IoT systems typically involve large numbers of devices distributed across wide areas. In such scenarios, manually retrieving, updating, and redeploying each unit is simply not feasible. OTA updates provide a centralized, automated mechanism to push new firmware to thousands of nodes simultaneously, ensuring consistency and reducing the risk of human error.

OTA capabilities also act as a safeguard against widespread failures. If a critical bug is discovered, one that cannot be fixed without reflashing the firmware, OTA updates can prevent the total loss of an entire fleet of devices. Without this mechanism, each device would require manual intervention, potentially resulting in significant downtime or even permanent failure.

In some cases, OTA updates are not just a convenience but a contractual requirement. Devices sold with long-term support commitments or service agreements must be kept up to date, and OTA provides the most efficient way to meet those obligations.

Despite these advantages, OTA implementation does come with challenges. Security must be carefully managed to prevent unauthorized access or malicious firmware injection. The update process must be robust enough to handle interruptions, power loss, or network instability. These considerations add complexity to the system design, and they must be addressed thoughtfully to ensure a reliable final solution.

In summary, while OTA updates introduce additional technical hurdles, their benefits in terms of scalability, maintainability, and long-term device reliability often outweigh the risks, provided that the implementation is secure, well designed, and aligned with the needs of the application.

4. Results

The SENNSE platform allows researchers to acquire data from sensor networks installed on monuments, buildings, and archeological sites and to display them on customizable dashboards. This enables not only accurate, timely monitoring but also the display of information tailored to each user’s needs and scientific scope. At present, the platform is operating in controlled and public environments to test its functionality in real use cases.

For this purpose, two environments have been selected for monitoring. The data and considerations resulting from these two installations will help refine the functionalities implemented in the SENNSE platform and improve the features of the sensor nodes.

The first case concerns the monitoring of environmental parameters at our institute. This is a “controlled case”, in which the sensor nodes are installed in locations that impose no environmental or infrastructural constraints: power supply and Wi-Fi are available, and there is no excessive humidity, mould, or risk of flooding. Moreover, the environmental parameters are regulated by the air-conditioning and heating system, so they always fall within a defined range of values.

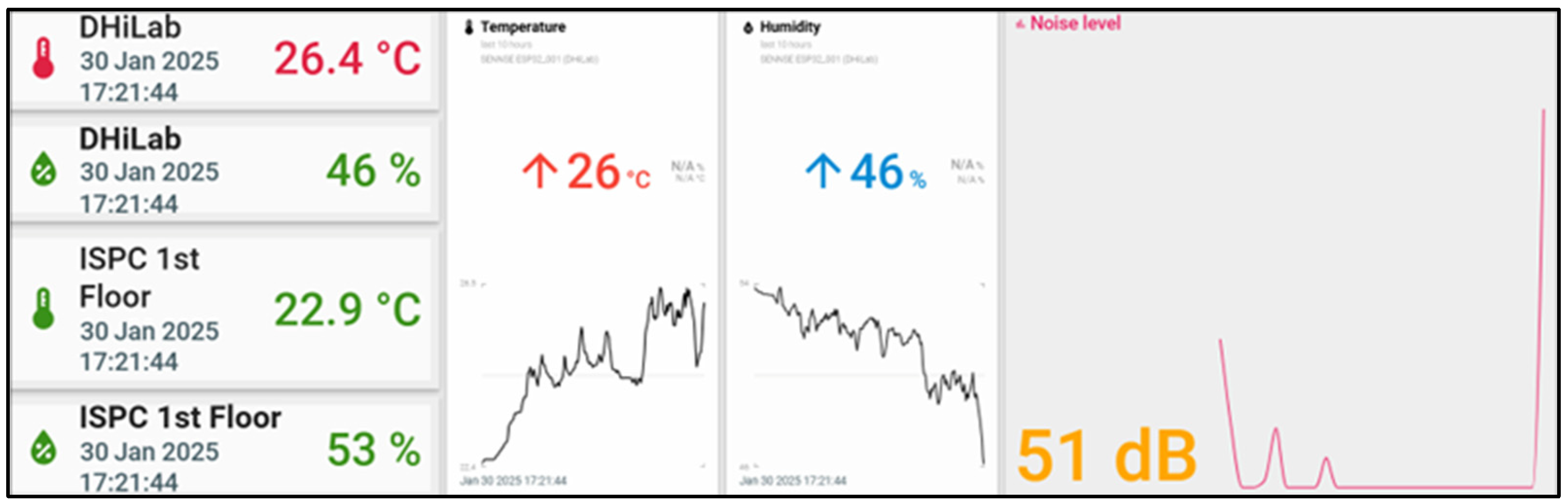

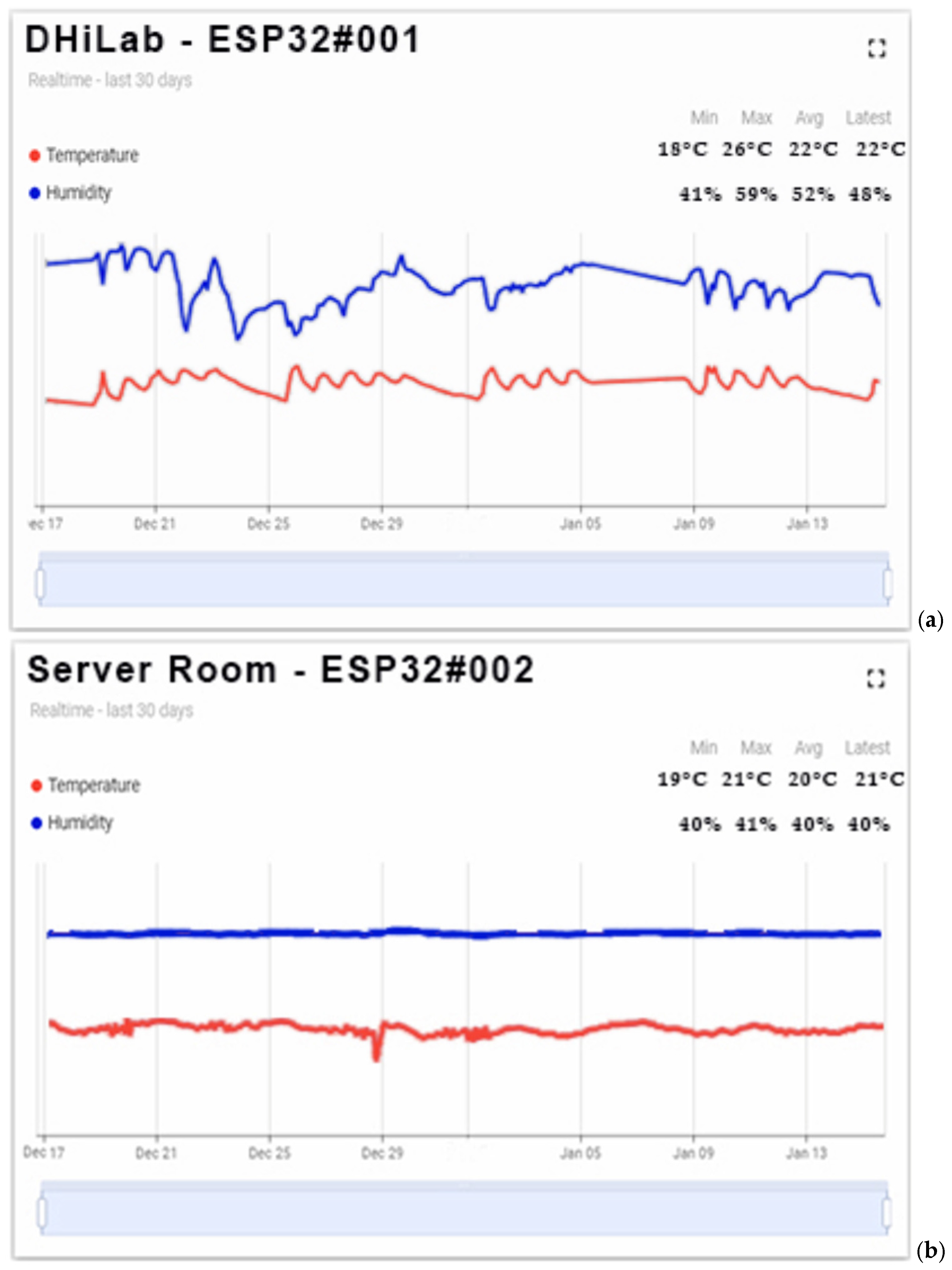

Figure 9 shows the dashboard displaying the data. The dashboard provides a rapid, comprehensive view of the environmental data collected by the sensors and of their evolution over time. It is composed of a series of widgets, chosen and customized according to the data to be displayed.

Figure 10a shows a detail of the dashboard: the graphs represent data from two humidity/temperature sensors, one placed in our lab (the DHiLab) and one in the server room. Blue lines represent humidity and red lines represent temperature. In the first graph, the values are more variable, and the temperature shows a visible periodic pattern that alternates between daytime hours, when people are present and the heating system is on, and night hours. By contrast, the temperature in the server room is stable at around 20 °C because it is controlled by an air-conditioning system that runs 24 h a day.

The second case concerns monitoring at the “Biblioteca Nicola Bernardini” in Lecce, Italy. This work-in-progress project will represent the “standard case”.

In this preliminary phase, we inspected some rooms of the library, in particular those housing important manuscripts dating from the 14th to the 18th centuries. The goal is to assess the environmental conditions in which these manuscripts are currently preserved and to establish whether parameters such as temperature, humidity, and air quality fall within the values suitable for the long-term conservation of the materials.

The preventive inspection of the library identified electrical sockets on each of the three levels, which ensure a constant power supply. This allows long-term operation without having to design batteries and the related charging circuits into the sensor node; a further advantage is that, without such power circuitry, the heat dissipated by the node remains quite limited.

As regards the Internet connection, we ascertained the presence of several totems inside the examined room that host Wi-Fi repeaters; during the installation phase, we will identify the locations that provide the most stable and constant connection. Since the IP address distribution system allows only a limited number of connections and the room is used daily by many students, the sensor nodes will be assigned fixed, priority addresses so that they do not lose connectivity when many users are connected.
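Checking whether the measured conditions are suitable for conservation reduces to comparing readings against per-parameter limits. The sketch below assumes readings arrive as name/value pairs; the parameter names and numeric limits are placeholders chosen only for illustration, not conservation recommendations, and the actual targets would come from the applicable conservation guidelines.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Range:
    low: float
    high: float

    def contains(self, value: float) -> bool:
        return self.low <= value <= self.high


def out_of_range(reading: Dict[str, float], limits: Dict[str, Range]) -> Dict[str, float]:
    """Parameters of a single reading that fall outside their configured range."""
    return {name: value for name, value in reading.items()
            if name in limits and not limits[name].contains(value)}


def share_within(series: List[float], limit: Range) -> float:
    """Fraction of readings in a time series that fall inside the range."""
    if not series:
        return 0.0
    return sum(limit.contains(v) for v in series) / len(series)


# Placeholder limits for illustration only, NOT conservation recommendations.
LIMITS = {"temperature_c": Range(18.0, 22.0), "relative_humidity_pct": Range(45.0, 60.0)}
```

Parameters without a configured range (for example, a CO2 reading) are simply ignored by `out_of_range`.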

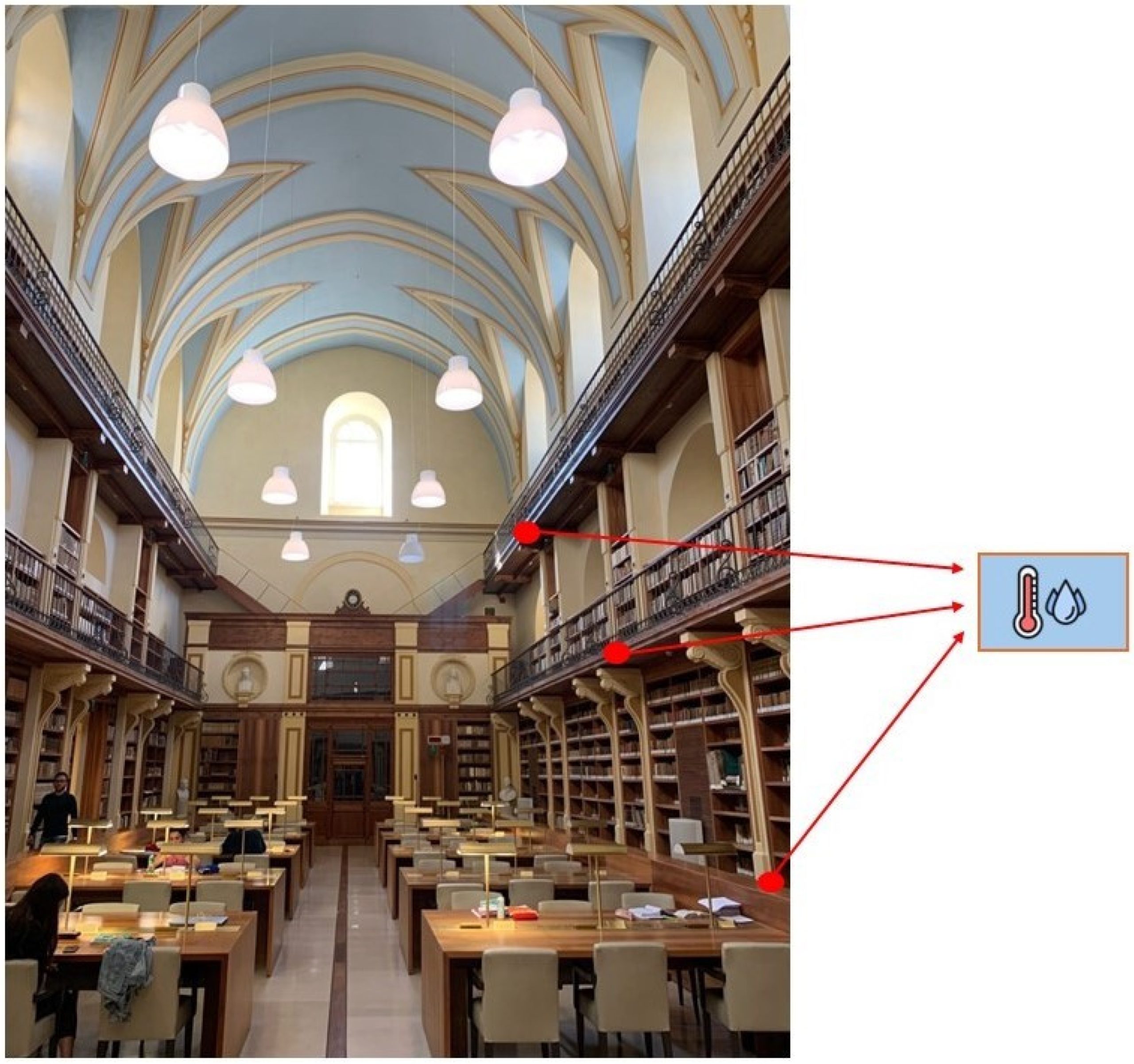

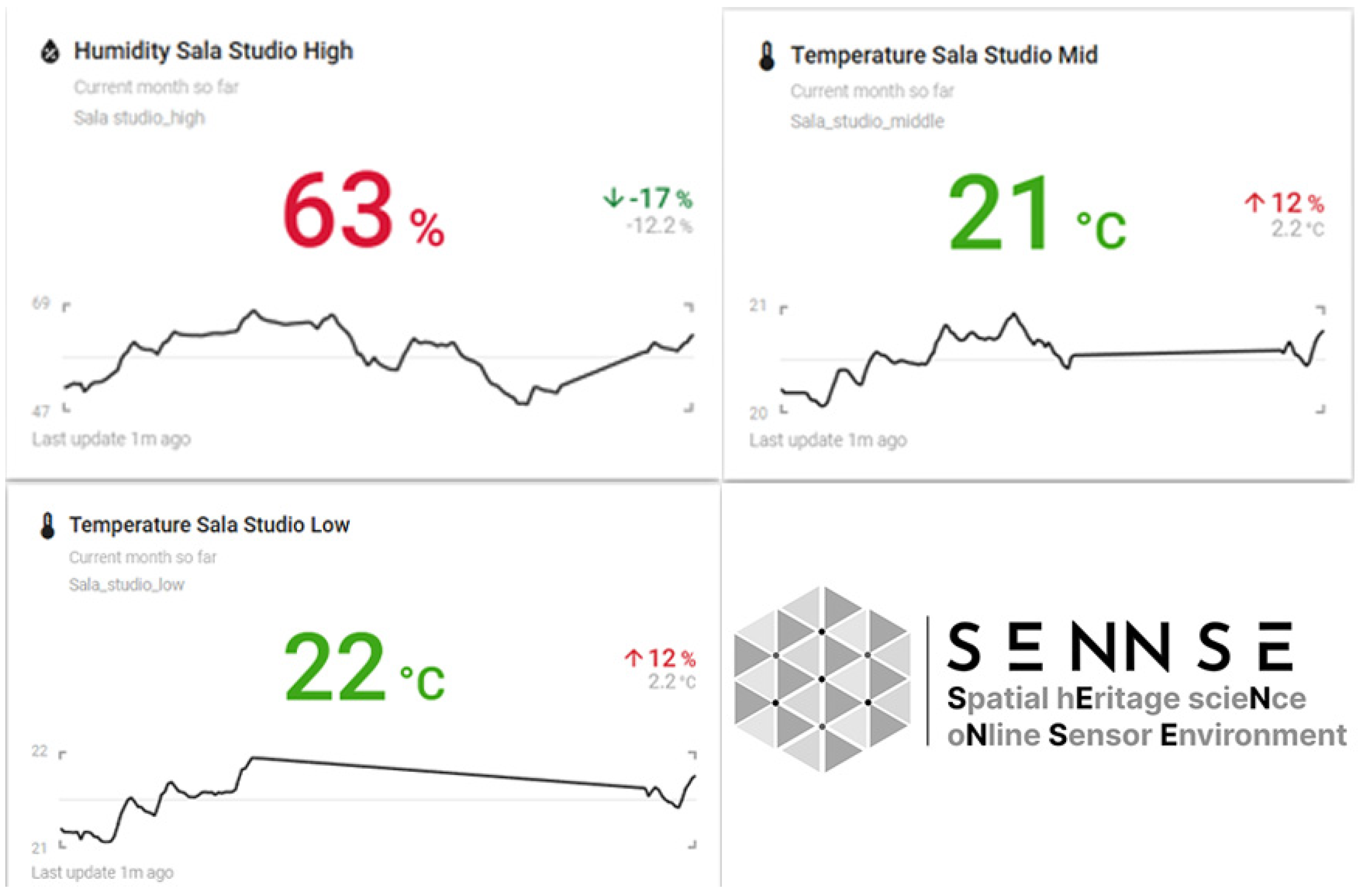

The main room, shown in Figure 11, is the one we focused on. The room extends vertically over three levels, and a sensing node is placed on each level, with special attention paid to hiding it from view. The library dashboards and acquired data are shown in Figure 12.

Future activities will involve installing additional sensors of different types for environmental monitoring and connecting them to the SENNSE platform.