Reshaping Museum Experiences with AI: The ReInHerit Toolkit

Abstract

1. Introduction

1.1. Results from ReInHerit Research on Digital Tools

1.2. Demographics

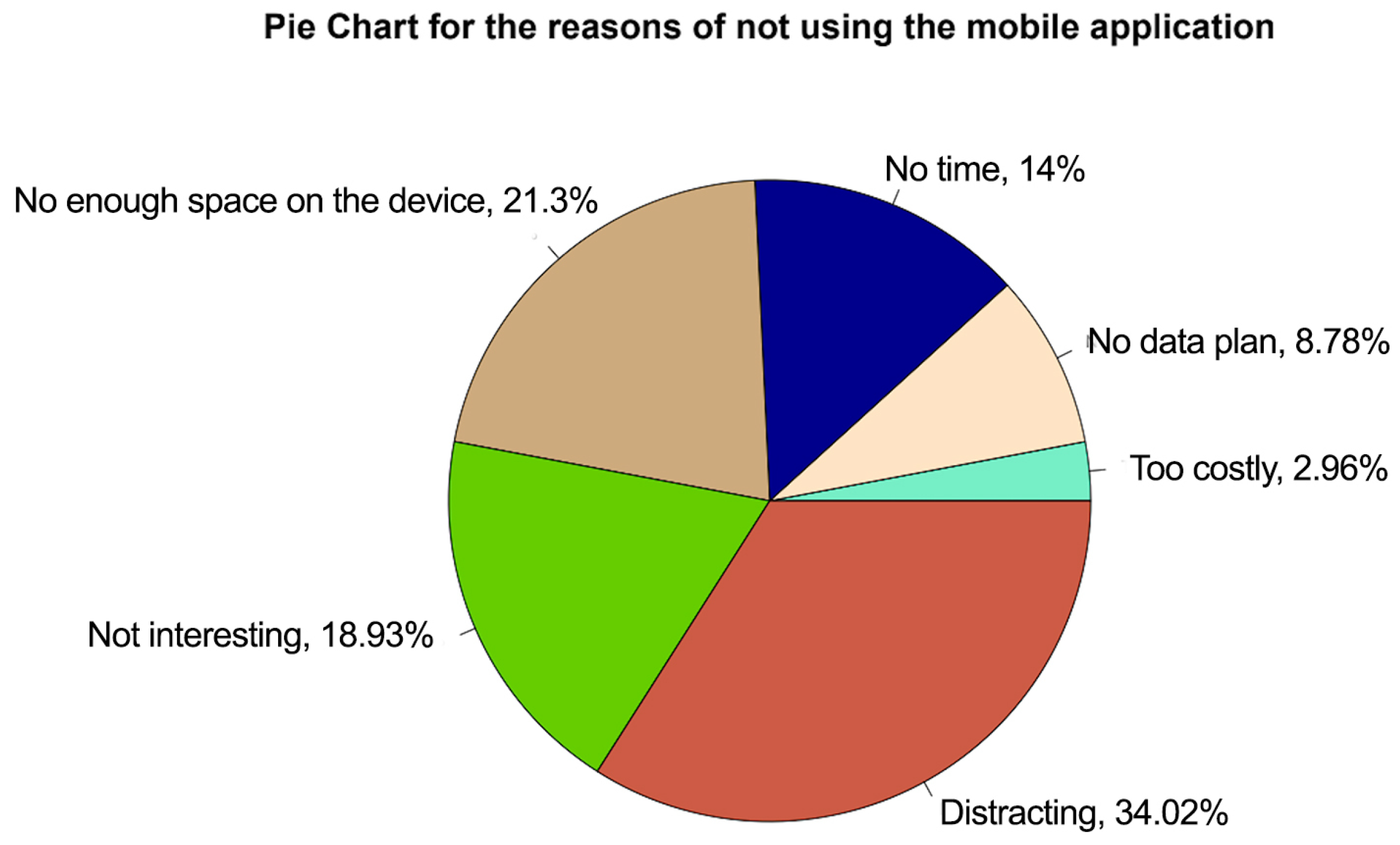

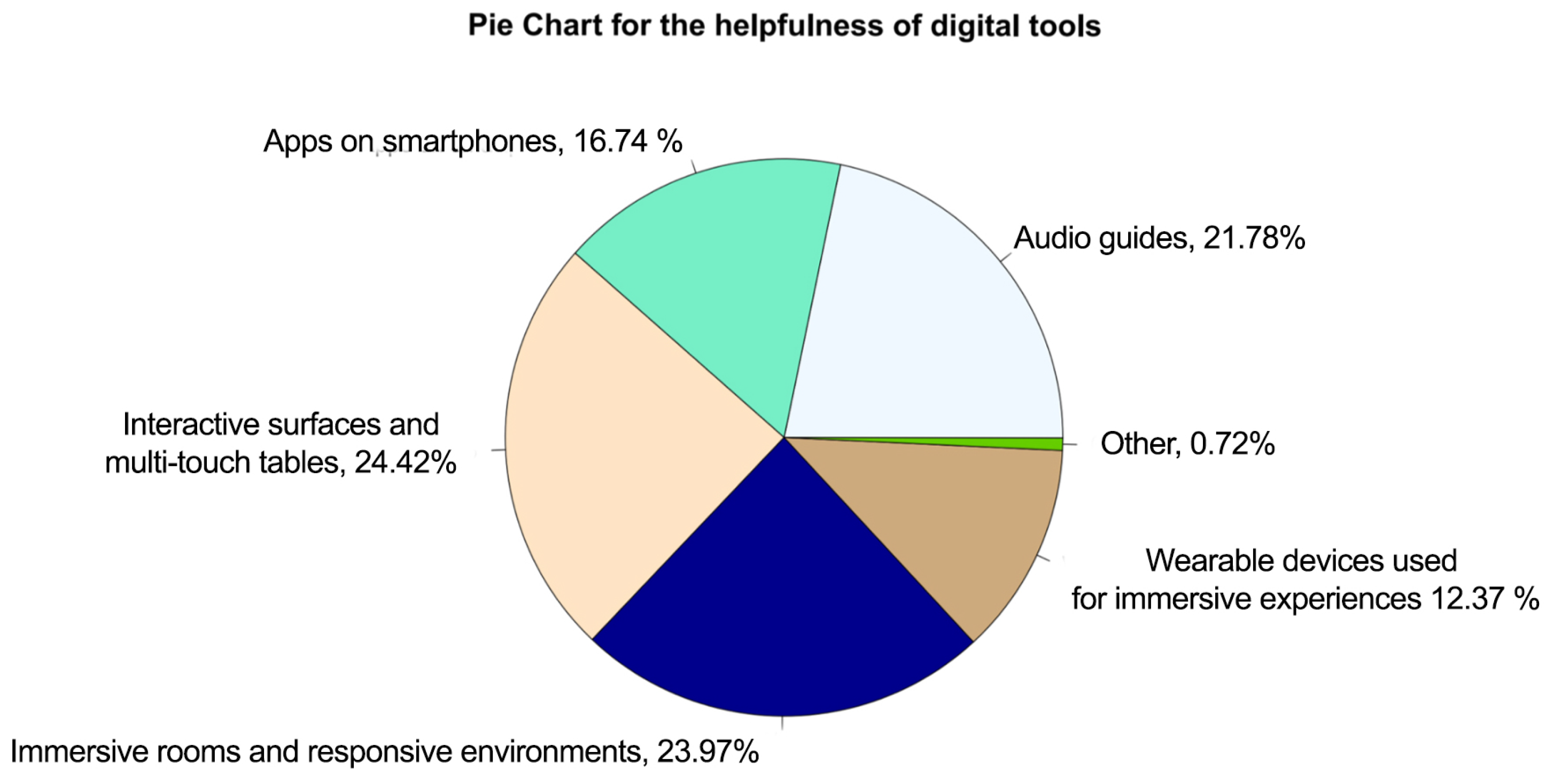

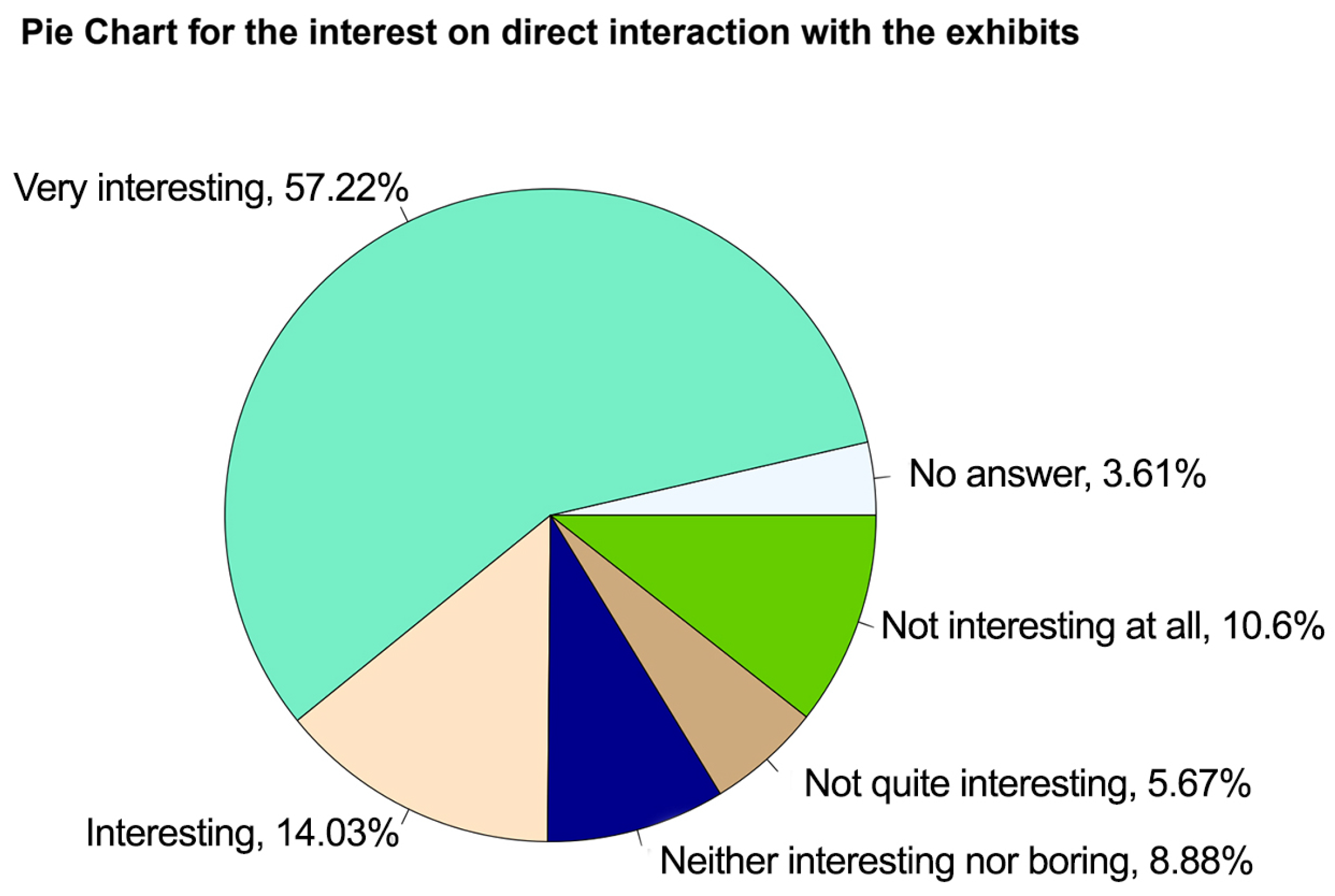

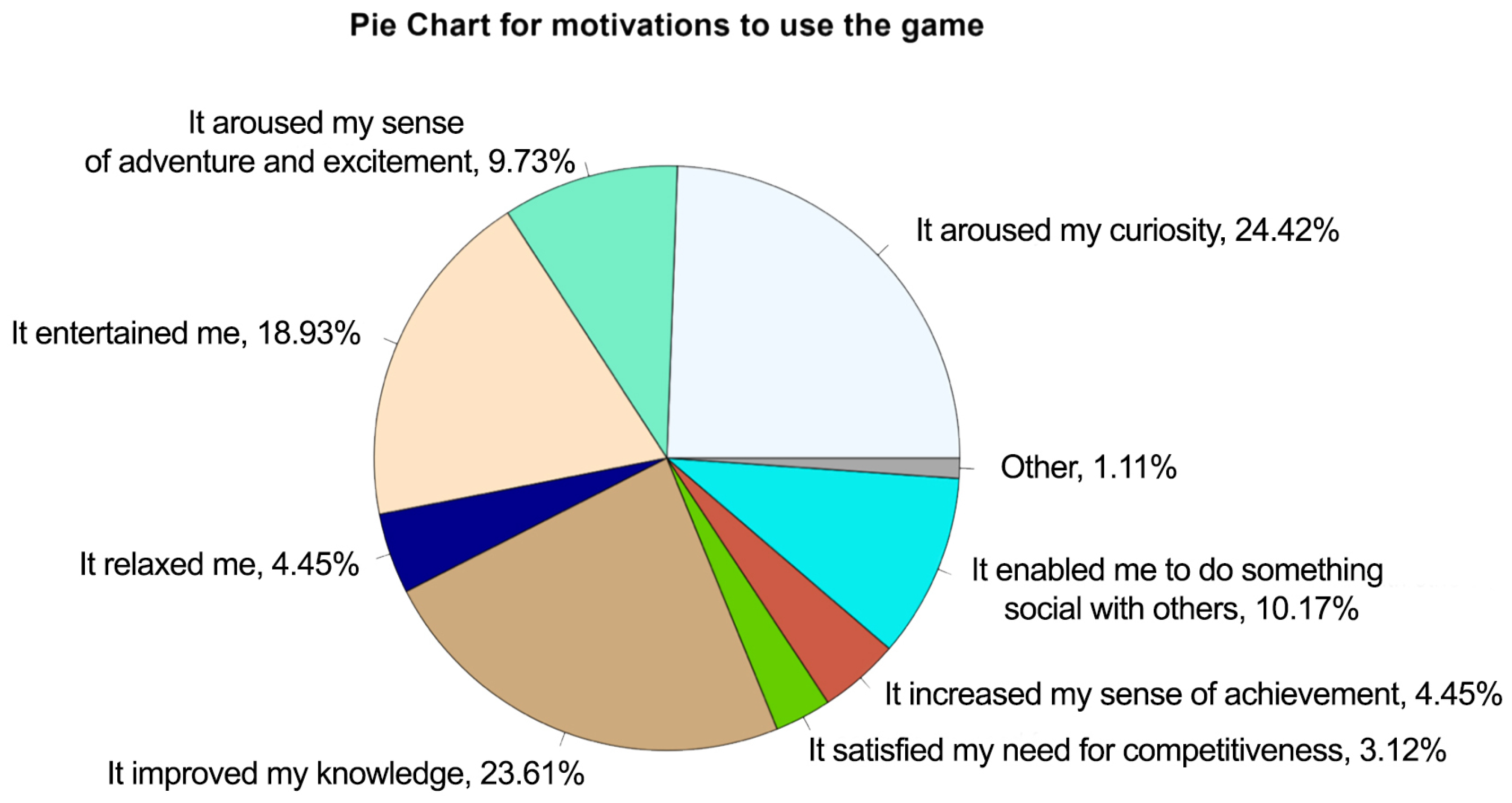

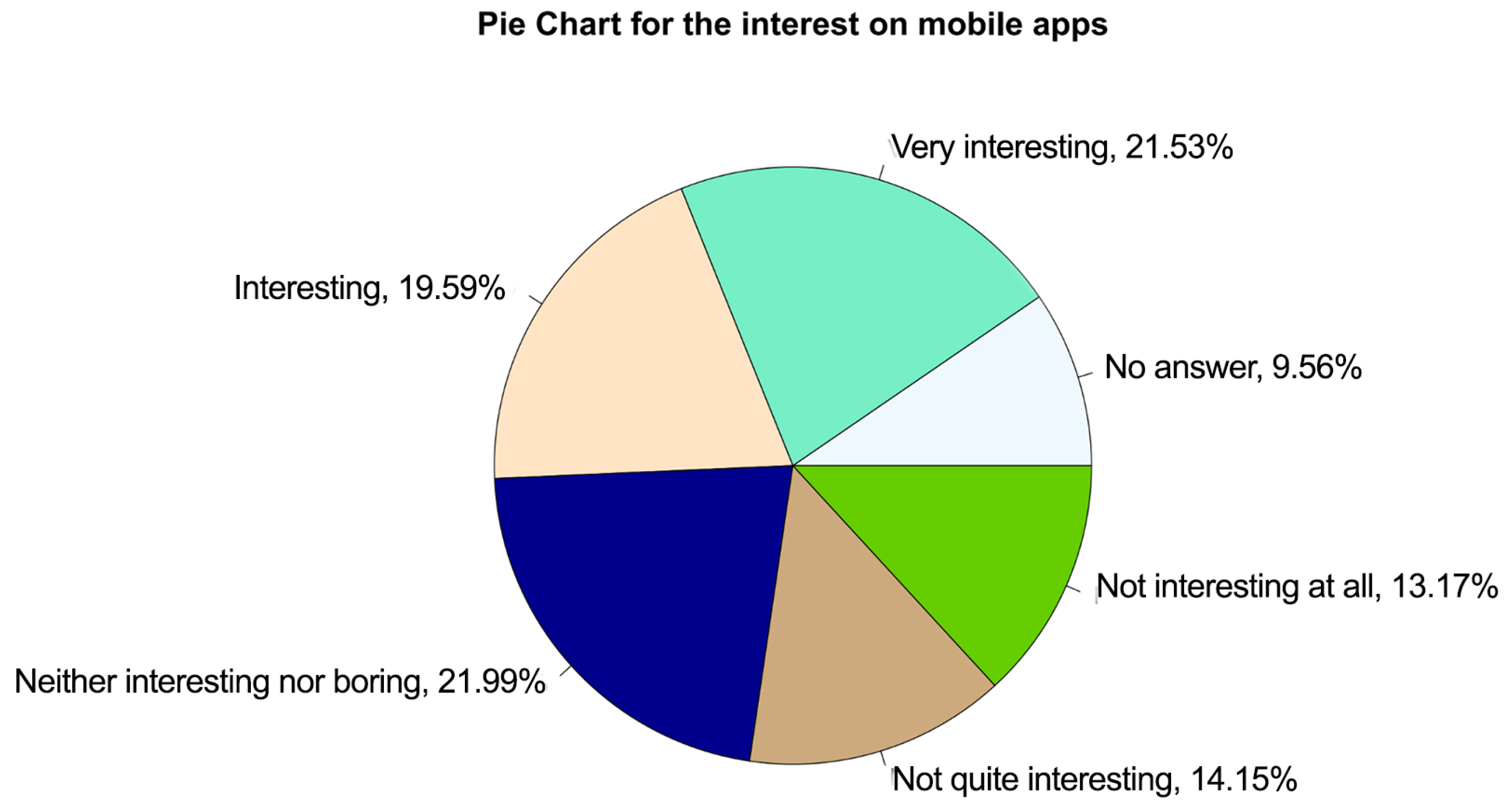

1.3. Visitor Preferences

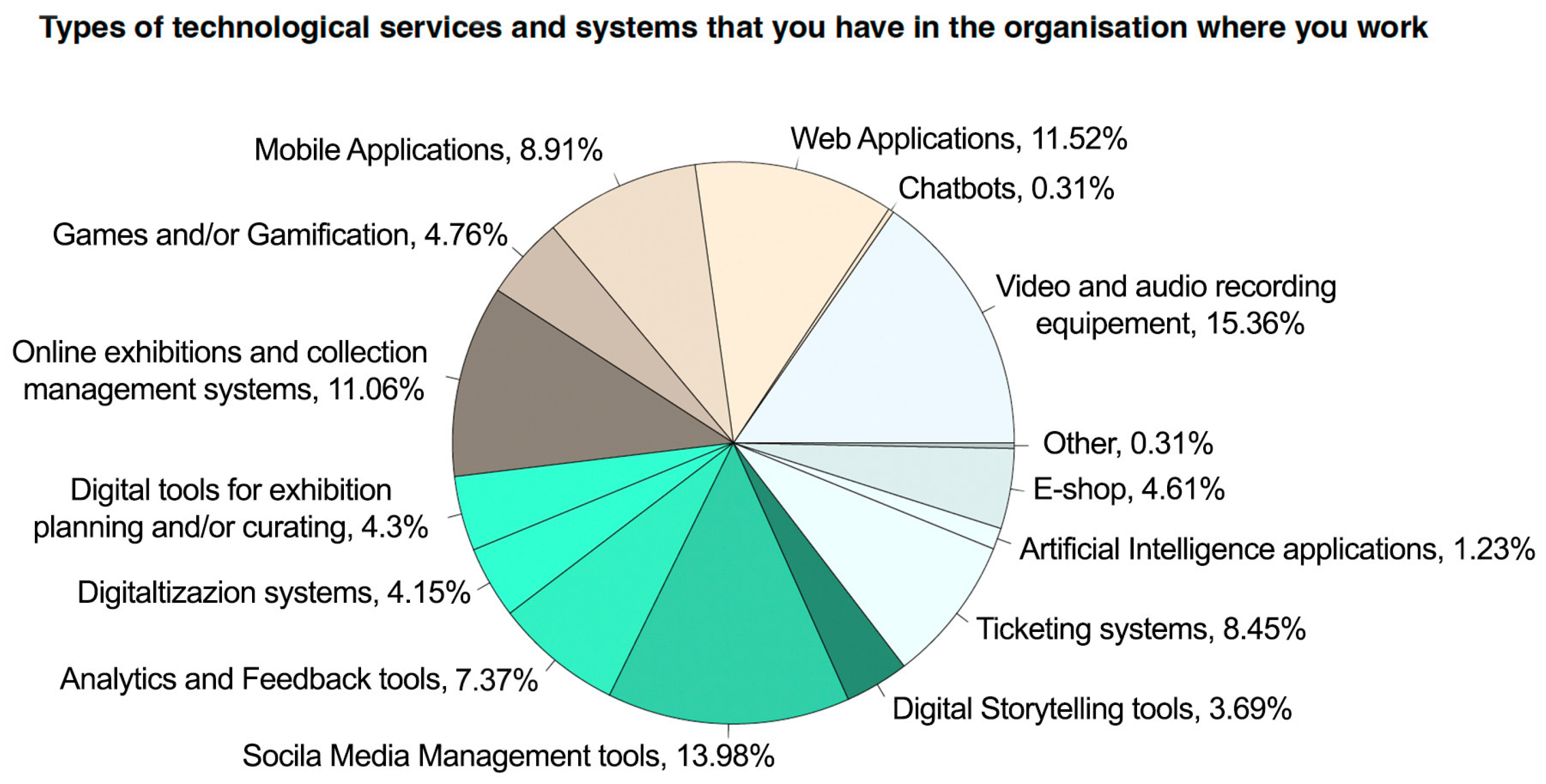

1.4. Heritage Professionals’ Needs

- In total, 67.33% of museums and CH sites rely on standard ICT tools, while only 33% use innovative ICT tools. This highlights a need for integrating more innovative tools into the sector to improve visitor engagement and experience.

- The analysis revealed that smaller organizations are more likely to rely on standard ICT tools and face greater challenges in adopting innovative solutions. This underscores the need for a shared digital platform that can support museums of all sizes, offering solutions tailored to their specific needs.

- AI and gamification tools (e.g., chatbots and digital storytelling) were identified as important but rarely used. These tools can enhance visitor interaction and engagement, making them crucial for future development.

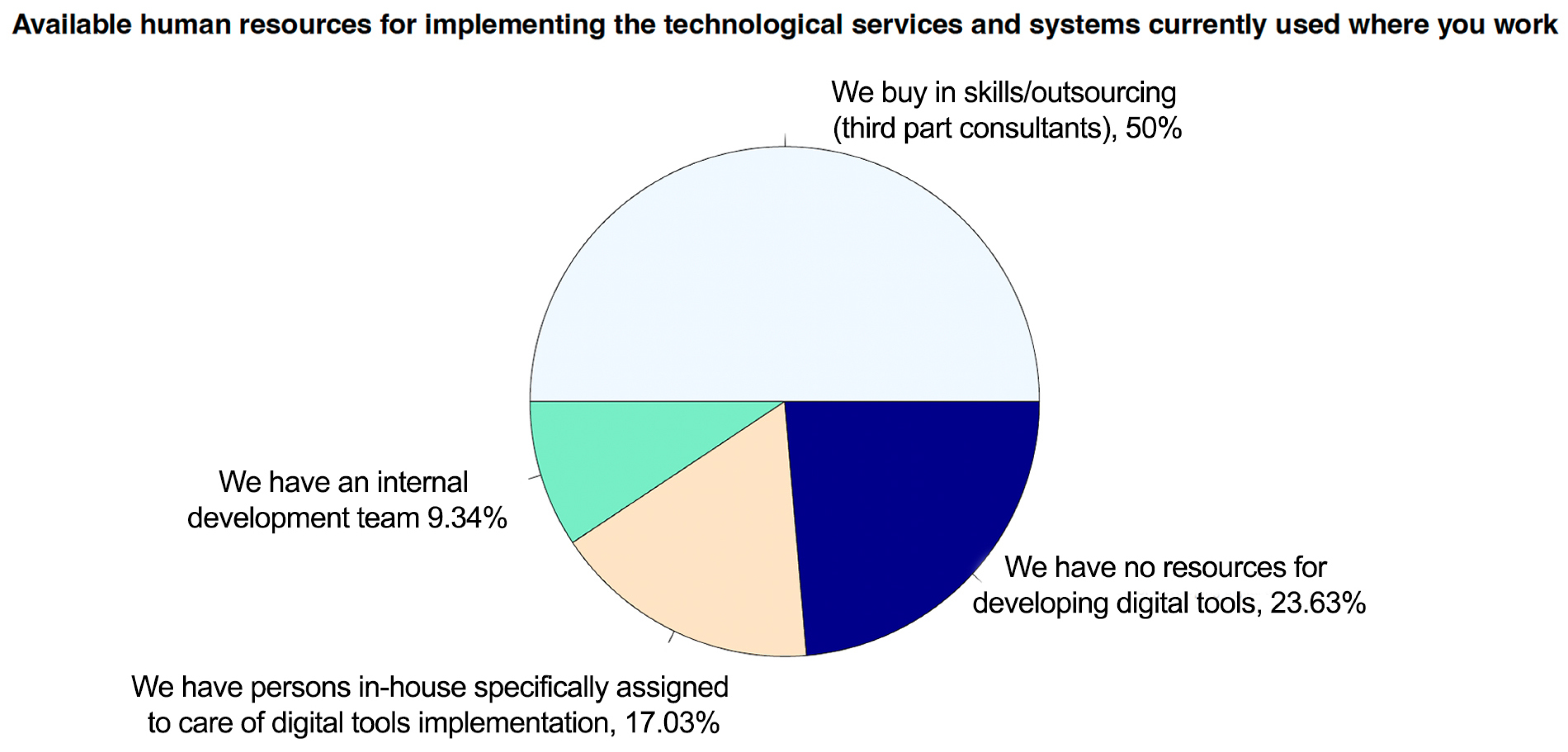

- Human resources: Most organizations do not employ dedicated professionals for technological implementation. Instead, they rely on third-party consultants or lack the resources to develop digital tools internally. This indicates a need for training and upskilling heritage professionals to become active agents in the digital transformation of cultural heritage institutions (Figure 9).

2. Materials and Methods

2.1. Insights on AI and Museums

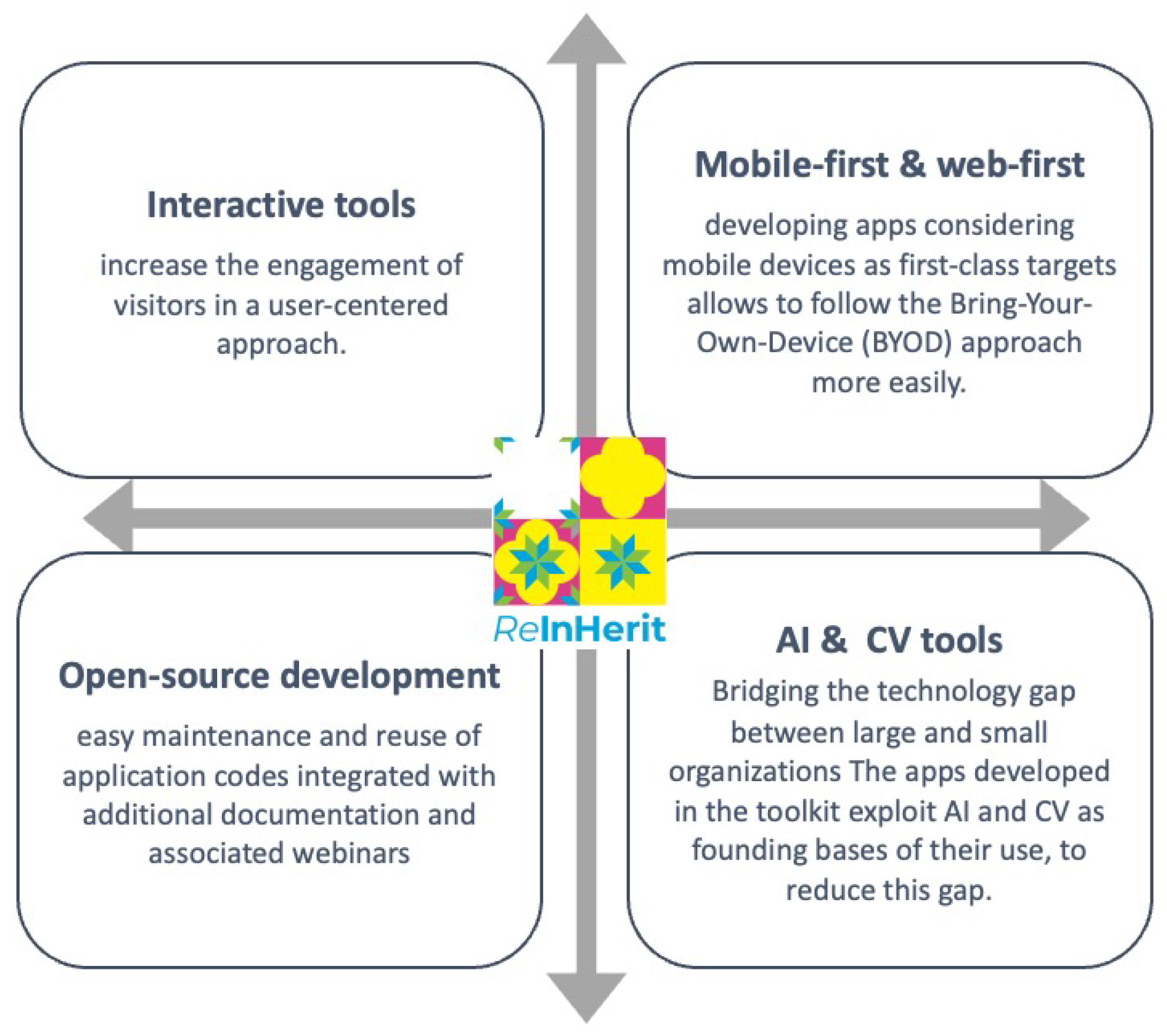

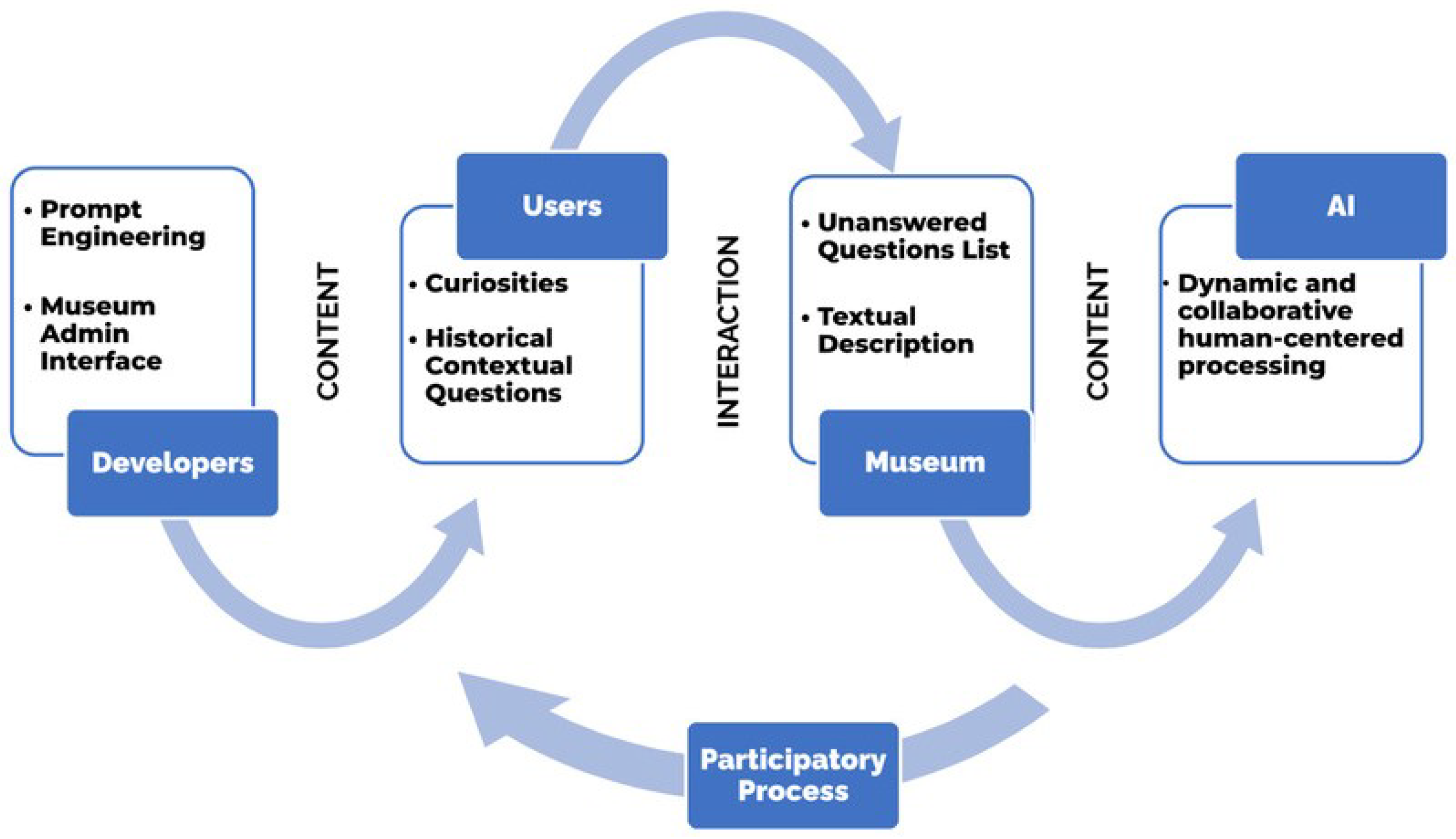

2.2. The ReInHerit Toolkit Method

3. Results

3.1. Strike-a-Pose

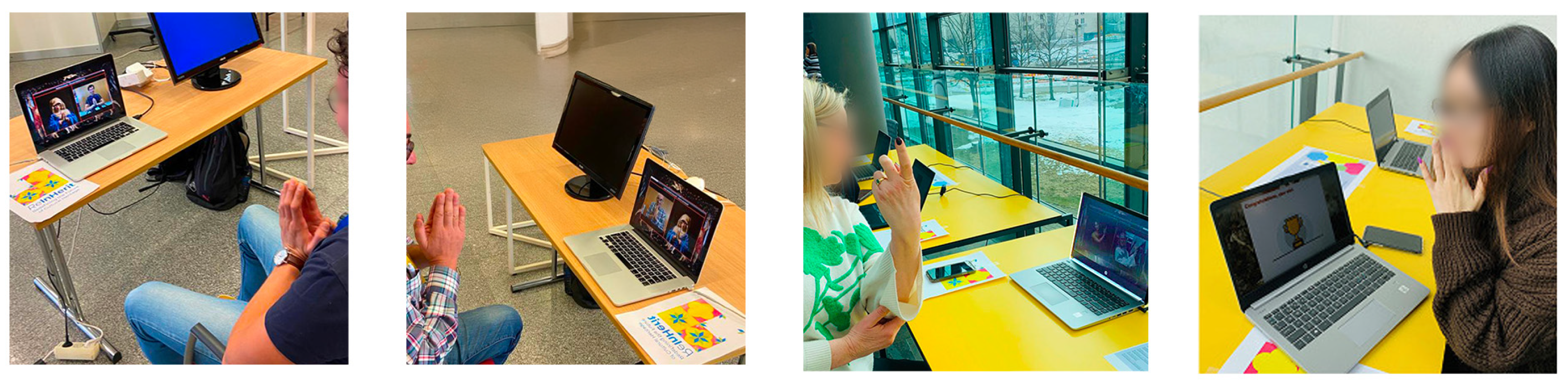

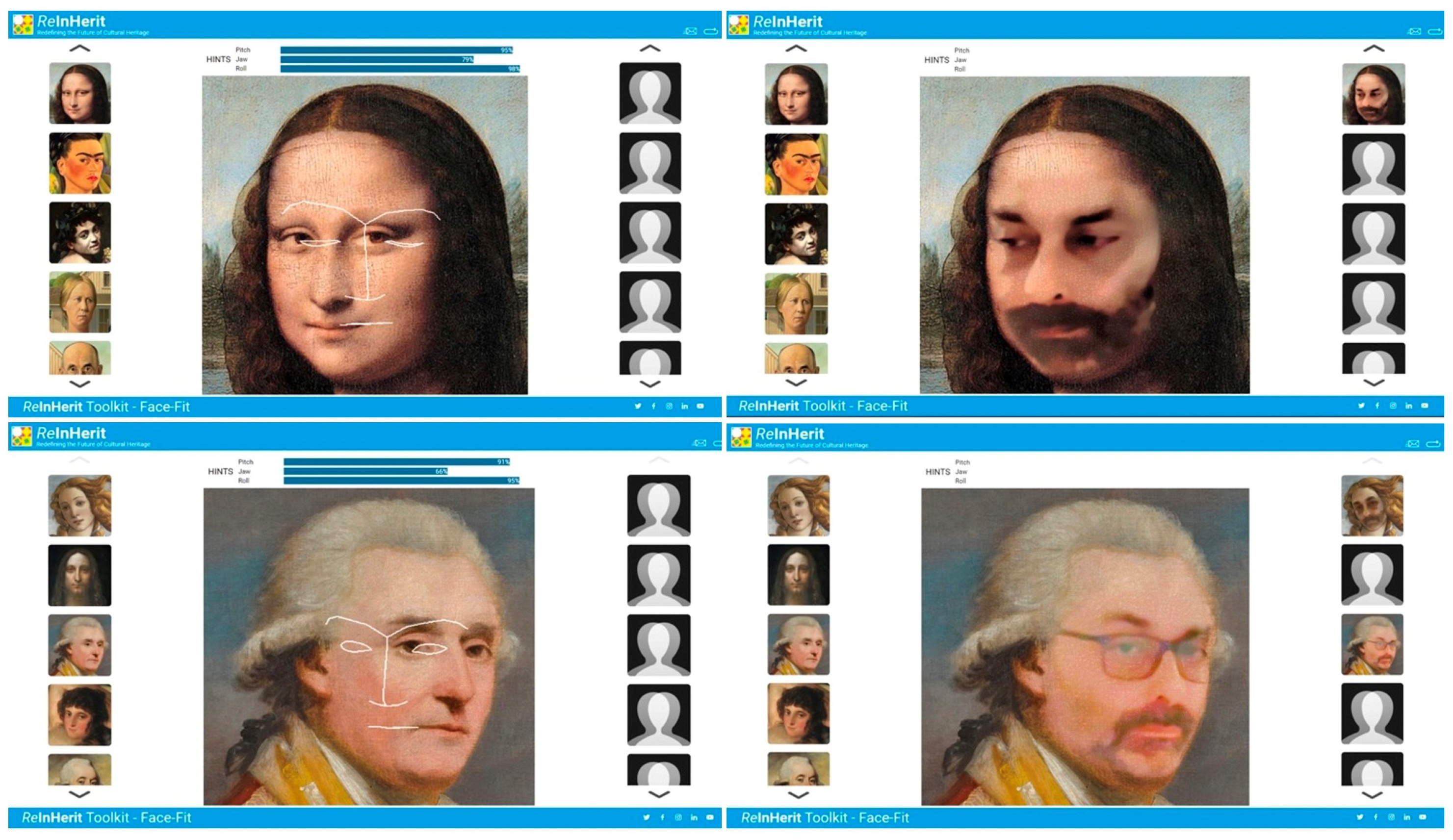

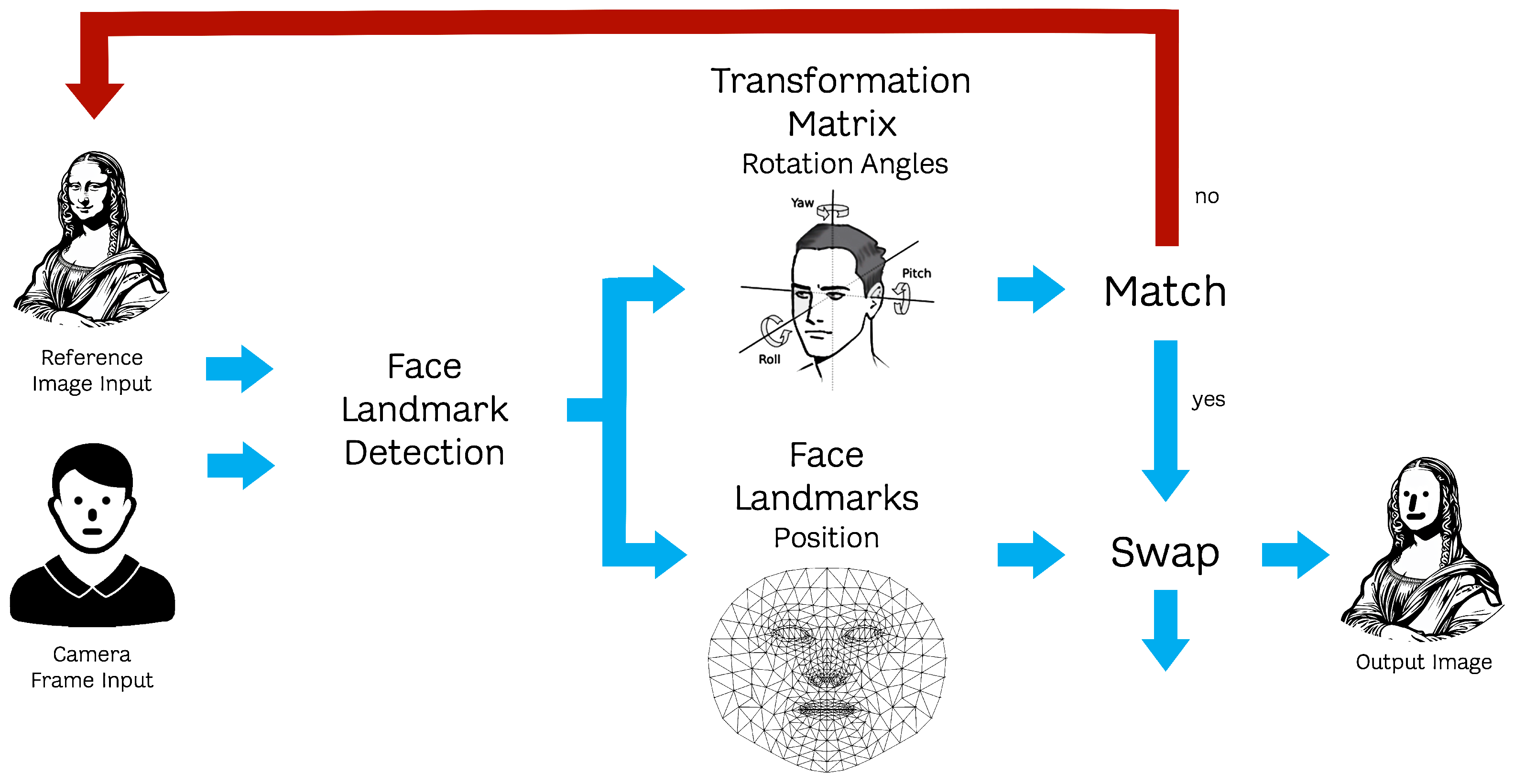

3.2. Face-Fit

- To implement these experiences as challenges that enhance visitor engagement and provide personalized takeaways of the visit, encouraging post-visit exploration.

- To generate user-created content that can amplify engagement on social media platforms.

- To employ advanced AI methods optimized for mobile execution, supporting a BYOD strategy for widespread accessibility.

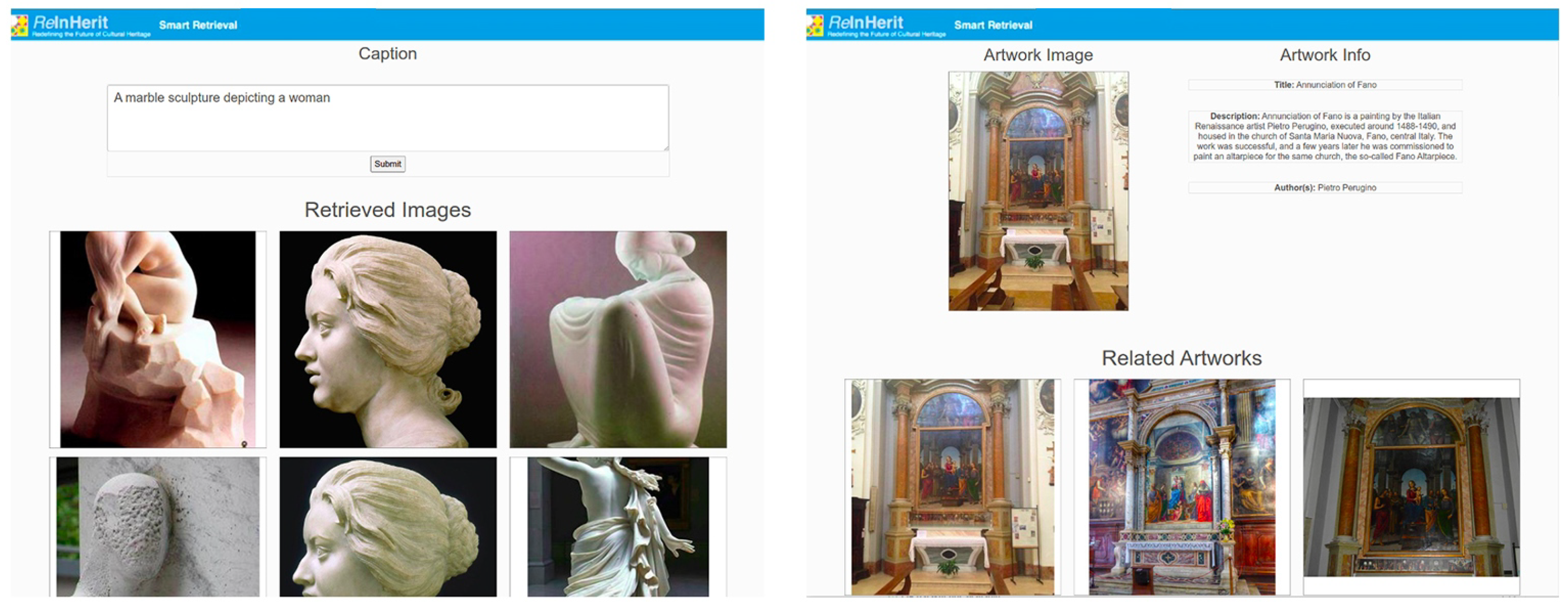

3.3. Using CLIP for Artwork Recognition and Image Retrieval

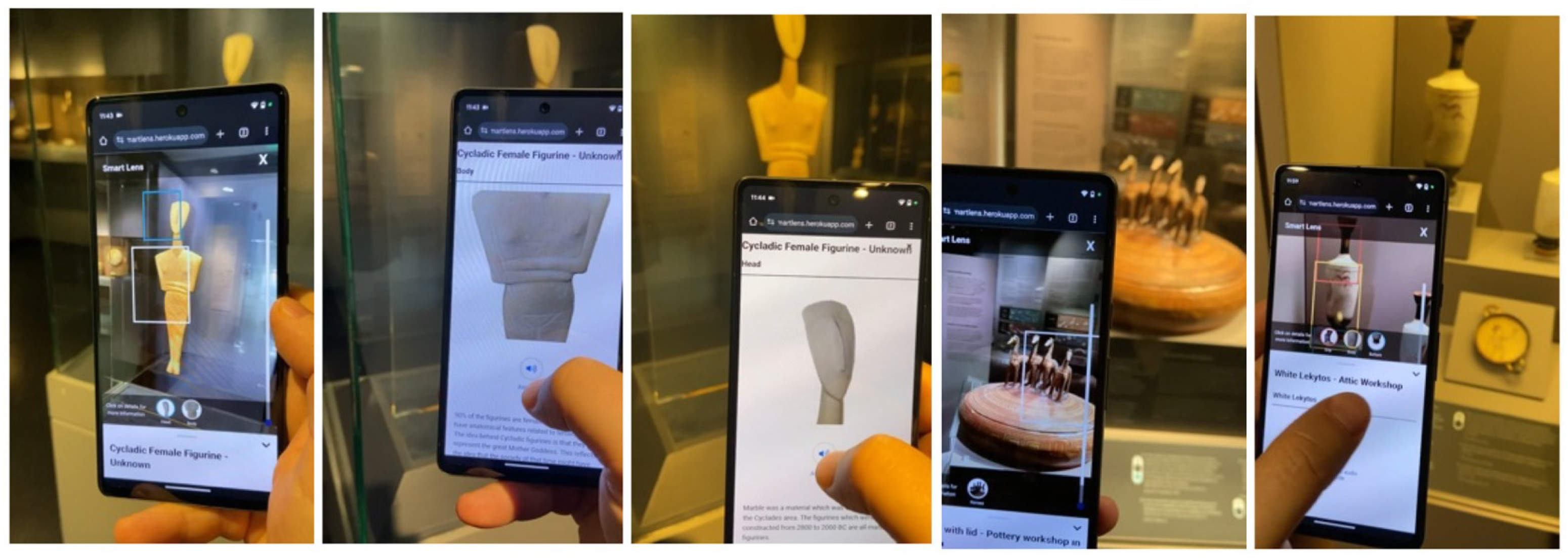

3.4. Smart Lens

- Content-based Image Retrieval (CBIR)—This method compares visual descriptors from the user’s live camera feed with those extracted from a curated image dataset. Each artwork is not only represented as a whole but also partitioned into segments so that fine-grained features can be detected and matched efficiently.

- Classification—A neural network model, specifically fine-tuned for the collection, assigns a class label to the input frame based on overall appearance. The recognition result is accepted only if the confidence score exceeds a designated threshold. This lightweight solution is ideal for running directly on mobile devices.

- Object Detection—In this mode, the system pinpoints and labels multiple details within a single artwork using bounding boxes. The underlying model, optimized for detecting artwork-specific elements, selects only those regions whose confidence level meets the predefined criteria. This approach is particularly suited for complex works with multiple visual components.
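
The three recognition modes above can be illustrated with a minimal sketch. It is not the Smart Lens implementation: the function names, the use of cosine similarity for descriptor matching, the `min_similarity` cut-off for retrieval, and all threshold values are assumptions made for illustration, and the descriptors and scores are taken as already computed by some model.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length descriptor vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def retrieve(query, index, min_similarity=0.8):
    """CBIR: match a query descriptor against whole-image and segment descriptors.

    `index` maps an artwork id to a list of descriptors (the whole image
    plus its segments). `min_similarity` is an assumed cut-off.
    """
    best_id, best_score = None, min_similarity
    for artwork_id, descriptors in index.items():
        for descriptor in descriptors:
            score = cosine_similarity(query, descriptor)
            if score >= best_score:
                best_id, best_score = artwork_id, score
    return best_id

def classify(scores, threshold=0.7):
    """Classification: accept the top label only if its confidence exceeds the threshold."""
    label, confidence = max(scores.items(), key=lambda item: item[1])
    return label if confidence > threshold else None

def filter_detections(detections, threshold=0.5):
    """Object detection: keep only the boxes whose confidence meets the threshold."""
    return [d for d in detections if d["confidence"] >= threshold]
```

In each mode a low-confidence result is discarded (`None` or an empty list) rather than shown to the visitor, which mirrors the thresholding described in the list above.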

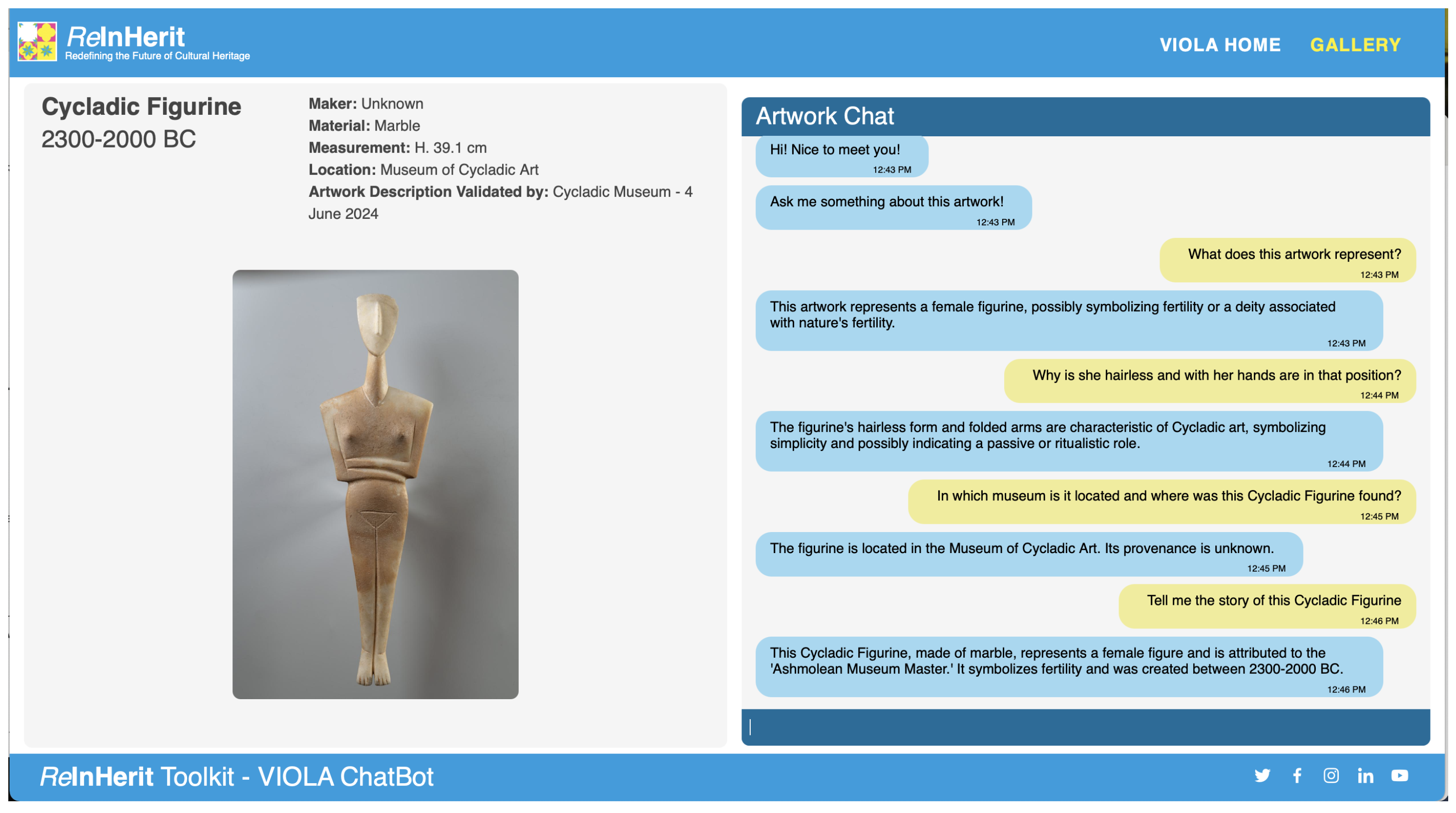

3.5. Multimedia Chatbot: VIOLA

- A neural network classifies the user’s query, determining whether it pertains to the visual content or the contextual aspects of the artwork.

- A question-answering (QA) neural network uses contextual information about the artwork, stored in JSON format, to address questions related to its context.

- A visual question-answering (VQA) neural network processes the visual data and the visual description of the artwork, stored in JSON format, to answer questions about the content of the artwork [75].
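
The routing between the three components above can be sketched as follows. This is a toy illustration, not VIOLA's code: the JSON field names, the sample artwork record, and the keyword-based stand-ins for the classifier, QA, and VQA networks are all hypothetical, chosen only to show how a question is dispatched to one of two answering paths.

```python
import json
import re

# Hypothetical artwork record; the field names are assumptions.
ARTWORK_JSON = """
{
  "title": "Example Artwork",
  "context": {"artist": "Unknown artist", "year": "c. 1500"},
  "visual_description": "A seated woman holding a book beside a window."
}
"""

# Assumed cue words that mark a question as being about visual content.
VISUAL_CUES = {"see", "color", "colour", "holding", "wearing", "depicted", "background"}

def tokenize(question):
    """Lowercase the question and split it into alphabetic words."""
    return set(re.findall(r"[a-z]+", question.lower()))

def classify_question(question):
    """Stand-in for the neural question classifier: 'visual' or 'contextual'."""
    return "visual" if tokenize(question) & VISUAL_CUES else "contextual"

def answer_contextual(question, artwork):
    """Stand-in for the QA network: return the context field named in the question."""
    tokens = tokenize(question)
    for key, value in artwork["context"].items():
        if key in tokens:
            return value
    # No field matched: fall back to all available context.
    return "; ".join(f"{k}: {v}" for k, v in artwork["context"].items())

def answer_visual(question, artwork):
    """Stand-in for the VQA network: here it only returns the stored description."""
    return artwork["visual_description"]

def answer(question, artwork):
    """Classify the question, then dispatch it to the matching answering path."""
    route = classify_question(question)
    if route == "visual":
        return route, answer_visual(question, artwork)
    return route, answer_contextual(question, artwork)
```

A call such as `answer("Who is the artist?", json.loads(ARTWORK_JSON))` takes the contextual path, while a question containing one of the assumed visual cue words takes the visual path.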

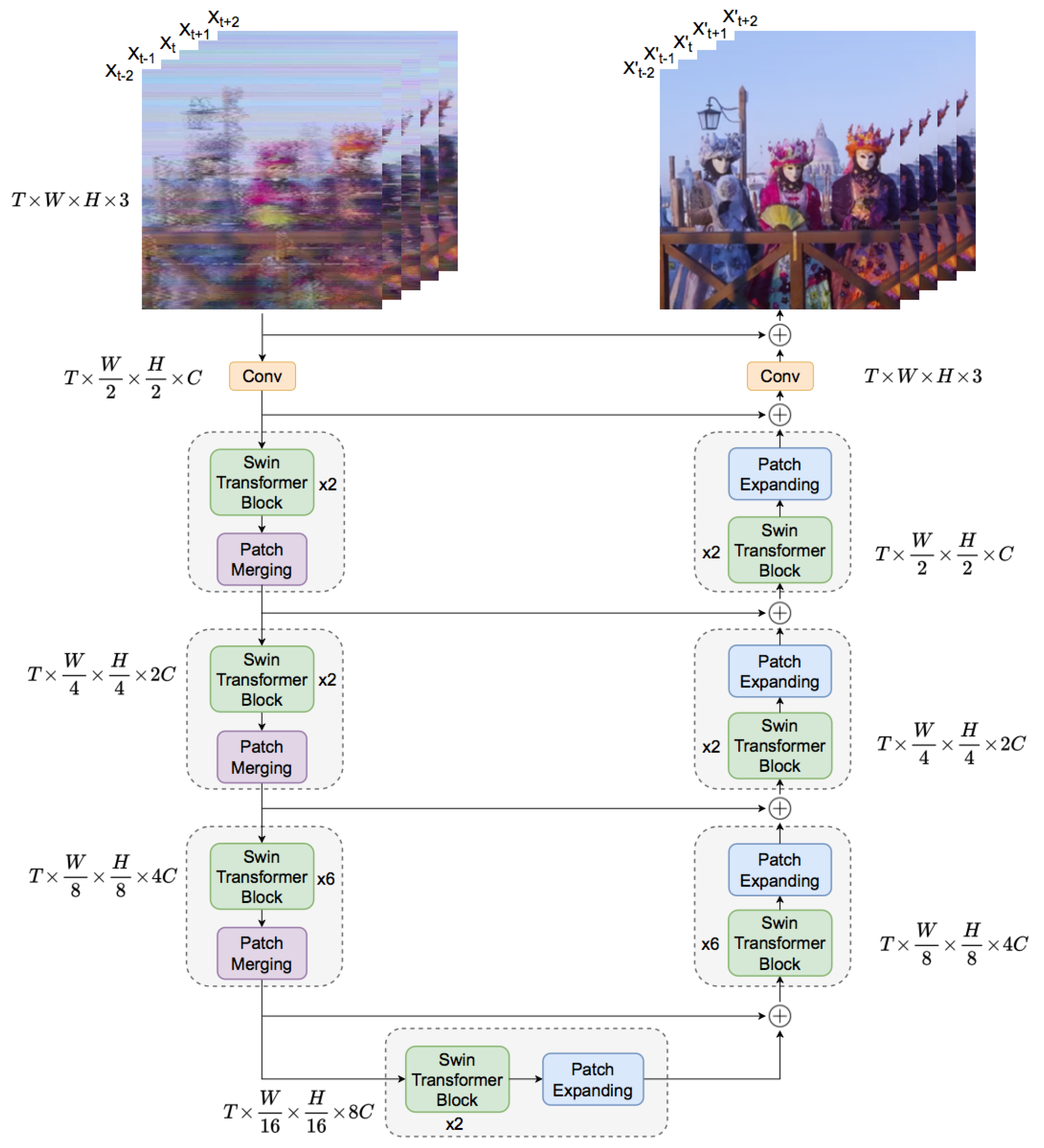

3.6. Smart Video and Photo Restorer

4. Discussion

5. Challenges and Future Directions

- Transferability and reuse: Several applications from the toolkit (e.g., Strike-a-Pose, Face-Fit, Smart Lens, VIOLA Chatbot, and Smart Retrieval) have been adapted and reused by institutions within and beyond the original consortium. This reusability and portability are strong indicators of technical and conceptual robustness.

- Capacity building and skill development: The toolkit has contributed to the training of museum professionals and early-career researchers. Success was measured through the creation and adoption of syllabi, workshops, and curricular resources, as well as through qualitative feedback and reflection activities conducted during and after piloting.

- Scientific recognition and peer-reviewed contributions: Components of the toolkit were recognized at major international conferences (e.g., ACM Multimedia and CVPR) and contributed to scholarly publications in the fields of HCI, computer vision, and digital museology.

- Open-source impact: Public repositories on platforms like GitHub have shown sustained interest, as reflected in downloads, stars, forks, and issues raised or resolved by external contributors. However, adapting an open-source application still requires an investment of effort and technical capability from the adopting organization: these open-source systems greatly lower the cost of implementing innovative applications but cannot eliminate it entirely.

- Institutional integration: Success was also measured by the degree of integration of ReInHerit tools within the curatorial or educational strategies of participating museums, demonstrating long-term adoption potential.

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| API | Application Programming Interface |
| BYOD | Bring Your Own Device |
| CBIR | Content-based Image Retrieval |
| CHAT | Conversational Human Agent Training |
| CH | Cultural Heritage |
| CIR | Combined Image Retrieval |
| CLIP | Contrastive Language-Image Pre-Training |
| CSS | Cascading Style Sheets |
| CV | Computer Vision |
| GDPR | General Data Protection Regulation |
| GPU | Graphics Processing Unit |
| GPT | Generative Pre-trained Transformer |
| HCI | Human–Computer Interaction |
| HTML | HyperText Markup Language |
| ICT | Information and Communication Technologies |
| ISO | International Organization for Standardization |
| LLMs | Large Language Models |
| LPIPS | Learned Perceptual Image Patch Similarity |
| ML | Machine Learning |
| MLLMs | Multimodal Language Models |
| NGOs | Non-Governmental Organizations |
| NLP | Natural Language Processing |
| PSNR | Peak Signal-to-Noise Ratio |
| QA | Question Answering |
| REST | Representational State Transfer |
| SQL | Structured Query Language |
| SSD | Solid State Drive |
| SSIM | Structural Similarity Index |
| UX | User Experience |
| VGG | Visual Geometry Group |
| VQA | Visual Question Answering |

| 1 | The Hub collects resources and training material to foster and support cultural tourism in museums and heritage sites, and serves as a networking platform to connect and exchange experiences. Website: https://reinherit-hub.eu/resources, accessed on 9 July 2025. |

| 2 | ReInHerit deliv. D3.9—Training Curriculum and Syllabi—https://ucarecdn.com/095df394-fad6-4f35-bdcc-09931d0b8dd2/, accessed on 9 July 2025. |

| 3 | ReInHerit deliv. D3.1—National Surveys Report—https://ucarecdn.com/54faa991-1570-4a53-9e8a-c1dea0a33110/, accessed on 9 July 2025. |

| 4 | The national surveys complied with GDPR, ensuring full anonymization of data by not requesting personal details. Participants were also informed about the survey’s purpose and how the data would be used. Similarly, the focus groups followed strict ethical guidelines, with informed consent procedures and data anonymization carefully planned according to the project’s Ethics and Data Management Plan. An additional central aim was to ensure full adherence to GDPR standards. See ReInHerit Deliverables D2.1, D2.4, D3.1 https://reinherit-hub.eu/deliverables, accessed on 9 July 2025. |

| 5 | For more details on the selection process and the qualitative and quantitative analytical methods, see ReInHerit deliv. D2.4 Focus Groups Phase II Report—https://ucarecdn.com/4966fc16-784a-4473-b457-4ba6ba458c13/, accessed on 9 July 2025. |

| 6 | ReInHerit deliv. D3.2—Toolkit Strategy Report—https://ucarecdn.com/71ffe888-3c0d-470d-962d-ab145edcff3f/, accessed on 9 July 2025. |

| 7 | NEMO press release: https://www.ne-mo.org/news-events/article/nemo-presents-3-recommendations-addressing-the-development-of-ai-technology-in-museums, accessed on 9 July 2025. |

| 8 | ReInHerit news: https://reinherit-hub.eu/news, accessed on 9 July 2025. |

| 9 | Ethical Aspects and Scientific Accuracy of AI/CV-based tools: https://reinherit-hub.eu/bestpractices/db1bd5ab-218f-480b-b709-06ac9ab72b33, accessed on 9 July 2025. |

| 10 | ReInHerit Applications https://reinherit-hub.eu/applications/, accessed on 9 July 2025. |

| 11 | Movenet and TensorFlow.js: https://blog.tensorflow.org/2021/05/next-generation-pose-detection-with-movenet-and-tensorflowjs.html, accessed on 9 July 2025. |

| 12 | Strike-a-Pose: Co-creation process at ReInHerit Hackathon https://reinherit-hub.eu/summerschool/6205f8e2-60aa-46d2-bca3-bc46c9283029, accessed on 9 July 2025. |

| 13 | ReInHerit travelling exhibitions: https://reinherit-hub.eu/travellingexhibitions, accessed on 9 July 2025. |

| 14 | Available on the ReInHerit digital hub: https://reinherit-hub.eu/tools/apps/543b2b77-35f1-41b5-b06e-3a355f2a1c6b, accessed on 9 July 2025. |

| 15 | VIOLA Chatbot: Co-creation process at ReInHerit Hackathon https://reinherit-hub.eu/summerschool/314ed627-6f30-4d43-9428-4e55aee28066, accessed on 9 July 2025. |

| 16 | Students and researchers from different international academic backgrounds participated in the international XR/AI Summer School 2023, held from 17 to 22 July 2023 in Matera, Italy, working on the topics of extended reality and artificial intelligence. More info on ReInHerit Hackathon and project proposals: https://reinherit-hub.eu/summerschool/, accessed on 9 July 2025. |

| 17 | AI-Based Toolkit for Museums and Cultural Heritage Sites https://www.europeanheritagehub.eu/document/ai-based-toolkit-for-museums-and-cultural-heritage-sites/, accessed on 9 July 2025. |

References

- Stamatoudi, I.; Roussos, K. A Sustainable Model of Cultural Heritage Management for Museums and Cultural Heritage Institutions. ACM J. Comput. Cult. Herit. 2024. [Google Scholar] [CrossRef]

- Gaia, G.; Boiano, S.; Borda, A. Engaging Museum Visitors with AI: The Case of Chatbots. In Museums and Digital Culture: New Perspectives and Research; Giannini, T., Bowen, J.P., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 309–329. [Google Scholar] [CrossRef]

- Adahl, S.; Träskman, T. Archeologies of Future Heritage: Cultural Heritage, Research Creations, and Youth. Mimesis J. 2024, 13, 487–497. [Google Scholar] [CrossRef]

- Pietroni, E. Multisensory Museums, Hybrid Realities, Narration, and Technological Innovation: A Discussion Around New Perspectives in Experience Design and Sense of Authenticity. Heritage 2025, 8, 130. [Google Scholar] [CrossRef]

- Ivanov, R.; Velkova, V. Analyzing Visitor Behavior to Enhance Personalized Experiences in Smart Museums: A Systematic Literature Review. Computers 2025, 14, 191. [Google Scholar] [CrossRef]

- Wang, B. Digital Design of Smart Museum Based on Artificial Intelligence. Mob. Inf. Syst. 2021, 2021, 4894131. [Google Scholar] [CrossRef]

- Perakyla, A.; Sorjonen, M.L. Emotion in Interaction; Oxford University Press: Oxford, UK, 2012. [Google Scholar] [CrossRef]

- Ciolfi, L. Embodiment and Place Experience in Heritage Technology Design. In The International Handbooks of Museum Studies; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2015; Chapter 19; pp. 419–445. [Google Scholar] [CrossRef]

- Parry, R. Museums in a Digital Age; Leicester Readers in Museum Studies; Routledge: London, UK, 2010. [Google Scholar]

- Walhimer, M. Designing Museum Experiences; Rowman & Littlefield: Lanham, MD, USA, 2021. [Google Scholar]

- Cucchiara, R.; Del Bimbo, A. Visions for Augmented Cultural Heritage Experience. IEEE Multimed. 2014, 21, 74–82. [Google Scholar] [CrossRef]

- MuseumBooster. Museum Innovation Barometer. 2022. Available online: https://www.museumbooster.com/mib (accessed on 7 May 2025).

- Giannini, T.; Bowen, J. Museums and Digital Culture: From Reality to Digitality in the Age of COVID-19. Heritage 2022, 5, 192–214. [Google Scholar] [CrossRef]

- Thiel, S.; Bernhardt, J.C. (Eds.) Reflections, Perspectives and Applications; Transcript Verlag: Bielefeld, Germany, 2023; pp. 117–130. [Google Scholar] [CrossRef]

- Clarencia, E.; Tiranda, T.G.; Achmad, S.; Sutoyo, R. The Impact of Artificial Intelligence in the Creative Industries: Design and Editing. In Proceedings of the International Seminar on Application for Technology of Information and Communication (iSemantic), Semarang, Indonesia, 21–22 September 2024; pp. 440–444. [Google Scholar] [CrossRef]

- Muto, V.; Luongo, S.; Sepe, F.; Prisco, A. Enhancing Visitors’ Digital Experience in Museums through Artificial Intelligence. In Proceedings of the Business Systems Laboratory International Symposium, Palermo, Italy, 11–12 January 2024. [Google Scholar]

- Furferi, R.; Di Angelo, L.; Bertini, M.; Mazzanti, P.; De Vecchis, K.; Biffi, M. Enhancing traditional museum fruition: Current state and emerging tendencies. Herit. Sci. 2024, 12, 20. [Google Scholar] [CrossRef]

- Villaespesa, E.; Murphy, O. This is not an apple! Benefits and challenges of applying computer vision to museum collections. Mus. Manag. Curatorship 2021, 36, 362–383. [Google Scholar] [CrossRef]

- Osoba, O.A.; Welser, W., IV. An Intelligence in Our Image: The Risks of Bias and Errors in Artificial Intelligence; Rand Corporation: Santa Monica, CA, USA, 2017. [Google Scholar]

- Villaespesa, E.; Murphy, O. THE MUSEUMS + AI NETWORK—AI: A Museum Planning Toolkit. 2020. Available online: https://themuseumsai.network/toolkit/ (accessed on 7 May 2025).

- Zielke, T. Is Artificial Intelligence Ready for Standardization? In Proceedings of the Systems, Software and Services Process Improvement; Yilmaz, M., Niemann, J., Clarke, P., Messnarz, R., Eds.; Springer: Cham, Switzerland, 2020; pp. 259–274. [Google Scholar]

- Pasikowska-Schnass, M.; Young-Shin, L. Members’ Research Service, “Artificial Intelligence in the Context of Cultural Heritage and Museums: Complex Challenges and New Opportunities” EPRS|European Parliamentary Research PE 747.120—May 2023. Available online: https://www.europarl.europa.eu/RegData/etudes/BRIE/2023/747120/EPRS_BRI(2023)747120_EN.pdf (accessed on 7 May 2025).

- CM. Recommendation CM/Rec(2022)15 Adopted by the Committee of Ministers on 20 May 2022 at the 132nd Session of the Committee of Ministers. 2022. Available online: https://search.coe.int/cm?i=0900001680a67952 (accessed on 9 July 2025).

- Furferi, R.; Colombini, M.P.; Seymour, K.; Pelagotti, A.; Gherardini, F. The Future of Heritage Science and Technologies: Papers from Florence Heri-Tech 2022. Herit. Sci. 2024, 12, 155. [Google Scholar] [CrossRef]

- Boiano, S.; Borda, A.; Gaia, G.; Di Fraia, G. Ethical AI and Museums: Challenges and New Directions. In Proceedings of the EVA London 2024, London, UK, 8–12 July 2024. [Google Scholar] [CrossRef]

- Orlandi, S.D.; De Angelis, D.; Giardini, G.; Manasse, C.; Marras, A.M.; Bolioli, A.; Rota, M. IA FAQ Intelligenza Artificiale—AI FAQ Artificial Intelligence. Zenodo. 2025. Available online: https://zenodo.org/records/15069460 (accessed on 9 July 2025).

- Nikolaou, P. Museums and the Post-Digital: Revisiting Challenges in the Digital Transformation of Museums. Heritage 2024, 7, 1784–1800. [Google Scholar] [CrossRef]

- Barekyan, K.; Peter, L. Digital Learning and Education in Museums: Innovative Approaches and Insights. NEMO. 2023. Available online: https://www.ne-mo.org/fileadmin/Dateien/public/Publications/NEMO_Working_Group_LEM_Report_Digital_Learning_and_Education_in_Museums_12.2022.pdf (accessed on 9 July 2025).

- Falk, J.H.; Dierking, L.D. The Museum Experience, 1st ed.; Routledge: London, UK, 2011. [Google Scholar] [CrossRef]

- Falk, J.; Dierking, L. The Museum Experience Revisited; Routledge: London, UK, 2016. [Google Scholar] [CrossRef]

- Falk, J.H. Identity and the Museum Visitor Experience; Wiley: Hoboken, NJ, USA, 2010. [Google Scholar]

- Mazzanti, P.; Sani, M. Emotions and Learning in Museums. NEMO. 2021. Available online: https://www.ne-mo.org/fileadmin/Dateien/public/Publications/NEMO_Emotions_and_Learning_in_Museums_WG-LEM_02.2021.pdf (accessed on 9 July 2025).

- Ekman, P.; Davidson, R.J. (Eds.) The Nature of Emotion: Fundamental Questions; Oxford University Press: New York, NY, USA, 1994. [Google Scholar]

- Panksepp, J. Affective Neuroscience: The Foundations of Human and Animal Emotions; Oxford University Press: Oxford, UK, 2005. [Google Scholar]

- Damásio, A.R. Descartes’ Error: Emotion, Reason, and the Human Brain; Avon Books: New York, NY, USA, 1994. [Google Scholar]

- Barrett, L.F. How Emotions Are Made; Houghton Mifflin Harcourt: New York, NY, USA, 2017. [Google Scholar]

- Barrett, L.F.; Mesquita, B.; Gendron, M. Context in Emotion Perception. Curr. Dir. Psychol. Sci. 2011, 20, 286–290. [Google Scholar] [CrossRef]

- Immordino-Yang, M.; Damasio, A. We Feel, Therefore We Learn: The Relevance of Affective and Social Neuroscience to Education. Mind Brain Educ. 2007, 1, 3–10. [Google Scholar] [CrossRef]

- Wood, E.; Latham, K.F. The Objects of Experience: Transforming Visitor-Object Encounters in Museums, 1st ed.; Routledge: London, UK, 2014. [Google Scholar] [CrossRef]

- Pietroni, E.; Pagano, A.; Fanini, B. UX Designer and Software Developer at the Mirror: Assessing Sensory Immersion and Emotional Involvement in Virtual Museums. Stud. Digit. Herit. 2018, 2, 13–41. [Google Scholar] [CrossRef]

- Hohenstein, J.; Moussouri, T. Museum Learning: Theory and Research as Tools for Enhancing Practice, 1st ed.; Routledge: London, UK, 2017. [Google Scholar] [CrossRef]

- Pescarin, S.; Città, G.; Spotti, S. Authenticity in Interactive Experiences. Heritage 2024, 7, 6213–6242. [Google Scholar] [CrossRef]

- Lieto, A.; Striani, M.; Gena, C.; Dolza, E.; Anna Maria, M.; Pozzato, G.L.; Damiano, R. A sensemaking system for grouping and suggesting stories from multiple affective viewpoints in museums. Hum.-Comput. Interact. 2024, 39, 109–143. [Google Scholar] [CrossRef]

- Damiano, R.; Lombardo, V.; Monticone, G.; Pizzo, A. Studying and designing emotions in live interactions with the audience. Multimed. Tools Appl. 2021, 80, 6711–6736. [Google Scholar] [CrossRef]

- Khan, I.; Melro, A.; Amaro, A.C.; Oliveira, L. Role of Gamification in Cultural Heritage Dissemination: A Systematic Review. In Proceedings of the Sixth International Congress on Information and Communication Technology (ICICT), London, UK, 25–26 February 2021; pp. 393–400. [Google Scholar] [CrossRef]

- Casillo, M.; Colace, F.; Marongiu, F.; Santaniello, D.; Valentino, C. Gamification in Cultural Heritage: When History Becomes SmART. In Proceedings of the Image Analysis and Processing—ICIAP 2023 Workshops; Foresti, G.L., Fusiello, A., Hancock, E., Eds.; Springer: Cham, Switzerland, 2024; pp. 387–397. [Google Scholar]

- Galindo-Durán, A. Enhancing Artistic Heritage Education through Gamification: A Comparative Study of Engagement and Learning Outcomes in Local Museums. Nusant. J. Behav. Soc. Sci. 2025, 4, 51–58. [Google Scholar] [CrossRef]

- Liao, Y.; Jin, G. Design, Technology, and Applications of Gamified Exhibitions: A Review. In Proceedings of the HCI International 2025 Posters; Stephanidis, C., Antona, M., Ntoa, S., Salvendy, G., Eds.; Springer: Cham, Switzerland, 2025; pp. 106–116. [Google Scholar]

- Alexander, J. Gallery One at the Cleveland Museum of Art. Curator Mus. J. 2014, 57, 347–362. [Google Scholar] [CrossRef]

- Alexander, J.; Barton, J.; Goeser, C. Transforming the Art Museum Experience: Gallery One. In Proceedings of the Museums and the Web 2013; Proctor, N., Cherry, R., Eds.; Museums and the Web LLC: Silver Spring, MD, USA, 2013; Available online: https://mw2013.museumsandtheweb.com/paper/transforming-the-art-museum-experience-gallery-one-2 (accessed on 9 July 2025).

- Fan, S.; Wei, J. Enhancing Art History Education Through the Application of Multimedia Devices. In Proceedings of the International Conference on Internet, Education and Information Technology (IEIT 2024), Tianjin, China, 31 May–2 June 2024; Atlantis Press: Dordrecht, The Netherlands, 2024; pp. 425–436. [Google Scholar] [CrossRef]

- Münster, S.; Maiwald, F.; di Lenardo, I.; Henriksson, J.; Isaac, A.; Graf, M.M.; Beck, C.; Oomen, J. Artificial Intelligence for Digital Heritage Innovation: Setting up a R&D Agenda for Europe. Heritage 2024, 7, 794–816. [Google Scholar] [CrossRef]

- Fiorucci, M.; Khoroshiltseva, M.; Pontil, M.; Traviglia, A.; Del Bue, A.; James, S. Machine Learning for Cultural Heritage: A Survey. Pattern Recognit. Lett. 2020, 133, 102–108. [Google Scholar] [CrossRef]

- Pansoni, S.; Tiribelli, S.; Paolanti, M.; Di Stefano, F.; Frontoni, E.; Malinverni, E.S.; Giovanola, B. Artificial intelligence and cultural heritage: Design and assessment of an ethical framework. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, 48, 1149–1155. [Google Scholar] [CrossRef]

- UNESCO. Guidance for Generative AI in Education and Research. 2023. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000386693?locale=en (accessed on 7 May 2025).

- UNESCO. Recommendation on the Ethics of Artificial Intelligence SHS/BIO/PI/2021/1. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000381137 (accessed on 7 May 2025).

- Ranjgar, B.; Sadeghi-Niaraki, A.; Shakeri, M.; Rahimi, F.; Choi, S.M. Cultural Heritage Information Retrieval: Past, Present, and Future Trends. IEEE Access 2024, 12, 42992–43026. [Google Scholar] [CrossRef]

- Rachabathuni, P.K.; Mazzanti, P.; Principi, F.; Ferracani, A.; Bertini, M. Computer Vision and AI Tools for Enhancing User Experience in the Cultural Heritage Domain. In Proceedings of the HCI International 2024—Late Breaking Papers; Zaphiris, P., Ioannou, A., Sottilare, R.A., Schwarz, J., Rauterberg, M., Eds.; Springer: Cham, Switzerland, 2025; pp. 345–354. [Google Scholar] [CrossRef]

- Donadio, M.G.; Principi, F.; Ferracani, A.; Bertini, M.; Del Bimbo, A. Engaging Museum Visitors with Gamification of Body and Facial Expressions. In Proceedings of the ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022; MM ’22. pp. 7000–7002. [Google Scholar] [CrossRef]

- TensorFlow.js Library. Available online: https://www.tensorflow.org/js (accessed on 9 July 2025).

- Flask Framework. Available online: https://flask.palletsprojects.com/ (accessed on 9 July 2025).

- Nielsen, J. Iterative user-interface design. Computer 1993, 26, 32–41. [Google Scholar] [CrossRef]

- MediaPipe Face Mesh Model Card. Available online: https://drive.google.com/file/d/1VFC_wIpw4O7xBOiTgUldl79d9LA-LsnA/view (accessed on 9 July 2025).

- MediaPipe Library. Available online: https://ai.google.dev/edge/mediapipe/solutions/guide (accessed on 9 July 2025).

- Kartynnik, Y.; Ablavatski, A.; Grishchenko, I.; Grundmann, M. Real-time Facial Surface Geometry from Monocular Video on Mobile GPUs. arXiv 2019, arXiv:1907.06724. [Google Scholar]

- Ekman, P.; Friesen, W.V.; O’Sullivan, M.; Chan, A.; Diacoyanni-Tarlatzis, I.; Heider, K.; Krause, R.; LeCompte, W.A.; Pitcairn, T.; Ricci-Bitti, P.E.; et al. Universals and cultural differences in the judgments of facial expressions of emotion. J. Personal. Soc. Psychol. 1987, 53, 712. [Google Scholar] [CrossRef]

- Reinhard, E.; Ashikhmin, M.; Gooch, B.; Shirley, P. Color transfer between images. IEEE Comput. Graph. Appl. 2001, 21, 34–41. [Google Scholar] [CrossRef]

- Gallese, V. Embodied Simulation. Its Bearing on Aesthetic Experience and the Dialogue Between Neuroscience and the Humanities. Gestalt Theory 2019, 41, 113–127. [Google Scholar] [CrossRef]

- Karahan, S.; Gül, L.F. Mapping Current Trends on Gamification of Cultural Heritage. In Proceedings of the Game + Design Education; Cordan, Ö., Dinçay, D.A., Yurdakul Toker, Ç., Öksüz, E.B., Semizoğlu, S., Eds.; Springer: Cham, Switzerland, 2021; pp. 281–293. [Google Scholar]

- Bonacini, E.; Giaccone, S.C. Gamification and cultural institutions in cultural heritage promotion: A successful example from Italy. Cult. Trends 2022, 31, 3–22. [Google Scholar] [CrossRef]

- OPEN-AI. CLIP: Connecting Text and Images. 2021. Available online: https://openai.com/research/clip (accessed on 7 May 2025).

- Del Chiaro, R.; Bagdanov, A.; Del Bimbo, A. NoisyArt: A Dataset for Webly-supervised Artwork Recognition. In Proceedings of the International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2019), Prague, Czech Republic, 25–27 February 2019; INSTICC. SciTePress: Lisboa, Portugal, 2019; pp. 467–475. [Google Scholar] [CrossRef]

- Baldrati, A.; Bertini, M.; Uricchio, T.; Del Bimbo, A. Exploiting CLIP-Based Multi-modal Approach for Artwork Classification and Retrieval. In The Future of Heritage Science and Technologies: ICT and Digital Heritage; Springer International Publishing: Berlin/Heidelberg, Germany, 2022; pp. 140–149. [Google Scholar] [CrossRef]

- TensorFlow Framework. Available online: https://www.tensorflow.org/ (accessed on 9 July 2025).

- Bongini, P.; Becattini, F.; Del Bimbo, A. Is GPT-3 all you need for Visual Question Answering in Cultural Heritage? arXiv 2023, arXiv:2207.12101. [Google Scholar]

- Rachabathuni, P.K.; Principi, F.; Mazzanti, P.; Bertini, M. Context-aware chatbot using MLLMs for Cultural Heritage. In Proceedings of the ACM Multimedia Systems Conference, Bari, Italy, 15–18 April 2024; MMSys ’24. pp. 459–463. [Google Scholar] [CrossRef]

- Boiano, S.; Borda, A.; Gaia, G.; Rossi, S.; Cuomo, P. Chatbots and new audience opportunities for museums and heritage organisations. In Proceedings of the Conference on Electronic Visualisation and the Arts, Swindon, GBR, London, UK, 9–13 July 2018; EVA ’18. pp. 164–171. [Google Scholar] [CrossRef]

- Mountantonakis, M.; Koumakis, M.; Tzitzikas, Y. Combining LLMs and Hundreds of Knowledge Graphs for Data Enrichment, Validation and Integration Case Study: Cultural Heritage Domain. In Proceedings of the International Conference On Museum Big Data (MBD), Athens, Greece, 18–19 November 2024. [Google Scholar]

- Ferrato, A.; Gena, C.; Limongelli, C.; Sansonetti, G. Multimodal LLM Question Generation for Children’s Art Engagement via Museum Social Robots. In Adjunct Proceedings of the 33rd ACM Conference on User Modeling, Adaptation and Personalization, New York, NY, USA, 16–19 June 2025; UMAP Adjunct’25; Association for Computing Machinery: New York, NY, USA, 2025; pp. 144–150. [Google Scholar] [CrossRef]

- Engstrøm, M.P.; Løvlie, A.S. Using a Large Language Model as Design Material for an Interactive Museum Installation. arXiv 2025, arXiv:2503.22345. [Google Scholar]

- Gustke, O.; Schaffer, S.; Ruß, A. CHIM—Chatbot in the Museum. Exploring and Explaining Museum Objects with Speech-Based AI. In AI in Museums; Thiel, S., Bernhardt, J.C., Eds.; Transcript Verlag: Bielefeld, Germany, 2023; pp. 257–264. ISBN 978-3-8394-6710-7. [Google Scholar] [CrossRef]

- Ferracani, A.; Ricci, S.; Principi, F.; Becchi, G.; Biondi, N.; Del Bimbo, A.; Bertini, M.; Pala, P. An AI-Powered Multimodal Interaction System for Engaging with Digital Art: A Human-Centered Approach to HCI. In Proceedings of the Artificial Intelligence in HCI; Degen, H., Ntoa, S., Eds.; Springer: Cham, Switzerland, 2025; pp. 281–294. [Google Scholar]

- Galteri, L.; Bertini, M.; Seidenari, L.; Uricchio, T.; Del Bimbo, A. Increasing Video Perceptual Quality with GANs and Semantic Coding. In Proceedings of the ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; MM ’20. pp. 862–870. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015; Bengio, Y., LeCun, Y., Eds.; ICLR: Singapore, 2015. [Google Scholar]

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612. [Google Scholar] [CrossRef] [PubMed]

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Los Alamitos, CA, USA, 18–22 June 2018; pp. 586–595. [Google Scholar] [CrossRef]

- Antic, J. DeOldify. Available online: https://github.com/jantic/DeOldify (accessed on 7 May 2025).

- Murphy, O.; Villaespesa, E.; Gaia, G.; Boiano, S.; Elliott, L. Musei e Intelligenza Artificiale: Un Toolkit di Progettazione; Goldsmiths; University of London: London, UK, 2024. [Google Scholar] [CrossRef]

| Topic | Description |
|---|---|
| Playful Experience | AI and CV tools are applied to foster learning and build a deeper connection between visitors and artworks. Interactions and gamified experiences are designed to trigger emotion, encourage creativity, and support participatory engagement. |
| New Audience | Younger audiences, who tend to be more familiar with digital technologies, are a key target of the ReInHerit Toolkit, which aims to increase their active participation in museum experiences. |
| Sustainability | Smaller museums often lack the resources and skills to adopt digital tools, making training and capacity-building crucial for effective heritage innovation. |
| Bottom–Up | The development process follows a community-driven model, where local participants are actively involved through workshops and hackathons. This inclusive method ensures that the tools reflect the needs and insights of the users themselves. |
| Co-Creation | The innovative goal is to offer not just a tool as a final product but a collaborative development process that fosters mediation between different disciplinary sectors. |

| Method | PSNR ↑ | SSIM ↑ | LPIPS ↓ |
|---|---|---|---|
| DeOldify [87] | 11.56 | 0.451 | 0.671 |
| Our method | 34.78 | 0.939 | 0.063 |
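
In the table above, the arrows mark the preferred direction: higher is better for PSNR and SSIM, lower is better for LPIPS. As a reminder of what the first column measures, the standard PSNR definition is 10·log10(MAX² / MSE), where MSE is the mean squared error between the reference and the restored image and MAX is the largest possible pixel value. The sketch below is illustrative only and is not the evaluation code behind the reported figures; it assumes 8-bit images flattened into plain sequences of pixel values, and the function names are hypothetical. SSIM and LPIPS need windowed statistics and a pretrained network, respectively, and are omitted here.

```python
import math

def mse(reference, restored):
    """Mean squared error between two equal-length pixel sequences."""
    pairs = list(zip(reference, restored))
    return sum((r - s) ** 2 for r, s in pairs) / len(pairs)

def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    error = mse(reference, restored)
    if error == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / error)
```

Because PSNR is logarithmic in the error, a gap of about 23 dB, as between the two rows of the table, corresponds to roughly a 200-fold difference in mean squared error.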

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mazzanti, P.; Ferracani, A.; Bertini, M.; Principi, F. Reshaping Museum Experiences with AI: The ReInHerit Toolkit. Heritage 2025, 8, 277. https://doi.org/10.3390/heritage8070277

Mazzanti P, Ferracani A, Bertini M, Principi F. Reshaping Museum Experiences with AI: The ReInHerit Toolkit. Heritage. 2025; 8(7):277. https://doi.org/10.3390/heritage8070277

Chicago/Turabian Style: Mazzanti, Paolo, Andrea Ferracani, Marco Bertini, and Filippo Principi. 2025. "Reshaping Museum Experiences with AI: The ReInHerit Toolkit" Heritage 8, no. 7: 277. https://doi.org/10.3390/heritage8070277

APA Style: Mazzanti, P., Ferracani, A., Bertini, M., & Principi, F. (2025). Reshaping Museum Experiences with AI: The ReInHerit Toolkit. Heritage, 8(7), 277. https://doi.org/10.3390/heritage8070277