Exploring Cognitive Variability in Interactive Museum Games

Abstract

1. Introduction

2. Background, Related Work, and Research Objective

2.1. Theoretical Background

2.2. Related Works

2.2.1. Games in the Cultural Heritage Domain

2.2.2. Cognitive Differences in Games and Cultural Heritage

- Cognitive styles. The field dependence-independence (FD-I) cognitive style has been one of the most frequently studied constructs, particularly because of its implications for visual exploration and information processing. Field-independent (FI) visitors tend to engage in analytical, structured processing, whereas field-dependent (FD) visitors typically adopt a more holistic, context-sensitive approach. These styles have been shown to influence interaction behavior, game navigation, and information recall in cultural heritage games [2]. Empirical evidence shows that FD and FI individuals exhibit distinct gaze behaviors during gameplay: FI individuals produce longer and more targeted fixations on salient areas, whereas FD individuals exhibit shorter, more dispersed scanpaths [2]. These differences in visual behavior are mirrored in interaction patterns. FI individuals are more likely to explore detailed game elements and to perform better in tasks that require focused attention and cognitive restructuring, whereas FD individuals may benefit more from structured gameplay or socially driven scenarios [2]. Such distinctions are particularly evident in tasks involving visual search or item identification, which are common in heritage games.

- Cognitive characteristics. Analytic reasoning and cognitive flexibility have increasingly been used to differentiate game-playing styles. For example, strategic gamblers often display an analytic cognitive style and a high need for cognition, preferring games that require planning and deliberate decision-making. In contrast, non-strategic or impulsive gamblers may rely more on intuitive thinking and seek immediate rewards, reflecting a faster, less reflective approach to play [18]. In collaborative games, players’ performance and interaction styles have been shown to vary significantly with their cognitive profiles, which influence how they process contextual and visual information [19].

- Cognitive levels. Cognitive levels influence how users interact with educational games and the benefits they derive from them. At the early cognitive level, educational games that focus on basic classification, color and shape recognition, and simple number concepts have been shown to enhance foundational thinking skills [20]. Games that require memory, sequencing, and logical thinking are more suitable at the developing stage, helping to deepen understanding of abstract concepts and improve critical thinking [21]. At the advanced cognitive level, games that include strategic complexity, adaptive challenges, and narrative engagement provide optimal stimulation. Adaptive systems that dynamically adjust game difficulty and content based on the learner’s performance (e.g., those using fuzzy reasoning models) could further enhance learning outcomes by tailoring content to individual cognitive profiles [22]. Moreover, research has shown that visitors’ cognitive levels shape how they interpret, interact with, and internalize digital museum content during game-based exploration (e.g., character-driven guides or VR-based tasks). Cognitive level influences visitors’ ability to process narrative content, navigate interactive tasks, and sustain attention across multimodal experiences [23], and such experiences can enhance meaning-making and memory for audiences at specific cognitive levels (e.g., less cognitively advanced audiences) [24]. In recent years, research has extended these investigations to XR and multimodal settings, emphasizing the need for inclusive design strategies that account for cognitive variability [25]. Emerging work highlights the need to bridge traditional user modeling approaches with real-time multimodal sensing to enable responsive, personalized learning pathways. As such, the integration of cognitive characteristics into adaptive frameworks is expected to grow, fostering personalized engagement with cultural content across diverse audiences and platforms.
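To illustrate the kind of fuzzy-reasoning adaptation mentioned above, the following Python sketch maps a player's proportion of correct answers (assumed to lie in [0, 1]) to a difficulty change. It uses three triangular membership functions and a zero-order Sugeno-style weighted average. This is a hypothetical example under stated assumptions, not the model described in [22]; the function names, membership breakpoints, rule outputs, and the five-level difficulty scale are all illustrative choices.

```python
"""Hypothetical fuzzy difficulty adjustment (illustrative only, not the model of [22])."""

# Each rule: (label, triangular membership breakpoints (a, b, c), difficulty change).
# Breakpoints and consequents are illustrative assumptions.
RULES = (
    ("low", (-0.5, 0.0, 0.5), -1.0),   # poor performance -> make the game easier
    ("medium", (0.0, 0.5, 1.0), 0.0),  # adequate performance -> keep difficulty
    ("high", (0.5, 1.0, 1.5), 1.0),    # strong performance -> make the game harder
)


def triangular(x: float, a: float, b: float, c: float) -> float:
    """Triangular membership degree: 0 outside (a, c), rising to 1 at b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def difficulty_adjustment(score: float) -> float:
    """Weighted-average (zero-order Sugeno) output in [-1, 1] for a score in [0, 1]."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must lie in [0, 1]")
    weights = [triangular(score, *abc) for _, abc, _ in RULES]
    outputs = [out for _, _, out in RULES]
    # For scores in [0, 1] at least one rule always fires, so the sum is positive.
    return sum(w * z for w, z in zip(weights, outputs)) / sum(weights)


def next_level(current: int, score: float, min_level: int = 1, max_level: int = 5) -> int:
    """Apply the rounded fuzzy adjustment and clamp to the hypothetical level range."""
    step = round(difficulty_adjustment(score))
    return max(min_level, min(max_level, current + step))
```

For example, `next_level(3, 0.9)` raises the level to 4, while `next_level(3, 0.1)` lowers it to 2. The weighted average produces a graded adjustment signal; rounding it is only one way to map that signal onto discrete levels.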

2.3. Research Objective

3. Study

3.1. Methodology

3.1.1. Null Hypotheses

3.1.2. Dataset and Data

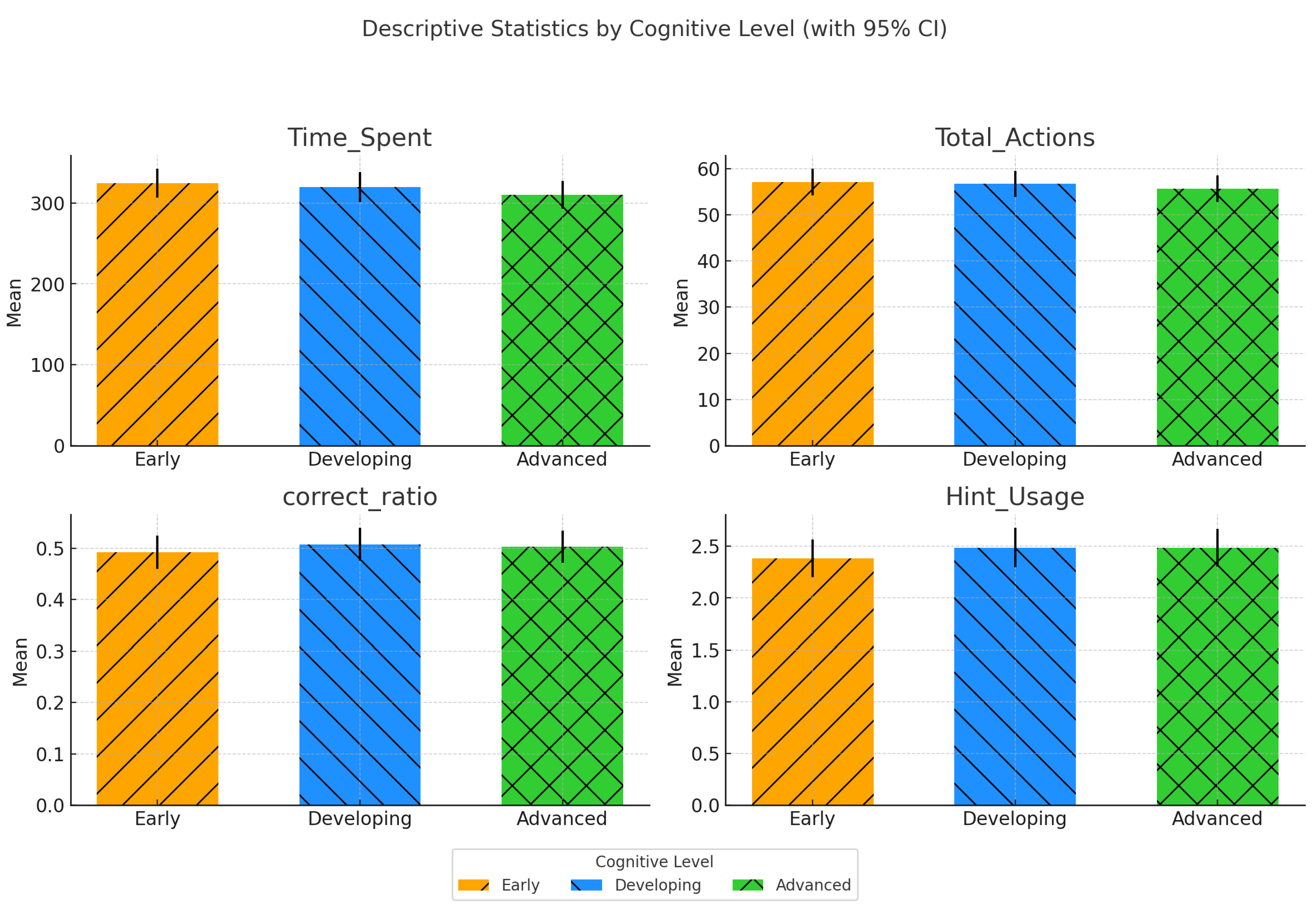

- User interaction data capture aspects of player behavior during the game: Time_Spent represents the total time (in seconds) a participant engaged with the game and is stored as a continuous numeric variable; Total_Actions records the number of interactions performed; Correct_Responses_Ratio represents the ratio of correctly answered challenges; and Hint_Usage represents the number of hints the participant used.
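As an illustration of how these four variables and their types might be represented when loading the data, the following Python sketch defines a typed record that mirrors the column names described above. It is a hypothetical helper, not part of the dataset's documentation: the class and method names are illustrative, and it assumes that Correct_Responses_Ratio lies in [0, 1] and that the count columns are stored as whole numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserInteraction:
    """One participant's user-interaction variables (hypothetical record type)."""

    time_spent: float               # Time_Spent: total engagement time in seconds (continuous)
    total_actions: int              # Total_Actions: number of interactions performed
    correct_responses_ratio: float  # Correct_Responses_Ratio: assumed to lie in [0, 1]
    hint_usage: int                 # Hint_Usage: number of hints used

    def __post_init__(self) -> None:
        # Reject values that cannot occur under the stated assumptions.
        if self.time_spent < 0:
            raise ValueError("Time_Spent must be non-negative")
        if self.total_actions < 0 or self.hint_usage < 0:
            raise ValueError("counts must be non-negative")
        if not 0.0 <= self.correct_responses_ratio <= 1.0:
            raise ValueError("Correct_Responses_Ratio must be in [0, 1]")

    @classmethod
    def from_row(cls, row: dict[str, str]) -> "UserInteraction":
        """Build a record from a CSV-style row keyed by the original column names."""
        return cls(
            time_spent=float(row["Time_Spent"]),
            total_actions=int(row["Total_Actions"]),
            correct_responses_ratio=float(row["Correct_Responses_Ratio"]),
            hint_usage=int(row["Hint_Usage"]),
        )
```

Validating on construction means that an out-of-range ratio or a negative count is rejected when a row is read, rather than surfacing later in the analysis.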

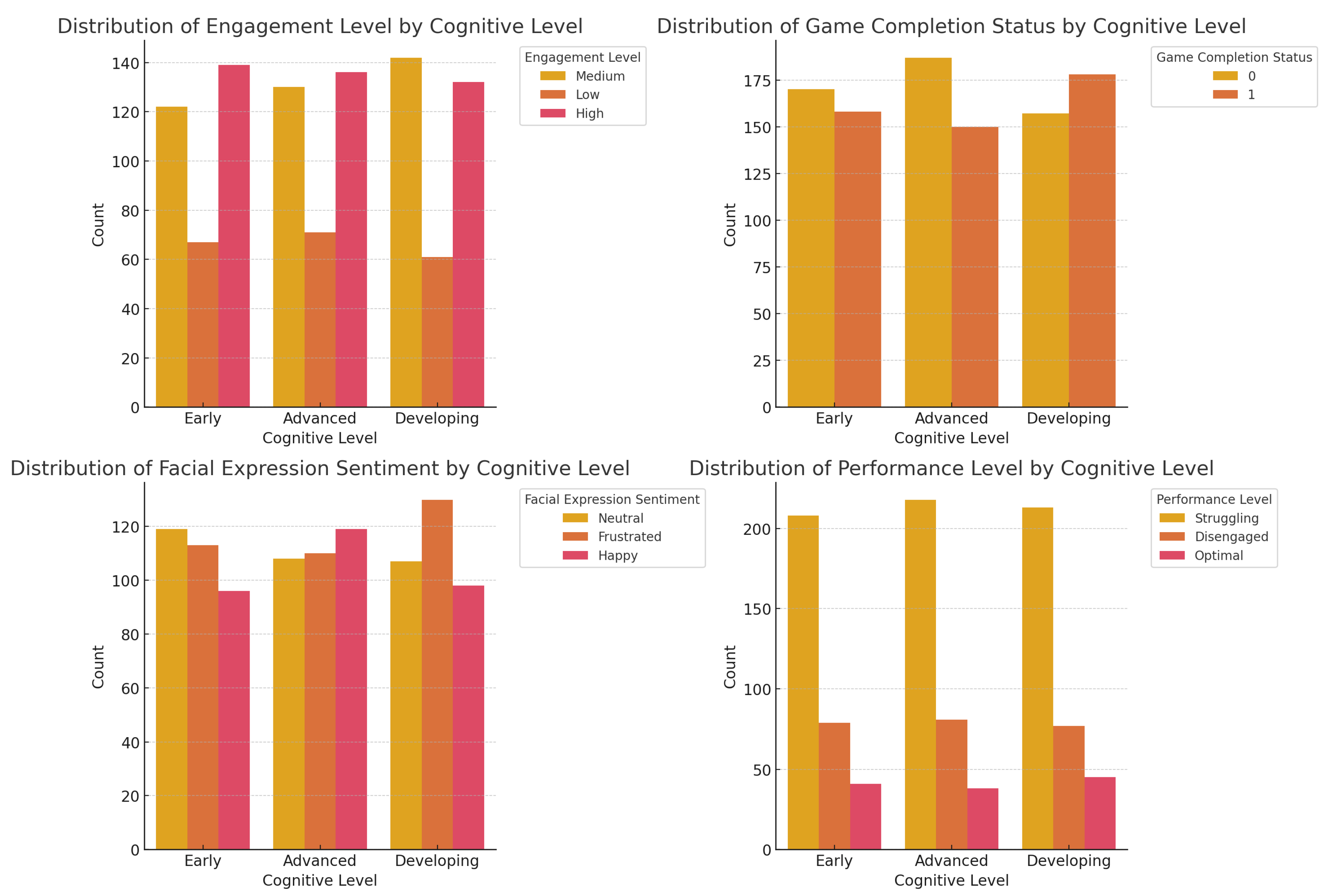

- Affective and performance states represent high-level behavioral and emotional indicators during gameplay: Engagement_Level reflects the participant’s inferred engagement and is recorded as a nominal variable with categories such as Low, Medium, and High; Game_Completion_Status is a nominal variable indicating whether the participant completed the game session; Facial_Expression_Sentiment captures affective state using emotion recognition categories (e.g., Neutral, Frustrated); and Performance_Level is a nominal classification of overall task performance based on predefined success criteria. We note that the dataset does not provide technical documentation about the emotion recognition method used to derive these sentiment labels (e.g., model type, training data, or accuracy), which limits our ability to assess their validity within a digital museum context.
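Because these states are nominal, a common first summary is a contingency table of category co-occurrences. The Python sketch below shows one way to build such a table with the standard library; it is illustrative only and is not necessarily the analysis used in this study. The function names are hypothetical, and the EngagementLevel enumeration assumes exactly the Low, Medium, and High labels listed above.

```python
from collections import Counter
from collections.abc import Iterable, Mapping
from enum import Enum


class EngagementLevel(Enum):
    """Engagement_Level categories (assumed to be exactly Low, Medium, and High)."""

    LOW = "Low"
    MEDIUM = "Medium"
    HIGH = "High"


def parse_engagement(label: str) -> EngagementLevel:
    """Map a raw label to the enumeration; unknown labels raise ValueError."""
    return EngagementLevel(label.strip())


def crosstab(
    rows: Iterable[Mapping[str, str]], row_var: str, col_var: str
) -> dict[str, dict[str, int]]:
    """Contingency table: counts of each (row_var, col_var) category pair."""
    counts = Counter((row[row_var], row[col_var]) for row in rows)
    table: dict[str, dict[str, int]] = {}
    for (row_label, col_label), n in counts.items():
        table.setdefault(row_label, {})[col_label] = n
    return table
```

For example, three rows with engagement labels High, High, and Low and hypothetical completion labels Completed, Completed, and Not Completed yield `{"High": {"Completed": 2}, "Low": {"Not Completed": 1}}` when cross-tabulated on Engagement_Level and Game_Completion_Status.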

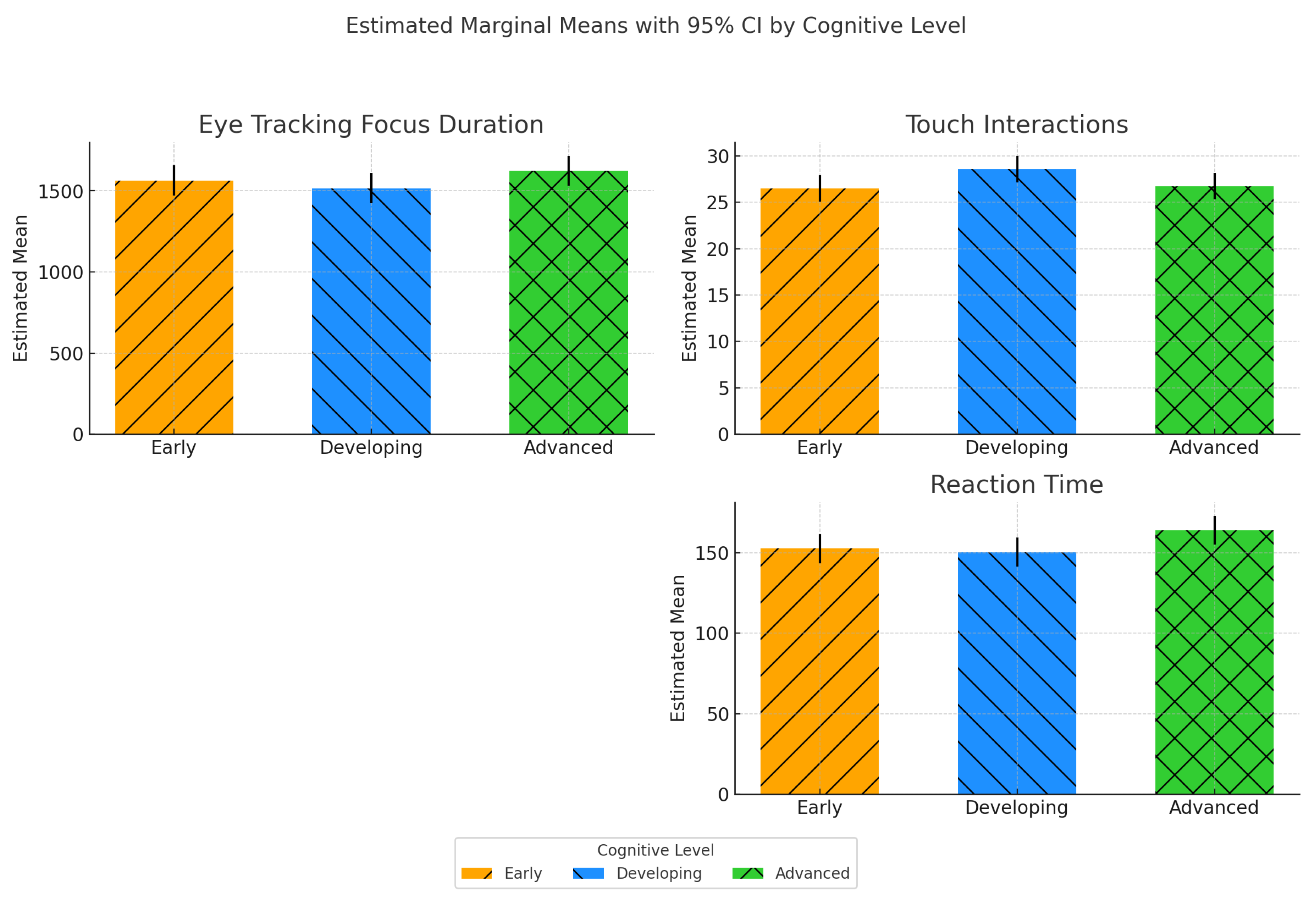

- Sensor-based interaction measures include continuous metrics derived from real-time user interaction and physiological responses: Eye_Tracking_Focus_Duration measures the total duration (in seconds) of gaze focus on relevant game elements; Touch_Interactions counts the number of physical touch-based inputs recorded during the session; and Reaction_Time captures the average time (in seconds) the participant took to respond to in-game prompts or tasks.
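A minimal way to inspect these sensor-based measures is to compute their sample size, mean, and sample standard deviation. The Python sketch below does this with the standard library's statistics module. The function name and the assumption that each row is a mapping keyed by the column names above are illustrative, and Touch_Interactions, although a count, is summarized in the same way for simplicity.

```python
import math
from collections.abc import Iterable, Mapping
from statistics import mean, stdev

# Column names as described for the sensor-based interaction measures.
SENSOR_COLUMNS = ("Eye_Tracking_Focus_Duration", "Touch_Interactions", "Reaction_Time")


def describe(
    rows: Iterable[Mapping[str, str]], columns: tuple[str, ...] = SENSOR_COLUMNS
) -> dict[str, dict[str, float]]:
    """Return n, mean, and sample standard deviation for each requested column."""
    rows = list(rows)  # allow the rows to be iterated once per column
    summary: dict[str, dict[str, float]] = {}
    for column in columns:
        values = [float(row[column]) for row in rows]
        summary[column] = {
            "n": len(values),
            "mean": mean(values) if values else math.nan,
            # The sample standard deviation needs at least two observations.
            "sd": stdev(values) if len(values) > 1 else math.nan,
        }
    return summary
```

The same function can be applied separately to subsets of rows (e.g., rows filtered by Engagement_Level) to compare the sensor-based measures across categories.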

3.1.3. Procedure

3.2. Results

3.2.1. User Interaction

3.2.2. Affect and Performance

3.2.3. Sensor-Based Interaction

4. Discussion

4.1. Contribution

4.2. Theoretical and Practical Implications

4.3. Limitations

4.4. Future Research Directions

5. Conclusions

Funding

Data Availability Statement

Conflicts of Interest

Note 1. Dataset URL: https://www.kaggle.com/datasets/ziya07/museum-game-interaction-dataset/data (accessed on 20 June 2025).

Note 2. See note 1 above.

References

- Bellotti, F.; Berta, R.; De Gloria, A.; D’ursi, A.; Fiore, V. A serious game model for cultural heritage. J. Comput. Cult. Herit. 2013, 5, 17.

- Raptis, G.E.; Fidas, C.A.; Avouris, N.M. Do Game Designers’ Decisions related to Visual Activities affect Knowledge Acquisition in Cultural Heritage Games? An Evaluation from a Human Cognitive Processing Perspective. ACM J. Comput. Cult. Herit. (JOCCH) 2019, 12, 4.

- Raptis, G.E.; Fidas, C.; Katsini, C.; Avouris, N. A Cognition-Centered Personalization Framework for Cultural-Heritage Content. User Model. User-Adapt. Interact. 2019, 29, 9–65.

- Basile, P.; de Gemmis, M.; Iaquinta, L.; Lops, P.; Musto, C.; Narducci, F.; Semeraro, G. SpIteR: A Module for Recommending Dynamic Personalized Museum Tours. In Proceedings of the 2009 IEEE/WIC/ACM International Joint Conference on Web Intelligence and Intelligent Agent Technology-Volume 01, Washington, DC, USA, 15–18 September 2009; WI-IAT ’09. pp. 584–587.

- Javdani Rikhtehgar, D.; Wang, S.; Huitema, H.; Alvares, J.; Schlobach, S.; Rieffe, C.; Heylen, D. Personalizing cultural heritage access in a virtual reality exhibition: A user study on viewing behavior and content preferences. In Proceedings of the Adjunct Proceedings of the 31st ACM Conference on User Modeling, Adaptation and Personalization, Limassol, Cyprus, 26–29 June 2023; pp. 379–387.

- Roes, I.; Stash, N.; Wang, Y.; Aroyo, L. A Personalized Walk Through the Museum: The CHIP Interactive Tour Guide. In Proceedings of the CHI ’09 Extended Abstracts on Human Factors in Computing Systems, New York, NY, USA, 4–9 April 2009; CHI EA ’09. pp. 3317–3322.

- Raptis, G.E.; Fidas, C.A.; Avouris, N.M. Effects of Mixed-Reality on Players’ Behaviour and Immersion in a Cultural Tourism Game: A Cognitive Processing Perspective. Int. J. Hum.-Comput. Stud. 2018, 114, 69–79.

- Schmiedek, F.; Oberauer, K.; Wilhelm, O.; Süß, H.M.; Wittmann, W.W. Individual differences in components of reaction time distributions and their relations to working memory and intelligence. J. Exp. Psychol. Gen. 2007, 136, 414.

- Wen, W.; Ishikawa, T.; Sato, T. Working memory in spatial knowledge acquisition: Differences in encoding processes and sense of direction. Appl. Cogn. Psychol. 2011, 25, 654–662.

- Witkin, H.A.; Moore, C.A.; Goodenough, D.R.; Cox, P.W. Field-Dependent and Field-Independent Cognitive Styles and their Educational Implications. ETS Res. Bull. Ser. 1975, 1975, 1–64.

- Hwang, G.J.; Sung, H.Y.; Hung, C.M.; Huang, I.; Tsai, C.C. Development of a Personalized Educational Computer Game based on Students’ Learning Styles. Educ. Technol. Res. Dev. 2012, 60, 623–638.

- Steichen, B.; Wu, M.M.A.; Toker, D.; Conati, C.; Carenini, G. Te,Te,Hi,Hi: Eye Gaze Sequence Analysis for Informing User-Adaptive Information Visualizations. In User Modeling, Adaptation and Personalization, Proceedings of the 22nd International Conference, UMAP 2014, Aalborg, Denmark, 7–11 July 2014; Dimitrova, V., Kuflik, T., Chin, D., Ricci, F., Dolog, P., Houben, G.J., Eds.; Springer: Cham, Switzerland, 2014; pp. 183–194.

- Mortara, M.; Catalano, C.E.; Bellotti, F.; Fiucci, G.; Houry-Panchetti, M.; Petridis, P. Learning cultural heritage by serious games. J. Cult. Herit. 2014, 15, 318–325.

- Antoniou, A.; Lepouras, G.; Bampatzia, S.; Almpanoudi, H. An Approach for Serious Game Development for Cultural Heritage: Case Study for an Archaeological Site and Museum. ACM J. Comput. Cult. Herit. (JOCCH) 2013, 6, 17.

- Anderson, E.F.; McLoughlin, L.; Liarokapis, F.; Peters, C.; Petridis, P.; De Freitas, S. Developing serious games for cultural heritage: A state-of-the-art review. Virtual Real. 2010, 14, 255–275.

- Pedersen, I.; Gale, N.; Mirza-Babaei, P.; Reid, S. More than meets the eye: The benefits of augmented reality and holographic displays for digital cultural heritage. J. Comput. Cult. Herit. (JOCCH) 2017, 10, 1–15.

- Perry, S.; Roussou, M.; Economou, M.; Young, H.; Pujol, L. Moving Beyond the Virtual Museum: Engaging Visitors Emotionally. In Proceedings of the 23rd International Conference on Virtual System Multimedia (VSMM 2017), Dublin, Ireland, 31 October–4 November 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–8.

- Mouneyrac, A.; Lemercier, C.; Le Floch, V.; Challet-Bouju, G.; Moreau, A.; Jacques, C.; Giroux, I. Cognitive characteristics of strategic and non-strategic gamblers. J. Gambl. Stud. 2018, 34, 199–208.

- Alharthi, S.A.; Raptis, G.E.; Katsini, C.; Dolgov, I.; Nacke, L.E.; Toups, Z.O. Investigating the Effects of Individual Cognitive Styles on Collaborative Gameplay. ACM Trans. Comput.-Hum. Interact. 2021, 28, 1–49.

- Loka, N.; Diana, R.R. Improving cognitive ability through educational games in early childhood. JOYCED J. Early Child. Educ. 2022, 2, 50–59.

- Zia, A.; Chaudhry, S.; Naz, I. Impact of computer based educational games on cognitive performance of school children in Lahore, Pakistan. Shield. Res. J. Phys. Educ. Sport. Sci. 2017, 12, 40–58.

- Chrysafiadi, K.; Papadimitriou, S.; Virvou, M. Cognitive-based adaptive scenarios in educational games using fuzzy reasoning. Knowl.-Based Syst. 2022, 250, 109111.

- Lin, L.; Lu, L.; Lin, N. A Cognitive Psychoanalytic Perspective on Interaction Design in the Education of School-Age Children in Museums. In HCI International 2024 Posters; Springer: Cham, Switzerland, 2024; pp. 212–219.

- Chang, C.W. The Cognitive Study of Immersive Experience in Science and Art Exhibition. In Augmented Cognition; Springer International Publishing: Berlin/Heidelberg, Germany, 2021; pp. 369–387.

- Bekele, M.K.; Pierdicca, R.; Frontoni, E.; Malinverni, E.S.; Gain, J. A survey of augmented, virtual, and mixed reality for cultural heritage. J. Comput. Cult. Herit. (JOCCH) 2018, 11, 1–36.

- Lyu, K. Research on the Interactive Design and Optimization of Museum Game-Oriented Cultural and Creative Products Based on Piaget’s Game Theory and Convolutional Neural Network. SSRN Scholarly Paper No. 5179507. 15 March 2025.

- Naudet, Y.; Antoniou, A.; Lykourentzou, I.; Tobias, E.; Rompa, J.; Lepouras, G. Museum Personalization Based on Gaming and Cognitive Styles. Int. J. Virtual Communities Soc. Netw. 2015, 7, 1–30.

- Qinghong, Y.; Dule, Y.; Junyu, Z. The research of personalized learning system based on learner interests and cognitive level. In Proceedings of the 2014 9th International Conference on Computer Science & Education, Vancouver, BC, Canada, 22–24 August 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 522–526.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Raptis, G.E. Exploring Cognitive Variability in Interactive Museum Games. Heritage 2025, 8, 267. https://doi.org/10.3390/heritage8070267
