Image-Based POI Identification for Mobile Museum Guides: Design, Implementation, and User Evaluation

Abstract

1. Introduction

2. Background and Related Work

2.1. Computer Vision and Its Use in Indoor Localization

2.2. Indoor Localization in Museums

2.3. Using Large Language Models in Cultural Heritage

3. Tools and Methods

3.1. POI Identification

3.1.1. Image Representation

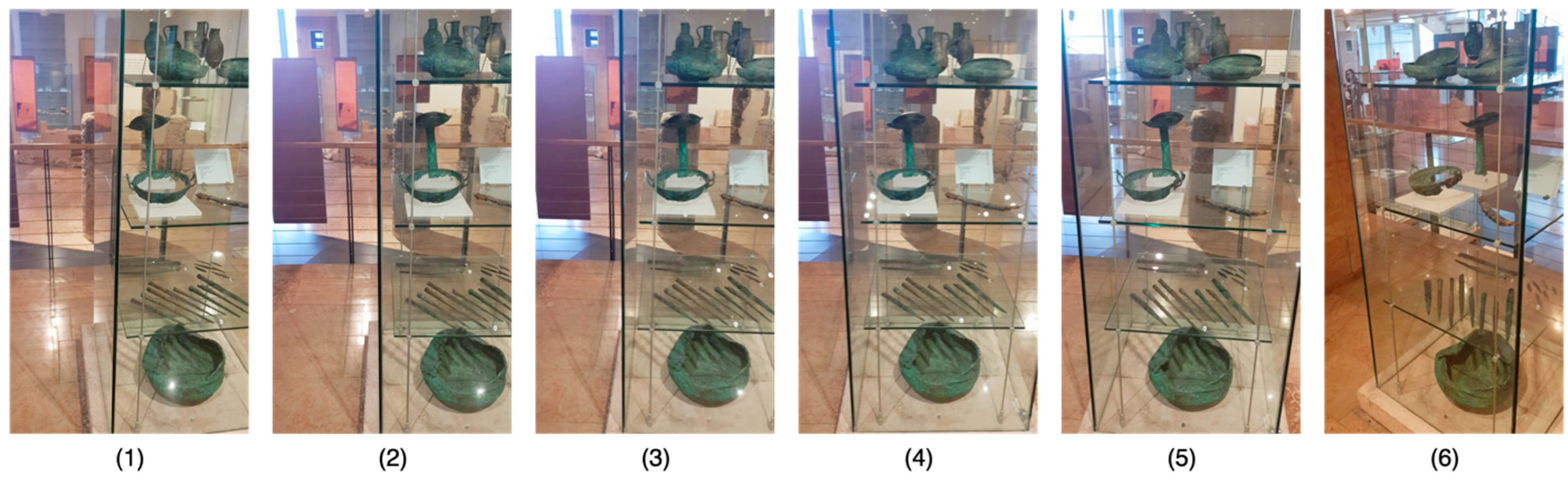

3.1.2. POI Dataset Construction

3.1.3. POI Recognition

3.2. Experimental System

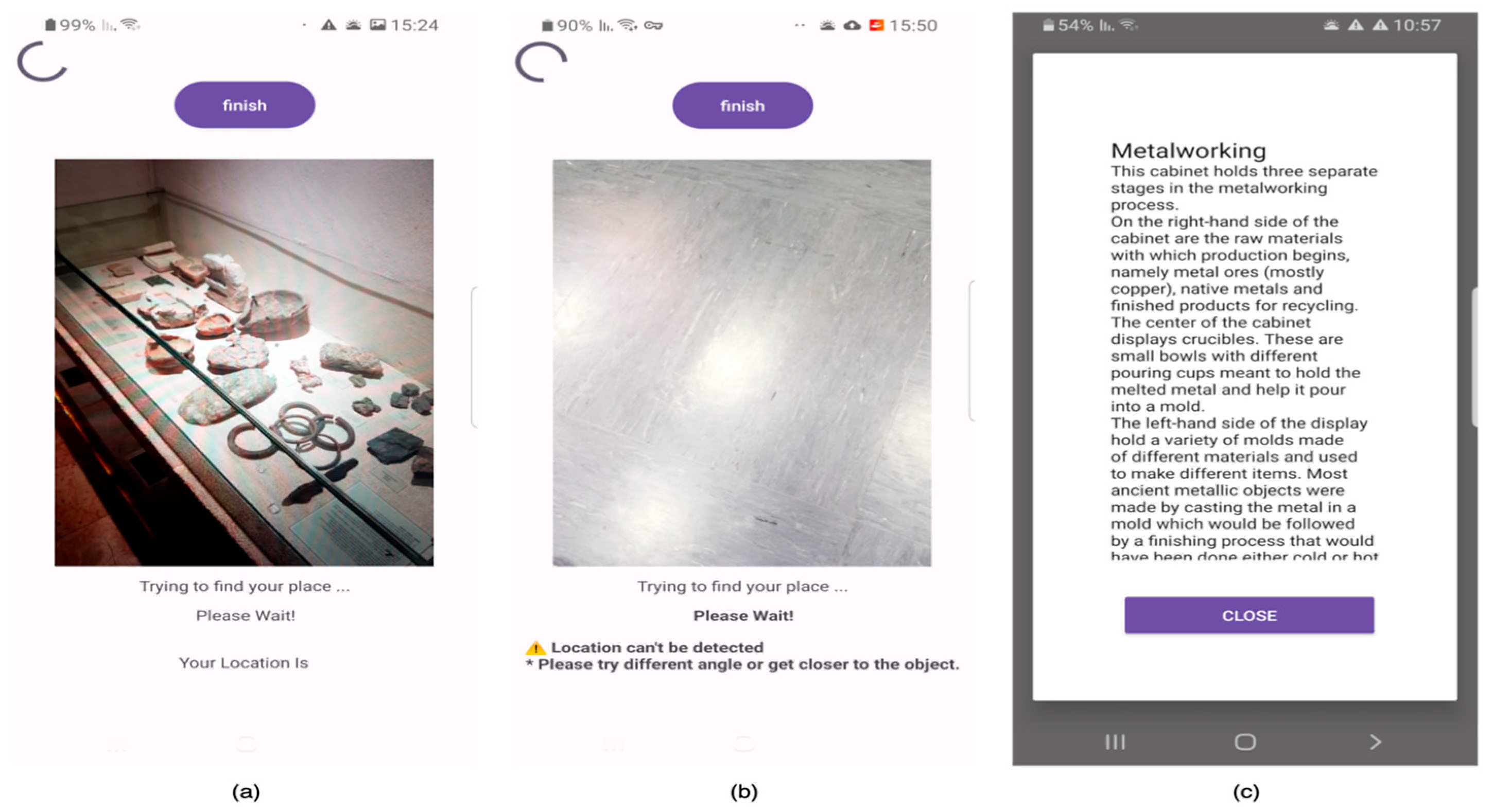

3.2.1. The Mobile App

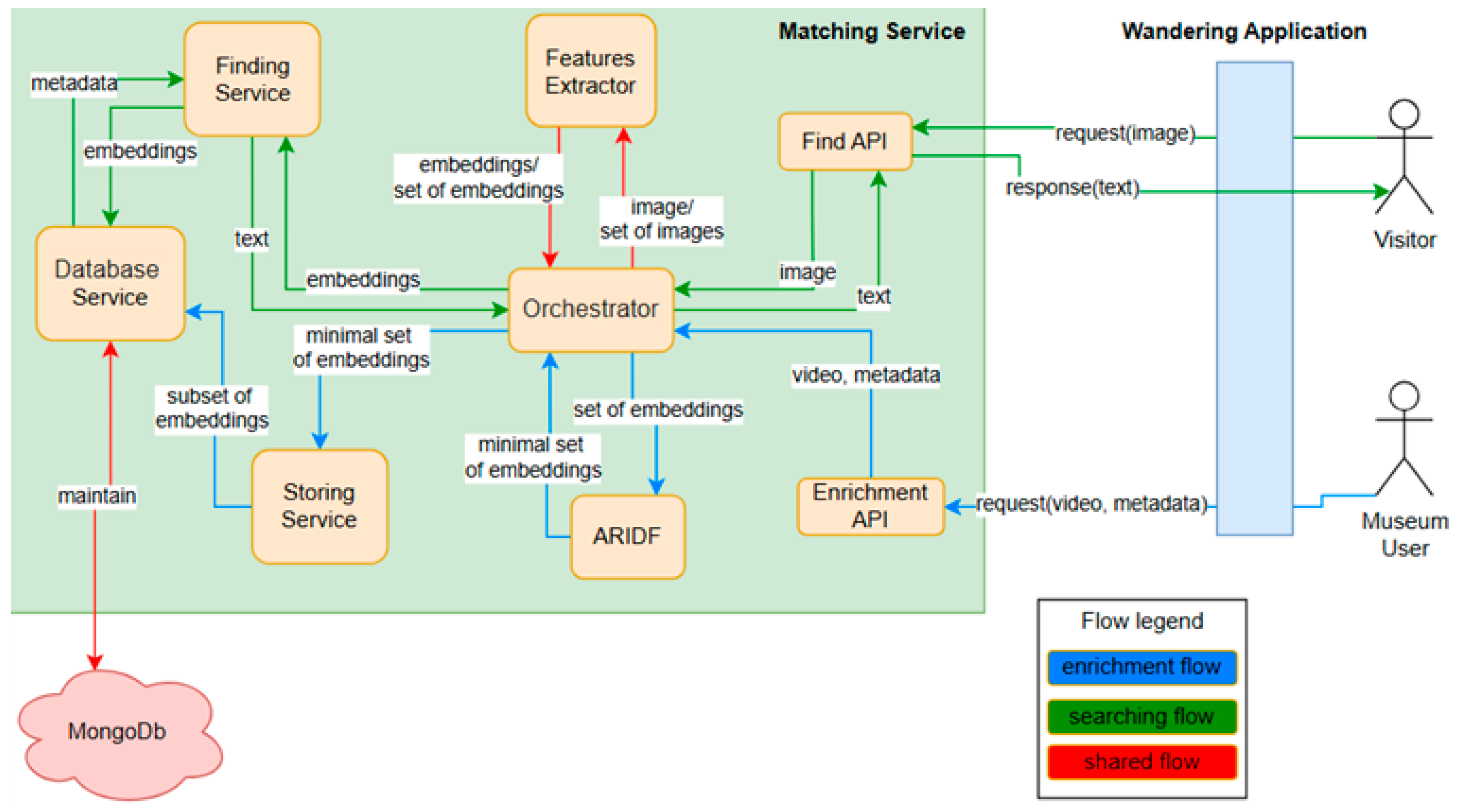

3.2.2. POI Identification Service

3.2.3. System Architecture

3.3. LLM-Assisted Content Creation and Delivery

4. Experimentation

4.1. Experimental Setup

4.2. Procedure

4.3. Participants

4.4. Data Collection and Analysis

4.5. Data Availability

5. Results

5.1. ARIDF Performance

5.2. Log Analysis

5.2.1. Participants’ Perspective

5.2.2. POI Perspective

5.3. SUS Score

5.4. Qualitative Findings

5.4.1. Errors in Location Identification

5.4.2. Total Number of Location Queries

5.4.3. Perceived Response Time

5.4.4. Instances of Failure in Location Identification

5.4.5. Clarity of Location Search Initiation

5.4.6. Clarity of Location Search Identification

5.4.7. General Feedback About the System

5.4.8. Suggestions for Improvement

6. Discussion

6.1. Accuracy

6.2. Quality and Performance

6.3. Usability

6.4. Users’ Comfort

6.5. Enjoyment and Engagement

6.6. Contribution

6.7. Limitations

7. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Method | Advantages | Disadvantages |
|---|---|---|
| Wi-Fi Triangulation | Widely available in most indoor environments. Cost-effective as it uses existing Wi-Fi networks. | Susceptible to interference from walls and objects. Limited accuracy in complex or crowded environments. |
| Bluetooth Beacons (BLE) | Low power consumption. Easy to deploy. Provides good accuracy within short ranges. | Requires maintenance or infrastructure (power). Signal may weaken in large spaces or through obstacles. |
| RFID | High precision for short-range localization. No reliance on batteries for tags. | Limited range. Expensive to deploy over large areas. Requires specialized readers. |
| Inertial Sensors | Can work without external infrastructure. Suitable for real-time tracking. | Accumulates errors over time (drift). Limited standalone accuracy. |
| Computer Vision | High accuracy in recognizing locations and landmarks. Cost-effective using existing cameras. | Dependent on lighting conditions. Computationally intensive. Accuracy can be impacted by moving objects. |
| Visual SLAM | Provides simultaneous localization and mapping. Works in real time for dynamic environments. | High computational cost. Requires advanced hardware for real-time processing. |
| Augmented Reality | Enhances user engagement. Real-time overlay of information onto the environment. | Requires advanced hardware for real-time processing. Limited accuracy for large or cluttered spaces. High battery usage in devices. |
| Landmark Recognition | High reliability using unique landmarks. Improves accuracy with sensor fusion. | Requires pre-mapped landmarks. Less effective in environments lacking distinctive features. |
| Sensor Fusion | Combines data from multiple sources for improved accuracy. Works in diverse conditions. | High computational complexity. May require multiple sensors, increasing system cost. |
| Smartphones (General) | Widely available and versatile. Integrates multiple features (Wi-Fi, sensors, cameras). | Dependent on smartphone hardware capabilities. Battery drain can be significant during continuous use. |

| POI # | POI Name | Frames Before ARIDF | Frames After ARIDF | Duration (s) | % Reduction | # of Visits | # of Errors | # of Unrecognized POIs |
|---|---|---|---|---|---|---|---|---|
| 1 | De Materia Medica by the Greek | 157 | 55 | 78 | 65% | 25 | 0 | 1 |
| 2 | Human Illnesses in Ancient Times | 21 | 7 | 10 | 67% | 15 | 0 | 0 |
| 3 | Metal working | 27 | 6 | 13 | 78% | 15 | 1 | 0 |
| 4 | Lost Wax | 27 | 6 | 13 | 78% | 15 | 1 | 0 |
| 5 | Bronze Vessels | 26 | 6 | 13 | 77% | 15 | 0 | 1 |
| 6 | Selection of Metal Objects | 28 | 6 | 14 | 79% | 20 | 0 | 0 |
| 7 | Artifacts made of Iron | 28 | 6 | 14 | 79% | 20 | 0 | 1 |
| 8 | Physician | 90 | 25 | 45 | 72% | 20 | 0 | 1 |
| 9 | Glassmaking-Part1 | 34 | 6 | 17 | 82% | 17 | 0 | 0 |
| 10 | Glassmaking-Part2 | 45 | 6 | 22 | 87% | 18 | 1 | 1 |
| 11 | Producing the Raw Glass-Part1 | 52 | 6 | 25 | 88% | 15 | 1 | 0 |
| 12 | Producing the Raw Glass-Part2 | 38 | 6 | 18 | 84% | 28 | 0 | 0 |
| 13 | Ossuary | 27 | 11 | 13 | 59% | 12 | 0 | 1 |
| 14 | Burial coffin | 43 | 13 | 22 | 70% | 15 | 0 | 0 |
| 15 | Selection of Wooden Objects | 56 | 6 | 27 | 89% | 15 | 0 | 0 |
| 16 | Lead coffin | 42 | 10 | 20 | 76% | 15 | 2 | 1 |
| 17 | Carpenter’s Tools | 71 | 26 | 34 | 63% | 19 | 0 | 0 |
| 18 | Frieze fragment | 27 | 9 | 13 | 67% | 17 | 0 | 0 |
| 19 | Burial coffin (Sarcophagus) | 36 | 8 | 17 | 78% | 15 | 0 | 0 |
| 20 | Hebrew Promissory Note | 25 | 6 | 12 | 76% | 15 | 0 | 0 |
| 21 | Alphabetic Script | 68 | 7 | 32 | 90% | 17 | 1 | 0 |
| 22 | Jewish Tombstone | 30 | 7 | 14 | 77% | 18 | 0 | 0 |
| 23 | Hieroglyphic Script | 36 | 6 | 17 | 83% | 12 | 0 | 0 |
| 24 | Jewish ossuaries | 32 | 7 | 15 | 78% | 19 | 0 | 0 |
| 25 | Stone Vessels Everyday Life | 47 | 6 | 23 | 87% | 21 | 0 | 1 |
| 26 | Tables | 31 | 6 | 15 | 81% | 16 | 0 | 0 |
| 27 | Stone Vessels (Late 2nd Temple Period) | 48 | 6 | 23 | 88% | 18 | 0 | 0 |
| 28 | Stone Jar | 31 | 7 | 15 | 77% | 22 | 0 | 0 |
| 29 | Mosaic Art | 123 | 41 | 61 | 67% | 21 | 0 | 0 |
| Average | | 43.68 | 10.07 | 22.58 | 77.3% | 17.59 | 0.28 | 0.24 |
| Min | | 21 | 6 | 10 | 59% | 12 | 0 | 0 |
| Max | | 157 | 55 | 78 | 90% | 28 | 1 | 1 |
| STD | | 27.386 | 10.194 | 15.281 | 0.083 | 3.590 | 0.454 | 0.510 |
| Count | | | | | | 621 | 7 | 8 |
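The "% Reduction" column above is consistent with the relative drop in frame count, (before − after) / before. The following is a minimal sketch that reproduces a few rows under that assumed definition; the table itself does not state the formula, and the helper name `percent_reduction` is hypothetical.

```python
def percent_reduction(frames_before: int, frames_after: int) -> float:
    """Relative drop in frame count, as a percentage.

    Assumed definition: the table does not state its formula.
    """
    if frames_before <= 0:
        raise ValueError("frames_before must be positive")
    return 100.0 * (frames_before - frames_after) / frames_before


# (POI name, frames before ARIDF, frames after ARIDF) copied from the table.
rows = [
    ("De Materia Medica by the Greek", 157, 55),
    ("Ossuary", 27, 11),
    ("Alphabetic Script", 68, 7),
    ("Mosaic Art", 123, 41),
]

for name, before, after in rows:
    print(f"{name}: {percent_reduction(before, after):.0f}%")
```

Rounded to whole percentages, these four rows give 65%, 59%, 90%, and 67%, matching the values listed in the table.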

| Participant # | # of Visited POIs | # of Successful Searching Tries | # of Unrecognized POIs | # of Wrongly Recognized POIs | Tries per Successfully Recognized POI (Avg) | Tries per Successfully Recognized POI (Min) | Tries per Successfully Recognized POI (Max) | Tries per Successfully Recognized POI (STD) |
|---|---|---|---|---|---|---|---|---|
| 1 | 25 | 27 | 1 | 0 | 1.08 | 1 | 2 | 0.34 |
| 2 | 15 | 19 | 0 | 0 | 1.27 | 1 | 3 | 0.59 |
| 3 | 15 | 20 | 0 | 1 | 1.33 | 1 | 3 | 0.65 |
| 4 | 15 | 17 | 0 | 1 | 1.13 | 1 | 2 | 0.43 |
| 5 | 15 | 16 | 1 | 0 | 1.07 | 1 | 2 | 0.36 |
| 6 | 20 | 25 | 0 | 0 | 1.25 | 1 | 2 | 0.44 |
| 7 | 20 | 22 | 1 | 0 | 1.10 | 1 | 2 | 0.37 |
| 8 | 20 | 22 | 1 | 0 | 1.10 | 1 | 3 | 0.55 |
| 9 | 17 | 22 | 0 | 0 | 1.29 | 1 | 2 | 0.47 |
| 10 | 18 | 20 | 1 | 1 | 1.11 | 1 | 3 | 0.58 |
| 11 | 15 | 15 | 0 | 1 | 0.93 | 1 | 1 | 0.00 |
| 12 | 28 | 36 | 0 | 0 | 1.29 | 1 | 2 | 0.46 |
| 13 | 12 | 13 | 1 | 0 | 1.08 | 1 | 3 | 0.60 |
| 14 | 15 | 16 | 0 | 0 | 1.07 | 1 | 2 | 0.26 |
| 15 | 15 | 17 | 0 | 0 | 1.13 | 1 | 2 | 0.35 |
| 16 | 15 | 15 | 1 | 2 | 1.00 | 1 | 2 | 0.38 |
| 17 | 19 | 25 | 0 | 0 | 1.32 | 1 | 2 | 0.48 |
| 18 | 17 | 20 | 0 | 0 | 1.18 | 1 | 3 | 0.53 |
| 19 | 15 | 15 | 0 | 0 | 1.00 | 1 | 1 | 0.00 |
| 20 | 15 | 20 | 0 | 0 | 1.33 | 1 | 3 | 0.62 |
| 21 | 17 | 21 | 0 | 1 | 1.24 | 1 | 3 | 0.60 |
| 22 | 18 | 19 | 0 | 0 | 1.06 | 1 | 2 | 0.24 |
| 23 | 12 | 13 | 0 | 0 | 1.08 | 1 | 2 | 0.29 |
| 24 | 19 | 23 | 0 | 0 | 1.21 | 1 | 2 | 0.42 |
| 25 | 21 | 22 | 1 | 0 | 1.05 | 1 | 2 | 0.22 |
| 26 | 16 | 17 | 0 | 0 | 1.06 | 1 | 2 | 0.25 |
| 27 | 18 | 21 | 0 | 0 | 1.17 | 1 | 2 | 0.38 |
| 28 | 22 | 26 | 0 | 0 | 1.18 | 1 | 2 | 0.39 |
| 29 | 21 | 26 | 0 | 0 | 1.24 | 1 | 3 | 0.54 |
| 30 | 16 | 16 | 0 | 0 | 1.00 | 1 | 1 | 0.00 |
| Sum | 526 | 606 | 8 | 7 | | | | |
| Avg | 17.53 | 20.17 | 0.27 | 0.23 | | | | |
| Min | 12.00 | 13.00 | 0.00 | 0.00 | | | | |
| Max | 28.00 | 36.00 | 1.00 | 2.00 | | | | |
| STD | 3.54 | 4.96 | 0.45 | 0.50 | | | | |
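The column totals in the "Sum" row can be turned into outcome shares. The sketch below assumes that the three outcome counts (successful tries, unrecognized POIs, wrongly recognized POIs) together cover every location query; that reading is an assumption rather than something the table states, and the variable names are illustrative.

```python
# Column sums taken from the "Sum" row of the table above.
outcomes = {
    "successful": 606,
    "unrecognized": 8,
    "wrongly_recognized": 7,
}

# Assumption: the three outcomes partition all location queries.
total_queries = sum(outcomes.values())

# Fraction of all queries that ended in each outcome.
shares = {name: count / total_queries for name, count in outcomes.items()}

print(f"total queries: {total_queries}")
for name, share in shares.items():
    print(f"{name}: {share:.2%}")
```

Under this assumption the total comes to 621, which agrees with the count reported in the per-POI table, and roughly 97.6% of queries end in a successful identification.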

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Egbariya, B.; Dror, R.; Kuflik, T.; Shimshoni, I. Image-Based POI Identification for Mobile Museum Guides: Design, Implementation, and User Evaluation. Heritage 2025, 8, 266. https://doi.org/10.3390/heritage8070266
