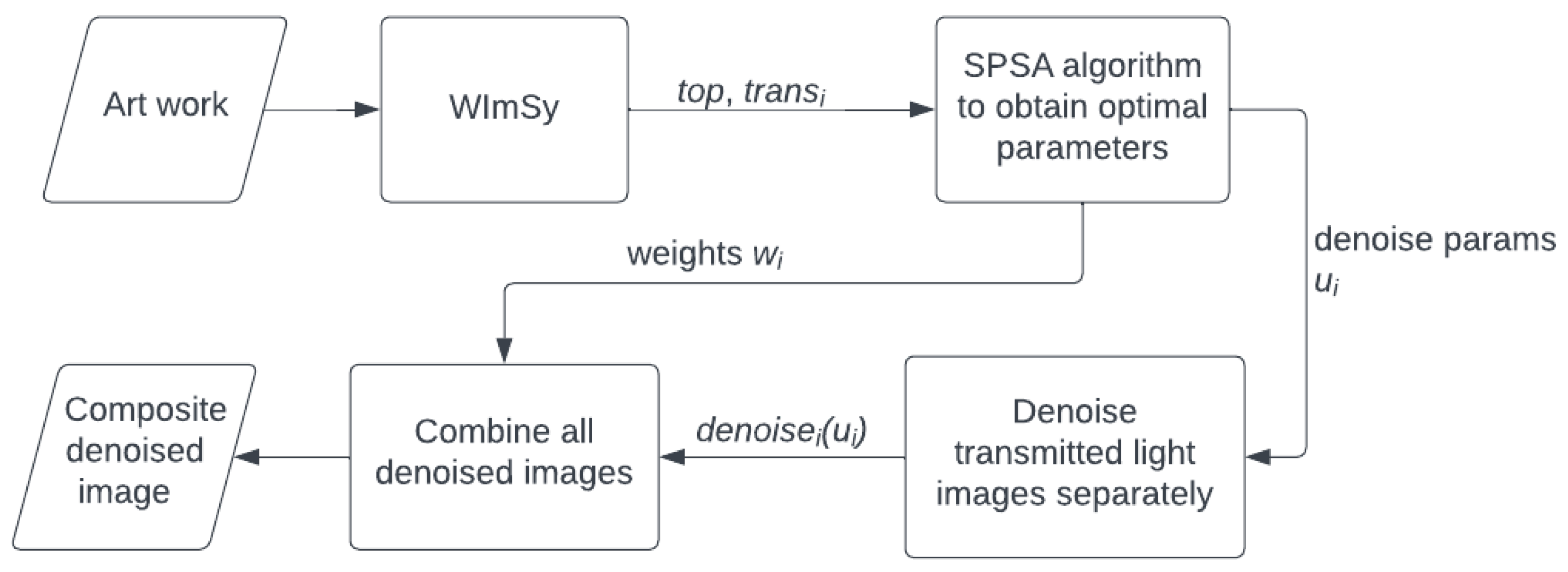

This section begins by describing the hardware that we have developed for imaging the internal structure of paper. Complementing this are the algorithms that we have developed and implemented, which process the images in order to decrease the noise and enhance the legibility of the resulting images.

2.1. The WImSy Machine and the Dataset

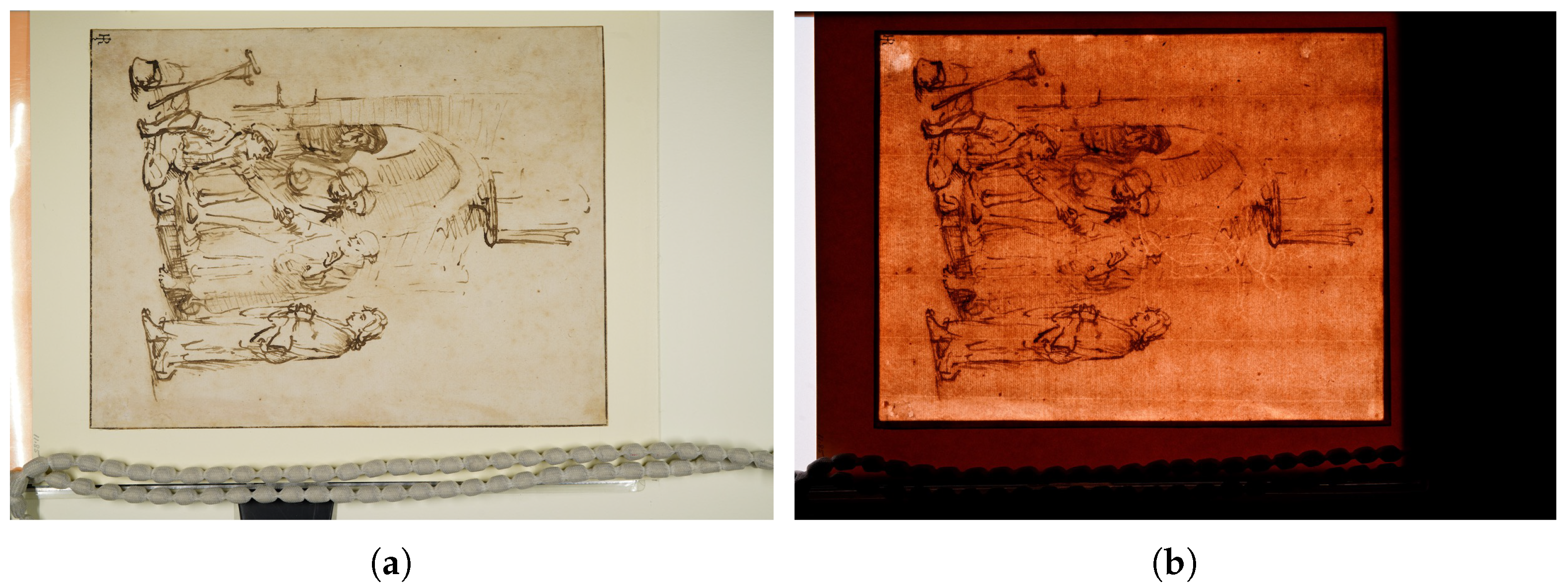

The WImSy, shown in Figure 1, is a specialized system developed for photographing small works on paper up to 8 in by 11 in (20.3 cm by 28 cm), such as prints and drawings. It consists of a portable photographic stand, multiple LED lighting options, a flat light plate, a camera, a hardware interface to control the lights, and software to sequence the capture of multiple images. One of the unique features of WImSy is that it incorporates three different light positions to capture different aspects of the artwork.

WImSy uses the Sony Alpha a7R IV mirrorless camera, which incorporates a 61-megapixel CMOS sensor. For each exposure, the camera is configured to output a JPEG (compressed, lossy) and an uncompressed RAW image. File sizes are approximately 45 MB and 117 MB, respectively. Image resolution is 9504 × 6336 pixels, resulting in 280 × 280 pixels per on the subject, providing sufficient resolution for imaging laid lines. The camera is fitted with a Sony FE 50 mm f/2.8 macro lens. The camera autofocuses using reflected light and holds that focus through the stack of subsequent raking and transmitted light images.

WImSy incorporates three light positions: (1) surface illumination is provided by two LED light strips positioned on either side of the object; (2) raking illumination is provided by a single, low-angle LED light strip; and (3) transmitted light is provided by an electroluminescent light sheet. The light strips measure 18 in (45.7 cm) in length and incorporate 72 LEDs per 12 in (30.5 cm), with a color temperature of 6000 K, operating at 24 volts direct current (24 V DC). The light sheet operates at 12 V DC and is specified to output 200 lux/. The sheets are swappable, with an 11 in × 17 in (28 cm × 43 cm) sheet proving most useful for general use and a smaller 8.5 in × 14 in (21.5 cm × 35.5 cm) sheet sometimes needed, depending on the mounting configuration of the subject. A two-second WImSy capture involves an illuminance of approximately 3440 lux, or an exposure of about 1.9 lux·h, roughly equivalent to 2.5 min of display time at 50 lux. Given the rapid cycling of the lights, heat output is negligible. The lights are operated through a custom-designed DMX-compliant controller for on/off sequencing and intensity control.
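The light-dose figures above can be checked with a few lines of arithmetic. The following minimal Python sketch uses only the values stated in the text (3440 lux, a two-second capture, a 50 lux display level); the function names are illustrative. It converts illuminance and duration into an exposure in lux-hours and then into an equivalent display time, giving about 1.9 lux·h and about 2.3 min, in line with the rounded figure quoted.

```python
# Light-dose arithmetic for one WImSy capture (values taken from the text).
ILLUMINANCE_LUX = 3440.0   # approximate illuminance during a capture
CAPTURE_SECONDS = 2.0      # duration of a capture
DISPLAY_LUX = 50.0         # reference display illuminance


def lux_hours(illuminance_lux: float, seconds: float) -> float:
    """Exposure in lux-hours: illuminance multiplied by duration in hours."""
    return illuminance_lux * seconds / 3600.0


def equivalent_display_minutes(exposure_lux_h: float, display_lux: float) -> float:
    """Minutes of display at `display_lux` that deliver the same exposure."""
    return exposure_lux_h / display_lux * 60.0


dose = lux_hours(ILLUMINANCE_LUX, CAPTURE_SECONDS)
minutes = equivalent_display_minutes(dose, DISPLAY_LUX)
print(f"{dose:.2f} lux·h ≈ {minutes:.1f} min at {DISPLAY_LUX:.0f} lux")
```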

The camera and LEDs are mounted to an armature composed of 1 in (2.5 cm) extruded aluminum. With the LED mounts folded inward for more compact shipping, the armature measures 27 in H × 9 in W × 18 in D (68.5 cm H × 22.9 cm W × 45.7 cm D). Experience indicates that the present prototype could be about 4 in (10 cm) shorter, potentially making future models even more compact. The camera and light positions are geometrically fixed to ensure repeatable, replicable results across captures. As configured, a WImSy capture covers approximately 11 in × 17 in (28 cm × 43 cm) of physical area. Larger works on paper require compositing images across multiple captures.

The camera and lighting functions are controlled using custom software developed in C++ for the Microsoft Windows operating system. The software integrates the Sony Camera Remote API Beta SDK for live view, for setting focus, aperture, shutter speed, and ISO, and for controlling file transfer to the computer. The software has two modes: “Developer” mode includes all camera functions and allows the triggering of three to six transmitted light images, each made using different camera settings to maximize the rendering of paper features typically obscured in conventional transmitted light. Once parameters are optimized, “User” mode prompts for file naming and captures the complete image stack with a single mouse click.

The system is designed to be compact and portable, with all components, including a dedicated laptop computer, fitting into a single protective case for travel and shipping. System set-up requires approximately five minutes, mainly to establish cable connections between the components. Beyond basic art handling experience, no specialized expertise is required to operate the system. The system is still a prototype; its final cost is expected to be less than $10,000.

With its ability to provide maximum consistency across image stacks captured under various lighting positions, the system is ideal for studying and analyzing watermarks and other features in works on paper. In particular, surface illumination is used to capture the overall surface appearance of the artwork, while raking illumination is used to highlight the texture of the paper. The transmitted light images, of which there are nominally six in each capture sequence, can be used to investigate subsurface features such as watermarks, chain lines, and laid lines. The exposure and lighting sequence is fully automated, taking about 30 to 45 s to produce an “image stack” that is perfectly registered. By fixing the lights, camera, and other variables, the system enables downstream computational processing to search for patterns within and across datasets.

For our study, we utilized datasets of images captured at the Metropolitan Museum of Art in New York (in October 2022) and at the Getty Museum in Los Angeles (in February 2023). Each artwork is represented by a toplit image, which captures the surface features of the print or drawing, and between three and six transmitted light images, which capture both the surface and subsurface watermark features.

Figure 2 provides an example of a surface image and the corresponding transmitted light image. The datasets allow testing and evaluation of the effectiveness of the denoising algorithms.

2.2. “Denoising” Transmitted Light Images Using Surface Subtraction

Transmitted light images often show features relating to the structure and composition of a sheet of paper, though details are often obscured by drawing, writing, or other markings on the surface. Thus, transmitted light images often consist of a watermark feature superimposed on the surface features. We call the process of removing these surface features denoising; it can make the internal features clearer and visually isolate the watermark. Our approach to denoising a single transmitted light image linearly combines the surface and transmitted images, based on the method described in [9]. Assuming the two images are perfectly aligned (registered), a weighted grayscale version of the surface image is subtracted from the grayscale version of the transmitted light image. Both of these are readily obtained from the WImSy image stack. The method is extended to exploit multiple transmitted light images in Section 2.3.

Let $T$ and $S$ be registered transmitted light and surface images of the same sheet of paper. The denoised watermark image, $D$, is

$$D = T - u\,S, \tag{1}$$

where $u$ is an adjustable parameter restricted to a fixed range.

A scaling function $f$ is employed after the denoising process to map the pixel values of the resulting image to the range $[0, 1]$, allowing for better visual presentation of the watermark. Specifically, given an input image $I$, the scaled output image $f(I)$ is obtained by

$$f(I) = \frac{I - \min(I)}{\max(I) - \min(I)}, \tag{2}$$

where $\min(I)$ and $\max(I)$ represent the minimum and maximum pixel values in the image $I$, respectively. Once the denoising process is complete, the scaling function can be applied to the resulting image $D$ to obtain a scaled image $f(D)$.
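The single-image subtraction and the min-max scaling are simple array operations. The following is a minimal NumPy sketch, assuming that $T$ and $S$ are already registered grayscale arrays of the same shape; the function names and the synthetic test images are hypothetical and do not represent WImSy data or the authors' implementation.

```python
import numpy as np


def denoise_single(T: np.ndarray, S: np.ndarray, u: float) -> np.ndarray:
    """Subtract the surface image, weighted by u, from the transmitted image.

    Assumes T and S are registered grayscale arrays of the same shape."""
    return T.astype(np.float64) - u * S.astype(np.float64)


def min_max_scale(I: np.ndarray) -> np.ndarray:
    """Linearly map pixel values onto [0, 1]; a constant image maps to zeros."""
    lo, hi = float(I.min()), float(I.max())
    if hi == lo:
        return np.zeros(I.shape, dtype=np.float64)
    return (I - lo) / (hi - lo)


# Synthetic example (hypothetical data): the transmitted image is modeled as a
# faint copy of the surface markings plus a square "watermark", so subtracting
# with the matching u recovers the watermark.
rng = np.random.default_rng(0)
S = rng.random((64, 64))
watermark = np.zeros((64, 64))
watermark[20:40, 20:40] = 0.3
T = 0.6 * S + watermark
D_scaled = min_max_scale(denoise_single(T, S, u=0.6))
```

In practice $u$ would be chosen by inspecting $f(D)$ for several values, since the real relationship between the surface and transmitted images is not this simple.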

To denoise a single transmitted light image manually, the value of the regularization parameter

u can be adjusted using a slider while visually inspecting the output

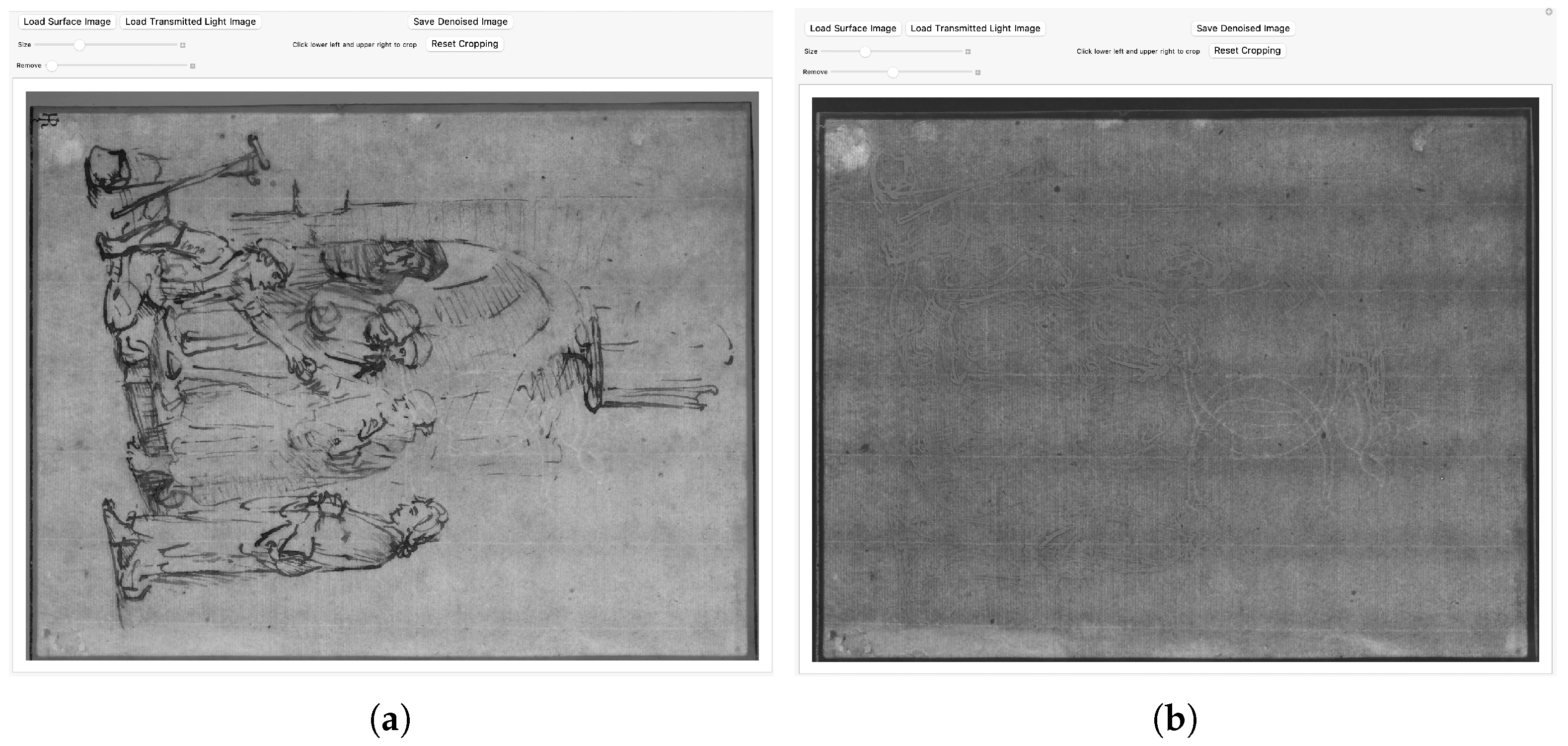

. A graphical user interface (GUI) can be used to facilitate this process, as shown in

Figure 3.

2.3. Using Multiple Transmitted Light Images in the Denoising Process

The effectiveness of the denoising process depends significantly on the exposure time of the transmitted light image. If the exposure time is too short, there is insufficient detail in the image to obtain a clear view of the internal features, whereas if the exposure time is too long, the resulting image is washed out and lacks clarity. To ensure that one or more images are suitable for denoising, WImSy captures several (usually six) different exposures in the image stack.

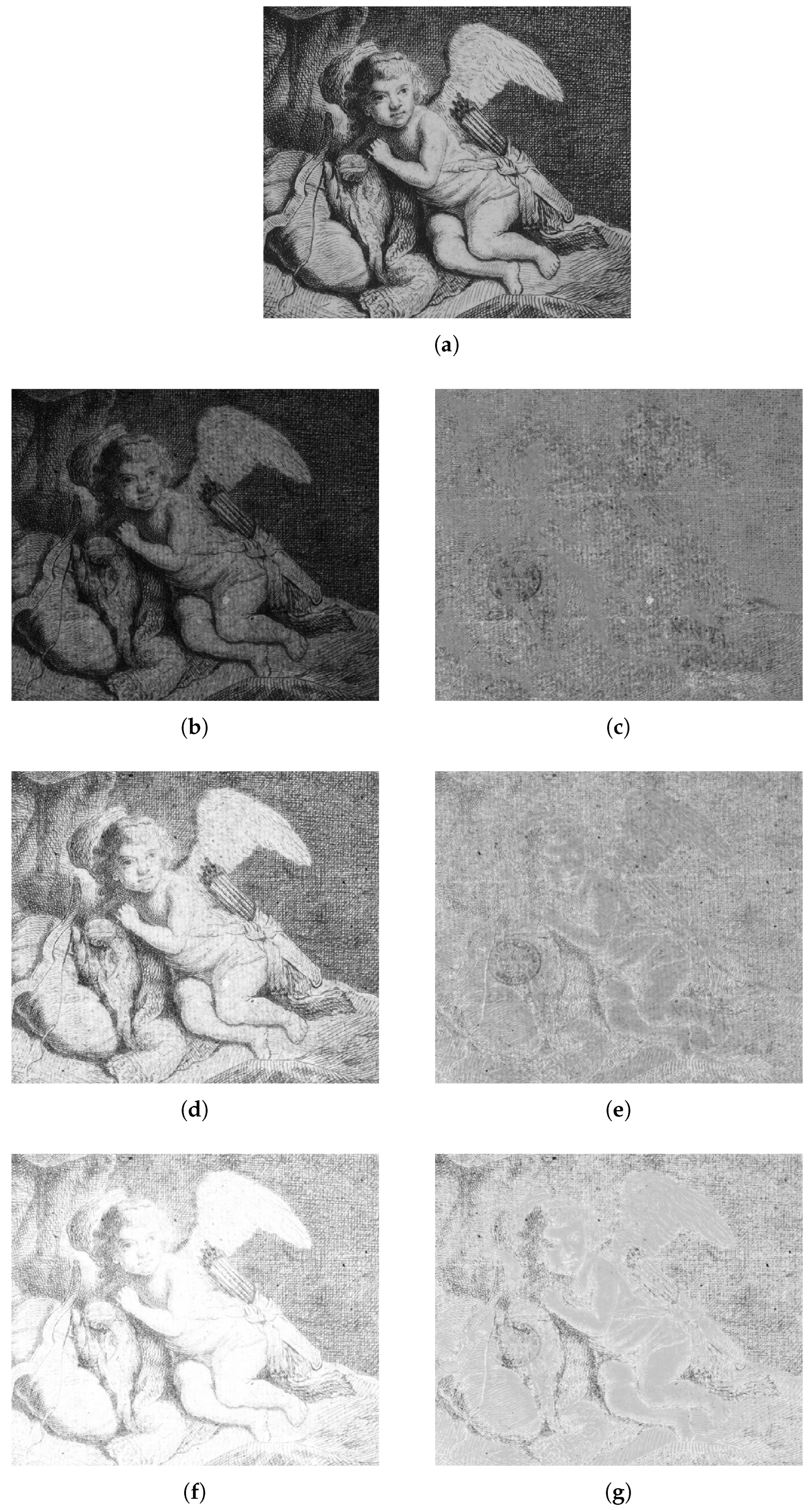

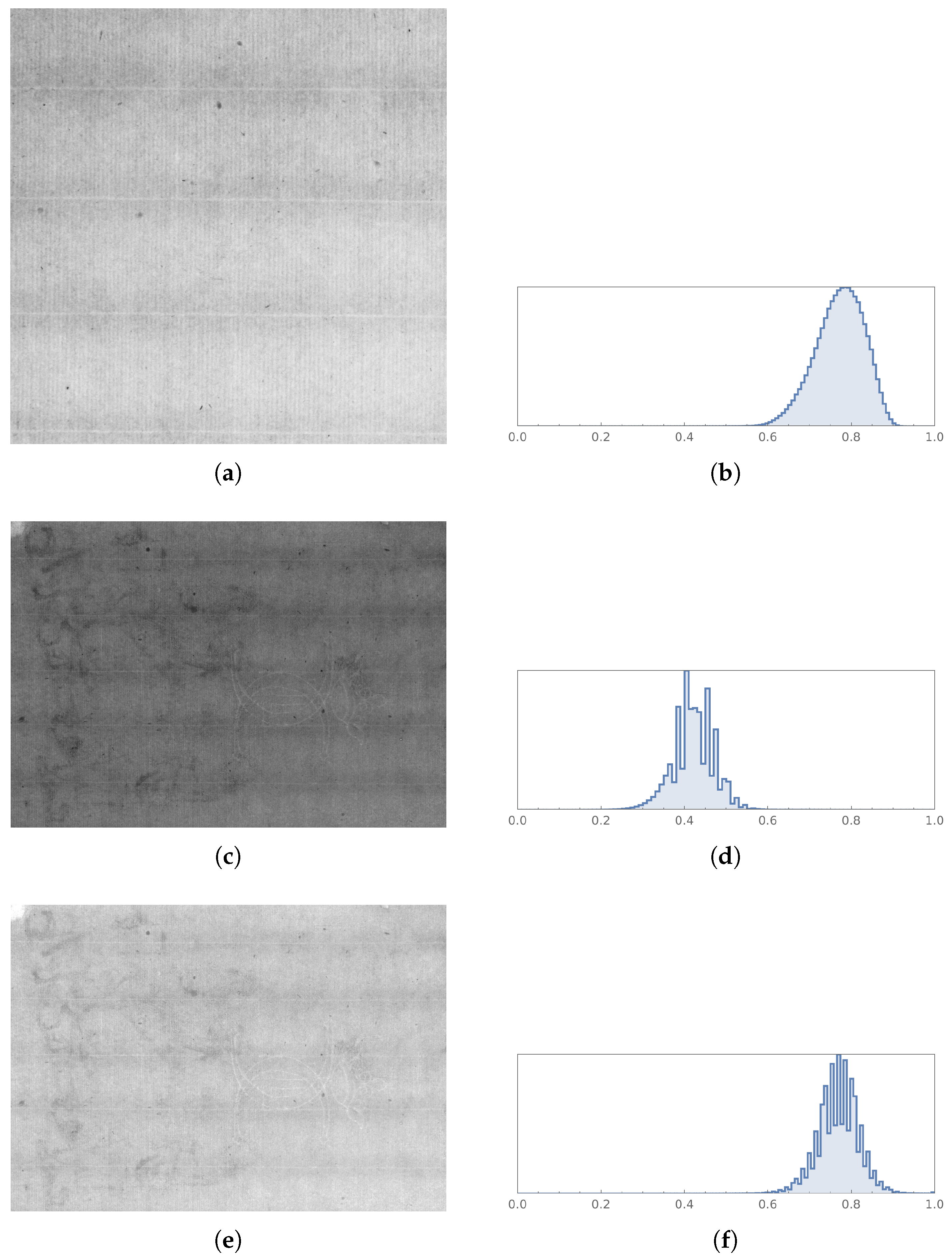

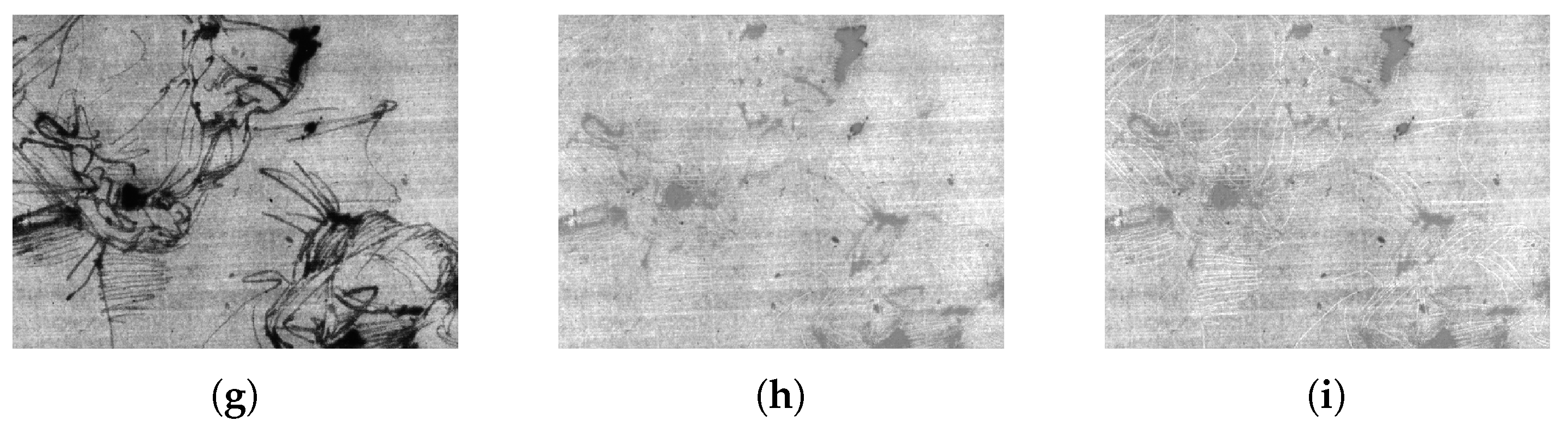

Figure 4 shows three transmitted light images captured with different exposure times and the corresponding denoised images.

In some cases, such as in Figure 3, a single transmitted light image is sufficient for denoising using the method described in Section 2.2. However, it may also happen that no single image displays good features throughout, necessitating the combination of multiple images. There are two possible strategies to accomplish this: denoise the images separately and then combine them via an HDR-like technique, or combine the transmitted light images using HDR-like techniques and then denoise the resulting image. From our experimental results, we have found the former strategy to be more effective. The rationale is that denoising each image separately allows a different denoising parameter $u$ to be applied to each transmitted light image, matched to its exposure time.

Analogous to the denoising method using a single transmitted light image in Section 2.2, we apply a two-step denoising process to a set of multiple transmitted light images using an HDR-like method. Let $\{T_1, \ldots, T_n\}$ be a collection of $n$ transmitted light images, and let $S$ be the corresponding surface image. All images are aligned and are the same size. To obtain the denoised images $D_i$ corresponding to $T_i$ and $S$, we first denoise each image separately and then combine them using the HDR-like method. The denoised image $D_i$ is obtained by subtracting the product of the $i$th entry of a list of parameters $\mathbf{u} = (u_1, \ldots, u_n)$ and $S$ from $T_i$, which can be expressed as follows:

$$D_i = T_i - u_i\,S, \qquad i = 1, \ldots, n. \tag{3}$$

Examples of such denoised images are presented in Figure 4.

The denoised images $D_i$ are then combined using the weighted sum (4), where $\mathbf{w} = (w_1, \ldots, w_n)$ is a weight vector:

$$D = \sum_{i=1}^{n} w_i\,D_i. \tag{4}$$

To ensure that the resulting image is within the 0 to 1 range, a scaling function $f$, as in Equation (2), is applied:

$$D_s = f(D). \tag{5}$$
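The per-image subtraction, weighted sum, and final rescaling can be vectorized over the whole image stack. The sketch below is a minimal NumPy illustration, assuming the $n$ transmitted images are stacked into one array of shape (n, H, W) and that all images are registered grayscale floats; `denoise_stack` and the synthetic three-exposure stack are hypothetical and chosen only to make the behavior easy to check.

```python
import numpy as np


def min_max_scale(I: np.ndarray) -> np.ndarray:
    """Linearly map pixel values onto [0, 1]; a constant image maps to zeros."""
    lo, hi = float(I.min()), float(I.max())
    if hi == lo:
        return np.zeros(I.shape, dtype=np.float64)
    return (I - lo) / (hi - lo)


def denoise_stack(T_stack, S, u, w) -> np.ndarray:
    """Denoise n registered transmitted images separately, then combine them.

    T_stack: array of shape (n, H, W); S: surface image of shape (H, W);
    u: per-image denoising parameters; w: per-image weights (both length n).
    """
    T_stack = np.asarray(T_stack, dtype=np.float64)
    S = np.asarray(S, dtype=np.float64)
    u = np.asarray(u, dtype=np.float64)
    w = np.asarray(w, dtype=np.float64)
    D_i = T_stack - u[:, None, None] * S[None, :, :]  # one denoised image per exposure
    D = np.tensordot(w, D_i, axes=1)                   # weighted sum over the n images
    return min_max_scale(D)                            # rescale to [0, 1]


# Hypothetical three-exposure stack: each exposure scales the surface markings
# and the watermark by a different gain (illustrative numbers only).
rng = np.random.default_rng(1)
S = rng.random((32, 32))
watermark = np.zeros((32, 32))
watermark[8:24, 8:24] = 1.0
gains = np.array([0.3, 0.6, 0.9])
T_stack = np.stack([g * S + 0.2 * g * watermark for g in gains])
D_s = denoise_stack(T_stack, S, u=gains, w=[1.0, 1.0, 1.0])
```

Because each exposure gets its own $u_i$, surface markings that appear with different strengths in different exposures can each be cancelled before the images are summed.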

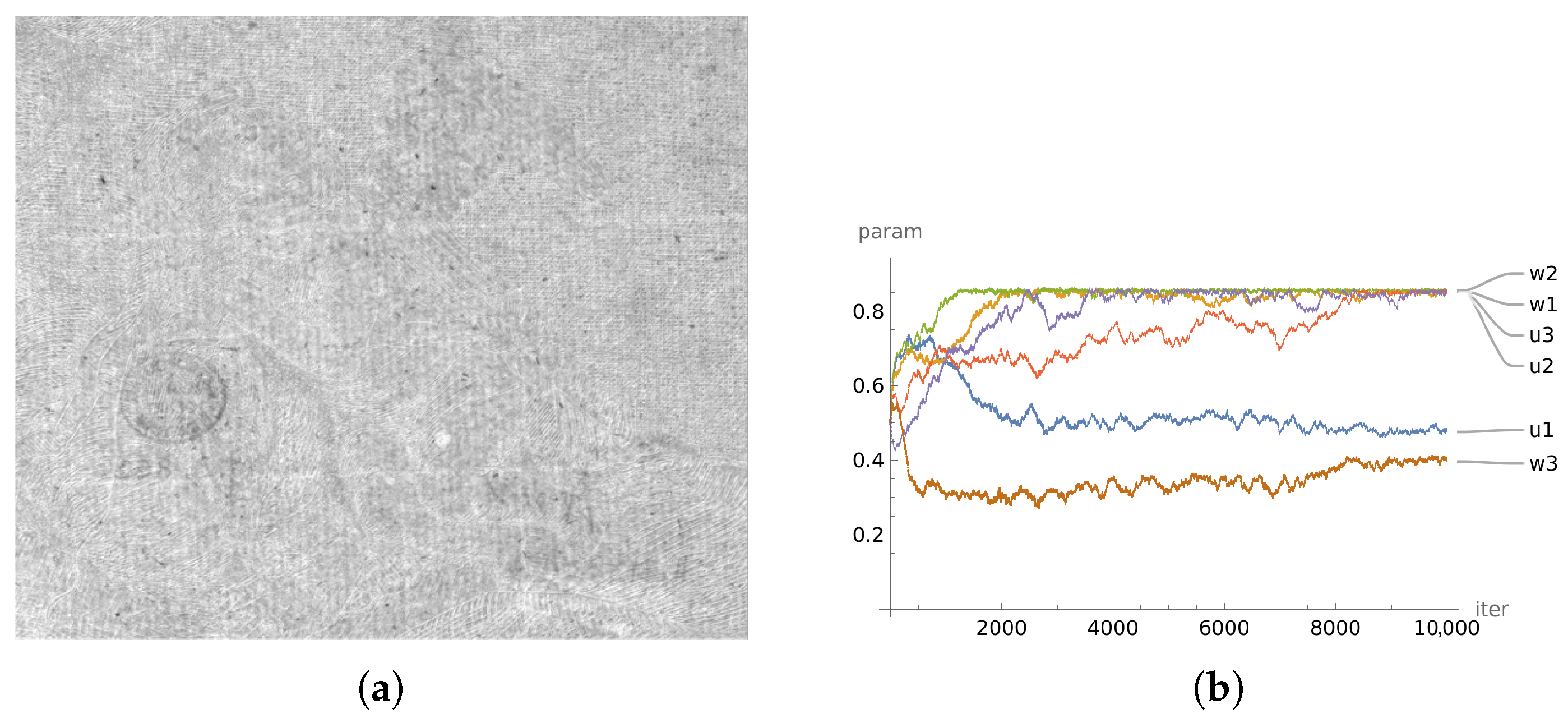

Figure 5 illustrates the combination of denoised images, which yields significantly improved results compared to any single denoised image.

Although the proposed method is effective, the denoising process requires the selection of at least $2n$ parameters ($u_1, \ldots, u_n$ and $w_1, \ldots, w_n$). Therefore, either the denoising parameters must be selected manually, or an automated algorithm must be designed to select them. The subsequent sections elaborate on the automated selection of denoising parameters.

2.5. An Optimization Criterion for Image Denoising

It is not possible to design the loss function from “first principles”, because the goal of the process, a clear view of the inside of the sheet of paper, has no precise mathematical definition. Instead, we use a transmitted light image of a blank sheet of paper with no surface markings as a “target image”. We selected a sheet from a 1536 edition of De re militari by Vegetius, which we had previously photographed and studied in [11]. The quality of a candidate denoised image can then be assessed by measuring its similarity to the target.

Key to this procedure is the selection of a suitable measure of “distance” or “similarity”. Pixel-level distance measures are not suitable because the images may be at different scales and will certainly have misaligned chain and laid lines. A good candidate is a distance between the histograms of the two images, which is useful because it considers only the distribution of pixel brightness and not the locations of the pixels. For example, a poorly denoised image might have a larger percentage of black pixels than the target; adjusting the denoising parameters to make these percentages similar yields an increasingly well-denoised image.

In Figure 7, it can be observed that the shapes of the histograms in (b) and (d) are similar but their locations (the centers of the peaks) are different. This occurs because a denoised image may have a different brightness than the target image. Therefore, it is necessary to standardize and shift the histograms so that their peaks are aligned. Concretely, the shifted, denoised image $\tilde{D}$ is calculated from the denoised image $D$ using the mean of the target image $B$:

$$\tilde{D} = D - \mu(D) + \mu(B), \tag{6}$$

where $\mu(\cdot)$ is the average value of all pixels in the image. This shifts the histogram so that the output has approximately the same overall brightness as the target, and the histograms in (f) and (b) become more similar.
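The brightness alignment is a single mean shift. The following minimal NumPy sketch assumes that `B` holds the blank target image and `D` a denoised image, both as float arrays; the synthetic arrays are hypothetical stand-ins. The shift moves the histogram of `D` without changing its shape, so its spread is preserved.

```python
import numpy as np


def shift_to_target(D: np.ndarray, B: np.ndarray) -> np.ndarray:
    """Shift D so that its mean pixel value matches that of the target B."""
    D = np.asarray(D, dtype=np.float64)
    return D - D.mean() + float(np.mean(B))


rng = np.random.default_rng(2)
B = 0.7 + 0.05 * rng.standard_normal((50, 50))  # stand-in for the blank target
D = 0.4 + 0.05 * rng.standard_normal((50, 50))  # darker hypothetical denoised image
D_shifted = shift_to_target(D, B)
```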

To quantify the difference between histograms, we use the Earth Mover’s Distance (EMD) introduced in [12] as a distance measure; it is defined as the minimum cost of transforming one histogram into another.

Specifically, given the histogram $h_B$ of the target image and the histogram $h_D$ of the denoised image, the EMD between $h_B$ and $h_D$ is computed as the minimum cost required to transform $h_D$ into $h_B$. This is denoted as $\mathrm{EMD}(h_B, h_D)$.
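For one-dimensional histograms defined on the same equal-width bins, the EMD has a closed form: the area between the two cumulative histograms. The sketch below uses that closed form rather than a general transportation solver. It assumes 256 bins on $[0, 1]$ and clips out-of-range values into the end bins; both choices, and the helper names, are illustrative assumptions. SciPy's `scipy.stats.wasserstein_distance` computes the same one-dimensional quantity from values and weights and could be used instead.

```python
import numpy as np

N_BINS = 256


def normalized_histogram(img, bins: int = N_BINS) -> np.ndarray:
    """Histogram of pixel values on [0, 1], normalized to sum to 1.

    Values outside [0, 1] are clipped into the end bins (an assumption)."""
    values = np.clip(np.asarray(img, dtype=np.float64).ravel(), 0.0, 1.0)
    counts, _ = np.histogram(values, bins=bins, range=(0.0, 1.0))
    return counts / counts.sum()


def emd_1d(h_a: np.ndarray, h_b: np.ndarray) -> float:
    """EMD between two normalized histograms on the same equal-width bins.

    In one dimension the EMD equals the area between the two cumulative
    distributions: sum(|CDF_a - CDF_b|) times the bin width."""
    bin_width = 1.0 / len(h_a)
    return float(np.sum(np.abs(np.cumsum(h_a) - np.cumsum(h_b))) * bin_width)


dark = np.full((10, 10), 0.25)
light = np.full((10, 10), 0.75)
print(emd_1d(normalized_histogram(dark), normalized_histogram(light)))  # 0.5
```

Moving all of the mass from 0.25 to 0.75 costs exactly the distance 0.5, which is what the example prints.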

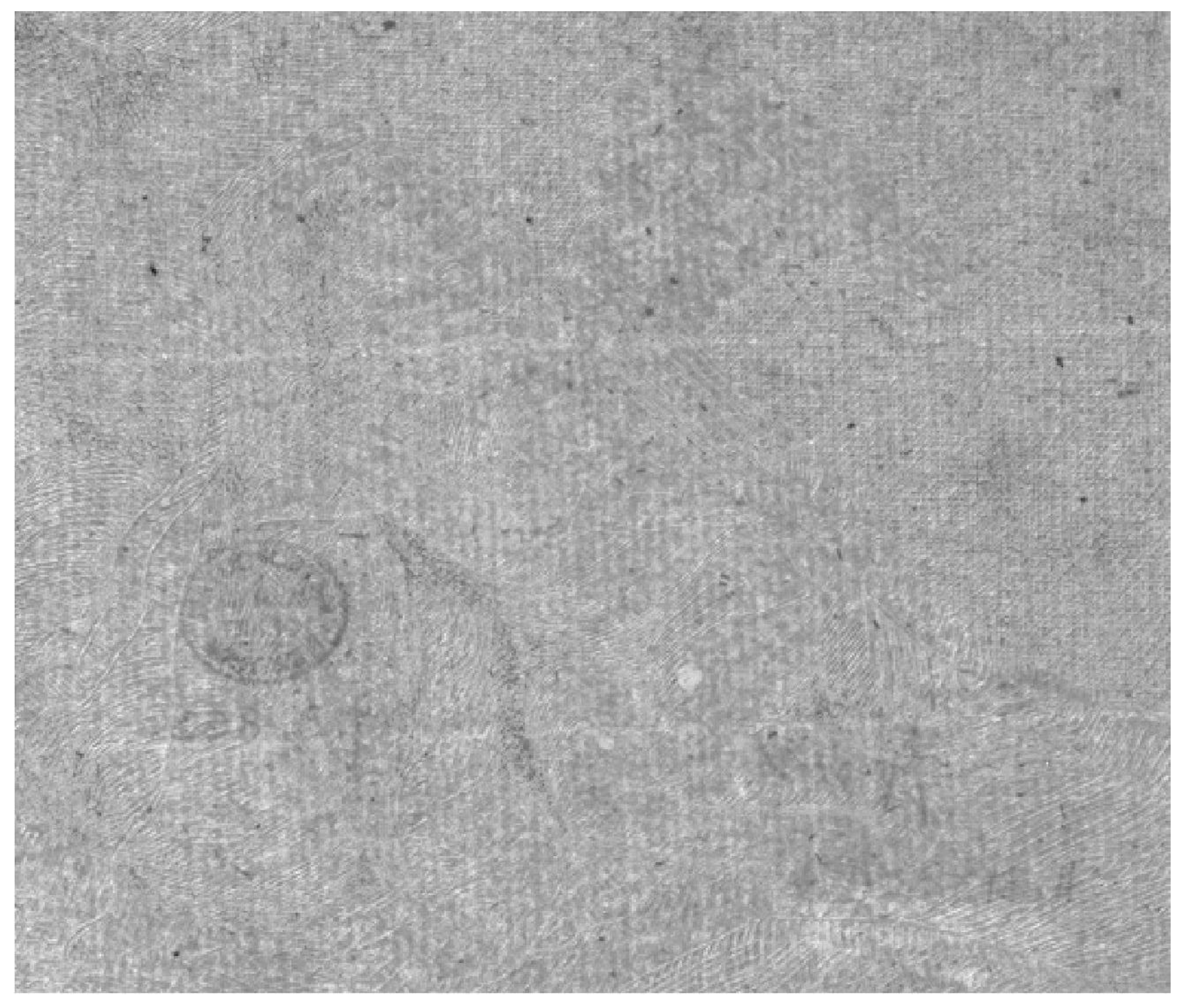

Although the EMD loss is generally effective, it may perform poorly when the image is heavily marked. For example, the overall EMD loss for Figure 8 may be low even though the surface features are not removed completely. To make the loss function more robust, note that poorly denoised images may exhibit inconsistencies across different regions, whereas well-denoised images should have consistent EMD values across all regions. As illustrated in Figure 8, the EMD values for the image vary across four regions. To address this issue, we propose an improvement to the EMD loss that partitions the image into four regions and selects the maximum EMD value among them. Hence

$$\mathcal{L}_{\mathrm{EMD}} = \max_{k \in \{1, 2, 3, 4\}} \mathrm{EMD}\big(h_B, h_D^{(k)}\big), \tag{7}$$

where $h_D^{(k)}$ is the histogram of the $k$th part of the denoised image.
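The robust loss only adds a partition and a maximum on top of the per-histogram EMD. The minimal sketch below assumes that the four regions are the image quadrants (the text does not specify the partition), reuses the one-dimensional closed-form EMD, and uses a hypothetical example in which one quadrant still carries a surface marking. The whole-image EMD dilutes that marking over the full image, whereas the maximum over regions does not.

```python
import numpy as np


def normalized_histogram(img, bins: int = 256) -> np.ndarray:
    """Histogram of pixel values on [0, 1] (clipped), normalized to sum to 1."""
    values = np.clip(np.asarray(img, dtype=np.float64).ravel(), 0.0, 1.0)
    counts, _ = np.histogram(values, bins=bins, range=(0.0, 1.0))
    return counts / counts.sum()


def emd_1d(h_a, h_b) -> float:
    """1-D EMD: area between the cumulative histograms (bin width 1/len)."""
    return float(np.sum(np.abs(np.cumsum(h_a) - np.cumsum(h_b))) / len(h_a))


def quadrants(img: np.ndarray):
    """Split an image into four regions (assumed here to be quadrants)."""
    r, c = img.shape[0] // 2, img.shape[1] // 2
    return [img[:r, :c], img[:r, c:], img[r:, :c], img[r:, c:]]


def robust_emd(D: np.ndarray, B: np.ndarray) -> float:
    """Maximum EMD between the target histogram and each region's histogram."""
    h_B = normalized_histogram(B)
    return max(emd_1d(h_B, normalized_histogram(part)) for part in quadrants(D))


# Hypothetical case: one quadrant still carries a surface marking.
B = np.full((40, 40), 0.5)
D = np.full((40, 40), 0.5)
D[:20, :20] = 0.9
whole = emd_1d(normalized_histogram(B), normalized_histogram(D))
robust = robust_emd(D, B)
```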

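In addition to optimizing the EMD, it is also helpful to observe that the parameters $\mathbf{u}$ and $\mathbf{w}$ cannot be arbitrarily large or small. The range of these parameters can be restricted to an interval $[r_{\min}, r_{\max}]$ by introducing a regularization term $R$, defined as a piecewise function that penalizes values outside this range:

$$R(x) \begin{cases} = 0, & x \in [r_{\min}, r_{\max}], \\ > 0, & x \notin [r_{\min}, r_{\max}]. \end{cases} \tag{8}$$

When applied to multiple parameters, the regularization term is defined as

$$R(\mathbf{u}, \mathbf{w}) = \sum_{i=1}^{n} \big( R(u_i) + R(w_i) \big). \tag{9}$$

Combining the robust version of the EMD loss and the regularization term gives

$$\mathcal{L}(\mathbf{u}, \mathbf{w}) = \mathcal{L}_{\mathrm{EMD}}(\mathbf{u}, \mathbf{w}) + \lambda\, R(\mathbf{u}, \mathbf{w}), \tag{10}$$

where $\lambda$ is a hyperparameter controlling the effect of the constraint on the range of the parameters.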
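To make the structure of the regularized loss concrete, the sketch below implements one possible range penalty and the combined loss. The squared-distance form of the penalty is an assumption for illustration only: the text specifies just that the penalty is zero inside $[r_{\min}, r_{\max}]$ and penalizes values outside it. The names `range_penalty`, `total_loss`, `lam`, `r_min`, and `r_max` are hypothetical, and the robust EMD value is passed in precomputed.

```python
import numpy as np


def range_penalty(x, r_min: float, r_max: float) -> float:
    """Zero inside [r_min, r_max]; squared distance to the interval outside.

    The squared-distance form is an assumption for illustration only."""
    x = np.asarray(x, dtype=np.float64)
    below = np.clip(r_min - x, 0.0, None)  # how far each value falls below r_min
    above = np.clip(x - r_max, 0.0, None)  # how far each value exceeds r_max
    return float(np.sum(below ** 2 + above ** 2))


def total_loss(emd_loss: float, u, w, lam: float, r_min: float, r_max: float) -> float:
    """Robust EMD loss plus lam times the range penalty summed over u and w."""
    penalty = range_penalty(u, r_min, r_max) + range_penalty(w, r_min, r_max)
    return emd_loss + lam * penalty
```

A smooth penalty of this kind grows gradually outside the interval, which suits the gradient-like updates used in the next step; a hard cutoff would give the optimizer no signal about which direction leads back into range.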

2.6. Optimizing the EMD

After specifying the loss function $\mathcal{L}$ in Equation (10), the next step is to devise an efficient optimization algorithm that can solve for the optimal parameters. A commonly used method for parameter optimization is grid search, which iterates through all parameter combinations on a grid over the $2n$-dimensional parameter space and compares the resulting losses. However, this approach can be extremely slow when there are many parameters, because a grid with $m$ values per parameter requires $m^{2n}$ loss evaluations, and it can be challenging to determine an appropriate grid size. An alternative method is gradient descent, which relies on calculating the gradient of the loss function with respect to the optimization parameters. However, since the EMD requires calculating histograms, whose bin counts change discontinuously as the parameters vary, it is not straightforward to compute the gradient directly.

To overcome this challenge, we adopt the Simultaneous Perturbation Stochastic Approximation (SPSA) algorithm as described in Algorithm 1; see [13,14]. SPSA approximates the gradient with a small number of evaluations of the loss function, allowing the parameter estimates to converge in a gradient-like fashion. Another advantage of SPSA is that the computation time per iteration need not scale with the number of parameters: each gradient estimate uses only two loss evaluations, regardless of the dimension. Even when dealing with multiple transmitted light images, the algorithm therefore remains (almost) as fast as when operating on a single image.

Each iteration of the SPSA algorithm perturbs the parameters in a random direction by a small amount $c$ and then estimates the gradient from the resulting changes in the value of the loss function. The algorithm is:

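Algorithm 1 Simultaneous Perturbation Stochastic Approximation (SPSA) Algorithm

Input: initial guess of parameters $\theta_0$, step size $a$, perturbation size $c$, number of iterations $N$, current minimum loss $\mathcal{L}_{\min}$, current minimum parameters $\theta_{\min}$

1. for $k = 0, 1, \ldots, N-1$ do
2. Choose a random perturbation $\Delta_k$ with components $\Delta_{k,i} \in \{-1, +1\}$
3. Calculate the estimated gradient:
4. $\hat{g}_{k,i} = \dfrac{\mathcal{L}(\theta_k + c\,\Delta_k) - \mathcal{L}(\theta_k - c\,\Delta_k)}{2c\,\Delta_{k,i}}$ for $i = 1, \ldots, 2n$
5. Update parameters:
6. $\theta_{k+1} = \theta_k - a\,\hat{g}_k$
7. if $\mathcal{L}(\theta_{k+1}) < \mathcal{L}_{\min}$ then
8. $\mathcal{L}_{\min} \leftarrow \mathcal{L}(\theta_{k+1})$, $\theta_{\min} \leftarrow \theta_{k+1}$
9. end if
10. end for

Output: optimized parameters $\theta_{\min}$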

Here, $\theta \in \mathbb{R}^{2n}$ represents the vector of parameters to be optimized, which is a concatenation of $\mathbf{u}$ and $\mathbf{w}$; $n$ is the number of transmitted light images, and $\mathcal{L}$ is the loss function being minimized, defined in Equation (10). The algorithm iteratively updates the parameters by estimating the gradient using random perturbations of the parameter vector. The step size $a$ determines the size of the update, and the perturbation size $c$ controls the magnitude of the perturbations. The algorithm runs for a fixed number of iterations $N$ and returns the optimized parameters $\theta_{\min}$.
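Algorithm 1 translates into a short loop. The following is a minimal Python sketch, not the authors' implementation. It assumes constant step and perturbation sizes, perturbation components drawn uniformly from {-1, +1}, and an initial minimum loss equal to the loss at the initial guess. `spsa_minimize` is a hypothetical name. For watermark denoising, `loss` would wrap the regularized robust EMD loss, with `theta[:n]` read as $\mathbf{u}$ and `theta[n:]` as $\mathbf{w}$. Here it is exercised on a toy quadratic so that the result can be checked.

```python
import numpy as np


def spsa_minimize(loss, theta0, a=0.01, c=0.01, n_iter=200, seed=None):
    """Minimize `loss` with SPSA, tracking the best parameters seen so far."""
    rng = np.random.default_rng(seed)
    theta = np.asarray(theta0, dtype=np.float64).copy()
    best_theta = theta.copy()
    best_loss = loss(theta)  # initial "current minimum loss" (an assumption)
    for _ in range(n_iter):
        # Random perturbation direction with components in {-1, +1}.
        delta = rng.choice([-1.0, 1.0], size=theta.shape)
        # Two loss evaluations give the whole gradient estimate, whatever the dimension.
        diff = loss(theta + c * delta) - loss(theta - c * delta)
        g_hat = diff / (2.0 * c * delta)
        theta = theta - a * g_hat
        current = loss(theta)  # extra evaluation used only to track the minimum
        if current < best_loss:
            best_loss, best_theta = current, theta.copy()
    return best_theta, best_loss


# Toy check on a quadratic with a known minimizer (hypothetical values).
target = np.array([0.7, 1.2, 0.3, 0.9])


def quadratic(theta):
    return float(np.sum((theta - target) ** 2))


best, best_val = spsa_minimize(quadratic, np.zeros(4), a=0.1, c=0.01, n_iter=500, seed=0)
```

The step size matters: with too large an `a` relative to the number of parameters, the update overshoots and diverges. In practice, `a` and `c` are often decreased over the iterations, which this sketch omits for simplicity.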