4.1. Data

We collected daily data on 98 stablecoins from three primary cryptocurrency data platforms: CoinMarketCap, CoinGecko, and TokenInsight. CoinMarketCap (https://coinmarketcap.com, accessed on 15 November 2025) is a widely recognized aggregator that provides historical and real-time data on cryptocurrency prices, market capitalization, and trading volumes, alongside listing status updates. CoinGecko (https://www.coingecko.com, accessed on 15 November 2025) offers a complementary dataset, tracking similar metrics with an emphasis on community-driven insights and additional market indicators. TokenInsight (https://tokeninsight.com, accessed on 15 November 2025) provides detailed analytics on blockchain assets, including stablecoins, with a focus on risk ratings and market performance. Because data for some stablecoins are no longer available online owing to delisting or project abandonment, we supplemented our dataset using the Wayback Machine (https://web.archive.org/, accessed on 15 November 2025) [34]. The Wayback Machine is an initiative by the Internet Archive that archives snapshots of websites over time, enabling us to retrieve historical data from these platforms when the primary sources were inaccessible. The full list of stablecoins analyzed is reported in Table 1 of Section 3.1 (“Detecting Stablecoin Failure with Simple Thresholds”).

Our dataset includes daily observations of open, high, low, and close prices, market capitalization, and trading volume for these 98 stablecoins, spanning January 2019 to November 2024. Following [14], we divided the coins into two groups based on observation count: 39 stablecoins with fewer than 730 observations, used to forecast 1-day and 30-day ahead probabilities of death, and 59 stablecoins with 730 or more observations, used to forecast 1-day, 30-day, and 365-day ahead probabilities of death. For each stablecoin, we computed three market capitalization differences: today’s value minus yesterday’s (daily change), today’s value minus the value 7 days earlier (weekly change), and today’s value minus the value 30 days earlier (monthly change). Additionally, we calculated daily stablecoin volatility using a modified estimator [35] that accounts for opening gaps, as proposed by [36], defined as:

$$\sigma_t=\sqrt{\left[\ln\left(\frac{O_t}{C_{t-1}}\right)\right]^2+\frac{1}{2}\left[\ln\left(\frac{H_t}{L_t}\right)\right]^2-(2\ln 2-1)\left[\ln\left(\frac{C_t}{O_t}\right)\right]^2}$$

where $O_t$, $H_t$, $L_t$, and $C_t$ denote the open, high, low, and close prices on day $t$, and $C_{t-1}$ is the previous day’s close. We also computed weekly and monthly rolling averages of the daily historical volatilities. This regressor structure, combining daily, weekly, and monthly horizons, draws inspiration from the Heterogeneous Autoregressive (HAR) model of [37].
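The feature construction described above can be sketched in pandas for a single coin. This is a minimal sketch under stated assumptions: the column names (`open`, `high`, `low`, `close`, `market_cap`) are hypothetical; the volatility is the Garman-Klass range estimator with an added opening-gap term (our reading of the modified estimator, not necessarily the authors’ exact code); and the weekly and monthly windows are assumed to be 7 and 30 calendar days, since crypto markets trade every day.

```python
import numpy as np
import pandas as pd


def gkyz_volatility(df: pd.DataFrame) -> pd.Series:
    """Daily volatility: Garman-Klass range estimator plus an opening-gap term.

    Assumes hypothetical columns 'open', 'high', 'low', 'close', sorted by date.
    The first row is NaN because it has no previous close.
    """
    gap = np.log(df["open"] / df["close"].shift(1))  # opening gap vs previous close
    hl = np.log(df["high"] / df["low"])              # intraday range
    co = np.log(df["close"] / df["open"])            # open-to-close return
    var = gap**2 + 0.5 * hl**2 - (2 * np.log(2) - 1) * co**2
    return np.sqrt(var.clip(lower=0.0))              # guard against inconsistent OHLC rows


def har_features(df: pd.DataFrame) -> pd.DataFrame:
    """Daily/weekly/monthly market-cap changes and HAR-style volatility averages."""
    mcap = df["market_cap"]
    vol = gkyz_volatility(df)
    return pd.DataFrame(
        {
            "d_mcap_1": mcap - mcap.shift(1),    # today minus yesterday
            "d_mcap_7": mcap - mcap.shift(7),    # today minus 7 days ago
            "d_mcap_30": mcap - mcap.shift(30),  # today minus 30 days ago
            "vol_d": vol,                        # daily volatility
            "vol_w": vol.rolling(7).mean(),      # weekly rolling average (assumed 7 days)
            "vol_m": vol.rolling(30).mean(),     # monthly rolling average (assumed 30 days)
        },
        index=df.index,
    )
```

In a panel, the function would be applied separately to each coin’s time series (for example, by iterating over `groupby` groups) so that shifts and rolling windows never cross coins; the same rolling averages can be applied to the Bitcoin IV and EPU series.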

The selection of daily, weekly, and monthly horizons for market capitalization changes and volatility captures both immediate market reactions and sustained trends, providing a comprehensive view of stability dynamics across different time frames relevant to investors and regulators.

We included the T3 Bitcoin Volatility Index [38], a 30-day implied volatility (IV) measure for Bitcoin derived from Bitcoin option prices via linear interpolation between the expected variances of the two nearest expiration dates. The index was sourced from its provider (https://t3index.com/indexes/bit-vol/, accessed on 15 January 2025) until its discontinuation in February 2025; the full methodology is archived online (https://web.archive.org/web/20221114185507/https://t3index.com/wp-content/uploads/2022/06/Bit-Vol-process_guide-Jan-2019-2022_03_22-06_02_32-UTC.pdf, accessed on 15 November 2025). We also computed weekly and monthly rolling averages of this index for each stablecoin. Alternative implied volatility indices exist, such as those from Deribit (https://www.deribit.com, accessed on 15 November 2025), a leading crypto options exchange that calculates volatility from its Bitcoin and Ethereum option markets, and from other providers such as Skew or CryptoCompare, which offer similar metrics. Bitcoin IV is a potentially important regressor because Bitcoin volatility often drives broader crypto market dynamics and can destabilize stablecoins through correlated price shocks or shifts in investor confidence, thereby influencing their probability of default.
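The interpolation step described above can be illustrated with a VIX-style constant-maturity calculation. This is a hedged illustration only: it assumes the annualized variances of the two nearest expiries have already been extracted from option prices, and it interpolates linearly in total variance (variance times time to expiry), which is the convention of the VIX methodology; the exact T3 Bit-Vol weighting is documented in the archived process guide and may differ in detail.

```python
import math


def constant_maturity_iv(var_near: float, days_near: float,
                         var_next: float, days_next: float,
                         target_days: float = 30.0, year_days: float = 365.0) -> float:
    """Interpolate two annualized variances to a constant maturity.

    var_near, var_next: annualized variances in decimal form (0.64 means 80% vol)
    for the expiries on either side of target_days. Returns the annualized
    implied volatility in percentage points.
    """
    if not days_near < days_next:
        raise ValueError("expiries must satisfy days_near < days_next")
    # Weights that place target_days linearly between the two expiries.
    w_near = (days_next - target_days) / (days_next - days_near)
    w_next = (target_days - days_near) / (days_next - days_near)
    # Linear interpolation of total (time-scaled) variance.
    total_var = (w_near * var_near * days_near + w_next * var_next * days_next) / year_days
    # Re-annualize over the target horizon and convert to volatility in percent.
    return 100.0 * math.sqrt(total_var * year_days / target_days)
```

For example, with an 80% annualized volatility at both 20 and 40 days, the 30-day value is also 80, as a flat term structure should imply.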

The daily news-based Economic Policy Uncertainty (EPU) Index (https://www.policyuncertainty.com/us_monthly.html, accessed on 15 November 2025) is constructed from newspaper archives in the NewsBank Access World News database, which aggregates thousands of global news sources. We restricted our analysis to newspaper articles, excluding magazines and newswires, to ensure consistency. Weekly and monthly rolling averages of this index were also computed for each stablecoin. The EPU Index captures macroeconomic and policy-related uncertainty, which may affect stablecoin stability by altering investor risk appetite or triggering capital flows that affect peg maintenance, making it a relevant predictor of PDs. This index has been widely used in academic and professional articles; see https://www.policyuncertainty.com/research.html (accessed on 15 November 2025) and the references therein. Table A14 in Appendix A lists the notation for all our main variables.

Unlike past literature (see [14] and references therein), we excluded daily Google Trends data on stablecoin searches because of several limitations. For many stablecoins, daily data were unavailable because search volumes fell below Google Trends’ reporting threshold: the service normalizes search interest on a 0 to 100 scale only when activity is sufficient. Moreover, for coins with available data, searches predominantly reflected interest in the real dollar rather than in the stablecoin, introducing noise and potential bias. We therefore discarded this variable entirely. We also did not use Google Trends data for Bitcoin, following the evidence provided by [39,40]. These studies compared the predictive performance of models using implied volatility against those using Google search data for forecasting volatility and risk measures across various financial and commodity markets, and found that implied volatility models generally outperform those based on Google Trends data. They attribute this result to the fact that the information captured by Google search activity is already embedded in implied volatility, whereas the reverse does not hold. Their explanation is that implied volatility reflects the forward-looking expectations of sophisticated market participants, such as institutional investors with access to superior information, while Google Trends primarily reflects the behavior and expectations of retail investors with limited information.

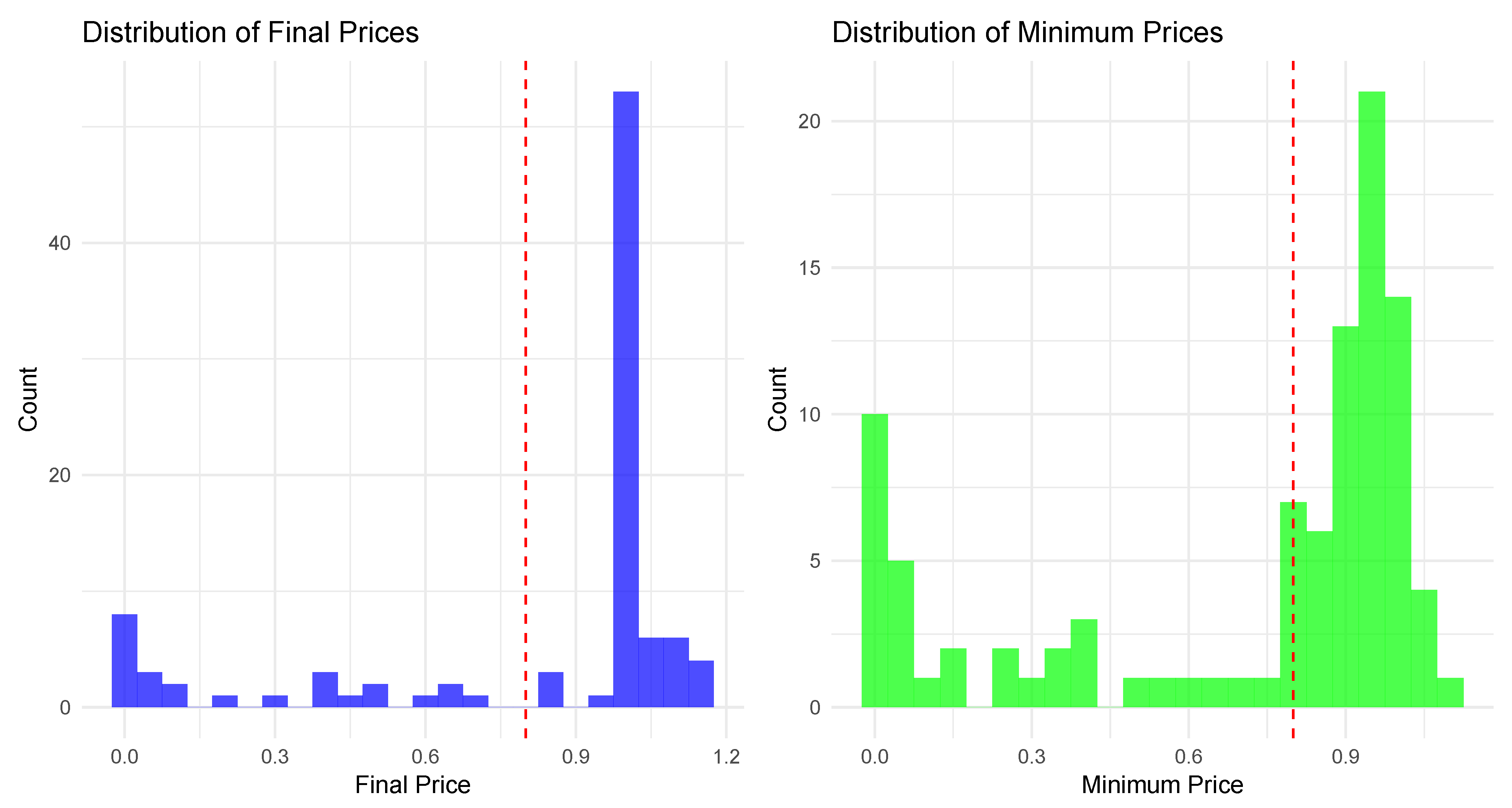

As detailed in Section 3.1, we applied two competing criteria to classify each stablecoin daily as dead or alive/resurrected: the volume-based approach of [5] and our price threshold method at $0.80, under which a coin is deemed dead if its price falls below 80 cents, alive if its price is above 80 cents, and resurrected if its price recovers above 80 cents after a dead spell. The final CoinMarketCap listing status was not used for the daily analysis, as it reflects only the end-of-sample status. The dataset of 39 stablecoins with fewer than 730 observations spans February 2021 to November 2024 (11,558 daily observations), while that of the 59 stablecoins with 730 or more observations spans January 2019 to November 2024 (76,176 daily observations). Following [14,15], we employed direct forecasts, using 1-day lagged regressors for 1-day ahead PDs, 30-day lagged regressors for 30-day ahead PDs, and, for coins with 730 or more observations, 365-day lagged regressors for 365-day ahead PDs.
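The direct-forecast design pairs each coin’s death indicator on day t with regressors observed h days earlier, so a separate model is estimated for each horizon. A minimal pandas sketch, assuming a long panel with hypothetical columns `coin`, `date`, and a 0/1 `dead` indicator:

```python
import pandas as pd


def direct_forecast_design(panel: pd.DataFrame, features: list, h: int,
                           target: str = "dead", coin: str = "coin",
                           date: str = "date") -> pd.DataFrame:
    """Return rows pairing y_{i,t} with x_{i,t-h} for an h-step direct forecast."""
    panel = panel.sort_values([coin, date])
    # Shift within each coin so that lags never leak across coins.
    lagged = panel.groupby(coin)[features].shift(h)
    design = pd.concat([panel[[coin, date, target]], lagged], axis=1)
    # The first h days of each coin have no lagged regressors and are dropped.
    return design.dropna(subset=features)
```

Calling it with `h=1`, `h=30`, and, for the longer-lived coins only, `h=365` yields the three estimation samples; coins shorter than h days contribute no rows at that horizon.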

Table 3 reports the total number of “dead days” (days on which stablecoins are classified as dead under each of the two criteria), both in absolute terms and as a percentage.

It appears that the price threshold method is more restrictive, requiring a significant price drop below 80 cents, whereas the volume-based Feder method captures more days as dead, potentially including periods of low activity that do not necessarily reflect a loss of peg stability.
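The $0.80 rule and the dead-day summary can be sketched as follows, assuming the daily close is the reference price and using hypothetical column names. A day is labeled dead when the price is below the threshold; in this sketch a coin is flagged as resurrected on any day it is back at or above the threshold after at least one earlier dead day. The volume-based criterion of [5] is not reproduced here.

```python
import pandas as pd

THRESHOLD = 0.80  # USD, as in the price threshold method


def label_price_threshold(panel: pd.DataFrame, price: str = "close",
                          coin: str = "coin", date: str = "date",
                          threshold: float = THRESHOLD) -> pd.DataFrame:
    out = panel.sort_values([coin, date]).copy()
    out["dead"] = (out[price] < threshold).astype(int)
    # 1 if the coin was dead on any earlier day (today excluded).
    dead_before = out.groupby(coin)["dead"].transform(
        lambda s: s.cummax().shift(1, fill_value=0)
    )
    out["resurrected"] = ((out["dead"] == 0) & (dead_before == 1)).astype(int)
    return out


def dead_day_summary(labeled: pd.DataFrame) -> tuple:
    """Number of dead coin-days and their share (%) of all coin-days."""
    n_dead = int(labeled["dead"].sum())
    return n_dead, 100.0 * n_dead / len(labeled)
```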

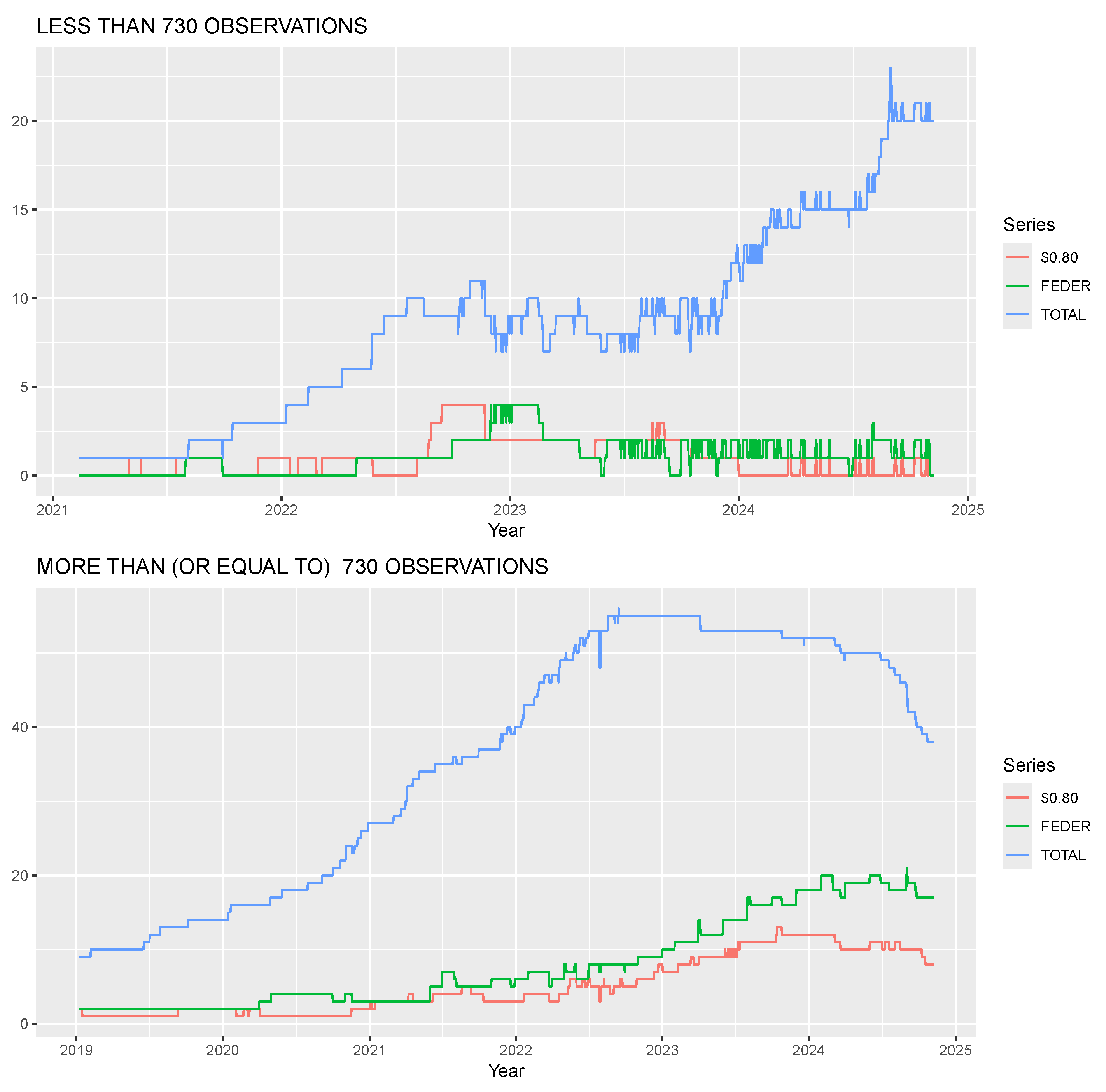

Figure 2 illustrates the total number of stablecoins available each day (blue line) and the number of dead stablecoins each day according to the Feder method (green line) and the $0.80 threshold (red line), split by observation group.

For stablecoins with fewer than 730 observations, the $0.80 method reacts more quickly to failure events, as seen in sharper spikes (e.g., August 2022 and mid-2023), while the Feder method shows more sustained periods of dead stablecoins, reflecting its sensitivity to prolonged low volume. For stablecoins with 730 or more observations, the $0.80 method again responds faster, with notable peaks (e.g., 2022), compared to the Feder method’s broader plateaus. This quicker reaction aligns with the price threshold’s focus on immediate peg deviations, making it a more responsive indicator of stablecoin distress in real-time monitoring scenarios.

4.2. In-Sample Analysis

The in-sample analysis evaluates the performance of various panel models and a random forest model in explaining the probability of stablecoin failure, using the full available data sample and the two classification criteria outlined in Section 3.1: the $0.80 price threshold and the volume-based approach of [5].

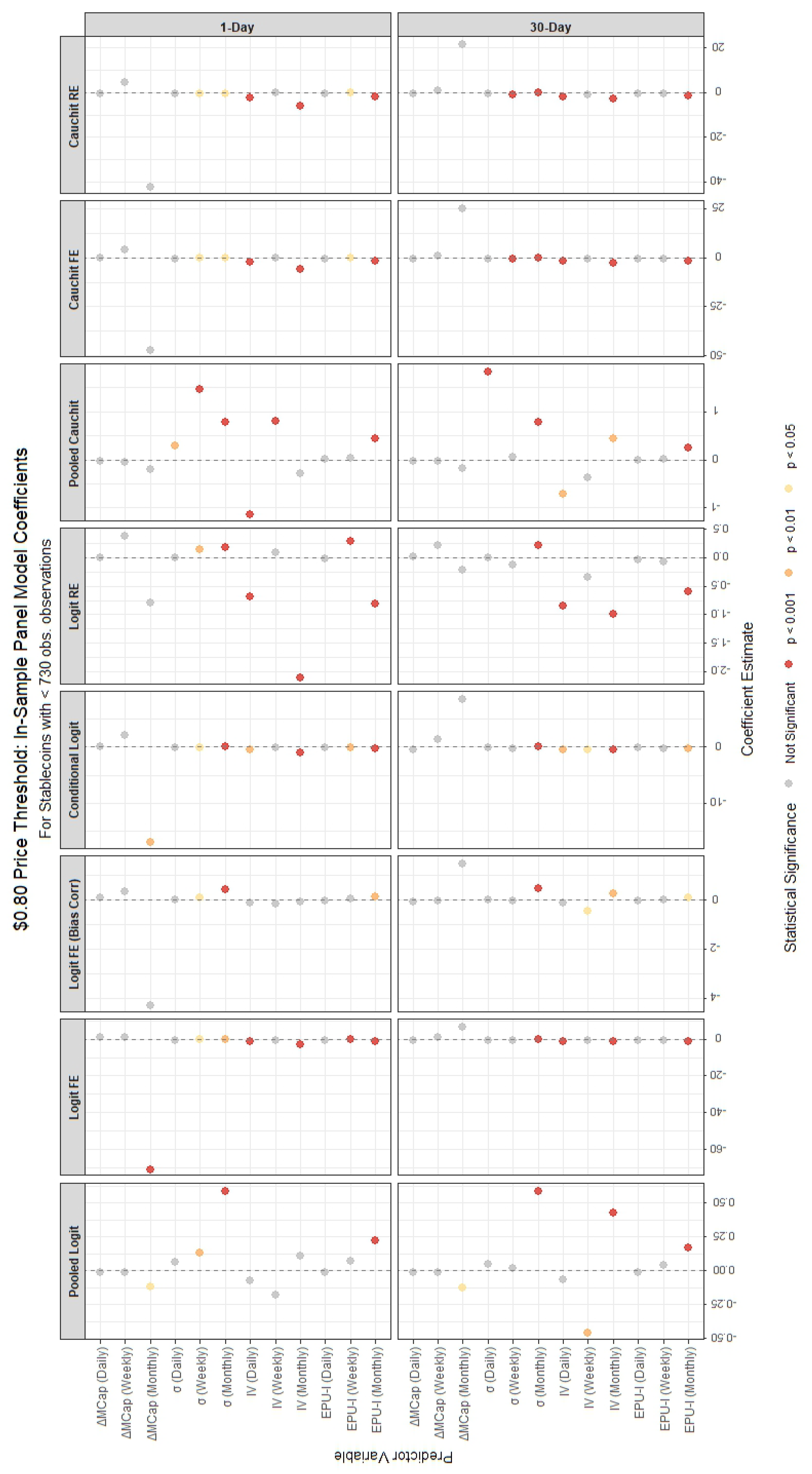

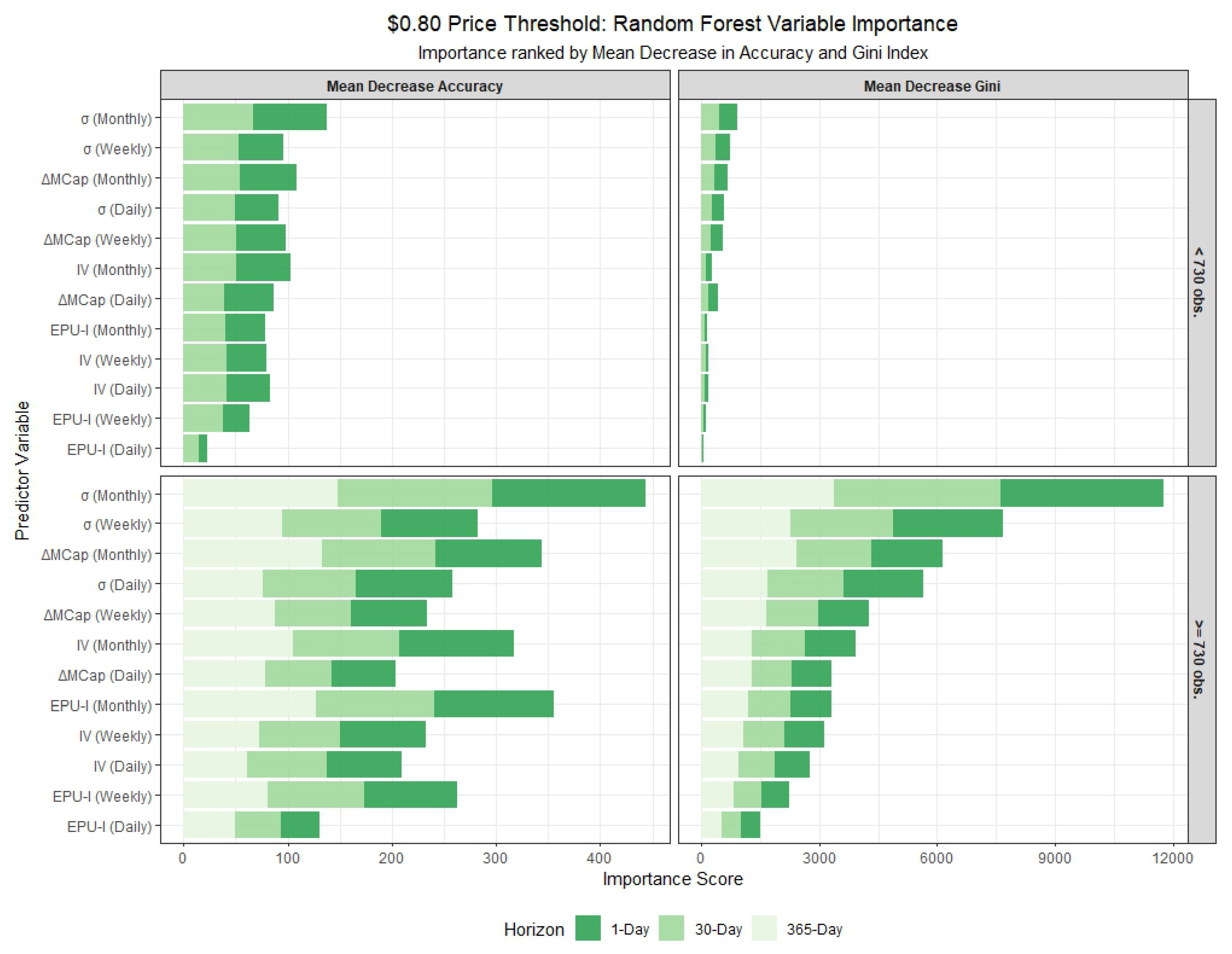

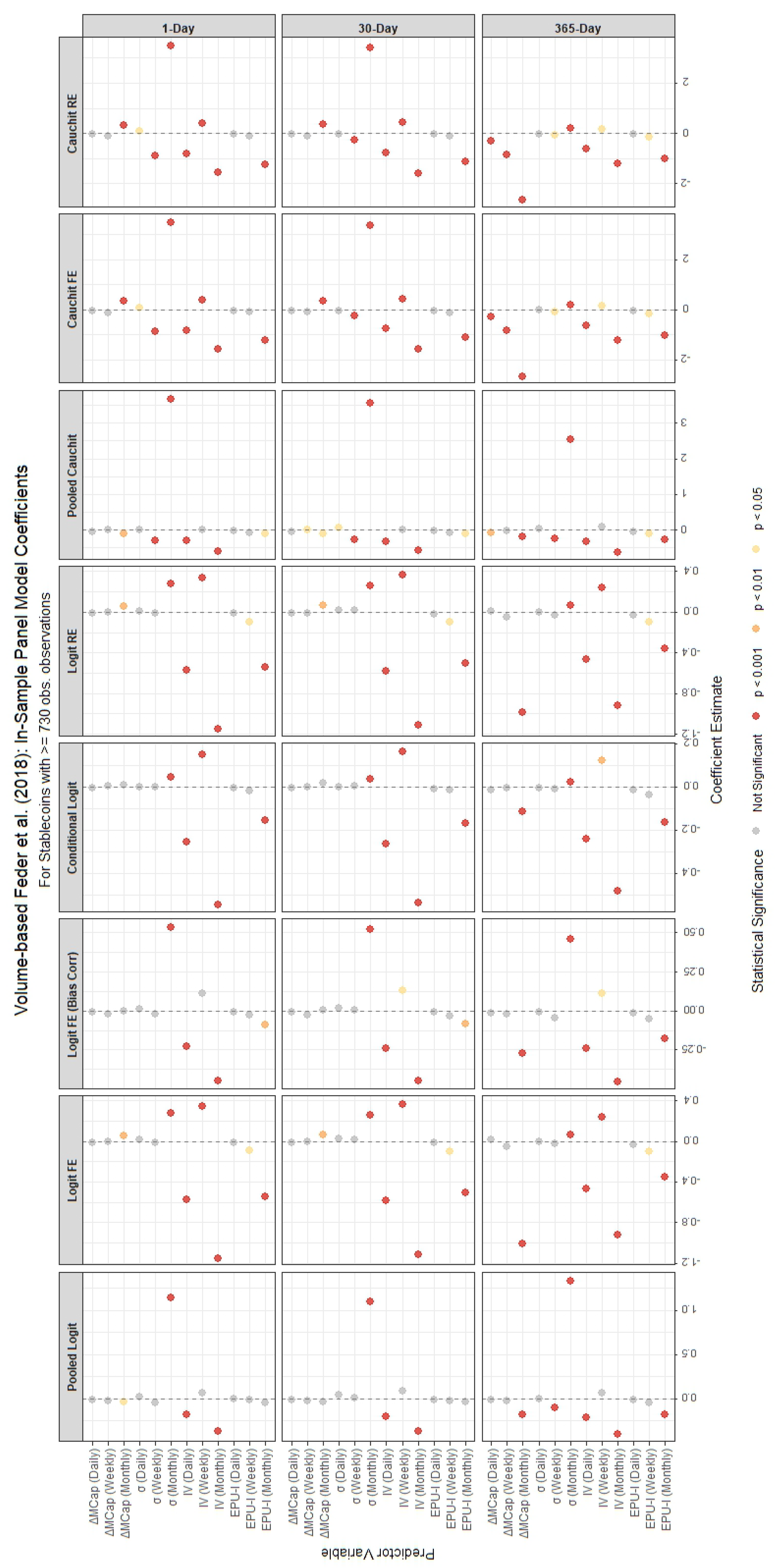

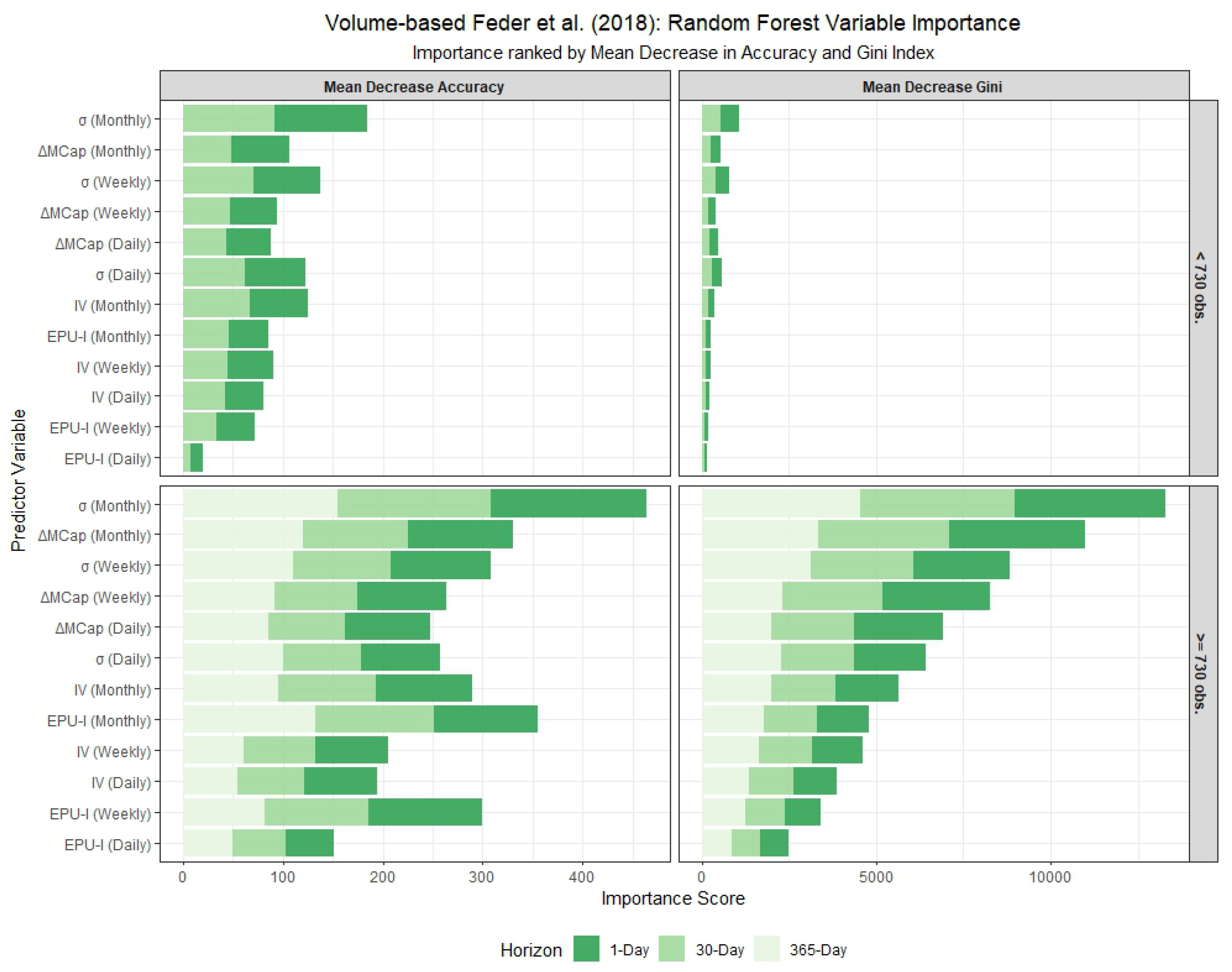

The in-sample results are visualized in Figure 3, Figure 4, Figure 5, Figure 6, Figure 7 and Figure 8. Specifically, Figure 3 and Figure 4 show the panel model coefficient estimates for the $0.80 price threshold approach, segmented by stablecoin lifespan (≥730 and <730 observations). The corresponding random forest variable importance measures are displayed in Figure 5. Similar visual summaries for the volume-based approach of Feder et al. [5] are provided in Figure 6, Figure 7 and Figure 8. In the variable importance charts, results are broken down by lifespan and metric, with variables ranked and forecast horizons distinguished by color.

Detailed tabular results are available in Appendix A. Panel model coefficient estimates are presented in Table A1 and Table A4 for stablecoins with ≥730 observations, and in Table A2 and Table A5 for those with fewer observations. Variable importance measures for the random forest models, covering both lifespan categories, are reported in Table A3 and Table A6. The tables provide coefficient estimates for a suite of panel models, namely logit and cauchit variants with pooled, fixed effects (FE), bias-corrected FE, conditional logit, and random effects (RE) specifications, together with key variable importance metrics (Mean Decrease Accuracy and Mean Decrease Gini) for the 1-day, 30-day, and 365-day forecast horizons where applicable. In the random forest model, Mean Decrease Accuracy (MDA) measures the average reduction in out-of-bag prediction accuracy when a variable is permuted, indicating its contribution to classification performance, while Mean Decrease Gini (MDG) quantifies the total decrease in node impurity (measured by the Gini index) across all splits involving the variable, reflecting its importance in tree construction.
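The logit and cauchit variants differ only in the link function: the logit uses the logistic CDF, while the cauchit uses the standard Cauchy CDF, P(y = 1 | x) = 1/2 + arctan(x′β)/π, whose heavier tails make fitted probabilities approach 0 or 1 more slowly for extreme regressor values. The sketch below fits both links by maximum likelihood with SciPy and builds fixed effects as coin dummies. It is an illustrative pooled/dummy-variable estimator only, not the bias-corrected FE, conditional logit, or RE estimators listed above, and the simulated data are hypothetical.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import expit


def logit_cdf(z):
    return expit(z)  # logistic CDF


def cauchit_cdf(z):
    return 0.5 + np.arctan(z) / np.pi  # standard Cauchy CDF


def fit_binary(X, y, cdf, eps=1e-12):
    """Maximum-likelihood estimates of beta in P(y = 1 | x) = cdf(x' beta)."""
    def neg_loglik(beta):
        p = np.clip(cdf(X @ beta), eps, 1.0 - eps)
        return -np.sum(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))

    res = minimize(neg_loglik, np.zeros(X.shape[1]), method="BFGS")
    return res.x, -res.fun  # coefficients, maximized log-likelihood


def coin_dummies(coin_ids):
    """One indicator column per coin (fixed effects replace the common intercept)."""
    levels, codes = np.unique(np.asarray(coin_ids), return_inverse=True)
    D = np.zeros((len(codes), len(levels)))
    D[np.arange(len(codes)), codes] = 1.0
    return D


# Hypothetical simulated data: intercept -1, slope 2, cauchit link.
rng = np.random.default_rng(42)
n = 5000
x = rng.normal(size=n)
X = np.column_stack([np.ones(n), x])
y = (rng.uniform(size=n) < cauchit_cdf(X @ np.array([-1.0, 2.0]))).astype(float)

beta_cauchit, ll_cauchit = fit_binary(X, y, cauchit_cdf)
beta_logit, ll_logit = fit_binary(X, y, logit_cdf)
```

A FE design matrix would be `np.hstack([coin_dummies(ids), regressors])`. Estimating one dummy per coin is subject to the incidental-parameter bias of nonlinear FE models, which bias-corrected FE and conditional logit estimators are designed to address; moreover, a coin that never dies has no within-coin variation, so its dummy coefficient diverges.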

4.2.1. Differences Between the $0.80 Price Threshold and the Feder Method

For stablecoins with fewer than 730 observations, the panel models under the $0.80 price threshold method (Table A2) reveal a mix of significant and insignificant regressors with varying signs and magnitudes. The monthly market capitalization change and the stablecoin volatilities are statistically significant in most models at the 1-day horizon, with strong effects: generally negative for the market capitalization change (i.e., an increase in market capitalization reduces the PD) and positive for the volatilities (i.e., higher volatility signals increased credit risk). Bitcoin volatility and the monthly Economic Policy Uncertainty Index also have significant negative effects, suggesting that higher Bitcoin volatility and economic uncertainty are associated with a lower probability of stablecoin failure. In contrast, for stablecoins with 730 or more observations (Table A1), the results are more consistent across models and horizons. For instance, the market capitalization change and volatility terms remain highly significant, with mostly negative and positive signs, respectively, and the Bitcoin volatility terms are uniformly negative and significant. This suggests that longer data histories enhance the stability and reliability of coefficient estimates, likely owing to reduced noise and greater statistical power.

Under the volume-based criterion of Feder et al. [5], differences between the two stablecoin groups are similarly pronounced. For coins with fewer than 730 observations (Table A5), the 1-day horizon shows fewer significant regressors, with

consistently negative and

positive, while

is significant only in the pooled logit. For coins with 730 or more observations (

Table A4), the 1-day horizon highlights

(positive sign) and

(negative sign) as key drivers, with more regressors achieving significance across models (e.g., particularly

with negative effects). The 365-day horizon further amplifies these effects, with

showing large negative coefficients. The increased significance and magnitude for coins with longer histories suggest that the volume-based Feder criterion benefits from extended data, capturing prolonged low-volume periods more effectively than the price threshold method, which reacts to immediate price drops.

In summary, the results differ notably depending on the method used to define stablecoin failure. For the $0.80 price threshold, variables such as the lagged monthly market capitalization change and the monthly volatility are consistently significant across most panel models, particularly for stablecoins with ≥730 observations. In contrast, the Feder method places greater emphasis on volatility measures, which show strong predictive power. The Feder method also highlights the importance of economic policy uncertainty, which is less pronounced in the price threshold analysis. This suggests that the Feder method, which incorporates trading volume, may capture broader market dynamics and systemic risks, whereas the price threshold focuses more on direct price declines.

4.2.2. Panel Models vs. Random Forest Models

Comparing panel models with random forest models reveals distinct interpretive strengths. Panel models (Table A1, Table A2, Table A4 and Table A5) provide coefficient estimates with statistical significance, allowing directional inference. For example, under the $0.80 threshold, stablecoin volatility has a mainly positive effect, implying that higher volatility increases the failure probability. Random forest models (Table A3 and Table A6), by contrast, rank variables by Mean Decrease Accuracy (MDA) and Mean Decrease Gini (MDG), which carry no directional information. For stablecoins with fewer than 730 observations (Table A3), the stablecoin monthly volatility tops the 1-day horizon for both criteria, followed by

and

, aligning with panel model significance but offering a non-parametric perspective.
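The distinction between MDA and MDG can be illustrated with scikit-learn, used here only as a stand-in; the random forest implementation behind the tables may differ. Two caveats apply: `feature_importances_` is scikit-learn’s impurity-based (Gini) importance, normalized to sum to one, and `permutation_importance` permutes features on a held-out set rather than on out-of-bag samples, so it only approximates the MDA defined above. The data and column roles are hypothetical.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance

# Hypothetical data: column 0 mimics a volatility regressor (raises risk),
# column 1 a market-cap change (lowers risk), column 2 is pure noise.
rng = np.random.default_rng(0)
n = 2000
X = rng.normal(size=(n, 3))
logits = 2.0 * X[:, 0] - 1.0 * X[:, 1]
y = (rng.uniform(size=n) < 1.0 / (1.0 + np.exp(-logits))).astype(int)

X_train, y_train, X_test, y_test = X[:1500], y[:1500], X[1500:], y[1500:]
rf = RandomForestClassifier(n_estimators=200, random_state=0).fit(X_train, y_train)

mdg = rf.feature_importances_  # impurity-based (Gini) importance, sums to 1
mda = permutation_importance(
    rf, X_test, y_test, scoring="accuracy", n_repeats=10, random_state=0
).importances_mean  # mean drop in accuracy when each column is shuffled

for name, a, g in zip(["vol", "d_mcap", "noise"], mda, mdg):
    print(f"{name:7s} MDA={a:.3f} MDG={g:.3f}")
```

Both scores are unsigned: they indicate that the market-cap column matters, but not that its effect is negative, which is why the panel coefficients are needed for directional inference.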

Therefore, panel models and random forest approaches offer complementary insights. Panel models provide interpretable coefficients, revealing that lagged volatility and market capitalization changes are critical predictors. In contrast, random forest models rank variables by their contribution to predictive accuracy and Gini impurity reduction, with the stablecoin monthly volatility consistently ranking highest. Thus, while panel models identify specific directional effects, random forest models can also accommodate non-linear relationships and interactions, particularly for volatility and other market-derived variables.

4.2.3. Differences Across Forecast Horizons and Stablecoin Lifespans

Differences across forecast horizons are evident in both model types. For the $0.80 threshold with 730 or more observations (Table A1), the 1-day horizon shows strong effects from

(mainly positive) and

(mainly negative), which persist but weaken in magnitude at 30 days and diminish further at 365 days. This attenuation suggests that short-term volatility and market dynamics are more predictive of immediate failure, while their effects are diluted at longer horizons. For fewer than 730 observations (Table A2), the 30-day horizon shows fewer significant terms than the 1-day horizon, indicating limited predictive power with shorter data spans. Random forest results (Table A3) mirror this, with MDA values for

slightly decreasing from the 1-day to the 30-day horizon for stablecoins with fewer observations but remaining stable for those with longer histories, suggesting robustness across horizons when data is sufficient.

Under the Feder criterion, horizon effects differ. For 730 or more observations (Table A4), the 365-day horizon yields more significant terms than the 1-day horizon, reflecting the criterion’s sensitivity to prolonged low volume. For fewer observations (Table A5), the 30-day horizon shows reduced significance, likely due to data constraints. Random forest results (Table A6) confirm

as the top predictor across all horizons, with

and

gaining importance at longer horizons, highlighting their role in sustained volume-based failure.

Overall, the forecast horizon strongly influences the results. For 1-day-ahead forecasts, daily and weekly variables play a more prominent role, while longer horizons (30-day and 365-day) emphasize monthly variables. The random forest results align with this pattern, showing higher importance scores for monthly variables at longer horizons. This indicates that short-term failures are driven by recent market movements, while long-term failures are influenced by sustained trends in volatility and market capitalization, as well as by macroeconomic and systemic factors. Moreover, the differences across horizons underscore the short-term sensitivity of the $0.80 threshold and the longer-term relevance of the Feder criterion, informing their suitability for different monitoring contexts.

Finally, stablecoins with 730 or more observations yield more robust and significant results across both forecasting models and definitions of stablecoin death, benefiting from longer data histories. These stablecoins exhibit more stable and statistically significant coefficients, particularly for monthly variables such as the monthly market capitalization change and the monthly stablecoin volatility. For these variables, the panel models consistently show strong negative coefficients for the market capitalization change, indicating that declines in market capitalization are a robust predictor of failure, and positive coefficients for volatility, suggesting that increases in stablecoin volatility are a reliable signal of impending failure. In contrast, stablecoins with fewer than 730 observations display more erratic results, with fewer significant coefficients and larger standard errors, likely due to limited data availability. The random forest results corroborate this evidence, showing higher variable importance scores for these two variables in the larger dataset, further underscoring their reliability as predictors for more established stablecoins.

4.2.4. Economic Interpretation of In-Sample Drivers of Stablecoin Default

The in-sample results, consistently across model specifications, forecast horizons, sample lengths, and death definitions, reveal a coherent economic narrative linking market stability, investor confidence, and systemic trust mechanisms within the stablecoin ecosystem. Beyond statistical relationships, the estimated coefficients highlight how macro-financial and crypto-specific forces jointly determine the credibility and resilience of individual stablecoins.

First, the positive and highly significant effect of stablecoin volatility on the probability of default underscores the central role of price stability as a coordination device. In theoretical terms, lower volatility reduces uncertainty about redemption value and reinforces expectations of convertibility, which in turn strengthens the self-fulfilling confidence loop sustaining the peg. This finding is consistent with models of monetary trust and coordination equilibria, where small deviations from parity are tolerated, but increasing fluctuations erode collective confidence and trigger runs or liquidity withdrawals. Across model types, this effect remains the most robust determinant of survival, particularly at shorter forecast horizons, indicating that day-to-day price discipline is essential for maintaining the perception of “moneyness” [3,41]. For example, significant volatility in an algorithmic stablecoin could indicate a breakdown in the arbitrage incentives designed to correct price deviations [3]. For collateralized stablecoins, it may reflect mounting concerns over the quality, liquidity, or transparency of the underlying reserves, raising redemption fears akin to a bank run [2]. High volatility also deters a stablecoin’s use as a medium of exchange or unit of account within the decentralized finance (DeFi) ecosystem, eroding its utility and demand [4]. Consequently, elevated volatility is not merely a statistical feature but a direct symptom of a loss of monetary confidence, the core of what defines a stablecoin’s failure. The TerraUSD collapse illustrates this: amplified fluctuations eroded trust and triggered systemic contagion [18]. Our models quantitatively confirm that periods of high price instability are strong leading indicators of a terminal loss of peg and of the coin’s eventual demise.

Second, larger increases in market capitalization are associated with a lower probability of failure, confirming that market depth and adoption act as stabilizing forces. Expanding capitalization reflects growing transactional use, network effects, and greater distribution of coin holdings, all of which contribute to reducing idiosyncratic liquidity shocks (S&P Global Ratings, 2025). Conversely, a declining market cap reflects net outflows, capital flight, and a collapse in confidence. This can trigger a negative feedback loop: as investors redeem or sell their holdings, the reduced market cap makes the coin more susceptible to large trades and liquidity crises, further increasing its fragility. Therefore, from an economic standpoint, higher capitalization signals stronger user trust and institutional anchoring, mitigating the risk of destabilizing redemptions. Interestingly, this protective effect becomes more pronounced in models estimated on longer time series (over 730 observations), where structural market expansion rather than short-term speculative inflows appears to drive stability. This distinction highlights how the accumulation of user base and transactional liquidity operates as a form of endogenous insurance for the peg (see [42,43]).

Third, a more nuanced finding is the generally negative relationship between Bitcoin’s implied volatility and stablecoin default risk. At first glance, one might expect turmoil in the core crypto asset (Bitcoin) to spill over and destabilize the entire ecosystem, including stablecoins. However, the negative coefficient suggests the opposite: when Bitcoin becomes more volatile, stablecoins appear to become safer in a relative sense. This admits a compelling economic interpretation as a flight-to-quality or flight-to-safety phenomenon within the digital asset space. During periods of extreme uncertainty and price swings in the crypto market, investors seek to de-risk their portfolios: they often divest from volatile assets like Bitcoin and Ethereum and park their capital in stablecoins to preserve value while awaiting a clearer market direction. This surge in demand for stability can temporarily bolster stablecoin prices, increase their trading volumes, and reduce their perceived default risk. This effect positions certain stablecoins as a type of “safe haven” asset within the crypto ecosystem, a role that becomes particularly prominent during internal market crises, even though they remain risky from a traditional finance perspective. This inverse relationship, consistent across almost all our models, underscores that stablecoin stability cannot be analyzed in isolation but must be viewed as embedded within the larger crypto-financial network (see [44,45,46,47,48]).

Finally, the role of the Economic Policy Uncertainty Index (EPU-I) is less dominant than that of the coin-specific factors, and its effect varies. However, when significant, it often carries a negative sign, implying that higher traditional economic uncertainty is associated with lower stablecoin default risk. In periods of heightened policy uncertainty, investors and institutions may increase their use of stablecoins as settlement or collateral instruments that combine the benefits of digital liquidity with perceived U.S. dollar stability. This effect is consistent with the safe-haven hypothesis observed for other dollar-denominated instruments: when traditional financial markets become riskier or regulatory uncertainty rises, stablecoins (particularly asset-backed designs) gain attractiveness as alternative transactional media. Conversely, during tranquil periods of low EPU, demand for stablecoins as a hedging or reserve instrument diminishes, potentially amplifying idiosyncratic fragility. This finding highlights the hybrid nature of stablecoins as both crypto assets and synthetic money-like instruments whose demand is countercyclical to global uncertainty (see [49,50,51,52]). However, the varying significance across models indicates that, while EPU-I plays a role, coin-specific factors dominate, consistent with S&P’s emphasis on internal reserve quality over external shocks [12].

When interpreted jointly, these results reveal a unified mechanism: stablecoin survival depends on the interaction between micro-level stability signals (volatility and capitalization) and macro-level credibility anchors (crypto and policy uncertainty). The consistency of these relationships across model types, forecast horizons, and definitions of failure suggests that the identified determinants reflect fundamental economic forces rather than model-specific artifacts. At a conceptual level, the evidence supports the view that stablecoins operate as endogenous confidence assets, sustained by liquidity, predictability, and systemic calm, whose failure dynamics mirror those of traditional monetary systems when the equilibrium of trust collapses. For investors, these insights support monitoring capitalization and volatility as early warning indicators, while regulators can use them to mitigate the systemic risks highlighted by the Financial Stability Oversight Council (2024) [1]. Future research could extend this analysis by incorporating real-time reserve data to refine these interpretations.

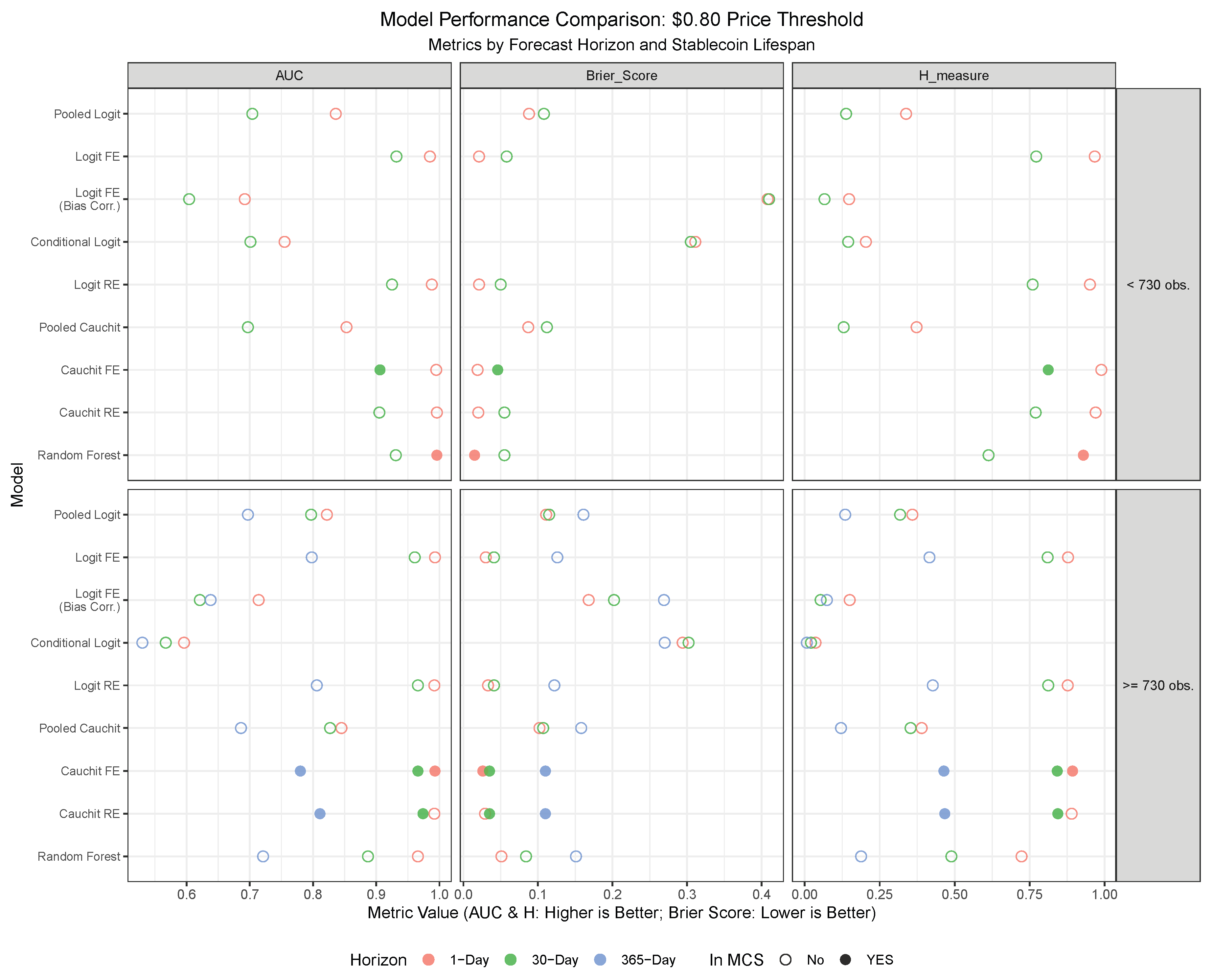

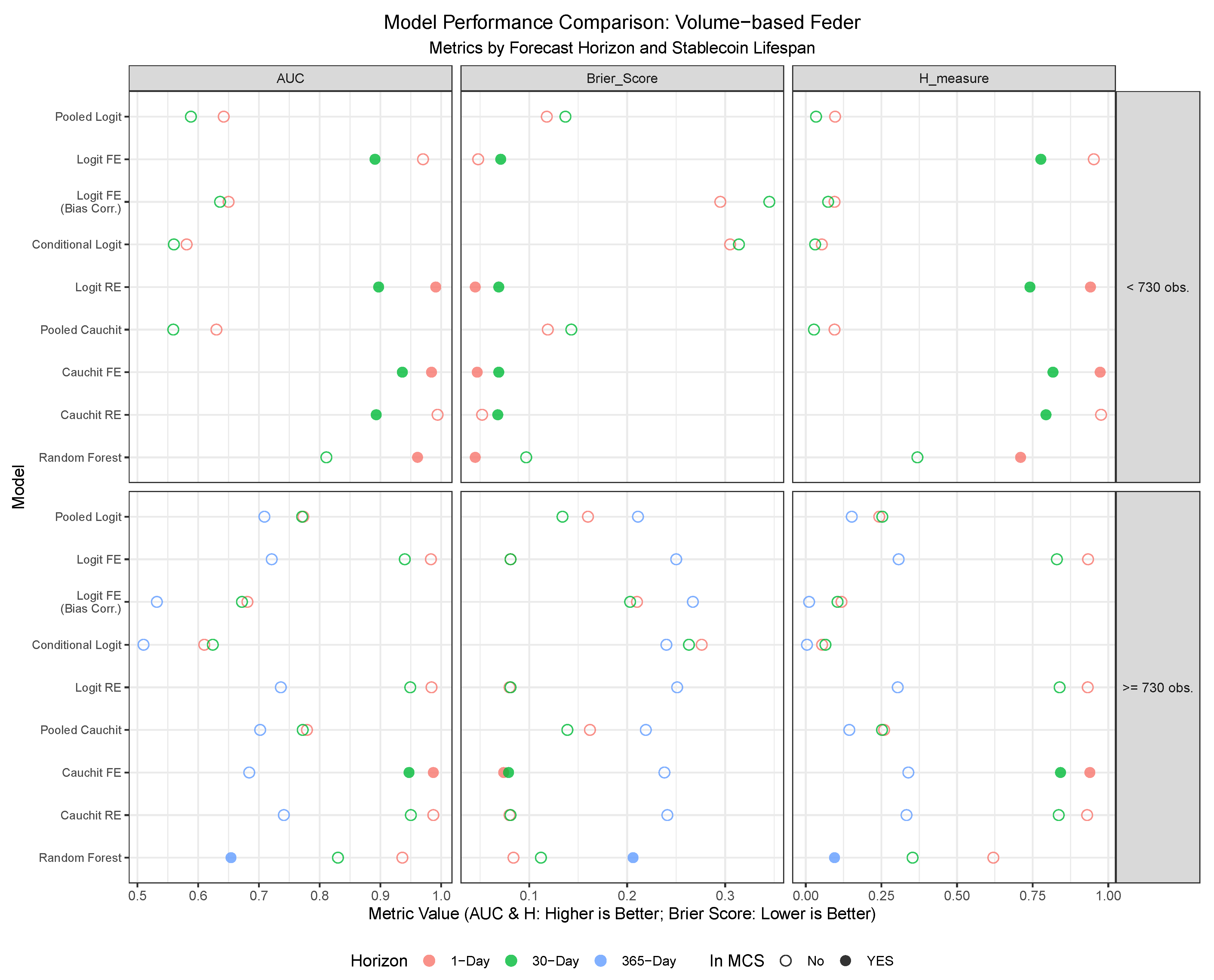

4.3. Out-of-Sample Analysis

We assess the forecasting performance of our eight panel models and the random forest model in detecting stablecoin failures, using both the $0.80 price threshold and the volume-based criterion of [5], as outlined in Section 3.1.

The initial estimation sample for the panel models and the Random Forest model spanned February 2021 to February 2022 for coins with fewer than 730 observations and January 2019 to January 2021 for coins with 730 or more observations. In essence, all stablecoin data were pooled up to a given time point t, and the panel models and the Random Forest model were estimated on this dataset to compute the out-of-sample probabilities of death. The estimation window was then extended by one day, and the process was repeated. For both the panel models and the Random Forest model, direct forecasts were generated by estimating the models separately for each forecast horizon, using regressors lagged by the length of that horizon (e.g., 1-day lagged regressors for 1-day-ahead probability of death predictions, and so forth).
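The expanding-window scheme can be sketched generically, with the estimator passed in as a pair of functions so that any of the models could be plugged in. Assumptions: dates are encoded as consecutive integer day indices; `design` is a direct-forecast frame whose rows pair the target on date t with regressors lagged by h days, so the forecast row for date origin + h uses regressors dated at the origin; and `fit`/`predict` are hypothetical callables.

```python
import numpy as np
import pandas as pd


def expanding_window_forecasts(design, features, fit, predict, first_origin, h=1,
                               target="dead", date="date"):
    """Re-estimate on all rows with target date <= origin, forecast target date origin + h.

    `design` must already hold regressors lagged by h days on each target row,
    so the forecast rows only use information available at the origin.
    """
    results = []
    last_date = design[date].max()
    for origin in range(first_origin, last_date - h + 1):
        train = design[design[date] <= origin]
        test = design[design[date] == origin + h]
        # Skip origins with nothing to forecast or with only one outcome class.
        if test.empty or train[target].nunique() < 2:
            continue
        model = fit(train[features].to_numpy(), train[target].to_numpy())
        probs = predict(model, test[features].to_numpy())
        results.append(pd.DataFrame({"origin": origin, date: test[date].to_numpy(),
                                     "pd": probs, target: test[target].to_numpy()}))
    return pd.concat(results, ignore_index=True) if results else pd.DataFrame()


# Usage with a trivial baseline that forecasts the historical share of dead days.
design = pd.DataFrame({"date": [1, 2, 3, 4, 5],
                       "x": [0.1, 0.2, 0.3, 0.4, 0.5],
                       "dead": [0, 1, 0, 1, 1]})
out = expanding_window_forecasts(
    design, ["x"],
    fit=lambda X, y: y.mean(),
    predict=lambda m, X: np.full(len(X), m),
    first_origin=2, h=1,
)
```

Replacing the baseline with a real estimator and calling the function once per horizon gives one sequence of out-of-sample PDs per model and horizon; refitting at every origin is what makes the procedure computationally demanding.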

The out-of-sample performance of the nine models, tested under the two competing definitions of stablecoin death, is visualized in Figure 9 and Figure 10. The plots evaluate performance using the AUC, H-measure, and Brier Score, segmenting the results by stablecoin lifespan and forecasting horizon. Models included in the Model Confidence Set (MCS), which uses the Brier Score as the loss function at a 10% significance level, are highlighted with a solid point to denote them as statistically superior forecasting models. Detailed forecasting results are presented in tabular format in Appendix A. Table A7 and Table A8 provide a comprehensive breakdown of performance, evaluated separately by stablecoin lifespan (<730 observations and ≥730 observations) and forecast horizon (1-day, 30-day, and 365-day, with the 365-day horizon applied only to stablecoins with longer histories). The tables include the aforementioned performance metrics (AUC, H-measure, Brier Score) along with standard classification metrics (Accuracy, Sensitivity, and Specificity) derived using two alternative thresholds (50% and the empirical prevalence). Finally, the tables explicitly report each model’s inclusion in the Model Confidence Set.
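Several of these metrics are straightforward to compute. The sketch below implements the AUC (through its Mann-Whitney rank formulation), the Brier Score, and accuracy, sensitivity, and specificity at a chosen cut-off, applied both at 50% and at the empirical prevalence of dead days. Taking the prevalence from the evaluated outcomes is an assumption of this sketch (it could equally be taken from the estimation sample), and the H-measure and the Model Confidence Set, which require more involved procedures, are not reproduced.

```python
import numpy as np
from scipy.stats import rankdata


def auc(y, p):
    """Probability that a random dead day receives a higher PD than a random alive day."""
    y, p = np.asarray(y), np.asarray(p, dtype=float)
    ranks = rankdata(p)  # average ranks, so ties count as one half
    n_pos = y.sum()
    n_neg = len(y) - n_pos
    return (ranks[y == 1].sum() - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


def brier(y, p):
    """Mean squared difference between forecast PD and the 0/1 outcome (lower is better)."""
    return float(np.mean((np.asarray(p, dtype=float) - np.asarray(y)) ** 2))


def classification_metrics(y, p, threshold):
    """Accuracy, sensitivity (dead days caught) and specificity (alive days cleared)."""
    y = np.asarray(y).astype(bool)
    pred = np.asarray(p, dtype=float) >= threshold
    tp = np.sum(pred & y)
    tn = np.sum(~pred & ~y)
    return {"accuracy": (tp + tn) / len(y),
            "sensitivity": tp / y.sum(),
            "specificity": tn / (~y).sum()}


y = [0, 0, 0, 1]
p = [0.10, 0.40, 0.20, 0.35]
print(auc(y, p), brier(y, p))
for cut in (0.5, float(np.mean(y))):  # 50% and empirical-prevalence cut-offs
    print(cut, classification_metrics(y, p, cut))
```

The toy example shows why the prevalence cut-off matters for rare events: at 50% no dead day is flagged (sensitivity 0), while the 25% prevalence cut-off catches it at the cost of one false alarm.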

Among the evaluated forecasting models, the panel Cauchit model with fixed effects consistently emerges as the top performer across multiple evaluation criteria, forecast horizons, and stablecoin classifications. This model demonstrates superior predictive power, as evidenced by its frequent inclusion in the Model Confidence Set (MCS), its consistently high AUC and H-measure values, and its low Brier Scores under both the $0.80 price threshold and the volume-based Feder approach. Particularly noteworthy is its robustness at shorter forecast horizons, where it achieves near-optimal sensitivity and specificity, making it highly reliable for real-time failure detection. For stablecoins with fewer than 730 observations, the panel Cauchit FE model excels in navigating volatility and limited data availability, while for longer-lived stablecoins, it leverages historical patterns to maintain strong performance. While other models, such as Random Forest and Logit RE, also perform well in specific contexts—particularly for short-term forecasts of stablecoins with fewer than 730 observations—their results are less consistent compared to the panel Cauchit FE model. In contrast, the bias-corrected logit model with fixed effects and the Conditional Logit model consistently underperform across both classification criteria, likely due to overfitting or misspecification in sparse data settings.

Based on these findings, the Cauchit FE model clearly emerges as the preferred choice for predicting stablecoin failures, particularly when aiming for a balance between accuracy, adaptability, and robustness across varying conditions.

In terms of differences among classification criteria, models under the $0.80 price threshold (Table A7) generally exhibit higher predictive power than under the Feder criterion (Table A8), as evidenced by higher AUC and H-measure values across most horizons and lifespans. By contrast, the volume-based Feder approach incorporates trading volume dynamics, introducing additional complexity and noise into the classification process. Consequently, models evaluated under this criterion exhibit lower sensitivity and specificity, particularly at shorter forecast horizons, reflecting the inherent difficulty of capturing failure events based on volume fluctuations.

The distinction between stablecoins with fewer than 730 observations and those with 730 or more observations also plays a significant role in shaping the results. Under the $0.80 threshold, stablecoins with fewer than 730 observations show excellent performance in short-term forecasts, particularly at the 1-day-ahead horizon. For stablecoins with 730 or more observations, performance remains strong, but sensitivity is generally lower, suggesting that longer histories dilute the ability to detect rare failure events amidst more stable periods. The Feder criterion generally mirrors this trend.

Across forecast horizons, predictive performance declines as the horizon extends, more markedly under the Feder criterion. This suggests that the $0.80 threshold retains more predictive power over longer horizons due to its focus on price signals, whereas the Feder criterion struggles as volume trends become less informative over time. Notably, models such as Logit RE and Cauchit RE maintain relatively strong performance across horizons for stablecoins with longer lifespans, underscoring their adaptability to varying forecasting requirements, even though they are considerably more difficult to estimate and computationally demanding.

In summary, we recommend the panel Cauchit FE model for predicting stablecoin failures when a balance between accuracy, adaptability, and robustness is required. For applications where interpretability is less critical, the Random Forest model offers a competitive alternative, especially for short-term forecasts. The $0.80 price threshold method appears to outperform the Feder criterion in out-of-sample forecasting, particularly for short-term horizons and stablecoins with shorter lifespans, owing to its sensitivity to immediate price deviations. Stablecoins with fewer than 730 observations benefit from higher precision in short-term forecasts, while those with 730 or more observations show robust but less sensitive predictions. Forecasting performance degrades across horizons, more so under the Feder approach, where long-term volume signals weaken.