1. Introduction

Inflation, the persistent rise in the price of goods and services, is a critical economic phenomenon that affects the financial stability and decision-making processes of businesses, governments and individuals. Inflation forecasts play a key role in addressing these economic challenges by providing insights into future price movements and thereby enabling informed financial planning (BIS, 2021) [1]. Governments rely on inflation forecasts when designing monetary and fiscal policies to stabilize the economy and achieve price stability. Inflation expectations, which reflect the expected rate of future price increases, play a crucial role in shaping actual inflation outcomes. These expectations influence the consumption and investment decisions of households and firms, as well as wage negotiations and pricing strategies (Binder & Kamdar, 2022) [2]. Forecasting inflation involves a variety of methods, ranging from statistical models to simpler survey-based approaches. Inflation forecasts are essential for understanding the complex processes of economic decision-making. By providing insights into future price movements, these forecasts allow economic agents to make informed decisions to mitigate risks and take advantage of opportunities (ECB, 2017) [3] and (ECB, 2021) [4].

In our study, we use the Harmonised Index of Consumer Prices (HICP) and its components used by Eurostat to track price changes. The HICP index is calculated as the weighted average of the price changes of a basket of goods and services consumed by households. Accurate inflation forecasting is important from the perspective of monetary authorities, as it allows them to prepare for intervention in a forward-looking manner. Timely and appropriate intervention reduces the costs of disinflation and does not unnecessarily restrain economic growth. According to modern economic thinking, maintaining price stability is the task of the central bank, but government economic policy also has a strong impact on price changes. Governments not only influence the general level of prices through fiscal stimulus but can also intervene in the development of certain components of inflation through price regulation and support systems. We therefore consider it important to forecast individual inflation components accurately in order to provide a clearer picture of the future for any fiscal interventions. Governments may find it urgent to quickly halt or temporarily slow down price increases in certain product groups, as price increases in certain product groups have an immediate and strong impact on the social situation and well-being of households. The most accurate possible forecasting of inflation and its individual components plays an important role in the planning of interventions.

In our research, we used monthly HICP data for the twelve main subcomponents (Food and non-alcoholic beverages; Alcoholic beverages and tobacco; Clothing and footwear; Housing, water, electricity, gas and other fuels; Furnishings, household equipment and routine house maintenance; Health; Transport; Communication; Recreation and culture; Education; Restaurants and hotels; Miscellaneous goods and services) for the period January 2000 (base period) to December 2023. Inflation data were collected from Eurostat. This period was chosen because it includes a calm (control) period (2016), the subprime crisis (2008) and the Russian–Ukrainian conflict (2022). Our aim is to examine which method forecasts the components of the HICP better. The period under study contains several crises with different triggers. We assess whether the patterns learned by the forecasting models during the earlier crisis can be used to produce accurate forecasts in the later one. In doing so, we help to refine the modelling of inflation forecasts and highlight the importance of focusing on specific components of inflation.

Our study is an attempt to determine whether inflation trends can be predicted using “technical” forecasting methods. We do not take a position on which economic phenomena are fundamentally linked to inflation trends, a question on which schools of economic thought make differing assumptions. We assume that machine learning and deep learning methods are capable of extracting information about the future from the inflation series themselves. In this respect, we adopt the approach of technical analysts in financial markets, according to which the data series under study already concentrate the information content of the factors that shape them.

1.1. Novelty and Significance of the Study

The novelty of this study lies primarily in its focus not only on the aggregate HICP index but also on its subcomponents. This approach makes it possible to uncover the heterogeneous inflation dynamics across different consumption categories and to highlight substantial differences in their forecastability. While most previous research has concentrated on headline inflation, much less attention has been paid to the heterogeneity of subcomponents and their varying sensitivity to macroeconomic shocks. Another innovative aspect is that the analysis spans three distinct economic periods (2009—post-financial crisis, 2016—stable period, and 2023—pandemic and geopolitical tensions), allowing us to test the temporal robustness of forecasting models. Methodologically, we combine classical machine learning algorithms with deep learning architectures, placing particular emphasis on the role of activation functions. Furthermore, we introduce the use of the Forecastability Index (FI) and the Interquartile Range (IQR) metric, which enable us to assess not only accuracy but also the stability of predictions across models. Together, these measures allow us to rank the forecastability of HICP subcomponents, a perspective that has so far received limited attention in the literature. Our findings therefore contribute to the macroeconomic policy debate by identifying which consumption sectors can be forecast more reliably and where greater uncertainty persists.

1.2. Interpretation of HICP and Subcategories

The HICP has been published since 1997, and its measurement methodology has been revised several times. The current legal framework, Regulation (EU) 2016/792 of the European Parliament and of the Council on the HICP [5], has been in force since 2016 and clarified the rules for producing the index. The HICP measures price changes for the goods and services that fall within the scope of household final monetary consumption expenditure (EUROSTAT, 2024) [6].

Prices of goods and services are classified according to the Classification of Individual Consumption by Purpose (COICOP). COICOP currently consists of 14 main categories (major groups). The first twelve categories cover the individual consumption expenditure of households, while the last two cover social transfers in kind provided by non-profit institutions serving households and by general government. In our study, we refer to this level of aggregation as the subcategory level.

1.3. HICP Prediction and the Use of Machine Learning Models in the Literature

The literature offers a range of methods for predicting the HICP; selected examples are given below. In recent years, inflation forecasting has drawn on methodologies ranging from traditional approaches (e.g., ARIMA, VAR) to machine learning solutions (e.g., random forest, XGBoost). Combining different models, for example through performance-based weighting (Hubrich and Skudelny, 2017) [7], can offer advantages in times of crisis by integrating the strengths of multiple techniques. Meanwhile, simpler methods such as ARIMA handle short-term fluctuations effectively, while structural VAR models capture macroeconomic relationships more accurately. The European Central Bank (ECB, 2016) [8] emphasizes that core inflation (i.e., excluding energy and food) tends to be less volatile but responds to inflation trends with a delay.

A study by Stakėnas (2018) [9] on Lithuanian HICP components points out that forecasting sub-indices (e.g., food, energy) individually and then aggregating them can improve accuracy, although this disaggregated approach limits the ability to model interactions among components. Earlier work by Stock and Watson (1996) [10] also suggests that modelling each price category separately can reduce overall forecast errors; however, the ECB (2016) [8] cautions that individual component errors may align simultaneously, thereby diminishing the benefits of disaggregation.

Neural networks have increasingly come to the fore in inflation forecasting. Yang and Guo (2021) [11] used a GRU-RNN architecture on CPI indicators in China, finding that the model effectively captures nonlinear relationships and processes complex long-term time series data, outperforming traditional frameworks in forecast accuracy. Similarly, Astrakhantseva et al. (2021) [12], examining regional inflation in Russia, showed that recurrent networks are adept at modelling nonlinear dynamics and support localized forecasting efforts. Sengupta et al. (2024) [13] proposed the Filtered Ensemble Wavelet Neural Network (FEWNet) to forecast CPI in the BRIC countries (Brazil, Russia, India, and China), integrating Economic Policy Uncertainty (EPU) and Geopolitical Risk (GPR). Their wavelet-based model significantly outperformed standard methods, including ARIMA and XGBoost.

Aras and Lisboa (2022) [14] investigated both core and headline inflation in Turkey using tree-based machine learning algorithms, observing that random forest consistently yielded the best results. These authors highlight the importance of variable selection in enhancing performance. In turn, Rodríguez-Vargas (2020) [15], studying inflation forecasts for Costa Rica, found that LSTM models offered the most stable outcomes over multiple forecast horizons, and also concluded that multivariate methods systematically outperformed univariate ones.

Joseph et al. (2024) [16], who employed a bottom-up approach to forecast UK inflation, compared traditional autoregressive (AR) models with methods such as Ridge regression, LASSO, and Elastic Net. They noted that AR models are often difficult to surpass, although shrinkage techniques can prevail when inflation trends shift. Adding macroeconomic variables did not result in markedly improved accuracy, whereas disaggregating CPI data led to better forecasts. Similarly, Paranhos (2021) [17] demonstrated that LSTM networks outperform both autoregressive and UC-SV models over longer forecast horizons in U.S. data, with the ability to incorporate long-term dependencies being particularly valuable during periods of heightened economic uncertainty (e.g., the Great Recession).

Further evidence for the effectiveness of machine learning in inflation forecasting can be found in Naghi et al. (2024) [18], who tested Random Forest, Gradient Boosting, and neural networks using OECD and Eurostat data enriched with various macroeconomic indicators. These approaches outperformed classical structural models, especially in volatile contexts. Stoneman and Duca (2024) [19] applied LSTM and CNN architectures to U.S. inflation amid the COVID-19 environment, concluding that deep learning outperforms traditional (S)ARIMA by better capturing nonlinearities and rapidly shifting inflation patterns. Barkan et al. (2023) [20] introduced a hierarchical RNN structure for disaggregated U.S. CPI components, achieving significant performance gains by leveraging information across higher-level indices.

In a hybrid context, Theoharidis et al. (2023) [21] combined ConvLSTM networks with variational autoencoders (VAEs) to refine U.S. inflation forecasts, reporting noteworthy accuracy gains over benchmark models. Marcellino (2008) [22] and McAdam and McNelis (2005) [23] explored “thick” models for inflation, which aggregate linear and neural network-based forecasts, and showed that these can match or even exceed the best linear approaches under certain conditions. Šestanović (2019) [24], using Jordan Neural Networks, illustrated that euro-area inflation could be accurately predicted over a two-year horizon, in line with the Survey of Professional Forecasters. Meanwhile, Li et al. (2023) [25] tested a genetically optimized XGBoost model for Taiwan’s inflation, which outperformed not only conventional machine learning but also deep learning models. Similar patterns emerge in Araujo et al. (2023) [26] for Brazil and in Mirza et al. (2024) [27] for Pakistan, where random forest and gradient boosting excelled under high-volatility scenarios. Additionally, Alomani et al. (2025) [28] compared ARIMA to a gradient boosting regressor (GBR) on World Bank data, highlighting GBR’s superior short-term performance, although ARIMA remained more reliable over longer horizons.

Taken together, these studies indicate that neural network approaches (especially GRU and Jordan Neural Networks) are promising for predicting the HICP and other inflation indicators, thanks to their capacity to handle nonlinearities and long-term temporal patterns. Yet the extent to which they outperform traditional statistical and econometric methods varies across settings, which points to the need for continued methodological refinement and careful variable selection. With such refinements, researchers can improve the accuracy of inflation forecasts, whether at the aggregate level or broken down into specific subcomponents.

2. Materials and Methods

2.1. Applied Methods

2.1.1. Simple Recurrent Neural Network (RNN)

An RNN is a neural network designed to process sequential data, such as time series or other ordered information. Unlike traditional feedforward networks, an RNN uses feedback connections, so the hidden state of the previous time step is taken into account when computing the next one. This enables the RNN to recognize and learn temporal relationships. The hidden states are updated using a nonlinear activation function (e.g., tanh or ReLU), and the resulting states are passed on to the next time step. RNNs are useful in tasks such as speech recognition, language modelling and time series analysis. However, the architecture has serious drawbacks. The most important is the vanishing gradient problem, which makes it difficult to learn long-term relationships: as errors are propagated back through many time steps, the gradients shrink towards zero, so the network cannot efficiently update the weights that link earlier steps to the current output. The opposite problem, exploding gradients, occurs when the gradient values grow too large, which can make learning unstable. Because of these problems, simple RNNs are rarely used for long sequences, and more advanced architectures such as LSTM or GRU are used instead (Bai et al., 2021) [29]. The basic structure of an RNN can be represented by the following equations:

$$S_t = f\left(U x_t + W S_{t-1} + b_h\right)$$

$$y_t = f\left(V S_t + b_o\right)$$

where $x_t$ represents the inputs at time $t$, $S_t$ the outputs of the hidden layer, $y_t$ the output of the output layer, $f(\cdot)$ the activation function, and $b_h$ and $b_o$ the bias vectors of the hidden and output layers, respectively. The weight matrices are $W$, the recurrent weights of the hidden layer; $U$, the weights applied to the inputs at the current time step; and $V$, the weights of the output layer.
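To make the recurrence concrete, the following NumPy sketch unrolls a simple RNN over a short sequence. It assumes the hidden-state update S_t = f(U x_t + W S_(t-1) + b_h) with f = tanh and an output layer y_t = g(V S_t + b_o). The output activation g is an assumption: it defaults to the identity, as is common for regression targets such as inflation rates, and passing `g=np.tanh` applies the same activation to both layers. The weights are random and purely illustrative. In practice they are learned by backpropagation through time, for example inside a Keras `SimpleRNN` layer.

```python
import numpy as np


def rnn_forward(x_seq, U, W, V, b_h, b_o, f=np.tanh, g=lambda z: z):
    """Unroll a simple (Elman-type) RNN over a sequence.

    x_seq has shape (T, n_in). Returns the hidden states S_1..S_T with shape
    (T, n_hidden) and the outputs y_1..y_T with shape (T, n_out).
    """
    s_prev = np.zeros(W.shape[0])  # initial hidden state S_0 set to zeros
    states, outputs = [], []
    for x_t in x_seq:
        s_t = f(U @ x_t + W @ s_prev + b_h)  # hidden layer: current input + previous state
        y_t = g(V @ s_t + b_o)               # output layer reads the current hidden state
        states.append(s_t)
        outputs.append(y_t)
        s_prev = s_t                         # feedback connection to the next time step
    return np.array(states), np.array(outputs)


# Illustrative run: 12 monthly values of one series, 4 hidden units, 1 output.
rng = np.random.default_rng(0)
T, n_in, n_h, n_out = 12, 1, 4, 1
x = rng.normal(size=(T, n_in))
U = rng.normal(scale=0.5, size=(n_h, n_in))
W = rng.normal(scale=0.5, size=(n_h, n_h))
V = rng.normal(scale=0.5, size=(n_out, n_h))
states, outputs = rnn_forward(x, U, W, V, b_h=np.zeros(n_h), b_o=np.zeros(n_out))
print(states.shape, outputs.shape)  # (12, 4) (12, 1)
```

Because the same U, W and V are reused at every step, the gradient with respect to early inputs passes through repeated multiplications by W and the activation's derivative. This is where the vanishing and exploding gradient problems described above originate.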

2.1.2. Gated Recurrent Unit (GRU)

The GRU is a simplified variant of the LSTM that was also designed to mitigate the vanishing gradient problem. Because it contains fewer gates than the LSTM, it is faster and requires fewer computational resources. Instead of the three gates of the LSTM, the GRU uses only two: the update gate and the reset gate. The update gate determines how much information from the previous state is retained and how much is replaced on the basis of the new input. The reset gate controls how much of the previous state is forgotten when the candidate state is computed, which allows the network to adapt flexibly to sequences of different lengths. GRU performance can match or even exceed that of the LSTM, especially on smaller data sets. In addition, it learns faster because it has fewer parameters, and it can therefore be more efficient where computational capacity is limited. GRUs are used, for example, in chatbots, machine translation, speech recognition and time series analysis. Because of its simpler structure, the GRU is often a preferable alternative to the LSTM, although for some problems the LSTM may be more accurate (Xiao et al., 2022) [30]. The GRU is governed by the following equations:

$$z_t = \sigma\left(W_z x_t + U_z h_{t-1}\right)$$

$$r_t = \sigma\left(W_r x_t + U_r h_{t-1}\right)$$

$$\tilde{h}_t = \tanh\left(W_h x_t + U_h \left(r_t \odot h_{t-1}\right)\right)$$

$$h_t = \left(1 - z_t\right) \odot h_{t-1} + z_t \odot \tilde{h}_t$$

Here, $h_{t-1}$ represents the hidden state of the neuron at the previous time step, $\odot$ denotes element-wise multiplication, and $\sigma(\cdot)$ denotes the logistic sigmoid function, defined as $\sigma(x) = 1/\left(1 + e^{-x}\right)$. The weight matrices for the update gate $z_t$ are $W_z$ and $U_z$, and those for the reset gate $r_t$ are $W_r$ and $U_r$. The weight matrices for the intermediate output are $W_h$ and $U_h$. The input value at time $t$ is denoted by $x_t$, while the hidden layer output and the temporary (candidate) unit state at time $t$ are represented by $h_t$ and $\tilde{h}_t$, respectively.
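A single GRU update can be sketched in NumPy as follows. The sketch assumes the common formulation without bias terms: update gate z_t = σ(W_z x_t + U_z h_(t-1)), reset gate r_t = σ(W_r x_t + U_r h_(t-1)), candidate state h̃_t = tanh(W_h x_t + U_h (r_t ⊙ h_(t-1))), and new state h_t = (1 − z_t) ⊙ h_(t-1) + z_t ⊙ h̃_t. Conventions differ between sources. Keras, for instance, computes z_t ⊙ h_(t-1) + (1 − z_t) ⊙ h̃_t and adds bias terms, so the parameter names below are illustrative only.

```python
import numpy as np


def sigmoid(z):
    """Logistic sigmoid, sigma(z) = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + np.exp(-z))


def gru_step(x_t, h_prev, p):
    """One GRU update. p maps 'W_z', 'U_z', 'W_r', 'U_r', 'W_h', 'U_h' to weight matrices."""
    z_t = sigmoid(p["W_z"] @ x_t + p["U_z"] @ h_prev)              # update gate
    r_t = sigmoid(p["W_r"] @ x_t + p["U_r"] @ h_prev)              # reset gate
    h_tilde = np.tanh(p["W_h"] @ x_t + p["U_h"] @ (r_t * h_prev))  # candidate state
    return (1.0 - z_t) * h_prev + z_t * h_tilde                    # blend old and candidate state


def init_gru_params(n_in, n_h, rng, scale=0.1):
    """Random illustrative weights (input matrices W_*, recurrent matrices U_*)."""
    p = {}
    for gate in ("z", "r", "h"):
        p[f"W_{gate}"] = rng.normal(scale=scale, size=(n_h, n_in))
        p[f"U_{gate}"] = rng.normal(scale=scale, size=(n_h, n_h))
    return p


rng = np.random.default_rng(0)
params = init_gru_params(n_in=1, n_h=3, rng=rng)
h = np.zeros(3)
for x_t in rng.normal(size=(12, 1)):  # run over 12 illustrative monthly inputs
    h = gru_step(x_t, h, params)
print(h.shape)  # (3,)
```

When the update gate is driven towards zero, the previous state passes through almost unchanged. This is how the GRU keeps information over long spans with only two gates.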

2.1.3. Long Short-Term Memory (LSTM)

LSTM is a more advanced form of RNN, developed specifically for learning long-term relationships. It addresses the vanishing gradient problem of simple RNNs by using a dedicated memory cell, which helps to store and retrieve longer-term information. The LSTM contains three main gates: the input gate, the forget gate and the output gate. The forget gate controls which previous information the cell discards or retains. The input gate decides which new information is written into the memory cell. The output gate determines which information is passed from the cell to the hidden state and thus on to the next time step. The LSTM is therefore able to store important information for a longer period while filtering out less important data. For this reason, it is well suited to tasks such as text and speech processing, machine translation and time series prediction. However, LSTM is more computationally intensive than a simple RNN: it uses more gates and a memory cell and therefore requires more processing power. Nevertheless, it remains one of the most popular recurrent architectures for handling long-term dependencies (Barkan et al., 2023) [20]. The LSTM model is described by the following equations:

$$i_t = \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right)$$

$$f_t = \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right)$$

$$o_t = \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right)$$

$$\tilde{c}_t = \tanh\left(W_c x_t + U_c h_{t-1} + b_c\right)$$

$$c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t$$

$$h_t = o_t \odot \tanh\left(c_t\right)$$

Here, $x_t$ denotes the input batch at time $t$ and $h_{t-1}$ the hidden state from the previous time step. The sigmoid function $\sigma$ controls the activation of the gates, and $\odot$ denotes element-wise multiplication. The weight matrices of the input gate are $W_i$ and $U_i$, with bias term $b_i$. Those of the forget gate are $W_f$ and $U_f$, with bias term $b_f$, and those of the output gate are $W_o$ and $U_o$, with bias term $b_o$. $W_c$ and $U_c$ are the weight matrices of the gated unit that produces the candidate memory cell $\tilde{c}_t$, and $b_c$ is its bias term. $c_t$ is the current cell state and $c_{t-1}$ is the cell state at the previous time step (Dai et al., 2022) [31].
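The LSTM update can likewise be sketched as one NumPy step. The sketch assumes the standard formulation with input, forget and output gates plus bias terms: gates i_t, f_t and o_t, candidate c̃_t, then c_t = f_t ⊙ c_(t-1) + i_t ⊙ c̃_t and h_t = o_t ⊙ tanh(c_t). The parameter-dictionary layout and the random weights are hypothetical. The usage example saturates the forget gate open and the input gate closed through their biases. It shows how the memory cell can carry information unchanged across many time steps, which is the mechanism behind the LSTM's long-term memory.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM update; returns the new hidden state h_t and cell state c_t."""

    def gate(g):
        # pre-activation of gate g from the current input and previous hidden state
        return p[f"W_{g}"] @ x_t + p[f"U_{g}"] @ h_prev + p[f"b_{g}"]

    i_t = sigmoid(gate("i"))      # input gate: how much new information to write
    f_t = sigmoid(gate("f"))      # forget gate: how much of c_{t-1} to keep
    o_t = sigmoid(gate("o"))      # output gate: how much of the cell to expose
    c_tilde = np.tanh(gate("c"))  # candidate memory content
    c_t = f_t * c_prev + i_t * c_tilde
    h_t = o_t * np.tanh(c_t)
    return h_t, c_t


def init_lstm_params(n_in, n_h, rng, scale=0.1):
    p = {}
    for g in ("i", "f", "o", "c"):
        p[f"W_{g}"] = rng.normal(scale=scale, size=(n_h, n_in))
        p[f"U_{g}"] = rng.normal(scale=scale, size=(n_h, n_h))
        p[f"b_{g}"] = np.zeros(n_h)
    return p


rng = np.random.default_rng(1)
params = init_lstm_params(n_in=1, n_h=3, rng=rng)
params["b_f"][:] = 100.0   # forget gate saturated open (f_t ~ 1)
params["b_i"][:] = -100.0  # input gate saturated closed (i_t ~ 0)
h, c = np.zeros(3), np.array([0.5, -1.0, 2.0])
for x_t in rng.normal(size=(50, 1)):
    h, c = lstm_step(x_t, h, c, params)
print(np.allclose(c, [0.5, -1.0, 2.0]))  # True: the cell state survives 50 steps
```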

2.1.4. Support Vector Machine (SVM) Regression

Support Vector Regression (SVR) is a machine learning method based on the principle of the support vector machine (SVM). It seeks an optimal hyperplane describing the relationship between the input variables and the target variable. One of the main advantages of SVR is its flexibility and robustness: using the kernel trick (e.g., polynomial or RBF kernels), it can model nonlinear as well as linear relationships. SVR introduces an ε-insensitive zone, which means that only data points falling outside the given error bound contribute to the loss. This reduces the sensitivity of the model to noisy data and helps to avoid overfitting. Mathematically, SVR is formulated as an optimization problem that minimizes the errors outside the ε-zone while keeping the fitted function as flat as possible. Its advantages include good performance on small to medium-sized data sets and in high-dimensional spaces. Its disadvantage is that the computational cost can be high on large data sets, as the optimization requires quadratic programming. SVR is well suited to financial forecasting, weather forecasting and bioinformatics problems (Ülke et al., 2018) [32]. The regression function is defined in Equation (4). There, the input vectors $x_i$ are mapped into a high-dimensional feature space $\phi(x_i)$, and the inner product in that space is computed by the kernel function $K(x_i, x) = \phi(x_i)^{\top}\phi(x)$:

$$f(x) = \sum_{i=1}^{n} \left(\beta_i - \beta_i^{*}\right) K\left(x_i, x\right) + b \qquad (4)$$

where $\beta_i$ and $\beta_i^{*}$ are the Lagrange multipliers of the dual problem and $b$ is the bias term.

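Through kernel functions, SVMs turn problems that are not linearly separable (or, in regression, not linearly representable) in the input space into linear ones in the feature space. Common choices are the linear, sigmoid, radial basis and polynomial kernels. The radial basis, polynomial and sigmoid kernels are given in Equations (5)–(7). Here, $\gamma$ is the constant of the radial basis function and $d$ is the degree of the polynomial function, while the two adjustable parameters of the sigmoid function are the slope $\alpha$ and the intercept constant $c$:

$$K(x_i, x_j) = \exp\left(-\gamma \lVert x_i - x_j \rVert^{2}\right) \qquad (5)$$

$$K(x_i, x_j) = \left(x_i^{\top} x_j + 1\right)^{d} \qquad (6)$$

$$K(x_i, x_j) = \tanh\left(\alpha\, x_i^{\top} x_j + c\right) \qquad (7)$$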
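The kernels and the SVR regression function can be written out directly in NumPy. This sketch assumes three kernel forms: exp(−γ‖x_i − x_j‖²), (x_iᵀx_j + 1)^d and tanh(α x_iᵀx_j + c). It also assumes a prediction of the form f(x) = Σ_i β_i K(x_i, x) + b, where β_i are the dual coefficients of the support vectors. These correspond to scikit-learn's `rbf` kernel, its `poly` kernel (with `gamma=1`, `coef0=1`) and its `sigmoid` kernel (with `gamma=α`, `coef0=c`). A fitted `sklearn.svm.SVR` exposes the matching quantities as `support_vectors_`, `dual_coef_` and `intercept_`. The support vectors and coefficients below are hypothetical.

```python
import numpy as np


def rbf_kernel(x_i, x_j, gamma):
    """Radial basis function kernel: exp(-gamma * ||x_i - x_j||^2)."""
    return np.exp(-gamma * np.sum((x_i - x_j) ** 2))


def polynomial_kernel(x_i, x_j, d):
    """Polynomial kernel of degree d: (x_i . x_j + 1)^d."""
    return (x_i @ x_j + 1.0) ** d


def sigmoid_kernel(x_i, x_j, alpha, c):
    """Sigmoid kernel with slope alpha and intercept constant c."""
    return np.tanh(alpha * (x_i @ x_j) + c)


def svr_predict(x, support_vectors, dual_coefs, b, kernel):
    """SVR regression function: sum of dual coefficient * K(support vector, x), plus bias b."""
    return sum(beta * kernel(sv, x) for sv, beta in zip(support_vectors, dual_coefs)) + b


def poly2(u, v):
    """Degree-2 polynomial kernel used in the example below."""
    return polynomial_kernel(u, v, d=2)


x1, x2 = np.array([1.0, 2.0]), np.array([2.0, 0.0])
print(rbf_kernel(x1, x2, gamma=0.5))             # exp(-0.5 * 5) = exp(-2.5)
print(polynomial_kernel(x1, x2, d=2))            # (2 + 1)^2 = 9.0
print(sigmoid_kernel(x1, x2, alpha=0.1, c=0.0))  # tanh(0.2)

# Hypothetical fitted model with two support vectors and a degree-2 polynomial kernel.
print(svr_predict(x1, [x1, x2], dual_coefs=[0.5, -0.5], b=0.1, kernel=poly2))  # 18 - 4.5 + 0.1
```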

2.1.5. Random Forest Regression (RFR)

Random Forest regression is an ensemble learning method that combines multiple decision trees to increase prediction accuracy. Each tree is built on a randomly drawn sample of the data set. The final prediction is the average of the individual tree predictions; majority voting is used instead when the method is applied to classification. A key technique is Bootstrap Aggregation (bagging), in which the trees learn from different random samples, which reduces overfitting and improves generalization. A major advantage of Random Forest is that it is robust, relatively insensitive to noisy data, and requires little prior data transformation. It can also handle missing data and nonlinear relationships. A disadvantage is that, although it scales well, it can be computationally intensive on larger data sets, because many trees must be built and evaluated. Random Forest is well suited to financial forecasting, medical diagnostics and other predictive modelling problems where large amounts of data are available (Park et al., 2022) [33].

Two important tuning parameters are used in RF modelling. The first is the number of trees in the forest ($p$). The second is the number of input variables randomly sampled as split candidates at each node ($k$). Each decision tree in the RF is learned from a random sample of the data set (Ismail et al., 2020) [34].

To build the RF model, three parameters must be defined beforehand: the number of trees ($n$), the number of variables ($K$), and the maximum depth of the decision trees ($J$). The learning sets ($D_i$, $i = 1, \ldots, n$) and variable sets ($V_i$, $i = 1, \ldots, n$) of the decision trees are created by random sampling with replacement, which is called bootstrapping. From each learning set and its variable set, a decision tree of depth $J$ generates a weak learner $\hat{h}_i(x)$. These weak learners are then used to predict the test data, which yields an ensemble of $n$ trees $\{\hat{h}_1(x), \ldots, \hat{h}_n(x)\}$. For a new sample $x^{*}$, the RF prediction can be defined as follows (Park et al., 2022) [33]:

$$\hat{y} = \frac{1}{n} \sum_{i=1}^{n} \hat{h}_i\left(x^{*}\right)$$

where $\hat{y}$ is the predicted value of $x^{*}$.
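The averaging formula can be checked against scikit-learn's `RandomForestRegressor`, whose `predict` returns the mean of the predictions of the fitted trees stored in `estimators_`. In this sketch, n, K and J are mapped to `n_estimators`, `max_features` and `max_depth`. Note that scikit-learn draws the candidate variables anew at each split rather than once per tree. The code therefore approximates the scheme described above rather than reproducing it. The data are synthetic (12 lagged values per sample), and the parameter values are hypothetical, not the ones used in the study.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

# Synthetic data: each row holds 12 lagged values; the target depends on the latest one.
rng = np.random.default_rng(42)
X = rng.normal(size=(200, 12))
y = 0.8 * X[:, -1] + rng.normal(scale=0.1, size=200)

rf = RandomForestRegressor(
    n_estimators=50,  # n: number of trees
    max_features=4,   # K: variables considered (scikit-learn samples them per split)
    max_depth=5,      # J: maximum tree depth
    bootstrap=True,   # each tree sees a bootstrap sample drawn with replacement
    random_state=0,
)
rf.fit(X, y)

x_new = rng.normal(size=(1, 12))
tree_preds = np.array([tree.predict(x_new)[0] for tree in rf.estimators_])  # h_i(x*)
y_hat = tree_preds.mean()                                                    # (1/n) * sum_i h_i(x*)
print(np.isclose(y_hat, rf.predict(x_new)[0]))  # True
```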

For modelling, Python version 3.9 was used, together with the scikit-learn and TensorFlow libraries for machine learning.

2.2. Performance Evaluation

The most commonly used metrics in the literature for evaluating predictive models and assessing their accuracy are the root mean square error (RMSE), mean absolute error (MAE), and mean absolute percentage error (MAPE) (Nti et al., 2020) [35].

2.2.1. Mean Absolute Error (MAE)

Mean Absolute Error (MAE): This indicator measures the average magnitude of the errors in a set of predictions:

$$\mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left| \hat{y}_i - y_i \right|$$

where $\hat{y}_i$ is the estimated value produced by the model, $y_i$ is the actual value, and $n$ is the number of observations.

2.2.2. Normalized Mean Absolute Error (NMAE)

Because the database also contains zero values, the traditional MAPE indicator could not be used: its denominator is zero for those observations. To compare the periods and the different inflation components, the Normalized Mean Absolute Error (NMAE) was used instead:

$$\mathrm{NMAE} = \frac{\mathrm{MAE}}{y_{\max} - y_{\min}}$$

where $y_{\max}$ is the maximum value of the data range and $y_{\min}$ is the minimum.

Lower values indicate more accurate and dependable forecasts. Because the NMAE is not influenced by the nominal magnitude of the series, it can also be used to compare different models and instruments (Terven et al., 2023) [36].
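Both error measures take only a few lines of NumPy. This sketch assumes that the range y_max − y_min is taken over the actual values of the evaluated window. The numbers are illustrative, not results of this study. The last lines show why MAPE breaks down when an actual value is zero: that observation's percentage error becomes infinite, whereas the NMAE stays finite.

```python
import numpy as np


def mae(y_true, y_pred):
    """Mean absolute error."""
    y_true, y_pred = np.asarray(y_true, dtype=float), np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(y_pred - y_true)))


def nmae(y_true, y_pred):
    """MAE divided by the range (max - min) of the actual values."""
    y_true = np.asarray(y_true, dtype=float)
    return mae(y_true, y_pred) / float(y_true.max() - y_true.min())


# Illustrative y/y inflation rates (%), including a zero observation.
y_true = np.array([0.0, 1.2, 2.5, 1.8])
y_pred = np.array([0.3, 1.0, 2.9, 1.5])
print(round(mae(y_true, y_pred), 4))   # 0.3
print(round(nmae(y_true, y_pred), 4))  # 0.12

with np.errstate(divide="ignore"):
    ape = np.abs((y_pred - y_true) / y_true)  # absolute percentage errors used by MAPE
print(np.isinf(ape[0]))  # True: MAPE is undefined when an actual value is zero
```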

2.2.3. Forecastability Index (FI)

To quantify the predictability of both the inflation subcomponents and the aggregated HICP, we introduced the Forecastability Index (FI). For each category, the index measures the magnitude of the model errors (NMAE) relative to the error of the best-performing model:

$$\mathrm{FI}_j = \frac{1}{M} \sum_{m=1}^{M} \frac{\mathrm{NMAE}_{j,m}}{\min_{m} \mathrm{NMAE}_{j,m}}$$

where $j$ denotes either an inflation subcomponent or the overall HICP, $m$ refers to a given model, and $M$ is the number of models considered. The term $\min_{m} \mathrm{NMAE}_{j,m}$ captures the smallest forecasting error for category $j$. By construction, $\mathrm{FI}_j \geq 1$. A value close to 1 indicates that the category is relatively easy to predict, whereas higher values reflect greater forecasting difficulty.
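A minimal sketch of the index follows. It assumes that FI_j is the mean, over the M models, of each model's NMAE divided by the smallest NMAE achieved for category j. Under this definition FI_j ≥ 1, and FI_j = 1 means every model matches the best one. The category names follow the HICP breakdown, but the error values are hypothetical. They serve only to show the computation and the resulting ranking.

```python
import numpy as np


def forecastability_index(nmae_by_model):
    """FI for one category: mean ratio of each model's NMAE to the best (smallest) NMAE."""
    errors = np.array(list(nmae_by_model.values()), dtype=float)
    return float(np.mean(errors / errors.min()))


# Hypothetical NMAE values per model, for illustration only.
nmae_table = {
    "Communication": {"RNN": 0.10, "GRU": 0.11, "LSTM": 0.10, "SVR": 0.12, "RFR": 0.12},
    "Housing, water, electricity, gas and other fuels":
        {"RNN": 0.08, "GRU": 0.20, "LSTM": 0.15, "SVR": 0.30, "RFR": 0.12},
}

fi = {category: forecastability_index(errs) for category, errs in nmae_table.items()}
for category in sorted(fi, key=fi.get):  # easiest-to-forecast category first
    print(f"{category}: FI = {fi[category]:.3f}")
```

Because each ratio is taken within a category, the FI compares how far the typical model falls behind the best one. The absolute error level of the category does not enter it, which is what makes the ranking comparable across subcomponents.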

2.2.4. Interquartile Range (IQR)

To assess the dispersion of forecasting errors across models, we also computed the interquartile range (IQR). For each category, the IQR is defined as the difference between the 75th and 25th percentiles of the error distribution:

$$\mathrm{IQR}_j = Q3_j - Q1_j$$

where $Q1_j$ and $Q3_j$ denote the 25th and 75th percentiles, respectively, of the model errors for category $j$. A low IQR suggests that forecasting performance is stable and largely independent of model choice, while a high IQR indicates that forecastability is more sensitive to the specific model used, implying reduced robustness.
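The IQR of the errors across models can be computed with `np.percentile`. This sketch assumes NumPy's default linear interpolation between order statistics; with only a handful of models, other percentile definitions can give slightly different values. The NMAE values below are hypothetical.

```python
import numpy as np


def error_iqr(errors):
    """Interquartile range (Q3 - Q1) of the forecasting errors of several models."""
    q1, q3 = np.percentile(np.asarray(errors, dtype=float), [25, 75])
    return float(q3 - q1)


# Hypothetical NMAE values of five models for two categories.
stable = [0.10, 0.11, 0.10, 0.12, 0.12]    # models agree: low IQR
unstable = [0.08, 0.20, 0.15, 0.30, 0.12]  # results depend on model choice: high IQR
print(round(error_iqr(stable), 4), round(error_iqr(unstable), 4))  # 0.02 0.08
```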

Taken together, the FI allows us to rank both the subcomponents and the HICP in terms of forecasting difficulty, while the IQR provides complementary insights into the robustness of predictions across different models.

2.3. Hyperparameters

Table 1 reports the set of hyperparameters employed across the models. For each examined period (2009, 2016, and 2023), all models were optimized separately on the full time series of the respective regime, for both the individual inflation subcomponents and the aggregate HICP index. A 12-month rolling window approach was applied, so that each forecast is based on the most recent historical information while the risk of overfitting is mitigated. A time-based 90–10% train–test split was used, explicitly avoiding random sampling to preserve the temporal structure of the data. Hyperparameters were tuned by grid search combined with time series split cross-validation, so that each validation fold relied strictly on past observations. This setup was designed to prevent information leakage between the training and testing phases.
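The leakage-free setup can be sketched with scikit-learn's `TimeSeriesSplit` and `GridSearchCV`. The sketch assumes that the 12-month rolling window means each one-step-ahead target is predicted from the 12 preceding monthly values. The series is synthetic, and the SVR parameter grid is a hypothetical example rather than the grid reported in Table 1. The assertion inside the loop checks the key property: every validation fold lies strictly after its training observations.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, TimeSeriesSplit
from sklearn.svm import SVR


def make_windows(series, window=12):
    """Turn a 1-D series into (X, y): each row of X holds `window` consecutive past values."""
    series = np.asarray(series, dtype=float)
    X = np.array([series[t - window:t] for t in range(window, len(series))])
    y = series[window:]
    return X, y


series = np.sin(np.arange(120) / 6.0)   # 120 synthetic monthly observations
X, y = make_windows(series, window=12)  # 108 samples of 12 lags each
split = int(round(0.9 * len(X)))        # chronological 90/10 split, no shuffling
X_train, X_test, y_train, y_test = X[:split], X[split:], y[:split], y[split:]

tscv = TimeSeriesSplit(n_splits=5)
for train_idx, val_idx in tscv.split(X_train):
    assert train_idx.max() < val_idx.min()  # validation data always come after training data

search = GridSearchCV(
    SVR(kernel="rbf"),
    param_grid={"C": [1.0, 10.0], "epsilon": [0.01, 0.1]},  # hypothetical grid
    cv=tscv,
    scoring="neg_mean_absolute_error",
)
search.fit(X_train, y_train)
print(X_train.shape, X_test.shape, search.best_params_)
```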

For the deep learning models, an early stopping mechanism was implemented based on the validation loss (Mean Absolute Error, MAE), which helped prevent overfitting by halting training once no further improvement was observed. The model weights from the best-performing epoch were retained, improving both the stability and generalization of forecasts. Models were re-optimized for each period and inflation subcategory to ensure that hyperparameters adapted appropriately to the specific macroeconomic environment.
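The early-stopping rule can be sketched in plain Python. The function below approximates the logic of Keras' `EarlyStopping` callback, which monitors `val_loss` with a `patience` and `min_delta` and, with `restore_best_weights=True`, keeps the best epoch's weights. Training stops once the validation MAE has failed to improve for `patience` consecutive epochs, and the best epoch is the one whose weights are retained. The loss values and patience settings are hypothetical.

```python
def early_stopping(val_losses, patience=10, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of per-epoch validation losses.

    An epoch counts as an improvement only if its loss is lower than the best
    loss so far by more than min_delta; training stops after `patience`
    consecutive epochs without improvement.
    """
    best_loss, best_epoch, wait = float("inf"), 0, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, wait = loss, epoch, 0  # new best: these weights would be kept
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch                  # stop; restore weights of best_epoch
    return best_epoch, len(val_losses) - 1                # ran out of epochs without stopping


# Hypothetical validation MAE per epoch.
val_mae = [0.50, 0.40, 0.35, 0.36, 0.37, 0.38, 0.34, 0.39, 0.40, 0.41]
print(early_stopping(val_mae, patience=3))  # (2, 5): the later dip to 0.34 is never reached
print(early_stopping(val_mae, patience=5))  # (6, 9)
```

The example also shows the trade-off in choosing the patience. A short patience saves epochs but can stop before a later improvement, while a longer one finds the lower loss at the cost of more training.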

In the case of the neural network architectures, particular emphasis was placed on the specification of the activation functions, given their pivotal role in shaping both the convergence properties and the predictive accuracy of the models. To further underscore their relevance, the results associated with different activation functions are presented and discussed separately, highlighting their influence on the forecastability of the inflation subcomponents (Apicella et al., 2021; Szandała, 2021; Dubey et al., 2022; Singh et al., 2023; Vancsura et al., 2024) [37–41].

All analyses were conducted in Python 3.11, using the TensorFlow and Keras libraries for deep learning implementation (including tensorflow, keras, numpy, pandas, scikit-learn), while classical machine learning models such as Support Vector Regression (SVR) and Random Forest Regressor (RFR) were implemented using the scikit-learn package (SVR, RandomForestRegressor, GridSearchCV, and TimeSeriesSplit).

2.4. Data

In our research, we used monthly HICP data for the twelve main subcomponents (Food and non-alcoholic beverages; Alcoholic beverages and tobacco; Clothing and footwear; Housing, water, electricity, gas and other fuels; Furnishings, household equipment and routine house maintenance; Health; Transport; Communication; Recreation and culture; Education; Restaurants and hotels; Miscellaneous goods and services) for the period January 2000 (base period) to December 2023. Inflation data were collected from Eurostat. This period was chosen because it includes a calm (control) period (2016), the subprime crisis (2008), and the Russian–Ukrainian conflict (2022).

In our study, we divided this time period into three sub-samples. The first relates to the subprime crisis: here we made forecasts for the 12 months of 2009, using data from 1 January 2000 to 31 December 2009. The second sub-sample serves as a control period: we simulated forecasts for 2016, using inflation subcomponent data from 1 January 2007 to 31 December 2016. The third sub-sample targets the period of the Russian–Ukrainian conflict and provides forecasts for 2023, using data from 1 January 2014 to 31 December 2023. The two crisis periods thus serve as robustness tests for the models. The forecasting targets were defined as the year-on-year (y/y) percentage changes of the HICP and its 12 inflation subcomponents. Accordingly, the input data consisted of monthly y/y inflation rates, which inherently remove most seasonal patterns, so no additional seasonal adjustment was required. No explicit detrending was applied, as the primary goal was to allow the models to learn the long-term patterns and structural dynamics of inflation directly from the data.
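For reference, the y/y rate for month t is 100 · (I_t / I_(t−12) − 1), where I_t is the index level. The sketch below assumes the inputs are monthly index levels. The index path is synthetic, with constant 0.2% monthly growth, so the result can be checked by hand: every y/y rate equals 100 · (1.002^12 − 1), about 2.43%. Because each rate compares a month with the same month a year earlier, a stable multiplicative seasonal pattern cancels out. This is why y/y rates can be treated as largely free of seasonality.

```python
import numpy as np


def year_on_year(index_levels):
    """Year-on-year percentage change of a monthly index: 100 * (I_t / I_{t-12} - 1)."""
    idx = np.asarray(index_levels, dtype=float)
    return 100.0 * (idx[12:] / idx[:-12] - 1.0)


months = np.arange(36)
trend = 100.0 * 1.002 ** months                          # synthetic index, 0.2% growth per month
seasonal = 1.0 + 0.01 * np.sin(2 * np.pi * months / 12)  # stable multiplicative seasonality

yoy_trend = year_on_year(trend)
yoy_seasonal = year_on_year(trend * seasonal)
print(np.round(yoy_trend[:3], 3))            # every value equals 100 * (1.002**12 - 1)
print(np.allclose(yoy_trend, yoy_seasonal))  # True: the seasonal factor cancels in y/y rates
```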

Given the exceptional price shocks and energy market volatility observed in 2022–2023, the data set was examined for potential outliers. Extreme values were retained, as they represent genuine economic phenomena and carry essential information about inflationary dynamics. No missing observations were present in the data set; therefore, no imputation procedures were required.

All data were scaled using a MinMaxScaler fitted exclusively on the training subset and subsequently applied to the validation and test data to prevent information leakage. This scaling approach contributed to stable model convergence, particularly for RNN-based architectures, where linear activation functions can be sensitive to input magnitude. Overall, these preprocessing steps ensured the robustness of model training and the reliability of the resulting forecasts.
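Fitting the scaler on the training subset only can be sketched as follows. The class is a hypothetical minimal re-implementation of scikit-learn's `MinMaxScaler` with the default `feature_range=(0, 1)`. In scikit-learn itself, the same pattern is `scaler.fit(X_train)` followed by `scaler.transform(X_test)`. The numbers are illustrative. Note a consequence of avoiding leakage: test values outside the training range are mapped outside [0, 1], so the models are asked to extrapolate beyond the scaled training range. Price spikes that exceed earlier levels produce exactly this situation.

```python
import numpy as np


class TrainOnlyMinMaxScaler:
    """Minimal min-max scaler to [0, 1], mirroring sklearn's MinMaxScaler defaults."""

    def fit(self, x):
        x = np.asarray(x, dtype=float)
        self.data_min_ = x.min(axis=0)
        data_range = x.max(axis=0) - self.data_min_
        self.data_range_ = np.where(data_range == 0, 1.0, data_range)  # guard constant columns
        return self

    def transform(self, x):
        return (np.asarray(x, dtype=float) - self.data_min_) / self.data_range_

    def inverse_transform(self, z):
        return np.asarray(z, dtype=float) * self.data_range_ + self.data_min_


train = np.array([[1.0], [3.0], [5.0]])  # illustrative training values
test = np.array([[6.0], [2.0]])          # later observations, one above the training maximum

scaler = TrainOnlyMinMaxScaler().fit(train)  # statistics come from the training data only
print(scaler.transform(test).ravel())        # [1.25 0.25]: 6.0 lies outside the training range
print(scaler.inverse_transform(scaler.transform(test)).ravel())  # back to the original scale
```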

For the modelling, the data were split chronologically into learning and testing sets in a 90:10 ratio. For each forecast period (2009, 2016, 2023), the forecast horizon ran from 1 January to 31 December. Simulations were carried out using univariate methods.

Before implementing the forecasting models, the time-series properties of the HICP and its twelve subcomponents were examined to ensure the statistical adequacy of the data.

Table 2 presents the results of the unit root, structural break, and stationarity tests conducted for each category. Specifically, the Augmented Dickey–Fuller (ADF), Phillips–Perron (PP), Kwiatkowski–Phillips–Schmidt–Shin (KPSS), and Elliott–Rothenberg–Stock Dickey–Fuller Generalized Least Squares (DF–GLS) tests were applied to assess the presence of unit roots and mean reversion, while the Zivot–Andrews (ZA) test and the PELT (Pruned Exact Linear Time) algorithm were employed to detect structural breaks in the series. The results reveal that the inflation subcomponents exhibit heterogeneous stationarity properties, reflecting their differing market and policy structures. In general, categories such as Communication, Transport, and Restaurants and hotels display stronger evidence of stationarity, with statistically significant ADF and DF–GLS statistics (p < 0.05) and KPSS results consistent with stationarity. For instance, Communication shows robust stationarity (ADF statistic = −4.06, p = 0.0011; DF–GLS statistic = −3.20, p = 0.0014), suggesting a mean-reverting price process, likely driven by regulated tariffs and slower price adjustments. Conversely, subcomponents such as Furnishings, Miscellaneous goods and services, and Food and non-alcoholic beverages exhibit weaker evidence of stationarity, as indicated by high ADF p-values (>0.4) and borderline KPSS significance. These series appear to follow near-random-walk dynamics, consistent with persistent or trend-inflation patterns in consumer goods.
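To make the unit-root logic concrete, the sketch below computes the ADF t-statistic by ordinary least squares on the regression Δy_t = a + γ·y_{t−1} + Σ δ_i·Δy_{t−i} + e_t. It only computes the statistic and compares it with the asymptotic 5% Dickey–Fuller critical value for a regression with a constant (about −2.86); proper p-values require MacKinnon's response-surface tables, which statsmodels' `adfuller` provides. The fixed lag order is an assumption, since the study may have selected lags by an information criterion.

```python
import numpy as np

DF_CRIT_5PCT_CONST = -2.86  # asymptotic 5% critical value, constant but no trend

def adf_tstat(y, lags=1):
    """t-statistic on gamma in: dy_t = a + gamma * y_{t-1} + sum_i d_i * dy_{t-i} + e_t."""
    y = np.asarray(y, dtype=float)
    dy = np.diff(y)
    target = dy[lags:]                          # dy_t where all lagged differences exist
    cols = [np.ones_like(target), y[lags:-1]]   # constant and lagged level y_{t-1}
    for i in range(1, lags + 1):
        cols.append(dy[lags - i:-i])            # lagged difference dy_{t-i}
    X = np.column_stack(cols)
    beta, *_ = np.linalg.lstsq(X, target, rcond=None)
    resid = target - X @ beta
    sigma2 = resid @ resid / (len(target) - X.shape[1])
    cov = sigma2 * np.linalg.inv(X.T @ X)
    return beta[1] / np.sqrt(cov[1, 1])         # gamma divided by its standard error

rng = np.random.default_rng(0)
noise = rng.normal(size=300)                    # stationary white noise
walk = np.cumsum(rng.normal(size=300))          # random walk with a unit root
for name, series in [("white noise", noise), ("random walk", walk)]:
    stat = adf_tstat(series, lags=1)
    verdict = "reject unit root" if stat < DF_CRIT_5PCT_CONST else "cannot reject"
    print(name, round(stat, 2), verdict)
```

A strongly negative statistic for the white-noise series mirrors the Communication result above, whereas near-random-walk categories produce statistics close to zero.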

The Zivot–Andrews test and the PELT algorithm identify structural breaks in nearly all categories, typically clustered around major macroeconomic disruptions such as the 2008 financial crisis, the 2012–2014 euro-area stagnation, and the 2021–2023 inflationary shock. For example, breaks in Housing, water, electricity, gas, and other fuels occur around May 2013 and April 2021, periods associated with energy price shocks and the pandemic. The Alcoholic beverages and tobacco series and the Education series also exhibit multiple structural breaks, possibly reflecting fiscal policy changes and regulated pricing interventions. The existence of these breaks supports the interpretation that inflation dynamics are regime-dependent and shaped by exogenous shocks.
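The PELT step can be illustrated with a compact NumPy implementation of the pruned dynamic program for shifts in the mean. The squared-error segment cost and the penalty value are assumptions, because the cost function and penalty used in the study are not specified in this section. The function name `pelt_mean_shift` is hypothetical.

```python
import numpy as np

def pelt_mean_shift(y, penalty):
    """Exact PELT segmentation for mean shifts with a squared-error segment cost."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    s1 = np.concatenate([[0.0], np.cumsum(y)])       # prefix sums of y
    s2 = np.concatenate([[0.0], np.cumsum(y ** 2)])  # prefix sums of y^2

    def cost(a, b):
        # Sum of squared deviations of y[a:b] from its own mean
        return s2[b] - s2[a] - (s1[b] - s1[a]) ** 2 / (b - a)

    F = np.full(n + 1, np.inf)          # F[t]: optimal penalised cost of y[:t]
    F[0] = -penalty
    last = np.zeros(n + 1, dtype=int)   # last[t]: start of the final segment of y[:t]
    candidates = [0]
    for t in range(1, n + 1):
        vals = [F[tau] + cost(tau, t) + penalty for tau in candidates]
        best = int(np.argmin(vals))
        F[t], last[t] = vals[best], candidates[best]
        # Prune start points that can never become optimal (K = 0 for this cost)
        candidates = [tau for tau, v in zip(candidates, vals) if v - penalty <= F[t]]
        candidates.append(t)
    breaks, t = [], n
    while last[t] > 0:                  # backtrack from the end of the series
        t = int(last[t])
        breaks.append(t)
    return sorted(breaks)

rng = np.random.default_rng(1)
level_shift = np.r_[np.zeros(50), 5 * np.ones(50)] + rng.normal(scale=0.3, size=100)
print(pelt_mean_shift(level_shift, penalty=10.0))  # hypothetical penalty value
```

Larger penalties yield fewer breaks; in practice the penalty is often tied to the sample size (for example a BIC-type penalty proportional to log n), which is again an assumption rather than the study's documented setting.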

Despite the presence of several non-stationary or near-integrated series and identified structural shifts, the models employed (RNN, LSTM, GRU) are well suited to handle such time-series complexities. Unlike traditional linear econometric methods that assume strict stationarity and homoscedastic residuals, deep learning frameworks can capture nonlinear, non-stationary, and regime-dependent patterns through dynamic weight adaptation and memory mechanisms. Consequently, while some inflation components deviate from classical stationarity assumptions, the applied methodologies remain robust and capable of extracting meaningful temporal dependencies, yielding reliable forecasts under varying macroeconomic regimes.
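The RNN variants compared in this study differ in the activation applied to the recurrent update. The sketch below shows the forward pass of a single-layer Elman RNN, h_t = φ(W_x·x_t + W_h·h_{t−1} + b), with the three activations used here (tanh, ELU and linear). It is a minimal illustration under stated assumptions: weights are random, the hidden size is arbitrary, ELU uses α = 1, and LSTM and GRU add gating mechanisms on top of this recurrence that are not shown.

```python
import numpy as np

# Element-wise activations compared in the study (ELU with alpha = 1 is an assumption)
ACTIVATIONS = {
    "tanh": np.tanh,
    "elu": lambda z: np.where(z > 0, z, np.exp(np.minimum(z, 0.0)) - 1.0),
    "linear": lambda z: z,
}

def rnn_forward(x_seq, W_x, W_h, b, activation="tanh"):
    """Single-layer Elman RNN: h_t = act(W_x x_t + W_h h_{t-1} + b); returns all h_t."""
    act = ACTIVATIONS[activation]
    h = np.zeros(W_h.shape[0])          # initial hidden state
    states = []
    for x_t in x_seq:
        # The hidden state carries information from earlier months into this step
        h = act(W_x @ np.atleast_1d(x_t) + W_h @ h + b)
        states.append(h)
    return np.array(states)

rng = np.random.default_rng(0)
x = rng.normal(size=12)                 # hypothetical 12-month input window
H = 4                                   # hypothetical hidden size
W_x, W_h, b = rng.normal(size=(H, 1)), 0.5 * rng.normal(size=(H, H)), np.zeros(H)
for name in ACTIVATIONS:
    print(name, rnn_forward(x, W_x, W_h, b, name)[-1].round(3))
```

The bounded tanh keeps hidden states within [−1, 1], ELU is bounded below by −1 but unbounded above, and the linear variant reduces the recurrence to a linear dynamic system, which is one way to read the differing behaviour of the activation variants in the results.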

3. Results

Table 3 presents descriptive statistics for the main subcomponents of inflation over the period from 1 January 2000 to 31 December 2009. Average inflation rates, computed over the monthly observations, vary considerably across categories, with alcoholic beverages and tobacco (4.10%) and education (3.57%) exhibiting the highest average price increases, while communication experienced a deflationary trend with an average of −2.46%. Notably, the volatility of inflation, as measured by the standard deviation, is particularly high in transport (2.76%) and communication (2.17%), indicating substantial fluctuations in these sectors. In contrast, categories such as miscellaneous goods and services (0.39%) and furnishings (0.47%) show much more stable price developments. The range of values highlights the dispersion in inflationary dynamics, with communication and clothing displaying the widest intervals. The HICP, representing the overall inflation rate, recorded a relatively stable mean of 2.10% with limited variability (standard deviation of 0.80%). These findings underscore the heterogeneity of inflationary pressures across different sectors and emphasize the importance of disaggregated analysis in inflation research.

Table 4 shows the NMAE estimates for 2009. In the Alcoholic beverages and tobacco subgroup, the NMAE values fell within a relatively narrow range. The best performance was obtained with LSTM (tanh), with a value of 0.0368, while the worst accuracy was obtained with RNN (tanh), with a value of 0.0584. For the Clothing and footwear subcomponent, the RFR model was the most accurate (0.0273), while GRU (ELU) was the least accurate (0.0707). For the Communication predictions, LSTM (tanh) again performed best (0.0277), while RNN (ELU) produced the worst prediction (0.0561). For the Education component, the best (0.0300) and worst (0.1847) model performances spanned a much wider range; the former was produced by GRU (tanh) and the latter by SVR, a rather poor result. For Food and non-alcoholic beverages, the RNN with a linear activation function was the most accurate, with an NMAE of 0.0213, while RFR was the least accurate (0.1062). For Furnishings, household equipment, and routine house maintenance, LSTM (linear) showed the best performance (0.0702), while GRU (ELU) had the worst (0.1195). Notably, the best NMAE for this category is already markedly higher than the best values for the preceding categories. For the Health subtype, the gap between model performances was among the smallest: GRU (ELU) achieved the best result (0.0178), while RNN (ELU) performed the worst (0.0431). Based on the NMAE values, Health is the most predictable category for the 2009 forecast horizon. The Housing, water, electricity, gas, and other fuels subcomponent presents a more mixed picture, with a large difference between the most accurate (RNN-linear, 0.0476) and least accurate (RFR, 0.2006) models.
For the Miscellaneous goods and services type, the best performance was achieved by RFR (0.0418), while the least accurate algorithm was RNN (tanh) (0.0719). For both the Recreation and culture and the Restaurants and hotels categories, the RNN (linear) model performed best (0.0968 and 0.0786, respectively), while SVR performed worst (0.1536 and 0.2390, respectively). For the Transport subtype, LSTM (ELU) was the most accurate (0.0872), while RFR underperformed (0.2372). These last three categories thus posed significant challenges for the methods used. Over the period as a whole, the best result was achieved in the Health category and the worst in the Restaurants and hotels category.
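The NMAE values reported in Tables 4, 6 and 8 are scale-free, which is what allows categories with very different inflation levels to be compared. This section does not restate how the MAE is normalised, so the sketch below assumes normalisation by the range (max − min) of the actual values in the evaluation window; a mean-based normaliser is another common choice. Both the normaliser and the toy numbers are assumptions, not the study's definition.

```python
import numpy as np

def nmae(y_true, y_pred):
    """Mean absolute error divided by the range of the actual values (ASSUMED normaliser)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    mae = np.mean(np.abs(y_true - y_pred))
    return mae / (y_true.max() - y_true.min())

actual = [1.0, 2.0, 3.0, 4.0]     # hypothetical monthly inflation rates (%)
forecast = [1.5, 2.0, 2.5, 4.0]   # hypothetical model forecasts
print(nmae(actual, forecast))     # MAE of 0.25 divided by a range of 3.0
```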

Examining the average model performance across subtypes, we find that RNN (linear) and LSTM (tanh) performed best, with very similar results, whereas the traditional machine learning models scored rather poorly on average.

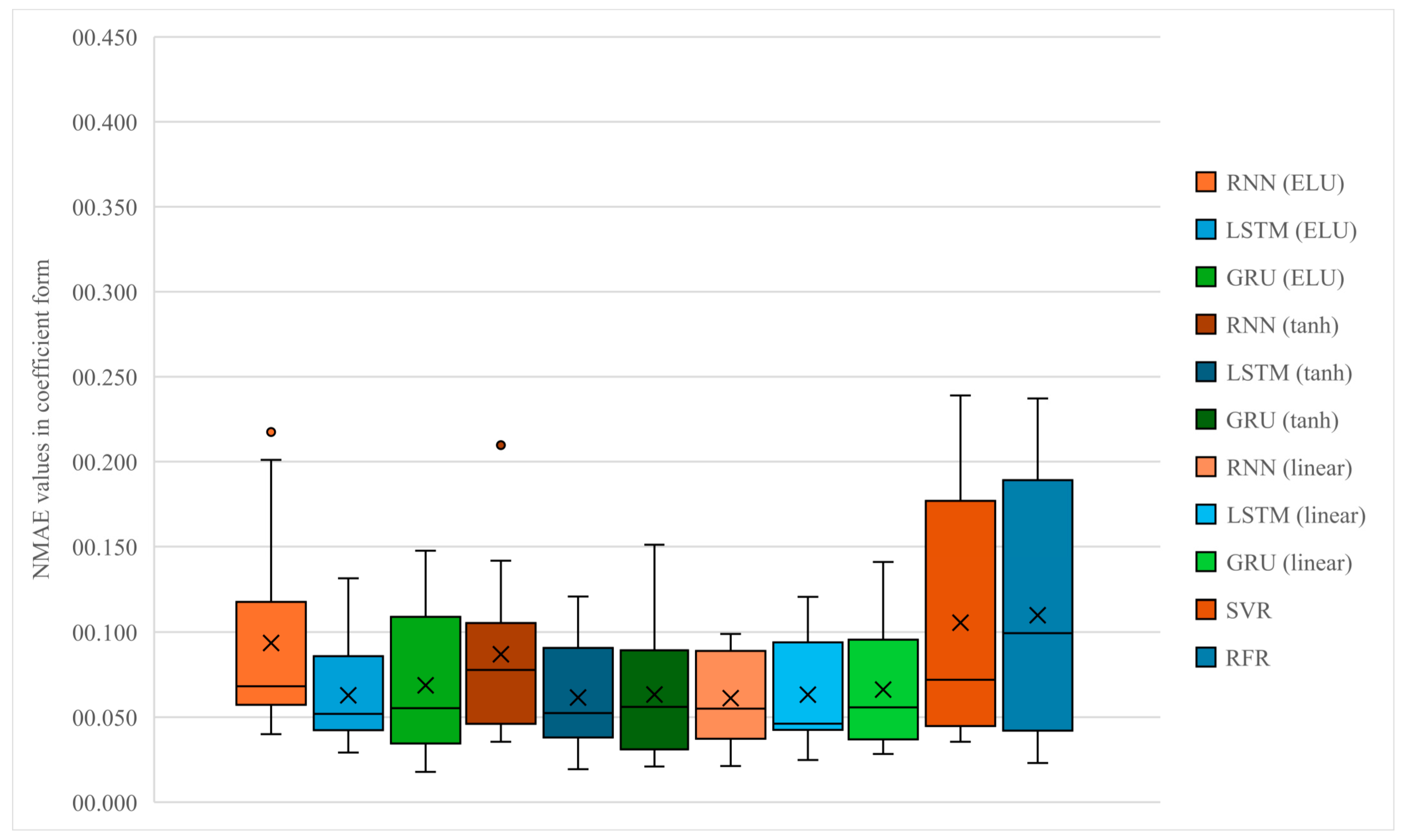

Figure 1 presents the distribution of Normalized Mean Absolute Error (NMAE) values for all forecasting models for the year 2009, which reflects the post-financial crisis environment. The results clearly indicate that deep learning architectures (RNN, LSTM, and GRU) with various activation functions (ELU, tanh, and linear) generally outperform traditional machine learning models such as SVR and RFR. The median NMAE values of neural network-based models are notably lower, accompanied by narrower interquartile ranges, suggesting more stable and consistent forecasting performance. Models using nonlinear activation functions, particularly ELU and tanh, exhibit superior accuracy, implying that these functions are better suited to capture the nonlinear and dynamic nature of inflation series. In contrast, models employing linear activation functions show wider variability and slightly higher median errors, reflecting limitations in representing complex time dependencies. Among the classical algorithms, SVR shows moderate errors, while RFR performs the weakest, with both higher median values and greater dispersion. The RNN (ELU) and LSTM (tanh) models demonstrate the most robust and balanced performance, as evidenced by their compact error distributions and the absence of major outliers. Outlier values were limited to a few inflation subcomponents, with “Restaurants and hotels” and “Transport” showing exceptionally high NMAE scores under the RNN-based models. These deviations suggest that price dynamics in these categories were particularly volatile or less predictable during this period, reducing the models’ forecasting accuracy. Overall, the analysis confirms that deep learning models, particularly those leveraging nonlinear activations, consistently deliver more reliable and accurate inflation forecasts across heterogeneous subcomponents.
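The per-model comparisons above (average performance, medians and interquartile ranges in the boxplots) reduce to a few summary statistics of each model's NMAE values across subcomponents. A minimal sketch follows. The input dictionary layout and the helper name `summarize_models` are hypothetical, and the toy values are placeholders rather than results from Table 4.

```python
import numpy as np

def summarize_models(nmae_by_model):
    """Mean, median and IQR of NMAE across subcomponents per model, sorted by mean."""
    rows = []
    for model, scores in nmae_by_model.items():
        s = np.asarray(scores, dtype=float)
        q1, med, q3 = np.percentile(s, [25, 50, 75])  # the box of a boxplot
        rows.append((model, s.mean(), med, q3 - q1))
    return sorted(rows, key=lambda r: r[1])           # lowest average error first

toy = {  # hypothetical NMAE values per subcomponent, not taken from the study
    "RNN (linear)": [0.02, 0.05, 0.08, 0.10],
    "RFR": [0.10, 0.20, 0.24, 0.11],
}
for model, mean, median, iqr in summarize_models(toy):
    print(f"{model:<13} mean={mean:.3f} median={median:.3f} IQR={iqr:.3f}")
```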

Table 5 reports descriptive statistics for the disaggregated components of inflation from 1 January 2007 to 31 December 2016. During this period, the highest average inflation rates were observed in alcoholic beverages and tobacco (3.25%) and education (2.34%), whereas communication remained deflationary with an average rate of −1.81%. Transport exhibited the highest volatility among all subcomponents (standard deviation of 3.33%), reflecting its sensitivity to external shocks such as oil price fluctuations. Conversely, alcoholic beverages and tobacco showed relatively stable price dynamics with the lowest standard deviation (0.90%). The inflation rates for furnishings, health, and miscellaneous goods and services remained moderate and showed limited variation. The HICP, which reflects overall inflation, recorded an average of 1.47% with moderate dispersion (standard deviation of 1.18%), consistent with the generally low-inflation environment throughout the decade. These results confirm the continued heterogeneity of inflation behaviour across sectors and highlight the necessity of component-level analysis for better understanding inflationary trends and policy implications.

Table 6 shows the NMAE estimates for 2016. In the Alcoholic beverages and tobacco subgroup, the best performance was achieved with GRU (ELU), with a value of 0.0434, while RFR showed the worst accuracy, with a value of 0.1289. For the Clothing and footwear subcomponent, LSTM (linear) was the most accurate (0.0448), while GRU (ELU) was the least accurate, with an NMAE of 0.0858. For the Communication forecast, LSTM (tanh) performed best (0.0159), while RFR produced the weakest forecast (0.1192). For the Education component, the best (0.0155) and worst (0.0453) model performances lay within a much narrower range than in 2009; the former was produced by GRU (linear) and the latter by LSTM (linear). For Food and non-alcoholic beverages, LSTM (ELU) was the most accurate, with an NMAE of 0.0370, while RNN (tanh) was the least accurate (0.0539). For Furnishings, household equipment, and routine house maintenance, RFR showed the best performance (0.0301), while RNN (tanh) had the worst (0.0863). For the Health subcomponent, LSTM (tanh) achieved the best result (0.0241), while RNN (tanh) performed the worst (0.0894). For the Housing, water, electricity, gas and other fuels subcomponent, GRU (tanh) was the most accurate (0.0360), while LSTM (linear) was the least accurate (0.0748). For the Miscellaneous goods and services type, GRU (ELU) achieved the best performance (0.0401), while SVR was the least accurate algorithm (0.0824). The Recreation and culture category presented the greatest challenge to the models in 2016, with the largest gap between the best (RFR, 0.0977) and worst (RNN-ELU, 0.4279) models. For the Restaurants and hotels subcategory, the results were much more consistent than in the previous period.
The best performing model was RNN (linear) (0.0279), while the worst was RFR (0.0751). For the Transport subtype, the GRU (linear) was the most useful with 0.0553, while the RNN (ELU) was considered the worst (0.1453). The best result for the period was obtained for Education, while the worst was obtained for Recreation and culture.

Looking at the average model performance by subtype, we find that GRU (tanh) and RNN (linear) performed best, with nearly identical averages. The RNN (ELU) model achieved the weakest average.

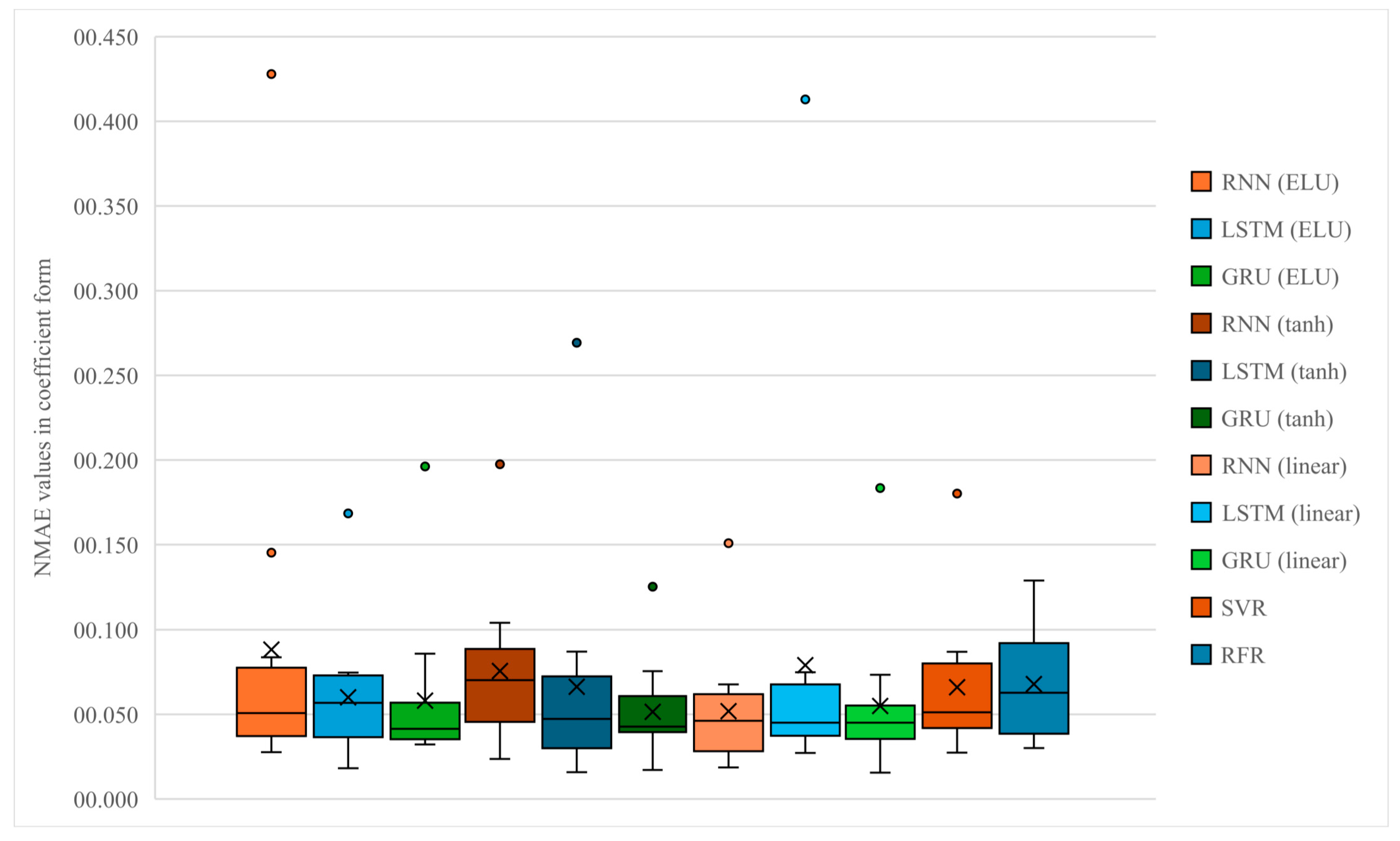

Figure 2 displays the distribution of Normalized Mean Absolute Error (NMAE) values across all forecasting models for the 2016 period, representing a phase of relative macroeconomic stability. Compared with the preceding period, the dispersion of the NMAE values appears generally narrower, suggesting improved model convergence and reduced volatility in forecast errors. The deep learning models (RNN, LSTM, GRU) again outperform the traditional approaches (SVR and RFR), as evidenced by their lower medians and tighter interquartile ranges. Among them, the GRU (ELU) and LSTM (tanh) models exhibit the lowest median errors, reflecting their strong capacity to capture temporal dependencies and nonlinear dynamics in the inflation data. The use of nonlinear activation functions such as ELU and tanh leads to more compact error distributions and fewer extreme outliers, confirming their stabilizing effect on model performance. In contrast, the linear activation variants display slightly higher median NMAE values and larger spreads, indicating lower flexibility when modelling complex price movements. Classical machine learning models, particularly RFR, show higher variability and median errors, reaffirming their weaker adaptability to macroeconomic shocks and structural changes. Nearly all models exhibited outlier values associated with the “Recreation and culture” category, indicating a systemic forecasting challenge rather than a model-specific limitation. This pattern suggests that structural or irregular shocks within this subcomponent may have been difficult to capture even for the more complex learning architectures. Overall, the distribution patterns suggest that nonlinear deep learning models provide not only higher accuracy but also greater robustness, maintaining consistent performance even in a stable inflationary environment.

Table 7 summarizes the descriptive statistics of inflation subcomponents from 1 January 2014 to 31 December 2023, a period marked by heightened global economic volatility. Food and non-alcoholic beverages (3.19%) and alcoholic beverages and tobacco (3.21%) registered the highest average inflation rates, while communication once again exhibited a deflationary trend with a mean of −1.11%. Housing-related costs displayed the greatest volatility (standard deviation of 5.65%), with values ranging from −6.10% to 23.20%, underscoring the impact of energy price shocks and regulatory shifts. Transport and food also showed substantial variability, with standard deviations of 4.48% and 4.53%, respectively. In contrast, health (0.66%) and communication (1.00%) remained relatively stable in terms of price fluctuations. Interestingly, while the HICP averaged 2.20%, its standard deviation of 2.74% reflects increased inflation volatility during this decade, especially in the post-pandemic years. Overall, the data highlight significant heterogeneity and rising volatility in inflation dynamics, necessitating careful monitoring of disaggregated components for accurate inflation forecasting and policy formulation.

Table 8 shows the NMAE estimates for 2023. Recent global shocks (the COVID-19 pandemic and the Russian–Ukrainian conflict) caused a significant rise in inflation worldwide, with an even more pronounced impact in the European Union. We therefore regard this period as the most demanding test for the models, which also shapes our expectations of their performance. In the Alcoholic beverages and tobacco subgroup, the best performance was achieved with RNN (linear), with a value of 0.0575, while RFR showed the worst accuracy, with a value of 0.3963. For the Clothing and footwear subcomponent, RNN (tanh) was the most accurate (0.0728), while SVR was the least accurate (0.4967). For the Communication predictions, RNN (linear) again performed best (0.0613), while SVR produced the weakest result (0.0904). For the Education component, the best (0.0449) and worst (0.5109) model performances spanned a much wider range; the former was produced by GRU (tanh) and the latter by SVR, a rather poor result. For Food and non-alcoholic beverages, RNN (linear) was the most accurate, with an NMAE of 0.0849, while RNN (ELU) was the least accurate (0.3822). For Furnishings, household equipment, and routine house maintenance, RNN (tanh) showed the best performance (0.0988), while GRU (ELU) had the worst (0.3061). For the Health subtype, we observed one of the largest differences in model performance: the best result was achieved by RNN (tanh) (0.0804), while LSTM (ELU) performed the worst (0.9958). For the Housing, water, electricity, gas, and other fuels subcomponent, RNN (ELU) performed best (0.0955), while GRU (tanh) performed the worst (0.3288).
For the Miscellaneous goods and services type, the best performance was achieved by RNN (tanh) (0.1348), while the least accurate algorithm was LSTM (linear) (1.7279), which performed extremely poorly. Based on the NMAE values, this is the least predictable category for the 2023 forecast horizon. For the Recreation and culture category, the highest accuracy was achieved by RNN (linear) (0.0845), while the weakest model was LSTM (tanh) (0.2493). For the Restaurants and hotels subcategory, the best performing algorithm was RNN (tanh) (0.0445), while the worst was RNN (linear) (0.1625). For the Transport subtype, SVR achieved the lowest NMAE (0.0868), while LSTM (tanh) was the least accurate (0.1492). Over the period as a whole, the best result was achieved for Restaurants and hotels and the worst for Miscellaneous goods and services.

As expected, 2023 was the most challenging period, which is reflected in the average performance of the models. In this comparison, RNN (tanh) achieved the best average performance, while LSTM (linear) had by far the worst, driven largely by its exceptionally poor result for the Miscellaneous goods and services subtype.

Figure 3 presents the distribution of Normalized Mean Absolute Error (NMAE) values across all forecasting models for the 2023 period. The results reveal a generally higher dispersion of errors compared to earlier periods, reflecting the elevated volatility and uncertainty of the inflation environment during the post-pandemic and geopolitical shock phase. While deep learning models continue to outperform the traditional approaches, the differences among architectures become more pronounced under these unstable conditions. The RNN (tanh) and RNN (linear) models exhibit the lowest median NMAE values, indicating better adaptability to abrupt structural changes in the inflation series. In contrast, LSTM and GRU models, especially those using ELU and tanh activations, show wider interquartile ranges and the presence of outliers, suggesting sensitivity to short-term volatility. Traditional machine learning models, particularly SVR and RFR, perform significantly weaker, with both higher median and mean NMAE values, indicating reduced robustness in highly non-stationary environments. Despite the increased overall error dispersion, nonlinear recurrent models still maintain a relative advantage in stability and predictive accuracy. This pattern underlines the superior flexibility of deep learning architectures in capturing the rapidly changing inflation dynamics characteristic of the 2023 regime.

Across the three examined periods (2009, 2016, and 2023), the evolution of forecasting performance reflects clear links between macroeconomic stability and model accuracy. During the 2009 crisis period, models exhibited higher NMAE dispersion, indicating instability in capturing the rapidly changing inflation dynamics. The 2016 control period showed a noticeable improvement, with all recurrent neural network variants producing more compact error distributions and lower median values, reflecting greater predictability in a stable environment. By contrast, in 2023 forecasting accuracy deteriorated again, and the variance of errors widened significantly. Among the tested models, LSTM and GRU architectures with nonlinear activation functions (particularly tanh and ELU) consistently achieved the most stable results across all regimes. Traditional machine learning methods, especially SVR and RFR, tended to produce larger errors and greater variability, confirming their lower adaptability to nonlinear and volatile inflation patterns. The dispersion in the boxplots across models and categories serves as an empirical proxy for forecast uncertainty, reflecting variation in model-specific error distributions. This dispersion illustrates how uncertainty increases under volatile regimes and narrows substantially in periods of macroeconomic stability. Outlier observations were primarily linked to “Miscellaneous goods and services” and, to a lesser extent, “Health”. The concentration of extreme values in these categories, especially across LSTM variants, implies that model performance was more sensitive to the changing dynamics of these sectors in the post-pandemic period. Overall, the results underline that deep learning approaches are not only superior under stable conditions but also more resilient to economic shocks, capturing complex dependencies that traditional algorithms fail to model effectively.

Table 9 presents the normalized mean absolute error (NMAE) of the HICP inflation forecasts produced by the different machine learning models for the three years under study: 2009, 2016, and 2023. For 2009, the errors are relatively high, especially for the SVR (0.3987) and RFR (0.3309) models, while the lowest error is obtained with the RNN with linear activation (0.0530). This suggests that the more complex models did not necessarily perform better in the middle of the crisis. By 2016, forecast errors had decreased significantly, with LSTM (ELU) achieving the lowest error (0.0287) and the average error across all models falling to 0.0381. In 2023, NMAE values increased again, especially for SVR (0.2476) and RFR (0.3076), which can be attributed to the post-pandemic, geopolitically uncertain environment. Here again, an LSTM performed best, this time with tanh activation (0.0493), proving more robust in the changed environment. The year-to-year changes reflect the interaction between the volatility of the inflation environment and the adaptability of the models. Traditional machine learning algorithms such as SVR and RFR consistently performed the poorest, confirming the advantage of recurrent neural networks in forecasting economic time series. Interestingly, in 2016 almost all models achieved exceptionally low errors, indicating stable inflation trends that the models learned well. Overall, the results suggest that both the activation function and the economic context of the period have a significant impact on forecasting performance. Simpler, linear RNN-based approaches offer a reliable and robust alternative in turbulent periods.

To ensure comparability with traditional statistical approaches, two benchmark models, a Seasonal Autoregressive Integrated Moving Average model (SARIMA, with automatically selected orders and drift) and Exponential Smoothing (ETS), were also implemented. Both models were estimated using the same 12-month rolling window framework as the machine learning and deep learning approaches, ensuring temporal consistency and preventing information leakage. In addition to the inflation subcomponents, the HICP was also forecast for each examined period, with all models re-estimated sequentially to capture potential structural changes in the data. The evaluation metrics, including MAE and NMAE, are reported in Appendix A Table A5. The results show that neither baseline statistical model outperformed the best-performing machine learning model in any regime or inflation category. This finding underscores the added value of advanced data-driven methods in capturing nonlinear and time-varying inflation dynamics that traditional linear frameworks often fail to represent adequately. Overall, the SARIMA and ETS models serve as meaningful benchmarks, confirming the robustness and relative advantage of the applied machine and deep learning architectures.
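The sequential re-estimation used for the benchmarks can be sketched as a rolling-origin loop in which a forecaster is re-fitted at every origin using only earlier observations. Simple exponential smoothing (level only, α chosen by in-sample one-step squared error) stands in here for the SARIMA and ETS models, which would normally come from a statistics library; it is a deliberately simplified substitute, not the study's benchmark. How the "12-month rolling window" maps onto the `window` argument is an assumption, and all names are hypothetical.

```python
import numpy as np

ALPHA_GRID = np.linspace(0.05, 0.95, 19)  # hypothetical search grid for alpha

def ses_forecast(history, alphas=ALPHA_GRID):
    """Simple exponential smoothing: pick alpha by in-sample one-step SSE, forecast one step."""
    history = np.asarray(history, dtype=float)
    best_sse, best_level = np.inf, history[-1]
    for a in alphas:
        level, sse = history[0], 0.0
        for y in history[1:]:
            sse += (y - level) ** 2          # one-step error made before seeing y
            level = a * y + (1 - a) * level  # update the smoothed level
        if sse < best_sse:
            best_sse, best_level = sse, level
    return best_level                        # flat forecast equals the final level

def rolling_origin(series, n_test, forecaster, window=None):
    """Re-fit at every forecast origin; only observations before the origin are visible."""
    series = np.asarray(series, dtype=float)
    preds = []
    for t in range(len(series) - n_test, len(series)):
        start = 0 if window is None else max(0, t - window)  # expanding or fixed window
        preds.append(forecaster(series[start:t]))
    return np.array(preds)

rng = np.random.default_rng(0)
monthly = 2.0 + np.cumsum(rng.normal(scale=0.2, size=120))  # hypothetical inflation path
benchmark = rolling_origin(monthly, n_test=12, forecaster=ses_forecast)
mae = np.mean(np.abs(monthly[-12:] - benchmark))
```

Swapping `ses_forecast` for any other one-step forecaster keeps the evaluation protocol identical, which is the property that makes the benchmark comparison fair.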

Building on the model performance comparisons, we calculated the Forecastability Index (FI) to assess the relative predictability of each inflation subcomponent and of the overall HICP. This index provides a clear perspective on which categories are easier or more challenging to forecast, complementing the insights gained from the benchmark models.

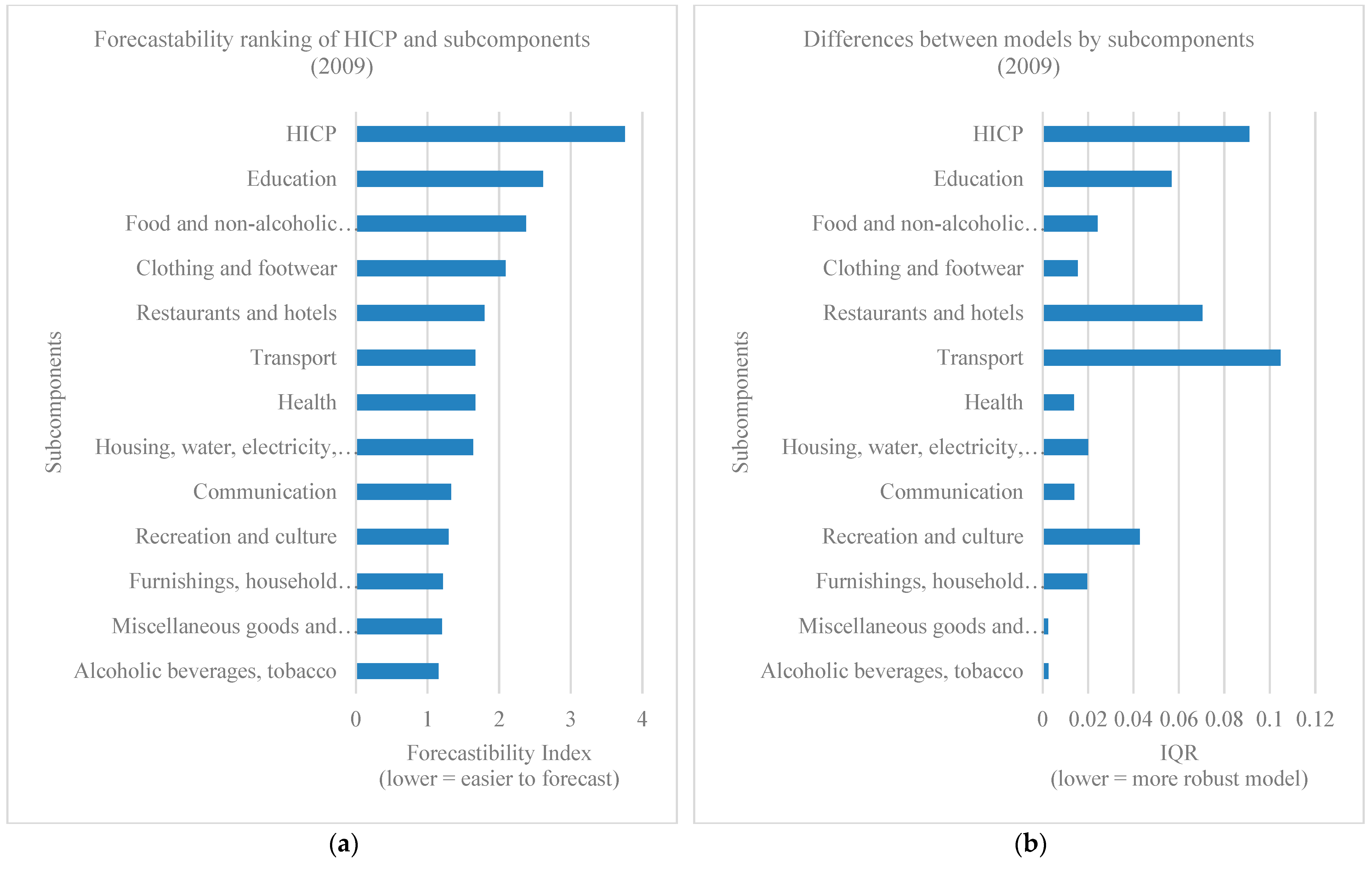

Figure 4 displays the ranking of inflation subcomponents according to their Forecastability Index (FI) and interquartile range (IQR) in 2009. The results show that Alcoholic beverages and tobacco and Miscellaneous goods and services are among the most predictable categories, as indicated by their low FI values. A middle group, including Recreation and culture, Communication, and Housing, water, electricity, gas and other fuels, exhibits moderately higher FI scores, reflecting a greater forecasting challenge. The most difficult components to predict are Clothing and footwear, Food and non-alcoholic beverages, and Education, all of which record FI values well above 2. Finally, the aggregated HICP shows by far the highest FI, suggesting that headline inflation is considerably more difficult to model than its disaggregated subcomponents. The IQR values provide complementary insights into the robustness of forecasting performance. A lower IQR implies that the predictability of a subcomponent is less dependent on the choice of model, while a higher IQR indicates greater variability across models. The findings suggest that categories such as Alcoholic beverages and tobacco and Miscellaneous goods and services are not only easy to forecast but also robust to the choice of model. In contrast, sectors like Transport, Restaurants and hotels, and Education display relatively high IQR values, highlighting that forecasting accuracy for these subcomponents is more sensitive to model specification.
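The two-dimensional ranking described above (a difficulty score plus the cross-model IQR) can be sketched as follows. The FI itself is not defined in this section, so `placeholder_score` (mean NMAE across models divided by the best model's NMAE) is purely a hypothetical stand-in that should be replaced with the paper's own definition. The IQR, by contrast, is computed directly as described: the spread of NMAE values across models within each category.

```python
import numpy as np

def placeholder_score(scores):
    """HYPOTHETICAL stand-in for the FI: mean NMAE across models over the best NMAE."""
    return scores.mean() / scores.min()

def rank_categories(nmae_by_category, score=placeholder_score):
    """Rank categories from easiest to hardest by score and report the cross-model IQR."""
    out = []
    for cat, vals in nmae_by_category.items():
        s = np.asarray(vals, dtype=float)
        q1, q3 = np.percentile(s, [25, 75])
        out.append((cat, score(s), q3 - q1))  # low IQR: result robust to model choice
    return sorted(out, key=lambda r: r[1])

toy = {  # hypothetical NMAE values across models, not taken from the study
    "Category A": [0.04, 0.05, 0.05, 0.06],
    "Category B": [0.03, 0.09, 0.15, 0.18],
}
for cat, fi, iqr in rank_categories(toy):
    print(f"{cat}: score={fi:.2f} IQR={iqr:.3f}")
```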

Figure 5 displays the ranking of inflation subcomponents according to their Forecastability Index (FI) and interquartile range (IQR) in 2016. The analysis reveals that Food and non-alcoholic beverages is the most predictable category, with the lowest FI value, followed by the aggregated HICP and Transport, which also fall within the relatively easier-to-forecast group. A mid-range of predictability is observed for Clothing and footwear, Housing, water, electricity, gas and other fuels, and Miscellaneous goods and services, each with FI values between 1.4 and 1.5. Higher forecasting difficulty is associated with categories such as Restaurants and hotels, Alcoholic beverages and tobacco, Furnishings, household equipment and routine house maintenance, Health, and Education, where FI values approach or exceed 1.6–1.7. The most challenging components to predict in this period are Recreation and culture and Communication, both with FI values well above 2, indicating significant forecasting complexity. The IQR results provide additional insight into the robustness of predictions across models. Categories such as Food and non-alcoholic beverages, HICP, and Transport show very low IQR values, suggesting that their forecastability is stable and largely independent of model choice. In contrast, Recreation and culture has by far the highest IQR, underlining considerable dispersion in model performance and a lack of robustness. Moderate IQR values are observed for Clothing and footwear, Miscellaneous goods and services, and Alcoholic beverages and tobacco, pointing to greater sensitivity to model specification. Overall, the IQR analysis highlights that while some subcomponents are consistently predictable across models, others, especially Recreation and culture and Communication, remain both difficult and unstable to forecast.

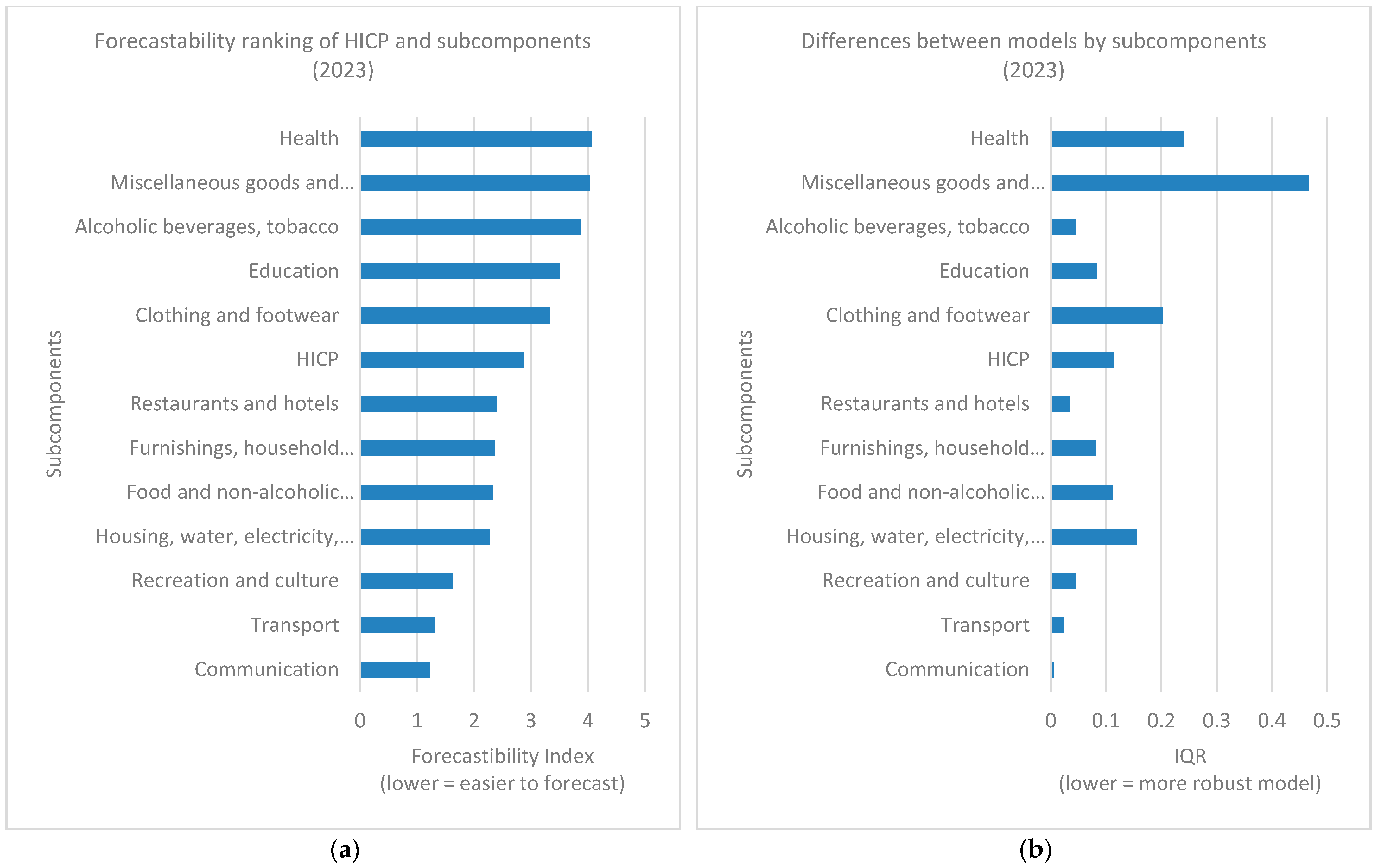

Figure 6 displays the ranking of inflation subcomponents according to their Forecastability Index (FI) and interquartile range (IQR) in 2023. The results indicate that Communication is the most predictable category in this sample, with the lowest FI value, followed by Transport and, to a lesser extent, Recreation and culture. These components can therefore be considered relatively easier to forecast. In contrast, a large group of subcomponents (including Housing, water, electricity, gas and other fuels, Food and non-alcoholic beverages, Furnishings, household equipment and routine house maintenance, and Restaurants and hotels) record substantially higher FI values, ranging between 2.2 and 2.4. The aggregated HICP lies somewhat above this cluster, with an FI close to 2.9. The most difficult categories to forecast are found at the upper end of the ranking: Clothing and footwear, Education, Alcoholic beverages and tobacco, Miscellaneous goods and services, and Health, all of which exceed an FI of 3.0. Notably, Health and Miscellaneous goods and services stand out as particularly challenging, with FI values around 4.0, underscoring the considerable complexity of forecasting these components. The IQR results complement the FI analysis by capturing the robustness of forecasts across models. Communication again demonstrates high stability, with the lowest IQR, followed by Transport and Recreation and culture, where the dispersion of model errors is limited. Conversely, several subcomponents with high FI values also exhibit very large IQRs, indicating not only forecasting difficulty but also strong sensitivity to model choice. In particular, Miscellaneous goods and services and Health show extremely high IQRs (0.47 and 0.24, respectively), highlighting substantial heterogeneity across models.
Other categories, such as Clothing and footwear, Food and non-alcoholic beverages, and Housing, water, electricity, gas, and other fuels, also record relatively large IQR values, reinforcing the notion that these subcomponents are both hard to predict and unstable across different model specifications.

Table 9 presents the NMAE values for the overall HICP, while Tables 4, 6 and 8 report the same error metric for the HICP subcategories in 2009, 2016, and 2023. In 2009, the subcategories generally show much lower forecast errors than the aggregate HICP, whose NMAE was 0.1989. Most subcategories’ errors range between 0.03 and 0.14, with only a few exceptions such as "Restaurants and hotels" and "Transport," which have somewhat higher errors (~0.14–0.15). This contrast indicates that the aggregate inflation measure (HICP) was harder to predict than its individual components, possibly due to the combined volatility and complexity of the overall index. By 2016, both the aggregate HICP and the subcategories showed a marked improvement in forecast accuracy. The average NMAE for the subcategories dropped to roughly 0.06, while the overall HICP error was even lower at 0.0381, reflecting a more stable inflation environment. The forecasting models performed consistently well across most subcategories, except for "Recreation and culture," which exhibited notably higher errors (~0.22). This suggests that under stable economic conditions, models capture both aggregate and disaggregated inflation dynamics better, although some categories remain more challenging. The 2023 results show a substantial increase in errors for both the HICP and its subcategories. The average NMAE for the subcategories rose to about 0.22, while the aggregate HICP error reached 0.1421. Some categories, such as "Miscellaneous goods and services" and "Health," had particularly high errors, with averages well above 0.3, indicating increased volatility or model difficulties possibly linked to post-pandemic and geopolitical uncertainties. Nevertheless, other subcategories such as "Communication" and "Transport" maintained relatively low errors (around 0.07–0.11), demonstrating some robustness in prediction.
Overall, the results highlight that while individual components may be easier to forecast under normal conditions, in turbulent periods both aggregate and disaggregated inflation indicators face heightened prediction challenges.
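
The comparison between the headline index and the average of its subcategories can be sketched as follows. This is a minimal illustration under two stated assumptions: NMAE is taken here as the mean absolute error divided by the mean absolute actual value (the paper may normalise differently, for example by the range of the series), and the subcategory average is an unweighted mean rather than one using HICP weights. The helper names `nmae` and `average_nmae` are hypothetical, and all numbers are made up.

```python
import numpy as np


def nmae(actual, forecast) -> float:
    """Mean absolute error divided by the mean absolute actual value.

    Assumed normalisation for illustration only; the study's own NMAE
    definition may scale the MAE differently.
    """
    actual = np.asarray(actual, dtype=float)
    forecast = np.asarray(forecast, dtype=float)
    mae = np.mean(np.abs(actual - forecast))
    # abs() keeps the scale positive even if some observations are negative
    scale = np.mean(np.abs(actual))
    if scale == 0:
        raise ValueError("actual series is all zeros; NMAE is undefined")
    return float(mae / scale)


def average_nmae(errors_by_category: dict[str, float]) -> float:
    """Unweighted mean NMAE across subcategories (assumed aggregation)."""
    return float(np.mean(list(errors_by_category.values())))


# Illustrative (made-up) numbers, not the study's data.
headline = nmae([2.0, 2.5, 3.0, 2.5], [2.1, 2.4, 2.7, 2.6])
subcategories = {"Category A": 0.04, "Category B": 0.10}
avg_sub = average_nmae(subcategories)
print(f"headline NMAE = {headline:.3f}, mean subcategory NMAE = {avg_sub:.3f}")
```

Because each series is scaled by its own magnitude, the normalised errors of components with very different inflation levels can be compared directly with the headline figure.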

6. Conclusions

This paper has investigated the forecastability of the HICP and its 12 subcomponents using a wide range of machine learning and deep learning models over three distinct macroeconomic periods (2009, 2016 and 2023). By comparing forecasting performance across both models and economic regimes, we aimed to provide a comprehensive understanding of the heterogeneity of inflation dynamics in the euro area. Our results confirm that advanced deep learning architectures, particularly RNN (linear) and LSTM (tanh), consistently achieved lower forecast errors than traditional machine learning techniques such as SVR and RFR. This suggests that models based on recurrent neural networks are better equipped to capture the nonlinearities and temporal dependencies that characterise inflation processes.

A further contribution of this study is the introduction of the Forecastability Index (FI), used together with the interquartile range (IQR) of forecast errors, as complementary measures of predictive performance. The FI allowed us to systematically rank the predictability of inflation categories, while the IQR provided insights into the robustness of the results across models. Together, these indicators revealed clear differences among inflation components. Categories such as Health and Education emerged as the most predictable, with low forecast errors and limited model sensitivity, suggesting that their inflation dynamics are more stable and easier to capture. Conversely, components such as Recreation and culture, Restaurants and hotels and, in some cases, Clothing and footwear proved particularly difficult to forecast, displaying both high FI values and wide inter-model variation. These results underscore the importance of moving beyond the aggregate HICP in order to better understand the sectoral sources of inflationary volatility.

Our temporal analysis highlights that the broader macroeconomic environment plays a decisive role in forecasting accuracy. Following the 2009 financial crisis, inflation was highly volatile, and forecasting errors increased substantially across all models. The 2016 period of stability represented the most favourable forecasting environment, with consistently lower errors and reduced heterogeneity across methods. Finally, the 2023 inflation shock, driven by the COVID-19 pandemic and the Russia–Ukraine conflict, once again exposed the limitations of conventional models under extreme uncertainty. In these periods of instability, simple recurrent neural networks and gated recurrent architectures such as LSTM and GRU demonstrated superior adaptability, while classical regression-based models such as SVR and RFR recorded significantly higher errors. These findings suggest that deep learning approaches not only perform better overall but are also particularly valuable in volatile environments dominated by nonlinear dynamics and structural breaks.

For central banks operating under inflation-targeting regimes, the inclusion of advanced machine learning and deep learning models can improve the timeliness and accuracy of inflation forecasts, especially when traditional models struggle to capture rapidly changing dynamics. This is particularly relevant in periods of crisis, when the credibility of monetary policy depends on responding quickly to shifting inflation expectations. For governments, the disaggregated analysis of inflation is equally important, as it reveals which components can be forecast reliably and where forecasting remains fragile. This information can guide targeted interventions, such as energy price support schemes, subsidies or temporary price caps, that aim to reduce the social burden of inflation without distorting the entire price system.

The study also has implications for financial institutions and firms. Accurate inflation forecasts affect the pricing of loans, bonds, and other financial instruments, as well as business investment and wage-setting decisions. Companies in inflation-sensitive sectors such as food, energy, housing, and real estate can benefit directly from more reliable forecasts by improving cost planning, supply chain management, and pricing strategies. By identifying which inflation components are systematically harder to predict, firms and investors can also better manage risk exposure in volatile markets.

In conclusion, this study demonstrates that a dual approach, combining advanced deep learning methods with the FI and IQR evaluation measures, provides richer insights into both the accuracy and the robustness of inflation forecasts. By explicitly focusing on subcomponents in addition to the headline HICP, we offer a more granular perspective on the sources of inflationary pressure. Our findings confirm that macroeconomic stability improves forecastability across the board, while periods of crisis and uncertainty amplify errors and model dispersion. Overall, this research not only strengthens the methodological toolkit for forecasting inflation but also contributes to policy debates by identifying which sectors can be forecast with greater confidence and where persistent challenges remain. Future research should continue to explore model innovations and extend the analysis to other economies, thereby improving the resilience of inflation forecasting frameworks in an increasingly uncertain global environment.

Our study also paves the way for comparisons with forecasts from models whose predictions are built on the effects of various economic variables. Such comparisons could stimulate debate on the relative accuracy of these different modelling approaches.