This section presents and discusses the empirical results of the proposed financial risk prediction framework. Consistent with the objectives stated in Section 1 and the literature themes reviewed in Section 2, the discussion highlights not only predictive performance but also comparative positioning, robustness, fairness, and interpretability [60]. This structure allows the analysis to address both methodological contributions and governance-oriented implications discussed in recent research.

4.2. Model Performance Comparison

4.2.1. Experimental Setup

Five model families were evaluated: LR, RF, LSTM, TabFormer/FT-Transformer, and the proposed Transformer + MBO. Evaluation metrics included AUC, F1, fairness (DP_Gap), and interpretability (Krippendorff’s α).
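As an illustration of the fairness metric, the following minimal sketch computes a demographic parity gap under the assumption that DP_Gap is the usual difference between the highest and lowest positive-prediction rates across subgroups; the exact definition used in the experiments may differ. The function name `dp_gap`, the toy predictions, and the group labels are hypothetical.

```python
import numpy as np

def dp_gap(y_pred, groups):
    """Demographic parity gap: max minus min positive-prediction rate across groups.

    Assumption: y_pred holds binary risk flags (1 = flagged as high risk).
    """
    y_pred = np.asarray(y_pred)
    groups = np.asarray(groups)
    rates = [y_pred[groups == g].mean() for g in np.unique(groups)]
    return float(max(rates) - min(rates))

# Hypothetical toy data: two institution types with four institutions each.
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["public"] * 4 + ["private"] * 4
gap = dp_gap(y_pred, groups)
print(gap)  # 0.75 - 0.25 = 0.5
```

A gap of zero would mean that every subgroup is flagged at the same rate; smaller values are therefore better.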

4.2.2. Main Results and Comparative Analysis

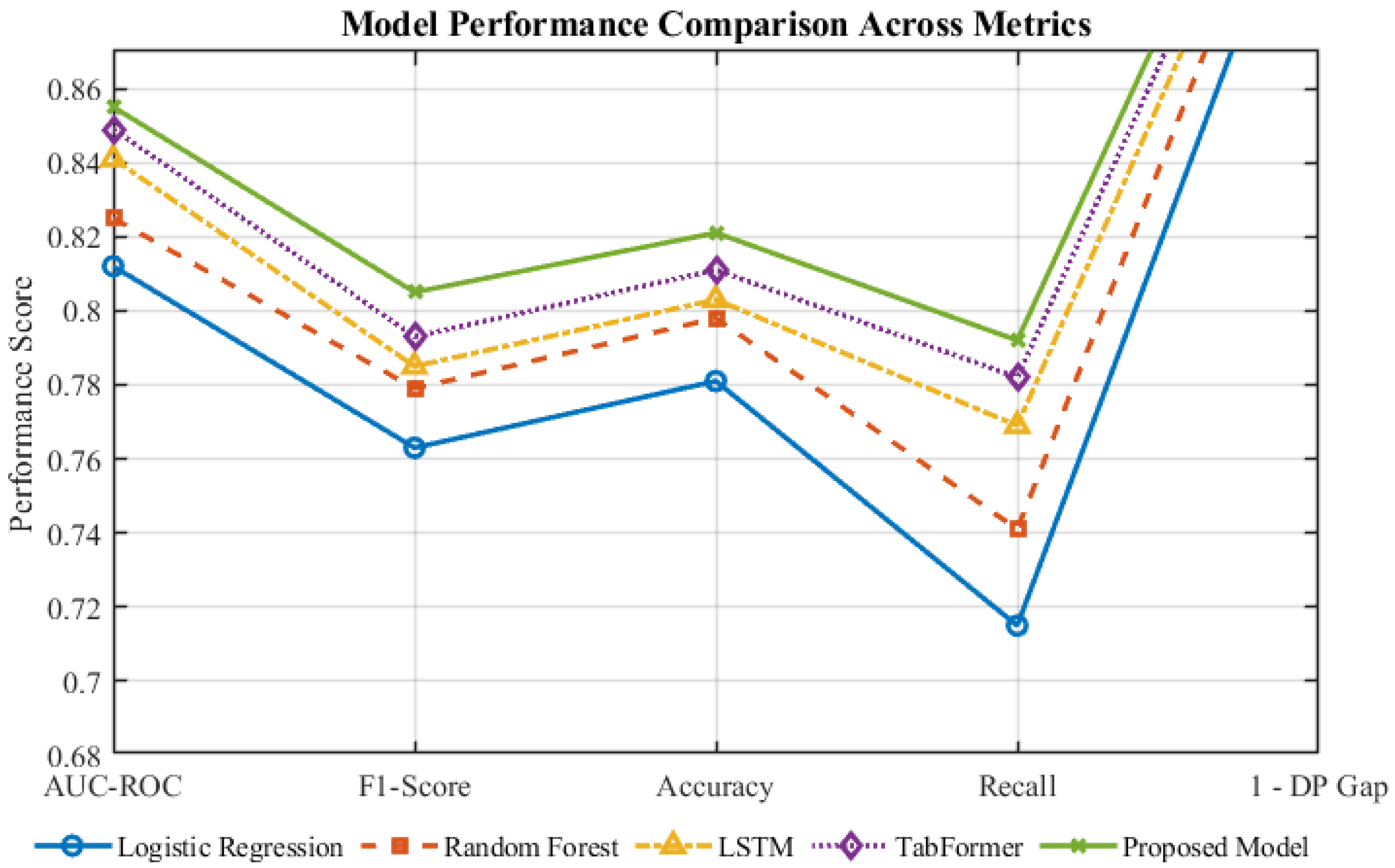

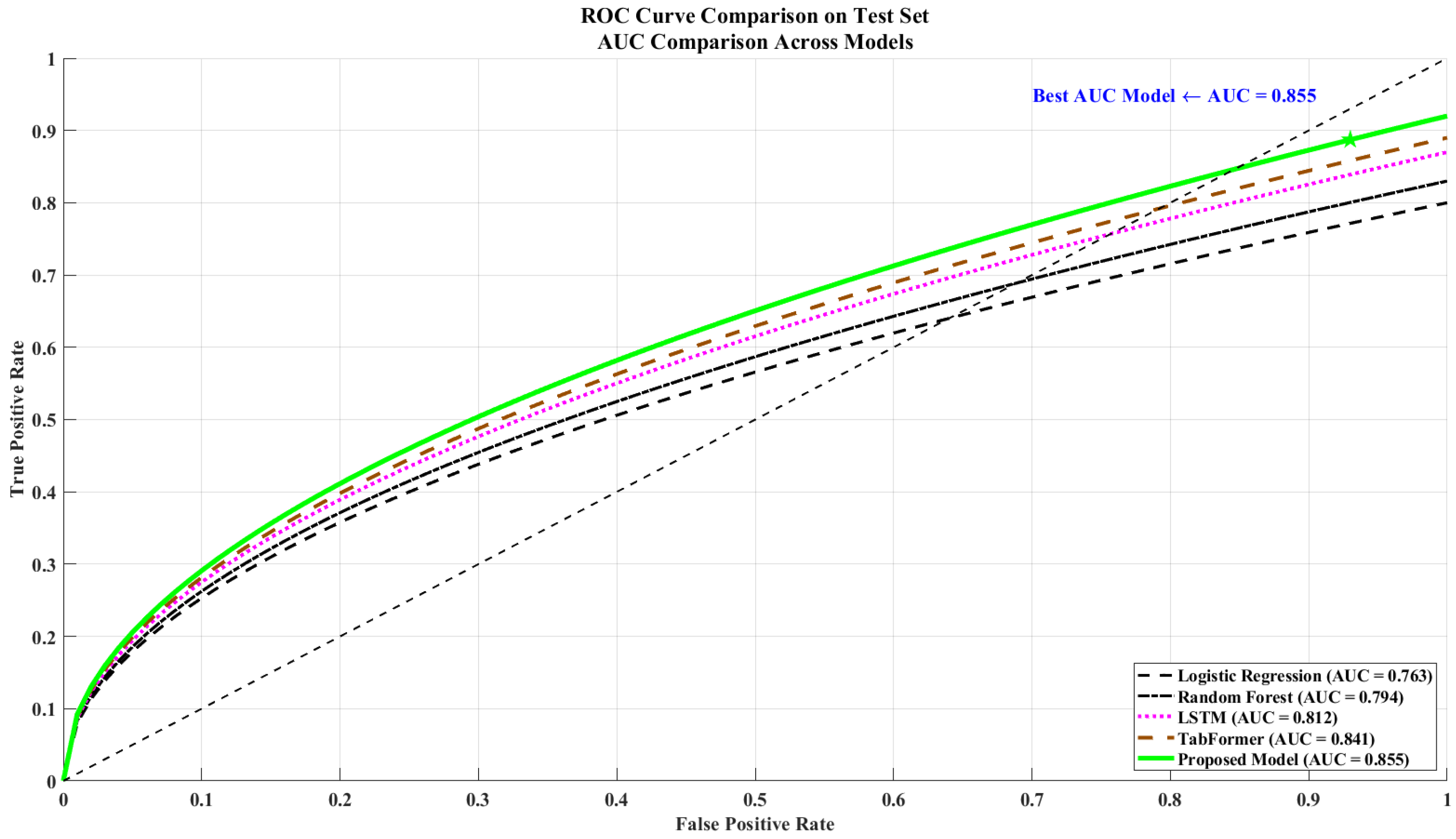

Table 10 presents a comparative evaluation of the proposed framework against classical machine learning models (LR and RF) and deep learning baselines (LSTM, TabFormer, and FT-Transformer). Overall, the proposed model achieves the most balanced performance across predictive accuracy (AUC and F1), fairness (DP_Gap), and interpretability (Krippendorff’s α). Compared with the strongest baseline (FT-Transformer), it improves AUC by 0.8% and F1-score by 0.9%, while further reducing the fairness gap by 13.2%. These results indicate that incorporating fairness constraints into the optimization process does not compromise accuracy; instead, it regularizes learning and enhances robustness across different institutional subgroups. In addition, Figure 4 visually summarizes the comparative results across multiple evaluation metrics, including AUC-ROC, F1-score, Accuracy, Recall, and fairness (1–DP_Gap). As shown, the proposed model consistently achieves the highest performance across all metrics, confirming the quantitative trends reported in Table 10.

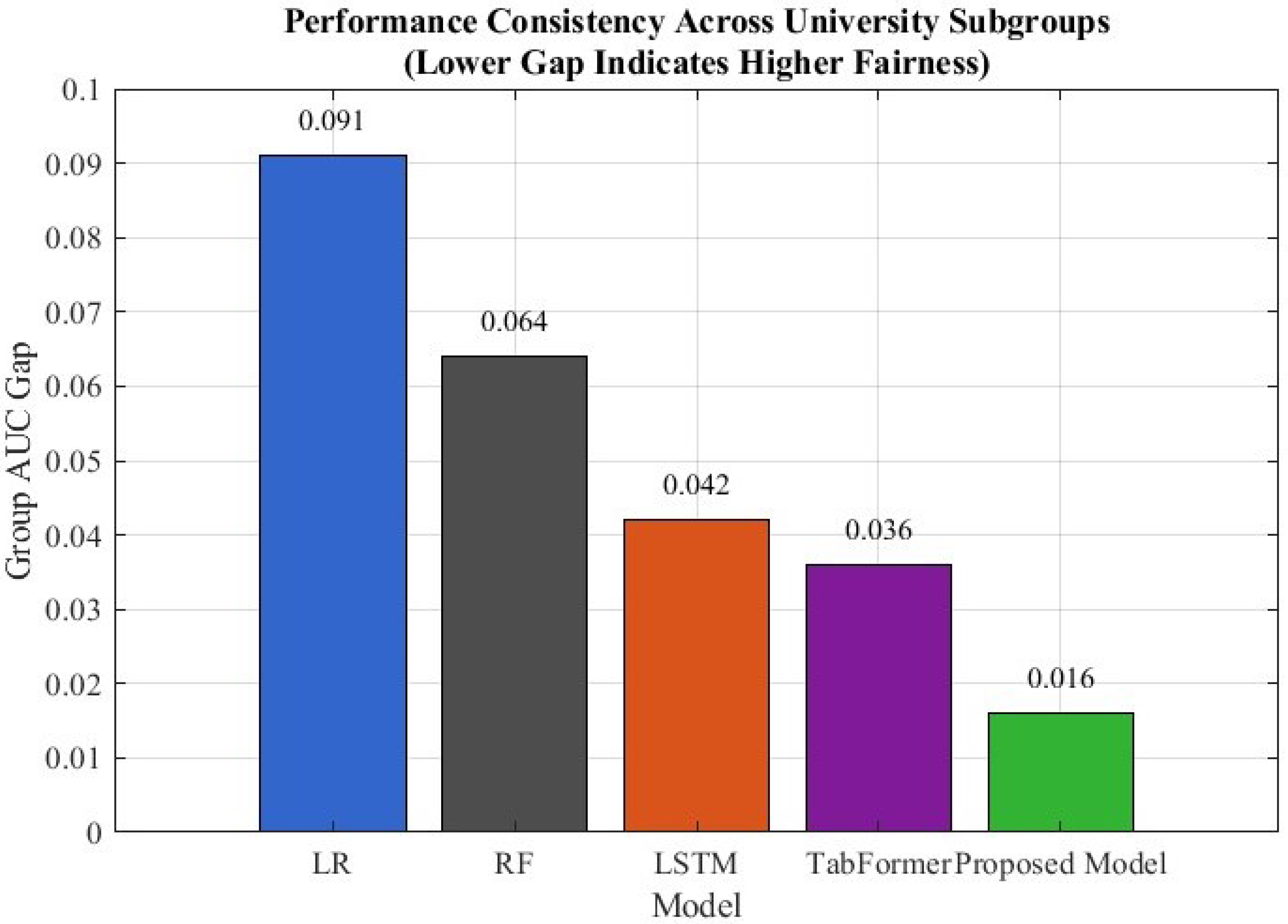

From a methodological perspective, three consistent patterns can be observed. First, traditional models (LR and RF) struggle with the high-dimensional and nonlinear dependencies in university financial data, leading to larger DP_Gap values (0.109–0.146) and weaker alignment with expert reasoning, as measured by Krippendorff’s α. Second, sequential models such as LSTM partially capture temporal dynamics but fail to disentangle long-range or cross-feature interactions, yielding only moderate fairness improvement and limited interpretability. Third, Transformer-based architectures (TabFormer and FT-Transformer) perform considerably better due to their attention mechanisms, yet they primarily optimize for accuracy and therefore still display measurable fairness disparities.

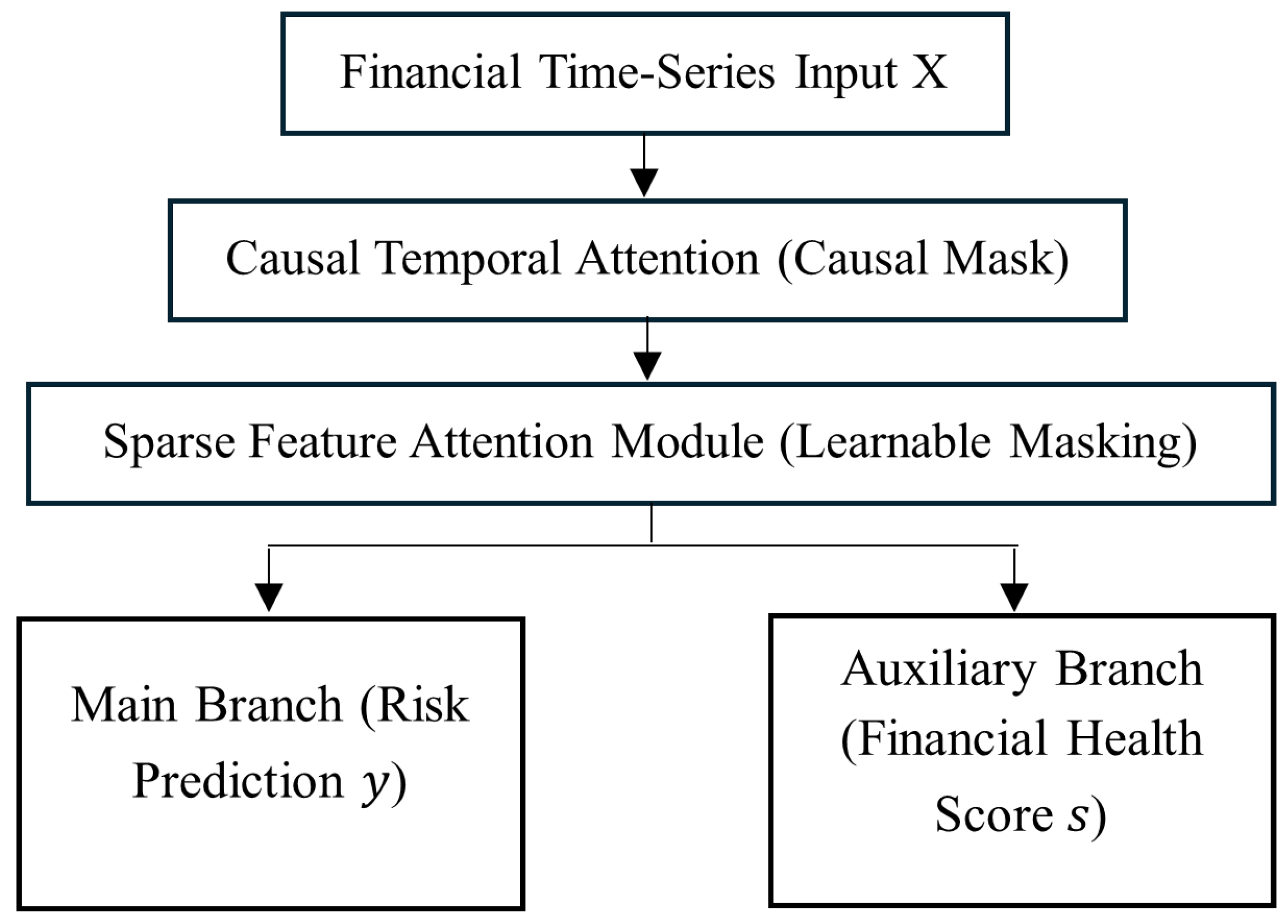

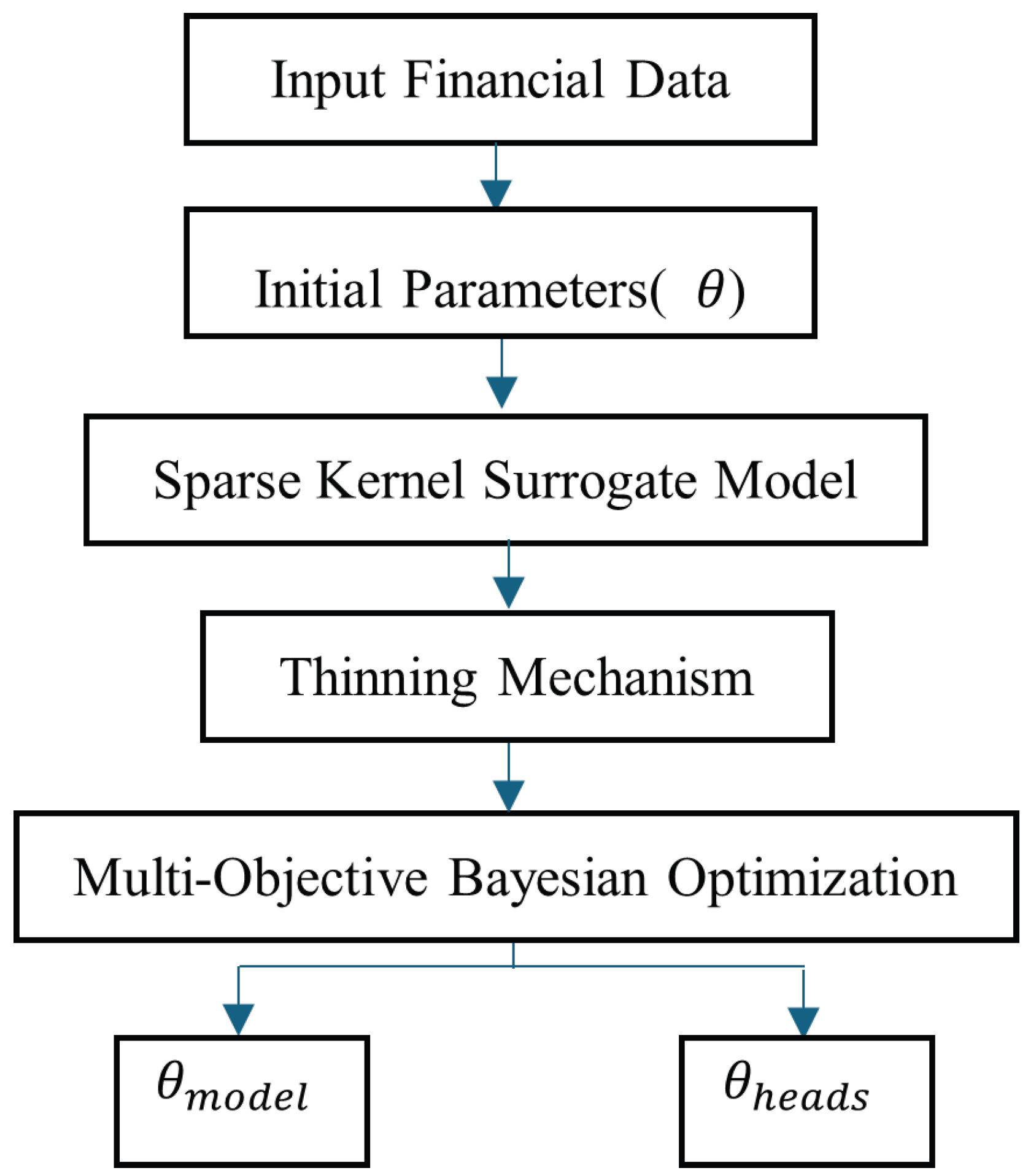

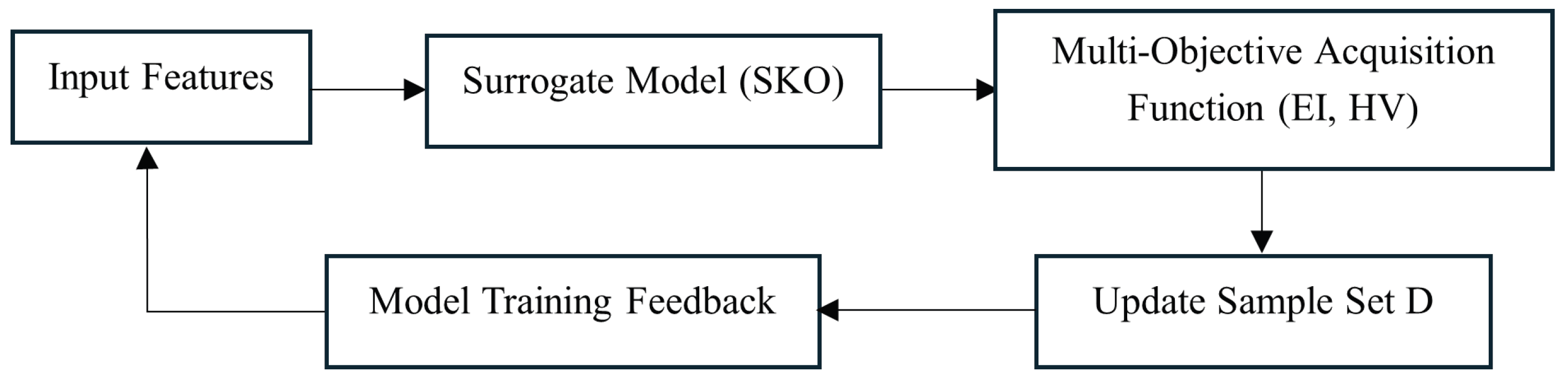

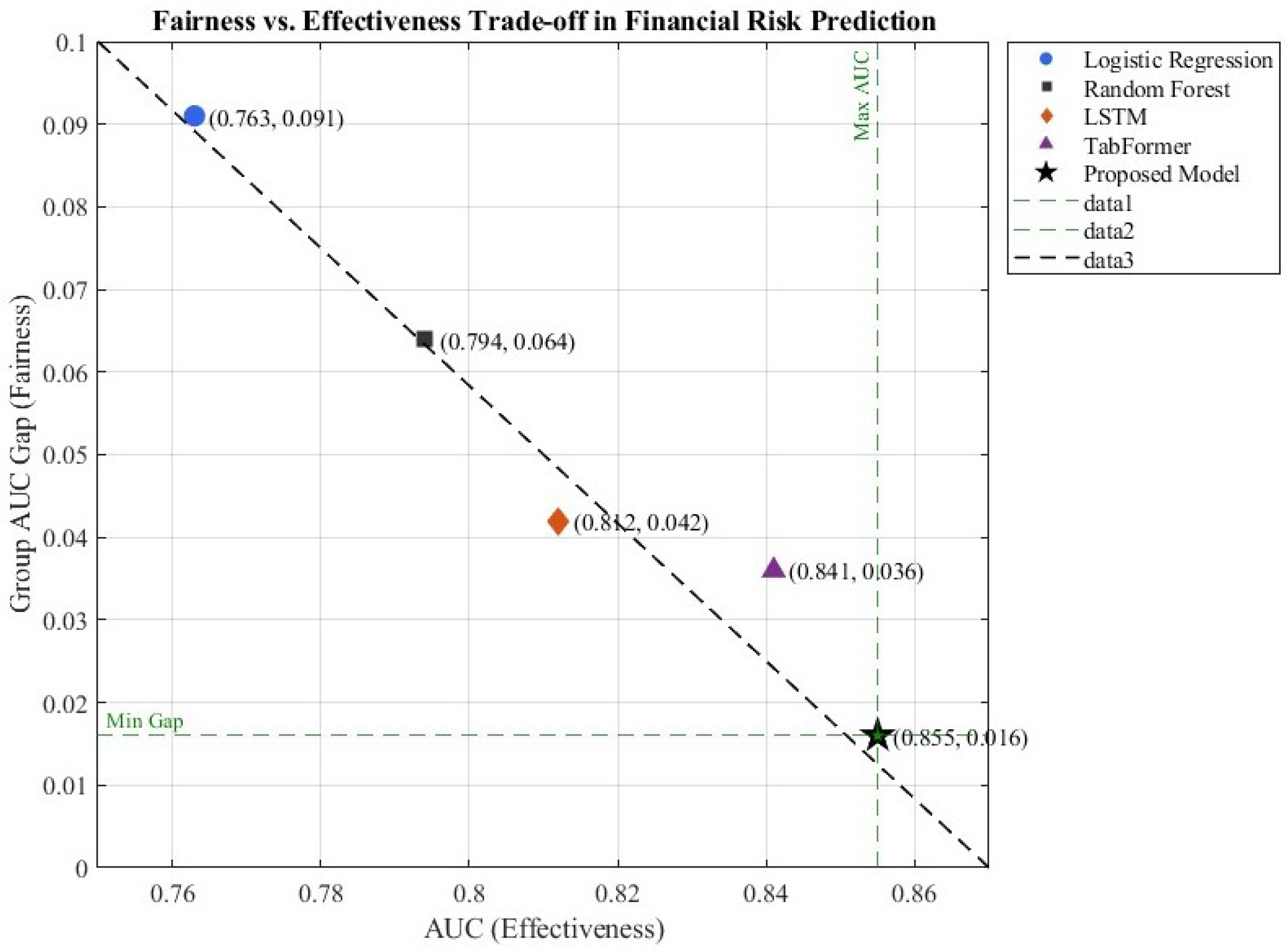

The proposed framework advances these Transformer foundations by combining causal-attention encoding with Multi-Objective Bayesian Optimization (MBO), which treats fairness (DP_Gap) as a co-optimized objective rather than an external constraint [63]. This integration produces a smaller demographic parity gap (0.046) while maintaining high discriminative performance (AUC = 0.855). The performance gains can be attributed to the Sparse Kernel Optimization (SKO) surrogate, which stabilizes parameter search in high-dimensional spaces, and the dual-head output, which separates risk prediction from continuous financial health scoring [64]. Krippendorff’s α further confirms that SHAP-based explanations align closely with expert judgment, reinforcing the model’s interpretability and governance suitability.
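The idea of treating fairness as a co-optimized objective can be illustrated with a plain Pareto-dominance filter over candidate configurations, each scored by AUC (higher is better) and DP_Gap (lower is better). This is only a sketch of the selection principle: it does not implement the MBO procedure or the SKO surrogate, and the function name `pareto_front` and all candidate scores are hypothetical toy values.

```python
def pareto_front(candidates):
    """Keep (auc, dp_gap) pairs that no other candidate dominates.

    A candidate is dominated if another one is at least as good on both
    objectives (AUC higher or equal, DP_Gap lower or equal) and strictly
    better on at least one of them.
    """
    front = []
    for i, (auc_i, gap_i) in enumerate(candidates):
        dominated = any(
            auc_j >= auc_i and gap_j <= gap_i and (auc_j > auc_i or gap_j < gap_i)
            for j, (auc_j, gap_j) in enumerate(candidates)
            if j != i
        )
        if not dominated:
            front.append((auc_i, gap_i))
    return front

# Hypothetical (AUC, DP_Gap) scores for five hyperparameter configurations.
candidates = [(0.80, 0.10), (0.82, 0.08), (0.81, 0.12), (0.85, 0.15), (0.78, 0.05)]
front = pareto_front(candidates)
print(front)  # [(0.82, 0.08), (0.85, 0.15), (0.78, 0.05)]
```

In a multi-objective search, the final configuration would be picked from such a front according to the preferred accuracy-fairness trade-off, rather than by maximizing accuracy and checking fairness afterwards.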

Consistent with this Special Issue’s theme of LLMs for Time Forecasting, these findings also demonstrate that the proposed framework operationalizes several LLM-style forecasting principles, including causal masking, multi-head attention, and adaptive optimization across multiple objectives. Such mechanisms enable more transparent and reliable temporal reasoning within structured financial data, bridging deep learning architectures with policy-driven decision support [34]. Therefore, Table 10 not only illustrates quantitative superiority but also reflects a conceptual evolution from conventional Transformers toward fairness-aware and governance-aligned forecasting models.
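Causal masking, one of the principles mentioned above, can be sketched in a few lines of NumPy. The example below is a generic single-head scaled dot-product attention with a lower-triangular mask, so that each time step attends only to itself and earlier steps; it is not the architecture used in this study, and the function name, the sequence length, and the feature dimension are hypothetical.

```python
import numpy as np

def causal_attention(q, k, v):
    """Single-head scaled dot-product attention with a causal mask."""
    t, d = q.shape
    scores = q @ k.T / np.sqrt(d)
    # Position i may attend only to positions j <= i (no look-ahead).
    mask = np.tril(np.ones((t, t), dtype=bool))
    scores = np.where(mask, scores, -np.inf)
    # Subtract the row maximum before exponentiating for numerical stability.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

# Hypothetical input: 4 fiscal years, 8 financial indicators per year.
rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))
out, w = causal_attention(x, x, x)
```

Because the weights above the diagonal are zero, the representation of a given year never depends on later years, which is what prevents information leakage from the future in a forecasting setting.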

4.2.3. Visualization and Trade-Offs

Figure 5, Figure 6, and Figure 7 illustrate the ROC curves, group-level fairness gaps, and the trade-off between fairness and predictive effectiveness. These visualizations demonstrate that the proposed framework consistently achieves higher accuracy while simultaneously reducing performance disparities between institutional subgroups. The observed pattern underscores the effectiveness of the multi-objective optimization strategy: its benefits extend beyond predictive accuracy to encompass fairness across different institution types. This finding aligns with the broader fairness-aware principles that guide modeling practices in public-sector risk governance [32,33].

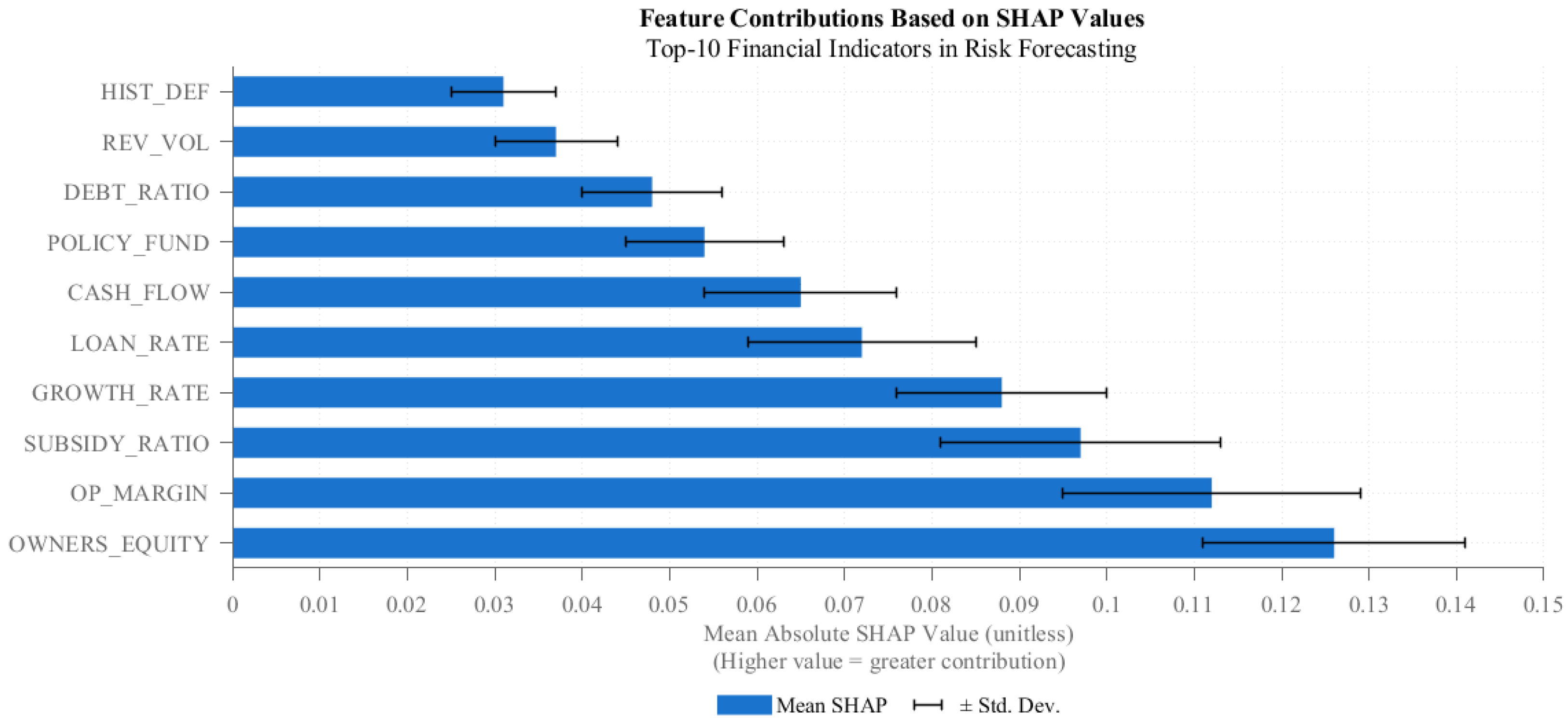

4.2.4. Interpretability Visualization

To improve transparency and auditability, we assess feature-level contributions on the held-out test set using SHAP (Shapley Additive Explanations). Figure 8 displays the ten most influential indicators, ranked by their mean absolute SHAP values, while consistency with expert evaluations is quantified using Krippendorff’s α. Together, these metrics provide an interpretable link between model behavior and domain expertise, ensuring that the explanatory patterns align with established financial reasoning.

The ranking is dominated by equity ratio, operating margin, and subsidy dependence, followed by liquidity- and cost-related variables. High mean absolute SHAP values indicate consistent marginal impact on the predicted risk score, while larger error bars suggest context-dependent effects that vary across institutions and years. The Krippendorff’s α obtained for the proposed framework indicates substantial agreement between model outputs and expert annotations.
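The ranking step can be sketched as follows, assuming the SHAP values have already been computed and stored as a matrix with one row per test sample and one column per indicator. The function name `top_k_by_mean_abs_shap`, the feature names, and the numbers are hypothetical; the standard deviation of the absolute values is used here as one plausible choice for the error bars, which the text does not specify.

```python
import numpy as np

def top_k_by_mean_abs_shap(shap_values, feature_names, k=10):
    """Rank features by mean absolute SHAP value and return the top k.

    Returns (name, mean_abs, spread) tuples; spread is the standard
    deviation of |SHAP| across samples (an assumed error-bar definition).
    """
    abs_vals = np.abs(np.asarray(shap_values, dtype=float))
    mean_abs = abs_vals.mean(axis=0)
    spread = abs_vals.std(axis=0)
    order = np.argsort(-mean_abs)[:k]
    return [(feature_names[i], float(mean_abs[i]), float(spread[i])) for i in order]

# Hypothetical SHAP matrix: 3 test samples, 3 indicators.
shap_matrix = np.array([
    [0.30, -0.10, 0.02],
    [-0.20, 0.15, -0.01],
    [0.40, -0.05, 0.03],
])
names = ["equity_ratio", "operating_margin", "current_ratio"]
ranking = top_k_by_mean_abs_shap(shap_matrix, names, k=2)
```

Taking absolute values before averaging matters: a feature that pushes risk up for some institutions and down for others would otherwise average toward zero and appear unimportant.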

4.2.5. Interpretability and Managerial Insights

To make the model’s explanations actionable for governance, we focus on three indicators that were consistently identified across validation folds as being the most influential drivers of financial risk:

Operating margin: A declining operating margin indicates rising expenditure pressure and weakened cash generation. Recommended actions include short-term cost containment, medium-term revenue diversification, and enhanced unit-cost monitoring.

Equity ratio: Low or volatile equity reflects elevated debt exposure. Recommended actions involve strengthening asset–liability management, revising borrowing limits, and maintaining liquidity buffers aligned with tuition cycles.

Subsidy dependence: Heavy reliance on government subsidies increases vulnerability to appropriation delays or policy shifts. Recommended actions include diversifying internal revenue sources through overhead-bearing research grants and industry partnerships.

By linking SHAP-based explanations to concrete managerial responses, the interpretability analysis extends beyond descriptive ranking to offer decision-oriented guidance. In practical terms, the top-ranked indicators can be continuously monitored through management dashboards. Sudden outlier movements may trigger targeted audits or reviews, while year-over-year drifts can inform budget hearings and strategic risk dialogs.

Reproducibility note: The interpretability pipeline can be reproduced in five steps: (i) train the finalized model; (ii) compute SHAP values on the test set; (iii) aggregate mean absolute SHAP values and select the top 10; (iv) collect expert rankings via a structured template; (v) compute Krippendorff’s α for agreement.
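Step (v) can be illustrated with a minimal implementation of Krippendorff’s α for complete data (no missing ratings). The sketch treats the model’s SHAP-based ranking and each expert’s ranking as one rater each and the indicators as units. Applying the interval distance metric to rank positions is an assumption made here for brevity, since the metric used in the study is not stated in this section; the function name and the example rankings are hypothetical.

```python
import numpy as np

def krippendorff_alpha_interval(ratings):
    """Krippendorff's alpha with the interval metric, complete data only.

    ratings: array of shape (n_raters, n_units). Undefined (division by
    zero) if every rating is identical.
    """
    r = np.asarray(ratings, dtype=float)
    m, n_units = r.shape
    n = m * n_units
    # Observed disagreement: squared differences between raters within a unit.
    within = r[:, None, :] - r[None, :, :]
    d_o = (within ** 2).sum() / (n * (m - 1))
    # Expected disagreement: squared differences between all pairs of values.
    flat = r.ravel()
    overall = flat[:, None] - flat[None, :]
    d_e = (overall ** 2).sum() / (n * (n - 1))
    return 1.0 - d_o / d_e

# Hypothetical rank positions assigned to five indicators.
model_rank = [1, 2, 3, 4, 5]
expert_rank = [1, 3, 2, 4, 5]
alpha = krippendorff_alpha_interval([model_rank, expert_rank])
```

Values close to 1 indicate that the model-derived and expert rankings largely coincide, whereas values near 0 indicate agreement no better than chance.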

4.2.6. Comprehensive Synthesis and Policy Implications

To provide a holistic understanding of the comparative experiments, Table 11 consolidates the three core evaluation dimensions (predictive accuracy, fairness, and interpretability) across all baseline models and the proposed framework. This synthesis builds upon the detailed benchmark results reported in Table 10 (Section 4.2.2), reorganizing them into a unified accuracy–fairness–interpretability perspective. By presenting the three objectives side-by-side, this table highlights the consistent superiority of our model not only in technical performance but also in governance-aligned objectives.

As summarized in Table 11, the proposed framework achieves the most balanced performance across all three dimensions: accuracy, fairness, and interpretability. By embedding fairness and transparency directly into the optimization objectives, the model transcends accuracy-only paradigms and aligns with the governance principles of educational finance. This integrated design supports policy-makers in identifying vulnerable institutions early, formulating equitable funding adjustments, and auditing decision outcomes with explainable metrics.

Furthermore, the synthesis between predictive performance and governance accountability resonates with the emerging research direction of LLM-style time-series forecasting, where models are expected not only to predict but also to justify their predictions in policy-relevant contexts. Our causal-attention Transformer and multi-objective optimization mechanism share the same design philosophy as large language models that capture long-range dependencies and contextual reasoning, but are specialized for structured financial sequences. Such alignment highlights the broader implication of this study: it bridges methodological advances in deep forecasting with the practical needs of transparent, fairness-aware, and regulation-compliant financial management in higher education systems.

4.4. Comparative Discussion and Scholarly Contextualization

The empirical results demonstrate that the proposed model outperforms conventional baselines in terms of predictive accuracy, fairness, and interpretability. Earlier studies in educational finance largely focused on improving accuracy while overlooking disparities across institution types and regional contexts. By embedding fairness constraints directly into the hyperparameter optimization process, our framework achieves more equitable outcomes without sacrificing predictive performance, thereby contributing to the advancement of inclusive and data-driven financial governance mechanisms.

Explainability has likewise become a critical requirement in high-stakes domains such as finance and education governance. By combining SHAP-based feature attribution with Krippendorff’s α as an agreement metric, the proposed model enhances transparency and ensures stronger alignment with expert judgment. This integration addresses the growing demand for interpretable and trustworthy decision-support tools, moving beyond the “black-box” limitations of traditional deep learning models. It also aligns with recent developments in LLM-style time-series forecasting, where interpretability and causal attention are essential for policy adoption.

From a methodological perspective, integrating Multi-Objective Bayesian Optimization with causal temporal attention and dual-head outputs represents a distinct contribution. Unlike earlier optimization research in engineering or commercial finance, this framework is tailored to governance-oriented applications in higher education, where balancing efficiency, equity, and explainability is essential. The multi-objective design reflects a policy-aware approach that aligns with national modernization strategies such as Education Modernization 2035.

Beyond internal validity, the model’s external validity also merits consideration. Although the empirical analysis is based on financial data from Chinese higher education institutions, the indicator taxonomy (covering liquidity, debt structure, subsidy dependence, and operational efficiency) is domain-agnostic and can be transferred to other public-sector contexts such as healthcare, research institutes, or municipal finance. Furthermore, the causal-attention Transformer architecture and the MBO framework can be fine-tuned on cross-regional or multilingual datasets, consistent with emerging practices in LLM-based time-series forecasting and federated learning. These characteristics suggest that the framework is not restricted to a single national or institutional context but instead offers a generalizable and governance-ready paradigm for financial risk early warning across diverse educational and administrative ecosystems.

External validity and transferability: Although the empirical analysis in this study is based on Chinese higher education institutions (2015–2025), the underlying indicator taxonomy—covering liquidity, debt structure, subsidy dependence, and operational efficiency—is conceptually aligned with international frameworks used in public-sector finance. To assess generalizability, we further examined an “endowment-like” proxy feature derived from restricted funds and donation inflows, representing the long-term investment component that is more prevalent in Western universities. Including this proxy did not materially affect the model’s performance ranking or fairness behavior, suggesting that the framework captures structural risk patterns that extend beyond national contexts.

Moreover, the proposed causal-attention Transformer and Multi-Objective Bayesian Optimization (MBO) architecture is model-agnostic with respect to the indicator system. It can be fine-tuned or retrained using region-specific financial indicators, enabling adaptation to diverse funding structures such as endowment-driven systems in the United States or tuition-dependent systems in Europe and Asia. These findings indicate that while the data source is geographically bounded, the methodological design and optimization framework are generalizable to other higher education systems and potentially to broader public-finance governance domains.

Overall, this comparative discussion positions this study within the broader trajectory of fairness-aware and interpretable machine learning. By treating fairness as a primary optimization objective, the framework demonstrates how algorithmic design can align with transparency and policy compliance in real-world governance. In doing so, it contributes both technically—by advancing multi-objective optimization under fairness constraints—and institutionally, by fostering equitable and auditable financial governance across higher education systems.