Prediction of Airtightness Performance of Stratospheric Ships Based on Multivariate Environmental Time-Series Data

Abstract

1. Introduction

- (1)

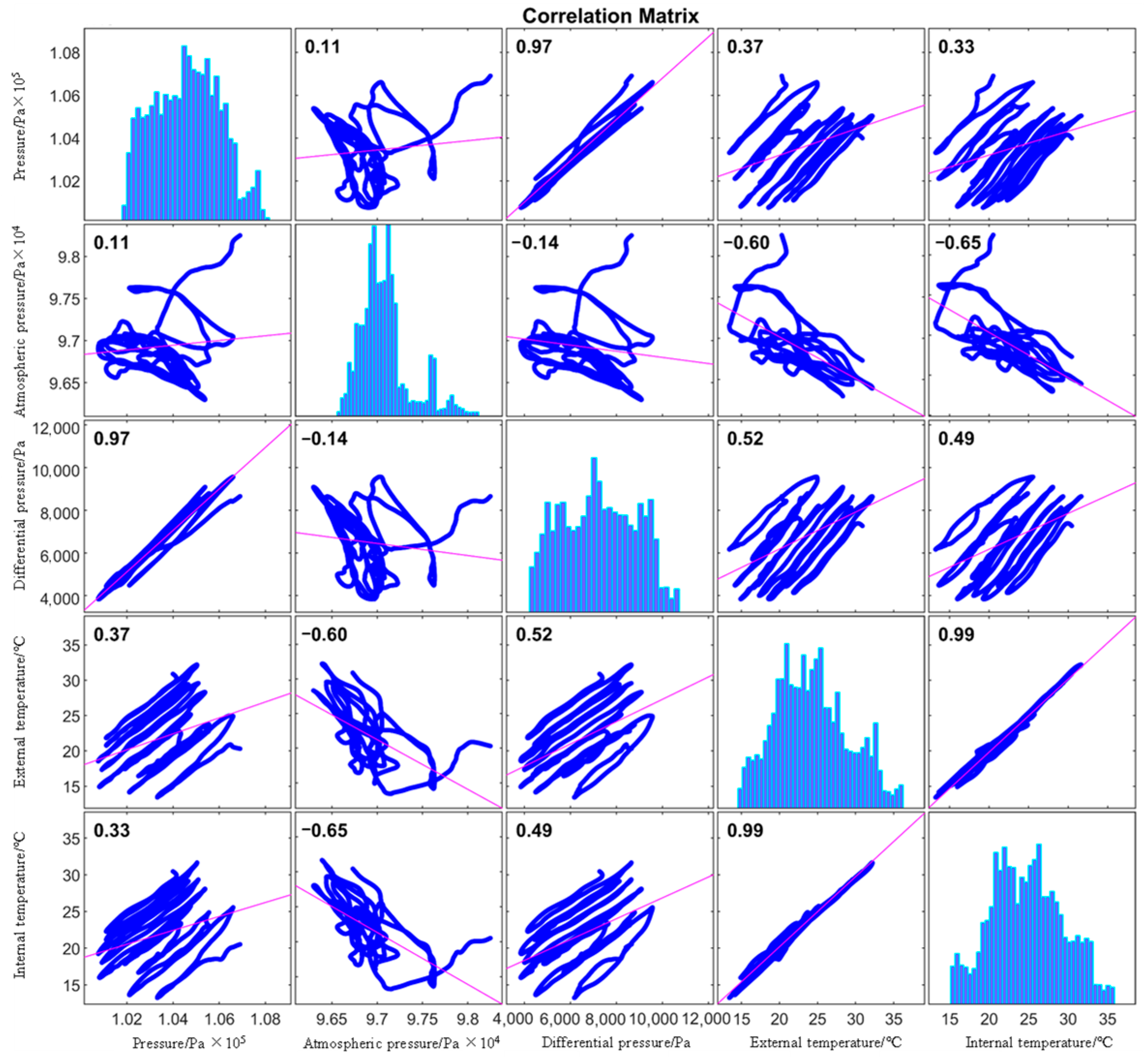

- Correlation Analysis of Features: This study examines the linear correlations between various features in the dataset;

- (2)

- Model Selection and Comparison: Common machine learning models are selected and compared based on their predictive performance on the sample dataset;

- (3)

- Result Analysis: The long-term prediction results of each model are analyzed for validity, demonstrating the superiority of the NeuralProphet model in predicting the airtightness of stratospheric airships.
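The linear correlation analysis in item (1) is commonly carried out with the Pearson coefficient. The source does not state which coefficient the study uses, so the following is only a minimal sketch under that assumption. The function names, the feature keys, and the toy numbers are hypothetical and are not data from the study.

```python
import math


def pearson_r(x, y):
    """Pearson linear correlation coefficient of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / math.sqrt(var_x * var_y)


def correlation_matrix(features):
    """Pairwise Pearson coefficients for a dict of name -> list of values."""
    names = list(features)
    return {a: {b: pearson_r(features[a], features[b]) for b in names}
            for a in names}


# Toy values for illustration only (not measurements from the study).
toy = {
    "atmospheric_pressure": [5500.0, 5480.0, 5470.0, 5490.0, 5510.0],
    "external_temperature": [-56.0, -58.0, -57.0, -59.0, -55.0],
    "pressure_differential": [400.0, 380.0, 370.0, 390.0, 410.0],
}

matrix = correlation_matrix(toy)
for name, row in matrix.items():
    print(name, {k: round(v, 3) for k, v in row.items()})
```

A coefficient close to +1 or -1 indicates a strong linear relationship between two features, and a value near 0 indicates none. Nonlinear dependence is not captured by this measure.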

2. Data Preprocessing and Sample Partitioning

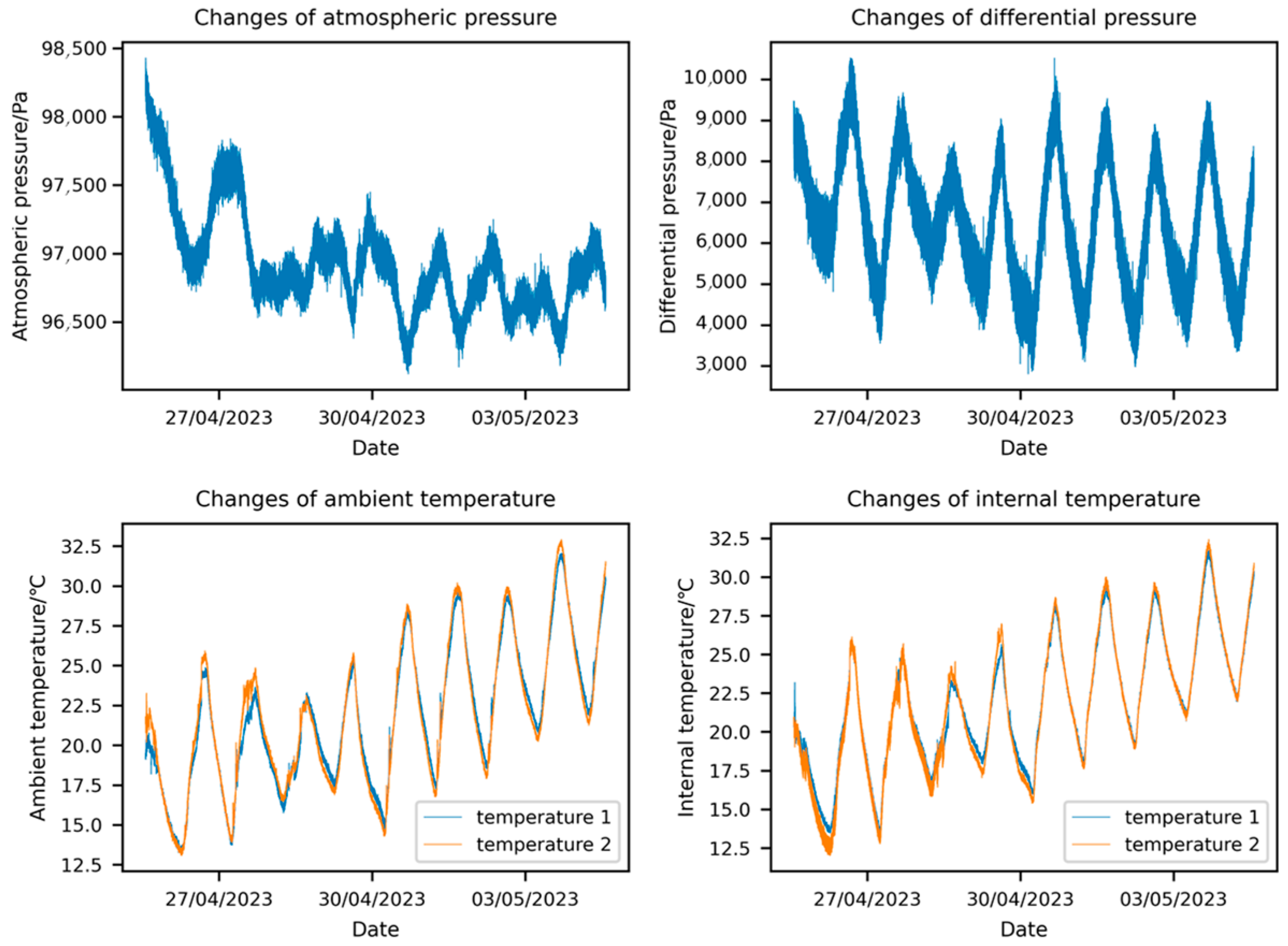

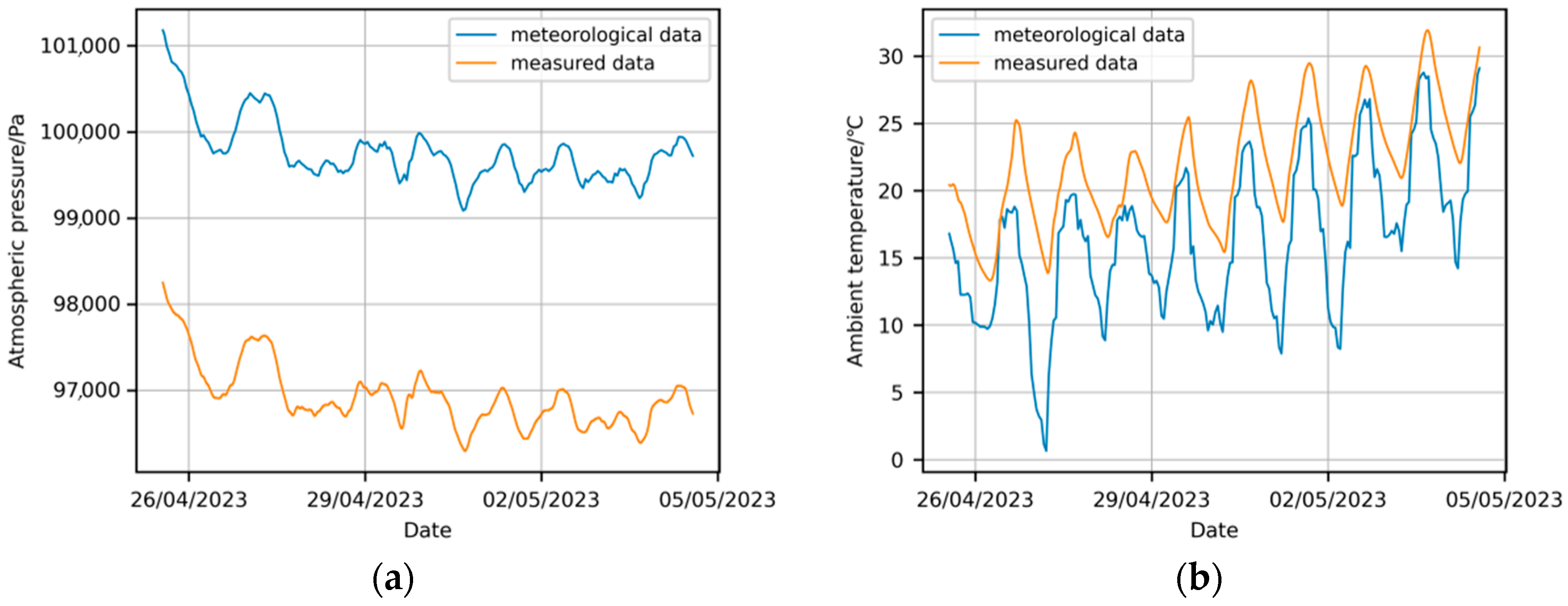

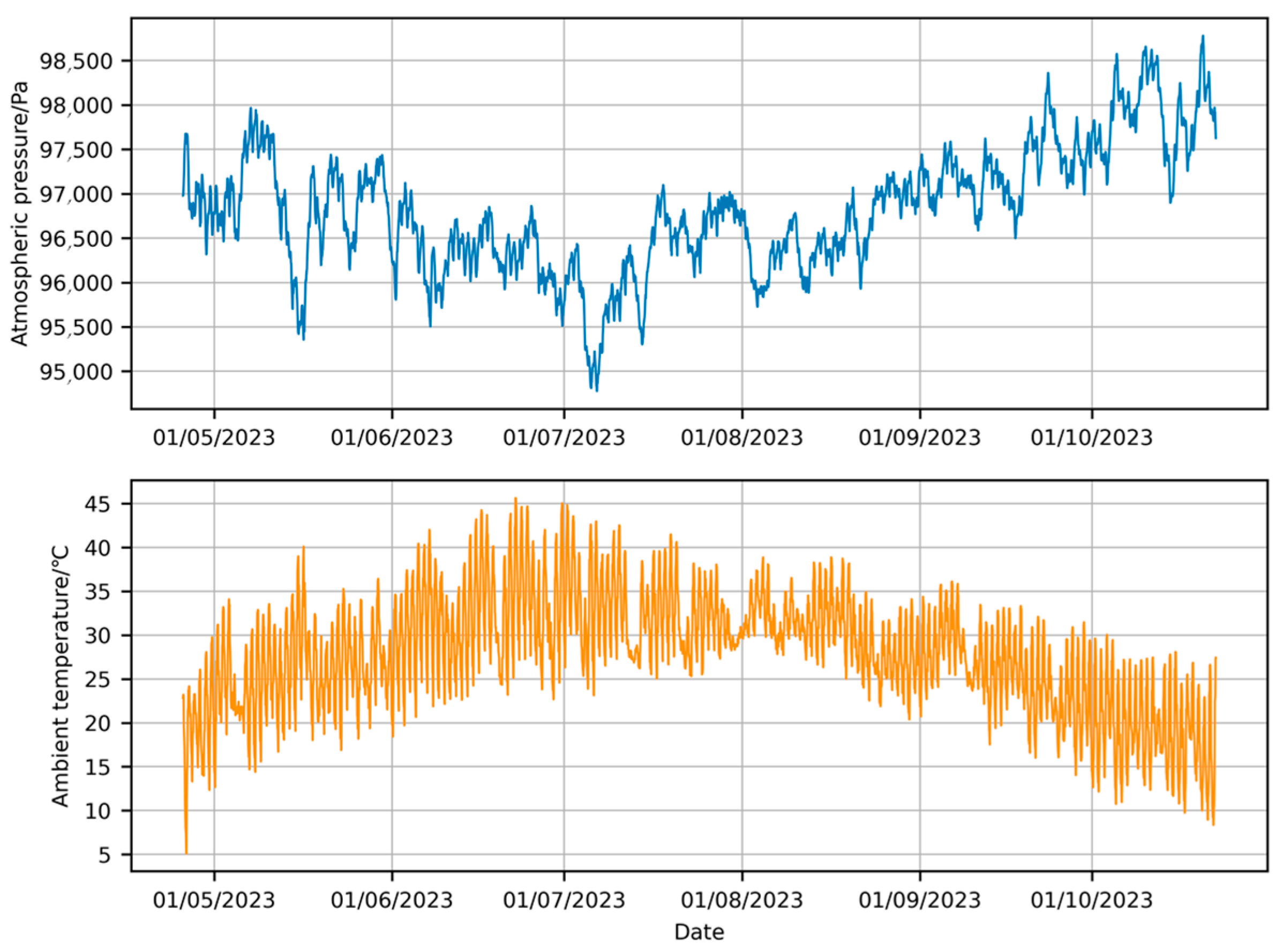

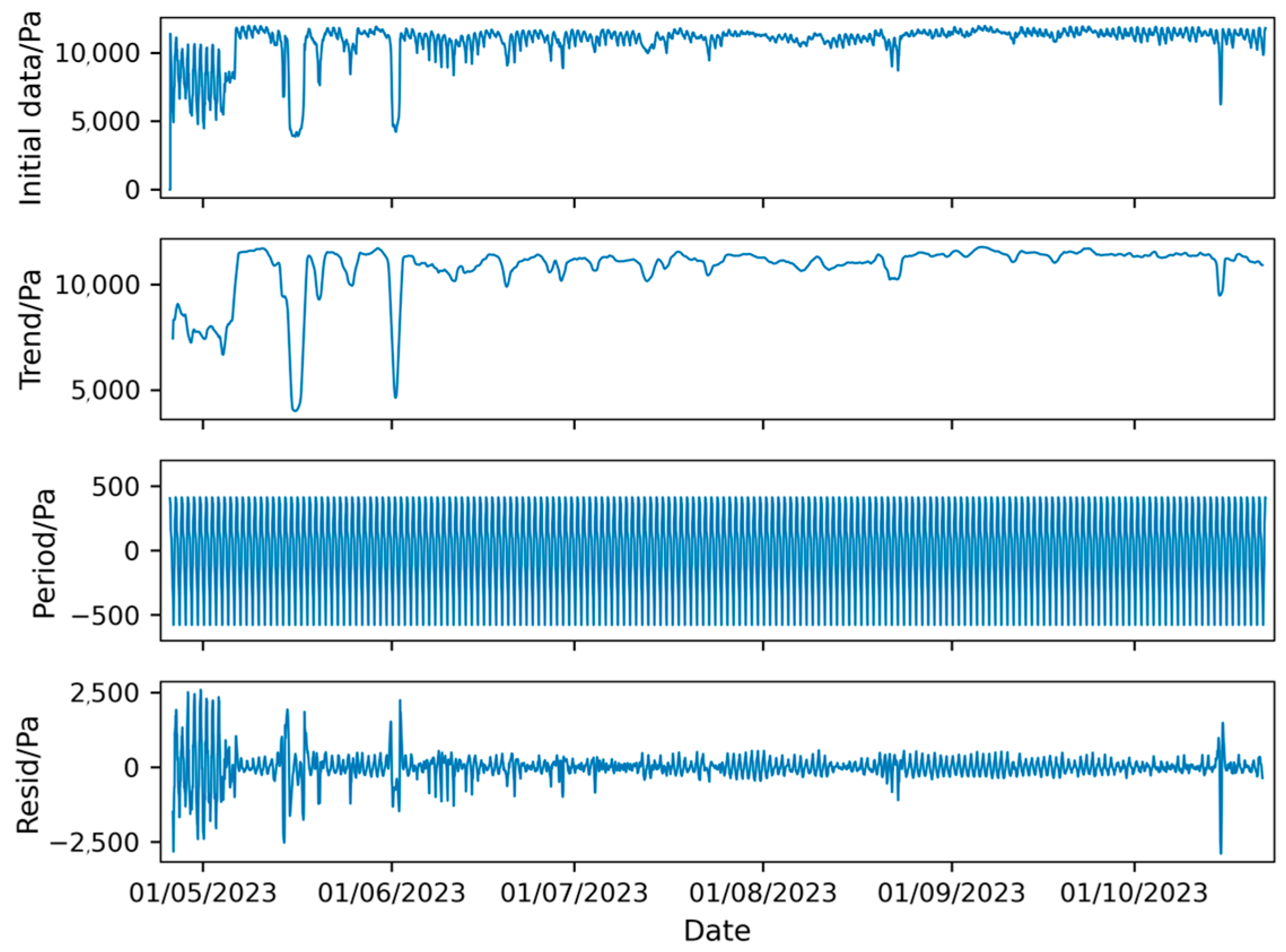

2.1. Data Preprocessing

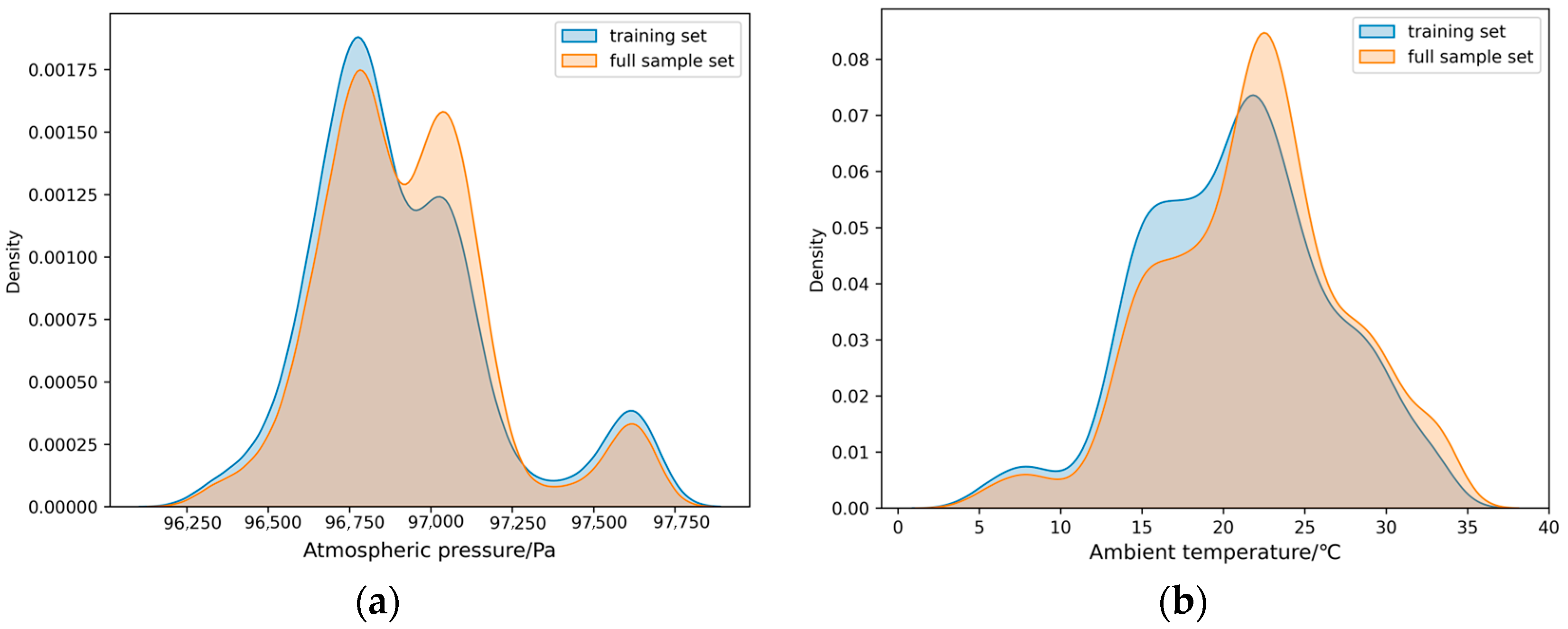

2.2. Sample Partitioning

3. Evaluation Metrics and Model Selection

3.1. Evaluation Metrics Selection

3.2. Model Selection

3.3. Computing Resources

4. Experiment

4.1. Computational Results

4.2. Prediction Results

4.2.1. Evaluation Metrics

4.2.2. Result Curves

- A.

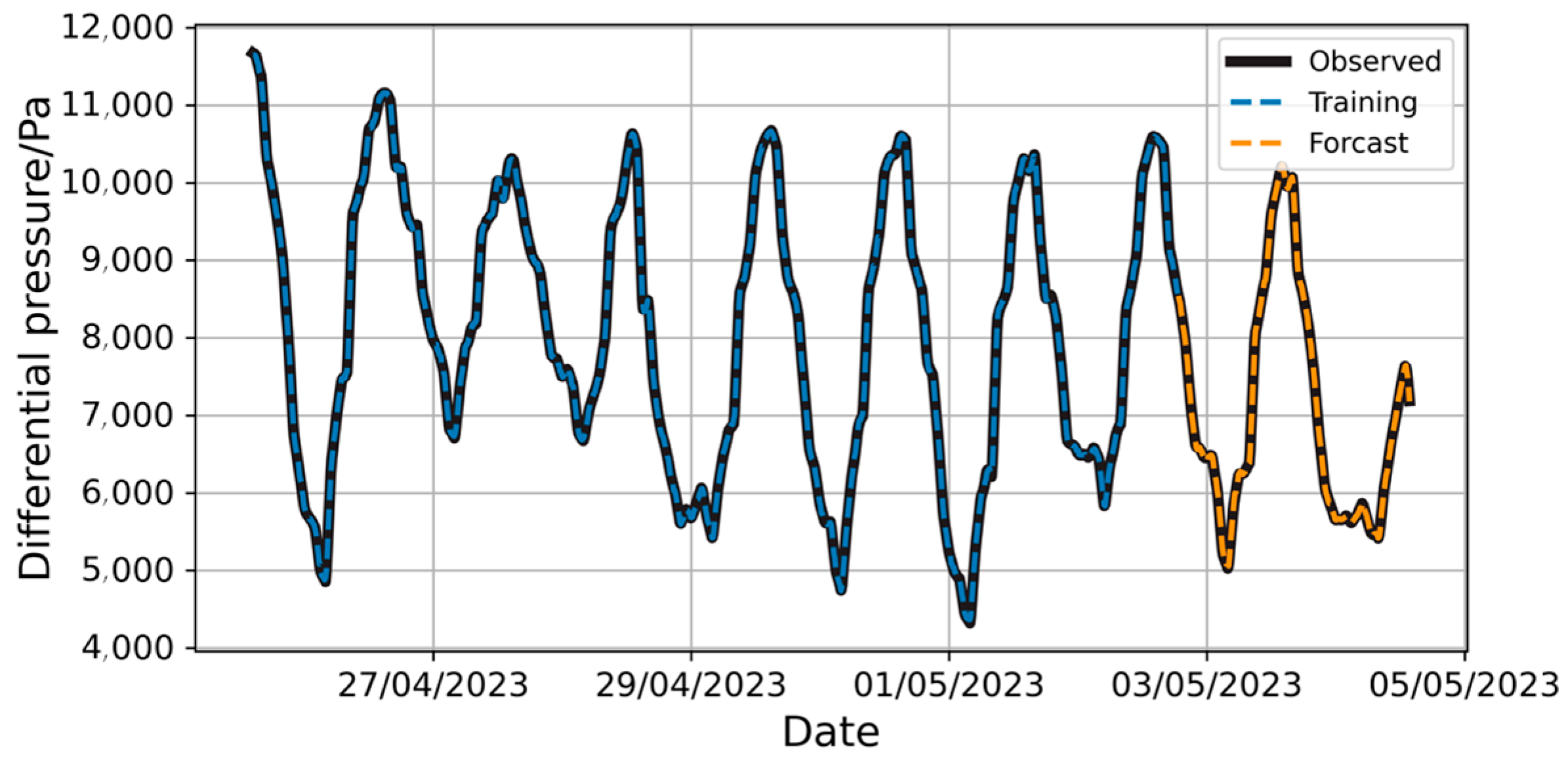

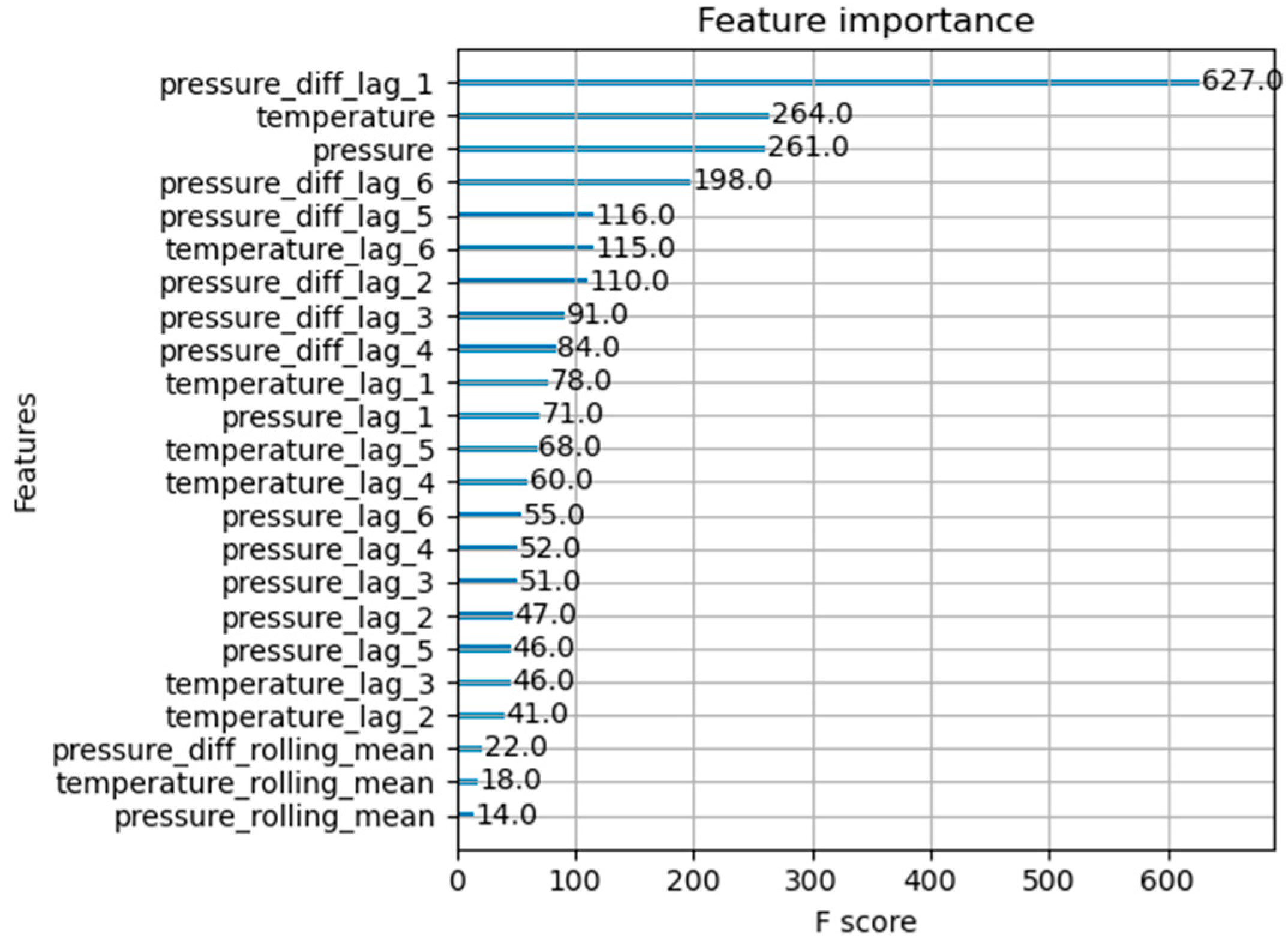

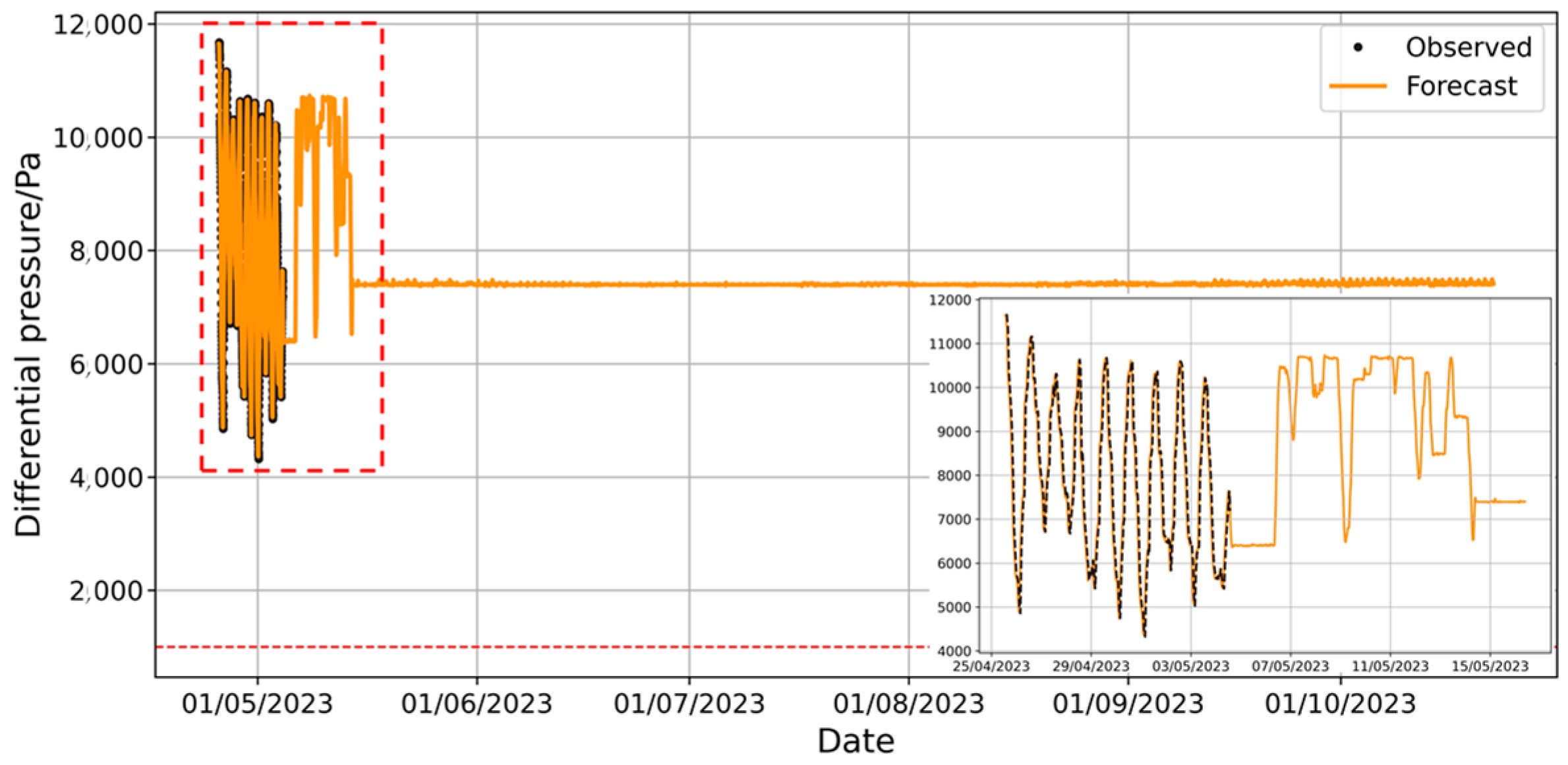

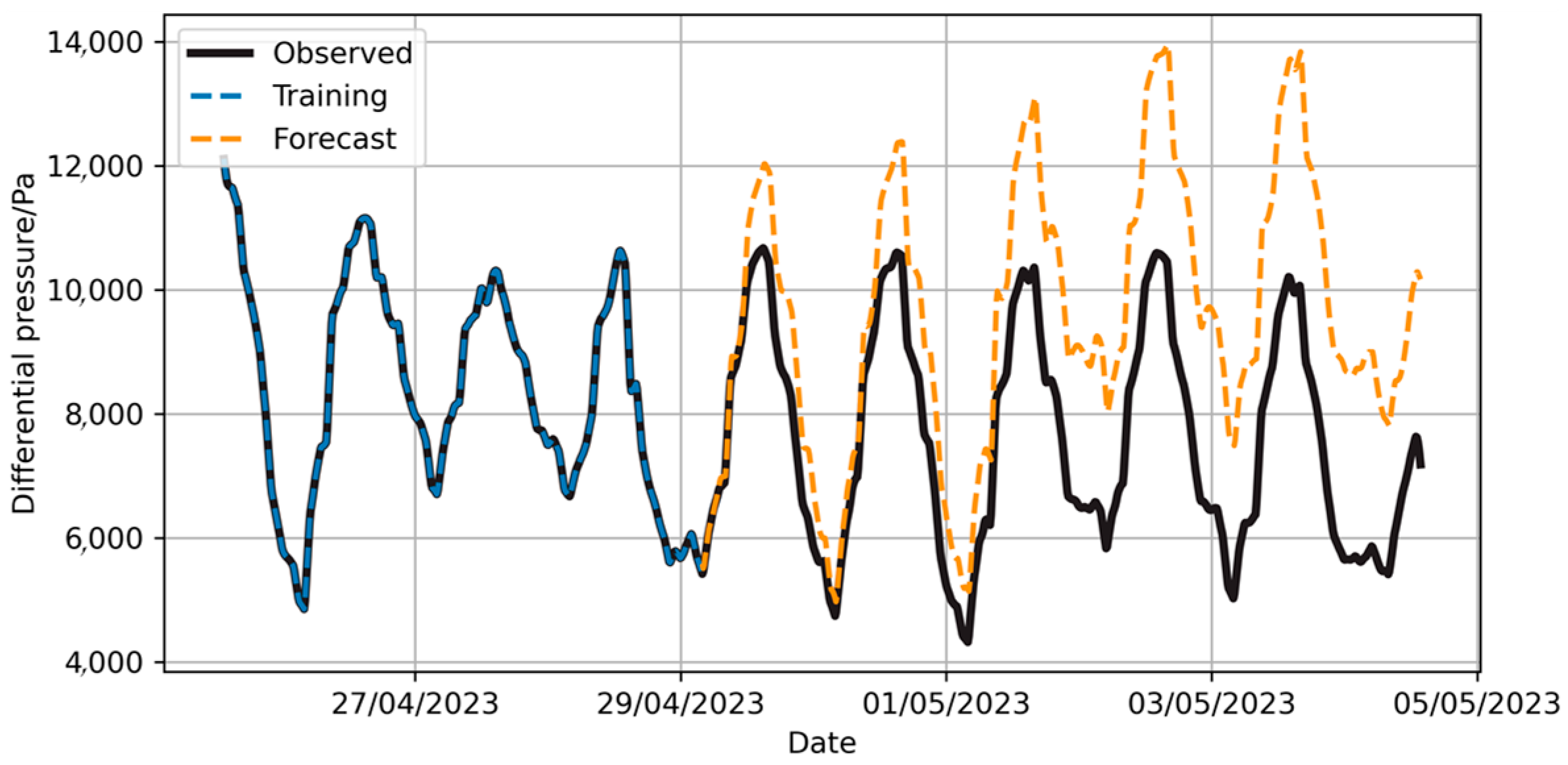

- Model XGBoost

- B.

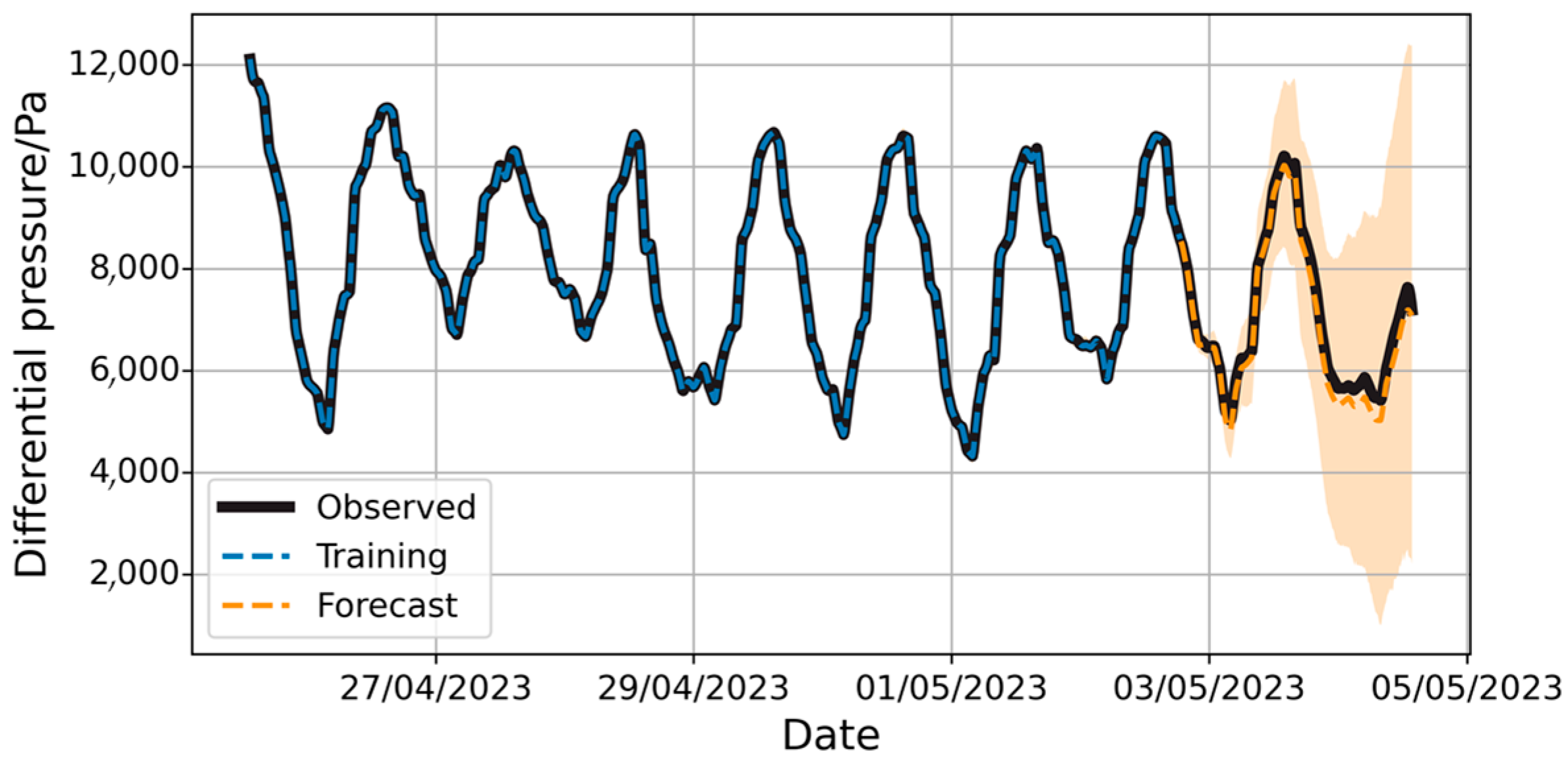

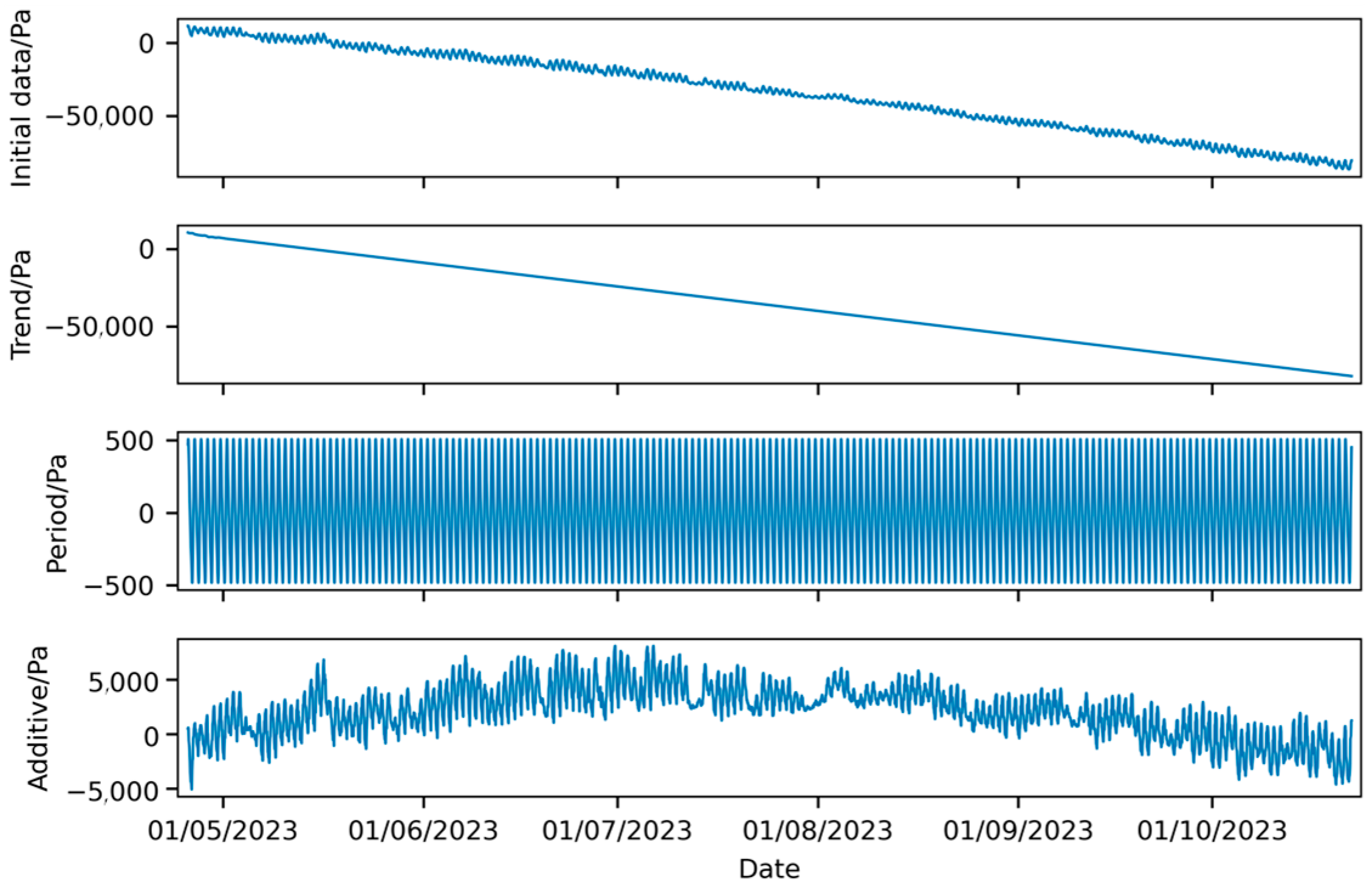

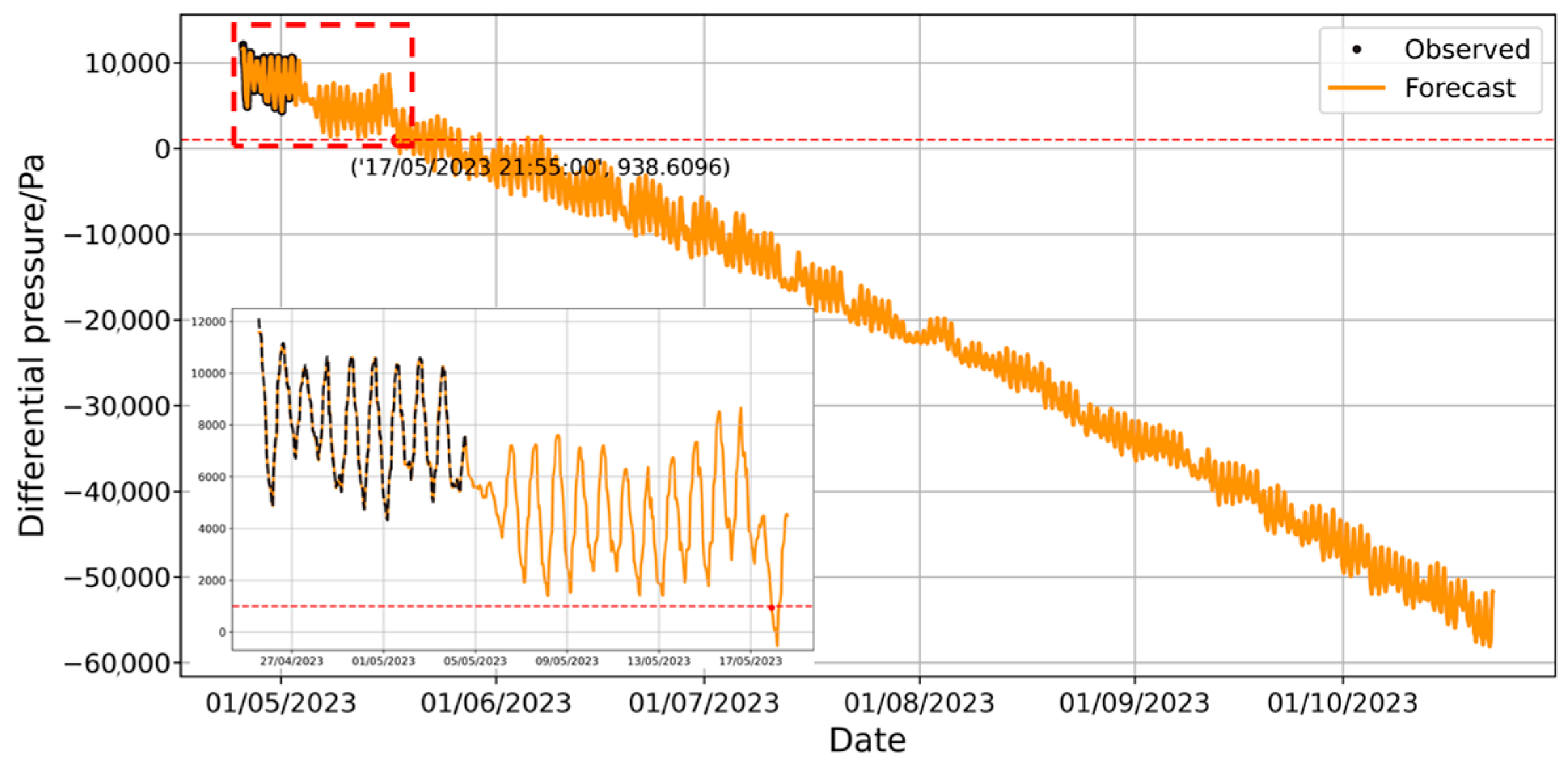

- Model Prophet

- C.

- Model LSTM

- D.

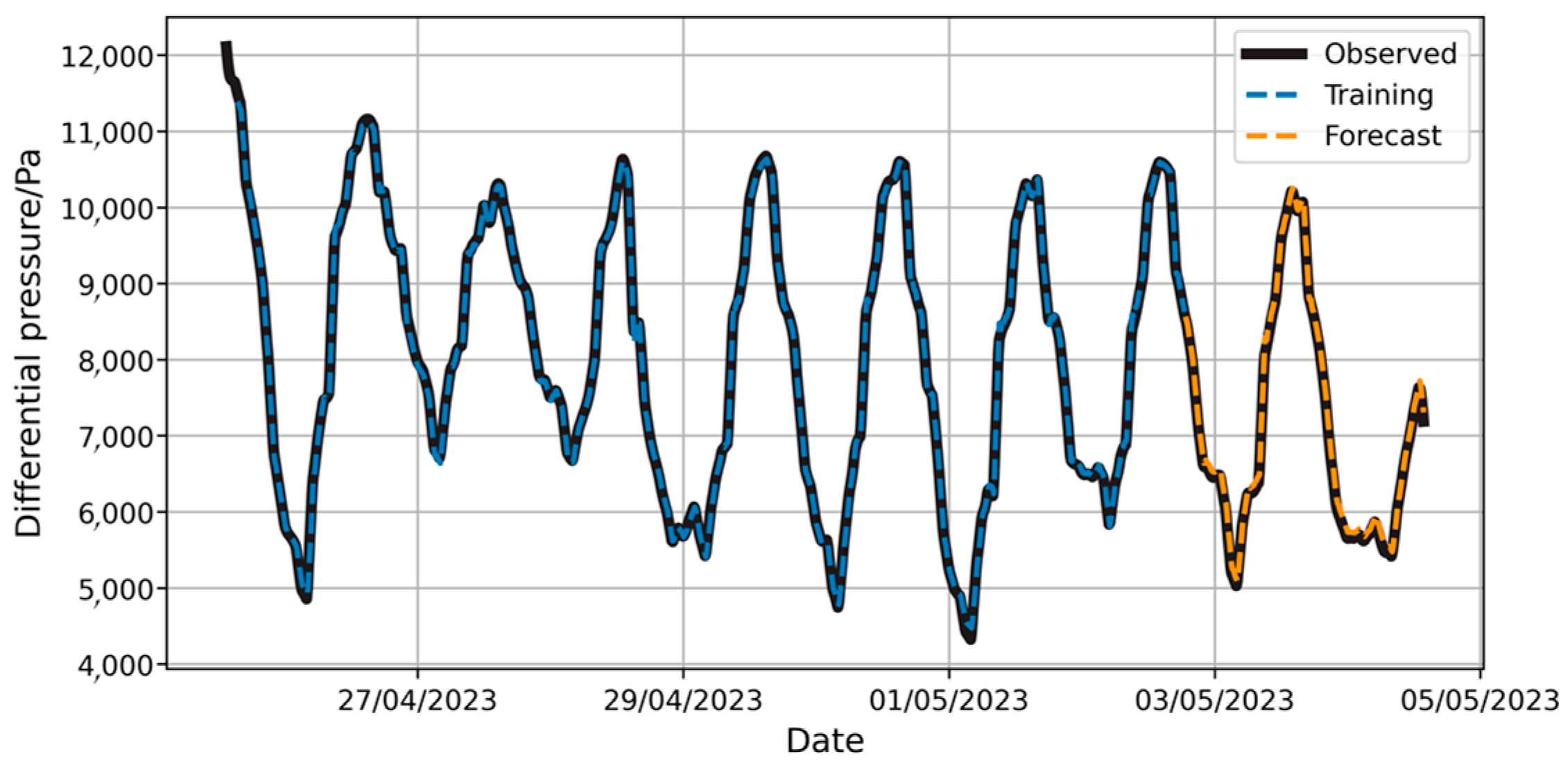

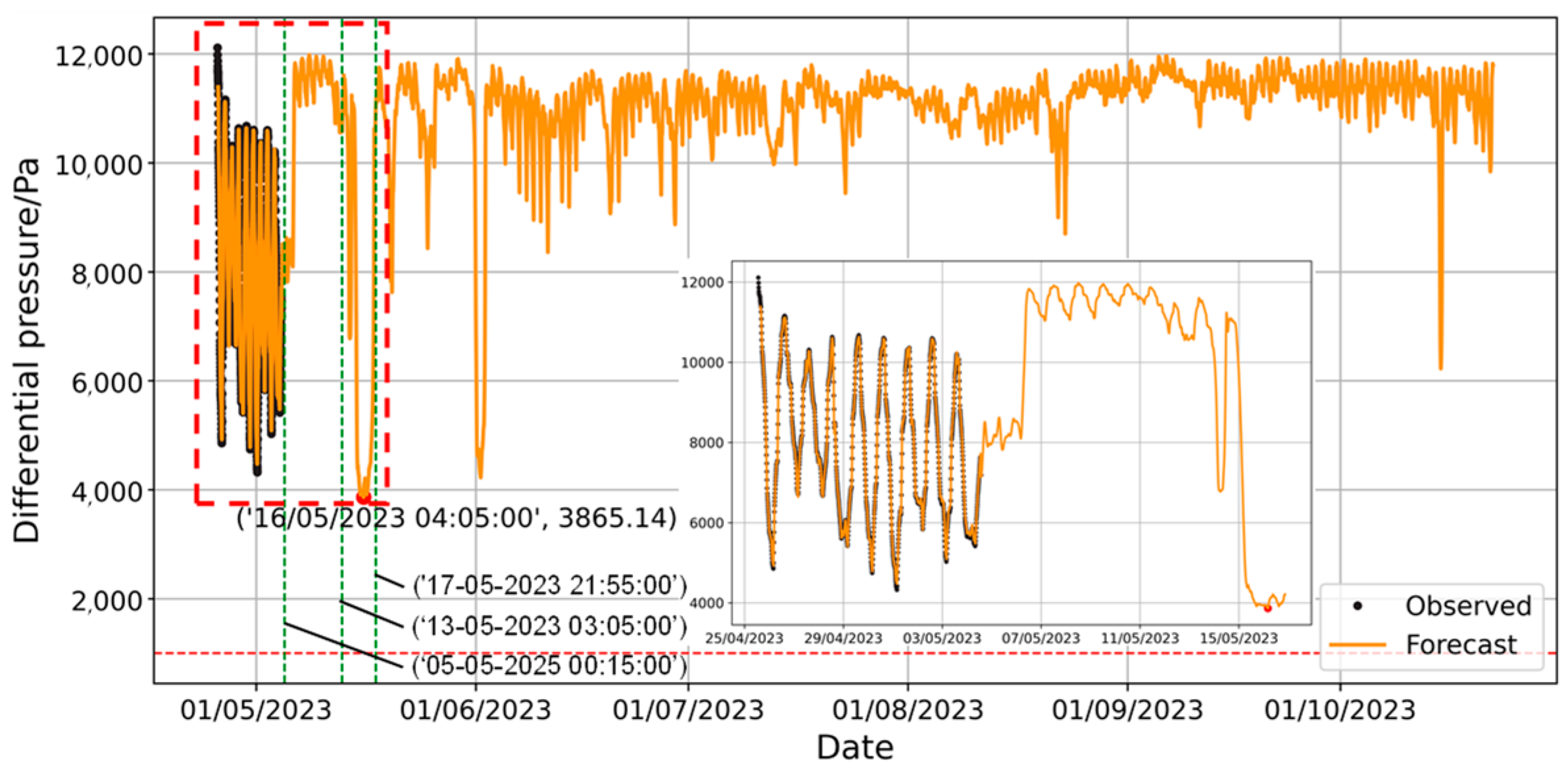

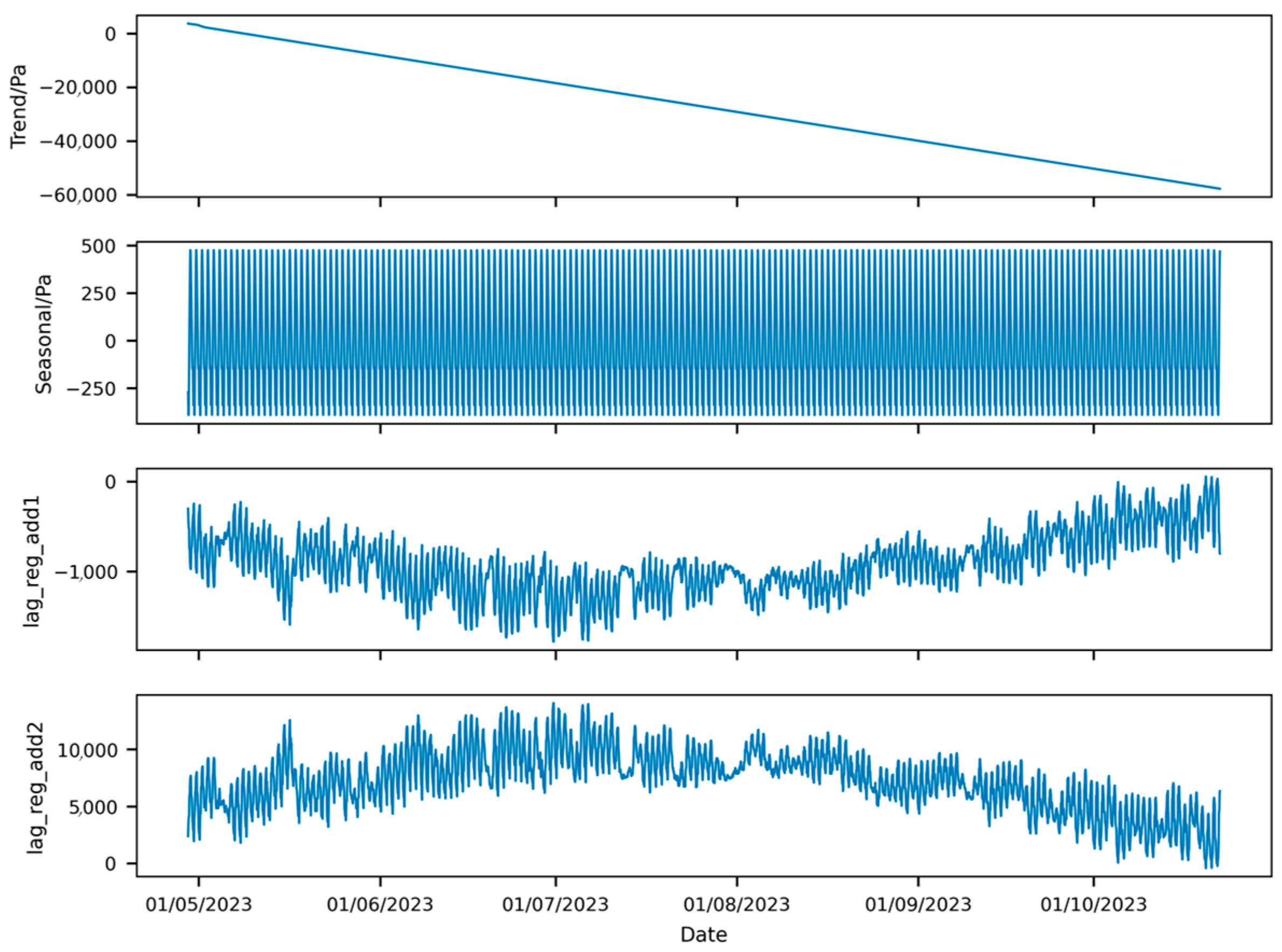

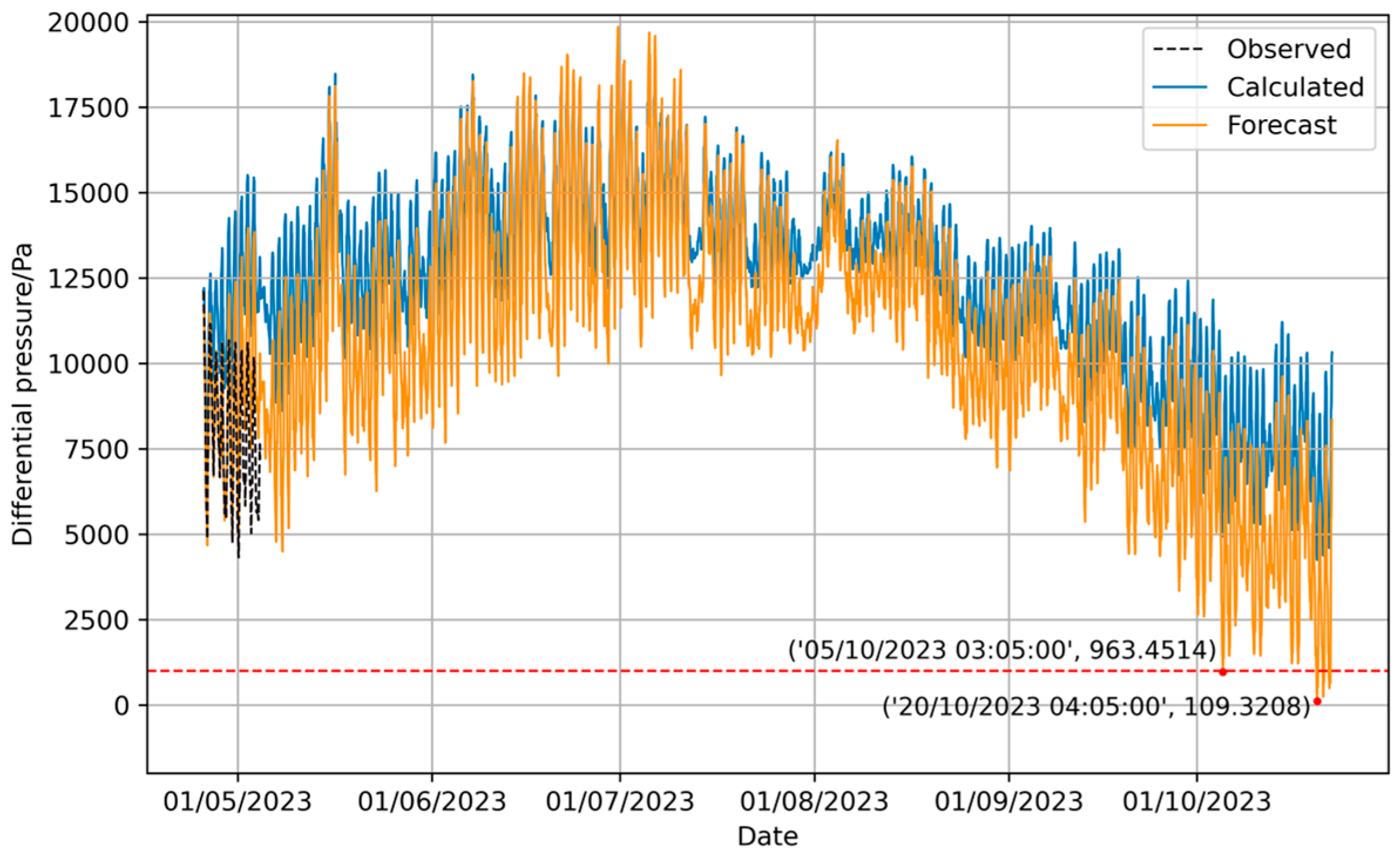

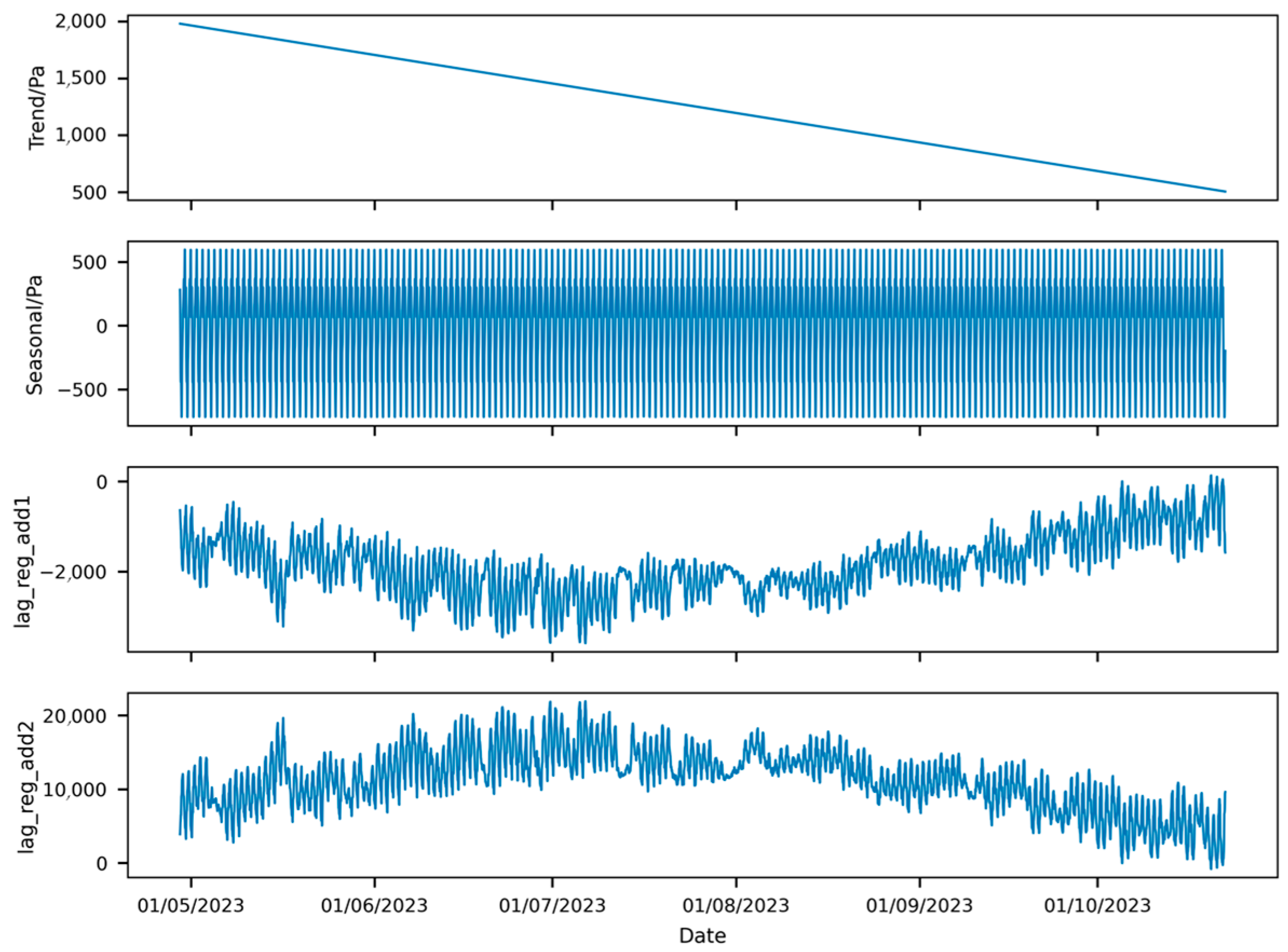

- Model NeuralProphet

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ECMWF | European Centre for Medium-Range Weather Forecasts |
| MAE | Mean Absolute Error |
| MAPE | Mean Absolute Percentage Error |
| RMSE | Root Mean Square Error |
| R² | Coefficient of Determination |
| LSTM | Long Short-Term Memory |
| STL | Seasonal-Trend decomposition using Loess |


| Input/Output | Feature |
|---|---|
| Input | {Preceding atmospheric pressure; Preceding pressure differential; Preceding external temperature} |
| Output | {Subsequent pressure differential} |
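The input/output mapping in the table above can be illustrated with a sliding-window construction. This is a minimal sketch, not the authors' preprocessing code: the function name `make_samples` is hypothetical, and the default window length of 12 is an assumption borrowed from the "Time step" setting listed for the LSTM model.

```python
def make_samples(pressure, diff, temperature, time_step=12):
    """Pair each window of preceding readings with the next pressure differential.

    Each input is a list of `time_step` tuples
    (atmospheric pressure, pressure differential, external temperature);
    the target is the pressure differential that immediately follows the window.
    """
    n = len(diff)
    if not (len(pressure) == len(temperature) == n):
        raise ValueError("all three series must have the same length")
    if time_step < 1 or n <= time_step:
        raise ValueError("series must be longer than time_step")
    samples = []
    for t in range(time_step, n):
        window = [(pressure[i], diff[i], temperature[i])
                  for i in range(t - time_step, t)]
        samples.append((window, diff[t]))
    return samples


# Toy series for illustration only (not data from the study).
pressure = [float(5500 - i) for i in range(15)]
diff = [float(400 - 2 * i) for i in range(15)]
temperature = [float(-56 - 0.1 * i) for i in range(15)]

samples = make_samples(pressure, diff, temperature)
print(len(samples), "samples; first target:", samples[0][1])
```

Because every window contains only readings that precede its target, the samples stay in chronological order and can be partitioned into training and test sets without leaking future values into the inputs.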

| Category | Models |
|---|---|
| Traditional Machine Learning Models | XGBoost, Prophet |
| Deep Learning Models | LSTM, NeuralProphet |

| Component | Model and Specifications | Description |
|---|---|---|
| CPU | Intel(R) Core(TM) i7-8750H; Base Frequency: 2.20 GHz; Core Count: 6 cores, 12 threads; Cache: 9 MB SmartCache | Used for model training and data preprocessing. Supports multithreaded parallel computation. |
| GPU | Intel(R) UHD Graphics 630; Core Clock Frequency: 300 MHz; Execution Units: 24 | Integrated graphics. CUDA and cuDNN require an NVIDIA GPU and are not available on this device. |
| RAM | DDR4 2667 MHz; Capacity: 8 GB | Used for data preprocessing and caching. Supports lightweight dataset training. |

| Evaluation Metrics | XGBoost | Prophet | LSTM | NeuralProphet |
|---|---|---|---|---|
| MAE | 16.42638 | 222.13035 | 58.58745 | 66.35149 |
| MAPE (%) | 0.21908 | 3.40779 | 0.86830 | 0.95075 |
| RMSE | 21.26976 | 258.64652 | 71.12825 | 80.07560 |
| R² | 0.99986 | 0.96826 | 0.99802 | 0.99696 |
| Training Time (s) | 0.16 ± 0.032 | 3.95 ± 0.085 | 5.38 ± 4.15 | 25.29 ± 1.3 |
| Short-term Prediction (s) | 0.019 ± 0.005 | 1.76 ± 0.041 | 0.0064 ± 0.0017 | 0.11 ± 0.035 |
| Long-term Prediction (s) | 316.78 | 68.59 | 33.16 | 1.30 |
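The four accuracy metrics named in the Abbreviations (MAE, MAPE, RMSE, and the coefficient of determination) follow standard definitions, which can be sketched as below. The function name `regression_metrics` and the toy numbers are hypothetical; this is not the evaluation script used in the study.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return MAE, MAPE (in percent), RMSE and R^2 for paired observations."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equal in length")
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]

    mae = sum(abs(e) for e in errors) / n
    # MAPE divides by the true value, so it is undefined if any y_true is zero.
    mape = 100.0 * sum(abs(e / t) for e, t in zip(errors, y_true)) / n
    ss_res = sum(e * e for e in errors)
    rmse = math.sqrt(ss_res / n)

    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1.0 - ss_res / ss_tot  # undefined if y_true is constant

    return {"MAE": mae, "MAPE": mape, "RMSE": rmse, "R2": r2}


# Toy values for illustration only (not results from the study).
metrics = regression_metrics([100.0, 200.0, 300.0, 400.0],
                             [110.0, 190.0, 310.0, 390.0])
print(metrics)
```

MAE and RMSE carry the units of the predicted quantity, while MAPE and R² are dimensionless. RMSE is never smaller than MAE, which matches the ordering of the corresponding rows in the table above.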

| Parameters | Setting | Parameters | Setting |
|---|---|---|---|
| Objective | Regression | Subsample | 0.8 |
| Learning_rate | 0.1 | Colsample_bytree | 0.8 |
| N_estimators | 100 | Random_state | 42 |
| Max_depth | 5 | | |
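The settings in the table above map directly onto the scikit-learn style interface of the `xgboost` package. The sketch below is illustrative only: the helper name `build_xgboost` is hypothetical, and `reg:squarederror` is an assumed reading of the "Regression" objective, since the source does not name the exact loss.

```python
# Hyperparameters transcribed from the table above.
XGB_PARAMS = {
    "objective": "reg:squarederror",  # assumption: "Regression" = squared-error loss
    "learning_rate": 0.1,
    "n_estimators": 100,
    "max_depth": 5,
    "subsample": 0.8,
    "colsample_bytree": 0.8,
    "random_state": 42,
}


def build_xgboost(params=None):
    """Create an untrained XGBRegressor with the listed settings.

    xgboost is imported inside the function so that the configuration
    can be inspected even where the package is not installed.
    """
    from xgboost import XGBRegressor

    return XGBRegressor(**(params or XGB_PARAMS))


print(sorted(XGB_PARAMS))
```

With the package installed, `build_xgboost().fit(X, y)` would train the model, where `X` is assumed to hold one flattened window of preceding readings per row and `y` the subsequent pressure differential.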

| Parameters | Setting | Parameters | Setting |
|---|---|---|---|
| Seasonality_mode | Additive | Daily_seasonality | True |
| Yearly_seasonality | False | Holidays | None |
| Weekly_seasonality | False | Interval_width | 0.95 |
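The settings in the table above correspond to constructor arguments of the `prophet` package. The following is a minimal sketch under that assumption; the helper name `build_prophet` is hypothetical and the source does not show the authors' actual call.

```python
# Settings transcribed from the table above.
PROPHET_PARAMS = {
    "seasonality_mode": "additive",
    "yearly_seasonality": False,
    "weekly_seasonality": False,
    "daily_seasonality": True,
    "holidays": None,
    "interval_width": 0.95,  # width of the reported uncertainty interval
}


def build_prophet(params=None):
    """Create an unfitted Prophet model with the listed settings.

    prophet is imported inside the function so that the configuration
    can be inspected even where the package is not installed.
    """
    from prophet import Prophet

    return Prophet(**(params or PROPHET_PARAMS))


print(PROPHET_PARAMS)
```

Prophet expects its training frame to contain a timestamp column named `ds` and a target column named `y`. Only the daily seasonal component is enabled here, which suits a signal dominated by a day-night cycle.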

| Parameters | Setting | Parameters | Setting |
|---|---|---|---|
| Time step | 12 | Hidden size | 15 |
| Network layer | 2 | Learning rate | Dynamic, initial value = 0.1 |
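A network with the settings in the table above could be assembled in PyTorch roughly as follows. Several details are assumptions that the source does not confirm: the framework itself, the three input features per time step (taken from the input/output feature table), the Adam optimizer, the linear output layer, and the use of `ReduceLROnPlateau` as one possible reading of a "dynamic" learning rate. The names `LSTM_CONFIG`, `build_lstm`, and `PressureLSTM` are hypothetical.

```python
# Settings transcribed from the table above; input_size is an assumption.
LSTM_CONFIG = {
    "time_step": 12,    # length of each input window
    "input_size": 3,    # assumed: pressure, pressure differential, temperature
    "hidden_size": 15,
    "num_layers": 2,
    "initial_lr": 0.1,
}


def build_lstm(config=None):
    """Return (model, optimizer, scheduler) for a two-layer LSTM regressor.

    torch is imported inside the function so that the configuration
    can be inspected even where PyTorch is not installed.
    """
    import torch
    from torch import nn

    cfg = config or LSTM_CONFIG

    class PressureLSTM(nn.Module):
        def __init__(self):
            super().__init__()
            self.lstm = nn.LSTM(input_size=cfg["input_size"],
                                hidden_size=cfg["hidden_size"],
                                num_layers=cfg["num_layers"],
                                batch_first=True)
            self.head = nn.Linear(cfg["hidden_size"], 1)

        def forward(self, x):
            # x has shape (batch, time_step, input_size)
            out, _ = self.lstm(x)
            # Predict from the hidden state at the last time step.
            return self.head(out[:, -1, :])

    model = PressureLSTM()
    optimizer = torch.optim.Adam(model.parameters(), lr=cfg["initial_lr"])
    # Assumed schedule: halve the rate when the monitored loss stops improving.
    scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(
        optimizer, mode="min", factor=0.5, patience=5)
    return model, optimizer, scheduler


print(LSTM_CONFIG)
```

With this scheduler, `scheduler.step(validation_loss)` would be called once per epoch so that the learning rate decays from its initial value of 0.1 only when progress stalls.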

| Parameters | Setting | Parameters | Setting |
|---|---|---|---|
| Seasonality mode | Additive | Weekly seasonality | False |
| Yearly seasonality | False | Daily seasonality | True |
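The four settings in the table above correspond to constructor arguments of the `neuralprophet` package. The sketch below is illustrative only: the helper name `build_neuralprophet` is hypothetical, and any further options the authors may have used (for example lagged inputs or training epochs) are not listed in the source and are left at the library defaults.

```python
# Settings transcribed from the table above.
NEURALPROPHET_PARAMS = {
    "seasonality_mode": "additive",
    "yearly_seasonality": False,
    "weekly_seasonality": False,
    "daily_seasonality": True,
}


def build_neuralprophet(params=None):
    """Create an unfitted NeuralProphet model with the listed settings.

    neuralprophet is imported inside the function so that the configuration
    can be inspected even where the package is not installed.
    """
    from neuralprophet import NeuralProphet

    return NeuralProphet(**(params or NEURALPROPHET_PARAMS))


print(NEURALPROPHET_PARAMS)
```

Like Prophet, NeuralProphet trains on a frame with a `ds` timestamp column and a `y` target column, so the same prepared series can be passed to both models for a like-for-like comparison.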

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bi, Y.; Xu, W.; Song, L.; Yang, M.; Zhang, X. Prediction of Airtightness Performance of Stratospheric Ships Based on Multivariate Environmental Time-Series Data. Forecasting 2025, 7, 28. https://doi.org/10.3390/forecast7020028