Abstract

Traffic operation efficiency is greatly affected by increasing travel demand and vehicle ownership. The continued growth in traffic demand has heightened the importance of traffic control, especially at intersections. In general, inefficient traffic scheduling leads to congestion, resulting in higher fuel consumption, more exhaust emissions, and poor quality of service. Various time series forecasting methods have been proposed for adaptive and remote traffic control, and traffic prediction has attracted considerable attention as a means of improving the reliability and efficiency of traffic flow scheduling while reducing congestion. In this work, we therefore studied the current traffic situation at Muhima Junction, one of the busiest junctions in Kigali city. Future traffic rates were forecast using long short-term memory (LSTM) and autoregressive integrated moving average (ARIMA) models. The adequacy of both models was assessed using the mean absolute error (MAE), mean absolute percentage error (MAPE), and root mean squared error (RMSE). The results revealed that LSTM is the best-fitting model for monthly traffic flow prediction. Building on this analysis, we propose an adaptive traffic flow prediction approach that uses vehicle-to-infrastructure communication and the Internet of Things (IoT) to control traffic while enhancing the quality of service at junctions. Real-time, traffic-responsive signal actuation can be assured provided that the real-time traffic data driving it are reliable.

1. Introduction

The rapid increase in travel demand and in the number of vehicles calls for urban network traffic management and transportation modeling. Conventionally, traffic at an intersection is regulated either by traffic signals or by signs. The overall capacity of an intersection is constrained because vehicles make frequent stops and spend time idling. Such conditions lead to traffic congestion, crashes, fuel wastage, and exhaust emissions [1]. In developing countries, the situation is compounded by limited land availability and by financial constraints on infrastructure expansion and traffic light system maintenance. Traffic signal timing is a well-known problem in transportation engineering: it involves selecting and adjusting the timing of traffic lights to reduce congestion [2]. With technological advancements, traffic flow prediction methods have evolved over time [3]. This is where Intelligent Transportation Systems (ITS) play a vital role, serving as an essential element of smart city infrastructure [4]. ITS can provide instant analysis of road infrastructure and enhance traffic management efficiency [5,6]. Traffic forecasting is a pivotal element at the heart of ITS [7]. Traffic forecasting methods fall mainly into two categories: parametric techniques, encompassing stochastic and temporal approaches, and non-parametric techniques, such as machine learning (ML), which have gained traction in addressing intricate traffic issues [8,9].

An assessment detailed in [10] found that non-parametric algorithms outperform their parametric counterparts, largely because they can handle an extensive range of parameters within vast datasets. LSTM recurrent neural networks (RNN) have been used to examine how different input configurations influence the predictive capability of LSTM [11]. In [12], the inputs from a single detector station used to forecast traffic flow included flow, speed, and occupancy. The findings indicate that incorporating occupancy and speed details could improve the model's overall performance. In [13], the benefit of integrating loop detector traffic data (data detected at a single point) with floating car data was demonstrated, together with how the resulting average vehicular flow rate and traffic density can support traffic analysis.

In [14], the integration of a deep belief network (DBN) and LSTM was proposed for urban traffic flow prediction, factoring in the influence of weather data, mainly rainfall. The experimental findings show that, by incorporating rainfall-related factors, the deep learning predictors achieve higher accuracy than existing predictors, and that these integrated predictors also improve on the original deep learning models without rainfall input. In [8], the authors introduced the graph attention LSTM network (GAT-LSTM), which extends the LSTM architecture with graph attention mechanisms in both the input-to-state and state-to-state transitions. This is used to build an end-to-end trainable encoder-forecaster model designed for the complex task of multi-link traffic flow prediction. The experimental outcomes demonstrate the superior capability of the GAT-LSTM network in capturing spatiotemporal correlations, with an improvement of 15% to 16% over the state-of-the-art baseline.

In [15], the authors introduced a hybrid approach for short-term traffic flow prediction that combines the auto-regressive integrated moving average (ARIMA) model and the LSTM neural network, producing short-term forecasts of upcoming traffic flow from historical traffic data. The experimental findings show that the proposed dynamically weighted combination model outperforms three baselines, namely the individual ARIMA and LSTM methods and an equal-weight combination, yielding more accurate predictions. In [16], the authors applied the LSTM model, an enhanced version of the RNN tailored to capture long-term sequence dependencies, to traffic speed; the LSTM effectively mitigates the vanishing and exploding gradient problems. In a separate study [17], the authors presented a deep bidirectional LSTM (Bi-LSTM) model that extracts deep traffic flow features through both forward and backward propagation, leading to notable improvements in prediction accuracy. Another study used the LSTM recurrent neural network to assess how different input configurations affect the performance of LSTM predictions [18]. It investigated the influence of diverse inputs, including traffic flow, occupancy, speed, and information about neighboring traffic, on the efficacy of short-term traffic flow prediction, and found that incorporating occupancy and speed data could improve overall model performance.

In [19], a novel bidirectional LSTM model based on K-nearest neighbor principles demonstrated superior predictive capability. Accurate short-term traffic flow prediction is a fundamental challenge in ITS, providing essential monitoring and technical support for upcoming traffic conditions, and timely, accurate traffic flow prediction is a prerequisite for effective traffic management and informed route planning. Empirical findings show that, compared with SVR, LSTM, GRU, KNN-LSTM, and CNN-LSTM models, that model attains higher prediction precision, with performance improvements of 77% [17]. In [20], the authors introduced an LSTM-based traffic flow forecasting model capable of handling variable-length sequences, irregular data sampling, and missing data. In [21], the authors introduced a deep learning-based traffic flow prediction technique that inherently accounts for both spatial and temporal correlations. The method employs a stacked autoencoder model, trained layer by layer, to learn generic traffic flow features [22]. Experimental results confirm the method's strong performance in predicting traffic flow.

In [23], the authors present a hybrid prediction approach, SDLSTM-ARIMA, which combines an improved LSTM-RNN with the ARIMA time series model. This method, derived from the recurrent neural network (RNN) model, assesses the singularity of traffic data over time together with probability values from the dropout module, and integrates them at irregular time intervals to achieve precise traffic flow predictions [24]. Experimental findings show that SDLSTM-ARIMA achieves higher prediction accuracy than similar approaches relying solely on ARIMA or autoregressive methods. In the absence of a demand forecasting study based on a macro model covering the horizon years, this study relied on linear growth derived from previous City of Kigali reports and studies to estimate the long-term future growth rate; Kigali has seen growth of 4.5%. Small unplanned neighborhoods surrounding each planned neighborhood in the inner city, together with largely unplanned neighborhoods with young populations at the city's edge, are expected to increase travel demand [25]. Table 1 summarizes related work in terms of the models and performance metrics used in their evaluation. The analysis in our work builds on these performance indexes to meet our forecasting objectives [5,8,11,12,17,21,26,27].

Table 1.

Related work summary.

Kigali city's population growth rate is estimated to range between 1.8% and 2.5% (2025–2040), with an employment growth rate of 3.24% over the same period [29]. Therefore, this paper builds and compares two forecasting models to predict traffic flow at Muhima Junction in Kigali city. The junction is heavily used because it connects to three other junctions and to the bus station where buses pick up and drop off passengers traveling between Kigali city and two neighboring provinces.

The main motivation of this work is to establish a traffic forecasting approach, based on time series data, for analyzing the traffic flow rate and the demand for real-time flow scheduling and signal optimization. Owing to data limitations, we considered only the traffic data, without the variability effects of traffic-influencing factors such as weather, season, and other effects. The main contributions of this paper are as follows: (i) a comparison between two commonly used forecasting models for long-term traffic flow forecasting, (ii) an assessment of the performance of a statistical model and a deep learning technique, and (iii) an IoT-based conceptual framework to support traffic prediction for effective traffic signal scheduling.

The rest of this paper is organized as follows. Section 1 has reviewed the related work. Section 2 introduces the data used, the ARIMA and LSTM models, the metrics used to evaluate the models' predictions, and the design of the proposed adaptive traffic flow prediction system. Section 3 presents the results, and Section 4 concludes the paper.

2. Methodology and Data Source

2.1. Dataset Description and Preparation

In this paper, we used secondary data consisting of road traffic datasets from both Kigali City and the Transport Authority. The dataset contains classified turning movement traffic counts collected in 15 min intervals, 7 days a week, between 07:00 and 20:00. The data were collected using bespoke digital traffic cameras to ensure the highest level of accuracy, and the video footage was post-processed to provide the classified turning movement volumes at the junctions in the city. We extracted and used only the 60 min interval traffic series of one junction, commonly known as Muhima Junction, located at latitude 1°56′30.08″ S and longitude 30°03′07.28″ E; the other 12 junctions in the dataset were not used.
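The 60 min series used here is obtained from the 15 min counts. A minimal Python sketch of that aggregation, assuming a gap-free list of counts for one junction that starts on the hour; the function name and the counts are hypothetical, not taken from the dataset:

```python
from typing import List, Sequence


def aggregate_to_hourly(counts_15min: Sequence[int]) -> List[int]:
    """Sum each block of four consecutive 15 min counts into one hourly count.

    Assumes the series starts on the hour (e.g. 07:00) and has no gaps.
    """
    if len(counts_15min) % 4 != 0:
        raise ValueError("series length must be a multiple of 4 (whole hours)")
    return [sum(counts_15min[i:i + 4]) for i in range(0, len(counts_15min), 4)]


# Hypothetical counts for 07:00-09:00 (eight 15 min intervals)
example_counts = [120, 135, 150, 160, 170, 180, 175, 165]
print(aggregate_to_hourly(example_counts))  # [565, 690]
```

Summing, rather than averaging, keeps the result in vehicles per hour.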

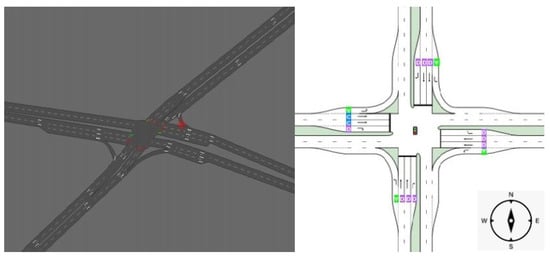

The cameras allowed accurate, discrete capture of all movements and modes at all junctions, including U-turns and pedestrians. Missing values and zero entries in the dataset were replaced with the historical average value [24,30]. Typical 12 h volumes range between 10,545 and 23,900 vehicles. We divided the sample dataset into two subperiods: (i) 1 January 2021 to 31 December 2021, and (ii) 1 January 2022 to 31 December 2022. Every vehicle using the junction was captured, and the parameters recorded were the time (in 15 min intervals), the approach the vehicle arrived from, and the direction it was heading towards. The dataset contained 30,452 counts, which were split into a training set (75%) and a test set (25%) to assess the model's forecasting performance. The study was carried out in Python 3.9.13 using Jupyter Notebook in the Anaconda distribution. Table 2 presents the descriptive statistics of the traffic volume in 15 min intervals, and Figure 1 depicts the layout of the junction.

Table 2.

Descriptive statistics.

Figure 1.

Layout of the study area.
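The preparation steps described in Section 2.1, filling missing or zero counts with a historical average and holding out the last 25% of the series for testing, can be sketched in Python as follows. Averaging over the same hour of day and splitting chronologically are assumptions of this sketch, not details confirmed by the study; all names and values are hypothetical:

```python
from statistics import mean
from typing import Dict, List, Optional, Sequence, Tuple


def impute_with_historical_average(
    records: Sequence[Tuple[int, Optional[float]]]
) -> List[float]:
    """Replace missing (None) or zero counts by the mean of the valid counts
    recorded at the same hour of day.

    records: (hour_of_day, count) pairs in time order. Grouping by hour of
    day is an assumption of this sketch. Raises KeyError if an hour has no
    valid observation at all.
    """
    valid: Dict[int, List[float]] = {}
    for hour, count in records:
        if count:  # None and 0 are both treated as missing
            valid.setdefault(hour, []).append(count)
    averages = {hour: mean(values) for hour, values in valid.items()}
    return [count if count else averages[hour] for hour, count in records]


def chronological_split(series: Sequence[float], train_ratio: float = 0.75):
    """Split a time series into training and test parts without shuffling,
    so that the test period lies strictly after the training period."""
    cut = int(len(series) * train_ratio)
    return list(series[:cut]), list(series[cut:])


# Hypothetical hourly counts at 07:00 and 08:00 over four days
records = [(7, 500), (8, 800), (7, None), (8, 0),
           (7, 520), (8, 780), (7, 510), (8, 790)]
filled = impute_with_historical_average(records)
train, test = chronological_split(filled)
print(filled)                 # [500, 800, 510, 790, 520, 780, 510, 790]
print(len(train), len(test))  # 6 2
```

A chronological split, rather than a random one, avoids training on observations that come after the test period.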

2.2. ARIMA Model

Auto-Regressive Integrated Moving Average (ARIMA) is expressed as ARIMA(p,d,q), where AR is the autoregressive part, I is the integration (differencing) part, and MA is the moving average part; p denotes the order of the autoregressive part, d specifies the order of differencing applied to the series before the model is estimated, and q specifies the order of the moving average part [31,32]. This time series method assumes that the series is stationary, meaning that its statistical properties (mean, variance, and autocorrelation) do not change over time; if the series is not stationary, it must be made stationary before the forecasting model is developed. Any regular, periodic increase or decrease in the series mean indicates seasonality in time series data. Seasonal variation commonly repeats on a daily, weekly, or yearly basis; hourly traffic flow data can have mainly three types of seasonality: a daily pattern, a weekly pattern, and an annual pattern. We predict the traffic flow rate $x_t$ at time t using a linear combination of its past values and past errors. Combining the AR and MA processes applied to the differenced series, we arrive at ARIMA:

$$y'_t = \phi_1 y'_{t-1} + \dots + \phi_p y'_{t-p} + \theta_1 \varepsilon_{t-1} + \dots + \theta_q \varepsilon_{t-q} + \varepsilon_t,$$

where $y'_t$ denotes the d-order differenced traffic flow series, $\phi_1, \dots, \phi_p$ are the coefficients of the d-order difference observations, $\varepsilon_t$ are the error values, and $\theta_1, \dots, \theta_q$ are the coefficients for the errors.
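To make the ARIMA equation concrete, the Python sketch below computes a one-step-ahead forecast when the coefficients $\phi_i$ and $\theta_j$ and the recent residuals are already known (in practice they come from model estimation). The coefficients, residuals, and flows are hypothetical, and the function names are illustrative only:

```python
from typing import List, Sequence


def difference(x: Sequence[float], d: int) -> List[float]:
    """Apply the regular difference (1 - B) to the series d times."""
    y = list(x)
    for _ in range(d):
        y = [y[t] - y[t - 1] for t in range(1, len(y))]
    return y


def arima_one_step(x: Sequence[float], phi: Sequence[float],
                   theta: Sequence[float], past_errors: Sequence[float],
                   d: int = 1) -> float:
    """One-step-ahead ARIMA(p, d, q) forecast with known coefficients.

    phi:   [phi_1, ..., phi_p] for the differenced observations y'_{t-i}
    theta: [theta_1, ..., theta_q] for the past errors epsilon_{t-j}
    past_errors: most recent residuals, newest last (at least q of them)
    The unknown future error epsilon_{t+1} is replaced by its mean, zero.
    """
    y = difference(x, d)
    ar = sum(phi[i] * y[-1 - i] for i in range(len(phi)))
    ma = sum(theta[j] * past_errors[-1 - j] for j in range(len(theta)))
    forecast = ar + ma  # forecast of the d-th difference y'_{t+1}
    # Undo the differencing: add back the last value of each lower-order
    # difference, from the original series (k = 0) up to order d - 1.
    for k in range(d):
        forecast += difference(x, k)[-1]
    return forecast


# Hypothetical hourly flows and coefficients (not fitted values from this study)
flows = [100.0, 110.0, 125.0, 135.0]
print(round(arima_one_step(flows, phi=[0.5], theta=[0.3], past_errors=[2.0]), 3))  # 140.6
```

For d = 1 the forecast is simply the predicted change added to the last observed flow.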

A non-stationary series can often be made stationary, with constant mean and variance, by differencing. A time series is a sequence of data observed over time; Box and Jenkins developed a mathematical model to forecast future values of a quantity from its previously observed values. Their approach uses the sample autocorrelation and partial autocorrelation functions as key instruments to determine the order of the ARIMA model [33]. In [34], the authors investigated the appropriateness of seasonal ARIMA for univariate traffic flow prediction, presenting both the definition and the theoretical basis for modeling traffic condition data with seasonal ARIMA and showing its predictive power against heuristic forecast generation methods [27]. The form of the ARIMA model is presented in further detail in [35].

The ARIMA family covers several related models that are useful for time series modeling and forecasting, including AR, MA, ARMA, ARIMA, and seasonal ARIMA (SARIMA). In this work, a non-seasonal ARIMA model was employed: the modulating effects of the day, of climate conditions on a particular day, and of any other factors were neglected, so the model captures only the trend of the series. In general, the predicted value of a variable is a linear function of several past observations and random errors [36]. For completeness, the general seasonal form is given below; the non-seasonal ARIMA(p,d,q) model is the special case P = D = Q = 0. A time series $\{x_t \mid t = 1, 2, \dots, N\}$ is generated by a SARIMA$(p,d,q)(P,D,Q)_S$ process if

$$\phi_p(B)\,\Phi_P(B^S)\,(1-B)^d\,(1-B^S)^D\,x_t = \theta_q(B)\,\Theta_Q(B^S)\,\varepsilon_t,$$

where N is the number of observations; p, d, q, P, D, and Q are non-negative integers; B is the lag (backshift) operator, $B x_t = x_{t-1}$; and S is the seasonal period length.

The regular autoregressive (AR) operator of order p is

$$\phi_p(B) = 1 - \phi_1 B - \phi_2 B^2 - \dots - \phi_p B^p.$$

The AR part combines past values of the variable of interest, here the traffic flow rate, linearly; the order p is the number of prior values taken into account in the forecast. Similarly, $\Phi_P(B^S) = 1 - \Phi_1 B^S - \dots - \Phi_P B^{PS}$ is the seasonal AR operator of order P.

The regular moving average (MA) operator of order q is

$$\theta_q(B) = 1 + \theta_1 B + \theta_2 B^2 + \dots + \theta_q B^q.$$

Instead of past values of the variable, the MA part combines past error values linearly; the order q is the number of prior error terms taken into account in the forecast. $\Theta_Q(B^S) = 1 + \Theta_1 B^S + \dots + \Theta_Q B^{QS}$ is the seasonal MA operator of order Q, D is the number of seasonal differences, and $\varepsilon_t$ is the residual at time t, assumed to be independently and identically distributed.
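The differencing part of the SARIMA model, the operator $(1-B)^d(1-B^S)^D$, can be expanded like an ordinary polynomial in B and then applied to the series. A small Python sketch under that reading; the period S = 4 is chosen only to keep the example short (hourly data with a daily cycle would use S = 24), and all names are hypothetical:

```python
from typing import List, Sequence


def poly_mul(a: Sequence[float], b: Sequence[float]) -> List[float]:
    """Multiply two lag polynomials; index k holds the coefficient of B**k."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out


def differencing_operator(d: int, D: int, S: int) -> List[float]:
    """Coefficients of (1 - B)^d (1 - B^S)^D."""
    op = [1.0]
    for _ in range(d):
        op = poly_mul(op, [1.0, -1.0])
    seasonal = [1.0] + [0.0] * (S - 1) + [-1.0]  # 1 - B^S
    for _ in range(D):
        op = poly_mul(op, seasonal)
    return op


def apply_operator(op: Sequence[float], x: Sequence[float]) -> List[float]:
    """w_t = sum_k op[k] * x_{t-k} for every t with a complete history."""
    lag = len(op) - 1
    return [sum(op[k] * x[t - k] for k in range(len(op))) for t in range(lag, len(x))]


# (1 - B)(1 - B^4): one regular and one seasonal difference, period S = 4
op = differencing_operator(d=1, D=1, S=4)
print(op)  # [1.0, -1.0, 0.0, 0.0, -1.0, 1.0]

# A linear trend plus a period-4 pattern is removed completely
x = [t + [5, 0, 3, 1][t % 4] for t in range(12)]
print(apply_operator(op, x))  # [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
```

With D = 0 the operator reduces to the regular difference $(1-B)^d$ used by the non-seasonal ARIMA model of this study.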

2.3. LSTM Model

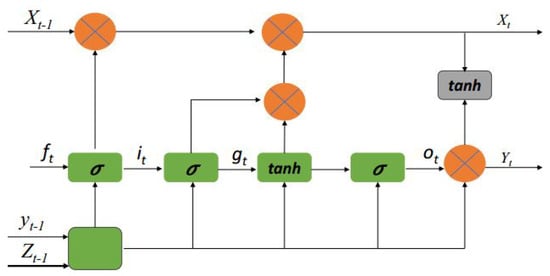

LSTM is a particular type of recurrent neural network (RNN) that can remember values from earlier stages for future use [37]. The RNN is a deep neural network architecture for sequential data; it is known for being efficient and accurate and has been widely adopted in pattern recognition and many other fields [26]. However, it suffers from the vanishing gradient problem, which limits its ability to learn long-term dependencies. The LSTM network addresses this problem and is distinguished by its ability to learn long-term dependency information. RNN methods are a type of neural network designed for sequence problems and have been used in deep learning applications such as object tracking and image classification. LSTM overcomes the difficulty of training standard RNNs with backpropagation over long sequences. Inside an LSTM cell, each time point is processed as follows: the sigmoid layer known as the forget gate layer identifies irrelevant data and removes them from the cell state. Figure 2 shows a schematic diagram of the LSTM network.

Figure 2.

LSTM mechanism.

In the figure, $C_{t-1}$ indicates the memory (cell state) from the previous step, $h_{t-1}$ the output state of the previous LSTM unit, and $x_t$ the current input, while $f_t$, $i_t$, and $o_t$ denote the forget, input, and output gates, respectively. The forget gate is computed as

$$f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right),$$

where $\sigma$ is the sigmoid function, so that $f_t$ is the output of the sigmoid forget layer, $W_f$ is the weight matrix, $h_{t-1}$ is the output from the previous time step, $x_t$ is the new input, and $b_f$ is the bias. In our context of traffic prediction at the junction, the input data are denoted as $X = [x_1, x_2, x_3, x_4]$, where $x_1$, $x_2$, $x_3$, and $x_4$ represent the traffic on the four road sections feeding the junction. The hidden state of the memory block, $Y = [y_1, y_2, y_3, y_4]$, is then produced by the LSTM network used.
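The text gives only the forget gate equation. For reference, the remaining steps of a standard LSTM cell are the input gate $i_t = \sigma(W_i [h_{t-1}, x_t] + b_i)$, the candidate $\tilde{C}_t = \tanh(W_C [h_{t-1}, x_t] + b_C)$, the cell update $C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t$, the output gate $o_t = \sigma(W_o [h_{t-1}, x_t] + b_o)$, and $h_t = o_t \odot \tanh(C_t)$. We assume, without confirmation from the study, that the cell in Figure 2 follows this standard form. A dependency-free Python sketch of one time step, with hypothetical names and zero-valued weights for illustration:

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def affine(W: Matrix, v: Sequence[float], b: Sequence[float]) -> Vector:
    """Return W v + b, with W stored as a list of rows."""
    return [sum(w * vi for w, vi in zip(row, v)) + bi for row, bi in zip(W, b)]


def lstm_cell_step(x_t: Sequence[float], h_prev: Sequence[float],
                   c_prev: Sequence[float],
                   params: Dict[str, Tuple[Matrix, Vector]]) -> Tuple[Vector, Vector]:
    """One time step of a standard LSTM cell.

    params maps 'f' (forget), 'i' (input), 'c' (candidate) and 'o' (output)
    to (W, b); every W acts on the concatenation [h_{t-1}, x_t].
    """
    z = list(h_prev) + list(x_t)
    W_f, b_f = params["f"]
    W_i, b_i = params["i"]
    W_c, b_c = params["c"]
    W_o, b_o = params["o"]
    f_t = [sigmoid(v) for v in affine(W_f, z, b_f)]        # forget gate
    i_t = [sigmoid(v) for v in affine(W_i, z, b_i)]        # input gate
    c_tilde = [math.tanh(v) for v in affine(W_c, z, b_c)]  # candidate values
    o_t = [sigmoid(v) for v in affine(W_o, z, b_o)]        # output gate
    c_t = [f * c + i * ct for f, c, i, ct in zip(f_t, c_prev, i_t, c_tilde)]
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]
    return h_t, c_t


def zeros(rows: int, cols: int) -> Matrix:
    return [[0.0] * cols for _ in range(rows)]


# Hypothetical sizes: one hidden unit, four approach roads as input features
hidden_size, input_size = 1, 4
params = {gate: (zeros(hidden_size, hidden_size + input_size), [0.0] * hidden_size)
          for gate in "fico"}
h, c = lstm_cell_step([0.2, 0.5, 0.1, 0.3], h_prev=[0.0], c_prev=[2.0], params=params)
print(c)  # [1.0]: with zero weights each gate outputs 0.5, so half the memory is kept
```

Setting a large positive forget bias drives $f_t$ towards 1, so the previous cell state is carried forward almost unchanged; this is the mechanism that lets the LSTM retain long-term information.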

2.4. Forecast Validation

To assess the model fitting and forecasting efficiency of both ARIMA and LSTM, three indexes were considered. The first is the mean absolute error (MAE), the average absolute difference between the predicted and actual values, which reveals the average size of the forecast error that can be expected. The MAE, a risk metric corresponding to the expected value of the absolute error, is given by Equation (9); a low MAE indicates that the predicted values are close to the actual values.

$$\mathrm{MAE} = \frac{1}{N}\sum_{n=1}^{N}\left|\hat{y}_n - y_n\right| \tag{9}$$

where $\hat{y}_n$ and $y_n$ represent the forecast and the true measurement of the nth sample, respectively, and N is the total number of input samples. The mean absolute percentage error (MAPE) measures how accurate the forecasted quantities are relative to the actual quantities. It is the average of the absolute percentage errors and is given by Equation (10).

$$\mathrm{MAPE} = \frac{100\%}{N}\sum_{n=1}^{N}\left|\frac{y_n - \hat{y}_n}{y_n}\right| \tag{10}$$

The root mean squared error (RMSE), which measures how far the predicted values deviate from the actual values, has previously been used to measure model performance in prediction mechanisms [11,38]. A higher RMSE indicates larger differences between the predicted and actual values. Traffic flow modeling in this paper is treated as a regression problem. The RMSE, the square root of the mean of the squared residuals, is defined by Equation (11).

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{n=1}^{N}\left(\hat{y}_n - y_n\right)^2} \tag{11}$$
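Equations (9) to (11) translate directly into code. A minimal Python sketch with hypothetical actual and predicted hourly volumes (the values are illustrative, not results from this study):

```python
import math
from typing import Sequence


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error, Equation (9)."""
    return sum(abs(p - t) for t, p in zip(y_true, y_pred)) / len(y_true)


def mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute percentage error in percent, Equation (10).

    Undefined if any actual value is zero.
    """
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean squared error, Equation (11)."""
    return math.sqrt(sum((p - t) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


# Hypothetical actual and predicted hourly volumes
actual = [100, 200, 300, 400]
predicted = [110, 190, 330, 380]
print(mae(actual, predicted))             # 17.5
print(round(mape(actual, predicted), 2))  # 7.5
print(round(rmse(actual, predicted), 2))  # 19.36
```

Because RMSE squares the errors before averaging, it weights large errors more heavily than MAE and, on the same data, is never smaller than MAE. MAPE is undefined when an actual value is zero, which is one reason zero counts must be handled before evaluation.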

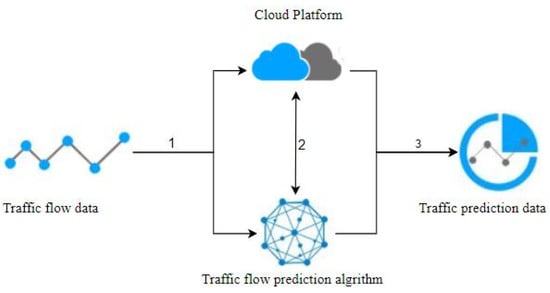

2.5. Design of Adaptive Traffic Flow Prediction Embedded System

The adaptability of the embedded system can be realized through a set of traffic flow detection nodes. In [39], the authors describe an enhanced real-time vehicle-counting system as part of ITS. In [40], the authors proposed a system that classifies and counts passing vehicles from stationary video camera data; its design is based on specific requirements, such as the need to operate on various types of roads and at a low recording frequency. In [41], the authors proposed real-time traffic scheduling that uses traffic flow information to actuate the traffic signal based on real-time flow data. Therefore, a system that combines historical traffic data and a trained model with the traffic flow prediction model of each embedded monitoring device, both in the cloud platform and at the junction level, could allow the signal length to be estimated in real time from existing traffic knowledge and real-time data. While detecting traffic flow, each monitoring node will continuously upload data to the cloud platform, where the model is continuously retrained on the new data. Using TinyML [42], the models can be embedded in the traffic controller: assuming that the traffic sensors count the vehicles crossing a particular road section, the signal length for the next period can then be predicted. Figure 3 depicts the main elements of the proposed usage scenario. Real-time data are assumed to be collected and fed into the model running in the back end; the model, trained on historical data, predicts the next period, from which the signal length and cycle length can be determined.

Figure 3.

Data flow diagram of the system.
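The study does not specify how the predicted flow is turned into signal and cycle lengths. As one possible illustration only, the sketch below applies Webster's classic formula for the optimum cycle length, $C_0 = (1.5L + 5)/(1 - Y)$, where L is the total lost time per cycle and Y is the sum of the critical flow ratios $q_i/s_i$, and then splits the effective green time in proportion to each phase's flow ratio. Webster's method is a swapped-in technique, not the authors' method; the phase names, saturation flows, and lost time are hypothetical:

```python
from typing import Dict, Tuple


def webster_timing(predicted_flows: Dict[str, float],
                   saturation_flows: Dict[str, float],
                   lost_time_s: float) -> Tuple[float, Dict[str, float]]:
    """Cycle length and effective green times from Webster's method.

    predicted_flows:  critical flow per phase for the next period (veh/h),
                      e.g. the output of the ARIMA or LSTM forecaster.
    saturation_flows: saturation flow per phase (veh/h), assumed known.
    lost_time_s:      total lost time per cycle (s).
    """
    ratios = {ph: predicted_flows[ph] / saturation_flows[ph] for ph in predicted_flows}
    Y = sum(ratios.values())  # intersection flow ratio
    if Y >= 1.0:
        raise ValueError("predicted demand exceeds capacity (Y >= 1)")
    cycle = (1.5 * lost_time_s + 5.0) / (1.0 - Y)  # Webster's optimum cycle (s)
    # Share the effective green time in proportion to each phase's flow ratio
    greens = {ph: (cycle - lost_time_s) * y / Y for ph, y in ratios.items()}
    return cycle, greens


# Hypothetical two-phase example: predicted flows for the next hour
cycle, greens = webster_timing({"NS": 540.0, "EW": 360.0},
                               {"NS": 1800.0, "EW": 1800.0}, lost_time_s=10.0)
print(round(cycle, 1), {ph: round(g, 1) for ph, g in greens.items()})
# 40.0 {'NS': 18.0, 'EW': 12.0}
```

In a deployment along the lines of Figure 3, the predicted flows for the next period would replace the hypothetical values, and practical limits on minimum green time and maximum cycle length would still need to be applied.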

3. Results and Discussion

In this work, hourly traffic flow data at Muhima Junction were collected, and the ARIMA and LSTM models were compared.

3.1. Traffic Trend at the Area

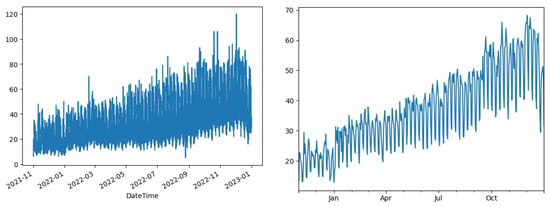

Figure 4 shows that traffic flow fluctuates but keeps increasing over the study period, indicating a continuing upward trend.

Figure 4.

Variation of traffic flow at the junction.

Table 3 summarizes the analysis of the traffic volumes. The peak hours of travel occurred mainly in two periods of the day, 08:00 to 09:00 (AM peak) and 17:00 to 19:00 (PM peak), on all days of the week. The PM peak was higher than the AM peak, and traffic kept increasing from year to year.

Table 3.

AM and PM Peak hours.
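The peak-hour identification summarized in Table 3 amounts to finding the window with the highest total volume in the morning and in the afternoon. A small Python sketch, assuming average hourly volumes keyed by start hour; the volumes below are hypothetical, not the values in Table 3:

```python
from typing import Dict


def peak_window(volumes: Dict[int, float], hours: range, width: int = 1) -> int:
    """Start hour of the `width`-hour window with the highest total volume.

    Only windows lying entirely inside `hours` (and present in `volumes`)
    are considered; ties go to the earliest window.
    """
    starts = [h for h in hours
              if all(h + k in hours and h + k in volumes for k in range(width))]
    return max(starts, key=lambda h: sum(volumes[h + k] for k in range(width)))


# Hypothetical average hourly volumes (start hour -> vehicles), 07:00-20:00
volumes = {7: 1500, 8: 1900, 9: 1700, 10: 1400, 11: 1350, 12: 1450,
           13: 1500, 14: 1480, 15: 1600, 16: 1800, 17: 2100, 18: 2150, 19: 1750}
print(peak_window(volumes, range(7, 12)))            # 8  (AM peak 08:00-09:00)
print(peak_window(volumes, range(12, 20), width=2))  # 17 (PM peak 17:00-19:00)
```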

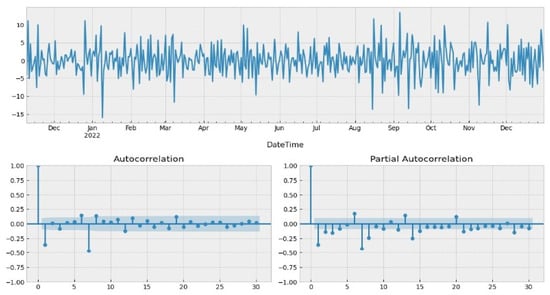

3.2. Fitting Models with ARIMA Model

We applied first-order trend differencing (d = 1) to remove non-stationary behavior from the series. The daily data from 2021 to 2022 showed an essentially stationary pattern over time, for which d = 0; differencing was therefore essential only where the series were not stationary. The selected ARIMA model had the lowest Akaike Information Criterion (AIC) among the candidate models compared. The partial autocorrelation function (PACF) was 0 at lag 1; thus, P = 0 or 1 for the monthly traffic rate. The autocorrelation function (ACF) and the PACF are mainly used to identify the underlying patterns and relationships in time series data [43,44]. The residuals of each model satisfy the white-noise requirement. Figure 5 shows the ACF and PACF for the traffic data.

Figure 5.

The ACF and PACF graphs.
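The ACF and PACF in Figure 5 are usually produced by a statistics library. To show what the plots contain, the Python sketch below computes the sample ACF directly and the PACF with the Durbin-Levinson recursion, together with the approximate 95% significance band $\pm 1.96/\sqrt{N}$ commonly drawn on such plots. The simulated AR(1) series is a hypothetical stand-in for the traffic data, and this is not the authors' code:

```python
import math
import random
from typing import List, Sequence


def acf(x: Sequence[float], max_lag: int) -> List[float]:
    """Sample autocorrelations r_0..r_max_lag (mean-corrected, divided by n)."""
    n = len(x)
    m = sum(x) / n
    c0 = sum((v - m) ** 2 for v in x) / n
    return [sum((x[t] - m) * (x[t + k] - m) for t in range(n - k)) / n / c0
            for k in range(max_lag + 1)]


def pacf(x: Sequence[float], max_lag: int) -> List[float]:
    """Sample partial autocorrelations for lags 1..max_lag (Durbin-Levinson)."""
    r = acf(x, max_lag)
    phi_prev: List[float] = []  # coefficients phi_{k-1,1..k-1}
    out: List[float] = []
    for k in range(1, max_lag + 1):
        num = r[k] - sum(phi_prev[j] * r[k - 1 - j] for j in range(k - 1))
        den = 1.0 - sum(phi_prev[j] * r[j + 1] for j in range(k - 1))
        phi_kk = num / den
        phi_prev = [phi_prev[j] - phi_kk * phi_prev[k - 2 - j]
                    for j in range(k - 1)] + [phi_kk]
        out.append(phi_kk)
    return out


# Hypothetical AR(1) series x_t = 0.7 x_{t-1} + e_t standing in for traffic data
rng = random.Random(0)
series = [0.0]
for _ in range(2000):
    series.append(0.7 * series[-1] + rng.gauss(0.0, 1.0))
band = 1.96 / math.sqrt(len(series))  # approximate 95% significance band
print([round(v, 2) for v in pacf(series, 3)], round(band, 3))
```

For an AR(1) process the PACF cuts off after lag 1, which is the pattern used to choose the AR order p.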

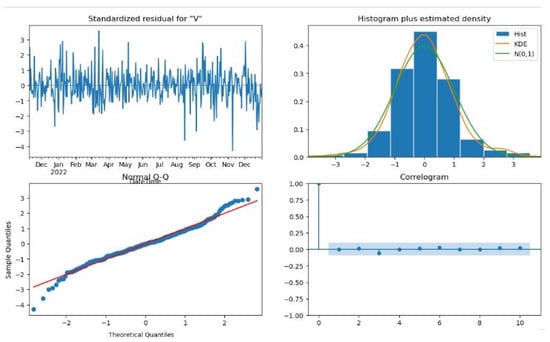

Figure 6 shows the diagnostic plots for the developed time series model, including the residual histogram, the normal quantile-quantile (Q-Q) plot, and the correlogram. A Q-Q plot compares the quantiles of one data set, here the model residuals, with those of a reference distribution, here the normal distribution. Nearly all of the blue dots in the normal Q-Q plot fall along the red line, indicating approximately normal, unskewed residuals. The correlogram indicates that only at lag 1 were the residuals not autocorrelated.

Figure 6.

Time series residual plot.
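The statement that each model's residuals are white noise can be checked formally with the Ljung-Box test, $Q = n(n+2)\sum_{k=1}^{h} r_k^2/(n-k)$, compared against a chi-square critical value. This is one common choice, not necessarily the check used in the study. The sketch uses h = 10 lags with the tabulated 95% critical value 18.307 for 10 degrees of freedom; for residuals of a fitted ARIMA(p,d,q) model the degrees of freedom are usually reduced to $h - p - q$, which changes the critical value. The residuals are simulated and hypothetical:

```python
import random
from typing import Sequence

# 95th percentile of the chi-square distribution with 10 degrees of freedom
CHI2_95_DF10 = 18.307


def ljung_box_q(residuals: Sequence[float], h: int) -> float:
    """Ljung-Box statistic Q = n(n+2) * sum_{k=1..h} r_k^2 / (n-k)."""
    n = len(residuals)
    m = sum(residuals) / n
    dev = [e - m for e in residuals]
    c0 = sum(v * v for v in dev)
    total = 0.0
    for k in range(1, h + 1):
        r_k = sum(dev[t] * dev[t + k] for t in range(n - k)) / c0  # lag-k autocorrelation
        total += r_k * r_k / (n - k)
    return n * (n + 2) * total


# Hypothetical residuals: seeded Gaussian noise standing in for model residuals
rng = random.Random(1)
residuals = [rng.gauss(0.0, 1.0) for _ in range(500)]
q = ljung_box_q(residuals, h=10)
print(f"Q = {q:.2f}; white noise not rejected at 5%: {q < CHI2_95_DF10}")
```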

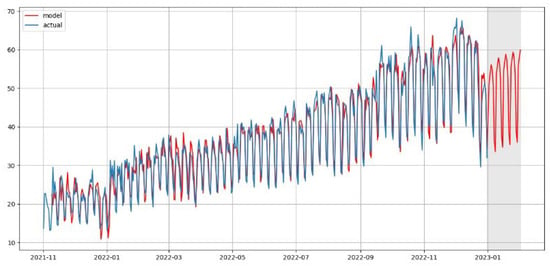

3.3. Fitting Models with LSTM Model

The primary objective of developing the prediction model is to determine whether it can accurately forecast future traffic flow rates for the same series. The accuracy of the forecasts and of the fitted model was evaluated with the performance metrics defined in Section 2.4. For a 60 min prediction horizon, the number of LSTM layers was set to 2, 3, 5, and 6 by trial and error, with 100 memory units in the LSTM network. Figure 7 presents the LSTM forecasts.

Figure 7.

Graphical representation of future forecast by LSTM model.

The prediction model is ultimately intended to forecast future traffic flow so that signals can be actuated and signal lengths estimated accurately. The LSTM model was defined and fitted during training, and traffic forecasts were then produced for the test data. LSTM was designed primarily to address the long-term dependency problem: its gated memory cell allows the model to retain information over long time spans.
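Fitting an LSTM to a single hourly series requires turning it into supervised (input window, target) pairs. The study does not report its look-back length, so the value below is hypothetical, as are the names and volumes. Sequence layers such as the Keras `LSTM` layer expect inputs shaped (samples, time steps, features), so for one series X would be reshaped to (len(X), lookback, 1):

```python
from typing import List, Sequence, Tuple


def make_windows(series: Sequence[float], lookback: int,
                 horizon: int = 1) -> Tuple[List[List[float]], List[float]]:
    """Build (input window, target) pairs for supervised LSTM training.

    Each input holds `lookback` consecutive hourly values; the target is the
    value `horizon` steps after the window ends (horizon=1: the next hour).
    """
    X: List[List[float]] = []
    y: List[float] = []
    for start in range(len(series) - lookback - horizon + 1):
        X.append(list(series[start:start + lookback]))
        y.append(series[start + lookback + horizon - 1])
    return X, y


# Hypothetical hourly volumes and look-back length
volumes = [10, 20, 30, 40, 50]
X, y = make_windows(volumes, lookback=3)
print(X)  # [[10, 20, 30], [20, 30, 40]]
print(y)  # [40, 50]
```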

3.4. Model Comparison

To address the issues with current short-term traffic flow forecasting techniques, this work aims to propose a precise and effective model for forecasting traffic. The proposed LSTM network performs better than the ARIMA model. The results indicate that LSTM is an effective technique: since its error rate is lower than that of ARIMA, it can be applied to forecasting more consistently. Table 4 lists the average performance metrics of both models. LSTM obtained an MAE of 10.2, a MAPE of 22.5%, and an RMSE of 5.8, whereas the ARIMA model obtained an MAE of 10.8, a MAPE of 24.2%, and an RMSE of 9.1. Nevertheless, both models predict traffic flow well. ARIMA has been widely used to obtain forecasts. In [28], the authors used ARIMA, evaluated with the MSE and MAPE metrics, to forecast day-ahead traffic flow on working days and attained 24.7 and 9.94, respectively. The same model has also been used in mobile network traffic forecasting, where the evaluation metrics showed that it performs well in time series forecasting.

Table 4.

Summary of test statistical errors.

4. Conclusions

The lack of studies on traffic forecasting and IoT integration in this context reveals the need for traffic analysis and the development of ITS. ARIMA has been widely used to obtain forecasts; in this work, it was compared with LSTM, a deep learning technique. Both models have been used in the literature and perform well at different time scales when forecasting future demand or trends, and both rely solely on past data to capture the trend and forecast the future. The ARIMA model filters out high-frequency noise in the data and predicts development trends, whereas the LSTM, a complex deep learning neural network, learns patterns to identify non-linear dependencies and retains useful information over longer periods. In this study, both models proved to be good candidates for predicting the monthly series. As described above, the LSTM model achieved a MAPE of 22.5%, compared with 24.2% for the ARIMA model, on the original 60 min interval series over the same seven days. Forecasting with the ARIMA model requires statistical inference. The performance of the two prediction models could also be compared on a weekly and monthly basis. Daily time series forecasts are needed as well, since traffic flow shows a tendency to increase. Heterogeneity and other traffic-influencing factors that were not considered here should be taken into account because of their fluctuating effects. Understanding the importance of measuring the uncertainty associated with deterministic traffic flow projections is also essential. Future research may therefore focus on developing a probabilistic forecasting model based on the suggested method to address the congestion problem.

Author Contributions

This work is a contribution made by V.N.K., R.M., A.U. and D.M. Conceptualization, data collection, modelling, and documentation were done by V.N.K.; overall supervision, draft proofreading, and reviewing were done by R.M., A.U. and D.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the African Center of Excellence in Internet of Things (ACEIoT), College of Science and Technology, University of Rwanda.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Nasir, M.K.; Noor, R.; Kalam, M.A.; Masum, B.M. Reduction of fuel consumption and exhaust pollutant using intelligent transport systems. Sci. World J. 2014, 2014, 836375. [Google Scholar] [CrossRef] [PubMed]

- Gagliardi, G.; Lupia, M.; Cario, G.; Tedesco, F.; Cicchello Gaccio, F.; Lo Scudo, F.; Casavola, A. Advanced adaptive street lighting systems for smart cities. Smart Cities 2020, 3, 1495–1512. [Google Scholar] [CrossRef]

- Chen, C.; Liu, B.; Wan, S.; Qiao, P.; Pei, Q. An edge traffic flow detection scheme based on deep learning in an intelligent transportation system. IEEE Trans. Intell. Transp. Syst. 2020, 22, 1840–1852. [Google Scholar] [CrossRef]

- Florez, R.; Palomino-Quispe, F.; Coaquira-Castillo, R.J.; Herrera-Levano, J.C.; Paixão, T.; Alvarez, A.B. A CNN-Based Approach for Driver Drowsiness Detection by Real-Time Eye State Identification. Appl. Sci. 2023, 13, 7849. [Google Scholar] [CrossRef]

- Boukerche, A.; Wang, J. A performance modeling and analysis of a novel vehicular traffic flow prediction system using a hybrid machine learning-based model. Ad Hoc Netw. 2020, 106, 102224. [Google Scholar] [CrossRef]

- Meena, G.; Sharma, D.; Mahrishi, M. Traffic prediction for intelligent transportation system using machine learning. In Proceedings of the 2020 3rd International Conference on Emerging Technologies in Computer Engineering: Machine Learning and Internet of Things (ICETCE), Jaipur, India, 7–8 February 2020; pp. 145–148. [Google Scholar]

- Yuan, H.; Li, G. A survey of traffic prediction: From spatio-temporal data to intelligent transportation. Data Sci. Eng. 2021, 6, 63–85. [Google Scholar] [CrossRef]

- Lu, S.; Zhang, Q.; Chen, G.; Seng, D. A combined method for short-term traffic flow prediction based on recurrent neural network. Alex. Eng. J. 2021, 60, 87–94. [Google Scholar] [CrossRef]

- Chrobok, R. Theory and Application of Advanced Traffic Forecast Methods. Ph.D. Thesis, University of Duisburg-Essen, Duisburg, Germany, 2005. [Google Scholar]

- George, S.; Santra, A.K. Traffic prediction using multifaceted techniques: A survey. Wirel. Pers. Commun. 2020, 115, 1047–1106.

- Zhao, Z.; Chen, W.; Wu, X.; Chen, P.C.; Liu, J. LSTM network: A deep learning approach for short-term traffic forecast. IET Intell. Transp. Syst. 2017, 11, 68–75.

- Abduljabbar, R.L.; Dia, H.; Tsai, P.W.; Liyanage, S. Short-term traffic forecasting: An LSTM network for spatial-temporal speed prediction. Future Transp. 2021, 1, 21–37.

- Alonso, B.; Musolino, G.; Rindone, C.; Vitetta, A. Estimation of a Fundamental Diagram with Heterogeneous Data Sources: Experimentation in the City of Santander. ISPRS Int. J. Geo-Inf. 2023, 12, 418.

- Wu, T.; Chen, F.; Wan, Y. Graph attention LSTM network: A new model for traffic flow forecasting. In Proceedings of the 2018 5th International Conference on Information Science and Control Engineering (ICISCE), Zhengzhou, China, 20–22 July 2018; pp. 241–245.

- Wang, J.; Hu, F.; Li, L. Deep bi-directional long short-term memory model for short-term traffic flow prediction. In Proceedings of the Neural Information Processing: 24th International Conference, ICONIP 2017, Guangzhou, China, 14–18 November 2017; Proceedings, Part V 24. Springer: Berlin/Heidelberg, Germany, 2017; pp. 306–316.

- Kang, D.; Lv, Y.; Chen, Y.y. Short-term traffic flow prediction with LSTM recurrent neural network. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16–19 October 2017; pp. 1–6.

- Zhuang, W.; Cao, Y. Short-Term Traffic Flow Prediction Based on a K-Nearest Neighbor and Bidirectional Long Short-Term Memory Model. Appl. Sci. 2023, 13, 2681.

- Tian, Y.; Zhang, K.; Li, J.; Lin, X.; Yang, B. LSTM-based traffic flow prediction with missing data. Neurocomputing 2018, 318, 297–305.

- Yang, B.; Sun, S.; Li, J.; Lin, X.; Tian, Y. Traffic flow prediction using LSTM with feature enhancement. Neurocomputing 2019, 332, 320–327.

- Lv, Y.; Duan, Y.; Kang, W.; Li, Z.; Wang, F.Y. Traffic flow prediction with big data: A deep learning approach. IEEE Trans. Intell. Transp. Syst. 2014, 16, 865–873.

- Li, Y.; Chai, S.; Ma, Z.; Wang, G. A hybrid deep learning framework for long-term traffic flow prediction. IEEE Access 2021, 9, 11264–11271.

- Wei, W.; Wu, H.; Ma, H. An autoencoder and LSTM-based traffic flow prediction method. Sensors 2019, 19, 2946.

- Liu, B.; Cheng, J.; Liu, Q.; Tang, X. A long short-term traffic flow prediction method optimized by cluster computing. Electr. Electron. Eng. 2018, 1, 1–19.

- Navarro-Espinoza, A.; López-Bonilla, O.R.; García-Guerrero, E.E.; Tlelo-Cuautle, E.; López-Mancilla, D.; Hernández-Mejía, C.; Inzunza-González, E. Traffic flow prediction for smart traffic lights using machine learning algorithms. Technologies 2022, 10, 5.

- Hafner, S.; Georganos, S.; Mugiraneza, T.; Ban, Y. Mapping Urban Population Growth from Sentinel-2 MSI and Census Data Using Deep Learning: A Case Study in Kigali, Rwanda. In Proceedings of the 2023 Joint Urban Remote Sensing Event (JURSE), Heraklion, Greece, 17–19 May 2023; pp. 1–4.

- Han, X.; Gong, S. LST-GCN: Long Short-Term Memory Embedded Graph Convolution Network for Traffic Flow Forecasting. Electronics 2022, 11, 2230.

- Smith, B.L.; Williams, B.M.; Oswald, R.K. Comparison of parametric and nonparametric models for traffic flow forecasting. Transp. Res. Part C Emerg. Technol. 2002, 10, 303–321.

- Shah, I.; Muhammad, I.; Ali, S.; Ahmed, S.; Almazah, M.M.; Al-Rezami, A. Forecasting day-ahead traffic flow using functional time series approach. Mathematics 2022, 10, 4279.

- Nkurunziza, D.; Tafahomi, R. Assessment of Pedestrian Mobility on Road Networks in the City of Kigali. J. Public Policy Adm. 2023, 8, 1–20.

- Lawe, S.; Wang, R. Optimization of traffic signals using deep learning neural networks. In Proceedings of the AI 2016: Advances in Artificial Intelligence: 29th Australasian Joint Conference, Hobart, TAS, Australia, 5–8 December 2016; pp. 403–415.

- Choi, B. ARMA Model Identification; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012.

- Xu, X.; Jin, X.; Xiao, D.; Ma, C.; Wong, S. A hybrid autoregressive fractionally integrated moving average and nonlinear autoregressive neural network model for short-term traffic flow prediction. J. Intell. Transp. Syst. 2023, 27, 1–18.

- Awe, O.; Okeyinka, A.; Fatokun, J.O. An alternative algorithm for ARIMA model selection. In Proceedings of the 2020 International Conference in Mathematics, Computer Engineering and Computer Science (ICMCECS), Lagos, Nigeria, 18–21 March 2020; pp. 1–4.

- Williams, B.M.; Hoel, L.A. Modeling and forecasting vehicular traffic flow as a seasonal ARIMA process: Theoretical basis and empirical results. J. Transp. Eng. 2003, 129, 664–672.

- Brockwell, P.J.; Davis, R.A. Introduction to Time Series and Forecasting; Springer: Berlin/Heidelberg, Germany, 2002.

- Hyndman, R.J.; Athanasopoulos, G. Forecasting: Principles and Practice; OTexts: Melbourne, Australia, 2018.

- Ishfaque, M.; Dai, Q.; Haq, N.u.; Jadoon, K.; Shahzad, S.M.; Janjuhah, H.T. Use of recurrent neural network with long short-term memory for seepage prediction at Tarbela Dam, KP, Pakistan. Energies 2022, 15, 3123.

- Kontopoulou, V.I.; Panagopoulos, A.D.; Kakkos, I.; Matsopoulos, G.K. A Review of ARIMA vs. Machine Learning Approaches for Time Series Forecasting in Data Driven Networks. Future Internet 2023, 15, 255.

- Kutlimuratov, A.; Khamzaev, J.; Kuchkorov, T.; Anwar, M.S.; Choi, A. Applying Enhanced Real-Time Monitoring and Counting Method for Effective Traffic Management in Tashkent. Sensors 2023, 23, 5007.

- Sonnleitner, E.; Barth, O.; Palmanshofer, A.; Kurz, M. Traffic measurement and congestion detection based on real-time highway video data. Appl. Sci. 2020, 10, 6270.

- Antoine, G.; Mikeka, C.; Bajpai, G.; Valko, A. Real-time traffic flow-based traffic signal scheduling: A queuing theory approach. World Rev. Intermodal Transp. Res. 2021, 10, 325–343.

- Schizas, N.; Karras, A.; Karras, C.; Sioutas, S. TinyML for Ultra-Low Power AI and Large Scale IoT Deployments: A Systematic Review. Future Internet 2022, 14, 363.

- Long, B.; Tan, F.; Newman, M. Forecasting the Monkeypox Outbreak Using ARIMA, Prophet, NeuralProphet, and LSTM Models in the United States. Forecasting 2023, 5, 127–137.

- Sedai, A.; Dhakal, R.; Gautam, S.; Dhamala, A.; Bilbao, A.; Wang, Q.; Wigington, A.; Pol, S. Performance Analysis of Statistical, Machine Learning and Deep Learning Models in Long-Term Forecasting of Solar Power Production. Forecasting 2023, 5, 256–284.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).