Abstract

Collaborative learning has been widely studied in higher education and beyond, suggesting that collaboration in small groups can be effective for promoting deeper learning, enhancing engagement and motivation, and improving a range of cognitive and social outcomes. The study presented in this paper compared different forms of human and robot facilitation in the game of planning poker, designed as a collaborative activity in the undergraduate course on agile project management. Planning poker is a consensus-based game for relative estimation in teams. Team members collaboratively estimate effort for a set of project tasks. In our study, student teams played the game of planning poker to estimate the effort required for project tasks by comparing the tasks with one another. In this within- and between-subjects study, forty-nine students in eight teams participated in two out of four conditions. The four conditions differed with respect to the form of human and/or robot facilitation. Teams 1–4 participated in conditions C1 human online and C3 unsupervised robot, while teams 5–8 participated in conditions C2 human face to face and C4 supervised robot co-facilitation. While planning poker was facilitated by a human teacher in conditions C1 and C2, the NAO robot facilitated the game-play in conditions C3 and C4. In C4, the robot facilitation was supervised by a human teacher. The study compared these four forms of facilitation and explored the effects of the type of facilitation on the facilitator’s competence (FC), learning experience (LX), and learning outcomes (LO). Results based on data from an online survey indicated a number of significant differences across conditions.
While the facilitator’s competence and learning outcomes were rated higher in the human (C1, C2) than in the robot (C3, C4) conditions, participants in the supervised robot condition (C4) experienced higher levels of focus, motivation, and relevance and a greater sense of control and sense of success, and rated their cognitive learning outcomes and their willingness to apply what they had learned higher than participants in the other conditions. These results indicate that human supervision during robot-led facilitation in collaborative learning (e.g., providing hints and situational information on demand) can benefit the learning experience and outcomes, as it creates synergies between human expertise and flexibility on the one hand and the consistency of robotic assistance on the other.

1. Introduction

Collaborative learning (CL) has been widely studied in school and higher education. A number of research studies on CL, including collaborative learning supported by robots, have shown that collaboration, especially in small groups, can be effective for promoting deeper learning, enhancing engagement and motivation, and improving a range of cognitive and social learning outcomes [1,2,3,4,5,6,7,8]. Compared to larger groups, which often grapple with limited participation, reduced engagement, and limited opportunities for equal expression and feedback, interaction in small groups tends to promote active learning through the engagement and contributions of all team members. While active participatory learning in small groups has been shown to be effective for both content retention and engagement, teachers in their roles as CL facilitators have struggled to ensure comparable encouragement, engagement, and participation of all group members [4]. In this context, social robots have started to be discussed as a technology that can support teachers by delivering instructions and feedback consistently, eliminating variations that may arise from human factors such as focus, mood, fatigue, judgements, and biases, and freeing up teachers’ time for more complex and personalised support [3,4,5,6,7]. As pointed out by Miyake, Okita, and Rosé, “Robots can take the collaboration out of the computer and into the three dimensional, real world environments of students” [8] (p. 57). Robots stand apart from computers and mobile devices due to their appearance, personality, and ability to engage humans in social interactions [9]. Applying educational robots in real-world contexts can support students’ engagement in hands-on, experiential learning activities, transcending the limitations of traditional two-dimensional interfaces.
Compared to two-dimensional online learning environments, physical social robots can be used to facilitate collaborative learning activities in a consistent way [8]. Also, robots equipped with a computer display can enable interaction similar to that on a computer or a mobile device [9]. However, while robots can enhance collaboration and engagement in real-world environments, their effectiveness depends on a wide range of factors related to their design, usability, implementation, and integration into a curriculum and a learning environment.

The study presented in this paper is the third study in our planning poker series [5,6]. In the study by [5], we piloted and tested the game of planning poker with NAO in two different study programmes with 29 undergraduate and graduate students. The results showed that the design of planning poker facilitated by the NAO robot was helpful for both undergraduate and graduate students trying to understand the concept of relative estimation in agile teams [5]. In our follow-up study [6], the game of planning poker with the NAO robot was applied as a scalable social learning experience for a larger number of small groups of undergraduate students. The study with 46 students showed that the majority of the participants valued NAO as a robotic facilitator. There were also some interesting differences related to a range of aspects of the learning experience among students with and without prior experience with the NAO robot [6]. The collaborative learning experience with NAO was more exciting for students without prior experience, while the attainment of learning outcomes (especially increase in knowledge and stimulation of curiosity) was rated higher by students with prior experience. These differences indicate that, once the novelty of “robots as a new technology fades away over time, the attention of learners can shift from the technology to the actual process and content of collaborative learning” [5] (p. 16). The study presented in this paper did not consider prior experience as a variable because our primary objective was to compare the effects of human and robot facilitation types within the four conditions.

Planning poker is a popular consensus-based game for relative estimation in teams who collaboratively estimate effort for a set of project tasks relative to one another. Collaboration has been defined as “the mutual engagement of participants in a coordinated effort to solve the problem together” [10] (p. 70). Following the approach by Roschelle and Teasley, the study presented in this paper focuses on collaborative learning as a synchronous activity taking place through face-to-face interactions in a team of players playing the game of planning poker together [10]. Collaborative learning in the game of planning poker is enabled by following a shared common goal, performing the same actions, and influencing the cognitive processes of interaction partners by justifying individual estimations and standpoints. Based on these characteristics, the game of planning poker can be classified as a collaborative learning activity [11,12]. In CL, groups are seen as a major source of knowledge [12], and social interactions allow the integration of new knowledge through the development of common ground [13]. Discussions play an important role in collaborative activities, as they enhance the quality of collaboration through the sharing of task-related information [14]. Similarly, discussions in the game of planning poker allow the players to bring together different perspectives, share information, and integrate the expertise of all group members in reaching a consensus [5,6]. The game of planning poker is typically facilitated by a human facilitator who helps the team understand the concepts underlying relative estimation and ensures that a session remains effective and the team can reach a consensus. The role of the facilitator is to empower all group members to share their perspectives, negotiate, and justify their estimations [15].
Studies have shown that this multi-perspective, team-based estimation approach results in more accurate estimates than estimations made by single experts [15,16]. Specifically, group discussions in planning poker, in which teams first discuss the work involved and then the individual estimates, help groups arrive at more accurate results [16].

We designed the game of planning poker as an educational simulation of a collaborative, consensus-building, relative estimation activity for small teams of students, in which team members are encouraged to share their understanding and perspectives related to a set of tasks, justify their estimations, and reach a consensus in estimating the effort for each task relative to other tasks. In the current study, we tested the game of planning poker in four different conditions. The conditions (C) differed in the type of facilitation, i.e., C1 was human facilitation online, C2 was human facilitation face to face, C3 was unsupervised robotic facilitation, and C4 was supervised robotic facilitation, in which a human teacher provided additional support to students. In C1 and C2, the human teacher facilitated the game of planning poker by introducing students to the activity and supporting the process by providing information and guidance. The difference between these two conditions was that, in C1, the game-play and the facilitation took place online, while, in C2, they took place face to face. In C3 and C4, the robot NAO facilitated collaborative learning by structuring the session, prompting learners to engage in the sequence of activities of the planning poker game, and keeping time. The difference between C3 and C4 was that, in C3, the teacher did not intervene in the game-play and passively observed the session facilitated by the robot, while, in C4, the teacher supported students on demand by answering questions and giving hints when the information provided by the robot seemed insufficient. We created these variations (C1–C4) to explore possible differences in the perceptions of the facilitator’s competence, learning experience, and learning outcomes.

In this within- and between-subjects study, forty-nine undergraduate students participated in two out of four conditions. There was a total of eight student teams, and each team participated in one human-facilitated and one robot-facilitated condition. Teams 1–4 participated in C1 (human online) and C3 (unsupervised robot), while teams 5–8 participated in C2 (human face to face) and C4 (supervised robot). This arrangement ensured that each student team was exposed to both human and robot facilitation, allowing a nuanced exploration of the different approaches to CL facilitation.
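The allocation of teams to conditions can be written down as a small lookup table, which makes the mixed design easy to check: within subjects, every team meets one human and one robot condition; between subjects, each condition is shared by four teams. The Python sketch below is only an illustrative encoding of the design described above; the names `CONDITIONS`, `DESIGN`, and `assign_conditions` are hypothetical.

```python
# Hypothetical encoding of the study design: four facilitation conditions,
# eight teams, and each team taking part in exactly two conditions.
CONDITIONS = {
    "C1": ("human", "online"),
    "C2": ("human", "face to face"),
    "C3": ("robot", "unsupervised"),
    "C4": ("robot", "supervised"),
}


def assign_conditions(team: int) -> tuple[str, str]:
    """Return the (human, robot) condition pair for a team numbered 1-8."""
    if 1 <= team <= 4:
        return ("C1", "C3")
    if 5 <= team <= 8:
        return ("C2", "C4")
    raise ValueError(f"unknown team {team}")


DESIGN = {team: assign_conditions(team) for team in range(1, 9)}

# Within-subjects check: every team meets one human and one robot condition.
for team, (human_cond, robot_cond) in DESIGN.items():
    assert CONDITIONS[human_cond][0] == "human"
    assert CONDITIONS[robot_cond][0] == "robot"

# Between-subjects check: count how many teams experience each condition.
counts = {c: sum(c in pair for pair in DESIGN.values()) for c in CONDITIONS}
print(counts)
```

Keeping the design as data rather than prose makes it straightforward to verify that no team was exposed to two robot or two human conditions.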

This paper is divided into six sections. Following this introduction (Section 1), Section 2 outlines related work on collaborative learning (CL, Section 2.1), computer-supported collaborative learning (CSCL, Section 2.2), and robot-supported collaborative learning (RSCL, Section 2.3), focusing on comparisons of human and robotic facilitators. Next, we describe the methods of the study (Section 3) and the research results (Section 4), which are followed by a discussion of the results and pointers for future research (Section 5). The paper ends with the contributions and limitations of our study (Section 6).

2. Related Work

2.1. Collaborative Learning (CL)

Collaborative learning (CL) refers to a coordinated, synchronous activity which results from a continued attempt to construct and maintain a shared conception of a problem [14]. As elaborated by [11], CL can be looked at from the perspective of different types of interactions, characteristics of a situation, mechanisms for learning, and effects of collaboration. CL is characterised by the equality of interaction partners, a shared common goal, joint work, interactivity of peers who influence each other’s cognitive processes, synchronicity of communication, shared work in the group (rather than splitting work and working on sub-tasks), and the possibility to negotiate, argue, and justify standpoints [11]. CL brings together different perspectives and complementary skills in joint activities aimed at reaching a shared goal and/or solving a shared problem [11,12,13,14].

Peer interaction is an important element of CL as it provides opportunities for learners to support each other through information sharing and communication [11,12,13,14]. CL has been shown to have a positive effect on the sense of self-efficacy and self-regulation [11,14]. In CL, groups are seen as a major source of knowledge [11], and social interactions allow new knowledge to be integrated through the development of common ground [13]. These characteristics are also found in the game of planning poker, which is typically played in small groups and aims to reach a consensus, which can be seen as the development of common ground, based on information sharing and communication through social interactions which allow the perspectives and knowledge of all peers (team members) to be integrated.

2.2. Computer-Supported Collaborative Learning (CSCL)

Computer-supported collaborative learning (CSCL) has been defined as the collaboration of two or more learners solving a problem together supported by computer technology [17]. To ensure collaboration in the context of CSCL, detailed instructional approaches have to be applied simultaneously to structure social interactions within a group [17]. Some of the key instructional approaches used to encourage collaboration in groups include the cognitive approach to promoting “epistemic fluency”, the direct approach to structuring task-specific learning activities, and the conceptual approach to stimulating collaboration in general [17]. The cognitive approach aims to promote “epistemic fluency” in the sense of the ability to “identify and use different ways of knowing” and “take the perspectives of others” [17]. Epistemic fluency can be achieved by describing, explaining, predicting, arguing, critiquing, evaluating, and defining [17]. While direct approaches aim to structure a task-specific learning activity, conceptual approaches aim to tailor a general conceptual model of collaborative learning [17]. In our study, we used these three approaches (cognitive, direct, and conceptual) to enhance collaboration in small teams of students playing the planning poker game (cf. Section 3 below).

CSCL research and practice have used different kinds of scripts to promote collaboration [18]. CSCL scripts have been used as instructional support to set up and facilitate scripted collaboration [18]. Such scripts have been designed for the specification and distribution of roles and tasks, sequencing and scaffolding of activities, specification of turn-taking rules and work phases, and monitoring and regulating interactions [18,19], as well as for prompting groups’ socio-cognitive and socio-emotional monitoring [20]. The generic framework for CSCL scripting outlined by [2] includes five key components (participants, activities, roles, resources, and groups) and three key mechanisms (task distribution, group formation, and sequencing). Micro and macro scripts differ in the level of granularity at which they support CL [20]. While macro scripts focus on orchestrating the collaborative activity, micro scripts include specific prompts for fine-grained collaborative learning activities [2]. Such scripts can also be applied in designing robot-supported collaborative learning (RSCL). In our study, we used these two types of scripts (micro and macro) to design the game of planning poker (cf. Section 3 below).

2.3. Robot-Supported Collaborative Learning (RSCL)

Researchers have explored the potential of different types of embodied robots in supporting collaborative learning, promoting the meaningful engagement of learners, and establishing interactive relationships with learners [8,9]. This research has included robots from different categories such as automated (autonomous-control), tele-operated (remote-control), and transformed (both autonomous- and remote-control) robots, and used methods such as scripted collaboration, automatic collaborative learning process analysis, and various instructional models in specific domains such as robot-assisted language learning (RALL) [8,9]. In our study, we used the NAO robot with autonomous control and without remote control (automated robot).

Robot-supported collaborative learning (RSCL) describes a specific CSCL approach in which a physical, embodied robot supports the collaborative learning process [4,5,6]. Compared to other technologies used in CSCL, physical robots, comprising categories such as social, humanoid, educational, and service robots, can be applied not only to mediate interactions but also to act as interaction partners with a shared presence in a physical space, including a classroom [21]. From this perspective, RSCL differs from CSCL in that a robot is used as a co-present interaction agent equipped with a social role and a social presence [21].

Previous research in the field of robotic facilitation of collaborative learning has reported promising effects of applying social robots as facilitators, emphasising that robots can support collaboration in real-world environments [7]. While a human facilitator may support collaboration in teams in a more individual and spontaneous way, a robotic facilitator can support collaborative learning in a more efficient, consistent, and non-judgemental way [3,7]. Research has shown that applying a social robot as a facilitator of CL in small groups can improve the attention and motivation of learners [3], provide objectivity [4], improve efficiency and time management [4,5], and enhance the learning experience on both the social and cognitive levels, including stimulating interest, curiosity, understanding, and motivation [5]. The study by [4] compared CL facilitation by the NAO robot to a human facilitator and to facilitation with the help of tablets in an undergraduate course on Human–Computer Interaction (HCI). The study revealed some key benefits of a robotic facilitator, such as improved time management in facilitating group activities, higher accuracy and focus in repeated activities, and the objective, non-judgemental communication of the robotic facilitator [4]. The drawbacks of the robot compared to a human facilitator included the technical limitations of speech recognition, limited adaptability to a diversity of situations and learners, and overall lower responsiveness [4]. The study by [22] explored the application of the NAO robot as a facilitator of brainstorming activities in small groups of students. In this experiment with 54 university students, facilitation by the robot was compared to human facilitation. The aim of the study was to explore whether the robot-led condition influenced productivity in brainstorming.
The results showed that brainstorming productivity, measured by the number of generated ideas, was similar in both conditions [22]. The study concluded that robot facilitators can effectively support brainstorming activities, especially when human facilitators are unavailable or undesirable [22]. The study by [23] compared a robot tutee (NAO) to a human tutee and showed that enjoyment, willingness to play again, and perceptions of the quality of the tutee’s questions and of participants’ own learning gains were similar in both conditions. However, participants found communication with the robot more difficult, mainly due to the limitations of speech recognition in off-the-shelf robots such as NAO [23].

Moreover, studies in robot-assisted language learning (RALL) provide valuable insights into robot facilitation of CL. A review of 22 journal articles on RALL [24] reported that most previous RALL studies were conducted with children, including pre-schoolers (aged 4–7 years) and school children (aged 7–12 years). Nevertheless, some RALL studies have been conducted with adult learners in the area of collaborative language learning, and these studies are especially relevant to the work presented in this paper. One reason is that adult learners may have higher demands related to the robot’s realism in appearance and behaviour, as well as higher requirements related to the transferability of the learning experience into real-life settings [25]. The study by [25] explored interaction and collaboration in robot-assisted language learning with 33 adult second language learners and concluded that robots such as NAO or Furhat, which have a high degree of anthropomorphism and expressiveness, may be best suited for application and studies with adult learners [25]. The degrees of freedom in the robot’s body movements have been regarded as one of the main factors for interaction with robots in a physical space [25]. In the study by [25], which applied the Furhat robot in the role of a social companion in collaborative language learning, the learning activity focused on communication practice in pairs of adult learners. While the robot was seen as inferior to a human instructor, the authors concluded that robot–learner interactions can be effective if a robot is applied as a catalyst of collaborative learning among peers [25]. This approach was also applied in the study presented in this paper, in which the robot facilitated the collaboration among students in a team.
This interaction pattern differs from one-on-one robot–human interaction, in which the robot communicates directly with a single human and directs its attention towards that person, allowing undivided focus and personalised assistance, feedback, or guidance based on cues from the individual. The interaction dynamics are different when a robot facilitates collaboration among peers in a team. In this HCI pattern, the robot coordinates interactions in a team, facilitates discussions, and fosters collaboration among team members in support of a common goal.

The study by [25] also analysed how robot–learner interactions in RALL are influenced by the behaviour of the robot. The aim of the study was to “determine a suitable pedagogical set-up for RALL with adult learners, since RALL studies have almost exclusively been performed with children and youths” [24] (p. 1273). The study applied different interaction patterns, with the robot acting as an interviewer, narrator, facilitator, and interlocutor. These four interaction patterns were based on a subset of the interaction strategies of human moderators. In the interaction pattern of the robot as a facilitator, the robot enhanced learner-to-learner interaction by encouraging learners to talk with each other, asking open questions, and asking one peer to comment on what the other peer said. The “robot as facilitator” design included fewer robot statements and questions, and its utterances were shorter than in the other three interaction patterns. This design strategy was used when designing the script for the game of planning poker, in which the facilitator (human or robot) makes few, short utterances and the focus is on enhancing interactions between peers in a team.

Furthermore, the study by [25] showed that the adult participants were most active when the robot encouraged learner-to-learner interaction in its role as a facilitator. The level of activity was measured by the number and length of learner utterances. In the “robot as facilitator” condition, learners produced the highest number of utterances, and their utterances were the longest, compared to the “robot as interviewer” condition, which resulted in the lowest share of learner utterances, and the “robot as narrator” condition, which resulted in the shortest total length of learner utterances [25]. These results indicate that a robot used in the role of facilitator can enhance the activity of learners and, consequently, collaboration in groups [25].
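To make these activity measures concrete, the sketch below computes the number, share, and total length of learner utterances from a transcript. It is a hypothetical illustration, not the procedure used by [25]: the `Utterance` transcript format and the `activity_metrics` function are assumptions, and utterance length is counted in words, which the cited study may have measured differently.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    speaker: str  # "robot" or a learner identifier (hypothetical format)
    text: str


def activity_metrics(transcript: list[Utterance]) -> dict[str, float]:
    """Count learner utterances, their share of all turns, and their length."""
    learner = [u for u in transcript if u.speaker != "robot"]
    words = [len(u.text.split()) for u in learner]  # length in words (assumption)
    return {
        "learner_utterances": len(learner),
        "learner_share": len(learner) / len(transcript) if transcript else 0.0,
        "total_learner_words": sum(words),
        "mean_learner_words": sum(words) / len(words) if words else 0.0,
    }


# Invented three-turn exchange, used only to exercise the function.
session = [
    Utterance("robot", "What do you think about your partner's idea?"),
    Utterance("L1", "I think it could work in a small café"),
    Utterance("L2", "I agree"),
]
print(activity_metrics(session))
```

Comparing these values across conditions (for example, facilitator versus interviewer) is what distinguishes "most utterances" from "lowest share" and "shortest total length" in the findings above.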

Despite the studies presented above, there is still little research comparing the effects of different forms of robotic facilitation in collaborative learning, especially in the context of higher education, and few comparative studies exploring differences between human and robot facilitation in CL settings. The study presented in this paper contributes to filling this research gap by exploring the effects of four types of human and robot facilitation of collaborative learning with adult learners. The study specifically explores and compares effects related to the perceptions of the facilitator’s competence (FC), the learning experience (LX), and the learning outcomes (LO). The next section outlines the methods applied to (a) designing the game of planning poker with the robotic and human facilitators and (b) designing the empirical study.

3. Methods

3.1. Study Objectives and Design

The study explored four different types of human and robot facilitation in the game of planning poker as a collaborative learning activity with undergraduate students in the course on agile project management in the study programme Digital Economy (BSc.) at a university of applied sciences in Germany. Throughout this course, students gain a solid understanding of agile principles and project management practices via a series of interactive seminars and project-based activities. The game of planning poker is introduced in this course as a method for the relative estimation of tasks in a project along with other estimation techniques such as story points. Planning poker is a game-based technique for relative effort estimation in agile teams, who estimate the effort needed to complete tasks in relation to each other [15,16]. Typically, the game of planning poker is played in small teams and is guided by a human facilitator who ensures that the method is used properly [15,16]. In the game of planning poker, all participants are encouraged to make an individual estimation and then reach a consensus. The game of planning poker as a CL activity focuses on peer communication, making decisions, negotiating, and justifying standpoints in the process of consensus building. Since a consensus is required for the successful completion of the game, team discussions aim at sharing different perspectives and influencing each other’s assessment of the effort required for completing the project tasks which are estimated during a planning poker session.

The aim of our study was twofold. The first aim was an educational one—we introduced the game of planning poker to provide students with an engaging, collaborative learning experience for small teams as part of the course on agile project management. The learning outcome was defined as follows: “The students understand and know how to apply relative estimation techniques”. At the same time, we followed a research goal which focused on exploring and comparing the effects of different forms of human and robot facilitation on perceptions of the facilitator’s competence, the learning experience, and the learning outcomes. Our primary research question was “What are the differences in the perceptions of the facilitator, the learning experience, and learning outcomes in the four conditions, i.e., C1 human online, C2 human face-to-face, C3 unsupervised, and C4 supervised robot facilitation?”

To encourage collaborative learning (CL) in teams, the game of planning poker made use of the three approaches to designing CL outlined by [17], i.e., (a) the conceptual approach was used to stimulate general collaboration by promoting interaction and reflection throughout the process of estimation; (b) the direct approach was used to structure the estimation process from individual estimates through discussion to consensus; (c) the cognitive approach was used to structure the activity by using methods such as describing, explaining, and defining, as well as encouraging the participants to consider the standpoints of all team members.

In CSCL, micro and macro scripts are used to structure collaboration at different levels of granularity [20]. While macro scripts focus on orchestrating CL activities, micro scripts include prompts for specific activities [20]. The script of the game of planning poker was designed as an integrated macro and micro script and included the description of the orchestration of the game on the macro level with key components (participants, activities, roles, resources, groups) and mechanisms (task distribution, group formation, sequencing), as defined by [2]. The script also included detailed information such as prompts and encouragements on the micro level [20].

Furthermore, the script was based on the cyclical model of self-regulated learning (SRL) by Zimmerman [26]. According to Zimmerman’s social cognitive approach to SRL, self-regulated learning describes the degree to which learners are active in their learning process on the metacognitive, motivational, and behavioural levels [26]. Self-regulated learning depends on three types of influences: personal, environmental, and behavioural [26]. Accordingly, the model distinguishes three main self-regulatory phases (forethought, performance, and self-reflection), each of which is broken down into a number of subcomponents [27]. The macro script for the planning poker game was based on the SRL model, as it structures the game-play into three main phases, i.e., introduction, estimation, and reflection, which correspond to the three self-regulation phases described by [27]. This SRL model [27,28] has already been applied in previous studies, e.g., by [19], to the design of a macro script for computer-supported collaborative learning (CSCL).
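The integrated macro/micro script can be pictured as a simple data structure: the five components of the generic framework become fields, group formation is represented by the list of groups, sequencing by the order of the macro phases (introduction, estimation, reflection), and the micro level by the prompts attached to each phase. The Python sketch below is an illustration under these assumptions, not the authors’ implementation; the class names, the `distribute` method (which assumes that every group estimates the full task list), and all prompts are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Phase:
    """A macro-level step of the script; its prompts form the micro level."""
    name: str
    prompts: list[str] = field(default_factory=list)


@dataclass
class CollaborationScript:
    # Components of the generic CSCL scripting framework
    participants: list[str]
    activities: list[str]
    roles: dict[str, str]
    resources: list[str]
    groups: list[list[str]]  # group formation mechanism
    phases: list[Phase] = field(default_factory=list)  # sequencing: order matters

    def distribute(self, tasks: list[str]) -> dict[int, list[str]]:
        """Task distribution (assumed): every group estimates the full task list."""
        return {index: list(tasks) for index in range(len(self.groups))}

    def sequence(self) -> list[tuple[str, str]]:
        """Flatten the macro phases into the ordered list of micro prompts."""
        return [(phase.name, prompt) for phase in self.phases for prompt in phase.prompts]


script = CollaborationScript(
    participants=["S1", "S2", "S3", "S4"],
    activities=["individual estimation", "discussion", "consensus"],
    roles={"facilitator": "robot or teacher", "players": "students"},
    resources=["planning poker cards", "task list"],
    groups=[["S1", "S2"], ["S3", "S4"]],
    phases=[  # aligned with forethought, performance, and self-reflection
        Phase("introduction", ["Welcome!", "Let us try the cards once."]),
        Phase("estimation", ["Choose a card.", "Reveal your cards.", "Discuss."]),
        Phase("reflection", ["What did you learn about relative estimation?"]),
    ],
)
for phase_name, prompt in script.sequence():
    print(f"[{phase_name}] {prompt}")
```

Separating the phase order from the prompts mirrors the macro/micro distinction: the same three phases could be reused in all four conditions while only the delivery of the prompts (human or robot) changes.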

The macro script of planning poker includes a step-by-step description of a sequence of events during the game of planning poker, starting with a welcome and ending with a reflection and farewell. The micro script includes an introduction to planning poker with information about the principles of agile estimation and an explanation of how planning poker will be played in a small group. The micro script includes prompts such as a simple warm-up exercise, which aims to make sure that students understand and know how to use planning poker cards. In the robot condition, the micro script also includes prompts related to the modes of interaction with the robot (e.g., using speech and foot bumpers as tactile sensors) and an explanation of the tasks to be estimated during the play, as well as task-by-task prompts and encouragements for team collaboration. In this way, the script helps to structure collaboration uniformly in all four conditions.

The game of planning poker is divided into five parts: (1) Welcome and Introduction, (2) Explanation, (3) Planning Poker, (4) Create Protocol, and (5) Closing. In “Welcome and Introduction”, the facilitator welcomes and introduces students to the game of planning poker. In “Explanation”, the facilitator explains the idea of agile estimation and the structure of the game. In “Planning Poker”, the facilitator presents the tasks to be estimated, reads each task, and asks participants to perform a relative estimation by choosing one of the planning poker cards. The tasks used in the game of planning poker in the four conditions are presented in Table 1.

Table 1.

Tasks used for collaborative estimation in student teams in the game of planning poker.

Planning poker cards are a tool used in agile project management, particularly for estimating the relative effort required to complete project tasks. Each planning poker card displays a numerical value representing effort or complexity, typically using a modified Fibonacci sequence (0, 1, 2, 3, 5, 8, 13, etc.). Tasks that are estimated to require more effort are assigned a higher number from the Fibonacci sequence. The aim of the estimation is not to determine the exact time or resources required to complete a task but to establish a common understanding of “effort” in a team (e.g., what does “effort” mean to us in terms of workload, resources, difficulty levels, etc.?). This common ground is worked out by the members of the team. In projects, this relative estimation technique helps to prioritise tasks and allocate resources effectively. Each participant independently selects a card that represents their initial estimate. Upon a signal from the facilitator, all participants reveal their cards to each other simultaneously. If the estimates differ, participants discuss their reasoning and aim to reach a common understanding. This process continues until the team reaches a consensus.
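The estimation round described above (independent selection, simultaneous reveal, discussion until the estimates converge) can be sketched as follows. This is a minimal illustration, not the logic of the application used in the study; the player names and recorded rounds are hypothetical, and the deck is assumed to stop at 13, the last value listed in the text.

```python
from typing import Optional

# Card values listed in the text; the deck is assumed to stop at 13 here.
DECK = (0, 1, 2, 3, 5, 8, 13)


def reveal(selections: dict[str, int]) -> Optional[int]:
    """Reveal all cards at once; return the agreed value, or None if estimates differ."""
    for player, card in selections.items():
        if card not in DECK:
            raise ValueError(f"{player} played {card}, which is not in the deck")
    distinct = set(selections.values())
    return distinct.pop() if len(distinct) == 1 else None


def estimate_task(rounds: list[dict[str, int]]) -> tuple[int, int]:
    """Replay recorded rounds until all cards match; return (estimate, rounds needed).

    Between rounds, the team discusses its reasoning (not modelled here).
    """
    for number, selections in enumerate(rounds, start=1):
        agreed = reveal(selections)
        if agreed is not None:
            return agreed, number
    raise RuntimeError("no consensus reached in the recorded rounds")


# Hypothetical team: the first reveal shows a spread, the second converges on 5.
rounds = [
    {"Ana": 3, "Ben": 5, "Cem": 8},
    {"Ana": 5, "Ben": 5, "Cem": 5},
]
print(estimate_task(rounds))  # (5, 2)
```

Treating consensus as "everyone shows the same card" is a simplification; in the game as described, a team may also settle on a value through discussion and then report it to the facilitator.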

In the “Planning Poker” phase of our script, there is a standard procedure for each task to be estimated, i.e., the facilitator (a) reads a task from the list of tasks, (b) asks the participants to independently select a card that corresponds to their estimate of the effort required to complete that task, (c) asks all participants to reveal their chosen cards to each other simultaneously, and, finally, (d) asks the participants to justify why they chose a card with a specific numerical value and to discuss these individual estimates as a group with the aim of reaching a consensus. When participants estimate the effort required to complete a particular task, they estimate its complexity or difficulty relative to the other tasks on the list. A task that is estimated to be more complex might require more time and/or specific expertise and is, hence, assigned a higher value from the Fibonacci sequence than a simpler task. Planning poker cards allow the players to signal their initial estimates to the other group members. In the “Planning Poker” phase, the facilitator uses encouraging utterances to stimulate group discussion and consensus building. An example of robotic encouragement is “Ok, so now justify your estimation and discuss which number you will assign to this task”. The human facilitator could naturally use a wider range of encouragements and adjust them to the situation, whereas the robot's encouragement prompts in our application are pre-programmed and, hence, limited. Once a team reaches a consensus, a group member reports the final result to the facilitator. In the “Create Protocol” phase, the facilitator saves the result: a human facilitator saves it in a spreadsheet, while the robot automatically generates a PDF file with the report.
This process is repeated until all tasks have been estimated and the team has reached a consensus on each of them. In “Closing”, the facilitator wraps up the activity, asks students to reflect on what they learned, and reviews key learnings from the game.
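The five phases and the per-task steps (a) to (d) can be outlined as a simple facilitator loop. The sketch below is a hypothetical outline, not the Choregraphe application used in the study: the task names are invented placeholders (Table 1 lists the actual tasks), the group discussion is replaced by a callback that returns the agreed value, and the protocol is written as CSV text as a stand-in for the spreadsheet or PDF report described above. It collects the results during "Planning Poker" and writes them out in "Create Protocol"; saving after every task would be an equally valid reading of the script.

```python
import csv
import io
from typing import Callable

# Phase names as listed in the text.
PHASES = ("Welcome and Introduction", "Explanation", "Planning Poker",
          "Create Protocol", "Closing")


def run_session(tasks: list[str],
                reach_consensus: Callable[[str], int]) -> tuple[list[str], str]:
    """Step through the five phases; return the facilitator log and a CSV protocol."""
    log: list[str] = []
    results: list[tuple[str, int]] = []
    protocol = ""
    for phase in PHASES:
        log.append(f"phase: {phase}")
        if phase == "Planning Poker":
            for task in tasks:
                log.append(f"(a) read task: {task}")
                log.append("(b) select a card independently")
                log.append("(c) reveal all cards simultaneously")
                log.append("(d) justify, discuss, and agree on one value")
                # Stand-in for the group discussion: the callback returns the consensus.
                results.append((task, reach_consensus(task)))
        elif phase == "Create Protocol":
            buffer = io.StringIO()
            writer = csv.writer(buffer)
            writer.writerow(["task", "estimate"])
            writer.writerows(results)
            protocol = buffer.getvalue()
    return log, protocol


# Hypothetical tasks and agreed estimates.
agreed = {"Set up repository": 2, "Write user stories": 5}
log, protocol = run_session(list(agreed), agreed.__getitem__)
print(protocol)
```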

The application “Planning Poker with NAO” used in conditions C3 and C4 was programmed using the Choregraphe software for NAO (version 2.8.6), with additional Python code for documenting the results of each estimation round (one result per task). The application is described in more detail in [5,6] and is available as an open-source application on GitHub (https://github.com/Humanoid-Robots-as-Edu-Assistants/Planning_Poker, accessed on 4 July 2024) and in the Supplementary Materials.

3.2. Participants and Conditions

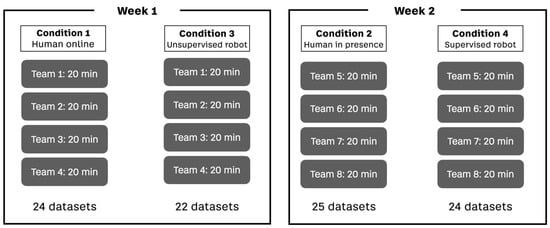

The study took place at the end of the winter semester in January 2023, after the students had gained a solid understanding of the common principles and methods of agile project management in the course. The study participants were students from the course on agile project management in the third semester of the undergraduate programme Digital Economy (BSc.). The survey was administered at the end of each game. The students consented to the inclusion of their survey data in academic research. We received a total of 95 complete datasets from the survey (cf. Figure 1). The age distribution was as follows: 52.6% were 20 to 24 years old, 17.9% were under 20, and 16.8% were 25 to 29 years old. Of the participants, 64.2% were male, and 35.8% were female. The majority of the study participants had a Western European background (60%). Altogether, there were 8 student teams and 16 game sessions (2 sessions per team; each team participated in 2 out of 4 conditions). Each team comprised 6–7 students. The duration of a planning poker game session was 20 min. Student teams could choose either week 1, with the human online (C1) and unsupervised robot (C3) conditions, or week 2, with the human in-person (C2) and supervised robot (C4) conditions. In this way, each team participated in one human-facilitated planning poker game (C1 or C2) and in one robot-facilitated game (C3 or C4). Teams were distributed equally across conditions, with 4 teams, i.e., 4 game sessions, per condition. From the online survey, we obtained 24 datasets in conditions C1 and C3, 22 in C2, and 24 in C4 (Figure 1).

Figure 1.

Allocation of teams and datasets obtained in C1 and C3 (week 1) and C2 and C4 (week 2).

Students booked the time slots using an online booking tool in the learning management system. Student teams were instructed to select two time slots either in week 1 (C1 and C3) or in week 2 (C2 and C4). Students were not told the exact type of facilitation on a given day. The teacher communicated the information to students along these lines: “Please choose your two time slots, either in week 1 with teacher online and robot in class, or in week 2 with teacher in class and robot in class”. In this way, students knew they would be playing once with a teacher and once with a robot, but they did not know the details of each condition.

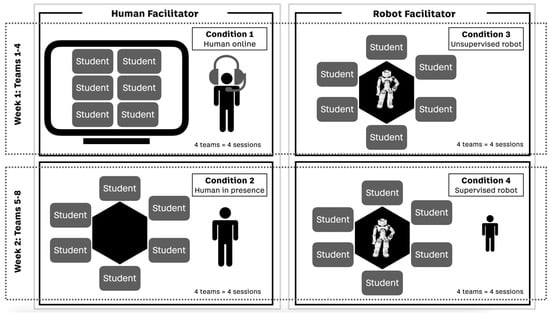

The study had a within- and between-subjects design. In the within-subjects part, the 4 teams in week 1 (teams 1–4) and the other 4 teams in week 2 (teams 5–8) each experienced 2 different conditions, 1 with a human facilitator and 1 with a robot facilitator, i.e., teams 1–4 participated in C1 (human) and C3 (robot), while teams 5–8 participated in C2 (human) and C4 (robot). This allowed the effects of the different conditions to be compared within the same group of participants, i.e., within teams 1–4 in week 1 and within teams 5–8 in week 2. In the between-subjects part, we compared (a) the effects of the two types of human facilitation in C1 (teams 1–4) and C2 (teams 5–8) and (b) the effects of the two types of robot facilitation in C3 (teams 1–4) and C4 (teams 5–8). Teams 1–4 and teams 5–8 were independent groups, as they did not experience the same conditions. By comparing effects across these independent teams, we could explore nuanced differences between the two types of human facilitation and between the two types of robotic facilitation (Figure 2).

Figure 2.

Within- and between-subjects design with 4 conditions. Teams 1–4: C1: human online and C3: unsupervised robot (week 1); teams 5–8: C2: human in person and C4: supervised robot (week 2).
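The allocation of teams to conditions determines which comparisons are paired and which are independent. The small sketch below encodes the design from Figure 2 at the team level only (the survey data themselves are individual responses) and derives the comparison type for each pair of conditions; the dictionary layout and the function name are illustrative.

```python
from itertools import combinations

# Team-to-condition allocation as described in the text.
DESIGN = {
    "C1": {"label": "human online", "week": 1, "teams": {1, 2, 3, 4}},
    "C2": {"label": "human in person", "week": 2, "teams": {5, 6, 7, 8}},
    "C3": {"label": "unsupervised robot", "week": 1, "teams": {1, 2, 3, 4}},
    "C4": {"label": "supervised robot", "week": 2, "teams": {5, 6, 7, 8}},
}


def comparison_type(a: str, b: str) -> str:
    """Classify a pair of conditions by whether the same teams took part in both."""
    teams_a, teams_b = DESIGN[a]["teams"], DESIGN[b]["teams"]
    if teams_a == teams_b:
        return "within-subjects (paired)"
    if teams_a.isdisjoint(teams_b):
        return "between-subjects (independent)"
    return "mixed"


for a, b in combinations(sorted(DESIGN), 2):
    print(a, b, comparison_type(a, b))
```

The loop also lists C1/C4 and C2/C3, which are independent pairs but not among the targeted comparisons of the design (C1/C3 and C2/C4 paired; C1/C2 and C3/C4 independent).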

The description of each of the four conditions (cf. Figure 2) is presented below:

- Condition 1: Human facilitation online. In this condition, student teams played planning poker with the human teacher during a video call using the web conferencing tool Zoom. To facilitate the planning poker game sessions online, each team was allocated a separate 20 min time slot in Zoom on day 1 of week 1. The teacher used a free real-time online tool called planningpoker.live to support agile estimation in remote groups. Each student team met with the teacher in a video call in the selected time slot on day 1. Each student used their own device (mostly notebooks) from their chosen location (e.g., home or campus), so the team members participated remotely and were not co-located. The teacher conducted the sessions from home using a notebook and connected with each student team for 20 min through Zoom’s webcam and audio features, which enable both visual and auditory communication. All four online planning poker game sessions in this condition were divided into five parts according to the unified script for the game, i.e., “Introduction”, “Explanation”, “Planning Poker”, “Documentation”, and “Closing”. In “Introduction”, the teacher introduced the online tool and explained how it would be used during the game. In “Explanation”, the teacher explained the concept and the rules of the planning poker game. In “Planning Poker”, the actual planning poker game took place. In this phase, students used a digital list of tasks to be estimated and a set of digital planning poker cards with the modified Fibonacci scale for relative estimation. The estimation outcomes of each session were documented in a digital spreadsheet in the “Documentation” phase. In “Closing”, the teacher wrapped up the session, asked students to reflect on what they learned, reviewed key learnings from the session, asked students to participate in the online evaluation survey, ended the video call, and, finally, closed the online planning poker tool.

- Condition 2: Human facilitation in person. In this condition, students played the planning poker game with the teacher in person on the university campus on day 1 of week 2. Each of the four student teams met with the teacher in person for a booked 20 min time slot in a seminar room allocated to the course. All planning poker game sessions in this condition were divided into five parts, “Welcoming”, “Explanation”, “Planning Poker”, “Documentation”, and “Closing”, according to the unified script for the game of planning poker. In “Welcoming”, the teacher welcomed the students and invited them to gather around a table (Figure 1). In “Explanation”, the teacher explained the concept and rules of the planning poker game, whereas, in “Planning Poker”, the actual game of planning poker took place. In this phase, students used a printed list of tasks to be estimated and a set of physical planning poker cards with the modified Fibonacci scale. The estimation outcomes from each game session were documented by the teacher in a spreadsheet in the “Documentation” phase. In the “Closing” part, the teacher wrapped up the session, asked the students to reflect on what they learned, reviewed key learnings from the session, invited students to participate in the online evaluation survey by sharing the link to the survey, and, finally, closed the session. After the session, the students left the room, and the teacher cleaned up the room.

- Condition 3: Unsupervised robot facilitation. In this condition, student teams played planning poker with the NAO robot on day 2 of week 1. Each student team arrived at the 20 min time slot allocated to them in Moodle, entered the room, and was asked by the teacher to gather around a pentagon-shaped table with the NAO robot placed in the middle of the table (Figure 1). We chose this position for the robot to enable eye contact with the students and because of the robot's small size (58 cm in height). From then on, the teacher sat at the back of the room and observed the students’ interaction with the robot without getting involved in the game. All planning poker game sessions in this condition were divided into five parts, “Welcoming”, “Explanation”, “Planning Poker”, “Documentation”, and “Closing”, according to our unified script. In “Welcoming”, the robot welcomed the students and introduced itself as an agile coach. In “Explanation”, the robot explained the concept and rules of the planning poker game, whereas, in “Planning Poker”, the actual game of planning poker took place. In this phase, students used a printed list of tasks to be estimated and a set of physical planning poker cards with the modified Fibonacci scale for relative estimation. The estimation outcomes from each session were automatically documented by the NAO robot in a PDF report file in the “Documentation” phase. In the “Closing” part, the robot wrapped up the session, asked students to reflect on what they learned, and reviewed key learnings from the session. Finally, the teacher invited students to participate in the online evaluation survey by sharing the link to the survey and closed the session. After the session, the students left the room, and the teacher cleaned up the room.

- Condition 4: Supervised robot facilitation. In this condition, student teams played planning poker with the NAO robot on day 2 of week 2. Each student team arrived at the 20 min time slot allocated to them, entered the room, and was asked by the teacher to gather around a pentagon-shaped classroom table with the NAO robot placed in the middle of the table, as in condition 3. From then on, the teacher was present in the classroom, walking around the table and offering students help on demand, e.g., clarifying ad hoc questions related to the nuances of relative estimation or the ideas behind some of the tasks. In this way, the teacher was involved in the game-play, giving students more individual and contextual support on demand than the robot could have delivered. All planning poker game sessions with NAO were divided into five parts, “Welcoming”, “Explanation”, “Planning Poker”, “Documentation”, and “Closing”, according to our unified script. In “Welcoming”, the robot welcomed the students and introduced itself as an agile coach (Figure 1). In “Explanation”, the robot explained the concept and rules of the planning poker game, whereas, in “Planning Poker”, the actual game of planning poker took place. Students requested support from the teacher mostly in this phase, during which they used a printed list of tasks to be estimated and a set of physical planning poker cards with the modified Fibonacci scale. While the robot guided students step by step in the same way as in condition 3, the teacher additionally answered students’ questions and provided hints whenever they noticed that students were unsure about a nuance of agile estimation or the meaning of a task. The estimation outcomes from each session were automatically documented by the robot in a PDF report file in the “Documentation” phase.
In the “Closing” part, the robot wrapped up the session, asked the students to reflect on what they learned, and reviewed key learnings from the session. Finally, the teacher invited students to participate in the online evaluation survey and closed the session. After the session, the students left the room, and the teacher cleaned up the room.

The setup of conditions C3 and C4 with the robot NAO is visualised below (Figure 3). The same setup was used for C3 (unsupervised robot) and C4 (supervised robot) with the difference being that a teacher was sitting at the back of the room in C3, and the teacher was standing close to the team in C4.

Figure 3.

Study setup with NAO in conditions C3 (unsupervised robot) and C4 (supervised robot).

3.3. Materials and Measures

Materials in all conditions included the list of estimation tasks, planning poker cards, and a laptop. In C1 (human online), the laptop was used to run the online session, and, in C2 (human F2F), to save the estimation results in a spreadsheet. While C2, C3, and C4 used a physical set of planning poker cards for each team member, C1 (human online) used virtual planning poker cards provided by the online planning poker tool planningpoker.live. In C3 and C4, a laptop was used to deploy the application on the robot. The robot conditions C3 and C4 additionally included the NAO robot and a human operator who monitored the deployment of the application.

The study applied mainly quantitative online survey methods. The measures included the learning experience (LX) scale (20 items), the facilitator’s competence (FC) scale (6 items), the learning outcomes (LO, 2 items), and socio-demographic measures. The measures applied in the online survey are presented in more detail below:

- Learning experience (LX) scale: Participants’ learning experience was measured with the 20-item LX scale created by [5], which is a shortened version of the 76-item LX scale created by [27]. The original LX scale by [27] was developed to assess the learning experience (LX) in the context of serious games and includes 76 items related to different qualities of the LX, such as perceived usefulness, perceived ease of use, perceived usability, perceived sound and visual effect adequacy, perceived narrative adequacy, immersion, enjoyment, perceived realism, perceived goal clarity, perceived adequacy of the learning material, perceived feedback adequacy, perceived competence, motivation, perceived relevance to personal interests, and perceived knowledge improvement. The LX scale was shortened and adapted to the collaborative learning (CL) scenario of planning poker, i.e., items measuring the perceived realism of virtual objects and the perceived adequacy of storytelling were removed, as they are not relevant for the CL activity of planning poker. The shortening aimed to reduce respondent fatigue and ensure higher response rates, which might otherwise have been compromised by the length of the original scale [5]. The shortening of the scale and the adaptations to the items are described in more detail in [5]. Items were assessed on a five-point Likert scale (1 = “strongly disagree”; 5 = “strongly agree”), following the design of the original rating scale by [27]. The internal consistency of the LX scale was α = 0.87.

- Facilitator’s competence (FC) scale: Participants’ perception of the facilitator’s competence was measured with the six-item facilitator’s competence scale used in the study by [29] to measure the perceived competence of a robot. This scale is derived from the Stereotype Content Model by [30], which proposes that competence and warmth, as two fundamental dimensions of social cognition, predict individuals’ judgments of others. The six items (i.e., competent, confident, capable, efficient, intelligent, skilful) were rated on a five-point Likert scale (1 = “strongly disagree”; 5 = “strongly agree”). The scale had a high internal consistency of α = 0.95.

- Learning outcomes (LO): The participant’s perception of the attainment of learning outcomes was measured using two items, i.e., (1) “The activity helped me to understand how to play planning poker” and (2) “I feel confident in agile estimation after playing the game”. Items were rated on a five-point Likert scale (1 = “strongly disagree”; 5 = “strongly agree”).

- Socio-demographic measures: The survey included items on the socio-demographic variables of age and gender. Participants’ age was assessed through pre-defined age groups (under 20, 20–24, 25–29, 30–34, 35–39, 40–44, 45–49). Gender was assessed through a single-choice question with three answer options (female, male, diverse).
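Assuming the α values reported above are Cronbach's alpha, as is conventional for scale reliability, the coefficient compares the summed item variances with the variance of the total score: α = k/(k − 1) · (1 − Σ item variances / variance of total). The sketch below computes it with the Python standard library for a respondents × items matrix; the response matrix is hypothetical, not study data.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix of Likert scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_variances = [variance(item) for item in zip(*responses)]
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical answers of five respondents to a three-item scale (1-5 Likert).
answers = [[5, 5, 4], [4, 4, 4], [3, 3, 2], [2, 3, 2], [1, 1, 1]]
print(round(cronbach_alpha(answers), 3))  # 0.979
```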

4. Results

Data from the online survey were analysed using IBM SPSS Statistics version 29. The study compared the effects of the four distinct facilitation types in the four conditions (C1 human online, C2 human face to face, C3 unsupervised robot, and C4 supervised robot) on the perceived facilitator’s competence (FC), learning experience (LX), and learning outcomes (LO).

4.1. Preliminary Data Analysis

Preliminary data analysis included tests of the assumptions of parametric statistics. Both Q-Q plots and P-P plots indicated normal distributions, and Levene’s tests showed homogeneity of variance. The results of the two-tailed bivariate Pearson correlations between the scale scores (FC, LX, and LO) are presented below (Table 2).

Table 2.

Means (M), standard deviations (SD), and correlations (Pearson) for FC, LX, and LO in the sample.

All scale means lay above the midpoint of the five-point scale, indicating an overall positive assessment of the facilitators’ competence (M = 3.96) and the learning experience (M = 3.35) and a high self-assessment of learning outcomes (M = 4.20) across conditions. The results also reveal significant positive correlations between the three scales: the higher the perceived facilitator’s competence, the more positive the learning experience and the higher the self-assessment of the learning outcomes.
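For readers who want to reproduce the correlation step outside SPSS, the sketch below computes Pearson's r and its test statistic t = r·√(n − 2)/√(1 − r²), which is compared against a t distribution with n − 2 degrees of freedom for the two-tailed test. The paired scores are hypothetical, and the p-value lookup is left out because the Python standard library has no t distribution.

```python
import math
from statistics import fmean


def pearson_with_t(x: list[float], y: list[float]) -> tuple[float, float, int]:
    """Return Pearson's r, its t statistic, and the degrees of freedom (n - 2)."""
    n = len(x)
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    r = sxy / math.sqrt(sxx * syy)
    t = r * math.sqrt((n - 2) / (1 - r * r))  # undefined when |r| == 1
    return r, t, n - 2


# Hypothetical FC and LX scale scores for five respondents.
fc = [1, 2, 3, 4, 5]
lx = [2, 1, 4, 3, 5]
r, t, df = pearson_with_t(fc, lx)
print(round(r, 3), round(t, 3), df)  # 0.8 2.309 3
```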

4.2. Comparing the Four Conditions C1–C4

In the next step, descriptive statistics for all items on the three scales (FC, LX, and LO) were computed for each of the four conditions (C1–C4). The results are summarised below (Table 3). To compare the values across the four conditions, we conducted one-way ANOVAs for the FC, LX, and LO scales at a significance level of p < 0.05. There was a significant difference in perceived facilitator’s competence (FC) across the four conditions (F(3,91) = 7.489, p < 0.001), but there were no significant differences in the total scale scores for LX and LO. However, there were significant differences for single items of the LX and LO scales (Table 3).

Table 3.

Results for facilitator’s competence (FC), learning experience (LX), and learning outcomes (LO) in the four conditions (C1–C4) with mean values, standard deviations, p-values, and effect sizes (η2).
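The one-way ANOVA and the η² effect sizes in Table 3 rest on the standard decomposition of the total sum of squares into between-group and within-group parts, with η² = SS_between / SS_total. The sketch below, using only the standard library and hypothetical scores, illustrates the computation; with 95 responses in four conditions, the degrees of freedom are (4 − 1, 95 − 4) = (3, 91), matching the reported F(3,91). Post hoc tests such as Tukey HSD are not included.

```python
from statistics import fmean


def one_way_anova(groups: list[list[float]]) -> tuple[float, int, int, float]:
    """Return F, df_between, df_within, and eta squared for independent groups."""
    values = [v for group in groups for v in group]
    grand_mean = fmean(values)
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((v - fmean(g)) ** 2 for g in groups for v in g)
    df_between = len(groups) - 1
    df_within = len(values) - len(groups)
    f = (ss_between / df_between) / (ss_within / df_within)
    eta_squared = ss_between / (ss_between + ss_within)  # share of total variance
    return f, df_between, df_within, eta_squared


# Hypothetical item scores in three conditions.
f, df_b, df_w, eta2 = one_way_anova([[1, 2, 3], [2, 3, 4], [5, 6, 7]])
print(round(f, 2), df_b, df_w, round(eta2, 4))  # 13.0 2 6 0.8125
```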

Tukey HSD post hoc tests revealed significant differences between the four conditions, with consistently higher values for the human facilitator online in C1. The human facilitator in C1 was perceived as (1) more competent than the unsupervised robot facilitator in C3 (M1 = 4.37 vs. M3 = 3.45); (2) more confident than the human facilitator in C2 and the unsupervised robot facilitator in C3 (M1 = 4.32 vs. M2 = 3.96 vs. M3 = 3.86); (3) more capable than the human facilitator face to face in C2, the unsupervised robot in C3, and the supervised robot in C4 (M1 = 4.41 vs. M2 = 4.04 vs. M3 = 3.32 vs. M4 = 4.08); (4) more efficient than the unsupervised robot in C3 (M1 = 4.14 vs. M3 = 3.23); (5) more intelligent than the human facilitator in C2 and the unsupervised robot facilitator in C3 (M1 = 4.24 vs. M2 = 4.00 vs. M3 = 3.00); and (6) more skilful than the unsupervised and supervised robot facilitator in C2 and C4 (M1 = 4.22 vs. M = 3.95 vs. M2 = 3.00). Notably, the perceived competence scores of the human facilitators in C1 and C2 did not differ significantly from those of the supervised robot facilitator in C4, except for item 3 (capable).

The Tukey HSD post hoc test revealed two significant differences on the learning experience (LX) scale, i.e., (1) participants forgot about time passing more in C1 than in C3 (M = 3.32 vs. M = 3.30); and (2) participants in C1 perceived the activity as more relevant than participants in C4 (M = 3.21 vs. M = 2.16). There was also one significant difference in learning outcomes (LO) in the Tukey HSD post hoc test, i.e., participants in C2 felt that the game helped them to understand planning poker (PP) more than participants in C3 (M = 4.65 vs. M = 3.00). The one-way ANOVA showed no significant effects of age or gender on any of the scales (FC, LX, LO).

4.3. Exploring the Impact of Multiple Factors

A series of linear regression analyses was conducted to explore the possible effects of multiple factors, i.e., (a) experimental condition, (b) age, and (c) gender, on the three dependent variables (1) facilitator’s competence (FC), (2) learning experience (LX), and (3) learning outcomes (LO). In all three regression analyses, the Durbin–Watson statistics showed that the data satisfied the assumption of observation independence.

The results related to the facilitator’s competence scale (FC, 6 items) showed a significant joint effect of condition, age, and gender on FC (F(3, 91) = 3.450, p = 0.020, R2 = 0.102). Further examination of the individual predictors indicated that the experimental condition (C1–C4) (t = −2.911, p = 0.005) was a significant predictor of FC, while the other factors were not (p > 0.050). The second and third multiple regressions, for learning experience (LX, 20-item scale) and learning outcomes (LO, 2 items), showed no significant effects of experimental condition, gender, or age on LX or LO.

Finally, two simple linear regressions, one of LX (20-item scale) on FC (6-item scale) and one of LO (2 items) on FC, were computed to explore the facilitator’s competence (FC) as a possible predictor of the learning experience (LX) and learning outcomes (LO). The first analysis showed a highly significant effect (F(1, 93) = 51.563, p < 0.001, R2 = 0.350), indicating that the facilitator’s competence (t = 7.181, p < 0.001) is a significant predictor of the learning experience (LX). Similarly, the second analysis showed a highly significant effect (F(1, 93) = 20.386, p < 0.001, R2 = 0.171), indicating that the perceived facilitator’s competence (t = 4.515, p < 0.001) is a significant predictor of the learning outcomes (LO).
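Because the last two regressions have a single predictor, two identities can serve as a consistency check: F equals t², and R² equals F/(F + df2). The reported values satisfy the first (7.181² ≈ 51.57 and 4.515² ≈ 20.39); for the second, F(1, 93) = 51.563 gives R² ≈ 0.357, so the reported 0.350 appears to correspond to the adjusted R². The sketch below computes these quantities, together with the Durbin–Watson statistic mentioned above, for an ordinary least-squares fit with one predictor; the data are hypothetical, and the function is an illustration rather than the SPSS procedure used in the study.

```python
import math
from statistics import fmean


def simple_regression(x: list[float], y: list[float]) -> dict[str, float]:
    """Ordinary least squares with one predictor, plus fit and residual diagnostics."""
    n = len(x)
    mx, my = fmean(x), fmean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    residuals = [b - (intercept + slope * a) for a, b in zip(x, y)]
    ss_res = sum(e * e for e in residuals)
    ss_tot = sum((b - my) ** 2 for b in y)
    r2 = 1 - ss_res / ss_tot
    mse = ss_res / (n - 2)
    return {
        "slope": slope,
        "r2": r2,
        "adj_r2": 1 - (1 - r2) * (n - 1) / (n - 2),
        "F": (ss_tot - ss_res) / mse,       # df = (1, n - 2)
        "t": slope / math.sqrt(mse / sxx),  # t**2 equals F with one predictor
        # Durbin-Watson: values near 2 suggest no first-order autocorrelation.
        "durbin_watson": sum((residuals[i] - residuals[i - 1]) ** 2
                             for i in range(1, n)) / ss_res,
    }


# Hypothetical FC (predictor) and LX (outcome) scale scores.
fit = simple_regression([1, 2, 3, 4, 5], [2, 1, 4, 3, 5])
print({name: round(value, 3) for name, value in fit.items()})
```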

4.4. Comparing Paired Observations

Two paired-samples t-tests were conducted to compare paired observations, i.e., (1) for teams 1–4, who participated in C1 (human online) and C3 (unsupervised robot) in week 1, and (2) for teams 5–8, who participated in C2 (human in person) and C4 (supervised robot) in week 2. The results are summarised below.

4.4.1. Comparing Human Online (C1) and Unsupervised Robot (C3)

- Facilitator’s competence (FC): The results showed significant differences (two-sided p < 0.001) for all six items and for the average scale score (t21 = 6.073, p < 0.001). On average, the perceived facilitator’s competence in the human online condition was 1.34 points higher than in the unsupervised robot condition (95% CI [0.88, 1.80]).

- Learning experience (LX): The results showed significant differences (two-sided p < 0.05) for 4 of the 20 LX items, i.e., (1) LX2 “I forgot about time passing while playing” (t21 = 3.367, p = 0.003); (2) LX8 “Planning poker makes learning more interesting” (t21 = 3.382, p = 0.003); (3) LX14 “I felt competent when playing planning poker” (t21 = 2.524, p = 0.020); and (4) LX18 “Playing planning poker stimulated my curiosity” (t21 = 2.914, p = 0.008). On average, participants in C1 (human online) forgot about time passing more, found the activity more interesting, felt more competent, and felt that the activity stimulated their curiosity more than in C3 (unsupervised robot). There was no significant difference (p > 0.05) in the total LX scale score between C1 and C3.

- Learning outcomes (LO): There were no significant paired correlations and no significant differences for either item between C1 and C3, even though the mean values were slightly higher for C1 (cf. Table 3).
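The paired-samples t-test operates on each participant's difference score between the two conditions. The sketch below computes the mean difference, t, df, and a 95% confidence interval; because the standard library has no t distribution, the critical value is passed in as a parameter (about 2.080 for df = 21). As a consistency check on the FC result above, 1.34 ± 2.080 × (1.34 / 6.073) gives approximately [0.88, 1.80], the reported interval. The scores in the example are hypothetical.

```python
import math
from statistics import fmean, stdev


def paired_t(first: list[float], second: list[float],
             t_critical: float) -> tuple[float, float, int, tuple[float, float]]:
    """Paired-samples t-test on difference scores (first - second)."""
    diffs = [a - b for a, b in zip(first, second)]
    n = len(diffs)
    mean_diff = fmean(diffs)
    se = stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    t = mean_diff / se
    margin = t_critical * se
    return mean_diff, t, n - 1, (mean_diff - margin, mean_diff + margin)


# Hypothetical FC scores of four participants in C1 and C3; 3.182 is t(0.975, df = 3).
mean_diff, t, df, ci = paired_t([5, 4, 5, 4], [4, 3, 3, 4], t_critical=3.182)
print(mean_diff, round(t, 3), df, tuple(round(v, 2) for v in ci))
```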

4.4.2. Comparing Human in Person (C2) and Supervised Robot (C4)

- Facilitator’s competence (FC): There were no significant paired correlations between the variables, either for single items or for the total scale score, even though the mean values were slightly higher for C2 for all items except one: the mean values for facilitator’s confidence were equal in C2 and C4 (cf. Table 3).

- Learning experience (LX): There were no significant differences in any of the LX items.

- Learning outcomes (LO): There was one statistically significant difference, in item LO1 “The activity helped me to understand planning poker” (t23 = 2.460, p < 0.05). On average, participants in C2 (human in person) felt that the activity helped them to understand the game of planning poker significantly more than participants in C4 (supervised robot) (mean difference = 0.625, 95% CI [0.099, 1.15]). Although the mean scores for item LO2 “I feel confident in agile estimation after playing planning poker” were higher in C4 (supervised robot) than in C2 (human in person), this difference was not significant (cf. Table 3).

4.5. Comparing Independent Observations

Two independent-samples t-tests were run on the data with a 95% confidence interval (CI) for the mean differences to compare two independent pairs, i.e., (1) human facilitators in C1 (human online) and C2 (human face to face); and (2) robotic facilitators in C3 (unsupervised robot) and C4 (supervised robot).

4.5.1. Comparing Human Online (C1) and Human in Person (C2)

- Facilitator’s competence (FC): The results showed significant differences for all six items and the total scale score (t35.088 = 2.218, p < 0.001) with a difference of 0.35 (95% CI, 0.33 to 1.14). On average, FC in C1 (human online) was higher than in C2 (human in person), i.e., for items FC1 “competent” (t43.804 = 1.488, p = 0.012), FC2 “confident” (t36.293 = 2.742, p < 0.001), FC3 “capable” (t33.559 = 3.009, p < 0.001), FC4 “efficient” (t42.949 = 1.298, p = 0.035), FC5 “intelligent” (t39.176 = 1.515, p = 0.025), and FC6 “skilful” (t37.528 = 2.439, p = 0.002).

- Learning experience (LX): There was only one significant difference in item LX8 “Planning poker makes learning more interesting” (t38.551 = 1.427, p = 0.005). The average score for this item in C1 was 0.340 points higher than the average score in C2.

- Learning outcomes (LO): There were no significant differences between the two conditions for either LO item (p > 0.05). The mean values for LO2 were equal, and the mean value for LO1 “I understand planning poker” was higher (but not significantly) in C2 (cf. Table 3).
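The fractional degrees of freedom reported for the independent-samples tests (e.g., t35.088) suggest that Welch's unequal-variances t-test was used, as in the "equal variances not assumed" row of SPSS output; this is an inference from the reported values and is not stated in the text. The sketch below computes Welch's t and the Welch–Satterthwaite degrees of freedom for two hypothetical groups.

```python
import math
from statistics import fmean, variance


def welch_t(a: list[float], b: list[float]) -> tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va = variance(a) / len(a)  # squared standard error of the mean of a
    vb = variance(b) / len(b)  # squared standard error of the mean of b
    t = (fmean(a) - fmean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Hypothetical item scores from two independent groups of unequal size and spread.
t, df = welch_t([1, 2, 3], [2, 4, 6, 8])
print(round(t, 3), round(df, 3))
```

Unlike the pooled-variance test, the resulting df is generally not an integer, which is why values such as 35.088 appear in the results.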

4.5.2. Comparing Unsupervised Robot (C3) and Supervised Robot (C4)

- Facilitator’s competence (FC): There were no significant differences between the conditions (p > 0.05). Although mean values were higher for all six FC items in C4 (cf. Table 3), these differences were not statistically significant.

- Learning experience (LX): There were two significant differences in items (1) LX3 “Playing planning poker was fun” (t40.902 = 0.837, p = 0.050), with a score significantly higher in C3 (unsupervised robot) with a difference of 0.25 (95% CI, −0.35 to 0.85); and (2) LX15 “I felt successful when playing planning poker” (t35.856 = −1.864, p = 0.031) with a score significantly higher in C4 (supervised robot), with a difference of −0.55 (95% CI, −1.15 to 0.49).

- Learning outcomes (LO): There were no significant differences between the two conditions for either LO item. Although the mean values for both items were higher in C4 (supervised robot), these differences were not statistically significant (cf. Table 3).

4.6. Comparing Human and Robot Facilitation

To compare human and robot facilitation as two independent groups and examine the differences between the means of the human (C1 and C2) and robot (C3 and C4) conditions, one-way ANOVAs were computed for the facilitator’s competence (FC), learning experience (LX), and learning outcomes (LO). The human conditions (C1 and C2) reached higher mean values for all positively formulated items except for four items, i.e., LX11 “I will definitely try to apply what I learned”, LX15 “I felt successful when playing planning poker”, LX16 “I felt a sense of control when playing planning poker”, and LX17 “Planning poker motivated me to learn”, for which the mean values in the robot conditions (C3 and C4) were higher. These results may indicate that the participants perceived the game of planning poker facilitated or co-facilitated by the robot as more motivating (LX17) and as providing a stronger feeling of success (LX15) and control (LX16), as well as enhancing the will to apply what was learned (LX11). At the same time, human facilitation seems to have enhanced concentration (LX1), forgetting about time (LX2), competence (LX14), enjoyment (LX3, LX5), knowledge (LX9), understanding (LX10), curiosity (LX18), and relevance (LX19). Compared to both robot conditions (C3 and C4), the game sessions of planning poker in the human conditions (C1 and C2) were perceived as an easier (LX7, LX12) and more interesting way to learn (LX8).

All negatively formulated items received lower values in the human conditions, indicating that the participants felt more bored (LX4) and more frustrated (LX6) in the robot conditions. The robot conditions were also rated as more complex (LX13). Finally, the robot conditions were rated as less relevant, i.e., they received higher agreement with “It was not relevant to me as I already knew most of it” (LX20). This result most probably stems from the study design: the human conditions (C1 in week 1, C2 in week 2) preceded the robot conditions (C3 in week 1, C4 in week 2), so students already knew the game of planning poker when they played it with the robot.

In the one-way ANOVA comparing the human (C1 and C2) and robot (C3 and C4) conditions across all items and scores in FC, LX, and LO, there were three significant differences at p ≤ 0.05, all related to LX: LX2 “I forgot about time passing while playing” (F(1,93) = 4.650, p = 0.034); LX14 “I felt competent when playing planning poker” (F(1,93) = 3.965, p = 0.049); and LX20 “Planning poker was not relevant to me” (F(1,93) = 6.493, p = 0.012). The most pronounced of these differences, in LX20 “Planning poker was not relevant to me, because I already know most of it”, is plausible since the human-facilitated sessions preceded the robot-facilitated sessions. The statistically significant results of this comparison are summarised in Table 4.
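For two groups, a one-way ANOVA is mathematically equivalent to a pooled-variance (Student) t-test: F = t², with k − 1 = 1 numerator and N − 2 denominator degrees of freedom. The reported F(1,93) values would therefore correspond to two groups with 95 responses in total. The minimal sketch below, which uses hypothetical ratings rather than the study data and is not the authors’ analysis code, computes F with (k − 1, N − k) degrees of freedom and lets one check the F = t² identity. A library routine such as `scipy.stats.f_oneway` computes the same F statistic together with its p-value.

```python
import math
from statistics import mean


def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA over k groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [mean(g) for g in groups]
    # Between-group sum of squares: size-weighted squared deviations of group means
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    # Within-group sum of squares: deviations of each rating from its own group mean
    ss_within = sum(
        sum((x - m) ** 2 for x in g) for g, m in zip(groups, group_means)
    )
    df_between, df_within = k - 1, n_total - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


def pooled_t(a, b):
    """Student's t statistic for two groups, assuming equal variances."""
    n1, n2 = len(a), len(b)
    m1, m2 = mean(a), mean(b)
    pooled_var = (
        sum((x - m1) ** 2 for x in a) + sum((x - m2) ** 2 for x in b)
    ) / (n1 + n2 - 2)
    return (m1 - m2) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))


if __name__ == "__main__":
    # Hypothetical ratings of one item in the human and robot conditions
    human = [4, 5, 3, 4, 5]
    robot = [3, 4, 2, 5, 3, 4]
    f, df1, df2 = one_way_anova(human, robot)
    t = pooled_t(human, robot)
    print(f"F({df1},{df2}) = {f:.3f}; t^2 = {t ** 2:.3f}")
```

With more than two groups (for example, all four conditions C1–C4), the same function yields k − 1 = 3 numerator degrees of freedom, and the equivalence with a single t-test no longer holds.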

Table 4.

Comparing human-facilitated (C1 and C2) and robot-facilitated (C3 and C4) conditions. Only items with statistically significant differences at * p < 0.05 are displayed in this table.

5. Discussion

In this paper, we explored the comparative efficacy of four different forms of human and robot facilitation of a collaborative learning activity, the game of planning poker. Our study, with a sample of 49 students on an undergraduate agile project management course, was conducted to (1) provide the students with an engaging, collaborative learning experience in small teams, following the key learning outcome for the game of planning poker defined as “the students will understand and know how to apply relative estimation techniques”, and (2) compare the effects of the four forms of human and robot facilitation on the perceptions of the facilitator’s competence (FC), learning experience (LX), and learning outcomes (LO), following the key research question “What are the differences in the perception of the facilitator’s competence, the learning experience, and learning outcomes between human online, human face-to-face, unsupervised and supervised robot facilitation?”

The four types of human and robot facilitation of the game of planning poker were reflected in our design of the four conditions (C1–C4). Student teams participated in two of the four conditions: teams 1–4 participated in C1 (human online) and C3 (unsupervised robot facilitation) in week 1, and teams 5–8 in C2 (human face-to-face) and C4 (supervised robot facilitation) in week 2. In C1 and C2, a human teacher facilitated the game of planning poker by providing information and guidance. In C3 and C4, the NAO robot facilitated the game by structuring the exercise, prompting, and keeping time. While in C3 the robot was the sole facilitator, in C4 a human teacher provided additional support on demand. The within- and between-subjects analysis compared C1–C4, exploring the effects on the perceived facilitator’s competence (FC), learning experience (LX), and learning outcomes (LO). The key findings of the study are organised into the following subsections according to five thematic categories: (1) the importance of a human teacher in facilitating collaborative learning, (2) the synergies between a human teacher and a robot facilitator, (3) the potential of robotic facilitators, (4) the role of the facilitator’s competence in learning experience and learning outcomes, and (5) conclusions and recommendations for future research.

5.1. The Importance of the Human Teacher

In general, our findings indicate that human facilitation may be most beneficial for the perception of the facilitator’s competence and for learning outcomes. In our study, human facilitation outperformed robot facilitation in all aspects of the facilitator’s competence, measured using the six-item FC scale created by [28]. The human facilitator was rated as significantly more competent, confident, capable, efficient, intelligent, and skilful than the robot facilitator in C3 and C4, with the highest mean values achieved in the human online condition (C1). The total FC score was highest in C1 (human online) and lowest in C3 (unsupervised robot). The comparison of both human conditions (C1 and C2) with both robot conditions (C3 and C4) confirmed these results, showing that the participants in both human conditions rated the competence of the human facilitator significantly higher than that of the robot facilitator (cf. Table 4).

Similarly, results related to learning outcomes (LO) revealed that human online facilitation in C1 was most effective in helping students understand planning poker: the LO item “The game helped me to understand planning poker” received the highest ratings in C1 compared to all other conditions (C2–C4). However, the ratings in C4 (supervised robot) exceeded those in C1 (human online) for the second learning outcome, “I feel confident in estimating”. This may indicate that the participants in the game facilitated by the supervised robot gained more confidence in relative estimation than those in all other conditions (C1–C3). Finally, the comparison between the human conditions (C1 and C2) and the robot conditions (C3 and C4) revealed a significant difference in participants’ self-assessed competence: participants in the human conditions reported feeling significantly more competent than those in the robot conditions. The analysis also showed that human in-person facilitation (C2) contributed to a deeper understanding of planning poker than the supervised robot condition (C4). These results underscore the importance of a human facilitator for the attainment of learning outcomes. At the same time, they may point to future improvements in the design of the game of planning poker with a robot; e.g., future iterations of the game design could integrate more advanced technological solutions that enable the robot to give on-demand hints and feedback during game-play.

However, when it came to the learning experience (LX), there were few significant differences between the human and robot conditions. The comparison of both human conditions (C1 and C2) with both robot conditions (C3 and C4) revealed only two significant differences: the ratings for the items “I forgot about time passing” and “I felt competent” were significantly higher in the human conditions (C1 and C2) than in the robot conditions (C3 and C4). A third significant difference, related to the item “The game was not relevant to me as I already knew most of it”, can be explained by the sequence of the conditions, as described above. It is also notable that there were no significant differences in any of the 20 LX items between C2 (human face-to-face) and C4 (supervised robot), indicating that human–robot co-facilitation achieved a similarly positive learning experience.

Finally, comparing the learning experience (LX) ratings of the two human conditions (C1 and C2) revealed only one significant difference, for the item “Planning poker makes learning more interesting”, which was rated significantly higher in the human online (C1) than in the human in-person (C2) condition. Comparing the mean values of the other items shows that higher scores were reached across a range of LX items in C1 (human online), while LX item ratings in C2 were consistently lower, with the exception of “I felt bored”, “I understood agile estimation”, “I felt in control”, and “The game motivated me to learn”. In total, the participants in C1 gave higher ratings for 7 out of 10 LX items, i.e., “I forgot about time passing”, “Planning poker was fun”, “I really enjoyed the activity”, “The game made learning more interesting”, “The game increased my knowledge”, “The game was easy to learn”, and “I felt competent”. These results suggest a preference for human online facilitation (C1) over human in-person facilitation (C2) in the game of planning poker, which is particularly evident in items related to engagement, enjoyment, and perceived competence. They point to potential benefits of online facilitation of the game of planning poker for fostering a more stimulating learning environment.

5.2. The Synergies of Human–Robot Co-Facilitation

The findings of our study provide insights into the potential of social robots as facilitators of collaborative learning, especially when these robots are supervised by human teachers, who can use the time and attention freed up by the robot to provide additional support to learners on demand.

First, the ratings of the facilitator’s perceived competence on the six items of the FC scale created by [28] were on average higher for the supervised robot in C4 than for the unsupervised robot in C3: the supervised robot was rated as more competent, confident, capable, efficient, intelligent, and skilful. For some items of the FC scale, the supervised robot in C4 was rated even higher than the human facilitator in the face-to-face condition (C2); specifically, it was perceived as more capable and more efficient. The overall FC score in C4 also exceeded those of both the unsupervised robot condition (C3) and the human face-to-face condition (C2).

Moreover, regarding the learning experience, the participants felt most deeply concentrated in the supervised robot condition C4 compared to all other conditions (C1–C3). Comparing the supervised (C4) and unsupervised (C3) robot conditions, the participants in C4 forgot about time passing more, enjoyed the activity more, found it more interesting, felt less frustrated, felt more competent, more successful, and more in control, and felt that the game increased their knowledge more, stimulated their curiosity more, was easier to learn, and was more relevant than in C3. The participants in C4 also reported a better understanding of agile estimation, were more motivated, and were more willing to apply what they learned than those in C3. Overall, C4 (supervised robot) scored more favourably than C3 (unsupervised robot) on most items of the LX scale, with a higher total LX score in C4. However, item LX3 “Playing planning poker was fun” was rated significantly higher in C3, while LX15 “I felt successful when playing planning poker” was rated significantly higher in C4. These differences indicate that different facilitation types may affect specific aspects of the learning experience differently: while a robot as the sole facilitator may be more entertaining, adding human co-facilitation may create an enhanced feeling of success, possibly due to more specific hints that can enhance learning outcomes.

Furthermore, 8 of the 20 LX items received the highest ratings in C4 compared to all other conditions (C1–C3), i.e., “I was deeply concentrated”, “I felt successful”, “I felt a sense of control”, “the game motivated me to learn”, “the game stimulated my curiosity”, “it was relevant to me”, “through the game I understood agile estimation”, and “I will apply what I learned”. In fact, the total LX score reached its highest value in C4, even compared to both human conditions (C1 and C2). Although this result was not statistically significant, it suggests the potential of human–robot co-facilitation, which combines the standardised procedure and consistency of robotic facilitation with the more specific, situational support of a human facilitator. Moreover, there were no significant differences in any of the 20 LX items between C2 (human in-person condition) and C4 (supervised robot condition). This result indicates that a robot supervised by a human teacher co-facilitating collaborative learning may be as beneficial for the learning experience as purely human facilitation.

Finally, regarding learning outcomes (LO), the scores in C4 (supervised robot) surpassed those in C3 (unsupervised robot). Participants in C4 reported that the game helped them understand planning poker better, and that they felt more confident in agile estimation, than participants in C3. These results again underscore the benefits of human–robot co-facilitation over unsupervised robot facilitation.

5.3. The Potential of Robotic Facilitation

The results of our study suggest that the type of human and robot facilitation may have a significant influence on the perceived facilitator’s competence (FC). However, the FC scores of the human facilitators in C1 and C2 did not differ significantly from those of the supervised robot facilitation in C4. This suggests that a robot under human supervision may be perceived as equally competent as a human facilitator.