Effects of Imagery as Visual Stimuli on the Physiological and Emotional Responses

Abstract

1. Introduction

2. Materials and Methods

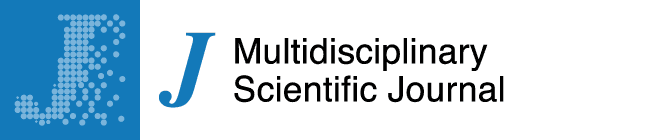

2.1. Participants and Stimuli Description

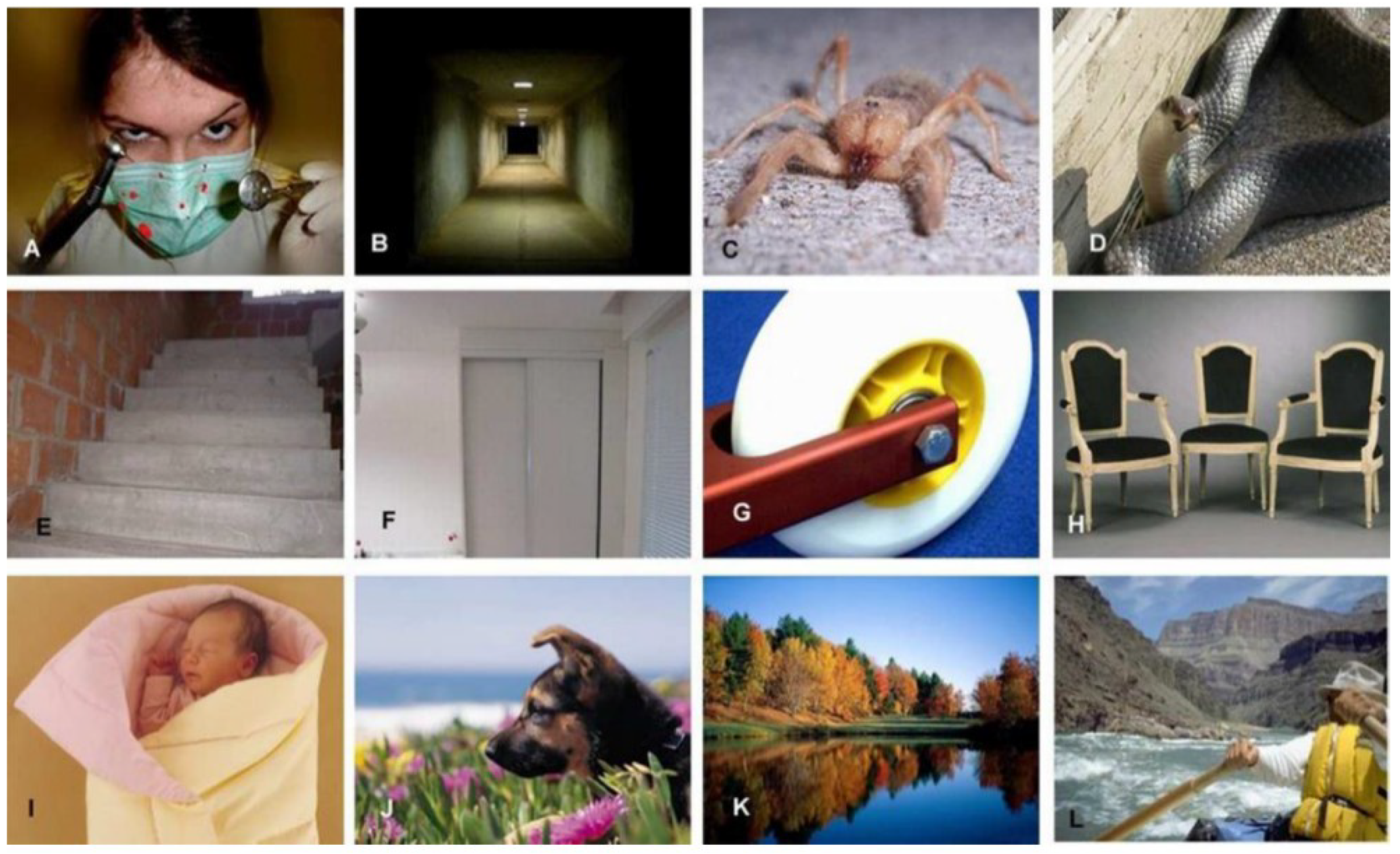

2.2. Sensory Session and Self-Reported Response Acquisition

2.3. Video Acquisition and Facial Expression Analysis

2.4. Statistical Analysis

3. Results

3.1. Self-Reported and Biometric Responses

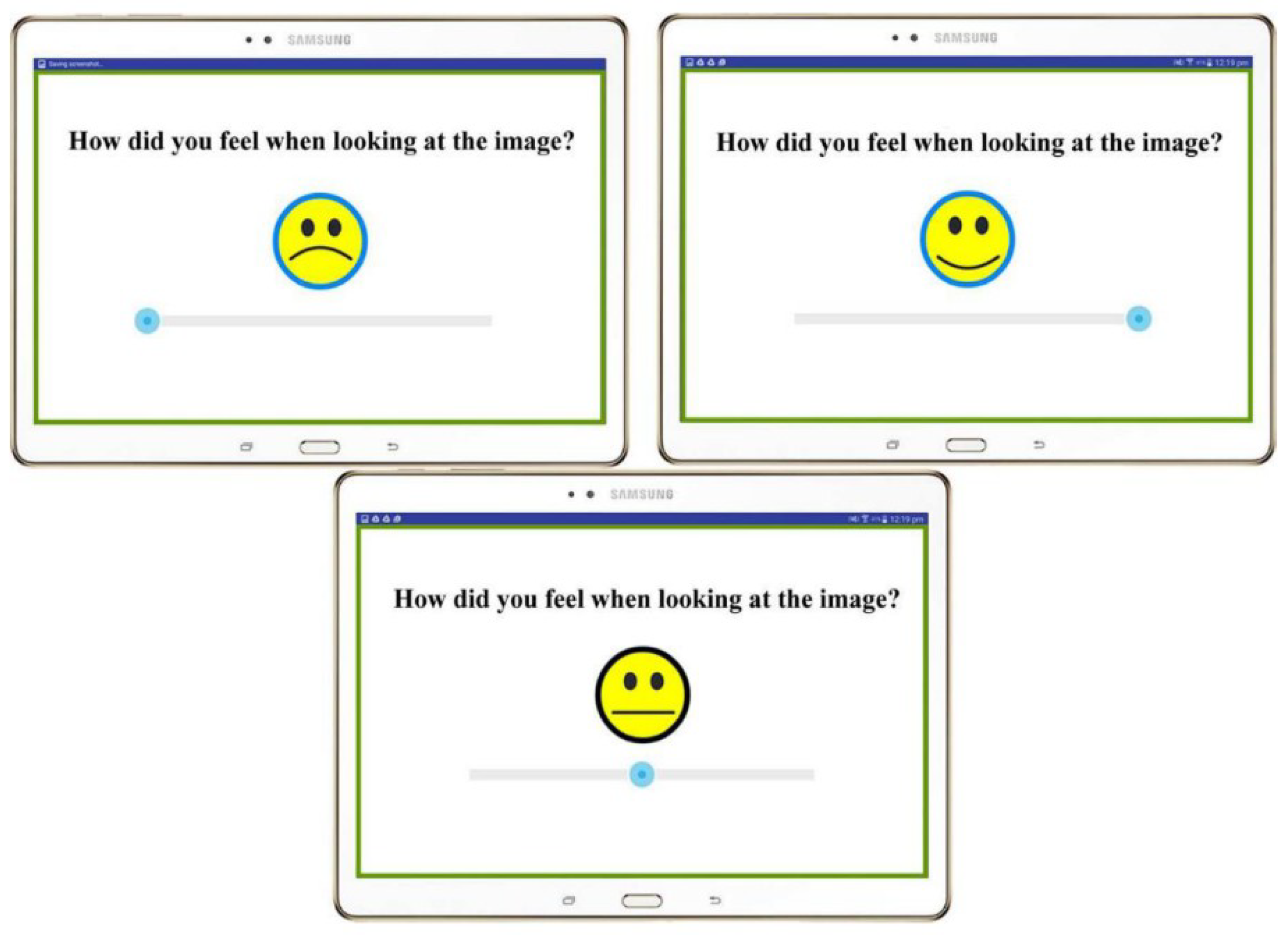

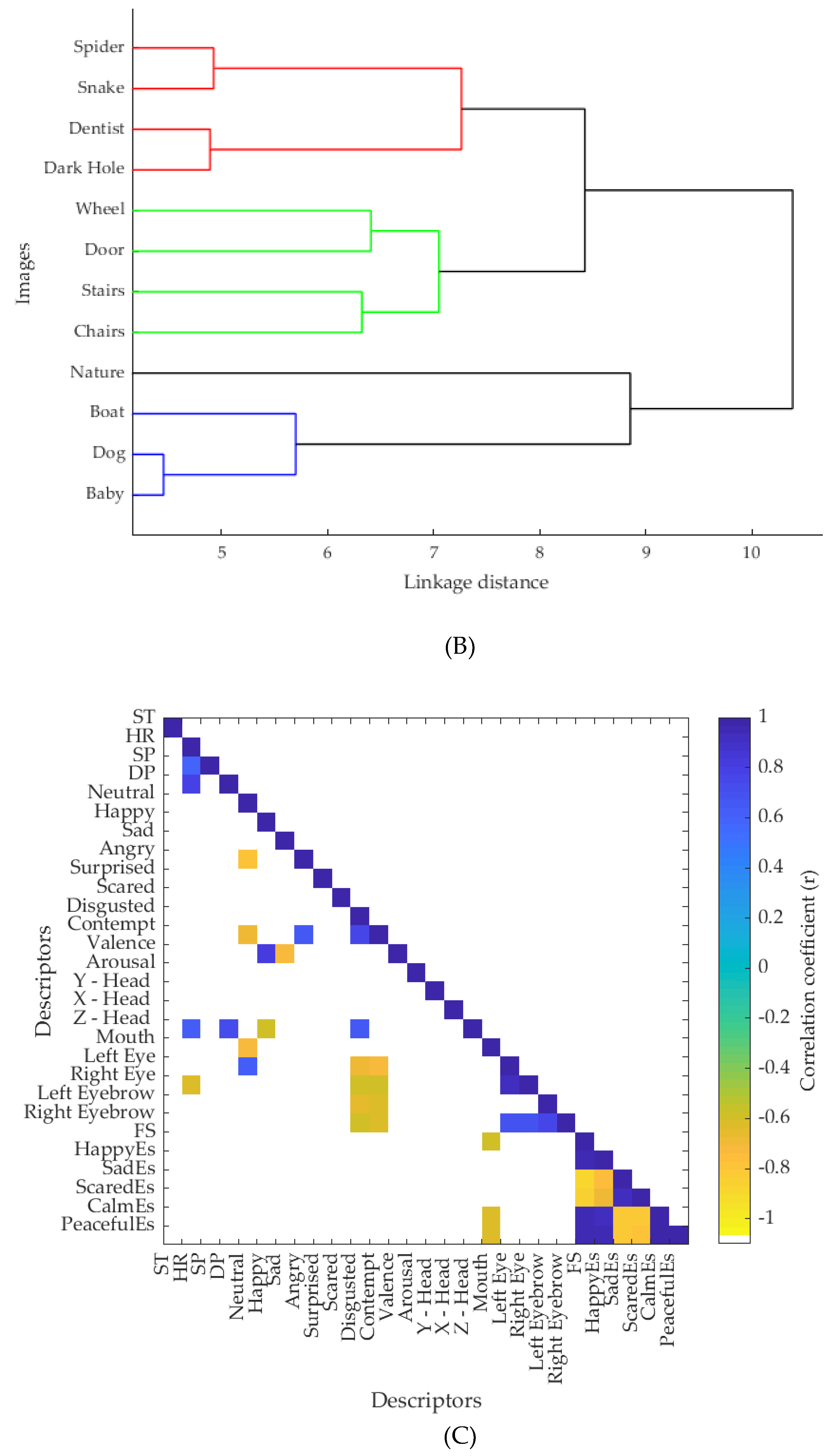

3.2. Multivariate Data Analysis and Correlations (Self-Reported and Biometric Responses)

3.3. Regression Analysis (General Linear Model) Predicting Self-Reported Responses Using Biometrics

4. Discussion

4.1. Self-Reported and Biometric Responses

4.2. Multivariate Data Analysis and Correlations (Self-Reported and Biometric Responses)

4.3. Regression Analysis (General Linear Model) Predicting Self-Reported Responses Using Biometrics

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Image | Group | Face Scale | HappyEs | SadEs | ScaredEs | CalmEs | PeacefulEs |
|---|---|---|---|---|---|---|---|
| Dark hole | Negative | 3.59f ±3.44 | 1.29gh ±0.80 | 1.90a ±1.13 | 2.92b ±1.11 | 1.67d ±0.91 | 1.44d ±0.87 |
| Dentist | Negative | 3.00f ±2.55 | 1.15h ±0.41 | 2.02a ±1.02 | 2.92b ±1.16 | 1.25e ±0.48 | 1.19d ±0.39 |
| Snake | Negative | 2.71f ±3.07 | 1.23gh ±0.66 | 1.88a ±1.28 | 3.54a ±1.29 | 1.38de ±0.67 | 1.23d ±0.55 |
| Spider | Negative | 3.67f ±3.29 | 1.31fgh ±0.66 | 1.92a ±1.25 | 3.25ab ±1.26 | 1.42de ±0.85 | 1.38d ±0.89 |
| Chairs | Neutral | 7.64d ±2.99 | 1.90d ±0.88 | 1.40a ±0.87 | 1.29d ±0.71 | 2.44c ±1.09 | 2.17c ±1.17 |
| Door | Neutral | 7.42de ±1.67 | 1.54efg ±0.74 | 1.23a ±0.62 | 1.31d ±0.55 | 2.27c ±1.14 | 2.17c ±1.07 |
| Stairs | Neutral | 6.54e ±2.54 | 1.63def ±0.82 | 1.63a ±0.79 | 1.67c ±0.78 | 2.20c ±1.13 | 2.04c ±1.01 |
| Wheel | Neutral | 7.78d ±1.89 | 1.83de ±0.93 | 1.13a ±0.39 | 1.10d ±0.37 | 2.17c ±1.02 | 2.10c ±1.02 |
| Baby | Positive | 12.20bc ±2.80 | 3.40bc ±0.89 | 1.10a ±0.47 | 1.04d ±0.20 | 3.56b ±0.92 | 3.69a ±1.03 |
| Boat | Positive | 11.11c ±3.21 | 3.21c ±0.87 | 1.23a ±0.72 | 1.77c ±0.88 | 2.44c ±0.99 | 2.40c ±1.12 |
| Dog | Positive | 12.39ab ±2.88 | 3.67ab ±0.83 | 1.27a ±0.61 | 1.19d ±0.49 | 3.23b ±0.95 | 3.29b ±0.92 |
| Nature | Positive | 13.32a ±1.92 | 3.98a ±0.91 | 1.15a ±0.41 | 1.04d ±0.29 | 4.00a ±0.88 | 4.02a ±0.79 |
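In the table above, each mean ± SD carries letters that are read under the usual compact-letter-display convention: within a column, means that share at least one letter are not significantly different, and means with no letter in common are. The post-hoc test and significance level are not stated in this excerpt, so the sketch below assumes only that convention. `parse_cell`, `differ` and `Cell` are hypothetical helper names; the cell values are copied from the Face Scale column.

```python
import re
from dataclasses import dataclass

# Matches cells such as "12.20bc ±2.80" or "0.15a,b ±0.27": a mean (ASCII "-" or
# Unicode minus allowed), optional letter annotation, then "±" and the SD.
CELL = re.compile(r"^\s*([-\u2212]?\d+(?:\.\d+)?)([a-z,]*)\s*±\s*(\d+(?:\.\d+)?)\s*$")


@dataclass(frozen=True)
class Cell:
    mean: float
    letters: frozenset
    sd: float


def parse_cell(text: str) -> Cell:
    """Split a 'mean+letters ±SD' table cell into its parts."""
    match = CELL.match(text)
    if match is None:
        raise ValueError(f"unrecognised cell: {text!r}")
    mean, letters, sd = match.groups()
    return Cell(
        mean=float(mean.replace("\u2212", "-")),
        letters=frozenset(letters.replace(",", "")),
        sd=float(sd),
    )


def differ(a: str, b: str) -> bool:
    """True if two lettered cells share no letter, i.e. (under the assumed
    compact-letter-display convention) their means differ significantly."""
    left, right = parse_cell(a), parse_cell(b)
    if not left.letters or not right.letters:
        raise ValueError("both cells need a letter annotation")
    return left.letters.isdisjoint(right.letters)


# Face Scale cells copied from the table above.
face_scale = {
    "Baby": "12.20bc ±2.80",
    "Dog": "12.39ab ±2.88",
    "Nature": "13.32a ±1.92",
}
print(differ(face_scale["Baby"], face_scale["Dog"]))     # False: both carry "b"
print(differ(face_scale["Nature"], face_scale["Baby"]))  # True: no shared letter
```

Comparing letter sets, rather than the numeric means, is deliberate: the letters already encode the outcome of the pairwise tests, whereas the means and SDs alone are not enough to recover them.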

| Image | Group | NeutralNS | HappyNS | SadNS | AngryNS | SurprisedNS | ScaredNS | DisgustedNS | ContemptNS | ValenceNS | ArousalNS | Y-HeadNS | X-HeadNS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Dark hole | Negative | 0.44 ±0.17 | 0.15 ±0.16 | 0.26 ±0.20 | 0.15 ±0.15 | 0.05 ±0.07 | 0.07 ±0.12 | 0.07 ±0.09 | 0.08 ±0.07 | −0.21 ±0.29 | 0.37 ±0.22 | 12.95 ±10.09 | −7.86 ±5.95 |
| Dentist | Negative | 0.45 ±0.16 | 0.16 ±0.18 | 0.23 ±0.20 | 0.15 ±0.13 | 0.05 ±0.05 | 0.07 ±0.10 | 0.07 ±0.10 | 0.09 ±0.08 | −0.18 ±0.30 | 0.38 ±0.22 | 13.08 ±10.97 | −6.62 ±5.76 |
| Snake | Negative | 0.42a ±0.18 | 0.15 ±0.15 | 0.26 ±0.22 | 0.19 ±0.16 | 0.05 ±0.06 | 0.56 ±0.08 | 0.07 ±0.10 | 0.10 ±0.12 | −0.20 ±0.31 | 0.41 ±0.25 | 12.25 ±10.60 | −7.31 ±7.81 |
| Spider | Negative | 0.43 ±0.18 | 0.13 ±0.14 | 0.27 ±0.23 | 0.17 ±0.15 | 0.07 ±0.13 | 0.07 ±0.12 | 0.07 ±0.11 | 0.10 ±0.08 | −0.25 ±0.29 | 0.41 ±0.23 | 12.06 ±10.92 | −7.20 ±5.61 |
| Chairs | Neutral | 0.42 ±0.19 | 0.12 ±0.15 | 0.26 ±0.22 | 0.17 ±0.15 | 0.06 ±0.07 | 0.07 ±0.12 | 0.07 ±0.09 | 0.09 ±0.08 | −0.24 ±0.29 | 0.41 ±0.23 | 12.65 ±10.88 | −7.26 ±6.47 |
| Door | Neutral | 0.44 ±0.19 | 0.11 ±0.12 | 0.28 ±0.23 | 0.17 ±0.14 | 0.04 ±0.03 | 0.07 ±0.09 | 0.08 ±0.09 | 0.09 ±0.09 | −0.26 ±0.29 | 0.36 ±0.23 | 12.34 ±9.32 | −6.36 ±6.42 |
| Stairs | Neutral | 0.47 ±0.19 | 0.12 ±0.13 | 0.27 ±0.22 | 0.15 ±0.17 | 0.05 ±0.06 | 0.06 ±0.11 | 0.06 ±0.09 | 0.09 ±0.09 | −0.25 ±0.29 | 0.39 ±0.22 | 12.59 ±9.59 | −6.30 ±5.93 |
| Wheel | Neutral | 0.43 ±0.19 | 0.13 ±0.14 | 0.24 ±0.21 | 0.17 ±0.15 | 0.05 ±0.07 | 0.08 ±0.14 | 0.09 ±0.15 | 0.12 ±0.11 | −0.25 ±0.29 | 0.40 ±0.21 | 11.52 ±12.39 | −6.28 ±6.45 |
| Baby | Positive | 0.46 ±0.18 | 0.16 ±0.18 | 0.25 ±0.18 | 0.16 ±0.16 | 0.05 ±0.08 | 0.07 ±0.10 | 0.05 ±0.08 | 0.07 ±0.06 | −0.19 ±0.29 | 0.42 ±0.22 | 12.78 ±9.50 | −7.32 ±6.30 |
| Boat | Positive | 0.44 ±0.19 | 0.14 ±0.13 | 0.25 ±0.21 | 0.17 ±0.17 | 0.05 ±0.08 | 0.07 ±0.12 | 0.07 ±0.10 | 0.11 ±0.12 | −0.22 ±0.29 | 0.43 ±0.24 | 13.48 ±10.11 | −6.17 ±5.97 |
| Dog | Positive | 0.44 ±0.18 | 0.17 ±0.18 | 0.24 ±0.20 | 0.15 ±0.15 | 0.04 ±0.05 | 0.07 ±0.12 | 0.06 ±0.08 | 0.08 ±0.07 | −0.18 ±0.31 | 0.39 ±0.23 | 14.10 ±9.58 | −7.29 ±6.02 |
| Nature | Positive | 0.45 ±0.17 | 0.14 ±0.15 | 0.27 ±0.22 | 0.15 ±0.14 | 0.05 ±0.07 | 0.06 ±0.09 | 0.06 ±0.08 | 0.09 ±0.09 | −0.21 ±0.31 | 0.39 ±0.24 | 12.17 ±9.54 | −7.10 ±5.87 |

| Image | Group | Z-HeadNS | MouthNS | LE | RE | LEBNS | REBNS | GDNS | HRNS | DPNS | SPNS | STNS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Dark hole | Negative | −4.28 ±4.18 | 0.21 ±0.34 | 0.15a,b ±0.27 | 0.17a,b ±0.29 | 0.01 ±0.53 | −0.25 ±0.33 | 0.38 ±0.38 | 87.90 ±8.91 | 76.21 ±6.99 | 118.67 ±30.30 | 31.79 ±4.78 |
| Dentist | Negative | −3.24 ±3.96 | 0.25 ±0.35 | 0.22a,b ±0.33 | 0.19a,b ±0.30 | 0.04 ±0.58 | −0.26 ±0.35 | 0.39 ±0.36 | 87.71 ±7.71 | 75.09 ±4.93 | 117.81 ±26.15 | 31.75 ±4.74 |
| Snake | Negative | −4.47 ±4.81 | 0.25 ±0.35 | 0.16a,b ±0.31 | 0.23a,b ±0.31 | 0.02 ±0.53 | −0.29 ±0.37 | 0.43 ±0.44 | 87.60 ±8.88 | 76.43 ±5.87 | 119.21 ±27.45 | 32.49 ±4.79 |
| Spider | Negative | −4.18 ±4.23 | 0.21 ±0.32 | 0.13b ±0.28 | 0.13b ±0.23 | 0.01 ±0.51 | −0.26 ±0.37 | 0.41 ±0.39 | 89.00 ±8.34 | 76.20 ±5.59 | 124.34 ±24.03 | 31.98 ±4.78 |
| Chairs | Neutral | −3.97 ±4.96 | 0.26 ±0.39 | 0.13b ±0.27 | 0.14b ±0.27 | −0.04 ±0.53 | −0.35 ±0.41 | 0.39 ±0.39 | 88.56 ±11.02 | 76.11 ±6.14 | 120.13 ±24.42 | 32.85 ±4.73 |
| Door | Neutral | −4.19 ±4.32 | 0.21 ±0.32 | 0.18a,b ±0.31 | 0.20a,b ±0.31 | −0.04 ±0.52 | −0.31 ±0.35 | 0.36 ±0.37 | 87.85 ±7.65 | 73.94 ±6.86 | 119.72 ±25.17 | 32.04 ±4.76 |
| Stairs | Neutral | −4.52 ±5.04 | 0.18 ±0.32 | 0.22a,b ±0.34 | 0.22a,b ±0.32 | 0.01 ±0.48 | −0.23 ±0.39 | 0.31 ±0.37 | 86.78 ±9.11 | 74.58 ±6.96 | 121.76 ±26.89 | 31.90 ±4.78 |
| Wheel | Neutral | −4.46 ±6.49 | 0.21 ±0.33 | 0.11b ±0.24 | 0.12b ±0.21 | 0.09 ±0.49 | −0.32 ±0.38 | 0.37 ±0.40 | 87.16 ±8.04 | 74.51 ±6.53 | 114.75 ±24.74 | 32.29 ±4.70 |
| Baby | Positive | −3.48 ±3.11 | 0.19 ±0.34 | 0.34a ±0.36 | 0.33a ±0.36 | 0.05 ±0.52 | −0.21 ±0.37 | 0.41 ±0.39 | 86.27 ±7.83 | 73.43 ±6.55 | 117.03 ±24.60 | 31.73 ±4.70 |
| Boat | Positive | −4.28 ±4.88 | 0.22 ±0.33 | 0.19a,b ±0.34 | 0.17a,b ±0.30 | −0.08 ±0.51 | −0.34 ±0.41 | 0.37 ±0.39 | 87.92 ±10.05 | 75.26 ±8.17 | 115.26 ±30.83 | 32.65 ±4.56 |
| Dog | Positive | −4.22 ±4.03 | 0.19 ±0.31 | 0.18a,b ±0.29 | 0.15a,b ±0.27 | −0.05 ±0.44 | −0.31 ±0.37 | 0.38 ±0.36 | 88.71 ±8.61 | 75.84 ±6.88 | 122.71 ±27.97 | 31.81 ±4.81 |
| Nature | Positive | 4.17 ±4.03 | 0.19 ±0.32 | 0.13b ±0.29 | 0.11b ±0.23 | 0.03 ±0.47 | −0.32 ±0.36 | 0.39 ±0.35 | 87.49 ±8.75 | 75.42 ±6.79 | 117.99 ±28.95 | 31.90 ±5.03 |

| Category | Self-Reported Response | Int. | HR | ST | Sur | Dis | Val | Neu | Sca | GD | LE | LEB | X-Head | Y-Head | Z-Head | Mou | REB |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| General | FS | 7.85 | NS | NS | −0.11 p = 0.01 | NS | NS | NS | NS | NS | NS | NS | NS | NS | NS | NS | NS |
| | HappyEs | 1.86 | NS | NS | NS | NS | −0.42 p = 0.03 | NS | NS | NS | NS | NS | NS | +0.01 p = 0.01 | NS | NS | NS |
| | SadEs | 1.72 | NS | NS | NS | NS | NS | NS | NS | −0.40 p = 0.01 | NS | NS | NS | NS | NS | NS | NS |
| | ScaredEs | 2.56 | NS | NS | NS | NS | NS | −0.02 p < 0.01 | NS | NS | NS | NS | NS | NS | NS | NS | NS |
| | PeacefulEs | 2.32 | NS | NS | −0.03 p = 0.01 | NS | NS | NS | NS | −0.59 p = 0.01 | NS | NS | NS | +0.01 p = 0.01 | NS | NS | NS |
| Positive | FS | 11.12 | NS | NS | NS | +0.09 p = 0.01 | NS | NS | NS | NS | NS | +2.30 p = 0.01 | NS | NS | NS | NS | NS |
| | HappyEs | 3.25 | NS | NS | NS | NS | −0.72 p = 0.01 | NS | NS | NS | +0.74 p = 0.03 | +0.48 p = 0.02 | NS | NS | NS | NS | NS |
| | SadEs | 0.27 | NS | +0.03 p = 0.01 | NS | NS | NS | NS | NS | NS | NS | NS | NS | NS | +0.04 p = 0.01 | NS | NS |
| | ScaredEs | 3.45 | −0.02 p = 0.01 | NS | NS | NS | NS | NS | NS | NS | NS | NS | NS | NS | NS | −0.72 p = 0.01 | −0.51 p = 0.01 |
| | PeacefulEs | 2.74 | NS | NS | NS | NS | NS | NS | NS | NS | NS | NS | NS | +0.02 p = 0.01 | NS | NS | NS |
| | CalmEs | 5.09 | NS | −0.05 p = 0.01 | NS | NS | −0.74 p = 0.01 | NS | NS | NS | NS | NS | NS | NS | −0.07 p = 0.01 | NS | NS |
| Neutral | FS | 7.36 | NS | NS | NS | NS | NS | NS | NS | NS | −1.79 p = 0.04 | NS | +0.11 p = 0.01 | NS | NS | NS | NS |
| | HappyEs | 1.74 | NS | NS | NS | NS | NS | NS | NS | NS | NS | NS | +0.03 p = 0.01 | NS | NS | NS | NS |
| | SadEs | 3.35 | −0.02 p = 0.04 | NS | NS | NS | NS | NS | NS | NS | NS | NS | NS | NS | NS | NS | NS |
| | ScaredEs | 1.34 | NS | NS | NS | NS | NS | NS | +0.02 p = 0.01 | NS | NS | NS | NS | NS | NS | NS | NS |
| | PeacefulEs | 1.98 | NS | NS | NS | NS | NS | NS | NS | NS | NS | NS | +0.04 p = 0.01 | NS | NS | NS | −0.65 p = 0.04 |
| | CalmEs | 3.08 | NS | NS | NS | NS | NS | −0.02 p = 0.04 | NS | NS | NS | NS | NS | NS | NS | NS | NS |
| Negative | HappyEs | 0.86 | NS | NS | NS | NS | NS | +0.01 p = 0.02 | NS | NS | NS | NS | NS | NS | NS | NS | NS |
| | SadEs | 2.91 | NS | NS | NS | NS | NS | −0.03 p = 0.01 | +0.04 p = 0.03 | NS | NS | NS | NS | NS | NS | NS | NS |
| | ScaredEs | 4.23 | NS | NS | NS | NS | NS | −0.04 p = 0.01 | NS | NS | +1.63 p = 0.04 | NS | NS | NS | NS | NS | NS |
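Each row of the regression table can be read as a linear predictor: the intercept (Int.) plus each significant coefficient multiplied by the corresponding biometric value. The sketch below evaluates three rows that way. It assumes that terms marked NS are absent from the fitted model (treated as zero) and that inputs are on the same scale used when the models were fitted, neither of which this excerpt states. `MODELS` and `predict` are hypothetical names, and the input readings are invented for illustration rather than taken from the study.

```python
from typing import Mapping

# Hypothetical transcription of three rows of the table above:
# (category, response) -> (intercept, {biometric column: coefficient}).
# Terms marked NS are omitted, i.e. treated as zero (an assumption).
MODELS = {
    ("General", "FS"): (7.85, {"Sur": -0.11}),
    ("Positive", "HappyEs"): (3.25, {"Val": -0.72, "LE": 0.74, "LEB": 0.48}),
    ("Negative", "ScaredEs"): (4.23, {"Neu": -0.04, "LE": 1.63}),
}


def predict(category: str, response: str, biometrics: Mapping[str, float]) -> float:
    """Evaluate intercept + sum(coefficient * value) for one table row."""
    intercept, coefficients = MODELS[(category, response)]
    missing = sorted(set(coefficients) - set(biometrics))
    if missing:
        raise KeyError(f"missing biometric inputs: {missing}")
    # Extra keys in `biometrics` are ignored; only significant terms contribute.
    return intercept + sum(b * biometrics[name] for name, b in coefficients.items())


# Hypothetical biometric readings, not taken from the study.
reading = {"Val": -0.2, "LE": 0.3, "LEB": 0.0}
print(round(predict("Positive", "HappyEs", reading), 3))  # 3.616
```

Keeping each row as an (intercept, coefficients) pair mirrors the table layout directly, so adding another row is a one-line change; the p-values are not needed for prediction and are left out.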

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Gunaratne, N.M.; Viejo, C.G.; Gunaratne, T.M.; Torrico, D.D.; Ashman, H.; Dunshea, F.R.; Fuentes, S. Effects of Imagery as Visual Stimuli on the Physiological and Emotional Responses. J 2019, 2, 206-225. https://doi.org/10.3390/j2020015