Abstract

The volume or mass throughput of a mechanical treatment plant for commercial waste is a key performance parameter. However, this parameter is often unavailable, because the required sensor technology is expensive or does not provide accurate data. The first process stage is usually a shredder, which converts the waste into a transportable and separable particle size. This study pursues a methodical approach that enables an indirect estimation of the volume throughput capacity based on other machine parameters, such as the drum speed and the drum torque. Based on 32 test data sets, two models were developed to approximate the volume throughput rate: the regression model and the displacement model. Two reference models were also defined to evaluate the accuracy of these approaches: the so-called mean value model and the ANOVA model. The results are compared using the 80th percentile of the sign-adjusted relative deviation. On this measure, the regression model (±40%), followed by the displacement model (±42%), enables significantly more accurate estimates of the volumetric throughput than the two reference models (±63% and ±71%, respectively).

1. Introduction

Legal frameworks, such as the European Union’s Circular Economy Package, contain clear targets for waste recycling rates. Accordingly, 55% (by weight) of municipal waste must be recycled in every member state of the European Union by 2025 [1,2]. The EU-27 countries reported an average recycling rate of 38% (by weight) in 2019. Given this rate, the quantities of material sent for treatment will have to increase significantly in the coming years [3]. Higher recycling targets also restrict the option of sending untreated waste directly to incineration, energy recovery, or landfill, which further increases the quantities that must be treated [4]. There is also a focus on increasing the effectiveness of treatment plants in order to exploit unused recycling potential, particularly for commercial waste [5,6]. This can be achieved through more precise mechanical treatment and more extensive use of sensor-based sorting machines [7,8,9]. The effective use of this technology, in turn, places clear requirements on the particle size and volume consistency of the waste stream [8,10,11,12].

This research focuses on the shredding step for mixed commercial waste (MCW) in a mechanical treatment/processing line. MCW is a highly inhomogeneous waste stream [13] consisting mainly of plastics, wood, paper, metals, and inert materials [5]. The heterogeneity is primarily attributable to the variable composition of these materials. Plants for the mechanical treatment of MCW are referred to as splitting plants or as plants producing refuse-derived fuels (RDFs). Shredding is usually the first step in these treatment plants. It is generally unavoidable, because it converts the waste into a transportable and separable particle size. It also directly influences the material quality in terms of particle size, throughput and, in particular, its consistency [8,12,14]. Furthermore, the shredding step accounts for a significant proportion of the energy consumption of the entire treatment plant. The throughput capacity of the shredder is of particular importance, because it ultimately determines the treatment capacity of the entire mechanical treatment plant; it is therefore an important performance parameter. Currently, mechanical treatment units and plants usually provide little machine, process, and material flow data, and this includes throughput capacity [12]. There are three main reasons. The necessary sensor technology is costly, the selection of suitable systems and solutions is limited, and retrofitting them is often difficult [11]. For this reason, information such as throughput performance cannot currently be used on a large scale for the online/on-time optimization of individual units or of the overall process [15]. Increased digitalization would contribute significantly to a better circular economy [16].

In principle, systems for measuring throughput are already available on the market, such as optical measuring systems (volume flow measurement) or belt scales integrated into the conveyor system (mass flow measurement). In addition to their high costs, the difficult operating conditions must be considered. For example, dust emissions cause problems during operation, especially for optical measuring systems [17]. The accuracy of belt scales depends on a variety of factors and is often significantly lower in practice than in theory [18].

This problem motivates research into alternative, more cost-effective systems that suit the ambient conditions (e.g., dust, low material density) and can be retrofitted if necessary. Cost-effective retrofitting is needed to ensure scalability, so research must consider the use of conventional machine controls. High-performance industrial computers would, for example, facilitate the implementation and use of AI models. However, they are not state of the art in (mobile) machine technology for the treatment of MCW. Consequently, the emphasis should be on mathematically simple models that can be implemented locally. The specific question addressed in this work is whether the following information enables an indirect measurement or estimation of the volume throughput rate when treating solid mixed waste: time series data of machine parameters, such as the drum speed or the drum power, together with the geometry of the cutting tools and the machine settings (cutting-gap, etc.).

In this work, a new methodological approach for measuring the volumetric throughput was applied as part of the ReWaste F research project [19]. Models were developed that allow the indirect determination of the volumetric throughput rate from existing machine parameters. The volumetric throughput capacity is considered more relevant than the mass throughput capacity, because the performance of downstream units (e.g., drum screens) is more strongly influenced by the volumetric flow rate [20,21]. Depending on the modelling approach, different existing machine parameters served as input data. Large-scale shredding tests of mixed commercial waste were carried out in the predecessor project (ReWaste4.0). These tests served as the database for modelling and subsequent verification [22]. A single-shaft shredder (Terminator 5000 SD; Komptech GmbH, Frohnleiten, Austria) was used as the example machine. Three different cutting tool geometries and different setting parameters were analyzed, and the volume and mass throughput were measured [23,24]. Two modelling approaches, the so-called regression model and the displacement model, were developed from these measurement data.

The indirect estimation of the volumetric throughput capacity of the shredder represents a completely new approach in this field of application. As a result, alternatives to conventional, cost-intensive systems for measuring the volumetric throughput capacity can be made available in the future. This makes a further significant contribution to the digitalized mechanical treatment of solid waste.

2. Material and Methods

This section first describes the data collection. It then describes the newly developed modelling approaches and the two reference models (Methodology and Model Development), followed by the statistical methods used.

2.1. Data Collection

This section describes in detail the tests carried out, the test machines analyzed, the measurement setup, the measurement data obtained, and the data recording and preparation.

Pilot lines and experimental research based on large-scale tests are the primary research methods of the projects ReWaste4.0 and ReWaste F. Both projects focus on the development of a Smart Waste Factory and, consequently, intelligent waste treatment. The data required to answer the research question were collected during large-scale tests (pilot line 4.0) as part of the ReWaste4.0 project in October 2019 [22]. Khodier et al. [23] describe the experimental setup and procedure in full detail, covering the material, process control, geometry of the cutting tools, and the entire test sequence. The focus was on the shredding of mixed commercial waste. In brief, the experimental setup was as follows. The waste was fed directly into the shredder using a wheel loader, and the same basic type of shredder was used in all cases. From the outfeed conveyor of the shredder, the waste was passed to a digital material flow monitoring system (DMFMS), which measures the current volume and mass throughput. The waste leaving the machine was collected in a product pile. The pre-shredding step using a single-shaft shredder was analyzed with different equipment variants of the cutting geometry. In addition, the influence of the machine parameters drum speed and cutting-gap setting was analyzed.

A Terminator 5000 SD from Komptech served as the test machine. This is a diesel engine-driven single-shaft shredder with a hydraulic drive system and drum drive on both sides. The drum drive enables load-dependent speed control including a reversing function. Overload protection is also integrated.

2.1.1. Measurement Setup for Data Acquisition

The model development described below is based on the machine data relevant to the research object. Not all of the required machine data were recorded as standard or provided by the machine. For this reason, an additional measurement setup was required to record the drum torque. Feyerer et al. [25] describe this measurement application, which was deliberately kept simple and cost-effective to enable later scalability. The test machines have a diesel-engine-powered hydraulic drive system. It therefore appears reasonable to determine the mechanical output torque of the hydraulic motor using the torque formula for hydraulic motors from Ref. [26], according to Formula (1). The torque is calculated from the hydraulic differential pressure of the hydraulic pump, the displacement volume, and the mechanical–hydraulic efficiency.

To determine the pressure difference, the inlet and outlet pressures at the axial piston variable displacement pump were measured. Two pressure sensors of type SCP01-600-44-07 from Parker (Parker Hannifin GmbH, Vienna, Austria) [27] were used, with a suitable measuring range of 0–600 bar. Because this is a variable displacement pump, the displacement volume is not constant either. It is defined by the swivel angle, which is adjusted electrically: changing the control current changes the swivel angle of the pump and hence the displacement volume. The mechanical–hydraulic efficiency was assumed to be 90% [26]. All three tested machines were equipped with a double-sided drum drive. The two drives are mechanically coupled via the shredding drum and therefore synchronized, so the drum torque was measured on one side only.
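Formula (1) is referenced but not reproduced in this text. The following minimal sketch assumes the standard hydraulic-motor relation M = Δp·V·η_mh/(2π) in SI units, with η_mh = 0.9 as stated above. The function name, the input units, and the example operating point are hypothetical. The displacement volume changes with the swivel angle, so in practice it would be supplied for each time step rather than as a constant.

```python
import math

def hydraulic_motor_torque(p_in_bar: float, p_out_bar: float,
                           displacement_cm3: float, eta_mh: float = 0.9) -> float:
    """Output torque in N·m, assuming M = dp * V * eta_mh / (2*pi).

    dp: pressure difference between inlet and outlet (bar -> Pa)
    V:  displacement volume per revolution (cm^3 -> m^3), varies with swivel angle
    """
    dp_pa = (p_in_bar - p_out_bar) * 1e5
    v_m3 = displacement_cm3 * 1e-6
    return dp_pa * v_m3 * eta_mh / (2.0 * math.pi)

# Hypothetical operating point: 320 bar inlet, 20 bar outlet, 500 cm^3 per revolution.
torque = hydraulic_motor_torque(320.0, 20.0, 500.0)
print(f"{torque:.1f} N·m")
```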

The volume throughput rate was recorded using a material flow measuring system specially developed by Komptech (Komptech GmbH, Frohnleiten, Austria), the so-called digital material flow monitoring system (DMFMS). During the tests, this mobile hook-lift device is positioned downstream of the shredder under investigation. It essentially consists of a horizontal conveyor belt, 6.2 m long and 1.4 m wide, with an integrated belt scale for measuring the mass throughput rate. The belt speed is 0.44 m/s. The belt scale has an accuracy of ±2% in the range of 25–100% of the absolute measured throughput, within a valid throughput range of 5–100 t/h. It provides a measured value every 3 s, corresponding to the arithmetic mean over this period. The volume throughput rate is measured by laser triangulation using special measuring beams above the conveyor belt. Specifically, the system measures the height profile of the material flow at a frequency of 200 Hz. Taking the belt speed into account, the specific volume throughput is determined and updated every 2 s as the average over this period.
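The text does not describe the vendor's actual signal processing. As a rough illustration only, the sketch below rests on several assumptions. Each laser profile is taken to deliver equally spaced material heights across the full 1.4 m belt width. The heights are integrated into a cross-sectional area, which is multiplied by the belt speed of 0.44 m/s. The 200 Hz profiles are then averaged over 2 s windows. All function names are hypothetical.

```python
from statistics import fmean

BELT_SPEED = 0.44    # m/s (from the text)
BELT_WIDTH = 1.4     # m; assumption: heights span the full belt width
PROFILE_RATE = 200   # height profiles per second (from the text)
WINDOW_S = 2         # output update interval in seconds (from the text)

def cross_section(heights_m):
    """Material cross-section (m^2) from equally spaced height readings."""
    dw = BELT_WIDTH / len(heights_m)
    return sum(h * dw for h in heights_m)

def volume_flow_m3_per_h(profiles):
    """Mean volume flow for each complete 2 s window of height profiles."""
    per_window = PROFILE_RATE * WINDOW_S
    flows = []
    for start in range(0, len(profiles) - per_window + 1, per_window):
        window = profiles[start:start + per_window]
        mean_area = fmean(cross_section(p) for p in window)
        flows.append(mean_area * BELT_SPEED * 3600.0)  # m^3/s -> m^3/h
    return flows

# Hypothetical 4 s of data: a flat 0.1 m layer across the belt (14 readings per profile).
profiles = [[0.1] * 14 for _ in range(PROFILE_RATE * 4)]
flows = volume_flow_m3_per_h(profiles)
print(flows)
```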

2.1.2. Data Recording and Preparation

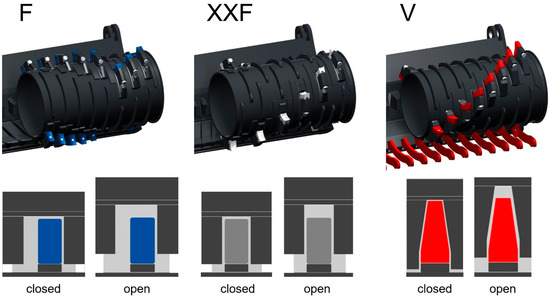

A Dewesoft Sirius R2DB DAQ data acquisition system (Trbovlje, Slovenia) was used for data recording. This ensured time-synchronized recording of all the measurement data described above. Further data analysis was carried out using the Dewesoft X3 and MATLAB R2022a software systems. All of the measurement data required for this research work were collected as part of the pilot line 4.0 [22]. The authors of [23] investigated the influences of the parameters cutting tool geometry, cutting-gap, and drum speed on the volume and mass throughput rate, as well as the energy consumption, in a total of 32 test series, according to Table 1. The different cutting tool geometries and cutting-gap settings are illustrated in Figure 1.

Table 1.

Series of tests carried out including setting parameters as part of pilot line 4.0.

Figure 1.

Analyzed cutting tool geometries and cutting-gap settings.

For the present research question, the relevant quantities are the machine data drum speed and drum torque and the process data specific volume throughput, each related to one hour. The subsequent model evaluation needs a larger number of comparable machine settings. To achieve this, the cutting-gap setting variants had to be reduced, and only two settings were distinguished: open (cutting-gap ≥ 50%) and closed (cutting-gap < 50%).

No grouping is required for the drum speed setting parameter. This parameter is taken into account in the modelling as a dynamic variable in the form of time series information (continuous measurement parameter). Based on these definitions, and taking into account the cutting tool geometries and the cutting-gap setting, the 32 individual test series were allocated to six groups according to Table 2. Irregularities were found in the time series data of test series 3, 4, 24, and 30. In these series, the measured volume throughput rate increases over an extended period without apparent cause, despite a constant drum speed. An error in the recording of one of the two measurement signals is suspected. In addition, the time series data of test series 7 show a clear decrease in the volume throughput rate at constant drum speed. This can be explained by insufficient feeding of the machine during this period. These test series were therefore excluded from all further analyses, leaving a total of 27 test series for modelling and evaluation.
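The grouping and exclusion steps described above can be sketched as follows. Table 1, which assigns individual test series to geometries and cutting-gap settings, is not reproduced in this text. The sketch therefore shows only the classification rule, the exclusion list, and the resulting count. The function names are hypothetical.

```python
TOTAL_SERIES = 32
EXCLUDED = {3, 4, 7, 24, 30}  # implausible recordings or insufficient feeding (see text)

def gap_class(cutting_gap_percent: float) -> str:
    """Reduce the cutting-gap setting to the two classes used for grouping."""
    return "open" if cutting_gap_percent >= 50.0 else "closed"

def group_key(geometry: str, cutting_gap_percent: float) -> tuple:
    """Group = cutting tool geometry (F, XXF or V) x gap class, i.e. 3 x 2 = 6 groups."""
    return (geometry, gap_class(cutting_gap_percent))

usable = [s for s in range(1, TOTAL_SERIES + 1) if s not in EXCLUDED]
print(len(usable))                                  # number of series kept
print(group_key("XXF", 75.0), group_key("V", 25.0))
```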

Table 2.

Grouping according to cutting unit configuration and cutting-gap setting.

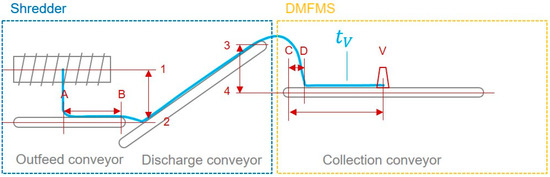

Because of the physical distance between the test machine (Terminator 5000 SD) and the DMFMS, the measured specific volume throughput had to be shifted by a time offset. This offset was calculated according to Formula (2) from the geometric distances shown in Figure 2 and the conveyor belt speeds. The calculation assumes free fall of the material at two points: between the shredding drum and the outfeed conveyor, and between the discharge conveyor and the collecting conveyor of the DMFMS. The quantities entering the calculation are:
- the vertical distance between the shredding drum and the outfeed conveyor;
- the center path on the outfeed conveyor;
- the total length of the discharge conveyor;
- the vertical distance between the discharge conveyor (of the shredder) and the collecting conveyor of the DMFMS;
- the distance from the start of the collecting conveyor to the measuring point of the volume throughput rate;
- the distance from the start of the collecting conveyor to the impact point of the material;
- the acceleration due to gravity;
- the belt speeds of the outfeed, discharge, and collecting conveyors.
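Formula (2) is not reproduced in this text. One plausible form assumes free-fall times of sqrt(2h/g) for the two drops and constant-speed transport on the belts:

Δt = sqrt(2·h1/g) + l1/v1 + l2/v2 + sqrt(2·h2/g) + (l3 − l4)/v3

The symbols h1, l1, l2, h2, l3, l4, v1, v2, and v3 are introduced here for illustration only. They follow the order of the quantities listed above. The sketch computes this delay and then drops the corresponding leading samples of the volume series, so that each remaining value lines up with the machine data recorded at the time of shredding.

```python
import math

def transport_delay(h1, l1, l2, h2, l3, l4, v1, v2, v3, g=9.81):
    """Assumed form of the delay (s) between shredding and volume measurement."""
    fall_drum = math.sqrt(2.0 * h1 / g)        # free fall: drum -> outfeed conveyor
    fall_transfer = math.sqrt(2.0 * h2 / g)    # free fall: discharge -> collecting conveyor
    return (fall_drum + l1 / v1 + l2 / v2
            + fall_transfer + (l3 - l4) / v3)  # last term: impact point -> measuring point

def align_volume_series(volume, delay_s, sample_period_s):
    """Drop the first samples of the (later) volume signal so that volume[i]
    corresponds to machine sample i; the delay is rounded to whole samples."""
    n_shift = round(delay_s / sample_period_s)
    return volume[n_shift:]

# Hypothetical geometry (not the pilot line values); heights set to zero for clarity.
delay = transport_delay(h1=0.0, l1=2.0, l2=4.0, h2=0.0, l3=3.0, l4=1.0,
                        v1=1.0, v2=2.0, v3=1.0)
print(delay)
print(align_volume_series(list(range(10)), delay, sample_period_s=2.0))
```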

Figure 2.

Geometric relationships of the pilot line 4.0 (Shredder: Terminator 5000 SD, DMFMS).

The measurement data were initially stored as time series with a uniform sampling rate of 1000 Hz, although the individual signals are updated at different rates depending on the sensor type. For example, the drum speed is updated at 10 Hz, the drum torque at 100 Hz, and the volume flow rate at 0.5 Hz. Because the parameters are not updated at a common rate, the resolution had to be reduced for further modelling; it was initially set to 0.1 Hz. This data reduction was carried out by calculating the arithmetic mean of the respective measurement points. Furthermore, each data set was limited to a test time of one hour, i.e., 360 data points per parameter at 0.1 Hz, to ensure identical observation periods. Initial analyses showed that the resolution of 0.1 Hz was too high to reveal a correlation. A longer averaging interval, i.e., a lower data resolution, appeared more suitable. Averaging reduces or smooths out disturbances and yields average load and speed levels for each period as a basis for comparison. For this reason, the data resolution was ultimately set to 0.016 Hz (1/60 Hz). This corresponds to one value per minute, or 60 data points per hour and test series.
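The two-stage reduction by arithmetic averaging can be sketched as non-overlapping block means. The function name and the test signal are hypothetical. The blocks nest exactly: 10,000 raw samples make up one 0.1 Hz value, and 6 such values make up one minute. Averaging in two stages therefore gives the same result (up to floating-point rounding) as averaging the raw 1000 Hz data over one-minute blocks directly.

```python
from statistics import fmean

def block_mean(samples, factor):
    """Average non-overlapping blocks of `factor` samples; a trailing partial block is dropped."""
    n_blocks = len(samples) // factor
    return [fmean(samples[i * factor:(i + 1) * factor]) for i in range(n_blocks)]

# Stage 1 (1000 Hz -> 0.1 Hz) would call block_mean(raw, 10_000) on the raw data.
# Stage 2 (0.1 Hz -> 1/60 Hz) averages 6 consecutive 0.1 Hz values per minute.
stage1 = [float(i) for i in range(360)]   # hypothetical one-hour signal at 0.1 Hz
per_minute = block_mean(stage1, 6)
print(len(per_minute), per_minute[:2])
```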

2.2. Methodology and Model Development

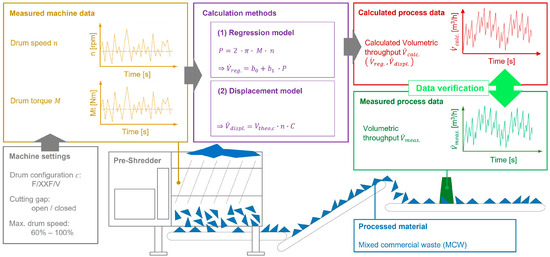

A new methodological approach was developed to address this research question. It is based on the idea of determining or approximating the volume throughput rate with a model. The model uses available machine parameters together with information on the setting parameters and the input material. Specifically, measured machine parameters are used to determine or approximate the volume throughput rate with two different calculation methods. The calculated values were then verified against the measured volume throughput rate. Figure 3 illustrates the basic approach as a method diagram.

Figure 3.

Method diagram for approximating the volume throughput rate using the machine parameters drum speed and drum torque.

The two modelling approaches developed are described in detail in the following subsections. Furthermore, the two reference models for the subsequent evaluation are also described. Finally, reference is also made to the statistical methods used.

2.2.1. Regression Model

The so-called regression model is the first modelling approach developed. It is based on the hypothesis that the power at the shredding drum, calculated according to Formula (3), is related to the volume throughput rate. The drum power combines the drum torque, as a measure of the load, and the drum speed, as a measure of the possible volume displacement, which supports this hypothesis. The drum torque is determined according to Formula (4) from three inputs: the output torque obtained from the measurements at the axial piston variable displacement pump, the reduction ratio of the planetary gearbox, and the double-sided drum drive. Formula (5) then relates the drum torque to the tooth force acting at the effective lever arm, i.e., half the drum diameter. The author of [28] has already demonstrated that the force required for crushing is directly proportional to the increase in surface area.
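Formulas (3) to (5) are not reproduced in this text. The sketch below traces the chain from the measured motor torque to the drum power and tooth force. It assumes the usual relations:
- P = 2π·n·M_D, with n in revolutions per second;
- M_D = 2·i·M_H for a double-sided drive, with equal load on both sides and gearbox losses neglected;
- F = M_D/(d/2).

The gear ratio, drum diameter, and operating point are hypothetical values, not data from the tests.

```python
import math

def drum_torque_nm(motor_torque_nm, gear_ratio):
    """Drum torque from one measured side: x gear reduction ratio, x 2 for the
    double-sided drive (equal load on both sides, gearbox losses neglected)."""
    return 2.0 * gear_ratio * motor_torque_nm

def drum_power_kw(drum_torque, drum_speed_rpm):
    """Mechanical drum power P = 2*pi*n*M, with n converted from 1/min to 1/s."""
    return 2.0 * math.pi * (drum_speed_rpm / 60.0) * drum_torque / 1000.0

def tooth_force_n(drum_torque, drum_diameter_m):
    """Tooth force at the effective lever arm d/2."""
    return drum_torque / (drum_diameter_m / 2.0)

# Hypothetical values: 1.5 kN·m motor torque, i = 40, 25 rpm, d = 1.0 m.
m_d = drum_torque_nm(1500.0, 40.0)
p_kw = drum_power_kw(m_d, 25.0)
f_n = tooth_force_n(m_d, 1.0)
print(m_d, round(p_kw, 1), f_n)
```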

The drum speed is another important reference variable. Khodier et al. [23] have already shown that the drum speed has a significant influence on the volume throughput rate. Furthermore, it can be assumed that a drum speed of zero implies a volume throughput rate of zero, because no material can be crushed or displaced during this period. This can occur, for example, when the machine’s overload protection is triggered by contact with an impurity. The machine’s reversing function is a special case [24] that results in a negative drum speed. This mode is triggered depending on the situation, for example by the overload protection, or automatically by the control program. These boundary conditions were also taken into account in the regression model: a drum speed equal to or less than zero leads to a volume throughput rate of zero for this period.

Linear regression was used to describe the possible relationship between the calculated drum power and the measured volume throughput rate. A linear model was chosen, because preliminary investigations showed that a quadratic model does not yield a better approximation. The volume throughput rate is the criterion (dependent) variable, which the model subsequently calculates or estimates. The regression line itself is determined from the measured volume throughput rate. The drum power serves as the predictor and represents the future input value. The two coefficients to be determined are the slope of the regression line and the intercept, i.e., the offset of the line along the y-axis at x = 0 [29]. The regression line follows the general Formula (6) [29], whose output is the estimated volume throughput.
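Formula (6) corresponds to a straight line ŷ = b·x + a, with the drum power as predictor x, slope b, and intercept a. These symbol names are chosen here for illustration. A minimal ordinary least-squares fit for one test series can be sketched as follows. The function name and example values are hypothetical, and in practice a statistics package would typically be used.

```python
from statistics import fmean

def fit_line(x, y):
    """Ordinary least squares for y ≈ b*x + a; returns (slope b, intercept a)."""
    x_mean, y_mean = fmean(x), fmean(y)
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    b = sxy / sxx
    return b, y_mean - b * x_mean

# Hypothetical per-minute drum power (kW) and measured volume flow (m^3/h).
power = [100.0, 200.0, 300.0, 400.0]
flow = [70.0, 120.0, 170.0, 220.0]
slope, intercept = fit_line(power, flow)
print(slope, intercept)
```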

The regression lines are modelled for each group according to Table 2. For the subsequent model evaluation, a separate model is formed for each test series from the other test series of its group, i.e., excluding the test series itself. The model coefficients are the arithmetic means of the slopes and intercepts of these other test series. Using Formula (7), the volume flow rate is then determined from the model in the form of time series information for each test series.
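The leave-one-out averaging of the coefficients can be sketched as follows. The prediction step also applies the boundary condition described earlier, under which a drum speed at or below zero yields zero throughput. The exact form of Formula (7) is assumed here to be ŷ = b̄·P + ā per time step. The function names, group labels, and coefficient values are hypothetical.

```python
from statistics import fmean

def loo_coefficients(coeffs, group_of, series):
    """Mean slope and intercept over all *other* test series of the same group."""
    others = [s for s in coeffs if s != series and group_of[s] == group_of[series]]
    b = fmean(coeffs[s][0] for s in others)
    a = fmean(coeffs[s][1] for s in others)
    return b, a

def predict_volume_flow(power_kw, speed_rpm, b, a):
    """Q_hat = b*P + a per time step, set to zero when the drum is stopped or reversing."""
    return [b * p + a if n > 0 else 0.0 for p, n in zip(power_kw, speed_rpm)]

# Hypothetical per-series fits (slope, intercept) and group labels.
coeffs = {1: (0.4, 10.0), 2: (0.6, 30.0), 3: (0.5, 20.0), 4: (9.9, 99.0)}
group_of = {1: "F-open", 2: "F-open", 3: "F-open", 4: "V-closed"}
b, a = loo_coefficients(coeffs, group_of, series=1)  # uses series 2 and 3 only
q_hat = predict_volume_flow([100.0, 200.0, 150.0], [25.0, -5.0, 0.0], b, a)
print(b, a, q_hat)
```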

2.2.2. Displacement Model

As an alternative to the regression model, a second modelling approach, the so-called displacement model, was developed. It determines the volumetric throughput from the theoretical displacement volume, taking the drum speed into account. The theoretical displacement volume per drum revolution depends on the design of the cutting geometry (see Figure 1). It is calculated from the technical data in Table 3 according to Formulas (8) and (9).

Table 3.

Technical data of the three different cutting geometries.

The theoretical displacement volume is the volume swept by the complete cross-sectional area of all cutting tools on the shredding drum during a full 360° rotation. In reality, the material is fed into the hopper of the shredder from above and caught by the shredding drum. The drum presses it against the counter comb, where it is shredded. It then falls onto a conveyor belt and is discharged. Owing to the installation geometry, the effective range of the shredding drum is limited to approx. 120° per revolution. In addition, the relatively large cutting-gaps of the cutting tools tend to produce a tearing rather than a cutting action. As a result, the material is coarser and less defined [8]. Furthermore, a highly inhomogeneous and fluctuating material composition is to be expected when treating mixed commercial waste [4].
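Formulas (8) and (9) and the data in Table 3 are not reproduced in this text. Purely as an illustration of the definition above, the sketch assumes that each tooth sweeps its cross-sectional area along a circle of a given diameter, so that V_th = z·A·π·d per revolution. The tooth count, tooth area, and path diameter are hypothetical inputs, and the authors' exact formulation may differ. V_th does not include the restricted effective range of approx. 120° or the other effects described above. These are captured empirically by correction factors.

```python
import math

def theoretical_displacement_m3(n_teeth, tooth_area_m2, path_diameter_m):
    """Volume swept by all teeth during one full 360° revolution (m^3 per revolution).
    Assumed form: n_teeth * tooth cross-section * circumference of the tooth path."""
    return n_teeth * tooth_area_m2 * math.pi * path_diameter_m

v_th = theoretical_displacement_m3(n_teeth=20, tooth_area_m2=0.01, path_diameter_m=1.0)
print(round(v_th, 4))
```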

To take these effects into account, correction factors for the real displacement volume were determined from the available test data. The real displacement volume of each test series is calculated from its volume throughput and average drum speed according to Formula (10). Relating it to the previously determined theoretical displacement volume according to Formula (11) yields the theoretical correction factor of each test series. This factor depends on the respective cutting tool configuration.

The correction factor used in the model for each test series is the arithmetic mean of the theoretical correction factors of all other test series in its group (Table 2). The theoretical correction factor of the test series itself is again excluded to ensure independence of the data. This yields an independent correction factor for each test series. With these correction factors, the modelled volume flow rates of each test series are calculated according to Formula (12).
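Formulas (10) to (12) are not reproduced in this text. Assuming the throughput is given in m³/h and the drum speed in revolutions per minute, they can be sketched as:
- V_real = Q/(60·n̄);
- k = V_real/V_th;
- Q̂(t) = k̄·V_th·60·n(t), where k̄ is the leave-one-out group mean.

Treating negative drum speeds (reversing) as zero throughput is an added assumption that mirrors the regression model. All names and numbers are hypothetical.

```python
from statistics import fmean

def real_displacement_m3(q_m3_per_h, mean_speed_rpm):
    """Real displacement per revolution: hourly volume / revolutions per hour."""
    return q_m3_per_h / (mean_speed_rpm * 60.0)

def loo_factor(factors, group_of, series):
    """Mean theoretical correction factor of all other series in the same group."""
    return fmean(k for s, k in factors.items()
                 if s != series and group_of[s] == group_of[series])

def displacement_model(speed_rpm, k, v_th_m3):
    """Modelled volume flow (m^3/h) per time step; speeds <= 0 give zero (assumption)."""
    return [k * v_th_m3 * max(n, 0.0) * 60.0 for n in speed_rpm]

V_TH = 0.6                                   # hypothetical m^3 per revolution
v_real = real_displacement_m3(180.0, 25.0)   # 180 m^3/h at an average of 25 rpm
k_theo = v_real / V_TH                       # theoretical correction factor, Formula (11)
factors = {1: k_theo, 2: 0.30, 3: 0.25}      # hypothetical factors of one group
group_of = {1: "XXF-open", 2: "XXF-open", 3: "XXF-open"}
k_model = loo_factor(factors, group_of, series=1)
q_model = displacement_model([25.0, -10.0], k_model, V_TH)
print(v_real, k_theo, k_model, q_model)
```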

2.2.3. Mean Value Model (Reference Model 1)

To evaluate the performance of the models, a meaningful reference is required. The simplest estimator, which is also often used in practice, is the average throughput from previous experience. This approach is implemented in the mean value model. Specifically, the model value for each test series is the arithmetic mean volume throughput of the other test series in its group according to Table 2. The volume throughput of the test series itself is again excluded to ensure the independence of the data.
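The mean value model reduces to a leave-one-out group mean. A minimal sketch with hypothetical names, group labels, and throughput values:

```python
from statistics import fmean

def mean_value_model(hourly_q, group_of, series):
    """Reference model 1: mean hourly volume throughput of the other series in the group."""
    return fmean(q for s, q in hourly_q.items()
                 if s != series and group_of[s] == group_of[series])

hourly_q = {1: 100.0, 2: 140.0, 3: 160.0, 4: 50.0}   # hypothetical m^3/h
group_of = {1: "V-open", 2: "V-open", 3: "V-open", 4: "V-closed"}
print(mean_value_model(hourly_q, group_of, series=1))
```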

2.2.4. ANOVA Model (Reference Model 2)

Khodier et al. [23] have already analyzed how the cutting geometries, cutting-gap settings, and drum speeds listed in Table 1 influence the volume and mass throughput and the energy requirement. They used an analysis of variance (ANOVA) to develop a model that describes these influences. This model predicts a volume throughput rate from the settings of each test series. These predictions serve as a second reference for assessing the performance of the two newly developed modelling approaches.

2.3. Statistical Methods

Statistical methods are particularly relevant to this research topic. They are used for three purposes: to classify correlations between parameters, to examine the quality of the models on the basis of their prediction accuracy, and to assess the significance of differences in accuracy between the models. The methods used for the statistical assessment are described in the following subsections.

2.3.1. Test for Normal Distribution

The reliable applicability of most parametric tests requires normally distributed data. Testing for normal distribution is therefore one of the most important goodness-of-fit tests in statistics [30]. Correlation analysis using the Pearson correlation coefficient and the calculation of confidence intervals also require normally distributed data. Common tests for normality include the Shapiro–Wilk test and the Kolmogorov–Smirnov test [31]. Because the sample size is small, particular attention was paid to the Shapiro–Wilk test in the present investigation, as it is suitable for testing small samples for normal distribution [31].
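For reference, the Shapiro–Wilk test statistic has the standard form below. Here, x_(i) are the sample values sorted in ascending order and x̄ is the sample mean. The weights a_i are derived from the expected values and covariances of the order statistics of a standard normal sample. Values of W close to 1 are consistent with normality. Small values of W, and correspondingly small p-values, lead to rejection of the normality hypothesis. In practice, W and its p-value are obtained from statistical software rather than computed by hand.

```latex
W = \frac{\left( \sum_{i=1}^{n} a_i \, x_{(i)} \right)^{2}}
         {\sum_{i=1}^{n} \left( x_i - \bar{x} \right)^{2}}
```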

2.3.2. Non-Parametric Data Analysis

Non-parametric statistical approaches can be used to compare test series whose data sets are not normally distributed. For example, the interquartile range (IQR) is used as a measure of statistical dispersion. It represents the dispersion of the middle 50% of the data and is defined as the difference between the third quartile (Q3) and the first quartile (Q1) of a data set. It is often displayed graphically as part of a boxplot, which allows quick interpretation. The boxplot shows the interquartile range as a box, usually together with the median and, optionally, the mean of the sample. Data points outside the box are covered by whiskers extending at most 1.5 × IQR below Q1 or above Q3. The main advantage of the interquartile range is its robustness against outliers: because only the middle 50% of the data are taken into account, outliers have little influence.

To include a larger proportion of the data in the evaluation, a wider range can be used instead of the interquartile range, for example the range between the 10th and 90th percentiles. This range covers 80% of the data points and disregards only the outer 20%.
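The dispersion measures described above can be sketched with Python's standard `statistics` module. The 1.5 factor for the whisker limits follows the common boxplot convention. Quantile definitions differ between software packages (`statistics.quantiles` uses the "exclusive" method by default), so the values may deviate slightly from those of other tools. The example data are hypothetical.

```python
from statistics import quantiles

def iqr(data):
    """Interquartile range Q3 - Q1 (middle 50% of the data)."""
    q1, _, q3 = quantiles(data, n=4)
    return q3 - q1

def p10_p90_range(data):
    """Range between the 10th and 90th percentiles (middle 80% of the data)."""
    deciles = quantiles(data, n=10)   # nine cut points: P10, P20, ..., P90
    return deciles[-1] - deciles[0]

def whisker_limits(data, k=1.5):
    """Outer limits Q1 - k*IQR and Q3 + k*IQR used for boxplot whiskers."""
    q1, _, q3 = quantiles(data, n=4)
    spread = q3 - q1
    return q1 - k * spread, q3 + k * spread

sample = list(range(1, 12))           # hypothetical data: 1, 2, ..., 11
print(iqr(sample), p10_p90_range(sample), whisker_limits(sample))
```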

3. Results and Discussion

The results and discussion of all analyses are presented in detail in the following sections.

3.1. Volume Flow Data of All Test Series and Models

As part of the evaluation, the measured volume flow rates were compared with those determined by the different calculation models. To enable a simple comparison of the absolute volume flow rates, the arithmetic mean of the 60 data points per model was calculated. Each observation covers a test time of one hour, so this value also corresponds to the hourly average, i.e., the specific volume throughput capacity. The measured volume flow rates show a large scattering range, from to . This is due to the different cutting tool, cutting-gap, and speed configurations, but also, in particular, to the material being shredded. Variant F ( to ), followed by variant XXF ( to ) and variant V ( to ), shows the widest spread. These differences are mainly due to the cutting tool geometry itself. Variant F has the largest axial cutting-gaps (see Table 3 and Figure 1). Moreover, in this variant, opening the radial cutting-gap has a much greater effect on the resulting open cross-sectional area (clearance area), and variant F has 45% more cutting tools (teeth). It is therefore plausible that this cutting tool geometry has the greatest volume throughput potential. At the same time, it shows the greatest spread in the setting-related volume throughput rates. Variant XXF, in contrast, has the smallest axial cutting-gaps and the smallest number of teeth, but the radial cutting-gap setting influences it similarly to variant F. Variant V has axial cutting-gaps as small as those of variant XXF. Owing to its conical tooth shape, however, the radial cutting-gap setting has much less influence than in the other two variants. In addition, only this variant has an extra comb element under the shredding drum. This element is intended to ensure defined secondary shredding, but it also limits the achievable volume throughput rates.

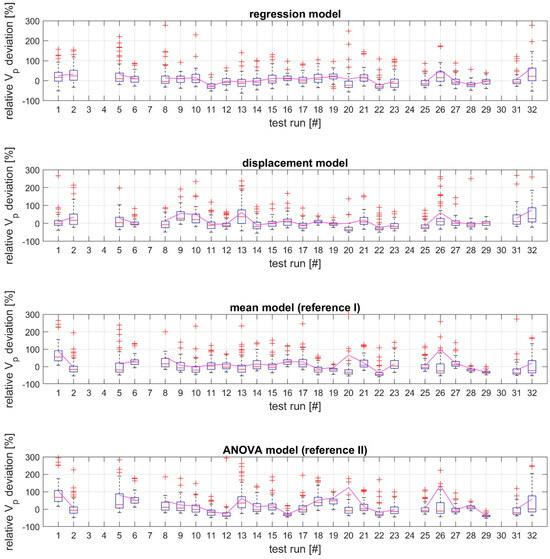

3.2. Performance Comparison of the Models

To compare the performance of the different models, the relative deviations between the measured volume flow rates and those of the respective models were determined for each model and each test series. All investigations were carried out at a data resolution of 0.016 Hz, so at least 60 data points were available for each test series. The Shapiro–Wilk test was used to check the resulting data sets for normal distribution. Most data groups were not normally distributed, so non-parametric methods were used for the assessment. Specifically, the interquartile range was used as a measure of the dispersion of the data. It represents the middle 50% of the data points. Figure 4 shows it as a box plot for the four modelling approaches and the 27 test series considered (see Table 2).
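The relative deviation per time step can be sketched as (model − measured)/measured, in percent. If the model values are non-negative, the deviation cannot fall below −100%. The text does not state how time steps with a measured throughput of zero were handled. Skipping them here is an assumption, and the function name is hypothetical.

```python
from statistics import median

def relative_deviation(model, measured):
    """Per-time-step relative deviation in percent; steps with measured <= 0 are skipped."""
    return [100.0 * (m - r) / r for m, r in zip(model, measured) if r > 0]

# Hypothetical per-minute values (m^3/h): model vs. measurement.
dev = relative_deviation([0.0, 150.0, 90.0, 50.0], [100.0, 100.0, 100.0, 0.0])
print(dev, median(dev))
```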

Figure 4.

Average relative deviation of the respective model values from the measured (real) volume flow rates.

Figure 4 shows that the relative deviation is bounded below at −100% and never falls below this value: a deviation below −100% would imply a negative modelled volume throughput, which is physically implausible. Overestimates, by contrast, have no upper bound. Furthermore, the median deviation usually lies close to zero. It can therefore be assumed that the models underestimate the real volume throughput about as often as they overestimate it, so a constant offset in the form of a simple correction factor would not significantly improve their performance. However, the mean of the respective data (magenta line) lies mostly in the positive range and varies much more strongly. This is explained primarily by outliers in the positive deviation range, i.e., by cases in which the volume throughput is overestimated.
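The asymmetry can be made concrete with a short sketch, again assuming relative deviation = (modelled − measured) / measured × 100%. A non-negative model output cannot produce a deviation below −100%, whereas overestimates are unbounded. A single large overestimate therefore pulls the mean upward while the median stays near zero. All numbers are invented for illustration.

```python
from statistics import mean, median


def relative_deviation(q_measured, q_modelled):
    """Relative deviation in percent (assumed sign convention: + = overestimation)."""
    return (q_modelled - q_measured) / q_measured * 100.0


# Lower bound: a model output of zero gives exactly -100 %; no non-negative
# model value can go below that.
print(relative_deviation(50.0, 0.0))    # -100.0

# The upper side is unbounded: three times the measured value gives +200 %.
print(relative_deviation(50.0, 150.0))  # 200.0

# Hypothetical deviations of one model: roughly balanced around zero,
# plus one large overestimate (outlier).
deviations = [-20.0, -10.0, 0.0, 10.0, 20.0, 200.0]
print(median(deviations), round(mean(deviations), 1))  # 5.0 33.3
```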

To compare the models, the interquartile ranges of all groups defined according to Table 2 were determined at the model level. Table 4 shows the differences in performance of the respective models, taking into account the cutting unit geometry and the cutting-gap settings. For the newly developed approaches (regression model and displacement model), no consistent pattern between the closed and open cutting-gap settings emerges with regard to estimation accuracy, regardless of the model. However, the open cutting-gap setting tends to produce more accurate results. One reason for this could be the lower power requirement during shredding and the resulting lower load level in this setting [26]. This leads to more continuous operation, under which the models perform better. A clearer picture emerges at the level of the different cutting tools. Here, variant V delivers consistently better results for the interquartile ranges , followed by variant F and the XXF variant . Given the reasoning in Section 3.1, this result for variant V is plausible, whereas the relatively large deviations of variant XXF are unexpected. As these deviations occur not only in the newly developed models but also in the two reference models, their cause more likely lies in the measurement data. For example, longer operating times in individual tests during which the test machine was not fully loaded could have caused the ratios between load and speed levels and volume throughput rates to fluctuate strongly, leading to significantly greater scatter. The inhomogeneity of the input material (mixed commercial waste, MCW) must also be considered as a possible cause. Irrespective of the cutting unit geometries and the cutting-gap setting, both modelling approaches, the regression model () and the displacement model (), show similar scatter that is significantly lower than that of the two reference models (see Table 4).
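A minimal sketch of grouped dispersion. Relative deviations are pooled per (cutting tool geometry, cutting-gap setting) and per geometry alone, and the interquartile range is computed for each group, mirroring the groupings used in Table 4. The record layout, group labels and values are hypothetical. How the authors aggregated the group results to the model level is not reproduced here.

```python
from collections import defaultdict
from statistics import quantiles


def iqr(values):
    """Interquartile range (Q3 - Q1) with linear interpolation."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return q3 - q1


def grouped_iqr(records, key):
    """Pool relative deviations by key(record) and return the IQR per group."""
    groups = defaultdict(list)
    for rec in records:
        groups[key(rec)].append(rec["deviation"])
    return {k: iqr(v) for k, v in groups.items()}


# Hypothetical records: cutting tool geometry, gap setting, relative deviation (%).
records = [
    {"tool": "V", "gap": "open", "deviation": -10.0},
    {"tool": "V", "gap": "open", "deviation": 0.0},
    {"tool": "V", "gap": "closed", "deviation": 10.0},
    {"tool": "V", "gap": "closed", "deviation": 20.0},
    {"tool": "XXF", "gap": "open", "deviation": -40.0},
    {"tool": "XXF", "gap": "open", "deviation": 0.0},
    {"tool": "XXF", "gap": "closed", "deviation": 40.0},
    {"tool": "XXF", "gap": "closed", "deviation": 80.0},
]

by_tool = grouped_iqr(records, key=lambda r: r["tool"])
by_tool_and_gap = grouped_iqr(records, key=lambda r: (r["tool"], r["gap"]))
print(by_tool)  # {'V': 15.0, 'XXF': 60.0}
```

Using a key function keeps the same code usable for all three levels of Table 4: per geometry and gap setting, per geometry, or a constant key for no grouping.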

Table 4.

Interquartile range and 80th percentile of the relative deviations between the measured (real) volume throughput and the model-based calculation, grouped by cutting unit geometry and cutting-gap setting, by cutting unit geometry only, or without grouping.

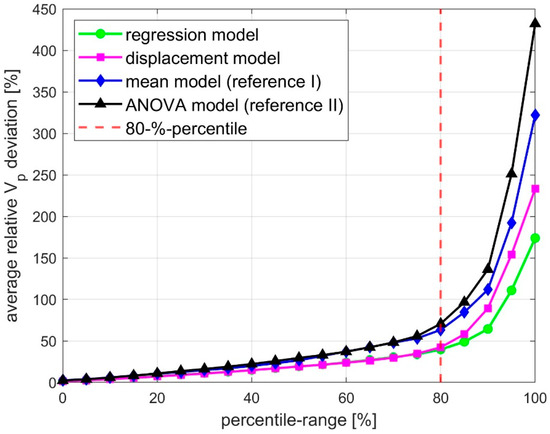

As the interquartile range covers only 50% of the measurement data, an additional analysis was carried out. First, the absolute values of the relative deviations were determined. Based on these, the 0th to 99th percentiles were calculated in 5% steps. Figure 5 shows the average relative deviation between the measured (real) volume throughput rates and those of the respective model by percentile.
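One possible reading of this procedure is sketched below. It takes the absolute ("sign-adjusted") relative deviations of a model and evaluates their percentiles at 0, 5, …, 95 and 99%. Under this reading, the value at the 80th percentile is the bound ±X% that holds for 80% of the data points. Whether the authors additionally averaged these values across test series is an assumption not settled here. The deviations are hypothetical.

```python
def percentile(values, p):
    """p-th percentile (0 <= p <= 100) with linear interpolation between
    order statistics (the same convention as NumPy's default)."""
    data = sorted(values)
    if not data:
        raise ValueError("percentile of empty data")
    if not 0 <= p <= 100:
        raise ValueError("p must lie between 0 and 100")
    pos = (len(data) - 1) * p / 100.0
    lower = int(pos)
    upper = min(lower + 1, len(data) - 1)
    frac = pos - lower
    return data[lower] + (data[upper] - data[lower]) * frac


def percentile_curve(deviations, steps=None):
    """Percentiles of the absolute (sign-adjusted) relative deviations."""
    abs_dev = [abs(d) for d in deviations]
    if steps is None:
        steps = list(range(0, 100, 5)) + [99]  # 0, 5, ..., 95 and 99
    return {p: percentile(abs_dev, p) for p in steps}


# Hypothetical relative deviations (%) of one model, including two outliers.
deviations = [-5.0, 10.0, -15.0, 20.0, 0.0, 25.0, -30.0, 35.0, -40.0, 80.0, 150.0]

curve = percentile_curve(deviations)
print(curve[50], curve[80], curve[95])  # 25.0 40.0 115.0
```

Here the 80th-percentile entry of 40.0 means that 80% of the data points deviate by at most ±40%. The steep rise towards 95% and 99% comes from the two outliers, matching the increase with percentile described for Figure 5.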

Figure 5.

Average relative sign-adjusted deviation between the measured (real) volume flow rates and those of the respective model, by percentiles.

The average relative deviations of all models increase as the proportion of data points taken into account increases. This is to be expected, as higher percentiles include a growing share of outliers. In general, both newly developed approaches deliver consistently better performance, i.e., lower average deviations, with the regression model performing best. For the further analyses and the detailed evaluation of the individual models, the 80th percentile was chosen, as it excludes fewer outliers than the interquartile range. The detailed results are again shown in Table 4 and confirm the picture from the evaluation of the interquartile range. The displacement model for variant V delivers the highest accuracy, predicting the volume throughput within ±27% in 80% of cases. Without grouping by configuration, the regression model achieves ±40% in 80% of cases, slightly better than the displacement model with ±42%. According to this analysis, both developed models are significantly more accurate than the two reference models. In detail, based on the 80th error percentile, the error bound of the regression model is 23 percentage points narrower than that of the mean value model, and that of the displacement model is 21 percentage points narrower. A closer look at the two reference models shows that the mean value model performed consistently better than the ANOVA model. There are two reasons for this. First, unlike the mean value model, the ANOVA model is not based on the grouping introduced in this work (according to Table 2) but includes all 32 test series according to Table 1. Second, the empirical significance p of the lack-of-fit test is close to 0.05, which suggests that the model equation may not be sufficiently complex (underfitting).
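The accuracy gains quoted for the 80th percentile are differences in percentage points between error bounds, not relative improvements. The short sketch below reproduces them from the values reported in this article. For all configurations these are regression ±40%, displacement ±42%, mean value model ±63% and ANOVA model ±71%. For variant V, taken from the Conclusions, the displacement model reaches ±27% against ±54% for the mean value model. For contrast, the sketch also prints the relative reduction of the error bound, which is a different and larger figure. The helper name is hypothetical.

```python
def accuracy_gain(model_bound, reference_bound):
    """Compare two 80th-percentile error bounds given in %.

    Returns (gain in percentage points, relative reduction of the bound in %).
    """
    gain_pp = reference_bound - model_bound
    return gain_pp, gain_pp / reference_bound * 100.0


# 80th-percentile bounds reported in this article (all configurations).
bounds = {"regression": 40.0, "displacement": 42.0, "mean value": 63.0, "ANOVA": 71.0}
best_reference = min(bounds["mean value"], bounds["ANOVA"])  # mean value model

for name in ("regression", "displacement"):
    pp, rel = accuracy_gain(bounds[name], best_reference)
    print(f"{name}: {pp:.0f} pp, {rel:.1f} % relative")
# regression: 23 pp, 36.5 % relative
# displacement: 21 pp, 33.3 % relative

# Variant V: displacement model +/-27 % vs. mean value model +/-54 %.
print(accuracy_gain(27.0, 54.0))  # (27.0, 50.0)
```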

4. Conclusions

The investigation has shown that the regression and displacement models are credible and perform better than the reference models. Considering the 80th percentile across all configurations (“all configurations”), the regression model is 23 percentage points and the displacement model 21 percentage points more accurate than the best reference model (mean value model). However, these models are still less accurate than commercial solutions for direct measurement of the volume throughput capacity. The inhomogeneity of the mixed commercial waste (input material) complicates the relationship between the machine parameters and the volume throughput. No clear influence of the cutting-gap setting on prediction accuracy could be demonstrated, although the results suggest better model results with an open cutting gap. By contrast, there are major differences between the cutting tool configurations. The models perform best for variant V, with the highest prediction accuracy. Considering the 80th percentile, the displacement model estimates the volume throughput for this variant 27 percentage points more accurately than the reference model (mean value model): the displacement model achieves an estimation accuracy of ±27%, the regression model ±32%, and the mean value model ±54%. Overall, the regression and displacement models perform at a similar level.

The regression model performs better when all measurement data (including outliers) are taken into account. The displacement model is more cost-effective, as it requires only drum speed and geometry data, whereas the regression model additionally requires the drum torque. The key strength of the regression model is that it calculates the throughput rate as a function of the load (here, drum power). This reduces the risk of errors caused by poor loading and shows that the modelling approaches are suitable for estimating throughput performance.
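To make the additional input of the regression model concrete, drum power follows from drum torque and drum speed as P = M·ω with ω = 2π·n/60 (P in W, M in N·m, n in min⁻¹). The sketch below computes this power and fits a straight line of throughput versus power by ordinary least squares. The glossary lists linear regression coefficients, so a linear form is plausible. Even so, the single-predictor form, the function names and all numbers are hypothetical illustrations, and the article's actual regression equation and coefficients are not reproduced here.

```python
import math


def drum_power_kw(torque_nm, speed_rpm):
    """Mechanical drum power P = M * omega, omega = 2*pi*n/60 (result in kW)."""
    return torque_nm * 2.0 * math.pi * speed_rpm / 60.0 / 1000.0


def fit_line(x, y):
    """Ordinary least squares for y = b0 + b1 * x; returns (b0, b1)."""
    n = len(x)
    if n < 2 or n != len(y):
        raise ValueError("need at least two paired observations")
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("predictor values must not all be equal")
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    return y_mean - b1 * x_mean, b1


# Hypothetical calibration data: drum power (kW) vs. measured throughput (m^3/h).
power_kw = [100.0, 150.0, 200.0, 250.0]
throughput = [60.0, 80.0, 100.0, 120.0]
b0, b1 = fit_line(power_kw, throughput)

# Hypothetical operating point: 40 kN*m drum torque at 30 1/min.
p_now = drum_power_kw(40_000.0, 30.0)
print(round(p_now, 1), round(b0 + b1 * p_now, 1))  # 125.7 70.3
```

The displacement model, by comparison, needs only speed and geometry, which is why it avoids the torque signal used here.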

These findings are important for future development and highlight the need for more accurate models. At present, the models are too unreliable to replace existing direct measurement systems; for that, they would need an accuracy of about ±10%. Tests with more homogeneous materials, such as wood, could provide additional data for optimizing the models. More data on the cutting-gap setting are also needed to detect finer differences. The next step is to integrate the calculation models into the machine control software of the test vehicles so that they can be validated in future test series. Adding data from these test series at various settings is expected to improve model accuracy.

In addition to optimizing the models described, the use of artificial intelligence (AI) could be an interesting area of research in this context. Such methods, including deep-learning algorithms, place greater demands on computing power but may also improve prediction accuracy.

Author Contributions

C.F.: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Resources, Software, Validation, Visualization, Writing—original draft. K.K.: Data curation, Formal analysis, Investigation, Methodology, Validation, Writing—review & editing. T.L.: Investigation, Methodology, Resources, Validation, Writing—review & editing. R.P.: Conceptualization, Investigation, Project administration, Supervision, Writing—review & editing. R.S.: Conceptualization, Funding acquisition, Investigation, Methodology, Project administration, Resources, Supervision, Validation, Writing—review & editing. All authors have read and agreed to the published version of the manuscript.

Funding

Partial funding for this work was provided by the Center of Competence for Recycling and Recovery of Waste 4.0 (acronym ReWaste4.0) (contract number 860 884) as well as the Center of Competence for Recycling and Recovery of Waste for Future (acronym ReWaste F) (contract number 882 512) under the scope of the COMET—Competence Centers for Excellent Technologies—financially supported by BMK, BMAW, and the federal state of Styria, managed by the FFG.

Data Availability Statement

The main data are already included in the article. Please contact the corresponding author for further information.

Conflicts of Interest

Author Christoph Feyerer was employed by Komptech GmbH and, at the time of investigation and publication of this article, is a PhD student at Montanuniversität Leoben within the framework of the COMET project ReWaste F. The remaining authors declare that the research was conducted without any commercial or financial relationships that could be construed as a potential conflict of interest.

Glossary

| Description | Symbol or abbreviation |
| --- | --- |
| analysis of variance | ANOVA |
| linear regression coefficients | |
| average values of linear regression coefficients | |
| cutting tool geometry (types F, XXF, and V) | |
| correction factor and theoretical correction factor | |
| drum diameter | |
| digital material flow monitoring system | |
| tooth force | |
| number of test runs | |
| gear ratio of planetary gearbox | |
| interquartile range | |
| gravity | |
| height between points 1 and 2 | |
| height between points 3 and 4 | |
| height of shredding teeth | |
| height of counter comb teeth | |
| number of drives | |
| length between points A and B | |
| length of collection conveyor | |
| length between points C and D | |
| length of discharge conveyor | |
| length between points D and C | |
| length of shredding drum | |
| length of outfeed conveyor | |
| drum torque | |
| output torque of hydraulic motor | |
| mixed commercial waste | MCW |
| drum speed | |
| average drum speed | |
| number of teeth on shredding drum | |
| drum power | |
| differential pressure of hydraulic pump | |
| refuse derived fuel | |
| offset time between shredding drum and volumetric throughput sensor | |
| displacement volume of hydraulic pump | |
| real displacement volume and theoretical displacement volume | |
| volumetric and calculated volumetric throughput performance per hour | |
| calculated volumetric throughput performance per hour by ANOVA model | |
| calculated volumetric throughput performance per hour by displacement model | |
| measured volumetric throughput performance per hour | |
| calculated volumetric throughput performance per hour by regression model | |
| speed of collection conveyor | |
| speed of discharge conveyor | |
| speed of outfeed conveyor | |
| width of collection conveyor | |
| width of discharge conveyor | |
| width of outfeed conveyor | |
| width of shredding teeth | |
| width of counter comb teeth | |
| predictor value | |
| cutting-gap | |
| criterion value | |
| mechanical–hydraulic efficiency | |

References

1. European Commission. A European Green Deal. Available online: https://ec.europa.eu/info/strategy/priorities-2019-2024/european-green-deal_en (accessed on 11 February 2022).

2. European Union. Directive (EU) 2018/851 of the European Parliament and of the Council of 30 May 2018 Amending Directive 2008/98/EC on Waste (Text with EEA Relevance). Off. J. Eur. Union 2018, 150, 109–140.

3. Eurostat. Municipal Waste by Waste Management Operations: ENV_WASMUN; Eurostat: Luxembourg, 2019.

4. Weißenbach, T. Development of an Online/Ontime Method for the Characterization of Waste Streams in Waste Pre-Treatment Plants. Ph.D. Thesis, Montanuniversitaet Leoben, Leoben, Austria, 2021.

5. Wellacher, M.; Pomberger, R. Recyclingpotenzial von gemischtem Gewerbeabfall [Recycling potential of mixed commercial waste]. Osterr. Wasser Abfallwirtsch. 2017, 69, 437–445.

6. Burggräf, P.; Steinberg, F.; Sauer, C.R.; Nettesheim, P.; Wigger, M.; Becher, A.; Greiff, K.; Raulf, K.; Spies, A.; Köhler, H.; et al. Boosting the Circular Manufacturing of the Sustainable Paper Industry—A First Approach to Recycle Paper from Unexploited Sources such as Lightweight Packaging, Residual and Commercial Waste. Procedia CIRP 2023, 120, 505–510.

7. Friedrich, K.; Fritz, T.; Koinig, G.; Pomberger, R.; Vollprecht, D. Assessment of Technological Developments in Data Analytics for Sensor-Based and Robot Sorting Plants Based on Maturity Levels to Improve Austrian Waste Sorting Plants. Sustainability 2021, 13, 9472.

8. Kranert, M.; Cord-Landwehr, K. Einführung in die Abfallwirtschaft [Introduction to Waste Management], 4th ed.; Vieweg+Teubner: Wiesbaden, Germany, 2010.

9. Redwave. Mechanische Verarbeitungsanlagen zur Abfallbehandlung [Mechanical Treatment Plants for Waste Treatment]. Available online: https://redwave.com/loesungen/mt-plants (accessed on 11 February 2022).

10. Küppers, B.; Pomberger, R. Entwicklungen in der sensorgestützten Sortiertechnik [Developments in sensor-based sorting technology]. In Proceedings of the Österreichische Abfallwirtschaftstagung 2017, Graz, Austria, 10–11 May 2017.

11. Curtis, A. Material Machine and Process Oriented Requirements for the Development of a Smart Waste Factory. Ph.D. Thesis, Montanuniversitaet Leoben, Leoben, Austria, 2021.

12. Curtis, A.; Sarc, R. Real-time monitoring of volume flow, mass flow and shredder power consumption in mixed solid waste processing. Waste Manag. 2021, 131, 41–49.

13. Khodier, K.; Viczek, S.A.; Curtis, A.; Aldrian, A.; O’Leary, P.; Lehner, M.; Sarc, R. Sampling and analysis of coarsely shredded mixed commercial waste. Part I: Procedure, particle size and sorting analysis. Int. J. Environ. Sci. Technol. 2020, 17, 959–972.

14. Pomberger, R. Entwicklung von Ersatzbrennstoff für das HOTDISC-Verfahren und Analyse der Abfallwirtschaftlichen Relevanz [Development of Substitute Fuel for the HOTDISC Technology and Analysis of Waste Management Relevance]. Ph.D. Thesis, Montanuniversitaet Leoben, Leoben, Austria, 2008.

15. Sarc, R.; Curtis, A.; Kandlbauer, L.; Khodier, K.; Lorber, K.E.; Pomberger, R. Digitalisation and intelligent robotics in value chain of circular economy oriented waste management—A review. Waste Manag. 2019, 95, 476–492.

16. Kurniawan, T.A.; Liang, X.; O’callaghan, E.; Goh, H.; Othman, M.H.D.; Avtar, R.; Kusworo, T.D. Transformation of Solid Waste Management in China: Moving towards Sustainability through Digitalization-Based Circular Economy. Sustainability 2022, 14, 2374.

17. Perg, D. Entwicklung eines Messsystems zur Durchsatzmessung am Beispiel eines Mobilen Zerkleinerers [Development of a Measuring System for Throughput Measurement Using the Example of a Mobile Shredder]. Master’s Thesis, Montanuniversität Leoben, Leoben, Austria, 2017.

18. Aleksandrović, S.; Jović, M. Analysis of Belt Weigher Accuracy Limiting Factors. Int. J. Coal Prep. Util. 2011, 31, 223–241.

19. AVAW. ReWaste F. Available online: https://www.avaw-unileoben.at/en/research/projects/rewaste-f (accessed on 27 April 2025).

20. Coskun, E.; Bosling, M.; Pretz, T.; Feil, A.; Dombrowa, M. Mechanische Aufbereitungsprozesse effizient gestalten [Efficient Design of Mechanical Treatment Processes]. Available online: https://www.semanticscholar.org/paper/Mechanische-Aufbereitungsprozesse-effizient-Coskun-Bosling/5227837e2c73f34d00794bab16f0b050282a4e49 (accessed on 27 April 2025).

21. Thomé-Kozmiensky, K.J.; Goldmann, D. (Eds.) Recycling und Rohstoffe [Recycling and Raw Materials]; TK Verlag Karl Thomé-Kozmiensky: Neuruppin, Germany, 2017.

22. AVAW. ReWaste4.0. Available online: https://www.avaw-unileoben.at/en/research/projects/rewaste4-0 (accessed on 27 April 2025).

23. Khodier, K.; Feyerer, C.; Möllnitz, S.; Curtis, A.; Sarc, R. Efficient derivation of significant results from mechanical processing experiments with mixed solid waste: Coarse-shredding of commercial waste. Waste Manag. 2021, 121, 164–174.

24. Komptech. Komptech Maschinen & Anlagen [Komptech Machines & Plants]. Available online: https://www.komptech.com (accessed on 12 February 2022).

25. Feyerer, C. Interaktion des Belastungskollektives und der Werkzeuggeometrie eines langsamlaufenden Einwellenzerkleinerers [Interaction of the Load Collective and the Tool Geometry of a Low-Speed Single-Shaft Shredder]. Master’s Thesis, Montanuniversität Leoben, Leoben, Austria, 2020.

26. Hatami, H. Hydraulische Formelsammlung [Hydraulic Formulas]. Available online: https://de.scribd.com/document/467841566/hyd-formelsammlung-de-pdf (accessed on 27 April 2025).

27. Parker Hannifin GmbH. Pressure Sensor SCP03. Available online: https://www.parker.com/content/dam/parker/emea/germany-austria-and-switzerland/about-parker/vw/powerco-en/SCP03%20UK.pdf (accessed on 27 April 2025).

28. Von Rittinger, P.R. Taschenbuch der Aufbereitungskunde [Pocketbook of Processing Science]; unaltered reprint of the original 1867 edition; Salzwasser-Verlag GmbH: Frankfurt, Germany, 1867.

29. Moore, D.S.; McCabe, G.P.; Craig, B.A. Introduction to the Practice of Statistics, 6th ed.; W.H. Freeman and Co.: New York, NY, USA, 2009.

30. Khatun, N. Applications of Normality Test in Statistical Analysis. Open J. Stat. 2021, 11, 113–122.

31. Marshall, E.; Samuels, P. Checking Normality for Parametric Tests; ResearchGate: Sheffield/Birmingham, UK, 2017.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).