Large-Space Fire Detection Technology: A Review of Conventional Detector Limitations and Image-Based Target Detection Techniques

Abstract

1. Introduction

2. Limitations of Conventional Fire Detection Technology in Large-Space Applications

2.1. Point-Type Smoke/Temperature-Sensing Detectors

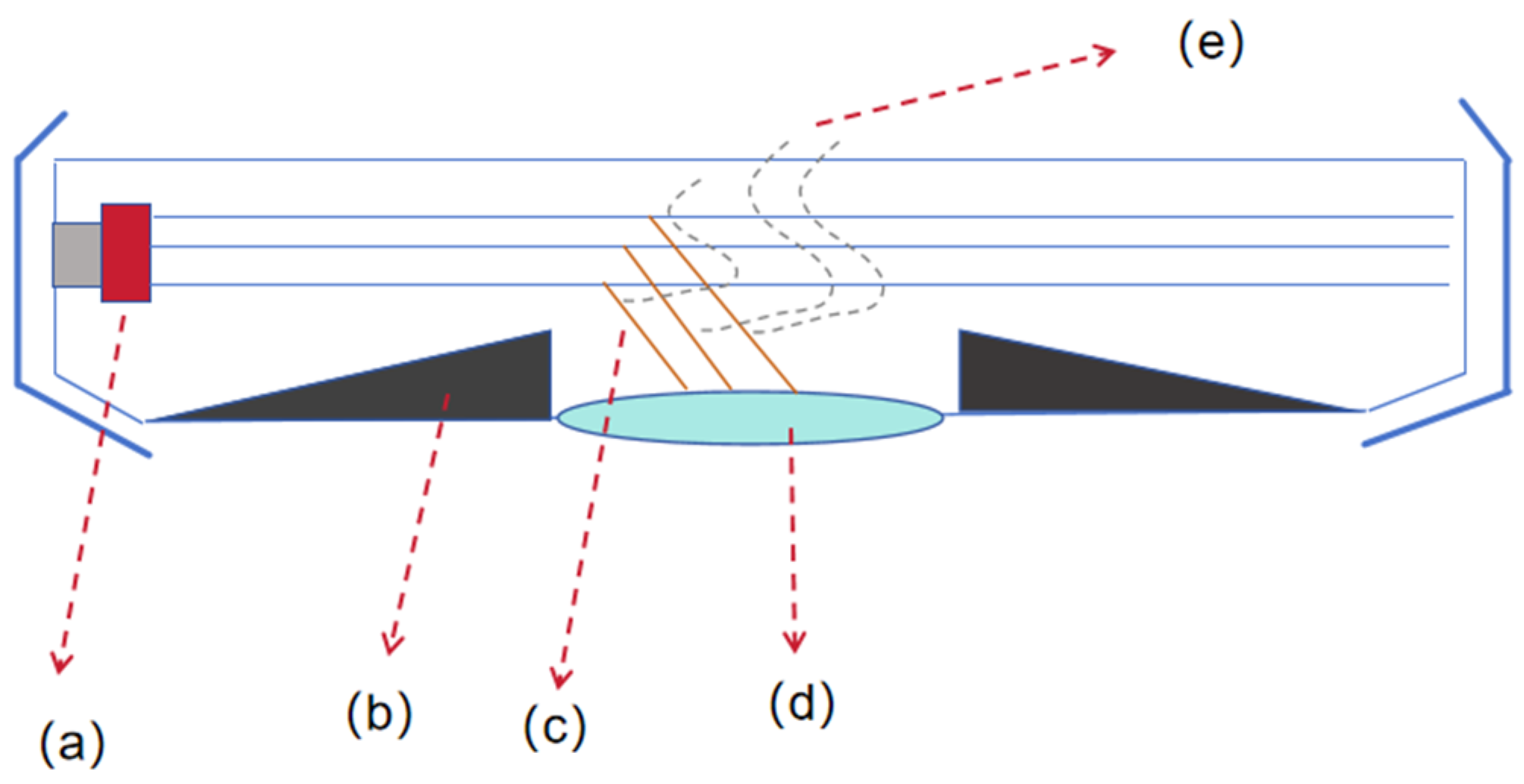

2.2. Line-Type Beam Smoke Fire Detectors

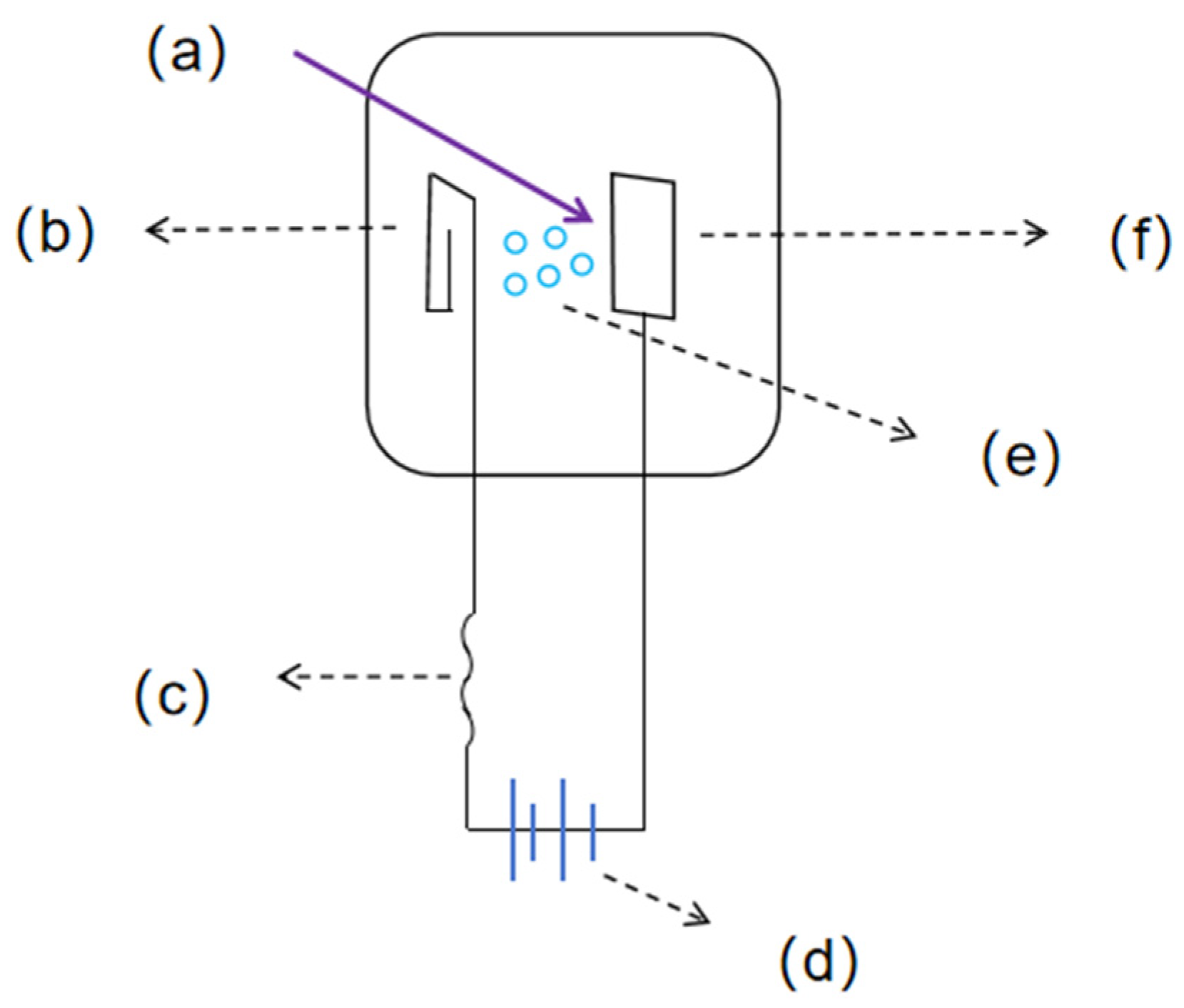

2.3. Infrared and Ultraviolet Flame Detectors

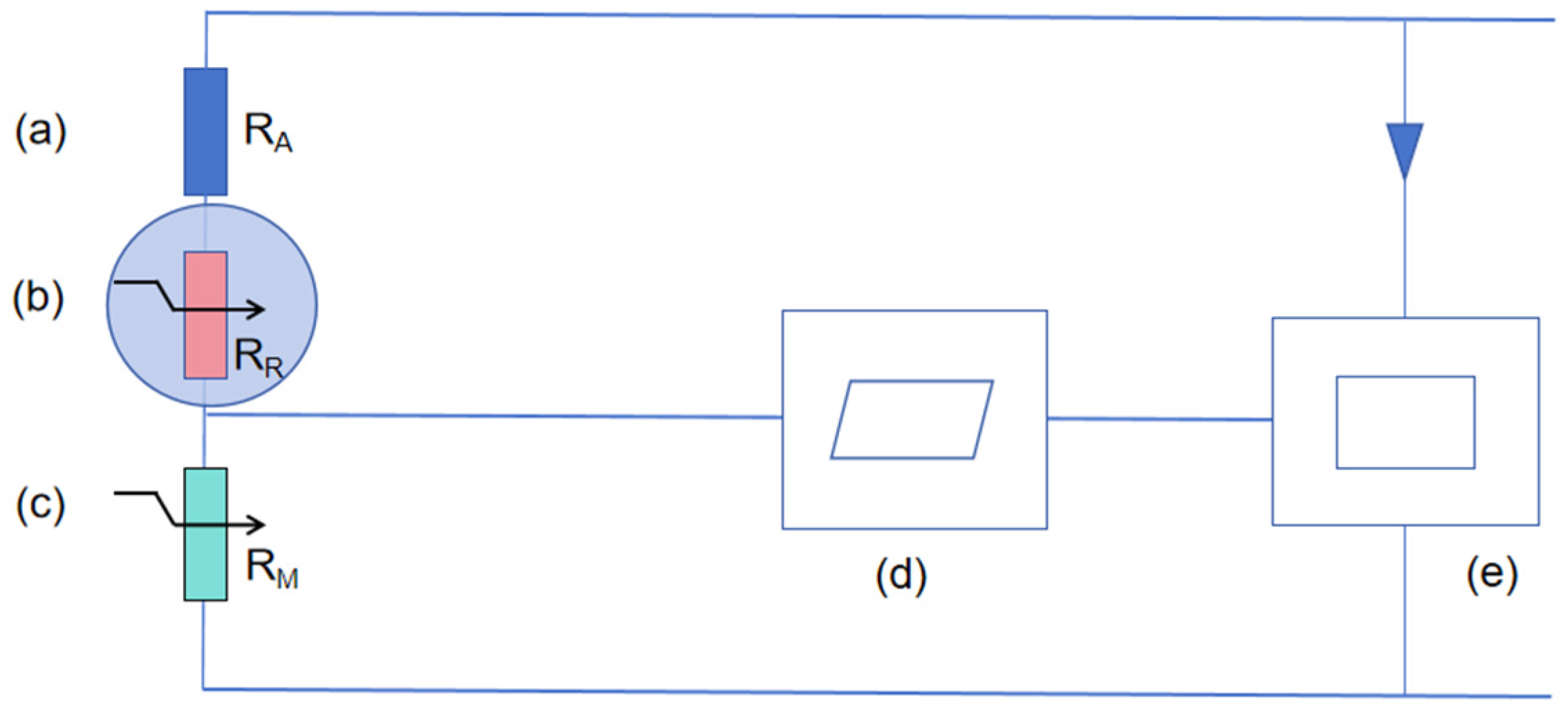

2.4. Aspirating Smoke Fire Detectors

2.5. Some Improvements in Conventional Fire Detection Techniques

2.6. The State of the Art in Large-Space Fire Detection Research

3. Image-Based Large-Space Fire Detection Technology

3.1. Characteristics of Image-Based Large-Space Fire Detection Technology

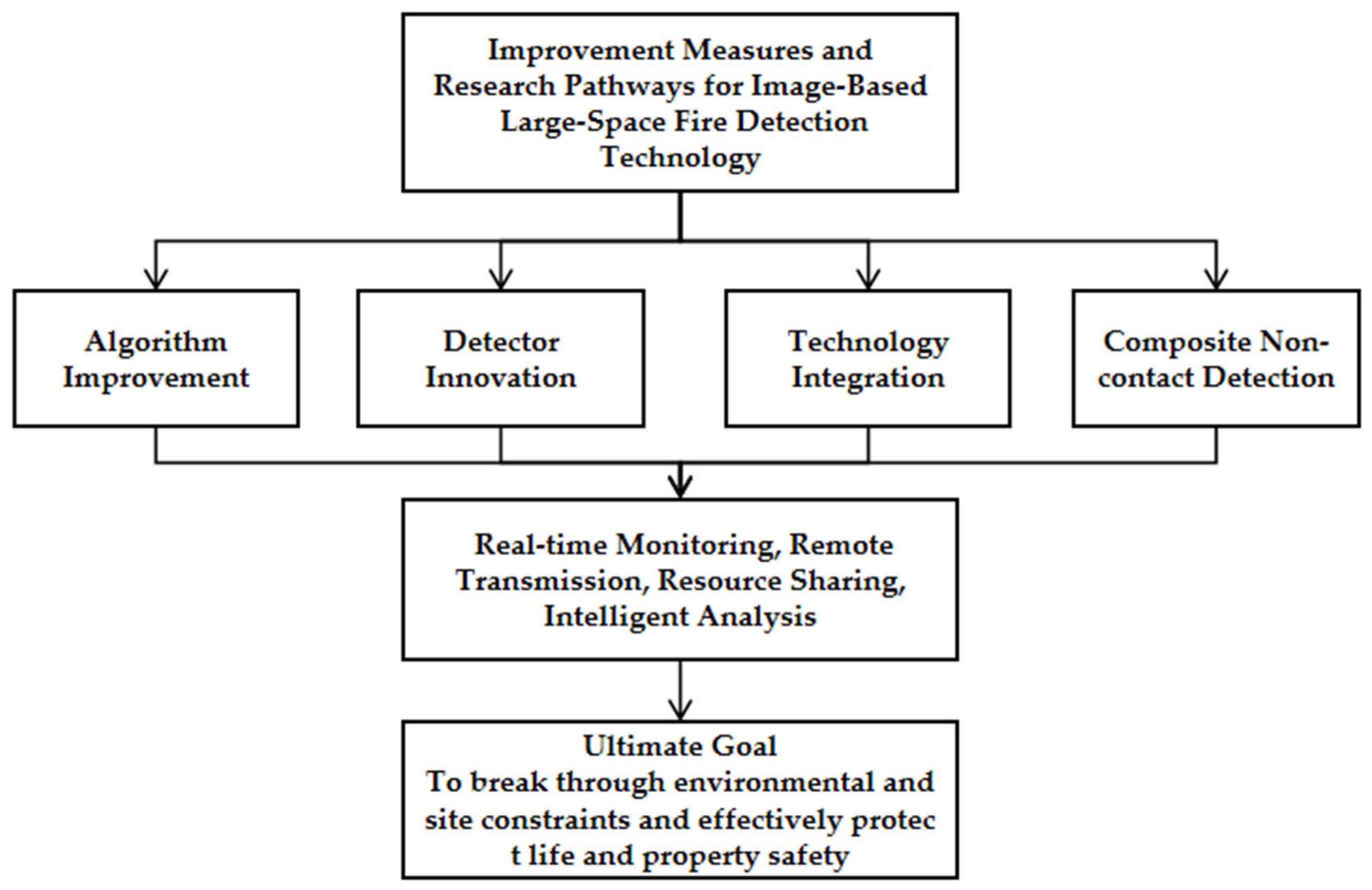

3.2. Improvement Measures

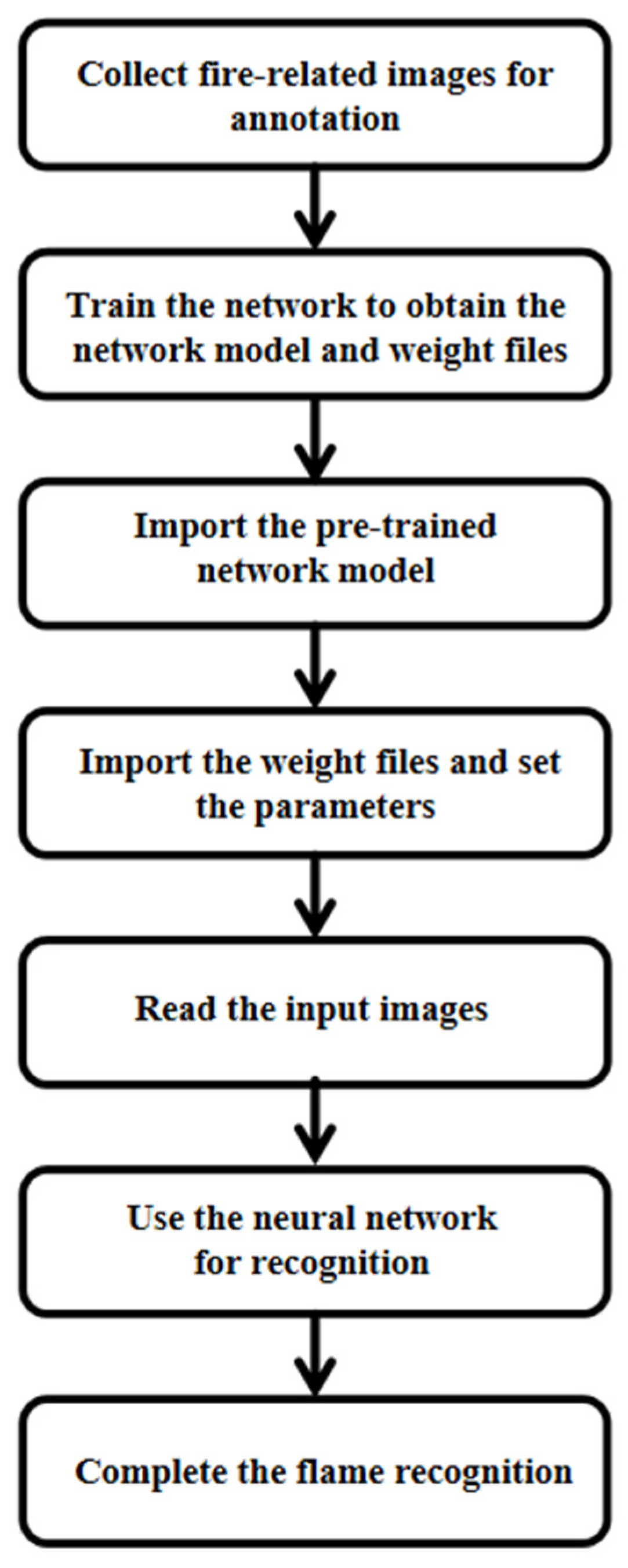

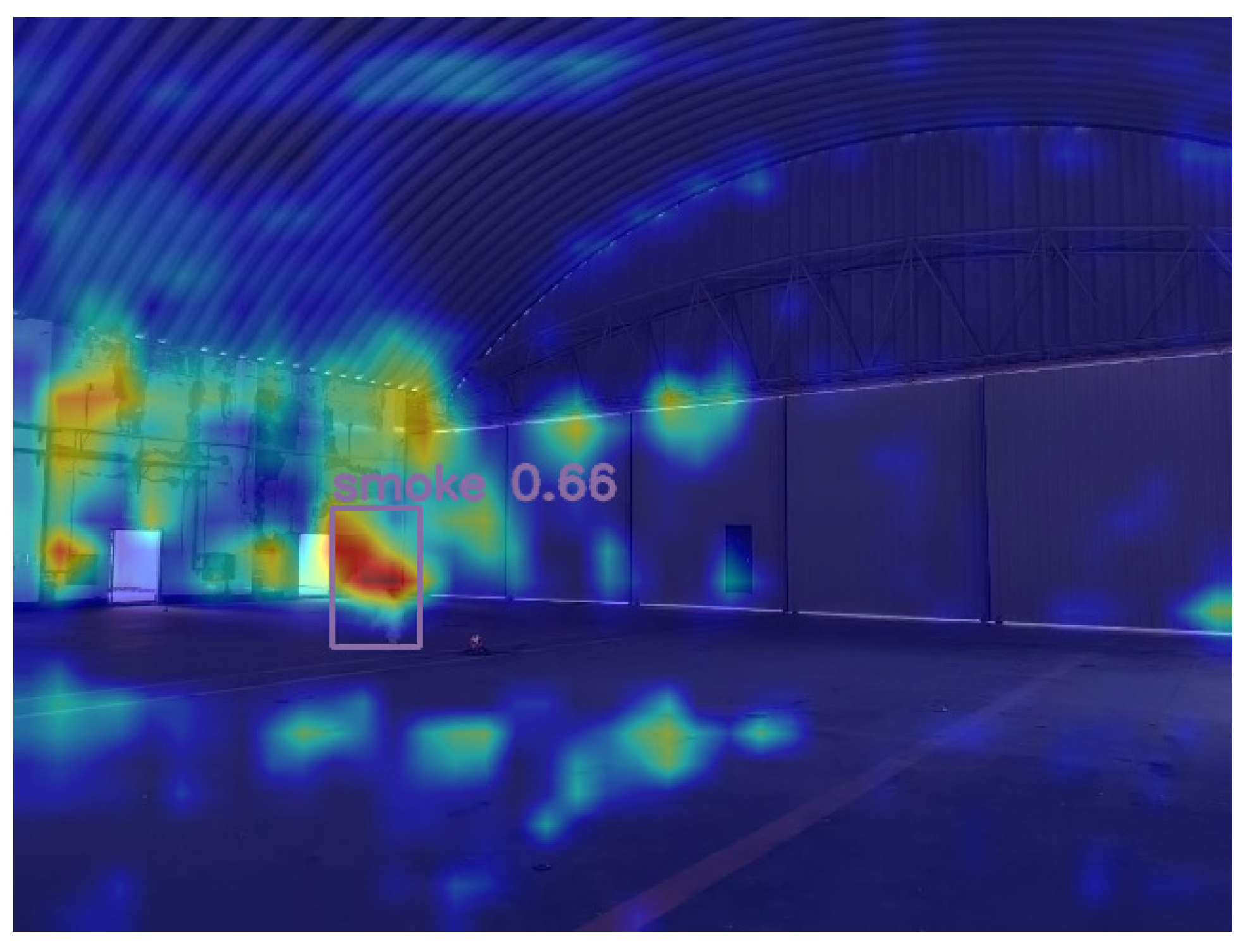

3.3. Target Recognition Algorithm

4. Innovation Directions in the Application of Image-Based Fire Detection Technology in Large-Space Environments

4.1. Selection of Image Acquisition Equipment and Fire Detection Equipment

4.2. Construction of the Dataset

4.3. Algorithm Improvement

4.4. Importance in the Selection of Technical Directions

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- CECS 263:2009; Technical Specification for Large-Space Intelligent Active Control Sprinkler Systems. China Association for Engineering Construction Standardization: Beijing, China, 2009.

- Hu, Y. Research on Image-based Fire Risk Recognition in Complex Large Space. Ph.D. Thesis, Xi’an University of Architecture and Technology, Xi’an, China, 2016. (In Chinese). Available online: https://kns.cnki.net/kcms2/article/abstract?v=RPbSoBw3VsFkk5qoQBYt9eWg3pC5HgNvnYaE_Da8WUjdDt8PTL8JKra53ij2ReXSjW92WXBKrtlcJcCKlX_olWk0QGGmAl06BJB6-KtEx_SdCyXeVxz6qR-QmY3sLKdVFzf4f-thB1KC9krc-urrcRUaPt_0VMneT6ewTYm8qZ1020UKPde_gtLuFyq83q9x&uniplatform=NZKPT&language=CHS (accessed on 20 June 2025).

- Chen, T.; Yuan, H.; Su, G.; Fan, W. An automatic fire searching and suppression system for large spaces. Fire Saf. J. 2004, 39, 297–307.

- Jamali, M.; Samavi, S.; Nejati, M.; Mirmahboub, B. Outdoor Fire Detection based on Color and Motion Characteristics. In Proceedings of the 21st Iranian Conference on Electrical Engineering (ICEE), Mashhad, Iran, 14–16 May 2013.

- Li, C.; Chen, J.; Li, H.; Hu, L.; Cao, J. Experimental research on fire spreading and detection method of underground utility pipe tunnel. Fire Sci. Technol. 2019, 38, 1258–1261. (In Chinese).

- Li, F.; Li, H. Fire Safety Strategies for Typical Space of Large Transportation Hubs. In Fire Protection Engineering Applications for Large Transportation Systems in China; Springer International Publishing: Cham, Switzerland, 2020; pp. 99–131.

- Cui, B.; Wang, C.; Wu, M.; Zhu, C.; Wang, D.; Li, B. Integrating Bluetooth-enabled sensors with cloud computing for fire hazard communication systems. ASCE-ASME J. Risk Uncertain. Eng. Syst. Part A Civ. Eng. 2024, 10, 04024035.

- Gaur, A.; Singh, A.; Kumar, A.; Kulkarni, K.S.; Lala, S.; Kapoor, K. Fire sensing technologies: A review. IEEE Sens. J. 2019, 19, 3191–3202.

- Yao, Y.; Yuyu, T.; Peng, C. Study on calibration method of filter for linear beam smoke detector. In AOPC 2022: Optoelectronics and Nanophotonics. Proc. SPIE 2023, 12556, 114–119.

- Meacham, B.J. Factors affecting the early detection of fire in electronic equipment and cable installations. Fire Technol. 1993, 29, 34–59.

- Long, B. Research and Design of the Comprehensive Test System Based on Ultraviolet Flame Detector. Ph.D. Thesis, Xi’an Polytechnic University, Xi’an, China, 2013. (In Chinese). Available online: https://kns.cnki.net/kcms2/article/abstract?v=RPbSoBw3VsFL_R6t2Q-HsNTeiFZeS0bfmgnDWinJ2QMMAoClJqeMdblT6nOuqWMAarb67hcbsMcrjHR6HtcSBWT0o8ZxYiqK5mBL9akcmLgeEUNgu7xz0Ld1aZx8qCKBB9h8PnQAVAuZ0cjERTuOvHHFmrp_zS_q2lw_ZlS_vayc14CAFYMSsfVkmUXLSDEB&uniplatform=NZKPT&language=CHS (accessed on 20 June 2025).

- Tsai, C.F.; Young, M.S. Measurement system using ultraviolet and multiband infrared technology for identifying fire behavior. Rev. Sci. Instrum. 2006, 77, 014901.

- Bordbar, H.; Alinejad, F.; Conley, K.; Ala-Nissila, T.; Hostikka, S. Flame detection by heat from the infrared spectrum: Optimization and sensitivity analysis. Fire Saf. J. 2022, 133, 103673.

- Pauchard, A.R.; Manic, D.; Flanagan, A.; Besse, P.A.; Popovic, R.S. A method for spark rejection in ultraviolet flame detectors. IEEE Trans. Ind. Electron. 2000, 47, 168–174.

- Li, Q.; Yue, L. Research on the Design Improvement of Aspirating Smoke Fire Detector. Fire Sci. Technol. 2021, 40, 1644–1647. (In Chinese).

- Lee, Y.M.; Khieu, H.T.; Kim, D.W.; Kim, J.T.; Ryou, H.S. Predicting the Fire Source Location by Using the Pipe Hole Network in Aspirating Smoke Detection System. Appl. Sci. 2022, 12, 2801.

- Višak, T.; Baleta, J.; Virag, Z.; Vujanović, M.; Wang, J.; Qi, F. Multi-objective optimization of aspirating smoke detector sampling pipeline. Optim. Eng. 2021, 22, 121–140.

- Hou, X.L.; Jin, W.J. Numerical Simulation of Fires and Analysis of Detection Response in Large-scale Building Spaces. Technol. Wind. 2014, 164–165+167.

- Xu, F.; Zhang, X. Test on application of flame detector for large space environment. Procedia Eng. 2013, 52, 489–494.

- Khan, F.; Xu, Z.; Sun, J.; Khan, F.M.; Ahmed, A.; Zhao, Y. Recent advances in sensors for fire detection. Sensors 2022, 22, 3310.

- Yu, P.; Wei, W.; Li, J.; Du, Q.; Wang, F.; Zhang, L.; Li, H.; Yang, K.; Yang, X.; Zhang, N.; et al. Fire-PPYOLOE: An Efficient Forest Fire Detector for Real-Time Wild Forest Fire Monitoring. J. Sens. 2024, 2024, 2831905.

- Al Mojamed, M. Smart Mina: LoRaWAN Technology for Smart Fire Detection Application for Hajj Pilgrimage. Comput. Syst. Sci. Eng. 2022, 40, 259.

- Töreyin, B.U.; Dedeoğlu, Y.; Güdükbay, U.; Çetin, A.E. Computer vision based method for real-time fire and flame detection. Pattern Recognit. Lett. 2006, 27, 49–58.

- Chitram, S.; Kumar, S.; Thenmalar, S. Enhancing Fire and Smoke Detection Using Deep Learning Techniques. Eng. Proc. 2024, 62, 7.

- Kim, Y.H.; Kim, A.; Jeong, H.Y. RGB color model based the fire detection algorithm in video sequences on wireless sensor network. Int. J. Distrib. Sens. Netw. 2014, 10, 923609.

- Khalil, A.; Rahman, S.U.; Alam, F.; Ahmad, I.; Khalil, I. Fire detection using multi color space and background modeling. Fire Technol. 2021, 57, 1221–1239.

- Yeh, C.H.; Liu, Y.H. Development of Two-Color pyrometry for flame impingement on oxidized metal surfaces. Exp. Therm. Fluid Sci. 2024, 152, 111108.

- Hou, J.; Qian, J.; Zhang, W.; Zhao, Z.; Pan, P. Fire detection algorithms for video images of large space structures. Multimed. Tools Appl. 2011, 52, 45–63.

- Zhang, G.; Cui, R.; Qi, K.; Wang, B. Research on Large Space Fire Monitoring Based on Image Processing. J. Phys. Conf. Ser. 2021, 2074, 012003.

- Xie, X.; Cheng, G.; Wang, J.; Yao, X.; Han, J. Oriented R-CNN for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3520–3529.

- Lu, X.; Li, B.; Yue, Y.; Li, Q.; Yan, J. Grid R-CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7363–7372.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Zheng, H.; Duan, J.; Dong, Y.; Liu, Y. Real-time fire detection algorithms running on small embedded devices based on MobileNetV3 and YOLOv4. Fire Ecol. 2023, 19, 31.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Terven, J.; Córdova-Esparza, D.M.; Romero-González, J.A. A comprehensive review of YOLO architectures in computer vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716.

- Zhang, Y.; Jiao, Y.; Dou, Y.; Zhao, L.; Liu, Q.; Zuo, G. A Lightweight Dynamically Enhanced Network for Wildfire Smoke Detection in Transmission Line Channels. Processes 2025, 13, 349.

- Khanam, R.; Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

- Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-centric real-time object detectors. arXiv 2025, arXiv:2502.12524.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Cheng, X.; Yu, J. RetinaNet with difference channel attention and adaptively spatial feature fusion for steel surface defect detection. IEEE Trans. Instrum. Meas. 2020, 70, 1–11.

- Cai, J.; Zhang, L.; Dong, J.; Guo, J.; Wang, Y.; Liao, M. Automatic identification of active landslides over wide areas from time-series InSAR measurements using Faster RCNN. Int. J. Appl. Earth Obs. Geoinf. 2023, 124, 103516.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference (Proceedings, Part I 14), Amsterdam, The Netherlands, 11–14 October 2016; Springer International Publishing: Cham, Switzerland, 2016; pp. 21–37.

- Xu, G.; Zhang, Q.; Liu, D.; Lin, G.; Wang, J.; Zhang, Y. Adversarial adaptation from synthesis to reality in fast detector for smoke detection. IEEE Access 2019, 7, 29471–29483.

- Padilla, R.; Netto, S.L.; da Silva, E.A.B. A Survey on Performance Metrics for Object-Detection Algorithms. In Proceedings of the 2020 International Conference on Systems, Signals and Image Processing (IWSSIP), Niteroi, Brazil, 1–3 July 2020.

- Liu, Y.; Wu, D.; Liang, J.; Wang, H. Aeroengine blade surface defect detection system based on improved faster RCNN. Int. J. Intell. Syst. 2023, 2023, 1992415.

- Zhang, Y.; Chi, M. Mask-R-FCN: A deep fusion network for semantic segmentation. IEEE Access 2020, 8, 155753–155765.

- Zhao, H.; Gao, Y.; Deng, W. Defect detection using shuffle Net-CA-SSD lightweight network for turbine blades in IoT. IEEE Internet Things J. 2024, 11, 32804–32812.

- Li, P.; Zhao, W. Image fire detection algorithms based on convolutional neural networks. Case Stud. Therm. Eng. 2020, 19, 100625.

- Luan, T.; Zhou, S.; Liu, L.; Pan, W. Tiny-object detection based on optimized YOLO-CSQ for accurate drone detection in wildfire scenarios. Drones 2024, 8, 454.

- Lin, T. LabelImg, 2015. Available online: https://github.com/tzutalin/labelImg (accessed on 28 June 2025).

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12993–13000.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

- Zhang, H.; Zhang, S. Focaler-IoU: More focused intersection over union loss. arXiv 2024, arXiv:2401.10525.

- Lian, Q.; Luo, X.; Lin, D.; Lin, C.; Chen, B.; Guo, Z. ResNest-SVM-based method for identifying single-phase ground faults in active distribution networks. Front. Energy Res. 2024, 12, 1501737.

- Siddiqui, F.; Yang, J.; Xiao, S.; Fahad, M. Enhanced deepfake detection with DenseNet and Cross-ViT. Expert Syst. Appl. 2025, 267, 126150.

- Razavi, M.; Mavaddati, S.; Koohi, H. ResNet deep models and transfer learning technique for classification and quality detection of rice cultivars. Expert Syst. Appl. 2024, 247, 123276.

- Wang, S.; Wu, M.; Wei, X.; Song, X.; Wang, Q.; Jiang, Y.; Gao, J.; Meng, L.; Chen, Z.; Zhang, Q.; et al. An advanced multi-source data fusion method utilizing deep learning techniques for fire detection. Eng. Appl. Artif. Intell. 2025, 142, 109902.

- Elhassan, M.A.M.; Zhou, C.; Benabid, A.; Adam, A.B.M. P2AT: Pyramid pooling axial transformer for real-time semantic segmentation. Expert Syst. Appl. 2024, 255, 124610.

- Dewi, C.; Chen, R.-C.; Yu, H.; Jiang, X. Robust detection method for improving small traffic sign recognition based on spatial pyramid pooling. J. Ambient Intell. Humaniz. Comput. 2023, 14, 8135–8152.

- Li, Z.; He, Q.; Zhao, H.; Yang, W. DoubleM-Net: Multi-scale spatial pyramid pooling-fast and multi-path adaptive feature pyramid network for UAV detection. Int. J. Mach. Learn. Cybern. 2024, 15, 5781–5805.

- Sun, W.; Liu, Y.; Wang, F.; Hua, L.; Fu, J.; Hu, S. A Study on Flame Detection Method Combining Visible Light and Thermal Infrared Multimodal Images. Fire Technol. 2024, 61, 2167–2188.

- Sun, F.; Yang, Y.; Lin, C.; Liu, Z.; Chi, L. Forest fire compound feature monitoring technology based on infrared and visible binocular vision. J. Phys. Conf. Ser. 2021, 1792, 012022.

- Song, T.; Tang, B.; Zhao, M.; Deng, L. An accurate 3-D fire location method based on sub-pixel edge detection and non-parametric stereo matching. Measurement 2014, 50, 160–171.

- Li, G.; Lu, G.; Yan, Y. Fire detection using stereoscopic imaging and image processing techniques. In Proceedings of the 2014 IEEE International Conference on Imaging Systems and Techniques (IST), Santorini, Greece, 14–17 October 2014; pp. 28–32.

- Andrean, D.; Unik, M.; Rizki, Y. Hotspots and Smoke Detection from Forest and Land Fires Using the YOLO Algorithm (You Only Look Once). JIM-J. Int. Multidiscip. 2023, 1, 46–56.

- Gragnaniello, D.; Greco, A.; Sansone, C.; Vento, B. Fire and smoke detection from videos: A literature review under a novel taxonomy. Expert Syst. Appl. 2024, 255, 124783.

- Wang, M.; Yue, P.; Jiang, L.; Yu, D.; Tuo, T.; Li, J. An open flame and smoke detection dataset for deep learning in remote sensing based fire detection. Geo-Spat. Inf. Sci. 2025, 28, 511–526.

- El-Madafri, I.; Peña, M.; Olmedo-Torre, N. The wildfire dataset: Enhancing deep learning-based forest fire detection with a diverse evolving open-source dataset focused on data representativeness and a novel multi-task learning approach. Forests 2023, 14, 1697.

- Liu, Y.; Zheng, C.; Liu, X.; Tian, Y.; Zhang, J.; Cui, W. Forest Fire Monitoring Method Based on UAV Visual and Infrared Image Fusion. Remote Sens. 2023, 15, 3173.

- Pincott, J.; Tien, P.W.; Wei, S.; Calautit, J.K. Indoor fire detection utilizing computer vision-based strategies. J. Build. Eng. 2022, 61, 105154.

- Gaur, A.; Singh, A.; Kumar, A.; Kumar, A.; Kapoor, K. Video flame and smoke based fire detection algorithms: A literature review. Fire Technol. 2020, 56, 1943–1980.

- Yar, H.; Khan, Z.A.; Ullah, F.U.M.; Ullah, W.; Baik, S.W. A modified YOLOv5 architecture for efficient fire detection in smart cities. Expert Syst. Appl. 2023, 231, 120465.

- Casas, E.; Ramos, L.; Bendek, E.; Rivas-Echeverria, F. YOLOv5 vs. YOLOv8: Performance benchmarking in wildfire and smoke detection scenarios. J. Image Graph. 2024, 12, 127–136.

- Wang, C.; Xu, C.; Akram, A.; Wang, Z.; Shan, Z.; Zhang, Q. Wildfire Smoke Detection System: Model Architecture, Training Mechanism, and Dataset. Int. J. Intell. Syst. 2025, 2025, 1610145.

- Safarov, F.; Muksimova, S.; Kamoliddin, M.; Cho, Y.I. Fire and smoke detection in complex environments. Fire 2024, 7, 389.

- Li, R.; Hu, Y.; Li, L.; Guan, R.; Yang, R.; Zhan, J.; Cai, W.; Wang, Y.; Xu, H.; Li, L. SMWE-GFPNNet: A high-precision and robust method for forest fire smoke detection. Knowl.-Based Syst. 2024, 289, 111528.

- Pu, S.; Li, J.; Han, Z.; Zhu, X.; Xu, C. Flame region segmentation of a coal-fired power plant boiler burner through YOLOv8-MAH model. Fuel 2025, 398, 135518.

- Deng, L.; Wu, S.; Zhou, J.; Zou, S.; Liu, Q. LSKA-YOLOv8n-WIoU: An Enhanced YOLOv8n Method for Early Fire Detection in Airplane Hangars. Fire 2025, 8, 67.

- Xiong, C.; Zayed, T.; Abdelkader, E.M. A novel YOLOv8-GAM-Wise-IoU model for automated detection of bridge surface cracks. Constr. Build. Mater. 2024, 414, 135025.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

- Deng, L.; Wang, Z.; Liu, Q. AH-YOLO: An Improved YOLOv8-Based Lightweight Model for Fire Detection in Aircraft Hangars. Fire 2025, 8, 199.

| Detector Type | Working Principle | Advantages | Limitations | Typical Applications |
|---|---|---|---|---|
| 1. Point-type Smoke/Temperature Detectors | Smoke sensing: detects smoke particles via ionization or photoelectric effects. Heat sensing: triggers when the ambient temperature reaches a fixed value or rises faster than a set rate. | Mature, low-cost technology. Simple installation and maintenance. Widely available and versatile. Effective for both flaming and smoldering fires. | Limited coverage area per unit, so large spaces require many devices. Restricted installation height. Slow response, because smoke must diffuse to the sensor. Prone to false alarms from environmental factors (e.g., dust, humidity, airflow). Unsuitable for large, open, or high-airflow environments. | Enclosed spaces with conventional ceiling heights: offices, hotel rooms, residences, small retail shops. |
| 2. Line-type Beam Smoke Detectors | Detects smoke by measuring the obscuration (light attenuation) of a beam projected between an emitter and a receiver. | Large area coverage with a single system. Can be installed at greater heights. More suitable for large spaces than point-type detectors. | Complex alignment during installation; susceptible to misalignment from vibration. High maintenance requirement; optical lenses must be kept clean. Response delay persists because detection still relies on smoke diffusion. The line of sight can be obstructed. Ineffective for fires that produce little smoke (e.g., clean-burning alcohol). | Large warehouses, exhibition halls, atriums, sports arenas. |
| 3. Infrared and Ultraviolet Flame Detectors | Infrared detectors sense the characteristic infrared radiation patterns of flames. Ultraviolet detectors sense the ultraviolet radiation emitted by flames. | Extremely fast response; does not depend on smoke propagation. Long detection range. Highly effective for flaming fires. | High cost. Susceptible to false alarms from non-fire radiation sources (e.g., welding arcs, sunlight, halogen lamps, heaters). Ineffective for smoldering fires (requires visible flames). Requires a clear line of sight to the fire, which obstacles can block. | High-hazard areas with flammable liquids/gases: petrochemical plants, fuel storage facilities, munitions stores. |
| 4. Aspirating Smoke Fire Detectors | Actively draws air samples through a network of pipes to a central, highly sensitive laser detection chamber for analysis. | Very high sensitivity; can detect invisible combustion particles. Provides very early (incipient-stage) warning. Suitable for environments with complex airflows. Flexible pipe network design allows extensive coverage. | Limited coverage area per device. Highest cost of the four types, owing to system complexity. Demanding design, installation, and commissioning requirements. Requires regular maintenance (filter replacement, airflow checks). Sampling pipes can become clogged with dust or insects. | Mission-critical or sensitive sites requiring the earliest possible warning: data centers, heritage buildings, high-value archives. |
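The trigger logic summarized above for heat-sensing point detectors and for beam smoke detectors can be sketched in a few lines. This is a minimal illustration, not a product algorithm: the fixed-temperature rating (57 °C), the rate-of-rise setpoint (8.3 °C/min, roughly 15 °F/min), the 30% obscuration alarm level, and all function names are assumptions chosen only for the example.

```python
from typing import Sequence

# Assumed, illustrative setpoints; real values come from the detector's listing and local codes.
FIXED_TEMP_C = 57.0            # hypothetical fixed-temperature rating (about 135 degF)
RATE_OF_RISE_C_PER_MIN = 8.3   # hypothetical rate-of-rise setpoint (about 15 degF per minute)
OBSCURATION_ALARM_PCT = 30.0   # hypothetical beam obscuration alarm level


def heat_alarm(temps_c: Sequence[float], interval_s: float) -> bool:
    """Fixed-temperature OR rate-of-rise trigger for a point heat detector.
    temps_c holds successive readings taken interval_s seconds apart."""
    if not temps_c:
        return False
    if temps_c[-1] >= FIXED_TEMP_C:            # fixed-temperature element
        return True
    if len(temps_c) >= 2:                      # rate-of-rise element
        rise_per_min = (temps_c[-1] - temps_c[-2]) / (interval_s / 60.0)
        if rise_per_min >= RATE_OF_RISE_C_PER_MIN:
            return True
    return False


def beam_obscuration(received: float, clear_air: float) -> float:
    """Percent obscuration of the projected beam: 100 * (1 - I / I0)."""
    if clear_air <= 0:
        raise ValueError("clear-air reference intensity must be positive")
    return 100.0 * (1.0 - received / clear_air)


def beam_alarm(received: float, clear_air: float,
               threshold_pct: float = OBSCURATION_ALARM_PCT) -> bool:
    """Alarm when smoke between emitter and receiver attenuates the beam enough."""
    return beam_obscuration(received, clear_air) >= threshold_pct
```

For example, `heat_alarm([20.0, 30.0], 60)` returns `True` because the temperature rose 10 °C in one minute while still far below the fixed rating, which is why rate-of-rise elements react earlier to fast-growing fires. Both functions only see conditions at the sensor or along the beam, so neither can remove the transport delay noted in the table: smoke or heat must first reach the detector.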

| Two-Stage | One-Stage |
|---|---|
| R-CNN [30,31] | YOLO (v8–v12) [32,33,34,35,36,37,38] |
| Fast R-CNN [39] | RetinaNet [40] |
| Faster R-CNN [41,42] | SSD [45,46] |
| Mask R-CNN [43] | |
| SPPnet [44] | |
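Most detectors in both families typically finish with the same post-processing step: overlapping candidate boxes are compared using intersection over union (IoU) and pruned by non-maximum suppression (NMS). The sketch below is a generic, framework-free version of that step, assuming axis-aligned boxes in (x1, y1, x2, y2) pixel coordinates and a 0.5 overlap threshold; the function names are illustrative and it is not the implementation used by any specific detector cited here.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # zero if the boxes do not overlap
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_thresh: float = 0.5) -> List[int]:
    """Greedy non-maximum suppression; returns indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Discard remaining candidates that overlap the kept box too strongly.
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_thresh]
    return keep
```

With `boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]` and scores `[0.9, 0.8, 0.7]`, the second box overlaps the first with an IoU of about 0.68 and is suppressed, so `nms` keeps indices `[0, 2]`.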

| Aspect | Visible Light Cameras | Infrared Cameras |
|---|---|---|
| Detection Principle | Relies on visible-light imaging. Uses algorithms to identify flames by their color and shape, and smoke by its texture, together with motion characteristics. | Detects thermal radiation (infrared energy) emitted by objects. Identifies fire sources through temperature anomalies. |
| Light Dependency | Highly dependent on ambient lighting. Performance degrades significantly in darkness, strong backlight, and other complex lighting conditions. | Unaffected by visible-light conditions. Operates effectively in total darkness, glare, and other lighting scenarios, enabling reliable 24/7 monitoring. |
| Smoke Detection Capability | A core strength: effective at identifying the visual characteristics of smoke. However, performance is limited in dark environments (e.g., hangar corners), where the detection model's attention becomes dispersed. | Cannot directly “see” smoke. Can detect fire indirectly through the heat source or the temperature changes caused by smoke. |
| Flame Detection Capability | Effective at identifying visible flames. Prone to false alarms from objects with similar shapes and colors. | Detects the heat source itself rather than its visual appearance. Identifies hidden fire sources invisible to the naked eye and strongly resists visual false alarms. |
| Anti-Interference Capability | Can perform well in strong sunlight with optimized algorithms, but is susceptible to interference from steam, dust, and visual deception. | Immune to visual camouflage. Can effectively penetrate smoke, dust, steam, and other particulates, offering significant advantages in harsh, low-visibility environments. |
| Conclusion for Hangar Use | Although effective under specific conditions, its weak smoke detection in low light and its reliance on visible flames pose reliability risks in the complex, variable hangar environment. | Deemed the superior and more reliable solution for hangar applications, because its immunity to lighting conditions and its long-range, direct heat sensing suit the large, complex hangar environment. |
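The contrast drawn above between visible-light and infrared cameras can be made concrete with two minimal pixel rules. The visible-light rule is a classic color heuristic of the kind used in early color-based flame detection (R > R_T and R ≥ G > B); the red threshold of 180, the 30 °C excess over the median scene temperature, and the function names are assumptions for illustration, and neither function reproduces the method of any cited work.

```python
from statistics import median
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B) with 0-255 channels

R_THRESH = 180   # hypothetical red-channel threshold for the visible-light rule
DELTA_C = 30.0   # hypothetical excess over the scene median for the thermal rule


def visible_flame_mask(rgb: List[List[Pixel]], r_thresh: int = R_THRESH) -> List[List[bool]]:
    """Flag flame-colored pixels with the heuristic R > R_T and R >= G > B."""
    return [[r > r_thresh and r >= g > b for (r, g, b) in row] for row in rgb]


def thermal_hotspot_mask(temps_c: List[List[float]], delta_c: float = DELTA_C) -> List[List[bool]]:
    """Flag pixels hotter than the scene median by more than delta_c degrees C.
    temps_c is a radiometric frame (per-pixel temperature in degrees C)."""
    background = median([t for row in temps_c for t in row])  # robust background estimate
    return [[t > background + delta_c for t in row] for row in temps_c]
```

Given the 1 × 3 image `[[(255, 150, 50), (255, 255, 255), (100, 50, 20)]]`, only the orange pixel passes the visible-light rule, yet any similarly colored object (e.g., an orange safety vest) would pass as well, which is the false-alarm weakness listed for visible-light cameras. The thermal rule flags only the 300 °C pixel in `[[22.0, 23.0], [21.0, 300.0]]` regardless of color or lighting, but it cannot respond to smoke that has not yet changed the temperature field.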

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Deng, L.; Wu, S.; Zou, S.; Liu, Q. Large-Space Fire Detection Technology: A Review of Conventional Detector Limitations and Image-Based Target Detection Techniques. Fire 2025, 8, 358. https://doi.org/10.3390/fire8090358
