1. Introduction

Fire safety is a crucial aspect of modern infrastructure design across a wide range of projects, including buildings and tunnels [1]. In a fire, ensuring the safe evacuation of all occupants is vital for protecting lives and property. One of the cornerstones of the performance-based fire engineering approach is the accurate assessment of the required safe escape time (RSET) and the available safe escape time (ASET). To achieve an acceptable level of fire safety, the ASET must always exceed the RSET by a prudent safety margin that accounts for potential fire scenarios and uncertainties in the predictions. This principle underscores the importance of integrating fire safety measures early in the design process so that occupants can evacuate safely during emergencies. By prioritising fire safety in design through precise escape time analysis, designers and engineers can create safer, more resilient structures that protect lives and adapt to the challenges of modern infrastructure. Since protecting human life is the primary aim of fire safety engineering, capturing human behaviour during a fire and throughout the evacuation process is crucial. During evacuation, evacuees' behaviour and decision-making may be affected by the fire conditions [2,3]. Therefore, it is essential to consider pertinent human factors, including individual decisions and the parameters that characterise human behaviour [2,4,5].
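The ASET/RSET criterion can be written as a simple check. The sketch below is illustrative only: it assumes the common decomposition of RSET into detection, alarm, pre-movement and travel phases, which this paper does not itself specify, and every number in it is a hypothetical placeholder rather than a value from this study.

```python
from dataclasses import dataclass


@dataclass
class EvacuationTimeline:
    """Hypothetical RSET components in seconds (assumed decomposition)."""
    detection: float
    alarm: float
    pre_movement: float
    travel: float

    @property
    def rset(self) -> float:
        # RSET taken as the sum of the sequential evacuation phases.
        return self.detection + self.alarm + self.pre_movement + self.travel


def safety_margin(aset: float, timeline: EvacuationTimeline) -> float:
    """Return ASET - RSET in seconds; positive means ASET exceeds RSET."""
    return aset - timeline.rset


def is_acceptable(aset: float, timeline: EvacuationTimeline, required_margin: float) -> bool:
    """True if ASET exceeds RSET by at least the required margin (seconds)."""
    return safety_margin(aset, timeline) >= required_margin


if __name__ == "__main__":
    # Hypothetical placeholder values, not taken from this study.
    timeline = EvacuationTimeline(detection=60.0, alarm=30.0, pre_movement=120.0, travel=90.0)
    print(timeline.rset)
    print(is_acceptable(aset=420.0, timeline=timeline, required_margin=60.0))
```

The margin is kept as an explicit parameter because its size is a design decision that depends on the scenario and on the uncertainty in both time estimates.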

Various factors, including the environment, social dynamics, and individual characteristics and traits, influence human behaviour [1,5,6]. Social factors involve interactions among occupants, such as their roles and responsibilities within the setting, whether as workers or family members [7,8,9]. Individual characteristics include knowledge, experience, familiarity with the environment, and physical condition during incidents [3,8,10,11]. Additionally, individual traits such as stress resistance, observational skills, judgment, and mobility play a significant role in decision-making during emergencies [12]. Previous studies have explored these factors through questionnaires, simulations, and field experiments, providing considerable insight into how they influence behaviour during fire evacuations.

Environmental factors include aspects such as layout, visual access, and signage for identification or direction [13,14], as well as fire conditions such as the presence of smoke and flames. Fire generates heat and toxic substances, while smoke reduces visibility, posing significant risks to evacuees. These conditions can compromise evacuees' responses, for example by slowing their movement or leading them to choose longer escape routes.

In recent decades, field experiments have been conducted to investigate the behaviour of evacuees under the influence of environmental factors. To replicate low-visibility conditions during fires, early fire evacuation experiments often used artificial smoke [13,15,16,17,18]. In these experiments, participants were typically required to simulate an evacuation by physically following designated routes. While artificial smoke can mimic low visibility, as demonstrated in full-scale fire experiments, it lacks the natural buoyancy of the hot smoke produced in real fires. Hot smoke is subject to buoyant forces and forms stratified layers above cooler air. Without this buoyant behaviour, artificial smoke cannot accurately emulate the stratification and visibility dynamics that are critical for understanding evacuee behaviour in real fire scenarios [19]. Additionally, in multiroom fire scenarios, artificial smoke fails to capture the movement and descent of the hot upper layer, further reducing the realism of the emulated fire conditions [20]. These limitations indicate that artificial smoke alone is insufficient to reproduce the complex thermal dynamics involved in fire evacuations.

Traditional fire training methods, including fire drills and classroom-based simulations, also present notable challenges. Although fire drills provide practical experience, they are resource-intensive and difficult to organise frequently [16]. Furthermore, such drills do not always account for dynamic fire conditions or the influence of smoke and heat on evacuation behaviour [17]. Computational simulations and video-based fire training help visualise fire dynamics but lack participant interaction and immersive decision-making experiences [21].

Recently, virtual reality (VR) and augmented reality (AR) have emerged as low-cost, viable, and immersive alternatives for studying human behaviour during fire emergencies [22]. VR reconstructs fire scenarios using computer-generated visuals in a fully immersive environment, whereas AR blends computer-generated content with the actual in situ surroundings. Both AR and VR have been applied in various fire-related studies, including evacuation from buildings [21,23,24,25,26], occupant behaviour during fire incidents [27,28,29,30], and fire training methodologies [31,32,33,34].

Compared with conventional field studies, VR and AR methodologies allow hypothetical fire conditions to be constructed flexibly, ensuring that fire scenarios are presented consistently to all participants. More importantly, VR and AR provide a safe and immersive environment in which participants can engage with hazardous scenarios, such as extreme fires, without exposure to physical danger. As a result, studies using VR and AR are considered ethically preferable to field studies, as they replicate hazardous scenarios without risk to participants, which simplifies recruitment. Additionally, VR and AR studies are generally more cost-effective than field studies, as a VR system setup can be reused across many studies. These technologies also enable real-time feedback and precise, easily monitored measurements, such as body movement tracking.

Nevertheless, there are instances where the VR experience may lack full immersion. Despite the interactivity of virtual environments, participants often rely on devices to navigate and interact within the virtual space or require a physical space of sufficient size to match the virtual environment. This requirement for tangible interaction can serve as a constant reminder to participants that they are engaged in an artificial scenario, potentially leading to biased behaviour. Furthermore, the VR approach is often labour-intensive, as creating highly detailed virtual environments that accurately mirror real-world settings requires substantial resources, including significant manpower.

AR offers a unique opportunity to explore human behaviour during evacuations, building upon the advantages of VR [35]. The key strength of AR lies in its integration with the real environment: users can interact with real objects alongside virtual elements within the augmented environment, enhancing their perception and understanding of the real world [36]. Because participants engage with their actual surroundings in real time, AR provides a heightened sense of immersion. By leveraging the realism of the actual environment, participant reactions and behaviours can be measured with greater precision [37]. As mobile devices such as smartphones and tablets become increasingly compact, affordable, and powerful, AR applications are becoming more practical for field use [38].

Despite these advantages, AR applications in fire safety training remain underexplored. Recent studies have demonstrated the potential of AR in enhancing emergency response training, particularly by simulating fire hazards and evacuation scenarios in real-world settings [22,23]. AR has also been used to improve situational awareness and decision-making during emergency evacuations, leveraging real-time hazard overlays and interactive user engagement [24,25]. Furthermore, research on AR-based human behaviour modelling has indicated its effectiveness in analysing evacuee responses under different levels of fire risk perception and environmental stressors [30,31]. These advancements highlight AR's growing role in emergency research and its potential to bridge the gap between traditional fire studies and computational fire modelling. However, prior AR-based fire training studies have often focused on visualisation rather than detailed fire dynamics, limiting their applicability to performance-based fire safety training.

This study addresses this gap by developing a systematic, end-to-end workflow that combines computational fire simulations with AR-based visualisations, from geometry extraction and fire simulation to data processing and visualisation in AR, creating an immersive yet realistic fire scenario for studying human behaviour. By integrating computational fluid dynamics (CFD)-based fire modelling into AR, this research enhances the accuracy and effectiveness of AR-based fire scenario visualisation. For the first time, this end-to-end approach enables the accurate reconstruction of real-world fire scenarios, with realistic and immersive representations of fire dynamics and smoke behaviour. The initial phase involves establishing a methodology to integrate fire dynamics simulations into AR, accommodating both outdoor open environments and confined indoor spaces. The subsequent sections describe the development of the AR application, evaluate its outcomes, and discuss its potential applications in understanding human behaviour during evacuations. The findings demonstrate the feasibility and utility of the proposed AR platform as a transformative tool for fire safety training and evacuation planning. By offering real-time, immersive, and scientifically accurate visualisations, the platform paves the way for safer, more informed design and emergency response strategies.

2. Methodology

The primary purpose of this paper is to develop a systematic workflow to fuse high-fidelity transient fire predictions (e.g., flame shape, temperature, and smoke concentration) into an immersive AR environment. The workflow consists of four main steps: Geometry dimension extraction, fire dynamics simulation, data processing, and AR visualisation. Detailed descriptions of each step are provided in the following sections.

2.1. Geometry Dimension Extraction

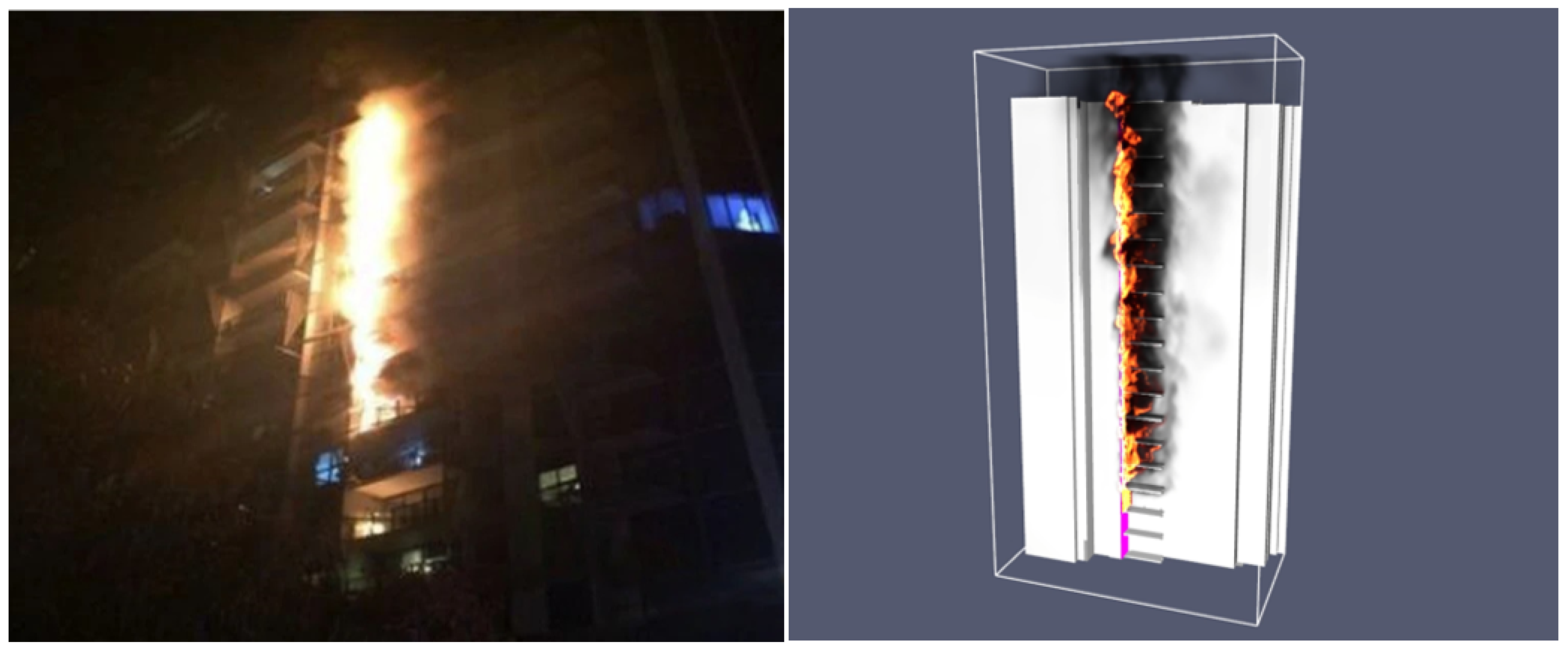

To ensure accurate predictions from fire dynamics simulations, it is essential to extract the geometric arrangement and precise dimensions of the target building or room for constructing the computational model. The geometry can be imported from a CAD/BIM model or created using the geometry creation interface bundled with the simulation package. To develop a generic workflow applicable to a wide range of fire scenarios, two distinct cases were selected for this study, demonstrating the feasibility of fusing fire model predictions with an immersive AR experience. In Case 1, a large-scale external cladding fire was reconstructed based on the well-documented Lacrosse Building fire in Melbourne. The Lacrosse fire, which occurred on November 25, 2014, in Melbourne's Docklands, was caused by an unextinguished cigarette on an eighth-floor balcony and spread rapidly because of combustible aluminium composite panels (ACP) [39]. According to official fire investigation reports, flames spread rapidly to the upper floors of the 21-storey building, leading to an urgent evacuation [40]. This study applies AR to represent the fire dynamics of this event, integrating fire modelling data with immersive visualisation techniques to improve understanding of evacuation decision-making and environmental conditions during fire emergencies. In Case 2, a designed indoor fire scenario was developed to visualise flame structures and smoke propagation in a near-field indoor environment. The designed fire is located in a common student area on Level 4 of RMIT Building 8, which connects to both Building 10 and Building 12.

In Case 1, this study uses publicly available three-dimensional (3D) data from Google Earth [41] to capture the complexity of large-scale structures and geometries (see Figure 1). Google Earth's primary data sources include satellite and aerial imagery collected by commercial satellite companies, government agencies, and other organisations. A key challenge in the workflow is converting 3D data from the public domain into file formats compatible with CAD design packages such as CATIA [42], Blender [43], or SolidWorks [44]. To address this, the open-source, MIT-licensed graphics debugger RenderDoc [45] was used in this study to extract 3D data from Google Earth. RenderDoc captures a selected frame from a graphics application together with the 3D data used to render it. The captured frame, along with the embedded 3D data, is then converted and imported into Blender as a generic 3D model (e.g., STL format). A scaling process is performed in Blender to ensure that the model's size matches the real-life geometry. Depending on computational requirements, the 3D model is subsequently imported into a CAD package (e.g., CATIA V5) for further editing, including cleaning up redundant surfaces and simplifying geometrical features for use in fire simulation models. Finally, the simplified 3D model is imported into PyroSim [46] for meshing and for setting the boundary conditions of the fire simulations. In Case 2, the computational model is constructed directly within PyroSim, following a conventional fire simulation workflow, based on actual dimensions obtained from the provided digital drawings and in situ measurements.
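The scaling step amounts to multiplying every vertex by a single factor derived from a known real-world dimension. A minimal stdlib sketch, assuming one reference dimension is known (here a hypothetical overall building height) and that the model's vertical axis is z; in this study the scaling itself was carried out in Blender, and the function names below are illustrative only.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]


def extent(vertices: List[Vertex], axis: int) -> float:
    """Return the model's extent (max - min) along one axis (0=x, 1=y, 2=z)."""
    values = [v[axis] for v in vertices]
    return max(values) - min(values)


def scale_to_reference(vertices: List[Vertex], reference_length_m: float, axis: int = 2) -> List[Vertex]:
    """Uniformly scale vertices so their extent along `axis` equals the reference length.

    Uniform scaling preserves the model's proportions. The pivot is the minimum
    corner of the bounding box, so the model keeps its base position.
    """
    model_length = extent(vertices, axis)
    if model_length <= 0:
        raise ValueError("model has zero extent along the chosen axis")
    factor = reference_length_m / model_length
    ox, oy, oz = (min(v[i] for v in vertices) for i in range(3))
    return [
        (ox + (x - ox) * factor, oy + (y - oy) * factor, oz + (z - oz) * factor)
        for x, y, z in vertices
    ]
```

For example, a captured model that is 4 units tall, matched to a hypothetical 80 m building height, is scaled by a factor of 20 in all three directions.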

Achieving accurate results in the computational simulations also requires appropriate configuration of settings such as meshing and boundary conditions. Data processing then converts all simulation results into a format usable for AR visualisation. Finally, an Android application package (APK) is built and deployed for use in the actual environment.

2.2. Scientific Fire Dynamics Reconstruction

Reconstructing scientific fire scenario visualisations in an immersive AR environment requires high-fidelity fire dynamics simulations as a critical step in the workflow. This study uses the Fire Dynamics Simulator (FDS) (Version 6.7.9) for fire dynamics predictions, as it is a widely available open-source package for fire simulations. FDS is a computational fluid dynamics (CFD) code developed by the National Institute of Standards and Technology (NIST) to model fire and smoke spread in buildings and other structures. It employs a finite difference method to numerically solve a low-Mach-number form of the Navier–Stokes equations for fluid flow, coupled with equations for heat transfer and combustion. Notably, the proposed workflow is also compatible with other CFD or multiphysics simulation packages, such as ANSYS FLUENT, STAR-CCM+, and COMSOL, offering flexibility for different applications.

2.3. Computational Mesh Construction and Numerical Details

This section summarises the numerical details of the fire simulations for both scenarios (i.e., Case 1 and Case 2). The simulations were conducted using the Very Large Eddy Simulation (VLES) mode in FDS. Subgrid-scale turbulence was modelled using the Deardorff SGS model, and near-wall turbulence effects were handled using the Wall-Adapting Local Eddy-Viscosity (WALE) model. Given the wide adoption of FDS, this paper does not detail the mathematical models, turbulence and combustion closures, or radiation treatment; interested readers are referred to recent fire modelling articles and the references therein [47]. Multiple mesh zones were created for both cases to provide sufficient resolution at the fire source while keeping computational costs manageable (see Figure 2). The cell size of each zone was chosen according to the expected local flow behaviour and its importance to the fire dynamics. This multi-zone approach also enables parallel computing on multi-core systems, reducing simulation times while maintaining consistent and accurate results. In Case 1, fine cells of 0.0625 m were used near the fire source to capture fire spread along the aluminium composite cladding. Away from the fire, the mesh spacing gradually coarsened, reaching 1 m at the far-field boundaries. Similarly, in Case 2, fine cells of 0.05 m × 0.05 m × 0.04 m were used near the fire source, while coarser cells of up to 0.4 m × 0.4 m × 0.3 m were applied in the far-field regions. Following the NIST guidance for FDS, an approximate fine-mesh cell size can be estimated from the heat release rate through the characteristic fire diameter:

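$$D^* = \left( \frac{\dot{Q}}{\rho_\infty c_p T_\infty \sqrt{g}} \right)^{2/5}$$

where $D^*$ is the characteristic fire diameter, $\dot{Q}$ is the total heat release rate of the fire, $\rho_\infty$ is the density of ambient air, $c_p$ is the specific heat of ambient air, $T_\infty$ is the temperature of ambient air, and $g$ is the gravitational acceleration. Case 1, involving aluminium composite cladding with a heat release rate of 1.5 MW, resulted in $D^*$ = 1.14 m. Case 2, with a heat release rate of 1 MW, resulted in $D^*$ = 0.93 m. For a fine mesh, $D^*/\delta x = 16$, where $\delta x$ is the nominal cell size, yielding $\delta x$ = 0.07 m for Case 1 and $\delta x$ = 0.06 m for Case 2. The near-fire cells actually used (0.0625 m in Case 1 and 0.05 m × 0.05 m × 0.04 m in Case 2) are finer than these estimates, ensuring an accurate representation of the fire dynamics in the simulations.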
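The mesh-resolution estimate $D^* = (\dot{Q} / (\rho_\infty c_p T_\infty \sqrt{g}))^{2/5}$ with $D^*/\delta x = 16$ can be reproduced in a few lines. This sketch assumes textbook ambient properties (ρ∞ = 1.204 kg/m³, c_p = 1.005 kJ/(kg·K), T∞ = 293.15 K, g = 9.81 m/s²), which the paper does not state. With them it gives D* ≈ 1.13 m and 0.96 m, so the exact D* values depend on the properties assumed, while the rounded cell sizes of 0.07 m and 0.06 m are reproduced. The helper `mesh_line` is hypothetical: it formats an FDS `&MESH` record for one zone from its bounds (`XB`) and a target cell size, choosing the cell counts (`IJK`) so that no cell exceeds the target.

```python
import math

# Assumed ambient properties (not given in the paper).
RHO_INF = 1.204   # kg/m^3, ambient air density
CP = 1.005        # kJ/(kg K), specific heat of air (kJ so Q can be in kW)
T_INF = 293.15    # K, ambient temperature
G = 9.81          # m/s^2, gravitational acceleration


def characteristic_fire_diameter(q_kw: float) -> float:
    """D* = (Q / (rho_inf * c_p * T_inf * sqrt(g)))**(2/5), with Q in kW and D* in m."""
    return (q_kw / (RHO_INF * CP * T_INF * math.sqrt(G))) ** 0.4


def fine_cell_size(q_kw: float, ratio: float = 16.0) -> float:
    """Cell size delta_x such that D*/delta_x equals `ratio` (16 for a fine mesh)."""
    return characteristic_fire_diameter(q_kw) / ratio


def mesh_line(xb, target_dx: float) -> str:
    """Hypothetical helper: format an FDS &MESH record whose cells do not exceed target_dx."""
    x0, x1, y0, y1, z0, z1 = xb
    # ceil() guarantees cell size <= target; round() absorbs floating-point noise.
    ijk = [math.ceil(round((hi - lo) / target_dx, 9)) for lo, hi in ((x0, x1), (y0, y1), (z0, z1))]
    bounds = ",".join(f"{v:g}" for v in xb)
    return f"&MESH IJK={ijk[0]},{ijk[1]},{ijk[2]}, XB={bounds} /"


if __name__ == "__main__":
    for label, q in (("Case 1", 1500.0), ("Case 2", 1000.0)):
        print(label, round(characteristic_fire_diameter(q), 2), round(fine_cell_size(q), 2))
    # Hypothetical 4 m x 2 m x 3 m near-fire zone at the Case 1 cell size.
    print(mesh_line((0.0, 4.0, 0.0, 2.0, 0.0, 3.0), 0.0625))
```

Writing each zone as its own `&MESH` record is what allows FDS to distribute the zones across processes, which is the parallelisation benefit mentioned for the multi-zone approach.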

For Case 1, the fire scenario was modelled using visual references from news footage, post-incident reports, and previous studies to approximate the fire's behaviour [39,40,48]. Combustion of the polyethylene core of the aluminium composite panels (ACP) was assumed, based on the known fire spread in the Lacrosse Building incident. Fire growth was estimated from the flame spread and duration observed in the available footage. The material properties and combustion reaction were modelled using a one-step decomposition model for low-density polyethylene (LDPE), which aligns with the known fire behaviour of ACP cladding [49]. Case 2 was a designed fire scenario used to study evacuation behaviour in a controlled setting. The fire involved a sofa whose burning characteristics were based on typical furniture materials, and the combustion model was informed by established polyurethane fire properties [50]. For both cases, a static pressure of 1 atm was specified at all far-field boundaries. Transient fire prediction results were output in Plot3D file format, allowing field information (e.g., temperature and smoke concentration) to be extracted for data processing.
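A one-step decomposition model is commonly written as a single first-order Arrhenius reaction, dY/dt = −A·exp(−E/(R·T))·Y, where Y is the remaining mass fraction. The sketch below integrates that equation under a constant heating rate, as in a thermogravimetric test, to show how such a model behaves. The pre-exponential factor A and activation energy E used in the example are hypothetical placeholders, not the LDPE or polyurethane parameters used in this study, and the FDS pyrolysis model includes further terms (e.g., reaction-order exponents) that are omitted here.

```python
import math

R = 8.314  # J/(mol K), universal gas constant


def residual_mass(
    a_per_s: float,
    e_j_per_mol: float,
    heating_rate_k_per_s: float,
    t_start_k: float = 300.0,
    t_end_k: float = 900.0,
    dt_s: float = 1.0,
):
    """Integrate dY/dt = -A exp(-E/(R T)) Y under linear heating.

    Returns a list of (temperature, remaining mass fraction) pairs. Each step
    applies the exact solution of the linear ODE with the rate held constant
    over dt, Y <- Y * exp(-k dt), which stays stable even when k*dt is large.
    """
    y, temp = 1.0, t_start_k
    history = [(temp, y)]
    while temp < t_end_k:
        k = a_per_s * math.exp(-e_j_per_mol / (R * temp))  # Arrhenius rate constant
        y *= math.exp(-k * dt_s)
        temp += heating_rate_k_per_s * dt_s
        history.append((temp, y))
    return history


if __name__ == "__main__":
    # Hypothetical parameters: A = 3e12 1/s, E = 200 kJ/mol, heating at 10 K/min.
    for temp, y in residual_mass(3.0e12, 2.0e5, 10.0 / 60.0)[::600]:
        print(f"{temp:6.1f} K  Y = {y:.3f}")
```

With these placeholder values almost no mass is lost at low temperature and most of it is lost over a narrow temperature window, which is the characteristic behaviour a one-step model is meant to capture.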

2.4. Data Processing

As with many other simulation packages, FDS stores its results in solver-specific file formats that cannot be read directly by other platforms. Transferring results from FDS to an immersive AR visualisation platform therefore requires several steps to convert the original files into a compatible format.

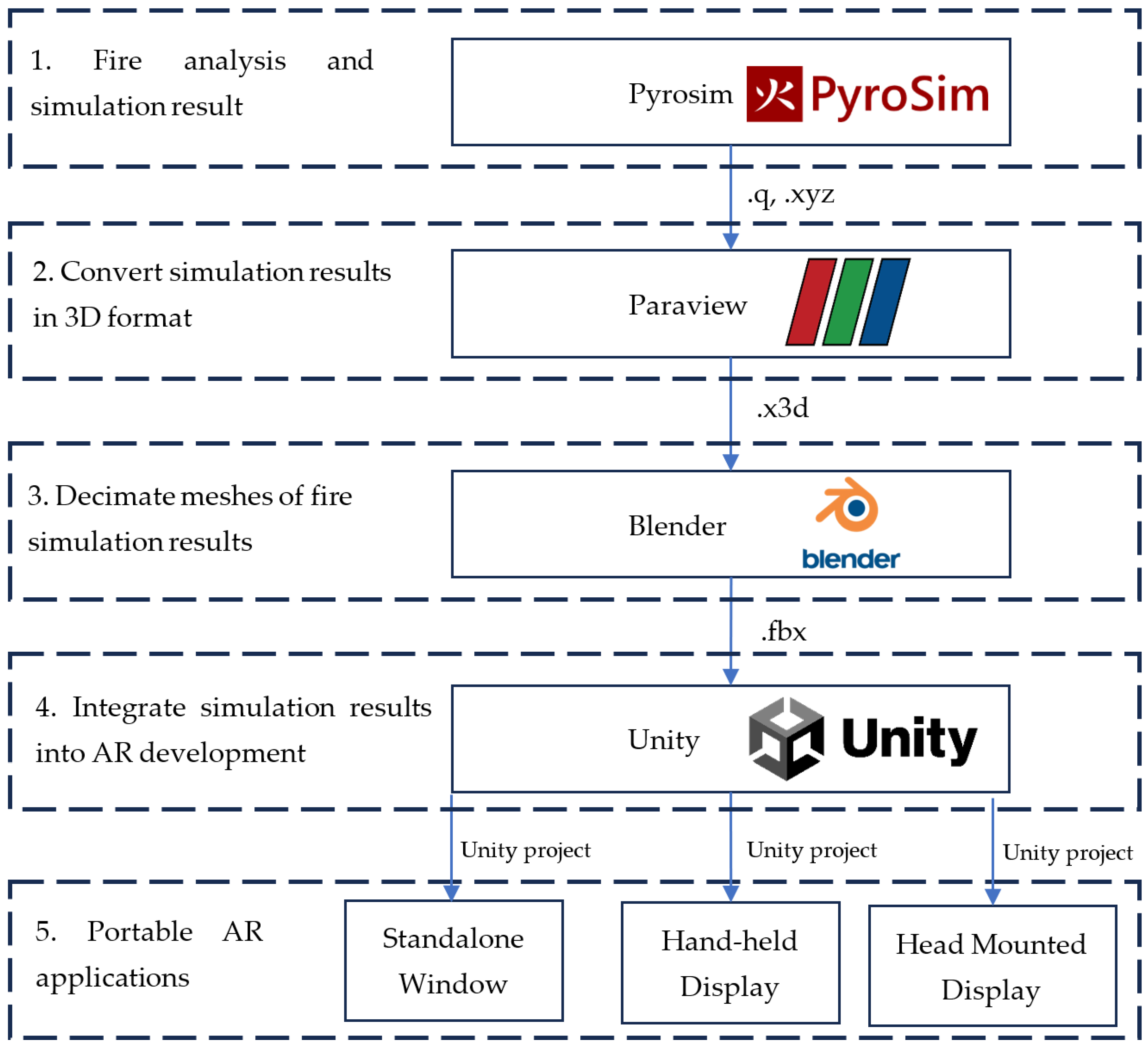

Figure 3 illustrates the workflow for transferring simulated results into portable AR applications. The conversion process involves multiple file formats at different stages: Plot3D .q files store the field data output by FDS (with the simulation set up in PyroSim), .xyz files hold the grid-point coordinates on which these data are defined, .x3d is a 3D graphics format for visualisation and rendering, and .fbx preserves mesh structure and material mapping for Unity integration. Together, these formats enable the fire dynamics results to be transferred to and visualised in AR. As shown in Figure 3, the workflow involves multiple steps and uses several software packages. First, after the fire simulations are completed in FDS, the results are imported into ParaView (Version 5.10.1) [51] for post-processing. In ParaView, field data from the simulation (e.g., temperature and smoke concentration) are used to generate three-dimensional iso-surface contours representing flame shapes or smoke levels at various temperatures. These 3D contours are then imported into Blender, where irrelevant geometrical features are decimated or simplified to reduce complexity and ensure compatibility. Finally, the processed 3D models are exported from Blender and imported into Unity3D (Version 2020.3.16f1) [52] for final setup and integration within the Unity environment before deployment to portable devices. Notably, this workflow is also compatible with other fire modelling packages (e.g., OpenFOAM, ANSYS) that can output simulation results in formats readable by ParaView (e.g., CGNS).
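ParaView's Contour filter produces triangulated iso-surfaces from the field data. The stdlib sketch below shows only the core idea, not ParaView's implementation: on a uniform grid, wherever the field crosses the iso-value between two neighbouring nodes, a surface vertex is placed by linear interpolation, as in marching-cubes-style contouring. Triangulating these vertices into a surface is omitted, and the grid, spacing and iso-values in the example are hypothetical.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
Index = Tuple[int, int, int]


def iso_crossings(field: Dict[Index, float], spacing: float, iso: float) -> List[Point]:
    """Return points where a scalar field crosses `iso` along grid edges.

    `field` maps integer grid indices (i, j, k) to values on a uniform grid
    with the given spacing (m). For each pair of neighbouring nodes whose
    values straddle `iso`, the crossing is placed by linear interpolation.
    Nodes lying exactly on `iso` are skipped in this simplified sketch.
    """
    points: List[Point] = []
    for (i, j, k), f0 in field.items():
        for di, dj, dk in ((1, 0, 0), (0, 1, 0), (0, 0, 1)):
            nb = (i + di, j + dj, k + dk)
            if nb not in field:
                continue
            f1 = field[nb]
            if (f0 - iso) * (f1 - iso) < 0:
                s = (iso - f0) / (f1 - f0)  # fraction of the edge measured from node (i, j, k)
                points.append(((i + s * di) * spacing, (j + s * dj) * spacing, (k + s * dk) * spacing))
    return points


if __name__ == "__main__":
    # Hypothetical temperatures (deg C) along one row of nodes, 0.1 m apart.
    row = {(0, 0, 0): 20.0, (1, 0, 0): 300.0, (2, 0, 0): 700.0}
    print(iso_crossings(row, 0.1, 500.0))
```

Evaluating several iso-values on the same field gives the nested flame and smoke surfaces that are then exported, one set per output time, for processing in Blender.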

2.5. Immersive Augmented Reality (AR) Platform

The visualisation of simulation results in a real-world environment is achieved using AR technology. To represent the transient fire simulation results in AR, Unity3D was used to set up the augmented reality environment. The Vuforia Engine SDK was integrated into Unity3D to enable target tracking, allowing virtual visualisations to be anchored onto real-life objects or environments.

2.5.1. Target Trackers for Recognition in Vuforia Engine

The Vuforia Engine SDK is a widely used platform for developing portable augmented reality applications, allowing virtual content to be integrated seamlessly into real-world environments through image recognition and object tracking. Vuforia enables Unity3D applications to recognise objects and spaces, facilitating the overlay of augmented content in real time. In our AR fire simulation application, Vuforia plays a central role in enabling real-time interaction between users and the AR environment. The accuracy of AR object placement is crucial for a realistic fire scenario: a well-aligned AR fire model strengthens the user's perception of fire severity and informs evacuation decisions by reinforcing the spatial realism of flames and smoke relative to escape routes.

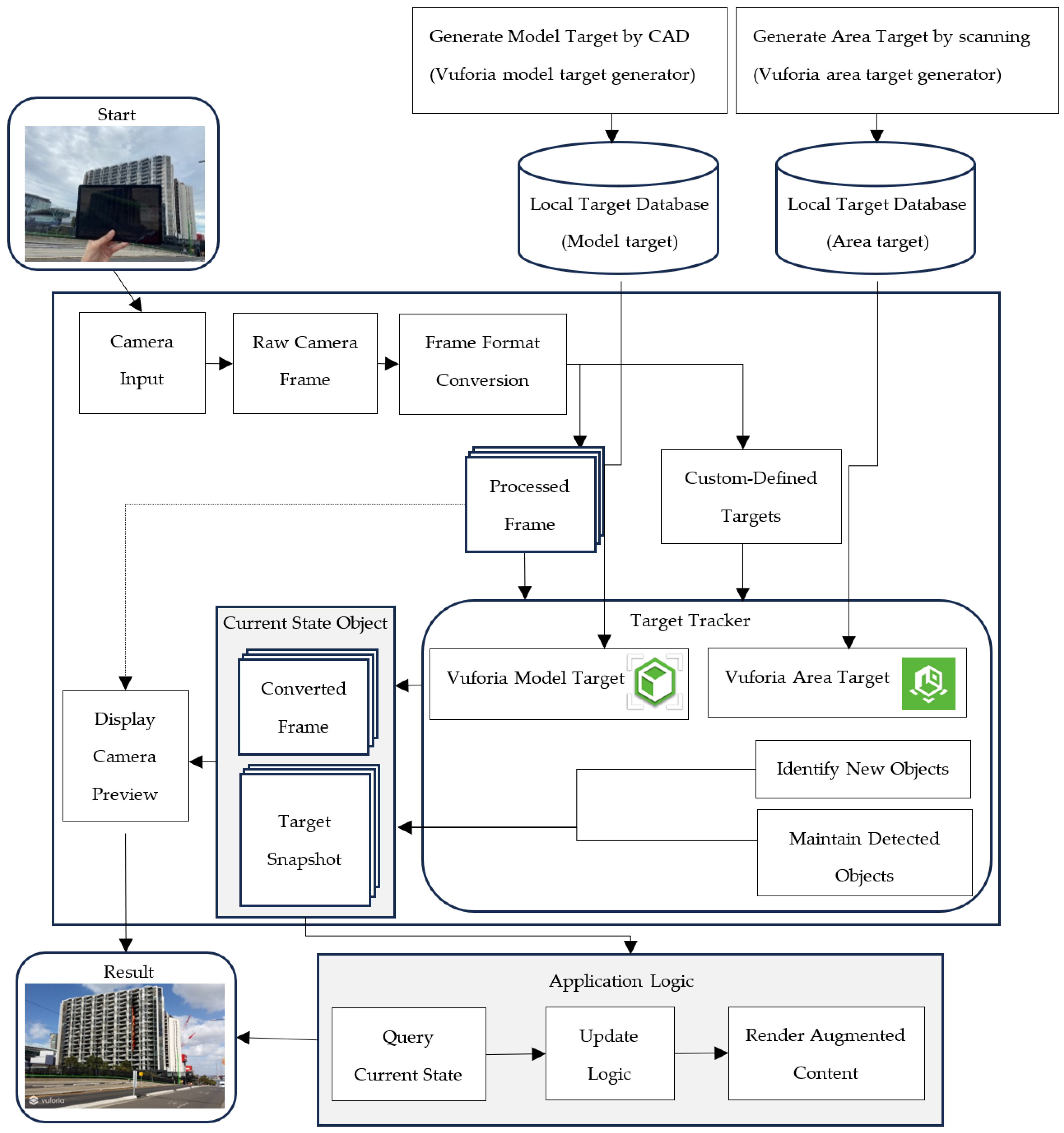

As illustrated in Figure 4, the process begins with the camera capturing input from the real-world scene, which is converted into raw frames. These frames are processed and passed to the target tracker, where Vuforia identifies and tracks custom-defined targets, such as building models or fire locations. The system uses local databases to match the scene with predefined targets. Once a model or area target is detected, Vuforia continues to track it and updates the current state. The application logic then queries the current state and renders the augmented content, such as the fire dynamics, on the display. This real-time interaction gives participants an immersive experience and supports realistic decision-making during fire evacuation scenarios by integrating scientifically accurate fire models into real-world environments.
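The loop in Figure 4 reduces to: acquire a frame, let the tracker update the target state, and draw the fire overlay only when a reliable pose is available. The Python sketch below mirrors that application logic under stated assumptions: `Status`, `TargetState` and the hide-unless-tracked policy are hypothetical and are not the Vuforia API, which in this study is accessed from Unity3D.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


class Status(Enum):
    # Hypothetical tracking states for illustration; not the Vuforia API.
    TRACKED = "tracked"
    LIMITED = "limited"
    NO_POSE = "no_pose"


@dataclass
class TargetState:
    status: Status
    position: Optional[Vec3]  # target origin in world coordinates, if known


def overlay_position(state: TargetState, fire_offset: Vec3) -> Optional[Vec3]:
    """Return where to draw the fire model, or None to hide it.

    The fire geometry is authored relative to the target's origin (e.g. the
    building model), so its world position is the tracked target position plus
    a fixed offset. Rotation is ignored in this simplified sketch.
    """
    if state.status is not Status.TRACKED or state.position is None:
        return None
    return tuple(p + o for p, o in zip(state.position, fire_offset))


def run_frames(states: List[TargetState], fire_offset: Vec3) -> List[Optional[Vec3]]:
    """Application-logic loop: query the current state once per camera frame."""
    return [overlay_position(s, fire_offset) for s in states]
```

Hiding the overlay whenever tracking is not fully established is one way to avoid drawing flames at a misaligned position, which matters because placement accuracy shapes how users judge fire severity.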

Vuforia offers several types of target trackers, each tailored to specific AR applications and environments. For the large-scale fire scenario in Case 1, the Vuforia model target feature was used as the recognition method. This feature is particularly suited to tracking architectural landmarks, as the target object must be geometrically rigid with stable surface features. The model target for Unity3D was generated using the Vuforia Model Target Generator: a CAD model created in CATIA V5 was imported into the generator to produce the model target, which was subsequently integrated into the Unity3D setup for AR tracking (see Figure 5a).

In contrast, the Vuforia area target feature was used as the recognition method in Case 2. This method is particularly effective for tracking indoor environments, such as office areas and public spaces, using a 3D scan to create an accurate model of the area. Area targets require spaces with distinctive, stable objects that do not change frequently over time. Vuforia supports several scanning methods for creating area targets, including ARKit-enabled devices with built-in LiDAR sensors, Matterport™ Pro2 3D cameras, NavVis M6 and VLX scanners, and Leica BLK360 and RTC360 scanners. In this case, the Vuforia Area Target Creator app, installed on an Apple iPad Pro with a built-in LiDAR scanner, was used to scan the indoor environment and generate the area target database (see Figure 5b). This app produces dataset files, meshes, and Unity packages, which are subsequently used in Unity3D for AR tracking.

2.5.2. Unity3D AR Environment Setup

To achieve real-time fire dynamics visualisation, all results generated by FDS are imported into Unity3D after data processing. Recreating the simulation results in Unity3D requires careful management of the spatial location and time sequence of the data. The results are imported into Unity3D as a series of individual 3D objects corresponding to different time instants, with each object positioned at its correct coordinates. A custom script was developed in Unity3D to control the sequential appearance of these 3D objects, forming an animation that represents the transient fire dynamics in real time.
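The custom Unity3D script is not reproduced in this paper (and would be written in C#). The Python sketch below only illustrates the sequencing logic assumed here: each imported 3D object carries the simulation time it represents, and at any playback time only the latest object whose time stamp has been reached is visible. The function names and the `speed` parameter are hypothetical.

```python
import bisect
from typing import List


def active_frame(frame_times: List[float], elapsed_s: float, speed: float = 1.0) -> int:
    """Index of the 3D object to show after `elapsed_s` seconds of playback.

    `frame_times` are the sorted simulation times (s) of the exported objects.
    `speed` = 1.0 plays back in real time; larger values play faster. Before
    the first time stamp the first object is shown, and after the last one
    the final object stays visible.
    """
    if not frame_times:
        raise ValueError("no frames to display")
    sim_time = elapsed_s * speed
    # bisect_right gives the position of the first stamp greater than sim_time,
    # so the object just before it is the latest one already reached.
    idx = bisect.bisect_right(frame_times, sim_time) - 1
    return max(idx, 0)


def visibility(frame_times: List[float], elapsed_s: float, speed: float = 1.0) -> List[bool]:
    """One on/off flag per object, mimicking enabling only the current object."""
    current = active_frame(frame_times, elapsed_s, speed)
    return [i == current for i in range(len(frame_times))]
```

A binary search keeps the per-frame lookup cheap even when a long simulation is exported as many time instants.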

The Unity application is designed for mobile devices, with Android chosen as the target platform for the AR experience. The Unity3D settings are configured to target Android 9.0 ‘Pie’ (API level 28), and the application is built as an APK file. This file can be installed on Android devices, enabling users to launch the application and interact with its AR features.

2.6. Survey of AR User Perceptions and Its Impact on Evacuation Decision-Making

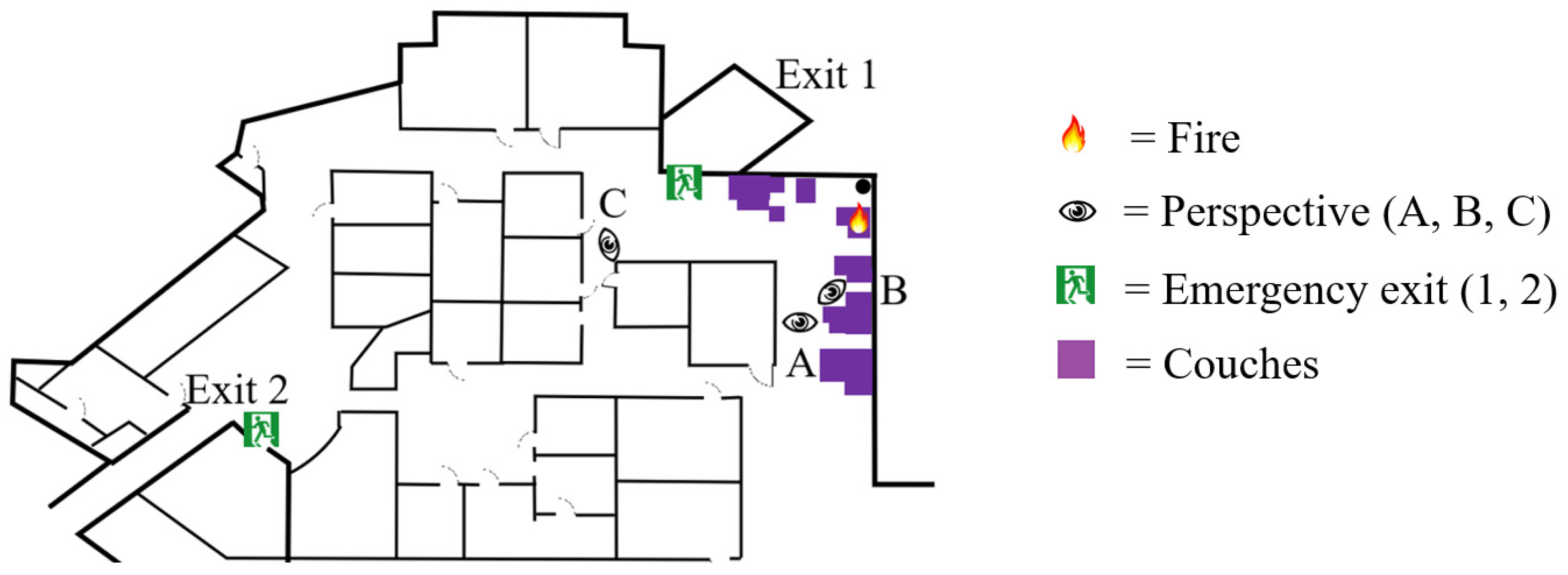

With the development of the AR fire visualisation application, a comprehensive survey was conducted to compare user perceptions of fire scenarios visualised through augmented reality with those presented using conventional rendered simulation results. More importantly, the survey aimed to evaluate how AR visualisation impacts participants’ evacuation decision-making, focusing on factors such as the visibility of exit signs and other dynamic environmental conditions.

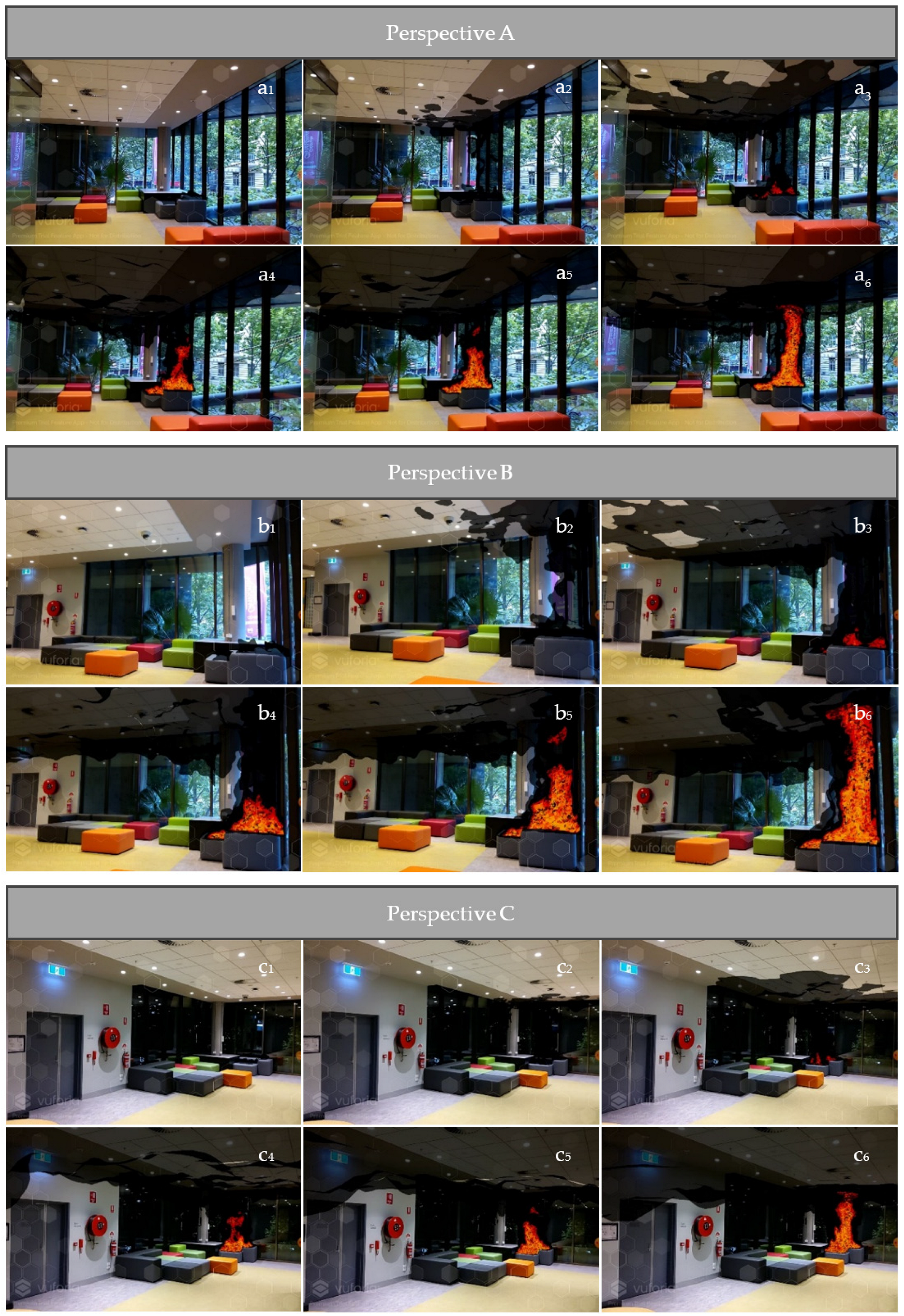

The survey was structured into two parts and designed to assess participants' experiences with immersive fire scenarios using AR technology. In the first part, participants compared their perception of fire scenarios presented through two methods: the AR application and conventional rendered fire simulation results. This part aimed to evaluate how AR influences user perception compared with traditional simulation methods. The second part assessed the feasibility of using AR to reconstruct a fire scenario in an indoor environment. Participants were shown three perspectives of the same fire scenario, along with a visual map indicating the fire location, two possible exit routes, and participant positions. They were then asked to select an exit route in a multiple-choice (MC) format, focusing on how the visibility of exit signs and dynamic environmental factors affected their decision-making. A total of 239 participants were surveyed, and demographic information such as age, gender, and prior experience with evacuation or fire safety training was collected. The tasks were designed to closely simulate real-life conditions so that the responses would reflect participant behaviour and decision-making during fire evacuation scenarios as accurately as possible.
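Multiple-choice route selections of this kind are naturally summarised as per-perspective proportions. The sketch below tallies responses and attaches a 95% Wilson score interval to each proportion; the interval method is an illustrative choice, not necessarily the analysis used in this study, and the example responses are invented placeholders rather than the survey data.

```python
import math
from collections import Counter
from typing import Dict, Iterable, Tuple


def wilson_interval(successes: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion (95% for z = 1.96)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1.0 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return max(0.0, centre - half), min(1.0, centre + half)


def summarise(responses: Iterable[Tuple[str, str]], option: str) -> Dict[str, Tuple[float, float, float]]:
    """For each perspective, return (share choosing `option`, lower bound, upper bound).

    `responses` is an iterable of (perspective, chosen_route) pairs.
    """
    totals: Counter = Counter()
    hits: Counter = Counter()
    for perspective, choice in responses:
        totals[perspective] += 1
        if choice == option:
            hits[perspective] += 1
    summary = {}
    for perspective, n in totals.items():
        low, high = wilson_interval(hits[perspective], n)
        summary[perspective] = (hits[perspective] / n, low, high)
    return summary


if __name__ == "__main__":
    # Invented placeholder responses, not the survey results.
    demo = [("A", "nearest")] * 3 + [("A", "other")] * 7 + [("C", "nearest")] * 8 + [("C", "other")] * 2
    for perspective, (share, low, high) in summarise(demo, "nearest").items():
        print(perspective, f"{share:.0%} (95% CI {low:.0%} to {high:.0%})")
```

The Wilson interval is used here rather than the simple normal approximation because it stays within [0, 1] and behaves sensibly for small groups or proportions near 0 or 1.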

4. Limitations and Future Work

There are some limitations and challenges associated with using AR platforms for on-site visualisation, particularly in outdoor environments. Target recognition errors and dimensional inaccuracies may arise from hardware constraints, including limits on how accurately building dimensions can be captured, camera resolution, field of view, and sensor performance. Additionally, varying light conditions, such as direct sunlight, shadows, and reflections, can adversely affect recognition, as AR tracking relies on well-lit environments to identify feature points accurately. This limitation was a key reason for conducting the AR demonstration during the daytime rather than at night, as stable lighting conditions improve tracking reliability and the accuracy with which fire visualisations are overlaid. However, even in daylight, outdoor settings present additional challenges, such as glare or rapid changes in ambient light, which may affect AR object stability and alignment.

The accuracy of AR object placement plays a crucial role in shaping users' perception of fire severity and their decisions regarding escape routes. Closer alignment between the AR-generated fire and the real-world environment enhances realism, potentially making users more aware of the fire's severity and encouraging safer evacuation choices. Conversely, any misalignment in AR object placement, such as flames appearing offset from their intended position, could misrepresent the fire's spread and severity and thereby affect evacuation decisions. Although hardware limitations can cause recognition errors, previous research suggests that AR spatial accuracy can be high at short range: an evaluation of AR marker tracking accuracy reported depth recognition errors within ±1.0% for distances up to 1.05 m, indicating high spatial accuracy at typical indoor AR viewing distances [59]. These challenges, which have also been identified in previous studies [35,60,61], highlight the need for further improvements in AR-based fire scenario visualisation. Future research will focus on refining fire scenario modelling and expanding user studies to enhance the accuracy and applicability of AR-based fire visualisation. Improvements in AR tracking algorithms and environmental adaptability could enhance spatial accuracy, especially in outdoor environments with dynamic lighting conditions. Additionally, future studies will extend participant surveys to analyse cognitive responses and assess the effectiveness of AR-based fire studies.
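The ±1.0% depth error quoted above is easiest to interpret in absolute terms. The short calculation below converts a relative depth error into metres and, separately, shows how a small angular misregistration displaces an overlay at a given viewing distance (offset ≈ d·tan θ). The 1° angle and the 50 m building-scale distance are hypothetical, and applying the cited ±1.0% bound beyond 1.05 m would be an extrapolation that the cited study does not cover.

```python
import math


def depth_error_m(distance_m: float, relative_error: float) -> float:
    """Absolute depth error for a given relative error (e.g. 0.01 for 1%)."""
    return distance_m * relative_error


def lateral_offset_m(distance_m: float, angle_deg: float) -> float:
    """Sideways displacement of an overlay at `distance_m` caused by an angular error."""
    return distance_m * math.tan(math.radians(angle_deg))


if __name__ == "__main__":
    print(f"{depth_error_m(1.05, 0.01) * 1000:.1f} mm")  # prints "10.5 mm" (cited 1.05 m range)
    print(f"{lateral_offset_m(50.0, 1.0):.2f} m")         # prints "0.87 m" (hypothetical 1 deg at 50 m)
```

Because angular error scales with distance, an alignment that looks precise indoors can shift flames by the width of a window or balcony at building scale, which is consistent with the greater challenges reported for outdoor tracking.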

5. Conclusions

This study successfully developed an augmented reality (AR) platform to visualise transient fire dynamics in real time, providing a reliable and immersive approach to studying human behaviour and decision-making in realistic fire scenarios. By integrating advanced fire simulation techniques with AR technology, the platform offers a precise and interactive tool for analysing how fire structure, fire spread, and smoke propagation influence evacuation choices. A primary survey highlighted the advantages of AR applications over conventionally rendered visualisations, with 77% of participants favouring AR for its immersive experience and enhanced situational awareness. These findings underscore AR’s potential to revolutionise fire safety training and emergency preparedness by delivering realistic, interactive visualisations of fire dynamics.

Evacuation decisions were found to be strongly influenced by the visibility of exit signs and the presence of fire obstructions. For instance, 71% of participants in Perspective C chose the nearest exit when the exit sign was clearly visible, compared to only 31% in Perspective A, where the exit sign was obscured by internal walls. This progression emphasises the critical role of clear signage and unobstructed escape routes in improving evacuation efficiency. The study also supports previous observations that risky behaviours, such as taking shortcuts through smoke, are often driven by urgency and limited visibility.

The AR platform developed in this study bridges the gap between recent fire modelling and immersive training tools, offering a safe and realistic environment for analysing human behaviour and designing effective evacuation strategies. Expanding AR applications to diverse building types and fire scenarios will further validate its utility. By combining real-time fire dynamics with cutting-edge AR technology, this study makes a significant contribution to advancing fire safety engineering and emergency preparedness.