1. Introduction

Unmanned aerial vehicles (UAVs) are widely used in logistics and transportation, environmental monitoring, rescue missions, and film and television production due to their small size and low maintenance cost. However, large-scale UAV deployment has also brought security risks, such as unlawful aerial surveillance and unauthorized communications interception carried out by unidentified UAVs [1]. Consequently, the design of a robust and effective UAV intrusion recognition framework has emerged as a pressing requirement for safeguarding public security.

Current UAV detection strategies rely on radar, acoustic sensing, radio-frequency analysis, and optical imaging [2]. Although these modalities provide complementary perspectives, they remain vulnerable to illumination variation, noise interference, and cloud occlusion, which often lead to biased or erroneous predictions. With the rapid progress of deep learning, object recognition has advanced notably, particularly in feature representation and semantic interpretation. Compared with conventional approaches, deep learning models demonstrate stronger robustness and adaptability, making them well suited to precise, real-time UAV recognition. Region-based frameworks such as Faster R-CNN [3] achieve high accuracy but are hindered by slow inference, whereas single-shot architectures such as SSD (Single-Shot Multibox Detector) [4] improve efficiency while maintaining comparable accuracy. Tracking-based schemes, including the Siamese Fully Convolutional Network (SiamFC) [5] and discriminative model prediction (DiMP) [6], have been developed for stable UAV monitoring. Additionally, the YOLO (You Only Look Once) [7] family offers a balanced trade-off between accuracy and inference speed. More recently, transformer-driven detectors such as DETR (Detection Transformer) [8] exploit the transformer architecture [9] to model global contextual dependencies and capture long-range relationships.

Despite these advancements, identifying small-scale UAVs remains highly challenging. Such UAVs typically occupy only a few pixels and are easily affected by motion blur, often leading to missed detections or false alarms. Frequent variations in altitude, viewing angle, and flight attitude further demand robust multi-scale modeling. Moreover, background elements in cluttered scenes, such as clouds or building edges, may resemble UAVs, thereby introducing semantic confusion and resulting in false positives.

To address these challenges, we developed an accurate UAV detection model designed to enhance feature representation and multi-scale feature fusion. Specifically, we introduced a dilation-wise residual (DWR) module to strengthen contextual representation and enrich spatial details, and incorporated an asymptotic feature pyramid network (AFPN) to optimize multi-level feature integration and semantic alignment. The resulting model, termed DRF-YOLO, improves the precision and resilience of UAV identification under complex aerial scenarios, demonstrating its potential for reliable intrusion monitoring and public security protection.

2. Related Works

Limited pixels, insufficient semantic information, and strong background interference and noise make small-object detection (SOD) highly challenging. Therefore, extensive research has been conducted to enhance the performance of SOD models [10].

2.1. Model Architecture

Researchers have explored various architectural modifications to improve SOD performance. Lou et al. [11] introduced the MDC module, which combines depthwise separable convolution, max-pooling, and 3 × 3 convolution, into their DC-YOLOv8 model for camera-based small-object detection to compensate for information loss from downsampling and to optimize feature fusion. However, their model introduced irrelevant features and increased computational overhead. Zamri et al. [12] incorporated multiple attention mechanisms and a high-resolution detection head in their P2-YOLOv8n-ResCBAM model. While their model effectively distinguished UAVs from birds, the high-resolution head increased computational costs. Keles et al. [13] used slicing-based fine-tuning and inference with YOLOv5 to reduce computational load and improve adaptability. However, slicing can split small objects across tiles, which compromises object integrity and detection accuracy. Cheng et al. [14] combined a lightweight MobileViT backbone with a coordinate attention mechanism (CA-PANet) for feature fusion. This method improved accuracy and efficiency but showed limited ability to extract shallow features and lacked flexibility in multi-scale fusion. To avoid building multi-scale pyramids, Singh et al. [15] cropped fixed-size image patches, but this approach still required a time-consuming multi-scale testing process. Li et al. [16] developed DN-DETR, which incorporates denoising training and significantly improves the model’s training speed and detection performance. Hoanh et al. [17] proposed a small-object detection framework that integrates an object-focus module and a dual-head mechanism within a feature pyramid network, leveraging a sparse computation strategy to improve efficiency. The framework first performs a coarse localization stage for small objects, followed by high-resolution feature refinement, thereby reducing computational overhead from background regions. However, it remains sensitive to the choice of thresholds and loss weight settings and incurs additional computational cost. Xu et al. [18] developed a YOLOX-based detector incorporating a Spatio-Temporal Attention Module (STAM) and a lightweight Group SimSPPFCSP module in the backbone, and further designed an NRPP neck to enhance multi-level feature propagation. Although their method improved the robustness and efficiency of micro-UAV detection, it performed less effectively on low-contrast scenes. Wang et al. [19] proposed Dist-Tracker, which integrates a Scale-Shape-Quality (SSQ) detector with a Fusion of L2-IoU Tracker (FLIT) for infrared multi-UAV tracking. Their method significantly improves detection sensitivity and motion robustness; however, the lack of appearance features leads to frequent identity switches under severe occlusion.

2.2. Evaluation and Data Augmentation

Xu et al. [20] introduced the Dot Distance (DotD) metric, formulated as the normalized Euclidean distance between the centroids of predicted boxes and ground-truth annotations, serving as an alternative to the intersection over union (IoU) for assessing localization similarity in tiny object detection. However, because DotD depends only on center distance, it does not encode box width, height, aspect ratio, or orientation, which leads to misjudgments for tilted targets such as UAVs.
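The limitation noted above can be made concrete with a small sketch. It assumes the commonly cited form DotD = exp(-D / S), where D is the distance between box centers and S is an average object size for the dataset; the value of S, the box coordinates, and the function names below are hypothetical and chosen only for illustration.

```python
import math


def iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def dot_distance(box_a, box_b, avg_object_size):
    """Assumed DotD form: exp(-center_distance / avg_object_size)."""
    cxa, cya = (box_a[0] + box_a[2]) / 2, (box_a[1] + box_a[3]) / 2
    cxb, cyb = (box_b[0] + box_b[2]) / 2, (box_b[1] + box_b[3]) / 2
    center_distance = math.hypot(cxa - cxb, cya - cyb)
    return math.exp(-center_distance / avg_object_size)


# Two boxes with the same center (14, 12) but swapped width and height.
ground_truth = (10.0, 10.0, 18.0, 14.0)  # 8 x 4 box
prediction = (12.0, 8.0, 16.0, 16.0)     # 4 x 8 box
AVG_OBJECT_SIZE = 6.0                    # hypothetical dataset statistic

iou_value = iou(ground_truth, prediction)
dotd_value = dot_distance(ground_truth, prediction, AVG_OBJECT_SIZE)
print(f"IoU = {iou_value:.3f}, DotD = {dotd_value:.3f}")
```

Because the centers coincide, the center-distance score is maximal even though the shapes disagree, whereas IoU penalizes the mismatch.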

For data augmentation, Zhang et al. [21] developed a scale-compensated anchor allocation strategy to expand the quantity of positive anchors for small targets, thereby enhancing recall. However, this approach also markedly increases the proportion of negative samples during training, which leads to a higher false-detection rate. Kisantal et al. [22] augmented small instances by copying them and pasting them, after random transformations, at other locations in the same image. While effective at increasing the representation of small objects, their method distorts semantic context, creates redundant objects, and can cause overfitting.

2.3. FPN

The Feature Pyramid Network (FPN) [23] is widely employed since it leverages a top-down pathway to integrate multi-scale representations, thereby enhancing object detection capability across different resolutions. Nevertheless, FPN faces inherent limitations in small-target recognition for UAV surveillance or aerial image analysis. It often introduces noise during feature aggregation, lacks precise cross-scale semantic alignment, and ignores high-frequency textural cues, which can compromise the detection of very small objects.

To solve these problems, various methods to improve the FPN architecture have been developed. Liu et al. [24] developed the denoising feature pyramid network (DN-FPN), adopting a contrastive learning mechanism for enhanced feature extraction while suppressing noise. DN-FPN improves small-object feature extraction but is highly sensitive to hyperparameters, requiring precise control of the ratio of positive to negative samples. Liu et al. [25] developed a feature pyramid network (Dual SIEFPN) that integrates semantic and spatial information to minimize information loss during multi-scale feature transfer. While the network improves small-object detection, it relies on a complex attention mechanism, which increases inference time. Zhao et al. [26] modeled interactions across pyramid levels using graph neural networks to facilitate inter-layer communication. However, the computational overhead of graph construction and message passing is difficult to reconcile with lightweight detectors, limiting deployment in resource-constrained environments. Shi et al. [27] developed the high-frequency and spatial perception FPN (HS-FPN), which enhances feature extraction and spatial awareness through high-frequency perception combined with spatial dependency. Despite its advantages, HS-FPN lacks global semantic guidance and struggles to recognize textureless objects, showing limited adaptability to complex scale variations.

3. Methodology

To address the identified problems, a model is needed that extracts and fuses features efficiently and handles multi-scale information robustly without increasing computational cost. We therefore developed the DRF-YOLO model. We designed a DWR module based on the DWR segmentation network (DWRSeg) to decouple regional feature generation from multi-dilation semantic refinement. The module enlarges the receptive field for small objects and captures textural details with minimal computational overhead. We refined the YOLOv8 neck using an asymptotic fusion framework, extending it to a four-layer feature pyramid with a dedicated auxiliary head for detecting small objects. DRF-YOLO obtains detailed and semantic information through iterative upsampling and downsampling, which improves small-UAV detection accuracy.

3.1. Overall Structure

We employed YOLOv8n [

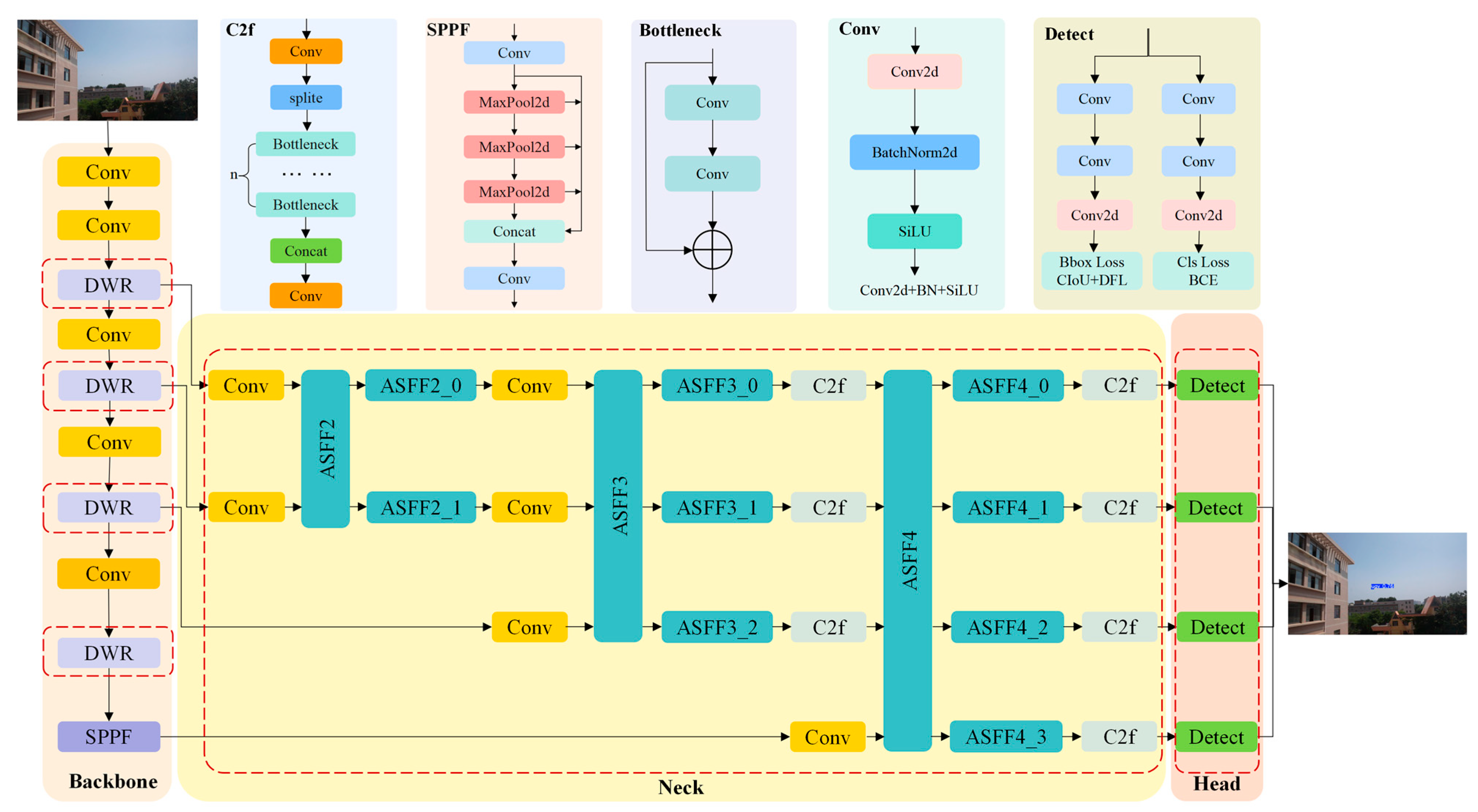

28] as the reference model owing to its fast inference, high training efficiency, and reliable accuracy in generic object recognition. Nevertheless, YOLOv8 demonstrates limitations when handling tiny targets in complex aerial contexts, as it relies heavily on shallow representations and lacks sufficient cross-scale perception. To address this issue, we incorporated a DWR block and an AFPN architecture. The DWR unit was embedded at multiple stages of the backbone to broaden the receptive field for small-target recognition and to strengthen scene interpretation in challenging conditions. Moreover, an additional detection head was introduced at the P2 layer, extending the three original detection branches of YOLOv8. Through the synergistic integration of progressive feature refinement and adaptive spatial fusion offered by AFPN, this extension supports cooperative modeling and fine-grained multi-scale perception, thereby enhancing the detection of small UAV instances in complex backgrounds. The complete network design is illustrated in

Figure 1, where the proposed improvements are highlighted in red boxes to provide a clear structural overview of how DWR and AFPN interact within the full architecture.

3.2. DWR

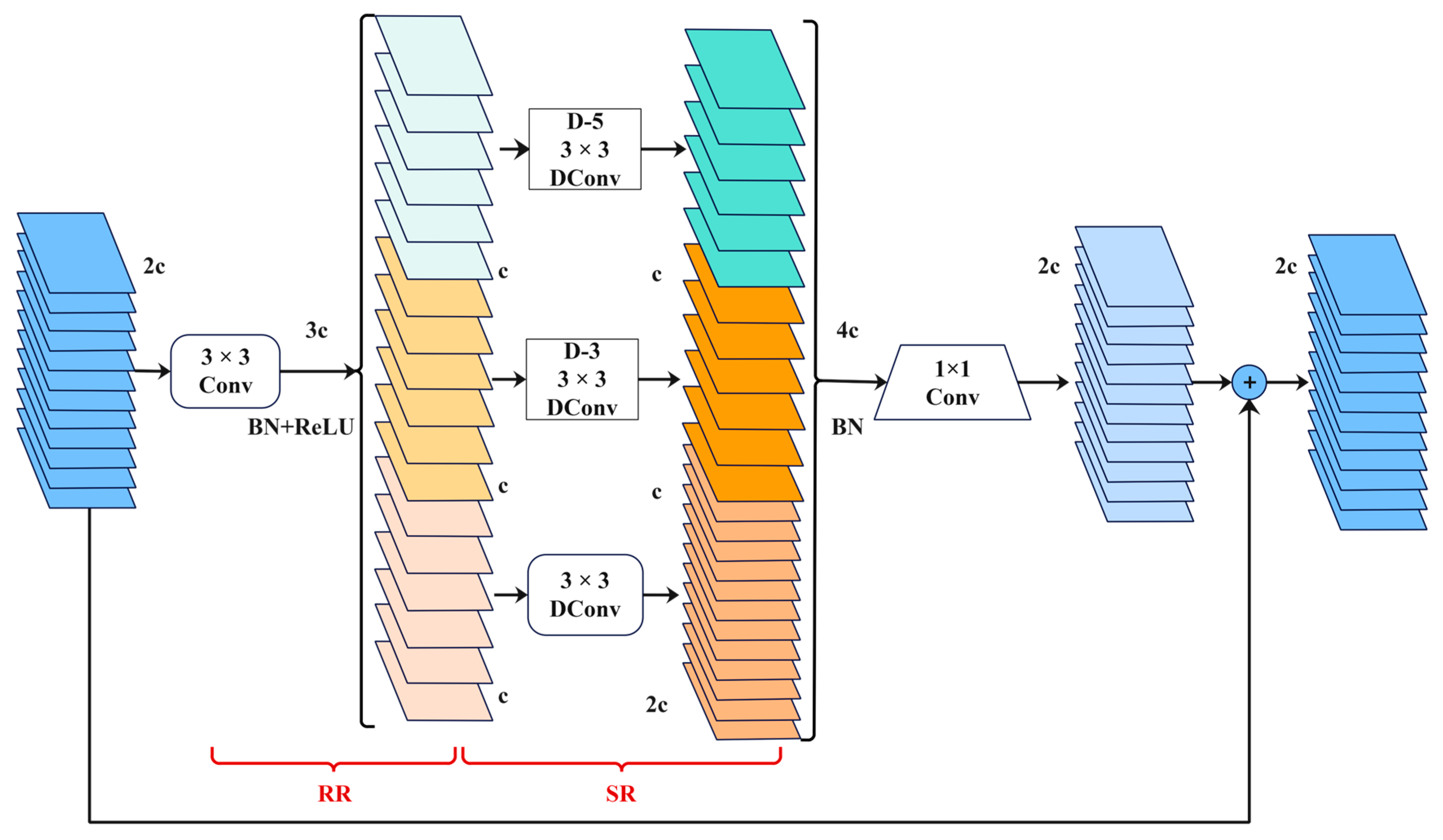

In UAV small-object detection, conventional convolutional neural networks (CNNs) employ fixed-size convolutional filters with restricted receptive fields, limiting their capacity to capture anything beyond local features. When the target occupies only a few pixels or appears against a complex background, local features alone are insufficient for accurate recognition, frequently resulting in either undetected objects or incorrect identifications. To address this, we introduced the DWR module as a substitute for the four C2f modules within the original backbone architecture [29]. The module captures fine-grained textures by leveraging regional residual components, then utilizes semantic residual learning to enlarge the receptive field, thereby improving the capacity for contextual understanding. The DWR module employs region residualization (RR) to generate compact multi-scale regional features and semantic residualization (SR) to apply depthwise separable dilated convolutions with customized dilation factors, execute morphological operations, and adaptively increase the receptive range. Concatenation and fusion of the output features through convolutional layers yield feature maps that contain both detailed textures and extensive receptive fields (Figure 2). Such a design enables the DWR module to detect small UAVs within complex environments by simultaneously preserving fine-grained details and expanding the receptive field.

In the module, a 3 × 3 convolution first performs local channel compression on the input feature map. Batch normalization (BN) and the sigmoid linear unit (SiLU) activation are then applied to obtain the feature map R, which feeds the multi-branch computation that follows (Equation (1)).

R is then fed in parallel into three depthwise separable 3 × 3 dilated convolutions, each with its own dilation rate, to obtain short-, medium-, and long-range receptive-field features for multi-scale feature capture (Equation (2)). The chosen dilation rates balance local detail preservation against global semantic context while avoiding the redundancy of excessively large dilation rates.
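How the dilation rate enlarges the receptive field of a 3 × 3 kernel without adding weights can be checked with the standard relation k_eff = d · (k − 1) + 1. The sketch below uses the dilation rates (1, 3, 5) purely as illustrative values; they are an assumption, since the rates are not reproduced in the text above.

```python
def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Spatial extent covered by a dilated kernel along one axis."""
    return dilation * (kernel_size - 1) + 1


# Assumed, illustrative dilation rates for the three branches.
ASSUMED_DILATIONS = (1, 3, 5)
KERNEL_SIZE = 3

extents = {d: effective_kernel_size(KERNEL_SIZE, d) for d in ASSUMED_DILATIONS}
weights_per_channel = KERNEL_SIZE * KERNEL_SIZE  # unchanged by dilation

for d, extent in extents.items():
    print(f"dilation {d}: covers {extent} x {extent} pixels "
          f"with {weights_per_channel} weights per channel")
```

Each branch keeps nine weights per channel, so the short-, medium-, and long-range views differ only in how far apart the sampled pixels are.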

The three branch outputs are then concatenated along the channel dimension, which preserves the independent expression of each receptive field and forms a multi-scale contextual feature U (Equation (3)).

For cross-scale information interaction, a 3 × 3 convolution is applied to compress the channels of the concatenated feature. This convolution also performs linear combinations along the channel dimension to generate the fused feature, which is subsequently used in the residual connection (Equation (4)).

Finally, the original input is added element-wise as a residual connection to preserve the original feature pathway and help mitigate gradient vanishing (Equation (5)).

Convolutional branches with varying dilation rates are fused with the initial features to enrich multi-scale contextual representations. This integration alleviates the information bottleneck commonly encountered in small-UAV detection, where global semantic cues are scarce, and it enhances recognition and localization accuracy.

The DWR module broadens the effective receptive field while keeping computation low via a three-stage lightweight residualization pipeline: local compression, multi-dilation convolution, and adaptive fusion. Its design facilitates the extraction of both fine-grained edge details and semantically rich representations from small-scale targets, thereby mitigating the constraints imposed by the fixed receptive fields of standard convolutions, and it reconciles the need for local texture preservation with the demand for global contextual awareness. The ablation experiments indicate that, when integrated into YOLOv8 for small-UAV detection, replacing the original C2f block with the DWR module improves accuracy; computational efficiency was not measured separately.
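The lightweight character attributed to depthwise separable convolutions can be illustrated with a simple parameter count. This is a generic sketch, not the DWR module itself: the channel width of 64 and the function names are hypothetical, and bias terms and normalization layers are ignored.

```python
def standard_conv_params(c_in: int, c_out: int, k: int = 3) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def depthwise_separable_params(c_in: int, c_out: int, k: int = 3) -> int:
    """Weights in a depthwise k x k convolution followed by a 1 x 1 pointwise one."""
    depthwise = c_in * k * k
    pointwise = c_in * c_out
    return depthwise + pointwise


CHANNELS = 64  # hypothetical channel width

standard = standard_conv_params(CHANNELS, CHANNELS)
separable = depthwise_separable_params(CHANNELS, CHANNELS)
print(f"standard: {standard}, depthwise separable: {separable}, "
      f"ratio: {separable / standard:.3f}")
```

Dilation changes neither count, which is why several dilated depthwise branches can be run in parallel at modest cost.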

3.3. AFPN

In complex aerial imagery, UAV targets appear as tiny objects and exhibit significant scale variations. The default FPN in YOLOv8 is ill-suited for this scenario, as it tends to weaken high-level semantic content across multiple levels and compromise low-level spatial details through repeated sampling. To mitigate these limitations, we substituted the original FPN with an AFPN [30]. We further enhanced the baseline three-head YOLOv8 architecture by incorporating a fourth detection head, thereby forming a four-layer pyramid structure. This extension improves the model’s capability to extract fine-grained textures and multi-scale features, specifically optimized for small UAV detection.

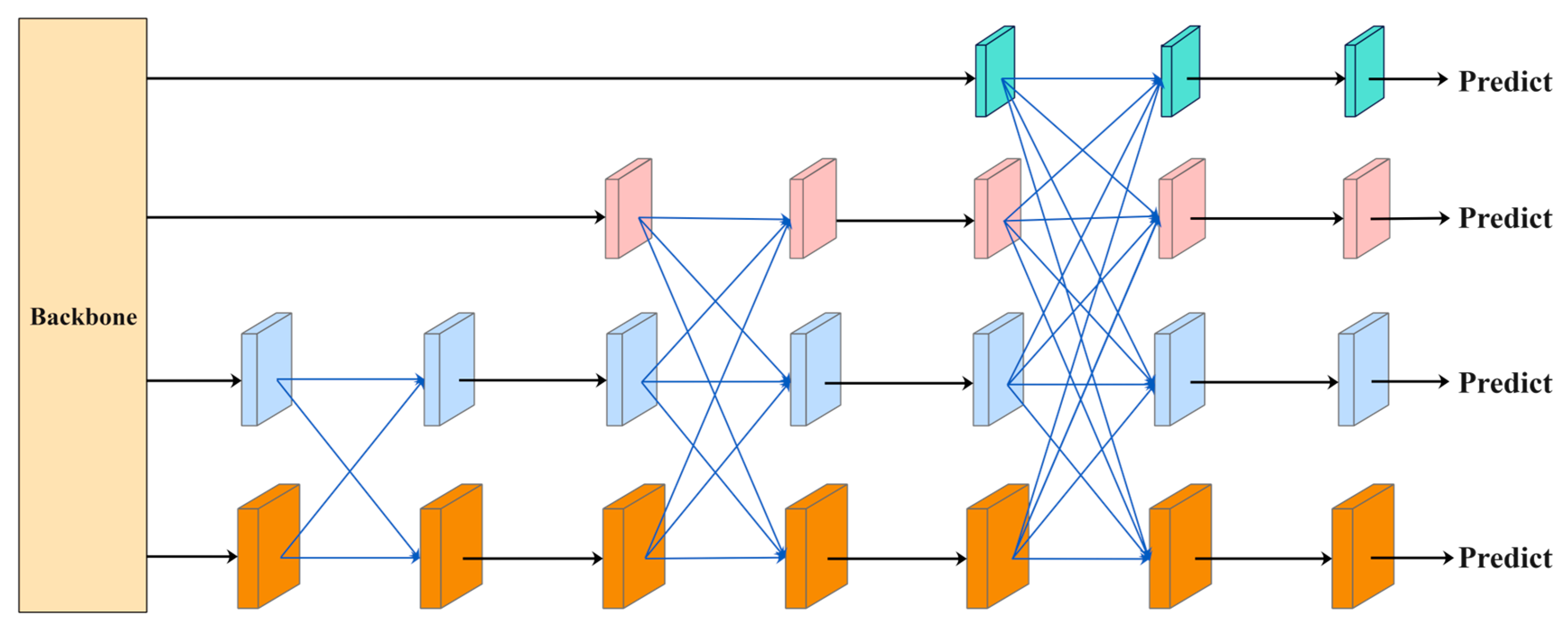

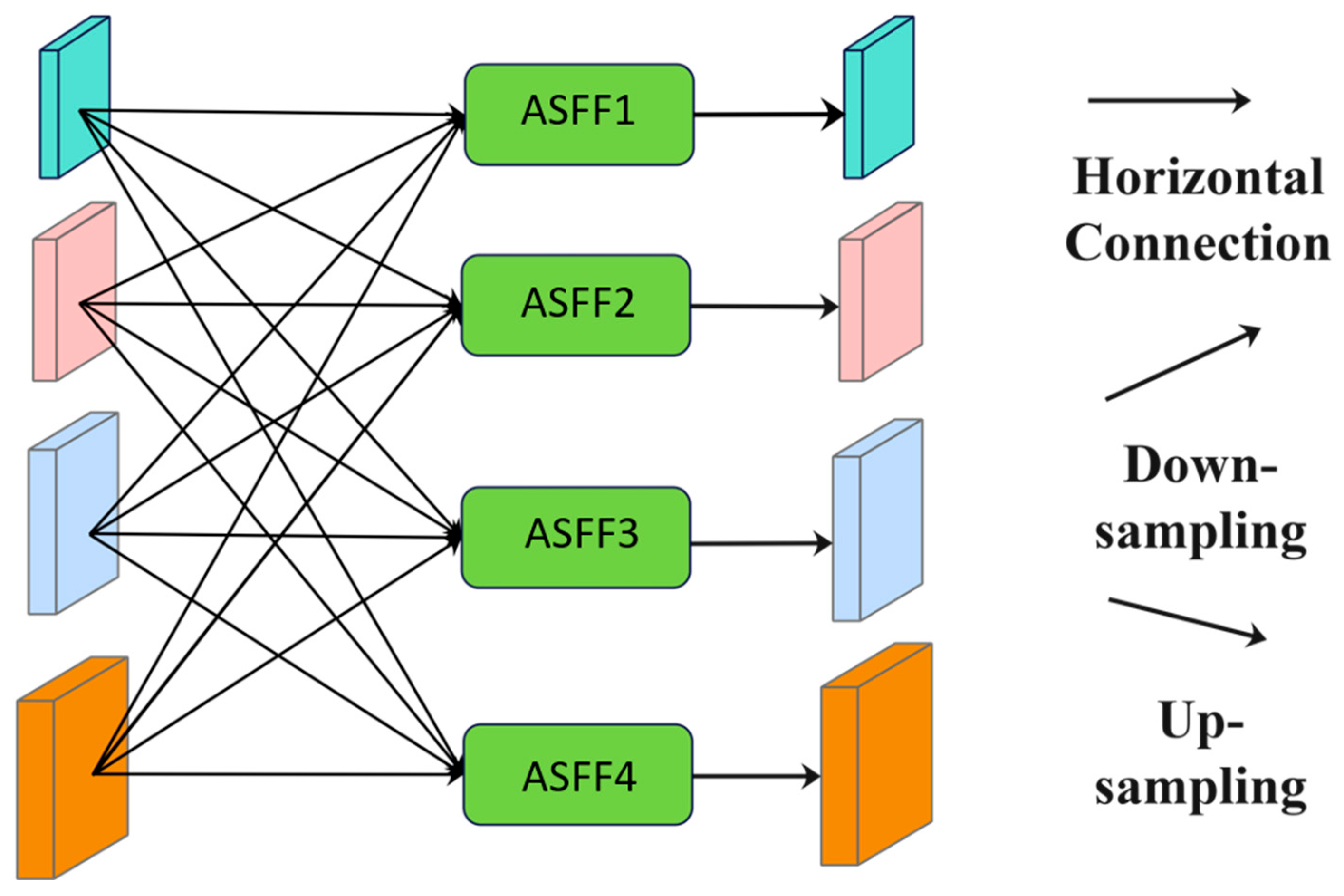

The AFPN architecture employs a progressive asymptotic fusion framework (Figure 3). This design allows AFPN to preserve fine-grained texture information in the highest-resolution layer before incorporating deeper semantic information from neighboring and then more distant layers. After fusion at each stage, AFPN applies the pixel-level adaptive weighting of the adaptive spatial feature fusion (ASFF) module [31]. This weighting adjusts the contribution of each scale at every spatial location, emphasizing the most informative levels while reducing potential conflicts between overlapping objects. The four feature layers then undergo lightweight convolution to unify their channel dimensions before being fed into the corresponding detection heads (Figure 4). The AFPN architecture generates outputs that integrate high-resolution spatial details with rich, multi-scale semantic context, facilitating precise identification of small UAVs within intricate aerial environments. A detailed breakdown of the AFPN workflow follows.

AFPN aligns the spatial resolution of high-level and low-level feature maps via upsampling or downsampling operations, facilitating effective fusion of features across adjacent scales (Figure 4). Subsequently, a feature-adaptive spatial fusion mechanism is implemented to dynamically assign fusion weights according to the significance of distinct spatial regions within each feature layer. This approach strengthens the representation capability of salient features and improves cross-layer information exchange. The integration of the four-layer feature vectors is formulated in Equation (6).

Here, the four weighting coefficients denote the spatial weights assigned to the four feature levels when fusing at the first level, subject to the constraint stated with Equation (6), and the weighted terms are the feature vectors from the first to the final levels.
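The adaptive weighting can be sketched at a single spatial position. The example assumes that the spatial weights are produced by a softmax over per-level scores, as in the original ASFF formulation, so that they are non-negative and sum to one; whether Equation (6) uses exactly this normalization is an assumption, and the feature values and scores below are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scalar scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_position(level_features, level_scores):
    """Weighted sum of per-level channel vectors at one spatial position.

    level_features: one channel vector per pyramid level, already resized
                    to the target resolution.
    level_scores:   one scalar score per level (hypothetical values).
    """
    weights = softmax(level_scores)
    channels = len(level_features[0])
    fused = [
        sum(w * feat[c] for w, feat in zip(weights, level_features))
        for c in range(channels)
    ]
    return fused, weights


# Four levels, two channels each (hypothetical values).
features = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0], [4.0, 0.0]]

# Equal scores give equal weights of 0.25 per level.
fused_equal, weights_equal = fuse_position(features, [0.0, 0.0, 0.0, 0.0])

# A dominant score lets one level contribute most at this position.
fused_peaked, weights_peaked = fuse_position(features, [5.0, 0.0, 0.0, 0.0])

print(weights_equal, fused_equal)
print(weights_peaked, fused_peaked)
```

In a full implementation the scores would be predicted per pixel by small convolutions, so the dominant level can change from one location to the next.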

The enhanced AFPN neck produces a series of multi-scale feature sets, derived from the integration of backbone network layers 2, 4, 6, and 9 (Equation (7)). This configuration allows for effective scale diversity in feature representation.

The initial features are then generated by reducing the channel dimension of these backbone outputs with a 1 × 1 convolution (Equation (8)), which performs a pixel-wise channel transformation on the input feature map.

The two lowest-level features are first fused in ASFF2; the result is passed to ASFF3 together with the next feature level for a second fusion, and the output of ASFF3 is passed to ASFF4 together with the remaining level for the final fusion (Equation (9)).

Equation (9) involves a 3 × 3 convolutional filter applied to capture local contextual information, the total number of feature layers participating in the fusion stage, and the feature value at each spatial coordinate of the k-th feature map following scale normalization.

Following the fusion stage, the channel dimensions of each of the four-level feature outputs are restored within C2f blocks. This yields a multi-scale feature set, which is subsequently forwarded to the detection head for small UAV object recognition.

Throughout this pipeline, AFPN captures rich semantic content while retaining fine-grained spatial details, leveraging progressive feature fusion and ASFF’s adaptive spatial weighting. The developed multi-scale cooperative and dynamic fusion strategy markedly enhances detection precision for small UAVs in complex scenarios.

3.4. Experimental Setup

We evaluated the DRF-YOLO model on the DUT-Anti-UAV and Det-Fly datasets [32,33]. These datasets are designed for UAV detection and contain thousands of images with small-scale targets, complex backgrounds, and varied lighting conditions, all of which are significant challenges in this task. The model was trained for 300 epochs using stochastic gradient descent (SGD) on a workstation equipped with an NVIDIA RTX 4090 GPU. Key hyperparameters are detailed in Table 1.

Model performance was quantified using four standard metrics: precision, recall, mean average precision at an IoU of 0.5 (mAP@50), and mean average precision across IoU thresholds from 0.5 to 0.95 (mAP@50:95). These metrics are used for the assessment of classification accuracy and localization precision.

Precision measures the ratio of correctly identified positive predictions to the total positive outputs produced by the model. It represents the model’s capability to make accurate positive classifications, as formulated in Equation (10).

$$\text{Precision} = \frac{TP}{TP + FP} \tag{10}$$

where TP refers to the number of true positives (objects correctly identified), while FP indicates the number of false positives (objects incorrectly detected).

Recall is defined as the proportion of actual positive samples that the model successfully recognizes. It reflects the model’s capacity to detect all relevant instances, as specified in Equation (11).

$$\text{Recall} = \frac{TP}{TP + FN} \tag{11}$$

Here, FN represents the number of false negatives, that is, objects that were not detected.
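As a minimal sketch of these two definitions, the following computes precision and recall from raw counts. The counts are hypothetical and are not taken from the experiments reported here.

```python
def precision(tp: int, fp: int) -> float:
    """Share of predicted positives that are correct: TP / (TP + FP)."""
    return tp / (tp + fp) if (tp + fp) > 0 else 0.0


def recall(tp: int, fn: int) -> float:
    """Share of actual positives that are found: TP / (TP + FN)."""
    return tp / (tp + fn) if (tp + fn) > 0 else 0.0


# Hypothetical counts: 80 UAVs detected correctly, 5 false alarms, 20 missed.
TP, FP, FN = 80, 5, 20

p = precision(TP, FP)
r = recall(TP, FN)
print(f"precision = {p:.3f}, recall = {r:.3f}")
```

A detector can score high precision with low recall by reporting only its most confident boxes, which is why both values are reported together.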

The metric mAP@50 is defined as the mean average precision calculated across all object categories with the IoU threshold set at 0.5. For each class, the average precision (AP) is obtained by computing the area under the corresponding precision–recall curve, as expressed in Equation (12). The final mAP@50 value is derived by averaging these AP scores over all categories, as formulated in Equation (13).

$$AP = \int_{0}^{1} p(r)\,dr \tag{12}$$

$$\text{mAP@50} = \frac{1}{N} \sum_{i=1}^{N} AP_i \tag{13}$$

where r denotes a recall value, p(r) is the precision associated with that recall level, AP_i is the average precision of the i-th category, and N is the number of categories.
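The area under a precision–recall curve has to be approximated from a finite set of points. The sketch below uses all-point interpolation with a monotone precision envelope, which is one common convention; the interpolation scheme actually used in the experiments is not stated, so this choice and the sample points are assumptions.

```python
def average_precision(recalls, precisions):
    """Area under the precision-recall curve (all-point interpolation).

    recalls must be sorted in ascending order, with precisions aligned to it.
    """
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas between consecutive recall values.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(ap_per_class):
    """Mean of per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


# Hypothetical curve: precision 1.0 up to recall 0.5, then 0.5 up to recall 1.0.
ap = average_precision([0.5, 1.0], [1.0, 0.5])

# For a single-class dataset, mAP equals the AP of that class.
mean_ap = mean_average_precision([ap])
print(f"AP = {ap:.2f}, mAP = {mean_ap:.2f}")
```

Both datasets used here are single-class, so mAP@50 reduces to the AP of the UAV class.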

mAP@50:95 provides a more comprehensive evaluation of model performance. It is computed by averaging the mean average precision over IoU thresholds ranging from 0.5 to 0.95 in steps of 0.05. By aggregating results over multiple threshold levels, this metric offers a holistic assessment of detection accuracy and localization precision, as expressed in Equation (14).

$$\text{mAP@50:95} = \frac{1}{10} \sum_{t \in \{0.50,\, 0.55,\, \ldots,\, 0.95\}} \text{mAP@}t \tag{14}$$
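The effect of sweeping the IoU threshold can be sketched as follows. The ten thresholds follow the description above, while the example IoU of 0.72 and the per-threshold mAP values are hypothetical.

```python
# Ten IoU thresholds: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def thresholds_passed(iou_value):
    """Thresholds at which a detection with this IoU counts as a true positive."""
    return [t for t in IOU_THRESHOLDS if iou_value >= t]


def map_50_95(map_per_threshold):
    """Average of the mAP values obtained at each of the ten thresholds."""
    if len(map_per_threshold) != len(IOU_THRESHOLDS):
        raise ValueError("expected one mAP value per IoU threshold")
    return sum(map_per_threshold) / len(map_per_threshold)


# A detection overlapping its ground truth with IoU 0.72 (hypothetical).
passed = thresholds_passed(0.72)

# Hypothetical mAP values that fall as the threshold becomes stricter.
HYPOTHETICAL_MAP = [0.90, 0.85, 0.80, 0.70, 0.60, 0.50, 0.40, 0.30, 0.15, 0.05]
score = map_50_95(HYPOTHETICAL_MAP)

print(f"IoU 0.72 is a match at {len(passed)} of {len(IOU_THRESHOLDS)} thresholds")
print(f"mAP@50:95 = {score:.3f}")
```

For targets only a few pixels wide, a shift of one or two pixels lowers IoU sharply, so mAP@50:95 is typically far below mAP@50 on small-object datasets.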

3.5. Datasets

The DUT-Anti-UAV dataset, created by a research group at Dalian University of Technology, China, serves as a visible-light-based benchmark for anti-UAV detection and tracking. It contains 10,000 images, with 5200 designated for training, 2600 for validation, and 2200 reserved for testing. The dataset includes 10,109 annotated objects, many of which are small-scale UAVs. The Det-Fly dataset is a compact air-to-air UAV detection resource, comprising more than 13,000 images of in-flight UAVs captured from another flying platform. These images exhibit diverse conditions, including varied background environments, camera angles, relative distances, flight altitudes, and illumination levels. Approximately half of the annotated objects cover less than 5% of their respective image areas.

4. Results

We evaluated the developed DRF-YOLO model on two widely used single-class UAV datasets, DUT-Anti-UAV and Det-Fly, both selected for their challenging small-object instances and varied imaging conditions. DUT-Anti-UAV contains 10,109 annotated UAV instances, with its 10,000 images split into 5200 for training, 2600 for validation, and 2200 for testing. Det-Fly comprises more than 13,000 air-to-air images, and approximately half of its annotated targets occupy less than 5% of the image area. All experiments were conducted at an input resolution of 640 × 640 with standard normalization, and the models were trained for 300 epochs (Table 1). This section reports the performance of the proposed DRF-YOLO on these datasets, including ablation studies to assess the contributions of the DWR module and AFPN architecture, comparisons with representative detectors to demonstrate performance advantages in small-UAV detection, and both training-curve analyses and qualitative visualizations to further illustrate the robustness and practical effectiveness of the model.

4.1. Ablation Experiment

Ablation experiments were conducted to determine the contributions of the DWR module and AFPN.

On DUT-Anti-UAV, the YOLOv8 baseline achieved mAP@50 = 85.4% and mAP@50:95 = 53.7% with a precision of 90.5% and a recall of 78.5%. Adding the DWR module improved contextual representation and raised mAP@50 to 85.7% and mAP@50:95 to 54.3% while increasing precision to 92.6% and recall to 79.1%. Introducing AFPN alone increased mAP@50 to 86.2% and recall to 79.7%. Combining DWR and AFPN (DRF-YOLO) produced the largest overall gain, with mAP@50 = 86.9%, mAP@50:95 = 54.8%, precision = 93.9%, and recall = 80.3%, which confirms that DWR and AFPN provide complementary benefits in contextual modeling and cross-level feature fusion (Table 2).

On Det-Fly, similar trends were observed (Table 3). The YOLOv8 baseline showed mAP@50 = 87.8% and mAP@50:95 = 53.1% (precision 92.4%, recall 82.7%); DWR alone increased mAP@50 to 88.5% and mAP@50:95 to 53.8%; AFPN alone raised mAP@50 to 89.9% and recall to 85.8%; and DRF-YOLO reached mAP@50 = 91.1%, mAP@50:95 = 55.4%, precision = 95.0%, and recall = 86.5%.

The ablation results on DUT-Anti-UAV and Det-Fly demonstrate that the DWR module and AFPN architecture improve different aspects of detection. DWR enhances precision and mAP@50:95 by strengthening contextual representation, while AFPN increases recall and mAP@50 through progressive multi-scale fusion. On both datasets, combining the two modules yields the highest overall performance, indicating that their complementary strengths enable DRF-YOLO to achieve balanced improvements in localization accuracy and detection completeness.
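The size of the combined gain can be checked directly from the baseline and DRF-YOLO figures quoted in the ablation paragraphs. The short script below computes the differences in percentage points; the dictionary layout and function name are illustrative choices, and only the numbers come from the text.

```python
# Baseline and DRF-YOLO results (in percent) as quoted in the ablation text.
RESULTS = {
    "DUT-Anti-UAV": {
        "YOLOv8": {"mAP@50": 85.4, "mAP@50:95": 53.7,
                   "precision": 90.5, "recall": 78.5},
        "DRF-YOLO": {"mAP@50": 86.9, "mAP@50:95": 54.8,
                     "precision": 93.9, "recall": 80.3},
    },
    "Det-Fly": {
        "YOLOv8": {"mAP@50": 87.8, "mAP@50:95": 53.1,
                   "precision": 92.4, "recall": 82.7},
        "DRF-YOLO": {"mAP@50": 91.1, "mAP@50:95": 55.4,
                     "precision": 95.0, "recall": 86.5},
    },
}


def absolute_gains(dataset):
    """Gain of DRF-YOLO over the baseline in percentage points."""
    base = RESULTS[dataset]["YOLOv8"]
    ours = RESULTS[dataset]["DRF-YOLO"]
    return {metric: round(ours[metric] - base[metric], 1) for metric in base}


gains = {dataset: absolute_gains(dataset) for dataset in RESULTS}

for dataset, values in gains.items():
    print(dataset, values)
```

These differences are absolute percentage points rather than relative percentages, which is the sense in which the improvement over YOLOv8 should be read.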

4.2. Comparison with Other Models

We compared DRF-YOLO with the following detectors: Cascade R-CNN [34], Faster R-CNN [3], RetinaNet [35], FCOS [36], YOLOv5 [37], YOLOv8 [28], YOLOv10 [38], YOLOv11 [39], and RT-DETR [40]. On DUT-Anti-UAV, DRF-YOLO achieves mAP@50 = 86.9%, mAP@50:95 = 54.8%, and recall = 80.3%, outperforming most baselines and improving on the YOLOv8 baseline across both mAP metrics (Table 4).

Two-stage detectors (Cascade R-CNN and Faster R-CNN) showed significantly lower mAP and recall on these small-object datasets, whereas the one-stage detectors RetinaNet (anchor-based) and FCOS (anchor-free) improved over the two-stage baselines but remained behind modern single-stage and transformer methods in combined accuracy and recall. On Det-Fly, DRF-YOLO achieved mAP@50 = 91.1%, mAP@50:95 = 55.4%, and recall = 86.5%, surpassing the YOLOv8 baseline and matching or exceeding other recent detectors in the balance between localization quality and detection completeness (Table 5). RT-DETR attained a high mAP on Det-Fly but generally requires greater computational resources to achieve comparable recall.

The comparative experiments further highlight DRF-YOLO’s advantages in small-UAV detection. Traditional two-stage frameworks struggle with tiny targets, while earlier one-stage detectors such as RetinaNet and FCOS offer partial improvements but still exhibit limited recall. Recent YOLO variants benefit from architectural scaling yet lack mechanisms specifically designed for fine-grained small-object recognition. By contrast, DRF-YOLO leverages enhanced receptive-field modeling and progressive multi-scale fusion, yielding consistently stronger detection quality across both datasets. Compared with transformer-based detectors such as RT-DETR, DRF-YOLO is expected to offer a more favorable balance between accuracy and computational cost, which would make it better suited for UAV surveillance, although efficiency was not measured in this study.

4.3. Training Stability and Qualitative Performance

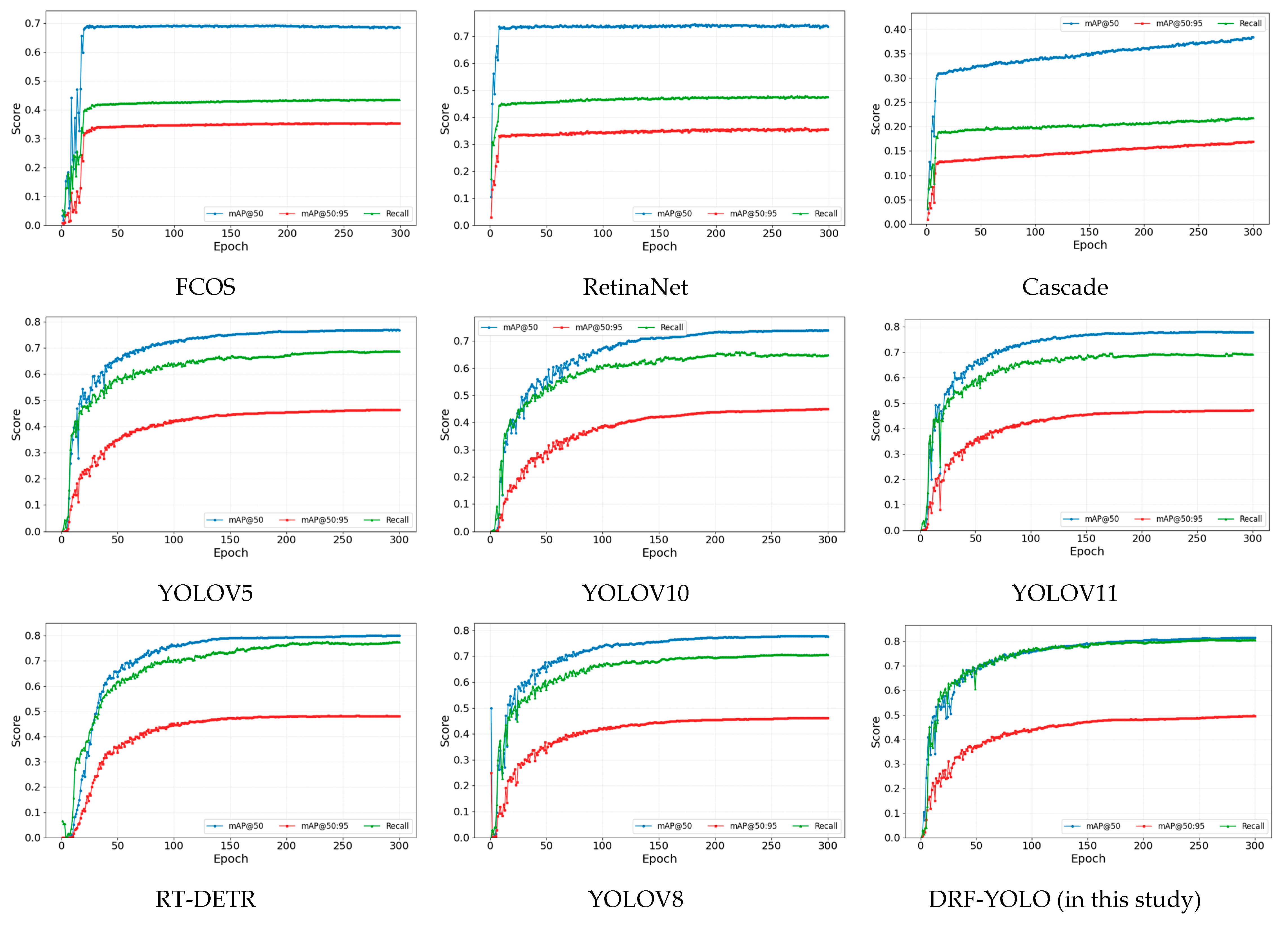

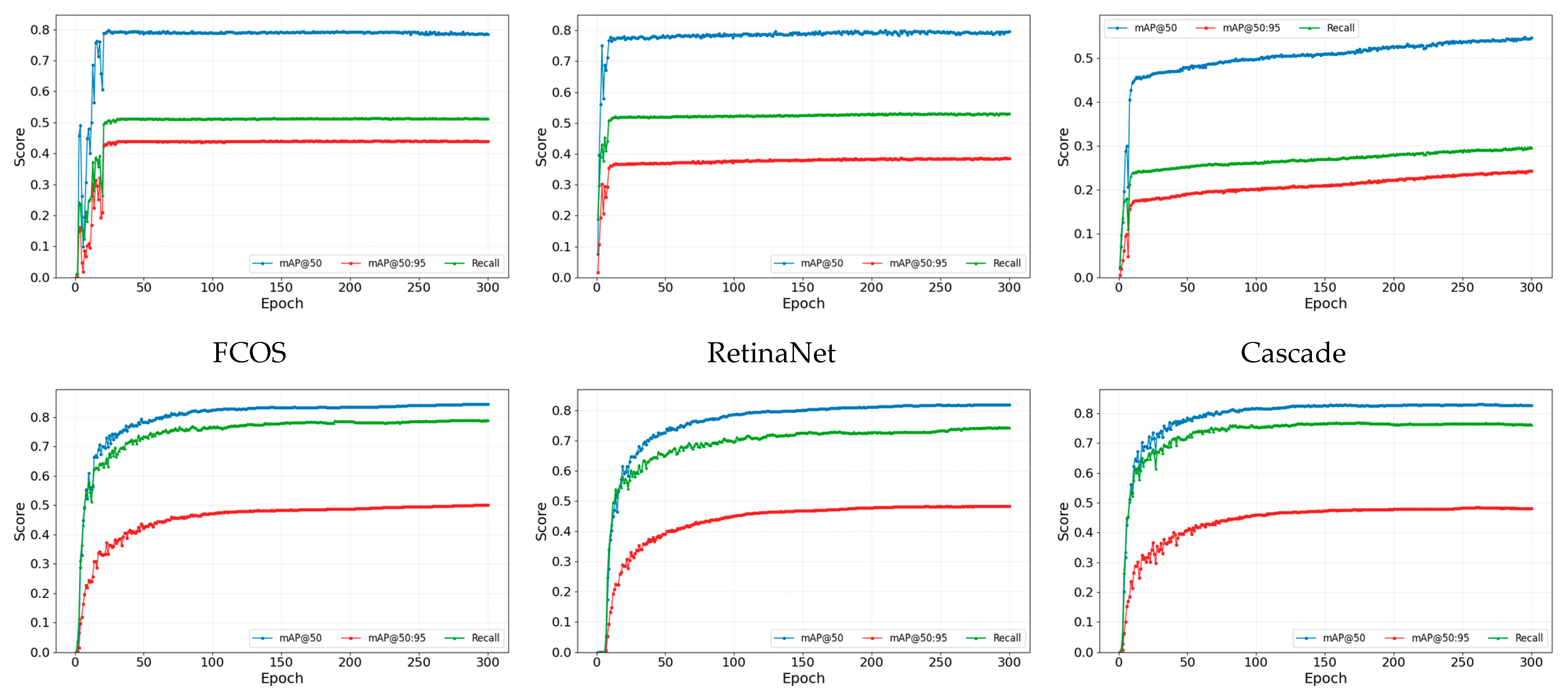

Each subfigure of the training curves (Figure 5 and Figure 6) illustrates the progression of mAP alongside recall throughout training. DRF-YOLO exhibits a steadily increasing mAP and sustained high recall compared with competing methods, indicating stable convergence, effective feature representation, and enhanced capability in detecting small-scale targets. In contrast, RT-DETR shows slower improvement in mAP and comparatively lower recall, suggesting greater difficulty in reaching optimal accuracy. YOLOv5, YOLOv8, YOLOv10, and YOLOv11 tend to plateau in mAP during later training epochs and yield only moderate recall, highlighting their limitations in small-object recognition. Although other methods such as FCOS demonstrate continuous mAP growth, their recall remains below that of DRF-YOLO, reflecting suboptimal feature utilization and reduced effectiveness in small-object detection.

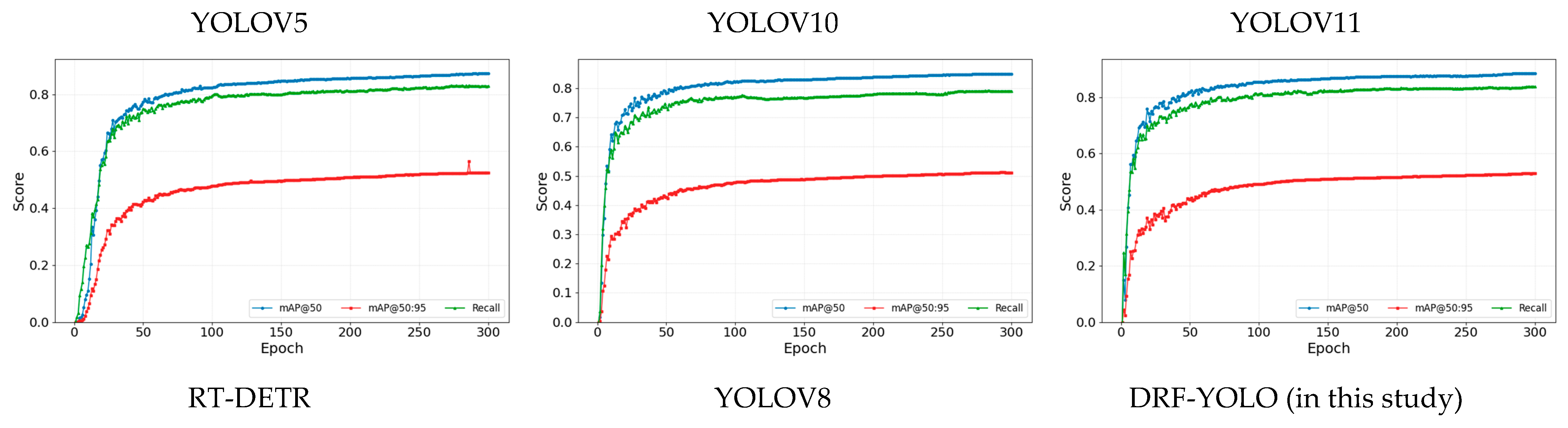

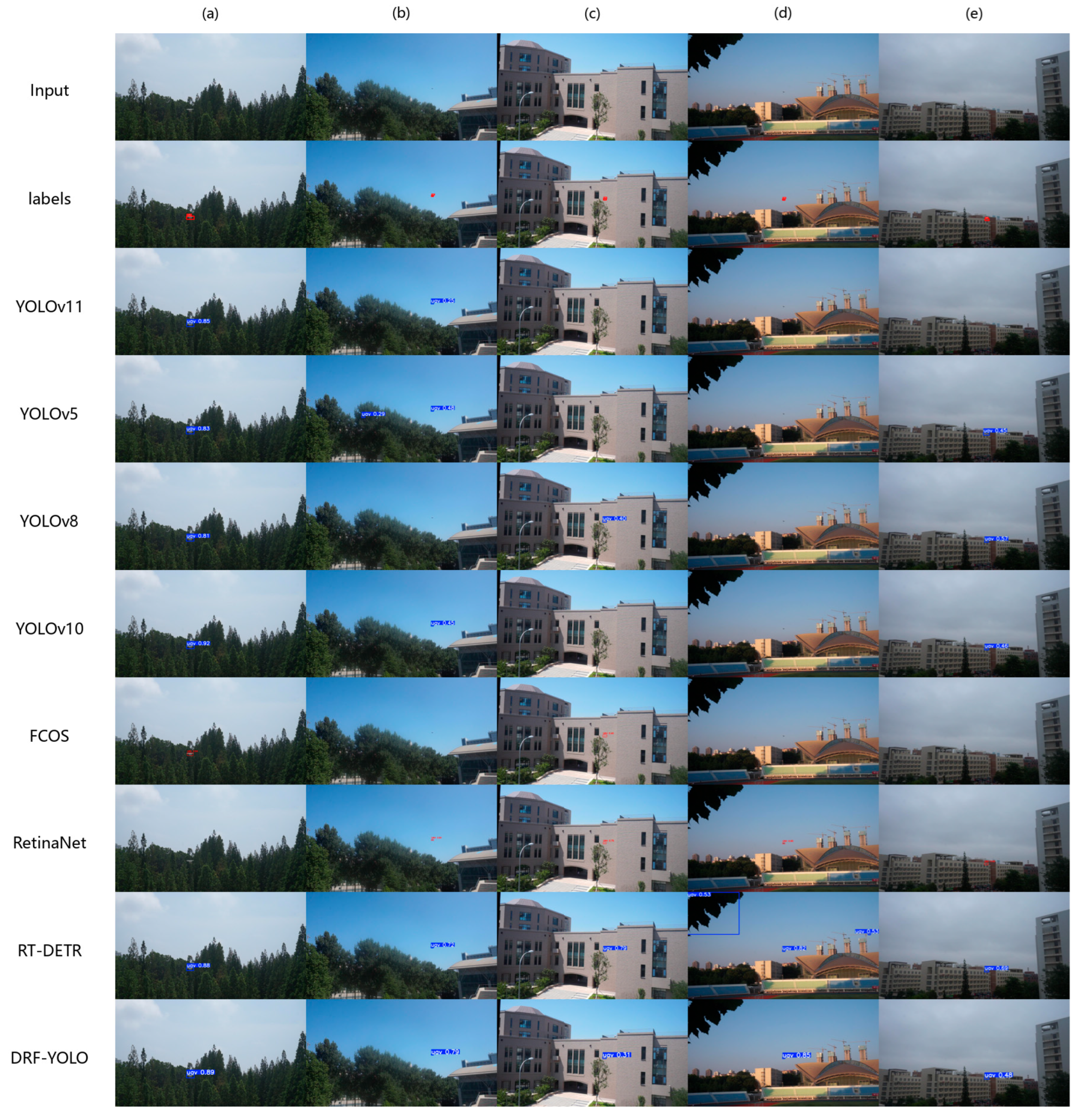

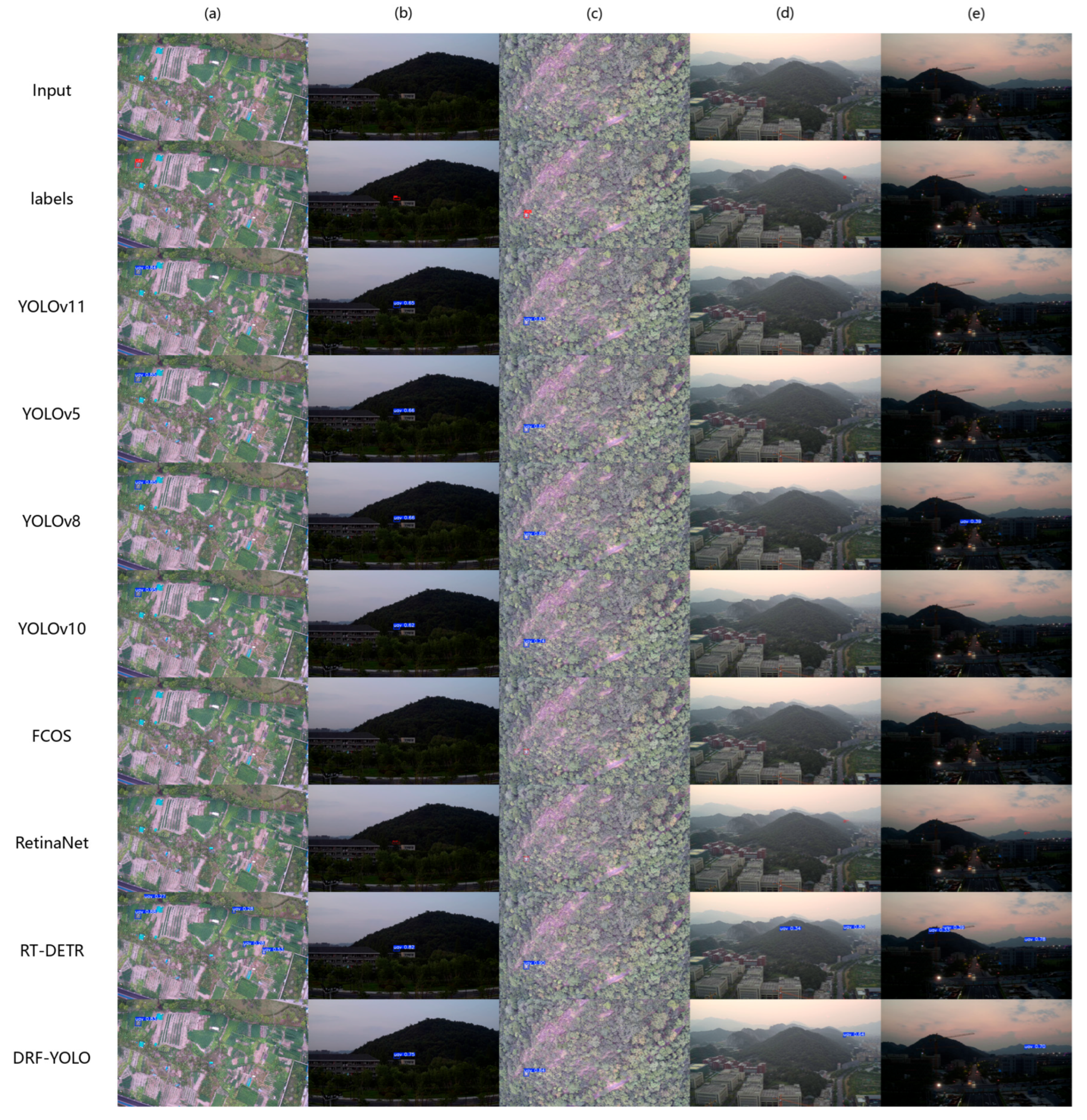

The qualitative prediction analysis confirms that DRF-YOLO generates tighter and more accurate bounding boxes for distant and occluded UAVs, reduces false positives in cluttered backgrounds, and detects a greater number of small targets compared to other models (Figure 7 and Figure 8).

The first row of Figure 7 and Figure 8 displays the original input images, followed by ground-truth annotations in the second row. Subsequent rows illustrate the prediction outputs from various models. DRF-YOLO consistently produced more accurate and stable predictions across diverse and complex scenarios. It demonstrated superior bounding-box regression and contour-fitting performance, particularly under conditions involving occlusion, uneven illumination, and background-texture interference, where other models frequently exhibited missed or false detections.

In contrast, DRF-YOLO accurately localized small UAVs, substantially reducing detection errors and demonstrating strong capability in small-object modeling and discrimination. This improvement is closely tied to its architectural design. The DWR module expands the receptive field and enhances contextual representation through multi-scale dilated convolutions and a two-stage residual structure, while AFPN enables progressive feature fusion and spatial weighting to extract discriminative features across multiple semantic levels. The synergy of these components allows DRF-YOLO to maintain robust performance across varying object scales, occlusion levels, and lighting conditions, thereby improving the model’s stability, accuracy, and interpretability in small-object detection tasks.

5. Discussion

UAVs operate in complex environments where image acquisition is significantly influenced by factors such as illumination variability, occlusion, and background-texture interference. Consequently, achieving robustness and strong generalization capability is essential for effective detection models. To address these challenges, we developed and evaluated the DRF-YOLO model through comparative experiments using the DUT-Anti-UAV and Det-Fly datasets, which offer diverse imaging conditions including varying backgrounds, flight altitudes, and viewing angles. Model performance was benchmarked against several existing approaches.

On the two datasets, DRF-YOLO achieved mAP@50 scores of 86.9% and 91.1%, respectively, outperforming the baseline YOLOv8. Furthermore, DRF-YOLO demonstrated superior results in mAP@50:95, precision, and recall, indicating its ability to maintain a favorable balance between detection accuracy and overall object recognition performance.

The results highlight the complementary strengths of the DWR module and AFPN. DWR effectively expands the receptive field and enhances contextual feature representation, while AFPN improves multi-scale detection through progressive feature fusion and spatial weighting, enabling stronger semantic integration across layers. Together, these modules deliver consistent gains—particularly in mAP@50 and recall—demonstrating clear synergy without introducing optimization instability. DRF-YOLO maintains accurate bounding-box regression and stable contour fitting even under challenging imaging conditions, confirming its robustness in detecting small objects within complex backgrounds. Quantitative and qualitative evaluations further reveal several patterns: improvements in mAP@50 generally exceed those in mAP@50:95, suggesting enhanced coarse-level localization for tiny UAVs; AFPN-based configurations reliably achieve higher recall than the baseline; and smooth training curves indicate that the added modules integrate well into the overall architecture. These findings collectively verify the model’s effectiveness across diverse UAV detection scenarios.

Whereas conventional methods rely on scale-space enhancement or attention mechanisms, we integrated the DWR module and AFPN into the YOLO framework, resulting in the DRF-YOLO model. The DWR module extends the receptive field and enriches contextual representations, and AFPN facilitates multi-scale feature integration through progressive fusion and adaptive spatial weighting. This combination improves the detection of small UAV targets in complex environments and, in turn, the robustness of aerial object detection systems.
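The idea of adaptive spatial weighting can be sketched in a few lines. This is an illustrative simplification under stated assumptions, not the AFPN implementation used in DRF-YOLO. It assumes the feature maps have already been resized to a common resolution, uses single-channel maps stored as nested lists, and takes the weight logits as given inputs, whereas in a trained network they would be predicted by learned layers. The names `fuse_levels` and `softmax` are hypothetical helpers.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_levels(features, logits):
    """Fuse L feature maps (each H x W) with per-pixel softmax weights.

    features: list of L maps, already resized to the same H x W.
    logits:   list of L maps of weight logits with the same shape
              (assumed given here; learned in a real network).
    """
    height, width = len(features[0]), len(features[0][0])
    fused = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Weights across levels sum to 1 at every spatial location.
            weights = softmax([level[y][x] for level in logits])
            fused[y][x] = sum(w * level[y][x] for w, level in zip(weights, features))
    return fused


# Two hypothetical 1 x 2 feature maps from different pyramid levels.
shallow = [[1.0, 1.0]]
deep = [[3.0, 3.0]]
# Equal logits at the first pixel; the deep level dominates at the second.
shallow_logits = [[0.0, 0.0]]
deep_logits = [[0.0, 100.0]]

fused = fuse_levels([shallow, deep], [shallow_logits, deep_logits])
print(fused)
```

At the first pixel the two levels are averaged, and at the second the fused value follows the deep level almost entirely. The point of spatial weighting is that each location can favor whichever level carries the most useful information, rather than applying one global weight per level.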

However, the developed model is specifically designed for UAV detection and was validated only on DUT-Anti-UAV and Det-Fly. Although these datasets encompass diverse backgrounds, altitudes, and viewpoints, their single-class nature might limit the evaluation of the model’s performance in multi-class or cross-domain scenarios. Additionally, only detection accuracy and robustness were evaluated, without an analysis of inference speed or computational complexity. Therefore, the model needs to be evaluated on a broader range of UAV datasets with varied sensing modalities and on more complex aerial detection tasks, such as distinguishing UAVs from birds or other airborne objects. To address these limitations, we plan to assess efficiency and resource utilization and to validate the generalization and practical applicability of the DRF-YOLO model.

6. Conclusions

Detecting small UAVs in complex environments is a critical task. To address it, we developed and evaluated the DRF-YOLO model, which combines a DWR module and AFPN. The DWR module improves contextual awareness and feature discrimination, while AFPN enhances multi-scale semantic fusion. The experimental results validated that the DRF-YOLO model addresses the challenges of small-object detection. Evaluation on the DUT-Anti-UAV and Det-Fly datasets confirmed the model’s superior performance, with significant gains over the YOLOv8 baseline and other widely used models, and the ablation experiments validated the complementary contributions of the DWR module and AFPN. On the DUT-Anti-UAV and Det-Fly datasets, the model reached mAP@50 values of 86.9% and 91.1%, outperforming YOLOv8 by 1.5% and 3.3%, respectively. The model’s recall of 80.3% and 86.5% demonstrated its ability to correctly identify small objects. The DRF-YOLO model also outperformed two-stage detectors (e.g., Faster R-CNN), one-stage detectors (e.g., FCOS, RetinaNet), other YOLO-series models, and RT-DETR. It made more accurate and stable predictions than the other models, particularly in complex scenarios with occlusion, uneven illumination, and background-texture interference, and it consistently demonstrated superior bounding-box regression and contour-fitting capabilities. While other models showed unstable or lower learning curves in training, the DRF-YOLO model maintained a high recall and a smooth, continuous learning curve, indicating strong stability and promising generalization within UAV detection tasks.

The DRF-YOLO model developed in this study provides a reliable and high-performance solution for small UAV detection, particularly in scenarios involving large variations in scale, dense targets, and complex backgrounds. Its consistent improvements over multiple baselines on two challenging UAV benchmarks demonstrate that the proposed design is not only effective but also robust across diverse imaging conditions and levels of background clutter. It further establishes a solid foundation for developing broader applications in small-object detection.